Abstract

Objectives

To assess changes in quality of care for children at risk for Autism Spectrum Disorders (ASD) due to process improvement and implementation of a digital screening form.

Study design

The process of screening for ASD was studied in an academic primary care pediatrics clinic before and after implementation of a digital version of the Modified Checklist for Autism in Toddlers – Revised with Follow up (M-CHAT-R/F) with automated risk assessment. Quality metrics included accuracy of documentation of screening results and appropriate action for positive screens (secondary screening or referral). Participating physicians completed pre- and post-intervention surveys to measure changes in attitudes toward feasibility and value of screening for ASD. Evidence of change was evaluated with statistical process control charts and chi-squared tests.

Results

Accurate documentation of screening results in the electronic medical record increased from 54% to 92% (38 percentage-point increase, 95% CI [14%, 64%]), and appropriate action for children screening positive increased from 25% to 85% (60 percentage-point increase, 95% CI [35%, 85%]). Ninety percent of participating physicians agreed that the transition to a digital screening form improved their clinical assessment of autism risk.

Conclusions

Implementation of a tablet-based digital version of the M-CHAT-R/F led to improved quality of care for children at risk for ASD and increased acceptability of screening for ASD. Continued efforts towards improving the process of screening for ASD could facilitate rapid, early diagnosis of ASD and advance the accuracy of studies of the impact of screening.

Keywords: M-CHAT, Early Detection

Since the American Academy of Pediatrics first advocated universal screening for Autism Spectrum Disorders (ASD) in 2007, the most widely used screening questionnaire has been the Modified Checklist for Autism in Toddlers (M-CHAT). The original M-CHAT was a one-page questionnaire asking about social and language behaviors such as pointing and response to name. It was easily administered in pediatric offices, but it was tuned for adequate sensitivity at the cost of a high false positive rate.[1] To improve specificity, the M-CHAT-R/F was developed, which adds a set of follow-up questions for failed items.[2] The follow-up questions consist of a flowchart of clarifying questions for each failed item, asking about aspects such as the frequency and context of behaviors. Although interviewing parents with the follow-up questions can take as much as 30 minutes of physician time, these questions are critical for clarifying parental concerns and improving the screen’s positive predictive value. When the M-CHAT-R/F is implemented appropriately, children who fail it have an estimated 47.5–54% risk of being diagnosed with ASD and a 94.6–98% risk of any clinically relevant developmental delay.[2,3] Therefore, children who screen positive on the M-CHAT-R/F should be referred for further evaluation by early intervention services or specialists in child development (psychologists or developmental pediatricians).

Screening with the M-CHAT-R/F allows physicians to identify children at risk for ASD earlier and more accurately than developmental surveillance alone. One study of developmental screening showed that pediatricians relying on clinical judgment alone missed 50% of children who went on to receive an ASD diagnosis.[4] In another study, ASD experts missed 39% of children with ASD when assessing videotaped 10-minute segments of child behavior from an ASD testing session.[5] Such errors can translate into delays in diagnosis and services. In one large study of children with ASD, the mean lag between first parental concern and ASD diagnosis was 2.7 years, and a proactive physician response to parental concerns was associated with a 1-year reduction in this lag.[6] Studies showing the impact of early treatment for children with ASD have led to agreement that innovation in early screening and referral practices is of high importance.[7–9] However, for universal screening for ASD to be feasible, process improvement is needed to create an acceptable, high-quality screening process.

Although the M-CHAT-R/F allows for early identification of children at risk for ASD who would otherwise be missed, its administration has proven challenging. The follow up interview questions can take considerable time, and in the validation study this step was performed by research assistants rather than members of the clinical practices.[2] Secondary screening is not easily performed in the limited time available to a physician, nor do many pediatric offices have available staff to whom this important aspect of screening can be delegated. The extent to which pediatric practices in the United States using the M-CHAT-R actually use the follow up questions as intended is not known. In our community, feedback from local physicians and preliminary records review suggest that many physicians skip this important step due to scarce time or insufficient awareness of the importance of the follow up questions. Additional challenges in the screening process include mis-scoring of paper questionnaires, lack of awareness of the importance of screening, and a dearth of autism-specific resources for children who have screened positive for ASD.[7,10] These hindrances prevent accurate estimation of the impact of screening and impede appropriate and timely care for children with ASD.

Digital screening potentially offers an answer to the logistical challenges of administering the M-CHAT-R/F. Studies seeking to improve the ASD screening process have shown that introduction of digital smart form technology and electronic decision support can significantly impact autism-specific and general developmental screening.[10–13] Therefore, we designed a quality improvement study to address the following questions: 1) Can digital smart form technology be effectively used to implement the M-CHAT-R/F secondary follow up questions in routine care? 2) Does use of this technology increase fidelity of implementation, accurate documentation, and appropriate action? 3) Does use of this technology increase the acceptability of ASD screening to physicians in a primary care practice?

We prospectively monitored quality metrics during a baseline period and then during implementation of the intervention, as well as measures of feasibility and acceptability of the new screening process.

Methods

According to a preliminary record review in 2014, 99% of children presenting for 18- and 24-month well-child visits at all Duke Children’s Primary Care clinics were screened for ASD with the M-CHAT. However, feedback from pediatricians and chart review revealed minimal use of the follow-up questions; most pediatricians were using clinical judgment to decide whether to act on a positive screen, which can result in over- as well as under-referral. We selected one clinic that agreed to undertake a quality improvement project and sought to quantify the impact of our attempt to improve care. The selected clinic is staffed by approximately 20 resident and attending pediatricians, who screen nearly 100 children for ASD each month. Demographic information on the children in our target population during the study period is presented in Table I. When we began planning, Duke Children’s Primary Care clinics had converted to the latest version of the M-CHAT (M-CHAT-R), and physicians were being instructed to use the follow-up interview to limit false positives. However, many physicians did not use the follow-up questions, presumably because of the additional time they require during routine care. Therefore, we decided to measure the impact of the intervention not only on quality metrics but also on physician-perceived feasibility and acceptability.

Table 1.

Demographics of children presenting for target visits in the study periods.

| Characteristics | Baseline Period, N=657, n (%) | Intervention, N=534, n (%) | P |
|---|---|---|---|
| Males | 321 (49%) | 275 (51%) | .40 |
| Mean age, months (SD) | 21.89 (3.38) | 21.88 (3.46) | .93 |
| Race/Ethnicity | | | .02* |
| Caucasian/not Hispanic or Latino | 271 (41%) | 230 (43%) | |
| Caucasian/Hispanic or Latino | 45 (7%) | 26 (5%) | |
| African American | 202 (31%) | 136 (25%) | |
| Asian | 47 (7%) | 35 (7%) | |
| Multiracial | 17 (3%) | 26 (5%) | |
| Otherᵃ | 75 (11%) | 81 (15%) | |

ᵃOther includes American Indian, Hawaiian/Pacific Islander, and declined to state.

*p<0.05
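As an illustration of the planned chi-squared comparison of demographics, the sketch below recomputes the P value for sex in Table I from the reported counts. It assumes the test was Pearson's chi-squared with Yates' continuity correction, which is the default for 2×2 tables in R's `chisq.test`; under that assumption it gives P ≈ .40, matching the table. The function name `chi2_yates_2x2` is hypothetical, not part of any package.

```python
import math


def chi2_yates_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-squared test with Yates' continuity correction.

    The table is [[a, b], [c, d]]. Returns (statistic, p_value); with one
    degree of freedom the upper-tail probability is erfc(sqrt(statistic / 2)).
    """
    n = a + b + c + d
    observed = ((a, b), (c, d))
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            # Shrink each |observed - expected| by 0.5, but not below zero.
            diff = max(0.0, abs(observed[i][j] - expected) - 0.5)
            stat += diff**2 / expected
    return stat, math.erfc(math.sqrt(stat / 2))


# Sex by study period (Table I): rows are periods, columns are male/female.
stat, p = chi2_yates_2x2(321, 657 - 321, 275, 534 - 275)
```

Dropping the 0.5 correction gives a noticeably smaller P value for the same counts, so the choice of correction matters when checking reported values.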

Planning

In September 2014, the study team met with clinic staff to ask about current screening practices and solicit feedback on necessary features and desired areas for improvement at the clinic. Staff requested the following process modifications: integration of the follow-up questions, automatic scoring with decision support for referral action, integration of a Spanish translation, and electronic importation of results into the electronic health record (EHR). The study team immediately implemented the requested electronic decision support in physician notes to raise awareness of screening guidelines and to control for potential confounding from this facet of screening practices. All discussed features were incorporated in the intervention period except integration with the EHR, which was not possible at that time. Data on study outcomes were collected prospectively for the next 7 months, providing a baseline period before the digital smart form intervention was implemented.

We chose the quality improvement framework of Plan-Do-Study-Act (PDSA) cycles to allow us to deliver timely results to the clinic and adapt the intervention to suit the flow of the clinic. PDSA cycles involve collecting data on quality metrics at regular intervals and updating the intervention until statistical evidence of change is apparent.[14] We included children between 16–30 months old (the validated age range for the M-CHAT-R/F) presenting for a well-child check at the clinic. Process measures (variables selected as indicators of change in screening) included accurate documentation of positive screens in the EHR and appropriate action after positive screens. Appropriate action was defined as use of the follow up questions for secondary screening to rule out false positives, or referral of children persistently screening positive for developmental evaluation. We monitored the proportion of children receiving ASD screening as a balance measure to alert staff to potential harm or failure of the intervention and indicate a need to halt the new process.[15] Chart review was performed by a medical student who first received training in HIPAA guidelines, research ethics, and quality improvement methods. We obtained exemption for the study from the Duke University School of Medicine Institutional Review Board and approval from Duke’s Primary Care Research Consortium. We sought guidance from the Information Technology Security Office at Duke to adequately protect digital records of patient data.

To evaluate the impact of process improvement on physician perception of screening, we administered an electronic, anonymous survey of physician screening behaviors and perceptions of screening with the M-CHAT-R before and after the study period; the survey, adapted from that of Morelli et al, measured physician attitudes toward autism screening.[10] Responses were given on a Likert scale from “Strongly Disagree” to “Strongly Agree”, and responses of “Agree” or “Strongly Agree” were collapsed into a measure of agreement. Questions that no longer applied after the transition to the digital M-CHAT were dropped from the post-intervention survey or modified to make clear that they asked about the respondent’s experience with the digital M-CHAT-R/F.
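The collapsing step can be sketched as follows. Only the two endpoints of the scale are named in the text, so the five response labels below, including the neutral midpoint, are an assumption, and `agreement` is a hypothetical helper that reports the count and rounded percentage in the "n Agree (%)" form used in Table II.

```python
# Assumed five-point scale; only "Strongly Disagree" and "Strongly Agree"
# are named in the text.
LIKERT_LEVELS = (
    "Strongly Disagree",
    "Disagree",
    "Neither Agree nor Disagree",
    "Agree",
    "Strongly Agree",
)
AGREE_LEVELS = {"Agree", "Strongly Agree"}


def agreement(responses: list[str]) -> tuple[int, int]:
    """Collapse Likert responses to (number agreeing, percent agreeing)."""
    unknown = set(responses) - set(LIKERT_LEVELS)
    if unknown:
        raise ValueError(f"unexpected responses: {sorted(unknown)}")
    n_agree = sum(r in AGREE_LEVELS for r in responses)
    return n_agree, round(100 * n_agree / len(responses))
```

For example, 9 agreeing responses out of 16 collapse to (9, 56), the form of the baseline entry for statement 6.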

Implementation

The digital smart form intervention was introduced in April 2015. The digital version of the M-CHAT-R/F was a custom-built tablet application that presented instructions and response items to parents. To protect patient data, the application required a provider’s password to access stored scores. The digital M-CHAT-R/F automatically scored answers and presented and scored the follow-up questions for secondary screening of medium-risk results (score of 3–7). Parents completed the follow-up questions themselves and could not see the final score. Nurses used a password to view results and a secure network to print a score report wirelessly; the report gave the calculated risk category and the text of the screen-positive questions from both the initial screen and the follow-up questions. The score report was given to the physician before he or she entered the room, so that the screening results and screen-positive questions could guide the clinical interview and subsequent action. To avoid reductions in screening rates due to potential technology failure and to cope with simultaneously scheduled target visits, nurses were instructed to revert to the paper screening forms if clinic flow required it. The proportion of target visits in which nurses reverted to the paper M-CHAT was recorded to determine how quickly the technology could be incorporated into practice and to gauge the troubleshooting needed to adapt the intervention. Monthly updates on process measures were provided to the clinic, and the intervention was adapted within the framework of the PDSA cycles.[15] Because the process measures did not change in the first month of implementation, the intervention was adapted after the first PDSA cycle to include individual feedback to physicians on missed positive screens. After this adaptation, the intervention period, originally planned for 6 months, was extended by 1 month.
Near the end of the final month, preliminary results were presented to the physicians and clinic managers, and the survey was re-administered with additional questions about perceptions of feasibility of the digital screening process.
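The risk-stratification logic that the tablet application automated can be sketched as below. This is not the study's application. It assumes the published M-CHAT-R/F scoring rules as we understand them from the validation study:[2] items 2, 5, and 12 indicate risk when answered "yes" and all other items when answered "no"; totals of 0–2 are low risk, 3–7 medium risk (administer the follow-up questions, and the screen is positive if 2 or more items remain failed), and 8–20 high risk (follow-up may be bypassed and the child referred). All names are hypothetical.

```python
from enum import Enum
from typing import Optional

N_ITEMS = 20
# Items where "yes" (rather than "no") indicates risk under the assumed rules.
REVERSED_ITEMS = frozenset({2, 5, 12})


class Risk(Enum):
    LOW = "low"        # total 0-2
    MEDIUM = "medium"  # total 3-7: administer follow-up questions
    HIGH = "high"      # total 8-20: refer


def initial_score(answers: dict[int, bool]) -> int:
    """Count at-risk answers; `answers` maps item number (1-20) to True for 'yes'."""
    if set(answers) != set(range(1, N_ITEMS + 1)):
        raise ValueError("expected one answer for each of items 1-20")
    return sum(
        said_yes if item in REVERSED_ITEMS else not said_yes
        for item, said_yes in answers.items()
    )


def risk_category(score: int) -> Risk:
    if score <= 2:
        return Risk.LOW
    if score <= 7:
        return Risk.MEDIUM
    return Risk.HIGH


def next_step(score: int, follow_up_failed: Optional[int] = None) -> str:
    """Suggest an action from the total score and, if known, follow-up failures."""
    risk = risk_category(score)
    if risk is Risk.LOW:
        return "negative"
    if risk is Risk.HIGH:
        return "refer"
    if follow_up_failed is None:
        return "administer follow-up"
    # Medium risk: positive if 2 or more items are still failed after follow-up.
    return "refer" if follow_up_failed >= 2 else "negative"
```

In this sketch the follow-up stage is reached only for medium-risk totals, mirroring the text's description of the app presenting follow-up questions for scores of 3–7.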

Statistical Analyses

Statistical process control charts were monitored for statistical evidence of change in process and balance measures using the Western Electric Company (WECO) rules. Under these rules, each point is considered within normal variation unless one of the following criteria is met: one point is more than 3 standard deviations from the mean; 2 of 3 successive points are more than 2 standard deviations from the mean on the same side; 4 of 5 successive points are more than 1 standard deviation from the mean on the same side; or 8 successive points fall on the same side of (above or below) the mean.[16] Chi-squared tests of independence and t-tests were planned to compare the demographics of the children studied. For the final analysis, chi-squared tests of independence and Fisher exact tests were planned to compare survey results and process measures between the baseline and intervention periods. An alpha level of 0.05 was chosen for statistical significance. The analysis window for the intervention period was shifted forward 1 month to account for the adaptation of the intervention after the first month, which was made in response to the lack of change in process measures. A secondary analysis with this month added back in was performed to determine whether excluding it affected the results. All statistical analyses were carried out in R using the packages qcc, data.table, dplyr, and psych.[17–21]
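A minimal sketch of the WECO checks described above, applied to a monthly series given a baseline mean and standard deviation (as in the control charts, whose limits are set from the baseline period). It is not the qcc code used in the study, and `weco_signals` is a hypothetical helper. Following the usual statement of the rules, the 2-of-3 and 4-of-5 rules count only points on the same side of the mean, a point is flagged only if it is itself in the relevant zone, and those windows are truncated at the start of a series.

```python
def weco_signals(values: list[float], mean: float, sd: float) -> list[int]:
    """Return indices of points that trigger any of the four WECO rules."""
    z = [(v - mean) / sd for v in values]
    flagged = set()
    for i, zi in enumerate(z):
        # Rule 1: a single point more than 3 SD from the mean.
        if abs(zi) > 3:
            flagged.add(i)
        for side in (1, -1):  # check above, then below, the mean
            s = [side * x for x in z[: i + 1]]
            # Rule 2: 2 of the last 3 points beyond 2 SD on this side.
            if s[i] > 2 and sum(x > 2 for x in s[-3:]) >= 2:
                flagged.add(i)
            # Rule 3: 4 of the last 5 points beyond 1 SD on this side.
            if s[i] > 1 and sum(x > 1 for x in s[-5:]) >= 4:
                flagged.add(i)
            # Rule 4: 8 successive points on this side of the mean.
            if len(s) >= 8 and all(x > 0 for x in s[-8:]):
                flagged.add(i)
    return sorted(flagged)
```

For instance, with hypothetical monthly proportions `[0.50, 0.55, 0.90]` against a baseline mean of 0.54 and SD of 0.10, only the third month is flagged (rule 1).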

Results

The analyzed sample included 1,191 children aged 16–30 months, 99% of whom were screened. The baseline and intervention cohorts did not differ statistically in age or sex distribution. For unknown reasons, the distribution of race and ethnicity differed slightly between the two cohorts, with 6 percentage points more African American children in the baseline period (Table I). During the intervention months, a mean of 72% of children per month were screened with the smart form, and the remainder of those screened received paper forms. In the first month of the intervention, 62% of children were screened with the digital form. During feedback sessions, nurses indicated that paper forms were used because of challenges with technology implementation in the first month and adjustment to the logistics of multiple patients requiring screening simultaneously. However, by the final month of the intervention, 80% of children were being screened with the digital form, with continued use of the paper form occurring primarily when multiple patients arrived for visits and required screening simultaneously. Scores recorded in the EHR were confirmed by review of all digital score reports and re-scoring of all available paper forms (some paper forms were not retained; a mean of 88% were retained per month, SD 8%). Forty-four children (3%) from the baseline cohort were also included in the intervention cohort because children seen for 18-month visits returned for 24-month visits, which potentially violates the statistical assumption of independent observations. However, the monthly effect of these children was likely negligible, and we assumed that it did not affect the results.

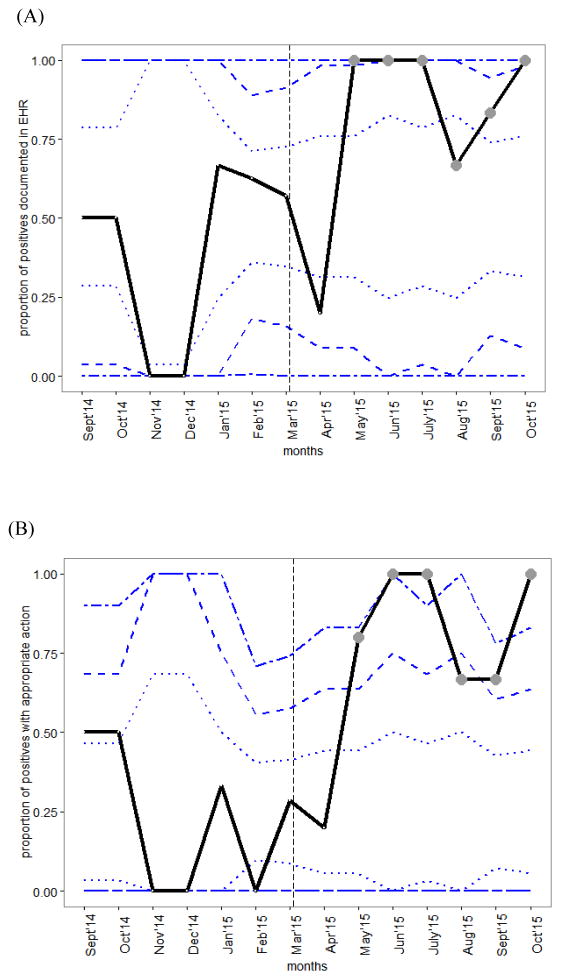

Throughout both phases of the study period, 99% of children received screening (Figure 1). To take into account the adaptation of the intervention in May of 2015, statistical analyses of the intervention were performed on the 6 months of May-October of 2015. To determine the impact of this decision, secondary analysis was performed with results from April added back in, and changes in process measures remained statistically significant. Due to adequate stability in the balance measure (proportion of children screened) over the course of intervention, the study was not halted at any point. During the baseline months, balance and process measures remained in statistical control (Figure 2; available at www.jpeds.com).

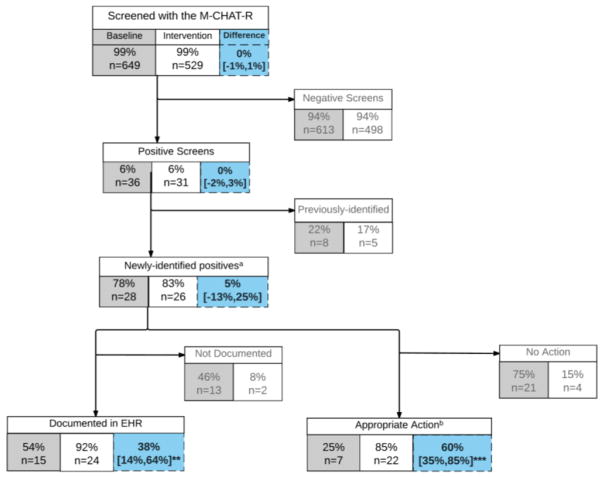

Figure 1. Flow chart of patients screened for autism during the baseline and intervention periods.

Baseline (grey boxes) and intervention (white boxes) measures of screening with electronic and paper M-CHAT-R, with % difference and 95% confidence interval of the difference (blue boxes). ᵃNewly identified positives are children who do not already have a diagnosis of ASD or another developmental delay and are not already receiving treatment. ᵇAppropriate action refers to use of the follow-up questions or immediate referral for evaluation. **p<0.005, ***p<0.001

Figure 2. Control charts of monthly changes in process measures.

Statistically significant improvement (circle markers) starting in the second month of intervention for (A) proportion of positives documented in EMR and (B) proportion of positives with appropriate action by the physician. Vertical, dashed line separates baseline and intervention periods. Dashed horizontal lines show control limits (1, 2, and 3 standard deviations from baseline mean).

Process Measures

During the intervention period, monthly tests of change demonstrated statistically significant changes in both process measures beginning in the second month of the intervention (Figure 2). The first process measure, the proportion of children screening positive with accurate documentation in the EHR, increased from a mean of 54% to 92% (38 percentage-point increase, 95% CI [14%, 64%]). By WECO rules, this change in documentation of positive screens was statistically significant (4 of 5 points more than 1 standard deviation above the baseline mean) (Figure 2, A). The second process measure, the proportion of children with appropriate action, increased by 60 percentage points in the intervention period (95% CI [35%, 85%]). By WECO rules, this change was significant and sustained (2 of 3 points more than 2 standard deviations above the baseline mean) (Figure 2, B). In the intervention period, of the 22 children with appropriate action, 17 were presumed false positives ruled out by automated secondary screening, and 5 scored high risk or remained positive after automated secondary screening and were referred for evaluation (Figure 1). This amounted to an overall positive rate of 1.9% (5 previously identified + 5 newly identified positives out of 529 children studied) in the intervention period.
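The headline figures follow from simple arithmetic on the reported percentages and counts. The changes are absolute differences in percentage points, and the 85% appropriate-action rate corresponds to 22 of the 26 newly identified positives cited in the Discussion:

$$
\begin{aligned}
\Delta_{\text{documentation}} &= 92\% - 54\% = 38 \text{ percentage points}\\
\Delta_{\text{action}} &= 85\% - 25\% = 60 \text{ percentage points}, \qquad \frac{22}{26} \approx 84.6\% \approx 85\%\\
\text{overall positive rate} &= \frac{5 + 5}{529} \approx 1.9\%
\end{aligned}
$$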

Survey

On the pre-intervention survey, 16 of 20 physicians responded, and 5 had more than 10 years of experience practicing pediatrics. On the post-intervention survey, 10 of 15 surveyed physicians responded, and 5 reported more than 10 years of experience, suggesting that a similar proportion of attending and resident physicians completed each survey. New interns were excluded from the post-intervention survey because respondents were asked to compare their experiences before and after the intervention, resulting in fewer potential respondents post-intervention. Before the intervention, 56% of respondents stated that they routinely referred children for developmental evaluation after a positive screen, and only 25% stated that they used the follow-up questions. After the intervention, 100% of respondents stated that they routinely referred children for developmental evaluation after a positive screen, 90% agreed that the digital version of the M-CHAT and score report improved their assessment of ASD risk, and 90% agreed that they preferred the digital method of screening over paper forms. Sixty percent of respondents stated that they had tried to use the paper follow-up questions before the introduction of the digital M-CHAT. Physicians commented that the limiting factor for use of the secondary screen was administration time. No significant change was observed in respondents’ perceptions of disruption to clinical flow due to screening (Table II). Respondents commented that the automatically generated score report made use of the M-CHAT easier and faster. More attendings than residents stated that they had previously tried to use the follow-up questions; otherwise, feedback did not differ by level of experience.

Table 2.

Results of physician surveys

| Statements | Baseline, N=16, n Agree (%) | Intervention, N=10, n Agree (%) | P |
|---|---|---|---|
| 1. The M-CHAT is easy for parents/caregivers to complete. | 14 (88%) | 10 (100%) | .50 |
| 2. Screening with the M-CHAT disrupts my clinical workflow. | 2 (13%) | 0 (0%) | .51 |
| 3. I routinely use the follow up questions when a child screens positive on the initial M-CHAT screen. | 4 (25%) | N/A | - |
| 4. The M-CHAT adds useful information to my clinical picture of a child beyond parent-volunteered information. | 14 (88%) | 8 (80%) | .62 |
| 5a. Use of the M-CHAT improves my diagnostic ability beyond my clinical acumen. 5b. The Digital version of the M-CHAT-R/F and score report improved my assessment of autism risk. | 9 (56%) | 9 (90%) | - |
| 6. I routinely refer children for developmental evaluation who screen positive on the M-CHAT. | 9 (56%) | 10 (100%) | .02* |
| 7. I prefer using the Digital version of the M-CHAT-R/F and score report over the corresponding paper version (including follow up questions). | N/A | 9 (90%) | - |

Notes: Interns were excluded from the post-intervention survey because respondents were asked to compare their experiences before and after the intervention, resulting in fewer potential respondents post-intervention. N/A indicates that the question was not asked at that time point because it was not applicable. Question 5a was replaced with question 5b in the second survey.

*p<0.05
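To illustrate the small-sample comparisons in Table II, the sketch below implements a two-sided Fisher exact test from hypergeometric probabilities using only the Python standard library. It assumes that the Table II P values came from Fisher's exact test on agree versus not-agree counts (the Methods mention both chi-squared and Fisher exact tests); under that assumption it reproduces P ≈ .02 for statement 6 (9 of 16 vs 10 of 10) and P ≈ .51 for statement 2 (2 of 16 vs 0 of 10). The function name is hypothetical; in R the corresponding routine is `fisher.test`.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact test for the 2x2 table [[a, b], [c, d]].

    With all margins fixed, the top-left cell follows a hypergeometric
    distribution; the P value sums the probabilities of every table that is
    no more likely than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    total = comb(n, row1)

    def prob(x: int) -> float:
        # Probability that the top-left cell equals x, given the margins.
        return comb(col1, x) * comb(n - col1, row1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    # Small relative tolerance so tables tied with the observed one are not
    # dropped because of floating-point rounding.
    return sum(p for p in (prob(x) for x in range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))
```

For statement 6, `fisher_exact_two_sided(9, 7, 10, 0)` returns about 0.023, which rounds to the reported .02.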

Discussion

This quality improvement study demonstrated significant gains in complete and accurate use of the M-CHAT-R/F and positive changes in physician attitudes toward autism screening. Our intervention integrated secondary screening into routine care, which previous studies have shown improves the accuracy of risk assessment for ASD. Importantly, the gap in documentation of positive screens during the baseline period appeared to be due to scoring errors on paper forms and to paper forms that were never scored, highlighting the need for an automated scoring system that presents risk assessment results to physicians in a busy primary care clinic. Use of the digital format steadily increased over the course of the intervention, and adaptation to the new technology likely contributed to the 1-month delay in change in quality metrics. Overall, physicians found that the digital format and automatic scoring helped them assess risk for ASD and incorporate that information into their referral decisions. As a result of this study, practice leadership decided to continue using the processes introduced in this intervention after the study period.

Several other elements of our intervention could inform expansion or adaptation of this approach. Individual feedback and the presentation of risk categories to physicians in a practical and timely manner were likely key factors in our success. Survey results indicated that physicians found having the M-CHAT-R/F already scored and interpreted convenient and useful. Physicians also used the follow-up questions more often after the intervention, as evidenced by occasional explicit references to them in progress notes even when the paper screening form was used. Uptake of digital screening could be improved by system-wide implementation and integration with the EHR, which we hope to achieve along with other planned improvements to our health system. Physicians’ survey responses mirrored the quantitative results, suggesting that they accurately judged their own improvement in screening practices.

The question of maintaining high sensitivity while reducing false positives on the M-CHAT-R remains challenging but may be aided by digital screening. In our intervention, 65% of initial positives (17 of the 26 newly identified positives) were ruled out as potential false positives by electronic administration of the follow-up questions, which is comparable to the 67–79% reported in the literature (1023 of 1295 in Chlebowski et al and 152 of 227 in Brooks et al).[3,22] Our overall positive rate was 1.9% (previously and newly identified positives), which is also consistent with previous studies showing that the prevalence of ASD is about 1% and that about half of all children screening positive have some other kind of developmental delay.[2] The quality improvement framework potentially detected patients screening positive who would not have been included in previous studies requiring consent and opt-in, because parents who are not concerned about their child’s development, or who are afraid of the implications of an ASD diagnosis, may decline study participation. An ethical consideration for digital screening is that parents might answer follow-up questions differently when the questions are presented electronically than when they are interviewed. In our experience, parents sometimes do not understand the underlying behavior described in the questions, or intentionally give the screen-negative answer because they are not ready to hear that their child may be at risk for ASD. Therefore, it is vital that physicians discuss responses with parents and continue close follow-up for families in which either the physician or the parent is concerned. Physicians in our study reported that because the score report displayed the screen-positive questions, they could discuss those questions with parents.
Although discussing screening results may add time to a well-child visit, with the improved specificity conferred by the digital form we observed an overall decrease in the number of positive screens, potentially reducing the number of visits in which physicians need to discuss screening results. In North Carolina and many other states, both private insurance and Medicaid reimburse physicians for every administered M-CHAT, and this reimbursement implicitly covers the time taken to review results. Further adaptation of digital screening could increase confidence in the specificity of the screen, allowing physicians to reserve time-consuming discussions of positive results for the families of children at highest risk for ASD.
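The rule-out proportions compared earlier in this section can be checked directly from the counts given in the text (this study, Brooks et al, and Chlebowski et al, respectively):

$$
\frac{17}{26} \approx 65.4\%, \qquad \frac{152}{227} \approx 67.0\%, \qquad \frac{1023}{1295} \approx 79.0\%
$$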

Generalizability of these results beyond the intervention site is limited by the lack of a contemporaneous comparison site and potential co-interventions. We chose not to use a comparison site for this study, and instead planned a prospective baseline vs post-intervention analysis. However, in an effort to control for the impact of increased awareness on quality of screening, we introduced decision support elements in the EHR and promoted the importance of screening during the baseline period. We were surprised to find no significant change in outcome measures during baseline, suggesting that discussion of the screening guidelines and familiarity with the research goals had little impact on the changes that occurred during the intervention period, possibly because of the logistical challenges of administering the follow up questions. Similarly, sources of potential co-intervention, such as the CDC’s “Learn the Signs Act Early” campaign, North Carolina’s Assuring Better Child Health and Development initiative, and studies recruiting children with ASD were ongoing throughout the baseline and intervention periods. We suspect these had little impact on the intervention effect, although we cannot rule out their interaction with our intervention.

We also recognize the limitation imposed by the lack of diagnostic outcome data. We did not follow these patients to diagnosis and clinical outcome, which is an important gap in the screening literature. However, studies that attempt to quantify the impact of screening have limited interpretability because of low use of the full M-CHAT-R/F (with follow-up questions).[23] Given the evidence in this study of the logistical challenges inherent in implementing the M-CHAT-R/F in primary care practice, it is crucial that sustainable and scalable process improvement be undertaken before passing judgment on the impact of screening for ASD. Studies of both the feasibility of screening processes and the long-term outcomes of children who receive early screening will be necessary to fully understand the impact of early screening.[24] Future studies should quantify the impact of early screening on age at diagnosis for ASD and other developmental delays, and on time to treatment initiation, to further elucidate the impact of universal early screening on progress in clinical care.

We found a measurable impact of this intervention on the number of positive screens, fidelity of documentation, and clinical action. We monitored the impact of changes in the screening process on early referral, which for many children is an important step toward early treatment, a proxy for improved outcomes in ASD. Use of the M-CHAT-R with follow-up questions to reduce potential false positives improves the balance of potential benefits and harms of screening and allows physicians to have more confidence in screening results. With expanding demands and short visit times, interventions that reduce time spent scoring and interpreting forms could preserve time for direct patient care. This study demonstrates the potential for technology to help improve the feasibility and acceptability of universal screening for ASD.

Acknowledgments

Supported by the Duke Center for Autism and Brain Development; Duke Department of Psychiatry (PRIDe award); Duke Education and Human Development Initiative; Duke-Coulter Translational Partnership Grant Program; Information and Child Mental Health within the Information Initiative at Duke (iiD); the National Center For Advancing Translational Sciences of the National Institutes of Health (TL1TR001116). Partial support was also received from the National Science Foundation and the Department of Defense. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health, the National Science Foundation, or the Department of Defense. The funding agencies did not impact the design, analysis, or writing of the manuscript. G.D. received authorship royalties from Guilford Publications and Oxford University Press and is on the scientific advisory boards of Janssen Research and Development, Roche Pharmaceuticals, Akili, Inc., and Progenity, Inc., for which she receives travel reimbursement and honoraria. K. C. serves as a paid consultant to the DC:0-3R Revision Task Force. The authors report the filing of a provisional patent that covers technology described in this manuscript.

We thank Duke Children’s Primary Care at Southpoint for their efforts to improve early screening for children with autism. We also thank Hayden Bosworth, PhD, and Gregory Samsa, PhD, of Duke’s Clinical Research Training Program for their advice on study design and interpretation of results.

Abbreviations

- ASD: Autism Spectrum Disorders
- M-CHAT-R/F: Modified Checklist for Autism in Toddlers, Revised with Follow-up
- EHR: Electronic Health Record
- PDSA: Plan-Do-Study-Act

Footnotes

Portions of this study were presented as a poster during the Pediatric Academic Societies Meeting, <city, state>, May <date>, 2015.


References

- 1. Kleinman JM, Robins DL, Ventola PE, Pandey J, Boorstein HC, Esser EL, et al. The Modified Checklist for Autism in Toddlers: A Follow-up Study Investigating the Early Detection of Autism Spectrum Disorders. J Autism Dev Disord. 2008;38:827–39. doi: 10.1007/s10803-007-0450-9.

- 2. Robins DL, Casagrande K, Barton M, Chen C-MA, Dumont-Mathieu T, Fein D. Validation of the Modified Checklist for Autism in Toddlers, Revised With Follow-up (M-CHAT-R/F). Pediatrics. 2014;133:37–45. doi: 10.1542/peds.2013-1813.

- 3. Chlebowski C, Robins DL, Barton ML, Fein D. Large-Scale Use of the Modified Checklist for Autism in Low-Risk Toddlers. Pediatrics. 2013;131:e1121–7. doi: 10.1542/peds.2012-1525.

- 4. Miller JS, Gabrielsen T, Villalobos M, Alleman R, Wahmhoff N, Carbone PS, et al. The Each Child Study: Systematic Screening for Autism Spectrum Disorders in a Pediatric Setting. Pediatrics. 2011;127:866–71. doi: 10.1542/peds.2010-0136.

- 5. Gabrielsen TP, Farley M, Speer L, Villalobos M, Baker CN, Miller J. Identifying Autism in a Brief Observation. Pediatrics. 2015;135:e330–8. doi: 10.1542/peds.2014-1428.

- 6. Zuckerman KE, Lindly OJ, Sinche BK. Parental Concerns, Provider Response, and Timeliness of Autism Spectrum Disorder Diagnosis. J Pediatr. 2015;166:1431–9.e1. doi: 10.1016/j.jpeds.2015.03.007.

- 7. Daniels AM, Halladay AK, Shih A, Elder LM, Dawson G. Approaches to Enhancing the Early Detection of Autism Spectrum Disorders: A Systematic Review of the Literature. J Am Acad Child Adolesc Psychiatry. 2014;53:141–52. doi: 10.1016/j.jaac.2013.11.002.

- 8. Dawson G, Rogers S, Munson J, Smith M, Winter J, Greenson J, et al. Randomized, Controlled Trial of an Intervention for Toddlers With Autism: The Early Start Denver Model. Pediatrics. 2010;125:e17–23. doi: 10.1542/peds.2009-0958.

- 9. MacDonald R, Parry-Cruwys D, Dupere S, Ahearn W. Assessing progress and outcome of early intensive behavioral intervention for toddlers with autism. Res Dev Disabil. 2014;35:3632–44. doi: 10.1016/j.ridd.2014.08.036.

- 10. Morelli DL, Pati S, Butler A, Blum NJ, Gerdes M, Pinto-Martin J, et al. Challenges to implementation of developmental screening in urban primary care: a mixed methods study. BMC Pediatr. 2014;14:16. doi: 10.1186/1471-2431-14-16.

- 11. Guevara JP, Gerdes M, Localio R, Huang YV, Pinto-Martin J, Minkovitz CS, et al. Effectiveness of Developmental Screening in an Urban Setting. Pediatrics. 2013;131:30–7. doi: 10.1542/peds.2012-0765.

- 12. Talmi A, Bunik M, Asherin R, Rannie M, Watlington T, Beaty B, et al. Improving Developmental Screening Documentation and Referral Completion. Pediatrics. 2014;134:e1181–8. doi: 10.1542/peds.2012-1151.

- 13. Harrington JW, Bai R, Perkins AM. Screening Children for Autism in an Urban Clinic Using an Electronic M-CHAT. Clin Pediatr (Phila) 2013;52:35–41. doi: 10.1177/0009922812463957.

- 14. Shojania KG, Grimshaw JM. Evidence-Based Quality Improvement: The State Of The Science. Health Aff (Millwood) 2005;24:138–50. doi: 10.1377/hlthaff.24.1.138.

- 15. Courtlandt CD, Noonan L, Feld LG. Model for Improvement - Part 1: A Framework for Health Care Quality. Pediatr Clin North Am. 2009;56:757–78. doi: 10.1016/j.pcl.2009.06.002.

- 16. Benneyan JC, Lloyd RC, Plsek PE. Statistical process control as a tool for research and healthcare improvement. Qual Saf Health Care. 2003;12:458–64. doi: 10.1136/qhc.12.6.458.

- 17. Dowle M, Short T, Lianoglou S, Srinivasan A, Saporta R, Antonyan E. data.table: Extension of data.frame. R package version 1.9.4. 2014.

- 18. R Core Team. R: A language and environment for statistical computing. 2014.

- 19. Revelle W. psych: Procedures for Personality and Psychological Research. 2014.

- 20. Scrucca L. qcc: an R package for quality control charting and statistical process control. 2004.

- 21. Wickham H, Francois R. dplyr: A Grammar of Data Manipulation. 2015.

- 22. Brooks BA, Haynes K, Smith J, McFadden T, Robins DL. Implementation of Web-Based Autism Screening in an Urban Clinic. Clin Pediatr (Phila) 2016;55:927–34. doi: 10.1177/0009922815616887.

- 23. Khowaja MK, Hazzard AP, Robins DL. Sociodemographic Barriers to Early Detection of Autism: Screening and Evaluation Using the M-CHAT, M-CHAT-R, and Follow-Up. J Autism Dev Disord. 2014;45:1797–808. doi: 10.1007/s10803-014-2339-8.

- 24. Dawson G. Why it’s important to continue universal autism screening while research fully examines its impact. JAMA Pediatr. 2016;170:527–8. doi: 10.1001/jamapediatrics.2016.0163.