Abstract

Background

Many medical certifying bodies require that a minimum number of clinical procedures be completed during residency training to obtain board eligibility. However, little is known about the relationship between the number of procedures residents perform and their clinical competence.

Objective

This study evaluated associations between residents' medical procedure skills measured in a simulation laboratory and self-reported procedure experience and year of training.

Methods

This research synthesis extracted and summarized data from multiple cohorts of internal medicine, emergency medicine, anesthesiology, and neurology resident physicians who performed simulated clinical procedures. The procedures were central venous catheter insertion, lumbar puncture, paracentesis, and thoracentesis. We compared residents' baseline simulated performance to their self-reported procedure experience using data from 7 research reports written by Northwestern University investigators between 2006 and 2016. We also evaluated how performance differed by postgraduate year (PGY).

Results

A total of 588 simulated procedures were performed during the study period. We found significant associations between passing the skills examinations and both a higher number of self-reported procedures performed (P = .011) and a higher PGY (P < .001). However, performance for all procedures was poor: only 10% of the simulated procedures met the passing standard, and the mean score was 48% of checklist items correct (SD = 24.2). The association between passing the skills examination and year of training was mostly due to differences between PGY-1 and subsequent years of training.

Conclusions

Despite positive associations between self-reported experience and simulated procedure performance, overall performance was poor. Residents' clinical experience is not a proxy for skill.

What was known and gap

Procedural experience requirements for residents are intended to ensure competence in graduates, yet few studies have assessed their validity.

What is new

A study of simulated procedure performance among residents in 4 specialties analyzed the association of prior experience and year of training with competence.

Limitations

Limited number of centers in 1 city; possible recall bias in self-reported procedure experience.

Bottom line

Despite overall poor simulated procedure performance, experience and year of training were positively associated with performance.

Introduction

Many medical accrediting and certifying bodies require that a minimum number of clinical procedures be completed before graduation from residency training or to obtain board eligibility,1,2 and some studies link clinical experience (often expressed as the number of procedures performed) with reduced complications.3–6 However, the applicability of this research to trainee certification is uncertain because the number of procedures needed to reduce complications in these studies is well beyond what might be achieved in typical residency training.3,6,7 Additionally, several recent studies questioned whether clinical experience can serve as a proxy for skill. One systematic review8 challenged the common notion that physicians with more experience have better clinical skills by showing an inverse relationship between years in practice and quality of care. Another study9 used data from the American Board of Surgery to show that the number of procedures performed by surgical residents was much lower than what would be considered necessary to achieve competence. Within internal medicine, skill acquisition studies evaluating the relationship between the number of self-reported procedures performed during residency and procedure skills similarly failed to show significant correlations.10–15

These studies raise questions about the common practice of using the number of procedures performed as a surrogate for trainee clinical competence.1 The Accreditation Council for Graduate Medical Education (ACGME) recently changed the expectations of residency and fellowship programs to require use of standardized milestones.16 The Milestone Project marks progress toward ensuring that training programs graduate physicians who are competent to perform the tasks they are expected to execute in practice. This change is welcome given evidence that residents and fellows are often not competent to perform patient care tasks before graduation,9,10,15,17–19 which translates into uneven performance in practice.6,20,21

One method to ensure that trainees are adequately prepared before performing procedures on patients is simulation-based education. Simulation-based education can be used in a mastery model,22 in which participants are required to meet or exceed a minimum passing score (MPS) before completing training. In simulation-based mastery learning, training time varies, while learning outcomes are uniform. This educational strategy is designed to ensure that all clinicians performing procedures on patients are competent, regardless of how many procedures they have performed in the past.10–15

We performed a research synthesis to evaluate simulation-based mastery learning as a best practice for clinical skills assessment and certification of medical trainees, in lieu of relying on clinical experience. We hypothesized that clinical experience (number of procedures performed during residency training or year of training) is not meaningfully associated with the ability to meet or exceed the MPS for a clinical procedure in a controlled setting. Therefore, the current study had 2 aims: to assess the competence of a large number of medical residents performing a diverse set of clinical procedures, and to evaluate associations between measured procedural skill and both self-reported procedure experience and year of training.

Methods

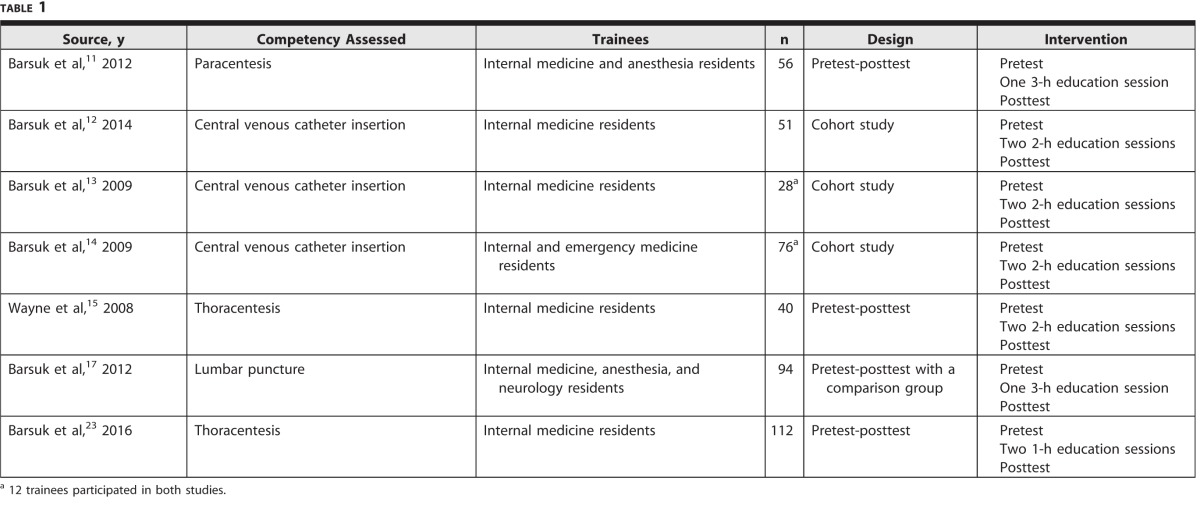

We performed a research synthesis of 7 studies conducted by Northwestern University investigators, which gave us access to all study data.11–15,17,23 The Northwestern University Institutional Review Board approved all 7 studies. Table 1 summarizes the 7 studies.

Table 1.

Characteristics of the Studies Selected for Review

These data included simulated procedures from multiple cohorts of internal medicine, emergency medicine, anesthesiology, and neurology resident physicians who performed central venous catheter (CVC) insertion, lumbar puncture (LP), paracentesis, and thoracentesis procedures.11–15,17,23 Resident physicians from 4 academic tertiary medical centers and 1 academic community hospital in Chicago performed these procedures. We compared residents' baseline simulated performance (before any educational intervention), measured objectively as their ability to achieve the MPS (the competency standard), with self-reported experience (number of procedures performed) and postgraduate year (PGY) of training. The baseline simulated performance represents “traditional” medical education and informal learning that may have occurred during medical school or residency, before any simulation-based educational interventions.

The 7 published studies provided reliable data from checklist measures of CVC insertion at the internal jugular and subclavian vein sites,12–14 LP,17 paracentesis,11 and thoracentesis skills.15,23 Checklist items were scored dichotomously as steps done correctly versus not done/done incorrectly, and items had high interrater reliability.11–15,17,23 Residents provided demographic and clinical experience information, including age, sex, specialty, year of training, and experience (self-reported number of the specific procedures performed). To comply with accreditation requirements, all residents were required by their programs to keep procedure logs and were able to use these logs to help report the number of procedures performed. Data were collected using the same evaluation tools throughout the study period, which enabled us to combine data from the 7 studies.
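To make the scoring rule concrete, the short Python sketch below scores a checklist dichotomously (1 = done correctly, 0 = not done or done incorrectly) and computes Cohen's kappa, one common index of agreement between 2 raters on dichotomous items. This is an illustration only: the ratings are hypothetical, the function names are ours, and this section does not state which reliability statistic the original studies reported.

```python
# Hypothetical sketch: dichotomous checklist scoring and 2-rater agreement.
# All ratings below are invented for illustration; they are not study data.


def percent_correct(items):
    """Percentage of checklist items scored 1 (step done correctly)."""
    return 100.0 * sum(items) / len(items)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for 2 raters scoring the same dichotomous (0/1) items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must score the same non-empty list of items")
    n = len(rater_a)
    # Observed agreement: share of items both raters scored identically.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement, from each rater's marginal rate of scoring items as correct.
    p_a, p_b = sum(rater_a) / n, sum(rater_b) / n
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if expected == 1:
        # Both raters gave the same constant score to every item; kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# Hypothetical 10-item checklist scored by 2 raters (they disagree on item 5 only).
rater_a = [1, 1, 0, 1, 0, 1, 1, 0, 1, 1]
rater_b = [1, 1, 0, 1, 1, 1, 1, 0, 1, 1]
score_a = percent_correct(rater_a)
kappa = cohens_kappa(rater_a, rater_b)
print(f"rater A score: {score_a:.0f}%  kappa: {kappa:.2f}")
```

For these invented ratings, rater A's score is 70% and the raters agree on 9 of 10 items; kappa (about 0.74) is lower than raw agreement because it discounts the agreement expected by chance.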

As part of training in each of the studies, residents were required to participate in simulation-based mastery learning curricula for procedural skill acquisition, which have been described in detail elsewhere.11–15,17,23 In brief, residents underwent a baseline assessment on a procedure simulator. Subsequently, they watched a video and lecture and participated in deliberate practice on the simulator with directed feedback from an expert instructor. Residents then were required to meet or exceed the MPS at posttest before completion of training. Participants who did not meet the MPS participated in additional deliberate practice until they met or exceeded this score. Educational outcomes were uniform among trainees, while training time varied.
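The mastery learning sequence described above (baseline test, instruction and deliberate practice, posttest, and additional practice until the MPS is reached) can be sketched as a simple loop. This is a toy model under stated assumptions: each practice session is assumed to raise a learner's checklist score by a fixed, learner-specific amount, and the MPS of 80% and all learner scores are hypothetical. It shows only the structure of the design, in which every learner finishes at or above the MPS while the number of sessions differs.

```python
from dataclasses import dataclass


@dataclass
class Learner:
    name: str
    score: float             # percent of checklist items correct (baseline, then updated)
    gain_per_session: float  # hypothetical fixed improvement per practice session


def train_to_mastery(learner, mps, max_sessions=50):
    """Run practice sessions until the learner meets or exceeds the MPS.

    Returns the number of sessions used. Every learner completes at least
    1 session (the core curriculum), since all residents received instruction
    after the baseline test regardless of their baseline score.
    """
    sessions = 0
    while sessions == 0 or learner.score < mps:
        if sessions >= max_sessions:
            raise RuntimeError(f"{learner.name} did not reach the MPS")
        sessions += 1
        learner.score = min(100.0, learner.score + learner.gain_per_session)
    return sessions


MPS = 80.0  # hypothetical minimum passing score (percent of items correct)
cohort = [Learner("A", 45.0, 20.0), Learner("B", 70.0, 15.0), Learner("C", 30.0, 10.0)]
sessions_needed = {learner.name: train_to_mastery(learner, MPS) for learner in cohort}
print(sessions_needed)
print(all(learner.score >= MPS for learner in cohort))
```

In this toy cohort, learners need 2, 1, and 5 sessions, yet all end at or above the MPS: training time varies while the outcome standard is fixed.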

The MPS was set in all 7 studies by an expert panel of judges who used the Angoff and Hofstee standard-setting methods to devise a passing score that was judged safe for patient care.11–15,17,23 The MPSs for CVC insertion and thoracentesis were subsequently changed in 2010 and 2014, respectively, based on reassessment of resident skills by an expert panel.23,24 For the purposes of this study, the original MPSs were used.
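The Angoff component of this procedure can be illustrated with a short calculation. In a common (modified) Angoff approach, each judge estimates, for every checklist item, the probability that a borderline, just-competent trainee would perform that item correctly; the cut score is the average across judges of each judge's mean item estimate, expressed as a percentage. The sketch below uses hypothetical ratings from 3 judges on a 4-item checklist. It does not implement the Hofstee method, and this section does not describe how the studies combined the 2 methods, so it should not be read as the studies' exact procedure.

```python
from statistics import mean


def angoff_cut_score(ratings):
    """Modified Angoff cut score as a percentage of checklist items.

    ratings[j][i] is judge j's estimated probability (0 to 1) that a
    borderline trainee performs checklist item i correctly.
    """
    for judge in ratings:
        if not all(0.0 <= p <= 1.0 for p in judge):
            raise ValueError("each rating must be a probability between 0 and 1")
    # Each judge's implied cut score, then the panel average.
    per_judge = [100.0 * mean(judge) for judge in ratings]
    return mean(per_judge)


# Hypothetical ratings: 3 judges x 4 checklist items.
panel = [
    [0.9, 0.8, 0.7, 0.6],
    [0.8, 0.8, 0.8, 0.8],
    [1.0, 0.9, 0.6, 0.7],
]
print(round(angoff_cut_score(panel), 1))
```

With these invented ratings, the judges' implied cut scores are 75%, 80%, and 80%, giving a panel cut score of about 78.3%; a trainee would pass by scoring at or above that percentage of checklist items.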

Our unit of analysis was baseline simulated procedures, not residents, because a resident may have performed more than 1 procedure type during training. However, residents performed each procedure only once. Continuous variables, such as age and number of self-reported procedures performed (experience), were converted to categorical variables based on their frequency distributions. We created a separate “dummy” category for procedures in which the resident did not report the number of procedures previously performed. We used the χ2 test to evaluate relationships between the percentage of procedures in which residents met or exceeded the MPS at baseline (pretest) and both the number of procedures performed and PGY. We used logistic regression to estimate the association between the number of procedures performed and the likelihood of passing, with the 0 procedures (completely inexperienced) category as the reference. We also evaluated the effect of year of training on the likelihood of passing, using PGY-1 as the reference category. Finally, we estimated a logistic regression of the likelihood of passing that included all independent variables simultaneously: number of procedures performed, year of training, resident age, sex, medical specialty, and procedure type. Although each simulated procedure was treated as an independent event, we performed a sensitivity analysis using random-effects logistic regression to adjust standard errors for multiple simulated procedures performed by the same resident.
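The unadjusted analyses described above can be sketched in a few lines of Python. The example assigns self-reported experience to categories with an explicit “not reported” level (the dummy category for missing data), computes a Pearson χ2 test of independence between experience category and passing, and computes odds ratios for passing relative to the 0-procedure reference category. For a logistic regression with a single categorical predictor, these odds ratios equal the exponentiated model coefficients. The category cut points other than 0 and 7 to 10, and all counts, are hypothetical; the adjusted multivariable and random-effects models, which were fit in SPSS and Stata, are not reproduced here.

```python
import math


def experience_category(n_reported):
    """Map a self-reported procedure count to a category.
    Cut points other than '0' and '7-10' are hypothetical."""
    if n_reported is None:
        return "not reported"  # dummy category for missing experience data
    if n_reported == 0:
        return "0"
    if n_reported <= 3:
        return "1-3"
    if n_reported <= 6:
        return "4-6"
    if n_reported <= 10:
        return "7-10"
    return ">10"


def chi_square_sf(x, df):
    """Upper-tail chi-square probability via the series for the regularized
    lower incomplete gamma function (adequate for moderate x)."""
    a, z = df / 2.0, x / 2.0
    if z <= 0:
        return 1.0
    term = total = 1.0 / a
    k = 1
    while term > 1e-15 * total:
        term *= z / (a + k)
        total += term
        k += 1
    lower = math.exp(a * math.log(z) - z - math.lgamma(a)) * total
    return max(0.0, 1.0 - lower)


def chi_square_independence(table):
    """Pearson chi-square test for {category: (n_passed, n_failed)}.
    Returns (statistic, degrees of freedom, P value)."""
    rows = list(table.values())
    total = sum(p + f for p, f in rows)
    col_pass = sum(p for p, _ in rows)
    col_fail = total - col_pass
    stat = 0.0
    for p, f in rows:
        row_total = p + f
        for observed, col_total in ((p, col_pass), (f, col_fail)):
            expected = row_total * col_total / total
            stat += (observed - expected) ** 2 / expected
    df = len(rows) - 1  # (rows - 1) x (2 columns - 1)
    return stat, df, chi_square_sf(stat, df)


def odds_ratios(table, reference):
    """Unadjusted odds of passing in each category relative to the reference."""
    ref_pass, ref_fail = table[reference]
    ref_odds = ref_pass / ref_fail
    return {cat: (p / f) / ref_odds
            for cat, (p, f) in table.items() if cat != reference}


# Hypothetical counts of (passed, failed) baseline procedures by category.
counts = {"0": (2, 48), "1-3": (5, 45), "7-10": (10, 40)}
stat, df, p_value = chi_square_independence(counts)
print(experience_category(None), experience_category(8))
print(f"chi2 = {stat:.2f}, df = {df}, P = {p_value:.3f}")
print({cat: round(o, 2) for cat, o in odds_ratios(counts, "0").items()})
```

With these invented counts the statistic is about 6.50 on 2 degrees of freedom (P ≈ .039), and the odds ratios are about 2.67 for 1 to 3 procedures and 6.0 for 7 to 10 procedures; these figures illustrate the calculation only and are not the study's results.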

We performed all statistical analyses using IBM SPSS Statistics version 22 (IBM Corp, Armonk, NY) and Stata version 14 (StataCorp LP, College Station, TX).

Results

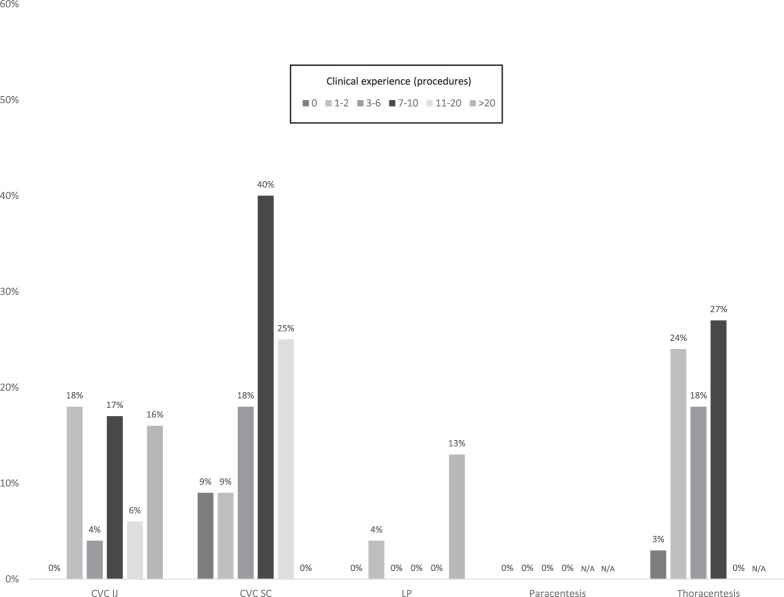

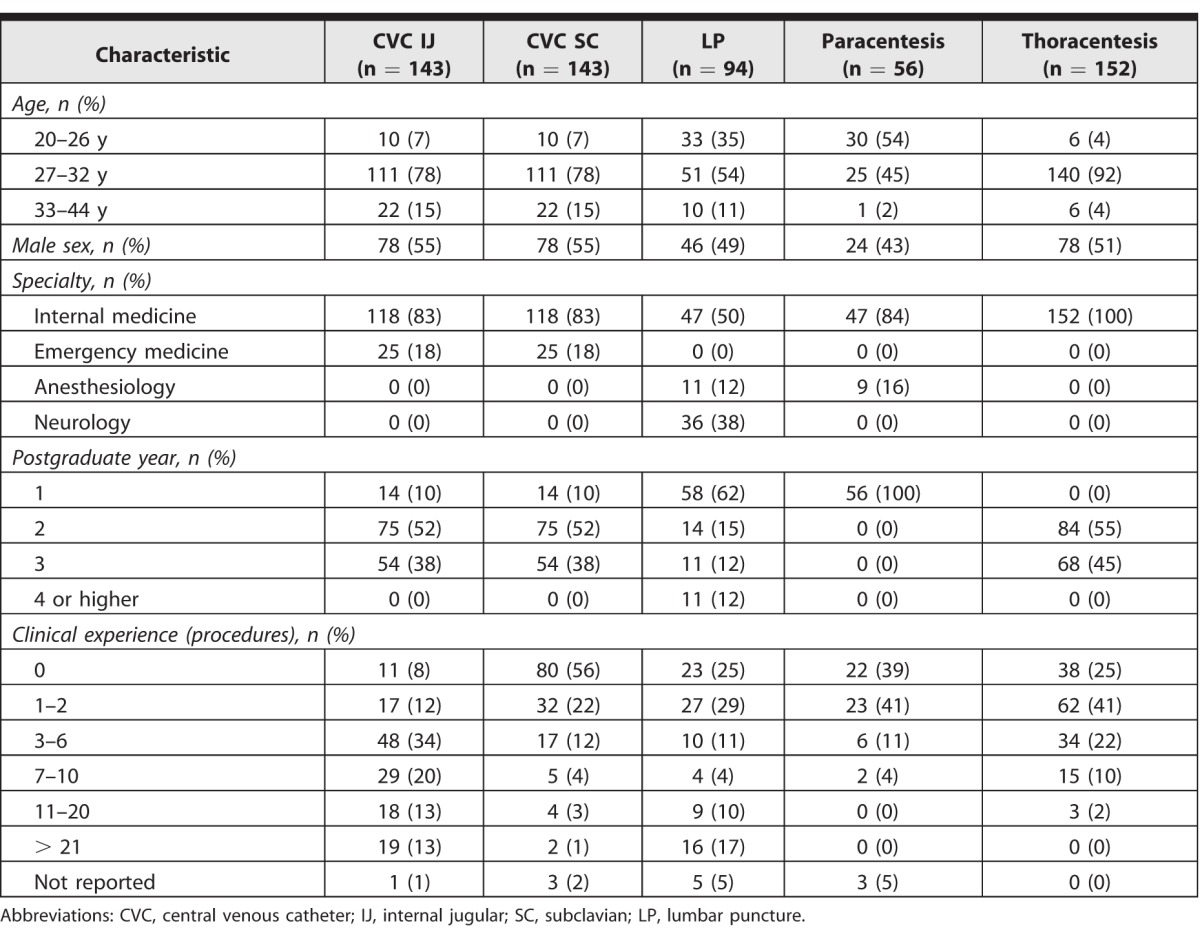

A total of 588 baseline measurements of simulated procedures were performed by 382 unique residents during the study period from 2006 to 2016. A total of 143 residents performed both CVC internal jugular and subclavian vein assessments; 38 performed only LP; 49 performed LP and paracentesis; 145 performed only thoracentesis; and 7 performed LP, paracentesis, and thoracentesis. For 12 of the 588 procedures (2%), residents did not report their procedural experience at the time of the simulated assessment. Resident demographic and clinical data for each procedure are shown in Table 2. Residents met or exceeded the MPS at baseline assessment for only 59 of 588 procedures (10%). The figure shows the percentage of residents achieving the MPS and passing the baseline (pretest) on each of the individual procedures by number of procedures performed. Overall, baseline simulated performance across all procedures combined was poor, with a mean of 48% of checklist items correct (SD = 24.2).
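The counts reported above are internally consistent, which can be checked with simple arithmetic: multiplying the number of residents in each participation pattern by the number of procedure assessments in that pattern (counting the internal jugular and subclavian CVC assessments separately) recovers both totals. The snippet below uses only figures stated in this paragraph.

```python
# Participation patterns as reported: (number of residents, assessments per resident).
patterns = {
    "CVC internal jugular + subclavian": (143, 2),
    "LP only": (38, 1),
    "LP + paracentesis": (49, 2),
    "thoracentesis only": (145, 1),
    "LP + paracentesis + thoracentesis": (7, 3),
}

residents = sum(n for n, _ in patterns.values())
procedures = sum(n * k for n, k in patterns.values())
print(residents, procedures)

# Share of procedures meeting the MPS (59) and lacking experience data (12).
print(round(100 * 59 / procedures, 1), round(100 * 12 / procedures, 1))
```

It yields 382 residents and 588 procedures, and confirms that 59 and 12 of 588 procedures correspond to approximately 10% and 2%.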

Table 2.

Demographics and Clinical Characteristics of Residents Who Performed Simulated Procedures

Figure.

Percentage of Residents Who Met or Exceeded the Baseline Minimum Passing Score on Each Procedural Skill by Level of Experience

Abbreviations: CVC, central venous catheter; IJ, internal jugular; SC, subclavian; LP, lumbar puncture.

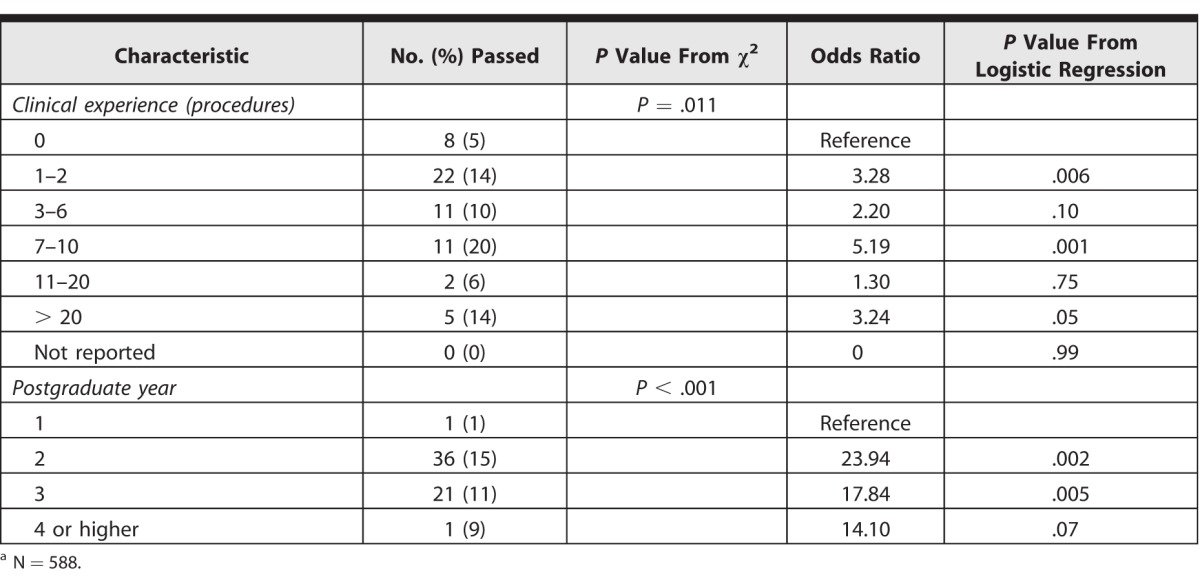

The χ2 test revealed significant associations between meeting the MPS at baseline and both a higher number of procedures performed (P = .011) and a higher PGY (P < .001; Table 3). The odds ratios associating simulated procedure performance with number of procedures performed and year of training are also shown in Table 3. The association between meeting the MPS and year of training was driven mostly by the difference between PGY-1 and PGY-2, PGY-3, and PGY-4, not by differences among PGY-2, PGY-3, and PGY-4 (Table 3).

Table 3.

Numbers and Odds Ratios of Participants Who Met the Minimum Passing Score for Combined Simulated Procedures at Baseline by Experience and Postgraduate Year^a

After adjusting for the other independent variables, the association between meeting the MPS and greater experience remained significant. This association was strongest in the 7 to 10 procedures performed category, in which residents had up to 7.5 times the odds of passing the baseline assessment compared with residents with no experience (P = .001). Yet, the mean overall skills performance (including all procedures) among residents in this experience category was 56% of checklist items correct (SD = 24.6), and only 20% of these procedures were performed competently as measured by meeting or exceeding the MPS. The association between meeting the MPS and year of training was no longer observed after controlling for covariates in the regression model. There were no significant associations between meeting the MPS and age, sex, type of procedure, or clinical specialty. Random-effects modeling that adjusted for clustering of procedures within residents produced virtually identical results (data not shown).
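Because the regression results are odds ratios, it may help to show how an odds ratio relates to a ratio of pass probabilities. Odds are p/(1 − p), so the 20% pass rate in the 7 to 10 procedures category corresponds to odds of 0.25 (1 pass for every 4 failures). The sketch below converts an odds ratio into the implied ratio of pass probabilities, RR = OR / (1 − p0 + p0 × OR), for a given reference-group pass probability p0. The p0 values used are hypothetical, not the rate observed in the 0-procedure group, and the conversion applies to unadjusted comparisons only.

```python
def odds(p):
    """Convert a probability to odds."""
    return p / (1 - p)


def prob_from_odds(o):
    """Convert odds back to a probability."""
    return o / (1 + o)


def implied_risk_ratio(odds_ratio, p_reference):
    """Ratio of pass probabilities implied by an odds ratio, given the
    reference group's pass probability (equals OR / (1 - p0 + p0 * OR))."""
    p_exposed = prob_from_odds(odds_ratio * odds(p_reference))
    return p_exposed / p_reference


# From the text: 44 of 55 procedures failed, so 11 of 55 (20%) passed.
print(round(odds(11 / 55), 2))

# Hypothetical reference pass probabilities, to show how the ratio depends on p0.
for p0 in (0.01, 0.05):
    print(p0, round(implied_risk_ratio(7.5, p0), 2))
```

The implied probability ratio (about 7.04 at p0 = 0.01 and 5.66 at p0 = 0.05) approaches the odds ratio only when passing is rare in the reference group. For this reason, an odds ratio of 7.5 should not be read as a 7.5-fold difference in the probability of passing unless passing is rare among inexperienced residents.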

Discussion

This research synthesis shows that both procedure experience and year of training were positively associated with baseline competence across multiple clinical procedures. Previous studies failed to show any significant associations between procedure experience and performance.10–15 Combining data from multiple studies increased the power to detect these associations. Despite these associations, only 10% of assessed procedures met the MPS at baseline, with low passing rates even among the most experienced and senior trainees. For example, residents who had performed 7 to 10 procedures on actual patients were the most likely to meet the MPS on the simulated baseline assessment. However, their overall procedure performance was still poor, with a mean of 56% of checklist items correct (SD = 24.6), and 80% (44 of 55) of the procedures performed by residents with this level of experience failed to reach the MPS at baseline assessment. Given these findings, we believe that procedural experience should not be used as a surrogate for competence in medical trainees.

Few studies have linked an experience threshold to procedure performance outcomes. For instance, 1 study demonstrated that after an educational intervention, residents inserting CVCs increased their success rate to approximately 90% after 10 ultrasound-guided catheter insertions (compared with approximately 80% after 1 to 3 insertions), and their complication rate fell to approximately 8% after only 4 catheter insertions and remained at that level (compared with approximately 13% after 1 to 3 insertions).25 Another study of CVC insertion suggested that up to 50 procedures were needed to reduce complications from subclavian line insertions,3 while a study of colonoscopy showed that up to 80 procedures were necessary for improved patient care.7 Annual bariatric surgery volumes of more than 150 were also associated with better performance and fewer complications compared with lower-volume practice.6 These procedural numbers exceed the minimum requirements for trainees, and they raise questions about tracking procedure numbers without parallel, rigorous, competency-based assessment. For example, the American Board of Internal Medicine specifies that “to assure adequate knowledge and understanding of the common procedures in internal medicine, each resident should be an active participant” in CVC procedures at least 5 times,26 while the American College of Surgeons recognizes CVC insertion as an essential skill yet does not formally recommend a specific number needed to achieve competency.27

Assumptions of competence based on PGY are also problematic. Our findings show that PGY-1 residents were less likely to meet the MPS than residents at PGY-2 and above, but there were no observable differences between PGY-2 and PGY-3 passing rates, while skills seemed to decline slightly in the PGY-4 group. However, these findings likely reflect significant differences in the number of procedures performed across PGYs, because the regression analysis did not confirm the association with PGY after controlling for covariates (including the number of procedures performed).

The ACGME has recognized the limitations of linking procedure numbers or time in training to competency.16 Rigorous simulation-based education is a natural fit with the ACGME milestone framework because it provides standardization, deliberate practice, feedback, translation of outcomes to improved patient care, and reliable formative evaluation until a mastery standard is met. For instance, gastroenterology fellows who practiced on a colonoscopy simulator rather than patients performed as well during actual patient colonoscopies as fellows who had already performed 80 procedures on actual patients.7 Simulation-based mastery learning has been used to improve skills in diverse clinical areas, including end-of-life discussions,28 cardiac auscultation,29 management of pediatric status epilepticus,30 advanced cardiac life support,18 and laparoscopic surgery,31 and has also been shown to reduce patient complications,12,14,32–34 decrease length of hospital stay,33 and reduce hospital costs.35,36

This study has several limitations. It was performed at a limited number of centers in 1 city, and the findings may not generalize to other locations. Because local experts developed the checklists and minimum standards, trainees new to our system may have performed poorly because they had previously trained in a different environment. Finally, prior experience was self-reported and may be subject to recall bias. However, residents were required to keep procedure logs and were able to review these while answering the procedural experience questions.

Conclusion

Our study revealed that experience and year of training were positively associated with procedure performance. However, overall performance was still poor even in the most experienced residents.

References

- 1. How many procedures makes competency? Hosp Peer Rev. 2014;39(11):121–123.

- 2. Accreditation Council for Graduate Medical Education. ACGME program requirements for graduate medical education in general surgery. http://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/440_general_surgery_2016.pdf?ver=2016-03-23-113938-870. Accessed January 12, 2017.

- 3. McGee DC, Gould MK. Preventing complications of central venous catheterization. N Engl J Med. 2003;348(12):1123–1133.

- 4. Gordon CE, Feller-Kopman D, Balk EM, et al. Pneumothorax following thoracentesis: a systematic review and meta-analysis. Arch Intern Med. 2010;170(4):332–339.

- 5. Nguyen NT, Paya M, Stevens CM, et al. The relationship between hospital volume and outcome in bariatric surgery at academic medical centers. Ann Surg. 2004;240(4):586–593; discussion 593–594.

- 6. Birkmeyer JD, Finks JF, O'Reilly A, et al. Surgical skill and complication rates after bariatric surgery. N Engl J Med. 2013;369(15):1434–1442.

- 7. Cohen J, Cohen SA, Vora KC, et al. Multicenter, randomized, controlled trial of virtual-reality simulator training in acquisition of competency in colonoscopy. Gastrointest Endosc. 2006;64(3):361–368.

- 8. Choudhry NK, Fletcher RH, Soumerai SB. Systematic review: the relationship between clinical experience and quality of health care. Ann Intern Med. 2005;142(4):260–273.

- 9. Bell RH Jr. Why Johnny cannot operate. Surgery. 2009;146(4):533–542.

- 10. Barsuk JH, Ahya SN, Cohen ER, et al. Mastery learning of temporary hemodialysis catheter insertion by nephrology fellows using simulation technology and deliberate practice. Am J Kidney Dis. 2009;54(1):70–76.

- 11. Barsuk JH, Cohen ER, Vozenilek JA, et al. Simulation-based education with mastery learning improves paracentesis skills. J Grad Med Educ. 2012;4(1):23–27.

- 12. Barsuk JH, Cohen ER, Potts S, et al. Dissemination of a simulation-based mastery learning intervention reduces central line-associated bloodstream infections. BMJ Qual Saf. 2014;23(9):749–756.

- 13. Barsuk JH, McGaghie WC, Cohen ER, et al. Use of simulation-based mastery learning to improve the quality of central venous catheter placement in a medical intensive care unit. J Hosp Med. 2009;4(7):397–403.

- 14. Barsuk JH, McGaghie WC, Cohen ER, et al. Simulation-based mastery learning reduces complications during central venous catheter insertion in a medical intensive care unit. Crit Care Med. 2009;37(10):2697–2701.

- 15. Wayne DB, Barsuk JH, O'Leary KJ, et al. Mastery learning of thoracentesis skills by internal medicine residents using simulation technology and deliberate practice. J Hosp Med. 2008;3(1):48–54.

- 16. Accreditation Council for Graduate Medical Education. Milestones. http://www.acgme.org/acgmeweb/tabid/430/ProgramandInstitutionalAccreditation/NextAccreditationSystem/Milestones.aspx. Accessed January 12, 2017.

- 17. Barsuk JH, Cohen ER, Caprio T, et al. Simulation-based education with mastery learning improves residents' lumbar puncture skills. Neurology. 2012;79(2):132–137.

- 18. Wayne DB, Butter J, Siddall VJ, et al. Mastery learning of advanced cardiac life support skills by internal medicine residents using simulation technology and deliberate practice. J Gen Intern Med. 2006;21(3):251–256.

- 19. Mattar SG, Alseidi AA, Jones DB, et al. General surgery residency inadequately prepares trainees for fellowship: results of a survey of fellowship program directors. Ann Surg. 2013;258(3):440–449.

- 20. McQuillan RF, Clark E, Zahirieh A, et al. Performance of temporary hemodialysis catheter insertion by nephrology fellows and attending nephrologists. Clin J Am Soc Nephrol. 2015;10(10):1767–1772.

- 21. Barsuk JH, Cohen ER, Nguyen D, et al. Attending physician adherence to a 29 component central venous catheter bundle checklist during simulated procedures. Crit Care Med. 2016;44(10):1871–1881.

- 22. McGaghie WC, Issenberg SB, Cohen ER, et al. Medical education featuring mastery learning with deliberate practice can lead to better health for individuals and populations. Acad Med. 2011;86(11):e8–e9.

- 23. Barsuk JH, Cohen ER, Williams MV, et al. The effect of simulation-based mastery learning on thoracentesis referral patterns. J Hosp Med. 2016;11(11):792–795.

- 24. Cohen ER, Barsuk JH, McGaghie WC, et al. Raising the bar: reassessing standards for procedural competence. Teach Learn Med. 2013;25(1):6–9.

- 25. Maizel J, Guyomarc'h L, Henon P, et al. Residents learning ultrasound-guided catheterization are not sufficiently skilled to use landmarks. Crit Care. 2014;18(1):R36.

- 26. American Board of Internal Medicine. Policies and procedures for certification. http://www.abim.org/pdf/publications/Policies-and-Procedures-Certification.pdf. Accessed January 12, 2017.

- 27. Surgical Council on Residency Education. Curriculum outline for general surgery residency 2014–2015. http://www.absurgery.org/xfer/curriculumoutline2014-15.pdf. Accessed January 12, 2017.

- 28. Cohen ER, Barsuk JH, Moazed F, et al. Making July safer: simulation-based mastery learning during intern boot camp. Acad Med. 2013;88(2):233–239.

- 29. Butter J, McGaghie WC, Cohen ER, et al. Simulation-based mastery learning improves cardiac auscultation skills in medical students. J Gen Intern Med. 2010;25(8):780–785.

- 30. Malakooti MR, McBride ME, Mobley B, et al. Mastery of status epilepticus management via simulation-based learning for pediatrics residents. J Grad Med Educ. 2015;7(2):181–186.

- 31. Zendejas B, Cook DA, Hernandez-Irizarry R, et al. Mastery learning simulation-based curriculum for laparoscopic TEP inguinal hernia repair. J Surg Educ. 2012;69(2):208–214.

- 32. Zendejas B, Cook DA, Bingener J, et al. Simulation-based mastery learning improves patient outcomes in laparoscopic inguinal hernia repair: a randomized controlled trial. Ann Surg. 2011;254(3):502–509; discussion 509–511.

- 33. Barsuk JH, Cohen ER, Feinglass J, et al. Clinical outcomes after bedside and interventional radiology paracentesis procedures. Am J Med. 2013;126(4):349–356.

- 34. Barsuk JH, Cohen ER, Feinglass J, et al. Use of simulation-based education to reduce catheter-related bloodstream infections. Arch Intern Med. 2009;169(15):1420–1423.

- 35. Barsuk JH, Cohen ER, Feinglass J, et al. Cost savings of performing paracentesis procedures at the bedside after simulation-based education. Simul Healthc. 2014;9(5):312–318.

- 36. Cohen ER, Feinglass J, Barsuk JH, et al. Cost savings from reduced catheter-related bloodstream infection after simulation-based education for residents in a medical intensive care unit. Simul Healthc. 2010;5(2):98–102.