Abstract

Timely and accurate diagnosis is foundational to good clinical practice and an essential first step to achieving optimal patient outcomes. However, a recent Institute of Medicine report concluded that most of us will experience at least one diagnostic error in our lifetime. The report argues for efforts to improve the reliability of the diagnostic process through better measurement of diagnostic performance. The diagnostic process is a dynamic team-based activity that involves uncertainty, plays out over time, and requires effective communication and collaboration among multiple clinicians, diagnostic services, and the patient. Thus, it poses special challenges for measurement. In this paper, we discuss how efforts to develop measures of diagnostic performance could move forward at a time when the scientific foundation needed to inform measurement is still evolving. We highlight challenges and opportunities for developing potential measures of “diagnostic safety” related to clinical diagnostic errors and associated preventable diagnostic harm. In doing so, we propose a starter set of measurement concepts for initial consideration that seem reasonably related to diagnostic safety and call for these to be studied and further refined. This would enable safe diagnosis to become an organizational priority and facilitate quality improvement. Health-care systems should consider measurement and evaluation of diagnostic performance as essential to timely and accurate diagnosis and to the reduction of preventable diagnostic harm.

Key Words: diagnostic errors, safety culture, quality measurement

Timely and accurate diagnosis is foundational to good clinical practice and essential to achieving optimal patient outcomes.1 We have learned that diagnostic errors are common,2–6 affecting approximately 1 in 20 adults each year in the United States.7 Yet, efforts to monitor and improve diagnostic performance are rarely, if ever, part of initiatives to improve quality and safety.8 Diagnosis is a complex, largely cognitive process that is more difficult to evaluate and measure than many other parts of the patient safety agenda, such as falls, wrong-site surgery, nosocomial infections, and medication errors. The dearth of valid measurement approaches is a major barrier in efforts to study and ultimately improve diagnosis.9,10

A recent Institute of Medicine report “Improving Diagnosis in Health Care” concluded that most of us will experience at least one diagnostic error in our lifetime and argued for efforts to improve the diagnostic process through better measurement of diagnostic performance.11 It reiterated that the diagnostic process is a dynamic, team-based activity that involves uncertainty, plays out over time, and requires effective communication and collaboration among multiple providers, diagnostic services, and the patient. Measurement as a necessary first step in quality improvement is the cornerstone for many policy initiatives focused on improving quality and safety.12,13 The proliferation of health-care performance measures has been remarkable, with the National Quality Forum currently endorsing more than 600 measures in the United States.14 Health-care organizations (HCOs) commit substantial resources to comply with required measures from the Joint Commission and the Centers for Medicare and Medicaid Services, and many also participate in voluntary measure reporting sponsored by advocacy organizations such as the Leapfrog Group. Given the abundance of performance measures already in use, it is surprising how few are focused on diagnosis.15

Multitask theory, proposed by economists Holmstrom and Milgrom, posits that when incentives put in place by an organization omit key dimensions of performance, those dimensions will receive less attention; in effect, the organization risks getting only what is measured.16 Thus, it would not be surprising if, in the absence of specific process or outcome measures related to diagnosis, the HCO and its members focused their attention elsewhere. All HCOs are resource constrained, and by necessity, they will direct their attention first to the measures specifically required by accrediting agencies and payers.

The recent IOM report11 creates a propitious moment to rectify this imbalance and encourages the development of measures related to diagnosis. If measurement is accepted as an effective and essential component of performance improvement, and the lack of measurement as itself deleterious, then the IOM report presents both the opportunity and the impetus to address this dilemma. In this article, we discuss how such an initiative can move forward by balancing the need for measures and measurement with the reality that the scientific knowledge needed to inform this process is still evolving. We focus on future measures to improve diagnosis and highlight opportunities and challenges to encourage further discussion and policymaking in this area.

The Challenges of Measuring the Diagnostic Process

Despite an identified need and abundant enthusiasm to act, there is little consensus and little evidence to guide the selection of appropriate performance measures. Measurement begins with a definition, and the IOM defined diagnostic error as the “failure to establish an accurate and timely explanation of the patient’s health problem(s) or communicate that explanation to the patient.” This definition provides 3 key concepts that need to be operationalized: (1) accurately identifying the explanation (or diagnosis) of the patient’s problem, (2) the timely provision of this explanation, and (3) effective communication of the explanation. Although there are well-established tools for assessing communication in health care, none of them focuses primarily on discussions around diagnosis. Moreover, both the “accuracy” and the “timeliness” elements of the definition are problematic from a research perspective:

Accuracy. Inaccuracy is sometimes obvious (a patient diagnosed with indigestion who is really having a myocardial infarction), but in many other circumstances, accuracy is much harder to define. Is it acceptable to say “acute coronary syndrome,” or does the label have to indicate actual infarction, or be even more specific, indicating the location and whether the infarction is transmural? Mental models of what is or is not an accurate diagnosis can differ even among clinicians in the same specialty.17,18 Some of these problems can be addressed by using predefined operational constructs or a consensus among experts, but given the uncertainties and evolving nature of diagnosis, either approach would be challenging.

Timeliness. Although we may all agree that asthma diagnosis should not require 7 visits over 3 years19 or that spinal cord compression from malignancy should probably be diagnosed within weeks rather than months,20 there are no widely accepted standards for how long diagnosis should take for any given condition. Furthermore, optimal diagnostic performance is not always about speed; sometimes, the best approach is to defer diagnosis or testing to some later time or to not make a definitive diagnosis until more information is available or if symptoms persist or evolve.

Experts have yet to define how to objectively identify clinicians or teams who excel in diagnosis and those who do not. One might argue that the best diagnosticians should be defined not only by their accuracy and timeliness but also by their efficiency (e.g., minimizing resource expenditure and limiting the patient’s exposure to risk).21 In this regard, Donabedian states, “In my opinion, the essence of quality or, in other words, ‘clinical judgment,’ is in the choice of the most appropriate strategy for the management of any given situation. The balance of expected benefits, risks, and monetary costs, as evaluated jointly by the physician and his patient, is the criterion for selecting the optimal strategy.”22 Thus, some, including the authors of this paper, would argue that measurement of the diagnostic process should be situated within the broader evaluation of value-based care that accounts for quality, risks, and costs, rather than within an overly simplistic focus on achieving the correct diagnosis in the shortest amount of time.23

Nevertheless, many would choose to focus on diagnostic errors as a key window into the diagnostic process, but this represents another major challenge. The instruments that organizations rely on to detect other patient safety concerns are poorly suited to detecting diagnostic error or fail to detect it altogether.24 Newer approaches are needed that improve reporting by patients, physicians, and other clinicians and that take advantage of information stored in electronic medical records to detect errors or patients at risk for error.25,26 Autopsy reports, discrepancies between preoperative and postoperative surgical diagnoses, escalations of care, and selected chart reviews are other options for detecting missed diagnoses or preventable diagnostic delays.

Even when diagnostic errors are identified, learning from them can be challenging. Diagnosis is influenced by complex dynamics involving system-, patient-, and team-related factors as well as individual cognitive factors. While identifying these factors may be feasible in some cases,26 dissecting their root causes requires substantial inference, and looking retrospectively introduces a risk of bias. Although factors can be suspected as “contributing,” it is hard to identify causal links.27 Discerning the effect of individual heuristics, biases, overconfidence, affective influences, distractions, and time constraints, as well as key systems, environmental, and team factors, is often not possible. For measurement to be effective and actionable, analysis needs to reflect real-world practice, in which systems, team members, and patients themselves inevitably influence the clinicians’ thought processes.28 For the many diagnoses that are made by teams, the fragmented and complex teams that exist in health care today create dual problems of attribution and ownership. Thus, it might be difficult to determine who should receive the feedback that results from measurement and how to deliver useful and actionable feedback to a “team.”

Finally, there can be differences regarding whether it is more important to measure success or failure in diagnosis. Some experts29 have argued that “safety is better measured by how everyday work goes well than by how it fails.” This represents a paradigm change from the current dominant focus on errors that would substantially change how we would design a measurement system of “diagnostic safety.”

Suggestions for Moving Forward

One of the first steps toward useful measures of diagnostic safety is to understand and use appropriate definitions of diagnostic error. In addition to the IOM definition, 3 other definitions of diagnostic error are in active use, and each may be appropriate for research in particular circumstances. Graber et al define it as a diagnosis that was unintentionally delayed (sufficient information was available earlier), wrong (another diagnosis was made before the correct one), or missed (no diagnosis was ever made), as judged from the eventual appreciation of more definitive information.30 Schiff et al define it as any mistake or failure in the diagnostic process leading to a misdiagnosis, missed diagnosis, or delayed diagnosis.31 Lastly, Singh defines it as missed opportunities to make a correct or timely diagnosis based on the available evidence, regardless of patient harm,32 and calls for unequivocal evidence that some critical finding or abnormality was missed or not investigated when it should have been.26 These definitions convey complementary concepts that help clarify the “failure” referred to in the IOM definition and might be useful in operationalizing the IOM definition in future work.

Assuming sufficient motivation exists to address and improve diagnostic safety, what measures should be considered? Under Donabedian’s framework, measures that focus on structures and processes can and should be considered, along with their downstream diagnosis-related outcomes where possible, bearing in mind Donabedian’s admonition that none of these aspects of care are worth measuring without convincing demonstration of the causal associations between them.33 Although this framework provides an appropriate and logical starting point for developing measures of diagnosis, it bears repeating that candidate measures are only as good as the evidence supporting causal links between specific structures, processes, and outcomes; a substantial amount of research is still needed in this area.

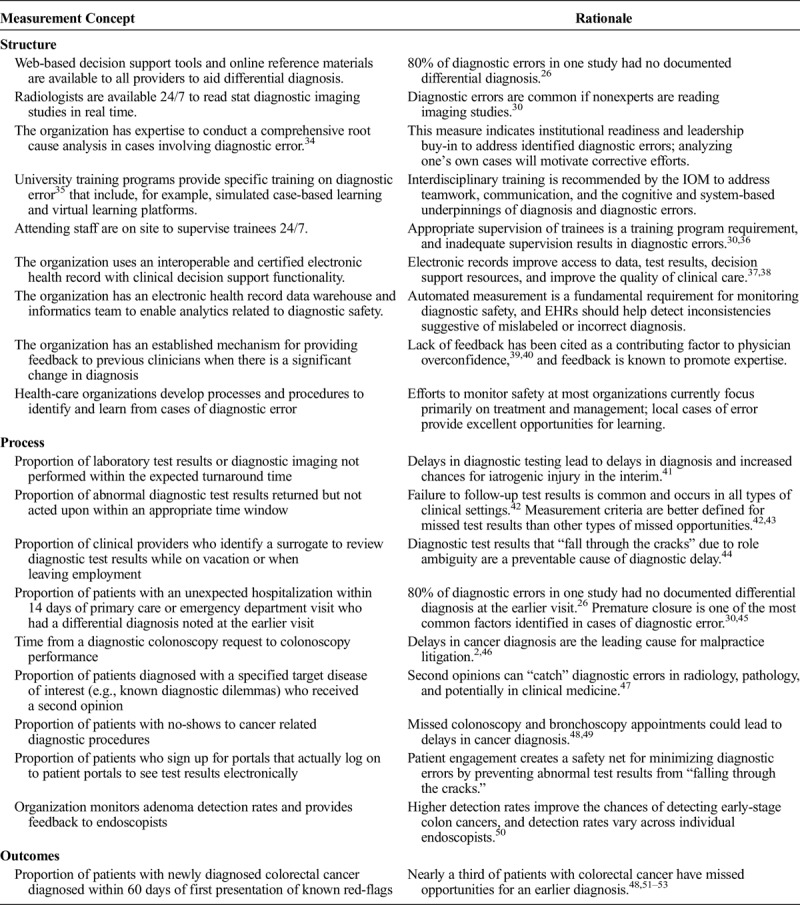

Table 1 describes a set of candidate measurement concepts drawn from recent studies that focus on diagnostic error. This is in no way a complete list but rather a conversation starter based on emerging evidence on risks related to diagnostic safety (versus patient safety in general). For example, many studies implicate lack of timely follow-up of diagnostic test results in missed diagnoses, but only a handful of HCOs in the United States are tracking follow-up of abnormal test results.24,41–43 Although these proposed measurement concepts are all reasonable candidates for consideration, developing an actionable set of measures would ideally require a validation process that samples a broader range of informed opinion and experience, in keeping with the emerging standards for the development of quality measures. Even if a particular measure is endorsed broadly, it should be considered a hypothesis to be tested. Its beneficial effect on patient outcomes should be empirically confirmed before it can be considered a standard to which organizations are held accountable, an essential step that is rarely considered in the development of performance measure sets.

TABLE 1.

Candidate Set of Measurement Concepts to Consider for Evaluation of Diagnostic Safety

A real challenge to implementing performance measurement in diagnosis is that the harms might outweigh the benefits. Launching more measures, especially measures lacking robust evidence, tends to alienate front-line caregivers and HCOs already overburdened with other performance measures.54 Recently, experts have called for a moratorium on new measures, citing concerns that flawed measures will be used for public reporting and value-based purchasing.12,15 Turning again to the theory of performance measurement, Holmstrom and Milgrom observe that “the desirability of providing incentives for any one activity decreases with the difficulty of measuring performance in any other activities that make competing demands on the [provider]’s time and attention.”16 A concern that follows from this observation is that unintended consequences of performance measures will inevitably emerge and undermine efforts to improve diagnostic safety. One could easily imagine, for example, that measures of underdiagnosis might lead to greater use of unnecessary tests.

Summary and Recommendations

Measurement, benchmarking, and transparency of performance are playing a major role in improving health care. Current performance measures pertain almost exclusively to treatment, and a recent IOM report has strongly endorsed broadening this focus to include diagnosis. We cannot make progress toward this goal without advancing the science of measurement around diagnostic performance. Compared with most performance measures, diagnostic safety may be particularly salient to physicians and their teams, given how central diagnosis is to our professional identity and the degree of control that physicians exert over the diagnostic process.

However, the IOM also recognizes the importance of system and organizational factors in improving diagnosis. For example, improved communication and care coordination and large-scale initiatives to measure and improve care delivery (such as implementation of accountable care organizations) are important targets. The United Kingdom has already embraced measurement in its large initiative focused on improving the timeliness of cancer diagnosis,55 and the United States could follow this lead as a first step to measure diagnostic safety.

To create a foundation for further discussion on evidence for measures for diagnostic safety, 6 questions should be considered:

What are the appropriate time intervals to diagnose specific conditions of interest that are frequently associated with diagnostic error?

How can we measure competency in clinical reasoning in real-world practice settings?

What measurable physician or team behaviors characterize ideal versus suboptimal diagnostic performance?

What system properties translate into safe diagnostic performance, and how can we measure those?

How do we leverage information technology, including electronic health records (EHRs), to help measure and improve diagnostic safety?

How do we leverage patient experiences and reports to measure and improve diagnostic safety?

Pioneering organizations can begin by identifying “missed opportunities in diagnosis” or “diagnostic safety concerns.”32 For example, both Kaiser Permanente and the Department of Veterans Affairs are involved in initiatives to improve follow-up of abnormal test results.24,56 The case for measuring diagnostic outcomes in certain high-risk areas such as cancer diagnosis has also become clear.57 Nearly a third of patients with colorectal cancer have missed opportunities for an earlier diagnosis.48,53 Thus, outcome measures could be considered, such as the ratio of early-stage to late-stage colorectal cancers diagnosed within the previous year and the proportion of patients with newly diagnosed colorectal cancer who were diagnosed within 60 days of first presentation of known red flags.51,52
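To make the two colorectal cancer outcome measures concrete, the following is a minimal Python sketch of how they might be computed once cases have been extracted. It assumes a hypothetical record layout (`CrcCase` with a diagnosis date, a numeric stage, and the date of the first documented red flag); the field and function names, and the grouping of stages I–II as “early” and III–IV as “late,” are illustrative assumptions rather than definitions from the cited studies.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class CrcCase:
    """Hypothetical record for one newly diagnosed colorectal cancer case."""
    diagnosis_date: date
    stage: int                           # 1-4; I-II treated as "early", III-IV as "late" (assumption)
    first_red_flag_date: Optional[date]  # first documented red flag, or None if none recorded


def early_to_late_ratio(cases: List[CrcCase], start: date, end: date) -> Optional[float]:
    """Ratio of early-stage to late-stage cases diagnosed between start and end (inclusive)."""
    in_window = [c for c in cases if start <= c.diagnosis_date <= end]
    early = sum(1 for c in in_window if c.stage <= 2)
    late = sum(1 for c in in_window if c.stage >= 3)
    return early / late if late else None  # undefined when no late-stage cases occurred


def proportion_diagnosed_within(cases: List[CrcCase], max_days: int = 60) -> Optional[float]:
    """Share of cases with a documented red flag that were diagnosed within max_days of it."""
    eligible = [c for c in cases if c.first_red_flag_date is not None]
    if not eligible:
        return None  # measure undefined without any red-flag presentations
    timely = sum(
        1 for c in eligible
        if (c.diagnosis_date - c.first_red_flag_date).days <= max_days
    )
    return timely / len(eligible)


if __name__ == "__main__":
    # Made-up illustrative cases, not real data.
    cases = [
        CrcCase(date(2016, 3, 1), 1, date(2016, 1, 15)),  # diagnosed 46 days after red flag
        CrcCase(date(2016, 5, 10), 3, date(2016, 1, 5)),  # 126 days after red flag
        CrcCase(date(2016, 8, 20), 2, None),              # no red flag documented
        CrcCase(date(2016, 9, 1), 4, date(2016, 8, 1)),   # 31 days after red flag
    ]
    print(early_to_late_ratio(cases, date(2016, 1, 1), date(2016, 12, 31)))  # 1.0
    print(proportion_diagnosed_within(cases))  # 2 of 3 eligible cases, about 0.667
```

Returning `None` rather than zero when a denominator is empty is a deliberate choice in this sketch: a small practice with no late-stage cases in a given year has an undefined ratio, not a perfect one.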

HCOs should also consider using their EHRs to enable diagnostic safety measurement. Although most HCOs are now using EHRs, very few are doing any analytics for patient safety improvement.58 In addition to using digital data to identify patients with potential diagnostic process failures, the EHR could be leveraged to recognize incorrect diagnoses and internal inconsistencies suggestive of mislabeled diagnoses (e.g., a patient labeled with “coronary artery disease” despite a normal coronary angiogram, or a patient labeled with “COPD” despite normal lung function tests). This process would require HCOs to better capture and use structured clinical data in an electronic format for safety improvement, for which the time is now ripe.59
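The internal-inconsistency checks described above could begin as simple rules over structured EHR data. The Python sketch below is hypothetical: the `Patient` fields, the exact problem-list strings, and the use of a post-bronchodilator FEV1/FVC ratio of at least 0.70 (the common fixed-ratio criterion for airflow obstruction) as a stand-in for “normal lung function” are simplifying assumptions for illustration, not a validated algorithm. A real implementation would need coded terminologies and clinical review of every flag.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

# Simplified spirometric cut-off (assumption): a post-bronchodilator FEV1/FVC ratio
# at or above 0.70 is treated here as "no airflow obstruction".
FEV1_FVC_OBSTRUCTION_CUTOFF = 0.70


@dataclass
class Patient:
    """Hypothetical, pre-extracted view of structured EHR data for one patient."""
    patient_id: str
    problem_list: Set[str] = field(default_factory=set)  # diagnosis labels as recorded
    angiogram_normal: Optional[bool] = None  # None = no coronary angiogram on file
    fev1_fvc_ratio: Optional[float] = None   # None = no spirometry on file


def find_label_inconsistencies(p: Patient) -> List[str]:
    """Return reasons to review a possibly mislabeled diagnosis; empty list if none."""
    flags = []
    # Rule 1: CAD label contradicted by a normal coronary angiogram.
    if "coronary artery disease" in p.problem_list and p.angiogram_normal is True:
        flags.append("CAD on problem list despite normal coronary angiogram")
    # Rule 2: COPD label contradicted by spirometry without obstruction.
    if (
        "COPD" in p.problem_list
        and p.fev1_fvc_ratio is not None
        and p.fev1_fvc_ratio >= FEV1_FVC_OBSTRUCTION_CUTOFF
    ):
        flags.append("COPD on problem list despite normal spirometry")
    return flags
```

For example, `find_label_inconsistencies(Patient("A", {"COPD"}, fev1_fvc_ratio=0.82))` returns `["COPD on problem list despite normal spirometry"]`, whereas a patient with no spirometry on file produces no flag. Each flag is meant as a prompt for chart review, not as a confirmed diagnostic error.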

Additionally, in any effort to measure underdiagnosis, it is important that attention also be paid to overdiagnosis,60 acknowledging that overdiagnosis has its own measurement-related conceptual challenges.61 We should learn from the mistakes of performance measurement in the treatment realm, where a single-minded focus on undertreatment in highly monitored areas of practice has led to harmful instances of overtreatment.62 We should also consider how perspectives from both patients and their care teams (physicians and other team members) can help develop novel measurement approaches that involve asking them directly about the diagnostic process and their roles. This approach is consistent with the fact that diagnosis is a “team sport” in which patients play a critical role.63

Some experts caution against placing too much emphasis on measurement to guide decisions because of unknown and unknowable data.64 Nevertheless, evidence suggests it is now time to address measurement of diagnostic safety, while striking a balance that avoids both underdiagnosis and overdiagnosis. We propose a starter set of measurement concepts for initial consideration that seem reasonably related to diagnostic quality and safety and call for these to be studied and further refined. This would enable safe diagnosis to become an organizational priority and facilitate quality improvement. Meanwhile, researchers should build the evidence base needed for more rigorous measurement of structure and process elements that are connected to the real clinical outcomes of interest: more timely and accurate diagnosis and less preventable diagnostic harm.

Footnotes

H.S. is supported by the VA Health Services Research and Development Service (CRE 12-033; Presidential Early Career Award for Scientists and Engineers USA 14-274), the VA National Center for Patient Safety and the Agency for Healthcare Research and Quality (R01HS022087), and the Houston VA HSR&D Center for Innovations in Quality, Effectiveness and Safety (CIN 13-413) and has received honoraria from various health care organizations for speaking and/or consulting on ambulatory patient safety improvement activities. H.S. had full access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

The funding sources had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; and preparation, review, or approval of the manuscript.

This article is not under consideration for publication elsewhere. The authors have no conflicts of interest to disclose and declare: no support from any organization for the submitted work; no financial relationships with any organizations that might have an interest in the submitted work in the previous three years; no other relationships or activities that could appear to have influenced the submitted work.

Each author meets all of the following authorship requirements: substantial contributions to the conception or design of the work; or the acquisition, analysis, or interpretation of data for the work; drafting the work or revising it critically for important intellectual content; final approval of the version to be published; agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

The views expressed in this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs or any other funding agency.

REFERENCES

1. Singh H, Graber ML. Improving diagnosis in health care—the next imperative for patient safety. N Engl J Med. 2015;373:2493–2495.

2. Bishop TF, Ryan AM, Casalino LP. Paid malpractice claims for adverse events in inpatient and outpatient settings. JAMA. 2011;305:2427–2431.

3. Chandra A, Nundy S, Seabury SA. The growth of physician medical malpractice payments: evidence from the National Practitioner Data Bank. Health Aff (Millwood). 2005. Suppl Web Exclusives:W5-240–W5-249.

4. Gandhi TK, Kachalia A, Thomas EJ, et al. Missed and delayed diagnoses in the ambulatory setting: a study of closed malpractice claims. Ann Intern Med. 2006;145:488–496.

5. Saber Tehrani AS, Lee H, Mathews SC, et al. 25-Year summary of US malpractice claims for diagnostic errors 1986–2010: an analysis from the National Practitioner Data Bank. BMJ Qual Saf. 2013;22:672–680.

6. Schiff GD, Puopolo AL, Huben-Kearney A, et al. Primary care closed claims experience of Massachusetts malpractice insurers. JAMA Intern Med. 2013;173:2063–2068.

7. Singh H, Meyer AN, Thomas EJ. The frequency of diagnostic errors in outpatient care: estimations from three large observational studies involving US adult populations. BMJ Qual Saf. 2014;23:727–731.

8. Graber ML, Wachter RM, Cassel CK. Bringing diagnosis into the quality and safety equations. JAMA. 2012;308:1211–1212.

9. El-Kareh R. Making clinical diagnoses: how measureable is the process? The National Quality Measures Clearinghouse™ (NQMC) [serial online] 2014. Available at: Agency for Healthcare Research and Quality (AHRQ). Accessed June 6, 2016.

10. McGlynn EA, McDonald KM, Cassel CK. Measurement is essential for improving diagnosis and reducing diagnostic error: a report from the Institute of Medicine. JAMA. 2015;314:2501–2502.

11. Improving diagnosis in health care. National Academies of Sciences Engineering and Medicine [serial online] 2015. Available at: The National Academies Press. Accessed June 14, 2016.

12. Meyer GS, Nelson EC, Pryor DB, et al. More quality measures versus measuring what matters: a call for balance and parsimony. BMJ Qual Saf. 2012;21:964–968.

13. Jha A, Pronovost P. Toward a safer health care system: the critical need to improve measurement. JAMA. 2016;315:1831–1832.

14. National Quality Forum. National Quality Forum [serial online] 2016.

15. Thomas EJ, Classen DC. Patient safety: let's measure what matters. Ann Intern Med. 2014;160:642–643.

16. Holmstrom B, Milgrom P. Multitask principal-agent analyses: incentive contracts, asset ownership, and job design. J Law Econ Organ. 1991;7:24–52.

17. Zwaan L, de Bruijne M, Wagner C, et al. Patient record review of the incidence, consequences, and causes of diagnostic adverse events. Arch Intern Med. 2010;170:1015–1021.

18. Singh H, Giardina TD, Forjuoh SN, et al. Electronic health record-based surveillance of diagnostic errors in primary care. BMJ Qual Saf. 2012;21:93–100.

19. Charlton I, Jones K, Bain J. Delay in diagnosis of childhood asthma and its influence on respiratory consultation rates. Arch Dis Child. 1991;66:633–635.

20. Levack P, Graham J, Collie D, et al. Don't wait for a sensory level–listen to the symptoms: a prospective audit of the delays in diagnosis of malignant cord compression. Clin Oncol (R Coll Radiol). 2002;14:472–480.

21. Singh H. Diagnostic errors: moving beyond 'no respect' and getting ready for prime time. BMJ Qual Saf. 2013;22:789–792.

22. Donabedian A. The quality of medical care. Science. 1978;200:856–864.

23. Hofer TP, Kerr EA, Hayward RA. What is an error? Eff Clin Pract. 2000;3:261–269.

24. Graber ML, Trowbridge RL, Myers JS, et al. The next organizational challenge: finding and addressing diagnostic error. Jt Comm J Qual Patient Saf. 2014;40:102–110.

25. Danforth KN, Smith AE, Loo RK, et al. Electronic clinical surveillance to improve outpatient care: diverse applications within an integrated delivery system. EGEMS (Wash DC). 2014;2:1056.

26. Singh H, Giardina TD, Meyer AN, et al. Types and origins of diagnostic errors in primary care settings. JAMA Intern Med. 2013;173:418–425.

27. Graber ML, Kissam S, Payne VL, et al. Cognitive interventions to reduce diagnostic error: a narrative review. BMJ Qual Saf. 2012;21:535–557.

28. Henriksen K, Brady J. The pursuit of better diagnostic performance: a human factors perspective. BMJ Qual Saf. 2013;22:ii1–ii5.

29. Braithwaite J, Wears RL, Hollnagel E. Resilient health care: turning patient safety on its head. Int J Qual Health Care. 2015;27:418–420.

30. Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165:1493–1499.

31. Schiff GD, Hasan O, Kim S, et al. Diagnostic error in medicine: analysis of 583 physician-reported errors. Arch Intern Med. 2009;169:1881–1887.

32. Singh H. Editorial: Helping health care organizations to define diagnostic errors as missed opportunities in diagnosis. Jt Comm J Qual Patient Saf. 2014;40:99–101.

33. Donabedian A. The quality of care. How can it be assessed? JAMA. 1988;260:1743–1748.

34. Reilly JB, Myers JS, Salvador D, et al. Use of a novel, modified fishbone diagram to analyze diagnostic errors. Diagnosis. 2014;1:167–171.

35. Reilly JB, Ogdie AR, Von Feldt JM, et al. Teaching about how doctors think: a longitudinal curriculum in cognitive bias and diagnostic error for residents. BMJ Qual Saf. 2013;22:1044–1050.

36. Singh H, Thomas EJ, Petersen LA, et al. Medical errors involving trainees: a study of closed malpractice claims from 5 insurers. Arch Intern Med. 2007;167:2030–2036.

37. El-Kareh R, Hasan O, Schiff GD. Use of health information technology to reduce diagnostic errors. BMJ Qual Saf. 2013;22:ii40–ii51.

38. Liebovitz D. Next steps for electronic health records to improve the diagnostic process. Diagnosis. 2015;2:111–116.

39. Berner ES, Graber ML. Overconfidence as a cause of diagnostic error in medicine. Am J Med. 2008;121:S2–S23.

40. Meyer AN, Payne VL, Meeks DW, et al. Physicians' diagnostic accuracy, confidence, and resource requests: a vignette study. JAMA Intern Med. 2013;173:1952–1958.

41. Casalino LP, Dunham D, Chin MH, et al. Frequency of failure to inform patients of clinically significant outpatient test results. Arch Intern Med. 2009;169:1123–1129.

42. Singh H, Thomas EJ, Sittig DF, et al. Notification of abnormal lab test results in an electronic medical record: do any safety concerns remain? Am J Med. 2010;123:238–244.

43. Singh H, Thomas EJ, Mani S, et al. Timely follow-up of abnormal diagnostic imaging test results in an outpatient setting: are electronic medical records achieving their potential? Arch Intern Med. 2009;169:1578–1586.

44. Menon S, Smith MW, Sittig DF, et al. How context affects electronic health record-based test result follow-up: a mixed-methods evaluation. BMJ Open. 2014;4:e005985.

45. Balla J, Heneghan C, Goyder C, et al. Identifying early warning signs for diagnostic errors in primary care: a qualitative study. BMJ Open. 2012;2:e001539.

46. Wallace E, Lowry J, Smith SM, et al. The epidemiology of malpractice claims in primary care: a systematic review. BMJ Open. 2013;3:e002929.

47. Payne VL, Singh H, Meyer AN, et al. Patient-initiated second opinions: systematic review of characteristics and impact on diagnosis, treatment, and satisfaction. Mayo Clin Proc. 2014;89:687–696.

48. Singh H, Daci K, Petersen L, et al. Missed opportunities to initiate endoscopic evaluation for colorectal cancer diagnosis. Am J Gastroenterol. 2009;104:2543–2554.

49. Singh H, Hirani K, Kadiyala H, et al. Characteristics and predictors of missed opportunities in lung cancer diagnosis: an electronic health record-based study. J Clin Oncol. 2010;28:3307–3315.

50. Corley DA, Jensen CD, Marks AR, et al. Adenoma detection rate and risk of colorectal cancer and death. N Engl J Med. 2014;370:1298–1306.

51. Singh H, Kadiyala H, Bhagwath G, et al. Using a multifaceted approach to improve the follow-up of positive fecal occult blood test results. Am J Gastroenterol. 2009;104:942–952.

52. Singh H, Petersen LA, Daci K, et al. Reducing referral delays in colorectal cancer diagnosis: is it about how you ask? Qual Saf Health Care. 2010;19:e27.

53. Singh H, Khan R, Giardina TD, et al. Postreferral colonoscopy delays in diagnosis of colorectal cancer: a mixed-methods analysis. Qual Manag Health Care. 2012;21:252–261.

54. Cassel CK, Conway PH, Delbanco SF, et al. Getting more performance from performance measurement. N Engl J Med. 2014;371:2145–2147.

55. National Awareness and Early Diagnosis Initiative—NAEDI. Cancer Research UK [serial online] 2014. Accessed June 6, 2016.

56. Murphy DR, Laxmisan A, Reis BA, et al. Electronic health record-based triggers to detect potential delays in cancer diagnosis. BMJ Qual Saf. 2014;23:8–16.

57. Lyratzopoulos G, Vedsted P, Singh H. Understanding missed opportunities for more timely diagnosis of cancer in symptomatic patients after presentation. Br J Cancer. 2015;112:S84–S91.

58. Russo E, Sittig DF, Murphy DR, et al. Challenges in patient safety improvement research in the era of electronic health records. Healthc (Amst). 2016;4:285–290.

59. Sittig DF, Ash JS, Singh H. The SAFER guides: empowering organizations to improve the safety and effectiveness of electronic health records. Am J Manag Care. 2014;20:418–423.

60. Welch HG, Schwartz L, Woloshin S. Overdiagnosed: Making People Sick in the Pursuit of Health. 1st ed. Boston: Beacon Press; 2012.

61. Hofmann B. Diagnosing overdiagnosis: conceptual challenges and suggested solutions. Eur J Epidemiol. 2014;29:599–604.

62. Kerr EA, Lucatorto MA, Holleman R, et al. Monitoring performance for blood pressure management among patients with diabetes mellitus: too much of a good thing? Arch Intern Med. 2012;172:938–945.

63. Heyhoe J, Lawton R, Armitage G, et al. Understanding diagnostic error: looking beyond diagnostic accuracy. Diagnosis. 2015;2:205–209.

64. Berenson RA. If you can't measure performance, can you improve it? News@JAMA [serial online] 2016. Accessed July 6, 2016.