Abstract

Purpose

This study examined the impact of social–cognitive stress on sentence-level speech variability, determinism, and stability in adults who stutter (AWS) and adults who do not stutter (AWNS). We demonstrated that complementing the spatiotemporal index (STI) with recurrence quantification analysis (RQA) provides a novel approach to both assessing and interpreting speech variability in stuttering.

Method

Twenty AWS and 21 AWNS repeated sentences in audience and nonaudience conditions while their lip movements were tracked. Across-sentence variability was assessed via the STI; within-sentence determinism and stability were assessed via RQA.

Results

Compared with the AWNS, the AWS produced speech that was more variable across sentences and more deterministic and stable within sentences. Audience presence contributed to greater within-sentence determinism and stability in the AWS. A subset of AWS who were more susceptible to experiencing anxiety exhibited reduced across-sentence variability in the audience condition compared with the nonaudience condition.

Conclusions

This study extends the assessment of speech variability in AWS and AWNS into the social–cognitive domain and demonstrates that the characterization of speech within sentences using RQA is complementary to the across-sentence STI measure. AWS seem to adopt a more restrictive, less flexible speaking approach in response to social–cognitive stress, which is presumably a strategy for maintaining observably fluent speech.

Persistent developmental stuttering (herein referred to simply as stuttering) is a communication disorder thought to emerge from a complex interaction of linguistic, cognitive, motor, and/or environmental processes (Alm, 2014; Smith & Kelly, 1997; van Lieshout, Hulstijn, & Peters, 2004; Zimmermann, 1980). Peripheral speech behaviors associated with stuttering are intermittent and range in expression from part-syllable repetitions and audible/inaudible sound prolongations to inconsistencies during perceptually fluent speech (e.g., increased kinematic variability, reduced articulatory rate). Genetic predispositions may form the basis for stuttering and stuttering behaviors in at least a small subset of people who stutter (Kang et al., 2010; Raza et al., 2016); however, the factors that underlie stuttering within and across individuals are not well understood.

One approach to hypothesis-driven research in stuttering involves isolating factors that differentially affect speech production in speakers who do and do not stutter. This allows investigators to make inferences regarding the factors that play a contributory role in stuttering. Work in this area has focused primarily on the impact of linguistic complexity, using speech variability (i.e., the inconsistency in speech movements across repeated utterances) as a proxy for speech function. This work reveals that speakers who stutter tend to exhibit greater speech variability than speakers who do not stutter when their systems are stressed syntactically (Cai et al., 2011; Kleinow & Smith, 2000) and phonologically (Smith, Goffman, Sasisekaran, & Weber-Fox, 2012; Smith, Sadagopan, Walsh, & Weber-Fox, 2010). Such findings have been interpreted as evidence that the speech motor systems of speakers who stutter are more susceptible to breaking down than those of speakers who do not stutter when stressed linguistically (e.g., Kleinow & Smith, 2000; MacPherson & Smith, 2013). However, variability in behavior is a complex phenomenon that reflects both stable and unstable underlying processes (M. A. Riley & Turvey, 2002; van Lieshout & Namasivayam, 2010). The current study extends the variability paradigm into the social–cognitive domain and implements both commonly used across-sentence measures and novel within-sentence measures to assess and interpret speech variability in adults who stutter (AWS) and adults who do not stutter (AWNS).

Social–Cognitive Stress

Alm (2014) posited that interference from social cognition contributes to stuttering. Social cognition refers broadly to an individual's expectations or the expectations of others in the individual's environment regarding how that individual should act or behave (Alm, 2014). For speakers who stutter, interference from social cognition may include a desire for uninterrupted communication despite its unlikeliness or negative expectations for how the listener(s) in the speaker's environment will react to their speech. The cognitive processes (e.g., dissonance, anxiety) associated with the possibility of speech disruption or a negative listener reaction may compete with the sensorimotor resources necessary to produce fluent speech, and the speech motor systems of AWS may indeed be vulnerable to this type of interference.

Little is known about the impact of social–cognitive stress on speech variability or speech production in general. One example of social–cognitive stress is speaking in the presence of an audience. This increases communicative pressure and the potential for negative evaluation or judgment, particularly for AWS (Arenas, 2012), which potentially elicits cognitive responses such as increased (or decreased) speech awareness, attention, and/or anxiety.¹ Audience presence has been linked to increases in stuttered speech in several studies (Commodore, 1980; Steer & Johnson, 1936; Van Riper & Hull, 1955; von Krais Porter, 1939), but to the best of our knowledge its impact on speech variability has been examined in only one study. Evans (2009) measured acoustic variability in terms of vowel, phrase, and word duration; voice onset time; and formant transition duration, extent, and rate. That study did not find significant group differences between the audience and nonaudience conditions, but this may have been because (a) duration-based measures will not reflect differences in articulatory patterning and (b) measures that are based on single sound segments (i.e., voice onset time, formant transitions) will not detect spatiotemporal differences in connected speech.

There is reason to expect that social–cognitive stress (here, the presence of an audience) will result in reduced variability in AWS. AWS exhibit reductions in heart rate compared with AWNS prior to stressful speaking situations (Peters & Hulstijn, 1984; Weber & Smith, 1990) as well as increased acoustic startle response magnitude (Guitar, 2003), which is an indicator of reactivity or the ability to prepare the body for upcoming aversive stimuli. Both responses are associated with motor “freezing” (Alm, 2004), which may temporarily restrict or partially immobilize the motor system. Indeed, van Lieshout, Ben-David, Lipski, and Namasivayam (2014) demonstrated that AWS exhibit smaller upper lip movement ranges when speaking under cognitive and emotional stress. It may be that this partial immobilization of the speech articulators is a compensation mechanism for underlying difficulty or malfunction, and a reduction in variability may be a motor strategy for maintaining fluent speech under aversive stimuli (see also Namasivayam & van Lieshout, 2011). Other neurologically based or developmental impairments are associated with reduced variability and a restrictive motor system. For example, individuals with Parkinson's disease, obsessive compulsive disorder, and myelogenous leukemia and those on the autism spectrum exhibit stereotypical or overly deterministic (i.e., patterned) motor behaviors (Goldberger, 1996, 1997).

This section focused on situations during which speakers maintain perceptually fluent speech because overtly stuttered speech would undoubtedly result in increased across-sentence variability. Some have questioned the utility of the so-called fluent speech paradigm (e.g., Armson & Kalinowski, 1994; Bloodstein & Bernstein-Ratner, 2008), primarily because perceptually fluent speech samples may be contaminated by instances of stuttering that are harder to observe (Armson & Kalinowski, 1994). However, a potential advantage of this paradigm is that “tenuous fluency” (Adams & Runyan, 1981, p. 206) or “subperceptual stuttering” (Armson & Kalinowski, 1994, p. 70) can actually be measured. These subtle forms of stuttering may be unavailable to even the most trained of perceivers but measurable using sophisticated techniques and equipment. Because we are investigating perceptually fluent speech in terms of variability, it is critical now to discuss how speech variability is interpreted in the context of speech motor control and stuttering.

Interpreting Speech Variability

The most widely used metric for assessing speech variability is the spatiotemporal index (STI; Smith, Goffman, Zelaznik, Ying, & McGillem, 1995). The STI is a linear amplitude- and time-normalized metric that assesses variability across a set of utterances. Smith and colleagues (Smith et al., 1995; Smith, Johnson, McGillem, & Goffman, 2000) rationalized the value of the STI as follows: If speech is preprogrammed, then unperturbed repetitions of a single utterance produced by a typically fluent speaker should reveal consistent movement patterns with high stability and thus a low STI value. The STI has been used to show that children exhibit greater speech variability than adults (Maner, Smith, & Grayson, 2000; Smith & Goffman, 1998; Smith & Zelaznik, 2004), that the speech systems of people who stutter are more vulnerable to system stress than those of people who do not stutter (Cai et al., 2011; Jackson, Tiede, & Whalen, 2013; Kleinow & Smith, 2000; MacPherson & Smith, 2013; Smith et al., 2010, 2012; Smith & Kleinow, 2000), and that individuals with Parkinson's disease are more variable in speech output than those without Parkinson's disease (Anderson, Lowit, & Howell, 2008; Kleinow, Smith, & Ramig, 2001).

There are three inherent limitations related to the interpretation of the STI in the context of speech and stuttering. First, the STI quantifies variability across speech utterances, which assumes that repeated utterances produced by a typical speaker should converge kinematically (Smith et al., 1995). Although increased articulatory variability may reflect instability in the speech motor system, it may also reflect an alternative (but not less fluent) speaking strategy (e.g., altered prosody or syllable stress; Dromey, Boyce, & Channell, 2014; Maner et al., 2000). Second, the STI requires time normalization through the consistent alignment of the beginnings and ends of utterances, which distorts potentially important temporal information (Lucero, 2005; Lucero, Munhall, Gracco, & Ramsay, 1997; Ward & Arnfield, 2001). This is particularly relevant to stuttering because as a group, people who stutter exhibit significantly longer utterance durations or slower speech rates than people who do not stutter (Bloodstein, 1944; Colcord & Adams, 1979; Jackson, 2015; Starkweather & Myers, 1979). Third, the STI holds that there is an inverse relationship between variability in behavior and global stability of the speech motor system (as described in Smith et al., 1995). That AWS exhibit greater STI (on average) than AWNS when their systems are stressed linguistically therefore implies that the speech motor systems of AWS become less stable under these conditions. However, several underlying processes (e.g., cognitive, linguistic) contribute to the emergence of speech and stuttering. As such, variability in peripheral behavior cannot by itself be used to characterize global speech motor function. For example, it may be the case that reduced variability in behavior reflects a system on the verge of breaking down (M. A. Riley & Turvey, 2002). We propose a dynamical systems account of variability (e.g., M. A. Riley & Turvey, 2002; Spencer, Perone, & Johnson, 2009; van Lieshout & Namasivayam, 2010), such that variability in observable behavior(s) can reflect both vulnerability in the speech motor system and flexibility or adaptability to changing contextual demands or other perturbations. This distinction is critical for testing the hypothesis proposed earlier, namely that AWS will exhibit reduced variability compared with AWNS under social–cognitive stress.

The concept of speech variability in the context of stuttering needs to be expanded if it is to be used to examine the impact of system stressors on speech motor functioning (as also argued by van Lieshout & Namasivayam, 2010). We argue that the STI is a useful tool for quantifying differences between AWS and AWNS across repeated productions but that it should be complemented by approaches that characterize speech within productions or utterances without distorting the temporal structure of speech and without assuming an inverse relationship between variability in behavior and global speech stability. Here, we complement STI with recurrence quantification analysis (RQA; Webber, 2004; Webber & Marwan, 2015; Webber & Zbilut, 1994, 2005).

RQA

RQA is a technique used to identify patterns in individual time series and so does not require time normalization procedures. Whereas the STI is used to examine spatiotemporal characteristics across a set of utterances (i.e., how well these utterances converge onto a motor “template”), RQA reveals spatiotemporal characteristics within speech utterances (i.e., how the dynamics evolve throughout the utterance). As typically implemented, RQA uses the phase space reconstruction method to retrieve the dynamics that underlie a system when only one variable of that system is known. These dynamics are represented when the known time series and its time-delayed copies are plotted in a phase space (Eckmann, Kamphorst, & Ruelle, 1987; Takens, 1981). For example, the dynamics of lip aperture (LA) during speech production, as well as the dynamics of other variables hypothesized to be part of that speech system (e.g., movements of other articulators, social–cognitive processes), will be reflected in a phase space plot of the time series for LA and its time-delayed copies. Recurrence plots (Eckmann et al., 1987) are then generated and a series of RQA measures are calculated, including indices that reflect determinism and stability (discussed in detail in the Method section). RQA addresses the reality that in speech and stuttering, multiple processes (e.g., linguistic, social–cognitive) underlie observable behavior.

RQA has been used to show that individuals affected by Parkinson's disease (Schmit et al., 2006) and stroke (Ghomashchi, Esteki, Nasrabadi, Sprott, & Bahrpeyma, 2011) exhibit more deterministic motor movements compared with individuals not affected by these conditions. Work from our lab (Jackson, Tiede, Riley, & Whalen, 2016) demonstrates the feasibility of using RQA to examine the impact of linguistic stressors on speech in typically developing adult speakers. For a tutorial-like presentation of RQA, including phase space reconstruction, see Webber and Zbilut (2005). For an example of RQA applied to speech data, see Jackson et al. (2016).

The purpose of this study was to examine the impact of social–cognitive stress on speech variability (including determinism and stability) in AWS and AWNS. Complementing existing measures (i.e., the STI) with novel methods (i.e., RQA) provided a means to both quantify variability across utterances and characterize spatiotemporal patterning of speech within utterances, allowing for new interpretations related to the nature of variability in stuttering and speech motor control.

Method

This research was approved by the institutional review board at the Graduate Center of the City University of New York (CUNY) and the National Stuttering Association Research Committee.

Participants

This study enrolled 24 AWS and 21 AWNS (i.e., controls) matched for age and sex. AWS were recruited via referrals from local speech-language pathologists (SLPs), e-mails distributed by the National Stuttering Association and within CUNY, and word of mouth. AWNS were recruited via e-mails distributed within CUNY and word of mouth. Three AWS were excluded because they did not produce at least 10 fluent trials for each sentence-condition set (more on exclusionary criteria below). An additional AWS was excluded due to technical malfunction and data loss. Thus, the current analysis included 20 AWS (six women, 14 men; mean age = 27.4 years, SD = 6.9) and 21 AWNS (seven women, 14 men; mean age = 25.3 years, SD = 2.5). Table 1 summarizes participant characteristics, formal and informal test scores, and therapy history. Therapy history was determined by asking participants what their cumulative time in therapy had been. All participants (except for the two who reported no therapy) reported participating in standard therapy approaches (e.g., stuttering modification, fluency shaping). All of the participants reported that their primary language was English and that they had mastered English before the age of 6 years.² No participants reported a positive history of speech-language disorder (other than stuttering) or hearing, neurological, or psychological impairment.

Table 1.

Participant characteristics.

| Participant | Group | Sex | Age (years) | OASES | SSI-4 | Therapy (months) | EOWPVT-3 | Shifter |
|---|---|---|---|---|---|---|---|---|
| P01 | AWS | M | 35 | 3.61 | 32 | 7–12 | 102 | N |
| P02 | AWS | M | 22 | 1.61 | 30 | 7–12 | 102 | N |
| P03 | AWS | M | 25 | 1.73 | 21 | >24 | 109 | N |
| P04 | AWS | M | 31 | 3.18 | 24 | >24 | 90 | Y |
| P05 | AWS | M | 33 | 2.32 | 22 | >24 | 115 | Y |
| P06 | AWS | M | 18 | 2.35 | 33 | >24 | 123 | N |
| P07 | AWS | M | 23 | 2.53 | 43 | >24 | 108 | Y |
| P08 | AWS | M | 27 | 2.59 | 29 | >24 | 92 | N |
| P09 | AWS | M | 36 | 3.02 | 17 | 12–24 | 117 | Y |
| P10 | AWS | M | 27 | 1.46 | 9 | None | 105 | N |
| P11 | AWS | M | 22 | 2.20 | 27 | >24 | 108 | Y |
| P12 | AWS | M | 28 | 2.03 | 11 | None | 99 | N |
| P13 | AWS | M | 24 | 1.48 | 17 | >24 | 101 | N |
| P14 | AWS | M | 26 | 2.65 | 19 | >24 | 100 | Y |
| P15 | AWS | F | 26 | 2.17 | 26 | 7–12 | 104 | N |
| P16 | AWS | F | 27 | 1.40 | 19 | 12–24 | 105 | N |
| P17 | AWS | F | 49 | 1.81 | 17 | 12–24 | 103 | N |
| P18 | AWS | F | 21 | 1.77 | 27 | >24 | 98 | N |
| P19 | AWS | F | 24 | 2.17 | 26 | 7–12 | 86 | N |
| P20 | AWS | F | 24 | 1.98 | 8 | 1–6 | 107 | N |
| P21 | AWNS | M | 28 | n/a | n/a | n/a | 136 | Y |
| P22 | AWNS | M | 26 | n/a | n/a | n/a | 106 | N |
| P23 | AWNS | M | 25 | n/a | n/a | n/a | 109 | N |
| P24 | AWNS | M | 26 | n/a | n/a | n/a | 100 | Y |
| P25 | AWNS | M | 30 | n/a | n/a | n/a | 108 | N |
| P26 | AWNS | M | 19 | n/a | n/a | n/a | 102 | N |
| P27 | AWNS | M | 30 | n/a | n/a | n/a | 103 | N |
| P28 | AWNS | M | 23 | n/a | n/a | n/a | 121 | Y |
| P29 | AWNS | M | 25 | n/a | n/a | n/a | 119 | N |
| P30 | AWNS | M | 22 | n/a | n/a | n/a | 104 | N |
| P31 | AWNS | M | 27 | n/a | n/a | n/a | 90 | N |
| P32 | AWNS | M | 26 | n/a | n/a | n/a | 91 | N |
| P33 | AWNS | M | 25 | n/a | n/a | n/a | 90 | N |
| P34 | AWNS | M | 26 | n/a | n/a | n/a | 126 | N |
| P35 | AWNS | F | 25 | n/a | n/a | n/a | 127 | Y |
| P36 | AWNS | F | 24 | n/a | n/a | n/a | 114 | N |
| P37 | AWNS | F | 24 | n/a | n/a | n/a | 127 | N |
| P38 | AWNS | F | 26 | n/a | n/a | n/a | 86 | Y |
| P39 | AWNS | F | 23 | n/a | n/a | n/a | 116 | N |
| P40 | AWNS | F | 25 | n/a | n/a | n/a | 119 | N |
| P41 | AWNS | F | 26 | n/a | n/a | n/a | 102 | N |

Note. OASES = Overall Assessment of the Speaker's Experience of Stuttering (measures impact of stuttering on quality of life; Yaruss & Quesal, 2008); SSI-4 = Stuttering Severity Instrument–Fourth Edition (measures severity of overt stuttering characteristics; Riley, 2009); therapy = cumulative time spent in therapy; EOWPVT-3 = Expressive One-Word Picture Vocabulary Test–Third Edition (Martin & Brownell, 2000; used as an informal measure of expressive vocabulary skills); AWS = adults who stutter; AWNS = adults who do not stutter; M = male; F = female. “Shifter” is a speaker subgroup—N = no; Y = an increase of 2 or more points in subjective anxiety rating from the nonaudience condition to the audience condition.

Stuttering diagnosis was made by the first author, a licensed SLP with extensive experience in the area of stuttering. Assessment included a detailed case history and interview and administration of the Overall Assessment of the Speaker's Experience of Stuttering (Yaruss & Quesal, 2008) and the Stuttering Severity Instrument–Fourth Edition (G. D. Riley, 2009). In addition, the Expressive One-Word Picture Vocabulary Test–Third Edition (EOWPVT-3; Martin & Brownell, 2000) was administered informally as a screener of expressive language skills. The EOWPVT-3 is normed up to age 18;11 (years;months), with a raw score of 116 being the norm for the oldest age group. Although the current participants were older than the oldest normed group, all of them attained a score of at least 85 (see Table 1), which is 1 SD below the mean test score (Martin & Brownell, 2000). Descriptive statistics revealed that the AWNS exhibited a higher mean score than the AWS (109.33 and 103.70, respectively), but the difference was not significant, F(1, 39) = 2.36, p > .05. All participants passed a pure-tone hearing screening at 500, 1000, 2000, and 4000 Hz at 20 dB HL. Identification of psychological or neurological impairment was based on self-report.

Procedure

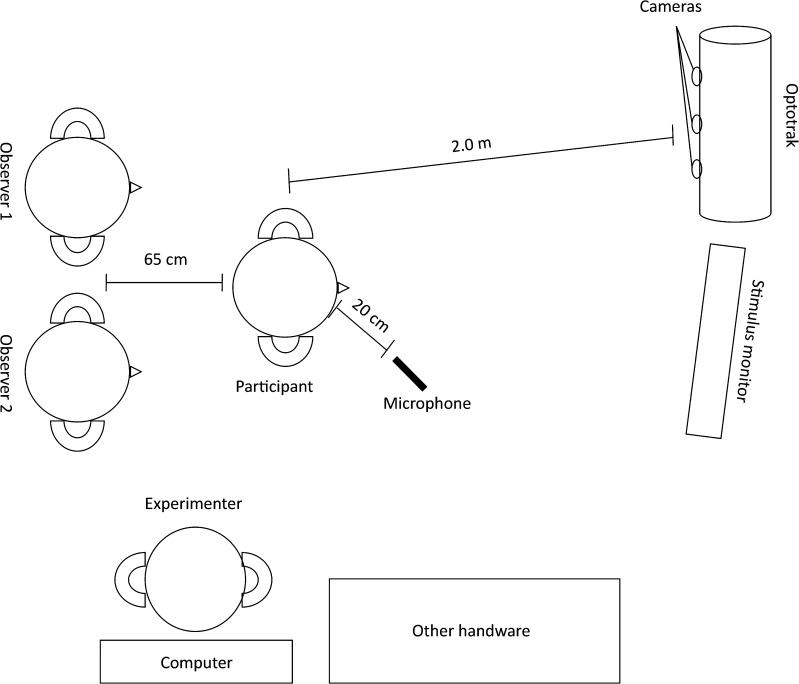

The consent process, diagnostic testing, and experimental procedures were completed in one session that lasted 90 to 120 min. To measure lip movement, an Optotrak Certus 3020 (Northern Digital, Waterloo, Ontario, Canada) was used. The Optotrak uses three cameras to quantify the movement of infrared light–emitting diodes (IREDs) in three dimensions. In this study, the IREDs were placed midsagittally at the vermillion border of the upper and lower lips. An Audio-Technica (Tokyo, Japan) MicroSet directional microphone was placed approximately 20 cm in front of the participant's mouth for audio recording.

Stimuli were presented on a 20-in. computer monitor using Presentation software (Neurobehavioral Systems, Albany, CA). The monitor was placed 12 to 16 in. beside the Optotrak camera unit, which minimized potential interference emitted from the screen. The Optotrak camera unit was placed approximately 2 m from participants (see Figure 1). Because Optotrak relies on line of sight to its cameras, participants were instructed before data collection to test the range of IRED tracking by moving their heads to the left and right; they were provided with verbal feedback when this movement caused the IREDs to go out of range. IREDs were monitored via First Principles (Northern Digital), the proprietary software for the Optotrak. Participants were instructed to attempt to remain stationary during the experiment, although small amounts of movement were permitted as long as IRED view was not obstructed (see below regarding trials that were discarded due to IRED obstruction).

Figure 1.

Experimental setup for the audience condition. The nonaudience condition was the same, excluding the two observers. Measurements are approximate.

Participants were verbally instructed by the first author to use a “normal” speaking voice during the experiment. Instructions were presented via the monitor (e.g., “Please read the sentences as they appear on the screen”). Stimuli were adapted from Kleinow and Smith (2000). The target utterance, “Buy Bobby a puppy,” was produced in isolation (referred to as Base) as well as embedded in one “longer only” sentence (L1; i.e., “Four one three two five buy Bobby a puppy ten eight nine eleven”) and two longer and more complex sentences. The two longer and more complex sentences contained differing levels of embedded perspective (Whalen, Zunshine, & Holquist, 2012; Zunshine, 2006). These linguistically complex stimuli included “He wants Karen to tell John to buy Bobby a puppy at my store” (P1; level 1 embedment) and “You want Samantha to buy Bobby a puppy now if he wants one” (P2; level 2 embedment). Results related to the differing levels of linguistic complexity are not reported in this article, although Base, L1, P1, and P2 are included as fixed factors in the statistical models (see the Statistical Analysis section below).

Each of the four sentences was presented 20 times in pseudorandomized order for a total of 80 trials. The entire sequence was repeated for the “audience” condition (see below) with a different pseudorandom order for a total of 160 trials. Audience and nonaudience blocks were counterbalanced such that half of the participants were exposed to the audience condition first and the other half were exposed to the nonaudience condition first.

The audience condition tested the effect of having two unseen observers present along with the seen experimenter. During that condition, two unfamiliar observers (one man and one woman) entered the testing room and remained out of sight for the duration of the block. The observers' entrance was timed to a text message sent by the examiner. Prior to the experiment, participants were told, “Observers may enter the room during the experiment—just ignore anybody that comes into the room.” The observers entered the room by opening a large door (easily audible for those with typical hearing) and sat in two chairs directly behind the participants so that participants were unable to see them. This minimized potential bias due to observer appearance. Observers deliberately coughed three to five times throughout the block so that participants could realize that the observers were a man and a woman—which all but two male participants were able to do (both instead thought that the audience consisted of two men). This minimized potential bias due to the sex of the participant. In addition to coughing, observers were instructed to scribble audibly on a pad approximately 10 times during the session, with the goal of increasing social–cognitive stress on the participants.

Stimuli (i.e., Base, L1, P1, and P2) were presented one at a time on the computer monitor. Each sentence appeared on the monitor for 5 s followed by 1 s of silence with a blank screen. All participants either completed the sentences (stuttered or nonstuttered) within 5 s or discontinued speech (i.e., stopped talking) when the blank screen appeared. After both blocks were completed, participants completed a short questionnaire that assessed their ability to identify the gender of their observers as well as subjective ratings of anxiety during the audience and nonaudience conditions³ using a Likert scale questionnaire (see the Appendix).

Data Processing

The 20 productions of each utterance were collected to increase the probability that participants produced at least 10 fluent and usable utterances during each condition for all sentences. The first 10 fluent and usable trials have been used to calculate STI in past studies (e.g., Dromey et al., 2014; Kleinow & Smith, 2000, 2006; MacPherson & Smith, 2013; Smith & Kleinow, 2000), and that selection was used here. Fluent or disfluent utterances were identified as such during the experiment by the first author and then subsequently verified offline by the first author and an additional licensed and certified SLP. Fluent utterances were those free from atypical and typical disfluencies, hesitations, pauses, interjections, rewording, and aberrant prosody (as in Kleinow & Smith, 2000). To further operationalize the categorization of fluency or disfluency, utterances with pauses or gaps exceeding 6% of total utterance duration were considered disfluent (following Jackson, 2015). In that study, 6% was suggested as a threshold because it was the approximate point at which 80% of utterances were selected to be fluent. Regarding fluency of the full sentences, if the target utterance (i.e., “Buy Bobby a puppy”) was perceptually fluent, disfluency exhibited at other parts of the sentences (for L1, P1, and P2) did not preclude inclusion of the target utterance. Despite research that suggests excluding these utterances because of the potential influence of stuttering on surrounding kinematics (e.g., Pindzola, 1986; Prosek & Runyan, 1982; Shapiro, 1980), the current authors elected to examine all utterances that met the criteria set forth for perceptually fluent speech during the core target utterance. Examining utterances that are perceptually fluent but that are surrounded by clear instances of stuttering increases the probability that there will be subtle differences in fluent speech. 
Of 3,200 AWS trials, 200 target utterances (6.3%) were disfluent (e.g., stuttered, contained speech errors) and 56 (or 1.8%) contained technical errors. Of the 200 AWS disfluent utterances, 87 (43.5%) occurred during the audience condition and 113 (56.5%) occurred during the nonaudience condition. Of 3,360 AWNS trials, 81 target utterances (2.4%) were disfluent and 42 (1.3%) yielded technical errors. Of the 81 AWNS disfluent utterances, 46 (56.8%) occurred during the audience condition and 35 (43.2%) occurred during the nonaudience condition.

Following Jackson et al. (2016), kinematic signals were sampled at 250 Hz and subsequently low-pass filtered at 10 Hz. Audio signals were low-pass filtered prior to recording using a hardware filter at 7500 Hz and then sampled at 16500 Hz. LA was calculated as the Euclidean distance between the upper and lower lips. The registration landmarks for each target utterance (i.e., “Buy Bobby a puppy”) were determined by the peak velocities of the first lip opening movement (i.e., after /b/ in Buy) and the last opening movement (i.e., /pi/ in puppy), respectively (as in Smith et al., 1995). Custom procedures in MATLAB (MathWorks, Natick, MA) were used to identify these points and to calculate STI on the delimited LA trajectories.
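The lip aperture computation described above can be illustrated with a minimal Python sketch. It is not the custom MATLAB procedure used in the study: the function names, the finite-difference velocity estimate, and the single-peak search are assumptions made for illustration, and the 10-Hz low-pass filtering step is omitted.

```python
import math

FS_KINEMATIC = 250.0  # kinematic sampling rate in Hz, as reported above


def lip_aperture(upper, lower):
    """Euclidean distance between paired 3-D upper- and lower-lip positions."""
    return [math.dist(u, l) for u, l in zip(upper, lower)]


def velocity(signal, fs=FS_KINEMATIC):
    """Finite-difference velocity (an assumed estimator): central differences
    at interior samples, one-sided differences at the two ends.
    Requires at least two samples."""
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        out.append((signal[hi] - signal[lo]) * fs / (hi - lo))
    return out


def peak_velocity_index(signal, fs=FS_KINEMATIC):
    """Index of the largest opening (positive) velocity within the signal."""
    v = velocity(signal, fs)
    return max(range(len(v)), key=v.__getitem__)
```

In practice, a search of this kind would be applied separately to a window around the first opening movement and a window around the last opening movement to obtain the two registration landmarks; how those windows are chosen is not specified here.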

Dependent Variables

The dependent variables reflected across-sentence variability (i.e., STI), within-sentence determinism and stability (i.e., RQA measures), and sentence duration.

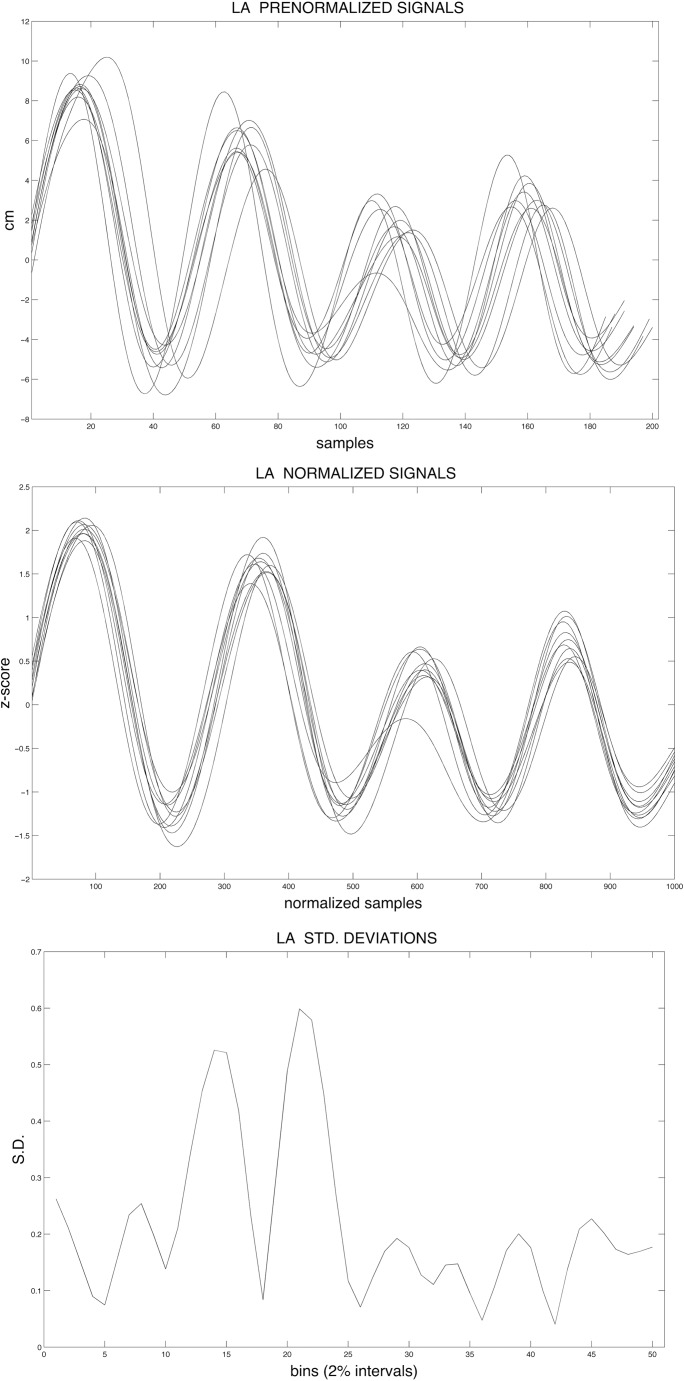

STI

LA STI⁴ measured variability across the first 10 fluent and usable trials for each condition and sentence for each participant. To calculate LA STI, signals were amplitude and time normalized using the methodology of Smith et al. (1995). To normalize for amplitude, the mean of the trajectory was subtracted from each amplitude value, and the result was divided by the standard deviation of the trajectory. Time normalization was achieved by (a) removing the linear trend of each amplitude-normalized signal, (b) computing the fast Fourier transform of the detrended signals, (c) mapping 10 retained coefficients onto a consistent time base of 1,000 points, and (d) reapplying the linear trend. Standard deviations were then calculated by binning samples for the first 10 viable trials of a given sentence and condition combination at 2% intervals. The sum of these 50 standard deviations produced the LA STI for that sentence and condition. Figure 2 illustrates these calculations.

Figure 2.

Graphical depiction of lip aperture spatiotemporal index (LA-STI) calculation: raw trajectories (top); normalized trajectories (middle); standard deviations at 50 points (i.e., 2% intervals; bottom).
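The logic of the STI calculation can be sketched in a few lines of Python. This is a simplified illustration rather than the procedure of Smith et al. (1995): time normalization is done here by linear interpolation onto 1,000 points instead of the Fourier-based resampling described above, the standard deviation is taken at 50 equally spaced points, and the use of the sample (n − 1) standard deviation is an assumption. All function names are hypothetical.

```python
import statistics


def z_normalize(traj):
    """Amplitude normalization: subtract the mean, divide by the SD."""
    m = statistics.fmean(traj)
    sd = statistics.stdev(traj)
    return [(x - m) / sd for x in traj]


def resample_linear(traj, n_points=1000):
    """Time normalization by linear interpolation (a stand-in for the
    Fourier-based method); traj must contain at least two samples."""
    last = len(traj) - 1
    out = []
    for k in range(n_points):
        pos = k * last / (n_points - 1)   # fractional index into traj
        i = min(int(pos), last - 1)
        frac = pos - i
        out.append(traj[i] * (1 - frac) + traj[i + 1] * frac)
    return out


def sti(trials, n_points=1000, n_bins=50):
    """Sum of across-trial SDs taken at n_bins equally spaced points."""
    norm = [resample_linear(z_normalize(t), n_points) for t in trials]
    step = n_points // n_bins             # 20 points = 2% of the time base
    return sum(
        statistics.stdev([t[i] for t in norm])
        for i in range(0, n_points, step)
    )
```

Under this sketch, trials that differ only in offset and amplitude scale yield an STI of approximately zero, whereas trials that differ in spatiotemporal shape yield a positive value.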

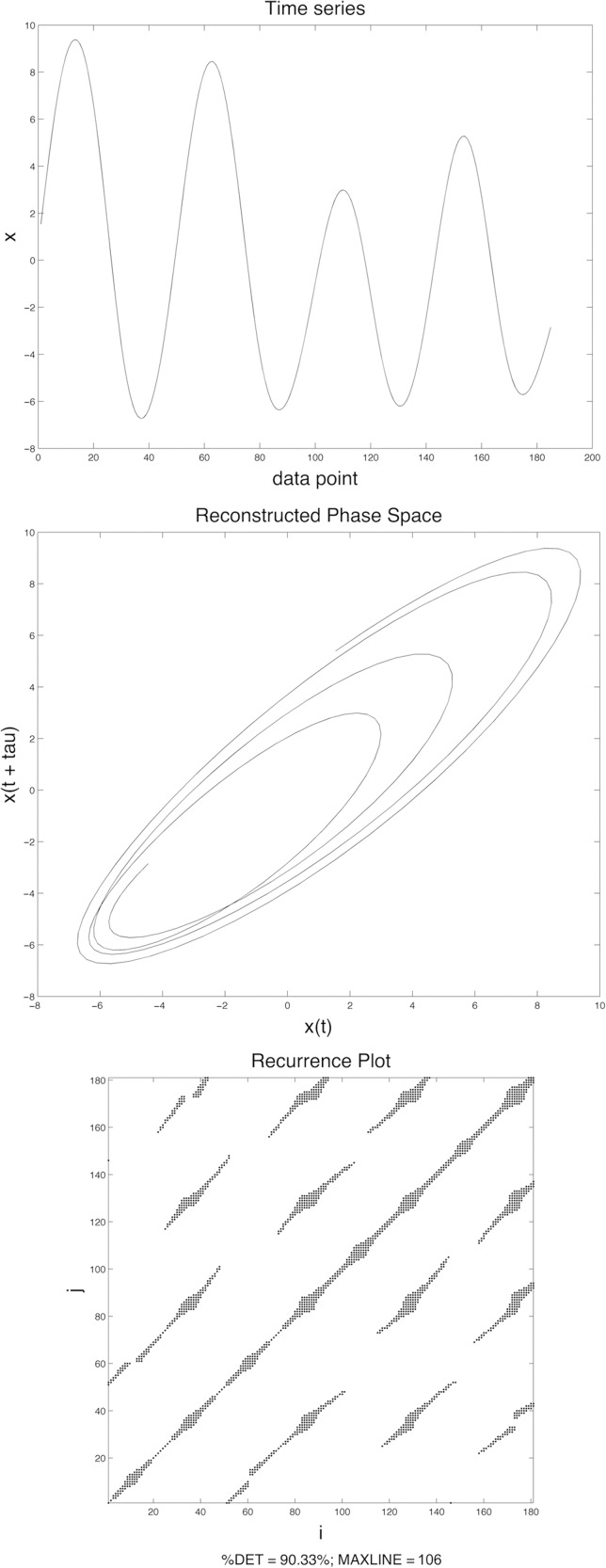

RQA

RQA with phase space reconstruction followed the same procedures as outlined in Jackson et al. (2016). The top panel in Figure 3 presents a raw time series reflecting LA during “Buy Bobby a puppy” for one participant during one trial. The middle panel of Figure 3 represents that same time series in a reconstructed phase space, with the original time series plotted on the x-axis and the time-delayed copy plotted on the y-axis (DELAY parameter is 4; EMBED parameter is 2).⁵ A Euclidean distance matrix was then constructed on the basis of the phase space plot and subsequently transformed into a recurrence matrix using a radius parameter of 15% of the mean distance. The recurrence plot as presented in the bottom panel of Figure 3 is based on this recurrence matrix; pairs of points whose distance falls within the radius threshold are plotted as recurrent, whereas pairs whose distance exceeds the threshold are not plotted. An explicit example of distance and recurrence matrix construction and recurrence plot generation for one time series from the current experiment is provided in Jackson et al. (2016). RQA indices were calculated on the basis of standard heuristics applied to the recurrence plots and resulted in percentage determinism (%DET) and stability (MAXLINE) measures.

Figure 3.

Graphical depiction of phase space reconstruction and recurrence plot: raw trajectory (top); reconstructed phase space after delayed embedding (middle); recurrence plot (bottom).

Determinism

%DET measures the degree of determinism. It is assumed in RQA that behavior is governed by a dynamical system whose controlling variables may or may not be directly observable. Determinism indicates how patterned versus random this underlying dynamical system is (Webber & Zbilut, 2005). In this context it is a plausible reflection of speech-planning processes (i.e., patterned is planned; random is not planned), where higher determinism reveals that the dynamical system underlying speech production is more highly structured, and low determinism reflects a system that is less structured. %DET was calculated as the percentage of all recurrent points in the recurrence plot that fell on diagonal lines at least 5 points in length. For example, there are 1,127 points in the bottom right triangle of the recurrence plot at the bottom of Figure 3, not including the line of identity (i.e., the center diagonal) or the top left triangle. (The top left triangle is not used because it is a mirror image of the bottom right triangle.) Of these, 1,018 points fall on diagonal lines of at least 5 points. %DET for this particular recurrence plot equals 90.33% (1,018/1,127). Note that %DET values are not absolute: A trajectory that exhibits 95 %DET is not thereby 95% deterministic. Rather, %DET values provide a useful comparison across a set of related signals to determine which instances are more and less deterministic.
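The %DET calculation can be sketched as follows, assuming the recurrence matrix is given as a square list of Boolean rows and that only one triangle is read (the other being its mirror image). The function names and the 8 × 8 toy matrix are hypothetical; the final line reproduces the arithmetic of the example above.

```python
def diagonal_line_lengths(rec):
    """Lengths of diagonal runs of recurrent points on one side of the line of identity."""
    n = len(rec)
    lengths = []
    for offset in range(1, n):  # offset 0 would be the line of identity
        run = 0
        for i in range(offset, n):
            if rec[i][i - offset]:
                run += 1
            else:
                if run:
                    lengths.append(run)
                run = 0
        if run:
            lengths.append(run)
    return lengths


def percent_determinism(rec, min_line=5):
    """Percentage of recurrent points lying on diagonal lines of at least `min_line` points."""
    lengths = diagonal_line_lengths(rec)
    recurrent = sum(lengths)
    if recurrent == 0:
        return 0.0
    on_lines = sum(length for length in lengths if length >= min_line)
    return 100.0 * on_lines / recurrent


# Toy matrix: one diagonal line of 6 points plus one isolated recurrent point.
n = 8
toy_rec = [[False] * n for _ in range(n)]
for i in range(n):
    toy_rec[i][i] = True  # line of identity (ignored)
for i in range(2, n):
    toy_rec[i][i - 2] = toy_rec[i - 2][i] = True
toy_rec[5][0] = toy_rec[0][5] = True
toy_det = percent_determinism(toy_rec)  # 6 of 7 recurrent points lie on a line

# Arithmetic from the Figure 3 example: 1,018 of 1,127 recurrent points.
figure3_det = round(100 * 1018 / 1127, 2)  # 90.33
```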

Stability and Duration

MAXLINE measures the stability of the underlying dynamical system. Stability is related to the system dynamics such that lower stability reflects higher inherent chaotic behavior (Eckmann et al., 1987; Webber & Zbilut, 2005). A speech motor system that exhibits a high degree of stability is not likely to be influenced or perturbed by noise or other factors (e.g., social–cognitive or linguistic stress), whereas a speech system that exhibits low stability would be susceptible to this kind of interference. It is critical to note that stability is not simply the inverse of across-sentence variability (as measured by the STI). Rather, stability is one aspect of spatiotemporal patterning that is measured within a single time series. Stability (i.e., MAXLINE) is calculated as the longest sequence of consecutive diagonal data points excluding the line of identity in the recurrence plot (because longer diagonal lines signify that local patterning in the phase space is less likely to be interrupted). For example, MAXLINE for the recurrence plot at the bottom of Figure 3 is 106. Duration was calculated as the time in milliseconds between trajectory registration points (i.e., the LA peak velocity immediately following the release of /b/ in Buy and the peak velocity immediately following the second /p/ in puppy).
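A minimal sketch of the MAXLINE calculation follows, again assuming a square Boolean recurrence matrix. The function names are hypothetical, and the toy series is a perfectly periodic sequence in which two samples count as recurrent when their values are equal; this is a simplification and not the thresholded distance matrix used in the study.

```python
def maxline(rec):
    """Longest diagonal run of recurrent points, excluding the line of identity."""
    n = len(rec)
    longest = 0
    for offset in range(1, n):  # offset 0 would be the line of identity
        run = 0
        for i in range(offset, n):
            run = run + 1 if rec[i][i - offset] else 0
            longest = max(longest, run)
    return longest


def toy_recurrence(n_samples, period=5):
    """Recurrence matrix for a perfectly periodic toy series (hypothetical data)."""
    series = [k % period for k in range(n_samples)]
    return [[a == b for b in series] for a in series]


short_maxline = maxline(toy_recurrence(20))  # 15
long_maxline = maxline(toy_recurrence(40))   # 35
```

For this perfectly periodic toy series, the longest diagonal line is simply the series length minus the period, so a longer time series produces a larger MAXLINE.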

Statistical Analysis

Linear mixed-effects (LME) models were created using the lme4 package (Bates, Maechler, Bolker, & Walker, 2014) in R (R Core Team, 2014). The lmerTest package (Kuznetsova, Brockhoff, & Christensen, 2014) was used to estimate degrees of freedom and p values on the basis of Satterthwaite approximations. Although estimating degrees of freedom in lmer models is controversial (e.g., Baayen, Davidson, & Bates, 2008; Barr, Levy, Scheepers, & Tily, 2013), the lmerTest values reported here represent an emerging standard provided to facilitate interpretation of the results.

There were two classes of models for across-sentence (i.e., STI) and within-sentence (i.e., RQA, duration) measures. Because previous research shows that AWS and AWNS exhibit different speech patterning during perceptually fluent speech production at least some of the time, a fixed effect of primary interest for the across-sentence measures was group, which had two levels (i.e., AWS, AWNS). Because research also suggests that these patterns are influenced by linguistic and social–cognitive factors, both sentence (i.e., Base, L1, P1, P2) and condition (i.e., audience, nonaudience) were also included as fixed effects. Participant was modeled as a random effect to adjust for (generally expected) variation in intercept due to individual differences in production and repeated measures. To determine which interactions to include in the model(s) to yield the best fit and minimize overfitting, possible models (e.g., including group*condition, and group*sentence interactions, a group*condition*sentence interaction) were compared using a likelihood ratio test. The model with the lowest Bayesian information criterion (BIC) was selected—in this case, lmer(STI ~ group*condition + group*sentence + (1|participant)).

Within-sentence analyses must also account for trial effects. The baseline model was identical to the model used for the across-sentence measures—that is, lmer(RQA ~ group*condition + group*sentence + (1|participant))—with the dependent variable RQA representing either of the RQA measures (%DET, MAXLINE) or duration. Measures associated with speech motor control may be affected by experimental familiarity or fatigue, which would be reflected in performance over trials. To determine whether adding trial to the model provided a better fit for the data, a model including c.(trial) as a fixed factor was compared with a model without c.(trial). Centering (i.e., c.) reduced the probability of spurious correlations in the model and was achieved by subtracting the overall mean from each trial number without scaling (Baayen, 2008). The model including c.(trial) yielded a lower BIC value than the model without c.(trial), suggesting an improved model fit. Furthermore, it was plausible that there were random trial effects by participant. A model including random slopes by participant for c.(trial) was compared with a model without this factor. A lower BIC value justified the inclusion of the random slopes for trial by participant. The model used for the within-sentence analyses was lmer(RQA_index ~ group*condition + group*sentence + c.(trial) + (1+c.(trial)|participant)).
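The centering step and the BIC comparison can be illustrated with a short Python sketch. It does not fit a mixed-effects model; the models in this study were fit with lmer in R. The function names, log-likelihoods, parameter counts, and number of observations below are hypothetical and serve only to show the arithmetic.

```python
import math


def center(values):
    """Subtract the overall mean without rescaling."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion: k * ln(n) - 2 * ln(L)."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood


trials = list(range(1, 11))  # trial numbers 1 to 10
centered = center(trials)    # -4.5, -3.5, ..., 4.5

# Hypothetical fit summaries for a model without and with the trial term.
bic_without_trial = bic(log_likelihood=-5200.0, n_params=12, n_obs=3200)
bic_with_trial = bic(log_likelihood=-5150.0, n_params=13, n_obs=3200)
prefer_trial_model = bic_with_trial < bic_without_trial
```

The extra parameter is penalized by ln(n), so the larger model is preferred only when its gain in log-likelihood outweighs that penalty.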

Results

A subset of the data (from the control group for the nonaudience condition) was also reported in Jackson et al. (2016). In addition, the effects of linguistic complexity are not reported here, although they are available in the first author's doctoral dissertation (Jackson, 2015). Table 2 presents significant results from the statistical analyses and includes coefficients for the model, t values, estimated degrees of freedom and p values, Cohen's d values, and R² values. Only a portion of these results is presented below. Following Baayen (2008), t values with an absolute value of 2 or greater were considered to be significant. Cohen's d provided an indication of effect size and was calculated by dividing the mean difference between the dependent measures by the residual standard deviation of the model. R² provided an estimate of how well the LME models fit the actual data.
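The effect size and significance criterion described above reduce to two small calculations, sketched here in Python. The function names and the numeric inputs are hypothetical and are not values from this study.

```python
def cohens_d(mean_difference, residual_sd):
    """Effect size: mean difference divided by the model's residual standard deviation."""
    return mean_difference / residual_sd


def meets_t_criterion(t_value, threshold=2.0):
    """Criterion following Baayen (2008): absolute t value of 2 or greater."""
    return abs(t_value) >= threshold


example_d = cohens_d(mean_difference=3.0, residual_sd=2.0)  # 1.5
example_flags = [meets_t_criterion(t) for t in (2.4, -3.1, 1.2)]
```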

Table 2.

Output from linear mixed-effects models for each dependent variable.

| Factor | Variability (LA STI) | | | | | | Determinism (%DET) | | | | | | Stability (MAXLINE) | | | | | | Duration | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Coef. | t | df | p | d | R² | Coef. | t | df | p | d | R² | Coef. | t | df | p | d | R² | Coef. | t | df | p | d | R² |

| All speakers | ||||||||||||||||||||||||

| Group | 2.40 | 1.98 | 106.30 | .050 | 0.76 | .49 | 1.59 | 3.14 | 45.00 | <.010 | 0.26 | .50 | 28.48 | 2.16 | 42.00 | <.050 | 0.54 | .62 | 106.04 | 3.26 | 40.00 | <.010 | 1.77 | .81 |

| Group × Condition | −0.85 | −3.77 | 2071.00 | <.001 | −0.26 | .50 | −7.35 | −2.00 | 3202.00 | <.050 | −0.54 | .62 | 12.46 | 2.15 | 1846.00 | <.050 | 1.77 | .81 | ||||||

| Audience (α/4) | 1.21 | 2.55 | 43.40 | <.010 | 0.47 | .38 | 107.35 | 2.99 | 39.50 | <.010 | 1.67 | .81 | ||||||||||||

| Nonaudience (α/4) | 145.91 | 4.64 | 37.90 | <.001 | 1.99 | .84 | ||||||||||||||||||

| AWNS (α/4) | ||||||||||||||||||||||||

| AWS (α/4) | −0.88 | −5.07 | 1167.00 | <.001 | −0.42 | .61 | −11.23 | −3.50 | 1790.50 | <.001 | −0.16 | .64 | ||||||||||||

| Shifters | ||||||||||||||||||||||||

| Group | 1.92 | 2.70 | 11.30 | <.050 | 0.91 | .32 | ||||||||||||||||||

| Group × Condition | 3.50 | 2.20 | 67.95 | <.050 | 1.05 | .63 | 13.18 | 2.20 | 166.90 | <.050 | 1.21 | .70 | ||||||||||||

| Audience (α/4) | ||||||||||||||||||||||||

| Nonaudience (α/4) | ||||||||||||||||||||||||

| AWNS (α/4) | −11.22 | −5.17 | 760.50 | <.001 | −0.29 | .57 | ||||||||||||||||||

| AWS (α/4) | 3.93 | 3.12 | 37.00 | <.010 | 0.85 | .61 | ||||||||||||||||||

| Nonshifters | ||||||||||||||||||||||||

| Group | 3.25 | 2.44 | 77.00 | <.050 | 0.66 | .48 | 1.48 | 2.33 | 32.00 | <.050 | 0.03 | .53 | 106.70 | 3.49 | 28.00 | <.010 | 1.75 | .81 | ||||||

| Group × condition | −1.49 | −1.68 | 197.30 | <.100 | −0.66 | .48 | −1.34 | −4.82 | 1813.00 | <.001 | −0.26 | .53 | −16.55 | −3.66 | 2726.00 | <.001 | −0.27 | .57 | ||||||

| Audience (α/4) | ||||||||||||||||||||||||

| Nonaudience (α/4) | ||||||||||||||||||||||||

| AWNS (α/4) | ||||||||||||||||||||||||

| AWS (α/4) | −1.64 | −2.65 | 88.80 | <.010 | −0.40 | .57 | −1.38 | −6.05 | 863.20 | <.001 | −0.56 | .63 | −19.08 | −4.72 | 1437.90 | <.001 | −0.29 | .59 | ||||||

Note. Coef. = coefficients for the model; d = Cohen's effect size. An across-sentence model was implemented for lip aperture spatiotemporal index (LA STI); a within-sentence model was implemented for determinism (%DET = percentage determinism), MAXLINE, and duration. Significant results are shown (p < .05); empty cells indicate nonsignificant effects. Audience and nonaudience lines compare sample means for adults who stutter (AWS) and adults who do not stutter (AWNS) such that negative signs indicate lower values for AWS. AWNS and AWS lines compare sample means for the audience and nonaudience conditions such that negative signs indicate lower values for the nonaudience condition.

Across Sentences and Conditions

The AWS exhibited greater across-sentence variability (STI) than AWNS across conditions and sentences (t = 1.98, p = .0506). AWS also exhibited greater determinism (i.e., %DET; t = 3.14, p < .01) and greater stability (i.e., MAXLINE; t = 2.16, p < .05) compared with AWNS, indicating that AWS—despite being more variable across utterances—were also more deterministic and more stable within utterances.

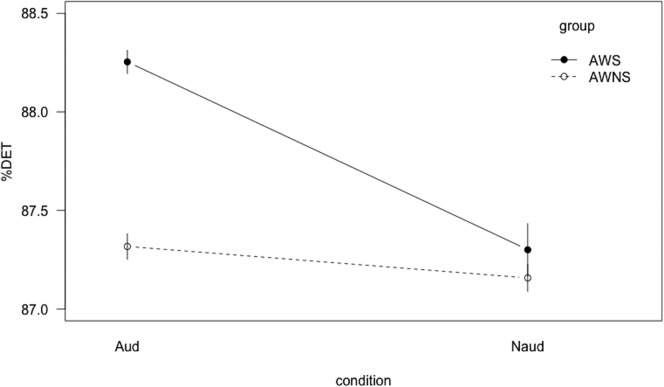

Presence of an Audience

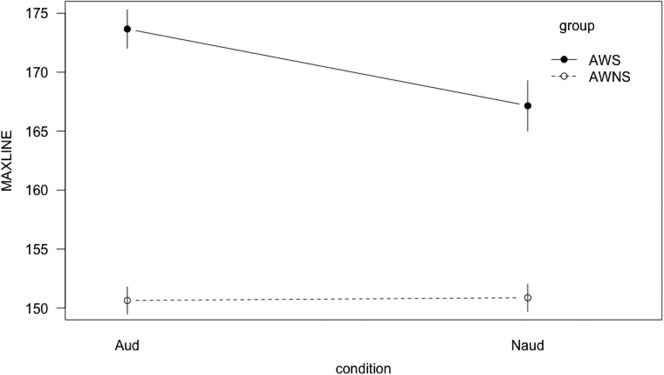

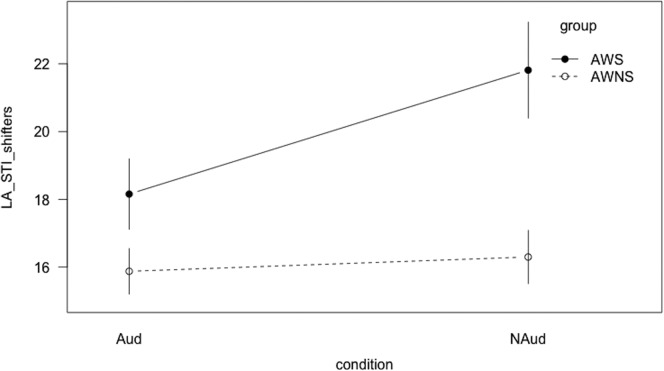

Although the audience condition did not differentiate between groups for across-sentence variability, it did affect within-sentence spatiotemporal features. A significant Group × Condition interaction (t = −3.77, p < .001) was found for %DET, indicating that AWS and AWNS responded differently to the presence of an audience. To assess whether one condition or the other was driving the group difference, post hoc tests were administered. The AWS exhibited greater determinism than the AWNS during the audience condition (t = 2.55, p = .01; Bonferroni adjustment at α/4 = .0125) but not during the nonaudience condition (see Figure 4). AWS exhibited greater determinism during the audience condition compared with the nonaudience condition (t = −5.07, p < .001; Bonferroni adjustment at α/4 = .0125), but there were no differences between conditions for AWNS. Regarding stability, a significant Group × Condition interaction (t = −2.00, p < .05; Bonferroni adjustment at α/4 = .0125) warranted post hoc testing. AWS exhibited greater stability in the audience condition compared with the nonaudience condition (t = −3.50, p < .001; Bonferroni adjustment at α/4 = .0125), but AWNS did not exhibit this pattern (see Figure 5).

Figure 4.

Interaction line plot for determinism (%DET). Aud = audience condition; Naud = nonaudience condition; AWS = adults who stutter; AWNS = adults who do not stutter. Error bars show the standard error of the mean.

Figure 5.

Interaction line plot for stability (MAXLINE). Aud = audience condition; Naud = nonaudience condition; AWS = adults who stutter; AWNS = adults who do not stutter. Error bars show the standard error of the mean.

Shifters

It is generally accepted that the factors that contribute to stuttering vary across individuals and that stuttering subgroups likely exist in this regard (Ambrose, Yairi, Loucks, Seery, & Throneburg, 2015; Seery, Watkins, Mangelsdorf, & Shigeto, 2007; Yairi, 2007). It is feasible that only those AWS who report a marked difference in subjective anxiety between nonaudience and audience conditions would alter their productions when subjected to this kind of social–cognitive stress. These “shifters” were participants who reported anxiety to be at least 2 points higher during the audience condition compared with the nonaudience condition (using the Likert scale in the Appendix). A shift of 2 points was estimated a priori to be an appropriate threshold for determining a significant change in self-reported anxiety levels for AWS and AWNS. There were six shifter AWS (all men) and five shifter AWNS (three men, two women), all of whom reported a change of 2 points, except for one female AWNS who reported a change of 3 points. Shifter AWS exhibited significantly lower variability during the audience condition compared with the nonaudience condition (t = 3.12, p < .01; Bonferroni adjustment at α/4 = .0125); AWNS did not follow this pattern (see Figure 6). It is interesting to note that the nonshifter AWS exhibited greater variability during the audience condition compared with the nonaudience condition (t = −2.65, p < .01; Bonferroni adjustment at α/4 = .0125). Descriptive statistics revealed that the mean EOWPVT-3 score for the shifter AWNS was higher than that for the shifter AWS (114.0 and 106.3, respectively), but the difference was not significant, F(1, 9) = −0.81, p > .05.

Figure 6.

Interaction line plot for variability (lip aperture spatiotemporal index) for the shifters subset. Aud = audience condition; Naud = nonaudience condition; AWS = adults who stutter; AWNS = adults who do not stutter. Error bars show the standard error of the mean.

There were no significant within-group or within-condition findings observed for shifter AWS related to determinism other than the fact that they exhibited greater determinism than the shifter AWNS (t = 2.70, p < .05). Nonshifter AWS exhibited greater determinism than nonshifter AWNS (t = 2.33, p < .05) and exhibited greater determinism (t = −6.05, p < .001) and stability (t = −4.72, p < .001) in the audience condition compared with the nonaudience condition.

Duration

The AWS exhibited longer utterance durations than AWNS across all conditions and sentences (t = 3.26, p < .01). A significant Group × Condition interaction warranted examination into within-group differences between conditions, but no between-conditions differences were found.

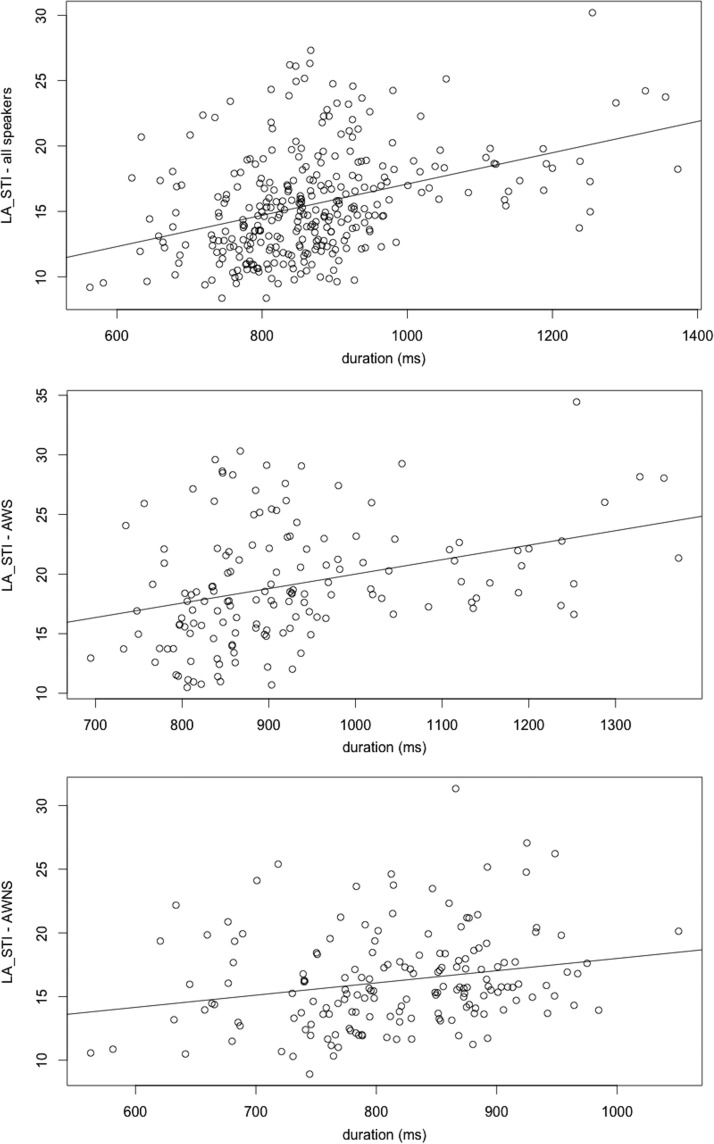

Because there have been questions related to the influence of duration on kinematic speech measures (specifically those assessing across-sentence variability), Pearson's correlations were calculated between the dependent variables and utterance duration. There was a positive correlation between duration and across-sentence variability (LA STI; r = .50, p = .001); this correlation approached significance for AWS (r = .42, p < .10) but not AWNS (r = .22, p > .10; see Figure 7). Significant correlations were not observed overall for %DET (r = .30, p > .10); this correlation was not significant for AWS (r = .11, p > .10) but was significant for AWNS (r = .64, p < .01). Significant correlations were observed for MAXLINE (r = .81, p < .001), which held for AWS (r = .78, p < .001) and AWNS (r = .86, p < .001).
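For reference, Pearson's r can be computed as in the sketch below. The function name and the duration and STI values are hypothetical and are not data from this study.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-speaker mean durations (ms) and LA STI values.
durations = [980.0, 1040.0, 1100.0, 1210.0, 1300.0]
sti_values = [14.2, 13.8, 16.1, 17.5, 16.9]
r_duration_sti = pearson_r(durations, sti_values)
```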

Figure 7.

Correlations between lip aperture spatiotemporal index and average duration for all speakers (top), adults who stutter (AWS; middle), and adults who do not stutter (AWNS; bottom).

Discussion

The primary purpose of this study was to examine the impact of social–cognitive stress on speech variability, determinism, and stability in AWS and AWNS. Overall (i.e., across conditions and sentence types), the AWS exhibited greater across-sentence variability than the AWNS, corroborating previous findings (e.g., Cai et al., 2011; Kleinow & Smith, 2000; MacPherson & Smith, 2013; Smith et al., 2010). However, the speech of the AWS within sentences was also characterized as more deterministic and stable compared with the speech of the AWNS. Social–cognitive stress (i.e., the presence of an audience) increased within-sentence determinism and stability in the AWS but did not affect across-sentence variability. A subset of the data including those speakers who reported a marked increase in anxiety between the nonaudience and audience condition revealed that these shifter AWS exhibited reduced variability in the audience condition compared with the nonaudience condition. The AWS exhibited longer utterance durations than the AWNS across conditions and sentences, and this was not necessarily due to social–cognitive or linguistic stress.

Greater across-sentence variability and greater within-trial determinism and stability in AWS compared with AWNS suggest that AWS are less able to maintain consistent speech patterns across repeated utterances as well as between articulatory gestures within utterances. These findings were not surprising. If stuttering signatures during perceptually fluent speech are indeed intermittent (here, from utterance to utterance), it follows that some sentences will be produced with a greater degree of patterning than others. If some sentences reveal high within-sentence determinism and stability and others do not, variability measured across trials will be higher than if all of the sentences were produced with typical determinism or stability. Commenting on the complex relationship between variability in behavior and determinism, Riley and Turvey (2002) argue that “more variable does not mean more random and more controllable does not mean more deterministic” (p. 99; also see van Lieshout & Namasivayam, 2010). It may be that increased across-sentence variability coupled with increased within-trial determinism and stability reflect a lack of speech motor control, perhaps indicating excessive determinism and overstability. Stressing the speech motor system allowed us to further test this hypothesis.

Impact of Audience Presence

Speaking in front of an audience has been shown to increase overt stuttering for AWS (Commodore, 1980; Steer & Johnson, 1936; Van Riper & Hull, 1955; von Krais Porter, 1939). Our study examined the influence of audience presence on sentence-level speech kinematics during connected speech. The AWS and AWNS exhibited similar speech dynamics during the nonaudience condition, but the AWS were more deterministic and stable compared with the AWNS in the audience condition and more deterministic in the audience condition compared with the nonaudience condition. These findings indicate that AWS may adopt a rigid approach to speech production to compensate for underlying difficulty secondary to social–cognitive stress (i.e., the presence of an audience). This interpretation is in line with proposals by van Lieshout and colleagues (Namasivayam & van Lieshout, 2011; van Lieshout et al., 2004) and Alm (2014) that AWS are less flexible in adapting to social, cognitive, and/or linguistic stressors.

The findings reported in the previous paragraph are also in line with the constrained action hypothesis, which postulates that diverting an actor's attention to external stimuli facilitates motor performance, whereas diverting attention internally (i.e., to the body) impedes performance (e.g., Kal, Van der Kamp, & Houdijk, 2013; McNevin, Shea, & Wulf, 2003; Wulf, McNevin, & Shea, 2001). That is, focusing attention internally during relatively simple motor tasks may have the effect of interfering with motor actions because these tasks would otherwise be automatic. Social–cognitive stress, which involves expectations by the speaker for fluent speech or expectations regarding how the listener will respond to the speaker, may cause a speaker who is already susceptible to interference to shift attention to his or her speech production system (i.e., internally). This increased focus (or overfocus; also see Vasic & Wijnen, 2005) may therefore interfere with the otherwise subconscious process of speech production.

Shifters

The AWS overall exhibited similar across-sentence variability in the audience and nonaudience conditions even though they were different for within-sentence determinism and stability. The shifter AWS exhibited reduced across-sentence variability during the audience condition compared with the nonaudience condition. It is interesting to note that the nonshifter AWS exhibited greater across-sentence variability and greater within-sentence determinism or stability during the audience condition compared with the nonaudience condition. The nonshifter AWS results appear to more closely align with the general findings (including all AWS and AWNS) that AWS exhibit greater speech variability than AWNS, whereas the shifter AWS reveal a pattern in the opposite direction (i.e., reduced variability in the audience condition). These findings provide evidence that those AWS who are more prone to experience a social–cognitive response such as anxiety during a speaking task will also be more likely than AWS who are less prone to experience anxiety to alter their approach to speech production (i.e., by becoming less variable across trials). That nonshifter AWS exhibited greater variability in the audience condition compared with the nonaudience condition highlights the critical impact that self-reported anxiety plays in shaping speech behaviors.

Previous work shows that AWS exhibit greater across-sentence variability when their speech systems are stressed linguistically (Cai et al., 2011; Jackson, 2015; Kleinow & Smith, 2000). It is reasonable to speculate that this effect holds for shifter AWS because anxiety is not known to interact with linguistic functioning. The current work shows that the shifter AWS became less variable when social–cognitive stress (i.e., audience presence) was introduced. It appears that linguistic and social–cognitive stress may differentially affect shifter AWS; linguistic stress results in increased variability, and social–cognitive stress results in decreased variability. One interpretation is that linguistic stress contributes to a reduced ability to preserve speech kinematics across repeated productions, whereas social–cognitive stress contributes to a reduced capacity to maintain the necessary variability required for speech production (i.e., to flexibly and fluently move through speech movements). This claim requires further testing.

Utterance Duration

The finding that AWS exhibited longer target utterance durations than AWNS for all sentences was expected on the basis of previous results (e.g., Bloodstein, 1944; Colcord & Adams, 1979; Jackson, 2015; Starkweather & Myers, 1979). Given the significant correlation between duration and across-sentence variability (i.e., STI), it followed that variability should be higher for those utterances with longer durations. This was mostly the case: AWS overall exhibited longer durations and greater across-sentence variability. Furthermore, the current results indicate that rate influenced variability more for AWS compared with AWNS, suggesting that the within-group durational variance was contributing in some way to these correlations. However, there is also evidence from Jackson (2015) that does not support the hypothesis that duration influences across-sentence variability measures. For example, one of the more complex sentences (i.e., P1) yielded higher across-sentence variability than the Base phrase. It may have been expected, then, that P1 should also have exhibited longer durations than Base, but this was not the case (Jackson, 2015). Although there is evidence that rate influences across-sentence variability (i.e., the STI) from the current and prior work (Dromey et al., 2014; Lucero et al., 1997; Smith & Goffman, 1998; Smith et al., 1995; Smith & Kleinow, 2000), it cannot be concluded that fluctuations in the STI are solely due to durational fluctuations. It was difficult to parse the effect of duration in this study because it was treated as an additional dependent variable, one that changed on the basis of the same factors as STI and RQA (e.g., group, sentence, condition). Thus, it was not included as a fixed or random effect in any of the statistical models. For a detailed discussion regarding the influence of duration on the STI, see Jackson et al. (2016).

Within-sentence determinism appeared to be robust to durational effects, as demonstrated by the lack of a significant correlation between utterance duration and determinism. This was expected given that determinism is (a) reflected by a percentage value of the number of points out of all possible points that are considered to be patterned and (b) not subject to durational variability across a set of trials (because it is measured within trials). Within-sentence stability did, however, appear to be influenced by duration. This was not surprising either given that stability (i.e., MAXLINE) is calculated as the longest string of consecutive diagonal points in the recurrence plot. Longer time series for relatively stable processes (i.e., repeating a simple utterance that yields an approximately sinelike pattern) will yield longer consecutive strings of data points and, thus, greater stability. The interpretation of stability therefore requires consideration because AWS as a group are known to exhibit a slower rate of speech compared with AWNS. Thus, increased stability in AWS may be in part due to increased duration and not solely representative of speech stability.

Theoretical Implications

Speech variability is a complex phenomenon that can be assessed in various ways (e.g., within and across sentences), and different approaches can lead to different interpretations (van Lieshout & Namasivayam, 2010). For example, the STI reveals that the speech kinematics of AWS are generally more variable than those of AWNS across sentences or utterances, whereas RQA indicates that AWS are also more deterministic and stable within sentences or utterances. Without using within-sentence measures, one may interpret increased across-sentence variability as an indication of reduced global stability secondary to randomness or noise in the speech motor system (e.g., see Smith et al., 1995). However, increased levels of determinism and stability within sentences show that the speech of these same speakers may be characterized as overly stable or produced in such a way that the speaker is attempting to use too much control (see also Kleinow and Smith, 2000, who reported that some AWS exhibited STI values at the low end of the variability continuum). We approach this problem from a dynamical systems perspective in which complex biological systems are simultaneously variable and stable and motor variability comprises both deterministic and random components (M. A. Riley & Turvey, 2002).

From a dynamical perspective, a system that is overly deterministic and overly stable represents a system on the verge of breaking down. For example, prior work reveals that pathological systems are characterized by increased regularity or stereotypical (i.e., less flexible) behavioral patterns (Goldberger, 1997), and both individuals with Parkinson's disease (Schmit et al., 2006) and stroke patients (Ghomashchi et al., 2011) have been shown to exhibit greater determinism than control participants. McClean, Levandowski, and Cord (1994) found that AWS are less variable than AWNS on various timing measures (e.g., onset of first vowel glottal cycle, maximum point of jaw displacement). Kalveram (1993) demonstrated through implementation of a neural network model of sensorimotor learning that excessively strong couplings between underlying neuronal populations responsible for speech led to reduced motor variability and, subsequently, stuttering. Typically functioning systems are continuously cycling through stability and instability such that instabilities are required in order for changes in states (e.g., gestural configurations) to occur. Findings of greater determinism and greater stability in AWS may represent (intermittent) rigidity and overstability, which may be reflective of a compromised system—one that exhibits difficulty in, for example, transitioning between gestures (i.e., between onset and nucleus; Heyde, Scobbie, Lickley, & Drake, 2016; Wingate, 1988). This hypothesis would benefit from testing within a neurocomputational framework of stuttering. This work is currently ongoing by members of our research team.

Another important finding was that social–cognitive and linguistic stressors appear to differentially affect across-sentence variability in AWS, particularly for speakers who are more susceptible to becoming anxious (i.e., shifters). Social–cognitive stress appears to contribute to more restrictive and less variable across-sentence speech movements in AWS, whereas linguistic stress contributes to more variable across-sentence movements in AWS. Our results provide additional support for the multifactorial view of stuttering (e.g., Conture et al., 2006; Namasivayam & van Lieshout, 2011; Smith & Kelly, 1997; Zimmermann, 1980). However, it is critical that theoretical accounts reflect the reality that contextual stressors affecting differing underlying processes (i.e., social–cognitive, linguistic) may differentially affect the speech motor system in AWS.

Clinical Implications

That AWS are more deterministic in their movements during speech production suggests that AWS adopt a more restrictive or rigid speech pattern to maintain fluent speech despite underlying speech difficulty (see also Namasivayam & van Lieshout, 2011). This strategy is likely associated with increased muscle tension during speech production. Webber, Schmidt, and Walsh (1995) found that unimpaired participants exhibited greater determinism in electromyographic activity during heavy compared with light lifting. This may be similar to what happens in AWS when their systems are stressed in a social–cognitive way. It is well known clinically that AWS exhibit significant articulator tension, facial and neck tension, or tension in the chest or other parts of the body as a result of stuttering. Most treatment approaches involve some aspect of reducing tension during speech production. For example, a “pullout” is a strategy in which speakers identify articulatory tension during a stuttering event and subsequently (attempt to) reduce that tension to continue with speech production. Likewise, “light articulatory contacts” and “easy onsets” are speaking strategies in which speakers initiate phonation with reduced tension, either between the articulators (e.g., for consonant initial) or in the larynx (e.g., for vowel initial). However, there is little quantitative evidence to support the implementation of these strategies. It is possible that measuring determinism could facilitate the therapeutic approach of reducing tension because it provides a straightforward index (percentage) that may represent a degree of tension or restriction during speech. It may be that increasing deterministic structure allows the AWS to maintain fluent speech up to a certain point but simultaneously is maladaptive in that the speaker is teetering on the border of overt stuttering. 
This may be the “tenuous fluency” that Adams and Runyan (1981) used to characterize speech that was not overtly stuttered but was not fluent either. Allowing speakers to find individually specific ranges of determinism or “sweet spots” with the use of objective measures such as determinism may facilitate productive speaking strategies during those times that the speech of AWS is on the verge of breaking down. Identifying utterances that are individually difficult for AWS and then using the same approach to identify determinism as detailed in this article may be beneficial clinically. Of course, the usefulness of such an approach needs to be tested.

The approach that many AWS take to coping with stuttering (tensing or “pushing”) is likely maladaptive. Indeed, most approaches to stuttering therapy (e.g., stuttering modification, “normal talking,” fluency shaping), in one way or another, propose that AWS produce speech with less tension (e.g., pullouts, light articulatory contacts/approximations; Johnson, Knott, & Leutenegger, 1955; Lanyon, Barrington, & Newman, 1976; Manning, 2009; Van Riper, 1973; Williams, 1957; Yairi & Seery, 2015). Given that, in the present study, the AWS who exhibited higher anxiety levels were those who tended to change their speaking approach to a greater degree (e.g., by becoming more restrictive, increasing muscle tension), it follows that speakers who exhibit communicative anxiety would benefit from desensitization procedures in therapy.

Other Considerations

Jackson et al. (2016) highlighted several considerations related to the use of RQA applied to speech production (e.g., a priori parameter selection, assumptions regarding time series cyclicity, and dimensionality). Three additional considerations are made here. First, differences in language ability between AWS and AWNS may have contributed to differences in speech variability. Language abilities were estimated using the EOWPVT-3. Although the mean AWNS score was greater than that of the AWS, the difference was not statistically significant. Nevertheless, it cannot be ruled out that subtle language differences between the two groups went undetected and differentially affected speech variability.

Second, the results may also have been influenced by prior therapy experience. It is likely that for some of the AWS, the use of speaking strategies (e.g., reducing rate, easily transitioning between gestures) affected variability, determinism, and/or stability measures. In addition, although therapy history was included in Table 1, it was not included as a factor in any of the statistical models because it was too varied and too dependent on specific therapists providing the treatment. A strong clinical argument can also be made that self-learned strategies (i.e., those not learned in therapy) are just as—if not more—impactful on speech kinematics. For example, many AWS who first enroll in therapy report using strategies such as slowing down, taking a breath, thinking about what words to use, and so on. It is undeniable that these kinds of strategies will affect across- and within-sentence measures. This is an important issue and should be explored in future work.

Third, data were collected in a controlled laboratory (i.e., unnaturalistic) environment. That is, participants were required to read relatively simple sentences from a monitor in the confines of a laboratory. Because stuttering is a disorder that primarily manifests in meaningful communicative exchanges, there may be concern related to how generalizable the current results are. The inclusion of an audience was meant to approximate a social environment, but it was intentionally noninteractive. Furthermore, the purpose of the study was to quantify and investigate subtle speech differences between AWS and AWNS. Thus, although this study may lack ecological validity, the controlled nature of the approach revealed speech differences that may not have been evident if data were collected in more naturalistic communicative contexts. That said, a goal of future research is to use RQA in more ecologically valid environments.

Conclusions

This study investigated the real-time impact of social–cognitive stress on speech motor control in AWS and AWNS by assessing across-sentence variability and within-sentence determinism and stability. Overall, AWS were more variable across utterances (i.e., sentences) and more deterministic and stable within these sentences compared with AWNS. The presence of an audience contributed to greater within-sentence determinism and stability in AWS, suggesting that AWS adopt an approach to speaking that is more restrictive and perhaps less flexible than that of AWNS. A subgroup of AWS who were more susceptible to experiencing anxiety exhibited reduced across-sentence variability when speaking in the presence of an audience, suggesting that these AWS adopt an approach to speaking that is different from that of AWS who are not as affected by social–cognitive stress. Future work should examine the impact of other stressors (e.g., speaking in different types of stressful situations or while engaging in dual tasks) on both across-sentence variability and within-sentence determinism and stability in AWS and AWNS. The methods used in this article can easily be applied to children to assess how variability changes across development. Furthermore, investigators should apply RQA to more complex speech examples (i.e., not repetitions of simple utterances) as well as to nonspeech movements (e.g., postural sway, head movement). Last, it will be revealing to examine correlations between kinematic (and acoustic) and neurophysiological data using the techniques described in this article.

Acknowledgments

This research was supported by National Institutes of Health Grant DC-002717 to Haskins Laboratories and National Science Foundation Grant 1513770 to the first author. The authors acknowledge Tricia Zebrowski and Michael A. Riley for helpful comments during the writing of this article. The authors also acknowledge the National Stuttering Association for helping with participant recruitment. The MATLAB procedures implemented for phase space reconstruction and recurrence quantification analysis were obtained at the American Psychological Association Advanced Training Institute on Nonlinear Methods for Psychological Science (http://www.apa.org/science/resources/ati/nonlinear.aspx).

Appendix

Audience and Nonaudience Debriefing Questionnaire

These questions will be read to each participant:

Did anybody come into the room during testing? Y / N

If so, how many people were there (besides the examiner)?

What do you think the gender(s) of the observer(s) was(were)?

Did you experience anxiety when people weren't in the room (besides the examiner)?

1 2 3 4 5 6 7 (1 = No anxiety, 4 = Moderate anxiety, 7 = Extreme anxiety)

Did you experience anxiety when people entered the room?

1 2 3 4 5 6 7 (1 = No anxiety, 4 = Moderate anxiety, 7 = Extreme anxiety)

Did you feel differently when the observers were in the room versus out of the room? If so, how?

Footnotes

Speaking or performing in the presence of an audience may elicit both cognitive and emotional responses (i.e., autonomic arousal), but disentangling these overlapping processes was not the focus of the current study. Here, anxiety refers to a cognitive response including evaluation of and reflection on the current situation.

Multilingual speakers were not excluded because it was determined that the benefits of including them (e.g., larger sample, more heterogeneous group) outweighed potential confounds (e.g., decreased language and/or speech ability due to less exposure to English).

The current work was not intended to provide a comprehensive examination of anxiety in AWS and AWNS. Anxiety as it relates to stuttering is a complex phenomenon that has social, cognitive, and emotional components. The term anxiety was used in the questionnaire to increase the probability that participants would be able to (a) identify with the kind of social–cognitive stress that was introduced during the experiment (i.e., speaking in front of an audience) and (b) report it.

Known as LA variability index in previous studies (e.g., Kleinow & Smith, 2006; MacPherson & Smith, 2013).

Phase space reconstruction and RQA require a priori parameter selection (e.g., delay length, dimension size, radius, line length). For a complete discussion regarding parameter selection, see Webber and Zbilut (2005).
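
To make the parameters named in this footnote concrete, the following is a minimal Python sketch of how percent determinism could be computed from a single time series. It is an illustration under stated assumptions, not the MATLAB procedures used in this study: the function names (embed, recurrence_matrix, percent_determinism), the Euclidean distance norm, the unscaled radius, and the toy parameter values are all hypothetical choices made for the example.

```python
import math


def embed(series, delay, dim):
    """Time-delay embedding: point i is (x[i], x[i+delay], ..., x[i+(dim-1)*delay])."""
    n_points = len(series) - (dim - 1) * delay
    if n_points <= 0:
        raise ValueError("series too short for this delay and dimension")
    return [tuple(series[i + k * delay] for k in range(dim)) for i in range(n_points)]


def recurrence_matrix(points, radius):
    """Mark pairs of embedded points that fall within `radius` of each other.

    Assumption: plain Euclidean distance and an unscaled radius.
    """
    n = len(points)
    return [[math.dist(points[i], points[j]) <= radius for j in range(n)]
            for i in range(n)]


def percent_determinism(rec, min_line):
    """Percentage of recurrent points lying on diagonal lines of length >= min_line.

    Only the upper triangle is scanned: the matrix is symmetric and the main
    diagonal (each point compared with itself) is excluded.
    """
    n = len(rec)
    total = 0      # all recurrent points in the upper triangle
    on_lines = 0   # recurrent points that belong to long-enough diagonal lines
    for offset in range(1, n):
        run = 0
        for i in range(n - offset):
            if rec[i][i + offset]:
                total += 1
                run += 1
            else:
                if run >= min_line:
                    on_lines += run
                run = 0
        if run >= min_line:  # close a line that reaches the edge of the matrix
            on_lines += run
    return 100.0 * on_lines / total if total else 0.0


# Toy example (not study data): a perfectly periodic signal.
signal = [0, 1, 2, 3] * 10
points = embed(signal, delay=1, dim=2)
rec = recurrence_matrix(points, radius=0.0)
print(percent_determinism(rec, min_line=2))  # prints 100.0
```

In this toy case every recurrent point sits on a long diagonal line, so determinism is at ceiling; isolated recurrences that do not extend into lines would lower the percentage. With real kinematic signals, the delay, dimension, radius, and minimum line length would need to be chosen as discussed by Webber and Zbilut (2005).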

References

- Adams M. R., & Runyan C. M. (1981). Stuttering and fluency: Exclusive events or points on a continuum? Journal of Fluency Disorders, 6, 197–218.

- Alm P. A. (2004). Stuttering, emotions, and heart rate during anticipatory anxiety: A critical review. Journal of Fluency Disorders, 29, 123–133.

- Alm P. A. (2014). Stuttering in relation to anxiety, temperament, and personality: Review and analysis with focus on causality. Journal of Fluency Disorders, 40, 5–21.

- Ambrose N. G., Yairi E., Loucks T. M., Seery C. H., & Throneburg R. (2015). Relation of motor, linguistic and temperament factors in epidemiologic subtypes of persistent and recovered stuttering: Initial findings. Journal of Fluency Disorders, 45, 12–26. doi:10.1016/j.jfludis.2015.05.004

- Anderson A., Lowit A., & Howell P. (2008). Temporal and spatial variability in speakers with Parkinson's disease and Friedreich's ataxia. Journal of Medical Speech-Language Pathology, 16, 173–180.

- Arenas R. M. (2012). The role of anticipation and an adaptive monitoring system in stuttering: A theoretical and experimental investigation (Doctoral dissertation). Retrieved from Iowa Research Online, http://ir.uiowa.edu/etd/2812/

- Armson J., & Kalinowski J. (1994). Interpreting results of the fluent speech paradigm in stuttering research: Difficulties in separating cause from effect. Journal of Speech and Hearing Research, 37, 69–82.

- Baayen R. H. (2008). Analyzing linguistic data: A practical introduction to statistics using R. Cambridge, United Kingdom: Cambridge University Press.

- Baayen R. H., Davidson D. J., & Bates D. M. (2008). Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language, 59, 390–412.

- Barr D. J., Levy R., Scheepers C., & Tily H. J. (2013). Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language, 68, 255–278.

- Bates D. M., Maechler M., Bolker B., & Walker S. (2014). lme4: Linear mixed-effects models using Eigen and S4 (Version R, Package Version 1.1-7). Retrieved from http://CRAN.R-project.org/package=lme4

- Bloodstein O. (1944). Studies in the psychology of stuttering XIX: The relationship between oral reading rate and severity of stuttering. Journal of Speech Disorders, 9, 161–173.

- Bloodstein O., & Bernstein-Ratner N. (2008). A handbook on stuttering (6th ed.). New York, NY: Thomson-Delmar.

- Cai S., Beal D. S., Tiede M. K., Perkell J. S., Guenther F. H., & Ghosh S. S. (2011, November). Relating the kinematic variability of speech to MRI-based structural integrity of brain white matter in people who stutter and people with fluent speech. Poster presented at the Society for Neuroscience Annual Meeting, Washington, DC.

- Colcord R. D., & Adams M. R. (1979). Voicing duration and vocal SPL changes associated with stuttering reduction during singing. Journal of Speech and Hearing Research, 22, 468–479.

- Commodore R. W. (1980). Communication stress and stuttering frequency during normal, whispered and articulation-without phonation speech: A further study. Human Communication, 5, 143–150.

- Conture E. G., Walden T. A., Arnold H. S., Graham C. G., Hartfield K. N., & Karrass J. (2006). Communication-emotional model of stuttering. Current Issues in Stuttering Research and Practice, 2, 17–47.

- Dromey C., Boyce K., & Channell R. (2014). Effects of age and syntactic complexity on speech motor performance. Journal of Speech, Language, and Hearing Research, 57, 2142–2151.

- Eckmann J.-P., Kamphorst S. O., & Ruelle D. (1987). Recurrence plots of dynamical systems. Europhysics Letters, 4, 973–977.

- Evans D. L. (2009). The effect of linguistic, memory, and social demands on the speech motor control and autonomic response of adults who stutter (Doctoral dissertation). Retrieved from DigitalCommons at the University of Nebraska–Lincoln, http://digitalcommons.unl.edu/dissertations/AAI3352378/

- Ghomashchi H., Esteki A., Nasrabadi A. M., Sprott J. C., & Bahrpeyma F. (2011). Dynamic patterns of postural fluctuations during quiet standing: A recurrence quantification approach. International Journal of Bifurcation and Chaos, 21, 1163–1172.

- Goldberger A. L. (1996). Non-linear dynamics for clinicians: Chaos theory, fractals, and complexity at the bedside. The Lancet, 347, 1312–1314.

- Goldberger A. L. (1997). Fractal variability versus pathologic periodicity: Complexity loss and stereotypy in disease. Perspectives in Biology and Medicine, 40, 543–561.

- Guitar B. (2003). Acoustic startle responses and temperament in individuals who stutter. Journal of Speech, Language, and Hearing Research, 46, 233–240.

- Heyde C. J., Scobbie J. M., Lickley R., & Drake E. K. E. (2016). How fluent is the fluent speech of people who stutter? A new approach to measuring kinematics with ultrasound. Clinical Linguistics & Phonetics, 30, 292–312. doi:10.3109/02699206.2015.1100684

- Jackson E. S. (2015). Variability, stability, and flexibility in the speech kinematics and acoustics of adults who do and do not stutter (Doctoral dissertation). Retrieved from CUNY Academic Works, http://academicworks.cuny.edu/gc_etds/986/