Significance

Knowledge about other people is critical for group survival and may have unique cognitive processing demands. Here, we investigate how person knowledge is represented, organized, and retrieved in the brain. We show that the anterior temporal lobe (ATL) stores an abstract person identity representation that is commonly embedded in multiple sources (e.g., face, name, scene, and personal object). We also show that the ATL serves as a “neural switchboard,” coordinating with a network of other brain regions in a rapid and need-specific way to retrieve different aspects of biographical information (e.g., occupation and personality traits). Our findings endorse the ATL as a central hub for representing and retrieving person knowledge.

Keywords: person knowledge, anterior temporal lobe, person identity node, semantic memory, social neuroscience

Abstract

Social behavior is often shaped by the rich storehouse of biographical information that we hold for other people. In our daily life, we rapidly and flexibly retrieve a host of biographical details about individuals in our social network, which often guide our decisions as we navigate complex social interactions. Even abstract traits associated with an individual, such as their political affiliation, can cue a rich cascade of person-specific knowledge. Here, we asked whether the anterior temporal lobe (ATL) serves as a hub for a distributed neural circuit that represents person knowledge. Fifty participants across two studies learned biographical information about fictitious people in a 2-d training paradigm. On day 3, they retrieved this biographical information while undergoing an fMRI scan. A series of multivariate and connectivity analyses suggest that the ATL stores abstract person identity representations. Moreover, this region coordinates interactions with a distributed network to support the flexible retrieval of person attributes. Together, our results suggest that the ATL is a central hub for representing and retrieving person knowledge.

As social creatures, it is essential that we develop a rich storehouse of knowledge about other members of our social network, such as who they are, how they look and sound, where they live, and what they do for a living. However, little is known about how and where such “person knowledge” is represented, stored, and retrieved in the brain. This inquiry is challenging because person knowledge is highly multimodal and multifaceted, being linked to both abstract features such as personality and social status as well as more concrete features such as eye color; in addition, familiar individuals are associated with detailed episodic and semantic memories (e.g., memories of shared experiences and biographical information) (1, 2). The neural circuit for person knowledge must therefore have the ability to combine multiple sources of information into an abstract representation accessible from multiple cues.

An influential theory by Burton and Bruce (3) proposes that person recognition is achieved through a hierarchical process that begins with the activation of modality-specific recognition units that selectively respond to the presence of a known face, name, or voice. This information is then sent to an amodal person identity node (PIN) that integrates information from the modality-specific recognition units into a multimodal representation for that individual. Excitation of the PIN ultimately allows the retrieval of person-specific semantic information independently of stimulus modality (4, 5). A similar design is embedded in the “hub-and-spoke” theory of semantic knowledge, which proposes that different features of a concept (such as its color or taste) are distributed throughout the brain (the “spokes”) and that a centralized “hub” integrates these features into a coherent, modality-invariant concept (6–8).

Here, we tested the hypothesis that a region in the anterior temporal lobe (ATL) has a function akin to a person identity node (1, 9, 10), subserving access to abstract person identity representations that can be retrieved from multiple cues, such as when you see a photo of Graceland and it cues your knowledge of Elvis (study 1). Next, we asked whether the ATL acts as a neural switchboard, performing in concert with other brain regions to enable the retrieval of different facets of person knowledge in a flexible and context-appropriate manner (study 2). We focus on the ATL because multiple lines of evidence from neuropsychology, electrophysiology, and neuroimaging have documented the critical role of the ATL in person identification (4, 5, 11–16), person-related learning (10, 17–21), semantic memory (6–8), and abstract social knowledge (1, 22–33). Individuals with ATL damage due to resection or stroke have multimodal person recognition deficits (34), lose access to stored knowledge about familiar people (35, 36), and have difficulties learning information about new people (4, 22, 37, 38). A subregion of the ATL contains a face-sensitive patch, first identified in monkeys, and more recently in humans (9).

Across two fMRI studies, 50 participants learned biographical information about a group of fictitious people for 2 d and then completed a person memory test in the MRI scanner on day 3. In study 1, we used stimuli from different categories commonly associated with familiar people (e.g., faces, names, homes, and objects) to cue memories for specific individuals (Fig. 1A). We compared the similarity of response patterns elicited by stimuli from different categories but associated with the same individual to test the abstract person representation properties of the ATL. In study 2, we asked participants to recollect specific content of fictitious people’s biographies (Fig. 1B) and examined whether the ATL coordinates the retrieval of different aspects of person knowledge by recruiting the activation of different brain regions depending on task requirements.

Fig. 1.

Training material and fMRI task depiction. (A) In study 1, participants learned biographical details (i.e., face, name, age, marital status, occupation, city of residence, and current house) of four fictitious males. (B) In the scanner, participants completed a person memory task where they first viewed a series of stimuli that cued a particular fictitious male and then answered a question about that person. Each run presented stimuli only in one specific category (i.e., face run, name run, house run, or object run). (C) In study 2, participants learned different biographic details (i.e., face, name, occupational status, and personality trait) associated with a different set of fictitious males. Status and trait information were manipulated in a 2 × 2 factorial design. (D) In the scanner, participants completed a person memory task in which they had to indicate the status or personality trait associated with the learned males cued by either a particular face or name. Participants also performed a control condition task (i.e., nonmemory baseline) where they saw unfamiliar faces and names and indicated the spatial location of the stimuli on the screen.

In study 1, findings from a series of multivariate pattern analyses (MVPAs) consistently suggested that portions of the ATL represent person knowledge in an abstract form that is divorced from the ground state of a person’s face or name. Specifically, the ATL contains multivoxel patterns for facial identity that are similarly sensitive to identity accessed through a person’s name, an image of their home, or even an object associated with that person’s occupation. In study 2, we found that the ATL person identity node is embedded in a neural circuit that is consistently engaged during person memory tasks. Multivariate analyses suggested that different content areas of person knowledge—social status, personality traits, and identity—were represented in discrete nodes within this distributed person identification circuit. Connectivity analyses further revealed that the ATL may serve as a “neural switchboard” and is capable of coordinating the flow of person-specific information between sensory brain regions that encode incoming cues and other nodes of this circuit that are engaged when retrieving specific person knowledge content.

Results

Study 1: The ATL Is a Person Identity Node.

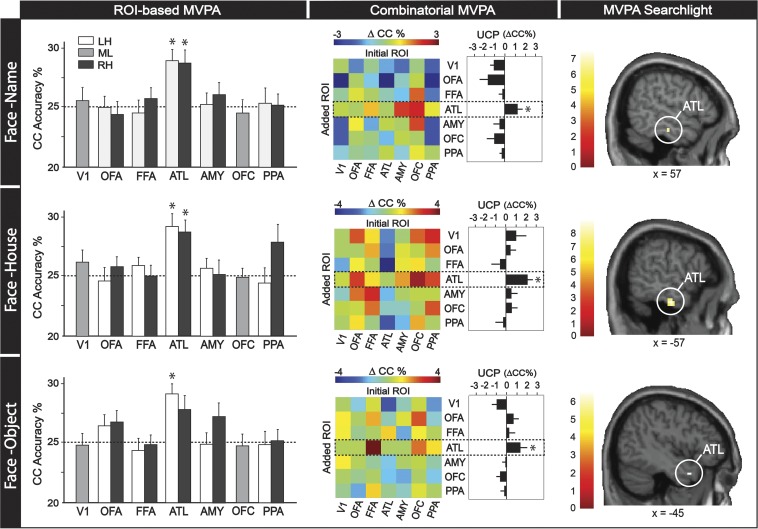

In study 1, we used two-way MVPA cross-category (CC) classification (39–42) to identify regions in which abstract and category-invariant person information is represented. The classifier was first trained to discriminate individual identities using one category of cues (e.g., that person’s face) and subsequently tested on a different category of cues (e.g., that person’s name), and vice versa (Materials and Methods). In this way, only the abstract conceptual information that was general to both stimulus categories was informative to the classifier. Three different decoding approaches were performed: regions of interest (ROI-based), combinatorial (43, 44), and whole-brain searchlight (11).
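The two-way scheme described above can be illustrated with a minimal Python sketch. It assumes a nearest-centroid classifier as a stand-in (the classifier actually used is specified in Materials and Methods, not here), and the two-voxel patterns and the label `Other` are made up for illustration.

```python
def centroid(patterns):
    """Mean pattern (one value per voxel) across trials."""
    return [sum(col) / len(patterns) for col in zip(*patterns)]

def sq_dist(a, b):
    """Squared Euclidean distance between two voxel patterns."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def fit(train):
    """train: {identity: [pattern, ...]} -> {identity: centroid}."""
    return {identity: centroid(p) for identity, p in train.items()}

def accuracy(model, test):
    """Fraction of test patterns assigned to the correct identity."""
    hits = total = 0
    for identity, patterns in test.items():
        for p in patterns:
            guess = min(model, key=lambda k: sq_dist(model[k], p))
            hits += int(guess == identity)
            total += 1
    return hits / total

def cross_category_accuracy(cat_a, cat_b):
    """Train on category A and test on B, then the reverse; average both."""
    return 0.5 * (accuracy(fit(cat_a), cat_b) + accuracy(fit(cat_b), cat_a))

# Hypothetical toy patterns: identity information is shared across categories.
face = {"Bill": [[1.0, 0.0]], "Other": [[0.0, 1.0]]}
name = {"Bill": [[0.9, 0.1]], "Other": [[0.2, 0.8]]}
print(cross_category_accuracy(face, name))
```

Because the classifier never sees the test category during training, above-chance accuracy in this sketch can only come from pattern structure that is common to both categories.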

In the ROI-based analyses, we tested whether identity-specific information in face-selective regions can generalize across different categories of cues that have been linked to a specific person (e.g., Bill’s face ↔ Bill’s name, Bill’s face ↔ Bill’s house, Bill’s face ↔ Bill’s object). A separate functional localizer was used to identify face-selective regions in both hemispheres including the ATL, occipital face area (OFA), fusiform face area (FFA), amygdala (AMY), and orbitofrontal cortex face patch (OFC), as well as a place-selective region [i.e., parahippocampal place area (PPA)] and a control region in early visual cortex (V1) (Fig. S1A). In the current paradigm, the face-selective ATL region was the only ROI that showed above-chance CC classification accuracy for “face ↔ name” [left ATL: t(23) = 3.114, P = 0.030; right ATL: t(23) = 3.941, P = 0.006], “face ↔ house” [left ATL: t(23) = 3.952, P = 0.006; right ATL: t(23) = 4.346, P = 0.001], and “face ↔ object” [left ATL: t(23) = 3.555, P = 0.012] identity representation (Fig. 2). None of the face-selective ROIs were able to cross-classify individual identities based on “nonface” stimulus pairs (i.e., “name ↔ house,” “name ↔ object,” “house ↔ object”), likely due to the face-selective nature of these ROIs (Fig. S2).
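The statistics reported above have the form of one-sample t tests of classification accuracy against chance; t(23) corresponds to 24 participants. A minimal Python sketch follows, assuming a chance level of 0.25 for four identities and using made-up per-participant accuracies. Any multiple-comparison correction behind the reported P values is not reproduced.

```python
from math import sqrt
from statistics import mean, stdev

def t_vs_chance(accuracies, chance):
    """One-sample t statistic and degrees of freedom against a chance level."""
    n = len(accuracies)
    standard_error = stdev(accuracies) / sqrt(n)
    return (mean(accuracies) - chance) / standard_error, n - 1

# Hypothetical accuracies for six participants (illustration only).
example_accuracies = [0.31, 0.27, 0.29, 0.24, 0.33, 0.28]
t_value, df = t_vs_chance(example_accuracies, chance=0.25)
print(f"t({df}) = {t_value:.3f}")
```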

Fig. S1.

Regions of interest (ROIs) localization. (A) Study 1 used a functional localizer to define five face-selective ROIs (i.e., bilateral: ATL, OFA, FFA, AMY; unilateral: OFC) as well as a control ROI in V1. A place-selective ROI (i.e., bilateral PPA) was also defined in study 1 because houses were an experimental category, but it is not rendered in the current figure. Each colored dot represents the subject-specific location of an ROI. (B) Study 2 used functional contrasts to define six regions that were significantly recruited during person memory tasks. We used the same ROIs for subsequent MVPA, PPI, and DCM analyses, and they all were unilateral (i.e., left-ATL, right-FFA, left-VWFA, left-IPL, midline-PCC, and left-HIPP). For illustrative purposes only, the left-HIPP ROIs are plotted on the right hemisphere in the current figure. Abbreviations: AMY, amygdala; ATL, anterior temporal lobe; FFA, fusiform face area; HIPP, hippocampus; IPL, inferior parietal lobe; OFA, occipital face area; OFC, orbitofrontal cortex; PCC, posterior cingulate cortex; VWFA, visual word form area.

Fig. 2.

MVPA analyses from study 1. Each column contains data from each of three decoding approaches (i.e., ROI-based, combinatorial, and searchlight); each row contains data from each of three cross-category (CC) classifications (i.e., face–name, face–house, and face–object). Three different decoding strategies were used to assess whether results were robust and generalized across different analytic methods. Asterisks indicate statistical significance, and error bars denote SE. Abbreviations: LH, left hemisphere; ML, midline; RH, right hemisphere; UCP, unique combinatorial performance.

Fig. S2.

Subthreshold results for MVPA analyses in study 1. Columns represent two decoding approaches (i.e., ROI-based, searchlight) and rows represent three “nonface” cross-category (CC) classifications (i.e., name–house, name–object, and house–object). For ROI-based analyses, null results were found for all ROIs, even when we used a lenient threshold (one-tailed t tests of P < 0.1, uncorrected). For searchlight analyses, ATL was the only region found in all nonface CC classifications when using a lenient voxel-level threshold of P < 0.0001 (uncorrected; Table S1). Abbreviations: LH, left hemisphere; ML, midline; RH, right hemisphere.

Next, we used a combinatorial MVPA analysis to validate the unique role of the face-selective ATL region (among all face-selective ROIs) for the category-invariant representation of person identity. Combinatorial analyses allow the estimation of the unique information carried within an ROI (43, 44). For every ROI in the present analysis, we calculated the average increase (or decrease) in CC classification accuracy when paired with other ROIs, referred to as the unique combinatorial performance (UCP). A positive UCP means that an ROI carries information that contributes to classification beyond what is obtained from other ROIs. A negative UCP means that information within an ROI is largely redundant with that of other ROIs. As expected, the results suggested that only the ATL displayed significantly positive UCP for face ↔ name [UCP = 1.027, t(5) = 2.879, P = 0.018], face ↔ house [UCP = 2.083, t(5) = 4.471, P = 0.004], and face ↔ object [UCP = 1.273, t(5) = 2.063, P = 0.047] identity representation (Fig. 2).
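One reading of the UCP description above can be sketched in Python. The sketch assumes that the gain for each partner ROI is the accuracy of the pair minus the accuracy of the partner alone, and that UCP is the mean gain across partners; the exact definition in refs. 43 and 44 may differ. All accuracies below are made up and are not the paper's results.

```python
from statistics import mean

def ucp_gains(roi, single_acc, pair_acc):
    """Accuracy change contributed by `roi` when added to each other ROI.

    single_acc: {roi_name: accuracy (%) using that ROI alone}
    pair_acc:   {frozenset({roi_a, roi_b}): accuracy (%) using both ROIs}
    """
    return [pair_acc[frozenset((roi, other))] - single_acc[other]
            for other in single_acc if other != roi]

def ucp(roi, single_acc, pair_acc):
    """Unique combinatorial performance: mean gain across partner ROIs."""
    return mean(ucp_gains(roi, single_acc, pair_acc))

# Hypothetical accuracies (%) for four ROIs and their six pairings.
single = {"ATL": 30.0, "FFA": 25.0, "OFA": 26.0, "V1": 24.0}
pairs = {
    frozenset(("ATL", "FFA")): 27.0,
    frozenset(("ATL", "OFA")): 29.0,
    frozenset(("ATL", "V1")): 27.5,
    frozenset(("FFA", "OFA")): 25.0,
    frozenset(("FFA", "V1")): 24.0,
    frozenset(("OFA", "V1")): 24.5,
}
for name in single:
    print(name, round(ucp(name, single, pairs), 3))
```

Under this reading, the per-partner gains returned by `ucp_gains` are the values that would enter a one-sample t test against zero.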

Finally, we conducted searchlight analyses to explore whether any regions in the brain beyond our face-selective ROIs can represent person identity across stimulus categories. Consistent with the previous two approaches, this analysis revealed significant clusters in the ATL for face ↔ name, face ↔ house, and face ↔ object CC classification (Fig. 2). Although we did not find any regions that represented identity across name ↔ house, name ↔ object, and house ↔ object at the standard threshold, further exploratory analyses suggest that the ATL was the only subthreshold region showing CC properties for all nonface CC pairs when using a lenient threshold (Fig. S2 and Table S1).

Table S1.

MVPA searchlight analysis in study 1

| Region | Hemisphere | Voxels | T | P (FWE-corr) | x | y | z |
|---|---|---|---|---|---|---|---|
| Face ↔ name cross-category classification | | | | | | | |
| Cerebellum | Midline | 6 | 7.55 | 0.002 | 6 | −85 | −32 |
| Anterior temporal lobe | R | 3 | 7.14 | 0.015 | 57 | −19 | −23 |
| Face ↔ house cross-category classification | | | | | | | |
| Anterior temporal lobe | L | 19 | 8.76 | 0.001 | −57 | −16 | −29 |
| Face ↔ object cross-category classification | | | | | | | |
| Anterior temporal lobe | L | 2 | 6.62 | 0.038 | −45 | 8 | −29 |
| Name ↔ house cross-category classification* | | | | | | | |
| Inferior temporal sulcus | R | 21 | 6.34 | 0.066 | 48 | −58 | −8 |
| Inferior parietal lobe | R | 21 | 5.91 | 0.145 | 51 | −52 | 43 |
| Anterior temporal lobe | R | 12 | 5.36 | 0.360 | 57 | 8 | −26 |
| Name ↔ object cross-category classification* | | | | | | | |
| Anterior temporal lobe | L | 22 | 6.49 | 0.053 | −36 | 14 | −23 |
| House ↔ object cross-category classification* | | | | | | | |
| Anterior temporal lobe | L | 14 | 5.43 | 0.311 | −60 | −10 | −26 |

For exploratory purposes, three cross-classification conditions (marked with an asterisk) used a lenient voxel-level threshold of P < 0.0001 (uncorrected).

Taken together, the CC MVPA analyses in study 1 suggest that the ATL may be the only brain region that holds an abstract representation of person identity that can be accessed via multiple stimulus categories. Previous studies using face or voice stimuli have found amodal representations of person identity in unimodal and multimodal face-processing regions, including the FFA, superior temporal sulcus, and the ATL (5, 16, 42, 45). In the present paradigm, the only region consistently demonstrating classification of identity across all stimulus types was the ATL. In addition, we found that the bilateral ATLs engage in cross-modal representation, whereas hemispheric asymmetry has been previously reported in the ATLs for unimodal stimuli (1, 31, 46, 47).

Study 2: The ATL Is the Hub of Flexible Person Memory Retrieval.

Univariate analyses of the neural circuit for person memory.

In study 2, we first used univariate analyses to identify brain regions that were strongly engaged when retrieving information associated with an individual (Fig. S3). The contrast of “all memory conditions > baseline” revealed a set of brain regions that included the ATL, inferior parietal lobe (IPL), posterior cingulate cortex (PCC), and hippocampus (HIPP). A similar set of brain regions was found for the contrasts of “status memory > baseline” and “trait memory > baseline.” When comparing memory conditions cued by two different categories (face vs. name), we found that the right fusiform gyrus (i.e., FFA) was activated more during face-cued memory, whereas left fusiform gyrus [also known as the visual word form area (VWFA)] was activated more during name-cued memory.

Fig. S3.

Univariate GLM analyses in study 2. Brain regions showing increased activations for (A) status memory; (B) trait memory; (C) face-cue processing; and (D) name-cue processing (E). Coordinates are listed in Table S6.

MVPA analyses for person knowledge representations.

The univariate analyses delineated a network of brain regions (ROIs) important for person knowledge retrieval (i.e., left ATL, right FFA, left VWFA, left IPL, PCC, and left HIPP) (Fig. S1B). Next, we used MVPA analyses to examine whether distinct regions within this network represent different aspects of knowledge associated with a person (e.g., that person’s status, personality traits, or identity). As knowledge content associated with an individual per se should be invariant to changes in the stimulus category used to cue that person, we collapsed our MVPA analysis of memory condition across the two cue categories (i.e., face and name). Here, we used the three decoding approaches used in study 1 and a “leave-one-run-out” cross-validation (CV) classification scheme to identify brain regions sensitive to different aspects of person knowledge.
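The “leave-one-run-out” scheme mentioned above can be sketched in Python as follows. The sketch assumes a one-nearest-neighbor classifier purely as a placeholder, and the run identifiers, two-voxel patterns, and labels are made up for illustration.

```python
def leave_one_run_out(runs):
    """Yield (held_out_run, train, test) for each fold.

    runs: {run_id: [(pattern, label), ...]}
    """
    for held_out in runs:
        train = [sample for run, samples in runs.items()
                 if run != held_out for sample in samples]
        yield held_out, train, list(runs[held_out])

def predict_1nn(train, pattern):
    """Label of the training pattern closest to `pattern` (placeholder classifier)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(train, key=lambda sample: sq_dist(sample[0], pattern))[1]

def cv_accuracy(runs):
    """Mean accuracy across folds; each run serves as the test set once."""
    fold_accuracies = []
    for _, train, test in leave_one_run_out(runs):
        correct = sum(predict_1nn(train, x) == y for x, y in test)
        fold_accuracies.append(correct / len(test))
    return sum(fold_accuracies) / len(fold_accuracies)

# Hypothetical data: three runs, two status labels, two voxels per pattern.
runs = {
    1: [([1.0, 0.0], "high"), ([0.0, 1.0], "low")],
    2: [([0.9, 0.2], "high"), ([0.1, 0.8], "low")],
    3: [([0.8, 0.1], "high"), ([0.2, 1.1], "low")],
}
print(cv_accuracy(runs))
```

Holding out whole runs, rather than individual trials, keeps training and test data from sharing within-run noise.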

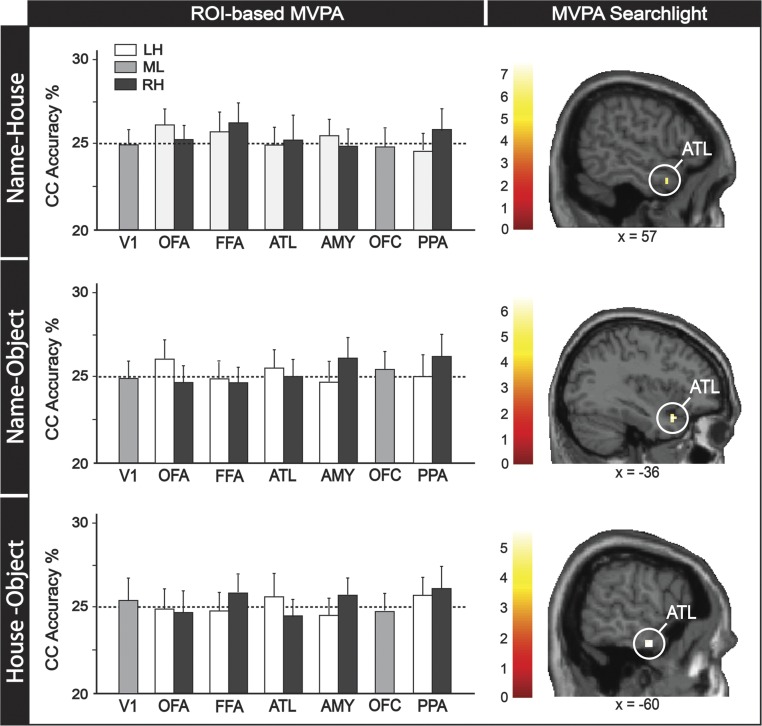

For status and personality-trait knowledge, ROI-based analyses suggested that the IPL was the only region within the person-knowledge network that accurately represented learned people’s occupational status [i.e., manager vs. janitor; t(25) = 4.500, P < 0.001], whereas the PCC was the only region that accurately represented trait information [i.e., whether a person was extroverted vs. introverted; t(25) = 3.192, P = 0.012] (Fig. 3). Combinatorial analyses confirmed these findings by showing the only positive UCP in IPL for status information [UCP = 2.372, t(4) = 3.767, P = 0.01] and the highest positive UCP in PCC for personality trait knowledge [UCP = 2.628, t(4) = 3.982, P = 0.008]. Searchlight analyses further identified significant clusters in IPL for status classification and PCC for trait classification (Table S2). These consistent results are in line with previous neuroimaging studies suggesting a critical role of the IPL in representing social status (48, 49) and of the PCC in representing personality traits (50–52).

Fig. 3.

MVPA analyses from study 2. Each column contains data from each of three decoding approaches (i.e., ROI-based, combinatorial, and searchlight); each row contains data from cross-validation (CV) classification for three types of knowledge representations (i.e., status, personality trait, and identity). Asterisks indicate statistical significance, and error bars denote the SE. Abbreviations: UCP, unique combinatorial performance.

Table S2.

MVPA searchlight analyses in study 2

| Region | Hemisphere | Voxels | T | P (FWE-corr) | x | y | z |
|---|---|---|---|---|---|---|---|
| Social status (i.e., manager vs. janitor) | | | | | | | |
| Cuneus | Midline | 101 | 10.49 | <0.001 | −21 | −70 | 10 |
| | | 169 | 8.81 | <0.001 | −3 | −91 | 19 |
| | | 16 | 7.19 | 0.010 | −18 | −97 | 22 |
| Postcentral gyrus | L | 112 | 9.08 | <0.001 | −48 | −22 | 61 |
| Inferior parietal lobe | L | 97 | 9.04 | <0.001 | −42 | −46 | 37 |
| | | | 7.73 | <0.001 | −30 | −64 | 43 |
| Inferior temporal gyrus | L | 21 | 8.32 | 0.001 | −48 | −70 | −2 |
| Personality trait (i.e., extrovert vs. introvert) | | | | | | | |
| Cuneus | Midline | 432 | 11.08 | <0.001 | 21 | −94 | 4 |
| Precentral gyrus | L | 206 | 10.80 | <0.001 | −42 | −10 | 46 |
| Posterior cingulate cortex | Midline | 18 | 9.24 | <0.001 | 12 | −31 | 46 |
| Person identity (eight fictitious people) | | | | | | | |
| Cuneus | Midline | 661 | 12.26 | <0.001 | 21 | −94 | 4 |
| Anterior temporal lobe | L | 46 | 8.92 | <0.001 | −54 | −16 | −29 |
| Inferior frontal gyrus | L | 32 | 8.54 | <0.001 | −54 | −4 | 25 |
| Temporoparietal junction | L | 225 | 8.46 | <0.001 | −45 | −73 | 34 |
| | R | 22 | 8.38 | <0.001 | 45 | −73 | 40 |

For identity knowledge, we observed that the ATL was the only region that could accurately distinguish the eight learned people in ROI-based analyses [t(25) = 3.166, P = 0.012], combinatorial analyses [UCP = 1.811, t(4) = 5.232, P = 0.003], and searchlight analyses (Fig. 3 and Table S2). Along with the results of study 1, our MVPA analyses provide convergent evidence suggesting that the ATL is a critical region for person identity representation, regardless of whether the decoding was performed across categories (e.g., face/name/scene/object in study 1) or with categories collapsed together (e.g., face/name in study 2).

Overall, the MVPA analyses in study 2 suggest that a distributed network represents specific content of person knowledge: status information was represented in the IPL, trait information in the PCC, and, consistent with the results of study 1, person identity was represented in the ATL.

Functional connectivity analyses for neural dynamics of person memory.

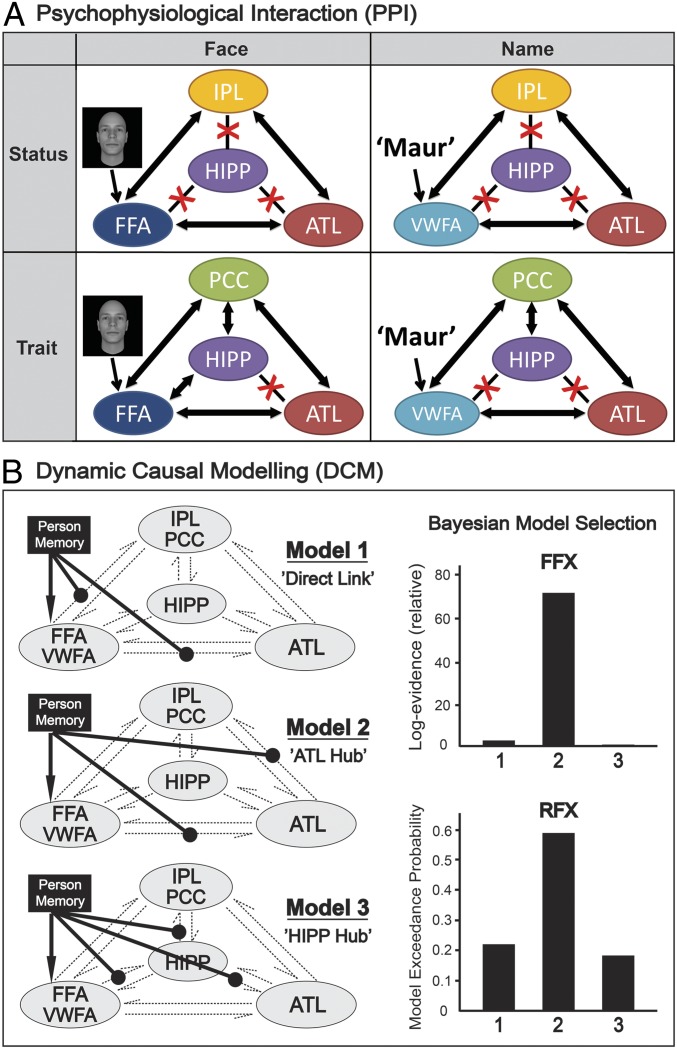

To elucidate the underlying neural dynamics supporting person knowledge retrieval, we performed a psychophysiological interaction (PPI) analysis to examine functional connectivity between nodes of the person-memory network during the retrieval of person-specific knowledge. PPI measures task-dependent interactions between different brain regions by examining the similarity of activity patterns (“connectivity”) between a seed and other brain areas as a function of specific task demands (53). We took a comprehensive approach to defining the seed regions for our PPI analysis using regions identified by our univariate and MVPA analyses as being important for the distributed representation of person-related knowledge (Fig. S1B). We defined three types of seeds for the PPI analyses: sensory cue regions (FFA, VWFA), knowledge representation regions (IPL, PCC), and the ATL (Fig. S4).
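The core idea of a PPI, a change in seed-to-target coupling between task conditions, can be illustrated with a simplified Python sketch. In the regression target = b0 + b1·seed + b2·task + b3·(seed × task) with a binary task indicator, the interaction weight b3 equals the seed-to-target slope during task scans minus the slope during baseline scans, which is what the code computes. The sketch omits hemodynamic modeling, nuisance regressors, and group statistics, and the time courses are made up.

```python
def slope(x, y):
    """Ordinary least-squares slope of y on x (model includes an intercept)."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx

def ppi_effect(seed, target, task):
    """Seed-target slope during task scans (1) minus baseline scans (0)."""
    on = [(s, t) for s, t, k in zip(seed, target, task) if k == 1]
    off = [(s, t) for s, t, k in zip(seed, target, task) if k == 0]
    return slope(*zip(*on)) - slope(*zip(*off))

# Hypothetical time courses: coupling is stronger during the task scans.
task = [1, 1, 1, 1, 0, 0, 0, 0]
seed = [1.0, 2.0, 3.0, 4.0, 1.0, 2.0, 3.0, 4.0]
target = [3.0, 5.0, 7.0, 9.0, 0.5, 1.0, 1.5, 2.0]
print(ppi_effect(seed, target, task))
```

A positive value indicates stronger coupling with the seed during the task than during baseline, which is the sense in which the PPI results are described as increased coupling.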

Fig. S4.

Results from the PPI analyses. Twelve separate PPI analyses were carried out, based on 3 ways to define seed regions (i.e., sensory cues, knowledge representations, or ATL) × 4 task types (i.e., face/name × status/trait). Clusters emerging from these analyses reveal the strength of correlation over time between activity in that cluster and that in the seed region as a function of the task. (A) Seed regions were defined by sensory input ROIs (i.e., FFA for faces; VWFA for names). Analyses found that sensory input regions have increased coupling with IPL and ATL during status memory but with PCC and ATL during trait memory. (B) Seed regions were defined by knowledge representation ROIs (i.e., IPL for status; PCC for trait). Analyses found that knowledge representation regions have increased coupling with FFA and ATL during face-cued memory but with VWFA and ATL during name-cued memory. (C) ATL was set as the seed region. Analyses found that ATL has increased coupling with IPL and FFA/VWFA during status memory but with PCC and FFA/VWFA during trait memory. ATL has stronger coupling with FFA and IPL/PCC during face-cued memory but with VWFA and IPL/PCC during name-cued memory.

Because information generally flows from posterior to more anterior regions during person perception (54), we first used sensory regions (FFA or VWFA) as seeds to explore where in the brain the sensory input is forwarded to, and then used regions in association cortex that may be involved in knowledge representation (IPL or PCC) as seeds to examine which brain area(s) are engaged in retrieving knowledge content. The results of our PPI analysis suggest that sensory regions had stronger coupling with the IPL and ATL during the retrieval of a person’s status, but with the PCC and ATL during the retrieval of a person’s personality traits (Fig. S4A). Knowledge representation regions showed enhanced coupling with the FFA and ATL when cued by faces, but with the VWFA and ATL when cued by names (Fig. S4B). The hippocampus also showed increased coupling with these two groups of seed regions, but not in all conditions (Fig. 4A and Tables S3 and S4).

Fig. 4.

Psychophysiological interaction (PPI) and dynamic causal modeling (DCM) analyses. (A) Overall summary of functional coupling between network regions in four different memory conditions. Bold double arrows indicate increased connectivity between two regions, whereas red crosses indicate nonsignificant connectivity change. For more detailed results, see Fig. S4 and Tables S3–S5. (B) Three distinct models were compared in DCM, and the optimal one was determined by Bayesian model selection. Both FFX and RFX analyses suggested model 2 (ATL hub) as the optimal model. For more details of model specification, see Fig. S5.

Table S3.

PPI analyses based on sensory input ROIs (i.e., FFA and VWFA) as seed regions

| Region | Hemisphere | Voxels | T | P (FWE-corr) | x | y | z |
|---|---|---|---|---|---|---|---|
| FFA as seed region: Face status > face baseline | | | | | | | |
| Precuneus | Midline | 449 | 10.03 | <0.001 | 3 | −61 | 19 |
| | | | 8.26 | <0.001 | 6 | −43 | 31 |
| Middle occipital gyrus | L | 215 | 8.82 | <0.001 | −42 | −73 | −11 |
| | R | 130 | 8.53 | <0.001 | 48 | −67 | −14 |
| | | 19 | 6.50 | 0.007 | 39 | −85 | 1 |
| Fusiform gyrus | L | 215 | 7.32 | <0.001 | −51 | −55 | −14 |
| | R | 130 | 7.01 | <0.001 | 45 | −52 | −17 |
| Amygdala | L | 427 | 8.28 | <0.001 | −30 | 5 | −11 |
| | R | 427 | 6.78 | <0.001 | 24 | 2 | −11 |
| Posterior cingulate cortex | Midline | 67 | 7.60 | 0.001 | 9 | −7 | 40 |
| Anterior temporal lobe | R | 40 | 6.81 | 0.004 | 60 | −7 | −20 |
| | L | 45 | 6.61 | 0.006 | −51 | −10 | −29 |
| Precentral gyrus | R | 109 | 6.69 | 0.005 | 36 | −16 | 43 |
| Inferior frontal gyrus | L | 32 | 6.67 | 0.005 | −48 | 29 | 13 |
| | R | 12 | 6.45 | 0.008 | 48 | 5 | 34 |
| Inferior parietal lobe | L | 83 | 6.65 | 0.005 | −30 | −49 | 52 |
| | | | 6.58 | 0.006 | −21 | −61 | 49 |
| FFA as seed region: Face trait > face baseline | | | | | | | |
| Inferior frontal gyrus | L | 88 | 13.95 | <0.001 | −45 | 23 | 28 |
| | R | 112 | 13.91 | <0.001 | 36 | 8 | 25 |
| Hippocampus | R | 50 | 11.00 | <0.001 | 27 | −19 | −17 |
| | L | 10 | 9.44 | <0.001 | −21 | −10 | −26 |
| Precuneus and posterior cingulate cortex | Midline | 404 | 11.71 | <0.001 | −3 | −52 | 28 |
| | | | 10.39 | <0.001 | −6 | −34 | 43 |
| Middle occipital gyrus | R | 19 | 11.55 | <0.001 | 42 | −73 | 7 |
| | | 35 | 10.54 | <0.001 | 45 | −61 | −11 |
| | L | 102 | 10.40 | <0.001 | −36 | −88 | −8 |
| Superior temporal gyrus | R | 19 | 11.46 | <0.001 | 48 | −34 | 10 |
| Precentral gyrus | L | 195 | 11.41 | <0.001 | −30 | −28 | 70 |
| | R | 153 | 10.10 | <0.001 | 27 | −31 | 70 |
| Fusiform gyrus | R | 117 | 11.16 | <0.001 | 39 | −46 | −23 |
| Amygdala | L | 37 | 10.51 | <0.001 | −18 | −4 | −11 |
| Inferior parietal lobe | R | 31 | 10.43 | <0.001 | 30 | −58 | 43 |
| | L | 68 | 9.61 | <0.001 | −27 | −67 | 40 |
| Anterior temporal lobe | L | 6 | 10.32 | <0.001 | −54 | 5 | −14 |
| | | 8 | 9.81 | <0.001 | −63 | −13 | −8 |
| Insula | L | 17 | 9.79 | <0.001 | −27 | 23 | −2 |
| Orbitofrontal cortex | R | 156 | 6.17 | <0.001 | 33 | 23 | −11 |
| Anterior cingulate cortex | Midline | 70 | 5.52 | 0.020 | 12 | 20 | 28 |
| VWFA as seed region: Name status > name baseline | | | | | | | |
| Middle occipital gyrus | L | 48 | 8.44 | <0.001 | −42 | −73 | −11 |
| | R | 12 | 6.41 | 0.007 | 42 | −70 | −17 |
| Inferior parietal lobe | L | 127 | 7.27 | 0.001 | −51 | −40 | 40 |
| | | | 6.83 | 0.003 | −36 | −61 | 40 |
| Inferior frontal gyrus | L | 74 | 6.78 | 0.003 | −48 | 14 | 22 |
| | R | 12 | 6.74 | 0.004 | 51 | 26 | 22 |
| Anterior temporal lobe | L | 15 | 6.89 | 0.003 | −48 | 5 | −38 |
| Cuneus | Midline | 18 | 6.71 | 0.004 | −12 | −103 | 4 |
| Middle temporal gyrus | L | 77 | 6.66 | 0.004 | −63 | −43 | −8 |
| | R | 10 | 6.30 | 0.009 | 63 | −49 | −8 |
| Precentral gyrus | L | 17 | 6.24 | 0.010 | −45 | −16 | 40 |
| VWFA as seed region: Name trait > name baseline | | | | | | | |
| Insula | R | 452 | 11.72 | <0.001 | 33 | −22 | 19 |
| | L | 10 | 7.86 | <0.001 | −36 | −25 | 22 |
| Inferior parietal lobe | L | 593 | 11.71 | <0.001 | −36 | −55 | 43 |
| | R | 160 | 9.15 | <0.001 | 45 | −49 | 31 |
| Posterior cingulate cortex, extended to precentral gyrus and precuneus | Midline | 1,300 | 11.42 | <0.001 | 27 | −25 | 55 |
| | | | 11.29 | <0.001 | −24 | −31 | 52 |
| | | | 11.20 | <0.001 | 12 | −25 | 52 |
| | | | 9.99 | <0.001 | −6 | −55 | 40 |
| Superior temporal gyrus | L | 593 | 9.77 | <0.001 | −57 | −49 | 16 |
| | | | 9.39 | <0.001 | −57 | −31 | −2 |
| | R | 75 | 7.91 | <0.001 | 57 | −31 | 10 |
| Anterior temporal lobe | R | 109 | 9.28 | <0.001 | 60 | −10 | −23 |
| | | | 7.08 | 0.002 | 51 | 8 | −29 |
| | L | 14 | 8.74 | <0.001 | −51 | 5 | −35 |
| Fusiform gyrus | L | 12 | 7.85 | <0.001 | −27 | −46 | −26 |
| Cuneus | Midline | 37 | 7.80 | 0.001 | 24 | −91 | 19 |
| Cerebellum | Midline | 34 | 7.55 | 0.001 | −6 | −73 | −41 |
| Inferior occipital gyrus | L | 10 | 7.46 | 0.001 | −36 | −79 | −11 |
| Precentral gyrus | L | 18 | 7.38 | 0.001 | −42 | −16 | 31 |

Table S4.

PPI analyses based on knowledge representation ROIs (i.e., IPL and PCC) as seed regions

| Region | Hemisphere | Voxels | T | P (FWE-corr) | x | y | z |
| --- | --- | --- | --- | --- | --- | --- | --- |
| IPL as seed region: Face status > face baseline | | | | | | | |
| Middle occipital gyrus | R | 389 | 10.24 | <0.001 | 33 | −85 | 16 |
| | L | 137 | 8.88 | <0.001 | −27 | −85 | 16 |
| Inferior parietal lobe | L | 154 | 9.62 | <0.001 | −36 | −49 | 43 |
| | R | 82 | 8.50 | <0.001 | 33 | −46 | 52 |
| Anterior temporal lobe | L | 17 | 9.61 | <0.001 | −42 | 11 | −41 |
| | R | 15 | 7.12 | 0.003 | 54 | 2 | −29 |
| Orbitofrontal cortex | R | 144 | 9.39 | <0.001 | 33 | 35 | −14 |
| | L | 15 | 6.79 | 0.005 | −24 | 26 | −17 |
| | Midline | 54 | 9.04 | <0.001 | 15 | 14 | −14 |
| Fusiform gyrus | R | 120 | 9.29 | <0.001 | 45 | −52 | −29 |
| | L | 115 | 6.90 | 0.004 | −36 | −61 | −26 |
| Cerebellum | R | 28 | 9.11 | <0.001 | 45 | −73 | −35 |
| Inferior frontal gyrus | R | 65 | 9.01 | <0.001 | 45 | 8 | 37 |
| Precuneus | Midline | 130 | 8.46 | <0.001 | −6 | −58 | 25 |
| Medial prefrontal cortex | Midline | 17 | 8.22 | <0.001 | −6 | 38 | 43 |
| | | 22 | 6.79 | 0.005 | −12 | 53 | −2 |
| Middle temporal gyrus | L | 57 | 8.08 | <0.001 | −60 | −31 | −17 |
| Cuneus | Midline | 48 | 6.98 | 0.004 | −9 | −94 | 1 |
| IPL as seed region: Name status > name baseline | | | | | | | |
| Inferior frontal gyrus | L | 71 | 9.95 | <0.001 | −42 | 17 | 40 |
| | | 226 | 8.31 | <0.001 | −48 | 23 | 4 |
| | R | 37 | 7.24 | 0.002 | 42 | 32 | 28 |
| | | 64 | 7.09 | 0.002 | 39 | 41 | −8 |
| Inferior parietal lobe | L | 381 | 8.92 | <0.001 | −42 | −55 | 46 |
| | R | 112 | 8.77 | <0.001 | 42 | −52 | 46 |
| Cerebellum | R | 114 | 8.63 | <0.001 | 33 | −76 | −35 |
| | L | 104 | 8.59 | <0.001 | −33 | −76 | −35 |
| Anterior temporal lobe and middle temporal gyrus | L | 171 | 8.42 | <0.001 | −60 | −25 | −20 |
| | | | 7.35 | 0.001 | −60 | −40 | −8 |
| | | | 6.00 | 0.021 | −57 | −16 | −29 |
| | R | 36 | 6.35 | 0.010 | 57 | −37 | −8 |
| | | 12 | 6.26 | 0.012 | 60 | −13 | −26 |
| Medial frontal gyrus | Midline | 45 | 8.06 | <0.001 | −9 | 26 | 49 |
| Precentral gyrus | L | 161 | 7.82 | 0.001 | −30 | −16 | 61 |
| Precuneus | Midline | 246 | 7.68 | 0.001 | −9 | −46 | 28 |
| Medial orbitofrontal cortex | Midline | 13 | 6.34 | 0.010 | −3 | 41 | −11 |
| Fusiform gyrus | L | 104 | 6.07 | 0.018 | −36 | −64 | −20 |
| PCC as seed region: Face trait > face baseline | | | | | | | |
| Hippocampus | L | 31 | 10.76 | <0.001 | −21 | −19 | −23 |
| Anterior temporal lobe and superior temporal gyrus | R | 67 | 10.01 | <0.001 | 45 | −10 | −11 |
| | | | 6.70 | 0.004 | 45 | −7 | −20 |
| | L | 119 | 9.56 | <0.001 | −45 | −13 | −11 |
| | | | 7.79 | <0.001 | −54 | −1 | −11 |
| Posterior cingulate cortex | Midline | 145 | 9.59 | <0.001 | −9 | −28 | 40 |
| | | 61 | 8.42 | <0.001 | 9 | −46 | 43 |
| Parahippocampal gyrus | L | 210 | 9.35 | <0.001 | −24 | −43 | −14 |
| Fusiform gyrus | R | 230 | 8.89 | <0.001 | 36 | −40 | −20 |
| Precentral gyrus | L | 87 | 8.99 | <0.001 | −24 | −28 | 58 |
| Supramarginal gyrus | R | 101 | 8.90 | <0.001 | 60 | −28 | 22 |
| | L | 49 | 8.60 | <0.001 | −48 | −28 | 22 |
| Superior parietal lobule | R | 17 | 8.44 | <0.001 | 21 | −55 | 58 |
| | L | 23 | 8.09 | <0.001 | −21 | −49 | 58 |
| PCC as seed region: Name trait > name baseline | | | | | | | |
| Insula | R | 445 | 9.77 | <0.001 | 27 | 11 | −2 |
| | | | 9.42 | <0.001 | 36 | −19 | −2 |
| | L | 110 | 8.72 | <0.001 | −51 | −16 | 4 |
| Anterior temporal lobe and superior temporal gyrus | R | 400 | 9.57 | <0.001 | 54 | −13 | −17 |
| | | | 6.25 | 0.009 | 57 | −4 | −23 |
| | L | 98 | 8.72 | <0.001 | −51 | −16 | 4 |
| | | | 6.29 | 0.009 | −51 | −13 | −2 |
| Fusiform gyrus | L | 261 | 8.98 | <0.001 | −30 | −40 | −11 |
| | | | 7.57 | 0.001 | −39 | −55 | −11 |
| | R | 59 | 7.63 | 0.001 | 30 | −46 | −14 |
| Thalamus | Midline | 147 | 8.60 | <0.001 | −6 | −13 | 1 |
| Posterior cingulate cortex | Midline | 155 | 7.75 | <0.001 | −12 | −34 | 58 |
| | | 151 | 7.39 | 0.001 | 15 | −37 | 55 |
| Cuneus | Midline | 56 | 7.23 | 0.001 | 18 | −76 | −5 |
| Hippocampus | R | 24 | 7.52 | 0.001 | 24 | −16 | −26 |
| Middle occipital gyrus | R | 23 | 6.62 | 0.004 | 48 | −58 | 4 |

We also used the ATL as a seed region to test more specifically whether its functional connectivity with other nodes of the person-memory network changed as a function of task demands. The ATL showed increased connectivity with the IPL during the retrieval of a person’s status, but with the PCC during the retrieval of a person’s personality traits. The functional connectivity of the ATL with the FFA increased during memory retrieval when cued by faces, but with the VWFA when cued by names (Fig. S4C and Table S5). The functional connectivity between the ATL and the hippocampus did not change under any condition.

Table S5.

PPI analyses based on ATL as the seed region

| Region | Hemisphere | Voxels | T | P (FWE-corr) | x | y | z |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Status memory > baseline: (face–status, name–status) > (face–baseline, name–baseline) | | | | | | | |
| Inferior parietal lobe | L | 317 | 9.82 | <0.001 | −42 | −40 | 37 |
| | | 109 | 6.69 | 0.007 | −36 | −58 | 43 |
| Precuneus | Midline | 120 | 8.95 | <0.001 | −9 | −67 | 37 |
| Supplementary motor area | Midline | 35 | 8.39 | <0.001 | 0 | −4 | 61 |
| Middle temporal gyrus | L | 41 | 7.71 | 0.001 | −51 | −22 | −14 |
| Superior temporal gyrus | R | 27 | 7.67 | 0.001 | 54 | −37 | 22 |
| | L | 11 | 6.74 | 0.006 | −57 | −52 | 22 |
| Fusiform gyrus | L | 46 | 7.63 | 0.001 | −33 | −52 | −20 |
| | R | 10 | 7.01 | 0.003 | 30 | −46 | −17 |
| Thalamus | Midline | 24 | 7.60 | 0.001 | −15 | −16 | 7 |
| Cuneus | Midline | 10 | 6.71 | 0.006 | 12 | −91 | 22 |
| Trait memory > baseline: (face–trait, name–trait) > (face–baseline, name–baseline) | | | | | | | |
| Fusiform gyrus | R | 341 | 9.27 | <0.001 | 27 | −79 | −5 |
| | | | 7.88 | <0.001 | 30 | −46 | −20 |
| | L | 377 | 9.27 | <0.001 | −30 | −82 | −11 |
| | | | 8.02 | <0.001 | −30 | −55 | −23 |
| Middle temporal gyrus, extended to superior temporal gyrus and inferior frontal gyrus | L | 1,254 | 8.88 | <0.001 | −42 | −25 | −8 |
| | | | 8.80 | <0.001 | −48 | 5 | 7 |
| | R | 51 | 8.85 | <0.001 | 57 | −22 | −11 |
| Temporoparietal junction | R | 415 | 8.03 | 0.001 | 54 | −40 | 19 |
| | | | 7.29 | 0.002 | 63 | −52 | 16 |
| Anterior temporal lobe | L | 14 | 7.15 | 0.003 | −48 | 8 | −38 |
| Posterior cingulate cortex | Midline | 99 | 6.96 | 0.004 | −6 | −43 | 58 |
| | | | 6.26 | 0.017 | 6 | −40 | 52 |
| | | 20 | 6.92 | 0.005 | 0 | −19 | 49 |
| Postcentral gyrus | L | 25 | 6.81 | 0.006 | −42 | −28 | 61 |
| Inferior frontal gyrus | R | 36 | 6.68 | 0.007 | 51 | 29 | 7 |
| Precuneus | Midline | 19 | 6.61 | 0.009 | −9 | −73 | 40 |
| | | 16 | 6.48 | 0.011 | −27 | −76 | 31 |
| Face memory > face baseline | | | | | | | |
| Middle temporal gyrus, extended to superior temporal gyrus, postcentral gyrus and inferior parietal lobe | L | 1,113 | 9.76 | <0.001 | −48 | −19 | −11 |
| | | | 9.59 | <0.001 | −60 | −13 | 22 |
| | | | 8.92 | <0.001 | −54 | −19 | 1 |
| | | | 8.30 | <0.001 | −36 | −34 | 55 |
| | | | 7.75 | 0.001 | −51 | −49 | 25 |
| | | 61 | 8.42 | <0.001 | 9 | −46 | 43 |
| Superior temporal gyrus | R | 535 | 9.49 | <0.001 | 54 | −40 | 22 |
| Cuneus | R | 273 | 9.26 | <0.001 | 12 | −85 | −2 |
| Fusiform gyrus | R | 154 | 8.91 | <0.001 | 42 | −46 | −23 |
| Precuneus | Midline | 66 | 8.09 | 0.001 | −9 | −70 | 37 |
| Supplementary motor area and posterior cingulate cortex | Midline | 129 | 7.82 | 0.001 | −3 | −7 | 61 |
| | | | 6.61 | 0.011 | 0 | −19 | 37 |
| Name memory > name baseline | | | | | | | |
| Fusiform gyrus, extended to inferior parietal lobe and precuneus | L | 1,192 | 9.25 | <0.001 | −27 | −46 | −20 |
| | | | 7.68 | 0.001 | −36 | −67 | 31 |
| | | | 7.19 | 0.002 | −36 | −52 | 34 |
| | | | 6.95 | 0.003 | −42 | −73 | −11 |
| | | | 6.57 | 0.007 | −6 | −73 | 43 |
| | R | 86 | 7.61 | 0.001 | 27 | −43 | −20 |
| | | 32 | 6.61 | 0.006 | 30 | −70 | −5 |
| Anterior temporal lobe | L | 35 | 8.82 | <0.001 | −42 | 8 | −41 |
| Precentral gyrus, extended to posterior cingulate cortex | L | 264 | 7.74 | 0.001 | −42 | −22 | 61 |
| | | | 6.51 | 0.008 | −9 | −40 | 55 |
| | | | 5.80 | 0.033 | −3 | −49 | 37 |
| | | 22 | 6.09 | 0.019 | −3 | −19 | 55 |
| Inferior frontal gyrus | L | 234 | 7.66 | 0.001 | −51 | 11 | 25 |
| Middle temporal gyrus | L | 210 | 7.48 | 0.001 | −48 | −25 | −8 |
| Thalamus | Midline | 37 | 7.24 | 0.002 | −18 | −19 | 7 |
| Insula | L | 163 | 7.17 | 0.002 | −33 | −13 | 4 |
| Supplementary motor area | Midline | 29 | 6.13 | 0.017 | −3 | −4 | 67 |

In sum, the results of our PPI analyses suggest that functional activity in secondary sensory regions (i.e., FFA, VWFA), knowledge representation regions in association cortex (i.e., IPL, PCC), and the ATL is tightly coupled during the retrieval of person-specific information, and that different regions within this circuit are differentially coupled depending on the content of the knowledge being retrieved. In contrast, the hippocampus, a region that has an undisputed role in the formation of episodic memories (55), does not appear to interact with other regions during the retrieval of person knowledge (Fig. 4A).
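The logic of a PPI regressor can be illustrated with a minimal sketch. It assumes a simplified form in which the interaction term is the element-wise product of a mean-centered seed time course and a contrast-coded task vector. The deconvolution step that SPM applies before forming the product is omitted, and all function names and values below are hypothetical toy inputs, not data from the study.

```python
def mean_center(values):
    """Subtract the mean to reduce collinearity with the task regressor."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def ppi_regressor(seed_timecourse, task_vector):
    """Return the interaction term (seed x task), one value per scan."""
    if len(seed_timecourse) != len(task_vector):
        raise ValueError("seed and task vectors must have the same length")
    seed = mean_center(seed_timecourse)
    return [s * t for s, t in zip(seed, task_vector)]


# Hypothetical toy data: 8 scans, task coded +1 (memory) or -1 (baseline).
seed = [1.0, 2.0, 3.0, 2.0, 1.0, 0.0, 1.0, 2.0]
task = [1, 1, 1, 1, -1, -1, -1, -1]
ppi = ppi_regressor(seed, task)
print(ppi)
```

In a full analysis, the interaction term would enter a GLM alongside the seed time course and the task regressor, so that a significant weight on the interaction reflects task-dependent coupling beyond either main effect.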

Dynamic causal modeling to test the “ATL-hub” theory.

PPI analysis does not provide information about the direction of causal influences between source and target regions, nor whether the connectivity is mediated by other regions. To gain traction on these issues, we performed dynamic causal modeling (DCM) to explore detailed information processing dynamics within the aforementioned network. We tested three models that might support the implementation of person knowledge retrieval. In model 1, retrieval is implemented by a direct link between sensory regions and knowledge representation regions, which could be formed by associative learning during the 2-d training session that preceded scanning. Alternatively, in model 2, the ATL acts as a “switchboard-like” hub to flexibly coordinate the flow of information when retrieving different content of person knowledge. In model 3, the hippocampus, rather than the ATL, acts as a domain-general memory hub (see Materials and Methods and Fig. S5 for details regarding the model specifications).

Fig. S5.

DCM matrices. (A) For each model, the six ROIs were all set to be bidirectionally connected and self-connected. (B) The driving input region is FFA for face-cued conditions and VWFA for name-cued conditions. (C) Driving inputs (arrows) and modulatory connections (line-dots and matrices) for three DCM models are depicted.

Bayesian model selection clearly selected model 2 as the optimal model (Fig. 4B): it has the highest relative group log evidence in fixed-effects analysis (71.29) as well as the highest exceedance probability in random-effects analysis (59.23%). An analysis of absolute model fits confirmed that model 2 provides the highest explained variance among the three models, accounting for 24 ± 6% (mean ± SD) of the observed variance. Therefore, our DCM results support a neural mechanism of person memory in which the ATL serves as the central hub coordinating the retrieval of person information from discrete sources.
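As a rough illustration of the fixed-effects comparison, the sketch below sums per-subject log evidences for each model, expresses the totals relative to the weakest model, and converts them to posterior model probabilities under a flat prior. The function names and the log-evidence values are hypothetical and are not the study's data. The random-effects exceedance probability requires a separate hierarchical procedure that is not shown here.

```python
import math


def group_log_evidence(log_evidence_by_subject):
    """Sum per-subject log evidences for each model (fixed-effects assumption)."""
    n_models = len(log_evidence_by_subject[0])
    return [sum(subj[m] for subj in log_evidence_by_subject) for m in range(n_models)]


def relative_log_evidence(group_totals):
    """Express each model's group log evidence relative to the weakest model."""
    worst = min(group_totals)
    return [total - worst for total in group_totals]


def posterior_model_probabilities(group_totals):
    """Posterior probabilities under a flat prior over models (softmax)."""
    best = max(group_totals)  # subtract the maximum for numerical stability
    weights = [math.exp(total - best) for total in group_totals]
    norm = sum(weights)
    return [w / norm for w in weights]


# Hypothetical log evidences: rows are subjects, columns are models 1-3.
toy = [
    [-100.0, -98.0, -99.0],
    [-110.0, -107.0, -109.0],
    [-95.0, -94.0, -96.0],
]
totals = group_log_evidence(toy)
relative = relative_log_evidence(totals)
probabilities = posterior_model_probabilities(totals)
print(relative)
print(probabilities)
```

Because fixed-effects evidence accumulates additively across subjects, even modest per-subject advantages can produce a large group-level difference, which is one reason the random-effects exceedance probability is usually reported alongside it.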

Discussion

The results of the two studies reported here suggest that the ATL plays a critical role in representing and retrieving person knowledge. Using MVPA CC classification, study 1 provided strong evidence that the ATL face patch has a function akin to a person identity node, representing abstract conceptually invariant person identity information. Research in nonhuman primates has shown that cells in anterior–ventral temporal cortex are highly sensitive to particular facial identities as well as to facial familiarity (56, 57). Previous studies in humans using intracranial recording (16) or fMRI analyses (15, 42, 45) have suggested that the ATL can distinguish between different people using their faces, voices, or names. Our study extends these findings by using sophisticated multivariate analyses and a wider range of stimulus categories. Based on our results, we suggest that the ATL may be the only region that can merge such a wide variety of multicategorical person information.

The results of study 2 shed light on the neural architecture of person knowledge. Study 2 provides strong support for the idea that person knowledge is organized in a hub-and-spoke manner such that specific features of person knowledge are neurally distributed and portions of the ATL serve as a convergence zone or hub (6–8). MVPA suggested that status and personality trait information are stored separately in portions of the IPL and PCC, respectively, and PPI analyses showed that the ATL acts in concert with these regions to flexibly retrieve different aspects of person knowledge. Moreover, when a particular person memory was cued by a face, there was enhanced coupling between the FFA and ATL, whereas if the same memory was cued by a name, there was enhanced coupling between the visual word form area and the ATL. Critically, we performed DCM to directly test the hub-and-spoke accounts (i.e., models 2 and 3) against an alternative “distributed-only” account (5) (i.e., model 1), and the ATL-hub model was strongly favored in the comparison.

Functional coupling as measured by PPI and DCM does not necessitate a direct anatomical connection between regions (53). However, the ATL is viewed as a convergence zone precisely because of its unusual pattern of white matter connectivity (58). It receives direct input from the ventral visual stream, which includes the FFA and the VWFA, via the inferior longitudinal fasciculus. Portions of the ATL are also directly connected to the PCC via a limbic pathway, the cingulum bundle, whereas superior aspects of the ATL are structurally interconnected with the IPL by a pathway most closely associated with language, the middle longitudinal fasciculus (59). Thus, the results of the PPI and DCM analyses rest on a verified neurostructural ground truth.

The hub-and-spoke model is a popular account of general semantic knowledge (6–8), and our DCM findings generally support that model. However, one aspect of our findings is challenging for this model. The hub-and-spoke model predicts that, within the ATL, there should be a single person identity node that links all features of all stimuli associated with a particular person. Across ROI-based and searchlight analyses in study 1, we did find that the ATL contains convergence zones (60) that bind different categories of cues related to an identity; however, we were unable to identify a single site, a “master node,” within the ATL that linked all four categories (faces, houses, names, and objects) and survived all six CC pairings. These findings are consistent with neuropsychological evidence that patients with ATL lesions often have person identification deficits in one or two modalities (46, 61), but no patient has been shown to have impairments in all (three or more) modalities at the same time (4). Moreover, our ATL ROI seemed to be biased toward faces: CC classification worked well only for face-related pairings (i.e., face ↔ name, face ↔ house, and face ↔ object; Fig. 2), but not for nonface pairings (i.e., name ↔ house, name ↔ object, and house ↔ object; Fig. S2). One possibility is that known faces serve as singular categories (62), grouping and linking the bits of biographical knowledge and descriptors that define a person, but in a manner that is inherently asymmetrical. Another possibility is that our training regime created a person representation biased toward faces.

It is important to consider that biographical knowledge bears all of the signatures of semantic memory with little to none of the signatures of episodic memory, such as spatiotemporal context. It has been proposed that the learning of semantic associations can bypass the hippocampus entirely and, instead, rely on the ATL (63). Discrete hippocampal damage slows the learning rate of semantic associations but does not impair it completely (64). Indeed, individuals with hippocampal damage can learn new semantic associations through a type of incidental learning called “fast mapping,” whereas patients with ATL lesions cannot (65). Consistent with this small literature, our results suggest that the hippocampus does not play a significant role in the retrieval of biographical information. The results of our univariate analysis showed that the hippocampus was active across all memory conditions, so it was included in our network analyses. However, the functional connectivity analysis suggested that it had only minimal interactions with other brain regions during person knowledge retrieval (Fig. 4A), and DCM ruled out any critical role of the hippocampus in person knowledge retrieval (Fig. 4B). We speculate that the hippocampus may be important during the initial acquisition of semantic associations, but within a short amount of time, it is no longer needed and, instead, the ATL becomes the nexus for retrieval (21). Thus, the ATL, which is geographically proximal to the hippocampus and structurally interconnected with it, may play a vital role in orchestrating the cascade of neural events that ultimately result in the retrieval of person knowledge.

There are several limitations of the current investigation that should be noted. First, across the two studies reported here, we tested different groups of subjects and defined “ATL” ROIs in separate ways (i.e., “faces > houses” or “memory > baseline”). We are, therefore, unable to examine whether these ROIs in the separate groups correspond to the same anatomical region. Visual inspection of each individual’s ROIs in both groups suggests that these regions fall in roughly the same portion of the ATLs (Fig. S1); however, whether the same population of neurons in the ATL of a given individual has both amodal and hub-like properties remains an open question. Second, we only tested the ATL-hub model for two person attributes (i.e., status and trait). It will be interesting for future researchers to assess whether the ATL similarly coordinates the retrieval of other attributes, such as physical (e.g., eye color, body shape) or evaluative characteristics (e.g., likeability, reputation). If our model is generalizable, one would expect to see increased connectivity between the ATL and area V4 during the retrieval of eye color, extrastriate body area during the retrieval of body characteristics, and orbitofrontal cortex during the retrieval of social-evaluative information. Third, the present study only focused on a subset of social knowledge: the biographical information about others. Although biographical information is important, several other types of social knowledge contribute to social behavior, including knowledge about other people’s mental states and goals, semantic knowledge about people that is not specific to particular individuals (e.g., people need to eat, people tend to like chocolate), and knowledge about social etiquette and norms (66). Future research is needed to elucidate the neural architectures supporting each knowledge type and how they relate to one another.

Materials and Methods

Subjects.

A total of 24 subjects (9 males; Mage = 23.21) participated in study 1, and a new cohort of 26 subjects (21 males; Mage = 20.38) participated in study 2. All subjects were native English speakers, right-handed, and had no history of psychological or neurological disorders. They were financially compensated and gave informed consent in a manner approved by the Institutional Review Board of Temple University. The sample size (n ∼ 25) was chosen based on previous studies using similar training paradigms and analytic approaches (10, 41, 67, 68).

Materials.

Study 1.

Biographies of four fictitious males were provided during training sessions (Fig. 1A). Each male’s face was professionally photographed (i.e., full-frontal, neutral expressions, uniform lighting/background) and was linked to six pieces of biographical information: a name, age, marital status, occupation, city of residence, and a house. The first five pieces of biographical information were presented as words, and the house was presented as a color image procured from publicly available sources on the Internet. Proper names were taken from the social security database of popular names (https://www.ssa.gov/cgi-bin/popularnames.cgi) and each had two syllables. We deliberately manipulated the biographies: each male’s face, name, house, and occupation were distinctive (“unique bios”), whereas their age, marital status, and city of residence could be shared (“common bios”) with one other person. Biographical information was randomly assigned to each face such that each participant learned different associations for any given face.

In the fMRI session, a new set of stimuli was constructed for each fictitious male (Fig. 1B). Specifically, the learned faces/houses in training sessions were rephotographed from five different camera angles (0°, ±45°, and ±90°), and the learned names were presented in five different fonts/colors. In object runs, participants viewed everyday objects that were representative of each male’s occupation from five different viewpoints (e.g., if the fictitious male was a doctor, a stethoscope was shown).

Study 2.

Biographies of eight fictitious males were provided in training sessions (Fig. 1C). Participants learned four pieces of information about each male: their face, name, occupation, and one personality trait. Eight distinct faces were generated by FaceGen Modeler 3.5, which allowed us to control low-level visual features such as color, brightness, and illumination. Eight artificial names were adapted from a previous study (69). The attractiveness and likeability of these artificial faces and names were carefully controlled by asking a different group of 20 participants to assign ratings. Social status and personality traits were manipulated in a 2 × 2 factorial design: each fictitious male was associated with an occupation title and salary that suggested high or low status (e.g., “Maur is a manager earning more than $9,000 per month” or “Lorc is a janitor earning less than $1,000 per month”) and with personality trait descriptions that denoted high or low sociability (e.g., “Jora is an introverted, quiet bookworm” or “Gris is an extroverted, chatty party animal”). In real life, there are infinite attributes associated with an individual (e.g., attractiveness, reputation, temper, athleticism, etc.); here, we only manipulated “social status” and “sociability traits” because they are the two principal dimensions of person concepts and impression formation (70). Again, biographical information was randomly combined such that each participant learned different occupation/personality characteristics of any given face/name.

In the fMRI session, the eight learned faces or names were presented during the “memory” conditions (Fig. 1D). In addition, we generated eight novel faces/names for the “nonmemory” baseline conditions. These faces/names matched the learned faces/names with regard to low-level visual features, phonemic properties (e.g., starting with the same consonant), and subjective ratings of attractiveness/likeability. Participants could easily identify them as unfamiliar during the practice session right before the fMRI session.

Procedure.

Behavioral training sessions.

Participants were told to learn biographical information about four males in study 1 (Fig. 1A) and eight males in study 2 (Fig. 1C). The training protocol was adapted from the literature (10, 71). Training was conducted over 2 d, with the first day session lasting ∼30 min, and the second day session lasting 20 min. During each training session, participants first completed “show” trials in which they viewed slides containing a face image, a name, a house (only in study 1), and a short paragraph containing other biographical information. Each slide was presented three times. There was no time limit on slide presentation; participants pressed a button when they wished to move to the next screen. Next, participants completed “naming” trials in which they viewed a previously learned face and were asked to type that person’s name. After responding, participants were told whether they were correct or incorrect, and the correct biographical information for that individual was presented again on the screen. For naming trials, each male was presented six times in a random order. Finally, participants completed “matching” trials in which they were presented either with learned faces or names and were asked to select the corresponding biographical information (e.g., occupation) from all options presented below (e.g., pilot/mailman/photographer/doctor in study 1; manager/janitor in study 2). The matching phase consisted of blocks of 40 trials in study 1 (or 32 trials in study 2), and participants received correctness feedback after each trial as well as a cumulative accuracy score at the end of each block. Participants were considered fully trained if they completed a matching block with accuracy over 95% on day 1 and 100% on day 2. If participants did not reach this level of accuracy, they completed extra matching blocks until they reached it (i.e., overlearning was ensured). On day 3, all participants were retested using a paper and pencil test immediately before the scan, to ensure that everyone could accurately recall the learned biographical information.

fMRI session in study 1.

The fMRI session was scheduled on day 3 and consisted of two parts: two runs of a functional localizer and eight runs of the “person memory” task. Both tasks used a block design. In the localizer task, participants were instructed to pay attention to the images and respond whenever the same image was presented twice in a row (one-back task). Each run consisted of 30 blocks, evenly divided between three alternating stimulus types: “faces,” “places,” or “fixation cross.” Each stimulus was presented for 800 ms followed by a 200-ms interstimulus interval. All faces and places stimuli were adapted from a previous study in our laboratory (10).

In the person memory task, participants were asked to retrieve knowledge about the four males they had learned about during training (Fig. 1B). Participants first observed a series of stimuli related to a unique aspect of the “target” male’s identity (i.e., their face, name, house, or occupation) and then responded to a question regarding a common aspect of that male’s biography (i.e., their age, city of residence, or marital status). For instance, after viewing five images of Tyler’s house (presented from different vantage points), participants had to respond to a question regarding Tyler’s age: “True or False: the person associated with this house is 28?”

The person memory task consisted of eight runs (i.e., two runs for each category). Only one category (face/name/house/object) was consistently used to cue target males across the whole run. Each run consisted of 16 blocks of stimulus presentation (4 males × 4 repetitions) and each block contained five cue stimuli related to the same individual (3 s each) and one true/false question about that person (9 s). Note that face/house stimuli were only displayed in full-frontal view during training, whereas during the fMRI session the face/house cue stimuli were presented from five different vantage points. Similarly, the name cue stimuli here were presented in five different fonts and colors. These manipulations aimed to vary the low-level visual properties of each male’s cue stimuli while holding the high-level person identity constant, and also to avoid visual habituation (56). The object cue stimuli were related to each male’s occupation. Five pictures depicting an everyday object associated with each male’s occupation were presented. Note that these objects were not shown during training, because occupation information was presented in written form during training. They were used here to ensure participants thoroughly learned each male and their associated biographical information. The cue stimuli order (within a block), the target male order (within a run), and the category order (across runs) alternated and were randomized across participants.
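The block structure above implies a simple timing budget, sketched below. The calculation assumes that blocks follow one another with no additional rest or fixation intervals, which the text does not specify, so the run length should be read as a lower bound. The constant names are illustrative.

```python
N_BLOCKS = 4 * 4          # 4 males x 4 repetitions per run
CUES_PER_BLOCK = 5        # cue stimuli shown per block
CUE_DURATION_S = 3        # seconds per cue stimulus
QUESTION_DURATION_S = 9   # seconds for the true/false question
TR_S = 3                  # study 1 repetition time in seconds

block_duration_s = CUES_PER_BLOCK * CUE_DURATION_S + QUESTION_DURATION_S
run_duration_s = N_BLOCKS * block_duration_s
volumes_per_run = run_duration_s // TR_S  # assumes no gaps between blocks

print(block_duration_s, run_duration_s, volumes_per_run)
```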

fMRI session in study 2.

Participants completed six runs of a person memory task in the scanner on day 3 (Fig. 1D). In each run, we adopted a 3 × 2 factorial block design where the factors were “task type” (status memory, trait memory, or nonmemory baseline) and “cue category” (name or face of the target male). In status/trait memory blocks, participants viewed faces or names of the eight learned males and were asked to indicate their social status (manager/janitor) or personality trait (extroverted/introverted). In the “nonmemory baseline” blocks, participants viewed eight novel faces/names that they had not learned before and were asked to indicate the spatial location of the cue stimuli appearing on the screen (left/right). At the beginning of each block, participants were informed of the upcoming task type (presented for 3 s). Each run consisted of 18 blocks (i.e., 3 task types × 2 cue types × 3 repetitions), with eight trials in each block to cover the whole set of faces/names. To make the memory and nonmemory conditions similar in terms of cognitive demand, participants had to respond within 4 s for status/trait trials but within 2 s for baseline trials. The target male order (within a block) and the task order (within a run) alternated and were randomized across participants.
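The factorial structure of a run can be enumerated in a few lines. The sketch below builds the 18 blocks from the stated factors and shuffles them. The simple random shuffle is an assumption, since the exact ordering constraints ("alternated and were randomized") are not detailed in the text, and the seed value is arbitrary.

```python
import itertools
import random

TASK_TYPES = ["status", "trait", "baseline"]
CUE_TYPES = ["face", "name"]
REPETITIONS = 3
TRIALS_PER_BLOCK = 8


def build_run(seed):
    """Return a shuffled list of (task, cue) blocks for one run."""
    blocks = list(itertools.product(TASK_TYPES, CUE_TYPES)) * REPETITIONS
    rng = random.Random(seed)  # seed is arbitrary, for reproducibility only
    rng.shuffle(blocks)
    return blocks


run = build_run(seed=0)
print(len(run))                     # blocks per run
print(len(run) * TRIALS_PER_BLOCK)  # trials per run
```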

Data Acquisition and Preprocessing.

The fMRI session was conducted at the Temple University Hospital on a 3-T Siemens Verio scanner, equipped with a 12-channel head coil. In study 1, functional images were acquired using a gradient-echo echo-planar imaging (EPI) sequence [repetition time (TR) = 3,000 ms; echo time (TE) = 20 ms; field of view = 240 × 240 mm; matrix size = 80 × 80; flip angle = 90°]. Sixty-one interleaved slices (3 × 3 × 2.5-mm voxels) were acquired at a 30° tilt from the anterior commissure–posterior commissure line, with full brain coverage. These imaging parameters (i.e., short TE, tilted slices) were optimized for mitigating susceptibility artifacts around ATL and OFC (72), and were validated by pilot scans as well as previous studies in the laboratory (10, 28). In study 2, we used the same pulse sequence except for the TR (2,000 ms) and the slice prescription (40 interleaved slices; 3 × 3 × 3.5-mm voxels). In study 1, visual stimuli were delivered by E-Prime software; in study 2, stimuli were delivered by Cogent toolbox running under Matlab R2014b. Responses were recorded using a four-button fiber optic response pad system.

To remove sources of noise and artifact, functional data were corrected for slice timing, realigned, unwarped, normalized to the EPI template [Montreal Neurological Institute (MNI) space, resampled to 3 × 3 × 3 mm], spatially smoothed (8 mm), high-pass filtered at 128 s, and prewhitened by means of an autoregressive model AR(1) using SPM8 software.
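Two of these parameters translate into quantities that are often easier to reason about. The sketch below assumes that the 8-mm smoothing value is the full width at half maximum (FWHM) of a Gaussian kernel, which is the usual SPM convention but is not stated explicitly here, and converts it to a standard deviation. It also expresses the 128-s high-pass period as a cutoff frequency.

```python
import math


def fwhm_to_sigma(fwhm_mm):
    """Convert a Gaussian FWHM to its standard deviation (same units)."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


sigma_mm = fwhm_to_sigma(8.0)   # assumes 8 mm is the kernel FWHM
sigma_voxels = sigma_mm / 3.0   # data were resampled to 3-mm voxels
highpass_hz = 1.0 / 128.0       # 128-s period expressed in Hz

print(round(sigma_mm, 3), round(sigma_voxels, 3), highpass_hz)
```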

Univariate General Linear Model Analyses.

Subject-specific parameter estimates (β weights) for each condition were derived through a general linear model (GLM). For each subject, the data were fitted at every voxel using a combination of effects of interest. These were delta functions representing the onset of each of the experimental conditions, convolved with the SPM8 hemodynamic response function. The six motion regressors and memory error trials were also included as nuisance regressors. Next, subject-specific β weights were entered into a group-level random-effects GLM to allow statistical inference. Statistical maps were generated using a voxel-level familywise error (FWE)-corrected threshold of P < 0.05. Stereotaxic coordinates are reported in MNI space and regional labels were derived using the automated anatomical labeling (AAL) atlas in xjView.
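The first-level model can be sketched for a single voxel and a single condition. The code below is a simplified illustration rather than the SPM8 implementation: it approximates the canonical response with a double-gamma function (shape parameters 6 and 16, undershoot ratio 1/6, taken here as assumptions), samples it at the study 2 TR, and fits one regressor plus an intercept by ordinary least squares. Nuisance regressors, high-pass filtering, and AR(1) prewhitening are omitted, and the onsets and signal are hypothetical.

```python
import math

TR = 2.0  # seconds; study 2 repetition time


def double_gamma_hrf(t):
    """Double-gamma response: peak near 5 s, undershoot scaled by 1/6."""
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0


def make_regressor(onset_scans, n_scans, hrf_length_s=32.0):
    """Convolve a delta function at each onset with the HRF sampled every TR."""
    kernel = [double_gamma_hrf(i * TR) for i in range(int(hrf_length_s / TR) + 1)]
    regressor = [0.0] * n_scans
    for onset in onset_scans:
        for lag, weight in enumerate(kernel):
            if onset + lag < n_scans:
                regressor[onset + lag] += weight
    return regressor


def fit_beta(regressor, signal):
    """Ordinary least squares for signal = intercept + beta * regressor."""
    n = len(signal)
    mean_x = sum(regressor) / n
    mean_y = sum(signal) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(regressor, signal))
    sxx = sum((x - mean_x) ** 2 for x in regressor)
    beta = sxy / sxx
    return beta, mean_y - beta * mean_x


# Hypothetical noiseless voxel: baseline 100 plus 2.5 x the predicted response.
x = make_regressor(onset_scans=[5, 25, 45], n_scans=60)
y = [100.0 + 2.5 * value for value in x]
beta, intercept = fit_beta(x, y)
print(round(beta, 3), round(intercept, 3))
```

With noiseless toy data the fit recovers the generating weight, which is the sense in which a β weight estimates the amplitude of the response to a condition.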

In study 1, we set up one GLM for each of the four categories (face, name, house, and object) across two runs using unsmoothed data. This was prepared for subsequent MVPA CC classification. β maps for each target male (i.e., Tyler, Justin, Aaron, and Cameron) were extracted from each block of a run. Each male had four β maps per run and eight β maps in total per category.
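The cross-classification idea, training on β maps from one cue category and testing on another, can be sketched as follows. The classifier used here (nearest centroid by Pearson correlation) is an assumption chosen for brevity, since this section does not specify the classifier. The toy patterns are hypothetical and are constructed so that each identity shares a signal across categories.

```python
import random

IDENTITIES = ["Tyler", "Justin", "Aaron", "Cameron"]
N_VOXELS = 8


def pearson(a, b):
    """Pearson correlation between two equal-length patterns."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def centroids(patterns_by_identity):
    """Average the training patterns of each identity voxel by voxel."""
    return {
        name: [sum(col) / len(col) for col in zip(*patterns)]
        for name, patterns in patterns_by_identity.items()
    }


def cross_classify(train, test):
    """Train on one cue category, test on another; return accuracy."""
    templates = centroids(train)
    hits = total = 0
    for true_name, patterns in test.items():
        for pattern in patterns:
            guess = max(templates, key=lambda n: pearson(templates[n], pattern))
            hits += guess == true_name
            total += 1
    return hits / total


def toy_patterns(rng, n_maps=8, noise=0.05):
    """Hypothetical beta maps: an identity-specific signal plus small noise."""
    data = {}
    for k, name in enumerate(IDENTITIES):
        signal = [1.0 if v // 2 == k else 0.0 for v in range(N_VOXELS)]
        data[name] = [[s + rng.gauss(0.0, noise) for s in signal] for _ in range(n_maps)]
    return data


rng = random.Random(0)
face_maps, name_maps = toy_patterns(rng), toy_patterns(rng)
accuracy = cross_classify(train=face_maps, test=name_maps)
print(accuracy)
```

With four identities, chance accuracy is 25%; above-chance accuracy when training and testing on different categories is what indicates a category-invariant identity code.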

In study 2, four different GLMs were set up across six runs, based on different analytic purposes. One GLM was set up for a univariate analysis of the main effect of task types. β maps for each task type regressor (i.e., face–status, face–trait, face–baseline, name–status, name–trait, and name–baseline) were extracted and contrasts of interest were conducted upon them (i.e., all memory > baseline, status memory > baseline, trait memory > baseline, face memory > name memory, and name memory > face memory) (Fig. S3 and Table S6). This GLM was also used for DCM analysis. For MVPA decoding of status, personality traits, and identity representations, three GLMs were set up separately using unsmoothed data. β maps for each status regressor (i.e., manager or janitor), each trait regressor (i.e., extrovert or introvert), and each learned male regressor (i.e., eight males) were extracted from status memory blocks, trait memory blocks, and all memory blocks, respectively.

Table S6.

Univariate analyses for the main effect of different task types in study 2

| Region | Hemisphere | Voxels | T | P FWE-corr | x | y | z |
| --- | --- | --- | --- | --- | --- | --- | --- |
| All-memory > baseline: (face–status, face–trait, name–status, name–trait) > (face–baseline, name–baseline) | | | | | | | |
| Insula | L | 218 | 10.12 | <0.001 | −30 | 26 | 7 |
| | R | 84 | 6.86 | 0.003 | 33 | 17 | 1 |
| Posterior cingulate cortex | Midline | 132 | 8.97 | <0.001 | 15 | −28 | 52 |
| Precuneus | Midline | 52 | 8.47 | <0.001 | −9 | −73 | 37 |
| Cerebellum | Midline | 75 | 8.08 | <0.001 | −12 | −46 | −17 |
| Anterior cingulate cortex | Midline | 75 | 7.75 | 0.001 | −6 | 23 | 31 |
| Inferior frontal gyrus | L | 218 | 7.13 | 0.002 | −36 | 23 | 16 |
| Anterior temporal lobe | L | 12 | 6.75 | 0.004 | −51 | 2 | −14 |
| Inferior parietal lobe | L | 11 | 6.56 | 0.006 | −27 | −61 | 40 |
| Hippocampus | L | 11 | 6.47 | 0.007 | −36 | −34 | −5 |
| Status memory > baseline: (face–status, name–status) > (face–baseline, name–baseline) | | | | | | | |
| Insula | L | 157 | 9.48 | <0.001 | −30 | 26 | 7 |
| | R | 40 | 6.07 | 0.016 | 33 | 20 | −2 |
| Posterior cingulate cortex | Midline | 129 | 8.81 | <0.001 | 15 | −28 | 52 |
| Precuneus | Midline | 41 | 7.83 | <0.001 | −9 | −73 | 37 |
| Cerebellum | Midline | 48 | 7.38 | 0.001 | −12 | −46 | −17 |
| Inferior parietal lobe | L | 18 | 7.33 | 0.001 | −27 | −61 | 40 |
| Anterior cingulate cortex | Midline | 36 | 7.31 | 0.001 | −6 | 23 | 31 |
| Superior temporal gyrus | R | 11 | 6.92 | 0.003 | 45 | −16 | −5 |
| Inferior frontal gyrus | L | 157 | 6.82 | 0.003 | −42 | 20 | 28 |
| Anterior temporal lobe | R | 10 | 6.68 | 0.005 | 51 | −4 | −11 |
| | L | 6 | 6.37 | 0.009 | −51 | 2 | −14 |
| Hippocampus | L | 5 | 5.99 | 0.019 | −33 | −34 | −5 |
| Trait memory > baseline: (face–trait, name–trait) > (face–baseline, name–baseline) | | | | | | | |
| Insula | L | 228 | 9.67 | <0.001 | −30 | 26 | 7 |
| | R | 117 | 7.62 | <0.001 | 33 | 17 | 1 |
| Precuneus | Midline | 57 | 8.97 | <0.001 | −9 | −73 | 37 |
| Anterior cingulate cortex | Midline | 91 | 7.94 | <0.001 | −9 | 20 | 43 |
| Posterior cingulate cortex | Midline | 125 | 8.33 | <0.001 | 15 | −28 | 52 |
| Cerebellum | Midline | 82 | 8.21 | <0.001 | −12 | −46 | −17 |
| Inferior frontal gyrus | L | 228 | 7.24 | 0.002 | −36 | 23 | 16 |
| Superior temporal gyrus | L | 11 | 6.80 | 0.004 | −60 | −34 | 10 |
| Hippocampus | L | 18 | 6.76 | 0.004 | −36 | −34 | −5 |
| Anterior temporal lobe | L | 7 | 6.67 | 0.005 | −51 | 2 | −14 |
| Face memory > name memory: (face–status, face–trait) > (name–status, name–trait) | | | | | | | |
| Fusiform gyrus | R | 119 | 9.95 | <0.001 | 39 | −58 | −14 |
| | | | 8.09 | <0.001 | 39 | −79 | −11 |
| Cuneus | Midline | 70 | 7.96 | <0.001 | −12 | −97 | −5 |
| Inferior occipital gyrus | R | 108 | 7.33 | 0.001 | 27 | −91 | −5 |
| Name memory > face memory: (name–status, name–trait) > (face–status, face–trait) | | | | | | | |
| Cuneus | Midline | 219 | 11.73 | <0.001 | 6 | −79 | 28 |
| | | 205 | 8.15 | <0.001 | −6 | −82 | 19 |
| Middle cingulate cortex | Midline | 109 | 9.58 | <0.001 | 6 | −13 | 40 |
| Superior temporal gyrus | R | 67 | 8.12 | <0.001 | 48 | −22 | 22 |
| | | 12 | 8.00 | <0.001 | 60 | −61 | 16 |
| | L | 52 | 7.14 | 0.002 | −57 | −43 | 28 |
| Orbitofrontal cortex | Midline | 12 | 7.31 | 0.001 | −12 | 23 | −17 |
| Medial prefrontal cortex | Midline | 47 | 7.24 | 0.002 | −12 | 53 | 28 |
| Fusiform gyrus and inferior temporal gyrus | L | 154 | 7.23 | 0.002 | −57 | −61 | −8 |
| | | | 6.77 | 0.004 | −54 | −46 | 1 |
| Precuneus | Midline | 9 | 6.57 | 0.006 | 12 | −55 | 58 |

ROI Localization.

Study 1 used an established functional localizer (10) to localize subject-specific ROIs. Face-selective regions (i.e., OFA, FFA, ATL, AMY, and OFC) were defined by individuating the peaks showing greater activity for faces than for places (“faces > places”; P < 0.05, uncorrected). A place-selective region (i.e., PPA) was defined by the opposite contrast (“places > faces”). To rule out effects driven by the low-level perceptual features of our stimuli, we additionally defined a control V1 ROI in early visual cortex around the voxel showing the greatest activation for all visual stimuli (“faces + places” > “fixation”) (Fig. S1A).

In study 2, subject-specific ROIs were functionally localized using a series of contrasts between task types in univariate analysis: ATL (“all memory > baseline”), FFA (“face memory > name memory”), VWFA (“name memory > face memory”), IPL (“status memory > baseline”), PCC (“trait memory > baseline”), and the hippocampus (“all memory > baseline”) (Fig. S1B). We stress that, even though ROI definition and subsequent MVPA analysis were based on the same data, our analyses did not suffer from circular logic (73), because the voxel selection criteria were based on “task types” in univariate analyses, where all eight learned males were modeled by the same regressor, whereas MVPA analysis aimed to classify each learned male. In other words, the voxel selection procedure used no information about the specific biographies of each learned male (74, 75). A similar rationale also applies to our PPI (32, 76) and DCM analyses (i.e., DCM inferences were made via model comparison, which was conditioned on prespecified regions but not biased by the selection of the regions per se) (77, 78).

A 6-mm spherical mask was generated for each ROI (68, 79) in study 1 and study 2, centered on the MNI coordinates with the highest activation within each peak (Table S7) and confirmed with the anatomical AAL atlas. In study 1, we localized bilateral areas for each ROI (except midline areas such as OFC and V1) for ROI-based MVPA analyses; we then merged both hemispheres into one unified mask to represent each ROI for combinatorial MVPA analyses. In study 2, we generated a unilateral mask for each ROI because only one hemisphere survived in the univariate analysis. In study 2, the same ROIs were used in the subsequent MVPA, PPI, and DCM analyses.
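
As an illustration of the spherical mask construction, the sketch below lists the voxel centers that fall within 6 mm of a peak coordinate. It assumes an isotropic 3-mm voxel grid (inferred from the searchlight description, in which 4 voxels correspond to 12 mm) and assumes that the peak lies on a voxel center; the function names are hypothetical and this is not the toolbox code used in the studies.

```python
import math

def sphere_offsets(radius_mm, voxel_mm):
    """Voxel-index offsets whose centers lie within radius_mm of the origin."""
    reach = int(math.floor(radius_mm / voxel_mm))
    span = range(-reach, reach + 1)
    return [(i, j, k) for i in span for j in span for k in span
            if (i * i + j * j + k * k) * voxel_mm ** 2 <= radius_mm ** 2]

def sphere_mask_mm(center_mm, radius_mm, voxel_mm):
    """Coordinates (mm) of all voxel centers inside the sphere around center_mm."""
    cx, cy, cz = center_mm
    return [(cx + i * voxel_mm, cy + j * voxel_mm, cz + k * voxel_mm)
            for i, j, k in sphere_offsets(radius_mm, voxel_mm)]

# Mean study 2 ATL coordinate from Table S7; the 3-mm grid is an assumption.
atl_mask = sphere_mask_mm((-50, -2, -16), radius_mm=6, voxel_mm=3)
```

Under these assumptions a 6-mm sphere contains 33 voxels; a different voxel size or an off-grid center would change that count.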

Table S7.

Mean MNI coordinates of all ROIs defined in studies 1 and 2

| Region | Hemisphere | x | y | z |
| --- | --- | --- | --- | --- |
| Study 1 | | | | |
| Early visual cortex (V1) | Midline | 0 | −89 | 4 |
| Occipital face area (OFA) | R | 44 | −77 | −8 |
| | L | −42 | −80 | −7 |
| Fusiform face area (FFA) | R | 42 | −52 | −20 |
| | L | −42 | −52 | −22 |
| Anterior temporal lobe (ATL) | R | 51 | −4 | −27 |
| | L | −50 | −2 | −26 |
| Amygdala (AMY) | R | 21 | −6 | −18 |
| | L | −19 | −6 | −17 |
| Orbitofrontal cortex face patch (OFC) | Midline | −1 | 38 | −15 |
| Parahippocampal place area (PPA) | R | 29 | −47 | −11 |
| | L | −27 | −48 | −11 |
| Study 2 | | | | |
| Anterior temporal lobe (ATL) | L | −50 | −2 | −16 |
| Fusiform face area (FFA) | R | 39 | −55 | −18 |
| Visual word form area (VWFA) | L | −45 | −56 | −12 |
| Inferior parietal lobe (IPL) | L | −30 | −58 | 45 |
| Posterior cingulate cortex (PCC) | Midline | 10 | −31 | 46 |
| Hippocampus (HIPP) | L | −33 | −30 | −8 |

MVPA Analyses.

In both studies, we adopted three MVPA decoding approaches: ROI-based, combinatorial, and searchlight analysis. All were implemented by the Decoding Toolbox (80) using a support vector machine as a classifier. Combinatorial analysis was executed in the same way as the ROI-based approach, except that conjunction masks were created between all possible combinations of two ROIs. Searchlight analysis was executed with a radius of 4 voxels (i.e., 12 mm) across the whole brain.
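
The pairing step of the combinatorial analysis can be sketched as follows. The sketch assumes that a "conjunction mask" pools the voxels of the two ROIs (their union), which is an interpretation rather than something stated in the text; the voxel sets are placeholders and the function name is hypothetical.

```python
from itertools import combinations

def pairwise_masks(roi_voxels):
    """Pool the voxels of every pair of ROIs; roi_voxels maps ROI name -> set of voxel indices."""
    return {(a, b): roi_voxels[a] | roi_voxels[b]
            for a, b in combinations(sorted(roi_voxels), 2)}

# Study 2 ROI names from Table S7 with placeholder single-voxel masks.
study2 = {name: {(idx, 0, 0)} for idx, name in
          enumerate(["ATL", "FFA", "VWFA", "IPL", "PCC", "HIPP"])}
masks = pairwise_masks(study2)  # one pooled mask per ROI pair
```

Six ROIs yield 15 pairs, so each ROI takes part in five combined masks.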

In study 1, a two-way CC classification scheme was used for each subject. The classifier was first trained on one category (e.g., face runs) and subsequently tested on the other category (e.g., name runs); the reverse decoding direction was also performed (e.g., first trained on name runs and then tested on face runs). The average two-way CC classification accuracy was then calculated for each ROI for each CC pair and compared with chance performance (identity of four males = 0.25). In total, six CC classification analyses were performed in a pairwise fashion between any two of the four categories (i.e., face–name, face–house, face–object, name–house, name–object, house–object).
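
The two-way CC scheme can be sketched on toy data as follows. A nearest-centroid classifier is swapped in for the support vector machine so that the example needs only the standard library, and the β maps are noiseless synthetic patterns; the function names and data are hypothetical and serve only to show the train-on-one-category, test-on-the-other logic.

```python
from itertools import combinations

def centroids(samples):
    """Mean pattern per label from (pattern, label) pairs."""
    sums, counts = {}, {}
    for pattern, label in samples:
        acc = sums.setdefault(label, [0.0] * len(pattern))
        for i, value in enumerate(pattern):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {lab: [v / counts[lab] for v in acc] for lab, acc in sums.items()}

def predict(cents, pattern):
    """Label of the centroid closest to the pattern (squared Euclidean distance)."""
    return min(cents, key=lambda lab: sum((a - b) ** 2 for a, b in zip(cents[lab], pattern)))

def accuracy(train, test):
    cents = centroids(train)
    return sum(predict(cents, p) == lab for p, lab in test) / len(test)

def two_way_cc(cat_a, cat_b):
    """Average of both decoding directions (train A, test B and train B, test A)."""
    return (accuracy(cat_a, cat_b) + accuracy(cat_b, cat_a)) / 2

def toy_category(offset, n_maps=8):
    """Eight noiseless beta maps per identity: identity code plus a category-wide offset."""
    names = ["Tyler", "Justin", "Aaron", "Cameron"]
    return [([offset + (1.0 if v == i else 0.0) for v in range(4)], name)
            for i, name in enumerate(names) for _ in range(n_maps)]

data = {"face": toy_category(0.0), "name": toy_category(0.1),
        "house": toy_category(0.2), "object": toy_category(0.3)}
results = {pair: two_way_cc(data[pair[0]], data[pair[1]])
           for pair in combinations(data, 2)}  # six category pairs
chance = 1 / 4  # four identities
```

Because the toy patterns share the same identity code across categories, every pair decodes perfectly; in real data, above-chance CC accuracy is what would indicate a category-independent identity code.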

Study 2 used a leave-one-run-out CV scheme in which the classifier was trained on five runs of data and tested on the remaining untrained run. This procedure was repeated six times, each time using a different test run, and the average CV accuracy was calculated for each ROI and compared with chance performance (status = 0.5, personality trait = 0.5, identity of eight fictitious males = 0.125).
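
A minimal sketch of the leave-one-run-out scheme is given below. The classifier is passed in as a pair of functions; a one-dimensional midpoint classifier on synthetic values stands in for the support vector machine, and all names and data are hypothetical.

```python
def leave_one_run_out(runs):
    """Yield (train_samples, test_samples), holding out each run once."""
    for held_out in range(len(runs)):
        train = [s for i, run in enumerate(runs) if i != held_out for s in run]
        yield train, runs[held_out]

def cv_accuracy(runs, fit, predict):
    """Mean test accuracy across folds."""
    scores = []
    for train, test in leave_one_run_out(runs):
        model = fit(train)
        scores.append(sum(predict(model, x) == y for x, y in test) / len(test))
    return sum(scores) / len(scores)

def fit_midpoint(train):
    """Stand-in classifier: threshold halfway between the two class means."""
    hi = [x for x, y in train if y == "manager"]
    lo = [x for x, y in train if y == "janitor"]
    return (sum(hi) / len(hi) + sum(lo) / len(lo)) / 2

def predict_midpoint(threshold, x):
    return "manager" if x > threshold else "janitor"

# Six synthetic runs, each with one sample per status label.
runs = [[(1.0 + 0.01 * r, "manager"), (-1.0 - 0.01 * r, "janitor")] for r in range(6)]
folds = list(leave_one_run_out(runs))
acc = cv_accuracy(runs, fit_midpoint, predict_midpoint)
chance = 1 / 2  # two status labels
```

Each of the six folds trains on five runs and tests on the sixth, mirroring the procedure described above.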

For group-level inference, ROI-based analysis used one-tailed t tests with a Bonferroni-corrected threshold of P < 0.05. For combinatorial analysis, we used one-tailed t tests (P < 0.05) to test whether the average change in CC/CV accuracy (obtained by combining a particular ROI with each of the remaining ROIs) was significantly above 0% (i.e., improved the prediction) (43). For searchlight analysis, a voxel-level FWE-corrected threshold of P < 0.05 was used. If null results were found, a lenient voxel-level threshold (P < 0.0001, uncorrected) was then used to reveal subthreshold results for exploratory purposes (Fig. S2 and Table S1).

PPI Analyses.

We carried out PPI analysis in SPM8 (53) to examine functional coupling between the six ROIs identified using univariate analyses during the person memory task. Seed regions included sensory input ROIs (VWFA/FFA), knowledge representation ROIs (IPL/PCC), and the ATL (identity representation); four experimental conditions were examined (i.e., face/name × status/personality trait), each vs. baseline. For instance, in one PPI analysis, we used the FFA as the seed region and explored its “coupling” brain regions during the retrieval of social status; in another PPI analysis, we set the IPL as a seed region and explored its coupling regions during face–status conditions. In total, 12 PPI analyses were performed for each subject (Fig. S4 and Tables S3–S5).

For each PPI analysis, we created a new GLM with (i) a “physiological” regressor in which the seed region’s time course (i.e., first eigenvariate) was deconvolved to estimate the underlying neural activity; (ii) a “psychological” regressor in which task type trials (vs. baseline trials) were convolved with the canonical hemodynamic response function; and (iii) a “PPI interaction” regressor in which the psychological regressor was multiplied by the physiological regressor. We used this interaction regressor to identify voxels in which functional activity covaried in a task-dependent manner with the seed region. Subject-level PPI analyses were run to generate SPM contrast images similar to a subject-level GLM model, and these contrast images were entered into a group-level random-effects GLM and thresholded at P < 0.05 (voxel-level FWE corrected).
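
The structure of the three PPI regressors can be sketched as below. This simplified illustration assumes that the seed signal has already been deconvolved and that task and baseline scans are coded +1 and −1; it omits the hemodynamic convolution and is not the SPM8 routine. The function name and values are hypothetical.

```python
def ppi_design(seed, task):
    """Return per-scan rows of (physiological, psychological, interaction) regressors."""
    if len(seed) != len(task):
        raise ValueError("seed and task vectors must have the same length")
    interaction = [s * t for s, t in zip(seed, task)]
    return list(zip(seed, task, interaction))

seed = [0.5, -1.0, 2.0, 0.25]  # hypothetical deconvolved seed activity
task = [1, 1, -1, -1]          # assumed coding: +1 task scans, -1 baseline scans
design = ppi_design(seed, task)
```

The third column changes sign with the task context, which is what lets the GLM pick up task-dependent coupling with the seed.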

DCM.

We performed DCM analysis using DCM10 in SPM8 (81). Three distinct, but not mutually exclusive, models were tested (Fig. 4B and Fig. S5): (i) Model 1 hypothesizes that person knowledge is retrieved via a direct link from sensory input areas (FFA/VWFA) to knowledge representation areas (IPL/PCC), with the ATL being mainly implicated in identity recognition and therefore not involved in specific knowledge content retrieval. In model 1, one can expect two separate information flows during face–status memory: “FFA → IPL” (for status knowledge retrieval) and “FFA → ATL” (for implicit person identification). This model is conceptually similar to the distributed-only account in semantic memory research. (ii) Model 2 assumes that the ATL serves as a hub that coordinates activity within the network during person memory retrieval: a person’s identity is first recognized in the ATL, which then directs information flow to knowledge representation areas (IPL/PCC). In model 2, the information flows for face–status memory and name–trait memory can be expected as “FFA → ATL → IPL” and “VWFA → ATL → PCC,” respectively. Note that we did not set the ATL’s modulation to the hippocampus in this model because MVPA analyses suggest that the hippocampus does not contain any person-knowledge representations and PPI analyses found no functional connectivity between the ATL and hippocampus during person memory retrieval. (iii) In model 3, the hippocampus is the central hub: all person knowledge (including identity) is coordinated by this domain-general hub. The information flows during face–status memory would be “FFA → hippocampus → IPL (for status knowledge)” and “FFA → hippocampus → ATL (for implicit person identification).” For each model, the six ROIs were all set to be bidirectionally connected (Fig. S5A), and their time courses (i.e., first eigenvariate) were extracted for each subject individually.

To screen for poor model fit, we calculated the mean explained variance of each model across subjects using the SPM function “spm_dcm_fmri_check.” To determine the optimal model, fixed-effects (FFX) and random-effects (RFX) group analyses were implemented with Bayesian model selection (82). The FFX analysis assumes that the optimal model is identical across the population. It uses the group log evidence to quantify the relative goodness of models, which is the sum of the log model evidences of the subject-specific models. Usually, a difference in group log evidence of 3 is taken as strong evidence. Thus, if the group log evidence of one model exceeds that of every other model by 3 or more, FFX analysis considers that model optimal (78).
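
The FFX decision rule can be sketched as follows, taking the group log evidence of a model as the sum of its subject-specific log evidences (equivalently, the log of the product of the evidences). The log-evidence values are made up for illustration and the function names are hypothetical; this is not the SPM routine.

```python
def group_log_evidence(log_evidence):
    """Sum subject-wise log evidences per model; input maps model -> list of values."""
    return {model: sum(values) for model, values in log_evidence.items()}

def ffx_winner(log_evidence, threshold=3.0):
    """Return (winning model or None, margin over the runner-up)."""
    totals = group_log_evidence(log_evidence)
    ranked = sorted(totals, key=totals.get, reverse=True)
    margin = totals[ranked[0]] - totals[ranked[1]]
    return (ranked[0] if margin >= threshold else None), margin

# Hypothetical log evidences for three subjects and three models.
toy = {
    "model1": [-100.0, -102.0, -101.0],
    "model2": [-98.0, -99.0, -97.0],
    "model3": [-101.0, -100.0, -103.0],
}
winner, margin = ffx_winner(toy)
```

Here model 2 leads the runner-up by 9 in group log evidence, well past the threshold of 3, so it would be selected under the FFX rule.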

Because the FFX analysis is vulnerable to outlier subjects, we also implemented an RFX analysis, which accounts for the heterogeneity of the model structure across subjects. It uses hierarchical Bayesian modeling that estimates the parameters of a Dirichlet distribution over the probabilities of all models considered. These probabilities define a multinomial distribution over model space, enabling the computation of the posterior probability of each model given the data of all subjects and the models considered. The results of RFX analysis are reported in terms of the exceedance probability that one model is more likely than any other model. The optimal model in RFX analysis would be considered to be the one with the largest exceedance probability.
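
Given Dirichlet parameters that have already been estimated, exceedance probabilities can be approximated by sampling, as in the sketch below. The estimation of the Dirichlet parameters themselves is not shown, the parameter values are hypothetical, and the function name is an assumption; this is a Monte Carlo illustration rather than the SPM implementation.

```python
import random

def exceedance_probabilities(alpha, n_samples=20000, seed=0):
    """Estimate P(model k has the largest probability) under Dirichlet(alpha)."""
    rng = random.Random(seed)
    wins = [0] * len(alpha)
    for _ in range(n_samples):
        # Independent gamma draws, once normalized, form a Dirichlet sample;
        # normalization does not change which entry is largest, so it is skipped.
        draws = [rng.gammavariate(a, 1.0) for a in alpha]
        wins[draws.index(max(draws))] += 1
    return [w / n_samples for w in wins]

alpha = [2.0, 20.0, 3.0]  # hypothetical Dirichlet parameters for models 1-3
xp = exceedance_probabilities(alpha)
best = xp.index(max(xp))  # index of the model with the largest exceedance probability
```

The exceedance probabilities sum to one across models, so a value near one for a single model indicates that it is very likely the most frequent model in the population.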

Acknowledgments