Abstract

Effective pattern recognition requires carefully designed ground-truth datasets. In this technical note, we first summarize potential data collection issues in digital pathology and then propose guidelines to build more realistic ground-truth datasets and to control their quality. We hope our comments will foster the effective application of pattern recognition approaches in digital pathology.

Keywords: Digital pathology, ground truth, object recognition, pattern recognition, quality control

Introduction

In pathology, the study of cells (cytology) and tissue (histology) is performed by examining cells and tissues which were sectioned, stained, and mounted on a microscope glass slide under a light microscope. These studies typically aim at detecting changes in cellularity or tissue architecture for the diagnosis of a disease. Over the past few decades, technological advances in scanning technology enabled the high-throughput conversion of glass slides into digital slides (whole slide images) at resolutions approaching those of traditionally used optical microscopes. Digital pathology has become an active field that holds promise for the future of anatomic pathology and raises many pattern recognition research challenges such as rare object detection/counting and robust tissue segmentation.[1,2,3] In addition to the numerous potential patterns to recognize in digital slides, one of the key challenges for recognition algorithms is the wide variety of sample preparation protocols. These yield highly variable image appearances of tissue and cellular structures. Ideally, pattern recognition algorithms should be versatile so that they could be applied to several classification tasks and image acquisition conditions without the need to develop completely novel methods but using training datasets related to each novel task at hand. However, such an idealistic application of pattern recognition methods on real-world applications requires the ground-truth data to be carefully designed and realistic. We believe realistic data collection is an underestimated challenge in digital pathology that deserves more attention. In this technical note, we first discuss potential dataset issues in digital pathology. We then suggest guidelines and tools to set up better ground-truth datasets and evaluation protocols.

Discussion

Potential sources of dataset variability and bias

Object recognition aims at designing methods to automatically find and identify objects in an image. The design of such methods usually requires ground-truth datasets provided by domain experts and depicting various categories (or classes) of objects to recognize. In object recognition research, publicly available ground-truth datasets are essential to enable continuous progress as they also allow algorithm quantitative evaluation and comparison of algorithms. However, computer vision dataset issues have been raised recently against datasets used for several years.[4,5,6,7,8,9,10,11] We expect similar problematic issues might arise in the coming years in the emerging field of digital pathology if precautions are not taken when collecting new datasets.

Indeed, in some of these studies, published in the broader computer vision community, authors have shown that some hidden regularities can be exploited by learning algorithms to classify images with some success. For example, background environments can be exploited in several face recognition benchmarks.[6,7] Similarly, images of some object recognition datasets can be classified using background regions with accuracy far higher than mere chance[11] although images were acquired in controlled environments. In biomedical imaging, illumination, focus, or staining settings might also discreetly contribute to classification performance.[10] This type of fluctuation can lead to reduced generalization performance of classifiers as also observed in high-content screening experiments where images of different plates can have quite different gray value distributions.[12]

Overall, these dataset biases will prevent an algorithm to work well on new images and are potentially guiding algorithm developers in the wrong direction. Moreover, the realism of several benchmarks has to be questioned beside the large amount of imaging data needed to analyze digital pathology applications. For example, in diagnostic cytology, a single patient slide might contain hundreds of thousands of objects (cells and artifacts). However, typical benchmarks (e.g.,[13] in serous cytology, and[14] in cervical cancer cytology screening) contain only a few hundred individual cells from a limited number (or unknown number) of patient samples; hence, variations induced by laboratory practices and by biological factors are often not well represented. We believe that this partly explains why pattern recognition approaches had only a limited impact in cytology although there have been numerous attempts at designing computer-aided cytology systems.[15]

The lack of details concerning data acquisition and evaluation protocols is also potentially hiding idiosyncrasies. An obvious sample selection bias would consist in collecting all examples of a given class (e.g., malignant cells) from a subset of slides while objects of another class (e.g., benign cells) are collected from another subset of slides. Such a data collection strategy might lead to classifiers that unwillingly capture slide-specific patterns rather than class-specific ones, hence have poor generalization performance. Similar problems might occur with other experimental factors, for example, when examples from slides stained in a different laboratory or stained on different days of the week are used, as it has been shown that these are major factors causing color variations in histology.[16] It has been reported that many other factors (e.g., variation in fixation delay timings, changes in temperature, etc.) can affect cytological specimens[17] and tissue sections,[18] hence the images used to develop recognition algorithms. Similarly, in immunohistochemistry, variable preanalytical conditions (such as fluctuations in cold ischemia, fixation, or stabilization time) could induce changes on certain marker expression, hence image analysis results.[19] Indeed, samples are prepared using colored histochemical stains that bind selectively to cellular components. Color variability is inherent to cytopathology and histopathology based on transmitted microscopy due to the several factors such as variable chemical coloring/reactivity from different manufacturers/batches of stains, coloring being dependent on staining procedures (timing, concentrations, etc.). Furthermore, light transmission is a function of tissue section thickness and influenced by the components of the different scanners used to acquire whole slide images.

Data collection guidelines

While it would be hardly possible to avoid all dataset variability and bias, it is important that the protocols for data acquisition and imaging acquisition try to reduce the nonrelevant differences between object categories. Moreover, object recognition evaluation protocols should focus on challenging methods in terms of robustness.

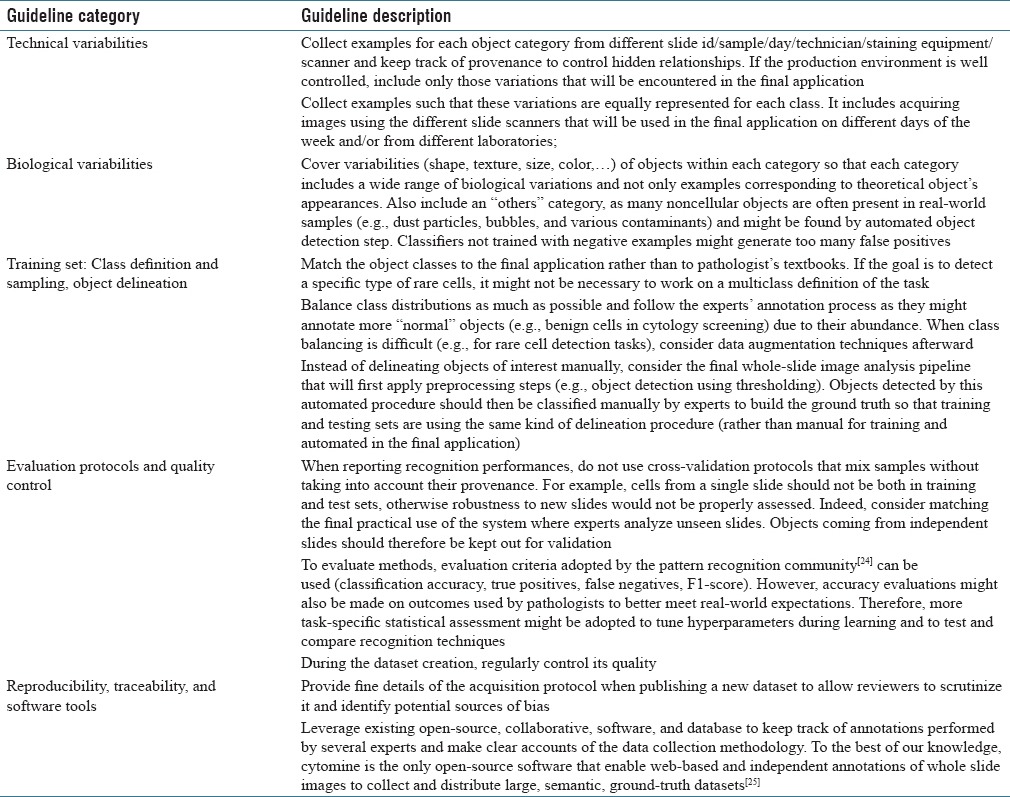

Table 1 lists and organizes recommendations for less biased data collection based on lessons learned from the design of a practical cytology system,[20] from observations in digital pathology challenges,[21] from more general recommendations in the broader microscopy image analysis,[22,23,26] and from computer vision literature.[27] While all these recommendations might not be followed simultaneously due to current standard practices and limited resources, we recommend to follow the most of these whenever possible.

Table 1.

Guidelines for less biased data collection and algorithm evaluation

Dataset quality control

While following guidelines for the construction of a realistic ground truth should reduce dataset bias, it might not be possible to control and constrain every aspect of the data collection due to the current laboratory practices and available resources. Hence, there might still be real-life reasons for dataset shift.[28] While other works have considered ground-truth quality assessment using various annotation scoring functions (e.g.,[29] where authors used the number of control points in the bounding polygon of a manual annotation), we believe these are not very relevant for practical pattern recognition applications in digital pathology. As[6,10] we rather think, it is important to assess dataset quality with respect to outcomes used by the final users. We, therefore, recommend to implement two simple quality control tests for assessing novel datasets and detecting biases before intensively working on them.

The first strategy simply evaluates recognition performances (e.g., classification accuracy) with global color histogram methods or related approaches. While color information can be helpful for some classification tasks, too good results using such a simple scheme might reveal that individual pixel intensities are (strongly) related to image classes. In particular, in histology and cytology, color statistics may be of additional value, for example, to indirectly recognize a cell with a larger dark nucleus, but experts usually discriminate objects based on subtle morphological or textural criteria. For example, we have observed that staining variability can be exploited by such an approach on a dataset of 850 images of H and E stained liver tissue sections from an aging study involving female mice on ad libitum or caloric restriction diets.[30] We use the Extremely Randomized Trees for Feature Learning (ET-FL) open-source classification algorithm of[31] that yields < 5% error rate to discriminate mouse liver tissues at different development stages using only individual pixels encoded in the Hue-Saturation-Value (HSV) color space (using 10-fold cross-validation evaluation protocol, and method parameter values[31] were: T = 10, nmin = 5000, and k = 3, with NLs = 1 million pixels extracted from training images). We observed that a similar approach yields also < 5% error rate for the classification of 1057 patches of four immunostaining patterns (background, connective tissue, cytoplasmic staining, and nuclear staining) from breast tissue microarrays[32] (using the same evaluation protocol and method parameter values).

Second, similarly to[6] that observed background artifacts in face datasets, one can easily evaluate recognition rates of classification methods on regions not centered on the objects of interest. We performed such an experiment using all 260 images of acute lymphoblastic leukemia lymphoblasts.[33] Using the ET-FL classifier,[31] we obtained 9% error rate using only pixel data from a square patch of 50 × 50 pixels extracted at the top-left corner of each image corresponding to background regions (using 10-fold cross-validation evaluation protocol, and method parameter values[31] were: T = 10, nmin = 5000, and k = 28, with NLs = 1 million 16 × 16 subwindows extracted from training images and described by HSV pixel values). That is significantly better than majority/random voting although these patches do not include any information about the cells to be recognized. This problem is illustrated in Figure 1.

Figure 1.

Illustration of illumination/saturation bias in unprocessed images from a dataset describing normal and lymphoblast cells.[28] The large images (left) are two images from each class. Small images (right) are cropped subimages (top left 50 × 50 corner) from 16 images for each class

In these two datasets, some acquisition factors are correlated to individual classes. Overall, these overly simple experiments stress the need for carefully designed datasets and evaluation protocols in digital pathology.

Conclusions

Pattern recognition could significantly shape digital pathology in the next few years as it has a large number of potential applications, but it requires the availability of representative ground-truth datasets. In this note, we summarized data collection challenges in this field and suggest guidelines and tools to improve the quality of ground-truth datasets. Overall, we hope these comments will complement other recent studies that provide guidelines for the design and application of pattern recognition methodologies,[31,34,35] hence contribute to the successful application of pattern recognition in digital pathology.

Financial support and sponsorship

R.M. was supported by the CYTOMINE and HISTOWEB research grants of the Wallonia (DGO6 n°1017072 and n°1318185).

Conflicts of interest

There are no conflicts of interest.

Acknowledgments

R.M. is grateful to persons Philipp Kainz (Medical University of Graz), Vannary Meas-Yedid (Pasteur Institute, France), and Nicky d'Haene (Hopital Erasme, Université libre de Bruxelles) for their comments on an earlier version of the manuscript.

Footnotes

Available FREE in open access from: http://www.jpathinformatics.org/text.asp?2017/8/1/19/204200

References

- 1.Fuchs TJ, Buhmann JM. Computational pathology: Challenges and promises for tissue analysis. Comput Med Imaging Graph. 2011;35:515–30. doi: 10.1016/j.compmedimag.2011.02.006. [DOI] [PubMed] [Google Scholar]

- 2.Kothari S, Phan JH, Stokes TH, Wang MD. Pathology imaging informatics for quantitative analysis of whole-slide images. J Am Med Inform Assoc. 2013;20:1099–108. doi: 10.1136/amiajnl-2012-001540. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.McCann MT, Castro C, Ozolek JA, Parvin B, Kovacevic J. Automated Histology Analysis: Opportunities for Signal Processing. IEEE Signal Processing. 2014 [Google Scholar]

- 4.Ponce J, Berg TL, Everingham M, Forsyth DA, Hebert M, Lazebnik S, et al. Toward Category Level Object Recognition. Ch 2. Switzerland: Springer-Verlag Lecture Notes in Computer Scienc; 2006. Dataset issues in object recognition. [Google Scholar]

- 5.Herve N, Boujemaa N. Image Annotation: Which Approach for Realistic Databases? In Proceeding ACM International Conference on Image and Video Retrieval (CIVR) 2007:170–7. [Google Scholar]

- 6.Shamir L. Evaluation of face datasets as tools for assessing the performance of face recognition methods. Int J Comput Vis. 2008;79:225–230. doi: 10.1007/s11263-008-0143-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Kumar N, Berg AC, Belhumeur PN, Nayar SK. Attribute and Simile Classifiers for Face Verification. In IEEE International Conference on Computer Vision (ICCV); October. 2009 [Google Scholar]

- 8.Cox DD, Pinto N, Barhomi Y, DiCarlo JJ. Comparing State-of-the-Art Visual Features on Invariant Object Recognition Tasks. In Proceeding IEEE Workshop on Applications of Computer Vision (WACV) 2011 [Google Scholar]

- 9.Torralba A, Efros A. Unbiased Look at Dataset Bias. In Proceeding IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2011 [Google Scholar]

- 10.Shamir L. Assessing the efficacy of low-level image content descriptors for computer-based fluorescence microscopy image analysis. J Microsc. 2011;243:284–92. doi: 10.1111/j.1365-2818.2011.03502.x. [DOI] [PubMed] [Google Scholar]

- 11.Model I, Shamir L. Comparison of dataset bias in object recognition benchmarks. IEEE Access. 2015;3:1953–62. [Google Scholar]

- 12.Harder N, Batra R, Diessl N, Gogolin S, Eils R, Westermann F, et al. Large-scale tracking and classification for automatic analysis of cell migration and proliferation, and experimental optimization of high-throughput screens of neuroblastoma cells. Cytometry A. 2015;87:524–40. doi: 10.1002/cyto.a.22632. [DOI] [PubMed] [Google Scholar]

- 13.Lezoray O, Elmoataz A, Cardot H. A color object recognition scheme: Application to cellular sorting. Mach Vis Appl. 2003;14:166–71. [Google Scholar]

- 14.Marinakis Y, Dounias G, Jantzen J. Pap smear diagnosis using a hybrid intelligent scheme focusing on genetic algorithm based feature selection and nearest neighbor classification. Comput Biol Med. 2009;39:69–78. doi: 10.1016/j.compbiomed.2008.11.006. [DOI] [PubMed] [Google Scholar]

- 15.Bengtsson E, Malm P. Screening for cervical cancer using automated analysis of PAP-smears. Comput Math Methods Med 2014. 2014 doi: 10.1155/2014/842037. 842037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Bejnordi BE, Timofeeva N, Otte-Holler I, Karssemeijer N, van der Laak J. Quantitative Analysis of Stain Variability in Histology Slides and an Algorithm for Standardization. Proceeding SPIE – The International Society for Optical Engineering (9041) 2014 [Google Scholar]

- 17.Sahay K, Mehendiratta M, Rehani S, Kumra M, Sharma R, Kardam P. Cytological artifacts masquerading interpretation. J Cytol. 2013;30:241–6. doi: 10.4103/0970-9371.126649. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Mcinnes EF. Artefacts in histology. Comp Clin Pathol. 2005;13:100–8. [Google Scholar]

- 19.Marien K. The Search for a Predictive Tissue Biomarker for Response to Colon Cancer Therapy with Bevacizumab. Doctoral Thesis, Universiteit Antwerpen. 2016 [Google Scholar]

- 20.Delga A, Goffin F, Kridelka F, Marée R, Lambert C, Delvenne P. Evaluation of CellSolutions BestPrep ® automated thin-layer liquid-based cytology Papanicolaou slide preparation and BestCyte ® cell sorter imaging system. Acta Cytol. 2014;58:469–77. doi: 10.1159/000367837. [DOI] [PubMed] [Google Scholar]

- 21.Giusti A, Claudiu D, Caccia C, Schmid-Huber J, Gambardella LM. A Comparison of Algorithms and Humans for Mitosis Detection. In Proceeding International Symposium on Biomedical Imaging (ISBI) 2014 [Google Scholar]

- 22.Kozubek M. Challenges and benchmarks in bioimage analysis. Adv Anat Embryol Cell Biol. 2016;219:231–62. doi: 10.1007/978-3-319-28549-8_9. [DOI] [PubMed] [Google Scholar]

- 23.Shamir L, Delaney JD, Orlov N, Eckley DM, Goldberg IG. Pattern recognition software and techniques for biological image analysis. PLoS Comput Biol. 2010;6:e1000974. doi: 10.1371/journal.pcbi.1000974. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Bradley AP. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 1997;30:1145–935. [Google Scholar]

- 25.Marée R, Rollus L, Stévens B, Hoyoux R, Louppe G, Vandaele R, et al. Collaborative analysis of multi-gigapixel imaging data using Cytomine. Bioinformatics. 2016;32:1395–401. doi: 10.1093/bioinformatics/btw013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Jeanray N, Marée R, Pruvot B, Stern O, Geurts P, Wehenkel L, et al. Phenotype classification of zebrafish embryos by supervised learning. PLoS One. 2015;10:e0116989. doi: 10.1371/journal.pone.0116989. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Krig S. Ground truth data, content, metrics, and analysis. In Computer Vision Metrics. Ch 7. Switzerland: Springer; 2016. [Google Scholar]

- 28.Quionero-Candela J, Sugiyama M, Schwaighofer A, Lawrence ND. Dataset Shift in Machine Learning. US: The MIT Press; 2008. [Google Scholar]

- 29.Vittayakorn S, Hays J. Quality Assessment for Crowdsourced Object Annotations. In Proceeding of the British Machine Vision Conference, BMVA Press; September. 2011:1091–10911. [Google Scholar]

- 30.Shamir L, Macura T, Orlov N, Eckely DM, Goldberg IG. Iicbu 2008 – A Benchmark Suite for Biological Imaging. In 3rd Workshop on Bio-Image Informatics: Biological Imaging, Computer Vision and Data Mining. 2008 [Google Scholar]

- 31.Maree R, Geurts P, Wehenkel L. Towards generic image classification using tree-based learning: An extensive empirical study. Pattern Recognit Lett. 2016;74:17–23. [Google Scholar]

- 32.Swamidoss IN, Kårsnäs A, Uhlmann V, Ponnusamy P, Kampf C, Simonsson M, et al. Automated classification of immunostaining patterns in breast tissue from the human protein atlas. J Pathol Inform. 2013;4(Suppl):S14. doi: 10.4103/2153-3539.109881. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Donida Labati R, Piuri V, Scotti F. All-idb: The Acute Lymphoblastic Leukemia Image Database for Image Processing. In IEEE International Conference on Image Processing (ICIP); September. 2011 [Google Scholar]

- 34.Janowczyk A, Madabhushi A. Deep learning for digital pathology image analysis: A comprehensive tutorial with selected use cases. J Pathol Inform. 2016;7:29. doi: 10.4103/2153-3539.186902. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Goodfellow I, Bengio Y, Courville A. Practical methodology. Deep Learning. Ch 11. US: MIT Press; 2016. [Google Scholar]