Abstract

Importance

Accurate documentation of patient symptoms in the electronic medical record (MR) is important for high-quality patient care.

Objective

To explore inconsistencies between patient self-report on an eye symptom questionnaire (ESQ) and documentation in the MR.

Design

Observational study.

Setting

Comprehensive ophthalmology and cornea clinics at an academic institution.

Participants

Convenience sample of 192 consecutive, eligible patients, of whom 30 declined participation.

Main Outcome and Measures

Concordance of symptoms reported on an ESQ with data recorded in the MR. Agreement of symptom report was analyzed using kappa coefficients and McNemar’s tests. Disagreement was defined as a negative symptom report or no mention of a symptom in the MR for patients who reported moderate to severe symptoms on the ESQ. Logistic regression was used to investigate if patient factors, physician characteristics, or diagnoses were associated with the probability of disagreement for symptoms of blurry vision, eye pain, and eye redness.

Results

162 patients (324 eyes) were included. Subjects were on average 56.6 ± 19.4 years old, 62% female, and 85% Caucasian. At the subject level, 54 of 160 (34%) had discordant reporting of blurry vision between the ESQ and MR. Likewise, documentation was discordant for reporting glare (48%, n=78 of 162), eye pain (27%, n=43 of 162), and eye redness (25%, n=40 of 162) with poor to fair agreement (kappa range = −0.02 to 0.42). Discordance of symptom reporting was more frequently characterized by positive reporting on the ESQ and lack of documentation in the MR (Holm’s adjusted McNemar’s p<0.03 for seven of eight symptoms; the exception was blurry vision, p=0.59). At return visits, patients who reported blurry vision on the ESQ had increased odds of the symptom not being documented in the MR, compared to new visits (Holm’s adjusted p=0.045).

Conclusions and Relevance

Symptom reporting was inconsistent between a patient’s self-report on an ESQ and documentation in the MR, with symptoms more frequently recorded on a questionnaire. Our results suggest that documentation of symptoms based on MR data may not provide a comprehensive resource for clinical practice or ‘big data’ research.

Keywords: Medical Record, Patient-Reported Outcomes, Eye

Introduction

The medical record (MR) began as a tool for physicians to document their pertinent findings from the clinical encounter and has evolved to serve providers, patients, health systems, and insurers. Advocates of conversion to an electronic MR aimed to balance the multiple functional purposes of the MR and user accessibility.1 After the passage of the Health Information Technology for Economic and Clinical Health Act, the percentage of office-based physicians utilizing any electronic MR increased from 18% in 2001 to 83% in 2014.2, 3 The Institute of Medicine promoted the core capabilities expected from an electronic MR including health information storage, electronic communication, and patient support.4

Physicians have mixed views on the ability of the MR to capture important components of the interaction with the patient.5–7 Researchers have reported inconsistencies between the MR and patient findings, but they have not necessarily clarified if this is due to poor documentation or poor communication between the patient and provider.8–15 The conversion from a paper MR to an electronic MR created new issues. Detractors of electronic MRs report interruptions in clinic workflow, interference with maintaining eye contact with patients, time-consuming data entry, longer clinic visits, and lower productivity.16 Electronic MR shortcuts, such as the copy-paste function and template-based notes, create unique user errors that may diminish the quality of information.17, 18

Researchers hope that the electronic MR can be used beyond clinical applications as a research tool.19 MR data could be analyzed by “big data” approaches such as natural language processing and bioinformatics and has the potential to improve healthcare efficiency, quality, and cost-effectiveness.20, 21 However, these applications assume that the MR has accurate patient level data. The current study was undertaken to fully explore inconsistencies between self-report on an eye symptom questionnaire (ESQ) and documentation in the MR for patients presenting to an ophthalmology clinic.

Methods

This study, approved by the institutional review board at the University of Michigan, was HIPAA-compliant and adhered to the Declaration of Helsinki. Written, informed consent was obtained from all enrollees. Patients were recruited at the Kellogg Eye Center from October 1, 2015 to January 31, 2016. Patients were eligible to be included in the study if they were 18 years or older. Exclusion criteria included patients in the 90-day post-operative period for ocular surgeries or history of complex ocular surface diseases requiring ocular surgery, which would potentially alter normal symptom reporting. Race was classified based on self-report in the MR as Caucasian, African American, Asian, or other.

We conducted a pilot project administering an ESQ (eFigure 1) to patients of 13 physicians in comprehensive (n=7) and cornea (n=6) clinics to understand the relationship between patient-reported symptoms and diagnosed eye disease. The ESQ was administered while the patient was waiting to see the physician after the technician encounter. The ESQ contained eight eye symptom items obtained from previously validated questionnaires, and asked about the severity of each symptom in the last seven days. Responses were reported for right and left eyes separately. Six of the eight symptom items were obtained from the National Institutes of Health (NIH) Toolbox Vision-related Quality of Life measure, one item (eye pain) was derived from the National Eye Institute-Visual Function Questionnaire (NEI-VFQ), and one item (gritty sensation) was derived from the Ocular Surface Disease Index.22–24 Eye symptom items on the ESQ could be reported on a four-point Likert scale (no problem at all, a little bit of a problem, somewhat of a problem, or very much of a problem) for seven questions, and on a five-point Likert scale (none, mild, moderate, severe, or very severe) for one question (eye pain).

A medical student (N.V.) abstracted eye symptoms retrospectively from the electronic MR corresponding to the eight eye symptoms of the ESQ. We abstracted symptoms recorded by any person on the care team. Technicians and clinicians were not aware their documentation was to be queried. Wording differences for symptoms in the MR were recorded and collapsed into broad categories. The MR included radio buttons for all symptoms included in the ESQ. If the radio button was not checked or if there was no free text documentation of the symptom, the symptom was classified as “not documented”. The MR was reviewed to document: (1) if the patient reported having the symptom (positive symptom) or not having the symptom (negative symptom), (2) if a symptom was recorded as occurring within the past seven days, (3) if the MR indicated eye laterality for the symptom, and (4) if there was no documentation of the symptom in the MR. Eye symptoms reported in the MR but not included in the ESQ were also classified (eTable 1). These other symptoms were not explored in depth as they were not components of the validated questionnaires from the NIH Toolbox or NEI-VFQ.

Additional data were collected regarding demographic information such as age and gender of patients, clinical diagnosis of the eye (no presence of disease, non-urgent, or urgent anterior segment disease), type of visit (new visit, return visit, or new problem during a return visit), and characteristics of the examining physician. These physician characteristics included the number of years the physician had been in clinical practice, the physician’s average volume of patients on a clinic day, and if the physician worked with a medical scribe.

Statistical Analysis

Agreement between reporting symptoms on the ESQ versus the MR was summarized descriptively with cross-tables, and included frequencies and percentages. Results were reported both as eye-based and subject-based due to missing data for eye laterality in the electronic MR. At the subject-level, if the symptom was reported in at least 1 eye, then the subject was indicated as having the symptom. Presence of an eye symptom on the ESQ was defined as a report of “somewhat of a problem” or “very much of a problem” for seven symptoms, or a report of “moderate,” “severe,” or “very severe” for eye pain. Two alternative categorizations were performed as sensitivity analyses. In the first analysis, presence of a symptom on the ESQ was re-categorized as any positive report regardless of severity (including “mild” or “a little bit of a problem,” depending on the symptom). In the second analysis, presence of a symptom on the ESQ was re-categorized as only the highest positive symptom report categories (“severe and very severe” or “very much of a problem”). Symptom severity levels could not be captured in the MR to the same extent, therefore any positive documentation was taken as presence of that symptom. Lack of documentation of a symptom or an explicit negative report in the MR was treated as a negative report.
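The categorization rules described above can be sketched in code. The following Python sketch is illustrative only and is not the SAS code used in the study; the function names, the dictionary of thresholds, and the labels "primary", "inclusive", and "exclusive" as dictionary keys are hypothetical, and missing responses are not handled.

```python
# Response options as worded on the ESQ, ordered from least to most severe.
FOUR_POINT = ["no problem at all", "a little bit of a problem",
              "somewhat of a problem", "very much of a problem"]
FIVE_POINT = ["none", "mild", "moderate", "severe", "very severe"]  # eye pain item

# Index of the lowest response counted as "symptom present" under each rule.
# primary:   somewhat/very much of a problem, or moderate and above
# inclusive: any positive report
# exclusive: very much of a problem, or severe and above
THRESHOLDS = {
    "primary": {"four": 2, "five": 2},
    "inclusive": {"four": 1, "five": 1},
    "exclusive": {"four": 3, "five": 3},
}


def eye_positive(response, scale, analysis="primary"):
    """Return True if one eye's ESQ response counts as symptom present."""
    options = FOUR_POINT if scale == "four" else FIVE_POINT
    return options.index(response) >= THRESHOLDS[analysis][scale]


def subject_positive(right, left, scale, analysis="primary"):
    """Subject-level rule: present if reported in at least one eye."""
    return (eye_positive(right, scale, analysis)
            or eye_positive(left, scale, analysis))


# Example: glare is "somewhat of a problem" in the left eye only.
print(subject_positive("no problem at all", "somewhat of a problem", "four"))
# Example: mild eye pain counts only under the inclusive rule.
print(subject_positive("mild", "none", "five"))
print(subject_positive("mild", "none", "five", analysis="inclusive"))
```

Keeping the thresholds in one table makes the two sensitivity analyses a matter of switching a single argument rather than rewriting the rule.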

Kappa coefficients (κ) were used to assess the level of agreement/concordance of symptom reporting between the ESQ and MR at the subject-level. McNemar’s test was used to evaluate discordance in symptom report on the ESQ compared to the MR. At the eye-level, the most severely reported symptom on the ESQ was identified and agreement of report was compared to the MR.
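As an illustration of these agreement statistics, the sketch below computes Cohen's kappa and McNemar's test from a 2×2 table, using subject-level counts reported in Table 2. It is written in Python rather than SAS, the function names are hypothetical, and the use of the uncorrected asymptotic chi-square form of McNemar's test is an assumption, since the variant used in the analysis is not stated.

```python
import math


def cohen_kappa(a, b, c, d):
    """Cohen's kappa for a 2x2 table.

    a: +ESQ/+MR, b: +ESQ/-MR, c: -ESQ/+MR, d: -ESQ/-MR.
    """
    n = a + b + c + d
    observed = (a + d) / n
    # Chance agreement from the row (ESQ) and column (MR) marginals.
    expected = ((a + b) * (a + c) + (c + d) * (b + d)) / n ** 2
    return (observed - expected) / (1 - expected)


def mcnemar_p(b, c):
    """Two-sided p-value, uncorrected asymptotic McNemar's test (1 df)."""
    if b + c == 0:
        return 1.0
    chi2 = (b - c) ** 2 / (b + c)
    # Survival function of a chi-square variable with 1 degree of freedom.
    return math.erfc(math.sqrt(chi2 / 2))


# Eye pain counts from Table 2: 34, 32, 11, 85.
kappa_eye_pain = cohen_kappa(34, 32, 11, 85)
# Blurry vision discordant pairs from Table 2: 25 (+ESQ/-MR), 29 (-ESQ/+MR).
p_blurry = mcnemar_p(25, 29)
print(round(kappa_eye_pain, 2), round(p_blurry, 3))
```

Under these assumptions, the eye pain kappa rounds to 0.42 and the blurry vision p-value to about 0.586, in line with the tabulated values.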

Factors associated with disagreement in symptom reporting between the ESQ and MR were investigated with logistic regression models. Due to low rates of positive documentation in the MR of some symptoms, only blurry vision, eye pain, and eye redness were investigated in greater depth. Models were aggregated to the subject-level to preserve reported symptom data that would otherwise be missing at the eye-level. Patients with missing eye laterality but positive symptom reporting were treated as positive documentation in the MR. Models were based on the subset of subjects with a positive symptom report on the ESQ. Disagreement was defined as a negative report or no documentation of a symptom in the MR (-MR) for patients who had positive self-report on the ESQ (+ESQ). Factors investigated for associations with the probability of disagreement included patient demographic factors, the clinical diagnosis of the worse eye, physician characteristics, and visit type. To account for multiple tests, p-values were adjusted by the Holm’s method.25 A p-value of < 0.05 was considered to be statistically significant and all hypothesis tests were two-sided. All statistical analyses were performed in SAS version 9.4 (SAS Institute, Cary, NC).
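For readers unfamiliar with Holm's method, a minimal Python sketch of the step-down adjustment follows. It is a generic illustration, not the study's SAS procedure; the function name is hypothetical and the p-values in the example are made up for demonstration.

```python
def holm_adjust(p_values):
    """Return Holm step-down adjusted p-values in the original order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical unadjusted p-values for three tests.
example = holm_adjust([0.01, 0.04, 0.03])
print([round(p, 3) for p in example])
```

In this example the adjusted values are approximately 0.03, 0.06, and 0.06: the largest raw p-value inherits the adjusted value of the test ranked just before it because of the monotonicity step.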

Results

A total of 162 subjects (324 eyes) were included in the analysis. Descriptive statistics of the sample are summarized in Table 1. Subjects were on average 56.6 ± 19.4 years old (range 18.4–94.0), 62% (101/162) were female, and 85% (135/159) were Caucasian. Eyes had an average logMAR visual acuity of 0.34 ± 0.64 (Snellen equivalent 20/44 ± 6.4 lines), with a range of −0.12 to +3.00 (Snellen equivalent 20/15 to hand motion).

Table 1.

Descriptive statistics of the study sample (324 eyes of 162 subjects)

| Subject-based Characteristics (n=162) | | | |
|---|---|---|---|
| Continuous Variable | Mean (SD) | Min, Max | Median |
| Age (years) | 56.6 (19.4) | 18.4, 94.0 | 60.7 |
| Categorical Variable | Frequency (%) | | |
| Female | 101 (62.3) | | |
| Race | | | |
| Caucasian | 135 (84.9) | | |
| African American | 11 (6.9) | | |
| Asian | 11 (6.9) | | |
| Other | 2 (1.3) | | |
| Visit type | | | |
| New visit | 51 (31.5) | | |
| Return visit (no new problems) | 91 (56.2) | | |
| New problem on return visit | 20 (12.4) | | |
| Eye-based Characteristics (n=324) | | | |
| Continuous Variable | Mean (SD) | Min, Max | Median |
| LogMAR VA | 0.34 (0.64) | −0.12, 3.00 | 0.18 |
| Snellen VA | 20/44 (6.4 lines) | 20/15, HM | 20/30 |

SD, standard deviation; VA, visual acuity; HM, hand motion

Symptom Reporting on the ESQ and in the MR

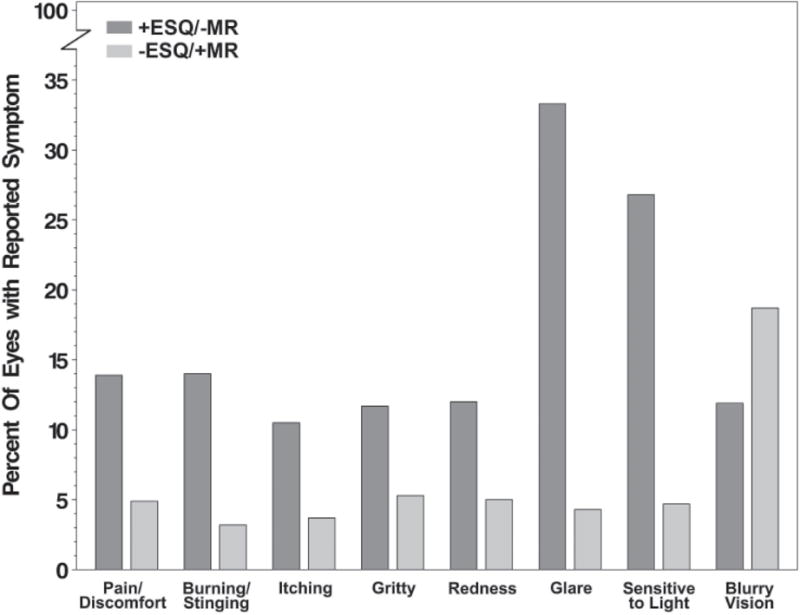

We examined the concordance and discordance of symptom report between methods and described the directionality of discordance at the eye-level (Figure 1, for full descriptive results, see eTable 2) and at the subject-level (Table 2; for full descriptive results, see eTable 3). The following results are at the subject level. Symptom presence was concordant (+ESQ, +MR) for blurry vision, glare, eye pain, and eye redness in 37.5%, 3.1%, 21.0%, and 14.2% of subjects, respectively. Symptom absence was concordant (-ESQ, -MR) for blurry vision, glare, eye pain, and eye redness in 28.8%, 48.8%, 52.5%, and 61.2% of subjects, respectively. Reporting blurry vision was discordant in 33.8% (n=54 of 160) of subjects. Of these discordant subjects, 46.3% had +ESQ/-MR. Reporting glare was discordant in 48.1% (n=78 of 162) of subjects. Of these discordant subjects, 91.0% had +ESQ/-MR. Reporting eye pain was discordant in 26.5% (n=43 of 162) of subjects. Of these discordant subjects, 74.4% had +ESQ/-MR. Reporting eye redness was discordant in 24.7% (n=40 of 162) of subjects. Of these discordant subjects, 80.0% had +ESQ/-MR.

Figure 1.

Discordance of symptomatic eyes on Eye Symptom Questionnaire (ESQ) or Medical Record (MR).

+ESQ/-MR = percent of symptoms positively reported (ESQ), but negatively documented in the MR (explicitly negative or not documented). -ESQ/+MR = percent of symptoms negatively reported on the ESQ, but positively documented in the MR.

Table 2.

Agreement between symptom report on a questionnaire compared to documentation in the medical record for the sample of 162 subjects

| | MR Symptom Documentation | | | | |
|---|---|---|---|---|---|
| ESQ Symptom Self-Report | Present [d], freq (%) | Absent [e], freq (%) | Kappa (95% CI) | McNemar’s Test P-value | Holm’s Adjusted P-value |
| Blurry Vision [a,b] | | | | | |
| Present | 60 (37.5) | 25 (15.6) | 0.32 (0.17,0.47) | 0.586 | 0.586 |
| Absent | 29 (18.1) | 46 (28.8) | | | |
| Glare [a] | | | | | |
| Present | 5 (3.1) | 71 (43.8) | −0.02 (−0.10,0.07) | <0.001 | <0.001 |
| Absent | 7 (4.3) | 79 (48.8) | | | |
| Eye Pain [c] | | | | | |
| Present | 34 (21.0) | 32 (19.8) | 0.42 (0.28,0.56) | 0.001 | 0.004 |
| Absent | 11 (6.8) | 85 (52.5) | | | |
| Eye Redness [a] | | | | | |
| Present | 23 (14.2) | 32 (19.8) | 0.38 (0.24,0.53) | <0.001 | <0.001 |
| Absent | 8 (4.9) | 99 (61.2) | | | |
| Burning [a,b] | | | | | |
| Present | 6 (3.7) | 37 (23.0) | 0.13 (−0.01,0.27) | <0.001 | <0.001 |
| Absent | 5 (3.1) | 113 (70.1) | | | |
| Itching [a] | | | | | |
| Present | 6 (3.7) | 26 (16.0) | 0.18 (0.01,0.36) | <0.001 | 0.002 |
| Absent | 6 (3.7) | 124 (76.6) | | | |
| Gritty [a] | | | | | |
| Present | 13 (8.0) | 27 (16.7) | 0.26 (0.09,0.43) | 0.016 | 0.033 |
| Absent | 12 (7.4) | 110 (68.0) | | | |
| Sensitive to Light [a] | | | | | |
| Present | 16 (9.9) | 56 (34.5) | 0.17 (0.05,0.28) | <0.001 | <0.001 |
| Absent | 6 (3.7) | 84 (51.8) | | | |

ESQ, Eye Symptom Questionnaire; MR, Medical Record; CI, Confidence Interval; freq, frequency

p <0.001 for all eight symptoms, McNemar’s test

[a] “Present” reported as “somewhat/very much of a problem” in at least one eye and “Absent” reported as “no problem at all/a little bit of a problem” in both eyes for the symptom

[b] Missing data was present for these symptoms, percentages based on: n=160 subjects for Blurry vision and Burning

[c] “Present” reported as “moderate/severe/very severe” in at least one eye and “Absent” reported as “none/mild” in both eyes for the symptom

[d] Symptoms were “Present” if documented in the medical record for the subject

[e] Symptoms were “Absent” if documented as no symptom in the MR or not documented in the MR

Symptom Agreement between the ESQ and MR

Kappa coefficients indicated poor to fair agreement between the ESQ and MR for symptom reporting (κ range: −0.02 to +0.42; Table 2). At the subject-level, positive reporting of symptoms on the ESQ with no documentation or a negative report in the MR was more prevalent than the converse for glare (+ESQ/-MR vs. -ESQ/+MR: 43.8% vs. 4.3%), eye pain (19.8% vs. 6.8%) and eye redness (19.8% vs. 4.9%), but not for blurry vision (15.6% vs. 18.1%) (Table 2). McNemar’s tests indicate imbalance in discordant symptom reporting with more discrepancy in the direction of positive report on the ESQ and negative documentation in the MR for all eye symptoms (Holm’s adjusted p≤0.03) except for blurry vision (p=0.59) (Table 2).

Sensitivity Analyses

For the “inclusive” sensitivity analysis, results were predictably more discordant between the ESQ and MR. Kappa values remained poor to fair (κ range: −0.04 to +0.26) and McNemar’s test results showed stronger discordance in the direction of +ESQ/-MR (Holm’s adjusted McNemar’s p-values all <0.0001). For the “exclusive” sensitivity analysis (only most severe symptom considered positive on the ESQ), agreement was poor (κ range: −0.05 to +0.36). Blurry vision was more frequently discordant as –ESQ/+MR (Holm’s adjusted McNemar’s p<0.001). Other symptoms were discordant as +ESQ/-MR for glare (p<0.002) and light sensitivity (p=0.012). There were no statistically significant discordant findings for symptoms of pain, redness, burning, itching, and gritty sensation.

The agreement between the most severely reported symptom on the ESQ and MR documentation of that symptom was also evaluated at the eye-level. In 108 eyes, one symptom was reported on the ESQ at a higher level of severity than all other symptoms. In these 108 eyes, 25 eyes (23.2%) also had a positive documentation of that symptom in the MR, 13 eyes (12.0%) had documentation in the MR but no eye designation, 62 eyes (57.4%) had no indication of the symptom in the MR, and 8 eyes (7.4%) had an explicit negative symptom report.

Some agreement between symptom reporting for all eight symptoms on the ESQ and MR was seen in 46.3% of subjects (n=75 of 162). Exact agreement occurred in 23.5% of subjects (n=38 of 162). When a patient reported three or more symptoms on the ESQ, the MR never had exact agreement on the symptoms (Table 3).

Table 3.

Descriptive statistics of agreement of the exact symptoms reported on a questionnaire and documented in the medical record (MR), at the subject-level, and stratified by the number of unique eye symptoms reported on the questionnaire.

| | Number of symptoms on ESQ | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Symptom Agreement with MR | Any (n=162) | 0 (n=37) | 1 (n=25) | 2 (n=22) | 3 (n=24) | 4 (n=10) | 5 (n=8) | 6 (n=13) | 7 (n=14) | 8 (n=9) |
| Exact | 38 (23.5) | 21 (13.0) | 13 (8.0) | 4 (2.5) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) |
| Some | 75 (46.3) | 0 (0.0) | 0 (0.0) | 8 (4.9) | 18 (11.1) | 10 (6.2) | 6 (3.7) | 13 (8.0) | 13 (8.0) | 7 (4.3) |
| None | 49 (30.2) | 16 (9.9) | 12 (7.4) | 10 (6.2) | 6 (3.7) | 0 (0.0) | 2 (1.2) | 0 (0.0) | 1 (0.6) | 2 (1.2) |

Data are displayed as: number of subjects (percent of 162 subjects)

ESQ, Eye Symptom Questionnaire; MR, medical record
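One way the exact, some, and none categories in Table 3 could be operationalized is sketched below in Python. The definitions are an assumption for illustration: "exact" when the set of symptoms on the ESQ equals the set documented in the MR (including both being empty), "some" when the sets overlap without being identical, and "none" otherwise. The function name is hypothetical and the coding rules actually used may differ.

```python
def classify_agreement(esq_symptoms, mr_symptoms):
    """Classify subject-level agreement between two collections of symptoms."""
    esq = set(esq_symptoms)
    mr = set(mr_symptoms)
    if esq == mr:
        # Identical symptom sets, including the case where neither lists any.
        return "exact"
    if esq & mr:
        # At least one symptom in common, but the sets differ.
        return "some"
    return "none"


# Hypothetical subjects.
print(classify_agreement([], []))
print(classify_agreement(["glare"], []))
print(classify_agreement(["glare", "eye pain", "itching"], ["eye pain"]))
```

Under this definition, exact agreement requires every one of a subject's ESQ symptoms to appear in the MR with nothing extra, which becomes harder to satisfy as the number of reported symptoms grows.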

Factors associated with ESQ and MR Disagreement

Logistic regression models predicting the probability of -MR in the subset of subjects with +ESQ for the symptoms of blurry vision, eye pain, and eye redness are summarized in eTable 4. No significant associations of age, gender, diagnosis, physician years in practice, clinic volume, or presence of a scribe were found with any of the three symptom outcomes (all p>0.05). Visit type showed a statistically significant association with disagreement: patients at return visits who positively reported symptoms on the ESQ had increased odds of the symptom not being documented in the MR, compared to new visits. After adjustment for multiple testing, this result remained significant only for blurry vision (odds ratio=5.25, 95% confidence interval=1.69–16.30, p=0.045). Due to small sample sizes for -ESQ/+MR, models of this disagreement could not be investigated.
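As a rough consistency check on the reported odds ratio, the Python sketch below back-calculates a standard error and an unadjusted p-value from the odds ratio and its confidence interval. It assumes the interval is a 95% Wald interval that is symmetric on the log-odds scale, which is not stated in the text; the function name is hypothetical. Because the reported p=0.045 is Holm-adjusted, it is expected to be larger than the unadjusted value obtained here.

```python
import math


def wald_from_ci(odds_ratio, lower, upper, z_crit=1.959964):
    """Recover the log-odds standard error, z statistic, and two-sided p-value.

    Assumes a Wald interval: log(OR) +/- z_crit * SE.
    """
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(odds_ratio) / se
    # Two-sided p-value from the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2))
    return se, z, p


# Reported values for blurry vision: OR 5.25, 95% CI 1.69 to 16.30.
se, z, p = wald_from_ci(5.25, 1.69, 16.30)
# For a Wald interval, the geometric mean of the limits equals the odds ratio.
midpoint = math.sqrt(1.69 * 16.30)
print(round(se, 3), round(z, 2), round(p, 4), round(midpoint, 2))
```

The geometric mean of the interval limits is close to 5.25, which is consistent with a Wald interval, and the implied unadjusted p-value is on the order of 0.004.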

Discussion

The original intent of the MR was not complete documentation of the clinical encounter, but physician note-taking on the patient interaction. The electronic MR was implemented to integrate many sources of medical information. We demonstrated that there is substantial discrepancy between the symptoms reported by patients on an ESQ and those documented in the MR, as has been shown in previous studies in other specialties.26–29 This discrepancy can occur in two directions: positive reporting by self-report with negative or no documentation in the MR (+ESQ/-MR), or negative reporting by self-report and positive documentation in the MR (-ESQ/+MR).

We found significant imbalance in symptom reporting with more symptoms reported through self-report (ESQ) than through the MR, except for blurry vision. Prior studies have also found these types of differences, and these disconnects have been found in paper or electronic MRs.12, 27–29 Exact agreement between self-report and MR documentation dropped to zero when patients reported three or more symptoms on the ESQ. When we adjusted our categorizations through sensitivity analyses, discordance in symptom report understandably shifted as well. Discordance in symptom reporting could be due to differences in terminology of symptoms between the patient and provider or errors of omission, such as forgetting or choosing not to report/record a symptom.26, 30 Perhaps a more bothersome symptom is the focus of the clinical encounter and other less bothersome symptoms (e.g. glare) are not discussed (or documented). However, we show that even for the “exclusive” sensitivity analysis, the ESQ and the MR are inconsistently documented. We cannot assume that self-report is more accurate than the MR just because more symptoms are reported.

Quality of documentation is critical not only for patient care but also for quality measurements and clinical studies.19 In ophthalmology, the Intelligent Research in Sight (IRIS) registry can be used to evaluate patient-level data to improve patient outcomes and practice performance, but relies on objectively collected data, such as visual acuity and billing codes.31, 32 Inclusion of psychometric data, such as patient-reported outcomes (PROs), could be a future direction for the IRIS registry. We suggest that PROs, such as those provided by the NIH Toolbox or PROMIS (Patient-Reported Outcome Measurement Information System), could be collected as standardized, self-report templates and uploaded into the electronic MR.23, 33 Our study results suggest that integrating self-report would capture more symptoms and would standardize collection across patients, enhancing the fidelity of the data. With patient self-reporting, the patient-physician interaction could shift from reporting symptoms to focusing on symptom severity and causality. Additionally, PROs are an important way to document that treatments lead to improved quality of life, and the FDA now recommends their use in clinical trials.34

We explored factors that may be related to the discordance between an ESQ and the MR. Patient factors (age, sex), physician factors (years in practice, workload, and use of a medical scribe), and presence of urgent or non-urgent anterior segment eye diseases were not significantly associated with reporting disagreements. Visit type was associated with disagreement for one symptom, blurry vision. When patients reported blurry vision on the ESQ, there was an increased probability that the physician would not document it in the MR during return visits when compared to new visits. As noted by other studies, inconsistency may instead be due to time constraints, system-related errors, and communication errors.15

There are limitations to our study. The study was done at a single center using a specific type of electronic MR, which limits generalizability. We could not assess the influence of any specific minority group on discrepancies in symptom reporting due to low representation of minorities in our sample. We did not use the entire NIH Toolbox survey, which may alter the survey’s validity. Recall bias can occur in which the patient could remember symptoms when prompted by a survey but not during the clinical encounter. A symptom was considered a ‘negative report’ when the symptom was not documented in the MR. This method is not a perfect reflection of the clinical encounter (even though it serves as such for medico-legal purposes). Therefore, we report inconsistencies and not sensitivities and specificities. In the future, two independent classifiers with a method of adjudication could be used to code MR documentation. The coding was performed independently of, and without knowledge of, the ESQ results.

This study identifies a key challenge for an electronic MR system – the quality of the documentation. We found significant inconsistencies between symptom self-report on an ESQ and documentation in the MR with a bias toward reporting more symptoms via self-report. If the MR lacks relevant symptom information, it has implications for patient care including communication errors and poor representation of the patient’s complaints. The inconsistencies imply caution for the use of MR data in research studies. Future work should further examine why information is inconsistently reported. Perhaps the implementation of self-report questionnaires for symptoms in the clinical setting will mitigate the limitations of the MR and ideally improve the quality of documentation.

Acknowledgments

Financial Support: The funding organizations had no role in design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; and decision to submit the manuscript for publication. NGV: National Institutes of Health, Bethesda, MD; TL1 Training Grant 5TL1TR000435-09. PANC: National Eye Institute, Bethesda, MD; K23 Mentored Clinical Scientist Award K23EY025320; Research to Prevent Blindness Career Development Award; DCM: Kellogg Foundation; PPL: Kellogg Foundation; Research to Prevent Blindness. MAW: National Eye Institute, Bethesda, MD; K23 Mentored Clinical Scientist Award K23EY023596.

Abbreviations

- MR: Medical Record
- ESQ: Eye Symptom Questionnaire
- PROs: Patient-Reported Outcomes
- NIH: National Institutes of Health
- PROMIS: Patient-Reported Outcome Measurement Information System

Footnotes

Conflicts of Interest: Centers for Disease Control (consulting, PPL), Blue Health Intelligence (consulting, PANC). These are all outside the submitted work. No conflicting relationship exists for the other authors.

Author contributions: N.V. researched the data, wrote the manuscript, and reviewed/edited the manuscript. L.N. researched the data, wrote the manuscript, and reviewed/edited the manuscript. D.M. contributed to the design and discussion of the study, and reviewed/edited the manuscript. P.L. contributed to the discussion of the study and reviewed/edited the manuscript. P.N. contributed to the discussion of the study and reviewed/edited the manuscript. M.W. researched the data, wrote the manuscript, and reviewed/edited the manuscript.

Maria A. Woodward had full access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

Leslie M. Niziol MS conducted and is responsible for the data analysis.

This article contains additional online-only material. The following should appear online-only: eFigure 1, eTable 1, eTable 2, eTable 3, eTable 4.

References

- 1.Raymond L, Pare G, Ortiz de Guinea A, et al. Improving performance in medical practices through the extended use of electronic medical record systems: a survey of Canadian family physicians. BMC Med Inform Decis Mak. 2015;15:27. doi: 10.1186/s12911-015-0152-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Centers for Disease Control and Prevention (CDC). National Center for Health Statistics (NCHS) Percentage of office-based physicians using any electronic health records or electronic medical records, physicians that have a basic system, and physicians that have a certified system, by state: United States, 2014. 2015 Available at: https://www.cdc.gov/nchs/data/ahcd/nehrs/2015_web_tables.pdf. Accessed November 8, 2016.

- 3.Centers for Disease Control and Prevention (CDC). National Center for Health Statistics (NCHS) Use and Characteristics of Electronic Health Record Systems Among Office-based Physician Practices: United States, 2001–2013. 2014 Available at: http://www.cdc.gov/nchs/products/databriefs/db143.htm. Accessed November 8, 2016.

- 4.Institute of Medicine. Key capabilities of an electronic health record system. 2003 Available at http://www.nationalacademies.org/hmd/Reports/2003/Key-Capabilities-of-an-Electronic-Health-Record-System.aspx. Accessed November 8, 2016. [PubMed]

- 5.DesRoches C, Campbell E, Rao S, et al. Electronic health records in ambulatory care–a national survey of physicians. N Engl J Med. 2008;359(1):50–60. doi: 10.1056/NEJMsa0802005. [DOI] [PubMed] [Google Scholar]

- 6.Chiang M, Boland M, Margolis J, et al. Adoption and perceptions of electronic health record systems by ophthalmologists: an American Academy of Ophthalmology survey. Ophthalmology. 2008;115(9):1591–7. doi: 10.1016/j.ophtha.2008.03.024. [DOI] [PubMed] [Google Scholar]

- 7.Street RL, Liu L, Farber NJ, et al. Provider interaction with the electronic health record: the effects on patient-centered communication in medical encounters. Patient Educ Couns. 2014;96(3):315–9. doi: 10.1016/j.pec.2014.05.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.St Sauver J, Hagen P, Cha S, et al. Agreement between patient reports of cardiovascular disease and patient medical records. Mayo Clin Proc. 2005;80(2):203–210. doi: 10.4065/80.2.203. [DOI] [PubMed] [Google Scholar]

- 9.Fromme E, Eilers K, Mori M, Hsieh Y, Beer T. How accurate is clinician reporting of chemotherapy adverse effects? A comparison with patient-reported symptoms from the Quality-of-Life Questionnaire C30. J Clin Oncol. 2004;22(17):3485–90. doi: 10.1200/JCO.2004.03.025. [DOI] [PubMed] [Google Scholar]

- 10.Beckles G, Williamson D, Brown A, et al. Agreement between self-reports and medical records was only fair in a cross-sectional study of performance of annual eye examinations among adults with diabetes in managed care. Med Care. 2007;45(9):876–83. doi: 10.1097/MLR.0b013e3180ca95fa. [DOI] [PubMed] [Google Scholar]

- 11.Corser W, Sikorskii A, Olomu A, Stommel M, Proden C, Holmes-Rovner M. Concordance between comorbidity data from patient self-report interviews and medical record documentation. BMC Health Serv Res. 2008;8:85. doi: 10.1186/1472-6963-8-85. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Echaiz J, Cass C, Henderson J, Babcock H, Marschall J. Low correlation between self-report and medical record documentation of urinary tract infection symptoms. Am J Infect Control. 2015 Jun;43(9):983–6. doi: 10.1016/j.ajic.2015.04.208. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Yadav S, Kazanji N, K CN, et al. Comparison of accuracy of physical examination findings in initial progress notes between paper charts and a newly implemented electronic health record. J Am Med Inform Assoc. 2016 doi: 10.1093/jamia/ocw067. epub ahead of print. Accessed September 15, 2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Shachak A, Hadas-Dayagi M, Ziv A, Reis S. Primary care physicians’ use of an electronic medical record system: a cognitive task analysis. J Gen Intern Med. 2009;24(3):341–8. doi: 10.1007/s11606-008-0892-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Margalit R, Roter D, Dunevant M, Larson S, Reis S. Electronic medical record use and physician-patient communication: an observational study of Israeli primary care encounters. Patient Educ Couns. 2006;61(1):134–41. doi: 10.1016/j.pec.2005.03.004.

- 16.Health Affairs Blog. Physicians’ Concerns About Electronic Health Records: Implications And Steps Towards Solutions. 2014 Available at http://healthaffairs.org/blog/2014/03/11/physicians-concerns-about-electronic-health-records-implications-and-steps-towards-solutions/. Accessed November 8, 2016.

- 17.Mamykina L, Vawdrey D, Stetson P, Zheng K, Hripcsak G. Clinical documentation: composition or synthesis? J Am Med Inform Assoc. 2012;19(6):1025–31. doi: 10.1136/amiajnl-2012-000901.

- 18.Bowman S. Impact of Electronic Health Record Systems on Information Integrity: Quality and Safety Implications. Perspect Health Inf Manag. 2013 Oct;10:1c. serial online. Available at: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3797550/. Accessed November 8, 2016.

- 19.Weiskopf N, Weng C. Methods and dimensions of electronic health record data quality assessment: enabling reuse for clinical research. J Am Med Inform Assoc. 2013;20(1):144–51. doi: 10.1136/amiajnl-2011-000681.

- 20.Raghupathi W, Raghupathi V. Big data analytics in healthcare: promise and potential. Health Inf Sci Syst. 2014;2:3. doi: 10.1186/2047-2501-2-3. Available at: https://hissjournal.biomedcentral.com/articles/10.1186/2047-2501-2-3. Accessed November 8, 2016.

- 21.Ross M, Wei W, Ohno-Machado L. “Big data” and the electronic health record. Yearb Med Inform. 2014;9:97–104. doi: 10.15265/IY-2014-0003.

- 22.Mangione C, Lee P, Pitts J, Gutierrez P, Berry S, Hays R. Psychometric properties of the National Eye Institute Visual Function Questionnaire (NEI-VFQ). NEI-VFQ Field Test Investigators. Arch Ophthalmol. 1998;116(11):1496–504. doi: 10.1001/archopht.116.11.1496.

- 23.Paz S, Slotkin J, McKean-Cowdin R, et al. Development of a vision-targeted health-related quality of life item measure. Qual Life Res. 2013;22(9):2477–87. doi: 10.1007/s11136-013-0365-1.

- 24.Schiffman R, Christianson M, Jacobsen G, Hirsch J, Reis B. Reliability and validity of the Ocular Surface Disease Index. Arch Ophthalmol. 2000;118(5):615–21. doi: 10.1001/archopht.118.5.615.

- 25.Holm S. A Simple Sequentially Rejective Multiple Test Procedure. Scandinavian Journal of Statistics. 1979;6(2):65–70.

- 26.Fries JF. Alternatives in medical record formats. Med Care. 1974;12(10):871–81. doi: 10.1097/00005650-197410000-00006.

- 27.Barbara A, Loeb M, Dolovich L, Brazil K, Russell M. Agreement between self-report and medical records on signs and symptoms of respiratory illness. Prim Care Respir J. 2012;21(2):145–52. doi: 10.4104/pcrj.2011.00098.

- 28.Pakhomov S, Jacobsen S, Chute C, Roger V. Agreement between Patient-reported Symptoms and their Documentation in the Medical Record. Am J Manag Care. 2008;14(8):530–9.

- 29.Stengel D, Bauwens K, Walter M, et al. Comparison of handheld computer-assisted and conventional paper chart documentation of medical records. A randomized, controlled trial. J Bone Joint Surg Am. 2004;86-A(3):553–60. doi: 10.2106/00004623-200403000-00014.

- 30.Chan P, Thyparampil PJ, Chiang MF. Accuracy and speed of electronic health record versus paper-based ophthalmic documentation strategies. Am J Ophthalmol. 2013;156(1):165–72. doi: 10.1016/j.ajo.2013.02.010.

- 31.Sommer A. The Utility of “Big Data” and Social Media for Anticipating, Preventing, and Treating Disease. JAMA Ophthalmol. 2016;134(9):1030–1. doi: 10.1001/jamaophthalmol.2016.2287.

- 32.Parke II D, Lum F, Rich W. The IRIS® Registry: Purpose and perspectives. Ophthalmologe. 2016 doi: 10.1007/s00347-016-0300-2. epub ahead of print. Accessed September 15, 2016.

- 33.Cella D, Yount S, Rothrock N, et al. The Patient-Reported Outcomes Measurement Information System (PROMIS). Progress of an NIH Roadmap Cooperative Group During its First Two Years. Med Care. 2007;45(5 Suppl 1):S3–S11. doi: 10.1097/01.mlr.0000258615.42478.55.

- 34.U.S. Food & Drug Administration. The Patient Reported Outcomes (PRO) Consortium. 2010 Available at: http://www.fda.gov/AboutFDA/PartnershipsCollaborations/PublicPrivatePartnershipProgram/ucm231129.htm. Accessed November 8, 2016.

Associated Data
