Abstract

Human information processing is limited by attentional resources. That is, via attentional mechanisms, humans select a limited amount of sensory input to process while other sensory input is neglected. In multisensory research, a matter of ongoing debate is whether there are distinct pools of attentional resources for each sensory modality or whether attentional resources are shared across sensory modalities. Recent studies have suggested that attentional resource allocation across sensory modalities is in part task-dependent. That is, the recruitment of attentional resources across the sensory modalities depends on whether processing involves object-based attention (e.g., the discrimination of stimulus attributes) or spatial attention (e.g., the localization of stimuli). In the present paper, we review findings in multisensory research related to this view. For the visual and auditory sensory modalities, findings suggest that distinct resources are recruited when humans perform object-based attention tasks, whereas for the visual and tactile sensory modalities, partially shared resources are recruited. If object-based attention tasks are time-critical, shared resources are recruited across the sensory modalities. When humans perform an object-based attention task in combination with a spatial attention task, partly shared resources are recruited across the sensory modalities as well. Conversely, for spatial attention tasks, attentional processing does consistently involve shared attentional resources for the sensory modalities. Generally, findings suggest that the attentional system flexibly allocates attentional resources depending on task demands. We propose that such flexibility reflects a large-scale optimization strategy that minimizes the brain’s costly resource expenditures and simultaneously maximizes capability to process currently relevant information.

Keywords: attentional resources, load theory, multiple object tracking, attentional blink, multisensory

Introduction

In everyday life, humans often perform several tasks at the same time seemingly without any difficulty. Yet, in some instances, they are overwhelmed by task demands, suggesting that there are limits to human performance. Several studies have shown that the amount of information that humans can process is severely limited (for a review, see Marois & Ivanoff, 2005). That is, via attentional mechanisms, humans can only selectively attend to a limited amount of information in the environment while other information in the environment is neglected. At the level of attentional selection, a further distinction in attention research is that between object-based attention and spatial attention (Duncan, 1984; Fink, Dolan, Halligan, Marshall, & Frith, 1997; Serences, Schwarzbach, Courtney, Golay, & Yantis, 2004; Soto & Blanco, 2004). Object-based attention refers to selectively attending to features of an object (e.g., the color or shape), whereas spatial attention refers to attending to locations in space.

Several researchers characterize limitations in attentional selection as a pool of attentional resources (Kahneman, 1973; Lavie, 2005; Wickens, 2002) from which resources can be allocated to current tasks until the pool is exhausted. For instance, allocating attentional resources to a task of a low difficulty allows allocation of spare attentional resources to another task that can be performed at the same time. However, performing a highly difficult task may already exhaust attentional resources and does not allow allocating attentional resources to another task.

Limits in attentional resources have been demonstrated in each sensory modality (Alvarez & Franconeri, 2007; Hillstrom, Shapiro, & Spence, 2002; Potter, Chun, Banks, & Muckenhoupt, 1998; Raymond, Shapiro, & Arnell, 1992; Soto-Faraco & Spence, 2002; Tremblay, Vachon, & Jones, 2005; Wahn, Ferris, Hairston, & König, 2016). Yet, it is still a matter of debate whether there are more attentional resources available when attending to information in several sensory modalities in comparison to attending to information only in one sensory modality. That is, it is unclear whether attentional resources available to one sensory modality are distinct from the attentional resources available to another sensory modality or whether there is one shared pool of attentional resources for all sensory modalities.

In recent years, researchers proposed that the recruitment of attentional resources across sensory modalities is influenced by the type of task being performed (Chan & Newell, 2008; Wahn & König, 2015a, 2015b, 2016). Analogously to the functional organization of the brain into where and what pathways for the sensory modalities (Livingstone & Hubel, 1988; Mishkin & Ungerleider, 1982; Ungerleider & Pessoa, 2008), researchers have suggested that the allocation of attentional resources across sensory modalities is influenced by whether spatial and/or object-based attention is required (Chan & Newell, 2008; Wahn & König, 2015a, 2015b, 2016). Specifically, the recruitment of shared or distinct attentional resources for the sensory modalities is influenced by whether tasks involve object-based attention, spatial attention, or both.

In the present review, we will survey the literature to investigate to what extent the allocation of attentional resources is dependent on these task requirements. For this purpose, we will first review studies that investigated attentional resources across sensory modalities using tasks that require object-based attention. As object-based attention tasks, we consider all tasks in which participants need to discriminate features of a stimulus (e.g., a target from a distractor in a visual search task) or compare stimulus attributes for two stimuli (e.g., which of the two tones has a higher pitch in a pitch discrimination task). Then, we will review studies that used tasks primarily requiring spatial attention. For instance, spatial attention tasks can involve tracking the position of moving stimuli (e.g., targets in a multiple object tracking task) or the localization/detection of spatial stimuli in a localization task (e.g., localizing whether a stimulus appeared in the upper or lower half of the screen). Lastly, there are studies in which participants performed these two task types in combination (e.g., an object-based attention task is performed in the auditory modality while a spatial attention task is performed in the visual modality). After we review the findings for each task type separately, we will devote a section to the combination of these two task types. Moreover, in each section, behavioral findings are complemented with neurophysiological findings, and we provide a summary of findings at the end. At the end of the review, we will propose a more general conclusion of all reviewed studies and discuss possible future directions for multisensory research.

As a point of note, researchers investigating attentional resources within and across sensory modalities tend to assume that information from several sensory modalities is processed independently or competes for a common pool of attentional resources in the brain. However, information from multiple sensory modalities is rarely processed in isolation in the brain (Ghazanfar & Schroeder, 2006; Murray et al., 2016; Stein et al., 2010). That is, depending on how sensory information from multiple sensory modalities is received (i.e., whether the sensory input coincides in space and/or time), it is integrated into a unitary percept, resulting in an enhanced perceptual sensitivity (e.g., stimulus features can be differentiated more precisely and more reliably; Ernst & Banks, 2002; Meredith & Stein, 1983; Stein & Stanford, 2008; Stevenson et al., 2014). Apart from the process of multisensory integration, other related crossmodal interactions have been systematically investigated, such as crossmodal correspondences (for recent reviews, see Spence, 2010a; Spence & Deroy, 2013), crossmodal congruency effects (Doehrmann & Naumer, 2008; Spence, Pavani, & Driver, 2004), Bayesian alternation accounts (e.g., Goeke, Planera, Finger, & König, 2016), and sensory augmentation (e.g., König et al., 2016). In the present review, however, we will focus on studies that did not systematically investigate such multisensory processes. Moreover, the current review does not focus on the relation between attentional processes and multisensory integration (for the interested reader, we refer to recent reviews: Navarra, Alsius, Soto-Faraco, & Spence, 2010; Talsma, 2015; Talsma, Senkowski, Soto-Faraco, & Woldorff, 2010; Tang, Wu, & Shen, 2016). In addition, we do not focus on factors that influence multisensory processing related to memory and the current goals (ten Oever et al., 2016; van Atteveldt, Murray, Thut, & Schroeder, 2014). 
In sum, we only review studies in which information from multiple sensory modalities is assumed either to be processed independently or to compete for a common pool of attentional resources.

In addition, given that the goal of this review is to investigate task dependency in attentional resource allocation across sensory modalities, we confined the selection of reviewed studies mostly to dual-task designs. Dual-task designs allow for the systematic investigation of task dependency in attentional resource allocation because the types of tasks that are performed in combination can be systematically changed. In a dual-task design, participants perform two tasks either separately (single-task condition) or at the same time (dual-task condition). The rationale is that when two tasks rely on shared attentional resources, performing the two tasks at the same time should lead to a performance decrease relative to performing each task alone. Conversely, if attentional resources are distinct, then performing the two tasks at the same time should not lead to a performance decrease relative to performing them alone. When addressing the question of shared or distinct attentional resources across the sensory modalities, the sensory modalities in which the dual-task condition is performed are varied. In particular, two tasks are performed either within the same sensory modality or in separate sensory modalities. With regard to these two types of dual-task conditions, it is assumed that two tasks performed within the same sensory modality should always compete for attentional resources, whereas two tasks performed in separate sensory modalities may, may not, or may only partially compete for attentional resources. In particular, performing two tasks in separate sensory modalities causes no dual-task interference (i.e., no performance decrease in the dual-task condition relative to single-task conditions) if they rely on entirely distinct attentional resources. If they rely partially on shared resources, dual-task interference occurs. However, this interference is lower when tasks are performed in separate sensory modalities compared to the same sensory modality. 
If the interference between tasks is equal regardless of whether tasks are performed within the same or separate sensory modalities, then attentional resources are fully shared between the two sensory modalities.
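The inferential logic of the dual-task design described above can be sketched in a few lines of Python. This is an illustration only, not an analysis procedure taken from any of the reviewed studies: the function names, the use of proportion correct as the performance measure, and the tolerance `tol` are assumptions made for the example. An actual study would compare the interference scores with statistical tests rather than a fixed tolerance.

```python
def interference(single, dual):
    """Dual-task interference: performance drop from the single- to the dual-task condition."""
    return single - dual


def classify_resources(within, across, tol=0.02):
    """Classify resource sharing from two interference scores.

    within: interference when both tasks are performed in the same sensory modality
    across: interference when the tasks are performed in separate sensory modalities
    tol:    hypothetical tolerance for treating two values as equal
            (a stand-in for a proper statistical comparison)
    """
    if across <= tol:
        return "distinct"            # no interference across modalities
    if abs(within - across) <= tol:
        return "fully shared"        # equal interference within and across
    if across < within:
        return "partially shared"    # some interference, but less than within
    return "undetermined"            # more interference across than within


# Made-up proportions correct, for illustration only
within = interference(single=0.90, dual=0.70)
across = interference(single=0.90, dual=0.80)
print(classify_resources(within, across))
```

The sketch presupposes, as the design itself does, that two tasks performed within the same sensory modality always compete for attentional resources, so that the within-modality interference serves as the reference value.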

Object-Based Attention Tasks

Dual-Task Designs

With regard to object-based attention tasks and the question of whether there are distinct or shared attentional resources across the sensory modalities, a phenomenon that has been extensively investigated in a dual-task design is the attentional blink (AB). In studies investigating the AB, a method known as rapid serial visual presentation (RSVP) is used, in which stimuli (e.g., letters) are presented at a rate of 6-20 items per second (Shapiro, Raymond, & Arnell, 2009). While viewing the RSVP, the participant is required to perform two object-based attention tasks in close temporal succession. In particular, participants are required to identify the occurrence of two target stimuli among distractor stimuli in the RSVP. For instance, one task could be to identify a white target letter in a stream of black letters, while the secondary task is to identify the target letter X in a stream of other letters. The time between target presentations within the RSVP is systematically varied between 100 and 800 ms. The basic finding is that the ability of participants to identify the second target decreases considerably if it is presented at a lag of between 100 and 500 ms (Potter et al., 1998; Raymond et al., 1992; Shapiro et al., 2009). This poor performance is explained in terms of insufficient attentional resources for attending to both targets and is referred to as the AB. Notably, the AB does not occur for lags of less than 100 ms, a phenomenon referred to as lag-1 sparing (Hommel & Akyürek, 2005; Potter et al., 1998; Visser, Bischof, & Di Lollo, 1999). In a meta-analysis (Visser et al., 1999), the researchers suggested that lag-1 sparing only occurs if targets are presented at the same spatial location and are presented within a temporal integration window (i.e., stimulus onset asynchronies are shorter than 100 ms; Potter et al., 1998).
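To make the structure of such a trial concrete, the following Python sketch builds one two-target RSVP stream of the kind described above (a white target letter followed by the target letter X among black distractor letters). It is a simplified illustration under stated assumptions: the function name `make_rsvp_trial`, the stream length, the presentation rate of 10 items per second, and the dictionary layout are hypothetical choices and are not taken from any particular study.

```python
import random
import string


def make_rsvp_trial(rate_hz, lag_items, stream_len=20, seed=0):
    """Build one two-target RSVP trial.

    rate_hz:   presentation rate in items per second (assumed constant)
    lag_items: number of stream positions between the first target (T1)
               and the second target (T2); 1 means T2 directly follows T1
    """
    rng = random.Random(seed)
    # Distractors are letters other than X, so X occurs only as T2
    letters = [c for c in string.ascii_uppercase if c != "X"]
    stream = [rng.choice(letters) for _ in range(stream_len)]
    colors = ["black"] * stream_len

    # Place T1 so that T2 still fits into the stream
    t1_pos = rng.randrange(2, stream_len - lag_items)
    t2_pos = t1_pos + lag_items
    colors[t1_pos] = "white"   # T1: the only white letter
    stream[t2_pos] = "X"       # T2: the letter X

    # Time between target onsets in milliseconds
    soa_ms = lag_items * 1000.0 / rate_hz
    return {"stream": stream, "colors": colors,
            "t1_pos": t1_pos, "t2_pos": t2_pos, "soa_ms": soa_ms}


# At 10 items per second, lags of 1 to 8 items span 100 to 800 ms
for lag in (1, 3, 8):
    trial = make_rsvp_trial(rate_hz=10, lag_items=lag)
    print(lag, trial["soa_ms"], "".join(trial["stream"]))
```

Plotting the proportion of correctly identified second targets against `soa_ms` across many such trials would yield the lag-dependent performance curve on which the AB is defined.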

Researchers have predominantly studied the AB within a single sensory modality and have found an AB for the auditory (Soto-Faraco & Spence, 2002; Tremblay et al., 2005), visual (Potter et al., 1998; Raymond et al., 1992), and tactile (Hillstrom et al., 2002) modalities. In order to investigate whether the AB also occurs across sensory modalities, researchers modified the original RSVP task by presenting both targets not within the same sensory modality but in different sensory modalities. With this modification, researchers no longer found an AB when targets were provided via the auditory and visual modality, supporting the existence of separate attentional resources (Duncan, Martens, & Ward, 1997; Hein, Parr, & Duncan, 2006; Potter et al., 1998; Soto-Faraco & Spence, 2002; Van der Burg, Olivers, Bronkhorst, Koelewijn, & Theeuwes, 2007). However, these findings are not undisputed, and other researchers found that an AB still exists when presenting visual and auditory targets (Arnell & Jenkins, 2004; Arnell & Larson, 2002; Jolicoeur, 1999). These apparently conflicting findings need not necessarily be evidence for the existence of a crossmodal AB but may instead be explained by different factors. Soto-Faraco and Spence (2002) noted that interference between sensory modalities was predominantly found in studies that required a switch in response mappings from one task in one sensory modality to a task in another sensory modality. Several researchers (Haroush, Deouell, & Hochstein, 2011; Potter et al., 1998; Soto-Faraco & Spence, 2002) argued that this switch in response mappings caused the interference, rather than the limitations of having shared attentional resources. Furthermore, in some studies, the two targets were presented in different spatial locations, resulting in additional costs due to the need to shift attention between locations. These additional costs occur independently of the involved sensory modalities (Soto-Faraco & Spence, 2002). 
What is more, blocking the experiment (i.e., investigating either a unisensory or a crossmodal AB across several trials) instead of switching conditions on every trial could have resulted in undesired strategic effects. After controlling for these factors, Soto-Faraco and Spence (2002) found no crossmodal AB for vision and audition, suggesting distinct resources for the visual and auditory modalities. It should be noted, however, that a crossmodal AB was indeed found for the visual and tactile modalities (Dell’Acqua, Turatto, & Jolicoeur, 2001; Soto-Faraco et al., 2002), indicating that attentional resources are shared for these two sensory modalities. Yet, in these studies, no unisensory ABs were measured against which the magnitude of the crossmodal AB could be compared. Therefore, it is not yet clear whether attentional resources are completely shared or only partly shared for the visual and tactile sensory modalities.

Another closely related phenomenon for which researchers investigated attentional resources across sensory modalities is the psychological refractory period (PRP; Pashler, 1994). In studies similar to those investigating the AB, two targets were presented with a variable delay between them. Participants were required to perform two object-based attention tasks in close temporal succession. However, the major difference was that instead of only having to identify the two presented targets, the identification was now speeded, meaning that participants needed to classify the targets as quickly as possible. The general finding was that participants were slower to classify the second target the closer in time it was presented to the first target (Pashler, 1994). Such an effect was also consistently found when the two targets were presented in separate sensory modalities, and potential neural substrates of such shared processing resources were found (Dux, Ivanoff, Asplund, & Marois, 2006; Pashler, 1994; Sigman & Dehaene, 2008), suggesting that attentional resources required at the level of response selection for speeded tasks are shared across sensory modalities (Hunt & Kingstone, 2004; Marois & Ivanoff, 2005). In summary, once humans are engaged in time-critical tasks, the first of two sequentially presented object-based attention tasks is prioritized regardless of the sensory modalities in which the tasks are carried out.

Researchers have also investigated attentional processing across the sensory modalities with several other paradigms using object-based attention in dual-task designs. For instance, in a study by Larsen, McIlhagga, Baert, and Bundesen (2003), participants were simultaneously presented with two different letters, one of which was spoken and the other visual. Participants were equally good at reporting the letters when they had to report either the spoken or the visual letters, or both, suggesting that attentional resources are distinct for the visual and auditory sensory modalities when two object-based attention tasks are performed at the same time. In another study (Alais, Morrone, & Burr, 2006), participants simultaneously performed two object-based attention tasks (e.g., a pitch discrimination task and a contrast discrimination task) in separate conditions: They performed either two visual tasks, two auditory tasks, or a visual and an auditory discrimination task at the same time. Alais et al. (2006) found that tasks performed within the same sensory modality interfered with each other, whereas performance (measured as perceptual sensitivity thresholds) was mostly unaffected when tasks were performed in two separate sensory modalities. These results again suggest separate attentional resources for vision and audition when object-based attention tasks are performed. Relatedly, in a study by Helbig and Ernst (2008) involving the visual and tactile modalities, less dual-task interference was found when a visual same/different letter discrimination task was performed in combination with a tactile size discrimination task in comparison to a visual size discrimination task, suggesting in part shared attentional resources for the visual and tactile modalities.

Neurophysiological Studies

From a neurophysiological perspective, studies investigating the AB support the view that there are distinct attentional resources for vision and audition. In a recent study, Finoia et al. (2015) investigated the AB using EEG and fMRI measurements. Using fMRI measurements, Finoia et al. found that processing of auditory and visual targets involved partially overlapping frontoparietal networks. However, when using EEG measurements, processing related to the second target (i.e., indexed by the amplitude of time-locked N2 and P3 ERP responses) was preserved, suggesting distinct resources for the visual and auditory sensory modalities even though similar neuronal populations are involved in processing. Relatedly, in a different EEG study investigating the mismatch negativity, Haroush et al. (2011) found that auditory processing was enhanced during a visual AB, suggesting additional available attentional resources in the auditory modality. In sum, neurophysiological studies that investigated the AB supported the view that attentional resources are distinct for the visual and auditory sensory modalities.

Further evidence for distinct attentional resources for the visual and auditory sensory modalities for object-based attention tasks has been reported in a number of neurophysiological studies using steady-state evoked potentials (Keitel, Maess, Schröger, & Müller, 2013; Porcu, Keitel, & Müller, 2014; Talsma, Doty, Strowd, & Woldorff, 2006). A steady-state evoked potential is an oscillatory brain response evoked by a stimulus that flickers at a predefined frequency. Researchers have shown that the amplitude of the oscillation increases when the flickering stimulus is selectively attended to, suggesting that the magnitude of the steady-state evoked potential is a neural correlate of selective attention (Andersen, Fuchs, & Müller, 2011; Morgan, Hansen, & Hillyard, 1996; M. M. Müller et al., 1998; M. Müller et al., 2006; Walter, Quigley, Andersen, & Müller, 2012). Talsma et al. (2006) found that steady-state evoked potentials to a visual letter stream were larger when participants were required to attend to tones in comparison to visual or audiovisual stimuli, indicating that more attentional resources are available across the auditory and visual sensory modalities than within the visual sensory modality alone. Relatedly, Porcu et al. (2014) found that steady-state responses were affected more by stimuli attended to within the same sensory modality than by stimuli in another sensory modality. Notably, in addition to the auditory modality, this study also involved the tactile modality, suggesting that the visual, auditory, and tactile modalities rely on separate attentional resources.
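The measure underlying these studies, the amplitude of the brain response at the flicker frequency, can be illustrated with a short Python sketch. Everything in it is an assumption made for illustration: the signals are noise-free synthetic sinusoids rather than EEG recordings, the amplitudes 1.5 and 1.0 are made up, and the helper names `amplitude_at` and `synth` are hypothetical. Real analyses additionally involve artifact rejection, averaging over trials, and appropriate windowing.

```python
import cmath
import math


def amplitude_at(signal, fs, freq):
    """Amplitude of the sinusoidal component of `signal` at `freq` (in Hz).

    The signal is projected onto a complex exponential at the target
    frequency, which corresponds to evaluating a single bin of a
    discrete Fourier transform. `fs` is the sampling rate in Hz.
    """
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq * k / fs)
              for k, x in enumerate(signal))
    return 2.0 * abs(acc) / n


FS = 500.0        # assumed sampling rate in Hz
FLICKER = 15.0    # assumed flicker frequency in Hz
N_SAMPLES = 1000  # 2 s of data, i.e., 30 full flicker cycles


def synth(amp):
    """Synthetic steady-state response: a pure sinusoid at the flicker frequency."""
    return [amp * math.sin(2 * math.pi * FLICKER * k / FS)
            for k in range(N_SAMPLES)]


attended = amplitude_at(synth(1.5), FS, FLICKER)    # made-up attended response
unattended = amplitude_at(synth(1.0), FS, FLICKER)  # made-up unattended response
print(attended > unattended)
```

In this framing, attentional modulation shows up as a difference between conditions in the value returned by `amplitude_at` for the same flicker frequency.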

More generally, studies have investigated the neural correlates of object-based attention within each sensory modality. Classically, within the visual sensory modality, a ventral what pathway that specializes in object identification has been identified (Livingstone & Hubel, 1988; Mishkin & Ungerleider, 1982; Ungerleider & Pessoa, 2008). Previous fMRI studies have also indicated separate neural substrates of a what pathway for the auditory (Ahveninen et al., 2006) and tactile modalities (Reed, Klatzky, & Halgren, 2005; for a review, see Amedi, von Kriegstein, van Atteveldt, Beauchamp, & Naumer, 2005). Interestingly, an fMRI study also indicated that visual and tactile processing overlaps to some extent in the what pathway (Amedi, Malach, Hendler, Peled, & Zohary, 2001), potentially explaining why results at least show partially shared attentional resources when two object-based attention tasks are performed in the tactile and visual modalities (Dell’Acqua et al., 2001; Helbig & Ernst, 2008; Porcu et al., 2014; Soto-Faraco et al., 2002). More generally, these findings suggest that when two object-based attention tasks are performed in separate sensory modalities, neural populations involved in processing should overlap less, supporting the argument for distinct attentional resources.

Summary: Object-Based Attention Tasks

In summary, studies investigating object-based attention tasks across sensory modalities yield partially conflicting results; however, the majority of studies suggest that attentional resources are distinct for the visual and auditory sensory modalities (Alais et al., 2006; Duncan et al., 1997; Finoia et al., 2015; Haroush et al., 2011; Hein et al., 2006; Helbig & Ernst, 2008; Keitel et al., 2013; Larsen et al., 2003; Porcu et al., 2014; Potter et al., 1998; Soto-Faraco & Spence, 2002; Talsma et al., 2006; Van der Burg et al., 2007). A major factor that influences results is the type of response (i.e., whether speeded responses or responses with no time constraints are required). In particular, shared attentional resources are recruited for speeded object-based attention tasks, whereas object-based attention tasks with no time constraints recruit distinct attentional resources across the sensory modalities. These findings suggest that once humans are engaged in time-critical tasks, the first of two sequentially presented tasks is prioritized (Dux et al., 2006; Pashler, 1994; Sigman & Dehaene, 2008).

Another factor unrelated to task demands that influences results is the sensory modalities in which the tasks were carried out. In particular, attentional resources tend to be distinct for the auditory and visual modalities; in contrast, findings for the visual and tactile modalities suggest at least partially overlapping attentional resources. These discrepancies in results may be due to differences in paradigms. In particular, a study that did find partially shared resources used a two-alternative forced choice task for size discrimination (Helbig & Ernst, 2008). Studies finding shared attentional resources have investigated the crossmodal AB using either a localization task (Dell’Acqua et al., 2001) or a pattern discrimination task (Soto-Faraco et al., 2002). Moreover, these studies did not compare the magnitude of the crossmodal interference with the interference in unimodal AB, which leaves open the question of whether attentional resources are fully shared or only partially shared for the visual and tactile sensory modalities.

Overall, provided that tasks are not time-critical and are performed in the visual and auditory modalities, we conclude that distinct attentional resources for the sensory modalities are recruited when two object-based attention tasks are performed at the same time.

Spatial Attention Tasks

Dual-Task Designs

A prominent task that researchers have used to investigate the limitations of visuospatial attention is the multiple object tracking (MOT) task (Pylyshyn & Storm, 1988; Yantis, 1992). In a typical MOT task, participants first see several stationary objects on a computer screen. A subset of these objects is indicated as targets. Then, the targets become indistinguishable from the other objects and start to move randomly across the screen for several seconds. When the objects stop moving, the participants are required to indicate which objects are the targets. Generally, participants’ performance decreases with the number of targets that they are required to track, indicating humans’ limitations in spatial attentional processing (Alnæs et al., 2014; Alvarez & Franconeri, 2007; Wahn et al., 2016).
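The trial sequence of a typical MOT task can be sketched as a small simulation. The sketch below is a schematic illustration and not the implementation used in any of the cited studies: the number of objects and targets, the random-walk motion rule, the clamping of positions to the screen borders, and the function names are all assumptions made for the example.

```python
import random


def run_mot_trial(n_objects=8, n_targets=3, n_steps=300, step=0.01, seed=0):
    """Simulate the motion phase of one MOT trial on a unit-square screen.

    Returns the final object positions and the set of target indices.
    """
    rng = random.Random(seed)
    # 1) Stationary objects appear at random screen positions
    pos = [[rng.random(), rng.random()] for _ in range(n_objects)]
    # 2) A subset of the objects is indicated as targets
    targets = set(rng.sample(range(n_objects), n_targets))
    # 3) All objects look identical and move randomly for several seconds
    for _ in range(n_steps):
        for p in pos:
            for axis in (0, 1):
                p[axis] += rng.uniform(-step, step)
                p[axis] = min(1.0, max(0.0, p[axis]))  # keep objects on screen
    return pos, targets


def tracking_score(selected, targets):
    """Proportion of targets that the participant correctly selected."""
    return len(set(selected) & targets) / len(targets)


positions, targets = run_mot_trial()
# 4) After motion stops, the participant indicates which objects are targets;
#    here a hypothetical response that misses one target
response = list(targets)[:-1]
print(tracking_score(response, targets))
```

Increasing `n_targets` while holding the other parameters constant corresponds to the load manipulation under which tracking performance is reported to decline.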

Recently, this paradigm has been applied to study the question of whether there are shared or distinct attentional resources across sensory modalities in a dual-task design (Arrighi, Lunardi, & Burr, 2011; Wahn & König, 2015a, 2015b). In particular, participants performed the MOT task in combination with either a visual, auditory, or tactile localization task. In the localization task, participants continuously received spatial cues that they had to localize using the number pad on the keyboard. As a point of note, the locations of the spatial cues were randomly chosen from a set of eight possible locations. The results indicate that the two tasks interfered equally, regardless of whether the two spatial tasks (i.e., the MOT task and the localization task) were performed in the same or separate sensory modalities. That is, performance in the dual-task conditions was reduced by the same amount relative to the single-task conditions, regardless of whether tasks were performed in the same or separate sensory modalities. These findings suggest shared attentional resources for the sensory modalities for tasks requiring spatial attention (Wahn & König, 2015a, 2015b).

Another aspect that influences the recruitment of attentional resources across sensory modalities and that has been investigated in dual-task designs is whether attention is focused on one spatial location or divided between two spatial locations. In particular, Santangelo, Fagioli, and Macaluso (2010) compared conditions in which attention to visual and auditory stimuli was divided across hemifields with attending to lateralized auditory and visual stimuli. Santangelo et al. found that the dual-task interference for dividing attention across hemifields was lower than for attending to stimuli in the same hemifield. This suggests that the degree to which shared or distinct resources across sensory modalities are recruited depends on whether attention is focused or divided. However, Spence and Read (2003) observed that dual-task interference was lower when information from multiple sensory modalities was presented in the same spatial location rather than in different spatial locations. Yet, in their study, when stimuli were presented in the same spatial location, they were presented front-on rather than in one hemifield, and this could account for the differences in results.

Orthogonal Cueing

Another paradigm that has been used to investigate how spatial attentional resources are shared between sensory modalities is the orthogonal cueing paradigm (Driver & Spence, 1998a, 1998b; Spence, 2010b; Spence & Driver, 2004). In the orthogonal cueing paradigm (for more detailed reviews, see Spence, 2010a, 2010b; Spence & Driver, 2004), participants make elevation judgements of spatial stimuli in one sensory modality (e.g., visual) that are preceded by task-irrelevant cues in another sensory modality (e.g., auditory). For instance, in the visual modality, visual flashes were presented at the top or bottom of the visual field. What was additionally varied, independently of the elevation of these spatial stimuli, was whether the stimuli were shown in the left or right visual field. These stimuli were then preceded by cues in another sensory modality that were presented on either the left or the right side. Importantly, these lateralized cues were uninformative about the elevation of the stimuli in the visual sensory modality. However, they did introduce a spatial expectation for which side the elevation judgements needed to be performed on. As a point of note, attention was still divided in this task because participants needed to attend to two spatial locations at the same time (e.g., the two spatial locations in which a visual stimulus could appear). Researchers (Driver & Spence, 1998a, 1998b; Spence, 2010b; Spence & Driver, 2004) consistently found that elevation judgements were more accurate and/or faster on the cued side. However, judgements were not as accurate as when the preceding cues were presented in the same sensory modality in which the elevation judgement was performed, suggesting that spatial attentional systems for the sensory modalities are to some extent shared but not completely overlapping. That is, there is not one supramodal spatial attention system.
Rather, the authors concluded that there are separate spatial attention systems for the sensory modalities that can mutually influence each other. In sum, findings from the orthogonal cueing paradigm suggest that spatial attentional resources for the sensory modalities are at least partially shared.
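The defining property of the orthogonal cueing paradigm, namely that the cued dimension (left/right) is independent of the judged dimension (top/bottom), can be made explicit in a short Python sketch. The sketch is illustrative only: the fully crossed design with equal numbers of cued and uncued trials, the function names, and the response times are assumptions made for the example and do not reproduce the design or data of any specific study.

```python
from itertools import product
from statistics import mean


def build_design():
    """Fully cross cue side, target side, and target elevation.

    A trial counts as 'cued' when the cue and the target appear on the
    same side. Because elevation is crossed with cue side, the cue
    carries no information about the required top/bottom response.
    """
    trials = []
    for cue_side, target_side, elevation in product(
            ("left", "right"), ("left", "right"), ("top", "bottom")):
        trials.append({"cue_side": cue_side,
                       "target_side": target_side,
                       "elevation": elevation,
                       "cued": cue_side == target_side})
    return trials


def cueing_effect(rts_cued, rts_uncued):
    """Mean response-time benefit (in ms) for targets on the cued side."""
    return mean(rts_uncued) - mean(rts_cued)


design = build_design()
cued_trials = [t for t in design if t["cued"]]
# Elevation is balanced within cued trials, so the cue cannot predict it
print(sum(t["elevation"] == "top" for t in cued_trials), len(cued_trials))

# Made-up response times in ms, for illustration only
print(cueing_effect(rts_cued=[400, 420], rts_uncued=[450, 470]))
```

Comparing the value of `cueing_effect` for crossmodal cues with its value for cues in the same sensory modality as the target is the kind of contrast on which the conclusions about shared versus separate spatial attention systems rest.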

Neurophysiological Studies

From a neurophysiological perspective, several studies investigated auditory spatial deficits in patients with visuospatial neglect (Pavani, Husain, Làdavas, & Driver, 2004; Pavani, Làdavas, & Driver, 2002; Pavani, Làdavas, & Driver, 2003). In particular, researchers found that patients with visuospatial neglect are in part impaired for auditory localization tasks, suggesting that neural structures devoted to spatial attention residing in the parietal lobe process spatial information from several sensory modalities. However, these findings are not undisputed. Sinnett, Juncadella, Rafal, Azanón, and Soto-Faraco (2007) found that patients with lesions in the parietal cortex actually exhibited a dissociation between visual and auditory spatial deficits. That is, patients with visuospatial deficits did not show any auditory deficits and vice versa. In sum, these findings suggest that lesions to the right parietal cortex do not necessarily lead to spatial deficits across the sensory modalities.

More generally, several studies have investigated the neural substrates of spatial attentional processing for the different sensory modalities. Classically, spatial processing for the visual sensory modality has been associated with a dorsal “where” pathway (Livingstone & Hubel, 1988; Mishkin & Ungerleider, 1982; Ungerleider & Pessoa, 2008). For the auditory and tactile modalities, researchers reported evidence for the existence of an additional “where” pathway residing in the parietal lobe that specializes in the processing of auditory (Ahveninen et al., 2006; Maeder et al., 2001) and tactile (Reed et al., 2005) spatial information. Furthermore, there are indications that separate modality-specific spatial processing systems quickly converge at the temporoparietal junction (Coren, Ward, & Enns, 2004), suggesting that spatial processing relies on shared neuronal populations early in processing.

Overall, neurophysiological studies suggest an overlap between the neuronal populations devoted to spatial attentional processing for the different sensory modalities. However, lesions to these structures do not necessarily lead to deficits in spatial processing across the sensory modalities.

Summary: Spatial Attention Tasks

In summary, studies investigating spatial attentional resources across the sensory modalities have employed a wide range of paradigms: Some studies evaluated interference between simultaneously performed tasks that were performed within either the same or separate sensory modalities (Wahn & König, 2015a, 2015b), and others investigated the effects of processing task-irrelevant cues in one sensory modality on spatial processing in another sensory modality (Spence, 2010b; Spence & Driver, 2004) or the effects of the spatial positions in which stimuli were presented (Santangelo et al., 2010; Spence & Read, 2003). Common to all studies is that the results indicate at least partially shared resources for the sensory modalities. The degree of overlap in processing, however, is influenced by whether stimuli are processed in succession or in parallel and by the spatial positions in which they are presented. In particular, attentional resources completely overlap only if tasks are carried out in parallel and the spatial positions of stimuli are not systematically manipulated (Wahn & König, 2015a, 2015b). That is, two spatial tasks performed at the same time interfere with each other, regardless of whether they are carried out in the same or separate sensory modalities.

Combining Object-Based and Spatial Attention Tasks

So far, we have addressed how attentional resources are allocated in tasks that require either spatial attention or object-based attention. In this section, we address how attentional resources are allocated across the sensory modalities when these two task types are combined (i.e., an object-based attention task is performed together with a spatial attention task).

Dual-Task Designs

In a recent study by Arrighi et al. (2011), participants were required to perform a visuospatial task (i.e., a MOT task) and either a visual or an auditory discrimination task (i.e., a task requiring object-based attention) in a dual-task design. Arrighi et al. found that the MOT task selectively interfered with the visual discrimination task while the auditory discrimination performance was not affected, suggesting distinct attentional resources for the visual and auditory modalities. Relatedly, in other recent studies (Wahn & König, 2016; Wahn et al., 2015), participants performed a visual search task (i.e., a task in which participants needed to discriminate targets from distractors) and either a tactile or visual localization task at the same time. The localization task interfered with the visual search task, regardless of whether the localization task was performed in the tactile or visual sensory modality. However, the interference between tasks was larger when tasks were performed within the same sensory modality than when they were performed in different sensory modalities, suggesting that simultaneously performing a spatial attention task and object-based attention task recruits partially shared attentional resources.
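
The inferential logic of these dual-task designs can be sketched in a few lines of Python. This is only an illustration of the reasoning, not the analysis used in any of the cited studies: the function names, the tolerance `tol`, and all numbers in the usage example are hypothetical.

```python
def interference(single_task, dual_task):
    """Performance drop caused by adding a second task.

    Both arguments are performance scores on the same scale
    (e.g., proportion correct); a larger value means more interference.
    """
    return single_task - dual_task


def classify_resources(within_cost, cross_cost, tol=0.02):
    """Map a pair of dual-task costs onto a resource interpretation.

    within_cost: interference when both tasks use the same modality.
    cross_cost:  interference when the tasks use different modalities.
    tol:         hypothetical tolerance for treating two costs as equal
                 (an assumption made for this sketch only).
    """
    if cross_cost <= tol:
        # No measurable cross-modal cost: separate pools.
        return "distinct"
    if cross_cost >= within_cost - tol:
        # Cross-modal cost as large as the within-modality cost: one pool.
        return "shared"
    # Some cross-modal cost, but smaller than within-modality.
    return "partially shared"


# Made-up scores: 0.90 alone, 0.70 with a same-modality second task,
# 0.80 with a second task in another modality.
within = interference(0.90, 0.70)
cross = interference(0.90, 0.80)
print(classify_resources(within, cross))
```

Under this scheme, an absent cross-modal cost corresponds to the pattern reported by Arrighi et al. (2011), and a cross-modal cost that is present but smaller than the within-modality cost corresponds to the pattern reported by Wahn and König (2016).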

Response-Competition Tasks

The ability to process spatial stimuli while engaged in an object-based attention task was investigated in response-competition tasks within the theoretical framework of Lavie’s load theory (Lavie, 2005, 2010). In a response-competition task, participants were instructed to perform a visual search task (i.e., search for a target letter among other letters). Concurrent to the visual search task, peripheral distractors were presented to trigger response-compatibility effects. That is, peripheral distractors primed responses opposite to the response required for the visual search task, thus slowing down the participant’s completion of the visual search task. According to load theory, susceptibility to peripheral distractors critically depends on the perceptual load in the visual search task. That is, when humans are engaged in an easy visual search task, their ability to detect (or get distracted by) peripheral stimuli is higher because unused attentional resources are available to process these stimuli. Conversely, if humans are engaged in a demanding task, their ability to process distractor stimuli is lower because they have fewer additional attentional resources available.

While load theory has been primarily investigated in the visual sensory modality (Benoni, Zivony, & Tsal, 2014; Lavie, 1995; Lavie & Tsal, 1994; Tsal & Benoni, 2010), researchers also investigated load theory in a crossmodal research design (Matusz et al., 2015; Tellinghuisen & Nowak, 2003) to address the question of whether attentional resources are shared or distinct across sensory modalities. If attentional resources are shared, increasing task demands in a visual search task should require the same attentional resources as required to process distractors in another sensory modality. Hence, the likelihood that distractors in a sensory modality other than vision will interfere (i.e., result in response-competition effects) should be reduced if task demands in the visual search task are increased because there are fewer attentional resources available to process the distractors. Tellinghuisen and Nowak (2003) tested this prediction by investigating response-competition effects due to auditory distractors in a visual search task performed at different levels of difficulty. They found that regardless of the difficulty of the visual search task, auditory distractors resulted in response-competition effects, suggesting that the attentional resources required for auditory distractor processing and the visual search task were distinct. In a recent study, Matusz et al. (2015) further investigated the role of distractor processing in other sensory modalities by using audiovisual distractors and studying participants at three different age levels (6-year-olds, 11-year-olds, and 20-year-olds). Matusz et al. found that audiovisual distractors resulted in response-competition effects regardless of the difficulty level of the visual search task for 11-year-olds and 20-year-olds, suggesting that—at least for adults and older children—attentional resources are distinct for the visual and auditory sensory modalities. 
Interestingly, this was not the case for the 6-year-olds, suggesting that a reduced pool of available attentional resources in younger children shields them from the effects of distractors in other sensory modalities. More generally, these findings suggest that conclusions about the allocation of attentional resources proposed for adults do not necessarily apply to younger populations.
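
The contrast between the shared-resource and distinct-resource predictions described above can be made concrete with a toy capacity model. The sketch below is a deliberate simplification for illustration: treating load and capacity as numbers on a 0 to 1 scale, and all function and parameter names, are assumptions of this example rather than part of load theory as formulated by Lavie (2005).

```python
def spare_capacity(capacity, load):
    """Resources left over once the primary task has taken what it needs."""
    return max(0.0, capacity - load)


def distractor_resources(visual_load, shared, capacity=1.0):
    """Resources available for processing an auditory distractor.

    shared=True:  one pool serves both modalities, so the visual search
                  task draws on the resources the distractor would need.
    shared=False: audition has its own pool, which visual load leaves
                  untouched.
    """
    if shared:
        return spare_capacity(capacity, visual_load)
    return spare_capacity(capacity, 0.0)


# Compare an easy and a demanding visual search task (hypothetical loads).
for load in (0.2, 0.9):
    print(
        f"visual load {load}: "
        f"shared pool -> {distractor_resources(load, shared=True):.1f}, "
        f"distinct pools -> {distractor_resources(load, shared=False):.1f}"
    )
```

In this toy model, only the shared-pool variant predicts that distractor processing shrinks as the visual search task becomes harder. Response-competition effects that persist regardless of visual load, as reported by Tellinghuisen and Nowak (2003), match the distinct-pools variant.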

Using a similar task to the response-competition tasks discussed above, Macdonald and Lavie (2011) also used load theory to address a phenomenon referred to as inattentional deafness. In line with load theory, and assuming that attentional resources are shared between sensory modalities, Macdonald and Lavie hypothesized that when humans are engaged in a visually demanding object-based attention task, they have fewer attentional resources available to detect a brief auditory stimulus than when they are engaged in a less demanding visual object-based attention task. Note that the auditory stimulus was presented in a different spatial location than the visual discrimination task (i.e., presented via headphones), thereby recruiting spatial attentional resources for auditory stimulus detection. Macdonald and Lavie found that participants detected significantly fewer auditory stimuli when they were engaged in a visually demanding task than when they were engaged in a visually less demanding task, suggesting that attentional resources are shared between sensory modalities. These findings were confirmed in a recent study by Raveh and Lavie (2015), in which several experiments consistently showed that auditory stimulus detection was influenced by task difficulty in a visual search task. However, while the findings of these studies suggest that attentional resources are shared between the auditory and visual modalities, it is not clear whether the degree of overlapping attentional resources is higher when stimuli are presented within the same sensory modality or in separate sensory modalities. This question was investigated in an earlier study by Sinnett, Costa, and Soto-Faraco (2006). In particular, Sinnett et al. (2006) investigated how effects of inattentional blindness and inattentional deafness depend on whether they are induced by a difficult task within the same sensory modality or in a different sensory modality. 
With equally difficult tasks, they found that both inattentional blindness and deafness were less pronounced when induced by a demanding task in a different sensory modality than by a task within the same sensory modality. From these results, the authors concluded that there are at least partially separate attentional resources: The cross-modal tasks did induce inattentional blindness and deafness, but to a lesser extent than their within-modality counterparts.

Neurophysiological Studies

Within the framework of load theory, a number of neurophysiological studies (Berman & Colby, 2002; Houghton, Macken, & Jones, 2003; Rees, Frith, & Lavie, 2001) investigated the question of whether there are shared or distinct attentional resources for the sensory modalities. If there are distinct attentional resources for different sensory modalities, processing of visual spatial stimuli should be equal, regardless of whether a low-demand or high-demand auditory discrimination task is performed. Rees et al. (2001) tested this prediction in an fMRI study that compared the processing of visual motion stimuli between conditions in which either a low- or high-demand auditory discrimination task was performed. They found that visual motion processing was unaffected by task difficulty in the auditory task, suggesting distinct attentional resources between the visual and auditory modality. However, these findings are not undisputed (Berman & Colby, 2002; Houghton et al., 2003). In a different study, Berman and Colby (2002) found that blood oxygenation level-dependent (BOLD) activity related to motion as well as a motion after-effect were reduced when a highly demanding letter discrimination task was performed in the visual or in the auditory modality.

Similarly, a reduction in the motion after-effect was also found when a highly demanding auditory task that involved identifying digits was performed (Houghton et al., 2003). Lavie (2005) pointed out that these conflicting findings can be reconciled in terms of methodological differences between the studies. In particular, in the study by Rees et al. (2001), participants were instructed to fixate the center of the screen and this was verified using an eye tracker, while in the other two studies eye movements were not monitored. As fixating on or away from the motion stimulus can alter activity in motion processing areas, this methodological difference could account for the discrepancies in results.

Further evidence for shared attentional resources between the visual and auditory sensory modalities was provided in a recent MEG study (Molloy, Griffiths, Chait, & Lavie, 2015). In particular, Molloy et al. (2015) investigated inattentional deafness and showed a suppression of auditory evoked responses (i.e., responses time-locked to incidental tones) when a visual search task was performed at a high difficulty level in comparison to a low difficulty level.

Summary: Object-Based and Spatial Attention Tasks

In summary, investigating the task combination of object-based and spatial attention tasks with regard to the question of whether there are shared or distinct attentional resources across sensory modalities yielded partially conflicting results. On the one hand, visual motion processing was unaffected by task difficulty in an auditory task (Rees et al., 2001), suggesting distinct attentional resources for this task combination. On the other hand, participants’ detection rates of auditory stimuli were consistently lower when they were engaged in a visually demanding discrimination task in comparison to being engaged in a visual task with a low demand (Macdonald & Lavie, 2011; Raveh & Lavie, 2015; Sinnett et al., 2006). Notably, the sensory modality in which task load was varied was different across studies: vision in Macdonald and Lavie (2011), Molloy et al. (2015), and Raveh and Lavie (2015); audition in Rees et al. (2001). It is conceivable that results depend on the sensory modality in which task load is induced. In particular, inducing auditory attentional load did not affect visual processing (Rees et al., 2001) while inducing visual attentional load did affect auditory processing (Macdonald & Lavie, 2011; Molloy et al., 2015; Raveh & Lavie, 2015). Such an interpretation suggests that the distribution of attentional resources across sensory modalities depends on the sensory modality in which a highly demanding task is performed. Yet, other studies investigating attentional resources across sensory modalities found that when inducing visual attentional load, there are distinct attentional resources for the auditory and visual sensory modalities (Arrighi et al., 2011) and partially shared resources for the tactile and visual sensory modalities (Wahn & König, 2016). Future studies could investigate whether the distribution of attentional resources across sensory modalities is dependent on the sensory modality in which task load is induced.

Taken together, studies investigating the combination of object-based and spatial attention tasks found partially shared attentional resources for the sensory modalities. That is, performing tasks in separate sensory modalities is still beneficial over performing them in the same sensory modality. However, multiple tasks still interfere to some extent, regardless of whether they are performed in the same or separate sensory modalities.

Conclusions and Future Directions

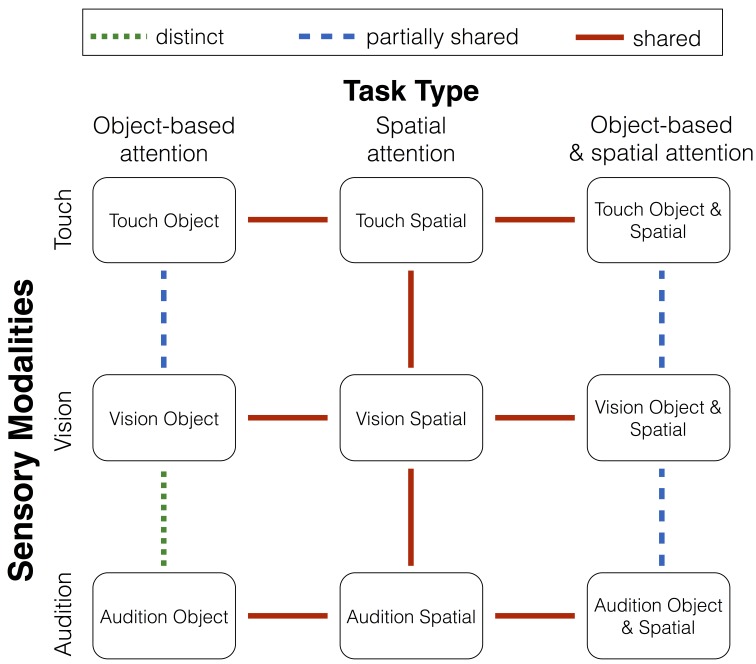

In the present review, we investigated how the recruitment of attentional resources across sensory modalities depends on the performed type of tasks. That is, we reviewed studies investigating attentional resources across the sensory modalities that involved tasks requiring object-based attention, spatial attention, or a combination of these two task types. Based on the reviewed studies, we want to suggest a few general conclusions regarding how attentional resources are allocated across the sensory modalities depending on the performed type of task (for an overview, see Figure 1). In particular, when humans perform two object-based attention tasks, findings suggest that distinct attentional resources are recruited for the visual and auditory modalities (Alais et al., 2006; Duncan et al., 1997; Finoia et al., 2015; Haroush et al., 2011; Hein et al., 2006; Helbig & Ernst, 2008; Keitel et al., 2013; Larsen et al., 2003; Porcu et al., 2014; Potter et al., 1998; Soto-Faraco & Spence, 2002; Talsma et al., 2006; Van der Burg et al., 2007). However, this is not the case when two object-based attention tasks are performed in the tactile and visual modalities (Dell’Acqua et al., 2001; Helbig & Ernst, 2008; Porcu et al., 2014; Soto-Faraco et al., 2002). For this combination of sensory modalities, attentional resources are at least partially shared. When two spatial attention tasks are performed in separate sensory modalities at the same time, attentional resources are shared (Wahn & König, 2015a, 2015b). When a spatial attention task and an object-based attention task are performed in combination, partially shared attentional resources tend to be recruited (Macdonald & Lavie, 2011; Molloy et al., 2015; Raveh & Lavie, 2015; Sinnett et al., 2006; Tellinghuisen & Nowak, 2003; Wahn & König, 2016).

Figure 1. Overview of the main conclusions of the review. Green, blue, and red lines indicate distinct, partially shared, and shared attentional resources, respectively.

However, we want to emphasize that the task type (i.e., whether a task involves object-based or spatial attentional processing, or both) is one of many potential factors that could influence the allocation of attentional resources across the sensory modalities. Below, we discuss several additional factors for each of the reviewed task types and their combination.

Regarding object-based attention tasks, when two object-based attention tasks are performed in quick succession and both are time-critical, findings suggest that the first of the two tasks is prioritized, leaving no spare attentional resources for the second task (Dux et al., 2006; Marois & Ivanoff, 2005). Researchers propose that this depletion of attentional resources is due to limitations in the amodal processing capacities in the frontal lobe that are recruited for time-critical tasks (Dux et al., 2006; Marois & Ivanoff, 2005).

With regard to spatial attention tasks, a factor that influences the allocation of attentional resources across the sensory modalities is whether stimuli are attended in separate hemifields, a single hemifield, or front-on (Santangelo et al., 2010; Spence & Read, 2003). Relatedly, earlier studies that investigated attentional resources within the visual sensory modality indeed found that there are more visual attentional resources available when information processing is distributed across hemifields rather than focused within one hemifield (e.g., Alvarez & Cavanagh, 2005). Spatial attentional processing across several sensory modalities could follow the same principle. In particular, limitations in spatial processing across the sensory modalities could be effectively circumvented by distributing spatial information across hemifields. Further studies could investigate whether the findings of earlier studies conducted solely in the visual sensory modality (Alvarez & Cavanagh, 2005) can be generalized to processing of spatial information across several sensory modalities.

When humans perform a combination of task types (i.e., an object-based attention task and a spatial attention task), results could be influenced by the sensory modality in which attentional load is increased. That is, whether attentional load in the visual or auditory sensory modality is increased could lead to a differential recruitment of attentional resources for the sensory modalities. In particular, studies have suggested that increasing visual attentional load affects auditory processing, whereas increasing auditory attentional load does not affect visual processing (Macdonald & Lavie, 2011; Molloy et al., 2015; Raveh & Lavie, 2015; Rees et al., 2001). This imbalance in resource allocation might reflect a general processing precedence for visual information over information from other sensory modalities. Relatedly, such a processing precedence was identified for object recognition (Yuval-Greenberg & Deouell, 2007), and a memory advantage was found for visual recognition memory compared to auditory recognition memory (Cohen, Horowitz, & Wolfe, 2009). Importantly, research on the Colavita effect (Colavita, 1974; Spence, Parise, & Chen, 2012; Welch & Warren, 1986) has consistently indicated a processing precedence for visual information over information from other sensory modalities (Hartcher-O’Brien, Gallace, Krings, Koppen, & Spence, 2008; Hartcher-O’Brien, Levitan, & Spence, 2010; Hecht & Reiner, 2009; Koppen, Levitan, & Spence, 2009; Sinnett, Spence, & Soto-Faraco, 2007). However, other researchers found that this processing precedence for visual information is reversed early in development (Robinson & Sloutsky, 2004, 2013) and can be altered, depending on task demands, in adults (Chandra, Robinson, & Sinnett, 2011; Robinson, Ahmar, & Sloutsky, 2010; Robinson, Chandra, & Sinnett, 2016). 
An interesting future direction would be to investigate how the recruitment of attentional resources across sensory modalities depends on the sensory modality in which attentional load is increased. More generally, studying attentional resources across the developmental trajectory could reveal further factors that influence the allocation of attentional resources across sensory modalities (Matusz et al., 2015; Robinson & Sloutsky, 2004, 2013).

Another factor that influences the allocation of attentional resources across sensory modalities is the degree to which the occurrence of a stimulus in the environment can be predicted (Summerfield & de Lange, 2014; Summerfield & Egner, 2009; Talsma, 2015; ten Oever, Schroeder, Poeppel, van Atteveldt, & Zion-Golumbic, 2014; Thillay et al., 2015). For instance, when stimuli were presented at predictable intervals rather than at random intervals, perceptual sensitivities to detect stimuli within and across sensory modalities were significantly increased (ten Oever et al., 2014). Another future direction for research could be to investigate the extent to which attentional resource limitations can be circumvented by varying the predictability of the presented stimuli.

As an additional point of note, in the present review, we conceptualized attentional processing as a pool of resources that can be depleted depending on task demands (Kahneman, 1973; Lavie, 2005; Wickens, 2002). However, other researchers suggested that findings interpreted as a depletion of attentional resources can alternatively be explained by dilution effects (Benoni et al., 2014; Tsal & Benoni, 2010) or crossmodal priming effects (Tellinghuisen & Nowak, 2003). We suggest that future studies could use an operationalization of attentional load that cannot be alternatively explained by perceptual effects (e.g., the number of items displayed in a visual search task or target/distractor similarities). In particular, researchers suggested that a MOT task is an ideal paradigm to systematically vary attentional load (i.e., by varying the number of targets that need to be tracked) while keeping the perceptual load constant (i.e., the total number of displayed objects; Arrighi et al., 2011; Cavanagh & Alvarez, 2005; Wahn & König, 2015a, 2015b; Wahn et al., 2016).

More generally, the sheer number of factors on which attentional resource allocation is dependent suggests that the human attentional system flexibly adapts to current task demands. Furthermore, it becomes clear that it is impossible to make a definite statement about the nature of available attentional resources across the sensory modalities without accounting for the task demands in which they are recruited. Such a view suggests that studying attentional resources in paradigms that systematically vary the task demands could identify separate attentional mechanisms for different task demands that may be entirely independent of the involved sensory modalities (for a similar proposal that cognition generally is context- and action-dependent, see Engel, Maye, Kurthen, & König, 2013).

But why should task demands influence whether shared or distinct attentional resources across the sensory modalities are recruited? It could be that for some tasks it is more beneficial to recruit a shared pool of attentional resources for the sensory modalities, while for other tasks it is more beneficial to recruit separate pools of attentional resources for the sensory modalities. For instance, for time-critical tasks, using amodal resources residing in the frontal lobe (Dux et al., 2006; Marois & Ivanoff, 2005) could prioritize faster processing over relying on distributed information processing in separate neuronal populations (Finoia et al., 2015; Haroush et al., 2011; Keitel et al., 2013; Porcu et al., 2014; Talsma et al., 2006). Such different recruitment mechanisms could reflect a large-scale optimization strategy to efficiently make use of a limited pool of neuronal resources in the brain while maximizing processing of currently relevant information (for commonalities and relations between attentional resources and neuronal resources, see Cohen, Konkle, Rhee, Nakayama, & Alvarez, 2014; Just, Carpenter, & Miyake, 2003). Yet, load theory (Lavie, 2005) would suggest that the brain always uses all available resources rather than minimizing resource expenditures. However, given the excessive overall resource demands of the brain (Sokoloff, 1989), it is unlikely that it can always maximally use available resources. Rather, such a large-scale optimization strategy could maximize the processing of current task-relevant information while minimizing the brain’s processing and energy expenditures. Ultimately, future studies should investigate the optimality of resource expenditures in contexts in which shared or distinct attentional resources across the sensory modalities are recruited, and the extent to which currently relevant information is effectively extracted.

Author Note

We thank Laura Schmitz for her valuable feedback on the manuscript. We acknowledge the support by H2020 - H2020-FETPROACT-2014 641321 - socSMCs (for BW) and ERC-2010-AdG #269716 - MULTISENSE (for PK).

References

- Ahveninen J., Jääskeläinen I. P., Raij T., Bonmassar G., Devore S., Hämäläinen M., … Belliveau J. W. Task-modulated “what” and “where” pathways in human auditory cortex. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:14608–14613. doi: 10.1073/pnas.0510480103.

- Alais D., Morrone C., Burr D. Separate attentional resources for vision and audition. Proceedings of the Royal Society B: Biological Sciences. 2006;273:1339–1345. doi: 10.1098/rspb.2005.3420.

- Alnæs D., Sneve M. H., Espeseth T., Endestad T., van de Pavert S. H. P., Laeng B. Pupil size signals mental effort deployed during multiple object tracking and predicts brain activity in the dorsal attention network and the locus coeruleus. Journal of Vision. 2014;14:1–20. doi: 10.1167/14.4.1.

- Alvarez G. A., Cavanagh P. Independent resources for attentional tracking in the left and right visual hemifields. Psychological Science. 2005;16:637–643. doi: 10.1111/j.1467-9280.2005.01587.x.

- Alvarez G. A., Franconeri S. L. How many objects can you track? Evidence for a resource-limited attentive tracking mechanism. Journal of Vision. 2007;7:1–10. doi: 10.1167/7.13.14.

- Amedi A., Malach R., Hendler T., Peled S., Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nature Neuroscience. 2001;4:324–330. doi: 10.1038/85201.

- Amedi A., von Kriegstein K., van Atteveldt N. M., Beauchamp M., Naumer M. J. Functional imaging of human crossmodal identification and object recognition. Experimental Brain Research. 2005;166:559–571. doi: 10.1007/s00221-005-2396-5.

- Andersen S. K., Fuchs S., Müller M. M. Effects of feature-selective and spatial attention at different stages of visual processing. Journal of Cognitive Neuroscience. 2011;23:238–246. doi: 10.1162/jocn.2009.21328.

- Arnell K. M., Jenkins R. Revisiting within-modality and cross-modality attentional blinks: Effects of target-distractor similarity. Perception & Psychophysics. 2004;66:1147–1161. doi: 10.3758/bf03196842.

- Arnell K. M., Larson J. M. Cross-modality attentional blinks without preparatory task-set switching. Psychonomic Bulletin & Review. 2002;9:497–506. doi: 10.3758/bf03196305.

- Arrighi R., Lunardi R., Burr D. Vision and audition do not share attentional resources in sustained tasks. Frontiers in Psychology. 2011;2:56. doi: 10.3389/fpsyg.2011.00056.

- Benoni H., Zivony A., Tsal Y. Attentional sets influence perceptual load effects, but not dilution effects. Quarterly Journal of Experimental Psychology. 2014;67:785–792. doi: 10.1080/17470218.2013.830629.

- Berman R. A., Colby C. L. Auditory and visual attention modulate motion processing in area MT+. Cognitive Brain Research. 2002;14:64–74. doi: 10.1016/s0926-6410(02)00061-7.

- Cavanagh P., Alvarez G. A. Tracking multiple targets with multifocal attention. Trends in Cognitive Sciences. 2005;9:349–354. doi: 10.1016/j.tics.2005.05.009.

- Chan J. S., Newell F. N. Behavioral evidence for task-dependent “what” versus “where” processing within and across modalities. Perception & Psychophysics. 2008;70:36–49. doi: 10.3758/pp.70.1.36.

- Chandra M., Robinson C. W., Sinnett S. Coexistence of multiple modal dominances. In: Carlson L. A., Hölscher C., Shipley T. F., editors. Proceedings of the 33rd Annual Conference of the Cognitive Science Society. Austin, TX: Cognitive Science Society; 2011. pp. 2604–2609.

- Cohen M. A., Horowitz T. S., Wolfe J. M. Auditory recognition memory is inferior to visual recognition memory. Proceedings of the National Academy of Sciences of the United States of America. 2009;106:6008–6010. doi: 10.1073/pnas.0811884106.

- Cohen M. A., Konkle T., Rhee J. Y., Nakayama K., Alvarez G. A. Processing multiple visual objects is limited by overlap in neural channels. Proceedings of the National Academy of Sciences of the United States of America. 2014;111:8955–8960. doi: 10.1073/pnas.1317860111.

- Colavita F. B. Human sensory dominance. Perception & Psychophysics. 1974;16:409–412.

- Coren S., Ward L. M., Enns J. T. Sensation and perception (6th ed.). New York, NY: Wiley; 2004.

- Dell’Acqua R., Turatto M., Jolicoeur P. Cross-modal attentional deficits in processing tactile stimulation. Perception & Psychophysics. 2001;63:777–789. doi: 10.3758/bf03194437.

- Doehrmann O., Naumer M. J. Semantics and the multisensory brain: How meaning modulates processes of audio-visual integration. Brain Research. 2008;1242:136–150. doi: 10.1016/j.brainres.2008.03.071.

- Driver J., Spence C. Attention and the crossmodal construction of space. Trends in Cognitive Sciences. 1998a;2:254–262. doi: 10.1016/S1364-6613(98)01188-7.

- Driver J., Spence C. Crossmodal attention. Current Opinion in Neurobiology. 1998b;8:245–253. doi: 10.1016/s0959-4388(98)80147-5.

- Duncan J. Selective attention and the organization of visual information. Journal of Experimental Psychology: General. 1984;113:501–517. doi: 10.1037//0096-3445.113.4.501.

- Duncan J., Martens S., Ward R. Restricted attentional capacity within but not between sensory modalities. Nature. 1997;387:808–810. doi: 10.1038/42947.

- Dux P. E., Ivanoff J., Asplund C. L., Marois R. Isolation of a central bottleneck of information processing with time-resolved fMRI. Neuron. 2006;52:1109–1120. doi: 10.1016/j.neuron.2006.11.009.

- Engel A. K., Maye A., Kurthen M., König P. Where’s the action? The pragmatic turn in cognitive science. Trends in Cognitive Sciences. 2013;17:202–209. doi: 10.1016/j.tics.2013.03.006.

- Ernst M. O., Banks M. S. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.

- Fink G., Dolan R., Halligan P., Marshall J., Frith C. Space-based and object-based visual attention: Shared and specific neural domains. Brain. 1997;120:2013–2028. doi: 10.1093/brain/120.11.2013.

- Finoia P., Mitchell D. J., Hauk O., Beste C., Pizzella V., Duncan J. Concurrent brain responses to separate auditory and visual targets. Journal of Neurophysiology. 2015;114:1239–1247. doi: 10.1152/jn.01050.2014.

- Ghazanfar A. A., Schroeder C. E. Is neocortex essentially multisensory? Trends in Cognitive Sciences. 2006;10:278–285. doi: 10.1016/j.tics.2006.04.008.

- Goeke C. M., Planera S., Finger H., König P. Bayesian alternation during tactile augmentation. Frontiers in Behavioral Neuroscience. 2016;10:187. doi: 10.3389/fnbeh.2016.00187.

- Haroush K., Deouell L. Y., Hochstein S. Hearing while blinking: Multisensory attentional blink revisited. The Journal of Neuroscience. 2011;31:922–927. doi: 10.1523/JNEUROSCI.0420-10.2011.

- Hartcher-O’Brien J., Gallace A., Krings B., Koppen C., Spence C. When vision ‘extinguishes’ touch in neurologically-normal people: Extending the Colavita visual dominance effect. Experimental Brain Research. 2008;186:643–658. doi: 10.1007/s00221-008-1272-5.

- Hartcher-O’Brien J., Levitan C., Spence C. Out-of-touch: Does vision dominate over touch when it occurs off the body? Brain Research. 2010;1362:48–55. doi: 10.1016/j.brainres.2010.09.036.

- Hecht D., Reiner M. Sensory dominance in combinations of audio, visual and haptic stimuli. Experimental Brain Research. 2009;193:307–314. doi: 10.1007/s00221-008-1626-z.

- Hein G., Parr A., Duncan J. Within-modality and cross-modality attentional blinks in a simple discrimination task. Perception & Psychophysics. 2006;68:54–61. doi: 10.3758/bf03193655.

- Helbig H. B., Ernst M. O. Visual-haptic cue weighting is independent of modality-specific attention. Journal of Vision. 2008;8:1–16. doi: 10.1167/8.1.21.

- Hillstrom A. P., Shapiro K. L., Spence C. Attentional limitations in processing sequentially presented vibrotactile targets. Perception & Psychophysics. 2002;64:1068–1082. doi: 10.3758/bf03194757.

- Hommel B., Akyürek E. G. Lag-1 sparing in the attentional blink: Benefits and costs of integrating two events into a single episode. Quarterly Journal of Experimental Psychology. 2005;58A:1415–1433. doi: 10.1080/02724980443000647.

- Houghton R. J., Macken W. J., Jones D. M. Attentional modulation of the visual motion aftereffect has a central cognitive locus: Evidence of interference by the postcategorical on the precategorical. Journal of Experimental Psychology: Human Perception and Performance. 2003;29:731–740. doi: 10.1037/0096-1523.29.4.731.

- Hunt A. R., Kingstone A. Multisensory executive functioning. Brain and Cognition. 2004;55:325–327. doi: 10.1016/j.bandc.2004.02.072.

- Jolicoeur P. Restricted attentional capacity between sensory modalities. Psychonomic Bulletin & Review. 1999;6:87–92. doi: 10.3758/bf03210813.

- Just M. A., Carpenter P. A., Miyake A. Neuroindices of cognitive workload: Neuroimaging, pupillometric and event-related potential studies of brain work. Theoretical Issues in Ergonomics Science. 2003;4:56–88.

- Kahneman D. Attention and effort. Englewood Cliffs, NJ: Prentice-Hall; 1973.

- Keitel C., Maess B., Schröger E., Müller M. M. Early visual and auditory processing rely on modality-specific attentional resources. Neuroimage. 2013;70:240–249. doi: 10.1016/j.neuroimage.2012.12.046.

- Koppen C., Levitan C. A., Spence C. A signal detection study of the Colavita visual dominance effect. Experimental Brain Research. 2009;196:353–360. doi: 10.1007/s00221-009-1853-y.

- König S. U., Schumann F., Keyser J., Goeke C., Krause C., Wache S., … König P. Learning new sensorimotor contingencies: Effects of long-term use of sensory augmentation on the brain and conscious perception. PLoS ONE. 2016;11(12):e0166647. doi: 10.1371/journal.pone.0166647.

- Larsen A., McIlhagga W., Baert J., Bundesen C. Seeing or hearing? Perceptual independence, modality confusions, and crossmodal congruity effects with focused and divided attention. Perception & Psychophysics. 2003;65:568–574. doi: 10.3758/bf03194583.

- Lavie N. Perceptual load as a necessary condition for selective attention. Journal of Experimental Psychology: Human Perception and Performance. 1995;21:451–468. doi: 10.1037//0096-1523.21.3.451.

- Lavie N. Distracted and confused? Selective attention under load. Trends in Cognitive Sciences. 2005;9:75–82. doi: 10.1016/j.tics.2004.12.004.

- Lavie N. Attention, distraction, and cognitive control under load. Current Directions in Psychological Science. 2010;19:143–148.

- Lavie N., Tsal Y. Perceptual load as a major determinant of the locus of selection in visual attention. Perception & Psychophysics. 1994;56:183–197. doi: 10.3758/bf03213897.

- Macdonald J. S., Lavie N. Visual perceptual load induces inattentional deafness. Attention, Perception, & Psychophysics. 2011;73:1780–1789. doi: 10.3758/s13414-011-0144-4.

- Maeder P. P., Meuli R. A., Adriani M., Bellmann A., Fornari E., Thiran J.-P., … Clarke S. Distinct pathways involved in sound recognition and localization: A human fMRI study. Neuroimage. 2001;14:802–816. doi: 10.1006/nimg.2001.0888.

- Marois R., Ivanoff J. Capacity limits of information processing in the brain. Trends in Cognitive Sciences. 2005;9:296–305. doi: 10.1016/j.tics.2005.04.010.

- Matusz P. J., Broadbent H., Ferrari J., Forrest B., Merkley R., Scerif G. Multi-modal distraction: Insights from children’s limited attention. Cognition. 2015;136:156–165. doi: 10.1016/j.cognition.2014.11.031.

- Meredith M. A., Stein B. E. Interactions among converging sensory inputs in the superior colliculus. Science. 1983;221:389–391. doi: 10.1126/science.6867718.

- Mishkin M., Ungerleider L. G. Contribution of striate inputs to the visuospatial functions of parieto-preoccipital cortex in monkeys. Behavioural Brain Research. 1982;6:57–77. doi: 10.1016/0166-4328(82)90081-x.

- Molloy K., Griffiths T. D., Chait M., Lavie N. Inattentional deafness: Visual load leads to time-specific suppression of auditory evoked responses. The Journal of Neuroscience. 2015;35:16046–16054. doi: 10.1523/JNEUROSCI.2931-15.2015.

- Morgan S., Hansen J., Hillyard S. Selective attention to stimulus location modulates the steady-state visual evoked potential. Proceedings of the National Academy of Sciences of the United States of America. 1996;93:4770–4774. doi: 10.1073/pnas.93.10.4770.

- Müller M., Andersen S., Trujillo N., Valdes-Sosa P., Malinowski P., Hillyard S. Feature-selective attention enhances color signals in early visual areas of the human brain. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:14250–14254. doi: 10.1073/pnas.0606668103.

- Müller M. M., Picton T. W., Valdes-Sosa P., Riera J., Teder-Sälejärvi W. A., Hillyard S. A. Effects of spatial selective attention on the steady-state visual evoked potential in the 20–28 Hz range. Cognitive Brain Research. 1998;6:249–261. doi: 10.1016/s0926-6410(97)00036-0.

- Murray M. M., Thelen A., Thut G., Romei V., Martuzzi R., Matusz P. J. The multisensory function of the human primary visual cortex. Neuropsychologia. 2016;83:161–169. doi: 10.1016/j.neuropsychologia.2015.08.011.

- Navarra J., Alsius A., Soto-Faraco S., Spence C. Assessing the role of attention in the audiovisual integration of speech. Information Fusion. 2010;11:4–11.

- Pashler H. Dual-task interference in simple tasks: Data and theory. Psychological Bulletin. 1994;116:220–244. doi: 10.1037/0033-2909.116.2.220.

- Pavani F., Husain M., Làdavas E., Driver J. Auditory deficits in visuospatial neglect patients. Cortex. 2004;40:347–365. doi: 10.1016/s0010-9452(08)70130-8.

- Pavani F., Làdavas E., Driver J. Selective deficit of auditory localisation in patients with visuospatial neglect. Neuropsychologia. 2002;40:291–301. doi: 10.1016/s0028-3932(01)00091-4.

- Pavani F., Làdavas E., Driver J. Auditory and multisensory aspects of visuospatial neglect. Trends in Cognitive Sciences. 2003;7:407–414. doi: 10.1016/s1364-6613(03)00189-x.

- Porcu E., Keitel C., Müller M. M. Visual, auditory and tactile stimuli compete for early sensory processing capacities within but not between senses. Neuroimage. 2014;97:224–235. doi: 10.1016/j.neuroimage.2014.04.024.

- Potter M. C., Chun M. M., Banks B. S., Muckenhoupt M. Two attentional deficits in serial target search: The visual attentional blink and an amodal task-switch deficit. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1998;24:979–992. doi: 10.1037//0278-7393.24.4.979.

- Pylyshyn Z. W., Storm R. W. Tracking multiple independent targets: Evidence for a parallel tracking mechanism. Spatial Vision. 1988;3:179–197. doi: 10.1163/156856888x00122.

- Raveh D., Lavie N. Load-induced inattentional deafness. Attention, Perception, & Psychophysics. 2015;77:483–492. doi: 10.3758/s13414-014-0776-2.

- Raymond J. E., Shapiro K. L., Arnell K. M. Temporary suppression of visual processing in an RSVP task: An attentional blink? Journal of Experimental Psychology: Human Perception and Performance. 1992;18:849–860. doi: 10.1037//0096-1523.18.3.849.

- Reed C. L., Klatzky R. L., Halgren E. What vs. where in touch: An fMRI study. Neuroimage. 2005;25:718–726. doi: 10.1016/j.neuroimage.2004.11.044.

- Rees G., Frith C., Lavie N. Processing of irrelevant visual motion during performance of an auditory attention task. Neuropsychologia. 2001;39:937–949. doi: 10.1016/s0028-3932(01)00016-1.

- Robinson C. W., Ahmar N., Sloutsky V. M. Evidence for auditory dominance in a passive oddball task. In: Ohlson S., editor. Proceedings of the 32nd Annual Conference of the Cognitive Science Society. Austin, TX: Cognitive Science Society; 2010. pp. 2644–2649.

- Robinson C. W., Chandra M., Sinnett S. Existence of competing modality dominances. Attention, Perception, & Psychophysics. 2016;78:1104–1114. doi: 10.3758/s13414-016-1061-3.

- Robinson C. W., Sloutsky V. M. Auditory dominance and its change in the course of development. Child Development. 2004;75:1387–1401. doi: 10.1111/j.1467-8624.2004.00747.x.

- Robinson C. W., Sloutsky V. M. When audition dominates vision. Experimental Psychology. 2013;60:113–121. doi: 10.1027/1618-3169/a000177.

- Santangelo V., Fagioli S., Macaluso E. The costs of monitoring simultaneously two sensory modalities decrease when dividing attention in space. Neuroimage. 2010;49:2717–2727. doi: 10.1016/j.neuroimage.2009.10.061.

- Serences J. T., Schwarzbach J., Courtney S. M., Golay X., Yantis S. Control of object-based attention in human cortex. Cerebral Cortex. 2004;14:1346–1357. doi: 10.1093/cercor/bhh095.

- Shapiro K. L., Raymond J., Arnell K. Attentional blink. Scholarpedia. 2009;4(6):3320.

- Sigman M., Dehaene S. Brain mechanisms of serial and parallel processing during dual-task performance. The Journal of Neuroscience. 2008;28:7585–7598. doi: 10.1523/JNEUROSCI.0948-08.2008.

- Sinnett S., Costa A., Soto-Faraco S. Manipulating inattentional blindness within and across sensory modalities. Quarterly Journal of Experimental Psychology. 2006;59:1425–1442. doi: 10.1080/17470210500298948.

- Sinnett S., Juncadella M., Rafal R., Azañón E., Soto-Faraco S. A dissociation between visual and auditory hemi-inattention: Evidence from temporal order judgements. Neuropsychologia. 2007;45:552–560. doi: 10.1016/j.neuropsychologia.2006.03.006.

- Sinnett S., Spence C., Soto-Faraco S. Visual dominance and attention: The Colavita effect revisited. Perception & Psychophysics. 2007;69:673–686. doi: 10.3758/bf03193770.

- Sokoloff L. Circulation and energy metabolism of the brain. Basic Neurochemistry. 1989;2:338–413.

- Soto D., Blanco M. J. Spatial attention and object-based attention: A comparison within a single task. Vision Research. 2004;44:69–81. doi: 10.1016/j.visres.2003.08.013.