Abstract

Resurgence is typically defined as an increase in a previously extinguished target behavior when a more recently reinforced alternative behavior is later extinguished. Some treatments of the phenomenon have suggested that it might also extend to circumstances where either the historic or more recently reinforced behavior is reduced by other non-extinction related means (e.g., punishment, decreases in reinforcement rate, satiation, etc.). Here we present a theory of resurgence suggesting that the phenomenon results from the same basic processes governing choice. In its most general form, the theory suggests that resurgence results from changes in the allocation of target behavior driven by changes in the values of the target and alternative options across time. Specifically, resurgence occurs when there is an increase in the relative value of an historically effective target option as a result of a subsequent devaluation of a more recently effective alternative option. We develop a more specific quantitative model of how extinction of the target and alternative responses in a typical resurgence paradigm might produce such changes in relative value across time using a temporal weighting rule. The example model does a good job in accounting for the effects of reinforcement rate and related manipulations on resurgence in simple schedules where Behavioral Momentum Theory has failed. We also discuss how the general theory might be extended to other parameters of reinforcement (e.g., magnitude, quality), other means to suppress target or alternative behavior (e.g., satiation, punishment, differential reinforcement of other behavior), and other factors (e.g., non-contingent versus contingent alternative reinforcement, serial alternative reinforcement, and multiple schedules).

Keywords: Resurgence, Choice, Relative value, Matching law, Extinction, Behavioral momentum

1. Introduction

Resurgence is typically defined as an increase in a previously extinguished behavior when a more recently reinforced behavior is also placed on extinction (e.g., Cleland et al., 2001; Epstein, 1985; Lattal and Wacker, 2015). The phenomenon is potentially clinically important because it is likely a source of relapse of problem behavior following widely used treatments involving differential reinforcement of alternative behavior (i.e., DRA; see Volkert et al., 2009, for discussion). In such treatments, a problem behavior is placed on extinction and a more appropriate alternative behavior is reinforced (e.g., a functional communication response). Resurgence is said to occur when the problem behavior increases as a result of omission of reinforcement for the alternative behavior during treatment lapses or when treatment ends. In addition to such undesirable outcomes, resurgence might also contribute to the generation of more positive behavioral effects. For example, the phenomenon might be involved when historically effective behavior recurs under changing circumstances to allow for appropriate adaptation, problem solving, and creativity (e.g., Epstein, 1985; Shahan and Chase, 2002). Thus, a more thorough understanding of resurgence could have far reaching implications for understanding how temporally distant past experiences provide a source of potential behavior (be it good or bad) under current conditions.

Despite the definition of resurgence above, both early (e.g., Epstein, 1985) and more recent (e.g., Lattal and Wacker, 2015) treatments of the phenomenon have suggested that it might extend to circumstances where either the historic or more recently reinforced behavior is reduced by other non-extinction related means (e.g., punishment, satiation, decreases in reinforcement rate). This broader view of resurgence is appealing because the recurrence of previous behavior under such conditions may indeed reflect the same general processes, and it also more easily accommodates potentially related clinical phenomena. The theory of resurgence developed here is consistent with this broader view of the phenomenon.

The purpose of this paper is to present a theory of resurgence in which the phenomenon is considered to result from the same processes generally thought to govern choice. In short, the general theory proposed here suggests that resurgence arises from changes in the relative values of two (or more) options across time: one that was historically more valuable and one that has been more recently valuable. The merits of pursuing a choice-based theory of resurgence are manifold. First, as we will more fully develop below, it is relatively straightforward to characterize behavior in resurgence preparations as resulting from an ongoing choice between a target and an alternative behavior. Second, a choice-based theory provides an account of resurgence that allows it to be incorporated into an overarching choice-based account of operant behavior, an account that has served as a cornerstone for the field. Third, the long tradition of well-developed quantitative theories of choice provides numerous insights into how the determinants of resurgence might be formalized quantitatively.

Although the theory we will present is grounded in the more general conception of resurgence discussed above (e.g., Epstein, 1985; Lattal and Wacker, 2015), most empirical data and the two dominant accounts of resurgence have focused on extinction-induced resurgence in the more restrictive sense. Thus, we will begin by reviewing these two accounts, specifically Behavioral Momentum Theory (Shahan and Sweeney, 2011) and Context Theory (see Trask et al., 2015, for a recent statement)–focusing primarily on their shortcomings. Next, we will provide a general description of a choice-based account and then provide an example of how that account might be formalized to provide a more specific quantitative model of extinction-induced resurgence. Finally, we will explore how a choice-based theory might be applied to other resurgence-inducing operations. Along the way, we will consider existing areas in need of additional research and novel predictions of the choice-based theory.

2. Behavioral Momentum Theory of Resurgence

Behavioral Momentum Theory (e.g., Nevin and Grace, 2000) provides a quantitative account of the persistence of operant behavior under conditions of disruption. The theory suggests response rates and response strength (i.e., resistance to change) are two separate aspects of behavior controlled by different processes. Response rates are governed by the contingent response-reinforcer relation, but resistance to change is governed by the Pavlovian discriminative stimulus-reinforcer relation. As a result, all sources of reinforcement within a discriminative-stimulus context, be they contingent on the target behavior, non-contingent, or even contingent on a different behavior, are predicted to contribute to the persistence of the target behavior under conditions of disruption. This prediction has been widely confirmed under a variety of circumstances (e.g., Nevin et al., 1990; Shahan and Burke, 2004; see Nevin and Shahan, 2011, for review).

The extension of Behavioral Momentum Theory to resurgence (Shahan and Sweeney, 2011) is based specifically on the augmented momentum model of extinction (Nevin and Grace, 2000). This model suggests that decreases in behavior during extinction result from increasingly disruptive effects across time of: a) terminating the contingency between a response and a reinforcer and, b) generalization decrement from removal of reinforcers from the context. The model suggests that experience with higher rates of reinforcement within a discriminative-stimulus context prior to extinction renders an operant response more resistant to the disruptive effects of extinction. Quantitatively that is,

| $\dfrac{B_t}{B_0} = 10^{\frac{-t(c + dr)}{r^{b}}}$ | (1) |

where Bt is the response rate at time t in extinction, B0 is the baseline response rate, and r is the rate of reinforcement within the context in baseline. The model has three free parameters: c is the suppressive effect of breaking the response-reinforcer contingency, d scales disruption associated with elimination of reinforcers from the situation (i.e., generalization decrement), and b is sensitivity to baseline reinforcement rate. As time in extinction increases, disruption increases (in the numerator of the right side of the equation), but is counteracted by previous experience with higher reinforcement rates in the context (in the denominator). Reinforcement in the context (i.e., r) includes all sources of reinforcement, regardless of whether they are contingent on the target response or not. From the perspective of this model, resistance to extinction is governed by the strength of the behavior, which is a power function (i.e., r^b) of the overall rate of reinforcement in the context of the pre-extinction baseline.
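As a rough illustration of how the disruption and strength terms trade off, the following Python sketch assumes the commonly cited form of the augmented model, Bt/B0 = 10^(-t(c + dr)/r^b). The function name and every parameter value are hypothetical and were chosen only to show the qualitative pattern; they are not fitted estimates.

```python
def augmented_extinction(t, r, c=1.0, d=0.001, b=0.5):
    """Proportion of baseline responding (Bt / B0) after t units of extinction.

    Assumed form: Bt / B0 = 10 ** (-t * (c + d * r) / r ** b).
    The default parameter values are illustrative assumptions, not fitted values.
    """
    disruption = t * (c + d * r)   # grows with time in extinction
    strength = r ** b              # power function of baseline reinforcement rate
    return 10 ** (-disruption / strength)


if __name__ == "__main__":
    # Two hypothetical baseline reinforcement rates (reinforcers per hour).
    for rate in (60, 240):
        curve = [round(augmented_extinction(t, rate), 3) for t in range(6)]
        print(f"r = {rate}: {curve}")
```

With these illustrative values, responding declines across time in extinction for both histories, but it declines more slowly after the richer baseline, which is the qualitative pattern the model is meant to capture.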

Shahan and Sweeney (2011) extended Eq. (1) to resurgence by suggesting that alternative reinforcement during extinction of a target behavior has two effects. First, alternative reinforcement serves as an additional source of disruption of the target behavior. Second, alternative reinforcement further strengthens the target behavior by serving as an additional source of reinforcement in the context. Thus,

| $\dfrac{B_t}{B_0} = 10^{\frac{-t(kR_a + c + dr)}{(r + R_a)^{b}}}$ | (2) |

where all terms are as in Eq. (1). The added variable Ra is the rate of alternative reinforcement during extinction and the added free parameter k scales the disruptive impact of the alternative reinforcement during extinction. Thus, the model has four free parameters. The inclusion of kRa increases the disruptive impact in the numerator, with higher rates of alternative reinforcement producing more suppression of the target behavior. When alternative reinforcement is removed during resurgence, kRa is zero and the target behavior increases as a result of the release from suppression. In addition, because Ra is included in the denominator, alternative reinforcement experienced during extinction also contributes to the future strength of the target behavior, and thus to resurgence.
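The release-from-suppression logic can be sketched in Python as follows, assuming the form Bt/B0 = 10^(-t(kRa + c + dr)/(r + Ra)^b). The split of Ra into a "current" value (numerator) and a "context" value (denominator) is a convenience of this sketch for representing the transition to the resurgence test, and all parameter values are hypothetical.

```python
def momentum_resurgence(t, r, ra_current, ra_context,
                        k=0.05, c=1.0, d=0.001, b=0.5):
    """Proportion of baseline target responding under the assumed form of Eq. (2).

    ra_current: alternative reinforcement rate in effect now (disruptor, numerator).
    ra_context: alternative reinforcement rate experienced during extinction
                (adds to strength, denominator).
    All default parameter values are illustrative assumptions.
    """
    disruption = t * (k * ra_current + c + d * r)
    strength = (r + ra_context) ** b
    return 10 ** (-disruption / strength)


if __name__ == "__main__":
    r, ra = 120, 120  # hypothetical reinforcers per hour
    # Last Phase 2 session: alternative reinforcement still available.
    phase2 = momentum_resurgence(5, r, ra_current=ra, ra_context=ra)
    # First test session: kRa drops to zero, but Ra stays in the denominator.
    phase3 = momentum_resurgence(6, r, ra_current=0, ra_context=ra)
    print(f"Phase 2 (t=5): {phase2:.4f}")
    print(f"Test    (t=6): {phase3:.4f}")
```

Under these assumed values, target responding is higher in the first test session than in the last Phase 2 session even though more time in extinction has elapsed, which is how the model produces resurgence.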

Although this quantitative model incorporated resurgence into a larger theoretical context and provided a reasonably good account of the data existing at the time, the theory has encountered difficulties with some of its core predictions (see Craig and Shahan, 2016; for a more thorough discussion). For example, both Sweeney and Shahan (2013b) and Craig and Shahan (2016) have found that target responding during extinction is in some cases more persistent when alternative reinforcement is available than when it is not (i.e., extinction alone). Such increases in the persistence of a target response during extinction plus an alternative source of reinforcement should not happen according to Eq. (2) because any source of alternative reinforcement should increase the numerator, and thus disruption. As a result, these findings raise serious questions about the adequacy of the conceptual foundations of Eq. (2) in terms of the processes linking alternative reinforcement to increases in disruption within the framework of the augmented model of extinction (i.e., Eq. (1)). Although the data from such experiments during tests for resurgence were generally consistent with the basic model prediction that higher rates of alternative reinforcement should generate greater increases in responding when they are removed, the conceptual foundation of the model with respect to what is responsible for these effects (release from greater disruption with higher Ra) appears to be incorrect.

In addition, from its inception Eq. (2) has had problems with respect to how to incorporate the proposed added response-strengthening effects of alternative reinforcement (i.e., Ra in the denominator). This difficulty is rooted in its forebear, Eq. (1). Specifically, in Eq. (1), the pre-extinction rate of reinforcement experienced in baseline is carried over unchanged into extinction because r remains unchanged. As a result, decreases in responding during extinction are driven only by the growth of the disruption term across extinction. In extending the model to resurgence with Eq. (2), Shahan and Sweeney (2011) followed this same logic and assumed that the alternative rate of reinforcement (i.e., Ra) was added to the contextual reinforcement conditions (i.e., r + Ra) and remained there with the transition to the resurgence test. However, two problems arise from this assumption.

First, it remains unclear how one should incorporate additional changes in the rate of alternative reinforcement that might occur across the course of extinction. For example, both Sweeney and Shahan (2013b) and Winterbauer and Bouton (2012) examined the effects of alternative-reinforcement thinning on resurgence. In such thinning procedures, the rate of alternative reinforcement is reduced across sessions of extinction of the target behavior. Applying the same logic as above when Ra was added to the denominator, one might consider adding each subsequent alternative-reinforcement rate. But doing so would lead to the absurd prediction that such decreases in alternative-reinforcement rate would produce greater response strength (and greater resurgence) than a situation where the original higher rate of alternative reinforcement is maintained throughout.

Second, the assumption of the additivity of baseline reinforcement rates and alternative-reinforcement rates is somewhat odd in the first place. If response strength is driven by the Pavlovian stimulus-reinforcer relation between a discriminative stimulus context and reinforcers obtained in that context, it is strange to assume that replacing reinforcement of a target response with reinforcement for an alternative response should increase response strength. For example, if the target behavior is reinforced on a VI 15-s schedule (i.e., 240 reinforcers/h) during baseline and then an alternative behavior is reinforced on a VI 15-s schedule during extinction of the target, why should the Pavlovian stimulus-reinforcer relation be assumed to be associated with 240 + 240 = 480 reinforcers/h? The overall rate of reinforcement in the context has not changed, and certainly it has not doubled. This issue arises from broader questions that have never been answered about how the original augmented model (Eq. (1)) should be applied across conditions with changes in reinforcement rate (see Craig et al., 2015; Shahan and Sweeney, 2011; for discussion). At present, an alternative way of incorporating changes in alternative-reinforcement rate across extinction that does not fundamentally alter the basic logic of the augmented model has not suggested itself. As a result, attempting to fix the momentum-based model of resurgence would appear to first require fixing the basic augmented model of extinction. Although it could be possible to generate a version of the augmented model that better characterizes changes in reinforcement rates across conditions, such a modified model would not address the problem with the other core assumption of the resurgence model that alternative reinforcement should always serve as an additional source of disruption (e.g., Sweeney and Shahan, 2013b; Craig and Shahan, 2016).
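The arithmetic behind the VI 15-s example can be made explicit with a short Python sketch. The helper name and the value of the sensitivity parameter b are hypothetical, and the strength term (r + Ra)^b is assumed from the denominator described for Eq. (2).

```python
def vi_reinforcers_per_hour(mean_interval_s):
    """Programmed reinforcement rate of a VI schedule, in reinforcers per hour."""
    return 3600 / mean_interval_s


baseline_rate = vi_reinforcers_per_hour(15)      # target on VI 15 s in baseline
alternative_rate = vi_reinforcers_per_hour(15)   # alternative on VI 15 s in extinction

# What the additive assumption (r + Ra) feeds into the strength term.
additive_rate = baseline_rate + alternative_rate

# Rate actually programmed in the context during either phase.
rate_in_either_phase = baseline_rate

b = 0.5  # hypothetical sensitivity parameter
strength_inflation = (additive_rate / rate_in_either_phase) ** b

if __name__ == "__main__":
    print(f"baseline: {baseline_rate:.0f}/h, additive assumption: {additive_rate:.0f}/h")
    print(f"strength inflated by a factor of {strength_inflation:.3f} when b = {b}")
```

The sketch only restates the point of the paragraph: the additive assumption doubles the rate entering the strength term even though the programmed rate of reinforcement in the context is the same in both phases.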

In short, in addition to the conceptual difficulties arising from the empirical failures of the model with respect to how alternative reinforcement is treated as a disruptor in the numerator of Eq. (2), there are additional conceptual difficulties about how alternative reinforcers are treated as a source of additional response strength in the denominator. As a result, both of the core assumptions made to extend behavioral momentum to resurgence appear to be difficult to sustain without a fundamental reworking of the theory, including the progenitor augmented model of extinction (i.e., Eq. (1); see Craig and Shahan, 2016; for full discussion).

The above issues notwithstanding, Eq. (2) also fails to provide any account of another outcome sometimes observed in experiments on resurgence. Eq. (2) predicts that as soon as alternative reinforcement is removed during a resurgence test, target responding increases and then decreases across further sessions of testing in extinction. This outcome does often occur in the literature (e.g., Sweeney and Shahan, 2013b; see Shahan and Sweeney, 2011, for review). However, target responding during resurgence tests often also occurs at a lower rate in early sessions of extinction of the alternative behavior, before increasing and again decreasing with additional sessions (see Podlesnik and Kelley, 2015; for review). In its present form, Eq. (2) has no means to account for such bitonic functions across sessions of resurgence testing.

Thus, although the Behavioral Momentum-Based theory of resurgence has been useful for generating research and for providing a broader theoretical context in which to frame resurgence, the problems with the core theoretical assumptions of the model, its empirical failings, and broader empirical problems for Behavioral Momentum Theory (see Craig et al., 2014, for review) suggest that an alternative approach may be more useful in generating a viable quantitative theory of resurgence. This conclusion is bolstered by the fact that the theory as developed thus far is only applicable to extinction-induced resurgence, and thus fails to provide insights into resurgence in the broader sense described in the Introduction section above.

3. Context Theory

The contextual account of resurgence is based upon a more general approach to relapse phenomena (e.g., renewal, spontaneous recovery, reinstatement) that characterizes post-extinction increases in operant or Pavlovian responding as resulting from retrieval from memory of previously learned associations under ambiguous circumstances (e.g., Bouton, 2002, 2004). Specifically, when an association is formed between either a conditional stimulus (CS) and an unconditional stimulus (US) or between a response and an outcome and is then followed by extinction, the meaning of the CS or the response becomes ambiguous as a result of these conflicting associations. Contextual stimuli serve as occasion setters for disambiguating these conflicting memories such that contexts that are more similar to the initial training context promote retrieval of the original learning, but conditions more similar to the extinction context promote retrieval of extinction learning. Further, the approach suggests that new inhibitory learning occurs during extinction. In the case of operant behavior (on which we will focus here), this is learning to withhold responding. This new inhibitory learning in extinction is suggested to be highly contextually dependent, such that changes in the contextual stimulus conditions produce failures of this new learning to generalize, thus resulting in increases in responding. It is important to note that the theory does not specify how the original excitatory conditioning or the inhibitory conditioning during extinction occurs (see McConnell and Miller, 2014). Instead, it defers to and depends upon other traditional associative theories with a number of theoretical complexities and uncertainties of their own (e.g., see Gallistel and Gibbon, 2002, for discussion).

Regardless, the core phenomenon in the contextual approach in general and as applied to resurgence is renewal. In a typical renewal procedure responding is established within one context (i.e., Context A; e.g., combinations of distinct flooring, scents, and chamber markings with rats) and then extinguished in a different context (i.e., Context B). Renewal is said to occur when either a return to Context A (i.e., ABA renewal) or testing in a novel Context C (i.e., ABC renewal) produces an increase in responding relative to the final level of responding in Context B. The short version of the contextual account of resurgence is that it is simply a form of renewal.

Originally, Bouton and Swartzentruber (1991) suggested that resurgence is a form of ABA renewal, but more recently Bouton and colleagues have suggested that it might be more appropriate to consider it a form of ABC renewal (e.g., Bouton et al., 2012; Trask et al., 2015; Winterbauer and Bouton, 2010). The idea is that resurgence is driven by changing contextual stimuli generated by reinforcer deliveries across conditions. Specifically, during baseline training of the target response, reinforcers are provided for the target response (Context A). With the transition to extinction of the target response and reinforcement of the alternative behavior, reinforcers now become available for the alternative, and these reinforcers serve as the context for learning to inhibit the target response (i.e., Context B). When the alternative behavior is also placed on extinction, this constitutes a novel context (i.e., Context C) characterized by the absence of reinforcement for either behavior. Thus, the hypothesized learning to withhold the target behavior that occurred in Context B fails to generalize to Context C, and target responding increases as a result of retrieval of the original association. Using this framework, Bouton and colleagues have argued that all data within the resurgence literature can be explained (see Trask et al., 2015) by specifying how various experimental manipulations in the literature might be characterized as changes in context.

Although the contextual account provides a general framework within which to place resurgence and may appear to provide a comprehensive explanation of resurgence data, the nature of the account raises serious concerns for us. In essence, the account suggests that any time resurgence occurs, the increase in behavior can simply be attributed to context change. As a result, it is difficult to see the account as an explanation, as opposed to simply a post-hoc description of experimental outcomes. A wide variety of changes in the external or internal environment of the organism (e.g., overt stimuli, emotions, mood, deprivation state, expectation of events, time, reinforcers and their absence, drug states) have been characterized as changes in context (cf. Bouton, 2002; McConnell and Miller, 2014). In practice, such changes in context must be inferred from the increases in behavior they seek to explain, even if they are explicitly arranged with distinctive stimuli, but especially if they are not. As one example, there has been considerable research on the rate and distribution of alternative reinforcers across sessions of extinction on resurgence (e.g., Craig and Shahan, 2016; Schepers and Bouton, 2015; Sweeney and Shahan, 2013b; Winterbauer and Bouton, 2010, 2012). The context approach interprets the effects of such manipulations in terms of the contextual changes produced by the changing reinforcer rates–with larger reinforcement rate changes constituting greater context changes (Bouton and Trask, 2016). The difficulty is not that reinforcer rate might be a discriminable feature of the environment, but that any increase in target behavior is said to result from such changes in context and any failure to see expected increases is attributed to failures of those changes to be discriminable enough to constitute a context change for the organism. 
Conversely, manipulations that are predicted to reduce context change but nevertheless generate similar amounts of resurgence are attributed to unanticipated context changes associated with those manipulations (see especially Winterbauer and Bouton, 2012). When applied in this fashion and without any formal specification of the factors that would allow one to say definitively what should constitute a context change, the context account does not always allow even clear directional predictions. Thus, whatever the virtues of the contextual account with respect to generality, it is difficult to consider it a viable theory of resurgence given its lack of specificity, precision, and falsifiability (see also McConnell and Miller, 2014; Podlesnik and Kelley, 2015; for related critiques).

However, it is worth noting that Bai et al. (2016) have attempted to begin to quantify some aspects of the context approach with respect to resurgence under limited conditions. Full development of a more general quantitative version of Context Theory might lead to a more viable version of the account. Until then, the notion of context might be viewed as serving as an all-purpose conceptual free parameter with nearly no constraints.

Even with its current level of flexibility, it is notable that Context Theory has also failed to address the bitonic target response-rate functions sometimes obtained in resurgence experiments. It is not immediately apparent why a contextual change engendered by removal of alternative reinforcement would sometimes be weaker (generating less resurgence) in earlier sessions of testing for resurgence, only to then be followed by increases in context change, and then again by decreases in context change. Regardless, as far as we know, Context Theory has never been used to propose any functional form of the response-rate function across sessions of resurgence testing. In addition, the vast majority of experiments inspired by and reported within the framework of Context Theory have conducted only a single session of resurgence testing (e.g., Bouton and Schepers, 2014; Schepers and Bouton, 2015; Winterbauer and Bouton, 2010; Winterbauer et al., 2013). Thus, it is impossible to evaluate both what the obtained response-rate functions might have been and how Context Theory might be applied to such data.

Finally, as with the Behavioral Momentum-Based Theory, Context Theory is built upon the assumption that resurgence is an extinction-related phenomenon. The application of the general contextual approach is based on the assertion that the new learning that occurs during extinction of a target response is highly contextually dependent, and thus susceptible to failures to generalize. The broader treatment of resurgence discussed above in the Introduction section and developed more fully in the next section suggests that extinction-induced resurgence is a specific instance of a broader phenomenon. Given the flexibility and lack of specificity of the contextual approach, it is not difficult to imagine how context change might be imposed upon and used as an explanatory construct for these more general conditions. However, in our opinion, doing so would likely further weaken the apparent value of the approach by making it more obvious that by explaining everything, it might actually explain very little.

Nevertheless, as will become apparent below, the Resurgence as Choice model (RaC) developed here does share some similarities with Context Theory. Most importantly, RaC involves the comparison of changing relative values of reinforcement sources across time. For such relative valuation comparisons to be made, the properties of the outcomes determining value must be discriminated and they must be remembered across time. However, instead of these valuations serving to disambiguate uncertainties about which of multiple conflicting associations are relevant, RaC suggests that the relative valuations are directly responsible for how an organism allocates its behavior to the available options (i.e., choice).

4. The Resurgence as Choice (RaC) model

The general approach to resurgence proposed here is that the probability of some target behavior is a function of the value of the outcomes historically obtained from that option relative to the value of the outcomes obtained more recently from an alternative option. Thus,

| $p_T = \dfrac{V_T}{V_T + V_{Alt}}$ | (3) |

where pT is the conditional probability of the target behavior given that a response occurs and VT and VAlt represent the current values of the target and alternative options. As is likely obvious, this expression is a restatement of the concatenated matching law (Baum and Rachlin, 1969). The concatenated matching law is an extension of Herrnstein’s (1961) matching law, which suggested that the relative rate of responding to two mutually exclusive response options (B1 and B2) is equal to the relative rate of reinforcement obtained at those two options (R1 and R2), and thus,

| $\dfrac{B_1}{B_1 + B_2} = \dfrac{R_1}{R_1 + R_2}$ | (4) |

Baum and Rachlin (1969) extended this formulation by suggesting that other parameters of the outcomes (e.g., magnitude, immediacy, quality, punishment, etc.) could be incorporated into the matching law by subsuming them into the construct of value (i.e., V) such that,

| $\dfrac{B_1}{B_1 + B_2} = \dfrac{V_1}{V_1 + V_2}$ | (5) |

Thus, from this perspective the relative allocation of behavior to two options is governed by relative value of the two options, with value determined by the concatenated effects of the parameters of reinforcement for those options. The expression B1/(B1 + B2) is really just the conditional probability of B1, and can be written as pB1, as we have done in Eq. (3) above.1

A voluminous experimental and theoretical literature has been generated by matching theory since it was first proposed nearly 60 years ago (see Commons et al., 1982; Davison and McCarthy, 1988; Herrnstein et al., 1997; for book-length treatments). This literature is a rich source of suggestions about how the determinants of choice can be formulated quantitatively, what processes might be responsible for matching, and how matching theory might be extended to a vast array of choice-related situations. Many of these developments could prove useful in understanding resurgence and for generating quantitative models addressing the details of specific experimental arrangements. Indeed, in further exploring RaC below, we will make use of some of these previous developments, but many more are available for potential future refinement and extensions to additional circumstances.

RaC as presented at the general level in Eq. (3) is really just a conceptual framework within which to view resurgence. Because allocation to an historically productive target option (i.e., pT) is governed by the value of the outcomes produced by that option (VT) relative to those produced by an alternative option (VAlt), any decrease in VAlt (all else being equal) would be expected to produce an increase in pT. The assertion of RaC is that resurgence is the result of just such an increase. To understand resurgence within this framework, consider an example. Imagine a rat responding on one lever on a variable-interval (VI) 15-s schedule of reinforcement. Next imagine that an additional lever is introduced that also produces reinforcement on a VI 15-s schedule while the initial lever continues to produce reinforcement on a VI 15-s schedule. Given what we know about choice and the matching law (Eq. (5)), no one would be surprised to see responding decrease on the initial lever, nor to see that if the second lever is then placed on extinction, responding on the initial lever would increase. What has seemed special about resurgence in the past is that the initial target behavior is placed on extinction with the introduction of the second lever. Nevertheless, if the initial target option maintains some residual value (VT) across extinction, resurgence can be viewed as being similar to the example in which reinforcement remained for the target. Further, any other manipulation applied to first decrease the value of the target option (VT) and then the value of the alternative option (VAlt) might be expected to produce similar effects in both examples. From this conceptual framework, the way to formalize resurgence is to determine how various outcomes are related to value and how changes in those outcomes affect the values of the options across time.
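The two-lever example can be sketched numerically in Python using the relative-value expression pT = VT / (VT + VAlt). Two simplifications are assumptions of this sketch rather than claims of the theory: value is equated with reinforcement rate in the first case, and the residual values in the second case are arbitrary numbers picked for illustration.

```python
def p_target(v_target, v_alt):
    """Conditional probability of the target response given relative value."""
    return v_target / (v_target + v_alt)


if __name__ == "__main__":
    # Case 1: both levers on VI 15 s (240 reinforcers/h), value taken as rate.
    both_reinforced = p_target(240, 240)
    # The second lever is then extinguished; its value is assumed to fall to zero.
    alt_extinguished = p_target(240, 0)
    print(f"both levers reinforced: {both_reinforced:.2f}, "
          f"alternative extinguished: {alt_extinguished:.2f}")

    # Case 2: target already extinguished but retaining a small residual value
    # (hypothetical value of 10) while the alternative is still rich.
    during_treatment = p_target(10, 240)
    # The alternative is then devalued to the same hypothetical residual level.
    after_devaluation = p_target(10, 10)
    print(f"during alternative reinforcement: {during_treatment:.2f}, "
          f"after devaluation: {after_devaluation:.2f}")
```

In both cases the target's share of responding rises only because VAlt falls, which is the sense in which resurgence is treated here as an ordinary change in relative value.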

In the absence of formalization of how various parameters of the outcomes obtained at the options determine value across time, RaC is at the same level of specificity and flexibility as Context Theory. Indeed, in its most general form, the concatenated matching law upon which RaC is based is arguably tautological and unfalsifiable (Rachlin, 1971)–like Context Theory. Similar to the dependence of Context Theory on post-hoc inferences of context change based on changes in responding, RaC at this level can infer post-hoc changes in the relative values of VT and VAlt across time based on changes in pT. In short, without more specificity, RaC is only a framework for generating more specific, quantitative hypotheses about the processes at work. By generating and testing such quantitative statements about the processes at work, this approach could lead to recognition of failures in our understanding, and thus, to future refinements. As a demonstration of this approach, we will next provide a sketch of one way in which resurgence in the typical extinction-induced sense might be formalized.

4.1. The Temporal Weighting Rule (TWR)

In a typical experiment to examine extinction-induced resurgence, three phases are used. In Phase 1, a target behavior (e.g., left lever press) is reinforced according to some schedule of reinforcement (e.g., a VI 15-s schedule). In Phase 2, the target behavior is placed on extinction and simultaneously a different, alternative behavior (e.g., right lever press) is made available and reinforced on some schedule (e.g., a VI 15-s schedule). In Phase 3, the alternative behavior is also placed on extinction and resurgence is said to occur when the initial target behavior increases in frequency compared to Phase 2. All else being equal, these changing rates of the outcomes for the two options (i.e., reinforcement rates) would be a likely determinant of the values of the two options from the standpoint of RaC.

In attempting to extend matching theory to extinction-induced resurgence, Cleland et al. (2001) noted a difficulty associated with incorporating reinforcement rates associated with the two options (R1 and R2). Specifically, during Phase 2, the reinforcement rate associated with the target behavior is zero, as is the reinforcement rate for both the target and alternative behaviors in Phase 3. Thus, a straightforward application of the matching law (e.g., Eq. (4)) would fail to make any predictions about the allocation of behavior because both sides of the equation would equal zero in Phases 2 and 3. What is required to make such a framework feasible is a means to incorporate how the experience of past reinforcement is carried forward in time and combined with present circumstances (in this case, extinction) to determine value. Although there are many approaches to this issue (e.g., Davis et al., 1993; Davison and Hunter, 1979; Killeen, 1981), we have chosen to use the Temporal Weighting Rule (TWR; see Devenport and Devenport, 1994; Mazur, 1996; for reviews) for both empirical and theoretical reasons that will be discussed more fully below.

The TWR provides a means to calculate how organisms weight varying past experiences as a function of the relative recency of those experiences. Specifically, the rule suggests:

$$w_x = \frac{1/t_x}{\sum_{i=1}^{n} 1/t_i} \tag{6}$$

where wx is the weight to be applied to a particular past experience. The numerator of this expression represents the recency of that particular past experience with tx being the time between the past experience and the present (tx is calculated as T−τx + 1, where T is the present time and τx is the time point for which tx is being calculated–ti is calculated similarly). Thus, more recent experiences (i.e., smaller tx) receive greater weighting (i.e., wx). The denominator is simply the sum of all the recencies of past experiences, some number n of which are under consideration. Thus, the rule provides a weighting for each of a series of experiences across time (i.e., w1, w2,... wn), and because each recency is divided by the sum of all the recencies in the series, these weightings always sum to 1. Each wx represents, therefore, a relative recency.2
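As a rough illustration of Eq. (6), the following Python sketch computes the weights for a series of n sessions. The function name `twr_weights` and the oldest-first ordering of the returned list are illustrative assumptions, not part of the original model description.

```python
def twr_weights(n_sessions):
    """Return Temporal Weighting Rule weights for sessions 1..n (oldest first)."""
    # For session tau_x at present time T, t_x = T - tau_x + 1,
    # so the most recent session has t_x = 1 and recency 1 / t_x = 1.
    recencies = [1.0 / (n_sessions - tau + 1) for tau in range(1, n_sessions + 1)]
    total = sum(recencies)  # denominator of Eq. (6)
    return [r / total for r in recencies]


weights = twr_weights(5)
print([round(w, 3) for w in weights])  # [0.088, 0.109, 0.146, 0.219, 0.438]
print(round(sum(weights), 6))          # 1.0
```

With five sessions, the most recent session carries a little under half of the total weight, and the remaining weight is spread hyperbolically over the older sessions.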

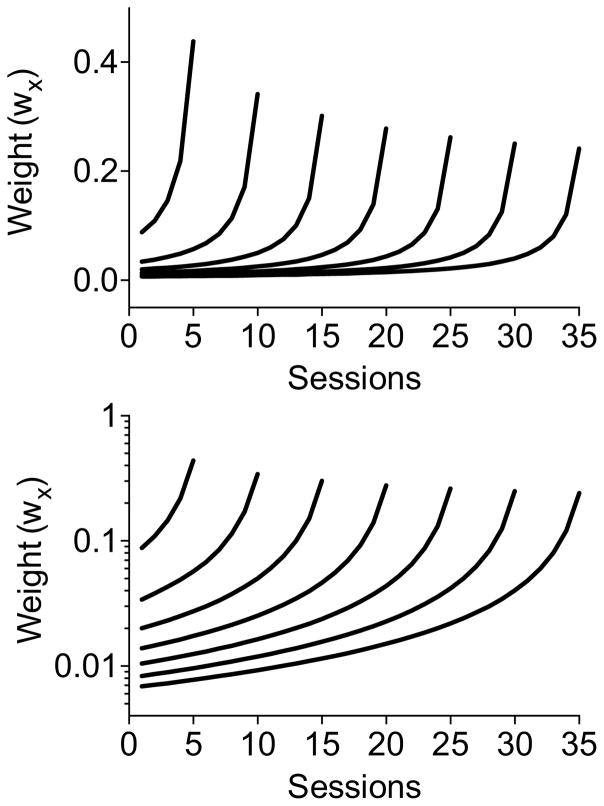

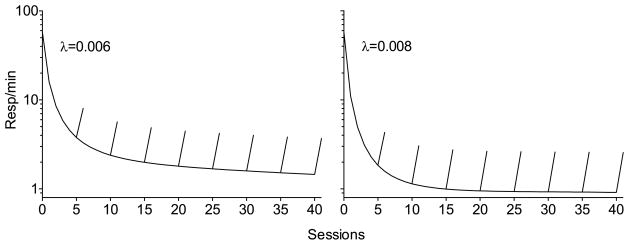

The top panel of Fig. 1 shows weightings for examples of series of experiences at different time points across 35 sessions. Specifically, in this example, tx is measured in number of sessions (i.e., one every day), with the most recent session having tx = 1 (the far right end of each series), the second most recent having tx = 2, and so on. For visual clarity, example weightings are provided only for series after every 5 sessions but, of course, a weighting function would be associated with every session increment. These functions characterizing how wx decays from the present session to sessions in the past are hyperbolic.3 As such, the weights associated with the most recent sessions initially decline quickly as they recede into the past (moving to the left on the x-axis), but the functions decelerate such that weightings for sessions from the more distant past decline more slowly. As a result, recent experience can have a relatively large impact, but the effects of the more distant history tend to linger for a long time. To make this feature of the weighting functions more clear, the bottom panel of Fig. 1 shows the same functions as the top, but with a logarithmic y-axis.

Fig. 1.

The top panel shows sample weighting functions generated by Eq. (6) (i.e., the Temporal Weighting Rule). Functions are presented after every five sessions. The bottom panel shows the same functions with a logarithmic y-axis.

To determine the value (i.e., V) of an option, the outcome experienced for that option at each time point in the past (i.e., Ox) is simply multiplied by the weighting for that time point (i.e., wx), and then all of the weighted outcomes are summed (e.g., Devenport and Devenport, 1994; Devenport et al., 1997; Mazur, 1996) such that:

$$V = \sum_{x=1}^{n} w_x O_x \tag{7}$$

If more than one option is available, Eqs. (6) and (7) are applied to the series of outcomes experienced at each of the options and a value is calculated for each option (i.e., V1 & V2). Probability of choosing an option is then determined by calculating the relative values of the options [i.e., p1 = V1/(V1 + V2) as in Eqs. (3) and (5) above].
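The calculation described here can be sketched in a few lines of Python. This is only a minimal illustration: the helper names (`option_value`, `choice_probability`), the example outcome series, and the choice to return 0.5 when both values are zero are assumptions made for the sketch.

```python
def twr_weights(n_sessions):
    """Temporal Weighting Rule weights (Eq. 6), oldest session first."""
    recencies = [1.0 / (n_sessions - i) for i in range(n_sessions)]
    total = sum(recencies)
    return [r / total for r in recencies]


def option_value(outcomes):
    """Eq. (7): sum of past outcomes, each multiplied by its recency weight."""
    weights = twr_weights(len(outcomes))
    return sum(w * o for w, o in zip(weights, outcomes))


def choice_probability(v1, v2):
    """Relative value of option 1, i.e., p1 = V1 / (V1 + V2)."""
    total = v1 + v2
    # Assumption for this sketch: indifference when neither option has any value.
    return v1 / total if total > 0 else 0.5


# Hypothetical outcome series (oldest first): option 1 paid off early, option 2 recently.
v1 = option_value([10, 10, 10, 0, 0])
v2 = option_value([0, 0, 0, 10, 10])
p1 = choice_probability(v1, v2)
print(round(v1, 2), round(v2, 2), round(p1, 3))
```

Although option 1 paid off in three of five sessions, the heavier weighting of recent sessions leaves it with the lower value, so p1 falls below 0.5.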

Devenport and colleagues have shown that, thus applied, the TWR accounts well for the foraging behavior of a variety of organisms in situations with variable patch outcomes across time (e.g., Devenport and Devenport, 1993, 1994; Devenport et al., 1997). Devenport et al. (1997) have also shown that the TWR can account for spontaneous recovery (i.e., an increase in extinguished responding with the simple passage of time).4 Further, Mazur (1996) has demonstrated that the rule can be extended to account for the spontaneous recovery of previous response allocations in choice situations with transitory changes in relative reinforcement rates (see also Gallistel et al., 2001). These findings are important for two reasons. First, as both Devenport and colleagues and Mazur discuss, the TWR has provided an account of such findings where other approaches fail (e.g., an exponentially weighted moving average, Killeen, 1982). Second, the application of the TWR to spontaneous recovery demonstrates that the approach can provide an account of one of the core relapse phenomena. Thus, it seems that the TWR could be promising as a means to account for resurgence.

4.2. The TWR and extinction-induced resurgence

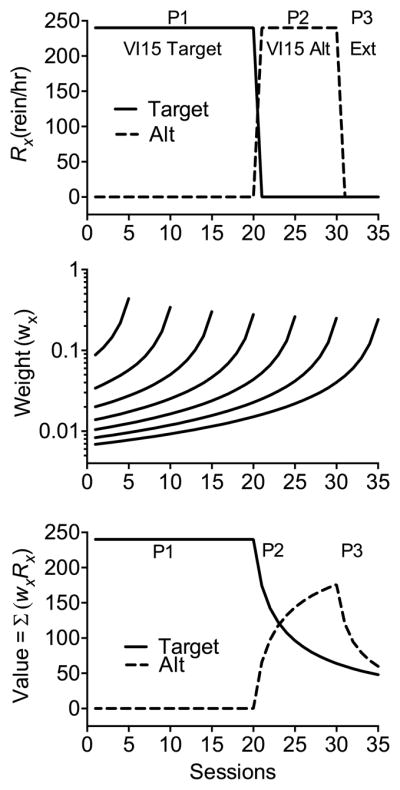

To understand how the TWR might be applied to extinction-induced resurgence, consider Fig. 2. The top panel displays an example of changing reinforcement rates across the typical three phases in a resurgence experiment. The target behavior is reinforced on a VI 15-s schedule in Phase 1 for 20 sessions. Next, in Phase 2, the target behavior is placed on extinction for 10 sessions and the alternative behavior is reinforced on VI 15 s. In Phase 3, both the target and alternative behaviors are extinguished for 5 sessions. The resulting reinforcement rates (i.e., reinforcers/h) depicted in the figure are the input to the TWR across time. The middle panel of Fig. 2 shows sample weighting functions generated by Eq. (6). Again, functions are presented for only every 5 sessions for visual clarity, but each session increment would have a corresponding new weighting function. To obtain values for the target (VT) and alternative (VAlt) options across sessions, the weighting for each session (wx from Eq. (6)) defined by the current session’s weighting function is applied to the past reinforcement rates for the target (RxT) and alternative (RxAlt) and then summed across all sessions for that weighting function:

$$V_T = \sum_{x=1}^{n} w_x R_{xT}, \qquad V_{Alt} = \sum_{x=1}^{n} w_x R_{xAlt} \tag{8}$$

Fig. 2.

The top panel shows arranged reinforcement rates for the target and alternative options in a sample resurgence experiment. The middle panel shows sample weighting functions as in Fig. 1. The bottom panel shows the value functions for the target and alternative options across sessions resulting from the application of Eq. (8). P1 = Phase 1, P2 = Phase 2, and P3 = Phase 3.

The resulting values for VT and VAlt across sessions are plotted in the bottom panel of Fig. 2. Note that at the beginning of Phase 2 when the target is placed on extinction, VT drops quickly at first and then more slowly as time progresses. In addition, VAlt increases with the introduction of reinforcement for the alternative behavior. Finally, like VT in Phase 2, VAlt decreases quickly at first in Phase 3 when the alternative is placed on extinction, and then more slowly as sessions continue. Because these changes in value in Phases 2 and 3 are of primary interest, the top panel of Fig. 3 shows VT and VAlt across these sessions. Most importantly, the bottom panel of Fig. 3 shows how the values of VT and VAlt are translated into the probability of the target behavior (i.e., pT) according to Eq. (3) above. Note that pT decreases across sessions of Phase 2, but when the alternative behavior is also placed on extinction in Phase 3, pT increases across sessions as a result of increases in the relative value of VT. From the perspective of RaC, this increase in pT is resurgence. The reason that relative values change in this way is the hyperbolic form of the weighting function across sessions and the slower decline of value it generates across increasing sessions of extinction. Thus, the history of reinforcement for the target option in Phase 1 is carried forward as VT into Phases 2 and 3 where its lingering impact can be revealed when there is a decrease in VAlt.5 These changes in value across time and the increase in pT (i.e., resurgence) are a natural outcome of the TWR.
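The three-phase example can be reproduced approximately with a short Python sketch. It assumes that a VI 15-s schedule yields 240 reinforcers/h, and that each option's series of reinforcement rates spans every session from the start of Phase 1, with zeros entered for sessions in which the option was unavailable or on extinction. Both are simplifying assumptions of this sketch rather than details confirmed by the text.

```python
def twr_value(rates):
    """Value of an option: TWR-weighted sum of its past reinforcement rates."""
    n = len(rates)
    recencies = [1.0 / (n - i) for i in range(n)]  # oldest first; most recent t_x = 1
    total = sum(recencies)
    return sum(r / total * rate for r, rate in zip(recencies, rates))


VI15 = 240.0  # reinforcers/h, assuming 3600 s / 15 s
# Phase 1: 20 sessions, Phase 2: 10 sessions, Phase 3: 5 sessions.
target_rates = [VI15] * 20 + [0.0] * 15
alt_rates = [0.0] * 20 + [VI15] * 10 + [0.0] * 5

p_target = []
for session in range(1, 36):
    v_t = twr_value(target_rates[:session])
    v_alt = twr_value(alt_rates[:session])
    p_target.append(v_t / (v_t + v_alt))  # relative value of the target option

for session in (30, 31, 35):
    print(session, round(p_target[session - 1], 3))
```

Under these assumptions, pT falls to roughly 0.27 by the last session of Phase 2, then rises to about 0.33 in the first Phase-3 session and about 0.44 by the fifth.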

Fig. 3.

The top panel shows value functions for the target and alternative options across Phases 2 & 3 as presented in Fig. 2. The bottom panel shows changes in the probability of the target response across sessions generated by using these daily values in Eq. (3).

4.3. Scaled Temporal Weighting Rule (sTWR)

Although application of the TWR to changes in the reinforcement conditions across a typical resurgence paradigm might provide a basic framework for understanding how the probability of a target response varies across phases, one aspect of Fig. 3 suggests that this framework might be incomplete. Specifically, the decreases in pT across Phase 2 appear to be too gradual, and pT remains rather high at the end of Phase 2. Real data from resurgence experiments often show rather precipitous declines to near-zero levels of the target behavior across Phase 2. In Fig. 3, the decreases in pT across Phase 2 are strictly dictated by the TWR as formalized in Eq. (6). Eq. (6) asserts that the weighting applied to any past experience (i.e., wx) is determined only by the relative recency of that experience. The equation includes no means to account for potential variations in how immediacy might differentially impact an organism’s weighting of the past as a result of either individual differences or past and present experimental parameters. However, a simple modification of the TWR can supply the approach with additional flexibility to incorporate such potential differences in how recency might impact the weighting of past experiences. Specifically, we propose a scaled temporal weighting rule (sTWR) in which recencies are scaled such that:

$$w_x = \frac{1/t_x^{c}}{\sum_{i=1}^{n} 1/t_i^{c}} \tag{9}$$

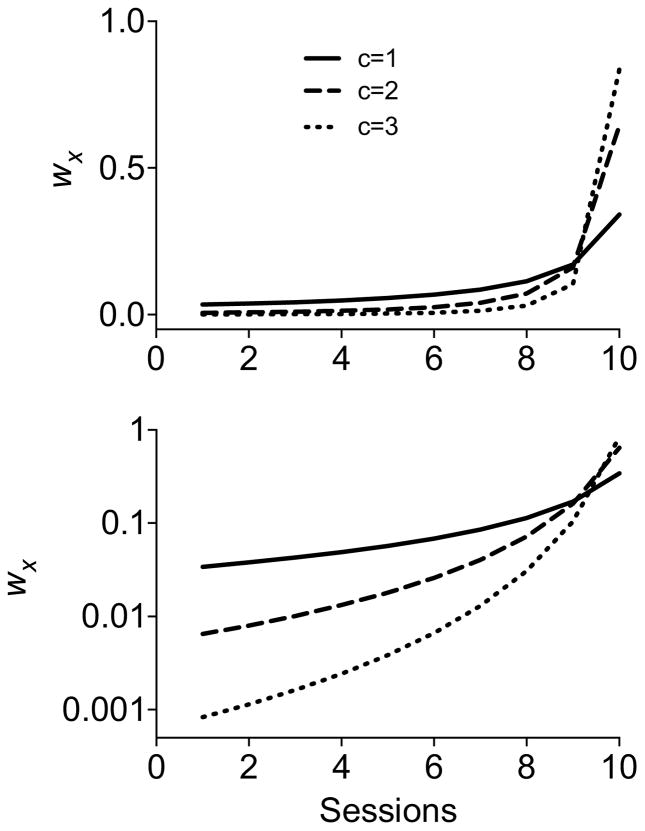

where all terms are as in Eq. (6), and the added term c is a scaling exponent on the time from a previous experience to the present. The top panel of Fig. 4 shows how variations in c, what we will call the currency term, impact wx across ten sessions. When c = 1, Eq. (9) is simply the unscaled TWR from Eq. (6). As c increases, additional weight is given to more recent experiences and less weight is given to experiences from the more distant past. Because all recencies are scaled in Eq. (9), the weightings across all the experiences under consideration continue to sum to 1, as was true for Eq. (6); only the distribution of these weightings across the past is affected by increases in c. Importantly, the weighting functions generated by Eq. (9) across sessions maintain the basic hyperbolic decreases generated by the TWR.6 The bottom panel of Fig. 4 shows the same functions as the top, but with a logarithmic y-axis.
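A small Python sketch may help make the effect of the currency term concrete. The function name `stwr_weights` and the particular c values compared are illustrative assumptions.

```python
def stwr_weights(n_sessions, c=1.0):
    """Scaled TWR weights (Eq. 9), oldest session first; c = 1 gives the unscaled TWR."""
    # Each recency 1 / t_x is raised to the power c before normalizing.
    recencies = [1.0 / (n_sessions - i) ** c for i in range(n_sessions)]
    total = sum(recencies)
    return [r / total for r in recencies]


for c in (1.0, 2.0, 3.0):
    w = stwr_weights(10, c)
    # Weight on the most recent and on the oldest of ten sessions.
    print(c, round(w[-1], 3), round(w[0], 5))
```

As c grows, the weight on the most recent session increases and the weight on the oldest session shrinks, while the weights still sum to 1.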

Fig. 4.

The top panel shows weighting functions generated by Eq. (9) (i.e., the scaled temporal weighting rule; sTWR). Functions are presented for three different values of the currency term (i.e., c). The bottom panel shows the same functions with a logarithmic y-axis.

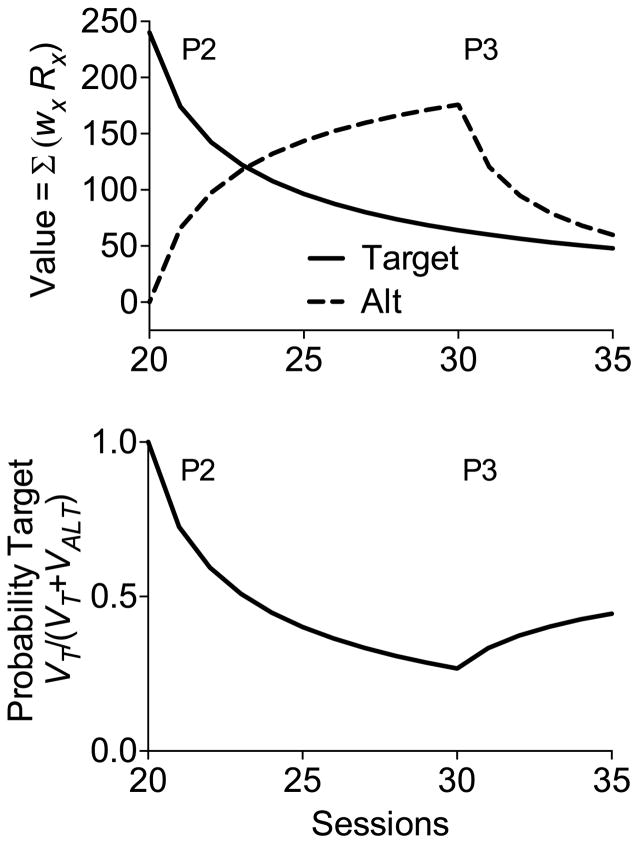

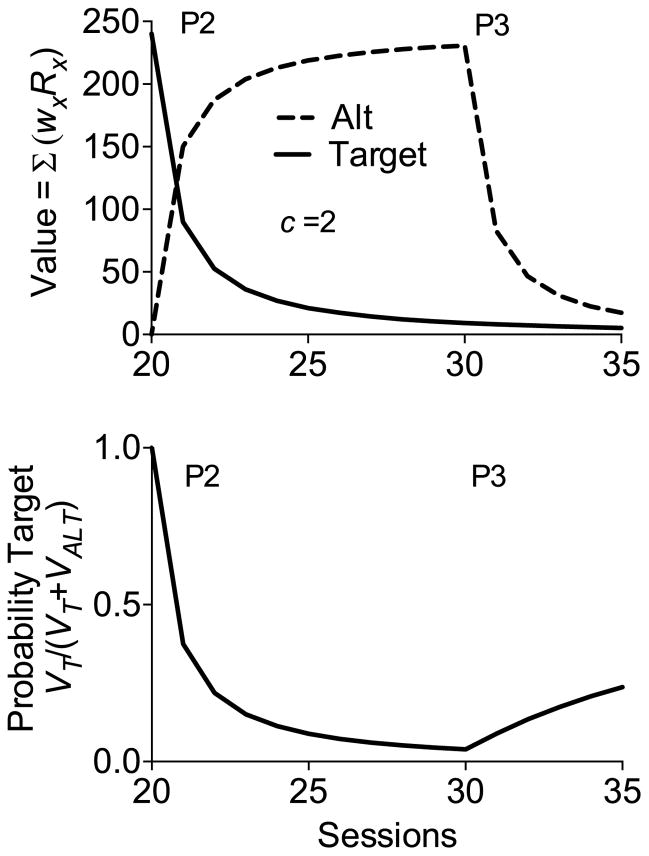

Fig. 5 shows value functions and pT derived from application of the sTWR (i.e., Eq. (9)) to the same reinforcement conditions as in Fig. 3, but with a currency parameter set at c = 2. Note that both the value of VT in Phase 2 and the value of VAlt in Phase 3 decrease more steeply and to lower levels than with the unscaled TWR (i.e., c = 1) as depicted in Fig. 3. In addition, the sTWR in Fig. 5 still produces the increase in pT across sessions of Phase 3 (i.e., resurgence). Thus, with c > 1, the sTWR appears to produce changes in pT across sessions that are more likely to accurately reflect the reality of resurgence experiments.

Fig. 5.

The top panel shows value functions from Eq. (8) for the target and alternative options across Phases 2 & 3 generated by application of Eq. (9) (sTWR with c = 2) to the reinforcement rates across conditions depicted in the top panel of Fig. 2. The bottom panel shows changes in the probability of the target response across sessions generated by using these daily values in Eq. (3).

Any number of variables could impact the value of c, and thus how organisms weight the past. In the absence of rules specifying how it is related to experimental parameters, c would be just a free parameter in the model (as would likely be the case with potential individual or species differences). However, one variable that has been of particular interest in resurgence experiments is the rate of reinforcement (see Craig and Shahan, 2016; for review), and there are good reasons to suspect that c could be related to this variable. To that end, consider the fact that the value function for VT generated by the sTWR across sessions of extinction in Phase 2 reflects only the history of reinforcement associated with the target option (i.e., the history for the alternative does not enter into the calculation of VT –and vice versa). Thus, across Phase 2, VT describes how extinction reduces the value of the target, regardless of whether or not alternative reinforcement is available. As a result, increases in c and the steeper decreases in VT they produce should reflect variables related to resistance to extinction. Although many variables are known to impact resistance to extinction and could affect c, most important for present purposes is the fact that with simple schedules of reinforcement, more frequent reinforcement generates less resistance to extinction.7 In the extreme, where continuous reinforcement generates less resistance to extinction than intermittent reinforcement, this is the well-known partial-reinforcement-extinction-effect (i.e., PREE; see Gallistel, 2012; Mackintosh, 1974; for reviews). However, the effect also extends to less extreme conditions in which higher rates of intermittent reinforcement in single schedules generate less resistance to extinction than do lower rates of intermittent reinforcement (e.g., Baum, 2012; Cohen, 1998; Cohen et al., 1993; Craig and Shahan, 2016; Shull and Grimes, 2006). 
Based on the sTWR, however, if a single value of c is applied to conditions arranging different reinforcement rates (including with c = 1–the unscaled TWR), all reinforcement rates will generate value functions that decrease from baseline levels at the same rate across sessions of extinction, suggesting no differential resistance to extinction. As a result, the approach would fail to capture PREE-like effects with different reinforcement rates. Given that any experiment on resurgence would necessarily arrange some reinforcement rate, this is an important issue to address.

Therefore, following Killeen (1981) we agree that animals should “pay more attention to recent events” when the frequency of reinforcement is high, and to be “guided by events that have happened over some relatively long period of time” when reinforcement rate is low. Thus, c should be expected to increase with increases in the frequency of reinforcement. Although any number of functions could be used to characterize how c should vary with reinforcement rates (e.g., Killeen uses an additional exponentially weighted moving average), we have found that a linear function based on running reinforcement rate for an option is adequate for present purposes.8 Thus,

$$c = \lambda r + 1 \tag{10}$$

where λ is a parameter modulating how quickly the currency term increases with reinforcement rate (i.e., r). Importantly, r reflects the overall average running reinforcement rate (in reinforcers/h) for an option across all of the sessions it has been available. A value of the c parameter is generated for and applied to each of the options separately (i.e., the target and alternative options) based on the running average reinforcement rate for each of those options. In effect, this approach suggests that the overall running average frequency of events experienced for an option determines the degree to which more recent events impact weightings for outcomes from that option. An option that has historically produced reinforcers at a high frequency generates heavier weighting of recent events at that option, but an option that has historically produced reinforcers at a lower rate generates less weighting of recent events at that option and a broader weighting of the past. If a static value of r were used in the determination of c (e.g., the last reinforcer rate experienced in baseline), then c would fail to adapt to the rate at which events are encountered at an option across time. As a result of using the running average reinforcer rate for an option, as r approaches zero, c approaches 1 (i.e., the unscaled TWR) and the organism takes a broader view of the past.
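The way c tracks the running average reinforcement rate can be sketched as follows. The sketch assumes the linear form c = λr + 1 (consistent with c approaching 1 as r approaches zero) and a hypothetical history of 20 sessions at 240 reinforcers/h followed by 10 sessions of extinction. The function name `currency_terms` is illustrative.

```python
def currency_terms(rates, lam):
    """Currency term c after each session, from the running mean reinforcement rate."""
    terms = []
    running_sum = 0.0
    for n, rate in enumerate(rates, start=1):
        running_sum += rate
        r = running_sum / n          # average rate over all sessions the option was available
        terms.append(lam * r + 1.0)  # assumed linear relation between c and r
    return terms


history = [240.0] * 20 + [0.0] * 10  # reinforcers/h per session (hypothetical)
c_values = currency_terms(history, lam=0.006)
print(round(c_values[19], 2))  # end of baseline: 2.44
print(round(c_values[29], 2))  # after 10 extinction sessions: 1.96
```

Because r is a running average, c drifts back toward 1 as extinction sessions accumulate, so the weighting of the past gradually broadens.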

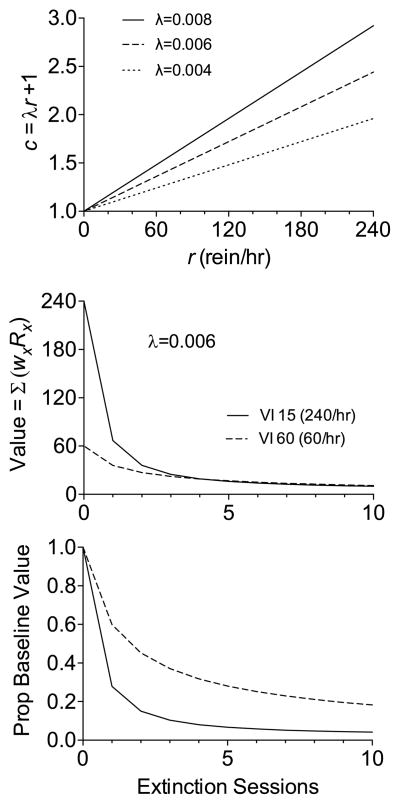

The top panel of Fig. 6 shows how c varies with reinforcement rates with different values of λ according to Eq. (10). The middle panel of Fig. 6 shows value functions across sessions of extinction generated by the sTWR with λ = 0.006 following reinforcement on a VI 15-s versus a VI 60-s schedule. Although value generated by the VI 15-s schedule is higher at the end of baseline (i.e., zero on the x-axis) than for the VI 60-s schedule, value for the VI 15-s schedule decreases more quickly with the introduction of extinction. To highlight this effect, the bottom panel of Fig. 6 shows the same functions as the middle panel, but presented as a proportion of the initial baseline value. Thus, when equipped with a currency term determined by reinforcement rate via Eq. (10), the sTWR generates value functions across extinction sessions that are consistent with PREE-like effects in single schedules. Before moving forward with the application of the sTWR to resurgence under different reinforcement-rate conditions, it would be desirable to first have a model to generate absolute rates of responding, as opposed to just changes in pT across phases, as has so far been the case. Thus, we first turn our attention to this issue.
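The PREE-like pattern described here can be checked with a rough Python sketch. The number of baseline sessions is not stated for this example, so 20 is assumed. The sketch also assumes rates of 240 and 60 reinforcers/h for the VI 15-s and VI 60-s schedules, and the linear currency relation c = λr + 1 with r taken as the running average rate.

```python
def stwr_value(rates, lam):
    """sTWR value of one option, with c set from its running mean reinforcement rate."""
    n = len(rates)
    c = lam * (sum(rates) / n) + 1.0  # assumed linear currency relation
    recencies = [1.0 / (n - i) ** c for i in range(n)]
    total = sum(recencies)
    return sum(r / total * rate for r, rate in zip(recencies, rates))


def extinction_curve(baseline_rate, lam=0.006, n_baseline=20, n_ext=10):
    """Value at the end of baseline (index 0) and after each extinction session."""
    return [stwr_value([baseline_rate] * n_baseline + [0.0] * e, lam)
            for e in range(n_ext + 1)]


rich = extinction_curve(240.0)  # VI 15 s
lean = extinction_curve(60.0)   # VI 60 s
rich_prop = [v / rich[0] for v in rich]
lean_prop = [v / lean[0] for v in lean]
for e in (0, 1, 5, 10):
    print(e, round(rich_prop[e], 3), round(lean_prop[e], 3))
```

In this sketch the richer schedule starts with the higher absolute value but loses a larger proportion of it in every extinction session, which is the direction of the effect described above.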

Fig. 6.

The top panel shows the relation between average running reinforcement rate for an option (i.e., r) and the value of the currency term (i.e., c) as determined by Eq. (10). Functions are presented for three different λ parameters. The middle panel shows value functions during 10 sessions of extinction following reinforcement on a VI 15-s or VI 60-s schedule with λ = 0.006. The bottom panel shows the same value functions presented as a proportion of the value at the end of pre-extinction training (i.e., x = 0 in the middle panel).

4.4. Response output

There are many ways one might build a model to convert changes in pT to changes in response rates. Here we provide an example of one such model,

$$B_T = \frac{k V_T}{V_T + V_{Alt} + \frac{1}{A}} \tag{11}$$

where BT is target-response rate (resp/min) and VT and VAlt are as above. The parameter k is the asymptotic baseline response rate, and A reflects the level of arousal. Obviously, the model is inspired by Herrnstein’s (1970) absolute response-rate version of the Matching Law. Notably, rather than using Herrnstein’s Re parameter (i.e., extraneous sources of reinforcement) in the denominator, we assume that overall output is modulated by invigorating effects of reinforcement (i.e., arousal) in a manner inspired by Killeen (1994). Because 1/A appears in the denominator, higher values of A tend to generate higher response rates (i.e., rates approach k more quickly with increases in VT). This approach is superior to the use of Re for present purposes because it is likely that the overall level of arousal (and response rate) will vary across the phases of a resurgence experiment in a way that would be difficult to capture with a fixed Re. For example, although pT from Eq. (3) (bottom panel of Fig. 5) continuously increases across the sessions of resurgence testing in Phase 3, no reinforcer deliveries are occurring across those sessions and response rates are likely to decrease, generated here by decreases in arousal (i.e., A). The question that remains is how the value of A should be calculated across sessions.

Again, there are many possible ways one could formalize the relationship between reinforcement rates and arousal. Killeen (e.g., 1994) has suggested that arousal is a linear function of reinforcement rate. Further, Gibbon (1995) and Gallistel et al. (2001) have suggested that arousal in choice situations is a linear function of overall reinforcement rates across the alternatives (i.e., R1 + R2). However, because there is no reinforcement arranged in Phase 3, the current reinforcement rates obviously will not do. Of course, this is the problem that the TWR was recruited to solve above. Thus, we suggest that the overall level of arousal (i.e., A) is a linear function of the summed values of the options (VT and VAlt) such that:

$$A = a\,(V_T + V_{Alt}) \tag{12}$$

where the parameter a is the slope of the relation between arousal and value.9 In short, this approach assumes that the overall level of arousal is governed by the overall current value of the prospects for the two options. As a result, the decreases in value for both options across the sessions of Phase-3 extinction would produce consistent decreases in A across those sessions. That is, as the effects of all reinforcement recede into the past, arousal will decrease. Importantly, although the overall level of behavioral output would be expected to decrease across Phase 3, the increases in pT across that phase suggest that an increasing proportion of the responses that do occur will be to the target option.
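The response-output step can be sketched in Python as follows. The sketch assumes the form BT = kVT / (VT + VAlt + 1/A) with A = a(VT + VAlt), as described in the text. Returning a rate of zero when both values are zero is an added assumption to avoid division by zero, and the input values are hypothetical.

```python
def arousal(v_target, v_alt, a):
    """Arousal as a linear function of the summed values of the two options."""
    return a * (v_target + v_alt)


def target_response_rate(v_target, v_alt, k, a):
    """Target response rate (resp/min) from option values, asymptote k, and slope a."""
    level = arousal(v_target, v_alt, a)
    if level <= 0:
        return 0.0  # assumption of this sketch: no value, no arousal, no responding
    return k * v_target / (v_target + v_alt + 1.0 / level)


# Hypothetical values in reinforcers/h, with k = 50 and a = 0.0003.
print(round(target_response_rate(240.0, 0.0, 50.0, 0.0003), 2))    # target only
print(round(target_response_rate(240.0, 240.0, 50.0, 0.0003), 2))  # equally valued alternative
print(round(target_response_rate(10.0, 60.0, 50.0, 0.0003), 2))    # low overall value
```

The third call illustrates the point made above: when the summed value is low, 1/A becomes large and suppresses output even though the target still holds a share of the relative value.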

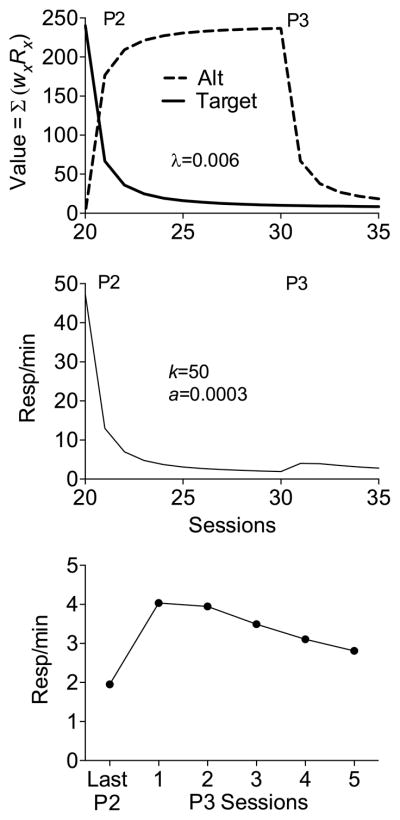

The top panel of Fig. 7 shows value functions for Phases 2 and 3 generated by Eqs. (9) and (10) with λ = 0.006 and reinforcement conditions as described in the earlier examples (i.e., target = VI 15 s; alternative = VI 15 s). The middle panel shows response rates generated from these value functions by Eqs. (11) and (12) with k = 50 and a = 0.0003. Note that removal of alternative reinforcement in Phase 3 results in an increase in target-response rates (i.e., resurgence). As is typically the case in resurgence experiments, the increase in target responding in Phase 3 is relatively small compared to baseline levels of responding. The bottom panel of Fig. 7 zooms in on the resurgence effect in Phase 3 in a manner more typical of how resurgence is presented in the literature. Note that target responding increases with the removal of alternative reinforcement in the first session of Phase 3 and then declines thereafter. Thus, when supplied with assumptions about how the relative values of target and alternative options change across time and how those relative values might be converted into response rates, the general framework suggested by RaC appears capable of generating a potentially viable quantitative account of resurgence.
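Putting the pieces together, the following Python sketch simulates target response rates across the three phases with λ = 0.006, k = 50, and a = 0.0003. Several details are assumptions of the sketch rather than statements from the text: VI 15 s is taken as 240 reinforcers/h; phases last 20, 10, and 5 sessions as in the earlier example; each option's rate series spans all sessions, with zeros where the option was unavailable; and the running average r for the alternative is computed only over sessions since it became available.

```python
def stwr_value(rates, running_rate, lam):
    """sTWR value: recency-weighted sum of past rates, with c = lam * r + 1."""
    n = len(rates)
    c = lam * running_rate + 1.0
    recencies = [(n - i) ** -c for i in range(n)]  # oldest first; most recent t_x = 1
    total = sum(recencies)
    return sum(r / total * rate for r, rate in zip(recencies, rates))


def mean(xs):
    return sum(xs) / len(xs) if xs else 0.0


VI15 = 240.0
P1, P2, P3 = 20, 10, 5
LAM, K, A_SLOPE = 0.006, 50.0, 0.0003
target = [VI15] * P1 + [0.0] * (P2 + P3)
alt = [0.0] * P1 + [VI15] * P2 + [0.0] * P3

response_rates = []
for s in range(1, P1 + P2 + P3 + 1):
    v_t = stwr_value(target[:s], mean(target[:s]), LAM)
    v_alt = stwr_value(alt[:s], mean(alt[P1:s]), LAM)  # r counted from availability
    level = A_SLOPE * (v_t + v_alt)                    # arousal
    rate = K * v_t / (v_t + v_alt + 1.0 / level) if level > 0 else 0.0
    response_rates.append(rate)

last_p2 = response_rates[P1 + P2 - 1]
first_p3 = response_rates[P1 + P2]
print(round(response_rates[P1 - 1], 2), round(last_p2, 2), round(first_p3, 2))
```

Under these assumptions, the target rate in the first Phase-3 session exceeds the rate in the last Phase-2 session while remaining well below the baseline rate, which is the qualitative pattern described above.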

Fig. 7.

The top panel shows value functions generated by the sTWR (Eq. (9)) using a currency term (i.e., c) determined by reinforcement rates according to Eq. (10) with λ = 0.006. Phase 1 target = VI 15 s, Phase 2 alternative = VI 15 s. The middle panel shows absolute response rates based on these value functions generated by Eq. (11). The bottom panel provides a zoomed view of the last day of Phase 2 and five Phase 3 resurgence sessions.

4.4.1. Response rates, arousal, and resurgence

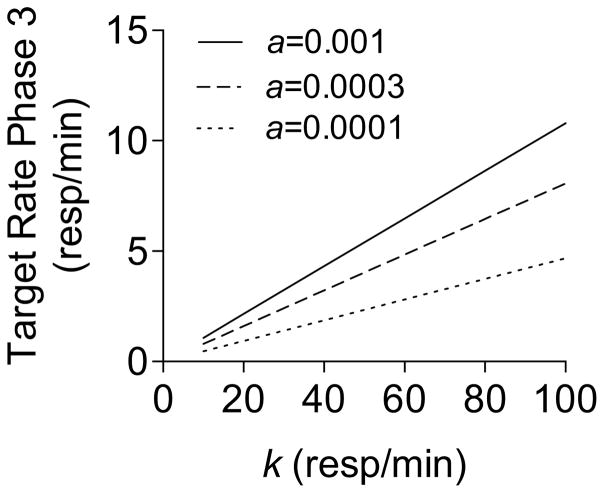

As reviewed by Trask et al. (2015), a reliable finding in the study of resurgence is that higher rates of Phase-1 responding are associated with higher rates of responding during resurgence testing in Phase 3 (e.g., de Silva et al., 2008; Winterbauer et al., 2013). From the current perspective, this result is a natural outcome of Eq. (11). The k parameter scales the relative valuations of the target and alternative options into the units of the target response in responses/min and represents asymptotic baseline rate of the target. Thus, higher values of k are associated with higher rates of the target response. Specifically, Eq. (11) suggests that response rates during Phase 3 are a linear function of asymptotic baseline response rates (i.e., k). This prediction is consistent with data presented by Sweeney and Shahan (2013b, see their Fig. 8 showing that Phase 3 response rates were indeed a linear function of Phase-1 response rates). Further, given that response output is modulated by 1/A in Eq. (11), higher levels of arousal also generate higher response rates in Phase 3 via higher values of the a parameter. Fig. 8 shows the relation between asymptotic baseline response rate (i.e., k) and response rates on the first day of Phase-3 resurgence testing generated with VI 15-s reinforcement for both the target and alternative options and λ = 0.006 as above in Fig. 7. Fig. 8 also shows that the slope of this function is steeper, and thus response rates during resurgence testing are higher at the same k value, as the value of a increases.
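The two relations described in this paragraph (proportionality to k, and higher rates with larger a) can be checked with a brief Python sketch. It rests on the same assumptions as the model description: the linear currency relation c = λr + 1, VI 15 s taken as 240 reinforcers/h, 20 Phase-1 and 10 Phase-2 sessions, and a running rate for the alternative counted from the session in which it became available. The function `first_phase3_rate` and the particular k and a values are hypothetical.

```python
def stwr_value(rates, running_rate, lam):
    """sTWR value of one option: recency-weighted sum of its past reinforcement rates."""
    n = len(rates)
    c = lam * running_rate + 1.0
    recencies = [(n - i) ** -c for i in range(n)]
    total = sum(recencies)
    return sum(r / total * rate for r, rate in zip(recencies, rates))


def first_phase3_rate(k, a, lam=0.006, vi_rate=240.0, p1=20, p2=10):
    """Target response rate (resp/min) in the first session of Phase 3."""
    n = p1 + p2 + 1
    target = [vi_rate] * p1 + [0.0] * (p2 + 1)
    alt = [0.0] * p1 + [vi_rate] * p2 + [0.0]
    v_t = stwr_value(target, sum(target) / n, lam)
    v_alt = stwr_value(alt, sum(alt[p1:]) / (p2 + 1), lam)
    level = a * (v_t + v_alt)  # arousal; positive here because both values are positive
    return k * v_t / (v_t + v_alt + 1.0 / level)


for a in (0.0001, 0.0003, 0.001):
    print(a, [round(first_phase3_rate(k, a), 2) for k in (25.0, 50.0, 100.0)])
```

Each row doubles as k doubles, and rows for larger a sit higher, which matches the steeper slopes described for larger values of a.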

Fig. 8.

The relation between target response rates in the first session of Phase 3 (resurgence) and asymptotic baseline response rates (i.e., k in Eq. (11)). Functions are shown for three different a parameter values relating arousal to overall value of the options in Eq. (12).

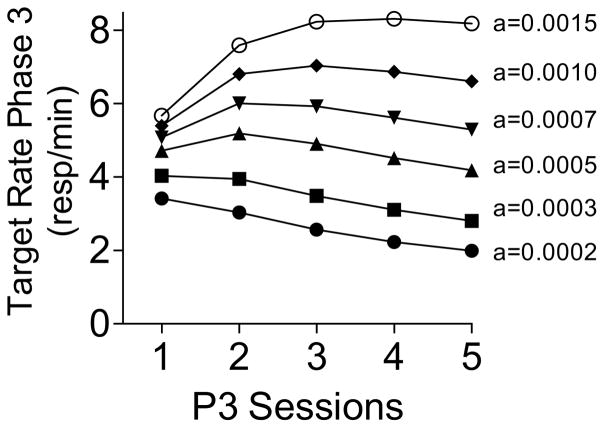

In addition to affecting the rate of the target response on the first day of resurgence testing in Phase 3 as shown in Fig. 8, it is important to note that changes in the a parameter also affect the shape of the target response-rate function across additional sessions of Phase 3. Fig. 9 shows target-response rates across 5 days of Phase-3 resurgence testing with a range of a values.10 Other parameters are as in the figures above (i.e., k = 60, λ = 0.006, Target = VI 15 s, Alternative = VI 15 s). As in Fig. 8, lower levels of the a parameter (i.e., the scalar for converting value into arousal, A), are associated with roughly linear decreases in target-response rates across Phase 3. However, as the a parameter increases, response rates begin to take on a bitonic form, initially increasing before beginning to decrease. At the highest values of a, the response rate functions begin to show less of a decrease at later sessions. Thus, RaC suggests that response-rate functions during the course of resurgence testing are sometimes bitonic (see Podlesnik and Kelley, 2015) and sometimes not because of potential differences in arousal during resurgence testing. As noted above, neither Behavioral Momentum Theory nor Context Theory provides an account of such response-rate functions across resurgence test sessions. It is important to remember that the source of this function in terms of RaC is the increasing probability of the target response (i.e., pT in Eq. (3)) associated with changes in the relative values of the target (VT) and alternative options (VAlt) across Phase 3. However, those increases in pT across resurgence testing sessions are counteracted by decreases in arousal due to the absence of recent reinforcement. In the end, the shape of the response-rate function depends upon the value of the a parameter which governs the extent to which overall reductions in the value of both options are converted into arousal. 
This interpretation suggests that research directly manipulating variables potentially related to arousal (e.g., deprivation, etc.) might allow direct experimental control of the shape of the response-rate function across sessions of resurgence testing.

Fig. 9.

Target response rates across 5 Phase-3 (resurgence) test sessions generated with different a parameter values in Eq. (12).

4.4.2. Bias

Although most experiments examining resurgence use target and alternative responses that are topographically similar (e.g., two lever presses or response keys), some experiments use responses that are topographically different (e.g., Craig and Shahan, 2016; Lieving and Lattal, 2003; Podlesnik et al., 2006; Sweeney and Shahan, 2013b). In circumstances where the responses are topographically different, there is the possibility that differences in the responses (e.g., difficulty, distance from food hopper, etc.) could lead to bias for one response over the other. Starting with Baum’s (1974) generalized matching law, bias has been treated formally in matching theory as a source of preference for one alternative that is independent of the conditions of reinforcement arranged by the options. McDowell (2005) has shown how such bias can be incorporated into Herrnstein’s (1970) absolute response-rate version of the matching law. Thus, following McDowell, we suggest that bias can be incorporated into RaC such that,

| (13) |

where all terms are as in Eq. (11) and the added parameter b represents bias. Values of b > 1 represent bias for the target option and b < 1 represent bias for the alternative option. Thus equipped, RaC could accommodate bias associated with the use of topographically different responses for the target and alternative options. In cases where topographically similar responses are used, b would be expected to be approximately 1 and could be omitted.

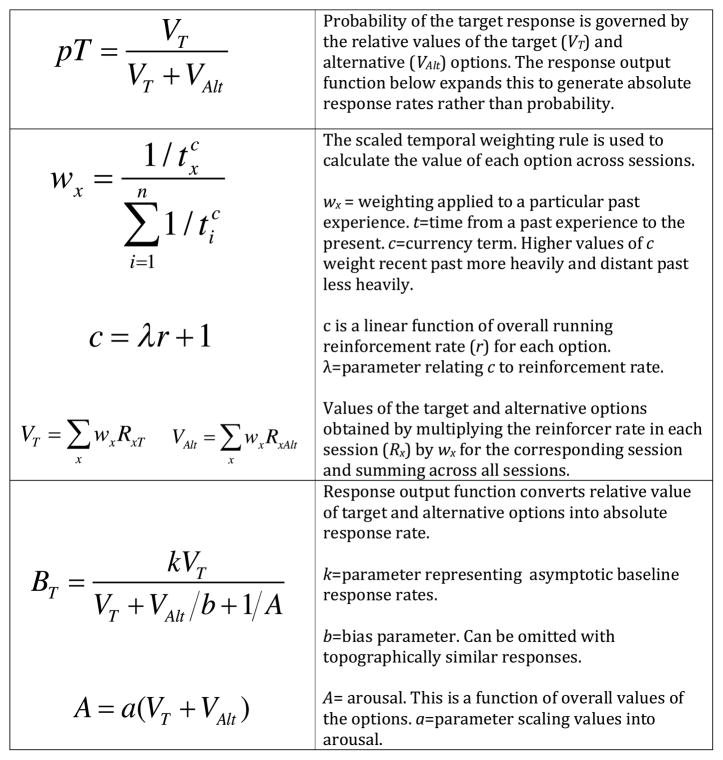

4.5. Model summary

RaC as developed above has three core components summarized in Fig. 10. First, in the most general sense, RaC suggests that probability of a target response (pT) is a function of the relative values of the target (VT) and alternative (VAlt) options according to Eq. (3). Second, the values of the two options across time are determined by the relative recencies of past experiences of reinforcement at those options according to Eqs. (8) and (9). The extent to which relative immediacy impacts value may depend on any number of factors (captured by the currency term c), but one factor that is likely to have such effects is the rate of reinforcement. The effects of reinforcement rate on c are determined by Eq. (10), where the λ parameter represents the degree to which c increases with increases in overall running average reinforcement rate for each option. Third, absolute response rates, as opposed to pT, are generated by the response-output function (i.e., Eq. (13)). Eq. (13) suggests that response output is a function of asymptotic baseline response rates (k), potential response bias (b), and arousal (A). Arousal is determined by Eq. (12), where the parameter a represents the extent to which overall value of the options is converted into arousal. Thus, as applied here, the model has four free parameters (i.e., λ, k, a, and b). However, the bias parameter (b) would be expected to be near 1 in typical experiments where topographically similar responses are used, and thus could be omitted, leaving the model with three free parameters.

Fig. 10.

Summary of the major components of the RaC model.

It is important to note at this point that the specific model of resurgence summarized in Fig. 10 is meant only to illustrate how the general framework provided by RaC might be formally developed to account for resurgence. We have made a number of assumptions about how specific processes might be involved and how they may combine to generate resurgence. Any number of these assumptions could be wrong or in need of modification. Nevertheless, as we will show below, despite any weaknesses in the assumptions of this example model, the general framework provided by RaC appears to provide a reasonably good account of many data from the resurgence literature that were problematic for Behavioral Momentum Theory.

5. Application of RaC to extinction-induced resurgence

5.1. Effects of Phase-1 and Phase-2 reinforcement rate

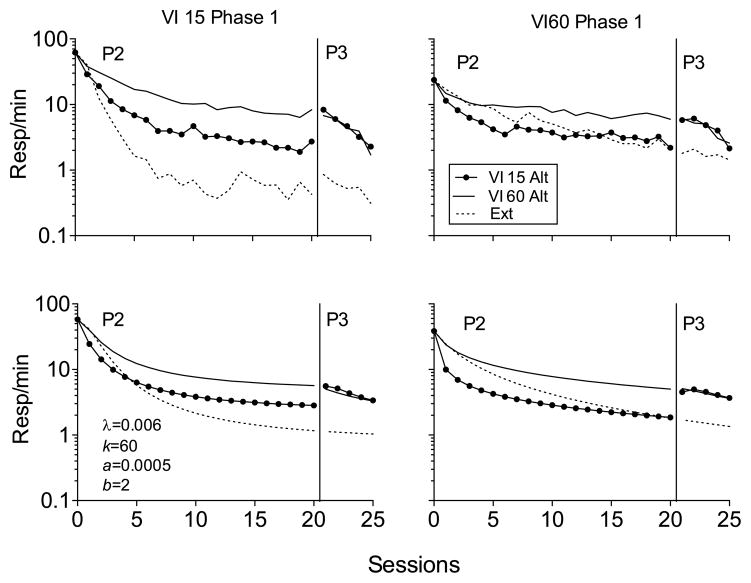

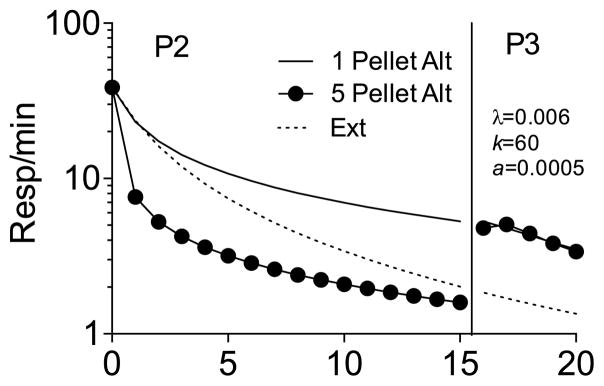

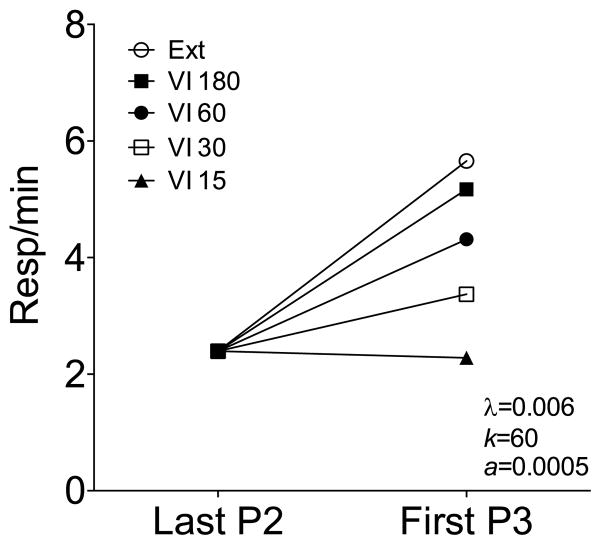

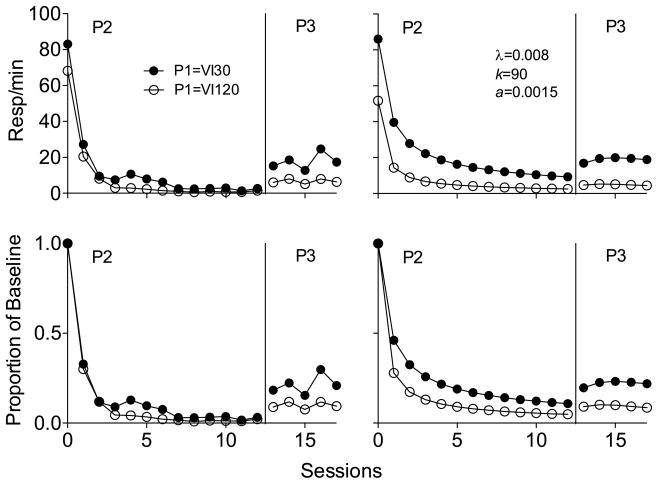

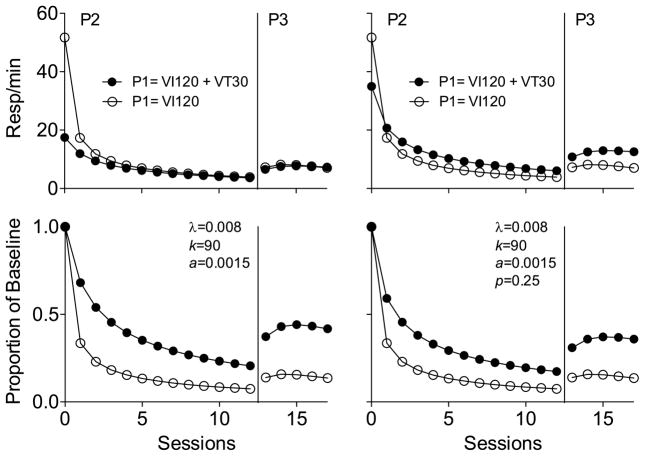

As noted above, the effects of reinforcement rate on resurgence have posed a serious difficulty for Behavioral Momentum Theory. Thus, we begin with a treatment of the most extensive dataset from a single experiment examining the effects of reinforcement rates on resurgence in single schedules by Craig and Shahan (2016). The experiment examined the effects of variations in Phase-1 and Phase-2 reinforcement rates in six groups of rats. In Phase 1, three groups earned food pellets for lever pressing on a VI 15-s schedule and three other groups on a VI 60-s schedule, for 30 sessions. In Phase 2, lever pressing was extinguished for all groups for 20 sessions, and subgroups received either no alternative reinforcement or alternative reinforcement (i.e., the same food pellet as in Phase 1) for nose pokes on the opposite wall of the chamber on a VI 15-s or VI 60-s schedule, thus resulting in six groups: VI 15 VI 15, VI 15 VI 60, VI 15 Ext, VI 60 VI 15, VI 60 VI 60, and VI 60 Ext. In Phase 3, nose poking was extinguished for 5 sessions for the groups that had received alternative reinforcement in Phase 2.

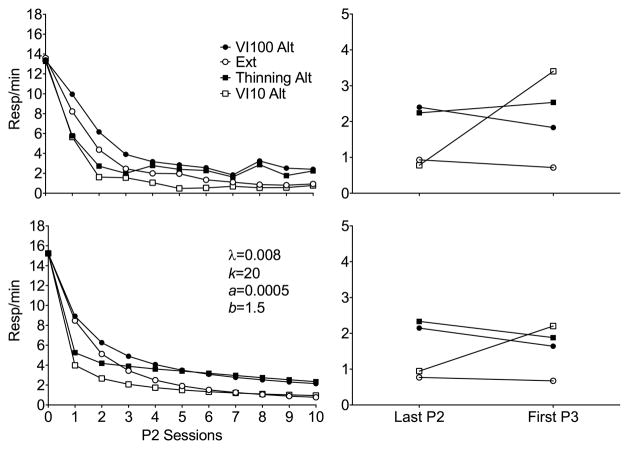

The top panels of Fig. 11 show the data from the Craig and Shahan (2016) experiment. The only notable difference between the groups that had received Phase-1 reinforcement on a VI 15-s schedule (top left panel) and those that had received it on a VI 60-s schedule (top right panel) was that responding for the VI 15 Ext group decreased more quickly than for the VI 60 Ext group (i.e., a PREE-like effect). The rate of baseline reinforcement had no meaningful impact on Phase-2 or Phase-3 responding for the other groups. However, higher-rate alternative reinforcement during Phase 2 (i.e., groups VI 15 VI 15 and VI 60 VI 15) generated lower target response rates than did lower-rate alternative reinforcement (i.e., groups VI 15 VI 60 and VI 60 VI 60). Nevertheless, lower-rate alternative reinforcement generated higher rates of responding during Phase 2 than was observed for the groups that had received no alternative reinforcement. As discussed above, this outcome is a major contradiction of the predictions of Behavioral Momentum Theory. A similar result with low-rate alternative reinforcement was also previously obtained by Sweeney and Shahan (2013b, discussed below), but the Craig and Shahan experiment was the first to obtain greater responding in Phase 2 with high-rate alternative reinforcement than with no alternative reinforcement. With respect to Phase 3, removal of high-rate alternative reinforcement produced resurgence for the VI 15 VI 15 and VI 60 VI 15 groups, whereas removal of lower-rate alternative reinforcement for the VI 15 VI 60 and VI 60 VI 60 groups did not. Importantly, response rates for the high-rate and low-rate alternative-reinforcement groups did not differ during Phase 3. The reason the high-rate alternative-reinforcement groups showed resurgence and the low-rate groups did not was that response rates were lower in Phase 2 for the high-rate alternative-reinforcement groups. The bottom panels of Fig. 11 show that RaC provides a reasonably good simulation of this complex pattern of data with λ = 0.006, k = 60, a = 0.0005, and b = 2. The inclusion of the bias parameter is consistent with a bias for the target lever press over the alternative nose poke on the back wall of the chamber. In this case, bias for the target lever might have been present because the lever was closer to the food aperture (which was located on the front wall of the chamber).

Fig. 11.

The top panels show data replotted from Craig and Shahan (2016). The bottom panels show simulations generated by RaC. Details in text.

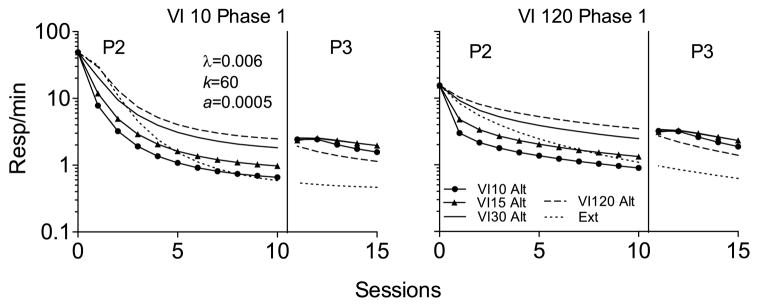

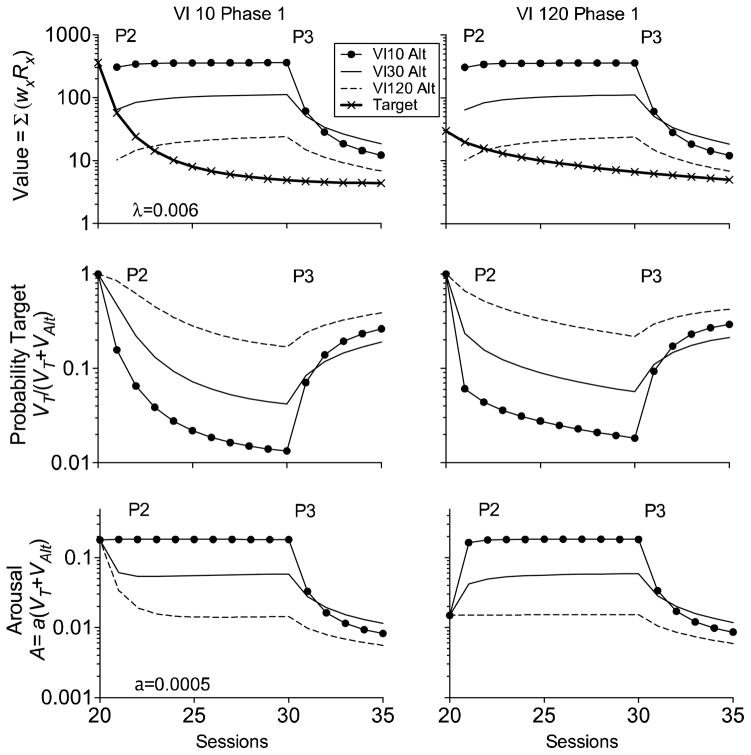

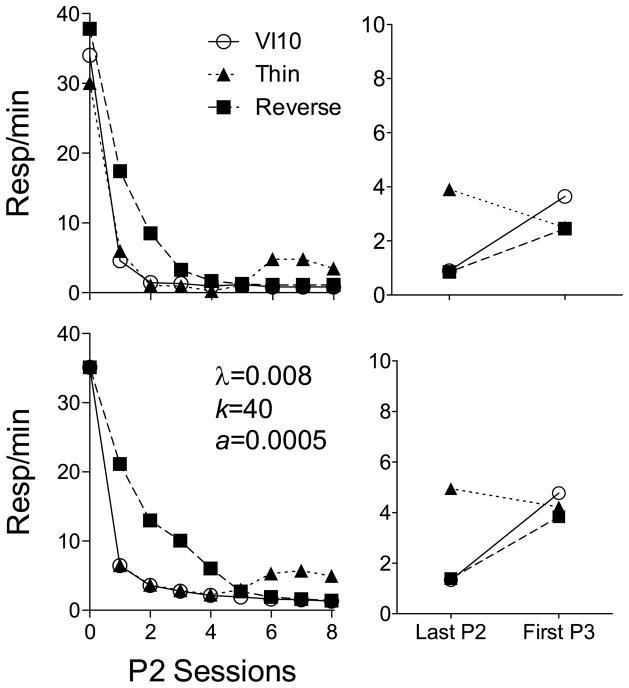

A couple of features of the Craig and Shahan (2016) experiment are somewhat atypical for many resurgence experiments. First, Phases 1 (i.e., 30 sessions) and 2 (i.e., 20 sessions) were quite lengthy. Second, the alternative response was topographically different from the target response, thus necessitating the inclusion of the bias parameter. It is therefore of some interest to examine the effects of different reinforcement rates on the output of RaC under more typical conditions. In addition, examination of a wider range of reinforcement rates would be useful. Fig. 12 shows simulations generated by RaC following 20 sessions of Phase-1 reinforcement on either a VI 10-s schedule or a VI 120-s schedule. In addition, Phase-2 alternative-reinforcement schedules ranging from VI 10 s to VI 120 s (i.e., 360 to 30 reinforcers/h) are shown. Parameter values are as presented in Fig. 11, except that the bias parameter has been omitted. Across the wider range of values shown, the same basic pattern emerges as in Fig. 11. First, even with a 12-fold difference in Phase-1 rates of reinforcement (i.e., VI 10 versus VI 120) the model generates the same basic pattern of results. Second, lower rates of alternative reinforcement generate more target responding during Phase 2 than do higher rates of reinforcement. Third, lower rates of alternative reinforcement tend to generate more target responding during Phase 2 than does extinction alone. Fourth, high rates of alternative reinforcement (i.e., VI 10 and VI 15 Alt) generate responding that tends to be reduced below the levels of extinction alone earlier in Phase 2. In the later sessions of Phase 2, higher rates of alternative reinforcement (i.e., VI 15) can generate slightly elevated response rates as compared to extinction alone. The degree to which this latter effect is observed would depend upon the length of Phase 2, and on any potential bias for the target over the alternative response (as was apparent in Fig. 11). Fifth, the increase in responding generated by the removal of alternative reinforcement with the transition between Phases 2 and 3 depends upon the rate of alternative reinforcement. The removal of higher rates of alternative reinforcement generates larger increases with the transition to Phase 3 (i.e., resurgence) because response rates tend to be lower in Phase 2 with those higher reinforcement rates. The lowest rate of alternative reinforcement (i.e., VI 120) generates the highest rates of responding during Phase 2, but fails to generate any increase in responding in Phase 3. Sixth, response rates in Phase 3 do not differ meaningfully as a result of different rates of alternative reinforcement, except for the lowest rate of alternative reinforcement (i.e., VI 120), which generates somewhat lower response rates.
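The correspondence between the schedule values and the reinforcement rates quoted above (VI 10 s as 360 reinforcers/h, VI 120 s as 30 reinforcers/h, a 12-fold range) follows from simple arithmetic, sketched below. The function name `vi_to_rate_per_hour` is introduced here only for illustration, and the result is the programmed rate; obtained rates in an experiment may be somewhat lower.

```python
def vi_to_rate_per_hour(mean_interval_s: float) -> float:
    """Programmed reinforcers per hour for a VI schedule with the given mean interval (s)."""
    if mean_interval_s <= 0:
        raise ValueError("mean interval must be positive")
    return 3600.0 / mean_interval_s


# Schedule values mentioned in the text (mean interval in seconds).
schedules_s = [10, 15, 30, 120]
rates = {s: vi_to_rate_per_hour(s) for s in schedules_s}

for s, r in rates.items():
    print(f"VI {s}-s -> {r:.0f} reinforcers/h")

# Ratio between the richest and leanest schedules.
fold = rates[10] / rates[120]
print(f"VI 10 s versus VI 120 s: {fold:.0f}-fold difference")
```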

Fig. 12.

Simulations of RaC across a range of alternative reinforcement rates following either VI 10-s or VI 120-s reinforcement of the target in Phase 1.

To aid understanding of the simulations of RaC in Fig. 12, the top panels of Fig. 13 show the value functions generated by Eq. (9) that serve as the basis for those simulations. Note that because the value functions calculated for VT and VAlt are independent of one another, the functions for VAlt at different rates of alternative reinforcement (i.e., VI 10 Alt, VI 30 Alt, VI 120 Alt) are exactly the same following Phase-1 reinforcement on the VI 10-s (top left) and VI 120-s schedules (top right). However, as would be expected, the higher rate of target reinforcement in Phase 1 (VI 10) generates a higher Phase-1 VT (data points on the y-axes) than does the lower rate of Phase-1 reinforcement (VI 120). Nevertheless, after an initially steeper decrease in VT with the VI 10-s schedule in the first couple of sessions of Phase 2, the two VT functions are very similar across the rest of Phase 2. This similarity in the value functions for the different Phase-1 reinforcement rates across all but the early sessions of extinction is a direct result of the hyperbolic form of the weightings generated by the sTWR across sessions and the dependence of the currency term (i.e., c) on running rate of reinforcement for each option (Eq. (10)).

Fig. 13.

Value functions (top panels), probability of the target (middle panels), and arousal (bottom panels) associated with the simulations shown in Fig. 12.

The middle panels of Fig. 13 show the impact of these value functions on the probability of the target response [i.e., pT = VT /(VT + VAlt)]. Note that changes in pT across Phases 2 and 3 are similar following Phase-1 target reinforcement on VI 10 s or VI 120 s. These similar functions are the main reason that Phase-1 rate of target reinforcement has little impact on responding in Phases 2 and 3, as demonstrated above in Figs. 11 and 12. These value functions also demonstrate the reason that higher rates of alternative reinforcement generate lower target-response rates than do lower rates of alternative reinforcement. With higher rates of alternative reinforcement, VAlt is higher, and thus pT is lower. In short, less behavior is allocated to the target because the value of the alternative is higher. With the change to Phase 3 and the removal of alternative reinforcement, pT increases dramatically when high-rate alternative reinforcement (e.g., VI 10 Alt) is removed because of the precipitous decline in VAlt. In other words, the removal of the high rate of alternative reinforcement produces a shift in the allocation of behavior to the target (i.e., resurgence). A similar but less dramatic shift occurs following the somewhat lower rate of alternative reinforcement (VI 30 Alt), but importantly, the two value functions arrive at nearly the same place with the change to Phase 3, resulting in similar pT functions during this phase. When a very low rate of alternative reinforcement is arranged (VI 120 Alt), however, the value function of the alternative (VAlt) is much closer to the value function for the target, and thus more behavior is allocated to the target across Phase 2 (i.e., pT is higher). Removal of the low rate of alternative reinforcement also produces a less precipitous decline in the value of the alternative, and thus relatively small increases in pT.
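The allocation argument above can be illustrated with a minimal sketch of pT = VT/(VT + VAlt). The numerical values are hypothetical placeholders in arbitrary units, not values produced by Eq. (9) or read from Fig. 13, and the name `p_target` is introduced only for illustration. For simplicity, VT is held constant across the phase change, whereas in the model it continues to change slowly.

```python
def p_target(v_t: float, v_alt: float) -> float:
    """Probability of the target response: pT = VT / (VT + VAlt)."""
    total = v_t + v_alt
    if total <= 0:
        raise ValueError("VT + VAlt must be positive")
    return v_t / total


# Hypothetical values (arbitrary units), NOT outputs of the paper's value equations.
v_t = 2.0              # small residual target value late in Phase 2
v_alt_phase2 = 300.0   # alternative option while high-rate reinforcement is in place
v_alt_phase3 = 20.0    # alternative option after its reinforcement is removed

p_phase2 = p_target(v_t, v_alt_phase2)
p_phase3 = p_target(v_t, v_alt_phase3)

print(f"pT late in Phase 2:  {p_phase2:.4f}")
print(f"pT early in Phase 3: {p_phase3:.4f}")
```

Under these assumed values, the drop in VAlt alone is enough to raise pT, even though VT itself has not increased.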

The bottom panels of Fig. 13 show how arousal [i.e., A = a(VT + VAlt)] changes across sessions. Because arousal is driven by the sum of the value functions and VT is contributing only small values across all but the first couple of sessions of Phase 2, the arousal functions largely track the form of VAlt across Phases 2 and 3. Although arousal may be higher for the higher rates of alternative reinforcement, the low probability of the target response under these conditions means that little target behavior is generated. With the transition to Phase 3, arousal declines following removal of all Phase-2 reinforcement rates, thus generating specific response-rate functions across Phase-3 sessions that depend on a in Eq. (12).
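As a rough illustration of why higher arousal need not translate into more target behavior, the sketch below computes A = a(VT + VAlt) alongside pT for two conditions. The value of a is the one reported for the Fig. 11 simulations; the option values are hypothetical placeholders in arbitrary units, and the names `arousal`, `conditions`, and `summary` are assumptions introduced here.

```python
A_PARAM = 0.0005  # value of a reported for the Fig. 11 simulations


def arousal(v_t: float, v_alt: float, a: float = A_PARAM) -> float:
    """Arousal as a linear function of total value: A = a * (VT + VAlt)."""
    return a * (v_t + v_alt)


# Hypothetical option values (arbitrary units), chosen only for illustration.
v_t = 2.0
conditions = {"high-rate Alt": 300.0, "low-rate Alt": 30.0}

summary = {}
for label, v_alt in conditions.items():
    A = arousal(v_t, v_alt)
    p_t = v_t / (v_t + v_alt)  # allocation to the target, as in the middle panels
    summary[label] = (A, p_t)
    print(f"{label}: A = {A:.4f}, pT = {p_t:.4f}")
```

With VT small relative to VAlt, A is dominated by the VAlt term, so the condition with the larger VAlt has the higher arousal but the lower pT.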

Given the simulations and data above, RaC suggests that the rate of reinforcement for the target response in Phase 1 would be expected to have little impact on resurgence in single schedules. However, the rate of alternative reinforcement in Phase 2 would be expected to have an impact. Higher rates of alternative reinforcement generate larger increases in responding from the levels obtained at the end of Phase 2, and all but the lowest rates of alternative reinforcement would be expected to generate roughly similar overall rates of responding in Phase 3. Overall, these suggestions of RaC are consistent with the body of results generated when rates of Phase-1 or Phase-2 reinforcement are varied in resurgence experiments using single schedules of reinforcement (e.g., Bouton and Trask, 2016; Craig et al., 2016; Craig and Shahan, 2016; Leitenberg et al., 1975; Sweeney and Shahan, 2013b; Winterbauer and Bouton, 2010). The effects of differential reinforcement rates in multiple schedules are a different story, and will be addressed in a section below.

In summary, the broad conceptual framework of RaC and the specific example model presented in Fig. 10 appear to provide a reasonable account of the effects of reinforcement rate on extinction-induced resurgence in simple schedules. In short, responding in Phases 2 and 3 is governed by the relative value of the target and alternative options. The example model in Fig. 10 shows one way in which changes in value across sessions could be formalized and then used to generate expected rates of responding.

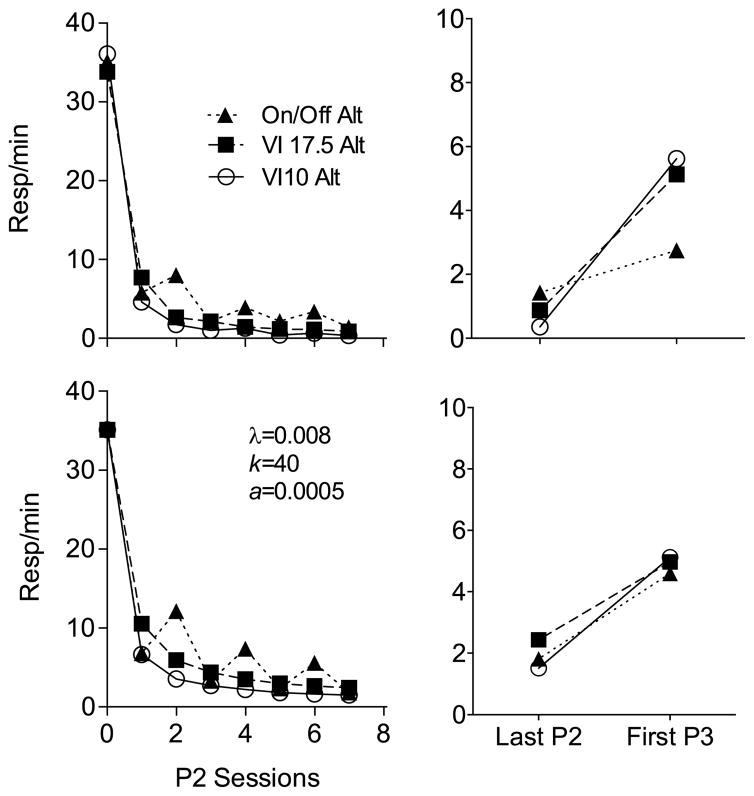

5.2. Changes in alternative-reinforcement rate across Phase 2