Abstract

This paper considers the problem of unconstrained minimization of smooth convex functions having Lipschitz continuous gradients with known Lipschitz constant. We recently proposed the optimized gradient method for this problem and showed that it has a worst-case convergence bound for the cost function decrease that is twice as small as that of Nesterov’s fast gradient method, yet has a similarly efficient practical implementation. Drori showed recently that the optimized gradient method has optimal complexity for the cost function decrease over the general class of first-order methods. This optimality makes it important to study fully the convergence properties of the optimized gradient method. The previous worst-case convergence bound for the optimized gradient method was derived for only the last iterate of a secondary sequence. This paper provides an analytic convergence bound for the primary sequence generated by the optimized gradient method. We then discuss additional convergence properties of the optimized gradient method, including the interesting fact that the optimized gradient method has two types of worstcase functions: a piecewise affine-quadratic function and a quadratic function. These results help complete the theory of an optimal first-order method for smooth convex minimization.

Keywords: First-order algorithms, Optimized gradient method, Convergence bound, Smooth convex minimization, Worst-case performance analysis

1 Introduction

We recently proposed the optimized gradient method (OGM) [1] for unconstrained smooth convex minimization problems, building upon Drori and Teboulle [2]. We showed in [1] that OGM has a worst-case cost function convergence bound that is twice as small as that of Nesterov’s fast gradient method (FGM) [3], yet has an efficient implementation that is similar to FGM. In addition, Drori [4] showed that OGM achieves the optimal worst-case convergence bound of the cost function decrease over the general class of first-order methods (for largedimensional problems), making it important to further study the convergence properties of OGM.

The worst-case convergence bound for OGM was derived for only the last iterate of a secondary sequence in [1], and this paper additionally provides an analytic convergence bound for the primary sequence generated by OGM by extending the analysis in [1]. We further discuss convergence properties of OGM, including the interesting fact that OGM has two types of worstcase functions: a piecewise affine-quadratic function and a quadratic function. These results complement our understanding of an optimal first-order method for smooth convex minimization.

2 Problem, Algorithms and Contributions

We consider the unconstrained smooth convex minimization problem

| (M) |

with the following two conditions:

- – f : ℝd → ℝ is a convex function of the type , i.e., continuously differentiable with Lipschitz continuous gradient:

where L > 0 is the Lipschitz constant. – The optimal set X∗(f) = arg minx∈ℝd f (x) is nonempty, i.e., problem (M) is solvable.

We use ℱL(ℝd) to denote the class of functions that satisfy the above conditions hereafter.

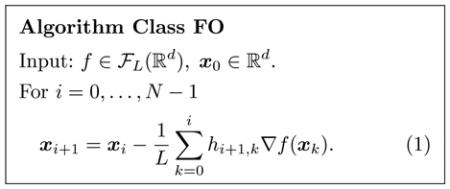

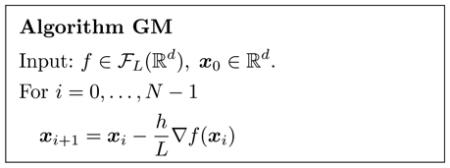

For large-scale optimization problems of type (M) that arise in various fields such as communications, machine learning and signal processing, general first-order algorithms that query only the cost function values and gradients are attractive because of their mild dependence on the problem dimension [5]. For simplicity, we initially focus on the class of fixed-step first-order (FO) algorithms having the following form:

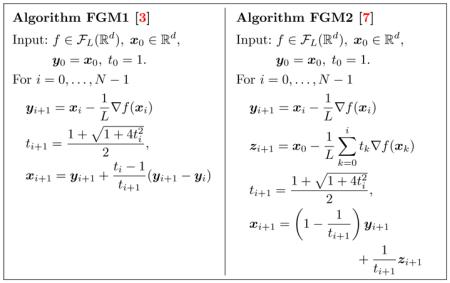

FO updates use weighted sums of current and previous gradients with (pre-determined) step sizes and the Lipschitz constant L. Class FO includes the (fixed-step) gradient method (GM), the heavy-ball method [6], Nesterov’s fast gradient method (FGM) [3, 7], and the recently introduced optimized gradient method (OGM) [1]. Those four methods have efficient recursive formulations rather than directly using (1) that would require storing all previous gradients and computing weighted summations every iteration. Among class FO, Nesterov’s FGM has been used widely, since it achieves the optimal rate O(1/N2) for decreasing a cost function in N iterations [8], and has two efficient forms as shown below for smooth convex problems.

Both FGM1 and FGM2 produce identical sequences {yi} and {xi}, where the primary sequence {yi} satisfies the following convergence bound [3, 7] for any 1 ≤ i ≤ N :

| (2) |

In [1], we showed that the secondary sequence {xi} of FGM satisfies the following convergence bound that is similar to (2) for any 1 ≤ i ≤ N :

| (3) |

Taylor et al. [9] demonstrated that the upper bounds (2) and (3) are only asymptotically tight.

When the large-scale condition “d ≥ 2N + 1” holds, Nesterov [8] showed that for any first-order method generating xN after N iterations there exists a function φ in ℱL(ℝd) that satisfies the following lower bound:

| (4) |

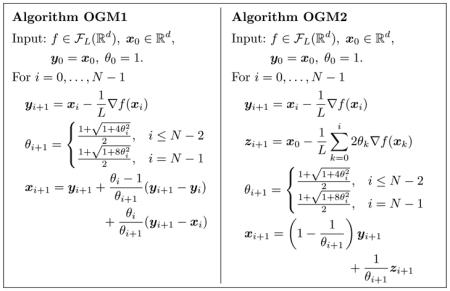

Although FGM achieves the optimal rate O(1/N2), one can still seek algorithms that improve upon the constant factor in (2) and (3), in light of the gap between the bounds (2), (3) of FGM and the lower complexity bound (4). Building upon Drori and Teboulle (hereafter “DT”)’s approach [2] of seeking FO methods that are faster than Nesterov’s FGM (reviewed in Section 3.3), we recently proposed following two efficient formulations of OGM [1].

OGM1 and OGM2 have computational efficiency comparable to FGM1 and FGM2, and produce identical primary sequence {yi} and secondary sequence {xi}. The last iterate xN of OGM satisfies the following analytical worst-case bound [1, Theorem 2]:

| (5) |

which is twice as small as those for FGM in (2) and (3). Recently for the condition “d ≥ N + 1”, Drori [4] showed that for any first-order method there exists a function ψ in ℱL(ℝd) that cannot be minimized faster than the following lower bound:

| (6) |

where xN is the Nth iterate of any first-order method. This lower complexity bound (6) improves on (4), and exactly matches the bound (5) of OGM, showing that OGM achieves the optimal worst-case bound of the cost function for first-order methods when d ≥ N + 1. What is remarkable about Drori’s result is that OGM was derived by optimizing over the class FO having fixed step sizes, leading to (5), whereas Drori’s lower bound in (6) is for the general class of first-order methods where the step sizes are arbitrary. It is interesting that OGM with its fixed step sizes is optimal over the apparently much broader class.

Because OGM has such optimality, it is desirable to understand its properties thoroughly. For example, analytical bounds for the primary sequence {yi} of OGM have not been studied previously, although numerical bounds were discussed by Taylor et al. [9]. This paper provides analytical bounds for the primary sequence of OGM, augmenting the convergence analysis of xN of OGM given in [1]. We also relate OGM to another version of Nesterov’s accelerated first-order method in [10] that has a similar formulation as OGM2.

In [1, Theorem 3], we specified a worst-case function for which OGM achieves the first upper bound in (5) exactly. The corresponding worst-case function is the following piecewise affine-quadratic function:

| (7) |

where OGM iterates remain in the affine region with the same gradient value (without overshooting) for all N iterations. Section 5 shows that a simple quadratic function is also a worst-case function for OGM, and describes why it is interesting that the optimal OGM has these two types of worst-case functions.

Section 3 reviews DT’s Performance Estimation Problem (PEP) framework in [2] that enables systematic worst-case performance analysis of optimization methods. Section 4 provides new convergence analysis for the primary sequence of OGM. Section 5 discusses the two types of worst-case functions for OGM, and Section 6 concludes.

3 Prior Work: Performance Estimation Problem (PEP)

Exploring the convergence performance of optimization methods including class FO has a long history. DT [2] were the first to cast the analysis of the worst-case performance of optimization methods into an optimization problem called PEP, reviewed in this section. We also review how we developed OGM [1] that is built upon DT’s PEP.

3.1 Review of PEP

To analyze the worst-case convergence behavior of a method in class FO having given step sizes h = {hi,k }0≤k<i≤N , DT’s PEP [2] bounds the decrease of the cost function after N iterations as

| (P) |

for given dimension d, Lipschitz constant L and the distance R between an initial point x0 and an optimal point x∗ ∈ X∗(f).

Since problem (P) is difficult to solve, DT [2] introduced a series of relaxations. Then the upper bound of the worst-case performance was found numerically in [2] by solving a relaxed PEP problem. For some cases, analytical worst-case bounds were revealed in [1, 2], where some of those analytical bounds were even found to be exact despite the relaxations. On the other hand, Taylor et al. [9] studied the numerical tight worst-case bound of (P) by avoiding DT’s one relaxation step that is not guaranteed to be tight and showing the tightness of the rest of DT’s relaxations in [2] (for the condition “d ≥ N + 2”).

To summarize recent PEP studies, DT extended the PEP approach for nonsmooth convex problems [11], Drori’s thesis [12] includes an extension of PEP to projected gradient methods for constrained smooth convex problems, and Taylor et al. [13] studied PEP for various first-order algorithms for solving composite convex problems. Similarly but using different relaxations of (P), Lessard et al. [14] applied the Integral quadratic constraints to (P), leading to simpler computation but slightly looser convergence upper bounds.

The next two sections review relaxations of DT’s PEP and an approach for optimizing the choice of h for FO using PEP in [2].

3.2 Review of DT’s Relaxation on PEP

This section reviews relaxations introduced by DT to make (P) into a simpler semidefinite programming (SDP) problem. DT first relax the functional constraint f ∈ ℱL(ℝd) by a well-known property of the class of ℱL(ℝd) functions in [8, Theorem 2.1.5] and then further relax as follows:

| (P1) |

for any given unit vector ν ∈ ℝd, where we denote and for i = 0, … , N, ∗, and define and .

Maximizing relaxed problem (P1) is still difficult, so DT [2] use a duality approach on (P1). Replacing maxG,δ LR2δN by minG,δ −δN for convenience, the Lagrangian of the corresponding constrained minimization problem (P1) with dual variables and becomes

| (8) |

where

| (9) |

and is the (i + 1)th standard basis vector.

Using further derivations of a duality approach on (8) in [2], the dual problem of (P1) becomes the following SDP problem:

| (D) |

where

Then, for given h, the bound BD(h, N, L, R) (that is not guaranteed to be tight) can be numerically computed using any SDP solver, while analytical upper bounds BD(h, N, L, R) for some choices of h were found in [1, 2]. Section 4 finds a new analytical upper bound for a modified version of BD.

3.3 Review of Optimizing the Step Sizes Using PEP

In addition to finding upper bounds for given FO methods, DT [2] searched for the best FO methods with respect to the worst-case performance. Ideally one would like to optimize h over problem (P):

| (HP) |

However, optimizing (HP) directly seems impractical, so DT minimized the dual problem (D) using a SDP solver over the coefficients h as

| (HD) |

Due to relaxations, the computed is not guaranteed to be optimal for problem (HP). Nevertheless, we show in [1] that solving (HD) leads to an algorithm (OGM) having a convergence bound that is twice as small as that of FGM. Interestingly, OGM is optimal among first-order methods with d ≥ N + 1 [4], i.e., is a solution of both (HP) and (HD) for d ≥ N + 1. An optimal point of (HD) is given in [1, Lemma 4 and Proposition 3] as follows:

| (10) |

| (11) |

| (12) |

Thus both OGM1 and OGM2 satisfy the convergence bound (5) [1, Theorem 2, Propositions 4 and 5].

4 New Convergence Analysis for the Primary Sequence of OGM

4.1 Relaxed PEP for the Primary Sequence of OGM

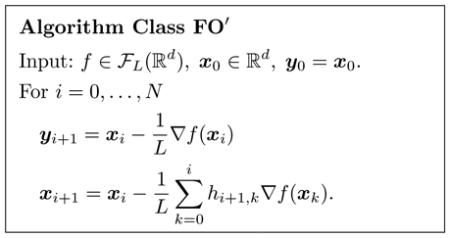

This section applies PEP to an iterate yN of the following class of fixed-step first-order methods (FO′), complementing the worst-case performance of xN in the previous section.

We first replace f (xN) − f(x∗) in (P) by f(yN +1) − f (x∗) as follows:

| (P′) |

We could directly repeat relaxations on (P′) as reviewed in Section 3.2, but we found it difficult to solve a such relaxed problem of (P′) analytically. Instead, we use the following inequality [8]:

| (13) |

to relax (P′), leading to the following bound:

| (P1′) |

This bound has an additional term compared to (P). We later show that the increase of the worst-case upper bound due to this strict relaxation step using (13) is negligible asymptotically.

Similar to relaxing from (P) to (P1) in Section 3.2, we relax (P1′) to the following bound:

| (P2′) |

for any given unit vector ν ∈ ℝd. Then, as in Section 3.2 and [1, 2], one can show that the dual problem of (P2′) is the following SDP problem

| (D′) |

by considering that the Lagrangian of (P2′) becomes

| (14) |

when we replace in (P2′) by for simplicity as we did for (P1) and (8). The formulation (14) is similar to (8), except the term . The derivation of (D′) and (14) is omitted here, since it is almost identical to the derivation of (D) and (8) in [1, 2].

4.2 Convergence Analysis for the Primary Sequence of OGM

To find an upper bound for (D′), it suffices to specify a feasible point.

Lemma 4.1 The following choice of is a feasible point of (D′):

| (15) |

| (16) |

| (17) |

Proof The equivalency between (15) and (16) follows from [1, Proposition 3]. Also, it is obvious that using .

We next rewrite to show that the choice satisfies the positive semidefinite condition in (D′). For any h and (λ, τ) ∈ Λ, the (i, k)th entry of the symmetric matrix S(h, λ, τ) in (9) can be written as

| (18) |

Inserting and into (18), we get

where the second equality uses .

Finally, using , we have

where .

Since (10) and (15) are identical except for the last iteration, the intermediate iterates of FO with both and are equivalent. We can also easily notice that the sequence of FO′ with both and are also identical, implying that both the primary sequence {yi} of OGM and FO′ with are equivalent.

Using Lemma 4.1, the following theorem provides an analytical convergence bound for the primary sequence {yi} of OGM.

Theorem 4.1 Let f ∈ ℱL(ℝd) and let y0, · · · , yN ∈ ℝd be generated by OGM1 and OGM2. Then for 1 ≤ i ≤ N , the primary sequence for OGM satisfies:

| (19) |

Proof The sequence generated by FO′ with is equivalent to that of OGM1 and OGM2 [1, Propositions 4 and 5].

Using (17) and , we have

| (20) |

based on Lemma 4.1. Since the primary sequence of OGM1 and OGM2 does not depend on a given N , we can extend (20) for all 1 ≤ i ≤ N.

Due to a strict relaxation leading to (P1′), we cannot guarantee that the bound (19) is tight. However, the next proposition shows that bound (19) is asymptotically tight by specifying one particular worst-case function that was conjectured by Taylor et al. [9, Conjecture 4].

Proposition 4.1 For the following function in ℱL(ℝd):

| (21) |

the iterate yN generated by OGM1 and OGM2 provides the following lower bound:

| (22) |

Proof Starting from x0 = Rν, where ν is a unit vector, and using the following property of the coefficients [1, Equation (8.2)]:

| (23) |

the primary iterates of OGM1 and OGM2 are as follows

where the corresponding sequence stays in the affine region of the function f1,OGM′ (x; N) with the same gradient value:

Therefore, after N iterations of OGM1 and OGM2, we have

exactly matching the lower bound (22).

The lower bound (22) matches the tight numerical worst-case bound in [9] (see Table 1). While Taylor et al. [9] provide numerical evidence about the tight bound of the primary sequence of OGM, our (22) provides an analytical bound that suffices for asymptotically tight worst-case analysis.

Table 1.

Exact numerical cost function bound of the last primary iterate yN and the last secondary iterate xN of FGM, OGM and OGM′

| N | FGM(prim.) | FGM(sec.) | OGM(prim.) | OGM(sec.) | OGM′(sec) |

|---|---|---|---|---|---|

| 1 | 1/6.00 | 1/6.00 | 1/6.00 | 1/8.00 | 1/5.24 |

| 2 | 1/10.00 | 1/11.13 | 1/12.47 | 1/16.16 | 1/9.62 |

| 3 | 1/15.13 | 1/17.35 | 1/21.25 | 1/26.53 | 1/15.12 |

| 4 | 1/21.35 | 1/24.66 | 1/32.25 | 1/39.09 | 1/21.71 |

| 5 | 1/28.66 | 1/33.03 | 1/45.42 | 1/53.80 | 1/29.38 |

| 10 | 1/81.07 | 1/90.69 | 1/143.23 | 1/159.07 | 1/83.54 |

| 20 | 1/263.65 | 1/283.55 | 1/494.68 | 1/525.09 | 1/269.56 |

| 40 | 1/934.89 | 1/975.10 | 1/1810.08 | 1/1869.22 | 1/947.55 |

| 80 | 1/3490.22 | 1/3570.75 | 1/6866.93 | 1/6983.13 | 1/3516.00 |

4.3 New Formulations of OGM

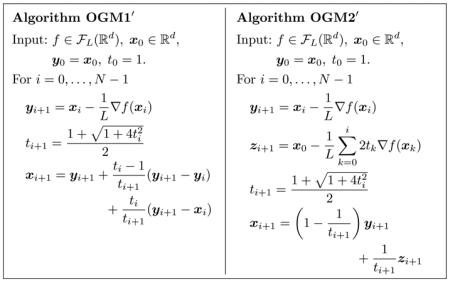

Using [1, Propositions 4 and 5], Algorithm FO′ with the coefficients (15) and (16) can be implemented efficiently as follows:

The OGM′ is very similar to OGM, because it generates same primary and secondary sequence; only the last iterate of the secondary sequence differs. Therefore, the bound (19) applies to the primary sequence {yi} of both OGM and OGM′, as summarized in the following corollary.

Corollary 4.1 Let f ∈ ℱL(ℝd) and let y0, · · · , yN ∈ ℝd be generated by OGM1′ and OGM2′. Then for 1 ≤ i ≤ N ,

| (24) |

4.4 Comparing Tight Worst-case Bounds of FGM, OGM and OGM′

While some analytical upper bounds of FGM, OGM and OGM′ such as (2), (3) (5), (19) and (24) are available for comparison, some of those are tight only asymptotically or some bounds for such algorithms are even unknown analytically. Therefore, we used the code of Taylor et al. [9] for tight (numerical) comparison of algorithms of interest for some given N. Table 1 provides tight numerical bounds of the primary and secondary sequence of FGM, OGM and OGM′. Interestingly, the worst-case performance of the secondary sequence of OGM′ is worse than that of FGM sequences, whereas the primary sequence of OGM (and OGM′) is roughly twice better.

The following proposition uses a quadratic function to define a lower bound on the worst-case performance of OGM1′ and OGM2′.

Proposition 4.2 For the following quadratic function in ℱL(ℝd):

| (25) |

both OGM1′ and OGM2′ provide the following lower bound:

| (26) |

Proof We use induction to show that the following iterates:

| (27) |

correspond to the iterates of OGM1′ and OGM2′ applied to f2(x). Starting from x0 = Rν, where ν is a unit vector, and assuming that (27) holds for i < N, we have

| (31) |

where the second and third equalities use (1) and (15). Therefore, we have

after N iterations of OGM1′ and OGM2′, which is equivalent to the lower bound (26).

Since the analytical lower bound (26) matches the numerical tight bound in Table 1, we conjecture that the quadratic function f2(x) is the worst-case function for the secondary sequence of OGM′ and thus (26) is the tight worst-case bound. Whereas FGM has similar worst-case bounds (and behavior as conjectured by Taylor et al. [9, Conjectures 4 and 5]) for both its primary and secondary sequence, the two sequences of OGM′ (or intermediate iterates of OGM) have two different worst-case behaviors, as discussed further in Section 5.2.

4.5 Related Work

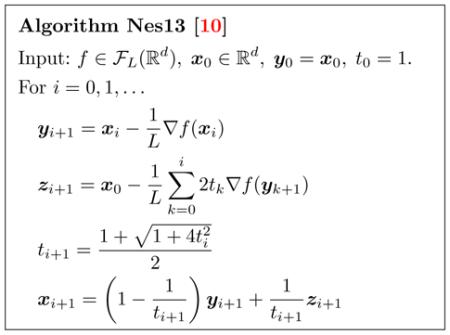

Nesterov’s Accelerated First-order Method in [10] Interestingly, an algorithm in [10, Section 4] is similar to OGM2′ and satisfies same convergence bound (19) for the primary sequence {yi}, which we call Nes13 in this paper for convenience.1

The only difference between OGM2′ and Nes13 is the gradient used for the update of zi. While both algorithms achieve same bound (19), Nes13 is less attractive in practice since it requires computing gradients at two different points xi and yi+1 at each ith iteration.

Similar to Proposition 4.1, the following proposition shows that the bound (19) is asymptotically tight for Nes13.

Proposition 4.3 For the function f1,OGM′ (x; N) (21) in ℱL(ℝd), the iterate yN generated by Nes13 achieves the lower bound (22).

Proof See the proof of Proposition 4.1.

5 Two Worst-case Functions for an Optimal Fixed-step GM and OGM

This section discusses two algorithms, an optimal fixed-step GM and OGM, in class FO that have a piecewise affine-quadratic function and a quadratic function as two worst-case functions. Considering that OGM is optimal among first-order methods (for d ≥ N +1), it is interesting that OGM has two different types of worst-case functions, because this property resembles the (numerical) analysis of the optimal fixed-step GM in [9] (reviewed below).

5.1 Two Worst-case Functions for an Optimal Fixed-step GM

The following is GM with a constant step size h.

For GM with 0 < h < 2, both [9] and [2] conjecture the following tight convergence bound:

| (28) |

The proof of the bound (28) for 0 < h ≤ 1 is given in [2], while the proof for 1 < h < 2 is still unknown but strong numerical evidence is given in [9]. In other words, at least one of the two functions specified below is conjectured to be a worst-case for GM with a constant step size 0 < h < 2. Such functions are a piecewise affine-quadratic function

| (29) |

and a quadratic function f2(x) (25), where f1,GM(x; h, N) and f2(x) contribute to the factors and (1−h)2N respectively in (28). Here, f1,GM(x; h, N) is a worst-case function where the GM iterates approach the optimum slowly, whereas f2(x) is a worst-case function where the iterates overshoot the optimum. (See Fig. 1.)

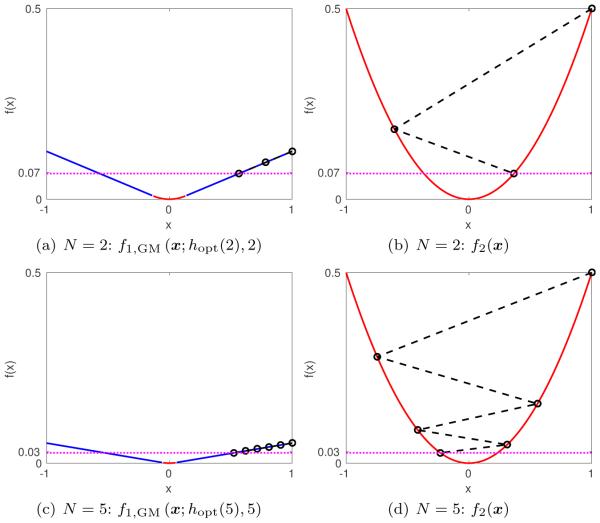

Fig. 1.

The worst-case performance of the sequence of GM with an optimal fixed-step hopt(N) for N = 2, 5 and d = L = R = 1. The numerically optimized fixed-step sizes for N = 2, 5 are hopt(2) = 1.6058 and hopt(5) = 1.7471 [9].

Assuming that the above conjecture for a fixed-step GM holds, Taylor et al. [9] searched (numerically) for the optimal fixed-step size 0 < hopt(N) < 2 for given N that minimizes the bound (28):

| (30) |

GM with the step hopt(N) has two worst-case functions f1,GM(x; h, N) and f2(x), and must compromise between two extreme cases. On the other hand, the case 0 < h < hopt(N) has only f1,GM(x; h, N) as the worst-case and the case hopt(N) < h < 2 has only f2(x) as the worst-case. We believe this compromise is inherent to optimizing the worst-case performance of FO methods. The next section shows that the optimal OGM also has this desirable property.

For the special case of N = 1, the optimal OGM reduces to GM with a fixed-step h = 1.5, and this confirms the conjecture in [9] that the step hopt(1) = 1.5 (30) is optimal for a fixed-step GM with N = 1. However, proving the optimality of hopt(N) (30) for the fixed-step GM for N > 1 is left as future work.

Fig. 1 visualizes the worst-case performance of GM with the optimal fixed-step hopt(N) for N = 2 and N = 5. As discussed, for the two worst-case function in Fig. 1, the final iterates reach the same cost function value, where the iterates approach the optimum slowly for f1,GM(x; h, N), and overshoot for f2(x).

5.2 Two Worst-case Functions for the Last Iterate xN of OGM

[1, Theorem 3] showed that f1,OGM(x; N) (7) is a worst-case function for the last iterate xN of OGM. The following theorem shows that a quadratic function f2(x) (25) is also a worst-case function for the last iterate of OGM.

Theorem 5.1 For the quadratic function (25) in ℱL(ℝd), both OGM1 and OGM2 exactly achieve the convergence bound (5), i.e.,

Proof We use induction to show that the following iterates:

correspond to the iterates of OGM1 and OGM2 applied to f2(x).

Starting from x0 = Rν, where ν is a unit vector, and assuming that (31) holds for i < N , we have

where the second and third equalities use (1) and (10). Therefore, we have

after N iterations of OGM1 and OGM2, exactly matching the bound (5).

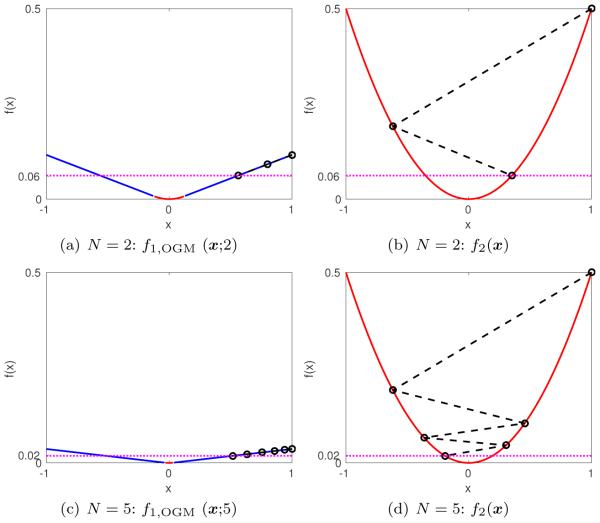

Thus the last iterate xN of OGM has two worst case functions: f1,OGM(x; N) and f2(x), similar to an optimal fixed-step GM in Section 5.1. Fig. 2 illustrates behavior of OGM for N = 2 and N = 5, where OGM reaches same worst-case cost function value for two different functions f1,OGM(x; N) and f2(x) after N iterations.

Fig. 2.

The worst-case performance of the secondary sequence of OGM for N = 2, 5 and d = L = R = 1.

In [9, Conjecture 4] and Section 4.2, the primary sequence of OGM is conjectured to have f1,OGM′ (x; N) as a worst-case function, whereas the quadratic function f2(x) becomes the best-case as the first primary iterate of OGM reaches the optimum just in one step. On the other hand, Section 4.4 conjectured that f2(x) is a worst-case function for the secondary sequence of OGM prior to the last iterate. Apparently the primary and secondary sequences of OGM have two extremely different worst-case analyses, whereas the last iterate xN of OGM compromises between the two worst-case behaviors, making the worst-case behavior of the optimal OGM interesting.

6 Conclusions

We provided an analytical convergence bound for the primary sequence of OGM1 and OGM2, augmenting the bounds of the last iterate of the secondary sequence of OGM in [1]. The corresponding convergence bound is twice as small as that of Nesterov’s FGM, showing that the primary sequence of OGM is faster than FGM. However, interestingly the intermediate iterates of the secondary sequence of OGM were found to be slower than FGM in the worstcase.

We proposed two new formulations of OGM, called OGM1′ and OGM2′ that are related closely to Nesterov’s accelerated first-order methods in [10] (originally developed for nonsmooth composite convex functions and differing from FGM in [3, 7]). For smooth problems, OGM and OGM′ provide faster convergence speed than [10] considering the number of gradient computations required per iteration.

We showed that the last iterate of the secondary sequence of OGM has two types of worst-case functions, a piecewise affine-quadratic function and a quadratic function. In light of the optimality of OGM (for d ≥ N + 1) in [4], it is interesting that OGM has these two types of worst-case functions. Because the optimal fixed-step GM also appears to have two such worst-case functions, one might conjecture that this behavior is a general characteristic of optimal fixed-step first-order methods.

In addition to the optimality of fixed-step first-order methods for the cost function value, studying the optimality for an alternative criteria such as the gradient (||∇f (xN )||) is an interesting research direction. Just as Nesterov’s FGM was extended for solving nonsmooth composite convex functions [10, 15], it would be interesting to extend OGM to such problems; recently this was numerically studied by Taylor et al. [13]. Incorporating a line-search scheme in [10, 15] to OGM would be also worth investigating, since computing the Lipschitz constant L is sometimes expensive in practice.

Acknowledgements

This research was supported in part by NIH grant U01 EB018753.

Footnotes

Nes13 was developed originally to deal with nonsmooth composite convex functions with a line-search scheme [10, Section 4], whereas the algorithm shown here is a simplified version of [10, Section 4] for unconstrained smooth convex minimization (M) without a line-search.

Mathematics Subject Classification (2000) 90C25 · 90C30 · 90C60 · 68Q25 · 49M25 · 90C22

References

- 1.Kim D, Fessler JA. Optimized first-order methods for smooth convex minimization. Mathematical Programming. 2015;159(1):81–107. doi: 10.1007/s10107-015-0949-3. DOI 10.1007/s10107-015-0949-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Drori Y, Teboulle M. Performance of first-order methods for smooth convex minimization: A novel approach. Math. Program. 2014;145(1-2):451–82. DOI 10.1007/s10107-013-0653-0. [Google Scholar]

- 3.Nesterov Y. A method for unconstrained convex minimization problem with the rate of convergence O(1/k2) Dokl. Akad. Nauk. USSR. 1983;269(3):543–7. [Google Scholar]

- 4.Drori Y. The exact information-based complexity of smooth convex minimization. 2016 URL http://arxiv.org/abs/1606.01424 .Arxiv 1606.01424.

- 5.Cevher V, Becker S, Schmidt M. Convex optimization for big data: scalable, randomized, and parallel algorithms for big data analytics. IEEE Sig. Proc. Mag. 2014;31(5):32–43. DOI 10.1109/MSP.2014.2329397. [Google Scholar]

- 6.Polyak BT. Some methods of speeding up the convergence of iteration methods. USSR Comp. Math. Math. Phys. 1964;4(5):1–17. [Google Scholar]

- 7.Nesterov Y. Smooth minimization of non-smooth functions. Mathematical Programming. 2005;103(1):127–52. DOI 10.1007/s10107-004-0552-5. [Google Scholar]

- 8.Nesterov Y. Introductory lectures on convex optimization: A basic course. Kluwer Academic Publishers; Dordrecht: 2004. [Google Scholar]

- 9.Taylor AB, Hendrickx JM, Glineur F. Smooth strongly convex interpolation and exact worst-case performance of first-order methods. Mathematical Programming. 2016 DOI 10.1007/s10107-016-1009-3. [Google Scholar]

- 10.Nesterov Y. Gradient methods for minimizing composite functions. Mathematical Programming. 2013;140(1):125–61. DOI 10.1007/s10107-012-0629-5. [Google Scholar]

- 11.Drori Y, Teboulle M. An optimal variant of Kelley’s cutting-plane method. Mathe-matical Programming. 2016 DOI 10.1007/s10107-016-0985-7. [Google Scholar]

- 12.Drori Y. Contributions to the complexity analysis of optimization algorithms. Tel-Aviv Univ.; Israel: 2014. Ph.D. thesis. [Google Scholar]

- 13.Taylor AB, Hendrickx JM, Glineur F. Exact worst-case performance of first-order algorithms for composite convex optimization. 2015 URL http://arxiv.org/abs/1512.07516. Arxiv 1512.07516.

- 14.Lessard L, Recht B, Packard A. Analysis and design of optimization algorithms via integral quadratic constraints. SIAM J. Optim. 2016;26(1):57–95. DOI 10.1137/ 15M1009597. [Google Scholar]

- 15.Beck A, Teboulle M. A fast iterative shrinkage-thresholding algorithm for linear in- verse problems. SIAM J. Imaging Sci. 2009;2(1):183–202. DOI 10.1137/080716542. [Google Scholar]