Abstract

Neural population equations such as neural mass or field models are widely used to study brain activity on a large scale. However, the relation of these models to the properties of single neurons is unclear. Here we derive an equation for several interacting populations at the mesoscopic scale starting from a microscopic model of randomly connected generalized integrate-and-fire neuron models. Each population consists of 50–2000 neurons of the same type but different populations account for different neuron types. The stochastic population equations that we find reveal how spike-history effects in single-neuron dynamics such as refractoriness and adaptation interact with finite-size fluctuations on the population level. Efficient integration of the stochastic mesoscopic equations reproduces the statistical behavior of the population activities obtained from microscopic simulations of a full spiking neural network model. The theory describes nonlinear emergent dynamics such as finite-size-induced stochastic transitions in multistable networks and synchronization in balanced networks of excitatory and inhibitory neurons. The mesoscopic equations are employed to rapidly integrate a model of a cortical microcircuit consisting of eight neuron types, which allows us to predict spontaneous population activities as well as evoked responses to thalamic input. Our theory establishes a general framework for modeling finite-size neural population dynamics based on single cell and synapse parameters and offers an efficient approach to analyzing cortical circuits and computations.

Author summary

Understanding the brain requires mathematical models on different spatial scales. On the “microscopic” level of nerve cells, neural spike trains can be well predicted by phenomenological spiking neuron models. On a coarse scale, neural activity can be modeled by phenomenological equations that summarize the total activity of many thousands of neurons. Such population models are widely used to model neuroimaging data such as EEG, MEG or fMRI data. However, it is largely unknown how large-scale models are connected to an underlying microscale model. Linking the scales is vital for a correct description of rapid changes and fluctuations of the population activity, and is crucial for multiscale brain models. The challenge is to treat realistic spiking dynamics as well as fluctuations arising from the finite number of neurons. We obtained such a link by deriving stochastic population equations on the mesoscopic scale of 100–1000 neurons from an underlying microscopic model. These equations can be efficiently integrated and reproduce results of a microscopic simulation while achieving a high speed-up factor. We expect that our novel population theory on the mesoscopic scale will be instrumental for understanding experimental data on information processing in the brain, and ultimately link microscopic and macroscopic activity patterns.

Introduction

When neuroscientists report electrophysiological, genetic, or anatomical data from a cortical neuron, they typically refer to the cell type, say, a layer 2/3 fast-spiking interneuron, a parvalbumin-positive neuron in layer 5, or a Martinotti cell in layer 4, together with the area, say primary visual cortex or somatosensory cortex [1–4]. Whatever the specific taxonomy used, the fact that a taxonomy is plausible at all indicates that neurons can be viewed as being organized into populations of cells with similar properties. In simulation studies of cortical networks with spiking neurons, the number of different cell types, or neuronal populations, per cortical column ranges from eight [5] to about 200 [6] with 31’000 to 80’000 simulated neurons for one cortical column, but larger simulations of several columns adding up to a million neurons (and 22 cell types) have also been performed [7]. In the following, we will refer to a model where each neuron in each population is simulated explicitly by a spiking neuron model as a microscopic model.

On a much coarser level, neural mass models [8–10], also called field models [11–13], population activity equations [14], rate models [15], or Wilson-Cowan models [16] represent the activity of a cortical column at location x by one or at most a few variables, such as the mean activity of excitatory and inhibitory neurons located in the region around x. Computational frameworks related to neural mass models have been used to interpret data from fMRI [17, 18] and EEG [9]. Since neural mass models give a compact summary of coarse neural activity, they can be efficiently simulated and fit to experimental data [17, 18].

However, neural mass models have several disadvantages. First, while the stationary state of neural mass activity can be matched to the single-neuron gain function and hence to detailed neuron models [11, 14, 19–22], the dynamics of neural mass models in response to a rapid change in the input does not correctly reproduce a microscopic simulation of a population of neurons [14, 22, 23]. Second, fluctuations of activity variables in neural mass models are either absent or described by an ad hoc noise model. Moreover, the links of neural mass models to local field potentials are difficult to establish [24]. Because a systematic link to microscopic models at the level of spiking neurons is missing, existing neural mass models must be considered as heuristic, phenomenological, albeit successful, descriptions of neural activity.

In this paper we address the question of whether equations for the activity of populations, similar in spirit but not necessarily identical to Wilson-Cowan equations [16], can be systematically derived from the interactions of spiking neurons at the level of microscopic models. At the microscopic level, we start from generalized integrate-and-fire (GIF) models [14, 25, 26] because, first, the parameters of such GIF models can be directly, and efficiently, extracted from experiments [27] and, second, GIF models can predict neuronal adaptation under step-current input [28] as well as neuronal firing times under in-vivo-like input [26]. In our modeling framework, the GIF neurons are organized into different interacting populations. The populations may correspond to different cell types within a cortical column with known statistical connectivity patterns [3, 6]. Because of the split into different cell types, the number of neurons per population (e.g., fast-spiking inhibitory interneurons in layer 2/3) is finite and in the range of 50–2000 [3]. We call a model at the level of interacting cortical populations of finite size a mesoscopic model. The mathematical derivation of the mesoscopic model equations from the microscopic model (i.e. network of generalized integrate-and-fire neurons) is the main topic of this paper. The small number of neurons per population is expected to lead to characteristic fluctuations of the activity which should match those of the microscopic model.

The overall aims of our approach are two-fold. As a first aim, we seek to develop a theoretical framework for cortical information processing. The main advantage of a systematic link between neuronal parameters and mesoscopic activity is that we can quantitatively predict the effect of changes of neuronal parameters in (or of input to) one population on the activation pattern of this as well as other populations. In particular, we expect that a valid mesoscopic model of interacting cortical populations will become useful to predict the outcome of experiments such as optogenetic stimulation of a subgroup of neurons [29–31]. A better understanding of the activity patterns within a cortical column may in turn, after suitable postprocessing, provide a novel basis for models of EEG, fMRI, or LFP [9, 17, 18, 24]. While we cannot address all these points in this paper, we present an example of nontrivial activity patterns in a network model with stochastic switching between different activity states potentially linked to perceptual bistability [32–34] or resting state activity [18].

As a second aim, we seek to contribute to multiscale simulation approaches [35] in the neurosciences by providing a new tool for efficient and consistent coarse-grained simulation at the mesoscopic scale. Understanding the computations performed by the nervous system is likely to require models on different spatial scales, ranging from pharmacological interactions at the subcellular and cellular levels to cognitive processes at the level of large-scale models of the brain. Ideally, a modeling framework should be efficient and consistent across scales in the following sense. Suppose, for example, that we are interested in neuronal membrane potentials in one specific group of neurons which receives input from many other groups of neurons. In a microscopic model, all neurons would be simulated at the same level; in a multiscale simulation approach, only the group of neurons where we study the membrane potentials is simulated at the microscopic level, whereas the input from other groups is replaced by the activity of the mesoscopic model. A multiscale approach is consistent if the replacement of parts of the microscopic simulation by a mesoscopic simulation does not lead to any change in the observed pattern of membrane potentials in the target population. The approach is efficient if the change of simulation scale leads to a significant speed-up of simulation. While we do not intend to present a systematic comparison of computational performance, we provide an example and measure the speed-up factor between mesoscopic and microscopic simulation for the case of a cortical column consisting of eight populations [5].

Despite its importance, a quantitative link between mesoscopic population models and microscopic neuronal parameters is still largely lacking. This is mainly due to two obstacles. First, in a cortical column the number of neurons of the same type is small (50–2000 [3]) and hence far from the N → ∞ limit of classic “macroscopic” theories in which fluctuations vanish [14, 36–38]. Systematic treatments of finite-size networks using methods from statistical physics (system size expansion [39], path integral methods [40, 41], neural Langevin equations [42–45]) have so far been limited to simplified Markov models that, however, lack a clear connection to single neuron physiology.

Second, spikes generated by a neuron are generally correlated in time due to refractoriness [46], adaptation and other spike history dependencies [28, 47–51]. Therefore spike trains are often not well described by an (inhomogeneous) Poisson process, especially during periods of high firing rates [46]. As a consequence, the mesoscopic population activity (i.e. the sum of spike trains) is generally not simply captured by a Poisson model either [52–54], even in the absence of synaptic couplings [55]. These non-Poissonian finite-size fluctuations on the mesoscopic level in turn imply temporally correlated synaptic input to other neurons (colored noise) that can drastically influence the population activity [53, 54, 56] but which is hard to tackle analytically [57]. Therefore, most theoretical approaches rely on a white-noise or Poisson assumption to describe the synaptic input [58–62], thereby neglecting temporal correlations caused by spike-history dependencies in single neuron activity. Here, we will exploit earlier approaches to treating refractoriness [23] and spike frequency adaptation [55, 63] and combine these with a novel treatment of finite-size fluctuations.

Our approach is novel for several reasons. First, we use generalized integrate-and-fire models that accurately describe neuronal data [25, 26] as our microscopic reference. Second, going beyond earlier studies [58–60, 64], we derive stochastic population equations that account for both strong neuronal refractoriness and finite population size in a consistent manner. Third, our theory has a non-perturbative character as it neither assumes the self-coupling (refractoriness and adaptation) to be weak [65] nor does it hinge on an expansion around the N → ∞ solution for large but finite N [55, 66, 67]. Thus, it is also valid for relatively small populations and non-Gaussian fluctuations. Fourth, in contrast to linear response theories [55, 68–72], our mesoscopic equations work far away from stationary states and reproduce large fluctuations in multistable networks.

In the Results section we present our mesoscopic population equations, suggest an efficient numerical implementation, and illustrate the main dynamical effects via a selected number of examples. To validate the mesoscopic theory we numerically integrate the stochastic differential equations for the mesoscopic population activities and compare their statistics to those of the population activities derived from a microscopic simulation. A detailed account of the derivation is presented in the Methods section. In the Discussion section we point out limitations and possible applications of our mesoscopic theory.

Results

We consider a structured network of interacting homogeneous populations. Homogeneous here means that each population consists of spiking neurons with similar intrinsic properties and random connectivity within and between populations. To define such populations, one may think of grouping neurons into genetically-defined cell classes of excitatory and inhibitory neurons [4], or, more traditionally, into layers and cell types (Fig 1A). For example, pyramidal cells in layer 2/3 of rodent somatosensory cortex corresponding to whisker C2 form a population of about 1700 neurons [3]. Pyramidal cells in layer 5 of the same cortical column form another one (∼1200 neurons [3]), fast-spiking inhibitory cells in layer 2/3 a third one (∼100 neurons [3]) and non-fast-spiking inhibitory cells in layer 2/3 a fourth one (∼130 neurons [3]), and so on [3, 6, 73]. We suppose that the parameters of typical neurons from each population [27, 73, 74], the number of neurons per population [3, 73] and the typical connection probabilities [5] and strengths within and between populations [73, 75–79] are known from experimental data. The resulting spiking neural network can be simulated on a cellular level by numerical integration of the spiking dynamics of each individual neuron (Fig 1B). In the following, we will refer to this level of description as the microscopic level. Apart from being computationally expensive, the full microscopic network dynamics is highly complex and hence difficult to understand. To overcome these shortcomings, we have developed a new mean-field description for the mesoscopic dynamics of interacting populations.

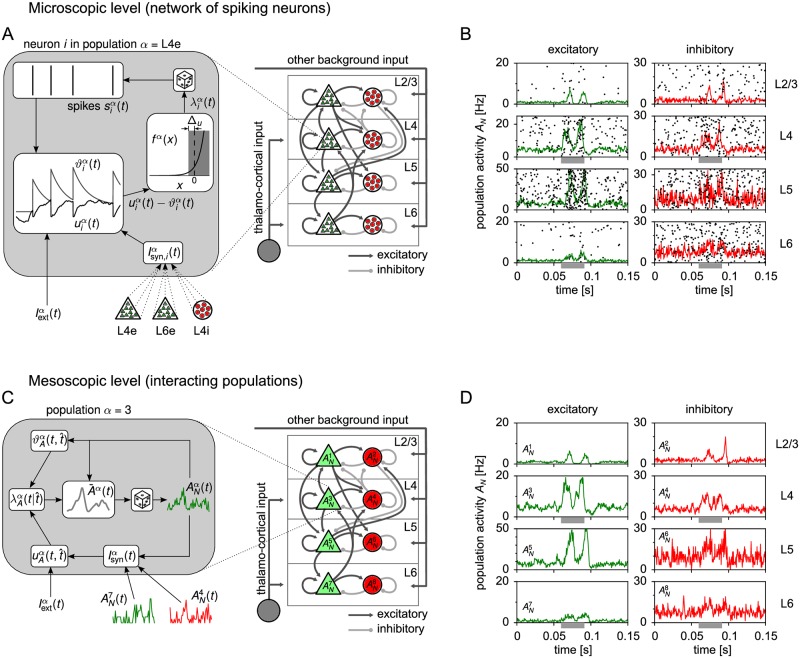

Fig 1. Illustration of population models on the microscopic and mesoscopic level.

(A) Cortical column model [5] with ∼ 80’000 neurons organized into four layers (L2/3, L4, L5, L6) each consisting of an excitatory (“e”) and an inhibitory (“i”) population. On the microscopic level, each individual neuron is described by a generalized integrate-and-fire (GIF) model with membrane potential $u_i^\alpha(t)$, dynamic threshold $\vartheta_i^\alpha(t)$ and conditional intensity $f^\alpha(u_i^\alpha(t) - \vartheta_i^\alpha(t))$. Inset: GIF dynamics for a specific neuron i of population L4e (α = L4e). The neuron receives spikes from neurons in L4e, L4i and L6e, which drive the membrane potential $u_i^\alpha(t)$. Spikes are elicited stochastically by a conditional intensity $\lambda_i^\alpha(t) = f^\alpha(u_i^\alpha(t) - \vartheta_i^\alpha(t))$ that depends on the instantaneous difference between $u_i^\alpha(t)$ and the dynamic threshold $\vartheta_i^\alpha(t)$. Spike feedback (voltage reset and spike-triggered threshold movement) gives rise to spike history effects like refractoriness and adaptation. (B) Spike raster plot of the first 200 neurons of each population. The panels correspond to the populations in (A). Layers 4 and 6 are stimulated by a step current during the interval (0.06 s, 0.09 s) mimicking thalamic input (gray bars). Solid lines show the population activities $A_N^\alpha(t)$ computed with temporal resolution Δt = 0.5 ms, cf. Eq (2). The activities are stochastic due to the finite population size. (C) On the mesoscopic level, the model reduces to a network of 8 populations, each represented by its population activity $A_N^\alpha(t)$. Inset: The mesoscopic model generates realizations of $A_N^\alpha(t)$ from an expected rate $\bar{A}^\alpha(t)$, which is a deterministic functional of the past population activities. (D) A corresponding simulation of the mesoscopic model yields population activities with the same temporal statistics as in (B).

Mesoscopic population equations

A population α of size $N^\alpha$ is represented by its population activity $A_N^\alpha(t)$ (Greek superscripts label the populations, Fig 1C) defined as

$$A_N^\alpha(t) = \frac{1}{N^\alpha} \sum_{i=1}^{N^\alpha} s_i^\alpha(t) \qquad (1)$$

Here, $s_i^\alpha(t) = \sum_k \delta(t - t_{i,k}^\alpha)$ with the Dirac δ-function denotes the spike train of an individual neuron i in population α with spike times $t_{i,k}^\alpha$. Empirically, the population activity is measured with a finite temporal resolution Δt. In this case, we define the coarse-grained population activity as

$$A_N^\alpha(t) = \frac{\Delta n^\alpha(t)}{N^\alpha \Delta t} \qquad (2)$$

where $\Delta n^\alpha(t)$ is the number of neurons in population α that have fired in a time bin of size Δt starting at time t. The two definitions converge in the limit Δt → 0.
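As a small illustration of the coarse-grained definition, the following sketch bins pooled spike times and divides the counts by the population size and the bin width. The function name `population_activity`, the pooled-array input format, and the Poisson toy data are assumptions made for this example only.

```python
import numpy as np

def population_activity(spike_times, n_neurons, t_start, t_stop, dt):
    """Coarse-grained activity A_N(t) = dn(t) / (N * dt), cf. Eq (2).

    spike_times : 1-D array of spike times in seconds, pooled over all
                  neurons of one population (hypothetical input format)
    Returns the bin start times and the activity in Hz.
    """
    n_bins = int(round((t_stop - t_start) / dt))
    edges = t_start + dt * np.arange(n_bins + 1)
    counts, _ = np.histogram(spike_times, bins=edges)   # dn(t) for each bin
    return edges[:-1], counts / (n_neurons * dt)

# Toy data: N = 400 neurons firing as independent Poisson processes at 10 Hz.
rng = np.random.default_rng(0)
N, rate, T, dt = 400, 10.0, 2.0, 0.0005
n_spikes = rng.poisson(N * rate * T)
spikes = rng.uniform(0.0, T, size=n_spikes)

t, A = population_activity(spikes, N, 0.0, T, dt)
print(A.mean())   # time-averaged activity, should lie near the 10 Hz input rate
```

Because each bin contains only a few spikes for N = 400 and Δt = 0.5 ms, the resulting trace fluctuates strongly around its mean, which is the finite-size effect discussed in the text.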

An example of population activities derived from spiking activity in a cortical circuit model under a step current stimulation is shown in Fig 1B. To bridge the scales between neurons and populations, the corresponding mean-field model should ideally result in the same population activities as obtained from the full microscopic model (Fig 1D). Because of the stochastic nature of the population activities, however, the qualifier “same” has to be interpreted in a statistical sense. The random fluctuations apparent in Fig 1B and 1D are a consequence of the finite number of neurons because microscopic stochasticity is not averaged out in the finite sum in Eq (1). This observation is important because estimated neuron numbers reported in experiments on local cortical circuits are relatively small [3, 73]. Therefore, a quantitatively valid population model needs to account for finite-size fluctuations. As mentioned above, we will refer to the population-level with finite size populations (N ∼ 50 to 2000 per population) as the mesoscopic level. In summary, we face the following question: is it possible to derive a closed set of evolution equations for the mesoscopic variables that follow the same statistics as the original microscopic model?

To address this question, we describe neurons by generalized integrate-and-fire (GIF) neuron models (Fig 1A (inset) and Methods, Sec. “Generalized integrate-and-fire model”) with escape noise [14]. In particular, neuron i of population α is modeled by a leaky integrate-and-fire model with dynamic threshold [49, 80]. The variables of this model are the membrane potential $u_i^\alpha(t)$ and the dynamic threshold $\vartheta_i^\alpha(t) = u_{th} + \int_{-\infty}^{t} \theta^\alpha(t - t')\, s_i^\alpha(t')\, dt'$ (Fig 1A, inset), where $u_{th}$ is a baseline threshold and $\theta^\alpha(t)$ is a spike-triggered adaptation kernel or filter function that accounts for adaptation [26, 47, 81–84] and other spike-history effects [14, 84] via a convolution with the neuron's spike train $s_i^\alpha(t)$. In other words, the dynamic threshold depends on earlier spikes $t_{i,k}^\alpha$ of neuron i: $\vartheta_i^\alpha(t) = u_{th} + \sum_{t_{i,k}^\alpha < t} \theta^\alpha(t - t_{i,k}^\alpha)$. Spikes are elicited stochastically depending on the present state of the neuron (Fig 1A, inset). Specifically, the probability that neuron i fires a spike in the next time step [t, t + Δt] is given by $\lambda_i^\alpha(t)\Delta t$, where $\lambda_i^\alpha(t)$ is the conditional intensity of neuron i (also called conditional rate or hazard rate):

$$\lambda_i^\alpha(t) = f^\alpha\big(u_i^\alpha(t) - \vartheta_i^\alpha(t)\big) \qquad (3)$$

with an exponential function $f^\alpha(x) = c^\alpha \exp(x/\Delta_u^\alpha)$. Analysis of experimental data has shown that the “softness” parameter $\Delta_u^\alpha$ of the threshold is in the range of 1 to 5 mV [85]. The parameter $c^\alpha$ can be interpreted as the instantaneous rate at threshold.
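The escape-noise mechanism can be sketched in a few lines. The function names and the numerical values of the rate at threshold and of the softness parameter are illustrative assumptions, not fitted parameters. The sketch also uses the firing probability 1 − exp(−λΔt) instead of λΔt; the two agree for small Δt, and the former stays below one for large rates.

```python
import numpy as np

def escape_rate(u, theta, c=10.0, delta_u=2.0):
    """Conditional intensity f(u - theta) = c * exp((u - theta) / delta_u), cf. Eq (3).

    u, theta : membrane potential and dynamic threshold (mV)
    c        : rate at threshold (Hz); 10 Hz is an illustrative value
    delta_u  : threshold softness (mV); 2 mV lies in the reported 1-5 mV range
    """
    return c * np.exp((np.asarray(u) - theta) / delta_u)

def spike_probability(u, theta, dt, **params):
    """Probability of at least one spike in [t, t + dt).

    Uses 1 - exp(-lambda * dt), which reduces to lambda * dt for small dt
    (a choice made for this sketch).
    """
    return 1.0 - np.exp(-escape_rate(u, theta, **params) * dt)

u = np.array([-55.0, -50.0, -45.0])   # mV, hypothetical membrane potentials
theta = -50.0                          # mV, hypothetical threshold value
rates = escape_rate(u, theta)          # equals c where u == theta
probs = spike_probability(u, theta, dt=1e-4)
print(rates, probs)
```

A smaller softness parameter makes the rate rise more steeply around the threshold, so the model approaches a hard-threshold neuron.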

Besides the effect of a spike on the threshold as mediated by the filter function $\theta^\alpha(t)$, a spike also triggers a change of the membrane potential. In the GIF model (Methods, Sec. “Generalized integrate-and-fire model”), the membrane potential is reset after spiking to a reset potential $u_r$, to which $u_i^\alpha(t)$ is clamped for an absolute refractory period $t_{ref}$. Absolute refractoriness is followed by a period of relative refractoriness, where the conditional intensity Eq (3) is reduced. This period is determined by the relaxation of the membrane potential from the reset potential to the unperturbed or “free” potential, denoted h(t), which corresponds to the membrane potential dynamics in the absence of resets.

The GIF model accurately predicts spikes of cortical neurons under noisy current stimulation mimicking in-vivo like input [25, 26] and its parameters can be efficiently extracted from single neuron recordings [26, 27]. Variants of this model have also been suggested that explicitly incorporate biophysical properties such as fast sodium inactivation [86, 87], conductance-based currents [88] and synaptically-filtered background noise [89].

Mean-field approximations

In order to derive a mesoscopic mean-field theory for populations of GIF neurons, we first approximate the conditional intensity $\lambda_i^\alpha(t)$ of an individual neuron by an effective rate $\lambda_A^\alpha(t|\hat{t})$ that only depends on its last spike time $\hat{t}$ and on the history of the population activity $A_N^\beta(t')$, t′ < t (as expressed by the subscript “A”). This is called the quasi-renewal approximation [63]. Taking into account the dependence on the last spike is particularly important because of neuronal refractoriness.

To obtain such a quasi-renewal description we make two approximations. Firstly, we approximate the random connectivity by an effective full connectivity with proportionally scaled down synaptic weights (“mean-field approximation”). As a result, all neurons of the same population are driven by identical synaptic input (see Methods). This implies that for all neurons that had the same last spike time, the time course of the membrane potential is identical, $u_i^\alpha(t) \approx u_A^\alpha(t, \hat{t})$. Secondly, we make the quasi-renewal approximation for GIF neurons [63], which replaces the threshold $\vartheta_i^\alpha(t)$ by an effective threshold $\vartheta_A^\alpha(t, \hat{t})$. Again, the effective threshold only depends on the last spike time and the history of the population activity. As a final result we obtain the conditional intensity for all neurons with a given last spike time $\hat{t}$ as

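$$\lambda_A^\alpha(t|\hat{t}) = f^\alpha\big(u_A^\alpha(t, \hat{t}) - \vartheta_A^\alpha(t, \hat{t})\big) \qquad (4)$$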

(Fig 1C, inset). A comparison of Eq (4) with Eq (3) shows that the explicit dependence on all past spike times of a given neuron i has disappeared. Instead, the conditional intensity now only depends on the last firing time $\hat{t}$ and the past population activity $A_N^\beta(t')$, t′ < t. To keep the notation simple, we omit in the following the population label α at all quantities.

Finite-size mean field theory

In this section, we present the main theoretical results with a focus on the finite-size effects arising from neuronal refractoriness. So far, we have effectively reduced the firing probability of a neuron to a function $\lambda_A(t|\hat{t})$ that only depends on its last spike time $\hat{t}$ (Fig 2A). This allows us to describe the evolution of the system by the density of the last spike time [23, 68, 88, 89]. Because the last spike time characterizes the refractory state of the neuron, this density will also be referred to as the refractory density. Before we describe the novel finite-N theory, it is instructive to first recall the population equations for the case of infinite N (Fig 2B and 2C). Let us look at the population of neurons at time t and ask the following question: What fraction of these neurons has fired their last spike between $\hat{t}$ and $\hat{t} + d\hat{t}$? This fraction is given by the number of neurons $A(\hat{t})\,d\hat{t}$ that have fired in this interval multiplied by the survival probability $S(t|\hat{t})$ that such a neuron has not fired again until time t. In other words, the product $S(t|\hat{t})A(\hat{t})$ evaluated at time t represents the density of last spike times $\hat{t}$. Because a neuron with last spike time $\hat{t}$ emits a spike with rate $\lambda_A(t|\hat{t})$, the total population activity at time t is given by the integral [23]

$$A(t) = \int_{-\infty}^{t} \lambda_A(t|\hat{t})\, S(t|\hat{t})\, A(\hat{t})\, d\hat{t} \qquad (5)$$

This situation is depicted in Fig 2B. At the same time, the survival probability $S(t|\hat{t})$ of neurons that fired their last spike at $\hat{t}$ decays according to

$$\frac{\partial S(t|\hat{t})}{\partial t} = -\lambda_A(t|\hat{t})\, S(t|\hat{t}) \qquad (6)$$

with initial condition $S(\hat{t}|\hat{t}) = 1$ (Fig 2E and 2F, red line). Eqs (5) and (6) define the population dynamics for N → ∞ [16, 23, 89].
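As a rough numerical illustration of Eqs (5) and (6), the sketch below discretizes the last spike times into bins and propagates the mass of neurons in each bin. The hazard used here (an absolute refractory period followed by a constant rate, independent of the population history) is a toy assumption that stands in for the GIF-derived conditional intensity; all parameter values and variable names are hypothetical.

```python
import numpy as np

dt, t_ref, lam0 = 1e-4, 4e-3, 50.0   # time step (s), refractory period (s), rate (Hz)
K = 1000                              # number of age bins that are tracked (100 ms)
k = np.arange(1, K + 1)               # age of each bin in units of dt
lam = np.where(k > round(t_ref / dt), lam0, 0.0)   # toy hazard as a function of age
P = 1.0 - np.exp(-lam * dt)           # firing probability per time step

# m[i] approximates S(t|t_hat) * A(t_hat) * dt for the bin of age (i + 1) * dt.
m = np.zeros(K)
m[-1] = 1.0                           # start with all neurons non-refractory
A = np.empty(5000)
for n in range(A.size):
    fired = P * m                     # discretized integrand of Eq (5)
    A[n] = fired.sum() / dt           # population activity in Hz
    m -= fired                        # decay of the survival probability, Eq (6)
    m[-1] += m[-2]                    # oldest bin absorbs neurons that age out
    m[1:-1] = m[:-2].copy()           # every other bin becomes one step older
    m[0] = fired.sum()                # neurons that just fired enter at age dt

print(A[-1], 1.0 / (t_ref + 1.0 / lam0))   # stationary rate vs. 1 / mean interval
```

Lumping all old neurons into the last bin is exact only because the toy hazard is constant at large ages; for a hazard that keeps changing with age, the number of bins would have to cover the full memory of the neuron. The total mass `m.sum()` stays equal to one, which is the discrete counterpart of the normalization of the refractory density.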

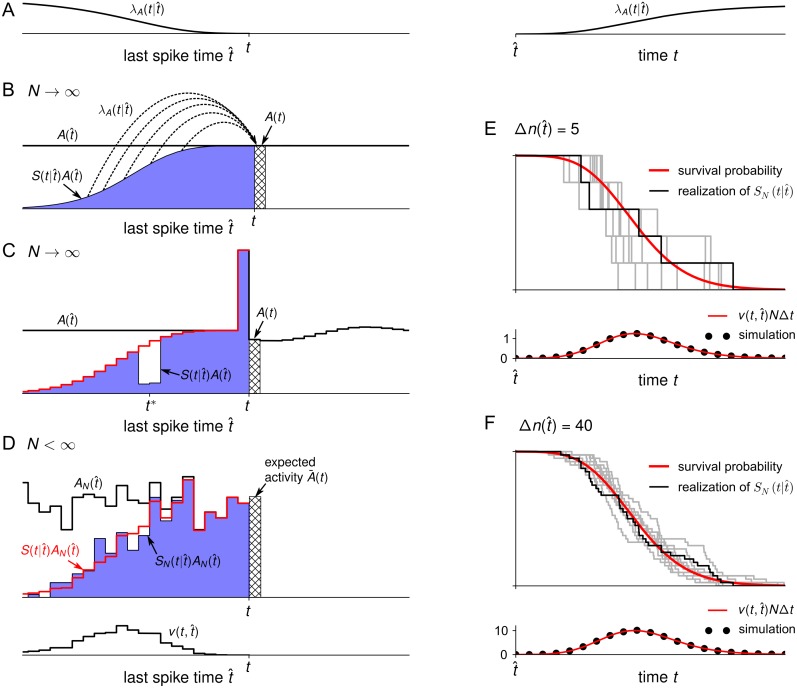

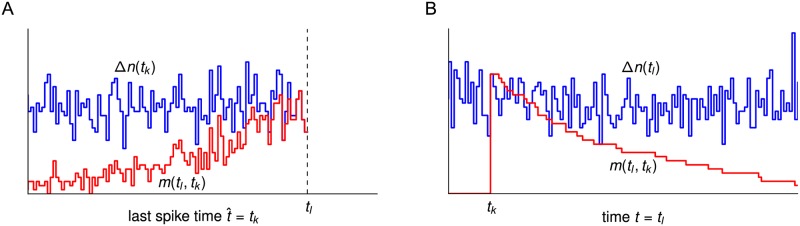

Fig 2. How fluctuations of the refractory density affect the population activity.

(A) The conditional intensity $\lambda_A(t|\hat{t})$ shown as a function of $\hat{t}$ (left) and t (right). Typically, the conditional intensity increases as the time since the last spike grows and the neuron recovers from refractoriness. (B) For N → ∞, the population activity A(t) (hatched bin) results from $\lambda_A(t|\hat{t})$ averaged over the last spike times $\hat{t}$ with a weighting factor $S(t|\hat{t})A(\hat{t})$ (blue) corresponding to the density of last spike times. Here, $S(t|\hat{t})$ is the survival probability. (C) The last spike times $\hat{t}$ are discretized into time bins. In the bin immediately before t, a large fluctuation (blue peak) was induced by forcing some of the neurons with last spike time around t* to fire. At time t, the density of last spike times (blue) has a hole and a peak of equal probability mass. The red line shows the pseudo-density $S(t|\hat{t})A(\hat{t})$ that would be obtained if we had used the survival probability $S(t|\hat{t})$ of the unperturbed system. The peak at $\hat{t} = t - \Delta t$ does not contribute to the activity A(t) because of refractoriness, but the hole at $\hat{t} = t^*$ contributes with a non-vanishing rate (A), implying a reduction of A(t) (hatched bin). (D) For a finite population size (here N = 400), the refractory density $S_N(t|\hat{t})A_N(\hat{t})$ (blue) determines the expectation $\bar{A}(t)$ (hatched bin) of the fluctuating activity $A_N(t)$. Analogous to the forced fluctuation in (C), the finite-size fluctuations are associated with negative and positive deviations in the refractory density (holes and overshoots) compared to the non-normalized density $S(t|\hat{t})A_N(\hat{t})$ (red line) that would be obtained if only the mean $S(t|\hat{t})$ and not the exact survival fraction $S_N(t|\hat{t})$ had been taken into account. The variance of the deviations is proportional to $v(t, \hat{t})$ given by Eq (12). As a function of $\hat{t}$, $v(t, \hat{t})$ shows the range of $\hat{t}$ where deviations are most prominent (bottom). (E, F) Given the number of neurons firing in the bin $[\hat{t}, \hat{t} + \Delta t)$, $\Delta n(\hat{t})$, the fraction of neurons $S_N(t|\hat{t})$ that survive until time t is shown for ten realizations (gray lines, one highlighted in black for clarity). The mean fraction equals the survival probability $S(t|\hat{t})$ (red line, top panel). The variance of the number of survived neurons at time t, $v(t, \hat{t})N\Delta t$, is shown at the bottom (red line: semi-analytic theory, Eq (12); circles: simulation). (E) , (F) .

In the limit N → ∞, the dynamics of $A_N(t) = A(t)$ is deterministic because microscopic noise averages out. Nevertheless, the infinite-N case is useful to understand the main effect of fluctuations in the finite-N case. To this end, let us perform the following thought experiment: in a small time interval of length Δt immediately before time t, we induce a large, positive fluctuation in the activity by forcing many of the neurons with last spike close to a given time $\hat{t} = t^*$ to fire a spike (Fig 2C). As a result, the density of last spike times at time t exhibits a large peak just prior to time t corresponding to the large number of neurons that have been forced to fire in the time interval [t − Δt, t). At the same time, these neurons leave behind a “hole” in the density around $\hat{t} = t^*$. Because the number of neurons is conserved, this hole exactly balances the area under the peak, and hence, the density of last spike times remains normalized. However, the two fluctuations (the hole and the peak) have two different effects on the population activity after time t. Specifically, the hole implies that some of the neurons which normally would have fired at time t with a nonzero rate $\lambda_A(t|t^*) > 0$ are no longer available. Moreover, neural refractoriness implies that neurons which fired in the peak have a small or even vanishing rate $\lambda_A(t|t - \Delta t) \approx 0$ at time t. As a result, the population activity is reduced shortly after the perturbation (Fig 2C). This example highlights the importance of the normalization of the refractory density as well as the state-dependence of the firing probability for understanding the effect of fluctuations. In particular, the normalization condition and neuronal refractoriness imply that a positive fluctuation of the population activity is succeeded by a negative fluctuation, and vice versa. This behavior is characteristic for spiking neurons, which are known to exhibit negative auto-correlations of their mean-centered spike trains at short time lags (see e.g. [14, 53, 90]).

We now turn to the finite-size case. In this case, it is advantageous to discretize the last spike times into small bins of size Δt that begin at the grid points $t_k = k\Delta t$, $k \in \mathbb{Z}$. Furthermore, we adopt the definition of the coarse-grained population activity, Eq (2), i.e. we consider the number of spikes $\Delta n(t_k)$ in the time bin $[t_k, t_k + \Delta t)$. Instead of the survival probability, we introduce the fraction of survived neurons $S_N(t|t_k)$, $t_k < t$, such that $S_N(t|t_k)\Delta n(t_k)$ is the number of neurons from bin k that have not fired again until time t (Fig 2D and 2E). Dividing this number by NΔt and taking the continuum limit Δt → 0 yields the density of last spike times $S_N(t|\hat{t})A_N(\hat{t})$ in the case of finite N. Since all neurons are uniquely identified by their last spike time, this density is normalized [23]

$$\int_{-\infty}^{t} S_N(t|\hat{t})\, A_N(\hat{t})\, d\hat{t} = 1 \qquad (7)$$

We note that differentiating this equation with respect to time t yields the population activity $A_N(t) = -\int_{-\infty}^{t} \partial_t S_N(t|\hat{t})\, A_N(\hat{t})\, d\hat{t}$ as the formal finite-size analog of Eq (5). The change of the survival fraction $\partial_t S_N(t|\hat{t})$, however, is not deterministic anymore as in Eq (6) but follows a stochastic jump process (Fig 2E and 2F): In the time step [t, t + Δt), the number of survived neurons for a given bin in the past, $S_N(t|\hat{t})\Delta n(\hat{t})$, makes a downstep $X(t|\hat{t})$ that corresponds to the number of neurons that fire in the group with last spike time $\hat{t}$. For sufficiently small Δt, this number is Poisson-distributed with mean $\lambda_A(t|\hat{t})\, S_N(t|\hat{t})\, \Delta n(\hat{t})\, \Delta t$. Hence, the fraction of survived neurons $S_N(t|\hat{t})$ evolves in time like a random staircase according to the update rule $S_N(t + \Delta t|\hat{t}) = S_N(t|\hat{t}) - X(t|\hat{t})/\Delta n(\hat{t})$. The activity $A_N(t) = \Delta n(t)/(N\Delta t)$ in the time bin starting at t is given by the sum of all the downsteps, $\Delta n(t) = \sum_{\hat{t} < t} X(t|\hat{t})$, where the sum runs over all possible last spike times. This updating procedure represents the evolution of the density of last spike times, $S_N(t|\hat{t})A_N(\hat{t})$, for finite N under the quasi-renewal approximation (cf. Methods, Eqs (41) and (42)). Although it is possible to simulate such a finite-N stochastic process using the downsteps $X(t|\hat{t})$, this process will not yield the reduced mesoscopic dynamics that we are looking for. The reason is that the variable $S_N(t|\hat{t})$ refers to the subpopulation of neurons that fired in the small time bin at $\hat{t}$. For small Δt, the size of the subpopulation, $\Delta n(\hat{t})$, is a small number, much smaller than N. In particular, in the limit Δt → 0, the simulation of $S_N(t|\hat{t})$ for all $\hat{t}$ in the past would be as complex as the original microscopic dynamics of N neurons. Therefore we must consider such a simulation as a microscopic simulation. To see the difference to a mesoscopic simulation, we note that the population activity $A_N(t)$ involves the summation of many random processes (the downsteps $X(t|\hat{t})$) over many small bins. If we succeed in simulating $A_N(t)$ directly from an underlying rate dynamics that depends deterministically on the past activities $A_N(t')$, t′ < t, we will have a truly mesoscopic simulation. How to arrive at a formulation directly at the level of mesoscopic quantities will be the topic of the rest of this section.
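The jump process over last-spike-time bins can be sketched as follows. This is a minimal illustration under stated assumptions: the hazard is a toy function of the age only (absolute refractory period followed by a constant rate), the downsteps are drawn from a binomial rather than a Poisson distribution so that the number of survivors in a bin can never become negative, and all names and parameter values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 400                                # population size
dt, t_ref, lam0 = 1e-4, 4e-3, 50.0     # time step (s), refractory period (s), rate (Hz)
K = 1000                               # number of age bins that are tracked
k = np.arange(1, K + 1)
P = np.where(k > round(t_ref / dt), 1.0 - np.exp(-lam0 * dt), 0.0)

# n_surv[i] is the number of survivors S_N * dn in the bin of age (i + 1) * dt.
n_surv = np.zeros(K, dtype=np.int64)
n_surv[-1] = N                         # start with all neurons non-refractory
A_N = np.empty(20000)
for step in range(A_N.size):
    down = rng.binomial(n_surv, P)     # one random downstep per last-spike-time bin
    dn = down.sum()                    # total spike count is the sum of all downsteps
    A_N[step] = dn / (N * dt)          # coarse-grained activity, Eq (2)
    n_surv -= down                     # random staircase of the survivor counts
    n_surv[-1] += n_surv[-2]           # oldest bin absorbs neurons that age out
    n_surv[1:-1] = n_surv[:-2].copy()  # every other bin becomes one step older
    n_surv[0] = dn                     # neurons that just fired form the newest bin

print(A_N.mean(), n_surv.sum())
```

The sketch draws one random number per bin and time step, so its cost grows with the number of bins even though only the summed count `dn` enters the population activity. This is the sense in which the procedure remains a microscopic simulation.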

The crucial question is whether the stochasticity of the many different random processes $\{X(t|\hat{t})\}_{\hat{t} < t}$ can be reduced to a single effective noise process that drives the dynamics on the mesoscopic level. To this end, we note that for small Δt and given history $S_N(t|\hat{t})$, $\Delta n(\hat{t})$, $\hat{t} < t$, each bin contributes with rate $\lambda_A(t|\hat{t})\, S_N(t|\hat{t})\, \Delta n(\hat{t})$ a Poisson random number of spikes to the total activity at time t (Fig 2D). Therefore, the total number of spikes Δn(t) is Poisson distributed with mean $N\bar{A}(t)\Delta t$, where $\bar{A}(t)$ is the expected population rate

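$$\bar{A}(t) = \int_{-\infty}^{t} \lambda_A(t|\hat{t})\, S_N(t|\hat{t})\, A_N(\hat{t})\, d\hat{t} \qquad (8)$$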

Here, the integral extends over all last spike times $\hat{t}$ up to but not including time t. Eq (8) still depends on the stochastic variables $S_N(t|\hat{t})$. The main strategy to remove this microscopic stochasticity is to use the evolution of the survival probability $S(t|\hat{t})$, given by Eq (6), and the normalization condition Eq (7). For finite N, the quantity $S(t|\hat{t})$ is formally defined as the solution of Eq (6) and can be interpreted as the mean of the survival fraction $S_N(t|\hat{t})$ (Fig 2E and 2F, see Methods). Importantly, $S(t|\hat{t})$ is a valid mesoscopic quantity since it only depends on the mesoscopic population activity $A_N$ (through $\lambda_A(t|\hat{t})$, cf. Eq (6)), and not on a specific microscopic realization. Combining the survival probability with the actual history of the mesoscopic activity $A_N(\hat{t})$ for $\hat{t} < t$ yields the pseudo-density $S(t|\hat{t})A_N(\hat{t})$. In contrast to the macroscopic density $S(t|\hat{t})A(\hat{t})$ in Eq (5) or the microscopic density $S_N(t|\hat{t})A_N(\hat{t})$, the pseudo-density is not normalized. However, the pseudo-density has the advantage that it is based on mesoscopic quantities only.

Let us split the survival fraction into the mesoscopic quantity and a microscopic deviation, SN = S + δS. In analogy to the artificial fluctuation in our thought experiment, endogenously generated fluctuations in the finite-size system are accompanied by deviations of the microscopic density from the pseudo-density (Fig 2C and 2D, red line). A negative deviation (δS < 0) can be interpreted as a hole and a positive deviation (δS > 0) as an overshoot (compare red curve and blue histogram in Fig 2D). Similar to the thought experiment, the effect of these deviations needs to be weighted by the conditional intensity λA(t|t̂) in order to arrive at the population activity. Eq (8) yields

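| $\bar A(t) = \int_{-\infty}^{t} \lambda_A(t \mid \hat t)\, S(t \mid \hat t)\, A_N(\hat t)\, d\hat t + \int_{-\infty}^{t} \lambda_A(t \mid \hat t)\, \delta S(t \mid \hat t)\, A_N(\hat t)\, d\hat t$ | (9) |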

Analogously, the normalization of the refractory density, Eq (7), can be written as

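| $1 = \int_{-\infty}^{t} S(t \mid \hat t)\, A_N(\hat t)\, d\hat t + \int_{-\infty}^{t} \delta S(t \mid \hat t)\, A_N(\hat t)\, d\hat t$ | (10) |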

We refer to the second integral in Eq (9) as a correction term because it corrects for the error that one would make if one sets SN = S in Eq (8). This correction term represents the overall contribution of the holes (δS < 0) and overshoots (δS > 0) to the expected activity.

To eliminate the microscopic deviations δS in Eq (9) we use the normalization condition, Eq (10). This is possible because the correction term is tightly constrained by the sum of all holes and overshoots, ∫ δS(t|t̂)AN(t̂) dt̂, which by Eq (10) is completely determined by the past mesoscopic activities. Eqs (9) and (10) suggest making the deterministic ansatz ∫ λA(t|t̂)δS(t|t̂)AN(t̂) dt̂ ≈ Λ(t) ∫ δS(t|t̂)AN(t̂) dt̂ for the correction term. As shown in Methods (“Mesoscopic population equations”), the optimal rate Λ(t) that minimizes the mean squared error of this approximation is given by

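| $\Lambda(t) = \dfrac{\int_{-\infty}^{t} \lambda_A(t \mid \hat t)\, v(t, \hat t)\, d\hat t}{\int_{-\infty}^{t} v(t, \hat t)\, d\hat t}$ | (11) |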

Here, the quantity v(t, t̂), called variance function, obeys the differential equation

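| $\dfrac{\partial v(t, \hat t)}{\partial t} = -2\lambda_A(t \mid \hat t)\, v(t, \hat t) + \lambda_A(t \mid \hat t)\, S(t \mid \hat t)\, A_N(\hat t)$ | (12) |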

with initial condition v(t̂, t̂) = 0 (see Methods, Eq (51)). Importantly, the dynamics of v involves mesoscopic quantities only, and hence v is mesoscopic. As shown in Methods and illustrated in Fig 2D (bottom), we can interpret Nv(t, t̂)Δt as the variance of the number of survived neurons, SN(t|t̂)Δn(t̂). To provide an interpretation of the effective rate Λ(t) we note that, for fixed t, the normalized variance v(t, t̂)/∫ v(t, t̂′) dt̂′ is a probability density over t̂. Thus, the effective rate Λ(t) can be regarded as a weighted average of the conditional intensity λA(t|t̂) that accounts for the expected amplitude of the holes and overshoots.

Using the effective rate Λ(t) in Eq (9) results in the expected activity

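| $\bar A(t) = \int_{-\infty}^{t} \lambda_A(t \mid \hat t)\, S(t \mid \hat t)\, A_N(\hat t)\, d\hat t + \Lambda(t) \left( 1 - \int_{-\infty}^{t} S(t \mid \hat t)\, A_N(\hat t)\, d\hat t \right)$ | (13) |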

Looking at the structure of Eq (13), we find that the first term is the familiar population integral known from the infinite-N case, Eq (5). The second term is a correction that is only present in the finite-N case. In fact, in the limit N → ∞, the pseudo-density S(t|t̂)AN(t̂) converges to the macroscopic density S(t|t̂)A(t̂), which is normalized to unity. Hence the correction term vanishes and we recover the population Eq (5) for the infinite system.

To obtain the population activity we consider an infinitesimally small time scale dt such that the probability of a neuron to fire during an interval [t, t + dt) is much smaller than one, i.e. Ā(t)dt ≪ 1. On this time scale, the total number of spikes dn(t) is an independent, Poisson distributed random number with mean Ā(t)N dt, where Ā(t) is given by Eq (13). From Eq (2) thus follows the population activity

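| $A_N(t) = \dfrac{dn(t)}{N\, dt}, \qquad dn(t) \sim \mathrm{Pois}\left[ \bar A(t)\, N\, dt \right]$ | (14) |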

Alternatively, the population activity can be represented as a δ-spike train, or “shot noise”, AN(t) = (1/N) Σk δ(t − tpop,k), where {tpop,k} is a random point process with a conditional intensity function equal to NĀ(t). Here, the condition is the history of the point process {tpop,k} up to (but not including) time t, or equivalently the history of the population activity AN(t′) for t′ < t. The conditional intensity means that the conditional expectation of the population activity is given by Ā(t), which according to Eq (13) is indeed a deterministic functional of the past activities. Finally, we note that the case of large but finite populations permits a Gaussian approximation, which yields the more explicit form

| $A_N(t) = \bar A(t) + \sqrt{\dfrac{\bar A(t)}{N}}\, \xi(t)$ | (15) |

Here, ξ(t) is a Gaussian white noise with correlation function 〈ξ(t)ξ(t′)〉 = δ(t − t′). The correlations of ξ(t) are white because spikes at t′ and t > t′ are independent given the expected population activity Ā(t) at time t. However, we emphasize that the expected population activity Ā(t) does include information on the past fluctuations ξ(t′) at time t′. Therefore the fluctuations of the total activity AN(t) are not white but a sum of a colored process Ā(t) and a white-noise process ξ(t) [55]. The white noise gives rise to the delta peak of the auto-correlation function at zero time lag which is a standard feature of any spike train, and hence also of AN(t). The colored process Ā(t), on the other hand, arises from Eq (13) via a filtering of the actual population activity AN(t′) which includes the past fluctuations ξ(t′). For neurons with refractoriness, Ā(t) is negatively correlated with recent fluctuations (cf. the thought experiment of Fig 2B) leading to a trough at small time lags in the spike auto-correlation function [14, 53, 90].

The set of coupled Eqs (6) and (11)–(14) constitutes the desired mesoscopic population dynamics and is the main result of the paper. The dynamics is fully determined by the history of the mesoscopic population activity AN. The Gaussian white noise in Eq (15), or the independent random number involved in the generation of the population activity via Eq (14), is the only source of stochasticity and summarizes the effect of microscopic noise on the mesoscopic level. Microscopic detail, such as the knowledge of how many neurons occupy a certain microstate, has been removed.
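To make the single noise source concrete, the following minimal sketch draws the population activity in one time bin from a given expected rate, once via a Poisson spike count and once via the Gaussian approximation. It assumes that the spike count has mean Ā·N·dt and that the Gaussian noise term has variance Ā/(N·dt) in discrete time; the function names are hypothetical and not taken from the reference implementation.

```python
import numpy as np

def sample_activity_poisson(a_bar, n_neurons, dt, rng):
    """Draw A_N = dn / (N dt) with dn ~ Poisson(a_bar * N * dt) (assumed form)."""
    dn = rng.poisson(np.asarray(a_bar) * n_neurons * dt)
    return dn / (n_neurons * dt)

def sample_activity_gaussian(a_bar, n_neurons, dt, rng):
    """Gaussian approximation: a_bar + sqrt(a_bar / N) * xi.

    White noise xi is discretised as a standard normal divided by sqrt(dt),
    so that its variance per bin is 1 / dt.
    """
    a_bar = np.asarray(a_bar, dtype=float)
    xi = rng.standard_normal(a_bar.shape) / np.sqrt(dt)
    return a_bar + np.sqrt(a_bar / n_neurons) * xi

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a_bar = np.full(100_000, 10.0)  # expected rate in Hz, held fixed here
    for sampler in (sample_activity_poisson, sample_activity_gaussian):
        a_n = sampler(a_bar, n_neurons=500, dt=0.001, rng=rng)
        print(sampler.__name__, a_n.mean(), a_n.var())
```

Both samplers share the same mean and variance per bin; the Gaussian variant can return negative activities when Ā·N·dt is small, which is one reason to prefer the count-based form for small populations or short time steps.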

One may wonder where the neuronal interactions enter in the population equation. Synaptic interactions are contained in the conditional intensity λA(t|t̂), which depends on the membrane potential uA(t, t̂), which in turn is driven by the synaptic current that depends on the population activity via Eq (29) in Methods. An illustration of the derived mesoscopic model is shown in Fig 1C (inset). In this section, we considered a single population to keep the notation simple. However, it is straightforward to formulate the corresponding equations for the case of several populations as shown in Methods, Sec. “Several populations”.

Stochastic population dynamics can be efficiently simulated forward in time

The stochastic population equations provide a rapid means to integrate the population dynamics on a mesoscopic level. To this end, we devised an efficient integration algorithm based on approximating the infinite integrals in the population equation Eq (13) by discrete sums over a finite number of refractory states (Methods, Sec. “Numerical implementation”). The algorithm involves the generation of only one random number per time step and population, because the activity is sampled from the mesoscopic rate Ā(t). In contrast, the microscopic simulation requires drawing a random number for each neuron in each time step. Furthermore, because the cost of integrating the population equations does not depend on the number of neurons, we expect a significant speed-up factor for large neural networks compared to a corresponding microscopic simulation. For example, the microscopic simulation of the cortical column in Fig 1B took 13.5 minutes to simulate 10 seconds of biological time, whereas the corresponding forward integration of the stochastic population dynamics (Fig 1D) took only 6.6 seconds on the same machine, a speed-up of roughly 120-fold (see Sec. “Comparison of microscopic and mesoscopic simulations”).
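The idea of replacing the integrals by sums over a finite number of refractory states can be sketched as follows. This is a simplified illustration, not the algorithm from Methods or the reference implementation: it assumes uncoupled renewal neurons whose hazard depends only on the time since the last spike, merges all neurons older than the oldest bin into that bin, and draws the spike count from a binomial distribution. The function and variable names are hypothetical.

```python
import numpy as np

def simulate_mesoscopic_renewal(hazard, n_neurons, dt, n_steps, n_bins, seed=0):
    """Sketch of a discretised mesoscopic update for uncoupled renewal neurons.

    hazard: callable mapping an array of ages (s) to firing rates (Hz).
    State per last-spike bin (index 0 = most recent):
      m[k]: expected number of survivors, v[k]: variance of that number.
    """
    rng = np.random.default_rng(seed)
    ages = (np.arange(n_bins) + 0.5) * dt
    p_fire = 1.0 - np.exp(-hazard(ages) * dt)  # firing probability per step
    m = np.zeros(n_bins)
    v = np.zeros(n_bins)
    m[-1] = n_neurons                          # all neurons start in the oldest bin
    activity = np.empty(n_steps)
    for step in range(n_steps):
        # Expected spikes from the pseudo-density plus the correction term,
        # which uses a variance-weighted effective firing probability.
        total_v = v.sum()
        p_eff = np.dot(p_fire, v) / total_v if total_v > 0 else 0.0
        n_bar = np.dot(p_fire, m) + p_eff * (n_neurons - m.sum())
        n_bar = min(max(n_bar, 0.0), float(n_neurons))
        dn = rng.binomial(n_neurons, n_bar / n_neurons)  # one random number per step
        activity[step] = dn / (n_neurons * dt)
        # Update variance and mean of the survivors, then age every bin by one step.
        v = (1.0 - p_fire) ** 2 * v + p_fire * m
        m = (1.0 - p_fire) * m
        m[-1] += m[-2]
        v[-1] += v[-2]
        m[1:-1] = m[:-2].copy()
        v[1:-1] = v[:-2].copy()
        m[0], v[0] = dn, 0.0
    return activity

if __name__ == "__main__":
    # Poisson neurons with a 4 ms absolute refractory period and free rate 20 Hz.
    hazard = lambda age: 20.0 * (age >= 0.004)
    a_n = simulate_mesoscopic_renewal(hazard, 500, 0.0005, 20_000, n_bins=20)
    print("mean activity (Hz):", a_n.mean())
```

The cost per time step scales with the number of bins rather than with the number of neurons, which is the property the speed-up argument above relies on.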

A pseudocode of the algorithm to simulate neural population dynamics is provided in Methods (Sec. “Numerical implementation”). In addition to that, a reference implementation of this algorithm is publicly available under https://github.com/schwalger/mesopopdyn_gif, and will be integrated in the Neural Simulation Tool (NEST) [91], https://github.com/nest/nest-simulator, as a module presumably named gif_pop_psc_exp.

Two different consequences of finite N

For a first analysis of the finite-size effects, we consider the special case of a fully-connected network of Poisson neurons with absolute refractory period [14]. In this case, the conditional intensity can be represented as λA(t|t̂) = f(h(t))Θ(t − t̂ − tref), where tref is the absolute refractory period, Θ(⋅) is the Heaviside step function and h(t) is the free membrane potential, which obeys the passive membrane dynamics

| $\tau_m \dfrac{dh}{dt} = -h + \mu(t) + \tau_m J\, (\epsilon * A_N)(t)$ | (16) |

where τm is the membrane time constant, μ(t) = urest + RI(t) (where urest is the resting potential and R is the membrane resistance) accounts for all currents I(t) that are independent of the population activities, J is the synaptic strength and ϵ(t) is a synaptic filter kernel (see Methods, Eq (27) for details). For the mathematical analysis, we assume that the activity AN(t) and input μ(t) have started at t = −∞ so that we do not need to worry about initial conditions. In a simulation, we could for example start at t = 0 with initial conditions AN(t) = δ(t) for t ≤ 0 and h(0) = 0.

For the conditional intensity given above, the effective rate Λ(t), Eq (11), is given by Λ(t) = f(h(t)) because the variance v(t, t̂) is zero during the absolute refractory period t̂ ≤ t < t̂ + tref. As a result, the mesoscopic population Eq (13) reduces to the simple form

| $\bar A(t) = f\big(h(t)\big) \left[ 1 - \int_{t - t_{\mathrm{ref}}}^{t} A_N(t')\, dt' \right]$ | (17) |

This mesoscopic equation is exact and could have been constructed directly in this simple case. For N → ∞, where AN(t) becomes identical to Ā(t), this equation has been derived by Wilson and Cowan [16], see also [14, 23, 92]. The intuitive interpretation of Eq (17) is that the activity at time t consists of two factors, the “free” rate λfree(t) = f(h(t)) that would be expected in the absence of refractoriness and the fraction of actually available (“free”) neurons that are not in the refractory period. For finite-size populations, these two factors explicitly reveal two distinct finite-size effects: firstly, the free rate is driven by the fluctuating population activity AN(t) via Eq (16) and hence the free rate exhibits finite-size fluctuations. This effect originates from the transmission of the fluctuations through the recurrent synaptic connectivity. Secondly, the fluctuations of the population activity impact the refractory state of the population, i.e. the fraction of free neurons, as revealed by the second factor in Eq (17). In particular, a large positive fluctuation of AN in the recent past reduces the fraction of free neurons, which causes a negative fluctuation of the number of expected firings in the next time step. Therefore, refractoriness generates negative correlations of the fluctuations 〈ΔA(t)ΔA(t′)〉 for small |t − t′|. We note that such a decrease of the expected rate would not have been possible if the correction term in Eq (13) were absent. However, incorporating the effect of recent fluctuations (i.e. fluctuations in the number of refractory neurons) on the number of free neurons by adding the correction term, and thereby balancing the total number of neurons, recovers the correct Eq (17).
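The two-factor structure (free rate times fraction of free neurons) can be simulated directly. The sketch below assumes an uncoupled population with a constant free rate, so that only the second finite-size effect is present, and draws the spike count in each bin from a binomial distribution over the currently free neurons. All names and parameter values are illustrative assumptions.

```python
import numpy as np

def simulate_refractory_population(n_neurons=500, free_rate=20.0, t_ref=0.004,
                                   dt=0.0005, t_sim=10.0, seed=0):
    """Population of Poisson neurons with absolute refractoriness (uncoupled sketch).

    Only the spike counts of the last t_ref seconds are stored; neurons that
    fired within this window are refractory and cannot fire.
    """
    rng = np.random.default_rng(seed)
    n_ref_bins = int(round(t_ref / dt))
    n_steps = int(round(t_sim / dt))
    recent = np.zeros(n_ref_bins, dtype=np.int64)  # spike counts in refractory window
    p_fire = 1.0 - np.exp(-free_rate * dt)         # firing probability of a free neuron
    activity = np.empty(n_steps)
    for step in range(n_steps):
        n_free = n_neurons - recent.sum()          # neurons outside the refractory period
        dn = rng.binomial(n_free, p_fire)
        activity[step] = dn / (n_neurons * dt)
        recent[step % n_ref_bins] = dn             # overwrite the oldest bin
    return activity

if __name__ == "__main__":
    a_n = simulate_refractory_population()
    print("mean activity (Hz):", a_n.mean())
    print("deterministic fixed point (Hz):", 20.0 / (1.0 + 20.0 * 0.004))
```

A burst of spikes in the last t_ref seconds lowers n_free and therefore the expected count in the next bin, which is the mechanism behind the negative correlations at short time lags described above.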

The same arguments can be repeated in the general setting of Eq (13). Firstly, the conditional intensity depends on the past fluctuations of the population activity because of network feedback. Secondly, the fluctuations lead to an imbalance in the number of neurons across different states of relative refractoriness (i.e. fluctuations do not add up to zero) which gives rise to the “correction term”, i.e. the second term on the r.h.s. of Eq (13).

Comparison of microscopic and mesoscopic simulations

We wondered how well the statistics of the population activities obtained from the integration of the mesoscopic equations compare with the corresponding activities generated by a microscopic simulation. As we deal with a finite-size system, not only the first-order statistics (mean activity) but also higher-order statistics need to be considered. Because there are several approximations involved (e.g. full connectivity, quasi-renewal approximation and effective rate of fluctuations in the refractory density), we do not expect a perfect match. To compare first- and second-order statistics, we will mainly use the power spectrum of the population activities in the stationary state (see Methods, Sec. “Power spectrum”).

Mesoscopic equations capture refractoriness

Our theory describes the interplay between finite-size fluctuations and spike-history effects. The most prominent spike-history effect is refractoriness, i.e. the strong effect of the last spike on the current probability to spike. To study this effect, we first focus on a population of uncoupled neurons with a constant threshold corresponding to leaky integrate-and-fire (LIF) models without adaptation (Fig 3). The reset of the membrane potential after each spike introduces a period of relative refractoriness, where spiking is less likely due to a hyper-polarized membrane potential (Fig 3A). Because of the reset to a fixed value, the spike trains of the LIF neurons are renewal processes. In the stationary state, the fluctuation statistics as characterized by the power spectrum is known analytically for the case of a population of independent renewal spike trains (Eq (134) in Methods).

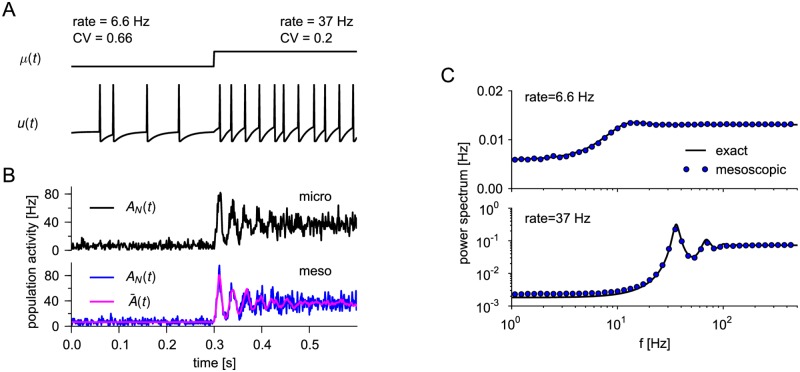

Fig 3. Population activity of uncoupled leaky integrate-and-fire neurons without adaptation.

(A) Neurons were stimulated by a step current Iext(t) such that μ = urest + RIext(t) increased from μ = 15 mV to μ = 30 mV (top). Voltage trace of one of 500 neurons (bottom). Stationary firing statistics (rate and coefficient of variation (CV) of the interspike intervals) corresponding to the two stimuli are indicated above the step current. (B) Realizations of the population activity AN(t) for the microscopic (top) and mesoscopic (bottom, blue line) simulation. The magenta line shows the expected population rate Ā(t) given the past actual realization AN(t′) for t′ < t. (C) The power spectrum of the stationary activity AN(t) obtained from renewal theory, Eq (134), (black solid line) and from the mesoscopic simulation (blue circles). The top and bottom panels correspond to weak (μ = 15 mV) and strong (μ = 30 mV) constant stimulation (transient removed).

A single realization of the population activity AN(t) fluctuates around the expected activity Ā(t), which exhibits a typical ringing in response to a step current stimulation [23, 93]. The time course of the expected activity as well as the size of fluctuations are roughly similar for the microscopic simulation (Fig 3B, top) and the numerical integration of the population equations (Fig 3B, bottom). We also note that the expected activity Ā(t) is not constant in the stationary regime but shows weak fluctuations. This is because of the feedback of the actual realization of AN(t′) for t′ < t onto the dynamics of Ā(t), Eq (13).

A closer inspection confirms that the fluctuations generated by the mesoscopic population dynamics in the stationary state exhibit the same power spectrum (Fig 3C) as the theoretically predicted one, which is given by Eq (134). In particular, the mesoscopic equations capture the fluctuation statistics even at high firing rates, where the power spectrum strongly deviates from the white (flat) power spectrum of a Poisson process (Fig 3C bottom). The pronounced dip at low-frequencies is a well-known signature of neuronal refractoriness [94].
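As a rough illustration of how such a power spectrum can be estimated from a simulated activity trace, the sketch below averages periodograms over non-overlapping segments. The normalization is an assumption chosen so that a population of N independent Poisson neurons with rate r has a flat spectrum of height r/N; the estimator described in Methods may differ in detail, and the function name is hypothetical.

```python
import numpy as np

def power_spectrum(activity, dt, seg_len):
    """Segment-averaged periodogram of a population activity trace.

    Returns positive frequencies (Hz) and the spectral density, normalised
    as (dt / seg_len) * |FFT|^2 (an assumed convention).
    """
    activity = np.asarray(activity, dtype=float)
    n_seg = len(activity) // seg_len
    segs = activity[: n_seg * seg_len].reshape(n_seg, seg_len)
    segs = segs - segs.mean()                      # remove the stationary mean
    spec = (dt / seg_len) * np.abs(np.fft.rfft(segs, axis=1)) ** 2
    freqs = np.fft.rfftfreq(seg_len, d=dt)
    return freqs[1:], spec.mean(axis=0)[1:]        # drop the zero-frequency bin

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_neurons, rate, dt = 500, 10.0, 0.001
    # Poisson population without refractoriness as a reference case.
    a_n = rng.poisson(n_neurons * rate * dt, size=200_000) / (n_neurons * dt)
    freqs, spec = power_spectrum(a_n, dt, seg_len=1000)
    print("mean spectral density:", spec.mean(), "expected:", rate / n_neurons)
```

Applied to the activity of refractory neurons, the same estimator should show reduced power at low frequencies relative to this flat reference level.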

Mesoscopic equations capture adaptation and burstiness

Further important spike-history effects can be realized by a dynamic threshold. For instance, spike-frequency adaptation, where a neuron adapts its firing rate in response to a step current after an initial strong response (Fig 4A and 4B), can be modeled by an accumulating threshold that slowly decays between spikes [47, 49]. In single realizations, the mean population rate as well as the size of fluctuations appear to be similar for microscopic and mesoscopic case (Fig 4B, top and bottom, respectively). For a more quantitative comparison we compared the ensemble statistics as quantified by the power spectrum. This comparison reveals that the fluctuation statistics is well captured by the mesoscopic model (Fig 4C). The main effect of adaptation is a marked reduction in the power spectrum at low frequencies compared to the non-adaptive neurons of Fig 3. The small discrepancies compared to the microscopic simulation originate from the quasi-renewal approximation, which does not account for the individual spike history of a neuron before the last spike but only uses the population averaged history. This approximation is expected to work well if the threshold kernel changes slowly, effectively averaging the spike history locally in time [63].

Fig 4. Population dynamics captures adaptation and burstiness.

(A) 500 adapting leaky integrate-and-fire neurons were stimulated by a step current Iext that increased μ = urest + RIext(t) from μ = 12 mV to μ = 27 mV (top). Voltage trace of one neuron (bottom). Stationary firing statistics (rate and coefficient of variation (CV)) corresponding to the two stimuli are indicated above the step current. (B) Realizations of the population activity obtained from microscopic simulation (black) and mesoscopic population equation (blue) as well as (magenta). (C) Power spectra corresponding to the stationary activity (averaged over 1024 trials each of 20 s length) at low and high firing rates as in (A), circles and lines depict microscopic and mesoscopic case, respectively. Parameters in (A)–(C): uth = 10 mV, ur = 25 mV, threshold kernel for t ≥ tref with Jθ,1 = 1.5 mV⋅s, τθ,1 = 0.01 s, Jθ,2 = 1.5 mV⋅s, τθ,2 = 1 s. (D) Bursty neuron model. (i) Biphasic threshold kernel θ(t), where a combination of a negative part (facilitation) and a positive part (adaptation) yields a bursty spike pattern, (ii) sample firing pattern of one neuron. (iii) The interspike interval distribution with values of rate and CV. (E) Power spectrum of the population activity AN(t) shown in (D)-(iv). Parameters in (D) and (E): μ = 20, uth = 10 mV, ur = 0 mV, τm = 0.01 s, facilitation: Jθ,1 = −0.45 mV⋅s, τθ,1 = 0.05 s; adaptation: Jθ,2 = 2.5 mV⋅s, τθ,2 = 1 s.

In the case of fast changes of the threshold kernel, we do not expect that the quasi-renewal approximation holds. For example, a biphasic kernel [95] with a facilitating part at short interspike intervals (ISI) and an adaptation part for large ISIs (Fig 4D-(i)) can realize bursty spike patterns (Fig 4D-(ii)). The burstiness is reflected in the ISI density by a peak at small ISIs, corresponding to ISIs within a burst, and a tail that extends to large ISIs representing interburst intervals (Fig 4D-(iii)). Remarkably, the mesoscopic equations with the quasi-renewal approximation qualitatively capture the burstiness, as can be seen by the strong low-frequency power at about 1 Hz (Fig 4E). At the same time, the effect of adaptation manifests itself in a reduced power at even lower frequencies. The systematic overestimation of the power across frequencies implies a larger variance of the empirical population activity obtained from the mesoscopic simulation, which is indeed visible by looking at the single realizations (Fig 4D-(iv)). As an aside, we note that facilitation which is strong compared to adaptation can lead to unstable neuron dynamics even for isolated neurons [96].

Recurrent network of randomly connected neurons

So far, we have studied populations of uncoupled neurons. This allowed us to demonstrate that the mesoscopic dynamics captures effects of single neuron dynamics on the fluctuations of the population activity. Let us now suppose that each neuron in a population α is randomly connected to presynaptic neurons in population β with probability pαβ such that the in-degree is fixed to pαβ Nβ connections. In the presence of synaptic coupling, the fluctuations at time t are propagated through the recurrent connectivity and may significantly influence the population activity at time t′ > t. For instance, in a fully-connected network (pαβ = p = 1 for all α and β) of excitatory and inhibitory neurons (E-I network, Fig 5B and 5C), all neurons within a population receive identical inputs given by the population activities (cf. Eq (29)). Finite-size fluctuations of generate common input to all neurons and tend to synchronize neurons. This effect manifests itself in large fluctuations of the population activity (Fig 5B). Since the mean-field approximation of the synaptic input becomes exact in the limit p → 1, we expect a good match between the microscopic and mesoscopic simulation in this case. Interestingly, the power spectra of the population activities obtained from these simulations coincide well even for an extremely small E-I network consisting of only one inhibitory and four excitatory neurons (Fig 5C). This extreme case of N = 5 neurons with strong synapses (here, wEE = wIE = 12 mV, wII = wEI = −60 mV) highlights the non-perturbative character of our theory for fully-connected networks, which does not require the inverse system size or the synaptic strength to be small. In general, the power spectra reveal pronounced oscillations that are induced by finite size fluctuations [43]. The amplitude of these stochastic oscillations decreases as the network size increases and vanishes in the large-N limit.

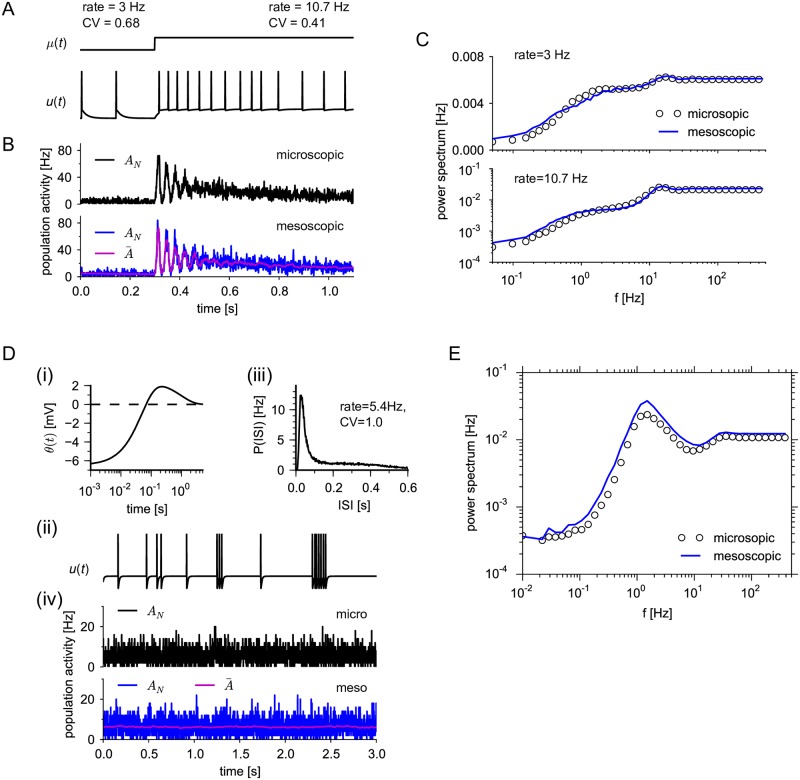

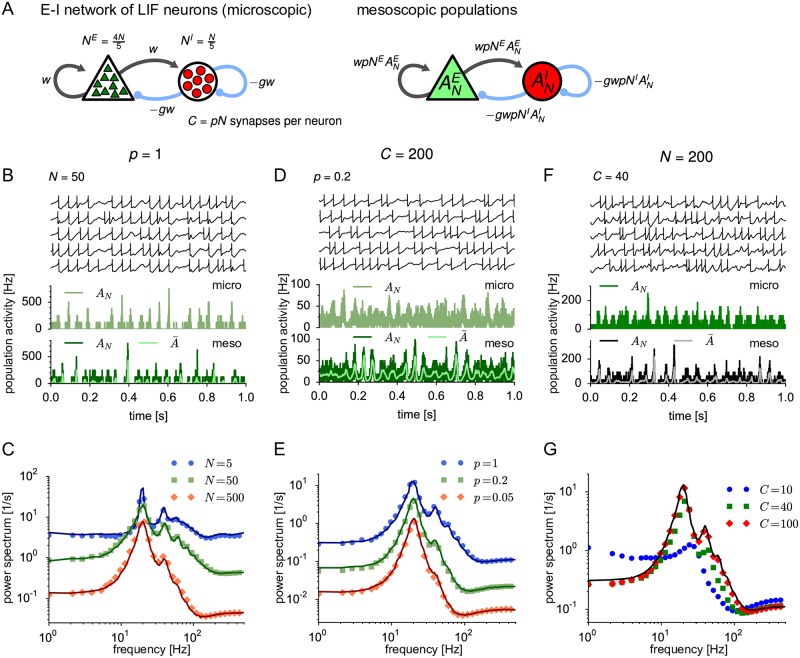

Fig 5. Mesoscopic dynamics of E-I network for varying network size N, connection probability p and number of synapses per neuron C.

(A) Left: Schematic of the network of NE excitatory and NI = NE/4 inhibitory leaky integrate-and-fire (LIF) neurons, each receiving CE = pNE (CI = pNI) connections from a random subset of excitatory (inhibitory) neurons. Total numbers are N = NE + NI and C = CE + CI. At C = 200, the synaptic strength is w = 0.3 mV and −gw = −1.5 mV for excitatory and inhibitory connections, respectively. To preserve a constant mean synaptic input, the synaptic strength is scaled such that Cw = const. Right: Schematic of a corresponding mesoscopic model of two interacting populations. (B) Trajectories of u(t) for five example neurons (top) and of the excitatory population activity obtained from the network simulation (middle) and the mesoscopic simulation (bottom, dark green) for C = N = 50; time resolution Δt = 0.2 ms. The light green trajectory (bottom panel) depicts the expected population activity given the past activity. (C) Power spectra of for different network sizes while keeping p = 1 fixed (microscopic: symbols, mesoscopic: solid lines with corresponding dark colors). (D) Sample trajectories corresponding to the green curve in (E) (N = 1000, p = 0.2). (E) Analogously to (C) but varying the connection probabilities while keeping C = pN = 200 fixed. (F) Sample trajectories corresponding to the green curve in (G) (N = 200, C = 40). (G) Analogously to (C) but varying the number of synapses C while keeping N = 200 fixed. Note that the mesoscopic theory (black solid line) is independent of C because the product Cw, which determines the interaction strength in the mesoscopic model (see, left panel of (A)), is kept constant. Parameters: μE/I = 24 mV, mV and θE/I(t) ≡ 0 (no adaptation).

If the network is not fully but randomly-connected (0 < p < 1), neurons still share a part of the finite-size fluctuations of the population activity. Earlier theoretical studies [58, 68, 97] have pointed out that these common fluctuations inevitably yield correlated and partially synchronized neural activity, as observed in simulations (Fig 5D and 5F). This genuine finite-size effect decreases for larger networks approaching an asynchronous state [98] (Fig 5C and 5E). As argued in previous studies [58–60], the fluctuations of the synaptic input can be decomposed into two components, a coherent and an incoherent one. The coherent fluctuations are given by the fluctuations of the population activity and are thus common to all neurons of a population. This component is exactly described by our mean-field approximation, used in Eq (4) (cf. Methods, Eq (31)). The incoherent fluctuations are caused by the quenched randomness of the network (i.e. each neuron receives input from a different subpopulation of the network) and have been described as independent Poisson input in earlier studies [58–60]. If we compare the membrane potential of a single neuron with the one expected from the mean-field approximation (Fig 6A, 6C and 6E, top), we indeed observe a significant difference in fluctuations. This difference originates from the incoherent component. Differences in membrane potential will lead to differences in the instantaneous spike emission probability for each individual neuron; cf. Eq (3). However, in order to calculate the population activity we need to average the conditional firing rate of Eq (3) over all neurons in the population (see Methods, Eq (28) for details). 
Despite the fact that each neuron is characterized by a different last firing time , the differences in firing rate caused by voltage fluctuations will, for sufficiently large N and not too small p, average out whereas common fluctuations caused by past fluctuations in the population activity will survive (Fig 6A, 6C and 6E, bottom). In other words, the coherent component is the one that dominates the finite-N activity whereas the incoherent one is washed out. Therefore, mesoscopic population activities can be well described by our mean-field approximation even when the network is not fully connected (Figs 5E, 5G and 6B, 6D, 6F). Remarkably, even for C = 200 synapses per neuron and p = 0.05, the mesoscopic model agrees well with the microscopic model. However, if both N and p are small, the mesoscopic theory breaks down as expected (Fig 5G, blue circles). Furthermore, strong synaptic weights imply strong incoherent noise, which is then passed through the exponential nonlinearity of the hazard function. This may lead to deviations of the population-averaged hazard rate from the corresponding mean-field approximation (Fig 6E, bottom), and, consequently, to deviations between microscopic and mesoscopic population activities in networks with strong random connections (Fig 6F).

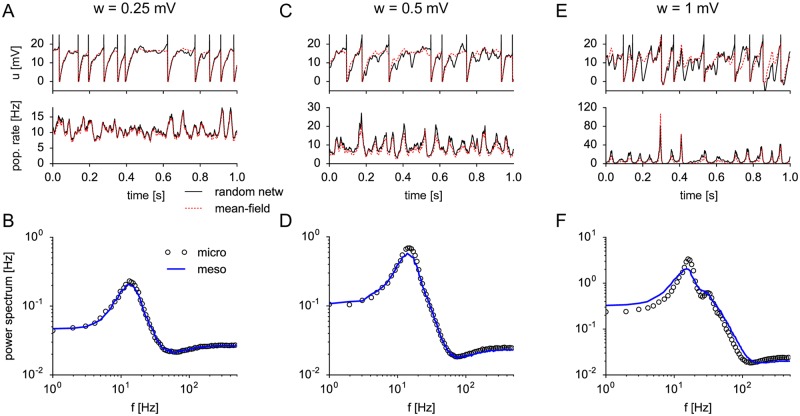

Fig 6. Mean-field approximation of synaptic input for randomly connected networks.

The same E-I network as in Fig 5 with N = 500 neurons and connection probability p = 0.2 was simulated for increasing synaptic strength wEE = wIE = w (wEI = wII = −5w) of excitatory (inhibitory) connections: (A, B) w = 0.25 mV, (C, D) w = 0.5 mV (E, F) w = 1 mV. (A, C, E) Top: Membrane potential of one example neuron shows fluctuations due to spike input from C = 100 presynaptic neurons (black line), which represent a random subset of all 500 neurons. The mean-field approximation of the membrane potential (dashed red line) assumes that the neuron had the same firing times but was driven by all neurons, i.e. by the population activities and , with rescaled synaptic strength . Although individual membrane potentials differ significantly from the mean-field approximation (top), the relevant population-averaged hazard rates (bottom) are well predicted by the mean-field approximation. (B, D, F) Corresponding power spectra of the (excitatory) population activity for microscopic (circles) and mesoscopic (blue solid line) simulation. Parameters as in Fig 5 except μE/I = 18 mV.

Finite-size induced switching in bistable networks

In large but finite E-I networks, the main effect of weak finite-size fluctuations is to distort the deterministic population dynamics of the infinitely large network (stable asynchronous state or limit cycle motion) leading to stochastic oscillations and phase diffusion that can be understood analytically by linear response theory [43, 55, 60, 69] and weakly nonlinear analysis [58]. This is qualitatively different in networks with multiple stable states. In such networks, finite-size fluctuations may have a drastic effect because they enable large switch-like transitions between metastable states that cannot be described by a linear or weakly nonlinear theory. We will show now that our mesoscopic population equation accurately captures strongly nonlinear effects, such as large fluctuations in multistable networks.

Multistability in spiking neural networks can emerge as a collective effect in balanced E-I networks with clustered connectivity [99], and, generically, in networks with a winner-take-all architecture, where excitatory populations compete through inhibitory interactions mediated by a common inhibitory population (see, e.g. [14, 100–104] and Fig 7A). Jumps between metastable states have been used to model switchings in bistable perception [32–34]. To understand such finite-size induced switching in spiking neural networks on a qualitative level, phenomenological rate models have been usually employed [32, 104]. In these models, stochastic switchings are enabled by noise added to the deterministic rate equations in an ad hoc manner. Our mesoscopic mean-field equations keep the spirit of such rate equations, however with the important difference that the noisy dynamics is systematically derived from the underlying spiking neural network without any free parameter. Here, we show that the mesoscopic mean-field equations quantitatively reproduce finite-size induced transitions between metastable states of spiking neural networks. We emphasize that the switching statistics depends sensitively on the properties of the noise that drive the transitions [105]. Therefore, an accurate account of finite-size fluctuations is expected to be particularly important in this case.

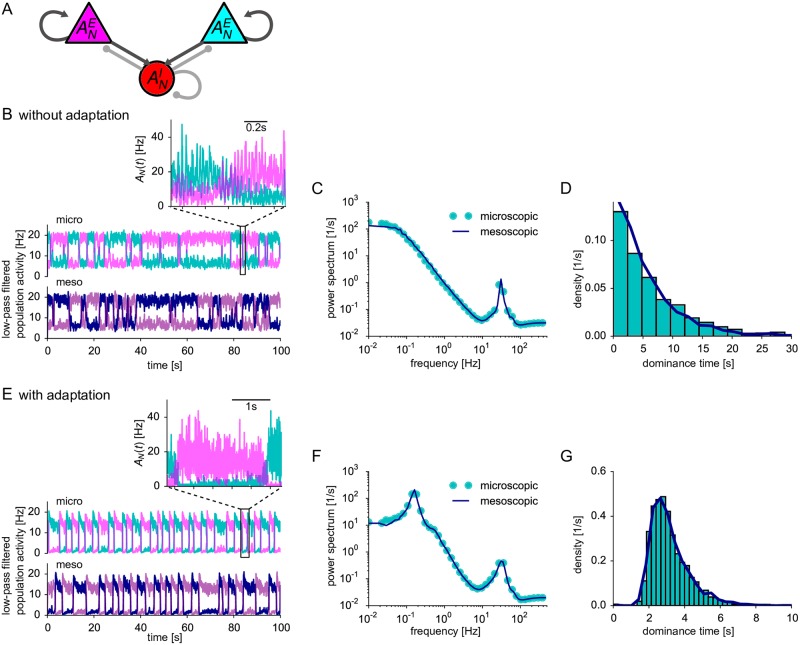

Fig 7. Finite-size induced switching in a bistable network.

(A) Schematic of a winner-take-all network architecture: Two competing excitatory populations (NE1 = NE2 = 400) interact with a common inhibitory population (NI = 200). (B)-(D) In the absence of adaptation (θE/I(t) ≡ 0), the excitatory populations switch between low and high activities in an irregular fashion (B). Activities in (B, E) are low-pass-filtered by a moving average of 100 ms. Top: full network simulation. Inset: Magnified view of the activities for 1 s (without moving average) showing fast large-amplitude oscillations. Bottom: mesoscopic simulation. (C) The power spectrum of the activity of the excitatory populations exhibits large low-frequency power and a high-frequency peak corresponding to the slow stochastic switching between high- and low-activity states and the fast oscillations, respectively. (D) The density of the dominance times (i.e. the residence time in the high-activity states) has an exponential form. (E-G) Like (B-D) but excitatory neurons exhibit weak and slow adaptation ( with Jθ = 0.1 mV⋅s, τθ = 1 s for t ≥ tref). Switching between high- and low-activity states is more regular than in the non-adapting case as revealed by a low-frequency peak in the power spectrum (F) and a narrow, unimodal density of dominance times (G). In (C, D) and (F, G) microscopic and mesoscopic simulation correspond to cyan symbols/bars and dark blue solid lines, respectively. Parameters: μE/I = 36 mV except for μE = 36.5 in (E-G) to compensate adaptation. Time step Δt = 0.01 ms (microscopic), Δt = 0.2 ms (mesoscopic). Efficacies of excitatory and inhibitory connections: wE = 0.0624 mV and wI = −0.2496 mV (B-D), and wE = 0.096 mV and wI = −0.384 mV (E-G), p = 1, mV.

We consider a simple bistable network of two excitatory populations with activities AE1 and AE2, respectively, that are reciprocally connected to a common inhibitory population with activity AI (Fig 7A). We choose the mean input and the connection strength such that in the large-N limit the population activities exhibit two stable equilibrium states, one in which AE1 is high and AE2 is low, and the other corresponding to the inverse situation, in which AE1 is low and AE2 is high. We found that in smaller networks, finite-size fluctuations are indeed sufficient to induce transitions between the two states, leading to repeated switches between high- and low-activity states (Fig 7B and 7E). The regularity of the switching appears to depend crucially on the presence of adaptation, as has been suggested previously [32, 34]. Remarkably, both in the presence and absence of adaptation, the switching dynamics of the spiking neural network appears to be well reproduced by the mesoscopic mean-field model.

For a more quantitative comparison, we use several statistical measures that characterize the bistable activity. Let us first consider the case without adaptation. As before, we compare the power spectra of the population activity for both microscopic and mesoscopic simulation and find good agreement (Fig 7C). The peak in the power spectrum at relatively high frequency reveals strong, rapid oscillations that are visible in the population activity after a switch to the high-activity state (inset of Fig 7B with magnified view). In contrast, the large power at low frequencies corresponds to the slow fluctuations caused by the switching of activity between the two excitatory populations, as revealed by the low-pass filtered population activity (Fig 7B). The Lorentzian shape of the power spectrum caused by the slow switching dynamics is consistent with stochastically independent, exponentially distributed residence times in each of the two activity states (i.e., a homogeneous Poisson process). The residence time distribution indeed shows a monotonic, exponential decay (Fig 7D) in both the microscopic and the mesoscopic model. Furthermore, we found that residence times do not exhibit significant serial correlations. Together, these findings confirm the Poissonian nature of bistable switching in our three-population model of neurons without adaptation.
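The two diagnostics used above for Poissonian switching (exponentially distributed residence times and the absence of serial correlations) can be illustrated with a minimal sketch. It does not use the network model: it assumes an idealized two-state process that switches with a constant probability per time step, and the switching rate, time step and function names are hypothetical choices made for illustration only.

```python
import random
import statistics

def simulate_telegraph(rate, dt, n_steps, rng):
    """Two-state process that switches with probability rate*dt in each step."""
    state = 0
    states = []
    for _ in range(n_steps):
        if rng.random() < rate * dt:
            state = 1 - state
        states.append(state)
    return states

def residence_times(states, dt):
    """Durations of consecutive runs of identical states (last run discarded)."""
    durations = []
    run_length = 1
    for previous, current in zip(states, states[1:]):
        if current == previous:
            run_length += 1
        else:
            durations.append(run_length * dt)
            run_length = 1
    return durations

def lag1_correlation(x):
    """Serial correlation coefficient between successive values."""
    mean = statistics.fmean(x)
    numerator = sum((a - mean) * (b - mean) for a, b in zip(x, x[1:]))
    denominator = sum((a - mean) ** 2 for a in x)
    return numerator / denominator

rng = random.Random(1)
rate, dt = 0.5, 0.01  # hypothetical switching rate (1/s) and time step (s)
states = simulate_telegraph(rate, dt, 1_000_000, rng)
times = residence_times(states, dt)

mean_time = statistics.fmean(times)
cv = statistics.pstdev(times) / mean_time  # close to 1 for exponential times
rho1 = lag1_correlation(times)             # close to 0 for independent times
print(f"mean = {mean_time:.2f} s, CV = {cv:.2f}, rho1 = {rho1:.3f}")
```

For a memoryless switching process of this kind, the mean residence time should be close to 1/rate, the coefficient of variation close to one and the lag-1 correlation close to zero. Applied to residence times extracted from simulated population activities, the same three numbers would give a quick check of the Poisson picture.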

In models for perceptual bistability, residence times in the high-activity state are often called dominance times. The distribution of dominance times is usually not exponential but has been described by a narrower, gamma-like distribution (see, e.g. [106]). Such a narrower distribution emerges in a three-population network where excitatory neurons are weakly adaptive. When the population enters a high-activity state, the initial strong increase of the population activity is now followed by a slow adaptation to a somewhat smaller, stationary activity (Fig 7E). Eventually, the population jumps back to the low-activity state. The switching dynamics is much more regular with adaptation than without, leading to slow stochastic oscillations as highlighted by a second peak in the power spectrum at low frequencies (Fig 7F) and a narrow distribution of dominance times (Fig 7G), in line with previous theoretical studies [32–34]. We emphasize, however, that in contrast to these studies the underlying deterministic dynamics for N → ∞ is in our case not oscillatory but bistable, because the adaptation level is below the critical value necessary in the deterministic model to switch back to the low-activity state.

The emergence of regular switching due to finite-size noise can be understood by interpreting the residence time of a given population in the high-activity state as arising from two stages: (i) the initial transient of the activity to a decreased (but still large) stationary value due to adaptation and (ii) the subsequent noise-induced escape from the stationary adapted state. The first stage is deterministic and hence does not contribute to the variability of the residence times. The variability results mainly from the second stage. The duration of the first stage is determined by the adaptation time scale. If this duration covers a considerable part of the total residence time, we expect that the coefficient of variation (CV), defined as the ratio of the standard deviation to the mean of the residence time, is small. In the case without adaptation, the deterministic relaxation stage is negligible compared with the mean noise-induced escape time, so that the CV is larger.
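The two-stage argument can be made concrete with a small sketch under a simplifying assumption that is not part of the analysis above: the escape time in stage (ii) is taken to be exponentially distributed with mean `tau_escape` and independent of a fixed stage-(i) duration `t_det`. The standard deviation of the residence time then equals `tau_escape` and its mean equals `t_det + tau_escape`, so that CV = tau_escape / (t_det + tau_escape). All names and values are hypothetical.

```python
import random
import statistics

def two_stage_cv(t_det, tau_escape):
    """CV of T = t_det + X, where X is exponential with mean tau_escape."""
    return tau_escape / (t_det + tau_escape)

def sample_cv(t_det, tau_escape, n_samples, rng):
    """Monte Carlo estimate of the same CV from sampled residence times."""
    times = [t_det + rng.expovariate(1.0 / tau_escape) for _ in range(n_samples)]
    return statistics.pstdev(times) / statistics.fmean(times)

rng = random.Random(0)
tau_escape = 1.0  # hypothetical mean escape time (s)
for t_det in (0.0, 1.0, 3.0):  # hypothetical durations of the deterministic stage (s)
    analytic = two_stage_cv(t_det, tau_escape)
    estimate = sample_cv(t_det, tau_escape, 20_000, rng)
    print(f"t_det = {t_det:.1f} s: CV = {analytic:.2f} (sampled {estimate:.2f})")
```

With `t_det = 0` the sketch recovers the exponential case with a CV of one, and the CV decreases as the deterministic stage takes up a larger share of the residence time.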

Mesoscopic dynamics of cortical microcolumn

As a final example, we applied the mesoscopic population equations to a biologically more detailed model of a local cortical microcircuit. Specifically, we used the multi-laminar column model of V1 proposed by Potjans and Diesmann [5] (see also [72, 107] for an analysis of this model). It consists of about 80′000 non-adapting integrate-and-fire neurons organized into four layers (L2/3, L4, L5 and L6), each accommodating an excitatory and an inhibitory population (see schematic in Fig 1A). The neurons are randomly connected within and across the eight populations. We slightly changed this model to include spike-frequency adaptation of excitatory neurons, as observed in experiments (see e.g. [26]). Furthermore, we replaced the Poissonian background noise in the original model by an increase of mean current drive and escape noise (both in the microscopic and mesoscopic model). The mean current drive was chosen such that the firing rates of the spontaneous activity were matched to the firing rates in the original model. We note that the fitting of the mean current was made possible by the use of our population equations, which allow for an efficient evaluation of the firing rates. The complete set of parameters is listed in Methods, Sec. “Modified Potjans-Diesmann model”.

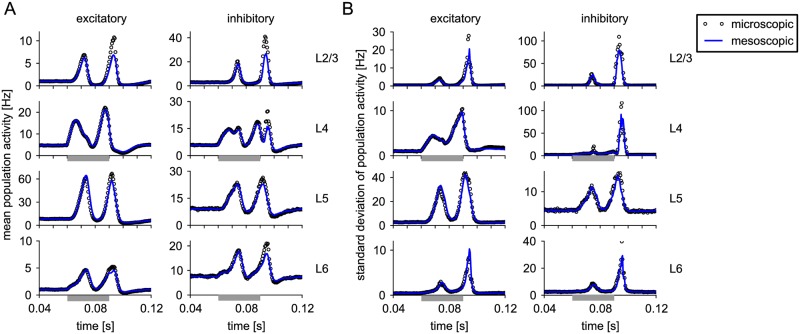

Sample trajectories of the population activities have already served as an illustration of our approach in Fig 1, where neurons in layers 4 and 6 are stimulated by a step current of 30 ms duration, mimicking input from the thalamus as in the original study [5]. Individual realizations obtained from the microscopic and mesoscopic simulation differ due to the marked stochasticity of the population activities (Fig 1B and 1D). However, trial-averaging reveals that the mean time-dependent activities, estimated from a peri-stimulus-time histogram (PSTH), agree well between microscopic and mesoscopic simulations, except that the mesoscopic simulation slightly underestimates the oscillatory peak during stimulus offset (Fig 8A). During the short moments where the PSTHs of the mesoscopic and microscopic simulation do not match, the time-dependent standard deviation across hundreds of trials (Fig 8B) is very high in both simulations, indicating large trial-to-trial fluctuations of the activity after stimulus offset at 0.09 s. The standard deviation as a function of time (Fig 8B) agrees well overall between microscopic and mesoscopic simulation, suggesting a good match of second-order statistics. A closer look at the second-order statistics, as provided by the power spectra of spontaneous activities (“ground state” of cortical activity), also reveals good agreement at all frequencies (Fig 9). This agreement is remarkable in view of the low connection probabilities (p < 0.14, see Table 5 in [5]) that violate the assumption of dense random connectivity used in the derivation of the mesoscopic mean-field equations. More generally, this example demonstrates that the range of validity of our mesoscopic theory covers relevant cortical circuit models.
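The trial-based statistics compared in this paragraph can be sketched in a few lines. The example assumes that each trial is stored as an equally long sequence of binned population activities; the function name and the toy numbers are hypothetical and are not simulation results.

```python
import statistics

def trial_statistics(trials):
    """Per-time-bin mean (PSTH) and sample standard deviation across trials.

    trials: list of equally long sequences, one activity trace per trial.
    """
    psth = [statistics.fmean(bin_values) for bin_values in zip(*trials)]
    std = [statistics.stdev(bin_values) for bin_values in zip(*trials)]
    return psth, std

# Hypothetical toy data: three trials, four time bins, activities in Hz.
trials = [
    [2.0, 4.0, 10.0, 3.0],
    [4.0, 4.0, 14.0, 5.0],
    [3.0, 4.0, 6.0, 1.0],
]
psth, std = trial_statistics(trials)
print("PSTH:", psth)
print("std: ", std)
```

Time bins in which the standard deviation is large relative to the mean are those in which a difference between two PSTHs obtained from a finite number of trials is least conclusive.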

Fig 8. Time-dependent statistics of the population activities in a cortical column model.

(A) Trial-averaged population activities (peri-stimulus-time histogram, PSTH) in the modified Potjans-Diesmann model as illustrated for a single trial in Fig 1. Circles and blue solid line show microscopic simulation (250 trials, simulation time step Δt = 0.01 ms) and mesoscopic simulation (1000 trials, Δt = 0.5 ms), respectively. A step current mimicking thalamic input is provided to neurons in layer 4 and 6 during a time window of 30 ms as indicated by the gray bar. Rows correspond to the layers L2/3, L4, L5 and L6, respectively, as indicated. Columns correspond to excitatory and inhibitory populations, respectively. (B) Corresponding, time-dependent standard deviation of AN(t) measured with temporal resolution Δt = 0.5 ms.

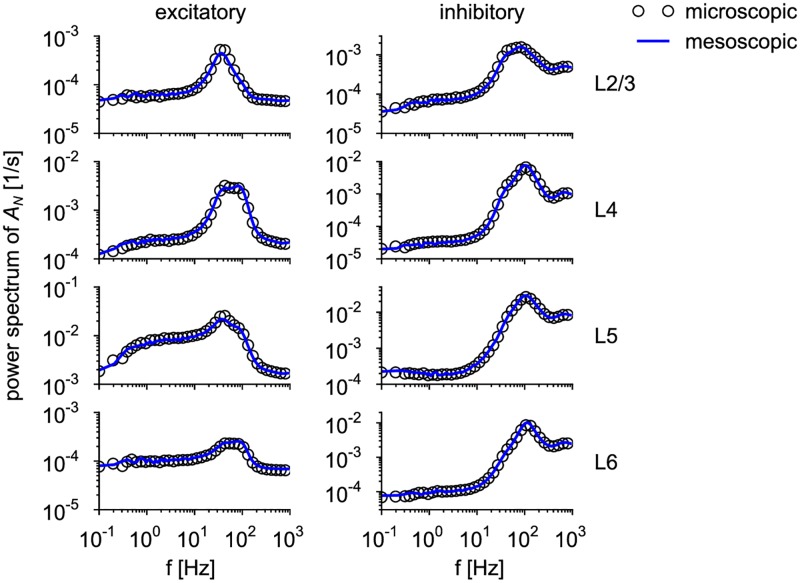

Fig 9. Stationary statistics of population activities in a cortical column model.

Power spectra of the spontaneous population activities AN(t) in the modified Potjans-Diesmann model in the absence of time-dependent thalamic input (corresponding to the activities shown in Fig 1B (microscopic) and Fig 1D (mesoscopic) outside of the stimulation window). Circles and blue solid lines represent microscopic and mesoscopic simulation, respectively. Rows correspond to the layers L2/3, L4, L5 and L6, respectively, as indicated. Columns correspond to excitatory and inhibitory populations, respectively.

Finally, we mention that the numerical integration of the mesoscopic population equations yields a significant speed-up compared to the microscopic simulation. While a systematic and fair comparison of the efficiencies depends on many details and is thus beyond the scope of this paper, we note that a single-core simulation of 10 s of biological time took 811.2 s using the microscopic model, whereas the mesoscopic model took only 6.6 s. This corresponds to a speed-up factor of around 120 achieved by using the mesoscopic population model. In the simulation, we employed the same integration time step of Δt = 0.5 ms for both models for a first naive assessment of the performance. However, a more detailed comparison of the performance should be based on simulation parameters that achieve a given accuracy. In this case, we expect an even larger speed-up of the mesoscopic simulation because, for the same accuracy, the temporally coarse-grained population equations allow for a significantly larger time step than the microscopic simulation of spiking neurons.

Discussion

In the present study we have derived stochastic population equations that govern the evolution of mesoscopic neural activity arising from a finite number of stochastic neurons. To our knowledge, this is the first time that such a mesoscopic dynamics has been derived from an underlying microscopic model of spiking neurons with pronounced spike-history effects. The microscopic model consists of interacting homogeneous populations of generalized integrate-and-fire (GIF) neuron models [14, 26–28], or alternatively, spike-response (SRM) [14] or generalized linear models (GLMs) [55, 108, 109]. These classes of neuron models account for various spike-history effects like refractoriness and adaptation [14, 84]. Importantly, parameters of these models can be efficiently extracted from single cell experiments [27] providing faithful representations of real cortical cells under somatic current injection. The resulting population equations on the mesoscopic level yield the expected activity of each population at the present time as a functional of population activities in the past. Given the expected activities at the present time, the actual mesoscopic activities can be obtained by drawing independent random numbers. The derived mesoscopic dynamics captures nonlinear emergent dynamics as well as finite-size effects, such as noisy oscillations and stochastic transitions in multistable networks. Realizations generated by the mesoscopic model have the same statistics as the original microscopic model to a high degree of accuracy (as quantified by power spectra and residence time distributions). The equivalence of the population dynamics (mesoscopic model) and the network of spiking neurons (microscopic model) holds for a wide range of population sizes and coupling strengths, for time-dependent external stimulation, random connectivity within and between populations, and even if the single neurons are bursty or have spike-frequency adaptation.
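The two-step structure described above (first compute the expected activity from past activities, then draw a random number to obtain the actual activity) can be sketched as follows. This is not the set of population equations derived in this paper: `toy_expected_activity` is a hypothetical stand-in that depends on the past only through a weighted average, and the use of Poisson-distributed spike counts is an assumption made for illustration. All parameter values are arbitrary.

```python
import math
import random

def draw_poisson(mean, rng):
    """Poisson sample by Knuth's method; adequate for the small means used here."""
    limit = math.exp(-mean)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count

def toy_expected_activity(history, dt):
    """Hypothetical expected activity (Hz) as a function of past activities."""
    tau = 0.02  # s, assumed time constant of the exponential weighting
    weights = [math.exp(-k * dt / tau) for k in range(len(history))]
    # weights[0] multiplies the most recent activity value
    filtered = sum(w * a for w, a in zip(weights, reversed(history))) / sum(weights)
    return 5.0 + 20.0 / (1.0 + math.exp(-(filtered - 10.0) / 5.0))

def simulate(n_neurons, dt, n_steps, rng):
    """Alternate between the expected activity and a random spike count."""
    activities = [10.0]  # assumed initial activity in Hz
    for _ in range(n_steps):
        expected = toy_expected_activity(activities[-200:], dt)
        spikes = draw_poisson(n_neurons * expected * dt, rng)  # assumed Poisson
        activities.append(spikes / (n_neurons * dt))
    return activities

rng = random.Random(42)
small = simulate(n_neurons=100, dt=0.001, n_steps=2000, rng=rng)
large = simulate(n_neurons=2000, dt=0.001, n_steps=2000, rng=rng)
```

Because the spike count is drawn for the whole population at once, the cost per time step in this sketch does not grow with the number of neurons, while the fluctuations of the activity around its expected value shrink as the population size increases.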

Quantitative modeling of mesoscopic neural data: Applications and experimental predictions

Our theory provides a general framework to replace spiking neural networks that are organized into homogeneous populations by a network of interacting mesoscopic populations. For example, the excitatory and inhibitory neurons of a layer of a cortical column [5] may be represented by one population each, as in Fig 1. Weak heterogeneity in the neuronal parameters is allowed in our theory because the mesoscopic equations describe the population-averaged behavior. Further subdivisions of the populations are possible, however, such as a subdivision of the inhibitory neurons into fast-spiking and non-fast-spiking types [26]. Populations that initially show a large degree of heterogeneity can be further subdivided into smaller populations. In this case, a correct description of finite-size fluctuations, as provided by our theory, will be particularly important. However, as with any mean-field theory, we expect that our theory breaks down if neural activity and information processing are driven by a few “outlier” neurons such that a mean-field description becomes meaningless. Further limitations may result from the mean-field and quasi-renewal approximation, Eq (4). Formally, the mean-field approximation of the synaptic input requires dense connectivity and weak heterogeneity in synaptic efficacies and in synapse numbers. Moreover, the quasi-renewal approximation assumes slow threshold dynamics. However, as we have demonstrated here, our mesoscopic population equations can provide excellent predictions in concrete applications even for sparse connectivity (Figs 5D–5G, 8 and 9) and may qualitatively reproduce the mesoscopic statistics in the presence of fast threshold dynamics (Fig 4D and 4E).