Abstract

Background

Health service and systems researchers have developed knowledge translation strategies to facilitate the use of reliable evidence for policy, including rapid response briefs as timely and responsive tools supporting decision making. However, little is known about users’ experience with these newer formats for presenting evidence. We sought to explore Ugandan policymakers’ experience with rapid response briefs in order to develop a format acceptable for policymakers.

Methods

We used existing research regarding evidence formats for policymakers to inform the initial version of the rapid response brief format. We conducted user testing with healthcare policymakers at various levels of decision making in Uganda, employing a concurrent think-aloud method and collecting data on elements including usability, usefulness, understandability, desirability, credibility and value of the document. We modified the rapid response brief format based on the results of the user testing and sought feedback on the new format.

Results

The participants generally found the format of the rapid response briefs usable, credible, desirable and of value. Participants expressed frustrations regarding several aspects of the document, including the absence of recommendations, lack of clarity about the type of document and its potential uses (especially for first time users), and a crowded front page. Participants offered conflicting feedback on preferred length of the briefs and use and placement of partner logos. Users had divided preferences for the older and newer formats.

Conclusion

Although the rapid response briefs were generally found to be of value, there are major and minor frustrations impeding an optimal user experience. Areas requiring further research include how to address policymakers’ expectations of recommendations in these briefs and their optimal length.

Background

Over a decade ago WHO made a global call for policymakers and health managers to use evidence in policies and practices to strengthen health services and systems, improve system outcomes, and achieve universal coverage and equity [1–4]. However, policymakers worldwide have encountered difficulties accessing timely and relevant research evidence of high quality [5–12].

Rapid syntheses of research findings, including rapid response briefs, are among the more promising strategies currently emerging to address these challenges and facilitate policymakers’ use of research evidence for policy [13–17]. Rapid syntheses are time-sensitive and can be tailored to local context, language and user needs. In this article, we define rapid response briefs as documents that present a summary of the best available evidence in a synthesised and contextualised manner in direct response to a decision-maker’s question. They are knowledge translation products that result from systematic and transparent methods to synthesise and appraise the evidence. They do not generate new knowledge but use already available findings, especially from existing systematic reviews [17]. Because the primary audience for rapid response briefs is policymakers, many of whom do not have a research or healthcare background and are very busy, the briefs should be short, easy-to-read documents with minimal technical language. However, little is known about what the optimal format of these briefs might be or whether policymakers using research evidence for policymaking perceive them as useful.

The Ugandan country node of the Regional East African Community Health Policy Initiative (REACH-PI) [18] is a partner in WHO’s Evidence Informed Policy Network (EVIPNet) and participated in the “Supporting Use of Research Evidence (SURE) for Health Policy in African Health Systems” project [19]. Researchers piloted a rapid response service in March 2010, providing rapid response briefs on demand to policymakers in Uganda [17]. The objectives of this study were to (1) explore Ugandan policymakers’ experiences with a rapid response brief template developed for this service; (2) use our findings to improve the format of that template; and (3) assess the extent to which the revised rapid response brief template better met policymakers’ needs.

Methods

The structure of the service, how it was developed and the process of preparing rapid response briefs have been described in detail elsewhere [17]. The service was developed based on a literature review, brainstorming, interviews with potential users and pilot testing. The process for preparing a rapid response brief includes clarifying the question with the user, ensuring that it is within the scope of the service, searching for a systematic review or, when relevant, other evidence, preparing a structured summary of the best available evidence that was found, and peer review of the brief. This process took from 1 to 28 days, depending on the urgency of the question. Examples of rapid response briefs as referred to in this study can be found in the Uganda Clearinghouse for Health Policy and Systems Research (http://chs.mak.ac.ug/uch/home).

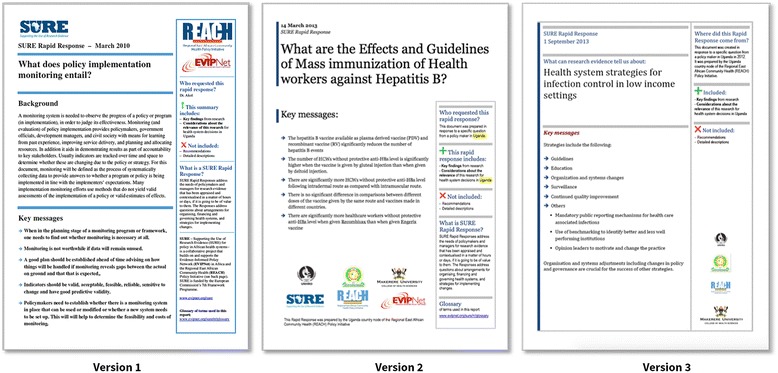

We based the initial format of the briefs (Fig. 1, version 1) on principles for presenting evidence to policymakers learned from other studies, for example, a graded entry or layered presentation and numerical results in tables [12, 20].

Fig. 1.

The initial format of the rapid response brief (version 1) and its two revisions (version 2 and 3)

We then explored users’ experiences and perceptions of the briefs using qualitative methods for data collection and analysis, and drew on these findings to agree on formatting improvements over several cycles.

Participants

From a list of potential public and private sector stakeholder institutions involved in or closely associated with policymaking in Uganda, we obtained a purposive sample of top and mid-level policymakers and health managers. We ensured inclusion of participants who had used the service before and those who had not. The participants were invited to take part in the user testing exercises by email or physically delivered formal letters of invitation [21], followed by a face-to-face visit to answer any questions or concerns about the exercise.

User testing

We explored policymakers’ experience with the rapid response brief format through a set of user tests. User testing is widely used for assessing users’ experiences with software, websites and (instructional) documents [22], including presentations of research evidence [23–27]. Representative users of a product are invited to participate in individual semi-structured interviews and asked to speak about their experience as they interact with the product [28, 29]. We adapted our methods from other studies that have user tested presentations of research evidence [20, 24–28].

Data collection

We carried out 12 user tests iteratively between 2010 and 2013 with eight purposively sampled participants: four (out of seven invited) in 2010 using the original template and four (out of six invited) in 2012 using the second version. We then involved four participants from these eight earlier tests in a shorter session in 2013 after making changes to the second template, to check their preference between the third and the earlier template. Previous user testing has found that 80% of known usability problems could be ascertained from five representative users, with three participants revealing almost 70% of all problems including the most severe ones; there are diminishing returns after the fifth user [30–32].
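The diminishing returns described above are often summarised with a simple problem-discovery model. The sketch below is a minimal illustration, assuming the model commonly attributed to Nielsen and Landauer in the usability literature, in which each user independently detects any given problem with the same probability. The default value of 0.31 is an assumed average from that literature, not an estimate from this study, and the function name `proportion_found` is hypothetical.

```python
def proportion_found(n_users: int, detect_prob: float = 0.31) -> float:
    """Expected share of usability problems found by n_users testers.

    Assumes every user independently detects any given problem with the
    same probability detect_prob (an assumed value, not from this study).
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= detect_prob <= 1.0:
        raise ValueError("detect_prob must lie between 0 and 1")
    # A problem is missed only if every one of the n users misses it.
    return 1.0 - (1.0 - detect_prob) ** n_users


if __name__ == "__main__":
    for n in (1, 3, 4, 5, 8):
        print(f"{n} users: {proportion_found(n):.0%} of problems expected")
```

Under these assumptions the model gives roughly 67% for three users and 84% for five, which is in line with the figures quoted above; a different detection probability would shift these values.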

In test 1, we presented users with version 1 of the template (Fig. 1, version 1), which we designed based on prior research [20]. In test 2, we presented version 2 of the template (Fig. 1, version 2), which we revised based on feedback from test 1. In test 3, we presented version 3 (Fig. 1, version 3) of the template and the version that the participant was shown in test 1 or test 2.

We carried out user testing as face-to-face interviews in a quiet room, with an interviewer and a note taker. Each interview lasted approximately 1 hour and was audio-recorded. We created a scenario-based interview guide designed to explore the facets of user experience as described by Morville and adapted by Rosenbaum [33, 34]. These facets, described in Table 1, include findability, usability, usefulness, accessibility, credibility, desirability and value of the product. Although Morville’s meaning of value was from the perspective of the producer, in this study we assessed value from the perspective of the user. In addition, we adopted one of the two facets Rosenbaum added to the Morville framework, that of understandability.

Table 1.

Users’ experiences using rapid response briefs

Issues raised are illustrated with quotes where possible.

| Domain | Major problems | Big problems or frustrations | Minor issues | Positive feedback | Specific suggestions | What we changed |
|---|---|---|---|---|---|---|
| Findability (the extent to which the product is navigable and the user can locate and easily find what they need) | • None | • A lot of information on the first page • Too many logos on the face/first page • Too many key messages – preference for only one or two | • Distracting information box(es) especially on the first page • A dislike for headings presented in form of questions | • Headings, colours, formatting, font are clear enough • Use of tables to summarise findings • A good balance between precision and detail of information | • Reduce information on the first/face page • Use a style that allows for citation to show in the text not at the end • More use of figures and less of text | • Only main logos left on front page, others moved to the back • Logos made smaller • No set number of key messages but instruction to keep these to a minimum • Revised what information would be on page one • Some information boxes moved to other pages |
| Usability (the ease of use of a product) | • None | • The report seemed lengthy • The methodology of how the report was prepared being in a small information box on the second page was easily missed • The absence of recommendations | • None | • A background section that sets the context of the report • The small size of the document • Decision options were presented clearly • The conclusion was clear • Key messages were well linked to the approaches or options provided and discussed later, which made it easy to follow • Information boxes were helpful in providing guidance on what to find in the report | • Aim to restrict text under a given subheading to the same page and not spill over to the next • Key messages should not simply be bullet points they should have a little more detail • Keep only important references | • Report should aim at 5–7 pages • Reference to the methodology to be included in the main text, in the background whenever possible (considering a balance with size of the document) |
| Usefulness (the extent to which the product fills a need that the user had) | • None | • The absence of recommendations or a clear way forward | • None | • All participants found the reports useful for themselves and others | • The provision of recommendations | • Communications to rapid response service team to give more time to explaining the absence of recommendations (in report and during interaction) |
| Accessibility (the ease with which the product is available to all users including those with different preferences or disabilities) | • None | • None | • None | • Generally found accessible “…yeah I think, everyone, okay at least a good percentage can use this document without a problem” | • None | • No changes made to the template |
| Credibility (the extent to which the users trust and believe what is presented to them and what elements of the product influence this trust) | • None | • None | • None | • Credibility attached to the research institution and partners (as identified by the logos) • Referencing using credible and trusted sources • Provision of contact information to follow up on the report and its content | • None | • No changes made to the template |
| Understandability (understanding (or recognising) the document category and understanding the document content) | • None | • Poor initial understanding of the type of document and its potential uses | • Unclear reference to methods used to prepare the document | • Simple language | • None | • Revision of information in the information boxes |
| Desirability (how much appreciation is drawn from the user by the power and value of the image, identity, brand, and other elements of emotional design) | • None | • None | • None | “I love the report especially the background. Also it is not very long so it is readable…” “Very desirable, we hope this service will not end” | • Presentation of recommendations | • No changes made to the template |
| Value | • None | • None | “… the writing seems ‘laborious’. …you are spending a lot on describing yourselves…” “Make the reports/reports more accessible for those other than those who requested for it…” | • None | • Increase visibility of the products through different kinds of dissemination | • Less information about partners and all of it moved to the back of the document • Decision to increase visibility with caution; need to balance demand with capacity to meet it |

Using the concurrent think-aloud method [22], we provided the participants with a brief based on a topic to which we felt any policymaker could easily relate and which did not require in-depth knowledge to understand. We systematically walked the participants through the brief and urged them to comment out loud on their experience of the different sections, highlighting any aspects they appreciated or found problematic. Although the test allowed for the interviewer to probe where necessary, any questions arising from the users during the interview were noted and only discussed after the interview to avoid distracting and biasing them.

Data analysis

We transcribed the interviews, identifying and coding findings from the transcriptions under the following thematic areas summarised in Table 1: major problems, big problems or frustrations, and minor issues. We also looked out for positive feedback and any specific suggestions for improving the users’ experience. For the last set of user tests performed in 2013, we also identified preferences for the format before and after the changes made to the first version. All findings were coded according to their corresponding category in our adapted user experience framework. Transcription and coding were performed by the two researchers who carried out each interview, that is, the interviewer and the note taker.

The research team included three researchers from Makerere University who performed the interviews and did the initial coding and two researchers from the Norwegian Knowledge Center for the Health Services (NOKC) who participated in the latter stages of coding and thematic analysis. The entire team then discussed and came to a consensus on the themes and corrections to be made on the templates. Two senior researchers, one in Norway and one in Uganda, provided additional input during the discussions. We held the discussions through conference calls and in face-to-face meetings. The researchers at the NOKC, who included an information designer (SR), then re-designed the template based on the feedback.

This research received ethics approval from the School of Medicine, Research and Ethics Committee at Makerere University (reference number 2011–177). We sought consent for the process (including the audio-recording) from all participants.

Results

Of the eight participants, five were from the Ministry of Health, two from civil society and one was a development partner (Table 2). The participants’ backgrounds typically included a combination of several attributes, namely medical (medical doctors and clinical officers), finance/economics, education, statistics, research, nutrition, health promotion, advocacy, government and health systems planners.

Table 2.

Profiles of respondents involved in the user-testing exercises in this study

| Initial Test No (year) | Organisation of affiliation | Type of policymaker<sup>a</sup> | Used service before | Participated in follow-up interview (Test 3) | Sex |
|---|---|---|---|---|---|
| Test 1 (2010) | MoH | Senior policymaker in MoH | No | No | F |
| Test 1 (2010) | NGO | Stakeholder in NGO/CSO | Yes | No | F |
| Test 1 (2010) | MoH | Policymaker in MoH | Yes | No | M |
| Test 1 (2010) | NGO | Stakeholder in NGO/CSO | No | No | F |
| Test 2 (2012) | MoH | Policymaker in MoH | No | Yes | M |
| Test 2 (2012) | MoH | Policymaker in MoH | No | Yes | F |
| Test 2 (2012) | Development partner | Development Partner, country representative | Yes | Yes | F |
| Test 2 (2012) | MoH | Policymaker in MoH | Yes | Yes | M |

NGO, non-governmental organisation; CSO, civil society organisation; MoH, Ministry of Health

<sup>a</sup> Self-reported

Users’ experiences of the first version of the rapid response brief format are summarised in Table 1. The participants did not identify any major problems but identified a number of big problems, including the length of the brief. One respondent felt the seven-page brief presented was too long, which reduced his motivation to read it. He explained his frustration:

“…at a glance I find it [the brief] a bit bulky,… Usually we need policy briefs of two pages, maybe maximum five…”

Another big problem reported was a crowded front page, which some participants attributed to too many key messages and logos:

“… the first page is like a billboard…”

Other big problems included a poor initial understanding of the type of brief and its uses, and the absence of recommendations.

Participants also cited some minor issues and these included the fact that the information boxes on the first page were a distraction from the main text.

“And do you have to have these [information boxes] here? They can’t come at the back?”

Two participants felt there were too many information boxes on the providers of the briefs.

“You are doing a lot of awareness of who[m]ever these people are …”

Other minor issues cited included the fact that the heading had been presented as a question, and there was only implicit reference to methods used to prepare the document.

The format also had features that participants liked, such as clarity of presentation and use of tables to summarise findings.

“…this table you see summarizes the strategies… so it will not give the reader a lot of hurdles to (pauses) yeah…”

Participants cited a good balance between the precision and detail of information presented, a clear background section establishing the context of the brief, and the short length of the document, although the latter was a concern for two of them.

In addition, some participants felt the information boxes were in fact helpful in providing guidance on what to find in the brief. All participants found the briefs useful and expected others would feel the same. They all attached credibility to the briefs especially due to the partners represented and identified by the logos and generally found the brief usable:

“…yeah I think, everyone, okay at least a good percentage can use this document without a problem.”

To improve the briefs, participants suggested, among other things, that the style of referencing used be one showing references within the text. In addition, they requested the authors to consider providing recommendations and also increasing the visibility of the briefs within the target audience groups.

Participants provided conflicting feedback on three aspects of the rapid response briefs. While two participants reported the brief was long, the rest reported liking the length. Furthermore, some participants wanted additional information in the briefs while still wanting to keep them short. Five of the eight participants felt the information boxes on the right side of the page were distracting, irrelevant or misplaced. The other three felt the side boxes provided useful information about what was and was not in the brief. They also felt this information was necessary for users who have not interacted with the service or seen its products before. Three participants thought the logos on the first page were irrelevant and a form of advertising, and should be relegated to the back page. Four participants felt the logos gave credibility to the brief.

We attempted to balance how we addressed the different concerns in the revised template. For example, concerning the length of the brief versus the information useful for understanding the document, we maintained the guidance that the briefs should be limited to five pages and attempted to provide additional information online or through personal communication. Further, we kept the side boxes, while reducing the amount of information in them and emphasising key information, and we kept the logos on the first page, while reducing their size.

Overall preference for version 2 and version 3 of the templates

Two of the participants preferred the revised template while two preferred the older one (Table 3).

Table 3.

Users’ preferences for the alternative versions of the revised rapid response brief

| Which participant | Preference | Explanation why (illustrated with quotes if possible) | User experience category |
|---|---|---|---|
| Respondent 1 | Version 3 | Less crowded face/first page | Findability |
| Respondent 2 | Version 2 | Version 3 template looks “deficient” and still has no recommendations | Usability |
| Respondent 3 | Version 2 | Version 2 template is fine so long as you keep the document short | Usability |
| Respondent 4 | Version 3 | Face page is more attractive – makes the document feel “light” | Findability |

The reasons cited for preference of version 3 of the template were that the face page was less crowded and made the document feel ‘light’ and attractive. Those participants preferring the older version 2 did not give specific reasons in its favour. One said that the newer template looked ‘deficient’ and still had no recommendations. The other reported that there was no need for changes as long as the document was kept short. Although preferences for the different versions differed, none of the participants felt there were still any big problems with the final version (version 3).

Discussion

This study explored Ugandan policymakers’ experiences with a rapid response brief format and the extent to which subsequent changes to the template improved their experiences. Although we did not uncover any major problems, there were several large problems, causing confusion or difficulty, which were ultimately resolved to some extent. In addition, there was positive feedback and suggestions from participants.

Findings in relation to other studies

Problems

A large problem reported by one participant in this study was the length of the brief, a finding previously reported by others [12, 20, 35]. It is uncertain what the optimal number of pages is for such a document, but several studies have shown that policymakers do not take the time to read lengthy reports, and have a clear preference for short and concise reports or summaries of research [12, 20, 35]. Rapid response briefs are meant to help policymakers in urgent situations, who are therefore limited by time. Scientists and researchers are thought to have more available time to read longer reports, whereas policymakers may not [36–38].

Another big problem was the absence of recommendations in the brief. This frustrated all but one of the participants. Policymakers have expressed similar frustrations with SUPPORT summaries of systematic reviews [20] and evidence briefs for policy [39]. Moat et al. [40] found that “not concluding with recommendations” was the least helpful feature of these briefs. There are many reasons for which we did not include recommendations. Rapid response briefs seek to summarise relevant research evidence and may not incorporate other relevant information or consider all of the factors relevant for a decision. Furthermore, recommendations do not flow directly from research evidence. They reflect the judgments, views and values of the authors. Although recommendations might be within the scope of evidence briefs aiming to fully address the pros and cons of different options for addressing a problem [41], they are clearly outside of the scope of rapid response briefs, which aim to summarise succinctly the research evidence addressing a specific question. Therefore, it is necessary to find ways of clearly communicating to policymakers the purpose of rapid response briefs and to ensure they know what to expect in them.

Another big problem participants cited was a crowded first page because of multiple logos, multiple key messages and information boxes. Indeed, anecdotal evidence suggests that crowded text, lots of images, multiple font styles, and too much information can make a page very difficult to read [42]. Furthermore, there was confusion about what kind of document the brief was and how it had been prepared despite the information boxes describing this. This may reflect the fact that participants did not read the information boxes. Some expressed that these boxes were a distraction from the main content. It is also possible that participants read the information in the boxes but did not find it clear or sufficient. A potential solution would be to include a methods section in the text, but this would need to be balanced with concerns about the length of the brief. More interaction between users of the briefs and researchers might also help users to understand the methods used to prepare the briefs. Links to additional information online might be another way to provide this information without increasing the length of the briefs.

A minor issue cited was the feeling that the briefs were not visible to others who would potentially benefit from them. Since the research team deliberately took measures to limit the demand for services during the pilot, this may have limited its visibility. We expected visibility to improve after the pilot period.

Positive feedback

We found participants valued the rapid response briefs and found them to be useful. This is in keeping with findings from a similar study exploring policymakers’ experiences with a short summary of results of systematic reviews relevant to low- and middle-income countries [20].

One feature the participants felt increased the credibility of the briefs was the use of references from sources in which participants already had confidence, for example, well-known journals or The Cochrane Library. Rosenbaum et al. [20] also found the use of trusted sources increased the credibility of research summaries.

Considering the templates as a whole, there was equal preference for the two versions. However, this preference was not tagged to any major frustrations or big problems, but to guidance that can be adopted for either version; for example, the need to keep the brief short and not to crowd the front page.

Participants provided conflicting feedback about several aspects of the rapid response briefs. It is important to note that user testing does not look for consensus but welcomes all feedback to enable consideration of all potential difficulties from would-be users. It is possible the conflicting feedback seen in this study reflects differences in the backgrounds of the participants; for example, their training in research methods, level in the policymaking hierarchy, prior exposure to rapid response briefs, or exposure to other types of evidence products. It would be difficult, if not impossible, to tailor the format of the briefs to such differences although we attempted to balance how we addressed these concerns in the revised template.

Strengths and limitations

As far as we are aware, this is the only study that has evaluated users’ experience of reports or briefs of rapid responses to policymakers’ needs for research evidence. We used a concurrent think-aloud protocol widely used for user testing, which has been used in the past for evaluating how users experience the format of different types of evidence-based reports [23, 41, 43]. This approach avoided the risk of recall bias, which might have occurred if we had used a retrospective approach in which participants have to think back on their experience. In addition, the concurrent think-aloud approach can reveal more unspoken feedback than a retrospective approach. Although the need to think aloud while working can have a negative effect on the task being performed, and could affect participants’ perceptions of the briefs, it is unlikely this had a major impact on our findings, since the task of perusing and reading documents is one the participants perform regularly.

In this study, we involved eight participants (four for each version of the template), a number higher than that thought to reveal 80% of known usability problems, including the most severe ones. We believe we were able to capture most problems associated with rapid response briefs, especially the major ones.

Another strength of this study is that we did not only use the feedback from the user testing to make changes to the template. We conducted follow-up interviews after these changes to ensure there were no new major problems created or old ones left unhandled.

An important limitation of this study was the ‘lab’-like context. Rather than observing people using these briefs in an actual decision-making process, we invited them to interviews in which we designed policymaking scenarios which were not necessarily relevant for each of the participants. However, the briefs provided were those requested from actual policymaking processes and the topics of the briefs chosen were ones to which most policymakers would be able to relate.

Another potential limitation of this study is that fewer participants engaged in the third test where we tried to gauge preference for the two revised formats. Four participants might be considered too few to give a representative result. However, the aim of user testing is not primarily to create generalizable findings but to identify potentially important problems and experiences from people who represent the target group, and this was achieved.

Implications for practice and research

The main findings of this study are that users of rapid response briefs value them and for the most part, view the format of the briefs positively. The rapid response team in Uganda now uses the final template (version 3). An important challenge that should be addressed in practice and further research is balancing users’ preference for short briefs with their desire for more information. In addition, further user testing in other contexts, testing using actual scenarios and comparative evaluations of the effects of the rapid response service on decisions and other outcomes are needed.

Conclusion

Policymakers in Uganda found rapid response briefs to be useful, accessible and credible. However, they experienced some frustrations and there was some conflicting feedback, including different views about the length of the briefs, presentation of information about what the briefs are and are not and how they were prepared, and the absence of recommendations.

Acknowledgements

We would like to acknowledge the WHO-EVIPNet partners, SURE Project, and the Norwegian Knowledge Center for the Health Services for the technical and other support that enabled the study on the rapid response service. We acknowledge Mrs. Rachel Emaasit for time spent editing this manuscript.

Funding

We acknowledge the IDRC’s International Research Chair in Evidence-Informed Health Policies and Systems and the African Doctoral Dissertation Research Fellowship for the financial support to enable this work. Furthermore, this research was funded in part by the European Community's Seventh Framework Programme under grant agreement n° 222881 (SURE project). The funders had no role in the design of the study, collection, analysis and interpretation of data, or in writing the manuscript.

Availability of data and materials

Raw data, namely the transcribed interviews used in this study, have not yet been made available because they are still being analysed to answer further research questions. The dataset will be made available on the Uganda Clearing House for Health Systems and Policy Research website as soon as the authors have completed this analysis.

Authors’ contributions

RM conceived the study. RM-D, ADO, JNL and NKS participated in the design of the study. RM participated in collecting the data for the study. All authors participated in analysis of the data and provided comments on drafts of the manuscript. RM drafted the manuscript. All authors read and approved the final manuscript.

Competing interests

No competing interests to declare.

Consent for publication

Not applicable.

Ethics approval and consent to participate

This research received ethics approval from the School of Medicine, Research and Ethics Committee (SOMREC) at Makerere University (reference number 2011-177). We also sought consent for the process (including audio-recording) from all participants.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Rhona Mijumbi-Deve, Email: mijumbi@yahoo.com

Sarah E. Rosenbaum, Email: sarah@rosenbaum.no

Andrew D. Oxman, Email: oxman@online.no

John N. Lavis, Email: lavisj@mcmaster.ca

Nelson K. Sewankambo, Email: sewankam@infocom.co.ug

References

- 1. World Health Organization. World Report on Knowledge for Better Health. 2004. http://www.who.int/rpc/meetings/en/world_report_on_knowledge_for_better_health2.pdf. Accessed July 2013.

- 2. World Health Organization. World Health Assembly: Resolution on Health Research. 2005. http://apps.who.int/gb/ebwha/pdf_files/WHA58-REC1/A58_2005_REC1-en.pdf. Accessed 11 Sep 2012.

- 3. World Health Organization. Mexico Ministerial Statement for the Promotion of Health. 2005. http://www.who.int/healthpromotion/conferences/previous/mexico/statement/en/. Accessed 11 Sep 2012.

- 4. World Health Organization. The Bamako Call to Action on Research for Health: Strengthening Research for Health, Development, and Equity. Bamako: The Global Ministerial Forum on Research for Health; 2008.

- 5. Carden F. Knowledge to Policy: Making the Most of Development Research. Ottawa: IDRC/SAGE; 2009.

- 6. Innvaer S, Vist G, Trommald M, Oxman A. Health policy-makers’ perceptions of their use of evidence: a systematic review. J Health Serv Res Policy. 2002;7:239–44. doi: 10.1258/135581902320432778.

- 7. Wallace J, Nwosu B, Clarke M. Barriers to the uptake of evidence from systematic reviews and meta-analyses: a systematic review of decision makers’ perceptions. BMJ Open. 2012;2(5). doi: 10.1136/bmjopen-2012-001220.

- 8. Oliver K, Innvaer S, Lorenc T, Woodman J, Thomas J. Second Conference on Knowledge Exchange in Public Health. Noorwijkhout: Holland Fuse Conference: How to Get Practice into Science; 2013. Barriers and Facilitators of the Use of Evidence by Policy Makers: An Updated Systematic Review.

- 9. Shearer JC, Dion M, Lavis J. Exchanging and using research evidence in health policy networks: a statistical network analysis. Implement Sci. 2014;9:126. doi: 10.1186/s13012-014-0126-8.

- 10. Contandriopoulos D, Lemire M, Denis J, Tremblay E. Knowledge exchange processes in organizations and policy arenas: a narrative systematic review of the literature. Milbank Q. 2010;88(4):444–83. doi: 10.1111/j.1468-0009.2010.00608.x.

- 11. Lavis J, Oxman A, Moynihan R, Paulsen E. Evidence-informed health policy 1 - synthesis of findings from a multi-method study of organizations that support the use of research evidence. Implement Sci. 2008;3:53. doi: 10.1186/1748-5908-3-53.

- 12. Lavis J, Davies H, Oxman A, Denis J, Golden-Biddle K, Ferlie E. Towards systematic reviews that inform health care management and policy-making. J Health Serv Res Policy. 2005;10(Suppl 1):35–48. doi: 10.1258/1355819054308549.

- 13. Saul JE, Willis CD, Bitz J, Best A. A time-responsive tool for informing policy making: rapid realist review. Implement Sci. 2013;8:103. doi: 10.1186/1748-5908-8-103.

- 14. Khangura S, Konnyu K, Cushman R, Grimshaw J, Moher D. Evidence summaries: the evolution of a rapid review approach. Syst Rev. 2012;1:10. doi: 10.1186/2046-4053-1-10.

- 15. Ganann R, Ciliska D, Thomas H. Expediting systematic reviews: methods and implications of rapid reviews. Implement Sci. 2010;5:56. doi: 10.1186/1748-5908-5-56.

- 16. Riley B, Norman CD, Best A. A rapid review using systems thinking. Evid Policy. 2012;8(4):417–31. doi: 10.1332/174426412X660089.

- 17. Mijumbi RM, Oxman AD, Panisset U, Sewankambo NK. Feasibility of a rapid response mechanism to meet policymakers’ urgent needs for research evidence about health systems in a low income country: a case study. Implement Sci. 2014;9:114. doi: 10.1186/s13012-014-0114-z.

- 18. East African Community. Regional East African Community Health (REACH) Policy Initiative Project Arusha, Tanzania; 2005. http://www.who.int/alliance-hpsr/evidenceinformed/reach/en/. Accessed July 2013.

- 19. WHO-Evidence Informed Policy Network. Supporting the Use of Research Evidence (SURE) for Policy in African Health Systems. 2009. http://www.who.int/evidence/about/en/. Accessed Sept 2012.

- 20. Rosenbaum SE, Glenton C, Wiysonge CS, Abalos E, Mignini L, Young T, et al. Evidence summaries tailored to health policy-makers in low- and middle-income countries. Bull World Health Organ. 2011;89:54–61. doi: 10.2471/BLT.10.075481.

- 21. Faulkner L. Beyond the five-user assumption: benefits of increased sample sizes in usability testing. Behav Res Methods Instrum Comput. 2003;35(3):379–83. doi: 10.3758/BF03195514.

- 22. van den Haak M, De Jong M, Schellens PJ. Retrospective vs. concurrent think-aloud protocols: Testing the usability of an online library catalogue. Behav Inform Technol. 2003;22(5):339–51. doi: 10.1080/0044929031000.

- 23. Rosenbaum SE, Glenton C, Cracknell J. User experiences of evidence-based online resources for health professionals: user testing of The Cochrane Library. BMC Med Inform Decis Mak. 2008;8:34. doi: 10.1186/1472-6947-8-34.

- 24. Rosenbaum SE, Glenton C, Nylund HK, Oxman AD. User testing and stakeholder feedback contributed to the development of understandable and useful Summary of Findings tables for Cochrane reviews. J Clin Epidemiol. 2010;63(6):607–19. doi: 10.1016/j.jclinepi.2009.12.013.

- 25. Giguere A, Legare F, Grad R, Pluye P, Rousseau F, Haynes RB, et al. Developing and user-testing Decision boxes to facilitate shared decision making in primary care - a study protocol. BMC Med Inform Decis Mak. 2011;11:17. doi: 10.1186/1472-6947-11-17.

- 26. Yu CH, Parsons JA, Hall S, Newton D, Jovicic A, Lottridge D, et al. User-centered design of a web-based self-management site for individuals with type 2 diabetes – providing a sense of control and community. BMC Med Inform Decis Mak. 2014;14:60. doi: 10.1186/1472-6947-14-60.

- 27. Dorfman CS, Williams RM, Kassan EC, Red SN, Dawson DL, Tuong W, et al. The development of a web- and a print-based decision aid for prostate cancer screening. BMC Med Inform Decis Mak. 2010;10:12. doi: 10.1186/1472-6947-10-12.

- 28. Nielsen J. Usability Testing. 2. San Diego: Academic Press; 1994. pp. 165–206.

- 29. Krahmer E, Ummelen N. Thinking about thinking aloud: a comparison of two verbal protocols for usability testing. IEEE Trans Prof Commun. 2004;47(2):105–17. doi: 10.1109/TPC.2004.828205.

- 30. Virzi RA. Measurement in human factors. Hum Factors. 1992;34(4):457–68.

- 31. Nielsen J, Landauer TK, editors. A mathematical model of the finding of usability problems. Amsterdam: ACM INTERCHI'93 Conference; 1993.

- 32. Nielsen J. Why You Only Need to Test with 5 Users. Nielsen Norman Group: Evidence-Based User Experience Research, Training, and Consulting. 2000. https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/. Accessed Apr 2014.

- 33. Morville P. User Experience Design. Ann Arbor: Semantic Studios LLC; 2004. http://semanticstudios.com/publications/semantics/000029.php. Accessed Apr 2014.

- 34. Rosenbaum S. Improving the User Experience of Evidence. A Design Approach to Evidence-informed Health Care. Oslo College of Architecture and Design: Oslo; 2010.

- 35. Petticrew M, Whitehead M, Macintyre S, Graham H, Egan M. Evidence for public health policy on inequalities: the reality according to policymakers. J Epidemiol Community Health. 2004;58:811–6. doi: 10.1136/jech.2003.015289.

- 36. von Grebmer K. Chapter 21: Converting Policy Research into Policy Decisions: The Role of Communication and the Media. In: Babu SC, Ashok G, editors. Economic Reforms and Food Security: The Impact of Trade and Technology in South Asia. New York: Haworth Press, Inc. pp. 455–9.

- 37. Dabelko GD. Speaking their language: how to communicate better with policymakers and opinion shapers – and why academics should bother in the first place. Int Environ Agreements. 2005;5:381–6. doi: 10.1007/s10784-005-8329-8.

- 38. Bogenschneider K, Corbett TJ. Evidence-Based Policymaking: Insights from Policy-Minded Researchers and Research-Minded Policymakers. London: Routledge; 2011. p. 368.

- 39. World Health Organisation - EVIPNet. Policy Briefs. Evidence Briefs for Policy. 2009. http://www.who.int/evidence/sure/policybriefs/en/. Accessed July 2014.

- 40. Moat KA, Lavis JN, Clancy SJ, El-Jardali FE-J, Pantoja T. Team ftKTPEs. Evidence briefs and deliberative dialogues: perceptions and intentions to act on what was learnt. Bull World Health Organ. 2014;92(1):20–8. doi: 10.2471/BLT.12.116806.

- 41. Rosenbaum SE, Glenton C, Oxman AD. Summary-of-findings tables in Cochrane reviews improved understanding and rapid retrieval of key information. J Clin Epidemiol. 2010;63(6):620–6. doi: 10.1016/j.jclinepi.2009.12.014.

- 42. WordPress.org. Accessibility. WordPress Lessons. 2003. http://codex.wordpress.org/Accessibility. Accessed Jan 2017.

- 43. Cooke L. Assessing concurrent think-aloud protocol as a usability test method: a technical communication approach. IEEE Trans Prof Commun. 2010;53(3):201–15. doi: 10.1109/TPC.2010.2052859.
