Abstract

Objective

To develop and validate case definitions (“computable phenotypes”) to accurately identify neurosurgical and critical care events in children with traumatic brain injury (TBI).

Design

Prospective observational cohort study, May 2013 – September 2015

Setting

Two large U.S. children’s hospitals with level 1 Pediatric Trauma Centers

Participants

174 children < 18 years old admitted to an intensive care unit (ICU) after TBI

Methods

Prospective data were linked to database codes for each patient. The outcomes were prospectively identified acute TBI, intracranial pressure monitor placement, craniotomy or craniectomy, vascular catheter placement, invasive mechanical ventilation, and new gastrostomy tube or tracheostomy placement. Candidate predictors were database codes present in administrative, billing, or trauma registry data. For each clinical event, we developed and validated penalized regression and Boolean classifiers (models to identify clinical events that take database codes as predictors). We externally validated the best model for each clinical event. The primary model performance measure was accuracy, the percent of test patients correctly classified.

Results

The cohort included 174 children who required ICU admission after TBI. Simple Boolean classifiers were ≥ 94% accurate for 7/9 clinical diagnoses and events. For central venous catheter placement, no classifier achieved 90% accuracy. Classifier accuracy depended on the available data fields: 5/9 classifiers were acceptably accurate using only administrative data, but three required trauma registry fields and two required billing data.

Conclusions

In children with TBI, computable phenotypes based on simple Boolean classifiers were highly accurate for most neurosurgical and critical care diagnoses and events. The computable phenotypes we developed and validated can be utilized in any observational study of children with TBI, and can reasonably be applied in studies of these interventions in other patient populations.

Keywords: pediatrics, craniocerebral trauma, brain injuries, validation studies as topic, electronic health records

Introduction

Traumatic brain injury (TBI) causes approximately 2,200 deaths and 35,000 hospitalizations in U.S. children annually.(1) Our current knowledge of how best to treat children with TBI has many gaps.(2) The potential for gaining knowledge from large existing data sources is well-known.(3–5) Several national and international administrative databases and registries contain information about thousands of children who have suffered TBI.

The use of health care database codes to represent clinical diagnoses and events is challenging because International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) and other codes commonly used in observational studies have imperfect accuracy.(6, 7) Validation of these codes is important because misclassification of exposures, and particularly outcomes, can powerfully bias epidemiologic studies.(8) Validated case definitions, also known as computable phenotypes or classifiers, have been published for some clinical conditions, but not for neurosurgical and critical care interventions in children with TBI.(9) Some computable phenotypes are designed to find patients within electronic health record (EHR) systems in real time to facilitate study recruitment.(10) Others are designed to support comparative effectiveness studies using observational data.

We conducted this multi-center prospective observational study to accomplish two objectives. First, to develop and validate classifiers that accurately identify important variables for studies of children with TBI in databases: the TBI diagnosis, neurosurgical and critical care interventions, and proxies for functional status at hospital discharge. Second, to demonstrate a workflow for database code validation that generates classifiers that 1) are optimally accurate given the available predictors, 2) are consistent, reproducible(11), and parsimonious, 3) have unbiased accuracy estimates, and 4) can easily be applied by other investigators.

Methods

Study Sites and Inclusion/Exclusion Criteria

The methods for this prospective cohort study have been described previously.(12) Briefly, the study was conducted at two pediatric trauma centers: Primary Children’s Hospital (PCH) in Salt Lake City, UT and Children’s Hospital Colorado (CHCO) in Aurora, CO. At PCH, we enrolled patients from May 2013 to September 2015. At CHCO, we enrolled patients from September 2014 to September 2015.

We included all patients < 18 years old admitted to an intensive care unit (ICU) with a diagnosis of acute TBI and either a Glasgow Coma Scale (GCS) score ≤ 12 or a neurosurgical procedure within 24 hours of admission. We excluded surviving patients discharged from the ICU within 24 hours of admission without a neurosurgical or critical care intervention. The study was granted a waiver of consent by both institutional review boards and all eligible patients were enrolled. The sample size for this study was determined by the number of patients in the cohort when we locked the data and began the analysis for this manuscript.

Linkage of Prospective Data with Database Records

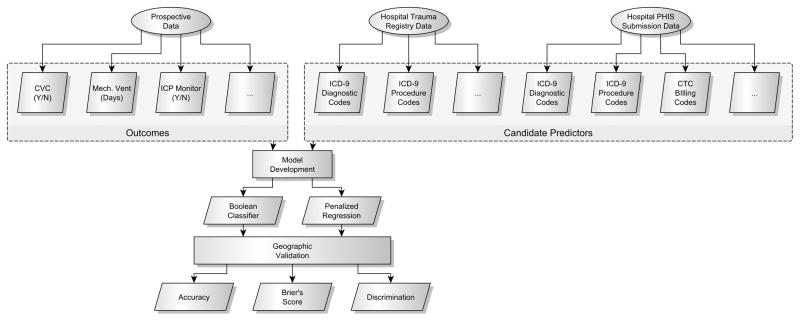

No single database contains all of the necessary variables to answer important clinical questions about how to care for children with TBI. We have previously linked the records of children with TBI in two large national databases, the Pediatric Health Information Systems (PHIS) database and the National Trauma Data Bank (NTDB).(13) PCH and CHCO submit data to both the PHIS database and the NTDB. After patient discharge, the PHIS and NTDB records for all study patients were obtained from the hospital analyst and hospital trauma teams, respectively. Using patient identifiers, we linked the prospective data with the PHIS and NTDB records for each patient (Figure 1).

Figure 1.

Analysis Flow

Three data sources were linked for each patient: prospective data, hospital trauma registry data in the format submitted to the National Trauma Data Bank (NTDB), and hospital administrative and billing data in the format submitted to the Pediatric Health Information Systems (PHIS) database. CVC = central venous catheter; Mech. Vent = mechanical ventilation; ICP = intracranial pressure; ICD-9 = International Classification of Diseases, Ninth Revision; CTC = Clinical Transaction Classification.

PHIS

PHIS is a benchmarking and quality improvement database containing inpatient data from > 45 U.S. children’s hospitals. PHIS contains administrative data, diagnoses, and procedures as well as utilization information for hospital supplies and clinical, imaging, and laboratory services. The utilization data is coded using Clinical Transaction Classification (CTC) codes. The data reliability and quality monitoring processes used by the PHIS database have been reported previously.(14, 15) Importantly, the ICD-9-CM diagnosis and procedure codes in PHIS are the same ICD-9-CM codes present in many administrative databases.

NTDB

The NTDB contains standardized trauma registry data from more than 900 trauma centers in the United States.(16) The NTDB has a continuous data quality improvement process.(16) In contrast to standard administrative ICD-9-CM codes, the ICD-9-CM diagnosis and procedure codes in the NTDB are generated by each institution’s trauma registrars.

Statistical Methods - Overview

We developed and validated penalized logistic regression and Boolean classifiers using PHIS and NTDB data fields to predict each clinical event. The clinical events of interest were a diagnosis of acute TBI, intracranial pressure (ICP) monitor placement, craniotomy or craniectomy, central venous catheter (CVC), arterial catheter, or peripherally inserted central catheter (PICC) placement, invasive mechanical ventilation, new gastrostomy tube placement, and new tracheostomy placement. Each classifier is a model (penalized regression or Boolean) that generates an estimated probability and a classification (yes/no) of whether a given patient experienced a given clinical event (e.g., ICP monitoring).

Penalized logistic regression(17), now widely used in statistical and machine learning(18), can select only the variables a classifier needs and “shrink” regression coefficients to avoid overfitting. We compared each penalized regression model with the corresponding Boolean classifier (e.g., code X or code Y present = “yes”). The regression models differ from Boolean classifiers in two important ways. First, each variable in a regression model has its own coefficient, so its effect on the estimated probability for a given patient may be higher or lower than that of other variables, whereas each code in a Boolean classifier is given equal weight. Second, uninformative variables may have been removed from the regression models by the shrinkage process, whereas Boolean classifiers with codes selected ad hoc may contain codes that are unnecessary or that worsen classification accuracy.
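The contrast between the two classifier types can be sketched in a few lines of Python. This is an illustration only, not the authors' R code: the code names, coefficients, and intercept below are hypothetical, and the 0.5 probability threshold is an assumption.

```python
import math

def boolean_classifier(codes_present, candidate_codes):
    """Classify as 'yes' if any candidate code appears in the patient's record."""
    return any(code in codes_present for code in candidate_codes)

def logistic_classifier(codes_present, coefficients, intercept, threshold=0.5):
    """Return (estimated probability, yes/no) from a weighted sum of code indicators."""
    logit = intercept + sum(
        weight for code, weight in coefficients.items() if code in codes_present
    )
    probability = 1.0 / (1.0 + math.exp(-logit))
    return probability, probability >= threshold

# Hypothetical candidate codes and coefficients (logit scale), for illustration only.
candidates = ["code_x", "code_y", "code_z"]
coefficients = {"code_x": 3.0, "code_y": 0.5}  # code_z shrunk to zero, so it is omitted
intercept = -2.0

patient = {"code_y"}  # a record containing only the weakly weighted code
print(boolean_classifier(patient, candidates))                # True: every code counts equally
print(logistic_classifier(patient, coefficients, intercept))  # probability about 0.18, so False
```

In this toy case the Boolean rule flags the patient because any listed code counts equally, while the weighted model does not because the only code present carries a small coefficient.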

We designed our analysis to follow the recommendations of the Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD) statement, a recently released international guideline endorsed by 11 major journals.(19) Most computable phenotype studies (67%)(9) develop and evaluate classifiers on the same data, which generates “optimistic” accuracy estimates.(20) As recommended for small samples by the TRIPOD statement, we developed the classifiers using data from one site (N = 121) and externally validated them using a second site (N = 53), so-called geographic validation.(19) This is a departure from the more common “split-sample” approach, which has been shown to have high variability without a large sample size.(20)

Because investigators using existing data to study TBI may utilize data from different sources, we developed and validated the optimal computable phenotype/classifier for each clinical diagnosis or event in four different database types: 1) standard administrative ICD-9-CM data, 2) NTDB data, 3) PHIS data, and 4) linked PHIS-NTDB data. The first classifier is generalizable to any study of administrative data containing ICD-9-CM codes. The second classifier is generalizable to any study of data formatted according to the National Trauma Data Standard.(21) The third classifier is generalizable to any study using PHIS data. The fourth is useful for analyses of the linked PHIS-NTDB dataset.(13)

Gold Standard Outcomes and Candidate Predictors

For each clinical event, we used the prospectively collected data as the gold standard outcome. Candidate predictors for each clinical event were identified from published studies using ICD-9-CM codes and by review of ICD-9-CM and CTC codebooks by clinical experts. The candidate predictors for ICP monitoring, for example, included ICD-9-CM procedure codes, CTC billing codes for the “supply” kit (tubing, reservoir, etc.) necessary to monitor ICP, and a “clinical” CTC billing code for monitoring ICP.(22)

Penalized Regression Models

We developed and internally validated an optimal penalized logistic regression model for each clinical diagnosis or event and each of the four database types. One commonly used form of penalized regression, the LASSO (least absolute shrinkage and selection operator), can perform variable selection by shrinking coefficients to 0. Unfortunately, the LASSO will tend to select one of two highly correlated predictors at random, rather than incorporating correlated predictors as “groups” into a model. We avoided the LASSO’s inconsistencies with correlated predictors by allowing the elastic net mixing parameter (alpha) to vary between 0 (“ridge” regression)(23) and 0.9; “elastic net”(24) regression has 0 < alpha < 1 and the LASSO has alpha = 1. We selected and internally validated the optimal model using 5-fold cross-validation with accuracy (percent of patients in the training dataset correctly classified as yes or no) as the metric to evaluate each model.(25) When models had equivalent accuracy, we selected the model with higher alpha to maximize parsimony.
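For reference, the role of alpha can be written out using the standard elastic net objective as parameterized in glmnet. The notation here (N patients, binary outcome y_i, code indicators x_i, overall penalty strength lambda) is introduced for illustration and does not appear in the text above.

```latex
\min_{\beta_0,\,\beta}\;
  -\frac{1}{N}\sum_{i=1}^{N}
    \Big[\, y_i\,(\beta_0 + x_i^{\top}\beta)
      - \log\!\big(1 + e^{\beta_0 + x_i^{\top}\beta}\big) \Big]
  \;+\; \lambda\Big[\, \tfrac{1-\alpha}{2}\,\lVert\beta\rVert_2^{2}
      + \alpha\,\lVert\beta\rVert_1 \Big]
```

Setting alpha = 0 leaves only the squared (ridge) term, alpha = 1 leaves only the absolute-value (LASSO) term, and intermediate values blend the two, so a model with alpha = 0.9 behaves much like the LASSO while retaining some tendency to keep correlated predictors together.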

Model Testing

We externally validated the classification models by predicting the event status (e.g. ICP monitoring yes/no) of each patient in the testing dataset (the second site) and comparing the classification with the truth. Again, the primary outcome was model accuracy. As recommended by guidelines(19), we also evaluated overall model performance using Brier’s score(26) and discrimination using sensitivity, specificity, and the c-index (equivalent to area under the curve [AUC] for these binary outcomes). A perfectly fit model will have a Brier’s score of 0 and “chance” predictions of 0.5 for each subject will give a Brier’s score of 0.25.
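The two reference values quoted for the Brier score can be checked directly. The sketch below is a minimal Python illustration with made-up outcomes; the function names and the 0.5 classification threshold are assumptions, not the authors' code.

```python
def brier_score(probabilities, outcomes):
    """Mean squared difference between predicted probability and observed outcome (0/1)."""
    return sum((p - y) ** 2 for p, y in zip(probabilities, outcomes)) / len(outcomes)

def accuracy(probabilities, outcomes, threshold=0.5):
    """Fraction of patients whose yes/no classification matches the observed outcome."""
    correct = sum((p >= threshold) == bool(y) for p, y in zip(probabilities, outcomes))
    return correct / len(outcomes)

# Made-up outcomes for five patients (1 = event occurred).
outcomes = [1, 0, 1, 1, 0]

perfect = [1.0, 0.0, 1.0, 1.0, 0.0]
chance = [0.5] * len(outcomes)

print(brier_score(perfect, outcomes))  # 0.0
print(brier_score(chance, outcomes))   # 0.25
print(accuracy(perfect, outcomes))     # 1.0
```

Because the Brier score uses the predicted probabilities rather than the thresholded classification, two classifiers with identical accuracy can have different Brier scores.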

Software and Statistical Significance

Data analysis was conducted in R version 3.3.1.(27) We used the glmnet(28, 29) package in R to develop and internally validate the penalized regression models. We defined statistical significance as P < 0.05. Code to generate the analysis and the manuscript was written using rmarkdown(30), compiled using knitr(31), and is entirely reproducible.

Results

Prospective Cohort

We enrolled 174 consecutive patients overall, 121 (70%) at PCH and 53 (30%) at CHCO (Table 1). Most of the patients, 119/174 (68%), had severe TBI, and more than one-quarter, 49/174 (28%), had a GCS of 3 on admission. Overall injury severity was high: 139/172 (81%) had an injury severity score (ISS) of at least 15. Hospital mortality was 14% overall and 20% among those with severe TBI.

Table 1.

Patient and Injury Characteristics and Interventions

| Characteristic/Intervention | All: n = 174 | Colorado: 53 (30.5%) | Utah: 121 (69.5%) |
|---|---|---|---|
| Age, years | | | |
| mean ± SD | 6.5 ± 5.3 | 6.2 ± 5.4 | 6.7 ± 5.3 |
| Female | | | |
| n (%) | 65 (37) | 16 (30) | 49 (40) |
| Injury Mechanism | | | |
| Traffic: n (%) | 53 (30) | 18 (34) | 35 (29) |
| Fall: n (%) | 40 (23) | 10 (19) | 30 (25) |
| Abuse: n (%) | 39 (22) | 17 (32) | 22 (18) |
| Other: n (%) | 42 (24) | 8 (15) | 34 (28) |
| ED GCS | | | |
| 3: n (%) | 49 (28) | 12 (23) | 37 (31) |
| 4–8: n (%) | 70 (40) | 28 (53) | 42 (35) |
| 9–12: n (%) | 28 (16) | 10 (19) | 18 (15) |
| 13–15: n (%) | 27 (16) | 3 (6) | 24 (20) |
| Hospital Disposition | | | |
| Home, no new supports: n (%) | 95 (55) | 25 (47) | 70 (58) |
| Mortality: n (%) | 25 (14) | 10 (19) | 15 (12) |
| Inpatient Rehabilitation: n (%) | 41 (24) | 17 (32) | 24 (20) |
| Other: n (%) | 13 (7) | 1 (2) | 12 (10) |
| Intracranial Pressure Monitoring | | | |
| Any ICP Monitor: n (%) | 49 (28) | 23 (43) | 26 (21) |
| EVD: n (%) | 16 (9) | 7 (13) | 9 (7) |
| Bolt/Parenchymal: n (%) | 37 (21) | 13 (25) | 24 (20) |
| Subdural: n (%) | 6 (3) | 6 (11) | 0 (0) |
| Other Neurosurgical Procedures | | | |
| EDH evacuation: n (%) | 18 (10) | 0 (0) | 18 (15) |
| SDH evacuation: n (%) | 24 (14) | 5 (9) | 19 (16) |
| Decompressive Craniectomy: n (%) | 10 (6) | 6 (11) | 4 (3) |
| Vascular Access | | | |
| CV Catheter: n (%) | 72 (41) | 27 (51) | 45 (37) |
| Arterial Catheter: n (%) | 84 (48) | 29 (55) | 55 (45) |
| PICC: n (%) | 24 (14) | 10 (19) | 14 (12) |
| Invasive Mechanical Ventilation | | | |
| Yes: n (%) | 161 (93) | 50 (94) | 111 (92) |
| New Tracheostomy | | | |
| Yes: n (%) | 5 (3) | 1 (2) | 4 (3) |
| New Gastrostomy | | | |
| Yes: n (%) | 13 (7) | 5 (9) | 8 (7) |

ED GCS = Emergency Department Glasgow Coma Scale; ICP = Intracranial Pressure; EVD = External Ventricular Drain; EDH = Epidural hematoma; SDH = Subdural hematoma; CV = central venous; PICC = peripherally inserted central catheter.

Neurosurgical Interventions

Overall, 49/174 (28%) patients received ICP monitoring (Table 1), including 40/119 (34%) of those with severe TBI. Of those who received ICP monitoring, the mean number of monitors was 1.3 ± 0.5. The maximum number of separate ICP monitors in one patient was 3. Among survivors, the first ICP monitor was in place a median (interquartile range) of 4 (2, 6) days.

We identified ICP monitors with excellent accuracy (98%) using only the ICD-9-CM procedure codes available in standard administrative databases (Table 2a). A Boolean classifier using 3 procedure codes in administrative data had 100% accuracy. We identified ICP monitors less well (accuracy 92%) using the ICD-9-CM procedure codes from the NTDB and penalized regression. A Boolean classifier using 6 codes had perfect accuracy using NTDB data (Table 2a). All ICP monitor classifiers performed well by AUC and Brier’s score.

Table 2a.

ICP Classification Models

| | Predictors | Accuracy (%) | Sensitivity (%) | Specificity (%) | AUC (%) | Brier |
|---|---|---|---|---|---|---|
| Administrative | | | | | | |
| PR Model | phis0110 (3.9), phis0131, phis0221 (2.9) | 98.1 (90.1, 99.7) | 95.7 (86.4, 98.7) | 100.0 (93.2, 100.0) | 98.4 (95.2, 100.0) | 0.051 |
| Boolean | | 100.0 (93.2, 100.0) | 100.0 (93.2, 100.0) | 100.0 (93.2, 100.0) | 100.0 (100.0, 100.0) | 0.000 |
| NTDB | | | | | | |
| PR Model | ntdb0110 (5.1), ntdb0131, ntdb0239, ntdb0126, ntdb0128, ntdb0221 (4.0) | 92.5 (82.1, 97.0) | 82.6 (70.3, 90.5) | 100.0 (93.2, 100.0) | 94.1 (88.6, 99.6) | 0.077 |
| Boolean | | 100.0 (93.2, 100.0) | 100.0 (93.2, 100.0) | 100.0 (93.2, 100.0) | 100.0 (100.0, 100.0) | 0.000 |
| PHIS | | | | | | |
| PR Model | phis0110 (3.9), phis0131, phis0221 (3.9), icpsupplyyn (2.5) | 98.1 (90.1, 99.7) | 95.7 (86.4, 98.7) | 100.0 (93.2, 100.0) | 98.4 (95.2, 100.0) | 0.026 |
| Boolean | | 98.1 (90.1, 99.7) | 100.0 (93.2, 100.0) | 96.7 (87.9, 99.1) | 97.9 (93.8, 100.0) | 0.019 |
| NTDB + PHIS | | | | | | |
| PR Model | ntdb0110 (4.8), ntdb0131, ntdb0239, ntdb0126, ntdb0128, ntdb0221 (1.9), phis0110, phis0131, phis0221 (2.1), icpsupplyyn (0.5) | 92.5 (82.1, 97.0) | 82.6 (70.3, 90.5) | 100.0 (93.2, 100.0) | 94.1 (88.6, 99.6) | 0.038 |
| Boolean | | 98.1 (90.1, 99.7) | 100.0 (93.2, 100.0) | 96.7 (87.9, 99.1) | 97.9 (93.8, 100.0) | 0.019 |

“PR model” = best penalized regression model selected by cross-validation. Predictors in bold were included in the final model with coefficients shown on the logit scale. “Boolean” = Boolean classifier including all of the candidate predictors from the corresponding penalized regression model in the row above, i.e. any of X or Y or Z = yes. Candidate predictor abbreviations correspond to data source and specific code, e.g. “phis0110” = ICD-9-CM procedure code 01.10 from PHIS (standard administrative ICD-9-CM codes). “icpsupplyyn” = Clinical Transaction Classification supply billing code for ICP monitoring supplies, yes/no. “norfromed” = NTDB variable for transfer directly to Operating Room from Emergency Department. ICP = intracranial pressure; AUC = area under the curve; NTDB = National Trauma Data Bank; PHIS = Pediatric Health Information Systems database; Estimates are shown with 95% confidence intervals except the Brier’s score.
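The 95% confidence intervals in these tables are numerically consistent with a Wilson score interval computed on the 53 test-site patients. The text does not name the interval method, so this is an inference rather than a statement of the authors' procedure. A minimal Python sketch, with a hypothetical function name:

```python
import math

def wilson_interval(successes, n, z=1.959964):
    """Wilson score interval for a binomial proportion (z = 1.96 gives roughly 95%)."""
    p = successes / n
    denom = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    # Clamp to [0, 1] to guard against floating-point overshoot at the boundaries.
    return max(0.0, center - half_width), min(1.0, center + half_width)

# 52 of 53 test patients correctly classified (accuracy 98.1%).
low, high = wilson_interval(52, 53)
print(round(low * 100, 1), round(high * 100, 1))  # 90.1 99.7

# 53 of 53 correctly classified (accuracy 100%).
low, high = wilson_interval(53, 53)
print(round(low * 100, 1), round(high * 100, 1))  # 93.2 100.0
```

Unlike the simple normal-approximation interval, the Wilson interval has nonzero width when the observed proportion is 100%, which is why perfect classifiers in the tables still carry a lower bound of 93.2%.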

More than one quarter of the patients, 49/174 (28%), received a craniotomy or craniectomy (Table 1). Nearly half, 21/49 (43%), of those who received a craniotomy or craniectomy also received an ICP monitor. As expected, craniotomies were often for hematoma evacuation (Table 1). Of the 10 patients who received a strictly decompressive craniectomy, 4 received it on the day of admission, 4 on the day following admission, and one each on day 4 and day 5. Of those who had a craniotomy or craniectomy, 24/49 (49%) went directly to the operating room (OR) from the Emergency Department (ED).

Because ICD-9-CM procedure codes do not specifically identify each type of craniotomy or craniectomy, we developed a classifier to identify any craniotomy or craniectomy. Overall, craniotomy or craniectomy in children with TBI can be identified well, although multiple codes are required (Table 2b). Standard administrative ICD-9-CM procedure codes and a regression model are sufficient to identify craniotomy or craniectomy with 94% accuracy. Classifiers using administrative data were superior to classifiers using NTDB data, which were 89% accurate.

Table 2b.

Craniotomy or Craniectomy Classification Models

| | Predictors | Accuracy (%) | Sensitivity (%) | Specificity (%) | AUC (%) | Brier |
|---|---|---|---|---|---|---|
| Administrative | | | | | | |
| PR Model | phis0124 (2.8), phis0125 (2.2), phis0131 (2.8), phis0159, phis0211, phis0212 | 94.3 (84.6, 98.1) | 81.8 (69.4, 89.9) | 97.6 (89.3, 99.5) | 92.7 (82.4, 100.0) | 0.079 |
| Boolean | | 94.3 (84.6, 98.1) | 81.8 (69.4, 89.9) | 97.6 (89.3, 99.5) | 92.7 (82.4, 100.0) | 0.057 |
| NTDB | | | | | | |
| PR Model | ntdb0124 (2.9), ntdb0125 (2.8), ntdb0131 (2.3), ntdb0211, ntdb0159, ntdb0212, norfromed (0.8) | 88.7 (77.4, 94.7) | 72.7 (59.5, 82.9) | 92.9 (82.7, 97.3) | 82.8 (68.4, 97.1) | 0.093 |
| Boolean | | 88.7 (77.4, 94.7) | 81.8 (69.4, 89.9) | 90.5 (79.6, 95.8) | 82.1 (68.6, 95.6) | 0.113 |
| PHIS | | | | | | |
| PR Model | phis0124 (2.6), phis0125 (2.0), phis0131 (2.6), phis0159, phis0211, phis0212 | 94.3 (84.6, 98.1) | 81.8 (69.4, 89.9) | 97.6 (89.3, 99.5) | 92.7 (82.4, 100.0) | 0.083 |
| Boolean | | 94.3 (84.6, 98.1) | 81.8 (69.4, 89.9) | 97.6 (89.3, 99.5) | 92.7 (82.4, 100.0) | 0.057 |
| NTDB + PHIS | | | | | | |
| PR Model | ntdb0124 (1.6), ntdb0125 (2.5), ntdb0131 (0.5), ntdb0211, ntdb0159, ntdb0212, phis0124 (1.6), phis0125 (0.2), phis0131 (2.4), phis0159, phis0211, phis0212, norfromed (0.7) | 92.5 (82.1, 97.0) | 72.7 (59.5, 82.9) | 97.6 (89.3, 99.5) | 91.0 (79.5, 100.0) | 0.079 |
| Boolean | | 88.7 (77.4, 94.7) | 81.8 (69.4, 89.9) | 90.5 (79.6, 95.8) | 82.1 (68.6, 95.6) | 0.113 |

see legend with Table 2a

Vascular Catheters

Fewer than half of the patients, 82/174 (47%), received central venous access in the form of a CVC or PICC (Table 1). Among those with severe TBI, just over half, 67/119 (56%) received central venous access. Just over one-third of all patients, 62/174 (36%), and fewer than one-half of those with severe TBI, 52/119 (44%), had both central venous access and arterial access.

Models to identify vascular catheters were less accurate than models to identify neurosurgical procedures (Tables 3a–c). For CVCs, the best penalized regression model using administrative data was 79% accurate (Table 3a). Sensitivity was poor, 59%, but specificity was excellent, 100%. That model contained only one code, ICD-9-CM 38.93. Our variable selection method identified only one NTDB ICD-9-CM procedure code (89.62, a different code than in standard administrative data) to predict CVCs. None of the CVC classifiers achieved 90% accuracy.

Table 3a.

CVL Classification Models

| | Predictors | Accuracy (%) | Sensitivity (%) | Specificity (%) | AUC (%) | Brier |
|---|---|---|---|---|---|---|
| Administrative | | | | | | |
| PR Model | phis3893 (1.1), phis3897 | 79.2 (66.5, 88.0) | 59.3 (45.8, 71.4) | 100.0 (93.2, 100.0) | 85.1 (77.7, 92.6) | 0.208 |
| Boolean | | 88.7 (77.4, 94.7) | 92.6 (82.3, 97.1) | 84.6 (72.6, 91.9) | 88.9 (80.4, 97.5) | 0.113 |
| NTDB | | | | | | |
| PR Model | ntdb3893, ntdb3897, ntdb8962 (1.2) | 86.8 (75.2, 93.5) | 74.1 (60.9, 84.0) | 100.0 (93.2, 100.0) | 89.4 (82.3, 96.5) | 0.180 |
| Boolean | | 86.8 (75.2, 93.5) | 88.9 (77.7, 94.8) | 84.6 (72.6, 91.9) | 86.9 (77.6, 96.1) | 0.132 |
| PHIS | | | | | | |
| PR Model | phis3893 (1.2), phis3897, cvlclin (0.9), cvlsupplyyn | 79.2 (66.5, 88.0) | 59.3 (45.8, 71.4) | 100.0 (93.2, 100.0) | 85.1 (77.7, 92.6) | 0.217 |
| Boolean | | 81.1 (68.6, 89.4) | 100.0 (93.2, 100.0) | 61.5 (48.1, 73.4) | 86.5 (79.2, 93.7) | 0.189 |
| NTDB + PHIS | | | | | | |
| PR Model | ntdb3893, ntdb3897, ntdb8962 (1.0), phis3893, phis3897, cvlclin, cvlsupplyyn | 86.8 (75.2, 93.5) | 74.1 (60.9, 84.0) | 100.0 (93.2, 100.0) | 89.4 (82.3, 96.5) | 0.193 |
| Boolean | | 81.1 (68.6, 89.4) | 100.0 (93.2, 100.0) | 61.5 (48.1, 73.4) | 86.5 (79.2, 93.7) | 0.189 |

“PR model” = best penalized regression model selected by cross-validation. Predictors in bold were included in the final model with coefficients shown on the logit scale. “Boolean” = Boolean classifier including all of the candidate predictors from the corresponding penalized regression model in the row above, i.e. any of X or Y or Z = yes. Candidate predictor abbreviations correspond to data source and specific code, e.g. “phis3893” = ICD-9-CM procedure code 38.93 from PHIS (standard administrative ICD-9-CM codes). CVL = central venous line. PICC = peripherally inserted central catheter. “cvlclin” = Clinical Transaction Classification (CTC) clinical billing code for central venous line; “cvlsupplyyn” = CTC supply billing code for CVL supplies, yes/no. “artinsclin” = CTC clinical billing code for arterial catheter insertion. “piccclin” = CTC clinical billing code for PICC. AUC = area under the curve; NTDB = National Trauma Data Bank; PHIS = Pediatric Health Information Systems database; Estimates are shown with 95% confidence intervals except the Brier’s score.

Table 3c.

PICC Classification Models

| | Predictors | Accuracy (%) | Sensitivity (%) | Specificity (%) | AUC (%) | Brier |
|---|---|---|---|---|---|---|
| PHIS | | | | | | |
| PR Model | phis3893, phis3897, piccclin (3.2) | 98.1 (90.1, 99.7) | 100.0 (93.2, 100.0) | 97.7 (89.4, 99.5) | 95.5 (86.5, 100.0) | 0.043 |
| Boolean | | 64.2 (50.7, 75.7) | 100.0 (93.2, 100.0) | 55.8 (42.5, 68.3) | 67.2 (58.4, 76.0) | 0.358 |
| NTDB + PHIS | | | | | | |
| PR Model | ntdb3893, ntdb3897, ntdb8962, phis3893, phis3897, piccclin (3.0) | 98.1 (90.1, 99.7) | 100.0 (93.2, 100.0) | 97.7 (89.4, 99.5) | 95.5 (86.5, 100.0) | 0.048 |
| Boolean | | 62.3 (48.8, 74.1) | 100.0 (93.2, 100.0) | 53.5 (40.3, 66.2) | 66.7 (58.1, 75.2) | 0.377 |

see legend with Table 3a

Like CVCs, arterial catheters are challenging to detect using ICD-9-CM procedure codes (Table 3b). Because the NTDB contained only one candidate code, no penalized regression model was fit for that data type. Boolean classifiers performed well overall; regression models had no advantage. The best classifier was a Boolean classifier using all of the available codes, including a CTC billing code found only in PHIS.

Table 3b.

Arterial Catheter Classification Models

| | Predictors | Accuracy (%) | Sensitivity (%) | Specificity (%) | AUC (%) | Brier |
|---|---|---|---|---|---|---|
| Administrative | | | | | | |
| PR Model | phis3891 (0.7), phis8961 | 79.2 (66.5, 88.0) | 65.5 (52.1, 76.9) | 95.8 (86.7, 98.8) | 82.3 (73.0, 91.7) | 0.256 |
| Boolean | | 84.9 (72.9, 92.1) | 75.9 (62.9, 85.4) | 95.8 (86.7, 98.8) | 86.2 (77.4, 95.0) | 0.151 |
| PHIS | | | | | | |
| PR Model | phis3891 (0.7), phis8961, artinsclin | 79.2 (66.5, 88.0) | 65.5 (52.1, 76.9) | 95.8 (86.7, 98.8) | 82.3 (73.0, 91.7) | 0.256 |
| Boolean | | 84.9 (72.9, 92.1) | 75.9 (62.9, 85.4) | 95.8 (86.7, 98.8) | 86.2 (77.4, 95.0) | 0.151 |
| NTDB + PHIS | | | | | | |
| PR Model | ntdb8961 (0.6), phis3891, phis8961, artinsclin | 92.5 (82.1, 97.0) | 89.7 (78.6, 95.3) | 95.8 (86.7, 98.8) | 92.4 (85.1, 99.6) | 0.200 |
| Boolean | | 96.2 (87.2, 99.0) | 96.6 (87.7, 99.1) | 95.8 (86.7, 98.8) | 96.2 (90.9, 100.0) | 0.038 |

see legend with Table 3a

No specific procedure code for PICC placement exists in ICD-9-CM. Of those patients who had a PICC placed, 14/24 (58%) also had a CVC. For that reason, the two ICD-9-CM procedure codes used for CVCs were considered for the PICC classification models (Table 3c). Overall, we found that no ICD-9-CM-based method identifies PICCs reliably. The administrative and NTDB-only models contained too little predictive ability for model performance measures to be calculated. However, a penalized regression model containing a single “clinical” CTC (billing) code from PHIS identifies PICCs with 98% accuracy. A separate Boolean classifier based on the single CTC billing code also had 98% accuracy in the test dataset.

TBI Diagnosis

Overall, 167/174 (96%) of the prospectively enrolled patients had, in their PHIS record, one of the ICD-9-CM diagnosis codes used by the Centers for Disease Control and Prevention (CDC) to track TBI hospitalizations and ED visits, and 171/174 (98%) had one in their NTDB record.(1) Because the group of CDC codes (in effect, a Boolean classifier) performed well overall, we did not build a penalized regression model for TBI diagnosis. Of the 7 patients without a CDC TBI diagnosis code in the PHIS record, 6 prospectively received a diagnosis of child abuse. Similarly, all 3 patients without a CDC TBI diagnosis code in their NTDB record were injured by child abuse. Among children injured by child abuse, the CDC codes were present less often in both the PHIS database, 33/39 (85%), and the NTDB, 36/39 (92%). Using the PHIS ICD-9-CM diagnosis code 995.54 (child physical abuse) in addition to the CDC list of diagnosis codes would capture 4 of the 7 missed patients.

Mechanical Ventilation

Because nearly all of the patients, 161/174 (93%), were invasively mechanically ventilated, we did not fit a penalized regression model. A Boolean classifier using administrative data and ICD-9-CM procedure codes for ventilation (96.70, 96.71, or 96.72) validated in adults(32) had only 80% within-sample accuracy in our study. Specificity was excellent, 100%, but sensitivity was a concern (78%). That classifier gave nearly three times as many false negatives (35) as true negatives (13). A similar classifier using NTDB data was only 49% accurate.

PHIS contains a “ventilation flag” that is a Boolean classifier including ICD-9-CM procedure codes 96.70 – 96.72 and two CTC billing codes for ventilation care by respiratory therapists. That flag had 93% accuracy, 93% sensitivity, and 92% specificity. A Boolean classifier using both the PHIS flag and any of the ICD-9-CM procedure codes from NTDB data had the best accuracy, 94%, but had nearly as many false negatives (10) as true negatives (12). All 10 of the false negatives from that classifier were intubated and extubated on the same calendar day and survived to discharge.
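The reported accuracy, sensitivity, and specificity for the ventilation classifiers follow from the counts given above. In the Python sketch below, the false-negative and true-negative counts come from the text; the true-positive and false-positive counts are derived from them and from the 161 ventilated and 13 non-ventilated patients, so they are inferred rather than reported. The function name is hypothetical.

```python
def classification_metrics(tp, fn, tn, fp):
    """Accuracy, sensitivity, and specificity from confusion-matrix counts."""
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }

VENTILATED, NOT_VENTILATED = 161, 13  # cohort totals from Table 1 (161 + 13 = 174)

# Administrative ICD-9-CM classifier: 35 false negatives, 13 true negatives.
admin = classification_metrics(tp=VENTILATED - 35, fn=35, tn=13, fp=NOT_VENTILATED - 13)

# PHIS flag combined with NTDB procedure codes: 10 false negatives, 12 true negatives.
combined = classification_metrics(tp=VENTILATED - 10, fn=10, tn=12, fp=NOT_VENTILATED - 12)

for name, result in (("administrative", admin), ("PHIS flag + NTDB", combined)):
    print(name, {key: round(value * 100) for key, value in result.items()})
```

With 93% of the cohort ventilated, accuracy is driven almost entirely by sensitivity, which is why a classifier with perfect specificity can still reach only about 80% accuracy.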

New Technology Dependence

Fewer than 10% of all patients, 13/174 (7%), received a new gastrostomy tube (GT) during the hospitalization (Table 1). Only 5/174 (3%) received a new tracheostomy, all of whom also received a new GT(12).

Because of the small number of events, we did not fit penalized regression models for GTs and tracheostomies. A Boolean classifier for GTs using ICD-9-CM procedure codes 43.11 or 43.19 from either PHIS or the NTDB would capture 12/13 (92%) of the patients who received a GT. The within-sample accuracy was 98%, with sensitivity 92% and specificity 98%. A Boolean classifier for tracheostomies using ICD-9-CM procedure codes 31.1× or 31.29 from the NTDB would capture 4/4 (100%) of the patients who received a tracheostomy.

Penalized Regression Models

The best performing model selected by cross-validation for each clinical event and data type had alpha = 0.9. This represents elastic net regression with the alpha tuning parameter close to that of the LASSO, which should maximize the variable selection properties of the elastic net.

Discussion

In this study, we developed and validated classifiers to identify ICP monitoring, cranial surgery, vascular access procedures, and other important clinical events in critically injured children with TBI. Simple Boolean classifiers were ≥ 94% accurate for 7/9 clinical diagnoses and events. For CVC placement, no classifier achieved 90% accuracy.

Accurate classification was dependent on available data fields. Only 4 (ICP monitoring, craniotomy or craniectomy, GT placement, and the TBI diagnosis) of the 9 variables we analyzed could be classified with ≥ 94% accuracy using standard administrative ICD-9-CM codes. For a fifth clinical event, CVC placement, administrative codes were as accurate as other data types, but the best available accuracy was only 89%. Three clinical events (arterial catheter placement, mechanical ventilation, and tracheostomy) required trauma registry codes for accurate classification. Two clinical events (PICC placement and mechanical ventilation) required transactional billing (CTC) codes from the PHIS database for accurate classification. Other investigators have also reported that for some clinical phenotypes and events, variables in addition to ICD-based codes are necessary.(9)

The classifiers we have developed and validated will be useful for other studies of critically injured children that utilize existing administrative, billing, or registry data. ICP monitors can be identified very accurately using either a penalized regression or a Boolean approach. Our Boolean classifier using administrative data performed better than a similar classifier did (91% sensitivity) in a single-center study of adults with severe TBI.(33) For craniotomy/craniectomy, the best classifiers performed well overall (94% accuracy and 98% specificity), and Boolean classifiers using NTDB data had sensitivity (82%) similar to that reported in a study of adults with severe TBI (85%).(33) Investigators who choose to use the craniotomy/craniectomy classifiers in the future will need to perform sensitivity analyses to determine the effect of the classifiers’ sensitivity on their results.
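One simple form such a sensitivity analysis could take is to correct a classifier-based prevalence for misclassification over a range of plausible sensitivities. The sketch below uses the Rogan-Gladen estimator, a standard correction that was not part of this study. The apparent prevalence of 0.20 and the grid of sensitivities are hypothetical; only the 82% sensitivity and 98% specificity echo values reported above.

```python
def corrected_prevalence(apparent, sensitivity, specificity):
    """Rogan-Gladen estimate of true prevalence from a classifier-based prevalence."""
    denom = sensitivity + specificity - 1.0
    if denom <= 0:
        raise ValueError("classifier must perform better than chance (Se + Sp > 1)")
    estimate = (apparent + specificity - 1.0) / denom
    # Keep the estimate within the valid range for a proportion.
    return min(1.0, max(0.0, estimate))


# Hypothetical apparent prevalence of craniotomy/craniectomy in some other dataset.
apparent = 0.20
specificity = 0.98

# Vary sensitivity around the reported 82% to see how much the estimate moves.
for sensitivity in (0.75, 0.82, 0.90):
    estimate = corrected_prevalence(apparent, sensitivity, specificity)
    print(f"Se={sensitivity:.2f}  corrected prevalence={estimate:.3f}")
```

If the corrected estimates, or the downstream results that depend on them, change materially across the grid, the conclusions are sensitive to classifier error and should be reported with that caveat.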

Identifying vascular access procedures using database codes is more problematic. The ICD-9-CM procedure codes available in standard administrative data have excellent specificity but poor sensitivity (approximately 60%) for CVCs. This is consistent with previous work that found poor sensitivity for CVCs and central line-associated bloodstream infections using ICD-9-CM and CPT codes.(34, 35) Careful sensitivity analyses are indicated if CVCs are to be identified using only administrative data fields. The best available classifiers in our study used registry (NTDB) data. PICCs could only be identified with excellent accuracy using a billing code present in the PHIS database.

The diagnosis codes used by the CDC to track ED visits and hospitalizations for acute TBI performed well overall (>95% accuracy). Our findings are similar to those of another study, which found that 89% of adults with severe TBI also had one of the CDC diagnosis codes.(33) However, the CDC algorithm is less accurate (85%) in children with TBI from child abuse. Adding ICD-9-CM 995.54 to the CDC algorithm might improve detection of abused children with TBI.

A Boolean classifier using ICD-9-CM procedure codes 96.70–96.72 for mechanical ventilation had excellent specificity (100%) in administrative data but lower sensitivity (78%). These results are similar to those of a study in adults.(32) Like that study, our evaluation of that classifier used only within-sample validation and may be optimistic. We also found that billing codes for respiratory therapy care present in PHIS dramatically improve the performance of classifiers for mechanical ventilation. The best available classifier for mechanical ventilation uses administrative, billing, and registry data in combination.

Children with TBI who survive their injuries but have persistent deficits may receive new GTs and tracheostomies. Simple Boolean classifiers identify these procedures accurately. Our results are consistent with a small study of adults receiving tracheostomies after stroke, which found that a Boolean classifier using ICD-9-CM procedure codes 31.1× and 31.2× performed well.(36)

Strengths of this study include the use of prospectively collected data as a gold standard. We also used best-practice methods for the development and validation of classification models including shrinkage of regression coefficients, internal validation using cross-validation (bootstrapping is another recommended option), and geographic external validation.(19) Without a very large sample size, geographic (different site) or temporal (different time) validation is now considered a stronger design than the “split-sample” approach because differences between the training and testing datasets are not strictly random.(10, 19, 20) Although not found to be superior to Boolean classifiers for most variables in this study, penalized regression effectively selected the key variables for each model and shrunk coefficients to avoid overfitting. Stepwise regression has been widely used in epidemiologic studies, but is not guaranteed to select the best set of predictors(37), does not always select a consistent set of predictors, and performs poorly when candidate predictors are highly correlated(38).

This study has several limitations. First, we conducted the prospective study at only two centers. Trauma registry coding at NTDB centers is guided by the National Trauma Data Standard(21), but significant variability between centers has been reported.(39) Second, the sample size is relatively small (174 children overall with 53 at the validation site). We used gold-standard methods to assess the accuracy of the classifiers we developed and reported confidence intervals with each point estimate. Several of the classifiers are robust despite the small sample size. Third, the classifiers we developed are largely based on ICD-9-CM diagnosis and procedure codes. U.S. hospitals began using ICD-10 in October 2015. However, an enormous amount of existing data is coded using ICD-9-CM. The classifiers in this manuscript will be useful to investigators analyzing those data for the next several years. In addition, the prospective study that generated these data remains open. We will develop and validate novel ICD-10-based classifiers as soon as we have enrolled a sufficient sample size. Fourth, some clinical events are rare enough (e.g., tracheostomy) or common enough (e.g., mechanical ventilation) in critically injured children with TBI that only Boolean classifiers could be evaluated. Fifth, we did not assess model calibration. We evaluated the use of Cox calibration regression(40) as recommended(19, 41), but found that it performed erratically for models with binary outcomes and few predictors.

In conclusion, computable phenotypes based on simple Boolean classifiers were highly accurate for most neurosurgical and critical care diagnoses and events. The classifiers for interventions such as mechanical ventilation and vascular catheters can be applied in other studies. However, accurate classification of those interventions will require careful assessment of the data source, as billing codes or trauma registry variables are needed for the most accurate classifiers for some events. A workflow consistent with prediction model development was successful in generating accurate, reproducible, parsimonious, and generalizable classifiers.

Acknowledgments

Funding: This work was supported by the Eunice Kennedy Shriver National Institute for Child Health and Human Development (Grant K23HD074620 to TB) and the National Center for Research Resources (Colorado Clinical and Translational Sciences Institute Grant UL1 TR001082).

We are indebted to Kristine Hansen, Ryan Metzger, and Jacob Wilkes at Primary Children’s Hospital, to Michelle Loop and Elizabeth Vann at Children’s Hospital Colorado, and to Melissa Ringwood at the University of Utah Data Coordinating Center.

Footnotes

Copyright form disclosures:

Drs. Bennett, Kempe, and Dean received support for article research from the National Institutes of Health (NIH). Dr. Bennett’s institution received funding from NICHD and NCRR. Dr. Srivastava disclosed that he is the Past Chair of the Pediatric Hospitalist Research (PRIS) Network (www.prisnetwork.org) that conducts a variety of research studies funded by the NIH, AHRQ and the Children’s Hospital Association. Dr. Keenan’s institution received funding from NIH/NICHD and from the CDC. The remaining authors have disclosed that they do not have any potential conflicts of interest.

References

- 1. Faul M, Likang X, Wald M, et al. Traumatic brain injury in the United States: Emergency department visits, hospitalizations, and deaths 2002–2006. Atlanta (GA): National Center for Injury Prevention and Control, Centers for Disease Control and Prevention, U.S. Department of Health and Human Services; 2010.

- 2. Kochanek PM, Carney N, Adelson PD, et al. Guidelines for the acute medical management of severe traumatic brain injury in infants, children, and adolescents–second edition. Pediatr Crit Care Med. 2012;13(Suppl 1):S1–82. doi: 10.1097/PCC.0b013e31823f435c.

- 3. Dreyer NA. Making observational studies count: Shaping the future of comparative effectiveness research. Epidemiology. 2011;22:295–7. doi: 10.1097/EDE.0b013e3182126569.

- 4. Cooke CR, Iwashyna TJ. Using existing data to address important clinical questions in critical care. Crit Care Med. 2013;41:886–96. doi: 10.1097/CCM.0b013e31827bfc3c.

- 5. Bennett TD, Spaeder MC, Matos RI, et al. Existing data analysis in pediatric critical care research. Front Pediatr. 2014;2:79. doi: 10.3389/fped.2014.00079.

- 6. O’Malley KJ, Cook KF, Price MD, et al. Measuring diagnoses: ICD code accuracy. Health Serv Res. 2005;40:1620–1639. doi: 10.1111/j.1475-6773.2005.00444.x.

- 7. Roberts RJ, Stockwell DC, Slonim AD. Discrepancies in administrative databases: Implications for practice and research. Crit Care Med. 2007;35:949–950. doi: 10.1097/01.ccm.0000257461.17112.89.

- 8. Weiss NS. The new world of data linkages in clinical epidemiology: Are we being brave or foolhardy? Epidemiology. 2011;22:292–4. doi: 10.1097/EDE.0b013e318210aca5.

- 9. Shivade C, Raghavan P, Fosler-Lussier E, et al. A review of approaches to identifying patient phenotype cohorts using electronic health records. J Am Med Inform Assoc. 2014;21:221–230. doi: 10.1136/amiajnl-2013-001935.

- 10. Kirby JC, Speltz P, Rasmussen LV, et al. PheKB: A catalog and workflow for creating electronic phenotype algorithms for transportability. J Am Med Inform Assoc. 2016. doi: 10.1093/jamia/ocv202.

- 11. Kamkar I, Gupta SK, Phung D, et al. Stable feature selection for clinical prediction: exploiting ICD tree structure using Tree-Lasso. J Biomed Inform. 2015;53:277–290. doi: 10.1016/j.jbi.2014.11.013.

- 12. Bennett TD, Dixon RR, Kartchner C, et al. Functional status scale in children with traumatic brain injury: A prospective cohort study. Pediatr Crit Care Med. 2016. doi: 10.1097/PCC.0000000000000934.

- 13. Bennett TD, Dean JM, Keenan HT, et al. Linked records of children with traumatic brain injury. Probabilistic linkage without use of protected health information. Methods Inf Med. 2015:54. doi: 10.3414/ME14-01-0093.

- 14. Slonim AD, Khandelwal S, He J, et al. Characteristics associated with pediatric inpatient death. Pediatrics. 2010;125:1208–16. doi: 10.1542/peds.2009-1451.

- 15. Conway PH, Keren R. Factors associated with variability in outcomes for children hospitalized with urinary tract infection. J Pediatr. 2009;154:789–96. doi: 10.1016/j.jpeds.2009.01.010.

- 16. American College of Surgeons Committee on Trauma. National Trauma Data Bank Research Data Set User Manual, Admission Year 2009. Chicago, IL:

- 17. Tibshirani R. Regression shrinkage and selection via the lasso. J R Statist Soc B. 1996;58:267–88.

- 18. Hastie T, Tibshirani R, Friedman J. The elements of statistical learning. 2. Springer; 2008.

- 19. Moons KGM, Altman DG, Reitsma JB, et al. Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis (TRIPOD): explanation and elaboration. Ann Intern Med. 2015;162:W1–73. doi: 10.7326/M14-0698.

- 20. Steyerberg EW, Harrell F, Jr, Borsboom GJ, et al. Internal validation of predictive models: Efficiency of some procedures for logistic regression analysis. J Clin Epidemiol. 2001;54:774–781. doi: 10.1016/s0895-4356(01)00341-9.

- 21. The American College of Surgeons Committee on Trauma. National Trauma Data Standard. Accessed at http://www.ntdsdictionary.org/ on April 7, 2016.

- 22. Bratton SL, Newth CJ, Zuppa AF, et al. Critical care for pediatric asthma: Wide care variability and challenges for study. Pediatr Crit Care Med. 2012;13:407–14. doi: 10.1097/PCC.0b013e318238b428.

- 23. Hoerl A, Kennard R. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics. 1970;12:55–67.

- 24. Zou H, Hastie T. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 2005;67:301–320.

- 25. Agarwal V, Podchiyska T, Banda JM, et al. Learning statistical models of phenotypes using noisy labeled training data. J Am Med Inform Assoc. 2016. doi: 10.1093/jamia/ocw028.

- 26. Brier G. Verification of forecasts expressed in terms of probability. Mon Weather Rev. 1950;78:1–3.

- 27. R Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing.

- 28. Friedman J, Hastie T, Simon N, et al. Glmnet: Lasso and elastic-net regularized generalized linear models. R package version 2.0-5. 2016. Accessed at http://CRAN.R-project.org/package=glmnet on January 20, 2016.

- 29. Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. J Stat Softw. 2010;33:1–22.

- 30. Allaire J, Cheng J, Xie Y, et al. rmarkdown: Dynamic Documents for R. R package version 0.9.5. 2016. Accessed at http://CRAN.R-project.org/package=rmarkdown on August 10, 2015.

- 31. Xie Y. knitr: A General-Purpose Package for Dynamic Report Generation in R. R package version 1.12.3. 2016. Accessed at https://cran.r-project.org/web/packages/knitr/index.html on August 10, 2015.

- 32. De Coster C, Li B, Quan H. Comparison and validity of procedures coded with ICD-9-CM and ICD-10-CA/CCI. Med Care. 2008;46:627–634. doi: 10.1097/MLR.0b013e3181649439.

- 33. Carroll CP, Cochran JA, Guse CE, et al. Are we underestimating the burden of traumatic brain injury? Surveillance of severe traumatic brain injury using Centers for Disease Control International Classification of Disease, Ninth Revision, Clinical Modification, traumatic brain injury codes. Neurosurgery. 2012;71:1064–70; discussion 1070. doi: 10.1227/NEU.0b013e31826f7c16.

- 34. Wright SB, Huskins WC, Dokholyan RS, et al. Administrative databases provide inaccurate data for surveillance of long-term central venous catheter-associated infections. Infect Control Hosp Epidemiol. 2003;24:946–949. doi: 10.1086/502164.

- 35. Patrick SW, Davis MM, Sedman AB, et al. Accuracy of hospital administrative data in reporting central line-associated bloodstream infections in newborns. Pediatrics. 2013;131(Suppl 1):S75–S80. doi: 10.1542/peds.2012-1427i.

- 36. Lahiri S, Mayer SA, Fink ME, et al. Mechanical ventilation for acute stroke: A multi-state population-based study. Neurocrit Care. 2015;23:28–32. doi: 10.1007/s12028-014-0082-9.

- 37. Hocking R. A Biometrics invited paper. The analysis and selection of variables in linear regression. Biometrics. 1976;32:1–49.

- 38. Steyerberg EW, Eijkemans MJ, Harrell F, Jr, et al. Prognostic modeling with logistic regression analysis: In search of a sensible strategy in small data sets. Med Decis Making. 2001;21:45–56. doi: 10.1177/0272989X0102100106.

- 39. Arabian SS, Marcus M, Captain K, et al. Variability in interhospital trauma data coding and scoring: A challenge to the accuracy of aggregated trauma registries. J Trauma Acute Care Surg. 2015;79:359–363. doi: 10.1097/TA.0000000000000788.

- 40. Cox D. Two further applications of a model for binary regression. Biometrika. 1958;45:562–565.

- 41. Steyerberg EW, Vickers AJ, Cook NR, et al. Assessing the performance of prediction models: A framework for traditional and novel measures. Epidemiology. 2010;21:128–138. doi: 10.1097/EDE.0b013e3181c30fb2.