Abstract

Purpose

To determine response rates and associated costs of different survey methods among colorectal cancer (CRC) survivors.

Methods

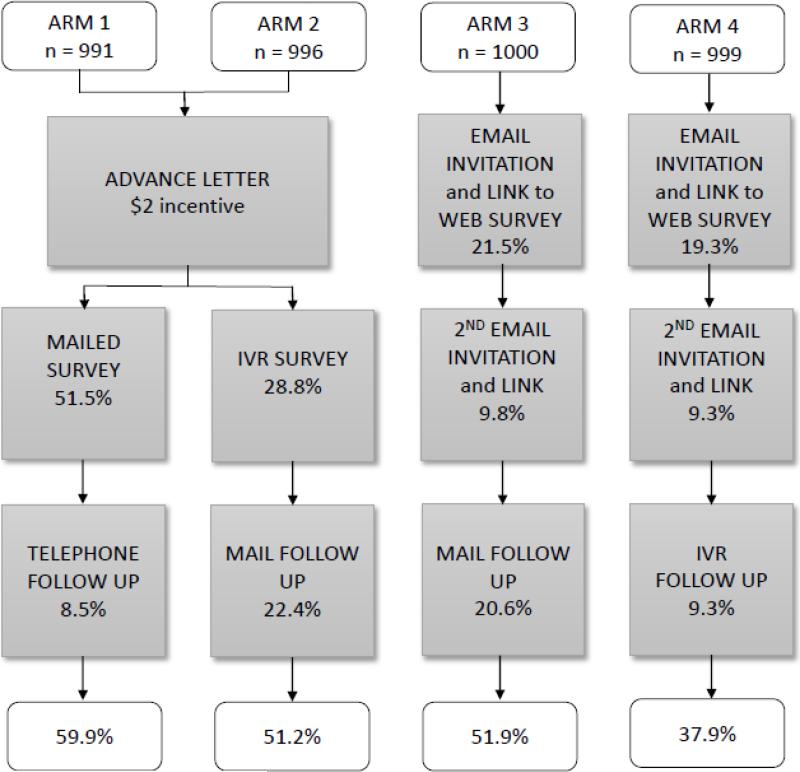

We assembled a cohort of 16,212 individuals diagnosed with CRC (2010-2014) from six health plans, and randomly selected 4,000 survivors to test survey response rates across 4 mixed-mode survey administration protocols (in English and Spanish): arm 1, mailed survey with phone follow-up; arm 2, interactive voice response (IVR) followed by mail; arm 3, email linked to web-based survey with mail follow-up; and arm 4, email linked to web-based survey followed by IVR.

Results

Our overall response rate was 50.2%. Arm 1 had the highest response rate (59.9%), followed by arm 3 (51.9%), arm 2 (51.2%) and arm 4 (37.9%). Response rates were higher among non-Hispanic whites in all arms than other racial/ethnic groups (p<0.001), among English (51.5%) than Spanish speakers (36.4%) (p<0.001), and among higher (53.7%) than lower (41.4%) socioeconomic status (p<0.001). Survey arms were roughly comparable in cost, with a difference of only 8% of total costs between the most (arm 2) and least (arm 3) expensive arms.

Conclusions

Mailed surveys followed by phone calls achieved the highest response rate; email invitations and online surveys cost less per response. Electronic methods, even among those with email availability, may miss important populations including Hispanics, non-English speakers, and those of lower socioeconomic status.

Implications for Cancer Survivors

Our results demonstrate effective methods for capturing patient-reported outcomes, inform the relative benefits/disadvantages of the different methods, and identify future research directions.

Keywords: Colorectal cancer, mixed-mode survey, patient-reported outcomes, survivors

Purpose

Patient-reported outcomes are essential for assessing quality of care among all populations, including cancer survivors, but participation in health-related surveys is declining [1]. High response rate is the most widely used measure of survey quality [2], as non-response bias can threaten the validity of survey estimates [3]. Numerous mechanisms for administering surveys exist. Telephone interviews and mailed surveys are more traditional approaches, but technology-based approaches are increasing as smart phones and internet access become ubiquitous. These methods include web-based surveys, interactive voice response (IVR) surveys, text messaging, and use of “apps” for smart phones. To reach a wider range of respondents and to improve response rates, surveys that combine two or more of these methods, i.e., mixed-mode survey designs, are growing in popularity. It is not clear, however, whether such methods reduce bias in the sample who respond [1], as there is a non-linear relationship between response rate and bias in survey data [4]. There are also minimal data evaluating these methods in many important populations, such as cancer survivors, or among specific demographic groups defined by age, language preference or race/ethnicity.

Mailed (paper) surveys are familiar to most respondents, and allow respondents to complete the survey at their convenience. They can capture more detailed information if open-ended responses are included, or if respondents can refer to sources of information beyond recall. However, this method also carries higher per-person costs for distribution, particularly in large populations. Electronic methods, including web-based, text, or IVR surveys offer a potentially lower-cost alternative to the traditional paper or interview-administered surveys. These methods have higher up-front costs associated with design and database setup, but once developed, a large number of surveys can be administered at very little marginal cost for each additional person surveyed. The data from these surveys are also captured in real time, thus eliminating some of the data entry costs associated with traditional paper-based surveys. These newer methods also have limitations. They rely on the availability of email addresses or phone numbers, they are less familiar to many patient populations, and their acceptability has not been widely tested.

There are approximately 1.2 million colorectal cancer (CRC) survivors living in the US today, and significant numbers of cancer survivors report unmet needs [5, 6]. The current study was motivated by a need to efficiently survey a large, multi-site population of CRC survivors and capture information on their current lifestyle habits and health concerns. Using electronic health data, we constructed a retrospective cohort of 16,212 individuals diagnosed with CRC (2010-2014) in six health plans participating in the Patient Outcomes Research to Advance Learning (PORTAL) network as a model for studying patient-reported risk factors and outcomes among cancer survivors. Within this diverse cohort, we conducted a randomized study comparing response rates across 4 mixed modes of survey administration: arm 1, mailed survey with phone call follow-up; arm 2, interactive voice response (IVR) followed by mailed survey; arm 3, email with link to web-based survey followed by mailed survey; and arm 4, email with link to web-based survey followed by IVR survey. The goal of this study was to determine response rates and associated costs of different survey methods to help determine the optimal approach to gather patient-reported outcomes and other survey data from CRC survivors.

Methods

Study Population and Data Sources

The population for this study was derived from the PORTAL Network. Funded as part of the Patient-Centered Outcomes Research Institute's (PCORI) PCORnet [7], PORTAL includes 10 integrated health care systems with approximately 12 million members [8] (roughly 4% of the U.S. population). The CRC cohort within PORTAL is a retrospective cohort including cases of CRC diagnosed between 1/1/2010 and 12/31/2014 at six PORTAL health care systems: Group Health Research Institute in Seattle, WA (GHRI), HealthPartners in Minneapolis, MN (HPI), and four Kaiser Permanente health plans: Colorado (KPCO), Southern California (KPSC), Northern California (KPNC), and Northwest (KPNW, in Portland, OR).

The primary data source for this study was the Virtual Data Warehouse initially developed by the Cancer Research Network [9] which is part of the Health Care Systems Research Network (www.hcsrn.org). The Virtual Data Warehouse includes standardized variables derived from administrative databases at each participating HCSRN health plan [10-12]. The Virtual Data Warehouse includes tumor registry data consistent with the North American Association of Central Cancer Registries standards [13]. From the tumor registry data, we used the following ICD-O-3 codes to define CRC: C180, C182-C189, C199 and C209.

In addition to the tumor characteristics, we used the Virtual Data Warehouse to gather patient information including vital status, age at diagnosis, race/ethnicity, and language preference (English or Spanish). As an indicator of socioeconomic status, we mapped patients’ addresses to block-level 2010 Census data on median family income and percent of households without completion of high school. Low socioeconomic status was defined as median family income less than $20,000 or 25% or more households without completion of high school. This study was approved by the Institutional Review Board of KPCO; all other participating health plans ceded oversight to KPCO. Informed consent information was provided to each participant at the beginning of the survey.

Survey Design

In order to better characterize the PORTAL CRC cohort we developed a 17-question written survey asking about family history of CRC, perceived general and mental health status, physical activity and sedentary behavior, aspirin and non-steroidal anti-inflammatory drug use, fruit and vegetable consumption, cancer treatment experiences and health concerns. These domains were chosen, in part, because these risk factors and patient-reported outcomes are not well captured in the electronic medical record. The web-based survey and the IVR survey script were designed to match the paper survey as closely as possible to provide comparable information. The survey included the same questions in the same order across all arms.

PORTAL has a patient advisory group that includes CRC survivors and a community-based advocacy organization, Fight Colorectal Cancer (www.fightcolorectalcancer.org). The survey was designed in partnership with these patient advisors using two rounds of input. First, we convened the advisors in person and conducted brainstorming exercises to identify things they wish had happened or that they wish they had known related to their CRC. We also asked them what would motivate their participation in research, and how they wanted data about their CRC care used for research purposes. These brainstorming exercises helped to identify survey domains and response categories for several survey questions. Once the survey, recruitment materials and survey administration plan were drafted, researchers met with these same patient advisors to gather feedback about survey administration and to further refine and finalize survey questions.

Survey Administration

We created a stratified random sample to compare the four arms of survey administration among CRC cohort members. To be eligible for the survey, cohort members had to have been diagnosed with CRC while enrolled in any participating health plan during 2010-2013, be alive and enrolled at the time of the survey, and have a street address listed in their medical record (n=12,956). To keep the survey sample as representative as possible, our randomization scheme allowed for missing email addresses or phone numbers. For example, a member with a missing email address, while not eligible for arms with email contact, was eligible for randomization to arm 1 or arm 2, which do not involve email communications.

We excluded the small number of individuals who indicated that they did not want to be contacted for research purposes (such lists are maintained by each health plan). We sampled 1:1 on time since diagnosis (diagnosed 2010-2011 or 2012-2013) and oversampled those of Hispanic ethnicity at 20%. The full sample of 4000 survey participants (approximately 25% of the total cohort) included 1600 non-Hispanics diagnosed in 2010-2011, 1600 non-Hispanics diagnosed in 2012-2013, 400 Hispanics diagnosed in 2010-2011, and 400 Hispanics diagnosed in 2012-2013. Hispanics were over-sampled to achieve sufficient power to look for differences in response rates among both Hispanics and non-Hispanics.
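The sampling design described above can be illustrated with a minimal Python sketch. It is not the study's actual randomization code: the pool sizes are placeholders rather than study data, the even round-robin allocation to arms within each stratum is an assumption, and the sketch ignores the rule that members without an email address could only be randomized to arms 1 or 2.

```python
import random
from collections import Counter

# Stratum targets taken from the text: ethnicity x diagnosis period.
STRATUM_TARGETS = {
    ("non-Hispanic", "2010-2011"): 1600,
    ("non-Hispanic", "2012-2013"): 1600,
    ("Hispanic", "2010-2011"): 400,
    ("Hispanic", "2012-2013"): 400,
}
ARMS = (1, 2, 3, 4)

# Hypothetical eligible-pool sizes (placeholders, NOT the study's counts).
HYPOTHETICAL_POOL_SIZES = {
    ("non-Hispanic", "2010-2011"): 5000,
    ("non-Hispanic", "2012-2013"): 5000,
    ("Hispanic", "2010-2011"): 1000,
    ("Hispanic", "2012-2013"): 1000,
}


def build_hypothetical_pool(sizes):
    """Create a synthetic pool of eligible members, one dict per member."""
    pool = []
    for (ethnicity, period), n in sizes.items():
        for _ in range(n):
            pool.append({"id": len(pool), "ethnicity": ethnicity, "dx_period": period})
    return pool


def draw_stratified_sample(pool, targets, rng):
    """Sample a fixed number per stratum, then allocate arms evenly within it."""
    sample = []
    for (ethnicity, period), n in targets.items():
        stratum = [m for m in pool
                   if m["ethnicity"] == ethnicity and m["dx_period"] == period]
        chosen = rng.sample(stratum, n)  # random order, without replacement
        for i, member in enumerate(chosen):
            # Round-robin over a randomly ordered list gives equal arm sizes.
            sample.append({**member, "arm": ARMS[i % len(ARMS)]})
    return sample


rng = random.Random(2016)  # arbitrary seed for reproducibility
pool = build_hypothetical_pool(HYPOTHETICAL_POOL_SIZES)
sample = draw_stratified_sample(pool, STRATUM_TARGETS, rng)
arm_counts = Counter(m["arm"] for m in sample)
hispanic_share = sum(m["ethnicity"] == "Hispanic" for m in sample) / len(sample)
print(len(sample), dict(arm_counts), hispanic_share)
```

Under these assumptions each arm receives 1,000 members and Hispanics make up 20% of the sample, matching the design targets stated in the text.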

Because mixed modes of survey administration often achieve higher response rates, we tested multiple mixed-methods approaches across 4 study arms (Figure 1). Each arm included 1000 cohort members. All survey instruments were developed in both English and Spanish, and participants were matched to their preferred language, as recorded in the electronic record. Those who did not indicate a language preference were contacted in English. In arms 1 and 2, advance letters were mailed with a $2 incentive. In contrast, Arms 3 and 4 tested initial contact only by electronic methods, and did not include the $2 incentive due to the infeasibility of inclusion with these contact methods.

Figure 1.

Response rates among PORTAL colorectal cancer cohort members by arm and mode of contact

After the advance letter, arm 1 received a mailed survey (including consent language) with a postage paid return envelope. Non-responders received reminder phone calls after 2 weeks, and had the option to complete the survey over the phone at that time. Up to 6 calls were made on different days and at different times to maximize successful contacts. After the advance letter, arm 2 received the survey as an IVR call. Up to 3 IVR calls/week were made, and like the phone calls in arm 1, calls were placed at different times. When answered, the IVR phone call gave an option to “opt-out” and receive no further contact. If the respondent was willing to continue, the system then provided a prompt to hear the information in English or Spanish, and asked the respondent for consent to proceed with the survey questionnaire. After consent, the IVR system conducted the survey with a recorded (not computer generated) voice. The IVR system also had an incoming call feature, where a message could be left for the respondent and the respondent could call into the system at their convenience. Those in arm 2 who did not respond to the IVR survey (and did not opt-out) were mailed a written survey. In arms 3 and 4, members received up to two email letters of invitation (11 days apart) which included a link to a secure website to complete the survey. Non-responders in arm 3 received a mailed survey, and non-responders in arm 4 received the survey as an IVR call.

All CRC members who completed the survey received a $10 gift card in the mail. Participants were informed of this compensation when they were first contacted and asked to participate in the study. The HealthPartners Institute Survey Research Center performed all tasks associated with the mailed survey, conducted the phone calls in arm 1, and mailed the introductory letters for arms 1 and 2. The Institute for Health Research at KPCO developed and supported the IVR, email and web-based applications, and mailed the gift cards.

Statistical analysis

Characteristics of the cohort members were tabulated by participating health plan. Survey response rates were tabulated by study arm and participant characteristics. The marginal survey response rates were compared for differences between the levels of participant characteristics using the Pearson chi-square test of independence.
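As an illustration of the Pearson chi-square test of independence, the following Python sketch applies it to a two-category characteristic, using the gender counts reported in the Total column of Table 2. The function name is ours, and the sketch handles only the 2x2 case (1 degree of freedom, no continuity correction); it is not the authors' analysis code.

```python
import math


def pearson_chi_square_2x2(resp_a, n_a, resp_b, n_b):
    """Pearson chi-square test for responders vs non-responders in two groups.

    Returns (chi2, p_value) with 1 degree of freedom, no continuity correction.
    """
    observed = [[resp_a, n_a - resp_a], [resp_b, n_b - resp_b]]
    row_totals = [n_a, n_b]
    col_totals = [resp_a + resp_b, (n_a - resp_a) + (n_b - resp_b)]
    total = n_a + n_b

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed[i][j] - expected) ** 2 / expected

    # For 1 degree of freedom, the chi-square survival function
    # equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Gender, Total column of Table 2: 980/1931 women and 1022/2055 men responded.
chi2, p_value = pearson_chi_square_2x2(980, 1931, 1022, 2055)
print(f"chi2 = {chi2:.3f}, p = {p_value:.2f}")
```

The resulting p-value should land close to the 0.52 reported for gender in Table 2. Characteristics with more than two categories need a chi-square distribution with more degrees of freedom, which this sketch does not cover.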

Response rates were calculated as the total number of participants returning a partially or fully completed survey divided by the total number of eligible participants. Based on previous studies [14, 15], we considered arm 1 as the “gold standard”. Response rates for arms 2 through 4 were analyzed for non-inferiority to arm 1 using unadjusted and adjusted pair-wise differences from least square means within general linear models with a term for survey arm, and covariate adjustments for ethnicity (Hispanic, not Hispanic), year of first diagnosis of CRC (2010-2011, 2012-2013), race, gender, and age at first diagnosis of CRC. Only three of the pairwise differences from the least square means were used (arm 2 through arm 4 compared with arm 1), since these were the pre-planned comparisons. We used an alpha of .05 for each two-sided confidence interval around the mean differences in order to yield an experiment-wise alpha for non-inferiority (one-sided) of 3 * .025 = .075, based on the Bonferroni adjustment. We decided that a survey method with a response rate 5 percentage points lower than the gold standard would provide a reasonable alternative over a broad range of response rates. Further, if one of the alternative survey arms 2-4 had a response rate that was better than the gold standard in arm 1, we wanted to be able to detect that as well. We therefore chose a non-inferiority test with an equivalence margin of 5 percentage points.
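The unadjusted comparison can be approximated with a short Python sketch. Note the assumption: it uses a simple Wald confidence interval for a difference in proportions as a stand-in for the least square means from a general linear model described above, so it only mirrors the unadjusted analysis. The function names are ours; the counts come from the Overall row of Table 2.

```python
import math


def response_rate(completed, eligible):
    """Partially or fully completed surveys divided by eligible participants."""
    return completed / eligible


def noninferiority_check(comp_resp, comp_n, ref_resp, ref_n,
                         margin=-0.05, z=1.959964):
    """Wald 95% CI for (comparator - reference) and the non-inferiority verdict.

    The comparator is declared non-inferior only if the lower confidence
    limit lies above the margin (-5 percentage points by default).
    """
    p_comp = response_rate(comp_resp, comp_n)
    p_ref = response_rate(ref_resp, ref_n)
    diff = p_comp - p_ref
    se = math.sqrt(p_comp * (1 - p_comp) / comp_n + p_ref * (1 - p_ref) / ref_n)
    lower, upper = diff - z * se, diff + z * se
    return diff, lower, upper, lower > margin


# Overall counts from Table 2 (responders, eligible); arm 1 is the reference.
ARM_COUNTS = {1: (594, 991), 2: (510, 996), 3: (519, 1000), 4: (379, 999)}

results = {}
for arm in (2, 3, 4):
    results[arm] = noninferiority_check(*ARM_COUNTS[arm], *ARM_COUNTS[1])
    diff, lower, upper, ok = results[arm]
    print(f"Arm {arm} - Arm 1: {diff:+.1%} ({lower:+.1%}, {upper:+.1%}) "
          f"non-inferior: {ok}")

# Bonferroni-style experiment-wise alpha for three one-sided tests.
experiment_wise_alpha = 3 * 0.025
```

With these counts the lower confidence limit falls below the 5-percentage-point margin for every comparison, so none of arms 2 through 4 can be declared non-inferior to arm 1, in line with the unadjusted columns of Table 1.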

We estimated the power to detect a difference in response rates using a non-inferiority test designed to show that any of the methods employed in arms 2-4 (considered individually) were not inferior to arm 1. For most comparisons, a minimum of 175 completed responses would be required to detect an approximate 10% difference in proportions with 80% power. This applies for a range of response rates between 30-70%.

Costs of survey administration were calculated by survey arm, per solicited, and per response. All costs associated with development of the survey methods and materials including the written survey, email and web-based survey systems, and the IVR system were considered fixed costs, including pilot testing of all systems, and translation of all materials from English to Spanish. Fixed costs were allocated across arms into varying estimated percentages of survey development, and up-front labor costs for the mail (including materials and postage), IVR, and web-based modes, based on total labor costs for these categories. For the per solicited costs for ongoing administration of each mode, complementary percentages to the fixed costs of total labor for mail, IVR and web modes were divided by the initially planned cohort size per arm (1000 members). The per respondent cost included the amount spent for incentives and participant gratitude by arm, divided by the number of respondents in each arm.

Results

The PORTAL CRC cohort includes 16,212 people; 8,265 men (51.0%), and 7,947 women (49.0%) (Supplemental Table 1). Overall, 62.8% of the cohort is non-Hispanic white (n=10,175); 15.4% (n=2,501) are Hispanic, 9.8% (n=1,592) are African-American, and 10.8% (n=1,754) are Asian or Pacific Islander. Most of the cohort (90.7%) indicated that English is their preferred language; 4.4% (n=706) indicated Spanish is their preferred language. Although all cohort members have health insurance through one of the participating health plans, 16.8% of the cohort reside in a census block with low income or low education.

To plan for the survey, we determined how many CRC cohort members listed an email address that could be used for communication with the health plan and was approved for use in research. In total, 57% of the cohort eligible for the survey (n=12,956) had an email address available. One health plan (GHRI) did not have email available for research; in other health plans 40%-80% of cohort members had email addresses available. Of note, percentages with email did not vary by demographic factors including gender, age, race/ethnicity, language preference or socioeconomic status (data not shown).

Of the 4,000 cohort members randomized, 6 were deceased after selection and 8 were ineligible due to language, leaving a total of 3,986 eligible to participate in the survey. Our overall survey response rate was 50.2%. Arm 1 (mail/phone) had the highest response rate overall (59.9%), followed by arm 3 (email/mail) (51.9%), arm 2 (IVR/mail) (51.2%) and arm 4 (email/IVR) (37.9%). Each arm included two methods of contact (Figure 1); after the first method of contact for each arm, the initial response rate in arm 1 was higher than in the other arms. In arm 1, 51.5% responded to the mailed survey, in arm 2, 28.8% responded to IVR, and in arms 3 and 4, response rates after one email invitation were 21.5% and 19.3%, respectively. After the second email, cumulative responses were 31.3% in arm 3, and 28.6% in arm 4. In the subsequent waves of contact, our results suggest that response is higher from mailed surveys than from a phone contact, whether delivered via IVR or a personal phone call.

The results of the non-inferiority tests are shown in Table 1. These tests indicate whether survey response results in arms 2, 3 or 4 are at least as good as the results in arm 1. For each comparison, there is not enough evidence to reject the null hypothesis of inferiority (alpha = 0.025) because the lower limit of the confidence interval is below -5. The response rates in arms 2, 3 and 4 were therefore inferior to the response rate in arm 1. This was true for both the unadjusted models and the models adjusted for gender, age at first diagnosis, race/ethnicity, and year of diagnosis.

Table 1.

Non-inferiority test results comparing survey response rates across study arms

| Comparison | Arm 1 response rate | Comparator response rate | Unadjusted estimate | 95% confidence interval | Adjusted estimateᵃ | 95% confidence interval |
|---|---|---|---|---|---|---|
| Arm 2 – Arm 1ᵇ | 59.9% | 51.2% | −8.7% | −13.1%, −4.4% | −8.5% | −12.7%, −4.2% |
| Arm 3 – Arm 1 | 59.9% | 51.9% | −8.0% | −12.4%, −3.7% | −8.7% | −13.0%, −4.4% |
| Arm 4 – Arm 1 | 59.9% | 37.9% | −22.0% | −26.3%, −17.7% | −23.2% | −27.5%, −19.0% |

ᵃ Adjusted for gender, race/ethnicity, age at diagnosis, year of diagnosis.

ᵇ Arm 1 is the gold standard.

For all comparisons, the lower equivalence margin (−5%) is within or above the 95% confidence interval; thus there is not enough evidence to reject the null hypothesis of inferiority.

Table 2 displays the survey response rates by patient characteristics and arm. Survey response rates did not differ by year of diagnosis or gender. We observed differences by age at diagnosis, race/ethnicity, language preference, socioeconomic status, and stage at diagnosis. Response rates were highest in arm 1 for almost all responder characteristics examined. Response rates by age ranged from 45.8% (ages 50-59 years) to 54.2% (ages 70-79 years), but were especially low in two groups: In arm 2 (IVR/mail), only 28.6% (4/14) of those under 40 years old responded to the survey, and in arm 4 (email/IVR), only 22.8% of those 80 years and older responded. Response rates were higher among non-Hispanic whites in all arms compared to the other ethnic groups. Response among non-whites was especially low in arm 4 (25.1% among Hispanics, 18.5% among African-Americans, and 18.3% among Asian/Pacific Islanders). Response rates were also higher among English speakers (51.5%) compared to Spanish speakers (36.4%), and those with higher socioeconomic status (53.7%) compared to lower socioeconomic status (41.4%). With respect to stage at diagnosis, those with stages 1-3 CRC tended to have better response than those with stage 0 or 4 CRC.

Table 2.

Survey response rates by participant characteristics overall and by study arm

| Characteristic | Arm 1 | Arm 2 | Arm 3 | Arm 4 | Total | p-valueᵃ |
|---|---|---|---|---|---|---|
| Overall | 594/991 (59.9%) | 510/996 (51.2%) | 519/1000 (51.9%) | 379/999 (37.9%) | 2002/3986 (50.2%) | |
| Year of diagnosis | | | | | | 0.05 |
| 2010-2011 | 294/495 (59.4%) | 247/498 (49.6%) | 258/500 (51.6%) | 171/499 (34.3%) | 970/1992 (48.7%) | |
| 2012-2013 | 300/496 (60.5%) | 263/498 (52.8%) | 261/500 (52.2%) | 208/500 (41.6%) | 1032/1994 (51.8%) | |
| Gender | | | | | | 0.52 |
| Female | 312/501 (62.3%) | 265/498 (53.2%) | 224/455 (49.2%) | 179/477 (37.5%) | 980/1931 (50.8%) | |
| Male | 282/490 (57.6%) | 245/498 (49.2%) | 295/545 (54.1%) | 200/522 (38.3%) | 1022/2055 (49.7%) | |
| Age at diagnosis (years) | | | | | | 0.01 |
| <40 | 10/17 (58.8%) | 4/14 (28.6%) | 10/19 (52.6%) | 18/39 (46.2%) | 42/89 (47.2%) | |
| 40-49 | 35/62 (56.5%) | 34/66 (51.5%) | 50/102 (49.0%) | 38/98 (38.8%) | 157/328 (47.9%) | |
| 50-59 | 116/207 (56.0%) | 105/217 (48.4%) | 112/262 (42.7%) | 96/250 (38.4%) | 429/936 (45.8%) | |
| 60-69 | 152/274 (55.5%) | 147/275 (53.5%) | 187/310 (60.3%) | 118/298 (39.6%) | 604/1157 (52.2%) | |
| 70-79 | 192/287 (66.9%) | 123/243 (50.6%) | 114/201 (56.7%) | 88/222 (39.6%) | 517/953 (54.2%) | |
| 80+ | 89/144 (61.8%) | 97/181 (53.6%) | 46/106 (43.4%) | 21/92 (22.8%) | 253/523 (48.4%) | |
| Race/ethnicity | | | | | | <0.001 |
| Non-Hispanic White | 381/561 (67.9%) | 342/575 (59.5%) | 364/606 (60.1%) | 295/620 (47.6%) | 1382/2362 (58.5%) | |
| African-American | 58/117 (49.6%) | 49/102 (48.0%) | 33/69 (47.8%) | 12/65 (18.5%) | 152/353 (43.1%) | |
| Asian/Pacific Islander | 53/103 (51.5%) | 45/108 (41.7%) | 54/115 (47.0%) | 20/109 (18.3%) | 172/435 (39.5%) | |
| Hispanic | 97/199 (48.7%) | 71/200 (35.5%) | 62/200 (31.0%) | 50/199 (25.1%) | 280/798 (35.1%) | |
| Other/Unknown | 5/11 (45.5%) | 3/11 (27.3%) | 6/10 (60.0%) | 2/6 (33.3%) | 16/38 (42.1%) | |
| Language preference | | | | | | <0.001 |
| Spanish | 34/72 (47.2%) | 33/83 (39.8%) | 9/41 (22.0%) | 10/40 (25.0%) | 86/236 (36.4%) | |
| English | 542/883 (61.4%) | 454/868 (52.3%) | 500/935 (53.5%) | 364/923 (39.4%) | 1860/3609 (51.5%) | |
| Other/Missing | 18/36 (50.0%) | 23/45 (51.1%) | 10/24 (41.7%) | 5/36 (13.9%) | 56/141 (39.7%) | |
| Socioeconomic status | | | | | | <0.001 |
| Low | 100/198 (50.5%) | 86/193 (44.6%) | 72/177 (40.7%) | 46/167 (27.5%) | 304/735 (41.4%) | |
| Medium/High | 403/634 (63.6%) | 346/626 (55.3%) | 352/637 (55.3%) | 264/643 (41.1%) | 1365/2540 (53.7%) | |
| Missing | 91/159 (57.2%) | 78/177 (44.1%) | 95/186 (51.1%) | 69/189 (36.5%) | 333/711 (46.8%) | |
| Stage at diagnosis | | | | | | 0.02 |
| 0 | 60/126 (47.6%) | 65/121 (53.7%) | 52/123 (42.3%) | 35/119 (29.4%) | 212/489 (43.4%) | |
| 1 | 191/305 (62.6%) | 177/338 (52.4%) | 165/313 (52.7%) | 115/310 (37.1%) | 648/1266 (51.2%) | |
| 2 | 163/255 (63.9%) | 138/272 (50.7%) | 120/232 (51.7%) | 97/247 (39.3%) | 518/1006 (51.5%) | |
| 3 | 145/242 (59.9%) | 112/215 (52.1%) | 149/266 (56.0%) | 108/265 (40.8%) | 514/988 (52.0%) | |
| 4 | 27/46 (58.7%) | 14/39 (35.9%) | 25/53 (47.2%) | 22/53 (41.5%) | 88/191 (46.1%) | |
| Unknown | 8/17 (47.1%) | 4/11 (36.4%) | 8/13 (61.5%) | 2/5 (40.0%) | 22/46 (47.8%) | |

ᵃ The p-value tests for differences in response rates in the “total” column between characteristic categories.

Finally, we compared the costs of survey administration in each of the four arms (Table 3). Our a priori hypothesis was that arm 1, with the advance letter, $2 incentive, mailed surveys and personal phone calls, would be the most expensive method of contact. However, the survey arms were roughly comparable in cost for this sample size, with a difference of only 8% of total costs between the most and least expensive arms. Arm 2 was the most expensive arm (29% of the total costs), followed by arm 1 (28%), arm 4 (22%) and arm 3 (21%). Arm 2 (advance letter with $2 incentive, IVR administration of survey, and follow up letter to non-responders) reflects the higher costs of mail set-up, printing, and delivery, and of IVR set-up and help-line support. Arm 3 (email messages with link to web-based survey, and follow up letter to non-responders) shows the cost advantages of an initial electronic contact. The cost per response was highest for arm 4 ($63) and lowest for arm 3 ($45).

Table 3.

Costs (expressed as percent of total costs) of survey implementation by study arm

| Cost category | Arm 1 | Arm 2 | Arm 3 | Arm 4 |
|---|---|---|---|---|
| KPCO labor: survey development and administration | 40% | 42% | 52% | 50% |
| IVR vendor: labor | - | 19% | 10% | 34% |
| Survey vendor: labor | 26% | 11% | 12% | - |
| Survey vendor: materials/postage | 8% | 6% | 4% | - |
| Incentive and participant gratitudeᵃ | 26% | 22% | 22% | 16% |
| Cost per response | $50 | $62 | $45 | $63 |

ᵃ Incentive is the $2 bill included with the advance letter in arms 1 and 2; participant gratitude is the $10 gift card for completing the survey.

Recognizing that the costs of subsequent surveys decrease once the study infrastructure is developed, we calculated the costs of surveying an additional 2000 participants (500 per arm). Under this hypothetical scenario, costs per response were $26 for arms 1 and 2, $19 for arm 4, and $16 for arm 3, reflecting the cost benefit of email as the first contact (and the fact that the $2 incentive was only included in arms 1 and 2).

Discussion

To facilitate patient-centered outcomes research about CRC survivors, we assembled a cohort of over 16,000 men and women diagnosed with CRC from 2010-2014 across six participating health plans. The cohort is diverse in both age and race/ethnicity, including more than 1,600 people diagnosed under the age of 50, over 1,700 Asians/Pacific Islanders, 2,500 Hispanics, and nearly 1,600 African Americans. We sampled 4,000 members of the cohort to test survey response rates across different modes of contact (mail, phone, IVR, email). Our overall response rate was 50%; mailed surveys elicited the best response rates, but email invitations with a link to a web survey also resulted in fairly high response rates.

Our a priori hypothesis was that arm 1 would provide the highest response rates [14, 15], but would also be the most expensive. We hoped to demonstrate that IVR or email would provide a more cost-effective option that would achieve similar response rates to arm 1. Based on our non-inferiority test, responses in all arms were inferior to arm 1, and our costs per response were actually highest in arms 2 and 4. When we extrapolated our costs to reflect surveying an additional 500 participants in each arm, costs were considerably lower for arm 3, where the initial email contact yielded a relatively high response rate. Costs for computer-based methods could be further lowered if patient compensation, like the gift cards used in this study, were delivered electronically.

Arms 1 and 2 included an advance letter, to inform participants that we would be sending them a survey, either by mail or phone (IVR), and a $2 bill. Because arms 3 and 4 used email as the initial outreach, they did not include a similar $2 incentive; thus, we cannot test the effect of the incentive directly. However, our response rates in arm 2 and arm 3 were nearly identical (51.2% and 51.9%, respectively), suggesting that the incentive was not a strong motivator for participation.

It has been suggested that while mixed mode survey methods improve response rates, they may not reduce bias [1, 4, 16]. Indeed, we observed differences in response rates by race/ethnicity, language preference, and socioeconomic status. Arms 1 and 3 had similar response rates among African-Americans and Asian/Pacific Islanders, but arm 1 performed better among Hispanics, Spanish speakers, and those with lower socioeconomic status. Response rates in arm 4 were 47.6% among non-Hispanic whites, but only 18-25% among non-whites. Our results suggest that paper surveys and personal (not IVR) phone calls are preferred among these sociodemographic groups, even though email availability did not vary by these factors.

Although 28.8% of participants responded to the IVR survey in arm 2, our research center received more complaints about IVR than any other mode of participant contact. IVR is used extensively in commercial survey research, and many of the participating health plans routinely use it for patient reminders about upcoming appointments or preventive services like flu shots, but even a relatively brief 17-item questionnaire may be too cumbersome to work well in the IVR format.

Previous studies that have tested response rates among CRC survivors have generally relied on mailed surveys [17-22]. A recent study conducted in England obtained a response rate of 63% with a mailed questionnaire and 2 reminders, a response rate similar to the results we achieved in arm 1 [17]. A study of long term CRC survivors [22] reported a response rate of 51%, and one among rectal cancer survivors reported a response rate of 61% [21]. Others had lower response rates than ours: 34% among a random sample of Canadian CRC survivors [18] and 47% in a US study [19] that reported lower response rates among those who were older, non-white or diagnosed with metastatic cancer. Maxwell et al [20] achieved a 33% overall response rate among 3,316 CRC cases in Los Angeles. They tested differences between incentives, compensation for completing the survey, and first class versus registered mail, and did not see significant differences in any combination of these factors. However, they did observe differences in response rates by race/ethnicity: white and Latino responders had higher response rates (36% and 37%, respectively) than did blacks and Asians (29% and 28%, respectively).

Strengths of the study include a large sample size; diversity by age, race/ethnicity, geographic location, and stage at diagnosis; and the ability to assess differences in responders and non-responders in the electronic medical record. The survey sample is comparable to the full cohort with one exception: there were fewer cases diagnosed in stage 4 in the survey sample compared to the full cohort. This difference in stage is likely due to higher mortality in stage 4 cases, thus fewer were alive at the time of the survey. Importantly, we didn't identify important differences in demographic factors between those with and without an email provided in their medical record. The limitations of our study include missing email addresses on about half of the population. While those without email were still eligible to be randomized to non-electronic study arms, there may be unmeasured differences between those with and without listed email addresses that we did not capture and which might influence response rates to other methods.

In seeking to understand and address the unmet needs of CRC survivors, it is essential to collect information from a broad spectrum of survivors and to optimize response rates when surveying these individuals. The results of our randomized study of survey methods in a diverse population of CRC survivors suggest that traditional methods of survey administration (mailed surveys and personal phone calls) provide somewhat higher response rates than email and IVR survey methods. Although we demonstrated the feasibility of email invitations and online surveys, and these methods may provide a more cost-effective approach for surveying large groups of cancer patients, they may yield lower response rates among important segments of the population, including Hispanics, non-English speakers, and those of lower socioeconomic status.

Supplementary Material

Acknowledgements

The authors gratefully acknowledge the assistance of the following people in the conduct of this research: Michelle Henton, MA (Kaiser Permanente, Colorado); Jane Anau and Doug Kane (Group Health Research Institute); the members of the PORTAL Patient Engagement Core (PEC): Rose Hesselbrock, Florence Kurtilla, and Charles Anderson; and our colleagues at Fight Colorectal Cancer, including Anjelica Davis, MPPA. We thank the developers of the web-based and IVR systems at Kaiser Permanente, Colorado: Jonah N. Langer, David A. Steffen, MPH, Michael R. Shainline, MS, MBA, and Andew Hamblin. We also sincerely thank the study participants for their contributions to this project.

Funding:

This work was supported by Contract No. CDRN-1306-04681 from the Patient-Centered Outcomes Research Institute (awarded to Drs. Elizabeth M. McGlynn and Tracy Lieu). The infrastructure builds upon data structures that receive ongoing support from the National Cancer Institute (NCI) Cancer Research Network (Grant No. U24 CA171524, awarded to Dr. Lawrence H. Kushi, PI) and the Kaiser Permanente Center for Effectiveness and Safety Research.

Footnotes

Compliance with Ethical Standards:

Conflict of Interest: All authors declare that they have no conflicts of interest.

Ethical approval: All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed consent: Informed consent was obtained from all individual participants included in the study.

References

- 1. Beebe TJ, McAlpine DD, Ziegenfuss JY, Jenkins S, Haas L, Davern ME. Deployment of a Mixed-Mode Data Collection Strategy Does Not Reduce Nonresponse Bias in a General Population Health Survey. Health Services Research. 2012;47:1739–54. doi: 10.1111/j.1475-6773.2011.01369.x.

- 2. Atrostic BK, Bates N, Burt G, Silberstein A. Nonresponse in U.S. Government Household Surveys: Consistent Measures, Recent Trends, and New Insights. Journal of Official Statistics. 2001;17:209–26.

- 3. Sackett DL. Bias in analytic research. Journal of Chronic Diseases. 1979;32:51–63. doi: 10.1016/0021-9681(79)90012-2.

- 4. Groves RM. Nonresponse Rates and Nonresponse Bias in Household Surveys. Public Opinion Quarterly. 2006;70:646–75.

- 5. Armes J, Crowe M, Colbourne L, et al. Patients' Supportive Care Needs Beyond the End of Cancer Treatment: A Prospective, Longitudinal Survey. Journal of Clinical Oncology. 2009;27:6172–9. doi: 10.1200/JCO.2009.22.5151.

- 6. van Ryn M, Phelan SM, Arora NK, et al. Patient-Reported Quality of Supportive Care Among Patients With Colorectal Cancer in the Veterans Affairs Health Care System. Journal of Clinical Oncology. 2014;32:809–15. doi: 10.1200/JCO.2013.49.4302.

- 7. Patient-Centered Outcomes Research Institute. The National Patient-Centered Clinical Research Network. 2016. http://www.pcornet.org/

- 8. Corley DA, Feigelson HS, Lieu TA, McGlynn EA. Building Data Infrastructure to Evaluate and Improve Quality: PCORnet. Journal of Oncology Practice. 2015;11:204–6. doi: 10.1200/JOP.2014.003194.

- 9. Wagner EH, Greene SM, Hart G, et al. Building a research consortium of large health systems: the Cancer Research Network. J Natl Cancer Inst Monogr. 2005;35:3–11. doi: 10.1093/jncimonographs/lgi032.

- 10. Ritzwoller DP, Carroll N, Delate T, et al. Validation of electronic data on chemotherapy and hormone therapy use in HMOs. Med Care. 2013;51:e67–73. doi: 10.1097/MLR.0b013e31824def85.

- 11. Ross TR, Ng D, Brown JS, et al. The HMO Research Network Virtual Data Warehouse: A Public Data Model to Support Collaboration. EGEMS (Washington, DC). 2014;2:1049. doi: 10.13063/2327-9214.1049.

- 12. Hornbrook MC, Hart G, Ellis JL, et al. Building a virtual cancer research organization. J Natl Cancer Inst Monogr. 2005;35:12–25. doi: 10.1093/jncimonographs/lgi033.

- 13. North American Association of Central Cancer Registries. NAACCR Strategic Management Plan. 2014. http://www.naaccr.org/

- 14. Dillman DA, Phelps G, Tortora R, Swift K, Kohrell J, Berck J, et al. Response rate and measurement differences in mixed-mode surveys using mail, telephone, interactive voice response (IVR) and the Internet. Social Science Research. 2009;38(1):1–18.

- 15. Dillman DA, Smyth JD, Christian LM. Internet, phone, mail, and mixed-mode surveys: the tailored design method. John Wiley & Sons; 2014.

- 16. Davern M. Nonresponse Rates are a Problematic Indicator of Nonresponse Bias in Survey Research. Health Services Research. 2013;48:905–12. doi: 10.1111/1475-6773.12070.

- 17. Downing A, Morris EJ, Richards M, et al. Health-Related Quality of Life After Colorectal Cancer in England: A Patient-Reported Outcomes Study of Individuals 12 to 36 Months After Diagnosis. Journal of Clinical Oncology. 2015;33:616–24. doi: 10.1200/JCO.2014.56.6539.

- 18. McGowan EL, Speed-Andrews AE, Blanchard CM, et al. Physical activity preferences among a population-based sample of colorectal cancer survivors. Oncology Nursing Forum. 2013;40:44–52. doi: 10.1188/13.ONF.44-52.

- 19. Kelly BJ, Fraze TK, Hornik RC. Response rates to a mailed survey of a representative sample of cancer patients randomly drawn from the Pennsylvania Cancer Registry: a randomized trial of incentive and length effects. BMC Medical Research Methodology. 2010;10:65. doi: 10.1186/1471-2288-10-65.

- 20. Maxwell AE, Bastani R, Glenn BA, Mojica CM, Chang LC. An experimental test of the effect of incentives on recruitment of ethnically diverse colorectal cancer cases and their first-degree relatives into a research study. Cancer Epidemiology Biomarkers & Prevention. 2009;18:2620–5. doi: 10.1158/1055-9965.EPI-09-0299.

- 21. Wendel CS, Grant M, Herrinton L, et al. Reliability and validity of a survey to measure bowel function and quality of life in long-term rectal cancer survivors. Quality of Life Research. 2014;23:2831–40. doi: 10.1007/s11136-014-0724-6.

- 22. Mohler MJ, Coons SJ, Hornbrook MC, et al. The Health-Related Quality of Life in Long-Term Colorectal Cancer Survivors Study: objectives, methods, and patient sample. Current Medical Research and Opinion. 2008;24:2059–70. doi: 10.1185/03007990802118360.
