Abstract

The study of finger kinematics has developed into an important research area. Various hand tracking systems are currently available; however, they all have limited functionality. Generally, the most commonly adopted sensors are limited to measurements with one degree of freedom, i.e., flexion/extension of fingers. More advanced measurements including finger abduction, adduction, and circumduction are much more difficult to achieve. To overcome these limitations, we propose a two-axis 3D printed optical sensor with a compact configuration for tracking finger motion. Based on Malus’ law, this sensor detects the angular changes by analyzing the attenuation of light transmitted through polarizing film. The sensor consists of two orthogonal axes each containing two pathways. The two readings from each axis are fused using a weighted average approach, enabling a measurement range up to 180 and an improvement in sensitivity. The sensor demonstrates high accuracy (±0.3), high repeatability, and low hysteresis error. Attaching the sensor to the index finger’s metacarpophalangeal joint, real-time movements consisting of flexion/extension, abduction/adduction and circumduction have been successfully recorded. The proposed two-axis sensor has demonstrated its capability for measuring finger movements with two degrees of freedom and can be potentially used to monitor other types of body motion.

Keywords: finger motion tracking, angle measurement, flexion/extension, abduction/adduction, 3D printing, optical sensor, glove-based systems

1. Introduction

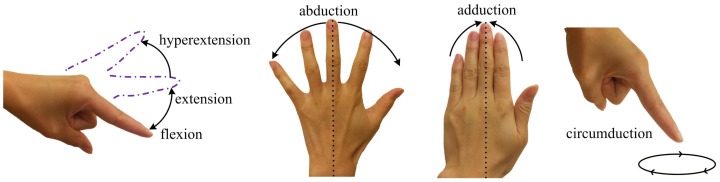

The hand is an amazing feat of human evolution enabling incredible physical dexterity and the ability to manipulate and develop tools through a series of finger motions, i.e., flexion, extension, abduction, adduction, and circumduction. Finger flexion is joint motion towards the palm relative to the standard anatomical position with extension being in the opposite direction as shown in Figure 1. Full extension is in line with the back of the hand and is defined as zero degree flexion. An injury can occur when joints are hyperextended too much [1]; in this paper, only hyperextension within normal limits is of interest. Finger abduction (ABD) and adduction (ADD) are the movements away from or towards the hand’s midline (dotted line), respectively. Circumduction is a circular motion combining flexion, extension, abduction and adduction [2].

Figure 1.

Finger motion: flexion, extension, abduction, adduction, and circumduction.

Understanding and measuring hand kinematics is important in research areas such as biomechanics and medicine. It is a challenging subject due to the articulated nature of the hand and the associated multiple degrees of freedom (DOFs). Standard clinical goniometers are typically manual devices used to assess the range of joint movement in fingers [3,4]. This method is only effective for static measurements and usually requires a long time to fully assess the whole hand. The accuracy (±5) is largely determined by the skill of the clinician or therapist; therefore, an accurate system that is able to monitor dynamic hand movements could be of significant benefit in clinical practice.

Various sensing techniques have been proposed to track finger motion, including resistive carbon/ink sensors [5,6], microfluidic strain sensors [7,8], magnetic induction coils [9], hetero-core spliced fibre sensors [10], fibre Bragg grating sensors [11,12] and multiple inertial measurement units (IMUs) [13,14]. Resistive film sensors are particularly favoured in glove-instrumented devices due to their flexibility, light weight, and low cost; however, they often suffer from instability, long transient response, and a high dependency on the radius of curvature [15], resulting in low accuracy. Previous work has shown commercial resistive bend sensors (Flexpoint Sensor Systems Inc., Draper, UT, USA [16]) suffer from severe hysteresis error and signal drift compared to our optical-based sensor [17,18]. In contrast, magnetic techniques are capable of monitoring precise hand movements, but the accuracy is significantly affected by interference from the Earth’s geomagnetic field or nearby ferromagnetic objects [19]. Optical fibre techniques measure finger flexion by detecting the attenuation of transmitted light as a function of fibre curvature; a polishing process [20] or the creation of imperfections [21] is required to increase sensitivity.

Using one or more of these sensing techniques, several glove-based devices have been developed to capture hand movements, e.g., SIGMA glove [22], SmartGlove [23], WU Glove [24], NeuroAssess Glove [25], Shadow Monitor [26], HITEG-Glove [27], Pinch Glove [28], Power Glove [29], CyberGlove [30], HumanGlove [31], and 5DT Data Glove [32]. These glove-based devices in most cases only detect finger flexion and/or extension using one-DOF sensors [10,22,25,27,33,34]. The measurement accuracy is usually adversely affected when monitoring two-DOF finger joints, i.e., the metacarpophalangeal (MCP) joints. The main reason is that finger ABD/ADD and flexion/extension do not occur in isolation [35], leading to unwanted deformations of the flexion sensors. Few glove systems have been able to measure two-DOF finger movements. One method is to use multiple one-DOF flexible sensors. The flexion/extension sensors are placed on the finger joints, and the ABD/ADD sensors are attached on the dorsal surface of the proximal phalanxes of adjacent fingers in an arched configuration [22,24,30,32]. In this approach, reliable ABD/ADD readings can only be obtained when the adjacent fingers have similar flexion; otherwise, twisting of the ABD/ADD sensor can occur, leading to inaccurate readings. Gestures, such as crossed fingers, are particularly prone to this problem. An alternative method is the use of IMUs that can provide 3D information of hand motion including finger ABD/ADD. The major drawback is the accumulated error since IMUs estimate the orientation trajectories by time integration of the inertial signal. Additionally, complicated models and data fusion processes are also required [14,36], causing large computation times for multiple joint articulations, thus limiting the system’s real-time capability.

Both finger flexion/extension and ABD/ADD are important parameters for finger kinetics. The majority of studies to date only consider finger flexion and extension, but accurate information on finger ABD/ADD is essential for areas such as medical diagnostics and rehabilitation. Accurate and simultaneous monitoring of two-DOF motion in human hand joints is an area underexplored in current glove-based systems. The main purpose of this work is to demonstrate a method for tackling this problem. We propose a two-axis optical sensor with an operating method based on Malus’ Law, capable of tracking the finger flexion/extension and ABD/ADD simultaneously. Additionally, the sensor demonstrates an ability to capture the finger circumduction, which is important for exploring the complex relationships between finger flexion/extension and ABD/ADD. This optical sensor features a wide measuring span of up to 180 for each axis, which is sufficient for measuring the entire range of finger motion. In the following sections, the hand skeleton model, sensor principle and fabrication, quasi-static characteristics, as well as the recorded movements of the MCP joint of the left index finger will be described.

2. Hand Skeleton Model

The human hand is highly articulated, but constrained at the same time. Each hand has up to 27 DOFs, 21 of which are contributed by the five finger joints for local movements and the other six for global hand movements [35,37]. Each digit, except the thumb, possesses four DOFs, one each for the proximal interphalangeal (PIP) and distal interphalangeal (DIP) joints, and the other two for the MCP joint. The thumb has a more complicated structure, possessing five DOFs, one for the interphalangeal (IP) joint, two for the MCP joint, and two more for the carpometacarpal (CMC) joint [35].

Six types of synovial joints typically exist in the human body with differing structure and mobility [38] (pp. 18–20): plane joints, hinge joints, pivot joints, ellipsoidal joints, saddle joints, and ball and socket joints. Human fingers only have hinge, ellipsoid and saddle joints. The uniaxial and stable hinge joint allows flexion/extension only, performing back and forth movements. The PIP, DIP, and IP joints are examples of the hinge type [38] (pp. 170, 174). Ellipsoidal joints, also known as condyloid joints, consist of two oval articular surfaces; one is concave, and the other one is convex. This type of joint is able to move in two planes, allowing flexion, extension, abduction, adduction, and circumduction. MCP joints are examples of the ellipsoidal type [38] (p. 169). Saddle joints are also biaxial and perform a series of movements, similar to ellipsoidal joints, utilizing two reciprocally concave–convex surfaces. The CMC joint of the thumb is a typical saddle joint [38] (pp. 164–167).

In this paper, we focus on detecting the motion of the MCP joint. The range of motion varies between individuals [39], but, in general, flexion is around 90, and slightly less for the index finger [35]. The active hyperextension of the index finger is approximately up to 30. ABD/ADD generally have a greater range when the fingers are fully extended, being as much as 30 in each direction [38] (p. 174).

3. Sensor Design

3.1. Principle of Operation

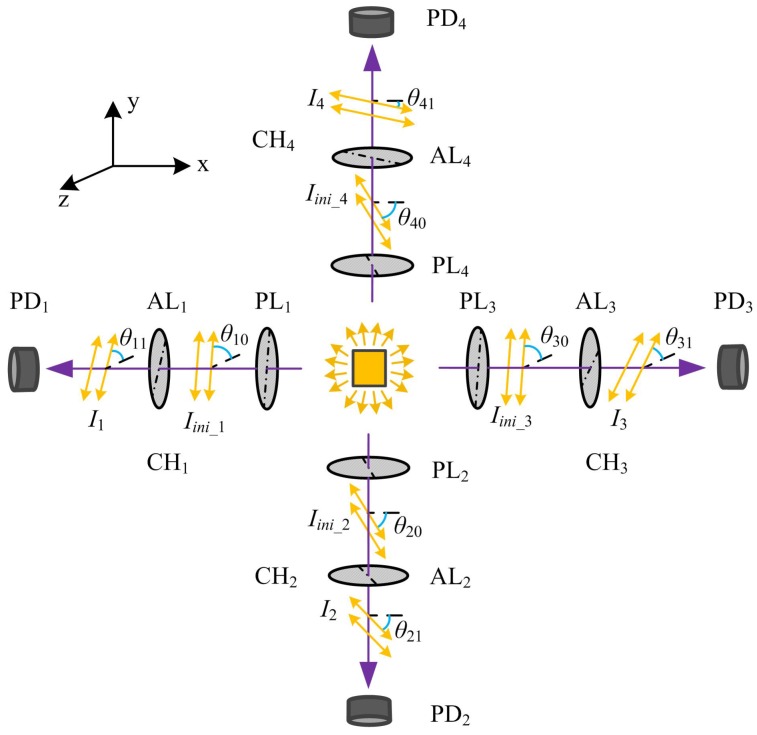

As illustrated in Figure 2, the optical sensor comprises four optical pathways, which, for ease of description, are now referred to as channels (CH, i = 1, 2, 3, and 4). Each channel includes a linear polarizer, a polarizing film analyzer, and a photodiode. The unpolarized light source provides visible light for all four channels. Passing through the linear polarizer PL, the incident light is partially blocked by the corresponding analyzer AL in CH, and is recorded by the photodiode PD. CH and CH are oriented along the x-axis, and CH and CH along the y-axis.

Figure 2.

The schematic diagram of the optical sensor. PL and AL are linear polarizing films for the sensing channel CH (i = 1, 2, 3, and 4). PD represents the photodiode which converts the light intensity into an electrical signal.

According to Malus’ Law, the final light intensity for each channel should obey the following theoretical relationship:

| (1) |

where the subscript i denotes the channel number. I denotes the detected light intensity, and I represents the initial polarized intensity at degrees. The parameter denotes the deviated angle of the analyzer’s transmission axis from the z-axis (i = 1, 3) or x-axis (i = 2, 4) shown in Figure 2.

Clearly, the final intensity obtained for each channel is proportional to the angle between the transmission axes of the polarizer and the analyzer. For this optical sensor, the received intensity is linearly converted into electrical current, and then modulated and amplified by current-to-voltage conditioning circuitry. The circuits are similar to the ones presented in our previous work [18]. Finally, the measured voltage (V) can be calculated by Equation (2).

| (2) |

where denotes the rotation angle of AL relative to the polarization direction of PL. The parameters m and a are the constants of proportionality, and b is a constant voltage related to the extinction ratio of the polarizing films when set orthogonally.

From Equation (2), it can be found that a single channel only allows a limited measuring range of 90 and the sensitivity is not constant. In order to track the entire flexion/extension of the MCP joints accurately (up to 120 including active hyperextension), we use two channels CH and CH to capture the movements simultaneously along one axis, and then combine the measured data. Supposing that the transmission axes of the polarizers PL and PL are in the same direction, the analyzers AL and AL are initially oriented with their transmission axes at 45 to each other. This ensures that at least one channel will be operating in the region with the maximum sensitivity and linearity. In fact, the oriented angle can be any value ranging from 40 to 50, since the cosine squared function demonstrates a high linearity in the range of 20 to 70. Additionally, CH and CH are used to capture the finger ABD/ADD movements, even though the ABD/ADD range is less than 90. CH and CH are operated in the same way as CH and CH.

3.2. Sensor Manufacture

The framework of the two-axis optical sensor is fabricated by an EnvisionTEC’s Perfactory Mini Multi Lens 3D Printer (EnvisionTEC, Gladbeck, Germany [40]) using the nanoparticle-filled material RCP30. RCP30 has excellent stiffness and opaqueness, making the sensor robust and improving interference immunity from ambient light sources.

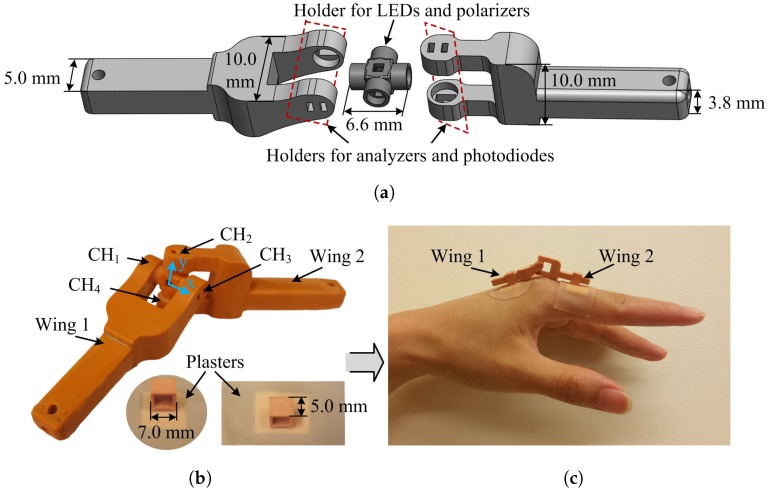

Surface mounted LEDs (KPG-1608ZGC, Farnell element14, Leeds, UK [41]), with a dominant wavelength of 525 nm and a thickness of 0.25 mm, are employed as the light source. In this sensor, all of the polarizers and the analyzers are made from commercial linear polarizing sheet (Edmund Optics Ltd., York, UK [42]), with an extinction ratio of 9000:1 and high transmission from 400 nm to 700 nm. The photodiodes (TEMD6200FX01, Farnell element14, Leeds, UK [41]) with peak sensitivity at 540 nm are used to detect the resultant light intensities. As shown in Figure 3a, the LEDs and linear polarizers are located inside a cross-shaped holder that is optically aligned with the analyzers and photodiodes in the two wing sections.

Figure 3.

The two-axis optical sensor. (a) the 3D design produced in SolidWorks 2015 (DS SolidWorks Corp., Waltham, MS, USA); (b) a photograph of the complete optical sensor and the two rectangular hollow parts glued on the adhesive plasters; (c) an example of the sensor’s placement on the metacarpophalangeal joint of the left index finger.

Figure 3b shows a photograph of the complete two-axis optical sensor. When the sensor wings are aligned, the horizontal line along CH and CH is defined as the x-axis and the vertical line along CH and CH as the y-axis. The prototype sensor has an overall width of 10 mm, height of 10 mm and a length of 56 mm, which could be reduced with more advanced manufacturing methods. To eliminate the influence of ambient light, non-reflective black Aluminum foil tape (AT205, Thorlabs Ltd., Ely, UK [43]) was used to cover the optical sensor, which was not included in Figure 3b for clarity. This tape totally blocks out ambient light according to the manufacturer’s specification. For this study, the sensor was attached to the finger using rectangular hollow components and adhesive plasters as shown in Figure 3b.

An example of the optical sensor attached to the MCP joint of the index finger is shown in Figure 3c. The two rectangular components glued on the adhesive plasters are placed on the metacarpal and the proximal phalanx of the index finger. The sensor wings are inserted into the rectangular parts, enabling them to slide in response to finger movements. When the index finger flexes or extends, rotation of the wings occur about the x-axis of the sensor. Similarly, ABD/ADD motion will cause a rotation about the y-axis. The optical sensor is also able to record finger circumduction by combining data from both rotation directions.

4. Methods

4.1. Measurement Apparatus

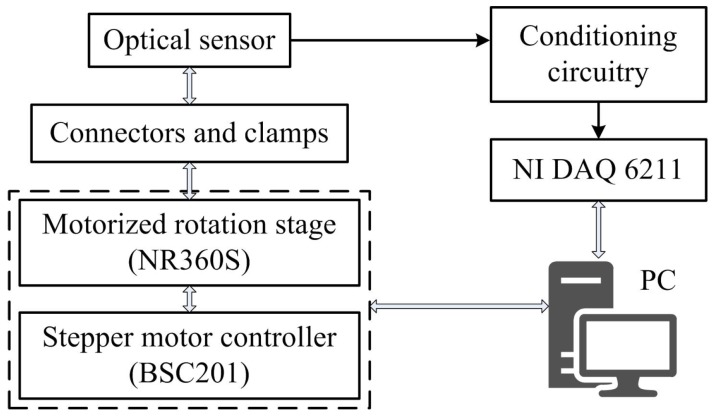

An automated experimental set-up, shown diagrammatically in Figure 4, is used to evaluate the performance of the optical sensor. A 360 continuous motorized rotation stage (NR360S, Thorlabs Inc. [43]), with one arcsec resolution, controlled the rotation angles. The stepper motor offers an accuracy of up to 5 arcmin when driven by the micro-stepping controller (BSC201).

Figure 4.

The block diagram of the automated measurement apparatus.

Each axis of the sensor was assessed separately using the same rotation stage. Evaluating the sensor rotation about the x-axis, Wing 2 was clamped rigidly and Wing 1 was connected to the central aperture of the stepper motor via a drill chuck. Similarly, for the rotation testing about the y-axis, Wing 1 was clamped and Wing 2 allowed to rotate under the control of the rotation stage. The resultant output signal was modulated by conditioning circuitry. The rotation system was controlled using ActiveX interfacing technology in LabVIEW 2014 (National Instruments, Newbury, UK) together with signal acquisition and processing using a multifunction DAQ (NI USB-6211, National Instruments).

4.2. Data Fusion Approach

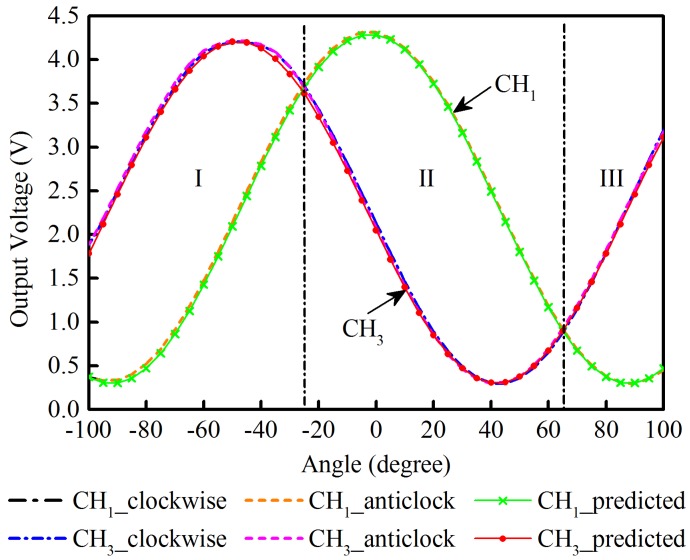

To explain the data fusion method, rotation about the x-axis is used as an example, i.e., data acquired for CH and CH. The amplitude of the output voltages obtained for CH and CH, can be divided into three regions: I, II, and III, as shown in Figure 5. In region I and region III, the outputs of CH are smaller in comparison with CH, while the opposite is true in region II. By comparing the voltage amplitudes from both channels, we can derive the rotation angle over a 180 range using Equation (2). To avoid using data from one channel only, a weighted average method was used to calculate the angular position. Variable weighting was applied to each channel taking into account the angular dependency of their sensitivities. Higher weights were applied when the channel sensitivity was at its maximum, i.e., close to angles of 45. Therefore, the final rotation angle about the x-axis, , can be computed by Equation (3):

| (3) |

where and denote the allocated different weights for CH and CH, respectively. V represents the output voltage proportional to the rotational angle in CH (i = 1, 3). The parameter n is determined by the nonlinear least squares regression based on the dataset explained in Section 5 and represented in Figure 5.

Figure 5.

The voltage-to-angle relationships of the sensing channels CH and CH of the optical sensor. CH_clockwise and CH_anticlock represent the outputs of CH when the sensor rotates from −100 to 100, and then back to −100. CH_predicted is the predicted output value of channel CH. Similarly, CH_clockwise, CH_anticlock, and CH_predicted represent the corresponding readings of CH.

5. Sensor Characteristics

The quasi-static performance of the two-axis optical sensor is evaluated using the automated measurement apparatus at room temperature. The motor performs rotations at 10 degrees per second and the data is acquired at 100 Hz. The physical position when the two wings of the sensor are aligned is defined as the origin.

To investigate the sensor’s angle-to-voltage relationship, the optical sensor was rotated, about the x-axis, from −100 to 100 and then back to −100 using increments of 5. The data set was composed of 500 samples at each angle setting. This process was repeated five times with an interval of three minutes between each sampling cycle. The same procedure was carried out for the rotation testing about the y-axis.

By averaging the data from CH and CH at each angle over five cycles, the voltage-to-angle relationship of both channels was obtained for both clockwise and anticlockwise rotations. The angular dependence of the output voltages is plotted in Figure 5. According to Equation (2), a and b are the output span and the offset voltage (the minimum output) of each channel CH (i = 1, 3). The predicted values for CH and CH are calculated and also plotted in Figure 5. CH and CH have a similar performance to that of CH and CH and have been omitted from Figure 5 for clarity.

As seen in Figure 5, both channels provide readings consistent with the predicted values for bidirectional rotation. The phase difference between the waveforms is consistent with the difference in orientation of each channel.

The sensor’s output characteristics are listed in Table 1. It demonstrates consistent performance between each channel with an average deviation from the theoretical voltage, equal to ±2.3%. The overall hysteresis error is less than 1.7% for rotation in both directions and the repeatability, as determined from the averaged relative standard deviations, is equal to 0.5%.

Table 1.

Output characteristics of the optical sensor.

| Channels | Deviations from the Predicted Voltages | Hysteresis | RSD | |

|---|---|---|---|---|

| Rotations about the x-axis | CH | ±2.4% | 1.6% | 0.4% |

| CH | ±2.1% | 1.4% | 0.6% | |

| Rotations about the y-axis | CH | ±2.5% | 2.0% | 0.6% |

| CH | ±2.3% | 1.9% | 0.4% | |

| overall | ±2.3% | 1.7% | 0.5% | |

Note: CH (i = 1, 2, 3, and 4) represents the sensing channels of the optical sensor, and RSD denotes the relative standard deviation.

In the linear region, where is in the range 20 to 70, the sensitivity of each channel is 62.6 mV/± 0.9 mV/; this can be further increased by adjusting the amplification factor of the conditioning circuits. According to the signal-to-noise level, the resolution is 0.1 under laboratory conditions for the linear regions, comparing favorably with the 0.5 achieved in commercial resistive bend sensors [25].

6. Sensor Performance Validation

6.1. Random Angle Testing

This investigation evaluated the efficiency of the data fusion approach and the measurement ability of the optical sensor. Using MATLAB 2014 (MathWorks, Natick, MA, USA), we generated one hundred uniformly distributed pseudorandom numbers to simulate finger motion. The angular data in the range of −70 to 110 was used to simulate the motion of an MCP joint in flexion or hyperextension, and the range was changed to −90 to 90 for the simulation of ABD/ADD movements. Following the rotation of the automated motorized system, the optical sensor rotated successively from one measurement position to the next, with five hundred samples recorded at each angle. The weighted average method was performed within LabVIEW and combines the outputs from CH and CH when monitoring the x-axis rotation and similarly combining CH and CH for y-axis monitoring.

The manufacturer’s design guide states that the motorized rotation stage has an accuracy of 0.08. Over the one hundred samples of random angles, the angle measured by the optical sensor differed from the set motor angle by an average of ±0.2 in the x-axis and ±0.3 in the y-axis. The slight difference of the sensor performance about the two axes, as well as differences between individual channels (shown in Table 1), may be due to inconsistencies in fabrication.

Nevertheless, the overall mean absolute error of the optical sensor is less than 0.3, and this is more accurate than some reported sensors: the linear potentiometer (0.7) [44], the embedded hetero-core optic fibre sensor (0.9) [10], the resistive bend sensor (1.5) [45], and the fibre Bragg grating sensor (2.0) [11].

6.2. Finger Motion Detection

To assess the sensor’s capability for monitoring finger motion, we attached the optical sensor on to the dorsal surface of a healthy volunteer’s left index finger in a similar fashion to that shown in Figure 3c. During these measurements, the participant is initially asked to sit with their arm relaxed and resting on a table. The position when the whole hand is flat is regarded as 0 flexion/extension and where the fingers are closed is treated as 0 ABD/ADD.

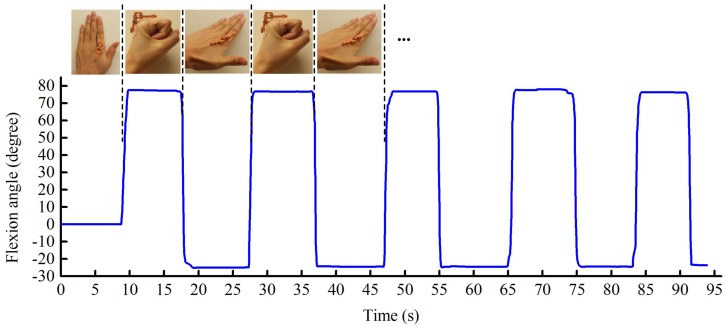

6.2.1. Finger Flexion and Extension

The participant initially placed their hand flat on the table with fingers close together. After several seconds, the participant formed a fist by rapidly bending their fingers, then remaining at that position for a few seconds before extending the fingers to maximum hyperextension. This procedure was repeated continuously for five cycles. During this testing, the fingers (except the thumb) are always kept close to each other so that ABD/ADD can be regarded as 0. Photographs were taken to record finger positions at their maximum flexion and hyperextension as a reference. For example, the angular motion of the left index finger was assessed by measuring the lines extrapolated from the photographs along the proximal phalange of the index finger and the back of the hand using the image processing program ImageJ (ver. ij150) [46].

Figure 6 demonstrates the dynamic response of the optical sensor to alternating finger flexion and extension. The positive readings represent index finger flexion or extension, and the negative angles indicate hyperextension. Variations in shape and width of the waveform are due to variations in the subject’s hand movements and periods of inactivity, respectively.

Figure 6.

The repeated flexion and extension of the metacarpophalangeal joint of the participant’s left index finger.

For this particular test subject, the optical sensor recorded a maximum finger flexion of 78.5 when the hand was clenched into a fist, and a maximum hyperextension of 25.1, and this compares to readings of 80.7 and 22.9 for measurements obtained from photographs.

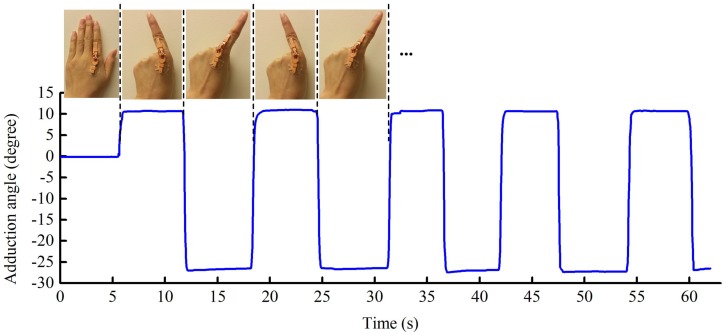

6.2.2. Finger Abduction and Adduction

This test involved measuring ABD/ADD, and as with the flexion test, the participant begins with the same open hand posture. Whilst maintaining the index finger in the same position, the other fingers were made to curl towards the palm. This was followed by the participant moving their index finger to its maximum position of abduction and then adduction, staying at each position for several seconds. The index finger was kept fully extended during these movements. As in Section 6.2.1, the photogrammetric method was adopted as a reference method.

Figure 7 presents the optical sensor’s capability to track finger ABD/ADD. The readings are negative for motion left of the finger midline, and positive to the right. For the participant in this study, the measured maximum registered ABD/ADD for the left and right sides were 11.0 and 27.5, respectively, and this compares with readings of 12.5 and 30.2 obtained photogrammetrically. As seen with flexion and extension measurements, the sensing channels for ABD/ADD monitoring also have an excellent dynamic response.

Figure 7.

The repeated abduction and adduction of the metacarpophalangeal joint of the participant’s left index finger.

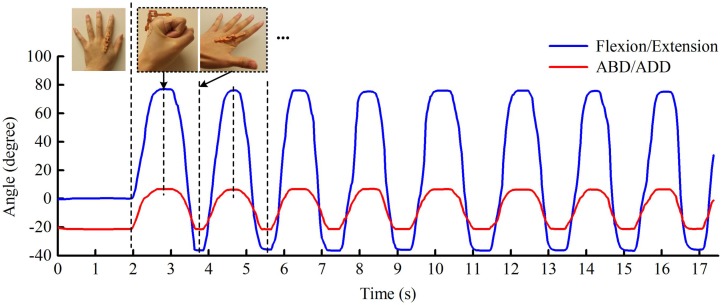

6.2.3. Finger Flexion/Extension and Abduction/Adduction

Simultaneous testing of finger flexion/extension and ABD/ADD was also investigated. The participant was instructed to place their hand flat on a table with fingers spread widely apart. The participant then bends the fingers into a clenched fist before extending to maximum hyperextension and ABD. This action was performed repeatedly for eight cycles.

The recorded finger movements are plotted in Figure 8. In this example, finger flexion co-occurring with adduction leads to increased readings. Finger extension when associated with abduction leads to falling regions in the trace. These changes are consistent with actual finger motion. The positive peak values in Figure 8 occur at the position with maximum finger flexion and adduction, i.e., clenched gesture, and the negative peaks respond to the gesture with maximum hyperextension and abduction.

Figure 8.

The simultaneous movements of the flexion/extension and abduction/adduction (ABD/ADD) of the metacarpophalangeal joint of the participant’s left index finger. The actions in the dotted box are repeated continuously.

During this testing, the maximum captured flexion was 77.1. The hyperextension was up to 37.2, much greater than the 25.1 measured in Figure 6. This is because, in general, less hyperextension occurs when the four fingers are closed together. Additionally, the maximum ABD/ADD at the two sides are 7.1 and 21.8, respectively. Due to the continuous movements of the fingers, it is difficult to take photographs for comparison during this testing. However, the promising results shown in Figure 8 reveal that the optical sensor has the ability to track finger flexion/extension and ABD/ADD simultaneously.

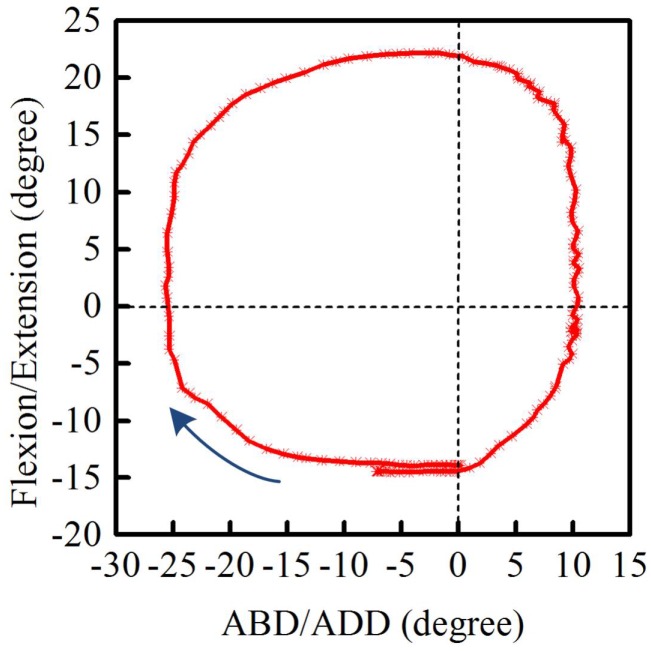

6.2.4. Finger Circumduction

The final investigation looked at finger circumduction. In this case, the participant moved their index finger to maximum hyperextension starting from the origin (0 flexion/extension and 0 ABD/ADD) while curling the remaining fingers inwards. The tip of the index finger was then made to execute a clockwise circular motion, which included positions of maximum ABD/ADD, i.e., circumduction.

Figure 9 shows the clockwise trajectory of the left index finger when tracked by the developed optical sensor. During circumduction, the MCP joint exhibits four movement types. Starting from maximum hyperextension (negative reading), the MCP joint executes: flexion-ABD, flexion-ADD, extension-ABD, and extension-ADD, before returning to the initial start point. The single camera method used in this work was not capable of monitoring dynamic finger circumduction; instead, a multi-camera format is needed. Figure 9, however, suggests that this type of optical sensing has great potential for tracking finger circumduction.

Figure 9.

The circumduction of the metacarpophalangeal joint of the left index finger.

In the future, we plan to investigate the performance of this two-axis sensor and compare it with time-stamped video frames for tracking dynamic finger motion.

7. Conclusions

An optical sensor has been developed that is capable of capturing the complex motion of the human hand. The sensor uses a two-axis measurement technique, allowing the motion of two-DOF joints to be accurately recorded. This sensor has an accuracy of ±0.3, much higher than optical fibre and potentiometer based technologies. Additionally, a particular advantage of our sensor is its wide measurement range of 180 which is sufficient to track the entire range of motion of human fingers. Time-consuming calibration is also unnecessary for this sensor.

When attached to the MCP joint of the index finger, the sensor has successfully tracked finger motion in real time, including flexion/extension, ABD/ADD and circumduction. The optical sensor achieved angular measurements within 2.7 of the reference values obtained photogrammetrically. The promising results show the capability of the presented sensor for tracking two-DOF finger motion. This two-DOF optical sensor should also work for both PIP and DIP finger joints, since they have only one DOF and therefore limited mobility compared with the MCP joint. Furthermore, this sensor has the potential to be fabricated with other dimensions for monitoring other joints in the human body, e.g., ankle, wrist, and knee.

In this work, the optical sensor was attached to the hand with adhesive plasters instead of using gloves. This improves the measurement accuracy but may be unsuitable for long-term monitoring. Only the left index finger of one test subject was investigated here, so additional studies on multiple participants and multiple finger joints will be required to thoroughly validate the sensor’s reliability and reproducibility. Moreover, further reduction in size may be necessary to avoid collisions when multiple sensors are placed close to each other on the hand. These problems will be addressed in future work.

Acknowledgments

This research is financially supported by the China Scholarship Council (CSC) and Cardiff University.

Author Contributions

Lefan Wang designed and constructed the sensing technology, performed the experiments, and drafted the early manuscript; Turgut Meydan supervised the work, and provided technical and scientific guidance during this research; and Paul Ieuan Williams provided conceptual input and critical revision of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1.Henley C.N. Motion of the Fingers, Thumb, and Wrist—Language of Hand and Arm Surgery Series. [(accessed on 3 February 2017)]; Available online: http://noelhenley.com/532/motion-of-the-fingers-thumb-and-wrist-language-of-hand-and-arm-surgery-series/

- 2.Saladin K.S. Support and Movement. In: Wheatley C.H., Queck K.A., editors. Human Anatomy. 2nd ed. Michelle Watnick; New York, NY, USA: 2008. pp. 243–245. [Google Scholar]

- 3.Dutton M. Orthopaedic Examination, Evaluation, and Intervention. [(accessed on 3 February 2017)]; Available online: http://highered.mheducation.com/sites/0071474013/student_view0/chapter8/goniometry.html.

- 4.Macionis V. Reliability of the standard goniometry and diagrammatic recording of finger joint angles: A comparative study with healthy subjects and non-professional raters. BMC Musculoskelet. Disord. 2013;14:17. doi: 10.1186/1471-2474-14-17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Yamada T., Hayamizu Y., Yamamoto Y., Yomogida Y., Izadi-Najafabadi A., Futaba D.N., Hata K. A stretchable carbon nanotube strain sensor for human-motion detection. Nat. Nanotechnol. 2011;6:296–301. doi: 10.1038/nnano.2011.36. [DOI] [PubMed] [Google Scholar]

- 6.Li J., Zhao S., Zeng X., Huang W., Gong Z., Zhang G., Sun R., Wong C.P. Highly Stretchable and Sensitive Strain Sensor Based on Facilely Prepared Three-Dimensional Graphene Foam Composite. ACS Appl. Mater. Interfaces. 2016;8:18954–18961. doi: 10.1021/acsami.6b05088. [DOI] [PubMed] [Google Scholar]

- 7.Chossat J.B., Tao Y., Duchaine V., Park Y.L. Wearable soft artificial skin for hand motion detection with embedded microfluidic strain sensing; Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA); Seattle, WA, USA. 26–30 May 2015; pp. 2568–2573. [Google Scholar]

- 8.Yoon S.G., Koo H.J., Chang S.T. Highly Stretchable and Transparent Microfluidic Strain Sensors for Monitoring Human Body Motions. ACS Appl. Mater. Interfaces. 2015;7:27562–27570. doi: 10.1021/acsami.5b08404. [DOI] [PubMed] [Google Scholar]

- 9.Fahn C.S., Sun H. Development of a fingertip glove equipped with magnetic tracking sensors. Sensors. 2010;10:1119–1140. doi: 10.3390/s100201119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Nishiyama M., Watanabe K. Wearable sensing glove with embedded hetero-core fiber-optic nerves for unconstrained hand motion capture. IEEE Trans. Instrum. Meas. 2009;58:3995–4000. doi: 10.1109/TIM.2009.2021640. [DOI] [Google Scholar]

- 11.Da Silva A.F., Goncalves A.F., Mendes P.M., Correia J.H. FBG sensing glove for monitoring hand posture. IEEE Sens. J. 2011;11:2442–2448. doi: 10.1109/JSEN.2011.2138132. [DOI] [Google Scholar]

- 12.Perez-Ramirez C.A., Almanza-Ojeda D.L., Guerrero-Tavares J.N., Mendoza-Galindo F.J., Estudillo-Ayala J.M., Ibarra-Manzano M.A. An Architecture for Measuring Joint Angles Using a Long Period Fiber Grating-Based Sensor. Sensors. 2014;14:24483–24501. doi: 10.3390/s141224483. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Moreira A.H., Queirós S., Fonseca J., Rodrigues P.L., Rodrigues N.F., Vilaça J.L. Real-time hand tracking for rehabilitation and character animation; Proceedings of the 2014 IEEE 3rd International Conference on the Serious Games and Applications for Health (SeGAH); Rio de Janeiro, Brazil. 14–16 May 2014; pp. 1–8. [Google Scholar]

- 14.Choi Y., Yoo K., Kang S.J., Seo B., Kim S.K. Development of a low-cost wearable sensing glove with multiple inertial sensors and a light and fast orientation estimation algorithm. J. Supercomput. 2016 doi: 10.1007/s11227-016-1833-5. [DOI] [Google Scholar]

- 15.Saggio G., Riillo F., Sbernini L., Quitadamo L.R. Resistive flex sensors: A survey. Smart Mater. Struct. 2015;25:013001. doi: 10.1088/0964-1726/25/1/013001. [DOI] [Google Scholar]

- 16.Flexpoint Sensor Systems Inc. [(accessed on 3 February 2017)]; Available online: http://www.flexpoint.com/

- 17.Wang L., Meydan T., Williams P., Kutrowski T. A proposed optical-based sensor for assessment of hand movement; Proceedings of the 2015 IEEE Sensors; Busan, Korea. 1–4 November 2015; pp. 1–4. [Google Scholar]

- 18.Wang L., Meydan T., Williams P. Design and Evaluation of a 3-D Printed Optical Sensor for Monitoring Finger Flexion. IEEE Sens. J. 2017;17:1937–1944. doi: 10.1109/JSEN.2017.2654863. [DOI] [Google Scholar]

- 19.Chen K.Y., Lyons K., White S., Patel S. uTrack: 3D input using two magnetic sensors; Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology; New York, NY, USA. 8–11 October 2013; pp. 237–244. [Google Scholar]

- 20.Bilro L., Oliveira J.G., Pinto J.L., Nogueira R.N. A reliable low-cost wireless and wearable gait monitoring system based on a plastic optical fibre sensor. Meas. Sci. Technol. 2011;22:045801. doi: 10.1088/0957-0233/22/4/045801. [DOI] [Google Scholar]

- 21.Babchenko A., Maryles J. A sensing element based on 3D imperfected polymer optical fibre. J. Opt. A Pure Appl. Opt. 2006;9:1–5. doi: 10.1088/1464-4258/9/1/001. [DOI] [Google Scholar]

- 22.Williams N.W., Penrose J.M.T., Caddy C.M., Barnes E., Hose D.R., Harley P. A goniometric glove for clinical hand assessment construction, calibration and validation. J. Hand Surg. (Br. Eur. Vol.) 2000;25:200–207. doi: 10.1054/jhsb.1999.0360. [DOI] [PubMed] [Google Scholar]

- 23.Li K., Chen I.M., Yeo S.H., Lim C.K. Development of finger-motion capturing device based on optical linear encoder. J. Rehabil. Res. Dev. 2011;48:69–82. doi: 10.1682/JRRD.2010.02.0013. [DOI] [PubMed] [Google Scholar]

- 24.Gentner R., Classen J. Development and evaluation of a low-cost sensor glove for assessment of human finger movements in neurophysiological settings. J. Neurosci. Methods. 2009;178:138–147. doi: 10.1016/j.jneumeth.2008.11.005. [DOI] [PubMed] [Google Scholar]

- 25.Oess N.P., Wanek J., Curt A. Design and evaluation of a low-cost instrumented glove for hand function assessment. J. Neuroeng. Rehabil. 2012;9:2. doi: 10.1186/1743-0003-9-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Simone L.K., Sundarrajan N., Luo X., Jia Y., Kamper D.G. A low cost instrumented glove for extended monitoring and functional hand assessment. J. Neurosci. Methods. 2007;160:335–348. doi: 10.1016/j.jneumeth.2006.09.021. [DOI] [PubMed] [Google Scholar]

- 27.Saggio G. A novel array of flex sensors for a goniometric glove. Sens. Actuators A Phys. 2014;205:119–125. doi: 10.1016/j.sna.2013.10.030. [DOI] [Google Scholar]

- 28.Bowman D.A., Wingrave C.A., Campbell J.M., Ly V.Q. Using Pinch Gloves (TM) for both Natural and Abstract Interaction Techniques in Virtual Environments. Departmental Technical Report in the Department of Computer Science, Virginia Polytechnic Institute and State University; Blacksburg, VA, USA: [Google Scholar]

- 29.Dipietro L., Sabatini A.M., Dario P. A survey of glove-based systems and their applications. IEEE Trans. Syst. Man Cybern. C (Appl. Rev.) 2008;38:461–482. doi: 10.1109/TSMCC.2008.923862. [DOI] [Google Scholar]

- 30.CyberGlove Systems LLC. [(accessed on 3 February 2017)]; Available online: http://www.cyberglovesystems.com/

- 31.Humanware. [(accessed on 3 February 2017)]; Available online: http://www.hmw.it/en/humanglove.html.

- 32.5DT. [(accessed on 3 February 2017)]; Available online: http://www.5dt.com/data-gloves/

- 33.Borghetti M., Sardini E., Serpelloni M. Sensorized glove for measuring hand finger flexion for rehabilitation purposes. IEEE Trans. Instrum. Meas. 2013;62:3308–3314. doi: 10.1109/TIM.2013.2272848. [DOI] [Google Scholar]

- 34.Ju Z., Liu H. Human hand motion analysis with multisensory information. IEEE/ASME Trans. Mechatron. 2014;19:456–466. doi: 10.1109/TMECH.2013.2240312. [DOI] [Google Scholar]

- 35.Lee J., Kunii T.L. Model-based analysis of hand posture. IEEE Comput. Graph. Appl. 1995;15:77–86. [Google Scholar]

- 36.Kortier H.G., Sluiter V.I., Roetenberg D., Veltink P.H. Assessment of hand kinematics using inertial and magnetic sensors. J. Neuroeng. Rehabil. 2014;11:70. doi: 10.1186/1743-0003-11-70. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Wu Y., Huang T.S. Hand modeling, analysis and recognition. IEEE Signal Process. Mag. 2001;18:51–60. [Google Scholar]

- 38.Palastanga N., Soames R. Introduction and The upper limb. In: Demetriou-Swanwick R., Davies S., editors. Anatomy and Human Movement: Structure and Function. 6th ed. Elsevier Churchill Livingstone; London, UK: 2012. [Google Scholar]

- 39.Degeorges R., Parasie J., Mitton D., Imbert N., Goubier J.N., Lavaste F. Three-dimensional rotations of human three-joint fingers: An optoelectronic measurement. Preliminary results. Surg. Radiol. Anat. 2005;27:43–50. doi: 10.1007/s00276-004-0277-4. [DOI] [PubMed] [Google Scholar]

- 40.EnvisionTEC. [(accessed on 31 March 2017)]; Available online: https://envisiontec.com/

- 41.Farnell element14. [(accessed on 3 February 2017)]; Available online: http://uk.farnell.com/

- 42.Edmund Optics Ltd. [(accessed on 3 February 2017)]; Available online: http://www.edmundoptics.co.uk/

- 43.Thorlabs, Inc. [(accessed on 3 February 2017)]; Available online: https://www.thorlabs.com/

- 44.Park Y., Lee J., Bae J. Development of a Wearable Sensing Glove for Measuring the Motion of Fingers Using Linear Potentiometers and Flexible Wires. IEEE Trans. Ind. Inf. 2015;11:198–206. doi: 10.1109/TII.2014.2381932. [DOI] [Google Scholar]

- 45.Oess N.P., Wanek J., van Hedel H.J. Enhancement of bend sensor properties as applied in a glove for use in neurorehabilitation settings; Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Buenos Aires, Argentina. 31 August–4 September 2010; pp. 5903–5906. [DOI] [PubMed] [Google Scholar]

- 46.ImageJ. [(accessed on 17 March 2017)]; Available online: https://imagej.nih.gov/ij/