Abstract

In the era of value-based healthcare, many aspects of medical care are being measured and assessed to improve quality and reduce costs. Radiology adds enormously to health care costs and is under pressure to adopt a more efficient system that incorporates essential metrics to assess its value and impact on outcomes. Most current systems tie radiologists’ incentives and evaluations to RVU-based productivity metrics and peer-review-based quality metrics. In a new potential model, a radiologist’s performance will have to increasingly depend on a number of parameters that define “value,” beginning with peer review metrics that include referrer satisfaction and feedback from radiologists to the referring physician that evaluates the potency and validity of clinical information provided for a given study. These new dimensions of value measurement will directly impact the cascade of further medical management. We share our continued experience with this project that had two components: RESP (Referrer Evaluation System Pilot) and FRACI (Feedback from Radiologist Addressing Confounding Issues), which were introduced to the clinical radiology workflow in order to capture referrer-based and radiologist-based feedback on radiology reporting. We also share our insight into the principles of design thinking as applied in its planning and execution.

Keywords: Imaging informatics, Quality assurance, Total quality management, Radiology workflow

Introduction

Design thinking may be defined as a strategy-making process that applies tools to shift the focus toward human behavior. The main goals of this exercise are to invent the future, test ideas, and assess whether one can create, market, and sell products based on the feedback received. The process of design thinking includes answering the questions of “what is,” “what if,” and “what works” about a project. A good design is about first understanding the users and their environment and recognizing challenges (what is). After doing deep-dive research to look for ways to address various challenges (what if), prototypes can be created to assess which ideas work (what works) and identify what features provide a compelling advantage to the users (what wows). Finally, design thinking seeks to shape the industry model in a way that materializes and executes ideas to provide solutions in the product. This article gives an account of how the principles of design thinking were incorporated into radiology reporting through two-way feedback between the radiologist and the referring physician.

Materials and Methods

We designed this project to capture the two-way feedback between the radiologist and the referring physician: RESP, which assesses the referring physician’s feedback on a radiology report, and FRACI, which evaluates the radiologist’s feedback to the referring physician on the validity, relevance, and quality of the information available to him/her during image interpretation.

This system was created using principles of human-centered design, allowing clinicians to easily provide feedback within their existing report review workflows without having to enter a new system or application to do so. Attention was focused on minimizing the number of clicks needed to provide feedback, reducing the text content needed to understand the interface, and making the required inputs minimal, while providing the opportunity to enter optional and additional text feedback. Analysis of existing user workflows for reading and responding to radiology reports, in combination with user research on the proposed language and user interface (UI) elements of the feedback mechanisms, along with leveraging best practices of survey design, ensured that the pilots were “lightweight” and that clinicians could use our application intuitively, minimizing any barriers that might have prevented them from providing feedback.

RESP was implemented as a plug-in or add-on to the image-viewing software that referring physicians use. A “thumbs up” icon immediately under the radiology report icon opens the RESP feedback window, which presents a 5-point scale, labeled qualitatively from “Very helpful” to “It was problematic,” an additional list of selectable reasons why a radiology report may not be helpful, and a comment box for physicians to provide feedback about the radiology report.

FRACI was implemented as a plug-in or add-on to the image-viewing software that radiologists use. A menu option labeled “Provide feedback” opens the FRACI feedback window, which presents a selection list of ways to help the radiologist provide a helpful report for a given radiology order and a comment box for radiologists to provide feedback about the order.
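The two feedback forms described above can be pictured as simple records. The following is only a minimal sketch in Python, not the pilots' actual schema: the class and field names are hypothetical, and the three intermediate scale labels are assumed from the categories reported in the Results, since only the two endpoint labels are quoted here.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List, Optional


class RespRating(IntEnum):
    """5-point RESP scale. Only the endpoint labels are quoted in the text;
    the intermediate names are assumptions based on the Results section."""
    PROBLEMATIC = 1       # "It was problematic"
    UNHELPFUL = 2
    NEUTRAL = 3
    SOMEWHAT_HELPFUL = 4
    VERY_HELPFUL = 5      # "Very helpful"


@dataclass
class RespFeedback:
    """One referrer response about a radiology report (hypothetical schema)."""
    report_id: str
    rating: Optional[RespRating] = None               # score may be omitted
    reasons: List[str] = field(default_factory=list)  # selectable reasons
    comment: str = ""                                 # optional free text

    def is_comment_only(self) -> bool:
        """True when the entry has a comment but no score and no reason."""
        return (self.rating is None
                and not self.reasons
                and bool(self.comment.strip()))


@dataclass
class FraciFeedback:
    """One radiologist response about a radiology order (hypothetical schema)."""
    order_id: str
    reasons: List[str] = field(default_factory=list)  # preset selection list
    comment: str = ""                                 # optional free text


example = RespFeedback(report_id="report-001", comment="Impression was unclear.")
print(example.is_comment_only())
```

Keeping the score and reasons optional in this sketch mirrors the fact that some entries in the dataset consisted of comments alone.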

The pilots were introduced to the clinical workflow throughout a large multihospital health system of 21 hospitals, about 400 clinical facilities, 400 radiologists, and 10,000 physicians and nurses. RESP and FRACI collected data starting October 12, 2015, with an interruption on RESP from March 14, 2016 to June 13, 2016 due to resource limitations. The pilots have had a few software patches that fixed technical issues dealing with web browser versions, a release that changed the back-end database technology and removed restrictions to data sources, and another release that improved data fidelity.

We tabulated and visualized the data by month, modality, and department to understand and act on areas of improvement. The RESP data was further parsed based on the helpfulness/unhelpfulness gradation scale of the feedback response. The FRACI data was further categorized based on the scope for improvement in patient care gradation of its response. Using our lightweight approach to the design of the feedback system, we received feedback that the tool was intuitive to use. According to the timestamp data collected, referring physicians took an average of 31 s to provide feedback. Response time ranged from 5 s to 5 min, for clinicians who chose to enter additional comments. The RESP dataset comprised 266 entries, while the FRACI dataset included 340 entries as of 11:59 pm on October 13, 2016.
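Tabulation by month, modality, and department amounts to counting entries along each dimension. The sketch below is illustrative only: the entries are made-up placeholders rather than pilot data, and the function name and tuple layout are assumptions.

```python
from collections import Counter
from datetime import date

# Hypothetical feedback entries: (submission date, modality, department).
# These rows are placeholders for illustration, not data from the pilots.
entries = [
    (date(2015, 10, 14), "DX", "Emergency"),
    (date(2015, 10, 20), "CT", "Emergency"),
    (date(2015, 11, 3), "CT", "Internal Medicine"),
    (date(2015, 11, 9), "DX", "Orthopedics"),
    (date(2015, 11, 21), "CT", "Emergency"),
]


def tabulate(rows):
    """Count feedback entries per month, per modality, and per department."""
    by_month = Counter(d.strftime("%Y-%m") for d, _, _ in rows)
    by_modality = Counter(modality for _, modality, _ in rows)
    by_department = Counter(dept for _, _, dept in rows)
    return by_month, by_modality, by_department


by_month, by_modality, by_department = tabulate(entries)

# Print the monthly counts in chronological order.
for month in sorted(by_month):
    print(month, by_month[month])
```

The same counters could feed a charting library for the visualizations; that step is omitted here.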

Results

RESP

Based on the referrer feedback, reports on 132 studies (50%) were deemed very helpful (the highest-scoring modality was DX with 60 entries, followed by CT with 27). Twenty-nine studies (11%) were reported as somewhat helpful. There were 31 (12%), 19 (7%), and 51 (19%) studies reported to be neutral, unhelpful, and problematic, respectively. One hundred nine entries (41%) had comments, while four of those (2%) contained only comments, without scores or reasons. See Fig. 1 for a graphical representation of the results. The average comment length was 102 characters.

Fig. 1.

This is a graphical representation of the data collected from the RESP project based on the modality and usefulness of the feedback. Also included is the graphical representation of the negative evaluation feedback
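The RESP percentages reported above can be checked with simple arithmetic. The sketch below uses the counts from the text and assumes that each percentage is taken over all 266 entries (the 262 scored entries plus the four comment-only entries) and rounded to the nearest whole number; the variable and function names are illustrative.

```python
# Counts as reported in the RESP results.
resp_counts = {
    "very helpful": 132,
    "somewhat helpful": 29,
    "neutral": 31,
    "unhelpful": 19,
    "problematic": 51,
}
comment_only = 4      # entries with a comment but no score or reason
with_comments = 109   # entries that included a comment

# Assumption: the denominator is every entry in the RESP dataset.
total = sum(resp_counts.values()) + comment_only


def pct(count, denominator):
    """Percentage rounded to the nearest whole number."""
    return round(100 * count / denominator)


percentages = {label: pct(n, total) for label, n in resp_counts.items()}

print(total)
for label, value in percentages.items():
    print(f"{label}: {value}%")
print(f"with comments: {pct(with_comments, total)}%")
print(f"comment only: {pct(comment_only, total)}%")
```

Under this assumption the computed shares agree with the reported 50%, 11%, 12%, 7%, and 19%, and with the 41% and 2% figures for comments.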

FRACI

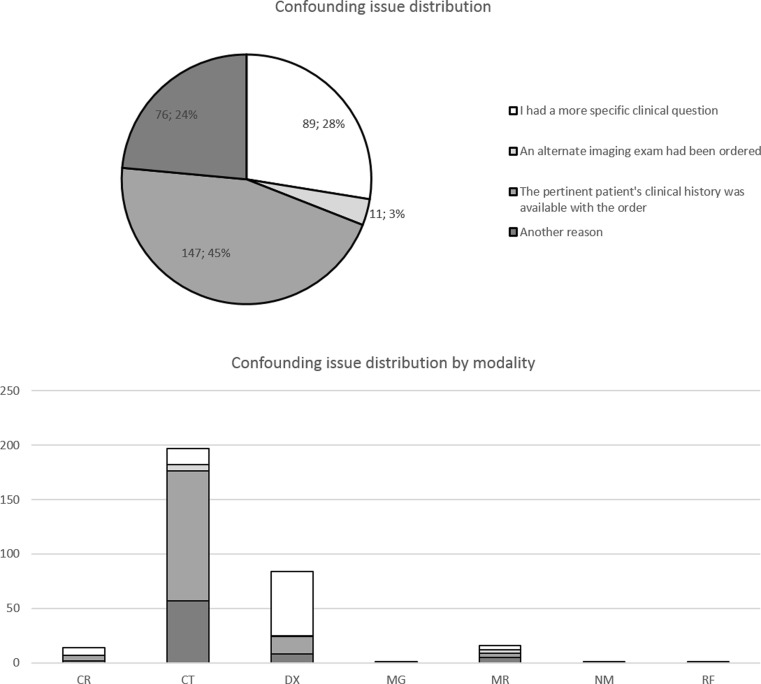

There were 147 (45%) entries reporting a lack of pertinent clinical history, 119 of which were CT reports; 89 (28%) reporting the need for more specific clinical questions; 76 (24%) reporting other reasons to improve radiology reports; and 11 (3%) reporting that an alternative study had been ordered. Two hundred and sixty (76%) had comments, while 45 of those (13%) provided only comments outside of the given reasons. See Fig. 2 for a graphical representation of the results. The average comment length was 64 characters.

Fig. 2.

This is a graphical representation of the data collected from the FRACI project based on the confounding issues encountered by modality

Discussion

The value-based healthcare model requires us to adopt methods that are geared toward improved quality and reduced waste/cost. A large share of health care expense is attributed to medical imaging. Therefore, it is imperative that radiology practices are assessed for quality, outcomes, and value.

There has been a lot of focus on error reporting in radiology practices, and errors related to positioning, incorrect accession, and laterality, among others, have been documented and published [1]. Quantifiable methods have also been attempted to provide objective feedback on radiology reporting [2]. However, the communication between the radiologist and the referring physician is of critical importance in order to ensure judicious and effective use of resources and assure optimal quality of radiology reporting. One of the two channels in this communication is the radiologist’s feedback to the referrer on the adequacy of the ordered study and the relevance and sufficiency of the information provided. Ordering a radiology study can be compared to a consult that one might request from an infectious disease specialist for a case of meningitis or from rheumatology for systemic lupus erythematosus. This means that the reason for the consult (exam) has to be clearly indicated, along with a working diagnosis (when available) and a discrete clinical question that needs to be answered. One of the most common concerns that radiologists raise is the lack of provided information and of a clearly delineated clinical question. This can lead to significant confusion on the radiologist’s part, leading to potential errors in reporting and inefficiency in terms of extra time spent on acquiring the needed information from the patient’s chart. The radiologist’s feedback in terms of completing the loop, when it comes to the completion of the study, is also of immense importance. Automated and standardized methods to ensure prompt notification of radiology findings have also been shown to increase efficiency in radiology practices [3].

On the other hand, like any service provider, a radiologist’s performance is dependent on feedback provided by the ordering physician on the radiology report itself. In the medical community, there lingers a sense of disconnect and lack of understanding between the referrer and the radiologist [4]. In a large multihospital system where radiology interpretations tend to be decentralized, this can be further accentuated, leading to hedging and guesswork on the radiologist’s part and lack of confidence in the reports by referring physicians. The end result is over-ordering of radiology studies and underutilization of the information within reports. This is a significant problem, especially given that radiologic procedures add immensely to healthcare costs. Another major consequence is compromised patient management. There have been calls for “non-traditional” approaches to improve clinical visibility of radiologists, some of which focus on direct patient communication [4]. Attempts have also been made to implement structured feedback systems for referring physicians to identify problems in radiology practices [5]. One remedial approach to address the issue of report quality, advocated by Dr. Daniel Rubin, is to create a standard terminology (RadLex) to improve clarity and reduce errors and variation [6].

Our pilots, RESP and FRACI, capture essential data that allow us to identify key issues in the communication between the radiologist and the referrer, which, as we understand, needs to be clear and adequate for radiology to be properly utilized as a consult service (Figs. 3 and 4).

Fig. 3.

Iterations of the RESP workflow as encountered by the user

Fig. 4.

Iterations of the FRACI workflow as encountered by the user

The initial results from the RESP were consistent with previous findings from other institutions, which suggested that referrer satisfaction on radiology reports ranges from 65 to 79% [7–11]. Diagnostic studies like plain film radiographs followed by CT were the highest in the satisfaction score. FRACI results were also consistent with the expected trends of lack of pertinent history being the major concern of radiologists in general. The disproportionately high responses for CT exams in the FRACI pilot may be explained by the increased level of complexity of clinical history of the patient undergoing the CT exam. One suggestion is to create a clinical synopsis for the reporting radiologists as a remedy for this problem in general [12].

We observed that usage went down to nine entries in month 5 from a peak of 64 entries in month 2 after launch. We suspected that this was due to a lack of follow-up on the data analysis and report. After our first attempt at establishing a follow-up process after feedback, we have been able to bring usage up to a plateau of about 15 entries per month. Continued use of RESP promotes a culture of openness, and referring physicians are actually using it. More in-person marketing by going to the departmental meetings and promoting the tool may help increase usage. It is also possible that a large portion of physicians are still concerned about legal ramifications of providing feedback.

Design Thinking as Applied to the Two-Way Feedback System

What Is?

Currently, only the relative value unit (RVU) system is used to measure this value; it weighs the complexity of the imaging exam interpreted by the radiologist, the radiologist’s productivity, and the radiologist’s compensation per RVU. This is still a heavily volume-based metric. Through this feedback system, we seek to develop a more quality-based metric. Referring physicians underscored the need for improvements to radiology reporting, yet the feedback had a wide-ranging spectrum and was not associated with any specific tangible report on which a radiologist could reflect. On the other hand, radiologists felt that there were times when they were not provided sufficient information about the patient or case and therefore were not able to provide the best quality radiology report they could have. Finding the essential clinical information to provide a report of basic utility to the referring physician often takes non-productive time that can be avoided if the clinical synopsis is provided in the first place.

What If?

What if we can measure the improvement of radiology report quality over time, especially after certain behavioral changes were made, either through departmental policies, radiologist exposure to feedback on their report quality, or through self-motivation? What if the radiologist is able to better understand which aspects of his/her report need improvement, by virtue of a specific and granular feedback as opposed to a “blanket” feedback to the entire radiology department?

What Wows?

Confidence and reliability are often lacking between the radiologist and the referring healthcare professionals. This gap can be bridged first by understanding the specific issues with particular reports and then by acting to remedy future problems, which in turn will help improve the quality and the credibility of radiologists’ reports. The feedback system also functions as a tool that allows radiologists to communicate with the referrer in order to understand the reason for the exam so as to be able to answer the clinical question appropriately. It allows the radiologists to screen incorrectly ordered studies and therefore reduce waste and iatrogenic issues such as excess radiation exposure. Also, a better understanding of the clinical question may allow for a more suitable study or an adjusted protocol to yield the best information from the radiology scan and address the patient’s needs to help improve the overall experience.

What Works?

Referring physicians provide feedback to radiologists about their radiology reports using a 5-point scale, labeled qualitatively from “Very helpful” to “It was problematic,” and further provide specific reasons why a radiology report may not be helpful. Meanwhile, radiologists provide feedback to referring physicians about the quality of information available to them during image interpretation using a combination of preset reasons and free-text comments. We then tabulated and visualized the data by month, modality, and department to understand and act on areas of improvement. This system was created using principles of human-centered design, allowing clinicians to easily provide feedback within their existing report review workflows without having to enter a new system or application to do so. Attention was focused on minimizing the number of clicks needed to provide feedback, reducing the text content needed to understand the interface, and making the required inputs minimal, while providing the opportunity to enter optional and additional text feedback. Analysis of existing user workflows for reading and responding to radiology reports, in combination with user research on the proposed language and UI elements of the feedback mechanisms, along with leveraging best practices of survey design (including a 5-point Likert scale), ensured that the pilots were “lightweight” and that clinicians could provide feedback easily and quickly. Through research, we recognized that referring physicians and interpreting radiologists were concerned about possible legal ramifications of providing feedback through a software system. By using design research methods to understand user concerns and by disseminating system learning materials to clinicians, we ensured that users were aware that the feedback would not go to the patient’s electronic medical record (EMR).

Our user experience research, combined with our lightweight design, enabled the referring physicians and interpreting radiologists to intuitively use our application, minimizing any barriers that may be holding them back from providing feedback. We are investigating why usage is not as high as it could be and why it has waned. We suspect it is because we have not conducted in-person marketing of the system, which could help system adoption, or because we have not established a system to follow up on the collected data with department heads. Dissemination of these data can help departmental chiefs understand quality issues in their departments. It would be best to have a dedicated clinical expert to follow up and act on the data, as this person would have the experience, tools, and relationships to push the system further and establish departmental quality goals based on the data.

During the design phase, we performed some of the activities within the design thinking framework, some with specific documentation, others embedded in the collective artifacts and philosophy (Fig. 5). The following are the notable activities:

Visualization—Our designer created concept work and wire frames throughout the research, development, and marketing processes to improve communication during those phases.

Journey mapping—The team discussed the information flow and user journey and made design decisions based on those discussions.

Brainstorming—The team had a few brainstorming sessions to establish the requirements and specifications.

Concept development—This was an iterative process. Again, our designer’s wire frames helped in this regard.

Fig. 5.

A schematic illustration of the design thinking principles as applied to the two-way feedback system

These screenshots illustrate the use of visualization and the iterative process of concept development (figures 7–14). Note the change in text content and icon locations as we collected feedback from radiologists and referring physicians. This illustration sheds light on the design thinking approach applied to this project (figure 15).

In the realm of medical imaging, there are many opportunities to redesign the current models that are outdated and poorly equipped to tackle the challenges of a value-based model [13]. This provides a tremendous opportunity for us to incorporate principles of design thinking to facilitate the transition from volume-based to value-based healthcare. Challenges in communication begin with the ordering physician and the radiologist. Confidence and reliability are lacking between these two groups of medical professionals. When thinking about “What wows,” there needs to be a focus on bridging this gap by avoiding typographical and grammatical errors, avoiding equivocal hedging terms, using definitive terms to describe relevant information, and using evidence from the medical literature to support the impression of the report, all of which can help improve dialog. These improvements will go a long way in improving the quality and the credibility of radiologists’ reports. It is critically important for the radiologists to communicate with the referrer in order to understand the reason for the exam so as to be able to answer the clinical question. This communication allows the radiologists to screen incorrectly ordered studies and therefore reduce waste and iatrogenic issues such as excess radiation exposure. It also provides the opportunity to address patient-related issues such as pain and other clinical conditions that can be addressed to improve patient experience. Finally, a system that ensures completion of the feedback loop by letting the radiologist follow up on the patient with pathology correlation, among others, lays the foundation of a robustly integrated clinical system that is bound to improve outcomes. All pilots should be introduced in a multi-tier learning launch scheme, whereby we collect data and review it to derive results that drive change in our radiology practice.

Conclusion

Based on the data derived from this system thus far, lack of pertinent clinical information was by far the predominant concern of the radiologist, alongside other issues such as incorrectly ordered scans. The referrers found most of the radiology reports very useful, especially the basic diagnostic radiography studies; however, there were concerns related to the reports not narrowing down the diagnosis and disagreement on the recommendations made. Feedback systems such as these will be key in the value assessment of radiology reporting in the era of value-based healthcare, and having a design thinking-based approach will allow us to identify the real issues and address them methodically to help achieve the intended outcomes.

References

- 1. Golnari P, Forsberg D, Rosipko B, Sunshine J. Online error reporting for managing quality control within radiology. J Digit Imaging. 2015. doi: 10.1007/s10278-015-9820-6.

- 2. Scott J, Palmer E. Radiology reports: a quantifiable and objective textual approach. Clinical Radiology. 2015;70:1185–e1191. doi: 10.1016/j.crad.2015.06.080.

- 3. Georgiou A, Hordern A, Dimigen M, et al. Effective notification of important non-urgent radiology results: a qualitative study of challenges and potential solutions. Journal of Medical Imaging and Radiation Oncology. 2014;58(3):291–297. doi: 10.1111/1754-9485.12156.

- 4. Gunn A, Mangano M, Choy G, Sahani D. Rethinking the role of the radiologist: enhancing visibility through both traditional and nontraditional reporting practices. RadioGraphics. 2015;35:416–423. doi: 10.1148/rg.352140042.

- 5. Gunn A, Alabre C, Bennett S, Kautzky M, Krakower T, Palamara T, Choy G. Structured feedback from referring physicians: a novel approach to quality improvement in radiology reporting. AJR. 2013;201:853–857. doi: 10.2214/AJR.12.10450.

- 6. Rubin DL. Creating and curating a terminology for radiology: ontology modeling and analysis. J Digit Imaging. 2008;21(4):355–362. doi: 10.1007/s10278-007-9073-0.

- 7. Gunn AJ, Sahani DV, Bennett SE, Choy G. Recent measures to improve radiology reporting: perspectives from primary care physicians. J Am Coll Radiol. 2013;10(2):122–7. doi: 10.1016/j.jacr.2012.08.013.

- 8. Gunn AJ, Mangano MD, Pugmire BS, Sahani DV, Binder WD, et al. Toward improved radiology reporting practices in the emergency department: a survey of emergency department physicians. J Radiol Radiat Ther. 2013;1(2):1013.

- 9. Johnson AJ, Ying J, Littenberg B. Improving the quality of radiology reporting: a physician survey to define the target. Journal of the American College of Radiology. 2004;1(7):497–505. doi: 10.1016/j.jacr.2004.02.019.

- 10. Grieve FM, Plumb AA, Khan SH. Radiology reporting: a general perspective. Br J Radiol. 2010;83(985):17–22. doi: 10.1259/bjr/16360063.

- 11. Obara P, Sevenster M, Chang P, et al. Evaluating the referring physician’s clinical history and indication as a means for communicating chronic conditions that are pertinent at the point of radiologic interpretation. Journal of Digital Imaging. 2015;28(3):272–282. doi: 10.1007/s10278-014-9751-7.

- 12. Cohen M, Alam K. Radiology clinical synopsis: a simple solution for obtaining an adequate clinical history for the accurate reporting of imaging studies on patients in intensive care units. Pediatr Radiol. 2005;35:918–922. doi: 10.1007/s00247-005-1491-x.

- 13. Perreira AG, et al. Solutions in radiology services management: a literature review. Radiol Bras. 2015;48(5).