Abstract

With ongoing healthcare payment reforms in the USA, radiology is moving from its current state as a revenue-generating department to a new reality as a cost-center. Under bundled payment methods, radiology is not reimbursed for each inpatient procedure; rather, the hospital is reimbursed for the entire hospital stay under an applicable diagnosis-related group code. The hospital case mix index (CMI) metric, as defined by the Centers for Medicare and Medicaid Services, has a significant impact on how much hospitals are reimbursed for an inpatient stay. Oftentimes, patients with the highest disease acuity are treated in tertiary care radiology departments. Therefore, the average hospital CMI based on the entire inpatient population may not be adequate to determine department-level resource utilization, such as the number of technologists and nurses, as case length and staffing intensity get quite high for sicker patients. In this study, we determine CMI for the overall radiology department in a tertiary care setting based on inpatients undergoing radiology procedures. Between April and September 2015, CMI for radiology was 1.93. With an average of 2.81, interventional neuroradiology had the highest CMI of the ten radiology sections. CMI was higher than the average hospital CMI of 1.81 in seven of the ten radiology sections. Our results suggest that inpatients undergoing radiology procedures were on average more complex in this hospital setting during the time period considered. This finding is relevant for accurate calculation of labor analytics and other predictive resource utilization tools.

Keywords: Bundled payments, Case mix index, MS-DRG codes, Radiology informatics, Radiology reimbursements

Introduction

There are multiple healthcare payment reforms currently underway in the USA, with a strong focus on integrated care delivery. In 2014, US healthcare spending reached $3 trillion, accounting for 17.5% of GDP, and is projected to be close to 20% of GDP by 2024 [1]. With such increases in healthcare-related spending, there is a significant trend away from the traditional fee-for-service model towards alternative reimbursement models in an attempt to contain or drive down costs.

In the traditional fee-for-service payment model, providers are reimbursed by insurers for each service item provided. There is some evidence that physicians routinely order unnecessary tests, procedures, or treatments [2, 3], and unnecessary imaging alone is reported to waste at least $7 billion annually in the USA [4]. Since each service is reimbursed, hospitals have no major incentive to minimize the costs associated with these tests, while the insurer carries an open-ended economic risk because there is no limit on the number of services that can be ordered when treating a patient. On the other hand, with capitated payment models, the economic risks shift to the hospital since the hospital only gets reimbursed a fixed amount to treat a specific condition [5].

The Centers for Medicare & Medicaid Services (CMS), which administers the Medicare and Medicaid programs that collectively provide health insurance to over 50 million Americans, recently announced its intention to move towards greater value-based care rather than continuing to reward volume regardless of the quality of care delivered. To this end, CMS has set a goal of moving 50% of Medicare payments into alternative payment models, such as Accountable Care Organizations (ACOs) and bundled payments, by the end of 2018 [6]. As part of this initiative, CMS introduced a new payment model on 1 April 2016 for hip and knee replacements in which hospitals are held accountable for the quality of care delivered. The hospitals may be rewarded with additional Medicare payments for good quality and spending performance or be required to repay Medicare for poor quality services. Similarly, since around 2011, various radiology procedures have been paid under “bundled codes” when two or more related studies are performed together. The American College of Radiology routinely monitors changes to radiology-related payments and recently reported that bundled code payments are falling short of the payment levels of the predecessor codes and values. For instance, computed tomography (CT) abdomen-pelvis without contrast exams were paid at $418.43 prior to the use of bundled codes; under the bundled payment model, this was reduced to $306.05 in 2013 and further reduced to $241.79 in 2014 [7].

With such changes to reimbursements, and in an attempt to reduce costs associated with unnecessary imaging, radiology is being set up to shift from one of the primary profit-centers for a hospital to a cost-center. Radiology departments are increasingly asked to do more with less annual budget and to remain competitive while managing bottom lines. As such, radiology departments need to optimize quality of care, patient experience, outcomes, efficiency, and throughput while reducing costs.

Hospital labor is typically the single largest component of the operating cost of hospitals, accounting for 50% or more of expenses [8]. Reducing labor costs is therefore often one of the first measures considered when trying to minimize costs, although determining optimal labor is not a trivial task. In the context of radiology, various factors such as the nature of the hospital, type of procedures performed, and patient demographics need to be taken into account when determining the optimal number of technologists, nurses, patient transporters, technologist aides, and other support staff. Due to the complexities involved, it is usual to take a data-driven approach where benchmarks and averages are used to compare hospitals with other similar facilities to determine optimal staffing and thresholds. For instance, a hypothetical benchmark may suggest that, on average, 24 CT studies should be performed on a machine per day, assuming 20 min per study and an 8-h shift. In the absence of granular, department/section-specific national metrics, such benchmark values are usually weighted using hospital-level national metrics as determined by CMS: for inpatient settings, the hospital’s case mix index (CMI) [9] is widely used, whereas Ambulatory Payment Classifications (APC) [10] are often used for outpatient settings. These metrics are determined at a hospital-wide level and do not necessarily reflect the actual patients seen at the department level. In this paper, we hypothesize that CMI can be calculated at a more granular level than the hospital level. We argue that hospital-wide generic measures may not sufficiently represent the case complexity certain radiology areas actually face, may significantly underestimate staffing requirements, and may even indicate low resource utilization for a section that is running at or close to full capacity.

Methods

In this section, we discuss two common measures used for benchmarking followed by details on the setting and dataset we used to evaluate our hypothesis.

Case Mix Index

A hospital’s CMI reflects the clinical complexity of its inpatient population and is a measure of the relative costs and resources needed to treat its patient mix. When a patient is discharged from a hospital, the patient stay is assigned one Medicare severity-diagnosis-related group (MS-DRG) code based on primary and secondary diagnosis, procedures, age, gender, and discharge status. MS-DRGs have numeric weights assigned to them by CMS reflecting national average hospital resource consumption by patients for that MS-DRG relative to national average hospital resource consumption by all patients; the higher the weight, the higher the expected resource consumption [9]. Once the MS-DRGs are known, CMI can be calculated for a given patient cohort and time period by averaging the MS-DRG weights of the discharges in that cohort. Although MS-DRGs are defined for Medicare patients only, it is common to use the same weights to calculate CMI for all inpatients (even if their insurance provider is not Medicare). CMI is typically determined at the hospital level, as opposed to the department level, and is published at least once a year by CMS. The average hospital CMI is around 1.37 (range 0.58–3.73, n = 3619) [11].
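
Expressed as a formula, the calculation described above can be sketched as follows. The symbols are illustrative notation and are not taken from CMS: $N$ is the number of discharges in the cohort for the chosen time period, and $w_i$ is the MS-DRG relative weight assigned to discharge $i$.

```latex
\mathrm{CMI} = \frac{1}{N} \sum_{i=1}^{N} w_i
```

Under this reading, a cohort of two discharges with MS-DRG weights of 1.0 and 2.0 would have a CMI of 1.5.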

Given that even seemingly small changes in CMI can have a large effect on the bottom line, it is important to track CMI over time and take action if needed. Although the CMI calculation is relatively straightforward, to the best of the authors’ knowledge, service line leaders of hospital departments, such as the Chair or Director of Radiology, Cardiology, or Oncology, do not have access to department-level CMI, let alone the more granular section-specific CMI. Almost all reporting systems provide routine reports that contain billing exam volume for each section as well as various other reports related to daily operations. However, CMI reports are not part of routine reporting. Extracting MS-DRG codes from a hospital billing system to calculate CMI is not a trivial task, as it requires a significant understanding of the underlying billing system’s database schema as well as domain knowledge to determine the cohort of inpatients who had exams performed in the department of interest.

A hospital’s CMI is used to adjust the payment the hospital receives per patient. For example, if hospital “A” has a CMI of 1 and hospital “B” has a CMI of 2, the average patient in hospital “B” is expected to cost twice as much to treat as the average patient in hospital “A”. The exact hospital payment calculation for a stay is rather complex, but the basic hospital payment for any admission is the DRG weight multiplied by a conversion factor, where the dollar amount associated with the conversion factor is determined by the insurance provider based on factors such as the CMI, local wage index, type of facility, the number of low-income patients, and so forth [12].
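
The basic payment relationship described above can be illustrated with a minimal sketch. The function name `basic_payment` and the $5000 conversion factor are assumptions made for illustration only (the figure mirrors the illustrative base rate used in the Discussion and is not the study hospital’s actual rate); the DRG weight of 1.5299 is the one listed for DRG code 658 in Table 1.

```python
def basic_payment(drg_weight: float, conversion_factor: float) -> float:
    """Basic payment for one admission: DRG weight times the conversion factor."""
    return drg_weight * conversion_factor


# Hypothetical conversion factor in dollars; actual values are payer-specific.
CONVERSION_FACTOR = 5000.0

# DRG weight 1.5299 is the weight listed for DRG code 658 in Table 1.
payment = basic_payment(1.5299, CONVERSION_FACTOR)
print(f"Basic payment for DRG 658: ${payment:,.2f}")

# Average payment per admission scales with CMI (the mean DRG weight).
avg_a = basic_payment(1.0, CONVERSION_FACTOR)  # hospital "A", CMI of 1
avg_b = basic_payment(2.0, CONVERSION_FACTOR)  # hospital "B", CMI of 2
print(f"Hospital B / hospital A average payment: {avg_b / avg_a:.1f}")
```

The sketch deliberately ignores the adjustments that make the real calculation complex, such as the wage index and facility type.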

Ambulatory Payment Classification System

The Ambulatory Payment Classification system, commonly referred to as APC, is the government’s method of paying facilities for Medicare-related outpatient services, analogous to MS-DRG for inpatients. As with MS-DRGs, rendered outpatient services are coded using APC, and hospitals are paid by multiplying the APC relative weight by a conversion factor with a minor adjustment for geographic location. For example, treatment of toe fracture (procedure code 28525) has an APC weight of 32.4936, whereas an X-ray exam of facial bones (procedure code 70140) has an APC weight of only 0.8247, indicating that the former is a much more complex procedure [13]. APCs were created to transfer some financial risk for the provision of outpatient services from the Federal government to hospitals, thereby incentivizing hospitals to provide outpatient services economically, efficiently, and profitably [10, 14]. As such, APC payments are fixed payments to the hospital, and the hospital is at risk for potential profit or loss with each payment it receives. APC payments apply to outpatient surgery, outpatient clinics, observation services, and outpatient testing such as radiology.
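
To make the comparison between the two example weights concrete, the following sketch applies the relationship described above. The function name `apc_payment`, the $75 conversion factor, and the treatment of the geographic adjustment as a single multiplier are all simplifying assumptions for illustration, not the CMS calculation; only the two relative weights come from the text.

```python
def apc_payment(relative_weight: float, conversion_factor: float,
                geographic_adjustment: float = 1.0) -> float:
    """APC relative weight times conversion factor, with a simplified
    multiplicative geographic adjustment (1.0 means no adjustment)."""
    return relative_weight * conversion_factor * geographic_adjustment


# APC relative weights quoted in the text above.
TOE_FRACTURE_WEIGHT = 32.4936
FACIAL_XRAY_WEIGHT = 0.8247

# Hypothetical conversion factor in dollars, for illustration only.
CONVERSION_FACTOR = 75.0

toe_payment = apc_payment(TOE_FRACTURE_WEIGHT, CONVERSION_FACTOR)
xray_payment = apc_payment(FACIAL_XRAY_WEIGHT, CONVERSION_FACTOR)
weight_ratio = TOE_FRACTURE_WEIGHT / FACIAL_XRAY_WEIGHT

print(f"Toe fracture treatment: ${toe_payment:,.2f}")
print(f"Facial bones X-ray:     ${xray_payment:,.2f}")
print(f"Weight ratio:           {weight_ratio:.1f}")
```

Whatever conversion factor is assumed, the ratio of the two payments equals the ratio of the weights, which is between 39 and 40 for these two procedures.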

Clinical Setting and Data Collection

In this study, we chose to profile Lahey Hospital and Medical Center, the tertiary care facility of an integrated care delivery network based in Burlington, Massachusetts [15]. Working specifically with the radiology department, we extracted data using routine operational reports produced by the enterprise electronic medical record system (Epic, Madison, WI [16]), to determine the distribution of the inpatient and outpatient exams.

We first extracted operational data for the 6-month period from 1 Apr. 2015 to 30 Sep. 2015 for all radiology exams performed at the main hospital in Burlington, Massachusetts. An “exam” is identified by a unique accession number. The dataset included exam start and end times, procedure descriptions, patient class, accession numbers, and other exam-related fields; it excluded protected health information such as patient name, age, and date of birth [17]. This dataset contained data for 126,834 exams (30,160 inpatient exams, 73,765 outpatient exams, and 22,909 emergency exams) performed across 13 different modalities in radiology (such as computed tomography, magnetic resonance imaging, and ultrasound) for 10 radiology sections (i.e., cost-centers). Certain modalities are associated with the same section/cost-center; for instance, the bone density, fluoroscopy, and X-ray modalities are all part of diagnostic radiology.

On average, 24% of the department’s exams were performed on inpatients, 58% on outpatients, and 18% on emergency patients. Given that inpatients account for almost a quarter of all exams, the Radiology Chair (CW) and Head of Interventional Radiology (SF) agreed that the capability to determine department and section-specific CMIs would help quantify the complexity of the inpatients treated in the department and show whether department/section-specific CMI is higher than the general hospital CMI published by CMS. Determining exam volume by patient class is relatively straightforward using routine operational reports, whereas calculating CMI requires extracting additional information that is not collected routinely; as such, the exam volume analysis was performed first, prior to extracting any additional data.
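
The patient-class percentages follow directly from the exam counts reported for the dataset. A minimal sketch of that arithmetic is shown below; the variable names are illustrative, and the counts are the ones stated above.

```python
# Exam counts by patient class for 1 Apr. 2015 to 30 Sep. 2015, as reported above.
exam_counts = {
    "inpatient": 30160,
    "outpatient": 73765,
    "emergency": 22909,
}

total_exams = sum(exam_counts.values())

# Share of each patient class, rounded to the nearest whole percent.
shares = {
    patient_class: round(100 * count / total_exams)
    for patient_class, count in exam_counts.items()
}

print(f"Total exams: {total_exams:,}")
for patient_class, share in shares.items():
    print(f"{patient_class:>10}: {share}%")
```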

Calculating CMI required formulating queries to extract billing data related to hospital discharges from the enterprise electronic medical record (EMR) for the same time period for patients who had inpatient radiology procedures. As previously mentioned, billing for inpatients occurs at a hospital account level, meaning that an entire hospital stay for a given patient will be billed under a single hospital-specific account, irrespective of the number of procedures performed. After formulating the necessary queries, we extracted all transaction-level data for all patients who had radiology procedures. This dataset contained the hospital account number, procedure-related fields (including accession number, procedure code, procedure description, performing section, and exam end date-time) as well as the Medicare DRG code, relative weight, and description. The dataset was then filtered for inpatients only. This resulted in 13,997 inpatient-section combinations belonging to 7704 unique hospital accounts; note that a single admission will often result in multiple exams, and a given hospital account number can be associated with more than one performing section. A selected subset of the fields for an illustrative account is shown in Table 1. DRG code 658 in Table 1 corresponds to the DRG description “kidney & ureter procedures for neoplasm w/o cc/mcc,” and patient class 101 is coded as “inpatient.”

Table 1.

Selected fields for a fictitious patient

| Exam end date-time | Procedure code | Procedure description | Section name | Hospital account number | Accession number | DRG code | DRG weight | Patient class |
|---|---|---|---|---|---|---|---|---|
| 2015-04-30 19:51 | IMG11117 | CT chest W contrast | Bur CT scan | 1234567 | 121212 | 658 | 1.5299 | 101 |
| 2015-05-01 08:40 | IMG20065 | FL more than 1 h | Bur diagnostic rad | 1234567 | 232323 | 658 | 1.5299 | 101 |
| 2015-05-01 11:04 | IMG14137 | US performed in OR | Bur ultrasound | 1234567 | 343434 | 658 | 1.5299 | 101 |
| 2015-05-01 13:00 | IMG10031 | XR chest 1 VW | Bur diagnostic rad | 1234567 | 454545 | 658 | 1.5299 | 101 |
| 2015-05-01 13:04 | IMG10252 | XR portable abdomen | Bur diagnostic rad | 1234567 | 565656 | 658 | 1.5299 | 101 |

Overall CMI for the department is calculated by summing the DRG weights for all inpatients and then dividing this value by the number of unique hospital account numbers. Similarly, section-level CMI is calculated by summing the DRG weights for all inpatients for a given section and then dividing this value by the number of unique hospital account numbers for the same section. Since the same hospital account number can belong to multiple sections when a patient has procedures across different sections, the total account count across all sections is higher than the count at the department level. When multiple exams were associated with the same hospital account number, we used the month of the most recent exam as the billing month. For instance, the DRG weight associated with the CT exam for the patient illustrated in Table 1 will contribute towards May’s CMI for section Bur CT scan, even though the CT exam itself ended on 30 April.
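
The calculation described above can be sketched in a few lines of Python. This is one possible reading of the description, not the queries actually used in the study: it assumes each hospital account contributes its DRG weight once at the department level and once per section it appears in, and it assumes the billing month is taken from the account’s most recent exam across all sections. The first five rows are copied from Table 1; the last row (account 7654321, weight 2.0) is hypothetical and included only so that the averages are not trivial.

```python
from collections import defaultdict
from datetime import datetime

# (exam end date-time, section name, hospital account number, DRG weight)
# Inpatient rows only. The first five rows come from Table 1.
EXAMS = [
    ("2015-04-30 19:51", "Bur CT scan", "1234567", 1.5299),
    ("2015-05-01 08:40", "Bur diagnostic rad", "1234567", 1.5299),
    ("2015-05-01 11:04", "Bur ultrasound", "1234567", 1.5299),
    ("2015-05-01 13:00", "Bur diagnostic rad", "1234567", 1.5299),
    ("2015-05-01 13:04", "Bur diagnostic rad", "1234567", 1.5299),
    # Hypothetical second account, added for illustration only.
    ("2015-05-02 09:15", "Bur CT scan", "7654321", 2.0),
]


def billing_months(exams):
    """Map each hospital account to the month (YYYY-MM) of its most recent exam."""
    latest = {}
    for end, _section, account, _weight in exams:
        ts = datetime.strptime(end, "%Y-%m-%d %H:%M")
        if account not in latest or ts > latest[account]:
            latest[account] = ts
    return {account: ts.strftime("%Y-%m") for account, ts in latest.items()}


def department_cmi(exams):
    """Sum one DRG weight per hospital account, divided by the account count."""
    weights = {account: weight for _end, _section, account, weight in exams}
    return sum(weights.values()) / len(weights)


def monthly_section_cmi(exams):
    """CMI per (billing month, section), counting each account once per group."""
    months = billing_months(exams)
    groups = defaultdict(dict)
    for _end, section, account, weight in exams:
        groups[(months[account], section)][account] = weight
    return {key: sum(w.values()) / len(w) for key, w in groups.items()}


print(f"Department CMI: {department_cmi(EXAMS):.4f}")
for (month, section), cmi in sorted(monthly_section_cmi(EXAMS).items()):
    print(f"{month}  {section:<20} {cmi:.4f}")
```

Note that the CT exam for account 1234567 ended in April but is grouped under May, because that account’s most recent exam ended on 1 May.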

Results

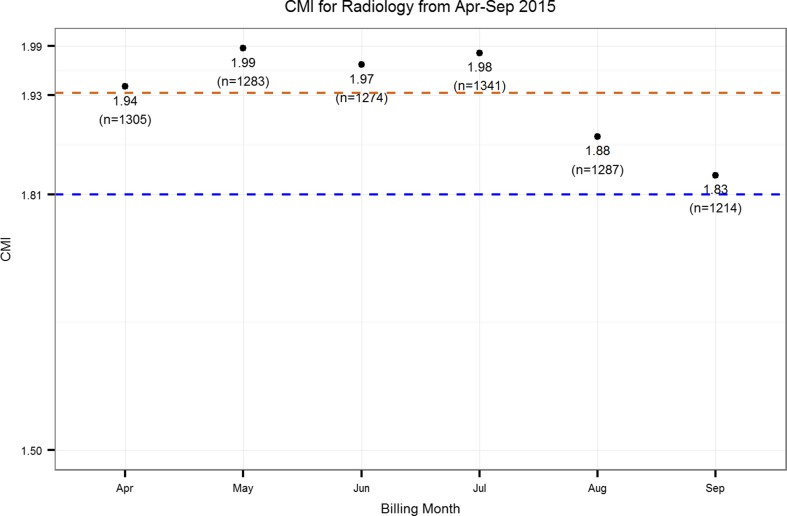

Using the dataset exemplified in Table 1, we calculated CMI for the radiology department. Figure 1 shows how CMI has varied from April to September. As determined by CMS, the hospital CMI for 2015 was 1.81, which is also shown in Fig. 1. Overall CMI for the department was 1.93.

Fig. 1.

CMI for radiology from Apr–Sep 2015. The number of hospital accounts for each month is shown in parentheses

We also computed CMI for the ten sections within radiology. These are shown in Fig. 2.

Fig. 2.

CMI for sections within radiology compared to overall hospital CMI. The number of hospital accounts for each section is shown in parentheses

Average CMI for the different Radiology sections is shown in Table 2.

Table 2.

Average CMI for sections within radiology

| Section name | Average CMI | Hospital account count (n = 13,997) |
|---|---|---|
| Bur interv neuro rad | 2.81 | 114 (<1%) |
| Bur interv radiology | 2.46 | 599 (4%) |
| Bur vascular lab | 2.34 | 837 (6%) |
| Bur ultrasound | 2.10 | 1366 (10%) |
| Bur diagnostic rad | 2.07 | 6236 (45%) |
| Bur PET-CT scan | 2.07 | 15 (<1%) |
| Bur MRI | 1.85 | 1156 (8%) |
| Bur CT scan | 1.81 | 3455 (25%) |
| Bur nuclear med | 1.60 | 212 (<2%) |
| Bur mammography | 1.49 | 7 (<1%) |
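
The comparison between the section values in Table 2 and the hospital CMI can be reproduced with a short sketch. The data structure and variable names below are illustrative; the CMI values and account counts are copied from Table 2, and the hospital CMI of 1.81 is the value reported above.

```python
HOSPITAL_CMI = 1.81

# Section name -> (average CMI, hospital account count), copied from Table 2.
SECTIONS = {
    "Bur interv neuro rad": (2.81, 114),
    "Bur interv radiology": (2.46, 599),
    "Bur vascular lab": (2.34, 837),
    "Bur ultrasound": (2.10, 1366),
    "Bur diagnostic rad": (2.07, 6236),
    "Bur PET-CT scan": (2.07, 15),
    "Bur MRI": (1.85, 1156),
    "Bur CT scan": (1.81, 3455),
    "Bur nuclear med": (1.60, 212),
    "Bur mammography": (1.49, 7),
}

# Total number of account-section combinations across all sections.
total_accounts = sum(count for _cmi, count in SECTIONS.values())

# Sections whose average CMI is strictly above the hospital CMI.
above_hospital = [name for name, (cmi, _n) in SECTIONS.items() if cmi > HOSPITAL_CMI]

print(f"Account-section combinations: {total_accounts:,}")
print(f"Sections above hospital CMI:  {len(above_hospital)} of {len(SECTIONS)}")
for name in above_hospital:
    cmi, count = SECTIONS[name]
    print(f"  {name:<22} {cmi:.2f}  ({100 * count / total_accounts:.1f}% of accounts)")
```

With a strict comparison, Bur CT scan (1.81) is counted as equal to, rather than above, the hospital CMI.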

Discussion

CMI is a measure widely used to benchmark hospitals, but it is also used to drive a variety of management decisions, including determining optimal staffing levels and other resource allocation calculations. With ongoing changes to healthcare reimbursements, hospitals are increasingly asked to do more with less annual budget. Given that labor is the highest contributor to overall costs, reducing labor may be one of the first options most administrators would consider. In this paper, we argue that patient complexity at a department or section level, rather than at the hospital level, may need to be factored into such decisions in order to avoid compromising quality of care and to facilitate optimal workflow.

A key strength of the work discussed herein is that we have presented a novel application of a well-known, widely used measure to determine the complexity of the patients treated at a granular level, with a focus on radiology and its sections. CMI has a direct impact on reimbursements, and it has been reported [18] that small changes in CMI can have large financial implications for hospitals. For instance, even a seemingly small decrease of 0.1 in CMI for a hospital with a base rate of $5000 per relative weight will result in the hospital receiving $500 less per discharge, which can amount to millions of dollars when aggregated over the number of annual discharges. Given that CMI is a surrogate measure of hospital-level compensation, looking at CMI per department could offer valuable insights into allocating the limited resources available within a hospital. In the given example, the observed average CMI of 1.93 for the radiology department is approximately 7% higher than the average hospital CMI of 1.81, with an even higher CMI in the interventional sections of that department. If expense reduction methods are employed to adjudicate whether staffing levels are commensurate with clinical load in various departments, it may be important not to simply apply the average hospital-level CMI to a specific department, but to examine the actual patient complexity that department is facing as well.
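
The arithmetic behind these figures can be written out as a short worked example. The annual discharge count $D = 10{,}000$ is a hypothetical value chosen purely for illustration; the remaining numbers come from the paragraph above.

```latex
\begin{align*}
\Delta\text{payment per discharge} &= \Delta\mathrm{CMI} \times \text{base rate} = 0.1 \times \$5000 = \$500 \\
\Delta\text{annual payment} &= \$500 \times D = \$500 \times 10{,}000 = \$5{,}000{,}000 \\
\frac{\mathrm{CMI}_{\text{radiology}} - \mathrm{CMI}_{\text{hospital}}}{\mathrm{CMI}_{\text{hospital}}} &= \frac{1.93 - 1.81}{1.81} \approx 0.066
\end{align*}
```

The last line corresponds to the difference of approximately 7% quoted above.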

Figure 1 shows that radiology department CMI was consistently higher than hospital CMI on a month-over-month basis. These results suggest that the inpatients having radiology procedures are, on average, sicker than the general inpatient population. Furthermore, Fig. 2 and Table 2 show that there are specific sections within radiology, such as interventional neuroradiology and interventional radiology, that care for an inpatient population which is even sicker than the general inpatient population having radiology procedures, potentially requiring significantly more resources for the daily operations of the section. The results in Table 2 are also in line with one’s intuition, since interventional neuroradiology procedures, such as intracranial vascular procedures with hemorrhage, are complex procedures that can take over 8 h. Conversely, few patients have mammograms or PET-CT exams in an inpatient setting, and as such, the hospital account count is low for these sections. MRI exams require the patient to comply with breath-holding commands and remain still for a considerable amount of time (e.g., 30 or more minutes), which can be challenging for very sick patients; the lower CMI of patients undergoing MRI compared to some of the other radiology specialties, as indicated in Fig. 2 and Table 2, may be a reflection thereof. Providing CMI information at a section level, as opposed to relying only on what is calculated at the hospital level, can assist with the determination of adequate staffing levels, especially in those areas where nurses and technologists care for patients collaboratively.

Monitoring CMI over time can also be useful to understand how the treated patient population changes. Such a methodology can also be used by radiology department administrators to justify requests for additional staff, or to maintain current staffing levels when staff reductions are proposed. In fact, in early 2015, a third-party consulting firm performed labor analytics on Lahey Radiology using data for the 3-month period from April to June 2014 as baseline. Lahey Hospital in Burlington had been observing a steady increase in case complexity in recent years, and in November 2014, the interventional radiology department hired three new nurses merely to keep up with daily operations. The analytics software used by the third party was based on APC weights, modeling the department as an outpatient setting. The addition of nursing staff to the interventional department, which had become necessary to care for the complex patients, significantly lowered interventional radiology’s calculated productivity to around 60–70%. We argue that this was an artifact of the method that was being used. Inpatients, who often have multiple comorbidities, limited mobility, and other complications, tend to be sicker than outpatients [19, 20]. APC had already underestimated the extra effort required to treat this sicker inpatient population, and having additional staff only made the productivity numbers worse. However, by introducing department-level CMI, Radiology managed to advocate for a more suitable calculation of its expected productivity, one that reflects actual patient mix and complexity in the benchmark target. Using a more appropriate metric both provided business intelligence and supported appropriate resourcing.

Another potential practical application of the proposed methodology could be determining CMI at a provider level for benchmarking purposes. Most practices currently employ a relative value unit (RVU)-based methodology to determine physician productivity, although more comprehensive approaches have been proposed in the literature [21, 22]. Such approaches could be augmented by adding CMI as one of the factors so that patient complexity is also reflected in physician benchmarking. RVUs have been reported to undervalue (in terms of having a low RVU value and, therefore, low compensation) the cognitive office effort required for certain conditions that may be encumbered by time-consuming review of extensive records and imaging, patient history, examination, and conferences with the patient and other physicians [23]. A CMI-integrated approach may help resolve some of the deviations in productivity between physicians in their daily procedures.

Despite providing a valuable addition to the metrics radiology administrators track, the current study has two main limitations. The most notable is that the dataset is from a single hospital and therefore we cannot make a generalized claim that inpatients having radiology procedures are generally sicker than the hospital inpatient population. The second limitation is that we have considered the inpatient population in isolation and ignored the outpatients the facility treats—a more accurate measure of complexity for a department may consider using outpatient measures, such as APC, to calculate a measure for the outpatients and combining this information with CMI using appropriate weights to determine an overall complexity score.

The current study was based on data that were manually extracted from the hospital’s EMR. We are currently exploring how this process can be automated so that CMI can be monitored on a monthly basis at a department/section level and integrated into the routine reports administrators receive. Since billing data is captured in proprietary software, we are exploring whether such information may be exposed via emerging industry approaches such as the FHIR specification [24]. There has been interest in calculating CMI for hospital departments other than radiology, and as such, we are working with leadership from the operating room to determine CMI for that department. Carrying out CMI analysis for various departments across multiple hospitals may help increase our understanding of the inter-department variations in patient complexity.

Conclusions

A more granular, service-level and section-level CMI can be a valuable metric to support decisions on the optimal distribution of operating resources. Since labor is the highest contributor to overall costs, reducing labor may be one of the first options considered when cost reduction is necessary. We argue that patient complexity may need to be factored into labor analytics in order to drive realistic managerial decision-making that does not compromise quality of care and that facilitates optimal workflow.

Acknowledgments

The authors would like to acknowledge the contributions of Patricia Doyle (Director of Radiology) and Bruce Ota (Radiology Administrator) for all their support and guidance on this work.

Contributor Information

Thusitha Mabotuwana, Phone: +1 914 208 0096, Email: thusitha.mabotuwana@philips.com.

Christopher S. Hall, Email: christopher.hall@philips.com

Sebastian Flacke, Email: Sebastian.Flacke@Lahey.org.

Shiby Thomas, Email: Shiby.Thomas@Lahey.org.

Christoph Wald, Email: Christoph.Wald@lahey.org.

References

1. Centers for Medicare & Medicaid Services. NHE Fact Sheet. [cited 2016 Jul 17]; Available from: https://www.cms.gov/research-statistics-data-and-systems/statistics-trends-and-reports/nationalhealthexpenddata/nhe-fact-sheet.html
2. Kanzaria HK, Hoffman JR, Probst MA, et al. Emergency physician perceptions of medically unnecessary advanced diagnostic imaging. Acad Emerg Med. 2015;22(4):390–8. doi: 10.1111/acem.12625.
3. Lavery HJ, Brajtbord JS, Levinson AW, et al. Unnecessary imaging for the staging of low-risk prostate cancer is common. Urology. 2011;77(2):274–8. doi: 10.1016/j.urology.2010.07.491.
4. peer60. Unnecessary Imaging: Up to $12 Billion Wasted Each Year. [cited 2016 Jul 17]; Available from: http://research.peer60.com/unnecessary-imaging/
5. Centers for Medicare & Medicaid Services. Comprehensive Care for Joint Replacement Model. [cited 2016 Jul 17]; Available from: https://innovation.cms.gov/initiatives/cjr
6. Centers for Medicare & Medicaid Services. Health Care Payment Learning and Action Network. [cited 2016 Jul 15]; Available from: https://www.cms.gov/newsroom/mediareleasedatabase/fact-sheets/2015-fact-sheets-items/2015-02-27.html
7. Kassing P, Mulaik MW, Rawson J. Pricing radiology bundled CPT codes accurately. Radiol Manage. 2013;35(2):9–15.
8. Kirtane M. Ensuring the validity of labor productivity benchmarking. Healthc Financ Manage. 2012;66(6):126–8.
9. Office of Statewide Health Planning and Development. Case Mix Index. [cited 2016 Jul 17]; Available from: http://www.oshpd.ca.gov/HID/Products/PatDischargeData/CaseMixIndex/
10. Barbara W. Medicare payment for hospital outpatient services: a historical review of policy options - report to the Medicare payment advisory commission. 2005, Rand Corporation.
11. Centers for Medicare & Medicaid Services. FY 2011 Final Rule Data Files. 2011 [cited 2016 Jul 17]; Available from: https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/AcuteInpatientPPS/FY-2011-IPPS-Final-Rule-Home-Page-Items/CMS1237932.html
12. Centers for Medicare & Medicaid Services. Acute Inpatient PPS. [cited 2016 Jul 17]; Available from: https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/AcuteInpatientPPS/index.html?redirect=/acuteinpatientpps/
13. Centers for Medicare & Medicaid Services. Addendum A and Addendum B Updates. [cited 2016 Jul 17]; Available from: https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/HospitalOutpatientPPS/Addendum-A-and-Addendum-B-Updates.html
14. American College of Emergency Physicians. APC (Ambulatory Payment Classifications). [cited 2016 Jul 17]; Available from: http://www.acep.org/Clinical---Practice-Management/APC-%28Ambulatory-Payment-Classifications%29-FAQ/
15. Lahey Hospital & Medical Center. [cited 2016 Jul 17]; Available from: http://www.lahey.org/
16. Epic. [cited 2016 Jul 15]; Available from: http://www.epic.com/
17. Summary of the HIPAA Privacy Rule. [cited 2016 Jul 15]; Available from: http://www.hhs.gov/hipaa/for-professionals/privacy/laws-regulations/
18. Cesta T. Follow these sure-fire tips to sort through data and measure outcomes in your department. Hosp Case Manag. 2011;19(5):71–3.
19. Ajijola OA, Macklin EA, Moore SA, et al. Inpatient vs. elective outpatient cardiac resynchronization therapy device implantation and long-term clinical outcome. Europace. 2010;12(12):1745–9. doi: 10.1093/europace/euq319.
20. Dickstein K. Inpatients are sicker than outpatients; finally it’s evidence-based. Europace. 2010;12(12):1662–3. doi: 10.1093/europace/euq362.
21. Duszak R Jr, Muroff LR. Measuring and managing radiologist productivity, part 1: clinical metrics and benchmarks. J Am Coll Radiol. 2010;7(6):452–8. doi: 10.1016/j.jacr.2010.01.026.
22. Duszak R Jr, Muroff LR. Measuring and managing radiologist productivity, part 2: beyond the clinical numbers. J Am Coll Radiol. 2010;7(7):482–9. doi: 10.1016/j.jacr.2010.01.025.
23. Katz S, Melmed G. How Relative Value Units Undervalue the Cognitive Physician Visit: A Focus on Inflammatory Bowel Disease. Gastroenterol Hepatol (N Y). 2016;12(4):240–4.
24. HL7.org. Welcome to FHIR. [cited 2016 Jul 15]; Available from: https://www.hl7.org/fhir/