Abstract

Background

Clinical guidelines provide systematically developed recommendations for deciding on appropriate health care options for specific conditions and clinical circumstances. Until recently, patients and caregivers have rarely been included in the process of developing care guidelines.

Objective

This project will develop and test a new online method for including patients and their caregivers in this process using Duchenne muscular dystrophy (DMD) care guidelines as an example. The new method will mirror and complement the RAND/UCLA Appropriateness Method (RAM)—the gold standard approach for conducting clinical expert panels that uses a modified Delphi format. RAM is often used in clinical guideline development to determine care appropriateness and necessity in situations where existing clinical evidence is uncertain, weak, or unavailable.

Methods

To develop the new method for engaging patients and their caregivers in guideline development, we will first conduct interviews with experts on RAM, guideline development, patient engagement, and patient-centeredness and engage with Duchenne patients and caregivers to identify how RAM should be modified for the purposes of patient engagement and what rating criteria patients and caregivers should use to provide their input during the process of guideline development. Once the new method is piloted, we will test it by conducting two concurrently run patient/caregiver panels that will rate patient-centeredness of a subset of DMD care management recommendations already deemed clinically appropriate and necessary. The ExpertLens™ system, a previously evaluated online modified Delphi system that combines two rounds of rating with a round of feedback and moderated online discussions, will be used to conduct these panels. In addition to developing and testing the new engagement method, we will work with the members of our project’s Advisory Board to generate a list of best practices for enhancing the level of patient and caregiver involvement in the guideline development process. We will solicit input on these best practices from Duchenne patients, caregivers, and clinicians by conducting a series of round-table discussions and making a presentation at an annual conference on Duchenne.

Results

The study protocol was reviewed by RAND’s Human Subjects Protection Committee, which determined it to be exempt from review. Interviews with RAM experts have been completed. The projected study completion date is May 2020.

Conclusions

We expect that the new method will make it easier to engage large numbers of patients and caregivers in the process of guideline development in a rigorous and culturally appropriate manner that is consistent with the way clinicians participate in guideline development. Moreover, this project will develop best practices that could help involve patients and caregivers in the clinical guideline development process in other clinical areas, thereby facilitating the work of guideline developers.

Keywords: Delphi method, Duchenne muscular dystrophy, ExpertLens, guideline development, online stakeholder engagement panels, patient engagement

Introduction

Clinical guidelines provide systematically developed recommendations for deciding on appropriate health care options for specific conditions and clinical circumstances [1]. A key methodological aspect that influences the quality of guideline recommendations is the composition of the group developing the guideline [2]. All stakeholders with a legitimate interest in a clinical guideline should be engaged in guideline development to ensure that guidelines are created in a transparent, democratic manner and are acceptable to different stakeholder groups [3]. However, clinical guideline development groups traditionally have not directly involved patients or their caregivers [4]. For example, only an estimated 25% of guidelines involve patients in the development process [4]. Moreover, a review of 51 evidence-based clinical practice guidelines found only 5% of guideline word count and 6% of references were related to patient preferences [5].

Patients, their caregivers, and many organizations concerned about guideline development have long argued that guideline development groups need to better include patients and caregivers because these stakeholders have particular knowledge and expertise on the direct experience of conditions of interest [1]. Research shows that patients and clinicians value the balance between risks and benefits differently [6] and that patients and their families provide unique perspectives that may differ from areas of focus in a clinical encounter [7]. For instance, while some care recommendations might be deemed appropriate and necessary by clinicians, they may not be acceptable from the patient perspective [8]; patients may focus more on issues related to overall quality of life rather than specific disease areas or life expectancy [9]. Effective implementation of guidelines ultimately requires patient adherence, and one might argue that the practical use of guidelines will be higher where patients feel the guidelines are sensitive and relevant to their needs. Moreover, the World Health Organization, National Institute for Health and Care Excellence (NICE), and Institute of Medicine also called for involving patients and other public stakeholders in developing and implementing clinical guidelines [4,8,10]. For example, the Guideline International Network (G-I-N)—an international organization dedicated to guidelines and to hosting the largest international guideline library—created a Patient and Public Involvement Group (G-I-N PUBLIC) to more effectively engage patient stakeholders in developing and implementing clinical guidelines [11]. Finally, the Grading of Recommendations Assessment, Development, and Evaluation (GRADE) approach to guideline development also encourages guideline developers to ensure that guidelines address the outcomes that patients value and that their recommendations are likely to be acceptable from the patient perspective [12].

While there is agreement that patients should be involved in guideline development, there is no consensus on how patients should participate in this process. Patients can be involved at different stages of the process, from topic selection, to reviewing and grading the strength of evidence, to developing recommendations [13]. They can also be asked to provide their views on living with their condition, accessing services, perceived benefits and harms of treatment options, or clinical outcomes of importance [1,14]. Perhaps more than any other stakeholder, patients are able to reflect on what outcomes they are looking for from the guidelines. Besides asking patients to join the evidence review group [15] or to submit evidence to be considered for guideline development [1], which could lead to a broader range of evidence being considered, guideline development groups have engaged patients in reviewing existing studies on patient preferences and solicited patient input in designing data collection instruments to help identify areas where patients and their caregivers feel guideline recommendations are most needed [16]. Some guideline groups have dedicated time during meetings to focus on patient and caregiver perspectives [17]. For example, NICE uses deliberative participation methods that involve members of the general public, including patients, in discussing social values related to clinical guideline development, so panel experts can interact directly with citizens [18]. Some guideline organizations include professional advocates acting on behalf of patients with a given condition in guideline development groups or panels to promote “the patient perspective” as an influence on guideline recommendations [19]. 
Although professional patient advocates play an important role, lay people with a given medical condition should also be directly engaged in the process of developing guideline recommendations [20] because when they are excluded, trade-offs on what makes the cut as a recommendation are made on behalf of patients rather than with and by patients [21]. Finally, it is generally not possible for one person or a small number of people to adequately represent the diversity of perspectives of all patients with the condition. Therefore, approaches that encourage participation of larger groups of patients are needed.

In summary, research is needed to develop a systematic, scalable, and culturally appropriate method to engage patients (particularly those with rare diseases or disabilities that limit their mobility) and their caregivers in developing guideline recommendations. Ideally, this method should facilitate the practical use of care guidelines given low levels of compliance and adherence to care recommendations among both clinicians and patients [22,23]. Finally, this new method should be consistent with the method used by clinicians in the process of developing consensus-based clinical guidelines and account for key recommendations from a workshop of international leaders in guideline development, who recommended expanding patient engagement methods to include Web-based consultations and analyzing the benefits and drawbacks of specific methods for patient involvement [13].

In this project, we will develop such a method using Duchenne muscular dystrophy (DMD) as an example. DMD is a progressive, fatal disorder where caregiving, financial, emotional, and physical demands increase over time and can impact the entire family. Affected individuals have progressive loss of functional muscle fibers, which results in weakness, loss of ambulation (typically in the teen years), and premature death (typically in the mid-to-late second decade of life) [24]. The DMD community developed a set of clinical care guidelines covering 8 domains of care [25,26], but patients and caregivers have been consulted in the development of guidelines for 2 domains only.

Methods

Overview

This project is an equal partnership between researchers from RAND, a nonprofit research institution that developed the Delphi method and the RAND/UCLA Appropriateness Method (RAM), and community partners from Parent Project Muscular Dystrophy (PPMD), the largest, most comprehensive nonprofit organization in the United States focused on finding a cure for DMD. The partnership is a natural fit given the complementary expertise, skills, and resources that both organizations bring. We assembled a strong interdisciplinary team of academic and community investigators, along with patient and caregiver representatives, who bring the right mix of methodological skills and clinical expertise combined with the lived experience of caring for a Duchenne patient. The project has a 7-person interdisciplinary Advisory Board that includes an adult DMD patient, caregivers and patient advocates, researchers, a clinician, a guideline developer, and a RAM expert.

Data Collection

Our study relies on a 3-step mixed-methods approach to develop and test a new approach for patient engagement in clinical guideline development.

First, we will adapt RAM, a gold standard approach used by clinical experts in the process of guideline development to reach consensus on appropriateness and necessity of care recommendations [27], for the purposes of systematic online engagement of DMD patients and their caregivers in the process of determining patient-centeredness of already existing care guidelines. RAM is a modified Delphi method that combines two rating rounds with a face-to-face moderated discussion. Nine clinical experts review the existing evidence (if any) and rate the appropriateness and sometimes necessity of existing treatment options or procedures using a 9-point Likert scale. Appropriateness and necessity ratings are based on experts’ own clinical judgments—informed by the systematic review of existing evidence—about what treatment options are best for “an average patient presenting to an average physician who performs the procedure in an average hospital” [27]. RAM is considered a formal consensus exploration method that meets the requirements of a scientific method; it has been recommended for use in guideline development in the absence of rigorously conducted randomized controlled trials [28] because it helps provide explicit links between the scientific evidence and the guideline recommendation. RAM was used to develop Duchenne guidelines. RAM panels, however, have been criticized for their small size and inclusion of only clinicians and researchers [29].

In modifying RAM, we will consult with up to 10 researchers and clinicians who have used RAM in the past, engaged patients in guideline development, or worked on topics related to patient-centeredness. We will solicit their perspectives on what modifications to RAM are needed to facilitate patient and caregiver engagement in guideline development, how patient-centeredness ratings of care management strategies can be included in guidelines, how guidelines can be rated and perceived by the clinical community, and how these ratings could help providers, patients, and their caregivers determine the best course of action and ensure adherence to care recommendations.

To triangulate these findings, we will also solicit input from a maximum variation purposive sample of up to 10 adult DMD patients and up to 30 caregivers. We can achieve diversity of perspectives and experiences by recruiting adult patients and caregivers of patients at various stages of disease progression, which is typically associated with the patient’s age, and from different geographic locations. Participants will be recruited by PPMD through its Duchenne Connect (DCN) registry—the largest repository of patient self-reported information on DMD.

We will be asking patients and caregivers to share their perspectives on the topics covered during the RAM expert interviews and comment on the usability of and suggest modifications to the ExpertLens (EL) system, an online modified Delphi platform that we will use for patient and caregiver engagement. EL is a previously evaluated online modified Delphi system that typically combines two rounds of rating with a round of asynchronous moderated online discussions [30,31]. EL has been used in numerous research studies [32-39] but has yet to be used in the context of patient involvement in clinical guideline development. We chose EL because it allows for conducting RAM panels and soliciting input from large, diverse, and geographically distributed groups of participants iteratively; for combining quantitative and qualitative data; for engaging participants anonymously; and for exploring points of agreement and disagreement among participants [40,41]. These characteristics make EL particularly useful for engaging DMD patients and caregivers—who are located around the country, with some living abroad—and for collecting their input on existing care guidelines for DMD. Patients and caregivers will rate patient-centeredness of care recommendations using 9-point Likert scales and share their thoughts using online discussion boards that use the same open-ended format as previous PPMD engagement efforts.

To learn what DMD patients and their caregivers think about EL, we will first ask them to watch a short video describing each EL round and what participation in the panel will entail. We will then provide them with access to the EL system and ask them to share their thoughts on the user-friendliness of the EL tool, the instructions on how to use EL, the statistical feedback that will be provided to participants, and the interactiveness of the discussion round, among other topics. To do this, participants will answer a series of open-ended questions and join threaded discussion boards within EL. We will also ask questions about participants’ understanding of and thoughts about patient-centeredness, participation burden in the EL process that is likely to be acceptable from the perspective of patients and caregivers, the maximum number of clinical scenarios, and the amount and type of background information on DMD patient and caregiver preferences and clinical information that should be included. We anticipate that participants will spend approximately 1 to 2 hours answering these questions and engaging in an online discussion over a period of 7 to 10 days. They will receive a $50 gift card for their participation.

Based on the input obtained from expert interviews and DMD patients and caregivers, we will implement changes to the EL platform and develop the modified Delphi protocol for rating patient-centeredness of already developed clinical guidelines. The revised version of EL will be pilot tested by 2 to 3 DMD patients and 7 to 8 caregivers, who will go through all three rounds of the EL process as if they were real study participants. After each round, pilot testers will share feedback by answering questions either by email or phone. These open-ended questions will focus on specific issues related to system usability, question clarity, and ease of use of the discussion feature, among other topics. We anticipate that participation in all three rounds will take about 3 to 4 hours over a period of 3 weeks. At the end of the pilot, participants will receive a $50 gift card for each round they completed.

Second, we will test the new approach using one of the DMD care guideline domains that was developed using RAM but without patient or caregiver input, such as cardiac or endocrine care management guidelines. To do so, we will conduct two 3-round EL panels using a modified version of the EL system to determine the level of patient-centeredness of selected DMD care recommendations already deemed clinically appropriate. Our operational definition of patient-centeredness will be informed by the existing literature and consistent with the operationalization of appropriateness used in RAM. Our 3-round design is consistent with a recommendation for conducting Delphi studies with 2 or 3 rating rounds [42]. In testing the new approach, we will compare participants’ ratings of patient-centeredness, satisfaction levels, and participation rates after rounds 1 and 3 in both panels. Because round 1 of the EL process is essentially a survey, we will treat round 1 ratings as data from a patient engagement survey—a more common mode of patient engagement than the modified Delphi approach [43]. Such a strategy is particularly relevant for comparing the two approaches in a rare disease community where the pool of potential participants is limited. Finally, as in previous studies validating the EL approach [31,39], we will determine the replicability of final panel ratings of patient-centeredness by comparing results of two EL panels conducted using the same protocol.

We will use the DCN patient registry and PPMD social media channels to recruit 20 to 25 adult patients and 60 to 70 caregivers with a range of experiences with Duchenne care and varying degrees of comfort using technology. We will then randomly assign them to two panels similar in their composition. Doing so will help us determine the replicability of panel determinations and adhere to the best practice of conducting online modified Delphi panels that suggests including 20 to 40 participants [31] to ensure their active participation while minimizing burden associated with reading comments posted by all panel members. We will recruit more than 40 participants per panel to account for attrition typical for multiround Delphi studies without face-to-face meetings. Our panels will be significantly larger than a traditional 9-person RAM clinical panel [27]. We can engage more participants because of the online nature of the panel, which helps increase the reliability of panel findings [28]. Our goal is to recruit a maximum variation sample [44] that reflects the diversity of DMD patient and caregiver experiences. This sample will not be random, because participants will be chosen on purpose to ensure their knowledge, expertise, and diversity of experiences. This is a standard approach to recruiting Delphi participants [28,45].
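The random assignment to two panels of similar composition could be sketched as a stratified split by participant role. This is only an illustration under stated assumptions: the function name `assign_to_panels`, the role labels, and the roster format are hypothetical, and the protocol does not specify which characteristics (beyond patient versus caregiver status) would be balanced.

```python
import random


def assign_to_panels(participants, seed=None):
    """Randomly split participants into two panels, balancing roles.

    `participants` is a list of (participant_id, role) tuples; this roster
    format and the role labels are assumptions made for illustration.
    """
    rng = random.Random(seed)

    # Group participant IDs by role so each role is split separately.
    by_role = {}
    for participant_id, role in participants:
        by_role.setdefault(role, []).append(participant_id)

    panels = {"panel_1": [], "panel_2": []}
    for role, ids in by_role.items():
        rng.shuffle(ids)
        # Alternate shuffled IDs between panels so that, within each role,
        # panel sizes differ by at most one.
        for index, participant_id in enumerate(ids):
            key = "panel_1" if index % 2 == 0 else "panel_2"
            panels[key].append((participant_id, role))
    return panels


if __name__ == "__main__":
    # Hypothetical roster: 22 adult patients and 64 caregivers.
    roster = [(f"P{i}", "patient") for i in range(22)]
    roster += [(f"C{i}", "caregiver") for i in range(64)]
    result = assign_to_panels(roster, seed=2020)
    for name, members in result.items():
        patients = sum(1 for _, role in members if role == "patient")
        print(name, len(members), "members,", patients, "patients")
```

Shuffling within each role before alternating keeps the assignment random while giving both panels nearly the same patient-to-caregiver mix.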

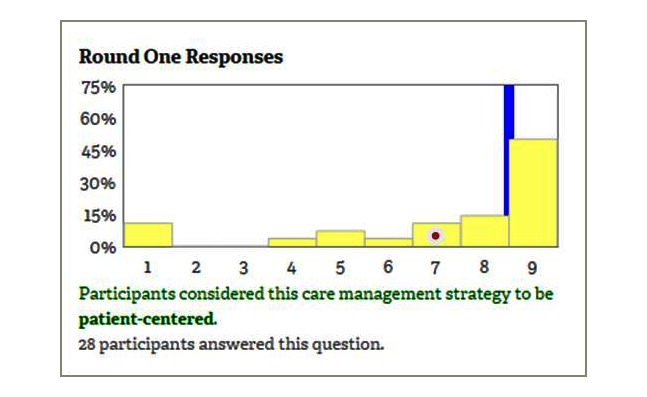

In round 1, participants will use 9-point Likert scales to rate the level of patient-centeredness of selected care management strategies and explain their responses using open-text boxes provided after each rating question (Figure 1). In round 2, participants will see a distribution of responses to all round 1 questions (Figure 2). While only the panelist knows his or her individual rating, all participants know the group’s ratings. Showing statistical feedback to participants is an essential component of the Delphi process [42]. For each question, participants will see a bar chart showing the frequency of each response category (yellow bars), a group median (blue line), their individual response (red dot), and a short statement describing the group decision based on the group agreement as produced by the RAM [27]. Consistent with best practices in Delphi studies [45], we will provide instructions on how to interpret statistical results using instructional videos and text boxes that appear when a participant hovers over a chart. Participants will also be able to review all rationale comments posted in round 1 and discuss group ratings using an asynchronous and anonymous discussion board moderated by content experts from PPMD/DCN and online engagement experts from RAND. In round 3, participants will reanswer round 1 questions and rate any new care management strategies that might have been suggested in round 2. Allowing for new questions to be added in round 3 is consistent with the best practices for conducting Delphi studies [42]. All participants will receive a $50 gift card for completing each round.

Figure 1. Round 1 mock-up screenshot.

Figure 2. Round 2 mock-up screenshot.

At the end of each EL panel, participants will use 7-point Likert-type scales to rate their satisfaction with the online engagement process [31] by expressing their level of agreement with such statements as “participation in this study was interesting,” “the discussions brought out views I hadn’t considered,” and “I was comfortable expressing my views in the discussion round,” among others. We will use a modified version of these questions after rounds 1 and 3 to compare participants’ experiences. Modifications to satisfaction questions that are asked after round 1 are needed because participants will not yet have participated in the discussion round at that time. We will develop additional questions about the usefulness and feasibility of widespread use of the online process of rating patient-centeredness of care guidelines. Open-ended questions will be added to encourage participants to use their own words to share their experiences and perspectives. A subsample of patients and caregivers involved in the EL process will be asked to participate in semistructured phone interviews to further share their experiences and thoughts after they complete all study rounds.

Third, we will develop a series of best practices for engaging patients and their caregivers in the process of care guideline development. To do so, we will identify generalizable lessons learned that could inform the methodology of engaging patients and caregivers in the process of guideline development by working in close collaboration with our study Advisory Board. We will share these best practices during one of PPMD’s annual Connect Conferences, which are attended by nearly 500 families from around the world. During the conference, we will engage with adult DMD patients, caregivers, and clinicians in a series of up to three round-table discussions that will allow us to discuss how to address care management approaches deemed appropriate but not consistent with patients’ care preferences or desired outcomes (ie, not patient-centered). Such small group discussions are a core component of the community-partnered research conference model that we developed for ensuring appropriate dissemination of study findings and for soliciting community input on study outcomes [46]. Each round-table discussion will last for about 60 to 90 minutes and include up to 8 participants. As a token of appreciation, participants will receive a $50 gift card.

Data Analysis

There are two types of data analysis that will be performed in this study. First, qualitative data from expert and EL participant interviews; feedback from pilot testers about EL; responses to all open-ended questions, rationale comments, and discussions within EL; and round-table discussions will be analyzed thematically by identifying and describing explicit and implicit ideas in the data. Applied thematic analysis [47] will be used because it helps reduce large amounts of textual data and present them in easy-to-understand statements that could be used to explain how experts feel about patient engagement in guideline development, how the EL system should be modified to facilitate patient engagement in care guideline development, how patient input should be solicited, and what participants think about an online approach to patient and caregiver engagement in guideline development once they participate in the study. To expedite the data analysis and ensure its usefulness, we will begin with a deductive approach to directly answer our research questions. At the same time, we will use an open coding strategy to flag interesting ideas and themes that may not be directly relevant to research questions but should be explored further once preliminary data analysis is complete or once more data have been analyzed [48]. Such an inductive approach is crucial for identifying unanticipated ideas and issues that frequently emerge from open-ended questions.

Given the volume of data collected from different types of participants, we will ensure efficient data management by using qualitative data analysis (QDA) software such as MAXQDA (Verbi GmbH) to code and retrieve large amounts of textual data to help ensure analysis rigor [49]. Doing so will help us organize, evaluate, code, annotate, and interpret qualitative data by creating easy-to-read reports and data visualizations. To facilitate data coding, we will develop a codebook (a list of codes, often hierarchically organized, accompanied by a description and examples of each code), program it within the QDA software, and update it on an as-needed basis. Data coders will be trained on how to use the codebook, work jointly to code approximately 20% of the data, and discuss any discrepancies until consensus is achieved and the codebook is appropriately adjusted.

Second, ratings of patient-centeredness collected during the EL panel process will be analyzed quantitatively to determine the existence of consensus among participants. It is recommended that every Delphi study determine how consensus will be defined among participants before the data collection begins [42]. One of the EL features is its use of the RAM [27] to automatically determine the group decision (ie, whether a particular care management strategy was deemed patient-centered) for each round 1 item, which is displayed in round 2 and is also calculated after round 3. This process, identical to that used in determining appropriateness of different DMD care management strategies, begins with determining the existence of disagreement among participants using the following a priori process. EL automatically (1) calculates the value of the interpercentile range (IPR), or the range of responses that fall between the 30th and the 70th percentiles; (2) calculates the value of the interpercentile range adjusted for symmetry (IPRAS), which is a measure of dispersion for asymmetric distributions; and (3) compares the values of IPR and IPRAS to see if there is disagreement. Disagreement is said to exist if IPR>IPRAS [27,50]. Disagreement among participants automatically produces an uncertain decision. If, instead, there is no disagreement among panelists, the value of the median will determine if the group decision is positive, negative, or uncertain. If the median is within the upper tertile of the 9-point response scale (response categories 7-9), then the decision is positive, meaning that a care management approach is considered to be patient-centered. If the median is within the lower tertile of the 9-point response scale (response categories 1-3), then the decision is negative, meaning that a care management approach is considered to be not patient-centered. A median that lies within the middle tertile (response categories 4-6) produces an uncertain decision. We will use this approach to determine consensus on the patient-centeredness of care management strategies in both EL panels using round 1 and round 3 rating data.
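The decision rule described above can be illustrated with a short sketch. It is not the ExpertLens implementation. The IPRAS formula and its constants (2.35 and 1.5, with asymmetry measured from the scale midpoint of 5) follow our reading of the RAND/UCLA Appropriateness Method User's Manual and are not stated in this protocol; the percentile interpolation convention and the handling of half-point medians (3.5 and 6.5) are likewise assumptions.

```python
import math
import statistics

# Constants as we understand them from the RAM User's Manual (assumption;
# not specified in this protocol).
IPR_REQUIRED = 2.35          # IPR required for disagreement when symmetric
ASYMMETRY_CORRECTION = 1.5   # correction factor for asymmetry
SCALE_MIDPOINT = 5           # midpoint of the 9-point scale


def percentile(sorted_values, fraction):
    """Percentile by linear interpolation (an assumed convention)."""
    if not sorted_values:
        raise ValueError("at least one rating is required")
    position = (len(sorted_values) - 1) * fraction
    lower = math.floor(position)
    upper = math.ceil(position)
    weight = position - lower
    return sorted_values[lower] + (sorted_values[upper] - sorted_values[lower]) * weight


def ram_decision(ratings):
    """Return (decision, disagreement) for one item rated on a 1-9 scale."""
    values = sorted(ratings)
    p30 = percentile(values, 0.30)
    p70 = percentile(values, 0.70)
    ipr = p70 - p30
    central_point = (p30 + p70) / 2
    asymmetry_index = abs(SCALE_MIDPOINT - central_point)
    ipras = IPR_REQUIRED + ASYMMETRY_CORRECTION * asymmetry_index

    # Disagreement exists if IPR > IPRAS and always yields "uncertain".
    if ipr > ipras:
        return "uncertain", True

    median = statistics.median(values)
    if median >= 7:
        return "positive", False
    if median <= 3:
        return "negative", False
    # Medians strictly between 3 and 7, including 3.5 and 6.5, are treated
    # as uncertain here; that tie-handling choice is an assumption.
    return "uncertain", False


if __name__ == "__main__":
    print(ram_decision([7, 8, 8, 9, 7, 8, 9, 8, 7]))
    print(ram_decision([1, 1, 1, 2, 5, 8, 9, 9, 9]))
```

In the second call the ratings are polarized, so the IPR exceeds the IPRAS and the item is flagged as uncertain because of disagreement, regardless of where the median falls.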

To compare survey and modified Delphi results, we will pool the data across two EL panels and compare determinations of patient-centeredness after round 1 (survey) and round 3 (Delphi). Because there is no right or wrong response, it is not possible to determine which approach produces better results or is more valid at the time the data are collected. However, we will follow a recommended practice in Delphi studies and focus on how much each method can help patients and their caregivers reach consensus [28]. To do so, we will first calculate the percentage of care management strategies for which panelists reached agreement versus those where agreement was not achieved. We will then focus on strategies where panelists reached agreement and calculate the proportion with positive, negative, and uncertain determinations. Our assumption is that there will be fewer strategies characterized by participant disagreement after round 3, which we treat as an indicator of the benefit of using the modified Delphi approach. Still, we believe the survey approach may have its own benefit. Indeed, we assume that participant attrition rates (eg, the number of nonresponders) will be smaller in round 1 than in round 3. Larger samples may help provide a better description of the diversity of patient experiences, albeit with potentially fewer qualitative details that will crystallize after round 2 discussion.
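The two-step summary described in this paragraph could be computed along the following lines. This is a sketch only: the input format (one `(decision, disagreement)` pair per care management strategy) and the function name `summarize_round` are hypothetical, and the example data are invented for illustration.

```python
from collections import Counter


def summarize_round(item_results):
    """Summarize one round of item-level determinations.

    `item_results` is a list of (decision, disagreement) tuples, one per
    care management strategy; this format is an illustrative assumption.
    Returns the share of items with agreement and, among those items,
    the share of positive, negative, and uncertain determinations.
    """
    if not item_results:
        raise ValueError("at least one item is required")

    agreed = [decision for decision, disagreement in item_results if not disagreement]
    share_with_agreement = len(agreed) / len(item_results)

    counts = Counter(agreed)
    breakdown = {}
    if agreed:
        for label in ("positive", "negative", "uncertain"):
            breakdown[label] = counts[label] / len(agreed)
    return share_with_agreement, breakdown


if __name__ == "__main__":
    # Invented example: four strategies rated in round 1 and again in round 3.
    round_1 = [("positive", False), ("uncertain", True),
               ("uncertain", True), ("negative", False)]
    round_3 = [("positive", False), ("positive", False),
               ("uncertain", True), ("negative", False)]
    print("round 1:", summarize_round(round_1))
    print("round 3:", summarize_round(round_3))
```

Comparing the first element of each result shows whether fewer strategies are characterized by disagreement after round 3 than after round 1.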

To determine the replicability of online panel ratings, we will adopt the following a priori analytic approach originally developed by Shekelle and colleagues [51] to analyze reproducibility of in-person panel ratings. We will first examine round 3 determinations of patient-centeredness for each care management strategy and identify the proportion of strategies receiving positive, negative, or uncertain determinations. Then, we will determine the pairwise percentage of agreement between the two panels and use t tests to identify any statistically significant differences in panel ratings. Because the distribution of ratings may be nonnormal, we will conduct sensitivity analyses using the Wilcoxon rank sum test, a nonparametric method. We will treat a 70% agreement as an indicator of acceptable reproducibility, which is at least as good as that of in-person panels [51]. Finally, we will calculate kappa coefficients comparing the determinations made by the two panels across items. If the kappa statistic is at least moderate (.41-.60), we will consider the online approach to be a reliable mode of collecting patient-centeredness ratings using the modified RAM approach [52]. This threshold is conservative; previous research shows that the reproducibility of both in-person [51] and online [31] panel findings is rarely better than moderate.
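As an illustration of the agreement statistics named above, the following sketch computes the pairwise percentage of agreement and an unweighted Cohen's kappa for the item-level determinations of two panels. The choice of the unweighted form is an assumption, since the protocol does not say which kappa variant will be used, and the example determinations are invented.

```python
from collections import Counter


def percent_agreement(panel_a, panel_b):
    """Share of items on which both panels made the same determination."""
    if len(panel_a) != len(panel_b) or not panel_a:
        raise ValueError("panels must rate the same non-empty set of items")
    matches = sum(1 for a, b in zip(panel_a, panel_b) if a == b)
    return matches / len(panel_a)


def cohen_kappa(panel_a, panel_b):
    """Unweighted Cohen's kappa for two lists of categorical determinations."""
    n = len(panel_a)
    observed = percent_agreement(panel_a, panel_b)

    # Chance agreement from each panel's marginal category frequencies.
    counts_a = Counter(panel_a)
    counts_b = Counter(panel_b)
    categories = set(counts_a) | set(counts_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)

    if expected == 1:
        # Both panels used a single identical category for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Invented round 3 determinations for 10 care management strategies.
    panel_1 = ["positive"] * 6 + ["uncertain"] * 3 + ["negative"]
    panel_2 = ["positive"] * 5 + ["uncertain"] * 4 + ["negative"]
    agreement = percent_agreement(panel_1, panel_2)
    kappa = cohen_kappa(panel_1, panel_2)
    print(f"agreement = {agreement:.2f}, kappa = {kappa:.2f}")
    print("meets 70% agreement threshold:", agreement >= 0.70)
    print("kappa at least moderate:", kappa >= 0.41)
```

Kappa is reported alongside raw agreement because it discounts the agreement that two panels would reach by chance given how often each uses every category.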

Besides comparing ratings of patient-centeredness, we will also focus on participant experiences after round 1 (survey) and round 3 (Delphi). As in earlier studies [31], we will pool the data across the two EL panels and look at the average response to each satisfaction question asked in round 1 and round 3. We will consider a mean value of 5 (agree slightly) and higher on the 7-point positively worded agreement scale to be an indicator of a generally positive opinion. Depending on sample size, we may conduct exploratory factor analyses to identify constructs that capture participant experiences and opinions about the new online system. To explore differences in participant satisfaction and perceived usefulness of the online approach after rounds 1 and 3, we will use a paired t test. We anticipate greater satisfaction and perceived usefulness after round 3 but a higher level of perceived participation burden.
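The satisfaction comparison could be sketched as follows for a single question answered by the same participants after rounds 1 and 3. The function names and example responses are hypothetical. The sketch returns only the t statistic and degrees of freedom; obtaining a P value requires a t distribution, for example via `scipy.stats.ttest_rel`, which is not used here so that the example depends on the standard library alone.

```python
import math
import statistics

POSITIVE_THRESHOLD = 5  # "agree slightly" on the 7-point agreement scale


def is_generally_positive(responses):
    """True if the mean response is 5 or higher on the 7-point scale."""
    return statistics.mean(responses) >= POSITIVE_THRESHOLD


def paired_t_statistic(round_1, round_3):
    """Paired t statistic and degrees of freedom for matched responses.

    Both lists must hold responses from the same participants in the same
    order, so only participants who answered in both rounds are included.
    """
    if len(round_1) != len(round_3) or len(round_1) < 2:
        raise ValueError("need at least two matched pairs")
    differences = [after - before for before, after in zip(round_1, round_3)]
    mean_difference = statistics.mean(differences)
    sd_difference = statistics.stdev(differences)
    if sd_difference == 0:
        raise ValueError("all differences are identical; t is undefined")
    standard_error = sd_difference / math.sqrt(len(differences))
    return mean_difference / standard_error, len(differences) - 1


if __name__ == "__main__":
    # Invented responses from five participants to one satisfaction item.
    after_round_1 = [4, 5, 5, 6, 4]
    after_round_3 = [5, 6, 5, 7, 6]
    t_value, df = paired_t_statistic(after_round_1, after_round_3)
    print("round 1 generally positive:", is_generally_positive(after_round_1))
    print("round 3 generally positive:", is_generally_positive(after_round_3))
    print(f"t = {t_value:.3f}, df = {df}")
```

Because the test is paired, participants lost to attrition between rounds drop out of this comparison, which is worth keeping in mind when interpreting the result.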

Results

The study protocol was reviewed by RAND’s Human Subjects Protection Committee, which determined it to be exempt from review. Interviews with RAM experts have been completed. The study team is in the process of analyzing these interviews. The projected study completion date is May 2020.

Discussion

Our study is expected to make a number of significant methodological contributions to a growing body of approaches for integrating patients and caregivers as active participants in research teams and decision-making bodies. First, by adapting an existing gold standard approach to expert elicitation for the purposes of systematic engagement of patients and caregivers in a culturally appropriate yet scientifically rigorous manner, our study will help address a methodological gap in evidence on consumer involvement and systematic integration of patient preferences in clinical practice guidelines [4,43]. Second, the proposed project augments and complements efforts to update the existing care and management guidelines for DMD, led by the Centers for Disease Control and Prevention’s DMD Care Considerations Working Group. In the proposed project, we will introduce a new step that could be integrated into care guideline development: patients and their caregivers will rate DMD care management strategies already deemed clinically appropriate and necessary on patient-centeredness criteria that will be developed in close partnership among patients, clinicians, and researchers. Doing so may help mitigate barriers that have led to variability in guideline implementation and increase guideline adherence, which may lead to improved treatment and quality of life for affected patients and families. Third, the proposed project will develop best practices that could help involve patients and caregivers in the clinical guideline development process in other clinical areas, thereby facilitating the work of groups aiming to incorporate patient values and preferences into guideline development, such as G-I-N and GRADE.

Acknowledgments

This work is supported through a Patient-Centered Outcomes Research Institute Program Award (ME-1507-31052). All statements in this report, including its findings and conclusions, are solely those of the authors and do not necessarily represent the views of the Patient-Centered Outcomes Research Institute, its Board of Governors, or Methodology Committee.

Abbreviations

- DCN: Duchenne Connect

- DMD: Duchenne muscular dystrophy

- EL: ExpertLens

- G-I-N: Guidelines International Network

- G-I-N PUBLIC: Guidelines International Network Patient and Public Involvement Group

- GRADE: Grading of Recommendations Assessment, Development, and Evaluation

- IPR: interpercentile range

- IPRAS: interpercentile range adjusted for symmetry

- NICE: National Institute for Health and Care Excellence

- PPMD: Parent Project Muscular Dystrophy

- QDA: qualitative data analysis

- RAM: RAND/UCLA Appropriateness Method

- UCLA: University of California, Los Angeles

Footnotes

Conflicts of Interest: DK and SG are members of the ExpertLens team at RAND. SG’s spouse is a salaried employee of Eli Lilly and Company and owns stock. SG has accompanied his spouse on company-sponsored travel. All other coauthors report no conflicts of interest.

References

- 1. Kelson M. Patient involvement in clinical guideline development. Where are we now? J Clin Gov. 2001;9:169–174.

- 2. Grimshaw J, Eccles M, Russell I. Developing clinically valid practice guidelines. J Eval Clin Pract. 1995 Sep;1(1):37–48. doi: 10.1111/j.1365-2753.1995.tb00006.x.

- 3. Cluzeau F, Wedzicha JA, Kelson M, Corn J, Kunz R, Walsh J, Schünemann HJ, ATS/ERS Ad Hoc Committee on Integrating and Coordinating Efforts in COPD Guideline Development. Stakeholder involvement: how to do it right: article 9 in Integrating and coordinating efforts in COPD guideline development. An official ATS/ERS workshop report. Proc Am Thorac Soc. 2012 Dec;9(5):269–273. doi: 10.1513/pats.201208-062ST.

- 4. Schünemann HJ, Fretheim A, Oxman AD. Improving the use of research evidence in guideline development: 10. Integrating values and consumer involvement. Health Res Policy Syst. 2006 Dec 05;4:22. doi: 10.1186/1478-4505-4-22. https://health-policy-systems.biomedcentral.com/articles/10.1186/1478-4505-4-22.

- 5. Chong C, Chen I, Naglie C, Krahn M. Do clinical practice guidelines incorporate evidence on patient preferences? Med Decis Making. 2007;27(4):E63–E64.

- 6. Fraenkel L, Miller AS, Clayton K, Crow-Hercher R, Hazel S, Johnson B, Rott L, White W, Wiedmeyer C, Montori VM, Singh JA, Nowell WB. When patients write the guidelines: patient panel recommendations for the treatment of rheumatoid arthritis. Arthritis Care Res (Hoboken). 2016 Jan;68(1):26–35. doi: 10.1002/acr.22758.

- 7. Hunt LM, Arar NH. An analytical framework for contrasting patient and provider views of the process of chronic disease management. Med Anthropol Q. 2001 Sep;15(3):347–367. doi: 10.1525/maq.2001.15.3.347.

- 8. Krahn M, Naglie G. The next step in guideline development: incorporating patient preferences. JAMA. 2008 Jul 23;300(4):436–438. doi: 10.1001/jama.300.4.436.

- 9. Peay HL, Hollin I, Fischer R, Bridges JFP. A community-engaged approach to quantifying caregiver preferences for the benefits and risks of emerging therapies for Duchenne muscular dystrophy. Clin Ther. 2014 May;36(5):624–637. doi: 10.1016/j.clinthera.2014.04.011. https://linkinghub.elsevier.com/retrieve/pii/S0149-2918(14)00209-4.

- 10. Kelson M, Akl EA, Bastian H, Cluzeau F, Curtis JR, Guyatt G, Montori VM, Oliver S, Schünemann HJ, ATS/ERS Ad Hoc Committee on Integrating and Coordinating Efforts in COPD Guideline Development. Integrating values and consumer involvement in guidelines with the patient at the center: article 8 in Integrating and coordinating efforts in COPD guideline development. An official ATS/ERS workshop report. Proc Am Thorac Soc. 2012 Dec;9(5):262–268. doi: 10.1513/pats.201208-061ST.

- 11. Ollenschläger G, Marshall C, Qureshi S, Rosenbrand K, Burgers J, Mäkelä M, Slutsky J, Board of Trustees 2002, Guidelines International Network (G-I-N). Improving the quality of health care: using international collaboration to inform guideline programmes by founding the Guidelines International Network (G-I-N). Qual Saf Health Care. 2004 Dec;13(6):455–460. doi: 10.1136/qhc.13.6.455. http://qhc.bmj.com/cgi/pmidlookup?view=long&pmid=15576708.

- 12. Alonso-Coello P, Oxman AD, Moberg J, Brignardello-Petersen R, Akl EA, Davoli M, Treweek S, Mustafa RA, Vandvik PO, Meerpohl J, Guyatt GH, Schünemann HJ. GRADE Evidence to Decision (EtD) frameworks: a systematic and transparent approach to making well informed healthcare choices. 2: Clinical practice guidelines. BMJ. 2016 Dec 30;353. doi: 10.1136/bmj.i2089.

- 13. Boivin A, Currie K, Fervers B, Gracia J, James M, Marshall C, Sakala C, Sanger S, Strid J, Thomas V, van der Weijden T, Grol R, Burgers J. Patient and public involvement in clinical guidelines: international experiences and future perspectives. Qual Saf Health Care. 2010 Oct;19(5):e22. doi: 10.1136/qshc.2009.034835.

- 14. Graham T, Alderson P, Stokes T. Managing conflicts of interest in the UK National Institute for Health and Care Excellence (NICE) clinical guidelines programme: qualitative study. PLoS One. 2015;10(3). doi: 10.1371/journal.pone.0122313. http://dx.plos.org/10.1371/journal.pone.0122313.

- 15. Clinical Practice Guideline for the Recognition and Assessment of Acute Pain in Children. Oxford: Royal College of Nursing; 1999.

- 16. Marriot S, Lelliot P. Clinical Practice Guidelines and their Development. London: Royal College of Psychiatrists; 1994.

- 17. Jarrett L. A Report on a Study to Evaluate Patient/Carer Membership of the First NICE Guideline Development Groups. London: National Institute for Clinical Excellence; 2004.

- 18. Kelson M. The NICE Patient Involvement Unit. Evidence-based Healthcare and Public Health. 2005 Aug;9(4):304–307. doi: 10.1016/j.ehbc.2005.05.013.

- 19. Abdelsattar ZM, Reames BN, Regenbogen SE, Hendren S, Wong SL. Critical evaluation of the scientific content in clinical practice guidelines. Cancer. 2015 Mar 01;121(5):783–789. doi: 10.1002/cncr.29124.

- 20. National Clinical Guidelines for Stroke. London: Royal College of Physicians; 2000.

- 21. Browman GP, Somerfield MR, Lyman GH, Brouwers MC. When is good, good enough? Methodological pragmatism for sustainable guideline development. Implement Sci. 2015 Mar 06;10:28. doi: 10.1186/s13012-015-0222-4. https://implementationscience.biomedcentral.com/articles/10.1186/s13012-015-0222-4.

- 22. Bobo JK, Kenneson A, Kolor K, Brown MA. Adherence to American Academy of Pediatrics recommendations for cardiac care among female carriers of Duchenne and Becker muscular dystrophy. Pediatrics. 2009 Mar;123(3):e471–e475. doi: 10.1542/peds.2008-2643.

- 23. Landfeldt E, Lindgren P, Bell CF, Schmitt C, Guglieri M, Straub V, Lochmüller H, Bushby K. Compliance to care guidelines for Duchenne muscular dystrophy. J Neuromuscul Dis. 2015;2(1):63–72. doi: 10.3233/JND-140053. http://europepmc.org/abstract/MED/26870664.

- 24. Biggar WD. Duchenne muscular dystrophy. Pediatr Rev. 2006 Mar;27(3):83–88. doi: 10.1542/pir.27-3-83.

- 25. Bushby K, Finkel R, Birnkrant DJ, Case LE, Clemens PR, Cripe L, Kaul A, Kinnett K, McDonald C, Pandya S, Poysky J, Shapiro F, Tomezsko J, Constantin C, DMD Care Considerations Working Group. Diagnosis and management of Duchenne muscular dystrophy, part 1: diagnosis, and pharmacological and psychosocial management. Lancet Neurol. 2010 Jan;9(1):77–93. doi: 10.1016/S1474-4422(09)70271-6.

- 26. Bushby K, Finkel R, Birnkrant DJ, Case LE, Clemens PR, Cripe L, Kaul A, Kinnett K, McDonald C, Pandya S, Poysky J, Shapiro F, Tomezsko J, Constantin C, DMD Care Considerations Working Group. Diagnosis and management of Duchenne muscular dystrophy, part 2: implementation of multidisciplinary care. Lancet Neurol. 2010 Feb;9(2):177–189. doi: 10.1016/S1474-4422(09)70272-8.

- 27. Fitch K, Bernstein S, Aguilar M. The RAND/UCLA Appropriateness Method User's Manual. Santa Monica: RAND Corporation; 2001.

- 28. Black N, Murphy M, Lamping D, McKee M, Sanderson C, Askham J, Marteau T. Consensus development methods: a review of best practice in creating clinical guidelines. J Health Serv Res Policy. 1999 Oct;4(4):236–248. doi: 10.1177/135581969900400410.

- 29. Murphy MK, Black NA, Lamping DL, McKee CM, Sanderson CF, Askham J, Marteau T. Consensus development methods, and their use in clinical guideline development. Health Technol Assess. 1998;2(3):i–iv. http://www.journalslibrary.nihr.ac.uk/hta/volume-2/issue-3.

- 30. Khodyakov D, Grant S, Barber CEH, Marshall DA, Esdaile JM, Lacaille D. Acceptability of an online modified Delphi panel approach for developing health services performance measures: results from 3 panels on arthritis research. J Eval Clin Pract. 2017 Apr;23(2):354–360. doi: 10.1111/jep.12623.

- 31. Khodyakov D, Hempel S, Rubenstein L, Shekelle P, Foy R, Salem-Schatz S, O'Neill S, Danz M, Dalal S. Conducting online expert panels: a feasibility and experimental replicability study. BMC Med Res Methodol. 2011 Dec 23;11:174. doi: 10.1186/1471-2288-11-174. https://bmcmedresmethodol.biomedcentral.com/articles/10.1186/1471-2288-11-174.

- 32. Barber CEH, Marshall DA, Alvarez N, Mancini GBJ, Lacaille D, Keeling S, Aviña-Zubieta JA, Khodyakov D, Barnabe C, Faris P, Smith A, Noormohamed R, Hazlewood G, Martin LO, Esdaile JM, Quality Indicator International Panel. Development of cardiovascular quality indicators for rheumatoid arthritis: results from an international expert panel using a novel online process. J Rheumatol. 2015 Sep;42(9):1548–1555. doi: 10.3899/jrheum.141603. http://europepmc.org/abstract/MED/26178275.

- 33. Barber CEH, Marshall DA, Mosher DP, Akhavan P, Tucker L, Houghton K, Batthish M, Levy DM, Schmeling H, Ellsworth J, Tibollo H, Grant S, Khodyakov D, Lacaille D, Arthritis Alliance of Canada Performance Measurement Development Panel. Development of system-level performance measures for evaluation of models of care for inflammatory arthritis in Canada. J Rheumatol. 2016 Mar;43(3):530–540. doi: 10.3899/jrheum.150839. http://www.jrheum.org/cgi/pmidlookup?view=long&pmid=26773106.

- 34. Barber CE, Patel JN, Woodhouse L, Smith C, Weiss S, Homik J, LeClercq S, Mosher D, Christiansen T, Howden JS, Wasylak T, Greenwood-Lee J, Emrick A, Suter E, Kathol B, Khodyakov D, Grant S, Campbell-Scherer D, Phillips L, Hendricks J, Marshall DA. Development of key performance indicators to evaluate centralized intake for patients with osteoarthritis and rheumatoid arthritis. Arthritis Res Ther. 2015 Nov 14;17:322. doi: 10.1186/s13075-015-0843-7. https://arthritis-research.biomedcentral.com/articles/10.1186/s13075-015-0843-7.

- 35. Claassen CA, Pearson JL, Khodyakov D, Satow PM, Gebbia R, Berman AL, Reidenberg DJ, Feldman S, Molock S, Carras MC, Lento RM, Sherrill J, Pringle B, Dalal S, Insel TR. Reducing the burden of suicide in the U.S.: the aspirational research goals of the National Action Alliance for Suicide Prevention Research Prioritization Task Force. Am J Prev Med. 2014 Sep;47(3):309–314. doi: 10.1016/j.amepre.2014.01.004.

- 36. Kastner M, Bhattacharyya O, Hayden L, Makarski J, Estey E, Durocher L, Chatterjee A, Perrier L, Graham ID, Straus SE, Zwarenstein M, Brouwers M. Guideline uptake is influenced by six implementability domains for creating and communicating guidelines: a realist review. J Clin Epidemiol. 2015 May;68(5):498–509. doi: 10.1016/j.jclinepi.2014.12.013. https://linkinghub.elsevier.com/retrieve/pii/S0895-4356(15)00007-4.

- 37. Khodyakov D, Mikesell L, Schraiber R, Booth M, Bromley E. On using ethical principles of community-engaged research in translational science. Transl Res. 2016 May;171:52–62. doi: 10.1016/j.trsl.2015.12.008.

- 38. Khodyakov D, Stockdale SE, Smith N, Booth M, Altman L, Rubenstein LV. Patient engagement in the process of planning and designing outpatient care improvements at the Veterans Administration health-care system: findings from an online expert panel. Health Expect. 2017 Feb;20(1):130–145. doi: 10.1111/hex.12444. http://europepmc.org/abstract/MED/26914249.

- 39. Rubenstein L, Khodyakov D, Hempel S, Danz M, Salem-Schatz S, Foy R, O'Neill S, Dalal S, Shekelle P. How can we recognize continuous quality improvement? Int J Qual Health Care. 2014 Feb;26(1):6–15. doi: 10.1093/intqhc/mzt085. http://europepmc.org/abstract/MED/24311732.

- 40. Dalal S, Khodyakov D, Srinivasan R, Straus S, Adams J. ExpertLens: a system for eliciting opinions from a large pool of non-collocated experts with diverse knowledge. Technol Forecast Soc. 2011;78(8).

- 41. Rowe G, Gammack JG. Promise and perils of electronic public engagement. Sci Pub Pol. 2004 Feb 01;31(1):39–54. doi: 10.3152/147154304781780181.

- 42. Boulkedid R, Abdoul H, Loustau M, Sibony O, Alberti C. Using and reporting the Delphi method for selecting healthcare quality indicators: a systematic review. PLoS One. 2011;6(6). doi: 10.1371/journal.pone.0020476. http://dx.plos.org/10.1371/journal.pone.0020476.

- 43. Nilsen ES, Myrhaug HT, Johansen M, Oliver S, Oxman AD. Methods of consumer involvement in developing healthcare policy and research, clinical practice guidelines and patient information material. Cochrane Database Syst Rev. 2006 Jul 19;(3):CD004563. doi: 10.1002/14651858.CD004563.pub2.

- 44. Marshall MN. Sampling for qualitative research. Fam Pract. 1996 Dec;13(6):522–525. doi: 10.1093/fampra/13.6.522. http://fampra.oxfordjournals.org/cgi/pmidlookup?view=long&pmid=9023528.

- 45. Hasson F, Keeney S, McKenna H. Research guidelines for the Delphi survey technique. J Adv Nurs. 2000 Oct;32(4):1008–1015.

- 46. Khodyakov D, Pulido E, Ramos A, Dixon E. Community-partnered research conference model: the experience of Community Partners in Care study. Prog Community Health Partnersh. 2014;8(1):83–97. doi: 10.1353/cpr.2014.0008. http://europepmc.org/abstract/MED/24859106.

- 47. Guest G, MacQueen K, Namey E. Applied Thematic Analysis. Thousand Oaks: Sage; 2011.

- 48. Neuman L. Social Research Methods: Qualitative and Quantitative Approaches. Cranbury: Pearson Education, Inc; 2003.

- 49. Mays N, Pope C. Rigour and qualitative research. BMJ. 1995 Jul 08;311(6997):109–112. doi: 10.1136/bmj.311.6997.109. http://europepmc.org/abstract/MED/7613363.

- 50. Basger BJ, Chen TF, Moles RJ. Validation of prescribing appropriateness criteria for older Australians using the RAND/UCLA appropriateness method. BMJ Open. 2012;2(5). doi: 10.1136/bmjopen-2012-001431. http://bmjopen.bmj.com/cgi/pmidlookup?view=long&pmid=22983875.

- 51. Shekelle PG, Kahan JP, Bernstein SJ, Leape LL, Kamberg CJ, Park RE. The reproducibility of a method to identify the overuse and underuse of medical procedures. N Engl J Med. 1998 Jun 25;338(26):1888–1895. doi: 10.1056/NEJM199806253382607.

- 52. Sim J, Wright CC. The kappa statistic in reliability studies: use, interpretation, and sample size requirements. Phys Ther. 2005 Mar;85(3):257–268. http://www.ptjournal.org/cgi/pmidlookup?view=long&pmid=15733050.