Abstract

In this paper, we study the global asymptotic stability of complex-valued neural networks with leakage delay and additive time-varying delays. By constructing a suitable Lyapunov–Krasovskii functional and applying newly developed complex-valued integral inequalities, sufficient conditions for the global asymptotic stability of the proposed neural networks are established in the form of complex-valued linear matrix inequalities. These linear matrix inequalities can be solved efficiently with standard numerical packages. Finally, three numerical examples are given to demonstrate the effectiveness of the theoretical results.

Keywords: Additive time-varying delays, Complex-valued neural networks, Global asymptotic stability, Leakage delay, Lyapunov–Krasovskii functional

Introduction

In the past decades, there has been increasing research interest in analyzing the dynamic behavior of neural networks owing to their widespread applications (Guo and Li 2012; Manivannan et al. 2016; Mattia and Sanchez-Vives 2012; Yu et al. 2013, and the references therein). In many applications, complex signals are involved and a complex-valued neural network is preferable. In recent years, complex-valued neural networks have become an emerging field of research from both theoretical and practical points of view. Their major advantage is that they allow new capabilities and higher performance of the designed network to be explored. Accordingly, increasing attention has been paid to the dynamical behavior of complex-valued neural networks, which have found applications in different areas such as pattern classification (Nait-Charif 2010), associative memory (Tanaka and Aihara 2009) and optimization (Jiang 2008). These applications require the equilibrium point of the network to be stable. Stability is therefore the most important dynamical property to analyze in complex-valued neural networks.

In real-life situations, time delays often occur because of the finite switching speed of amplifiers, and they also appear in electronic implementations of neural networks during signal transmission. Such delays may cause instability, bifurcation and oscillation in the dynamical behavior of neural networks (Alofi et al. 2015; Cao and Li 2017; Hu et al. 2014; Huang et al. 2017). Thus, many authors have taken constant time delays (Hu and Wang 2012; Subramanian and Muthukumar 2016) and time-varying delays (Chen et al. 2017; Gong et al. 2015) into account in the stability analysis of complex-valued neural networks.

In addition, a time delay in the leakage term of a system, called the leakage delay, is a considerable factor that affects the dynamics for the worse, and it is increasingly included in the stability analysis of neural networks. The aforementioned results (Bao et al. 2016; Chen et al. 2017; Gong et al. 2015; Hu and Wang 2012; Subramanian and Muthukumar 2016) concern the dynamical behavior of complex-valued neural networks with constant or time-varying delays and do not consider the leakage effect. Although the leakage delay has been studied extensively for real-valued neural networks (Lakshmanan et al. 2013; Li and Cao 2016; Sakthivel et al. 2015; Xie et al. 2016), complex-valued neural networks with leakage delay have rarely been considered in the literature (Chen and Song 2013; Chen et al. 2016; Gong et al. 2015). For example, by constructing appropriate Lyapunov–Krasovskii functionals, Gong et al. (2015) and Chen et al. (2016) studied the global μ-stability problem for continuous-time and discrete-time complex-valued neural networks, respectively, with leakage time delay and unbounded time-varying delays. By combining fixed point theory, the Lyapunov–Krasovskii functional and the free weighting matrix method, the existence, uniqueness and global stability of the equilibrium point of complex-valued neural networks with both leakage delay and time delay on time scales were established in Chen and Song (2013).

Furthermore, a neural network model with two successive time-varying delays was introduced in Zhao et al. (2008). The authors pointed out that signal transmission may pass through several network segments whose transmission conditions differ from each other, which can produce successive delays with different properties. It is therefore not reasonable to lump the two time delays into one, and it is more appropriate to model neural networks with additive time-varying delays. In Shao and Han (2012), the authors discussed stability and stabilization for continuous-time systems with two additive time-varying input delays arising from networked control systems. Stability criteria for neural networks with two additive time-varying delay components were addressed in Tian and Zhong (2012) by using both the reciprocally convex and the convex polyhedron approaches. Rakkiyappan et al. (2015) studied the passivity and passification problem for a class of memristor-based recurrent neural networks with additive time-varying delays. In all of these works, additive time-varying delays appear only in real-valued neural networks.

However, it is worth noting that in the existing results the time-varying delay considered in complex-valued neural networks is usually a single delay. The stability of complex-valued neural networks with additive time-varying delays has not been considered in the literature, which motivates our research. To the best of the authors' knowledge, the global asymptotic stability analysis of complex-valued neural networks with leakage delays and additive time-varying delays has not yet been reported and remains a topic for further investigation.

In this paper, the main contributions are given as follows:

- The global asymptotic stability of complex-valued neural networks with leakage delays and additive time-varying delay components is established for the first time.

- A suitable Lyapunov–Krasovskii functional is constructed that uses the full information about the additive time-varying delays and the leakage delay.

- A new type of complex-valued triple integral inequality is introduced to estimate the upper bound of the derivative of the Lyapunov–Krasovskii functional.

- Based on the model transformation technique, sufficient conditions for the global asymptotic stability of the proposed neural networks are obtained in linear matrix inequality form, which can be checked numerically by using the YALMIP toolbox in MATLAB.

- Finally, three illustrative examples are provided to show the effectiveness of the proposed criteria.

The rest of this paper is organized as follows. In the “Problem formulation and preliminaries” section, the model of the complex-valued neural networks with leakage delay and additive time-varying delays is presented, and some preliminaries are briefly outlined. In the “Main result” section, sufficient conditions are derived to ascertain the global asymptotic stability of the complex-valued neural networks with leakage delay and additive time-varying delays by the Lyapunov–Krasovskii functional method. Three numerical examples are given in the “Numerical example” section to show the effectiveness of the derived conditions. Finally, conclusions are drawn in the “Conclusion” section.

Notations

The notation used throughout this paper is fairly standard. and denote the set of n-dimensional complex vectors, complex matrices, respectively. The superscript T and denotes the matrix transposition and complex conjugate transpose, respectively; i denotes the imaginary unit, that is . For any matrix P, means P is positive definite (negative definite) matrix. For complex number , the notation stands for the module of z and ; stands for diagonal of the block- diagonal matrix. If , denotes by its operator norm, i.e., . The notation always denotes the conjugate transpose of block in a Hermitian matrix.

Problem formulation and preliminaries

In this paper, we consider a model of complex-valued neural networks with leakage delay and two additive time-varying delay components, which can be described by

| 1 |

where is the state vector of the neural networks with n neurons at time t, with is the self feedback connection weight matrix. and are the connection weight matrix and delayed connection weight matrix, respectively; is the complex-valued neuron activation function; is the external input vector; denotes leakage delay, and are two time varying delays satisfies , , , , , , and are less than one. The initial condition associated with the complex-valued neural network (1) is given by

It means that u(s) is continuous and satisfies (1). Let be the space of continuous functions mapping into
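The displayed form of model (1) and of its delay bounds is not reproduced in this text. A form consistent with the verbal description above is sketched below. It is stated as an assumption rather than as the exact equation of the paper, and the symbols $\tau_1$, $\tau_2$, $\mu_1$, $\mu_2$ for the bounds are assumed names.

```latex
\begin{aligned}
\dot{z}(t) &= -A\,z(t-\delta) + B\,f\bigl(z(t)\bigr)
              + C\,f\bigl(z(t-\tau_1(t)-\tau_2(t))\bigr) + u, \\
0 &\le \tau_1(t) \le \tau_1, \qquad 0 \le \tau_2(t) \le \tau_2, \qquad
\dot{\tau}_1(t) \le \mu_1 < 1, \qquad \dot{\tau}_2(t) \le \mu_2 < 1 .
\end{aligned}
```

Under this reading, the total transmission delay is $\tau_1(t)+\tau_2(t)$, bounded by $\tau_1+\tau_2$, while the leakage delay $\delta$ acts only on the self-feedback term.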

Assumption 2.1

Let satisfies the Lipschitz continuity condition in the complex domain, that is, for all , there exists a positive constant , such that, for , we have

Definition 2.2

The vector is said to be an equilibrium point of complex valued neural networks (1) if it satisfies the following condition

Theorem 2.3

(Existence of equilibrium point) Under Assumption 2.1, there exists an equilibrium point of the system (1) if

Proof

Since the activation function of the system (1) is bounded, there exists a constant such that,

Let . Then for We denote and let us define the map by,

Since, H is a continuous map and using the condition we obtain that

Therefore from the definition of , H maps into itself. By Brouwer's fixed point theorem, it can be inferred that there exists a fixed point of H, which satisfies

Premultiplying both sides by the matrix A gives

That is, by Definition 2.2, is an equilibrium point of (1). Hence, the proof is completed.
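The existence argument above can be illustrated numerically. The following is a minimal sketch, assuming that the equilibrium condition reads $-A\hat z + (B+C)f(\hat z) + u = 0$ with a diagonal matrix $A$, so that the map is $H(z) = A^{-1}\bigl((B+C)f(z)+u\bigr)$. The activation function and all numerical values are hypothetical and are not taken from the paper. Brouwer's theorem guarantees only existence; the plain iteration converges here because the chosen data make $H$ a contraction.

```python
import math

def f(z):
    # Hypothetical bounded activation: tanh applied to the real and imaginary parts.
    return complex(math.tanh(z.real), math.tanh(z.imag))

def H(z, a, W, u):
    # H(z) = A^{-1} (W f(z) + u), with A = diag(a) and W = B + C (assumed form).
    n = len(z)
    fz = [f(v) for v in z]
    return [(sum(W[i][j] * fz[j] for j in range(n)) + u[i]) / a[i] for i in range(n)]

def equilibrium(a, W, u, tol=1e-12, max_iter=10_000):
    # Plain fixed-point iteration z <- H(z), started from the origin.
    z = [0j] * len(a)
    for _ in range(max_iter):
        z_next = H(z, a, W, u)
        if max(abs(p - q) for p, q in zip(z_next, z)) < tol:
            return z_next
        z = z_next
    raise RuntimeError("fixed-point iteration did not converge")

# Hypothetical data (not the parameters of the paper's examples).
a = [4.0, 5.0]
W = [[0.5 + 0.5j, -0.3 + 0.2j],
     [0.2 - 0.4j, 0.6 + 0.1j]]
u = [1.0 - 0.5j, -0.5 + 1.0j]

z_hat = equilibrium(a, W, u)
fz_hat = [f(v) for v in z_hat]
n = len(a)
# Residual of the assumed equilibrium equation -A z + W f(z) + u = 0.
residual = [-a[i] * z_hat[i] + sum(W[i][j] * fz_hat[j] for j in range(n)) + u[i]
            for i in range(n)]
print(z_hat, max(abs(r) for r in residual))
```

A residual close to zero indicates that `z_hat` satisfies the assumed equilibrium equation to numerical precision.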

For convenience, we shift the equilibrium point to the origin by letting Then, the system (1) can be written as

| 2 |

where, By using the transformation, the system (2) has an equivalent form as follows

| 3 |
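The transformation behind the equivalent form can be sketched as follows, assuming that the shifted system (2) has the structure $\dot z(t) = -A z(t-\delta) + B g(z(t)) + C g(z(t-\tau_1(t)-\tau_2(t)))$; the displayed equations are not reproduced in this text, so this structure is an assumption. The key step is the identity for the derivative of the integral of the state over the leakage window.

```latex
\begin{aligned}
\frac{d}{dt}\int_{t-\delta}^{t} z(s)\,ds &= z(t) - z(t-\delta)
\;\;\Longrightarrow\;\;
-A\,z(t-\delta) = -A\,z(t) + A\,\frac{d}{dt}\int_{t-\delta}^{t} z(s)\,ds, \\
\frac{d}{dt}\Bigl[\,z(t) - A\int_{t-\delta}^{t} z(s)\,ds\Bigr]
&= -A\,z(t) + B\,g\bigl(z(t)\bigr) + C\,g\bigl(z(t-\tau_1(t)-\tau_2(t))\bigr).
\end{aligned}
```

Written this way, the leakage delay enters only through the integral term on the left-hand side, which is what allows it to be handled by integral terms in a Lyapunov–Krasovskii functional.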

In the following, we introduce the relevant assumption and lemmas to facilitate the presentation of the main results in the ensuing sections.

Assumption 2.4

Let satisfies the Lipschitz continuity condition in the complex domain, that is, for all , there exists a positive constant , such that, for , we have

where is called Lipschitz constant. Moreover, define
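A Lipschitz condition of this kind can be probed numerically for a given activation function. The sketch below is only an illustration: the activation `f` (tanh applied separately to the real and imaginary parts) and the sampling region are hypothetical choices, not the functions used in the paper's examples. For this particular `f`, the ratio $|f(z_1)-f(z_2)|/|z_1-z_2|$ never exceeds 1, because tanh is 1-Lipschitz on the real line.

```python
import math
import random

def f(z):
    # Hypothetical activation: tanh applied to the real and imaginary parts.
    return complex(math.tanh(z.real), math.tanh(z.imag))

def lipschitz_ratio_estimate(func, samples=10_000, radius=5.0, seed=0):
    # Largest observed ratio |func(z1) - func(z2)| / |z1 - z2| over random pairs.
    # This is a lower estimate of the Lipschitz constant, not a proof.
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(samples):
        z1 = complex(rng.uniform(-radius, radius), rng.uniform(-radius, radius))
        z2 = complex(rng.uniform(-radius, radius), rng.uniform(-radius, radius))
        if z1 != z2:
            worst = max(worst, abs(func(z1) - func(z2)) / abs(z1 - z2))
    return worst

l_est = lipschitz_ratio_estimate(f)
print(l_est)
```

A sampled estimate can only confirm that a candidate constant is not violated on the sampled pairs; the constant itself still has to be justified analytically.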

Lemma 2.5

(Schur complement, Velmurugan et al. 2015) A given matrix,

where , is equivalent to any one of the following conditions
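The displayed conditions are not reproduced in this text. For reference, the standard Schur complement statement for a Hermitian block matrix is given below; the block names $S_{11}$, $S_{12}$, $S_{22}$ are assumed notation and may differ from the paper's.

```latex
S = \begin{pmatrix} S_{11} & S_{12} \\ S_{12}^{*} & S_{22} \end{pmatrix} < 0,
\qquad S_{11} = S_{11}^{*},\; S_{22} = S_{22}^{*},
\quad\Longleftrightarrow\quad
\begin{cases}
\text{(a)}\;\; S_{22} < 0 \ \text{ and } \ S_{11} - S_{12} S_{22}^{-1} S_{12}^{*} < 0, \quad \text{or} \\[2pt]
\text{(b)}\;\; S_{11} < 0 \ \text{ and } \ S_{22} - S_{12}^{*} S_{11}^{-1} S_{12} < 0 .
\end{cases}
```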

Lemma 2.6

For any constant Hermitian matrix and , a scalar function with scalars such that the following inequalities are satisfied:

(i)

(ii)

(iii)
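The three displayed inequalities are missing from this text. The standard single, double and triple integral inequalities of Jensen type, written with the conjugate transpose for a Hermitian matrix $M>0$, a function $\omega:[a,b]\to\mathbb{C}^n$ and scalars $a<b$, are listed below. They are given as an assumed reading of (i) to (iii), consistent with the proof that follows, and not as a verbatim restoration.

```latex
\begin{aligned}
\text{(i)}\quad
&\Bigl(\int_a^b \omega(s)\,ds\Bigr)^{*} M \Bigl(\int_a^b \omega(s)\,ds\Bigr)
 \le (b-a)\int_a^b \omega^{*}(s)\,M\,\omega(s)\,ds, \\
\text{(ii)}\quad
&\Bigl(\int_a^b\!\!\int_\theta^b \omega(s)\,ds\,d\theta\Bigr)^{*} M
 \Bigl(\int_a^b\!\!\int_\theta^b \omega(s)\,ds\,d\theta\Bigr)
 \le \frac{(b-a)^2}{2}\int_a^b\!\!\int_\theta^b \omega^{*}(s)\,M\,\omega(s)\,ds\,d\theta, \\
\text{(iii)}\quad
&\Bigl(\int_a^b\!\!\int_\theta^b\!\!\int_\lambda^b \omega(s)\,ds\,d\lambda\,d\theta\Bigr)^{*} M
 \Bigl(\int_a^b\!\!\int_\theta^b\!\!\int_\lambda^b \omega(s)\,ds\,d\lambda\,d\theta\Bigr)
 \le \frac{(b-a)^3}{6}\int_a^b\!\!\int_\theta^b\!\!\int_\lambda^b \omega^{*}(s)\,M\,\omega(s)\,ds\,d\lambda\,d\theta .
\end{aligned}
```

The constants $(b-a)$, $(b-a)^2/2$ and $(b-a)^3/6$ are the volumes of the respective integration domains.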

Proof

The proof of complex-valued Jensen’s inequality (i) is given in Chen and Song (2013). Therefore, we have to prove (ii) and (iii) as follows:

From (i), the following inequality holds:

By the Schur complement Lemma (Velmurugan et al. 2015), the above inequality becomes,

| 4 |

Integrating (4) from a to b, we have

| 5 |

By using Schur complement Lemma, the inequality (5) is equivalent to

This completes the proof of (ii). By applying the same procedure presented in the proof of (ii), the inequality (iii) can be easily derived. Thus, it is omitted.
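The single integral inequality (i) can be checked numerically on a discretized example. The sketch below assumes the standard complex-valued Jensen form, $\bigl(\int_a^b\omega\bigr)^{*}M\bigl(\int_a^b\omega\bigr)\le(b-a)\int_a^b\omega^{*}M\omega$, and uses a hypothetical Hermitian matrix `M` and a hypothetical function `omega`. Both integrals are approximated by the midpoint rule, for which the discrete inequality also holds by the Cauchy–Schwarz inequality.

```python
import cmath

def quad_form(M, v):
    # v^* M v for a Hermitian matrix M; the value is real up to rounding.
    n = len(v)
    return sum(v[i].conjugate() * M[i][j] * v[j]
               for i in range(n) for j in range(n)).real

def jensen_sides(omega, M, a, b, steps=2000):
    # Midpoint-rule approximations of both sides of the Jensen-type inequality.
    h = (b - a) / steps
    n = len(M)
    integral = [0j] * n      # approximates the integral of omega over [a, b]
    weighted = 0.0           # approximates the integral of omega^* M omega
    for k in range(steps):
        w = omega(a + (k + 0.5) * h)
        integral = [integral[i] + w[i] * h for i in range(n)]
        weighted += quad_form(M, w) * h
    return quad_form(M, integral), (b - a) * weighted

def omega(s):
    # Hypothetical complex vector-valued test function.
    return [cmath.exp(1j * s), s + 0.5j * s * s]

# Hypothetical Hermitian positive definite matrix (trace 3, determinant 1.5).
M = [[2.0, 0.5 - 0.5j],
     [0.5 + 0.5j, 1.0]]

lhs, rhs = jensen_sides(omega, M, 0.0, 2.0)
print(lhs, rhs, lhs <= rhs)
```

The gap between the two sides indicates how conservative the bound is for the chosen function; it vanishes only when `omega` is constant on the interval.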

Main result

In this section, by utilizing a Lyapunov–Krasovskii functional and integral inequalities, we will present a delay-dependent stability criterion for the complex-valued neural networks with leakage delays and additive time-varying delays (3) via linear matrix inequality.

Theorem 3.1

Under Assumption 2.4, the complex-valued neural network (3) is globally asymptotically stable if there exist positive definite Hermitian matrices J, M, N, O, P, Q, R, S, T, U, V, W, X, Y and a positive diagonal matrix G such that the following linear matrix inequality holds:

| 6 |

where , , , , , , , , , , , , , , , , , .

Proof

Consider the following Lyapunov–Krasovskii functional

| 7 |

where

Taking the time derivative of V(t) along the trajectories of system (3), it follows that

| 8 |

| 9 |

| 10 |

| 11 |

| 12 |

Applying Lemma 2.6 (i) to the integral terms of (10) yields

| 13 |

| 14 |

| 15 |

From (3) and (13)–(15), it follows that

| 16 |

By using Lemma 2.6 (ii), the integral terms in (11) can be estimated as follows:

| 17 |

| 18 |

Then, substituting (17) and (18) into (11) yields

| 19 |

Similarly, by using Lemma 2.6 (ii) and (iii), the integral terms in (12) can be estimated as follows:

| 20 |

| 21 |

Therefore, combining (12) with (20) and (21), we get

| 22 |

Moreover, based on Assumption 2.4, for any we have

| 23 |

Let . From (23), it can be seen that

Thus,

| 24 |

Combining (8), (9), (16), (19), (22) and (24), one can deduce that

where

If (6) holds, then we get

Hence, the complex-valued neural network (3) is globally asymptotically stable. This completes the proof.
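When a numerical package supports only real symmetric matrices, a complex Hermitian matrix inequality can be checked through its real embedding: a Hermitian matrix $H = X + iY$ is positive definite exactly when the real symmetric matrix $\begin{pmatrix} X & -Y \\ Y & X \end{pmatrix}$ is positive definite. The sketch below illustrates this on two small hypothetical matrices; it is not the YALMIP procedure used in the paper and does not solve for the decision variables of (6). A negative definite condition $\Omega<0$ can be tested by applying the same check to $-\Omega$.

```python
import math

def real_embedding(H):
    # Map a Hermitian matrix H = X + iY to the real symmetric matrix [[X, -Y], [Y, X]].
    n = len(H)
    top = [[H[i][j].real for j in range(n)] + [-H[i][j].imag for j in range(n)]
           for i in range(n)]
    bottom = [[H[i][j].imag for j in range(n)] + [H[i][j].real for j in range(n)]
              for i in range(n)]
    return top + bottom

def is_positive_definite(S, eps=1e-12):
    # Attempt a Cholesky factorization S = L L^T of a real symmetric matrix.
    # All pivots are positive exactly when S is positive definite.
    n = len(S)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = S[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= eps:
                    return False
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True

# Hypothetical test matrices (not solutions of the paper's inequalities).
H_pos = [[2.0 + 0j, 0.5 - 0.5j],
         [0.5 + 0.5j, 1.0 + 0j]]      # Hermitian, positive definite
H_indef = [[1.0 + 0j, 2.0j],
           [-2.0j, 1.0 + 0j]]         # Hermitian, eigenvalues 3 and -1

print(is_positive_definite(real_embedding(H_pos)))
print(is_positive_definite(real_embedding(H_indef)))
```

The embedding doubles the matrix dimension, and each eigenvalue of $H$ appears twice in the embedded matrix, so definiteness is preserved in both directions.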

Remark 3.2

In Dey et al. (2010), the asymptotic stability of continuous-time systems with additive time-varying delays was investigated by using free matrix variables. Wu et al. (2009) addressed the stability problem for a class of uncertain systems with two successive delay components. By using a convex polyhedron method, delay-dependent stability criteria were established in Shao and Han (2011) for neural networks with two additive time-varying delay components. However, when constructing the Lyapunov–Krasovskii functional, those results do not make full use of the information about the additive time-varying delays, which inevitably makes them conservative to some extent. In contrast, Cheng et al. (2014) used the full information about the additive time-varying delays in the constructed Lyapunov–Krasovskii functional and studied the delay-dependent stability of real-valued continuous-time systems, which gives less conservative results. Inspired by the above, in the present paper we also use the full information about the additive time-varying delays in the stability analysis of complex-valued neural networks.

Remark 3.3

In the existing literature, many researchers have studied the stability problem of neural networks and proposed good results; see, for example, Lakshmanan et al. (2013), Sakthivel et al. (2015), Xie et al. (2016) and the references therein. Most of these results are founded on the inequality Chen and Song (2013) and Velmurugan et al. (2015) studied the stability and passivity analysis of complex-valued neural networks with the help of the complex-valued Jensen’s inequality Based on the above analysis and discussion, in this paper the single integral terms are handled with the complex-valued Jensen’s inequality. Moreover, we introduce a double integral inequality as well as a triple integral inequality for estimating the derivative of the Lyapunov–Krasovskii functional for complex-valued neural networks.

Remark 3.4

In the following, we discuss the global asymptotic stability criterion for complex-valued neural networks with additive time-varying delays only. That is, when there is no leakage delay in (3), the system (3) becomes

| 25 |

Then, according to Theorem 3.1, we have the following corollary for the delay-dependent global asymptotic stability of system (25).

Corollary 3.5

Given scalars , , and , the equilibrium point of complex-valued neural networks (25) with additive time-varying delays is globally asymptotically stable if there exist positive Hermitian matrices M, N, P, Q, R, S, T, V, W, X and positive diagonal matrix G such that the following linear matrix inequality holds:

| 26 |

where ,

Proof

The proof immediately follows from the proof of Theorem 3.1, by setting , , and hence it is omitted. This completes the proof.

Remark 3.6

When or complex-valued neural networks with additive time-varying delays (25) reduces to single time-varying delays. Without loss of generality, assume that then (25) becomes

| 27 |

By letting in Corollary 3.5, we can easily obtain a sufficient condition for the global asymptotic stability of the complex-valued neural networks with time-varying delays (27), which is summarized in the following corollary.

Corollary 3.7

Given scalars and , the equilibrium point of complex-valued neural networks (27) is globally asymptotically stable if there exist positive Hermitian matrices M, P, Q, S, V, W and positive diagonal matrix G such that the following linear matrix inequality holds:

| 28 |

where , , , , ,

Remark 3.8

In Liu and Chen (2016), the global exponential stability of complex-valued neural networks with asynchronous time delays was established by decomposing the complex-valued network into its real and imaginary parts and constructing an equivalent real-valued system. Xu et al. (2014) derived an exponential stability condition for a class of complex-valued neural networks with time-varying delays and unbounded delays by using the vector Lyapunov–Krasovskii functional method, the homeomorphism mapping lemma and matrix theory. In both Liu and Chen (2016) and Xu et al. (2014), the stability results for complex-valued neural networks with constant or time-varying delays were obtained by separating the activation functions into their real and imaginary parts. However, when the activation functions cannot be expressed by separating their real and imaginary parts, the stability results in Liu and Chen (2016) and Xu et al. (2014) cannot be applied. It should be mentioned that the stability criterion proposed in the present paper is valid regardless of whether the activation functions can be expressed by separating their real and imaginary parts. Thus, the delay-dependent stability condition derived in this paper is more general than those in the existing literature (Liu and Chen 2016; Xu et al. 2014).

Numerical example

In this section, we give three numerical examples to demonstrate the derived main results.

Example 4.1

Consider a two-dimensional complex-valued neural networks (3) with the following parameters:

The activation functions are chosen as . The time-varying delays are considered as , , which satisfying and Take and by using the effective YALMIP toolbox in MATLAB, we can find the feasible solutions to linear matrix inequality in (6) as follows:

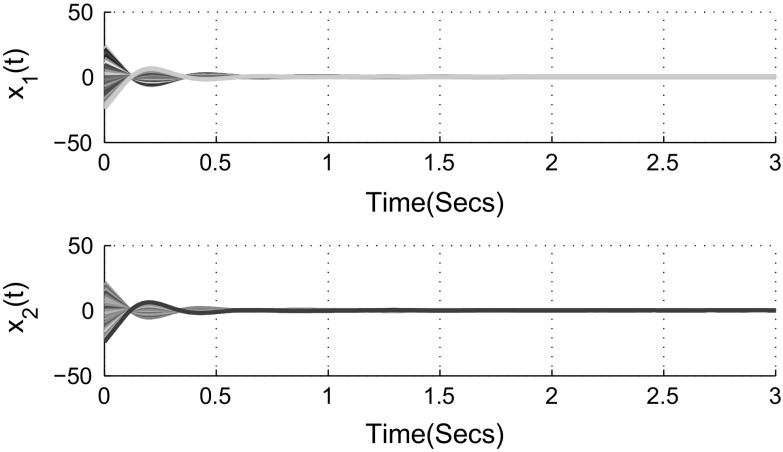

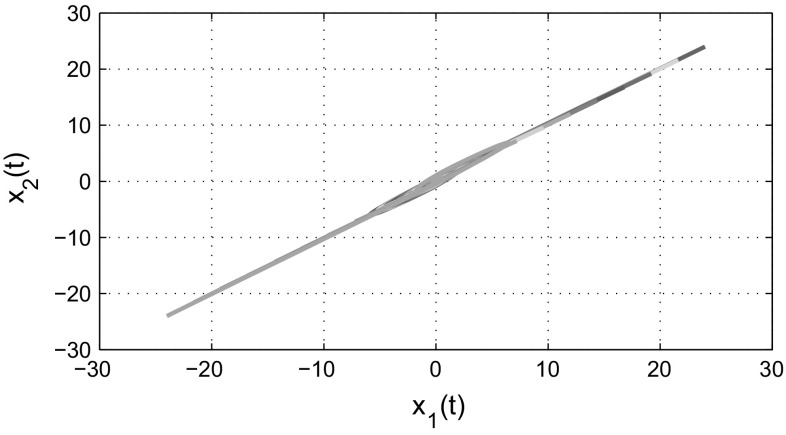

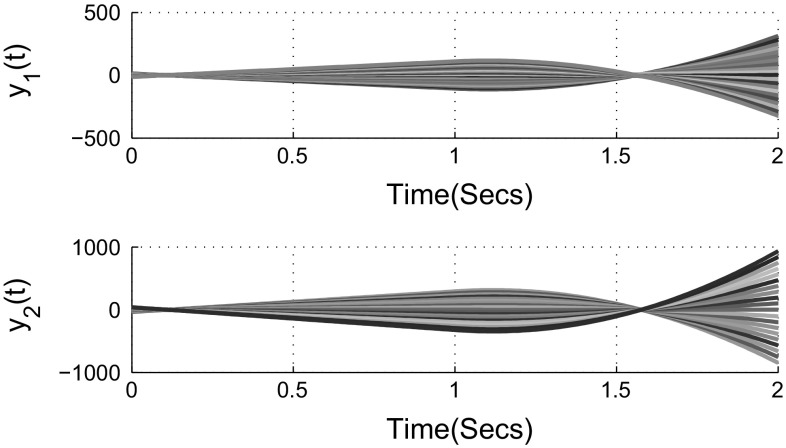

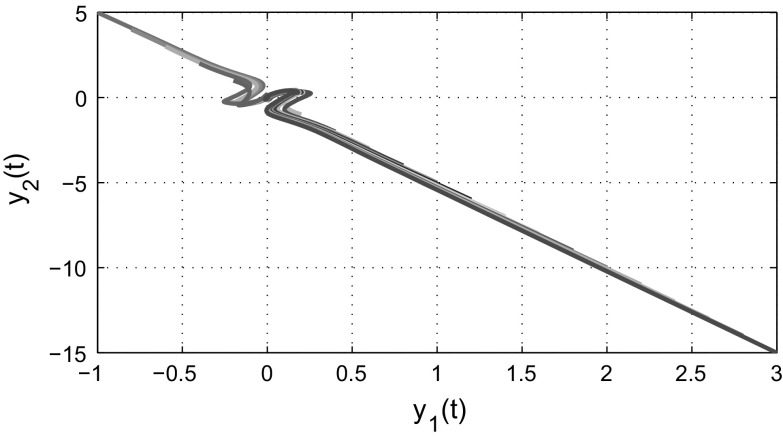

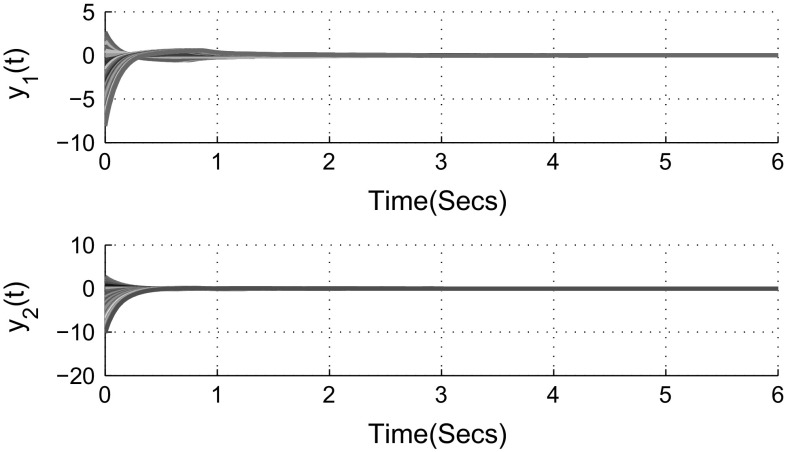

and According to Theorem 3.1, the complex-valued neural network (3) with leakage delay and additive time-varying delays is globally asymptotically stable. Figures 1 and 2 show the time responses of the real and imaginary parts of the system (3), respectively, with 21 initial conditions. The phase trajectories of the real parts of the system (3) are given in Fig. 3, and those of the imaginary parts in Fig. 4. Figures 5 and 6 depict the real and imaginary parts of the states of the network (3) with under the same 21 initial conditions. In that case, it is easy to check that the unique equilibrium point of the system (3) is unstable. This implies that the effect of the delay in the leakage term on the dynamics cannot be ignored when analyzing the stability of complex-valued neural networks.

Fig. 1.

State trajectories of real parts of the system (3) in Example 4.1

Fig. 2.

State trajectories of imaginary parts of the system (3) in Example 4.1

Fig. 3.

State trajectories of neural networks (3) between real subspace

Fig. 4.

State trajectories of neural networks (3) between imaginary subspace

Fig. 5.

Time response of real parts of the system (3) when

Fig. 6.

Time response of imaginary parts of the system (3) when
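Time responses of the kind shown in Figs. 1 to 6 can be produced by direct numerical integration of the delayed system. The following is a minimal sketch using the forward Euler method with a stored history, assuming the shifted model $\dot z(t) = -A z(t-\delta) + B g(z(t)) + C g(z(t-\tau_1(t)-\tau_2(t)))$ with diagonal $A$. All parameter values, the activation function and the delay functions are hypothetical, since the parameters of Example 4.1 are not reproduced in this text, and the sketch follows a single trajectory rather than 21 initial conditions.

```python
import math

def g(z):
    # Hypothetical activation: tanh applied to the real and imaginary parts.
    return complex(math.tanh(z.real), math.tanh(z.imag))

def simulate(a, B, C, delta, tau1, tau2, z0, t_end=10.0, h=1e-3):
    # Forward Euler integration; hist[k] approximates z(k*h).
    # The history on t <= 0 is taken to be the constant z0.
    n = len(z0)
    steps = int(round(t_end / h))
    hist = [list(z0)]

    def state(index):
        # Negative indices refer to the constant initial history.
        return hist[max(index, 0)]

    leak_lag = int(round(delta / h))
    for k in range(steps):
        t = k * h
        z = hist[k]
        z_leak = state(k - leak_lag)
        z_del = state(k - int(round((tau1(t) + tau2(t)) / h)))
        gz = [g(v) for v in z]
        gd = [g(v) for v in z_del]
        dz = [-a[i] * z_leak[i]
              + sum(B[i][j] * gz[j] + C[i][j] * gd[j] for j in range(n))
              for i in range(n)]
        hist.append([z[i] + h * dz[i] for i in range(n)])
    return hist

# Hypothetical parameters (not those of Example 4.1).
a = [3.0, 3.0]
B = [[0.3 + 0.2j, -0.2j],
     [0.1 + 0j, 0.2 - 0.3j]]
C = [[0.2 + 0j, 0.1 + 0.1j],
     [-0.1j, 0.3 + 0j]]

traj = simulate(a, B, C, delta=0.05,
                tau1=lambda t: 0.3 * math.sin(t) ** 2,
                tau2=lambda t: 0.2 * math.cos(t) ** 2,
                z0=[1.0 - 0.5j, -0.8 + 0.6j])
final_size = max(abs(v) for v in traj[-1])
print(final_size)
```

Plotting the real and imaginary parts of `traj` against time gives curves analogous to the time-response figures; rerunning with a larger `delta` gives a way to explore how the leakage delay affects convergence for a particular parameter set.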

Example 4.2

Consider the following two-dimensional complex-valued neural networks (25) with additive time-varying delays:

where

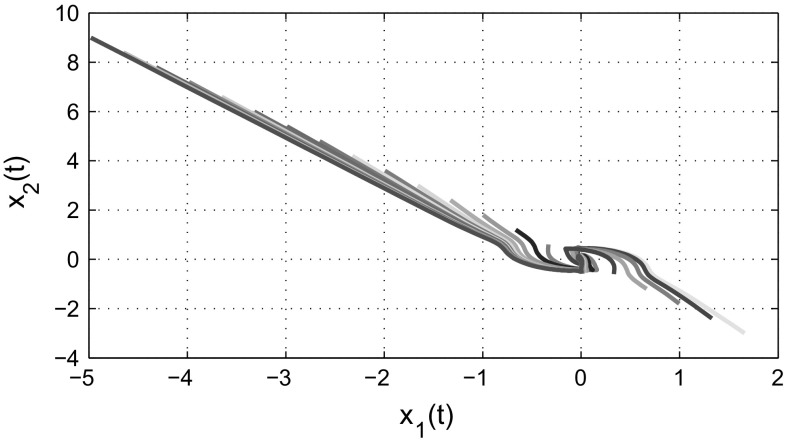

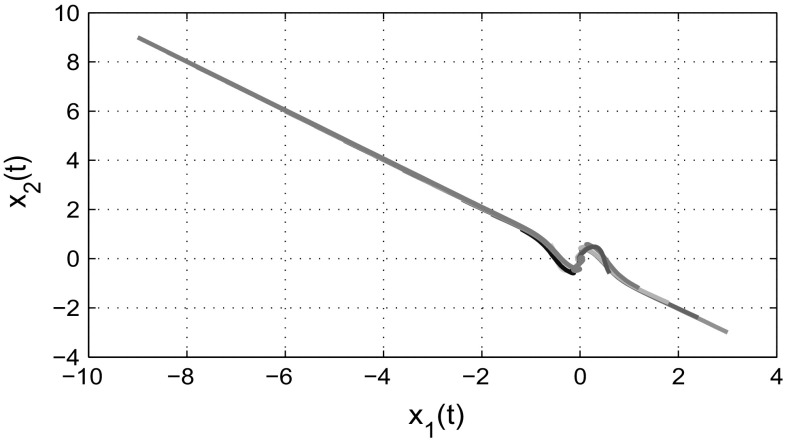

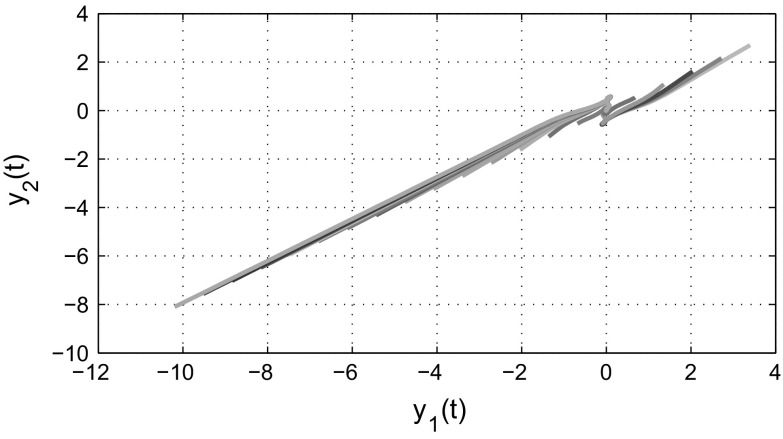

The additive time-varying delays are taken as and . Choose the nonlinear activation function as with , , , , By using the YALMIP toolbox in MATLAB with the above parameters, the linear matrix inequality (26) is found to be feasible. Figures 7 and 8 show that the real and imaginary parts of the state trajectories, respectively, converge to the zero equilibrium point for different initial conditions. The phase trajectories of the real and imaginary parts of the system (25) are depicted in Figs. 9 and 10, respectively. By Corollary 3.5, we can conclude that the neural network (25) is globally asymptotically stable.

Fig. 7.

Trajectories of the real parts x(t) of the states z(t) for the neural network (25)

Fig. 8.

Trajectories of the imaginary parts y(t) of the states z(t) for the neural network (25)

Fig. 9.

State trajectories of neural networks (25) between real subspace

Fig. 10.

State trajectories of neural networks (25) between imaginary subspace

Example 4.3

Consider the following two-dimensional complex-valued neural networks (27) with time-varying delays:

where

Choose the nonlinear activation function as with . The time-varying delays are chosen as which satisfies , By employing the MATLAB YALMIP Toolbox, we can find the feasible solutions to linear matrix inequalities in (28) as follows, which guarantee the global asymptotic stability of the equilibrium point.

Figures 11 and 12 display the state trajectories of the real and imaginary parts, respectively, of the complex-valued neural network (27), which converge to the origin for 21 randomly selected initial conditions. The phase trajectories of the real and imaginary parts of the system (27) are drawn in Figs. 13 and 14, respectively.

Fig. 11.

Time responses of real parts of the system (27) with 21 initial conditions

Fig. 12.

Time responses of imaginary parts of the system (27) with 21 initial conditions

Fig. 13.

Phase trajectories of real parts of the proposed system (27)

Fig. 14.

Phase trajectories of imaginary parts of the proposed system (27)

Conclusion

In this paper, the global asymptotic stability of complex-valued neural networks with leakage and additive time-varying delays has been studied. Sufficient conditions have been proposed to ascertain the global asymptotic stability of the addressed neural networks based on an appropriate Lyapunov–Krasovskii functional involving triple integral terms. The main results are expressed as complex-valued linear matrix inequalities, which can be easily solved with the YALMIP toolbox in MATLAB. Three numerical examples have been presented to illustrate the effectiveness of the theoretical results.

Acknowledgements

The authors wish to thank the editor and reviewers for a number of constructive comments and suggestions that have improved the quality of this manuscript. This work was supported by Science Engineering Research Board (SERB), DST, Govt. of India under YSS Project F.No: YSS/2014/000447 dated 20.11.2015.


Contributor Information

K. Subramanian, Email: subramaniangri@gmail.com

P. Muthukumar, Phone: 91-451-2452371, Email: pmuthukumargri@gmail.com

References

- Alofi A, Ren F, Al-Mazrooei A, Elaiw A, Cao J. Power-rate synchronization of coupled genetic oscillators with unbounded time-varying delay. Cogn Neurodyn. 2015;9(5):549–559. doi: 10.1007/s11571-015-9344-2.

- Bao H, Park JH, Cao J. Synchronization of fractional-order complex-valued neural networks with time delay. Neural Netw. 2016;81:16–28. doi: 10.1016/j.neunet.2016.05.003.

- Cao J, Li R. Fixed-time synchronization of delayed memristor-based recurrent neural networks. Sci China Inf Sci. 2017;60(3):032201. doi: 10.1007/s11432-016-0555-2.

- Cheng J, Zhu H, Zhong S, Zhang Y, Zeng Y. Improved delay-dependent stability criteria for continuous system with two additive time-varying delay components. Commun Nonlinear Sci Numer Simul. 2014;19(1):210–215. doi: 10.1016/j.cnsns.2013.05.026.

- Chen X, Song Q. Global stability of complex-valued neural networks with both leakage time delay and discrete time delay on time scales. Neurocomputing. 2013;121:254–264. doi: 10.1016/j.neucom.2013.04.040.

- Chen X, Song Q, Zhao Z, Liu Y. Global μ-stability analysis of discrete-time complex-valued neural networks with leakage delay and mixed delays. Neurocomputing. 2016;175:723–735. doi: 10.1016/j.neucom.2015.10.120.

- Chen X, Zhao Z, Song Q, Hu J. Multistability of complex-valued neural networks with time-varying delays. Appl Math Comput. 2017;294:18–35.

- Dey R, Ray G, Ghosh S, Rakshit A. Stability analysis for continuous system with additive time-varying delays: a less conservative result. Appl Math Comput. 2010;215(10):3740–3745.

- Gong W, Liang J, Cao J. Global μ-stability of complex-valued delayed neural networks with leakage delay. Neurocomputing. 2015;168:135–144. doi: 10.1016/j.neucom.2015.06.006.

- Gong W, Liang J, Cao J. Matrix measure method for global exponential stability of complex-valued recurrent neural networks with time-varying delays. Neural Netw. 2015;70:81–89. doi: 10.1016/j.neunet.2015.07.003.

- Guo D, Li C. Population rate coding in recurrent neuronal networks with unreliable synapses. Cogn Neurodyn. 2012;6(1):75–87. doi: 10.1007/s11571-011-9181-x.

- Hu M, Cao J, Hu A. Exponential stability of discrete-time recurrent neural networks with time-varying delays in the leakage terms and linear fractional uncertainties. IMA J Math Control Inf. 2014;31(3):345–362. doi: 10.1093/imamci/dnt014.

- Hu J, Wang J. Global stability of complex-valued recurrent neural networks with time-delays. IEEE Trans Neural Netw Learn Syst. 2012;23(6):853–865. doi: 10.1109/TNNLS.2012.2195028.

- Huang C, Cao J, Xiao M, Alsaedi A, Hayat T. Bifurcations in a delayed fractional complex-valued neural network. Appl Math Comput. 2017;292:210–227.

- Jiang D. Complex-valued recurrent neural networks for global optimization of beamforming in multi-symbol MIMO communication systems. In: Proceedings of international conference on conceptual structuration, Shanghai. 2008. pp 1–8.

- Lakshmanan S, Park JH, Lee TH, Jung HY, Rakkiyappan R. Stability criteria for BAM neural networks with leakage delays and probabilistic time-varying delays. Appl Math Comput. 2013;219(17):9408–9423.

- Li R, Cao J. Stability analysis of reaction-diffusion uncertain memristive neural networks with time-varying delays and leakage term. Appl Math Comput. 2016;278:54–69.

- Liu X, Chen T. Global exponential stability for complex-valued recurrent neural networks with asynchronous time delays. IEEE Trans Neural Netw Learn Syst. 2016;27(3):593–606. doi: 10.1109/TNNLS.2015.2415496.

- Manivannan R, Samidurai R, Cao J, Alsaedi A. New delay-interval-dependent stability criteria for switched Hopfield neural networks of neutral type with successive time-varying delay components. Cogn Neurodyn. 2016;10(6):543–562. doi: 10.1007/s11571-016-9396-y.

- Mattia M, Sanchez-Vives MV. Exploring the spectrum of dynamical regimes and timescales in spontaneous cortical activity. Cogn Neurodyn. 2012;6(3):239–250. doi: 10.1007/s11571-011-9179-4.

- Nait-Charif H. Complex-valued neural networks fault tolerance in pattern classification applications. IEEE Second WRI Glob Congr Intell Syst. 2010;3:154–157.

- Rakkiyappan R, Chandrasekar A, Cao J. Passivity and passification of memristor-based recurrent neural networks with additive time-varying delays. IEEE Trans Neural Netw Learn Syst. 2015;26(9):2043–2057. doi: 10.1109/TNNLS.2014.2365059.

- Sakthivel R, Vadivel P, Mathiyalagan K, Arunkumar A, Sivachitra M. Design of state estimator for bidirectional associative memory neural networks with leakage delays. Inf Sci. 2015;296:263–274. doi: 10.1016/j.ins.2014.10.063.

- Shao H, Han QL. New delay-dependent stability criteria for neural networks with two additive time-varying delay components. IEEE Trans Neural Netw. 2011;22(5):812–818. doi: 10.1109/TNN.2011.2114366.

- Shao H, Han QL. On stabilization for systems with two additive time-varying input delays arising from networked control systems. J Franklin Inst. 2012;349(6):2033–2046. doi: 10.1016/j.jfranklin.2012.03.011.

- Subramanian K, Muthukumar P. Existence, uniqueness, and global asymptotic stability analysis for delayed complex-valued Cohen–Grossberg BAM neural networks. Neural Comput Appl. 2016. doi: 10.1007/s00521-016-2539-6.

- Tanaka G, Aihara K. Complex-valued multistate associative memory with nonlinear multilevel functions for gray-level image reconstruction. IEEE Trans Neural Netw. 2009;20(9):1463–1473. doi: 10.1109/TNN.2009.2025500.

- Tian J, Zhong S. Improved delay-dependent stability criteria for neural networks with two additive time-varying delay components. Neurocomputing. 2012;77(1):114–119. doi: 10.1016/j.neucom.2011.08.027.

- Velmurugan G, Rakkiyappan R, Lakshmanan S. Passivity analysis of memristor-based complex-valued neural networks with time-varying delays. Neural Process Lett. 2015;42(3):517–540. doi: 10.1007/s11063-014-9371-8.

- Wu H, Liao X, Feng W, Guo S, Zhang W. Robust stability analysis of uncertain systems with two additive time-varying delay components. Appl Math Model. 2009;33(12):4345–4353. doi: 10.1016/j.apm.2009.03.008.

- Xie W, Zhu Q, Jiang F. Exponential stability of stochastic neural networks with leakage delays and expectations in the coefficients. Neurocomputing. 2016;173:1268–1275. doi: 10.1016/j.neucom.2015.08.086.

- Xu X, Zhang J, Shi J. Exponential stability of complex-valued neural networks with mixed delays. Neurocomputing. 2014;128:483–490. doi: 10.1016/j.neucom.2013.08.014.

- Yu K, Wang J, Deng B, Wei X. Synchronization of neuron population subject to steady DC electric field induced by magnetic stimulation. Cogn Neurodyn. 2013;7(3):237–252. doi: 10.1007/s11571-012-9233-x.

- Zhao Y, Gao H, Mou S. Asymptotic stability analysis of neural networks with successive time delay components. Neurocomputing. 2008;71:2848–2856. doi: 10.1016/j.neucom.2007.08.015.