Abstract

Bi-clustering is a widely used data mining technique for analyzing gene expression data. It simultaneously groups genes and samples of an input gene expression data matrix to discover bi-clusters that relevant samples exhibit similar gene expression profiles over a subset of genes. The discovered bi-clusters bring insights for categorization of cancer subtypes, gene treatments and others. Most existing bi-clustering approaches can only enumerate bi-clusters with constant values. Gene interaction networks can help to understand the pattern of cancer subtypes, but they are rarely integrated with gene expression data for exploring cancer subtypes. In this paper, we propose a novel method called Network-aided Bi-Clustering (NetBC). NetBC assigns weights to genes based on the structure of gene interaction network, and it iteratively optimizes sum-squared residue to obtain the row and column indicative matrices of bi-clusters by matrix factorization. NetBC can not only efficiently discover bi-clusters with constant values, but also bi-clusters with coherent trends. Empirical study on large-scale cancer gene expression datasets demonstrates that NetBC can more accurately discover cancer subtypes than other related algorithms.

Introduction

Gene expression means that cells transfer the genetic information in deoxyribonucleic acid (DNA) into a protein molecule with biological activity through transcription and translation in life process1. Microarry techniques enable researchers simultaneously measure expression levels of numerous genes2. The measurements of gene expression under many specific conditions are often represented as a gene expression data matrix, of which each row corresponds to a gene and each column represents the expression levels under a specific condition3, 4.The specific conditions usually relate to environments, patients, tissues, time points, and they are also synonymously called as samples. One key step of analyzing gene expression data is to identify clusters of genes, or of conditions4. For example, cancer can be classified into subtypes based on the pervasive differences in their gene expression patterns, and thus to provide a cancer patient with precise treatment5, 6.

Many clustering approaches have been proposed to analyze gene expression data, such as k-means7, hierarchical clustering8, local self-organizing maps9, local adaptive clustering10 and so on. Tavazoie et al.7 applied k-means to group gene expression data by assigning a sample to its nearest centroid, which is calculated by averaging all samples in that cluster. Eisen et al.8 applied average-link hierarchical clustering to cluster yeast gene expression data. Hierarchical clustering iteratively merges two closest clusters from singleton clusters, or partitions clusters into sub-ones by taking all samples as the single initial cluster. Average-link method uses the average distance between members of two clusters. These approaches have enabled researchers to explore the association between biological mechanisms and different physiological states, as well as to identify gene expression signatures. These traditional approaches, however, separately group gene expression data from genes dimension only. They can not discover the patterns that similar genes exhibit similar behaviors only over a subset of conditions (or samples), or relevant samples exhibit similar expression profiles over a subset of genes11. Patients of a cancer subtype may show similar expression profiles on a number of genes, instead of all5, 6.

Bi-clustering becomes an alternative to traditional clustering approaches for gene expression data analysis. Bi-clustering (or co-clustering), simultaneously groups genes and samples, it aims at discovering the patterns (or bi-clusters) that some genes exhibit similar expression values only on a subset of conditions12, 13. One can obtain a set of genes co-regulated under a set of conditions via bi-clustering. Bi-clustering shows great potentiality to find biological significance patterns14, which usually include: with constant values in the entire bi-cluster, with constant values in rows, with constant values in columns, with additive constant values and with multiplicative coherent values15, 16.

Many bi-clustering techniques have been applied to gene expression data analysis17. Cheng et al.12 pioneered a bi-clustering solution for grouping gene expression data, whose exact solution is known as a NP-hard problem. To combat with this problem, they used a greedy search to discover bi-clusters with low mean-squared residue score. Particularly, they iteratively removed or added genes and conditions from gene expression data matrix to find a bi-cluster, whose mean-squared residue score is below a certain threshold. However, this iterative solution can only produce one bi-cluster at a time, and it is hard to set a suitable threshold. Bergmann et al.18 proposed an iterative signature algorithm to iteratively search bi-clusters based on two pre-determined thresholds, one for matrix rows (representing genes) and the other one for matrix columns (representing samples). Obviously, the specification of these two thresholds affects the composition of bi-clusters. Therefore, similar as the solution proposed by Cheng et al.12, this signature algorithm is also heavily dependent on suitable setting of thresholds.

Researchers also move toward concurrently discovering multiple bi-clusters at a time. For instance, bi-clustering based on graph theory19, 20, information theory21, statistical method22, matrix factorization23. Sun et al.24 contributed a heuristic algorithm called Biforce, which transforms the data matrix into a weighted bipartite graph and judges the connection between nodes by a user-specified similarity threshold. Next, Biforce edits the bipartite graph by deleting or inserting edges to obtain bi-clusters. Kluger et al.20 proposed a spectral bi-clustering algorithm to simultaneously group genes and samples to find distinctive patterns from gene expression data. This algorithm is based on the observation that the structure of gene expression data can be found in the eigenvectors across genes or samples. It firstly computes several largest left and right singular vectors of the normalized gene expression data matrix, and then uses normalized cut25 or k-means on the matrix reconstructed by the left and right eigenvectors to obtain the row and column labels. Shan et al.22 proposed Bayesian co-clustering (BCC). BCC assumes that the genes (or samples) of gene expression data are generated by a finite mixture of underlying probability distributions, i.e., multivariate normal distribution. The entry of gene expression data matrix can be generated by the joint distributions of genes and conditions. One advantage of BCC is that it computes the probability of genes (or samples) belonging to several bi-clusters, instead of exclusively partitioning genes (or samples) into only one bi-cluster, but BCC suffers from a long runtime cost on large scale gene expression data. Dhillon et al.21 proposed an information-theoretic bi-clustering algorithm. Likewise BCC, this algorithm views the entry of gene expression data matrix as the estimation of joint probability of row-column distributions and optimizes these distributions by maximizing mutual information between entries of bi-clusters. But this approach is restricted to non-negative data matrix. Murali et al.26 proposed the concept of conserved gene expression motifs (xMOTIFs), each motif is defined as a subset of genes whose expressions are simultaneously conserved for a subset of samples. xMOTIFS aims to discover large conserved gene motifs that cover all the samples and classes in the data matrix. Hochreiter et al.27 proposed a factor analysis bi-clustering (FABIA) algorithm based on multiplicative model, which accounts for the linear dependency between gene expression profiles and samples. Lazzeroni et al.28 proposed a plaid model bi-clustering (Plaid), the entries of each bi-cluster are modelled by a general additive model and extracted by row and column indicator variables.

To explore bi-clusters with coherent trends, Cho et al.29 proposed a minimum sum-squared residue co-clustering (MSSRCC) solution to identify bi-clusters. MSSRCC iteratively obtains row and column clusters by a k-means like algorithm on row and column dimensions while monotonically decreasing the sum-squared residue. MSSRCC can discover multiple bi-clusters with coherent trends, or constant values. Gene expression data are always with a limit number of samples but with thousands of genes4. Distance between samples turns to be isometric as the number of genes (or gene dimension) increase30. MSSRCC firstly reduces the gene dimension by choosing genes with large deviation of expression levels among samples, and then applies bi-clustering on the pre-selected gene expression data to identify bi-clusters. However, this selection may lose the information hidden in the gene expression data, since the biological sense is not always straight31.

More recently, molecular interaction networks are also incorporated into bi-clustering to improve the performance of discovering cancer subtypes32–35. Knowing the subtype of a cancer patient can provide directional clues for precise treatment. Hofree et al.36 proposed a network-based stratification method to integrate somatic cancer with gene interaction networks. This approach initially groups cancer patients with mutations in similar network regions and then performs bi-clustering on the gene expression profiles using graph-regularized non-negative matrix factorization37. Liu et al.38 proposed a network-assisted bi-clustering (NCIS) to identify cancer subtypes via semi-non-negative matrix factorization37. NCIS assigns weights to genes as the importance indicator of genes in the clustering process. The weight of each gene refers to both the gene interaction network and gene expression profiles. However, NCIS can only discover bi-clusters with constant values.

The identified bi-clusters by a bi-clustering algorithm depend on the adopted objective function of that algorithm17. MSSRCC uses sum-squared residue as the objective function but it does not incorporate the gene interaction network. NCIS assigns weights to genes by referring to both the absolute deviation of genes expression profiles among samples and gene interaction network, but NCIS can only find bi-clusters with constant values, since its objective function is to minimize the distance between all entries of a bi-cluster and the centroid, defined by average of all entries in that bi-cluster.

To simultaneously discover multiple bi-clusters with constant or coherent values and to synergy bi-clustering with gene interaction network for cancer subtypes discovery, we introduce a novel method called Network aided Bi-Clustering (NetBC for short). NetBC firstly assigns weights to genes based on the structure of gene interaction network and the deviation of gene expression profiles. Next, it iteratively optimizes sum-squared residue to generate the row and column indicative matrices by matrix factorization. After that, NetBC takes advantage of the row and column indicative matrices to generate bi-clusters. To quantitatively and comparatively study the performance of NetBC, we test NetBC and other related comparing methods on several publicly available cancer gene expression datasets from The Cancer Genome Atlas (TCGA) project39. We use the clinical features of patients to evaluate the performance because the true subtypes of these samples belonging to are unknown. Experimental results show that NetBC can better group patients into subtypes than comparing methods. We further conduct experiments on cancer gene expression datasets with known subtypes to comparatively study the performance of NetBC. NetBC again demonstrates better results than these methods.

Results and Discussion

To comparatively evaluate the performance of the proposed NetBC, we compared NetBC with NCIS38, MSSRCC29, BCC22, Cheng and Church (CC)12, BiMax14, Biforce24, xMOTIFs26, FABIA27, and Plaid28. Since NCIS, MSSRCC and BCC aim to extract non-overlapping bi-clusters with checkerboard structure, we compare NetBC with NCIS, MSSRCC and BCC on separating samples on two large scale cancer gene expression datasets from TCGA34 and several cancer gene expression datasets with known subtypes. CC, BiMax, Biforce, xMOTIFs, FABIA, and Plaid aim to extract arbitrarily positioned overlapping bi-clusters, we compare NetBC with CC, BiMax, Biforce, xMOTIFs, FABIA, and Plaid by relevance and recovery on synthetic datasets with implanted bi-clusters.

Determining the number of Gene clusters (k) and sample clusters (d)

Determining the number of clusters is a challenge for most clustering methods. Here we adopt a widely used method to find the number of gene clusters k (or sample clusters d) that best fits the gene expression data matrix38, 40. If the number of gene clusters k (or sample clusters d) is suitable, we would expect that the gene separation (or sample separation) would change very little in different runs. For each run, we define an adjacency matrix of genes M g with size m×m and an adjacency matrix of samples M s with size n×n, when gene i and gene j belong to the same cluster and , otherwise. Similarly, when sample i and sample j belong to the same cluster and , otherwise. The consensus matrices and are the average of many base and , which are obtained by repeatedly running the clustering method. The entry of () is among 0 and 1. reflects the similarity between genes and denotes the distance between genes. If the selection of k is suitable, is rather stable among multiple runs. In other words, is close to 0 or 1. If the selection of d is suitable, will not sharply fluctuate in different runs. We use the cophenetic correlation coefficient and to evaluate the stability of the consensus matrix. The cophenetic correlation coefficient is obtained by calculating Pearson correlation between the distance matrix and the distance matrix obtained by the linkage used in the reordering of 38, 40. To determine suitable k and d, we evaluate the stability of bi-clustering results over a range of combinations with k and d. We select the combination of k and d that produces the largest .

Results on TCGA cancer gene expression data

To comparatively evaluate the performance of the proposed NetBC, we compare NetBC with other related and representative bi-clustering methods on separating samples, including NCIS38, MSSRCC29, and BCC22 on two large scale cancer gene expression data from TCGA34. Since all the selected comparing aim to extract non-overlapping bi-clusters with checkerboard structure, we can use the dependence test between different clinical features and the discovered subtypes to evaluate their performance.

The lung cancer gene expression data contains 1298 patients (samples) with gene expression profiles of 20530 genes. The cancer subtypes of these samples are unknown. For comparison, we adopts the clinical features to study the performance of NetBC and these comparing methods. The clinical features are survival analysis, percent lymphocyte, eml4 alk translocation performed, pathologic stage, percent tumor cells stage, percent tumor nuclei. We choose relapse-free survival (RFS) for survival analysis. RFS means the length of time after primary treatment to a cancer patient that survives without any signs or symptoms of that cancer. RFS is one way to measure how well the treatment works. Percent lymphocyte means different percentages of infiltration of lymphocyte. Eml4 alk translocation clinical feature means whether Eml4 gene and alk gene are fused, the fusion of these two genes can lead to lung cancer. Pathologic stage represents different stages of the cancer pathologic. Percent tumor cell stage represents the percentages of tumor cells in the total cells. Percent tumor nuclei stage represents the percentages of tumor nuclei in a malignant neoplasm specimen. After removing samples with missing data of clinical features, 486 samples with 20530 genes are remained in the lung cancer dataset.

The breast cancer gene expression dataset contains 1241 patients with 17814 genes. Removing the samples that lack of clinical data, we finally retain 416 samples with 17372 genes. The clinical features to evaluate these bi-clustering methods contain AJCC Stage, Converted stage, Node coded, Tumor coded, Percent normal cells, and Percent tumor nuclei. We also make survival analysis for breast cancer, but no method has a p-value smaller than 0.05, one possible reason is the insufficient clinical data. The AJCC stage represents the different stages of the cancer based on the present lymph nodes. Converted stage represents different stages of the cancer. Node coded means different Node status of patients. Tumor coded means different types of tumor. Percent normal cells represents different percentages of normal cells in the malignant neoplasm specimen. Percent tumor nuclei represents different percentages of the tumor nuclei in the malignant neoplasm specimen.

The gene interaction network used for experiments are combined with networks collected from BioGRID41 (version: 3.4.138, access date: July 1, 2016), HPRD42 (version: 9, access date: February 15, 2017) and STRING43 (version: 10.0, access date: February 15, 2017). To fairly compare NetBC with NCIS, the collected gene interaction network for both NetBC and NCIS is directed. Since the TCGA cancer gene expression data is too large, we use k-means to initialize the indicative matrix R and C of NetBC, NCIS and MSSRCC. The number of iterations for these methods is set as 300. We set k = 7 and d = 6 for the TCGA lung cancer gene expression data and k = 11 and d = 6 for the TCGA breast cancer gene expression data based on the cophenetic correlation coefficient over a range of combinations of k and d (k from 1 to 12, and d from 4 to 6).

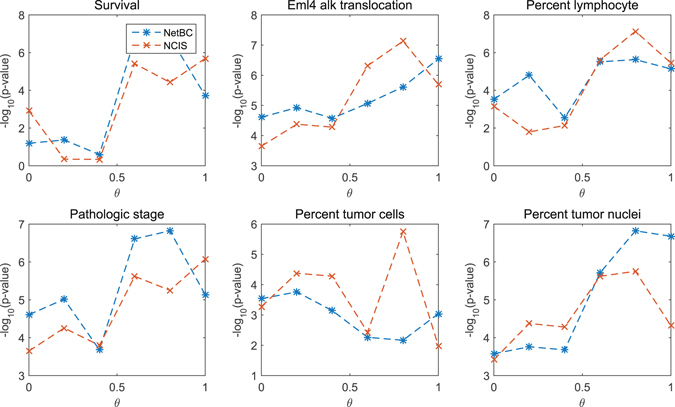

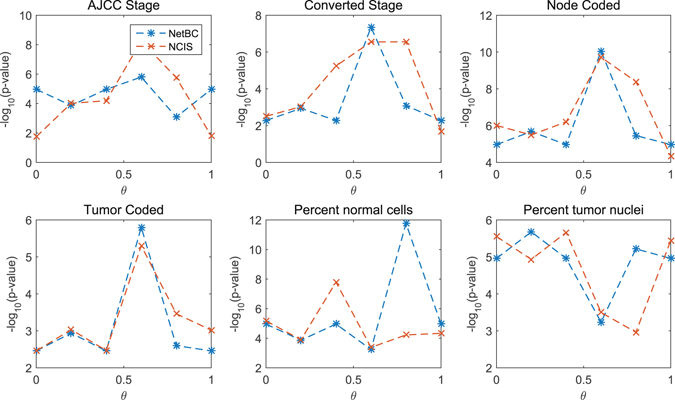

θ is a scalar parameter to balance the contribution of gene interaction network and the deviation of gene expression profiles among samples when assigning genes weights. The significance levels of the difference between different clinical features and subtypes discovered by NetBC and NCIS with a range over different θ are given in Fig. 1 (Lung) and Fig. 2 (Breast). The p-value is adjusted by Benjamini & Hochberg method44. From Fig. 1 and Fig. 2, we can see that the input value of θ affects the experimental results of NetBC and NCIS. θ ∈ (0,1) means assigning weights to genes according to both the variation of gene expression levels and gene interaction network. We can find that NetBC and NCIS with θ ∈ (0.5, 1) show better performance than their cousins θ ∈ (0,0.5) in most cases. This observation demonstrates that assigning weights to genes according to both the variation of gene expression levels and gene interaction network can improve the performance of bi-clustering than using gene expression profiles along. NetBC also outperforms NCIS in majority cases.

Figure 1.

p-value of the dependence test between different clinical features and sub-types of Lung cancer discovered by NetBC (or NCIS) under different input values of θ For the survival time, we use logrank test. For the eml4 alk translocation performed, percent lymphocyte infiltration, percent tumor nuclei, and percent tumor nuclei, we use the Chi-squared test. The p value is adjusted by Benjamini & Hochberg method. Larger −log10(p-value) means better performance.

Figure 2.

p-value of the dependence test between different clinical features and sub-types of Breast cancer discovered by NetBC (or NCIS) under different input values of θ For all the clinical features, we use the Chi-squared test. The p value is adjusted by Benjamini & Hochberg method. Larger −log10(p-value) means better performance.

To fairly compare NetBC, NCIS with BCC and MSSRCC, θ is setting as 0 for both NetBC and NCIS. Parameters of BCC are the number of gene clusters (or samples clusters), and the initialization of Gaussian distribution (μ, σ). μ and σ are fixed as random values provided in their demo codes, since there is no prior knowledge about the distribution of samples. The significance levels of the difference between subtypes discovered by these bi-clustering methods and the clinical features are given in Table 1 (Lung) and Table 2 (Breast). The p-value is adjusted by Benjamini & Hochberg method44. In these tables, a smaller p value indicates better results. From Table 1 and Table 2, we can see that NetBC has smaller p-value than other methods on most clinical features. Given the p-value threshold 0.05, NetBC successfully divides cancer patients into subtypes according to clinical features. BCC can not separate the cancer subtypes as well as others. That is principally because it assumes that patients are generated by a finite mixture of underlying probability distributions. Since the cancer gene expression data has limit samples with a large amount of genes, it is difficult to well estimate these underlying distributions. Although both NetBC and NCIS assign weights to genes as the importance indicator of genes, NetBC performs much better than NCIS on most clinical features. The main difference between them is that the objective function of NetBC is to minimize the sum-squared residue, while NCIS is to minimize the sum-squared distance between entries and centroids of bi-clusters. NCIS can only discover bi-cluster with constant values, NetBC can not only discover bi-clusters with constant values but also bi-clusters with coherent trend values. We can also observe that NetBC outperforms MSSRCC. MSSRCC utilizes a similar objective function as NetBC to minimize the sum-squared residue, the main difference between them is that NetBC assigns weights to genes, but MSSRCC does not. NetBC uses matrix factorization to get row and column indicative matrices and MSSRCC iteratively obtains row and column clusters by a k-means like algorithm on row and column dimensions. This observation shows that assigning weights to genes can improve the performance of bi-clustering.

Table 1.

p value of the dependence test between different clinical features and the discovered subtypes of Lung cancer.

| Method | Survival | Eml4 alk translocation | Percent lymphocyte | Pathologic stage | Percent tumor cells | Percent tumor nuclei |

|---|---|---|---|---|---|---|

| NetBC | 3.03 × 10−1 | 9.98 × 10 −3 | 2.95 × 10−2 | 9.98 × 10 −3 | 2.91 × 10 −2 | 2.79 × 10 −2 |

| NCIS | 5.42 × 10−2 | 2.60 × 10−2 | 4.26 × 10−2 | 2.60 × 10−2 | 3.78 × 10−2 | 3.28 × 10−2 |

| MSSRCC | 1.19 × 10 −2 | 7.85 × 10−2 | 2.48 × 10 −2 | 4.34 × 10−2 | 6.30 × 10−2 | 5.23 × 10−2 |

| BCC | 8.54 × 10−1 | 3.78 × 10−1 | 8.54 × 10−1 | 8.54 × 10−1 | 9.97 × 10−1 | 7.57 × 10−1 |

For the survival time, we use logrank test. For the eml4 alk translocation performed, percent lymphocyte infiltration, percent tumor nuclei, and percent tumor nuclei, we use the Chi-squared test. The p value is adjusted by Benjamini & Hochberg method. The smaller the p value, the better the performance is.

Table 2.

p value of the dependence test between different clinical features and the discovered subtypes of Breast cancer.

| Method | AJCC Stage | Converted Stage | Node Coded | Tumor Coded | Percent normal cells | Percent tumor nuclei |

|---|---|---|---|---|---|---|

| NetBC | 6.96 × 10 −3 | 1.02 × 10−1 | 6.96 × 10 −3 | 8.50 × 10−2 | 7.01 × 10 −3 | 7.01 × 10 −3 |

| NCIS | 1.80 × 10−2 | 4.82 × 10−2 | 4.11 × 10−3 | 4.82 × 10 −2 | 2.03 × 10−2 | 7.20 × 10−3 |

| MSSRCC | 2.30 × 10−2 | 2.62 × 10 −2 | 1.42 × 10−2 | 7.13 × 10−2 | 1.42 × 10−2 | 3.17 × 10−2 |

| BCC | 9.5 × 10−1 | 9.5 × 10−1 | 9.5 × 10−1 | 9.5 × 10−1 | 9.5 × 10−1 | 9.5 × 10−1 |

For all the clinical features, we use the Chi-squared test. The p value is adjusted by Benjamini & Hochberg method. The smaller the p value, the better the performance is.

Results on cancer gene expression data with known subtypes

We also apply NetBC, NCIS, MSSRCC and BCC in clustering cancer gene expression datasets with known subtypes. Table 3 provides the brief description of these datasets. Breast contains three subtypes: samples from patients who developed distant metastases within 5 years (34 samples), samples from patients who continued to be disease-free after a period of at least 5 years (44 samples), samples from patients with BRCA germline mutation (20 samples). ALL contains three types of leukemia: 19 acute lymphoblastic leukemia (ALL) B-cell, 8 ALL (T-cell), 11 acute myeloid leukemia (AML). Liver contains four subtypes: sprague dawley (67 samples), wistar (32 samples), wistar kyoto (21 samples), fisher (2 samples). Leukemia contains three subtypes: ALL (B-cell) (10 samples), ALL (T-cell) (samples), AML (10 samples). Tumor contains two subtypes: cancer patients (31 samples) and normal (19 samples). DLBCLB includes three subtypes of diffuse large B cell lymphoma, ’oxidative phosphorylation’ (42 samples), ’B-cell response’ (51 samples), and ’host response’ (87 samples). DLBCLC contains four subtypes of diffuse large B cell lymphoma according to statistical differences of the survival analysis: 17 samples, 16 samples, 13 samples, 12 samples. MultiB contains four subtypes: breast cancer (5 samples), prostate cancer (9 samples), lung cancer (7 samples), and colon cancer (11 samples). The adopted gene interaction networks are also collected from BioGRID41, HPRD42 and STRING43.

Table 3.

Details of 8 cancer gene expression datasets.

| Dataset | Source | #Subtypes(d) | #samples(n) | #genes(m) |

|---|---|---|---|---|

| Breast | 53 | 3 | 98 | 1213 |

| ALL | 54 | 3 | 38 | 5571 |

| Leukemia | 54 | 3 | 30 | 4412 |

| Tumor | 54 | 2 | 50 | 12422 |

| Liver | 55 | 4 | 122 | 8799 |

| DLBCLB | 56 | 3 | 180 | 661 |

| DLBCLC | 56 | 4 | 58 | 3759 |

| MultiB | 56 | 4 | 32 | 5565 |

#Subtypes is the number of cancer subtypes (or clusters), #samples is the number of samples, and #genes is the number of genes.

Since the ground truth sample clusters of these datasets are known, we adopt two widely used metrics: rand index (RI)45 and F1-measure 46 to evaluate the quality of clustering. Suppose the ground truth subtypes of samples in the gene expression data matrix are , the clusters produced by a clustering method are . represents the number of pairs of samples that are both in the same clusters of and also both in the same clusters of ; represents the number of pairs of samples that are in the same clusters of but in different clusters of ; represents the number of pairs of samples that are in different clusters of but in the same clusters of ; represents the number of pairs of samples that are in different clusters of and also in different clusters of . RI is defined as follows:

F1-measure is the harmonic mean of precision and recall and is defined as follows:

where Pr and Re are precision and recall, defined as follows:

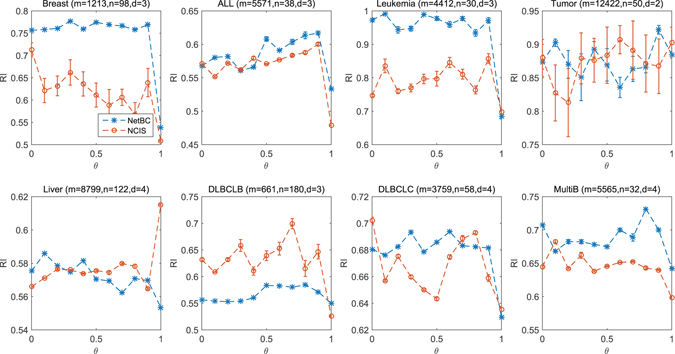

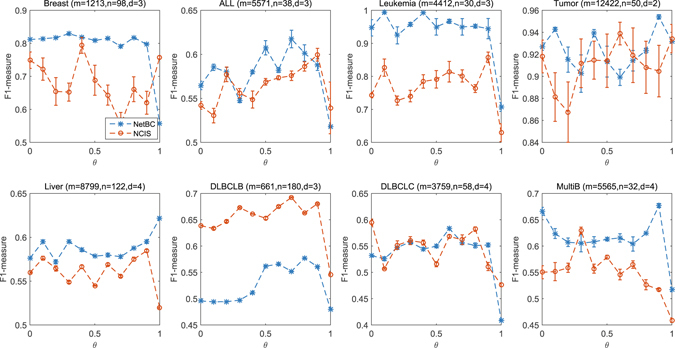

We compare the performance of NetBC with NCIS, MSSRCC and BCC. We perform the experiment by randomly initializing the row and column indicative matrices R and C of NetBC, NCIS and MSSRCC. The number of iterations for NetBC and NCIS is set as 300, and θ = 0 is used for both NetBC and NCIS. The parameter setting of BCC is similar to the experiment on TCGA cancer gene expression data. We perform 10 independent experiments and report the average results for each method on a particular dataset. We determine the number of gene clusters k based on the cophenetic correlation coefficient and set d as the ground truth number of cancer subtypes. Figure 3 provide the experimental results of these comparing methods with respect to RI and F1-measure. We can observe that NetBC performs better than other methods under both RI and F1-measure. This observation indicates that NetBC is an effective bi-clustering approach to identify cancer subtypes.

Figure 3.

RI (a) and F1-measure (b) of different bi-clustering methods on eight datasets.

We also compare NetBC and NCIS when incorporating gene interaction network on real cancer gene expression data with known subtypes. θ controls the balance of referring to gene interaction network and the variation of gene expression profiles when assigning weights to genes. We analyze the influence of θ by varying its value from 0 to 1 with stepsize 0.1. We perform the experiment by randomly initializing the indicative matrices R and C of NetBC, NCIS, and fix the number of iterations for both NetBC and NCIS as 300. Figures 4 and 5 reveal the RI and F1-measure of NetBC and NCIS on eight cancer gene expression datasets. The reported experimental results are the average of ten independent runs for each particular dataset under each input value of θ. We can see that NetBC consistently outperforms NCIS over a range of θ values in most cases. This experimental results again demonstrate that NetBC improves the performance of cancer subtypes discovery. We can observe that NetBC (0.1 ≤ θ ≤ 0.9) generally has better performance than NetBC when θ = 0 (or θ = 1). From these observations, we can conclude that assigning weights to genes by referring to both gene expression profiles and gene interaction network shows advantage than assigning weights to genes by using gene interaction network (or by gene expression profiles) alone. However, there is no clear pattern to choose the most suitable θ. The possible reason is that θ is not only related to the deviation of expression profiles, but also the quality of gene interaction network and gene expression data. Adaptively choosing a suitable θ is an important future pursue. In summary, these empirical study shows that integrating gene interaction networks with gene expression profiles can generally boost the performance of bi-clustering in discovering cancer subtypes.

Figure 4.

RI under different input values of θ.

Figure 5.

F1-measure under different input values of θ.

Results on synthetic datasets

Let denote the set of implanted bi-clusters and denote the set of bi-clusters discovered by a bi-clustering method. We can measure the similarity between the implanted bi-clusters and discovered bi-clusters by using the average bi-cluster relevance score14 as follows:

| 1 |

where each (or ) contains a gene set (or ) and a sample set (or ). The relevance score reflects to what extent the discovered bi-clusters represent implanted bi-clusters in the gene dimension. Similarly, average bi-cluster recovery is defined as , which evaluates how well implanted bi-clusters are covered by a bi-clustering method.

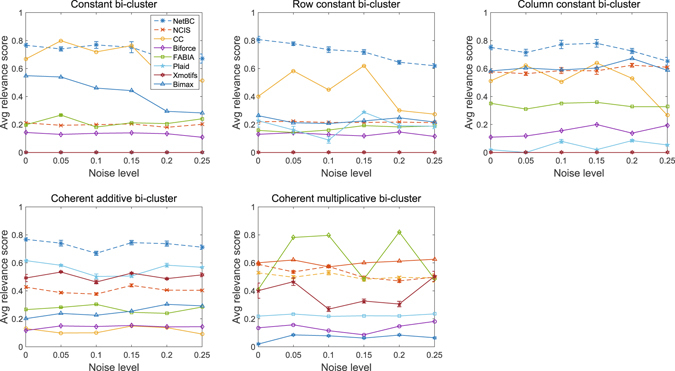

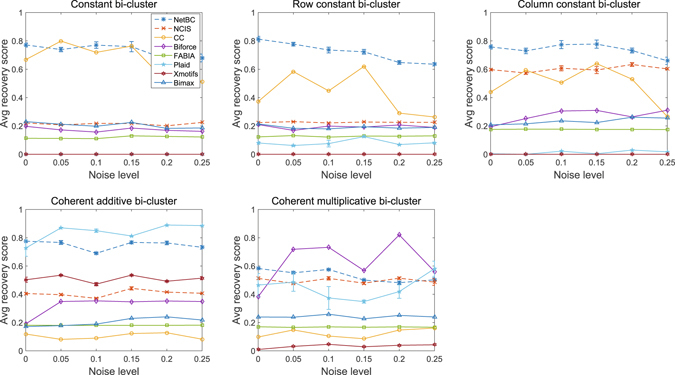

Here, we compare NetBC with several bi-clustering methods on synthetic datasets with known bi-clusters. The codes of these comparing methods are online available, including Cheng and Church (CC)12, BiMax14, FABIA27, Plaid28, xMOTIFs26 and Biforce24.

Since NetBC and NCIS aim to discover non-overlapping bi-clusters, the synthetic datasets are generated without overlapping bi-clusters in the same way as that done by Prelic et al.14 and Wang et al.47 Particularly, these synthetic datasets are generated with five different types of bi-clusters, each synthetic dataset contains one type of bi-clusters. The five types of bi-clusters are (i) constant bi-cluster; (ii) row-constant bi-cluster; (iii) column-constant bi-cluster; (iv) additive coherent bi-cluster (or shift bi-cluster); (v) multiplicative coherent bi-cluster (or scale bi-cluster). The background matrices of these synthetic datasets are with entries randomly chosen from Gaussian distribution N(0, 1). Each bi-cluster is generated by choosing a submatrix from the background matrix with the entries modified according to one of the five rules: (i) constant bi-cluster is generated by randomly selecting an entry of a submatrix and replacing other entry values with this entry value; (ii) row-constant bi-cluster is generated by randomly selecting a base column within a selected submatrix and copying it to other columns in this submatrix; (iii) column-constant bi-cluster is generated by randomly selecting a base row within a selected submatrix and copying it to other rows in this submatrix; (iv) additive coherent bi-cluster is generated by randomly selecting a base row within a selected submatrix and replacing other rows in this submatrix by shifting the base rows; (v) multiplicative coherent bi-cluster is generated by randomly selecting a base row within a selected submatrix and replacing other rows in this submatrix by scaling the base rows. Then, we also add noise to these synthetic datasets to study the robustness of these comparing methods. Noise is simulated by adding random value from normal distribution to each entry of the synthetic gene expression data matrix. The noise level is increased by enlarging the standard deviation σ from 0.05 to 0.25 with stepsize 0.05.

It is crucial to select suitable parameters for bi-clustering tools. We follow the solution used by Eren et al.48 and Sun et al.24, 49 to select major parameters of these comparing bi-clustering tools over a range of values when they perform the best in the specified range. We set the number of the bi-clusters as the ground truth number of implanted bi-clusters for all bi-clustering approaches. δ and α are critical to the accuracy and runtime of CC. δ controls the maximum mean squared-residue in a bi-cluster. By default δ = 1, we run CC with different δ between 0 and 1 with stepsize 0.01. We set δ = 0.03, since CC performs the best when δ = 0.03. α controls the tradeoff between running time and accuracy. By default α=1, a larger α produces higher accuracy but asks for more runtime, we set α = 1.5 since the synthetic datasets are not too large. The number of max iterations for each layer in Plaid affects its results and its default value is 20, and we set it as 50 since Plaid performs best with the number of max iterations fixed as 50. We also select the row.release and column.release of Plaid in [0.5, 1] and set them as 0.6. BiMax and xMOTIFs are dependent on how the data are discretized. We performs xMOTIFs on synthetic data discretized with number of levels from 0 and 50 with stepsize 1. xMOTIFs performs best on synthetic data with the number of levels as 5. BiMax requires binary input data, we perform BiMax on synthetic data that are binary discretized with different thresholds from 0.05 to 1 with stepsize 0.05. BiMax performs best with threshold 0.1. We run Biforce with parameter t 0 (edge threshold) from 0.05 to 1 with setpsize 0.05. Biforce performs best with t 0 = 0.1. NetBC and NCIS depend on the initialization of indicative matrices R and C, we randomly initialize them for both NetBC and NCIS for all experiments on synthetic datasets. The number of iterations for NetBC and NCIS is set as 300. θ is set as 0, since the gene interaction networks of these synthetic datasets are not available.

From Figs 6 and 7, we can see that, even without incorporating gene interaction networks, NetBC still achieves better performance than most bi-clustering methods on the constant, row constant, column constant, coherent additive bi-clusters. CC achieves relatively better performance on constant, row constant and column constant bi-clusters. Plaid achieves better performance on coherent additive bi-clusters than other methods. xMOTIFs is skilled in extracting additive bi-clusters. Bimax is good at extracting column constant bi-clusters. On the coherent multiplicative bi-clusters, Biforce performs better than NetBC. Furthermore, we can see that NetBC outperforms NCIS on all these five types of bi-clusters and NetBC is generally robust to noise.

Figure 6.

Relevance of bi-clustering methods on five types of bi-clusters.

Figure 7.

Recovery of bi-clustering methods on five types of bi-clusters.

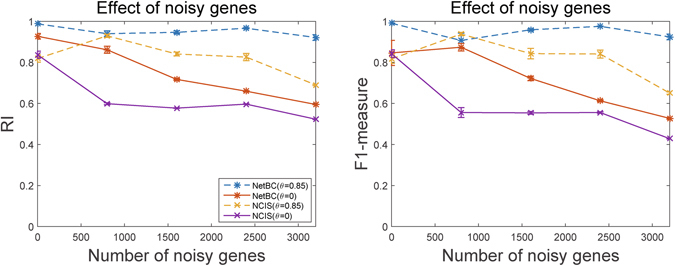

To further assess the performance of NetBC and NCIS, we compared their performance on separating samples in Leukemia cancer gene expression data with simulated noisy genes. Leukemia contains 38 samples and 4412 genes. The gene interaction network (obtained from BioGrid, HPRD and STRING) of Leukemia contains 162987 edges. We assign weights to genes according to the variation of gene expression profiles across samples and gene interaction network. Next, we choose genes with lowest weight as ‘uninformative’ genes and tag them as noisy genes. We permute the expression levels of these noisy genes with random numerics between the maximum and minimum value of the Leukemia gene expression data matrix. Figure 8 reports the results of NetBC and NCIS under different number of noisy genes.

Figure 8.

Performance of NetBC and NCIS under different number of noisy genes.

From this Figure, we can see that NetBC almost always outperforms NCIS, but the performance of NetBC and NCIS with θ = 0, and of NCIS with θ = 0.85 downgrades as the number of noisy genes increase. The reason is that noisy genes not only mislead the variance of gene expression profiles, but also successively mislead the weights assigned to genes. We can find that NetBC and NCIS with θ = 0.85 generally have better performance than with θ = 0, and NetBC with θ = 0.85 is much less affected by noisy genes than NCIS with θ = 0.85. These experimental results demonstrate that incorporating gene interaction network to assign weight to genes can improve the performance of bi-clustering than assigning weights to genes based on the variation of gene expression profiles alone, and also show that NetBC can more effectively incorporating gene interaction network than NCIS.

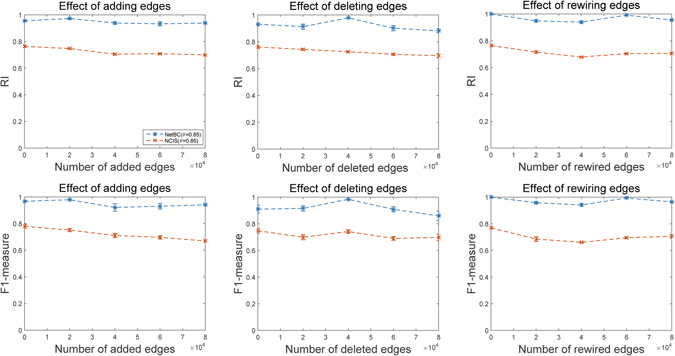

We also explore the performance of NetBC and NCIS over random perturbations in the gene interaction network. Figure 9 reports the experimental results of NetBC and NCIS with θ = 0.85 on Leukemia dataset with randomly added, deleted and rewired edges between genes in the network. Here, NetBC and NCIS with θ = 0 are not considered, since θ = 0 means the network is excluded. By comparing the results in Fig. 8 with those in Fig. 9, we can see that, even with some fluctuations both NetBC and NCIS are relatively robust to perturbations of network. NetBC sometimes even obtains better results as more edges added or deleted. This observation is accountable, since the edges in the original network are not complete but with some noisy (missing) edges, and some randomly added (deleted or perturbed) edges just coincide with missing (or noisy) edges. We believe the performance of NetBC and NCIS can be further improved with the improved quality of gene interaction network.

Figure 9.

Performance of NetBC and NCIS with randomly added, deleted and rewired edges of gene interaction network.

Runtime analysis

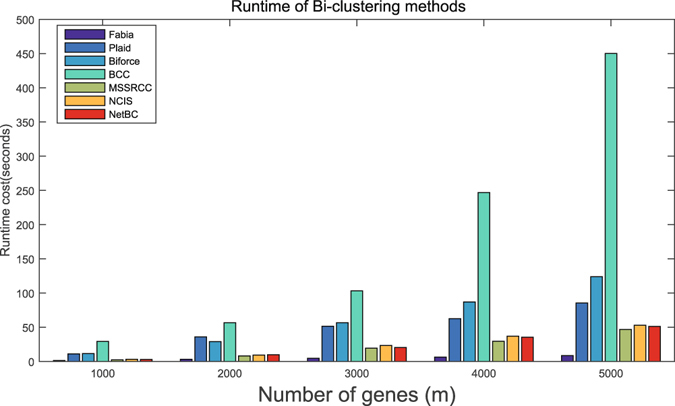

Bi-clustering is often involved with long runtime costs, especially when the gene expression data matrix is large. Therefore, it is highly challenging to develop an efficient bi-clustering algorithm. Suppose the number of iterations is T. In each iteration, NetBC takes to compute (see Method Section for meanings of these matrix symbols), to compute , to compute and to compute . Since , and m is generally larger than n. The overall time complexity of NetBC is . NCIS also works on the same size matrices, it has similar time complexity as NetBC.

Here, we record the runtime change of NetBC and of several widely used bi-clustering methods as the number of genes increases from 1000 to 5000 and fixed the number samples as 200, and report the recorded results in Fig. 10. All the comparing methods are implemented on a desktop computer (Windows 7, 8GB RAM, Intel(R) Core(TM) i5-4590). The parameters of these methods are sets as default values. From Fig. 10, we can see that Fabia runs faster than other comparing methods. The runtime cost of NetBC is slightly larger than MSSRCC, since NetBC additionally utilizes gene interaction networks to assign weights to genes. NetBC is slightly faster than NCIS and they both increase relative slowly with the increase number of genes. The runtime cost of BCC is the largest and increases sharply, since BCC assumes the genes (or samples) of gene expression data are generated by a finite mixture of underlying probability distributions and it takes much time to estimate these distributions. Similarly, Plaid assumes the values of bi-clusters can be explained by additive model, it also needs relatively larger runtime cost than other comparing methods. The runtime cost of Biforce is larger than Plaid. In summary, NetBC can hold comparable efficiency with state-of-the-art bi-clustering methods and it generally obtains better performance than related methods.

Figure 10.

Runtime costs of eight bi-clustering methods under different number of genes, the number of samples is fixed as 200.

Methods

Let represent the gene expression data for m genes and n samples, the (i, j)-th entry of G is given by g ij. A bi-cluster is a subset of rows that exhibit similar behavior across a subset of columns, and it can be described as a sub-matrix of G. Let represent a set of row indices for a row cluster and represent a set of column indices for a column cluster. A sub-matrix of G determined by and encodes a bi-cluster.

Objective

The target of bi-clustering is to discover multiple bi-clusters from a gene expression data matrix, all the entries of a bi-cluster exhibit similar numerical values as much as possible. This target can be achieved by reordering the rows and columns of the matrix to group similar rows and similar columns together13. Traditional clustering algorithms group the similar genes (or samples) together by assigning genes (or samples) to their nearest cluster centroids. Ideally, entries are with constant value in the entire bi-cluster. For real world gene expression data, constant bi-clusters are usually distorted by noises. A common criterion to evaluate a bi-cluster is the sum of squared differences between each entry of a bi-cluster and the mean of that bi-cluster17. The squared difference between an entry g ij and the mean of the corresponding bi-cluster is computed as below:

| 2 |

where is the mean of all entries in the bi-cluster, is the cardinality of . Bi-clustering tries to find all combinations of and , each combination has the minimum .

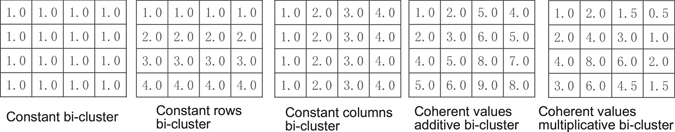

In fact, the criterion to evaluate a bi-clustering method depends on the types of bi-clusters that can be identified by that method15. The criterion defined in Eq. (2) aims at finding bi-clusters with constant values. However, researchers may be not only interested with bi-clusters with constant values, but also bi-clusters with clear trends (or patterns), which generally include five major patterns17: (i) with constant values in the entire bi-cluster; (ii) with constant values in rows; (iii) with constant values in columns; (iv) with additive coherent values; (v) with multiplicative coherent values. If , then corresponds to a bi-cluster with constant value. If (or ) with , then corresponds to a bi-cluster with constant value in rows. Similarly, if (or ), then corresponds to a bi-cluster with constant value in columns. If (or ), then corresponds to a bi-cluster with additive (or multiplicative) coherent values. These five patterns of bi-clusters are recognized to have biological significance. Figure 11 provides illustrative examples for these five patterns of bi-clusters.

Figure 11.

Example of the five types of bi-clusters.

To discover bi-clusters of these patterns, we use the squared-residue to quantify the difference between an entry g ij of a bi-cluster and the row mean, column mean and bi-cluster mean of that bi-cluster. Here we adopt a formula suggested by Cheng et al.12 as follows:

| 3 |

where is the row mean, is the column mean, is the bi-cluster mean. The smaller the squared residue h ij, the larger the coherence is.

Suppose G is partitioned into k row (gene) clusters and d column (sample) clusters. We use as the indicative matrix for gene clusters, if , gene i belongs to gene cluster . Similarly, we use matrix as the indicative matrix for sample clusters, if , sample j belongs to sample cluster d′. R and C has the following form:

Assume row-cluster has rows, and . Since there are k row clusters, we use to represent the row index set of -th row cluster. Then, we can obtain:

| 4 |

where and .

Similarly, we can obtain , . To explore multiple bi-clusters, NetBC minimizes the sum-squared residue over all genes and samples. Based on the above analysis, we can measure the overall sum-squared residue of multiple bi-clusters discovered by NetBC using G, R and C as follow:

| 5 |

where is the row mean, is the column mean, and is the bi-cluster mean of (i, j) entry of G in its corresponding bi-cluster, respectively.

The above objective function of NetBC can also be reformulated as:

| 6 |

where is an identity matrix, since .

Assigning weights to genes

Gene expression data usually has a large amount of genes but with a few samples. The similarity between samples turns to be isometric as the gene dimensionality increasing30. Usually, genes are selected based on the absolute deviation value of the gene expression profile among samples to reduce the gene dimension. Feature selection methods, like principle component analysis (PCA)50, are applied to select genes to reduce the gene dimension. But selecting a subset of genes may result in information loss on clustering gene expression data especially when the biological sense is usually not straight. For this reason, assigning weights to genes to indicate the importance of the gene is more reasonable38. NetBC assigns weights to genes by using both the gene expression profiles and gene interaction network. Genes, who regulate more genes in the gene interaction network and show larger expression variations across samples than other genes, are viewed more important to identify cancer subtypes, and will be given larger weights.

NetBC uses the GeneRank algorithm51 to assign weights to genes. Suppose interactions between m genes are encoded by . If there is directed (or undirected) an interaction from gene i to gene j, (or ); otherwise, . The importance of a gene depends on the quantity and importance of its interacting partners, a gene that interacts with more genes and shows larger variation of expression profiles across samples should be assigned with a larger weight. Based on this assumption, the weight is set as follows:

| 7 |

where denotes the weight of gene i in the t-th iteration, e i is the absolute value of expression profiles change for gene i among all samples. means the total number of genes that have interactions with gene j. θ balances the weight from gene expression profiles and gene interaction network, it also enables isolated genes to be accessed and it is usually set as 0.85. Eq. (7) is guaranteed to convergence when 0 ≤ θ ≤ 138. The final optimized weights for all genes in Eq. (7) can be computed as follows:

| 8 |

where is a diagonal matrix with the j-th diagonal element equal to . When , the weights of the genes are assigned according to Eq. (8). When θ = 0, the weights of genes are completely dependent on the deviation of gene expression profiles. When θ = 1, the weights of genes are assigned only based on the interacting partners of genes in the interaction network.

Optimization

After assigned weights to genes, the objective function of NetBC can be rewritten as:

| 9 |

where is diagonal matrix with .

To optimize Eq. (9), we can iteratively optimize row indicative matrix R and column indicative matrix C by alternatively fixing one of them as constant. Let , , then the objective function to optimize R can be rewritten as follows:

| 10 |

Let be the Lagrangian multipliers for , then the Lagrangian function for R is:

| 11 |

To solve R, we let . Based on Karush Kuhn-Tucker conditions52, we can get

| 12 |

where , , and , and are similarly defined as and . Then we can obtain the optimal R as follows:

| 13 |

After that we can fix R to update the column indicative matrix C. Similarly, we set , . Then the objective function to obtain optimal C is:

| 14 |

Let be the Lagrangian multiplier for C, then the Lagrangian function for C is as below:

| 15 |

Similarly, we can obtain the optimal formula of C.

| 16 |

where , . The optimal R and C can be iteratively optimized via Eq. (13) and Eq. (16) until Φ(R, C) convergency.

Data availability

The Matlab codes of NetBC can be accessed from http://mlda.swu.edu.cn/codes.php?name=NetBC.

Acknowledgements

The authors are grateful to the reviewers’ comments on significantly improving this paper. This work is supported by Natural Science Foundation of China (61402378), Natural Science Foundation of CQ CSTC (cstc2014jcyjA40031 and cstc2016jcyjA0351), Fundamental Research Funds for the Central Universities of China (XDJK2362015XK07 and XDJK2016B009).

Author Contributions

G.-X.Y., X.-X.Y. and J.W. proposed the theoretical method, G.-X.Y. and J.W. designed the experiments and analyzed the results, X.-X.Y. implemented the experiments, G.-X.Y., X.-X.Y. and J.W. wrote and revised the manuscript.

Competing Interests

The authors declare that they have no competing interests.

Footnotes

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Brazma A, Vilo J. Gene expression data analysis. FEBS Letters. 2000;480:17–24. doi: 10.1016/S0014-5793(00)01772-5. [DOI] [PubMed] [Google Scholar]

- 2.Kallioniemi OP, Wagner U, Kononen J, Sauter G. Tissue microarray technology for high-throughput molecular profiling of cancer. Human Molecular Genetics. 2001;10:657–662. doi: 10.1093/hmg/10.7.657. [DOI] [PubMed] [Google Scholar]

- 3.Ben-Dor, A., Friedman, N. & Yakhini, Z. Class discovery in gene expression data. Proceedings of the 5th Annual International Conference on Computational Biology, 31-38 (2001).

- 4.D’haeseleer P. How does gene expression clustering work? Nature Biotechnology. 2005;23:1499–1502. doi: 10.1038/nbt1205-1499. [DOI] [PubMed] [Google Scholar]

- 5.Perou CM, Sørlie T, Eisen MB, van de Rijn M, Jeffrey SS, Rees CA, Fluge Ø. Molecular portraits of human breast tumours. Nature. 2000;406:747–752. doi: 10.1038/35021093. [DOI] [PubMed] [Google Scholar]

- 6.Sørlie T, Perou CM, Tibshirani R, Aas T, Geisler S, Johnsen H, Thorsen T. Gene expression patterns of breast carcinomas distinguish tumor subclasses with clinical implications. Proceedings of the National Academy of Sciences. 2001;98:10869–10874. doi: 10.1073/pnas.191367098. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Tavazoie S, Hughes JD, Campbell MJ, Cho RJ, Church GM. Systematic determination of genetic network architecture. Nature Genetics. 1999;22:281–285. doi: 10.1038/10343. [DOI] [PubMed] [Google Scholar]

- 8.Eisen MB, Spellman PT, Brown PO, Botstein D. Cluster analysis and display of genome-wide expression patterns. Proceedings of the National Academy of Sciences. 1998;95:14863–14868. doi: 10.1073/pnas.95.25.14863. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Vesanto J, Alhoniemi E. Clustering of the self-organizing map. IEEE Transactions on Neural Networks. 2000;11:586–600. doi: 10.1109/72.846731. [DOI] [PubMed] [Google Scholar]

- 10.Domeniconi C, Gunopulos D, Ma S, Yan B, Al-Razgan M, Papadopoulos D. Locally adaptive metrics for clustering high dimensional data. Data Mining and Knowledge Discovery. 2007;14:63–97. doi: 10.1007/s10618-006-0060-8. [DOI] [Google Scholar]

- 11.Ben-Dor A, Chor B, Karp R, Yakhini Z. Discovering local structure in gene expression data: the order-preserving submatrix problem. Journal of Computational Biology. 2003;10:373–384. doi: 10.1089/10665270360688075. [DOI] [PubMed] [Google Scholar]

- 12.Cheng, Y. & Church, G. M. Biclustering of expression data. Proceedings of the Eighth International Conference on Intelligent Systems for Molecular Biology, 93-103 (2000). [PubMed]

- 13.Hartigan JA. Direct clustering of a data matrix. Journal of the American Statistical Association. 1972;267:123–129. doi: 10.1080/01621459.1972.10481214. [DOI] [Google Scholar]

- 14.Prelić BS, Zimmermann P. A systematic comparison and evaluation of biclustering methods for gene expression data. Bioinformatics. 2006;22:1122–1129. doi: 10.1093/bioinformatics/btl060. [DOI] [PubMed] [Google Scholar]

- 15.Madeira SC, Oliveira AL. Biclustering algorithms for biological data analysis: a survey. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2004;1:24–25. doi: 10.1109/TCBB.2004.2. [DOI] [PubMed] [Google Scholar]

- 16.Veroneze R, Banerjee A, Von Zuben FJ. Enumerating all maximal biclusters in numerical datasets. Information Sciences. 2017;379:288–309. doi: 10.1016/j.ins.2016.10.029. [DOI] [Google Scholar]

- 17.Tanay A, Sharan R, Shamir R. Biclustering algorithms: A survey. Handbook of Computational Molecular Biology. 2005;9:122–124. [Google Scholar]

- 18.Bergmann S, Ihmels J, Barkai N. Iterative signature algorithm for the analysis of large-scale gene expression data. Physical Review E. 2003;67:031902. doi: 10.1103/PhysRevE.67.031902. [DOI] [PubMed] [Google Scholar]

- 19.Denitto, M., Farinelli, A. & Bicego, M. Biclustering gene expressions using factor graphs and the max-sum algorithm. Proceedings of the 24th International Joint Conference on Artificial Intelligence, 925-931 (2015).

- 20.Kluger Y, Basri R, Chang JT, Gerstein M. Spectral biclustering of microarray data: coclustering genes and conditions. Genome Research. 2003;13:703–716. doi: 10.1101/gr.648603. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Dhillon, I. S., Mallela, S. & Modha, D. S. Information-theoretic co-clustering. Proceedings of the 9th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 89-98 (2003).

- 22.Shan, H. & Banerjee, A. Bayesian co-clustering. Proceedings of the 8th IEEE International Conference on Data Mining. 530-539 (2008).

- 23.Carmona-Saez P, Pascual-Marqui RD, Tirado F, Carazo JM, Pascual-Montano A. Biclustering of gene expression data by non-smooth non-negative matrix factorization. BMC Bioinformatics. 2006;7:1. doi: 10.1186/1471-2105-7-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Sun P, Speicher NK, Röttger R, Guo J, Baumbach J. Bi-Force: large-scale bicluster editing and its application to gene expression data biclustering. Nucleic Acids Research. 2014;42:e78. doi: 10.1093/nar/gku201. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Shi J, Malik J. Normalized cuts and image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2000;22:888–905. doi: 10.1109/34.868688. [DOI] [Google Scholar]

- 26.Murali T, Kasif S. Murali, T. and Kasif, S. Extracting conserved gene expression motifs from gene expression data. Pacific Symposium on Biocomputing. 2003;8:77–88. [PubMed] [Google Scholar]

- 27.Hochreiter S, Bodenhofer U, Heusel M. FABIA: factor analysis for bicluster acquisition. Bioinformatics. 2010;26:1520–1527. doi: 10.1093/bioinformatics/btq227. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Lazzeroni L, Owen A, et al. Lazzeroni, L. & Owen, A. Plaid models for gene expression data. Statistica Sinica. 2002;12:61–86. [Google Scholar]

- 29.Cho H, Dhillon IS. Coclustering of human cancer microarrays using minimum sum-squared residue coclustering. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2008;5:385–400. doi: 10.1109/TCBB.2007.70268. [DOI] [PubMed] [Google Scholar]

- 30.Steinbach M, Ertöz L, Kumar V. The challenges of clustering high dimensional data. In: New Directions in Statistical Physics. 2004;273:273–309. [Google Scholar]

- 31.Jiang D, Tang C, Zhang A. Cluster analysis for gene expression data: a survey. IEEE Transactions on Knowledge and Data Engineering. 2004;16:1370–1386. doi: 10.1109/TKDE.2004.68. [DOI] [Google Scholar]

- 32.Shim JE, Lee I. Network-assisted approaches for human disease research. Animal Cells and Systems. 2015;19:231–235. doi: 10.1080/19768354.2015.1074108. [DOI] [Google Scholar]

- 33.Barabási AL, Gulbahce N, Loscalzo J. Network medicine: a network-based approach to human disease. Nature Reviews Genetics. 2011;12:56–68. doi: 10.1038/nrg2918. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Chuang HY, Lee E, Liu YT, Lee D, Ideker T. Network-based classification of breast cancer metastasis. Molecular Systems Biology. 2007;3:140. doi: 10.1038/msb4100180. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Hanisch D, Zien A, Zimmer R, Lengauer T. Co-clustering of biological networks and gene expression data. Bioinformatics. 2002;18:S145–S154. doi: 10.1093/bioinformatics/18.suppl_1.S145. [DOI] [PubMed] [Google Scholar]

- 36.Hofree M, Shen JP, Carter H, Gross A, Ideker T. Network-based stratification of tumor mutations. Nature Methods. 2013;10:1108–1115. doi: 10.1038/nmeth.2651. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Ding, C., Li, T., Peng, W. & Park, H. Orthogonal nonnegative matrix t-factorizations for clustering. Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 126-135 (2006).

- 38.Liu Y, Gu Q, Hou JP, Han J, Ma J. A network-assisted co-clustering algorithm to discover cancer subtypes based on gene expression. BMC Bioinformatics. 2014;15:1. doi: 10.1093/bib/bbs075. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Network CGA. Comprehensive molecular portraits of human breast tumours. Nature. 2012;490:61–70. doi: 10.1038/nature11453. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Brunet JP, Tamayo P, Golub TR, et al. Metagenes and molecular pattern discovery using matrix factorization. Proceedings of the National Academy of Sciences. 2004;101:4164–4169. doi: 10.1073/pnas.0308531101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Stark C, Breitkreutz BJ, Reguly T, Boucher L, Breitkreutz A, Tyers M. BioGRID: a general repository for interaction datasets. Nucleic Acids Research. 2006;34:D535–D539. doi: 10.1093/nar/gkj109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Prasad TK, Goel R, Kandasamy K, Keerthikumar S, Kumar S, Mathivanan S, Balakrishnan L. Human protein reference database2009 update. Nucleic Acids Research. 2009;37:D767–D772. doi: 10.1093/nar/gkn892. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Szklarczyk D, Franceschini A, Wyder S, Forslund K, Heller D, Huerta-Cepas J, Kuhn M. STRING v10: protein-protein interaction networks, integrated over the tree of life. Nucleic Acids Research. 2015;43:D447–D452. doi: 10.1093/nar/gku1003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Shaffer JP. Multiple hypothesis testing. Annual Review of Psychology. 1995;46:561–576. doi: 10.1146/annurev.ps.46.020195.003021. [DOI] [Google Scholar]

- 45.Rand WM. Objective criteria for the evaluation of clustering methods. Journal of the American Statistical Association. 1971;66:846–850. doi: 10.1080/01621459.1971.10482356. [DOI] [Google Scholar]

- 46.Van Rijsbergen, C. J. Information retrieval. Butterworths, London (1979).

- 47.Wang Z, Li G, Robinson RW, Huang X. UniBic: Sequential row-based biclustering algorithm for analysis of gene expression data. Scientific Reports. 2016;6:23466. doi: 10.1038/srep23466. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Eren K, Deveci M, Kucuktunc O, Catalyurek UV. A comparative analysis of biclustering algorithms for gene expression data. Briefings in Bioinformatics. 2013;14:279–292. doi: 10.1093/bib/bbs032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Sun P, Guo J, Baumbach J. BiCluE-Exact and heuristic algorithms for weighted bi-cluster editing of biomedical data. BMC Proceedings. 2013;7:S9. doi: 10.1186/1753-6561-7-S7-S9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Wold S, Esbensen K, Geladi P. Principal Component Analysis. Chemometrics and Intelligent Laboratory Systems. 1987;2:37–52. doi: 10.1016/0169-7439(87)80084-9. [DOI] [Google Scholar]

- 51.Morrison JL, Breitling R, Higham DJ, Gilbert DR. GeneRank: using search engine technology for the analysis of microarray experiments. BMC Bioinformatics. 2005;6:1. doi: 10.1186/1471-2105-6-233. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Boyd, S., Vandenberghe, L. Convex optimization. Cambridge University Press, (2004).

- 53.Van’t Veer LJ, Dai H, Van De Vijver MJ. Gene expression profiling predicts clinical outcome of breast cancer. Nature. 2002;415:530–536. doi: 10.1038/415530a. [DOI] [PubMed] [Google Scholar]

- 54.Tamayo P, Scanfeld D, Ebert BL. Metagene projection for cross-platform, cross-species characterization of global transcriptional states. Proceedings of the National Academy of Sciences. 2007;104:5959–5964. doi: 10.1073/pnas.0701068104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Jolly RA, Goldstein KM, Wei T. Pooling samples within microarray studies: a comparative analysis of rat liver transcription response to prototypical toxicants. Physiological Genomics. 2005;22:346–355. doi: 10.1152/physiolgenomics.00260.2004. [DOI] [PubMed] [Google Scholar]

- 56.Rosenwald A, Wright G, Chan WC. The use of molecular profiling to predict survival after chemotherapy for diffuse large-B-cell lymphoma. New England Journal of Medicine. 2002;346:1937–1947. doi: 10.1056/NEJMoa012914. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The Matlab codes of NetBC can be accessed from http://mlda.swu.edu.cn/codes.php?name=NetBC.