Abstract

Background

Cognitive impairment represents a critical unmet treatment need in multiple sclerosis (MS). Cognitive remediation is promising but traditionally requires multiple clinic visits to access treatment. Computer-based programs provide remote access to intensive and individually-adapted training.

Objective

Our goal was to develop a protocol for remotely-supervised cognitive remediation that enables individuals with MS to participate from home while maintaining the standards for clinical study.

Methods

MS participants (n = 20) were randomized to either an active cognitive remediation program (n = 11) or a control condition of ordinary computer games (n = 9). Participants were provided study laptops to complete training for five days per week over 12 weeks, targeting a total of 30 hours. Treatment effects were measured as change in composite scores from a repeated neuropsychological battery.

Results

Compliance was high with an average of 25.0 hours of program use (80% of the target) and did not differ between conditions (25.7 vs. 24.2 mean hours, p = 0.80). Compared with control participants, active participants showed significantly greater improvement on both the cognitive measures (mean composite z-score change of 0.46 ± 0.59 improvement vs. −0.14 ± 0.48 decline, p = 0.02) and motor tasks (mean composite z-score change of 0.40 ± 0.71 improvement vs. −0.64 ± 0.73 decline, p = 0.005).

Conclusions

Remotely-supervised cognitive remediation is feasible for clinical study with potential for meaningful benefit in MS.

Keywords: Multiple sclerosis, cognition, rehabilitation, fingolimod, quality of life, relapsing–remitting MS

Introduction

Cognitive impairment is a critical concern for individuals living with multiple sclerosis (MS), affecting at least 40% of adult and 30% of pediatric patients.1–3 Cognitive impairment can exact a major toll on overall quality of life, compromising employment and relationships.1,2

Cognitive remediation is a behaviorally-based approach that offers many treatment advantages. Traditionally, this approach has required the patient to travel to the clinic for multiple one-to-one sessions with a clinician or group.4–6 Unfortunately, this is a costly approach, and daily or weekly visits to the clinic are often not feasible for individuals living with MS.5–7 While there are few adequately-designed clinical trials, meta-analyses have shown potential benefit of these programs,4,8,9 with a large controlled trial (n = 86) indicating significant benefit for a structured ten-session memory training program.5 However, recruitment for this trial took over seven years, possibly due to the constraints of receiving treatment in the clinic.

With rapid technological advances, many web-based cognitive remediation programs are readily available using computers instead of clinicians to administer and drive the training.9,10 There are a variety of programs now available, including some that are directly marketed to individuals with neurological disorders. Key components include frequent repetitive learning trials adjusted in real-time to adapt to the user’s ongoing level of performance.10,11 These offer the advantage of a relatively low-cost and easily accessible option. However, controlled study is critically needed in order to guide effective use for individuals living with MS.

In order to conduct clinical trials that are accessible and feasible to a wide range of individuals living with MS, we have developed a protocol for remotely-supervising the use of a web-based cognitive remediation program delivered to individuals in their homes. The protocol includes an active control condition with random double-blind assignment to treatment condition. Here, we describe the features of this protocol and a pilot study of its use.

Methods

The primary outcome of this study was feasibility of our cognitive remediation protocol. We enrolled participants seeking treatment for cognitive impairment due to MS, as judged by their referring neurologist. All participants in the pilot study were required to be on the same disease-modifying therapy, and all had recently initiated fingolimod treatment. Participants were required to be English speaking and between the ages of 18 and 70 years, with a diagnosis of relapsing–remitting MS (RRMS), no other major medical condition, and stable disease, defined as no relapse or associated steroid use in the past month. Participants were also required to fully understand and consent to study procedures, with sufficient visual and motor capacity to operate the study computer equipment.

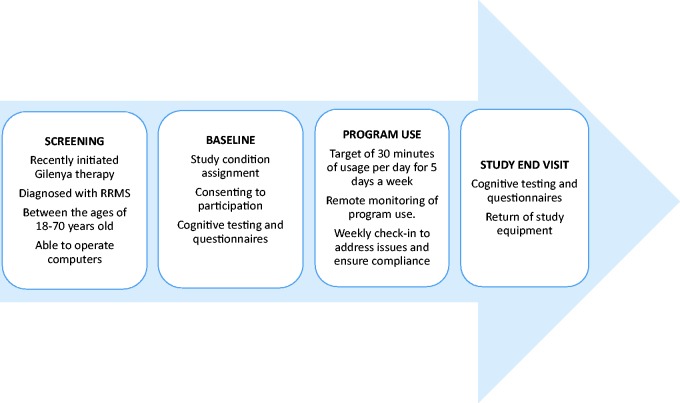

After meeting study eligibility criteria and consenting to study enrollment, participants completed a baseline cognitive evaluation and were randomly assigned to either the active or control game condition. Participants were provided with study equipment that included a laptop computer and accessories, preconfigured to their assigned condition. After receiving instruction from a study technician on operating the laptop and accessing the assigned program, participants were sent home with their study computer and materials. Based on previous studies,5,9,12 we targeted a 12-week treatment period with the completion of five days of cognitive exercise per week (60 total days played across three months). On each of these days, the participant was required to play the program for half an hour. Technical support, coaching, and monitoring of computer use were completed remotely by a study technician, as detailed below (Figure 1).

Figure 1.

Overview of study procedures.

Study equipment

For MS participants, a larger screen size is particularly important to ensure that participants do not have to strain their vision to complete the program. For this purpose, laptops with 17” screens were provided, as they allowed for optimal visibility. Noise cancelling headsets were also provided to ensure participants could create an environment that was quiet and undisturbed. Standard hand-held mice were provided, with the option for adaptive mice as needed. Touch-screen devices may be utilized for future studies; however, these require a different approach to configuration and implementation.

All participants were required to have internet connectivity to facilitate real-time data collection of their program usage. Participants assigned to use the active program also needed a working internet connection in order to access the active program website. In the case that a participant was lacking an internet connection, a Wi-Fi device was installed at their homes for the duration of the study program.

Laptop configuration and monitoring software

In order to gather data on program usage, laptops were installed with a monitoring software program to provide real-time data on all computer activity to the study technician. We used the time-tracking and computer monitoring software “WorkTime,” which was developed by NesterSoft, Inc.13 The program allowed the study technician to obtain detailed measures of program usage for both study conditions.

Laptops were also installed with TeamViewer,14 a remote support software. TeamViewer allows the study technician to remotely access the participant’s desktop to assist with any technical difficulties or issues that would require direct assistance. To ensure security, the program requires passwords and access identification codes on both ends of the connection.

The laptops were all labeled to inform participants that usage activity was being monitored and recorded. Additional labeling on each laptop provided information specific to the participant it was assigned to, such as the login credentials for their assigned game program; however, the laptops assigned to each condition remained indiscernible from each other.

Active treatment condition – adaptive cognitive remediation program

For the pilot study, the Lumosity platform, developed by Lumos Labs, Inc.,15 was chosen as the active adaptive cognitive remediation program. We developed a study-specific portal and set of games that focused on the most common areas of impairment in MS, including speeded information processing and working memory. The games were visually engaging and used simple rules that were explained during a brief instructional phase before participants began. All games were adaptive, increasing in difficulty as the participant improved. The program tracked progress using various gameplay parameters, such as unique levels played and improvements made in the game.

Active control condition – ordinary computer games

In order to provide an active control, we selected a computer-based gaming program that would provide the experience of cognitive exercise associated with cognitive benefit but without the key components of the adaptive cognitive remediation programs (i.e. games not developed based on cognitive neuroscience principles to drive neural plasticity). We found that the most feasible option for a control condition was software rather than a web-based platform. Therefore, based on prior research,16 we chose the commercially available Hoyle puzzles and board games program,17 which matched the active treatment condition with regard to overall procedure and program intensity. Rather than having exercises pre-programmed at login, the participant was given a list of daily exercises to complete, matched in play time to the treatment condition. Participants in the active control condition were instructed to play two games for 15 minutes each, according to a set rotational sequence. The control condition was also designed to account for nonspecific treatment effects, including placebo response, interactions with research personnel, experience with computers and computer-related activities, and any halo or expectation effect on study assessments.

Blinding

Participants were told that they would be assigned to one of two types of cognitive exercise gaming programs; however, they were naïve to their assigned condition. Baseline and follow-up neuropsychological testing was completed by a blinded psychometrician, and the referring physician and study PI were also blinded to condition. The study technician was not blinded and assumed responsibility for condition assignment, participant instruction, and data entry for program use.

Remote supervision

A dedicated study technician supported participants throughout the 12-week study following a protocol for routine weekly contact. Participants were provided with support through phone and email, with the goal of responding to all requests for support within one day. Each week, the study technician compiled user data from the remote WorkTime program and, for the active participants, from the Lumosity program as well, and recorded the data into a database. With this information as a reference, each participant was then contacted for a weekly check-in call to discuss any concerns or technical problems with their game play. If a participant failed to respond to contact over the span of two weeks and did not show any gameplay on the tracking software, the study PI would contact them directly to determine the circumstances behind the lack of contact and program usage, and to provide encouragement for participation if needed.
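The escalation rule described above (no response to contact over two weeks together with no recorded gameplay) can be sketched as a simple check. This is a minimal illustration only: the `WeeklyStatus` record and the `needs_pi_contact` function are hypothetical names introduced here, not part of the study software, and the sketch assumes one status record per study week.

```python
from dataclasses import dataclass


@dataclass
class WeeklyStatus:
    """Hypothetical weekly record kept by the study technician."""
    responded_to_contact: bool  # did the participant answer the weekly check-in?
    hours_played: float         # usage reported by the tracking software


def needs_pi_contact(weeks):
    """Return True when the two most recent weeks show neither a response
    to contact nor any gameplay, the condition for PI follow-up."""
    if len(weeks) < 2:
        return False
    last_two = weeks[-2:]
    return all(
        (not w.responded_to_contact) and w.hours_played == 0
        for w in last_two
    )


# Illustrative records only; not study data.
history = [
    WeeklyStatus(True, 2.5),
    WeeklyStatus(False, 0.0),
    WeeklyStatus(False, 0.0),
]
print(needs_pi_contact(history))  # True
```

Requiring both conditions in both weeks mirrors the wording of the protocol; a participant who is unreachable but still playing would not trigger escalation under this sketch.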

Outcome measures

The primary outcome of this study was feasibility, measured by overall compliance. Based on in-clinic trials of cognitive remediation,5,12 we defined compliance to be 50% or more of targeted usage (i.e. playing 15 of the 30 target hours across the 12 week study). Further, at this 50% mark, participants would receive more frequent and intensive cognitive remediation when compared to clinic-based sessions (usually one to three visits per week).
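As a worked illustration of the compliance definition, the sketch below derives the 30-hour target from the schedule (12 weeks, five days per week, half an hour per day) and applies the 50% threshold. The function names and the example usage log are hypothetical and are not study data.

```python
WEEKS = 12
DAYS_PER_WEEK = 5
HOURS_PER_DAY = 0.5
TARGET_HOURS = WEEKS * DAYS_PER_WEEK * HOURS_PER_DAY  # 30 hours
COMPLIANCE_FRACTION = 0.5                             # 50% of target


def is_compliant(total_hours):
    """True when logged hours reach at least 50% of the 30-hour target."""
    return total_hours >= COMPLIANCE_FRACTION * TARGET_HOURS


def compliance_rate(hours_by_participant):
    """Fraction of participants who meet the compliance threshold."""
    flags = [is_compliant(h) for h in hours_by_participant.values()]
    return sum(flags) / len(flags)


# Hypothetical usage totals in hours, keyed by participant ID.
example_hours = {"P01": 4.5, "P02": 15.0, "P03": 27.3, "P04": 31.0}
print(TARGET_HOURS)                    # 30.0
print(compliance_rate(example_hours))  # 0.75
```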

Measures used to evaluate initial efficacy of the cognitive exercise programs are listed in Table 1. To determine change in cognitive functioning, participants were administered a battery of neuropsychological tests at baseline and follow-up. Tests were selected for their sensitivity to cognitive functioning in MS; they measure complex attention, working memory, processing speed, and visual and verbal learning. Raw performance scores were transformed to z-scores using age-referenced normative data provided with the study manuals. Representative z-scores from each test were then averaged to create a general cognitive composite score. The composite scores represent an individual’s overall cognitive or motor ability by averaging the scores across several tests. For this reason, a difference on a single test that did not reach significance may still contribute to the overall significant difference in composite score change.
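The scoring steps described above (raw score to z-score, then averaging z-scores into a composite, then taking the change between visits) can be sketched as follows. The normative means and standard deviations, the raw scores, and the `higher_is_better` flag for timed tasks are all assumptions made for illustration; the actual norms come from the test manuals and are not reproduced here.

```python
from statistics import mean


def z_score(raw, norm_mean, norm_sd, higher_is_better=True):
    """Standardize a raw score against normative data.

    For timed tasks, where a larger raw value means worse performance,
    the sign is flipped so that negative z always means below the norm
    (an assumption of this sketch).
    """
    z = (raw - norm_mean) / norm_sd
    return z if higher_is_better else -z


def composite(z_scores):
    """Composite score: unweighted mean of the component z-scores."""
    return mean(z_scores)


# Hypothetical (raw, norm mean, norm SD) triples for three tests.
baseline = [(8.5, 10.0, 3.0), (40.0, 50.0, 10.0), (24.0, 24.0, 4.0)]
follow_up = [(10.0, 10.0, 3.0), (45.0, 50.0, 10.0), (26.0, 24.0, 4.0)]

baseline_composite = composite([z_score(*t) for t in baseline])
follow_up_composite = composite([z_score(*t) for t in follow_up])
change = follow_up_composite - baseline_composite

print(baseline_composite)   # -0.5
print(follow_up_composite)  # 0.0
print(change)               # 0.5
```

Because the composite is a plain average, several small changes in the same direction can add up to a detectable composite change even when no single test differs significantly.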

Table 1.

Baseline descriptive measures.

| Test | Outcome | Study measure |
|---|---|---|
| EDSSa | Total score | Neurologic disability |
| WRAT-3b | Reading recognition | Estimated premorbid cognitive functioning |
| SDMTc | Speeded information processing | Estimated level of current cognitive impairment |
| Cognitive composite | Domain | Study outcome |
| WAIS-IVe letter number sequence | Information processing | Composite (total score) |
| SRTf | Verbal learning | Composite (total trials) |
| BVMT-Rg | Visual learning | Composite (total trials) |
| Corsi block visual sequence33 | Working memory | Total score |
| Motor composite | | |
| DKEFSh trail 5 | Motor speed | Composite (letter/number trial) |
| Nine-hole peg test18 | Fine motor function | Composite (both trials on both hands) |
| Timed 25 foot walk19 | Gross motor function | Composite (total trials) |
| Self-report measures | | |
| Participant-reported outcomes (with informant input) | | |
| ECogi | | |

Note: Alternate forms used where available to minimize practice effects.

a: Expanded Disability Status Scale.26

b: Wide range achievement test, third edition.27

c: Symbol digit modalities test.28

d: Paced auditory serial addition test.29

e: Wechsler adult intelligence scale, fourth edition.30

f: Selective reminding test.31

g: Brief visuospatial memory test, revised.32

h: Delis–Kaplan executive function system.20

i: Everyday cognition scale.21

A second composite score was created to determine change in motor functioning. In addition to scores from the motor function tests of the nine-hole peg test and the timed 25-foot walk,18,19 the motor composite score included the fine motor speed score from the Delis–Kaplan executive function systems (DKEFS) test.20

Finally, efficacy was also measured by self- and informant-reported outcomes. These included the everyday cognition (ECog) questionnaire and a global impression of change for the participant and their chosen informant. The ECog is used to determine informant-rated impressions of the participant’s cognitive functioning when engaging in everyday activities.21

Procedures

All participants were recruited through the Stony Brook Medicine Multiple Sclerosis Comprehensive Care Center. Enrolled participants were required to travel to the clinic on two separate occasions: once at the study start date to establish their cognitive baseline and receive the study equipment, and again after the end of the 12-week remediation for cognitive testing and to return the study equipment. Additionally, participants signed a “Time Agreement Contract” to reinforce study compliance. Once the study was underway, we provided an option for the study technician to travel to the participant’s home to conduct these visits if the participant had issues with transportation.

Participants were compensated for attendance of the baseline and follow-up appointments ($100 per visit). Individual cognitive remediation sessions were not compensated.

Results from pilot study

We completed an intention-to-treat analysis of the results including findings from all participants enrolled in the study.

Participants

A total of 20 participants were enrolled in this study from the Stony Brook MS Comprehensive Care Center in Stony Brook, NY. Table 2 shows the demographic and clinical features of the sample. The groups were generally matched in age, gender and overall disease severity.

Table 2.

Sample demographic and clinical characteristics.

| Characteristic | Active condition (n = 11) | Control condition (n = 9) | p value |
|---|---|---|---|
| Gender | | | |
| Female (%) | 63.6% (n = 7) | 77.8% (n = 7) | – |
| Age (years) | | | |
| Mean (SD) | 38 (±10.58) | 42 (±12.53) | 0.42 |
| Range | 24–55 | 19–55 | – |
| EDSSa | | | |
| Median | 2 | 2.5 | 0.23 |
| Range | 0–3 | 0–3.5 | – |
| Education (years) | | | |
| Mean (SD) | 15.27 (±2.57) | 13.88 (±1.90) | 0.18 |
| Range | 12–20 | 11–16 | – |
| WRAT-3b reading | | | |
| Mean (SD) | 100.5 (±10.42) | 102.3 (±6) | 0.64 |
| ECogc (baseline) | | | |
| Mean (SD) | 67.73 (±18.55) | 63.14 (±18.97) | 0.62 |
| SDMTd (baseline) | | | |
| Mean z-score (SD) | −0.45 (±1.25) | −0.79 (±1.01) | 0.50 |
| Race | | | |
| White (%) | 72.7% (n = 8) | 66.7% (n = 6) | – |
| Black (%) | 18.2% (n = 2) | 11.1% (n = 1) | – |
| Ethnicity | | | |
| Hispanic (%) | – | 11.1% (n = 1) | – |
| Non-Hispanic (%) | 90.9% (n = 10) | 88.9% (n = 8) | – |

As shown in Table 4, the groups did not significantly differ at baseline on any of the study measures, and differed at follow-up only on the timed 25-foot walk. As would be expected, the group as a whole is characterized by mild to moderate impairments in domains sensitive to MS, including complex attention and learning, as well as motor speed.

Table 3.

Compliance.

| | Active (n = 11) | Control (n = 9) | Overall (n = 20) |
|---|---|---|---|
| Mean total hours of game usage (SD) | 25.69 (8.26) | 24.16 (15.55) | 25.00 (11.76) |
| Mean hours played per week (SD) | 1.93 (0.64) | 1.87 (1.13) | 1.90 (0.87) |
| Total hours played | | | |
| 0–9.99 | 9.09% (n = 1) | 11.11% (n = 1) | 10% (n = 2) |
| 10–19.99 | 9.09% (n = 1) | 44.44% (n = 4) | 25% (n = 5) |
| 20–29.99 | 45.45% (n = 5) | 11.11% (n = 1) | 30% (n = 6) |
| 30 or more | 36.36% (n = 4) | 33.33% (n = 3) | 35% (n = 7) |
| Percentage compliant to study requirements | 81.8% (n = 9) | 77.78% (n = 7) | 80.00% (n = 16) |

Game use and compliance

Across the 12-week treatment period, participants were targeted to complete a total of 30 hours of cognitive exercises. There was no significant difference in play time between the two conditions, with those in the active group averaging 25.7 ± 8.3 hours over the course of the study compared to 24.2 ± 15.6 hours of play in the control condition (p = 0.80).

The range of game play time for the entire group was 4.5–56.7 hours. Only four participants did not meet the 50% cutoff for compliance, indicating an overall compliance rate of 80% (n = 16). Of those who were non-compliant, two were in the active condition and two were in the control group.

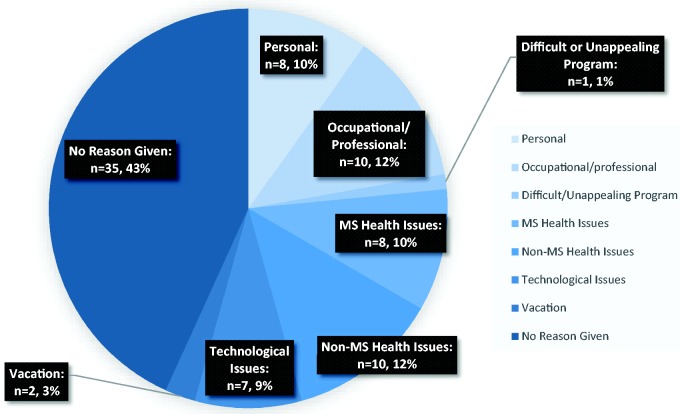

Reasons for not meeting weekly compliance goals were also recorded, with the most common reports being health issues not related to MS (e.g. upper respiratory infection) and occupational commitments (see Figure 2). Figure 2 breaks down the total instances of noncompliant weeks by the reason given. Noncompliant participants were given extra guidance and encouragement by the study technician to reach their weekly goal of 2.5 hours per week.

Figure 2.

Reasons given for a noncompliant training week (across total weeks of trial participation).

Program benefit

While the groups did not differ in either baseline or follow-up performance, with the exception of the timed 25-foot walk at follow-up (p = 0.01; Table 4), change in the cognitive and motor composite scores suggests a significant benefit in favor of the active treatment (Table 5). The active group significantly improved on test performance at follow-up compared to the control condition (change in general cognitive composite z-score of 0.46 ± 0.59 improvement vs. −0.14 ± 0.48 decline, p = 0.02). Unexpectedly, the active group also had a significantly improved mean motor composite score (0.40 ± 0.71 improvement vs. −0.64 ± 0.73 decline, p = 0.005). Large effect sizes (Cohen’s d) were observed in both composite analyses, indicating clinical significance of the remediation program.
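For readers who want to check the effect sizes, the sketch below computes Cohen’s d from the summary statistics in Table 5. It assumes the standard pooled-standard-deviation form of Cohen’s d and the group sizes listed for the change columns (n = 10 active, n = 9 control); the text does not state which formula was used, so this is an illustration rather than a description of the original analysis.

```python
from math import sqrt


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d: difference in means divided by the pooled SD."""
    return (mean1 - mean2) / pooled_sd(sd1, n1, sd2, n2)


# Mean composite z-score change (active vs. control), from Table 5.
cognitive_d = cohens_d(0.46, 0.59, 10, -0.14, 0.48, 9)
motor_d = cohens_d(0.40, 0.71, 10, -0.64, 0.73, 9)

print(round(cognitive_d, 2))  # 1.11
print(round(motor_d, 2))      # 1.45
```

Under these assumptions the results agree with the values reported in Table 5 (1.11 and 1.45).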

Table 4.

Performance by group at baseline and study end.

| Measure | Baseline: Active (n = 11), mean ± SD | Baseline: Control (n = 9), mean ± SD | Baseline: Active vs. Control, p | Follow-up: Active (n = 11), mean ± SD | Follow-up: Control (n = 9), mean ± SD | Follow-up: Active vs. Control, p |
|---|---|---|---|---|---|---|
| WAIS-IVa letter-number sequencing* | −0.40 (±0.70) | 0.09 (±0.80) | 0.18 | −0.04 (±0.73) | −0.04 (±0.72) | 0.99 |
| Visual span (Corsi blocks)b* | −0.65 (±1.00) | −0.48 (±1.25) | 0.75 | −0.26 (±0.68) | −0.52 (±0.67) | 0.41 |
| PASAT 2 second trialsc | −0.68 (±1.21) | −0.93 (±1.27) | 0.66 | −0.28 (±1.05) | −0.48 (±1.17) | 0.69 |
| PASAT 3 second trials | −0.52 (±1.61) | −0.89 (±1.30) | 0.58 | 0.24 (±0.99) | −0.32 (±0.88) | 0.20 |
| DKEFSd trail 5† | 0.70 (±0.43) | 0.52 (±0.38) | 0.34 | 0.64 (±0.43) | 0.63 (±0.26) | 0.97 |
| DKEFS trails 2/3 combo | 0.25 (±0.72) | −0.20 (±1.18) | 0.34 | 0.27 (±0.77) | 0.00 (±1.08) | 0.54 |
| SRT learning trialse* | −0.30 (±1.23) | −0.15 (±1.66) | 0.82 | 0.13 (±1.45) | −0.24 (±0.86) | 0.49 |
| SRT delay | 0.51 (±1.17) | 0.67 (±1.01) | 0.75 | 0.59 (±1.39) | 0.30 (±1.16) | 0.62 |
| BVMT-R learning trialsf* | −0.80 (±1.36) | 0.06 (±1.37) | 0.18 | −0.15 (±1.64) | −0.25 (±1.56) | 0.89 |
| BVMT-R delay | −0.94 (±1.71) | 0.16 (±0.93) | 0.09 | −0.17 (±1.69) | −0.33 (±1.46) | 0.82 |
| Nine-hole pegs dominant handg† | −1.96 (±1.88) | −2.18 (±1.31) | 0.77 | −1.46 (±1.70) | −2.76 (±1.34) | 0.07 |
| Nine-hole pegs non-dominant hand† | −1.91 (±1.78) | −2.53 (±1.78) | 0.45 | −1.56 (±1.55) | −3.20 (±2.34) | 0.09 |
| Timed 25-foot walkh† | −2.46 (±1.77) | −4.10 (±1.95) | 0.07 | −2.19 (±1.35) | −5.54 (±3.42) | 0.01 |

a: WAIS-IV: Wechsler adult intelligence scale, fourth edition.30

b: Corsi block tapping task.33

c: PASAT: paced auditory serial addition test.29

d: DKEFS: Delis–Kaplan executive function system.20

e: SRT: selective reminding test.31

f: BVMT-R: brief visuospatial memory test, revised.32

g: Nine-hole peg test.18

h: Timed 25-foot walk.19

*: Tests that make up the general cognitive composite.

†: Tests that make up the motor domain composite.

Table 5.

Change in cognitive and motor composite scores show benefit for active condition.

| Composite | Baseline visit mean z score: Active (n = 10), mean ± SD | Baseline visit mean z score: Control (n = 9), mean ± SD | Follow-up visit mean z score: Active (n = 11), mean ± SD | Follow-up visit mean z score: Control (n = 9), mean ± SD | Mean change: Active (n = 10), mean ± SD | Mean change: Control (n = 9), mean ± SD | p | Cohen’s d |
|---|---|---|---|---|---|---|---|---|
| General cognitive composite | −0.54 (±0.80) | −0.12 (±0.92) | −0.08 (±0.81) | −0.26 (±0.65) | 0.46 (±0.59) | −0.14 (±0.48) | 0.02 | 1.11 |
| Motor composite | −1.54 (±1.39) | −2.07 (±0.86) | −1.14 (±0.98) | −2.72 (±1.08) | 0.40 (±0.71) | −0.64 (±0.73) | 0.01 | 1.45 |

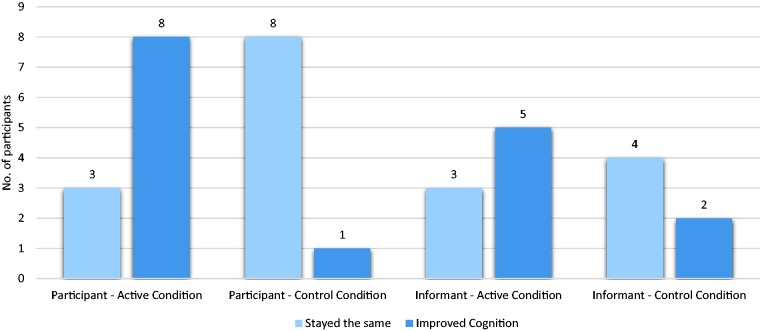

For self-report (Figure 3), more participants in the active vs. control condition reported improved cognitive functioning (72% vs. 11%, p = 0.04), as well as improved overall memory (55% vs. 11%, p = 0.045). The active group reported a higher level of perceived improvement in performing daily tasks measured by the ECog (57.36 ± 13.51 vs. 40.56 ± 2.13, p = 0.002).

Figure 3.

Participant and informant reported impression of cognitive change. Participant reported outcome active vs. control (0.73 ± 0.47 vs. 0.11 ± 0.33, p = 0.003). Informant reported outcome active vs. control (0.71 ± 0.49 vs. 0.33 ± 0.52, p = 0.20).

All statistical analysis, including descriptive statistics, t-tests, and comparative analysis, was carried out in IBM SPSS Statistics 21.

Discussion

Our protocol using remote supervision for cognitive remediation delivered to participants in their home is feasible for controlled and double-blind clinical trials for use in MS. We found that this protocol allowed us to rapidly recruit study participants and that there was a high degree of user compliance.

Noncompliance is found in any trial requiring consistent participation,4,10 especially for those with daily work and family obligations. However, this relatively low noncompliance rate suggests a remote cognitive remediation program is both a feasible and viable method for individuals with MS to access treatment.

Components of the protocol can be exchanged: the adaptive program, the control condition, and the time played could each be substituted. The ideal control condition may be web-based as well, for instance a non-adaptive version of the same program for a true comparison. Furthermore, a recent meta-analysis of studies in mild cognitive impairment suggests that one hour of playing time may be superior to the 30 minutes used in our study for optimal benefit.4 However, longer playing time may represent a trade-off with compliance.

In addition to compliance, we found a preliminary signal for potential benefit as well. Participants in the active condition had greater benefit on both the cognitive and motor composites, and this corresponded to perceived cognitive benefit as well. Additionally, improvements on specific measures of complex attention and working memory were consistent with the expected benefit based on the domains addressed by our specific set of exercises. Our findings are generally consistent with the results found for the use of similar programs in MS participants,22,23 as well as in other conditions.4,9 There is emerging evidence demonstrating the potential benefits of the overall Lumosity training platform in other populations,24 and it is similar to many other targeted training programs with options to tailor exercises at the individual or group level.

Measures addressing daily impact of cognitive training are also important to include in larger trials. For example, the MS Neuropsychological Questionnaire, which includes both self- and informant-reports, may be more meaningful than the participant report alone used in this study.34 We have also found a semi-structured interview, the Cognitive Assessment Interview (CAI), to be a promising outcome measure that includes both participant and informant input.25

For our pilot study, inclusion criteria were not based upon objectively-measured cognitive impairment. First, it is difficult to determine which representative measurement should be used to screen and enroll MS participants who may benefit from these very broadly-targeted cognitive remediation programs. Second, our findings suggest that cognitive impairment at study entry may not be closely predictive of training benefit, and studies in other conditions have actually found those with less severe baseline cognitive impairment may benefit the most.4,11,12

Reaching participants away from the clinic provides a more real-world approximation of use and allows for rapid recruitment, which adequately powers clinical trials at a much lower cost. This protocol is currently being tested in a larger controlled trial. Results of these and other studies will soon provide much needed guidance for use of cognitive remediation programs for those living with MS-related cognitive difficulties.

Research ethics

This study conformed to the Declaration of Helsinki and proceeded with formal approval from the Stony Brook University Human Subjects Committee (IRB), Stony Brook, and Stony Brook University IRB (CORIHS A), Stony Brook, reference number 2013-2130-R2. Written consent was required from all participants, and patient data were anonymized by removing identifiers.

Acknowledgements

We would like to thank Maria Amella, William Scherl, and Wendy Fang for their assistance with testing and data entry.

Funding

This work was supported by Novartis AG with support from The Lourie Foundation Inc. The cognitive remediation program was provided by Lumos Labs, Inc.

Conflict of interest

Lauren Krupp has received compensation for activities as a speaker, consultant, advisory board participant, and/or royalties from the following: J&J, Biogen Idec, Novartis AG, Teva Neurosciences, Bayer, Multicell, Quintiles, EMD Serono, Medidata, and Pfizer. She has also received research funding from Novartis, Teva Neurosciences, National Multiple Sclerosis Society, Department of Defense, National Institutes of Health, Lourie Foundation and Multiple Sclerosis Foundation. Patricia Melville has received compensation for activities as a consultant with Novartis Pharmaceuticals Corporation.

References

- 1. Amato MP, Portaccio E, Goretti B, et al. Cognitive impairment in early stages of multiple sclerosis. Neurol Sci 2010; 31: S211–S214.

- 2. Chiaravalloti ND, DeLuca J. Cognitive impairment in multiple sclerosis. Lancet Neurol 2008; 7: 1139–1151.

- 3. Julian L, Serafin D, Charvet L, et al. Cognitive impairment occurs in children and adolescents with multiple sclerosis: results from a United States network. J Child Neurol 2013; 28: 102–107.

- 4. Li H, Li J, Li N, et al. Cognitive intervention for persons with mild cognitive impairment: a meta-analysis. Ageing Res Rev 2011; 10: 285–296.

- 5. Chiaravalloti ND, Moore NB, Nikelshpur OM, et al. An RCT to treat learning impairment in multiple sclerosis: the MEMREHAB trial. Neurology 2013; 81: 2066–2072.

- 6. Filippi M, Riccitelli G, Mattioli F, et al. Multiple sclerosis: effects of cognitive rehabilitation on structural and functional MR imaging measures: an explorative study. Radiology 2012; 262: 932–940.

- 7. Mattioli F, Stampatori C, Zanotti D, et al. Efficacy and specificity of intensive cognitive rehabilitation of attention and executive functions in multiple sclerosis. J Neurol Sci 2010; 288: 101–105.

- 8. Rohling ML, Faust ME, Beverly B, et al. Effectiveness of cognitive rehabilitation following acquired brain injury: a meta-analytic re-examination of Cicerone et al.’s (2000, 2005) systematic reviews. Neuropsychology 2009; 23: 20–39.

- 9. Wykes T, Huddy V, Cellard C, et al. A meta-analysis of cognitive remediation for schizophrenia: methodology and effect sizes. Am J Psychiatry 2011; 168: 472–485.

- 10. Solari A, Motta A, Mendozzi L, et al. Computer-aided retraining of memory and attention in people with multiple sclerosis: a randomized, double-blind controlled trial. J Neurol Sci 2004; 222: 99–104.

- 11. Keshavan MS, Vinogradov S, Rumsey J, et al. Cognitive training in mental disorders: update and future directions. Am J Psychiatry 2014; 171: 510–522.

- 12. O'Brien AR, Chiaravalloti N, Goverover Y, et al. Evidenced-based cognitive rehabilitation for persons with multiple sclerosis: a review of the literature. Arch Phys Med Rehabil 2008; 89: 761–769.

- 13. NesterSoft, Inc. WorkTime. NesterSoft, 2015.

- 14. TeamViewer GmbH. TeamViewer. www.teamviewer.com, 2015.

- 15. Lumos Labs, Inc. Lumosity. Lumos Labs, 2015.

- 16. Hooker CI, Bruce L, Fisher M, et al. The influence of combined cognitive plus social-cognitive training on amygdala response during face emotion recognition in schizophrenia. Psychiatr Res Neuroimaging 2013; 213: 99–107.

- 17. Hoyle Gaming. Hoyle puzzles and board games program. Hoyle Gaming, 2015.

- 18. Oxford Grice K, Vogel KA, Le V, et al. Adult norms for a commercially available nine-hole peg test for finger dexterity. Am J Occup Ther 2003; 57: 570–573.

- 19. Goldman MD, Motl RW, Scagnelli J, et al. Clinically meaningful performance benchmarks in MS: timed 25-foot walk and the real world. Neurology 2013; 81: 1856–1863.

- 20. Parmenter BA, Zivadinov R, Kerenyi L, et al. Validity of the Wisconsin card sorting and Delis–Kaplan executive function system (DKEFS) sorting tests in multiple sclerosis. J Clin Exp Neuropsychol 2007; 29: 215–223.

- 21. Farias ST, Mungas D, Reed BR, et al. The measurement of everyday cognition (ECog): scale development and psychometric properties. Neuropsychology 2008; 22: 531–544.

- 22. Plohmann AM, Kappos L, Ammann W, et al. Computer assisted retraining of attentional impairments in patients with multiple sclerosis. J Neurol Neurosurg Psychiatry 1998; 64: 455–462.

- 23. Chiaravalloti ND, DeLuca J, Moore NB, et al. Treating learning impairments improves memory performance in multiple sclerosis: a randomized clinical trial. Mult Scler 2005; 11: 58–68.

- 24. Ballesteros S, Prieto A, Mayas J, et al. Brain training with non-action video games enhances aspects of cognition in older adults: a randomized controlled trial. Front Aging Neurosci 2014; 6: 277.

- 25. Marzillano A, Speed B, Cersosimo B, et al. Assessing cognition in MS clinical trials: the cognitive assessment interview (CAI). Neurology 2015; 84(14 Suppl): P3.233.

- 26. Cadavid D, Tang Y, O’Neill G. Responsiveness of the Expanded Disability Status Scale (EDSS) to disease progression and therapeutic intervention in progressive forms of multiple sclerosis. Rev Neurol 2010; 51: 321–329.

- 27. Stone MJ, Wilkinson GS. Wide range achievement test, 3rd edition (WRAT-3). Rasch Meas Trans 1995; 8: 403.

- 28. Smith A. Symbol digit modalities test. Los Angeles: Western Psychological Services, 1982.

- 29. Gronwall DM. Paced auditory serial-addition task: a measure of recovery from concussion. Perceptual Motor Skills 1977; 44: 367–373.

- 30. Hartman DE. Wechsler Adult Intelligence Scale IV (WAIS IV): return of the gold standard. Appl Neuropsychol 2009; 16: 85–87.

- 31. Scherl WF, Krupp LB, Christodoulou C, et al. Normative data for the selective reminding test: a random digit dialing sample. Psychol Rep 2004; 95: 593–603.

- 32. Benedict RH. Brief visuospatial memory test – revised: professional manual. Odessa, FL: PAR, 1997.

- 33. Kessels RP, van Zandvoort MJ, Postma A, et al. The Corsi block-tapping task: standardization and normative data. Appl Neuropsychol 2000; 7: 252–258.