Abstract

Recapitulation involves the reactivation of cognitive and neural encoding processes at retrieval. In the current study, we investigated the effects of emotional valence on recapitulation processes. Participants encoded neutral words presented on a background face or scene that was negative, positive or neutral. During retrieval, studied and novel neutral words were presented alone (i.e., without the scene or face) and participants were asked to make a remember, know or new judgment. Both the encoding and retrieval tasks were completed in the fMRI scanner. Conjunction analyses were used to reveal the overlap between encoding and retrieval processing. These results revealed that, compared to positive or neutral contexts, words that were recollected and previously encoded in a negative context showed greater encoding-to-retrieval overlap, including in the ventral visual stream and amygdala. Interestingly, the visual stream recapitulation was not enhanced within regions that specifically process faces or scenes but rather extended broadly throughout visual cortices. These findings elucidate how memories for negative events can feel more vivid or detailed than positive or neutral memories.

1. Introduction

The hallmark of episodic memory is the ability to bring back to mind the contextual and source details associated with an event (Tulving, 1972). This ability to mentally travel back to the time of encoding during retrieval is a central feature of several memory theories (Morris, Bransford, & Franks, 1977; Moscovitch et al., 2005; Rolls, 2000; Tulving & Thomson, 1973) and supported by studies of reinstatement and recapitulation—reactivation of the cognitive and/or neural processes that were engaged at encoding at the time of retrieval (Buckner & Wheeler, 2001; Rugg, Johnson, Park, & Uncapher, 2008; Waldhauser, Braun, & Hanslmayr, 2016; Wheeler, Petersen, & Buckner, 2000)—sometimes referred to as ecphory (Tulving, 1983; Tulving, 1976; also see Bowen & Kark, 2016; Waldhauser et al., 2016).

Empirical studies of recapitulation have shown that when recalling a visual memory, visual cortices that were active during encoding become reactivated during retrieval and likewise retrieval of an auditory memory activates auditory cortices, even when that visual or auditory information is no longer present at retrieval (Gottfried, Smith, Rugg, & Dolan, 2004; Nyberg et al., 2000; Slotnick, 2004; Wheeler & Buckner, 2004; Wheeler et al., 2000). Several researchers have also established reactivation of content-specific brain regions. The fusiform gyrus contains a portion—often referred to as the fusiform face area (FFA)—that is consistently active during the perception of faces (Kanwisher & Yovel, 2006), and retrieval of words studied in the context of faces has been shown to reactivate that fusiform region, even when the words were presented alone at retrieval (Skinner, Grady, & Fernandes, 2010). Similarly, content-specific reactivation has been found for images previously paired with faces (in the FFA) and with words (in the left middle fusiform cortex, an area sensitive to word reading; Cohen & Dehaene, 2004) even when those images were not presented with the face or word at retrieval (Hofstetter, Achaibou, & Vuilleumier, 2012).

These findings of content-specific reactivation suggest that neural processes at retrieval involve similar neural processes to those that were engaged when the information was originally encoded. In fact, recapitulation may explain why, when we remember, it often feels as though we are re-experiencing the event. Indeed, some research has suggested that recapitulation during recognition may be greater when individuals have the subjective experience of recollection as compared to when their memories are based on feelings of familiarity with no retrieval of specific detail (Johnson, Minton, & Rugg, 2008; Johnson, Price, & Leiker, 2015; Johnson & Rugg, 2007; Waldhauser et al., 2016; Wheeler & Buckner, 2004). Just as the sensory processes implemented during mental imagery share overlap with those implemented during perception (e.g., Ishai, 2010), the processes that allow someone to bring to mind a prior event appear to overlap with the processes that were invoked during the original occurrence of that event.

What is relatively unknown is how emotional valence influences recapitulation. Extensive research has suggested that compared to neutral, emotional memories—negative memories in particular—tend to be remembered vividly, with greater feelings of re-experiencing and with an overall emotional memory enhancement (see Phelps & Sharot, 2008 for a review). Most theories of emotional memory have explained this enhancement via processes engaged at encoding or consolidation (Mather & Sutherland, 2011; McGaugh, 2000, 2004; Yonelinas & Ritchey, 2015), yet there is also evidence that emotion can influence retrieval processes. For instance, even when retrieval cues are neutral, fMRI studies have demonstrated that when the studied content associated with those retrieval cues is emotional, there is greater activity in regions including the amygdala (Daselaar et al., 2008; Smith, Henson, Dolan, & Rugg, 2004) and the hippocampus (Ford, Morris, & Kensinger, 2014), and there is enhanced connectivity between those two regions (Smith, Stephan, Rugg, & Dolan, 2006) compared to when the memory target is neutral. These results demonstrate that the emotion present during an encoded episode can influence the processes that arise in response to a neutral cue at retrieval (see also ERP evidence from Jaeger, Johnson, Corona, & Rugg, 2009; Maratos, Allan, & Rugg, 2000; Smith, Dolan, & Rugg, 2004).

Additional research has suggested that emotional valence may also be of importance. Negative events are sometimes remembered more vividly than positive (see Kensinger, 2009 for a review) and, relatedly, negative events (e.g., financial loss) outweigh the impact of a relatively equivalent positive event (e.g., financial gain) suggesting that “bad is stronger than good” (Baumeister, Bratslavsky, Finkenauer, & Vohs, 2001; see also Tversky & Kahneman, 1991). Patients with amygdala damage tend to show greater deficits retrieving negative emotional memories than positive (Buchanan, Tranel, & Adolphs, 2006), although the reason for that asymmetry is unknown. There is also an increasing literature demonstrating that negative and positive stimuli engage different brain processes at encoding, and that this may lead to differences in the type of information available at retrieval. For example, retrieval of positive events engages frontal areas, and retrieval of negative events engages more posterior sensory regions (Markowitsch, Vandekerckhove, Lanfermann, & Russ, 2003) which is in line with evidence that frontal and sensory regions are engaged during encoding of emotionally positive and negative stimuli, respectively (Mickley & Kensinger, 2008).

What is clear from these studies is that emotional valence can affect the processes engaged at encoding or retrieval, but the likelihood that an emotional experience comes to mind, the accompanying subjective vividness, and the memory for contextual source details, may be influenced by the overlap of processes engaged at encoding and retrieval. We hypothesize that negative stimuli are associated with greater recapitulation in sensory cortices and this leads to subjective memory enhancement. However, only a handful of studies have examined emotion-modulated recapitulation. Fenker and colleagues (Fenker, Schott, Richardson-Klavehn, Heinze, & Düzel, 2005) examined recapitulation during retrieval of neutral words previously paired with a fearful or neutral face at encoding. Using a region of interest approach, they found that bilateral FFA activation was stronger for emotional compared to neutral trials that were accompanied with a remember response, but amygdala and hippocampus did not show this same distinction. Smith, Henson, Dolan and Rugg (2004) studied recapitulation during retrieval of neutral objects previously superimposed on a negative, positive or neutral background at encoding. Compared to positive or neutral, successful recognition of stimuli associated with a negative background was correlated with reactivation of visual processing regions. Ritchey and colleagues (2013) also provided evidence that encoding-to-retrieval similarity correlated with amygdala activity specifically during the successful retrieval of negative items, although valence differences were not the focus of the study.

We are aware of only one study that has directly compared the recapitulation (i.e., encoding-to-retrieval overlap) associated with successful retrieval of negative and positive memories. In this study (Kark & Kensinger, 2015), participants encoded black and white degraded line-drawing versions of International Affective Picture System (IAPS; Lang, Bradley, & Cuthbert, 2008) images, which were followed by the full colored photograph. After a short delay (20 minutes), participants were given the line-drawings as retrieval cues and asked to make an old or new judgment. Correctly remembered positive and negative images engaged posterior regions of the ventral occipital-temporal cortex more than correctly remembered neutral images, reflecting recapitulation of the emotional aspects of the stimulus. Furthermore, negative stimuli in particular were associated with more extensive encoding-to-retrieval overlap throughout anterior sensory regions (including the anterior inferior temporal gyri) when items were remembered rather than forgotten, suggesting that recapitulation in these sensory cortices supports successful memory more strongly for negative compared to positive or neutral stimuli.

The current study served to replicate the prior findings with regard to valence differences in recapitulation and to build off of these prior findings in two key ways. First, by employing a remember/know paradigm, we could test whether recapitulation was disproportionately related to recollection, as would be suggested by many theories regarding the links between recapitulation and conscious re-experience. Second, we could extend Kark and Kensinger’s (2015) findings in several ways. Although the line drawings used in Kark and Kensinger had less emotion than the full IAPS images, emotional content may have still been conveyed by the line drawings. Thus, some of the differences at retrieval could have been due to the retrieval cue rather than to the memory representation that was retrieved. The present study circumvented this concern by using neutral words as retrieval cues and by counterbalancing across participants whether those words had been studied in a positive, negative, or neutral context; thus, the retrieval cues give no hint regarding the previous encoding-related source information. Furthermore, using a conceptual retrieval cue (a word) rather than a complex visual retrieval cue (a line drawing) allowed us to test whether negative valence enhances sensory recapitulation even when the retrieval cue does not have visuo-sensory complexity. Finally, the present study was designed to examine content-specific recapitulation (Cohen & Dehaene, 2004; Fenker et al., 2005; Hofstetter et al., 2012; Skinner et al., 2010) as well as affective recapitulation, by presenting neutral words at study in the context of faces or scenes that were of positive, negative, or neutral valence. Thus, the present study could examine the effects of valence (negative, positive, neutral) as well as study content (faces, scenes) on retrieval-related recapitulation of successful encoding processes.

2. Methods

2.1. Participants

Participants between the ages of 18 and 30 were recruited through the Boston College undergraduate participant pool as well as advertisements on the website Craigslist.org. All procedures were approved by the Boston College Institutional Review Board and all participants provided informed consent. Before being scheduled for an MRI appointment, eligibility was determined using a medical screening form to ensure no past or current medical or psychological conditions that could influence the results (e.g., past head injury, epilepsy, untreated high blood pressure) as well as an MRI screening form to ensure no contraindications to the MRI scan (e.g., metal implants, claustrophobia). Participants were scanned at the Harvard Center for Brain Science. For analysis Approach 1, data are reported from 19 participants (7 male), but one of these participants was excluded from Approach 2 (which separates face and scene trials and recollection from other processes) for having fewer than 5 trials in some conditions in the general linear model (GLM). Participant characteristics are presented in Table 1. The mean values for participants included in these two separate approaches did not significantly differ on any of the characteristics listed in Table 1, t(35) ≤ .23, p ≥ .81. Additional participants were excluded due to voluntary withdrawal (1 participant), scanner malfunction (1), problems with image acquisition (2), excessive head motion (see preprocessing section for details; 5 participants), at-chance behavioral performance or failure to follow instructions (3), and an experimenter error that resulted in a mismatch between the counterbalancing list used at study and at retrieval (1).

Table 1.

Participant characteristics

| | BDI | Shipley | Age |
|---|---|---|---|
| Approach 1 (N = 19) | 1.26 (1.66) | 30.79 (8.76) | 23.26 (3.57) |
| Approach 2 (N = 18) | 1.28 (1.70) | 30.56 (8.95) | 23.06 (3.56) |

Note. BDI = Beck Depression Inventory; Shipley = Shipley Vocabulary Test; Age = age of the participant. Means are presented with standard deviations in parentheses.

2.2. Stimuli & Task

The stimuli consisted of faces, scenes and words gathered from different sources, as detailed below. During encoding, stimuli stayed on screen for 3250 ms; during retrieval, stimuli stayed on-screen until a response was made, to a maximum of 3250 ms. OptSeq (http://surfer.nmr.mgh.harvard.edu/optseq/) software was used to optimize jittered sequences (up to 8000 ms) and trial durations. E-Prime 2.0 software (Psychology Software Tools, Pittsburgh, PA) was used for stimulus presentation and response collection. All word stimuli were presented in white, Arial 35-point font centered near the top of the screen on a black background.

2.2.1. Faces and Scenes

Forty-two face stimuli were taken from the NimStim Set of Facial Expressions (Tottenham et al., 2009; http://www.macbrain.org/resources.htm). These faces conveyed various emotional expressions (e.g., smiling, frowning) ranging in emotional valence and arousal (e.g., smiling mouth closed, smiling mouth open), gender, race and ethnicity. Fifty-seven scene images that contained no perceptible faces were selected. To meet this requirement, images were taken from the IAPS and supplemented with those taken from online sources. The chosen scenes contained different content that ranged in emotional valence (e.g., natural disaster, Thanksgiving dinner) and arousal. These faces and scenes were rated for valence and arousal by each individual participant, as described in Procedure, to tailor the stimulus set to each participant according to his or her ratings.

2.2.2. Words

Words were taken from a published dataset (Warriner, Kuperman, & Brysbaert, 2013) consisting of 13,916 words that include valence and arousal ratings (scale of 1–9). The list was first restricted to six-letter words and then further reduced to those of neutral valence and relatively low arousal; from this subset, the list was reduced to 384 words using a random number generator (Mvalence = 5.71, SDvalence = 1.64, range = 5.0–6.9; Marousal = 3.41, SDarousal = 2.16, range = 2.27–3.96). Words were then split into 8 groups of 48 words. The 8 lists did not differ on average valence or arousal ratings, F(7, 383) = .85, p = .55 and F(7, 383) = .65, p = .72, respectively.
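The word-selection steps described above can be illustrated with a minimal sketch. It assumes a hypothetical input format (a list of `(word, valence, arousal)` tuples) and uses the reported valence and arousal ranges as filtering cutoffs, which may not be the exact criteria that were applied; the function name and the seed are likewise illustrative.

```python
import random

def build_word_lists(norms, n_words=384, n_lists=8, seed=0):
    """norms: iterable of (word, valence, arousal) tuples (hypothetical format)."""
    # Keep six-letter words inside the reported valence and arousal ranges.
    # These cutoffs are assumed from the reported ranges, not stated criteria.
    pool = [w for (w, val, aro) in norms
            if len(w) == 6 and 5.0 <= val <= 6.9 and 2.27 <= aro <= 3.96]
    rng = random.Random(seed)  # arbitrary seed, for reproducibility only
    chosen = rng.sample(pool, n_words)  # random draw without replacement
    size = n_words // n_lists  # 48 words per list with the defaults
    return [chosen[i * size:(i + 1) * size] for i in range(n_lists)]
```

Because the words are drawn without replacement and then sliced, no word can appear in more than one of the 8 lists.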

2.3. Questionnaires

Two questionnaires were administered before the fMRI scan. The Beck Depression Inventory (Beck, Steer, & Carbin, 1988) was used to determine whether participants were currently experiencing symptoms of depression. Scores between 0 and 13 indicate minimal symptoms of depression. All participants had a score between 0 and 4 except for one participant who had a score of 12. The Shipley Institute of Living Scale: Vocabulary (Shipley, 1940) provided a measure of crystallized intelligence. See Table 1 for means of these questionnaires.

2.4. Procedure

After being scheduled for an MRI session, participants were sent information regarding an online survey that needed to be completed at least 3 days before their MRI appointment.

2.4.1. Online Survey

The survey was conducted via surveymonkey.com, asking participants to rate the valence and arousal of all the 42 face and 57 scene stimuli. Participants indicated whether the image was positive, negative, or neutral and rated arousal on a scale of 1–7, where 1 is very calming, 4 is neutral (neither calming nor arousing), and 7 is very arousing. These ratings were used to select a subset of images (4 scenes and 4 faces1 of each valence; 24 images total) that would make up the individualized set of images used as experimental stimuli. For each participant, 8 negative (4 faces, 4 scenes) and 8 positive (4 faces, 4 scenes) images were selected from those given the highest arousal ratings and 8 images rated as neutral and non-arousing were selected. The ratings ensured that during the experiment, participants were viewing images they deemed emotional, and they also provided an initial exposure to the stimuli so that they would be familiar at the time of encoding.
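As an illustration of how such an individualized stimulus set could be assembled from one participant's ratings, consider the following minimal sketch. The input format and function name are hypothetical, and the rule used for neutral images (preferring arousal ratings closest to the scale midpoint of 4) is an assumption, since the text states only that neutral, non-arousing images were selected.

```python
def select_stimuli(ratings, per_cell=4):
    """ratings: list of dicts with keys 'id', 'content' ('face'/'scene'),
    'valence' ('negative'/'positive'/'neutral') and 'arousal' (1-7).
    This input format is hypothetical."""
    selected = []
    for content in ("face", "scene"):
        for valence in ("negative", "positive", "neutral"):
            cell = [r for r in ratings
                    if r["content"] == content and r["valence"] == valence]
            if valence == "neutral":
                # Assumed rule: prefer ratings closest to the midpoint (4).
                cell.sort(key=lambda r: abs(r["arousal"] - 4))
            else:
                # Emotional images: highest arousal ratings first.
                cell.sort(key=lambda r: r["arousal"], reverse=True)
            selected.extend(cell[:per_cell])
    return selected
```

With 4 images per cell and 2 x 3 cells, the function returns the 24 images per participant described above, provided each cell contains at least 4 rated images.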

2.4.2. Encoding and Retrieval Task

Participants were given verbal and written instructions about the memory task and asked to practice the encoding and retrieval task on a computer. As noted, there were 4 study-test blocks. Each encoding block contained 48 trials, of which 24 trials included a scene image and 24 included a face image. During each trial of the encoding task, a word and image were presented on the screen together (see Figure 1). Participants were asked to indicate whether the image was a face or scene and to try to remember the word for a subsequent memory test. Participants were not instructed to “pair” the image and word together. Additionally, participants practiced both the encoding and retrieval task outside the scanner, and thus they were aware that only memory for the words would be tested. During the retrieval task, old (n = 48 per block) and new words (n = 40 per block) were presented one at a time. There were slightly fewer new than old words to minimize the length of the recognition blocks (an important consideration in the MRI environment) while still minimizing the likelihood that participants would shift their response criterion due to a perceived frequency difference in old and new words. Participants made a remember/know/new judgment using the instructions from Rajaram (1993). Importantly, the retrieval instructions did not explicitly mention bringing back to mind the face/scene images from the encoding context; thus participants could make a ‘remember’ response without retrieving information about the face/scene image, as long as they could bring to mind some other episodic detail (e.g., an association they made to the word; what trial came before or after in the study list). Some prior work (Strack & Forster, 1995) has suggested that including a guess option may help isolate familiarity processes as participants use the ‘know’ (over ‘remember’ or ‘new’) when they are in fact guessing. 
We chose not to include a ‘guess’ response option at retrieval in order to streamline the task design; however, this also means that ‘know’ responses may be contaminated by guessing and thus should not be considered a pure measure of familiarity. After practicing the task, participants changed into MRI compatible clothing, were fit with MRI compatible prescription glasses if necessary, and were set up in the MRI scanner (fMRI data acquisition described next). While in the scanner, participants completed 4 alternating encoding-retrieval blocks.

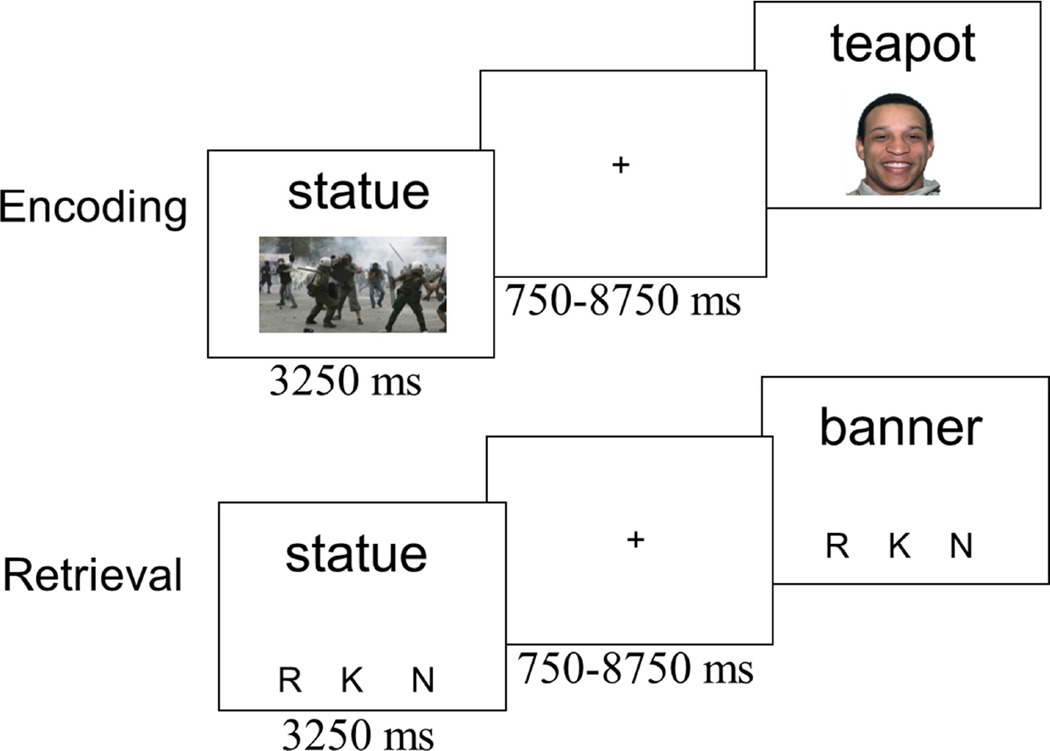

Figure 1.

Schematic of the encoding and retrieval task. During the encoding task, participants were asked to remember the word for the upcoming memory test and to make a decision, via button press, as to whether the image was a face or scene. During retrieval, participants were asked to make a remember (R), know (K) or new (N) judgment for each word.

2.4.3. Functional Localizer Task

Following the encoding and retrieval blocks, participants performed a functional localizer task (Julian, Fedorenko, Webster, & Kanwisher, 2012). Participants performed a 1-back task on blocks of faces, scenes, objects and scrambled images with 10 of each stimulus type in 2 fMRI runs. Each stimulus stayed on the screen for 1750 ms. Each block was separated by a 14 s fixation block. Images of body parts were used during a practice run before completing the experimental runs.

2.4.4. Tasks Completed After MRI Session

After exiting the scanner, participants completed a source memory judgment. Participants were given the list of 48 words from the last encoding block and asked to indicate whether each word had been paired with a face or a scene, or to respond “don’t know”. The “don’t know” option was included to gauge guessing. After changing back into their street clothes, participants were fully debriefed about the purpose of the study and paid for their participation.

2.5. fMRI Data Acquisition

Structural and functional images were acquired using a Siemens Tim Trio 3 Tesla scanner and 32-channel head coil. Following the localizer and auto-aligned scout, whole-brain T1-weighted anatomical images were collected (MEMPRAGE, 176 sagittal slices, 1.0 mm³ voxels, TR = 2530 ms, TE1 = 1.64 ms, TE2 = 3.5 ms, TE3 = 5.36 ms, TE4 = 7.22 ms, Flip angle = 7 degrees, 256 field of view, base resolution = 256). Following this structural scan, eight functional runs were collected (4 encoding, 4 retrieval). Encoding and retrieval T2-weighted echoplanar images (EPI) were acquired using an interleaved simultaneous multi-slice (SMS) sequence, with 3 slices per scan, starting with odd-numbered slices. SMS-EPI acquisitions used a modified version of the Siemens WIP 770A (Moeller et al., 2010; Setsompop et al., 2012) with slices tilted 22 degrees coronally from anterior commissure-posterior commissure (AC-PC) alignment (69 slices, 2.0 mm³ voxels, TR = 2000 ms, TE = 27 ms, Flip angle = 80 degrees, 216 field of view, base resolution = 108). The first 4 scans of each run were discarded to allow for equilibrium effects and were not included in analyses. A functional localizer scan followed the memory task runs. T2-weighted echoplanar images were acquired using an interleaved simultaneous multi-slice sequence, 3 slices at a time starting with odd-numbered slices, tilted 15 degrees coronally from AC-PC alignment (32 slices, 3.0 x 3.0 x 3.6 mm voxels, TR = 2000 ms, TE = 30 ms, Flip angle = 80 degrees, 192 field of view, base resolution = 64).

2.6. Analyses

2.6.1. Preprocessing

Images were pre-processed and analyzed using SPM8 (Wellcome Department of Cognitive Neurology, London, United Kingdom). All functional images were reoriented, realigned, co-registered, spatially normalized to the Montreal Neurological Institute template (resampled at 3 mm during segmentation and written at 2 mm during normalization), and smoothed using a 4 mm isotropic Gaussian kernel. Global mean intensity and motion outliers were identified using Artifact Detection Tools (ART; available at www.nitrc.org/projects/artifactdetect). The parameters for outlier detection were the following: 1) more than 3 standard deviations above the global mean intensity, 2) less than ± 5 mm for translation motion and 3) ±1 degree for rotation. A run was considered problematic if more than 10 TRs were detected as outliers. In total, one retrieval run was completely removed for a single participant, and 18 TRs were removed from the end of an encoding run for another participant.

2.6.2. General Linear Model

The fMRI analyses were used to 1) examine whether valence-based recapitulation occurs generally for hits or whether the recapitulation is enhanced for recollection compared to other processes (i.e., familiarity and forgetting); 2) replicate and extend prior work (Kark & Kensinger, 2015) showing greater reactivation in sensory regions for successful memory of negative stimuli compared to successful memory of positive or neutral stimuli; 3) replicate previous findings (e.g., Fenker et al., 2005) showing reactivation of content-specific regions, namely the FFA and the parahippocampal place area (PPA); and 4) determine whether recapitulation varies as a function of valence (negative, positive, neutral) and whether valence interacts with stimulus content (i.e., faces or scenes) and memory within content-specific ROIs.

In Approach 1, hits (i.e., correctly recognized “old” items) were broken down by response type, henceforth referred to as “remember hits” and “know hits”. Remember hits were compared to know hits and, in a separate analysis, remember hits were compared to misses (i.e., all incorrect “new” responses) by each valence, collapsed across study content (faces, scenes). In Approach 2, trials were separated by study content as well as by valence. Additionally, because recollection, compared to other processes, is thought to reflect memory for episodic content from the encoding event and can be considered a measure of the mental time travel discussed in the Introduction, we considered it most relevant to contrast “remembered” trials with other trial types. Know and new responses to old items were collapsed together and compared to remember hits (i.e., recollection) using a masking approach to restrict the search space to pre-defined regions of interest (ROIs), described in detail in supplementary material section 1.0. Combining know and new responses in this way also increased the analysis bin size; as described in the Participants section, no analysis included participants or conditions with fewer than 5 trials per analysis bin.

At the first (subject) level of analysis, two rapid event-related design matrices were created for Approach 1 (one for encoding and one for retrieval) and another two for Approach 2 (one for encoding and one for retrieval). For Approach 1, a 13-column encoding regression matrix included 9 conditions of interest (subsequent remember hits, subsequent know hits and subsequent misses for each level of valence), as well as 4 columns to regress out linear drift for the 4 concatenated runs; a 15-column retrieval regression matrix included 9 conditions of interest (remember hits, know hits and misses for each level of valence), 2 nuisance regressor columns for false alarms (FAs) and correct rejections (CRs), and 4 columns to regress out linear drift (see footnote 2) for the four concatenated runs. Contrasts were then created, comparing each condition of interest at encoding to baseline (e.g., Subsequent Negative Remember Hit > Baseline), and each condition at retrieval to baseline (e.g., Negative Remember Hit > Baseline). For Approach 2, a 16-column encoding regression matrix included 12 conditions of interest (subsequent remember hits and subsequent know/miss responses by valence and content) and 4 columns to regress out linear drift across the 4 concatenated runs; an 18-column retrieval regression matrix included 12 conditions of interest (remember hit and know/miss response by valence and content), 2 nuisance regressor columns for FAs and CRs, and 4 columns to regress out linear drift for the four concatenated runs (see footnote 2). Contrasts were created comparing the conditions of interest at encoding to baseline (e.g., Subsequent Negative Face Remember Hit > Baseline) and conditions of interest at retrieval to baseline (e.g., Negative Face Remember Hit > Baseline).

2.6.3. Encoding-to-retrieval overlap

For Approach 1, random-effects contrast analyses at encoding were conducted comparing: subsequent remember hits to subsequent know hits by each valence (3 contrasts) and subsequent remember hits to subsequent misses by each valence (3 contrasts). Random-effects contrast analyses at retrieval were conducted comparing: remember hits to know hits for each valence (3 contrasts) and remember hits to misses by each valence (3 contrasts). Conjunction analyses were then used to examine the spatial overlap between encoding and retrieval. More specifically, to examine recapitulation effects for each condition of interest, retrieval activity was inclusively masked with the equivalent encoding activity (e.g., Encoding Subsequent Remember Hit > Subsequent Miss ∩ Retrieval Remember Hit > Miss).
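The logic of inclusive masking can be illustrated with a minimal sketch. It is not the SPM8 implementation: statistical maps are represented here as plain dictionaries from voxel coordinates to p-values (a hypothetical simplification), and the default threshold is the per-contrast value reported in Section 2.7.

```python
def conjunction(enc_p, ret_p, voxel_p=0.0243):
    """enc_p, ret_p: dicts mapping voxel coordinates to p-values
    (a hypothetical, simplified stand-in for statistical maps)."""
    # Voxels that pass the threshold in the encoding contrast.
    enc_mask = {v for v, p in enc_p.items() if p < voxel_p}
    # Inclusive masking: keep retrieval voxels that also pass at encoding.
    return {v for v, p in ret_p.items() if p < voxel_p and v in enc_mask}
```

A voxel therefore counts as showing encoding-to-retrieval overlap only if it exceeds threshold in both the encoding and the retrieval contrast.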

For Approach 2, random-effects contrasts of interest were activity for Scenes > Faces and activity for Faces > Scenes at encoding. ROIs for the PPA and FFA were then defined from a combination of the functional localizer and encoding activity. For details describing ROI definition the reader is referred to supplementary material section 1.0. Activity from these ROIs was extracted and submitted to a 3 (Valence: negative, positive, neutral) x 2 (Content: face, scene) x 2 (Memory: remember, know/miss) repeated-measures ANOVA.

2.7. Data Reporting and Visualization

A voxel threshold of p < .005 (uncorrected) was used for all individual contrasts, unless otherwise specified. To correct for multiple comparisons at p < .05, we used an 18-voxel extent (k) as determined by Monte Carlo simulations (https://www2.bc.edu/sd-slotnick/scripts.htm). Our discussion and visualizations of activations in all figures reflect this 18-voxel extent, but to avoid Type II error (see Lieberman & Cunningham, 2009) we report all clusters with at least 10 contiguous voxels in the tables. For conjunction analyses, the voxel threshold for each individual contrast was set at p = .0243 such that, using the Fisher equation (Fisher, 1973), the joint probability was p = .005.
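The relationship between the per-contrast threshold and the joint probability can be checked with a short sketch of Fisher's combined probability test for two p-values. The function name is illustrative, and the calculation assumes that the two contrasts are treated as independent tests, as Fisher's method requires.

```python
import math

def fisher_joint_p(p_enc, p_ret):
    """Fisher's combined probability for two independent p-values.
    With k = 2 tests the chi-square statistic has 4 degrees of freedom,
    whose survival function has the closed form exp(-x/2) * (1 + x/2)."""
    chi2 = -2.0 * (math.log(p_enc) + math.log(p_ret))
    return math.exp(-chi2 / 2.0) * (1.0 + chi2 / 2.0)

joint = fisher_joint_p(0.0243, 0.0243)
print(round(joint, 4))  # 0.005
```

Thresholding each contrast at p = .0243 thus corresponds to a joint probability of approximately .005 for the conjunction.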

MNI coordinates from SPM8 were converted to Talairach coordinates using the GingerALE tool (http://www.brainmap.org/ale). Anatomical labels in the cluster report tables were assigned using the Talairach Daemon (Lancaster et al., 1997; Lancaster et al., 2000) and checked manually using an anatomy atlas (Talairach & Tournoux, 1988). We report the whole-brain results in our tables and figures for Approach 1 and region of interest results in figures for Approach 2.

3. Results

3.1. Behavioral Results

3.1.1. Online Ratings

The experimental stimuli for each participant were selected based on their subjective valence (negative, positive, neutral) and arousal (scale 1–7) ratings. The arousal ratings were submitted to a 3 (valence: negative, positive, neutral) x 2 (content: face, scene) ANOVA. There was a significant valence x content interaction, F(2, 30) = 15.02, p < .001, ηp2 = .50. A follow-up ANOVA for faces indicated a main effect of valence, F(2, 30) = 70.34, p < .001, ηp2 = .83. Faces rated as negative were also rated as more arousing, M = 6.31, SD = .63, compared to positive faces, M = 4.75, SD = .86, t(15) = 8.25, p < .001, η2 = .82, which were rated as significantly more arousing than neutral faces, M = 4.06, SD = .25, t(15) = 3.13, p = .001, η2 = .40. The same pattern was true of scenes. A follow-up ANOVA for scenes indicated a main effect of valence, F(2, 30) = 95.07, p < .001, ηp2 = .86. Scenes rated as negative were rated as more arousing, M = 6.49, SD = .52, compared to positive scenes, M = 6.03, SD = 1.00, t(15) = 2.77, p = .01, η2 = .34, which were rated as more arousing than neutral scenes, M = 4.02, SD = .063, t(15) = 7.98, p < .001, η2 = .81. Negative faces and positive scenes did not differ from one another in arousal ratings, t(16) = 1.11, p = .29, η2 = .073.
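For readers who wish to relate the effect sizes to the test statistics, the standard conversion formulas are sketched below. This assumes that the reported values follow these textbook definitions, which the text does not state explicitly; the function names are illustrative.

```python
def partial_eta_sq(f, df_effect, df_error):
    # Partial eta squared recovered from an F ratio and its degrees of freedom.
    return (f * df_effect) / (f * df_effect + df_error)

def eta_sq_from_t(t, df):
    # Eta squared recovered from a t statistic and its degrees of freedom.
    return t ** 2 / (t ** 2 + df)

print(round(partial_eta_sq(15.02, 2, 30), 2))  # 0.5
print(round(eta_sq_from_t(8.25, 15), 2))       # 0.82
```

Both values agree with those reported for the valence x content interaction and for the comparison of negative and positive faces.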

3.1.2. Memory Performance

Unless otherwise specified, N = 19 and all dependent variables associated with the word stimuli were submitted to a 3 (valence: negative, positive, neutral) x 2 (content: face, scene) repeated-measures ANOVA.

3.1.2.1. Hit Rate

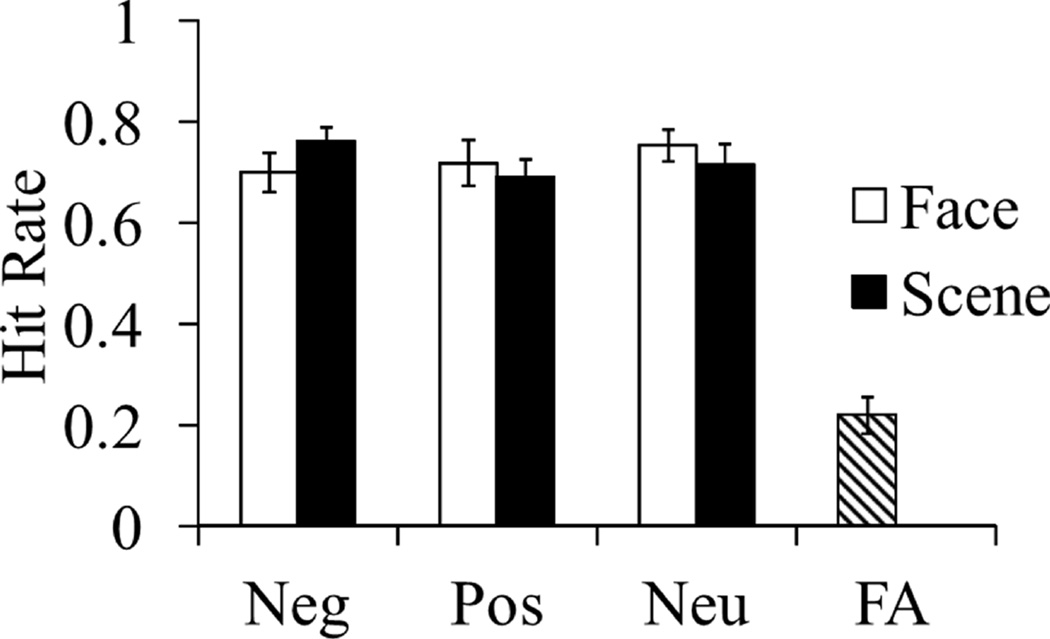

There were no main effects of valence or content, but a significant interaction emerged, F(2, 36) = 3.86, p = .03, ηp2 = .17. This interaction was driven by significantly higher hit rates for negative scenes compared to positive and neutral scenes, F(2, 36) = 3.78, p = .03, ηp2 = .17. Hit rates for negative, positive and neutral faces did not significantly differ, F(2, 36) = 1.49, p = .24, ηp2 = .08. See Figure 2 for the mean hit rates at each level of the conditions. The false alarm rate (see Figure 2) and the signal detection index of sensitivity (d’; M = 1.61, SE = .15) are single values because distractor items were neutral words that were never paired with an emotional context. d’ was significantly higher for remember responses (M = 1.99, SE = .19) compared to know responses (M = .97, SE = .14), t(18) = 7.41, p < .001, η2 = .75.
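For readers unfamiliar with the sensitivity index, d’ is conventionally computed as the difference between the z-transformed hit rate and the z-transformed false alarm rate. The sketch below illustrates that standard formula only. The rates are hypothetical and are not the study's data, and any correction the authors may have applied for extreme rates is not described in this section.

```python
from statistics import NormalDist


def d_prime(hit_rate: float, fa_rate: float) -> float:
    """Standard signal detection sensitivity: d' = z(hit rate) - z(false alarm rate)."""
    for name, rate in (("hit_rate", hit_rate), ("fa_rate", fa_rate)):
        if not 0.0 < rate < 1.0:
            raise ValueError(f"{name} must lie strictly between 0 and 1")
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical rates for illustration only (not taken from the study).
print(round(d_prime(0.70, 0.20), 2))
```

Because every distractor was a neutral word with no encoding context, a single false alarm rate enters this computation regardless of the valence or content of the studied items.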

Figure 2.

Behavioral performance, N = 19. Neg = negative; Pos = positive; Neu = neutral; FA = false alarm rate. Hit rates were higher for words encoded with negative scenes compared to positive and neutral scenes, but hit rates for words encoded with negative, positive or neutral faces did not statistically differ.

3.1.2.2. Reaction Time

Examining the influence of the independent variables on median reaction times (RTs) for hits revealed no main effects and no interaction, F(2, 36) ≤ .99, p ≥ .33, ηp2 ≤ .05. The average reaction time across conditions was 1259 milliseconds (SE = 51).

3.1.2.3. Proportion of Remember and Know Responses

The proportions of responses to target items were submitted to a 3 (valence: negative, positive, neutral) x 2 (content: face, scene) x 2 (response: remember, know) repeated-measures ANOVA. There was a significant main effect of response type, F(1, 18) = 5.24, p = .03, ηp2 = .23. Overall, participants used remember more often (M = .43, SE = .04) than know (M = .28, SE = .03). No interactions with response type emerged, F(2, 36) ≤ .48, p > .62, ηp2 ≤ .03.

3.1.2.4. Source Memory Judgments

Source (face/scene/don’t know) judgments were collected (N = 16) for the last block of trials and included only the 48 target items. On average, participants selected “face” 19.1%, “scene” 22.9% and “don’t know” 57.9% of the time. Examining all trials, participants chose the correct source on 28% of trials; when “don’t know” trials were excluded and analyses were restricted to trials on which a participant made a face or scene choice, accuracy was 79%. Due to extensive use of the “don’t know” option, there were not enough face/scene judgments per condition to conduct a full factorial ANOVA on source memory. Our main interest was in determining whether source accuracy was better for “remember” compared to “know” or “new” trials. We separated item memory by remember and know (correct responses) and new (incorrect response) and examined source memory. A repeated-measures ANOVA indicated an effect of response, F(2, 30) = 33.20, p < .001, ηp2 = .69. Follow-up paired t-tests indicated that source memory was better for old words given a remember response (M = .23, SD = .11) compared to a know response (M = .07, SD = .08), t(15) = 5.28, p < .001, η2 = .65. Source memory was also better for old words given a know response compared to those words previously judged as new (M = .03, SD = .02), t(15) = 2.23, p = .04, η2 = .25. We next collapsed across response, and a paired t-test indicated that source accuracy was equivalent for items previously paired with faces (M = .11, SD = .07) and scenes (M = .13, SD = .06), t(15) = 1.45, p = .17, η2 = .08. Finally, there was no effect of valence, F(2, 30) = .30, p = .74, ηp2 = .02; source memory was equivalent for words encoded in a negative (M = .12, SD = .07), positive (M = .13, SD = .07), and neutral (M = .12, SD = .07) context.
Further, the proportion of “don’t know” responses (57.9%) was evenly split among the three valences (~19% for each valence), and there was no effect of valence on source accuracy when trials were restricted to those in which a face or scene judgment was made (i.e., excluding “don’t know” trials), F(2, 24) = .47, p = .63, ηp2 = .04.

3.2. Neuroimaging Results Approach 1

3.2.1. Stronger recapitulation for remember compared to know responses and for items studied in a negative context

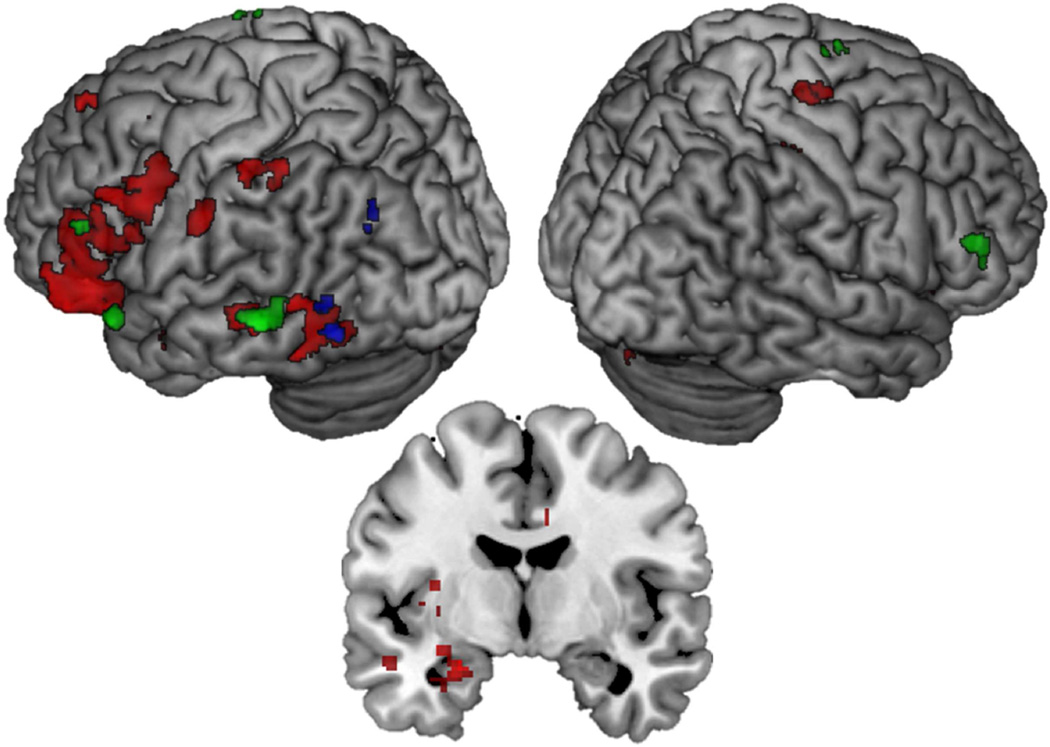

We first compared recapitulation for remember hits to know hits (and vice versa) to establish whether the extent of recapitulation varied as a function of the valence of the study context. Activity to remember hits was contrasted with activity to know hits during encoding and retrieval to examine the encoding-to-retrieval overlap at each level of valence (e.g., Negative Remember Hits > Negative Know Hits at encoding ∩ Negative Remember Hits > Negative Know Hits at retrieval). For negative valence, there was extensive overlap throughout the brain, shown in red in Figure 3. In particular, there was evidence of recapitulation in the amygdala (MNI: −24, −6, −20, k = 227), in anterior sensory cortices including left fusiform gyrus (BA 37; MNI: −52, −50, −18, k = 371) and left superior temporal gyrus (BA 22; MNI: −46, 2, −24, k = 39), as well as in several frontal regions including left and right inferior frontal gyri (BA 11/47; MNI: −38, 34, −12, k = 1119; MNI: 24, 30, −14, k = 28), and left middle frontal gyrus (BA 6, 8, 9; MNI: −26, 12, 48, k = 21; MNI: −40, 28, 40, k = 14; MNI: −48, 20, 30, k = 467).
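The logic of the encoding ∩ retrieval conjunction can be illustrated with a toy example. This is a minimal sketch, assuming that each contrast map is thresholded separately and that overlap is the set of voxels surviving in both maps. It is not the SPM8 pipeline used in the study, and the voxel coordinates, statistic values and threshold below are hypothetical.

```python
Voxel = tuple[int, int, int]


def suprathreshold(stat_map: dict[Voxel, float], threshold: float) -> set[Voxel]:
    """Voxels whose contrast statistic exceeds the threshold."""
    return {voxel for voxel, value in stat_map.items() if value > threshold}


def conjunction(
    encoding: dict[Voxel, float],
    retrieval: dict[Voxel, float],
    threshold: float,
) -> set[Voxel]:
    """Voxels that pass the threshold in BOTH the encoding and retrieval contrasts."""
    return suprathreshold(encoding, threshold) & suprathreshold(retrieval, threshold)


# Hypothetical statistics for Remember Hits > Know Hits at three voxels.
encoding_map = {(0, 0, 0): 3.5, (1, 0, 0): 2.1, (2, 0, 0): 4.0}
retrieval_map = {(0, 0, 0): 3.2, (1, 0, 0): 3.9, (2, 0, 0): 1.0}

overlap = conjunction(encoding_map, retrieval_map, threshold=3.0)
print(sorted(overlap), "k =", len(overlap))
```

Only the voxel that is suprathreshold at both phases counts toward the cluster extent k; a voxel that is strongly active at encoding alone or at retrieval alone does not contribute.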

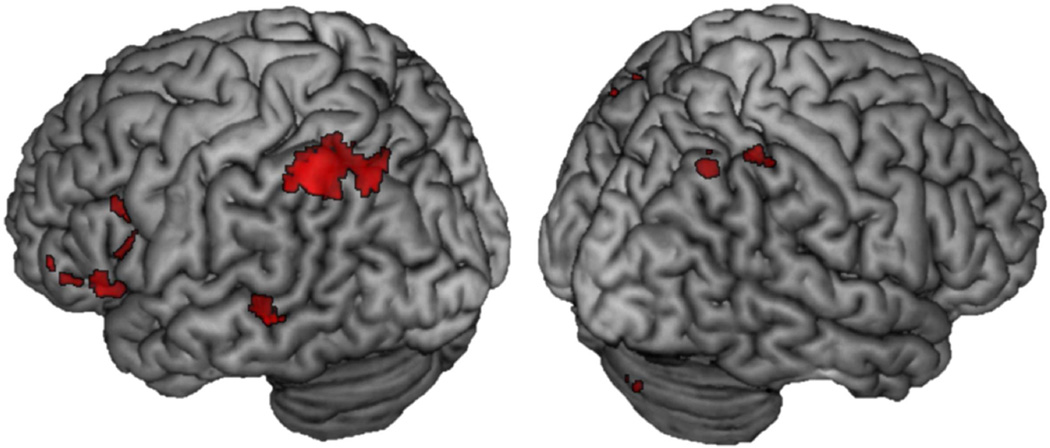

Figure 3.

Encoding-to-retrieval spatial overlap for Remember Hits > Know Hits at each level of valence. Red = Negative; Blue = Positive; Green = Neutral. Coronal slice at MNI Y = −6 showing recapitulation in the amygdala associated with negative but not positive or neutral context.

For positive items, overlap was limited to the left fusiform (BA 37; MNI: −48, −62, −10, k = 20), shown in blue in Figure 3. Finally, overlapping activity for Neutral Remember Hits > Neutral Know Hits included left inferior frontal gyrus (BA 47; MNI: −46, 22, −20, k = 28; BA 45; MNI: −52, 26, 4, k = 18), left and right middle frontal gyrus (BA 46; MNI: −46, 34, 16, k = 36; MNI: 54, 34, 14, k = 33) and left middle temporal gyrus (BA 21; MNI: −60, −40, −4, k = 89). Activity for this analysis is shown in green in Figure 3, and regions active in this analysis are listed in Table 2. Few regions exhibited overlap that was greater for familiarity than recollection for positive and neutral valence (Positive Know Hits > Positive Remember Hits and Neutral Know Hits > Neutral Remember Hits); these results are detailed in the supplementary material section 1.1. No regions reached threshold for being more active for familiarity than recollection for negative valence.

Table 2.

Regions that showed spatial overlap for activity related to recollection greater than familiarity for each level of valence

| Lobe | Region | Hem | BA | MNI Coordinates | TAL Coordinates | k | Excl |
|---|---|---|---|---|---|---|---|
| Encoding ∩ Retrieval (Negative Remember Hits > Negative Know Hits) | | | | | | | |
| Frontal | Inferior frontal gyrus | L | 11/47 | −38, 34, −12 | −36, 31, −4 | 1119 | Y, k = 1058 |
| | | R | 11 | 24, 30, −14 | 21, 27, −5 | 28 | Y |
| | Medial frontal gyrus | L | 8 | −6, 46, 44 | 7, 37, 47 | 133 | Y |
| | Middle frontal gyrus | L | 6 | −26, 12, 48 | −26, 5, 48 | 21 | Y |
| | | L | 8 | −40, 28, 40 | −38, 21, 42 | 14 | Y |
| | | L | 9 | −48, 20, 30 | −46, 14, 32 | 467 | Y |
| Limbic | Cingulate gyrus | L | 24 | −4, 2, 28 | −5, −2, 29 | 22 | Y |
| | | L | NA | −34, 2, −2 | −32, 0, 2 | 74 | Y |
| | | R | 24 | 8, 6, 34 | 6, 1, 35 | 22 | Y |
| | Posterior cingulate | L | 29 | −4, −38, 18 | −5, −39, 17 | 13 | Y |
| Parietal | Inferior parietal lobule | L | 40 | −48, −44, 52 | −46, −47, 46 | 45 | Y |
| | | L | 7 | −32, −52, 48 | −31, −54, 42 | 10 | Y |
| | Postcentral gyrus | L | 3 | −28, −30, 52 | −28, −34, 47 | 14 | Y |
| | | L | 2 | −60, −18, 34 | −57, −21, 32 | 48 | Y |
| | | R | 3 | 28, −28, 50 | 24, −32, 47 | 43 | Y |
| | | R | 2 | 52, 18, 22 | 47, −21, 23 | 28 | Y |
| | | R | 4 | 26, −20, 66 | 22, −26, 62 | 28 | Y |
| | | L | 3 | −26, −26, 54 | −26, −31, 50 | 21 | Y |
| Temporal | Fusiform gyrus | L | 37 | −52, −50, −18 | −49, −47, −17 | 371 | Y, k = 308 |
| | | L | 36 | −36, −38, −24 | −34, −35, −21 | 16 | N |
| | Middle temporal gyrus | R | 21 | 56, −44, −4 | 51, −43, −3 | 12 | Y |
| | Superior temporal gyrus | L | 22 | −46, 2, −24 | −43, 2, −18 | 39 | Y |
| Cerebellum | | L | NA | −6, −32, −32 | −6, −29, −27 | 11 | Y |
| Cerebellum | | R | NA | 14, −80, −34 | 12, −73, −33 | 19 | Y |
| Other | Amygdala | L | NA | −24, −6, −20 | −23, −5, −15 | 227* | Y |
| | Lentiform nucleus | L | | −20, 12, −16 | −19, 11, −9 | 10 | Y |
| Encoding ∩ Retrieval (Positive Remember Hits > Positive Know Hits) | | | | | | | |
| Temporal | Fusiform gyrus | L | 37 | −48, −62, −10 | −45, −58, −11 | 68 | Y, k = 16 |
| Parietal | Inferior parietal lobule | L | 39 | −36, −66, 24 | −35, −65, 19 | 25 | Y |
| Cerebellum | | L | NA | −22, −40, −24 | −21, −37, −21 | 20 | Y |
| Encoding ∩ Retrieval (Neutral Remember Hits > Neutral Know Hits) | | | | | | | |
| Frontal | Inferior frontal gyrus | L | 47 | −46, 22, −20 | −41, 21, −13 | 28 | Y, k = 24 |
| | | L | 45 | −52, 26, 4 | −49, 22, 9 | 18 | N |
| | Medial frontal gyrus | L | 9 | −4, 48, 12 | −5, 42, 19 | 10 | Y |
| | Middle frontal gyrus | L | 46 | −46, 34, 16 | −44, 29, 21 | 36 | Y, k = 14 |
| | | L | 9 | −14, 54, 16 | −14, 47, 23 | 14 | Y |
| | | R | 46 | 54, 34, 14 | 49, 28, 20 | 33 | Y |
| Temporal | Inferior temporal gyrus | L | 37 | −46, −56, 0 | −44, −54, −2 | 15 | N |
| | Middle temporal gyrus | L | 21 | −60, −40, −4 | −57, −38, −4 | 89 | Y |
| | Fusiform gyrus | L | 37 | −42, −46, −12 | −40, −43, −11 | 10 | N |
| | | | | −38, −52, −6 | −36, −50, −6 | 13 | Y |

Note. Hem = hemisphere; L = left; R = Right; BA = Brodmann’s Area; MNI = Montreal Neurological Institute; TAL = Talairach; Excl = whether region is specific/exclusive to that valence; Y = yes; N= No; Y, k # = number of voxels that survived the exclusive masking.

* The peak of this cluster is in the amygdala (k extent = 55), and the cluster extends into the hippocampus and putamen.

Exclusive masking was then used to reveal the regions that showed encoding-to-retrieval overlap specific to each valence (e.g., regions specific to positive-context trials and not for the negative and neutral-context trials even when negative and neutral thresholds were reduced to p = .10). The last column of Table 2 indicates whether the region contributed uniquely to each valence and any differences in cluster size after exclusive masking. This column makes clear that the majority of regions revealed to be specific to negative-context trials survived this exclusive masking.
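Exclusive masking, as described above, can be sketched as a set difference: voxels in one valence's overlap map are retained only if they are absent from the other valences' maps, with those other maps thresholded more liberally (p = .10 in the text) so that the voxels that remain are conservatively "specific". The sketch below assumes binary overlap maps represented as sets of voxel coordinates; the function name and the toy coordinates are hypothetical, and the actual analysis was run in SPM8.

```python
Voxel = tuple[int, int, int]


def exclusive_mask(target: set[Voxel], others: list[set[Voxel]]) -> set[Voxel]:
    """Voxels in the target overlap map that appear in none of the other maps."""
    excluded: set[Voxel] = set().union(*others)
    return target - excluded


# Hypothetical overlap maps. The positive and neutral maps stand in for maps
# thresholded liberally, which makes the exclusion stricter.
negative = {(0, 0, 0), (1, 0, 0), (2, 0, 0)}
positive_liberal = {(1, 0, 0)}
neutral_liberal = {(2, 0, 0), (3, 0, 0)}

negative_specific = exclusive_mask(negative, [positive_liberal, neutral_liberal])
print(sorted(negative_specific), "k after masking =", len(negative_specific))
```

The count of surviving voxels corresponds to the "Y, k = ..." entries in the last column of Table 2, where a cluster shrinks but does not disappear after masking.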

3.2.2. Stronger recapitulation associated with successful memory for items studied in a negative context

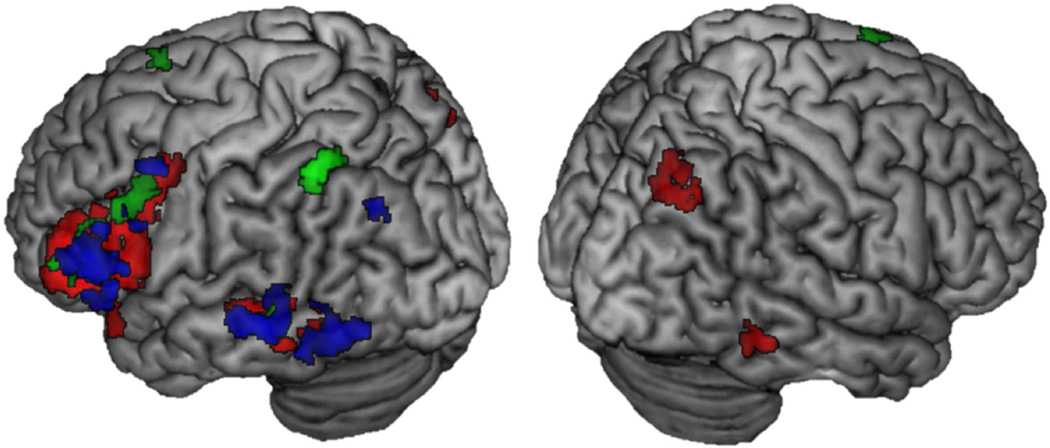

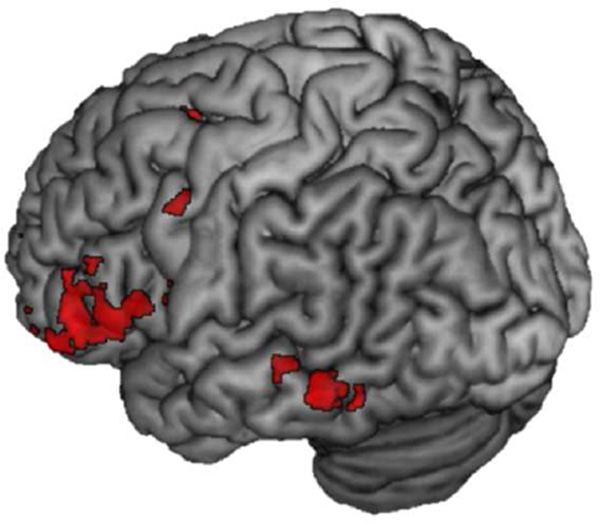

Conjunction analyses comparing remember hits to misses at each level of valence were analyzed next (e.g., Negative Remember Hits > Negative Misses at encoding ∩ Negative Remember Hits > Negative Misses at retrieval). For items studied in a negative context, this conjunction revealed extensive overlap throughout the brain, but of interest was activity in sensory regions, including left and right fusiform gyrus (BA 37; MNI: −50, −52, −14, k = 257; MNI: 44, −56, −14, k = 13), left middle temporal gyrus (BA 21; MNI: −56, −40, −4, k = 74) and left and right inferior temporal gyrus (BA 21/20; MNI: −52, −48, 0, k = 56; MNI: 56, −44, −12, k = 42), shown in red in Figure 4. For items studied in a positive context, there was less overlap, but in similar regions as for negative valence, including left fusiform (BA 37; MNI: −48, −64, −10, k = 131) and left middle temporal gyrus (BA 21; MNI: −60, −40, −4, k = 48), shown in blue in Figure 4. Items studied in a neutral context showed left-lateralized overlap in middle temporal gyrus (BA 21; MNI: −60, −40, −4, k = 31), shown in green in Figure 4. This pattern of greater reactivation in sensory cortices for negative valence is consistent with Kark and Kensinger (2015), as detailed in the introduction. The reverse analysis of Misses > Remember Hits did not reveal any overlap that survived thresholding for negative or positive valence, but there was significant recapitulation for Neutral Misses > Neutral Remember Hits. The overlap was mostly right lateralized and was distributed throughout the frontal, parietal and temporal lobes. Refer to supplementary material section 1.2 for the results of this analysis.

Figure 4.

Encoding-to-retrieval spatial overlap for Remember Hits > Misses at each level of valence. Red = Negative; Blue = Positive; Green = Neutral.

Exclusive masking was then used to reveal the regions that showed encoding-to-retrieval overlap specific to each valence. The last column of Table 3 indicates whether each region in the previous analysis contributed uniquely to a given valence, and reports any difference in cluster size after exclusively masking out the activity related to the other two valences.

Table 3.

Regions that showed encoding-to-retrieval overlap for remember activity greater than miss activity for each level of valence

| Lobe | Region | Hem | BA | MNI Coordinates | TAL Coordinates | k | Excl |
|---|---|---|---|---|---|---|---|
| Encoding ∩ Retrieval (Negative Remember Hits > Negative Misses) | | | | | | | |
| Frontal | Inferior frontal gyrus | L | 47 | −48, 32, −8 | −45, 29, −1 | 2334 | Y, k = 1936 |
| | | R | 11 | 22, 30, −14 | 20, 27, −5 | 25 | Y |
| | Medial frontal gyrus | L | 6 | −4, 6, 62 | −5, −2, 60 | 18 | Y |
| | | | | −4, 16, 54 | −5, 8, 54 | 84 | Y |
| | Middle frontal gyrus | L | 8 | −52, 12, 40 | −50, 6, 40 | 10 | N |
| | Superior frontal gyrus | L | 6 | 15, −12, 6 | −13, −2, 69 | 15 | N |
| Parietal | Inferior parietal lobule | L | 40 | −46, −40, 42 | −44, −42, 37 | 10 | Y |
| | | | 40 | −38, −48, 40 | −33, −50, 35 | 25 | Y |
| | | R | 40 | 38, −52, 42 | 34, −54, 38 | 15 | Y |
| | Superior parietal lobule | R | 19 | 32, −76, 44 | 28, −77, 37 | 159 | Y |
| Temporal | Fusiform gyrus | L | 37 | −50, −52, −14 | −47, −49, −14 | 257 | Y, k = 224 |
| | | R | 37 | 44, −56, −14 | 40, −53, −13 | 13 | Y |
| | Inferior temporal gyrus | L | 21 | −52, −48, 0 | −49, −46, −1 | 56 | Y, k = 52 |
| | | R | 20 | 56, −44, −12 | 51, −42, −10 | 42 | Y |
| | Middle temporal gyrus | L | 21 | −56, −40, −4 | −53, −38, −4 | 74 | Y, k = 58 |
| Encoding ∩ Retrieval (Positive Remember Hits > Positive Misses) | | | | | | | |
| Frontal | Inferior frontal gyrus | L | 45 | −44, 26, 4 | −42, 22, 9 | 78 | Y, k = 48 |
| | Orbital gyrus | R | 11 | 4, 52, −32 | 3, 49, −20 | 10 | N |
| | Precentral gyrus | L | 6 | −52, 8, 24 | −49, 4, 25 | 20 | Y, k = 10 |
| Parietal | Superior parietal lobule | L | 19 | −30, −70, 32 | −29, −70, 26 | 13 | Y, k = 27 |
| | | L | 19 | −30, −74, 44 | −29, −74, 36 | 18 | N |
| Temporal | Fusiform gyrus | L | 37 | −48, −64, −10 | −45, −60, −11 | 131 | Y, k = 315 |
| | Middle temporal gyrus | L | 21 | −60, −40, −4 | −57, −38, −4 | 48 | Y, k = 73 |
| | Superior temporal gyrus | L | 39 | 52, −54, 28 | −50, −54, 25 | 15 | N |
| Cerebellum | | L | NA | −34, −36, −26 | −32, −33, −23 | 10 | N |
| | | | | −24, −46, −30 | −23, −42, −27 | 23 | N |
| | | R | NA | 20, −36, −38 | 18, −32, −33 | 19 | N |
| Encoding ∩ Retrieval (Neutral Remember Hits > Neutral Misses) | | | | | | | |
| Frontal | Inferior frontal gyrus | L | 45 | −58, 24, 10 | −55, 20, 14 | 10 | N |
| | | L | 9 | −42, 20, 28 | −40, 15, 30 | 10 | Y |
| | | L | 45 | −42, 32, 6 | −40, 28, 12 | 58 | Y* |
| | | L | 9 | −44, 16, 28 | −42, 11, 30 | 265 | Y, k = 107 |
| Frontal | Superior frontal gyrus | L | 6 | −10, 16, 66 | −11, 7, 64 | 18 | Y |
| Parietal | Inferior parietal lobule | L | 40 | −46, −54, 50 | −44, −56, 43 | 63 | Y |
| Temporal | Middle temporal gyrus | L | 21 | −60, −40, −4 | −57, −38, −4 | 31 | N |

Note. Hem = hemisphere; L = left; R = Right; BA = Brodmann’s Area; MNI = Montreal Neurological Institute; TAL = Talairach; Excl = whether region is specific/exclusive to that valence; Y = yes; N = No; Y, k # = number of voxels that survived the exclusive masking.

* In the exclusive masking, the cluster was broken into two clusters: BA 10 (MNI: −48, 44, 0) and BA 46 (MNI: −44, 22, 22).

3.2.3. Ruling out that Negative Valence Findings are due to Arousal

The arousal ratings for the stimuli differed for negative and positive valence (see behavioral results section 3.1.1). To determine whether the more extensive reactivation for items associated with a negative context was due to the higher arousal of that context, we compared the encoding-to-retrieval spatial overlap for negative faces with that for positive scenes, because the arousal ratings for these two categories were not significantly different, t(16) = 1.11, p = .29. For each valence, we examined the encoding and retrieval activity that was greater for remember hits than for know/misses. We then used exclusive masking to examine the overlap that was specific to either the negative-face context or the positive-scene context. There was extensive reactivation that was specific to successful memory of negative faces compared to successful memory of positive scenes, including in left and right superior parietal lobule, left inferior frontal gyrus, left middle temporal gyrus and left precuneus (see Figure 6). No above-threshold overlap was specific to the positive scenes.

Figure 6.

Encoding-to-retrieval overlap that was exclusive to successful memory for negative faces compared to positive scenes, suggesting that recapitulation effects are more associated with valence than arousal. There was no above-threshold activity for the reverse analysis of positive scenes > negative faces.

3.2.4. Ruling out that Negative Valence Findings are due to Stimulus Properties

To determine whether negative, positive and neutral stimuli (collapsed across faces and scenes) were perceptually similar, we used the Saliency Toolbox for Matlab (http://www.saliencytoolbox.net; Walther & Koch, 2006). This toolbox provides a saliency statistic for each stimulus, which can then be used to examine whether differences in saliency exist across the categories of stimuli. We compared the average saliency for negative (M = .045, SD = .016), positive (M = .049, SD = .016), and neutral (M = .043, SD = .015) stimuli in a one-way ANOVA and found no main effect of valence, F(2, 100) = 1.64, p = .20. This suggests that the effects of valence reported in the analyses above cannot easily be attributed to bottom-up attentional differences driven by low-level visual properties of the overall valence category.
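The one-way ANOVA on saliency scores compares between-category variance to within-category variance. The sketch below shows that computation on toy numbers only. The per-stimulus saliency scores are not given in the text, so the input values are hypothetical, and the function name is illustrative.

```python
from statistics import fmean


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way between-groups ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    all_values = [x for g in groups for x in g]
    grand_mean = fmean(all_values)
    group_means = [fmean(g) for g in groups]

    # Variability of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variability of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)

    df_between, df_within = k - 1, n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Hypothetical scores for three stimulus categories (not the study's data).
toy_groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [3.0, 4.0, 5.0]]
f_value, df_b, df_w = one_way_anova_f(toy_groups)
print(f"F({df_b}, {df_w}) = {f_value:.2f}")
```

With three valence categories, df_between is 2; the reported df_within of 100 would then correspond to 103 stimuli in total, assuming a standard between-items design.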

As a further test of whether the more extensive reactivation for items associated with a negative context was due to stimulus properties, we compared the encoding-to-retrieval spatial overlap for negative faces with that for positive faces. The face stimuli were very similar to one another in content, compared to scene stimuli, which varied more widely; thus, comparing negative to positive faces allowed us to determine whether valence differences persist despite similar visual content. For each valence, we examined the encoding and retrieval activity that was greater for remember hits than for know/misses. We then used exclusive masking to examine the overlap that was specific to either the negative-face context or the positive-face context. All activation was left lateralized. There was extensive reactivation that was specific to successful memory of negative faces compared to successful memory of positive faces, including in left inferior, middle and superior frontal gyrus and, of interest to our hypotheses, in middle temporal gyrus and fusiform (see Figure 7). A cluster in the inferior frontal gyrus (BA 47; MNI: −30, 24, −22, k = 14) and one in the precentral gyrus that extends into middle frontal gyrus (BA 6; MNI: −58, 0, 44, k = 23) were specific to positive faces, but importantly no activation in the ventral visual stream was specific to positive faces.

Figure 7.

Encoding-to-retrieval overlap that was exclusive to successful memory for negative faces compared to positive faces. These comparisons indicate that effects of negative valence cannot easily be accounted for by differences in visual properties of the stimulus category. Negative and positive face stimuli used in the current study were perceptually very similar (re: NimStim Set of Facial Expressions; Tottenham et al., 2009).

3.3. Neuroimaging Results Approach 2

3.3.1. PPA and FFA recapitulation and region of interest analysis

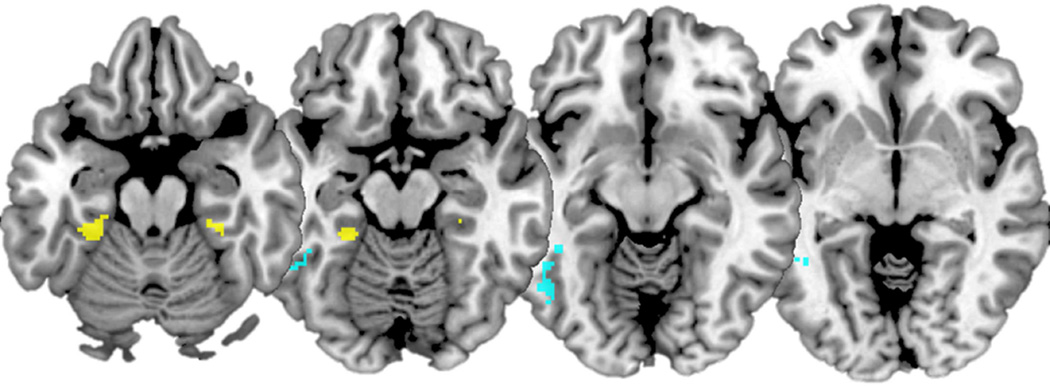

As a first step, we sought to replicate previous findings of encoding-to-retrieval overlap in content-specific regions, namely the FFA and PPA. Collapsing across valence, conjunction analyses comparing the encoding-to-retrieval overlap for face stimuli (i.e., Face Remember Hits > Face Know/Miss) showed recapitulation in left FFA (MNI: −50, −52, −16, k = 113). The same conjunction analysis with scenes (Scene Remember Hits > Scene Know/Miss) showed recapitulation in bilateral PPA (MNI: −28, −36, −20, k = 63; MNI: 30, −34, −18, k = 19). These clusters are shown in Figure 5 in cyan and yellow, respectively.

Figure 5.

Encoding-to-retrieval overlap for Face Remember Hit > Face Know/New showing reactivation in left FFA in cyan. Encoding-to-retrieval overlap for Scene Remember Hit > Scene Know/New showing reactivation in bilateral PPA in yellow. Slices at MNI Z = −20, −15, −10, −5.

To delve deeper, we then examined whether recapitulation differed as a function of stimulus content, valence and memory characteristics within content-specific ROIs. Reducing the search space to the PPA and FFA ROIs defined using the functional localizer task (see supplementary material section 1.0 for details on ROI definition), we found some evidence of content-specific source reactivation but it was not modulated by valence. Refer to supplementary material section 1.3 for the results of this analysis.

4. Discussion

Theories of memory have long included ideas regarding recapitulation (Morris et al., 1977; Tulving, 1983) and more recent experimental work has provided neural evidence for this process (Fenker et al., 2005; Johnson et al., 2015; Johnson & Rugg, 2007; Kahn, Davachi, & Wagner, 2004; Kark & Kensinger, 2015; Smith et al., 2004a, 2004b; Wheeler et al., 2006, 2000). The current experiment replicated and extended this prior work by answering a number of questions about valence-related recapitulation. We found that anterior sensory regions in the ventral visual stream showed more encoding-to-retrieval overlap for successfully remembered stimuli associated with negative valence, compared to positive or neutral, and that this recapitulation was stronger for memories accompanied by recollection compared to familiarity. ROI analyses querying activity within the FFA and PPA suggest that, although content-specific recapitulation occurs, it may not be modulated by valence. Together, these results indicate that although negative valence enhances the overlap in sensory processes engaged during encoding and retrieval, it may not do so in a way that preserves precise information about the content (e.g., face or scene) of studied information. We discuss these findings in more detail below.

In our first analysis, we examined the effect of valence (negative, positive, and neutral) on recapitulation processes, comparing recapitulation for trials recognized with a ‘remember’ versus a ‘know’ response. Overall, there was evidence of more recapitulation for stimuli that elicited a ‘remember’ response, and this was especially true for negative stimuli. Recapitulation in anterior sensory cortices was associated with successful recollection for negative compared to positive or neutral stimuli. Neutral word processing is typically associated with activity in the visual word form area (Cohen & Dehaene, 2004), but not with extensive activity throughout the ventral visual stream; thus, the extensive activation seen in this contrast strongly suggests that there is recapitulation of some aspects of the encoded visual context. These findings align with previous work which has shown that negative valence engages more posterior sensory regions during both encoding (Mickley & Kensinger, 2008) and retrieval (Markowitsch et al., 2003) and that this engagement of sensory regions may be what is leading to greater memory for detail for negative stimuli (Dewhurst & Parry, 2000; Ochsner, 2000; Phelps & Sharot, 2008). This result also provides support for our hypothesis that the likelihood that an emotionally negative experience comes to mind and the subjective quality of that memory will be influenced by the encoding-to-retrieval overlap.

Our results serve as a conceptual replication of the findings from Kark and Kensinger (2015; also see Smith et al., 2004b), who revealed that, compared to positive or neutral valence, negative stimuli were associated with more widespread recapitulation, including in sensory regions. In the current study, stimuli associated with negative valence engaged fusiform, inferior and middle temporal regions within the ventral visual processing stream, and we additionally found evidence of recapitulation in the amygdala for negative, but not positive or neutral, stimuli. The amygdala is consistently implicated in emotional processing and successful memory for emotional information (LaBar & Cabeza, 2006), and there is some evidence that the amygdala may be particularly important for negative memory retrieval, as its damage disproportionately disrupts memory for negative events (Buchanan, Tranel, & Adolphs, 2006). It is important to note that the effects of greater recapitulation associated with negative valence cannot be accounted for by arousal more generally, as the comparison of recapitulation associated with negative faces to positive scenes (which were given similar arousal ratings) showed that there was still a significantly greater amount of recapitulation in sensory processing regions for the items studied in the negative context. Additionally, greater recapitulation for negative valence is unlikely to be accounted for by differences in visual properties of the stimuli, as saliency of the stimuli did not differ across the valence categories and, further, recapitulation associated with negative faces compared to positive faces (which were very similar in visual content) showed a greater amount of overlap in sensory processing regions.

We were able to demonstrate these effects of emotional valence despite a number of methodological decisions that should have minimized our ability to detect such effects, suggesting that these findings are robust. First, unlike the pictorial retrieval cues used by Kark and Kensinger, which may have still contained some emotional content, the neutral verbal stimuli used as retrieval cues in the present study provided no information about the previous encoding context, thus creating a stronger test of recapitulation. Second, participants were not required to remember anything about the encoding context when making the memory judgment, and in fact they showed very little knowledge of the specific source details regarding whether the target word had been paired with a face or scene. Yet despite their poor explicit knowledge of the contextual source, our results indicate that there was residual influence of the study context that was greater for items previously encoded within a negative context. Recapitulation of emotion can still influence memory processes even though there is nothing about the task—at encoding or retrieval—that is requiring this association.

This residual influence of study context was also revealed in our analyses of encoding-to-retrieval overlap in FFA for successful memory of stimuli previously paired with a face, and in PPA for successful memory of stimuli previously paired with a scene. However, contrary to our hypotheses, recapitulation within the FFA and PPA did not vary as a function of valence. In other words, face and scene content-specific regions did not reactivate more based on the valence of the previously encoded source. Together, these results reveal that although negative valence enhances the overlap of many neural processes engaged at encoding and at retrieval, it does not always do so specifically within regions specialized for category-based visual processing.

The results of the two analyses have led us to propose that negative valence leads to greater recapitulation of sensory regions and that this recapitulation is associated with a subjective memory enhancement (such as recollection of visual sensory details) but not necessarily with memory for specific source details (e.g., the face and scene) from the encoding context. This may explain why previous studies have reported that negative memories tend to be associated with subjective feelings of re-experience and vividness (Kensinger, 2009; Phelps & Sharot, 2008) and how these feelings of re-experience may also be accompanied by incorrect details about the memory (Neisser & Harsch, 1992). When an event is negative, it seems that there is not a greater likelihood that the category of stimulus previously associated with that retrieval cue is reactivated, as valence effects were not revealed in category-specific regions, and face/scene source memory test results were poor. Yet the results do clearly show evidence of enhanced sensory overlap during the encoding and retrieval of stimuli associated with negative, compared to positive or neutral, valence. When a person encounters something threatening or survival relevant in the visual domain at the time of encoding, there is reactivation of sensory cortices associated with that modality, but not necessarily the cortices of the precise content. In other words, negative visual events lead to greater activation of the ventral visual stream at encoding and greater re-activation of the ventral visual stream at retrieval; this re-activation is not specific to content-specific cortices, but rather may be flexible and reflect generalization across a modality.
Indeed, there is recent evidence that a negative event, in the form of a small shock paired with an item (e.g., a hammer), can cause a person to generalize that negative experience to a large category group (e.g., tools), even to category members that were not paired with a shock (e.g., a screwdriver; Dunsmoor, Murty, Davachi, & Phelps, 2015). From an evolutionary perspective, it may not be as beneficial to remember exactly which type of insect stung you as to be able to generalize that knowledge to animals with broadly similar features. Then the next time you are in a heavily wooded area (neutral retrieval cue), memory for those sensory details will help you avoid being stung again.

4.3. Limitations and Future Directions

There are a few limitations to the current design of the study that provide some direction for future research. First, as a way to ensure that participants were viewing stimuli they deemed emotional, participants rated all stimuli, and an individualized set of experimental stimuli was selected based on their subjective ratings; however, it was not possible to equate arousal ratings within category (faces or scenes) for positive and negative stimuli. We described an analysis that indicates the results are better explained by valence than by arousal, but future experiments could more directly test valence versus arousal effects. Second, we suggest that greater recapitulation for negative stimuli leads to a subjective memory enhancement; however, this relationship is currently untested, and in the present study such an enhancement was not present behaviorally. This leaves open many questions as to the relation between recapitulation and recollection for negative information. Techniques with better temporal resolution can provide information about the spatial-temporal unfolding of valence-related recapitulation and the phenomenological characteristics of emotional memory, providing insight into whether the recapitulation processes precede successful recollection or reflect the recovery of recollective detail. Finally, our sample (N = 19) was small; thus statistical power is low, and the null effects we report should be interpreted with caution.

4.4. Conclusions and Implications

Recapitulation of emotional contextual source information occurs in response to neutral cues: Stimuli previously encoded in a negative context recapitulated anterior sensory regions to a greater extent than information studied in a positive or neutral context, and this recapitulation was stronger for items that were recollected rather than deemed familiar. The likelihood that an emotional experience comes to mind appears to be influenced by the overlap of processes engaged at encoding and retrieval, and this recapitulation may be particularly linked to ecphoric processes for negative items. Despite replicating previous findings of encoding-to-retrieval overlap in FFA and PPA for successful memory of faces and scenes, respectively, we did not find evidence that valence modulated the reactivation within these specific regions. Rather, negative valence may promote generalization to the sensory modality (visual, in this paradigm) rather than to content-specific cortices. These results shed light on how a neutral trigger can reactivate sensory details from a previously experienced negative event. The fact that negative memories show extensive recapitulation – but not specifically within category-specific regions – may also help us understand why many individuals experience a memory bias toward negative experiences but can also have difficulty remembering the contextual features of those experiences.

Acknowledgments

The authors wish to thank Sarah Scott and Haley DiBiase for help with data collection as well as Tammy Moran and Dr. Ross Mair from the Harvard Center for Brain Science.

Funding Sources

This work was supported by a National Institutes of Health grant awarded to EAK [R01MH080833].

Footnotes

Due to experimenter error, one subject was shown 5 negative faces and 3 negative scenes.

Two participants had only 3 columns of linear drift. One participant completed only 3 retrieval runs, and the other had a retrieval run removed due to excessive head motion.

Comparisons of brain activation for negative faces and positive scenes are presented below to assess valence versus arousal explanations of the data.

References

- Baumeister RF, Bratslavsky E, Finkenauer C, Vohs KD. Bad is stronger than good. Review of General Psychology. 2001;5(4):323–370. http://doi.org/10.1037/1089-2680.5.4.323.

- Beck AT, Steer RA, Carbin MG. Psychometric properties of the Beck Depression Inventory: Twenty-five years of evaluation. Clinical Psychology Review. 1988;8(1):77–100. http://doi.org/10.1016/0272-7358(88)90050-5.

- Bowen HJ, Kark SM. Commentary: Episodic Memory Retrieval Functionally Relies on Very Rapid Reactivation of Sensory Information. Frontiers in Human Neuroscience. 2016;10. http://doi.org/10.3389/fnhum.2016.00196.

- Buchanan TW, Tranel D, Adolphs R. Memories for emotional autobiographical events following unilateral damage to medial temporal lobe. Brain. 2006;129(Pt 1):115–127. http://doi.org/10.1093/brain/awh672.

- Buckner RL, Wheeler ME. The cognitive neuroscience of remembering. Nature Reviews Neuroscience. 2001;2(9):624–634. http://doi.org/10.1038/35090048.

- Cohen L, Dehaene S. Specialization within the ventral stream: the case for the visual word form area. NeuroImage. 2004;22(1):466–476. http://doi.org/10.1016/j.neuroimage.2003.12.049.

- Daselaar SM, Rice HJ, Greenberg DL, Cabeza R, LaBar KS, Rubin DC. The spatiotemporal dynamics of autobiographical memory: Neural correlates of recall, emotional intensity, and reliving. Cerebral Cortex. 2008;18(1):217–229. http://doi.org/10.1093/cercor/bhm048.

- Dewhurst SA, Parry LA. Emotionality, distinctiveness, and recollective experience. European Journal of Cognitive Psychology. 2000;12(4):541–551. http://doi.org/10.1080/095414400750050222.

- Dunsmoor JE, Murty VP, Davachi L, Phelps EA. Emotional learning selectively and retroactively strengthens memories for related events. Nature. 2015;520(7547):345–348. http://doi.org/10.1038/nature14106.

- Fenker DB, Schott BH, Richardson-Klavehn A, Heinze HJ, Düzel E. Recapitulating emotional context: Activity of amygdala, hippocampus and fusiform cortex during recollection and familiarity. European Journal of Neuroscience. 2005;21(7):1993–1999. http://doi.org/10.1111/j.1460-9568.2005.04033.x.

- Fisher RA. Statistical Methods for Research Workers. 14th ed. New York: Hafner Press; 1973.

- Ford JH, Morris JA, Kensinger EA. Effects of Emotion and Emotional Valence on the Neural Correlates of Episodic Memory Search and Elaboration. Journal of Cognitive Neuroscience. 2014;26(4):825–839. http://doi.org/10.1162/jocn_a_00529.

- Gottfried JA, Smith APR, Rugg MD, Dolan RJ. Remembrance of odors past: Human olfactory cortex in cross-modal recognition memory. Neuron. 2004;42(4):687–695. http://doi.org/10.1016/S0896-6273(04)00270-3.

- Hofstetter C, Achaibou A, Vuilleumier P. Reactivation of visual cortex during memory retrieval: Content specificity and emotional modulation. NeuroImage. 2012;60(3):1734–1745. http://doi.org/10.1016/j.neuroimage.2012.01.110.

- Ishai A. Seeing faces and objects with the “mind’s eye.” Archives Italiennes de Biologie. 2010;148(1):1–9. http://doi.org/10.3410/b2-34.

- Jaeger A, Johnson JD, Corona M, Rugg MD. ERP correlates of the incidental retrieval of emotional information: Effects of study-test delay. Brain Research. 2009;1269:105–113. http://doi.org/10.1016/j.brainres.2009.02.082.

- Johnson JD, Minton BR, Rugg MD. Content dependence of the electrophysiological correlates of recollection. NeuroImage. 2008;39(1):406–416. http://doi.org/10.1016/j.neuroimage.2007.08.050.

- Johnson JD, Price MH, Leiker EK. Episodic retrieval involves early and sustained effects of reactivating information from encoding. NeuroImage. 2015;106:300–310. http://doi.org/10.1016/j.neuroimage.2014.11.013.

- Johnson JD, Rugg MD. Recollection and the reinstatement of encoding-related cortical activity. Cerebral Cortex. 2007;17(11):2507–2515. http://doi.org/10.1093/cercor/bhl156.

- Julian JB, Fedorenko E, Webster J, Kanwisher N. An algorithmic method for functionally defining regions of interest in the ventral visual pathway. NeuroImage. 2012;60(4):2357–2364. http://doi.org/10.1016/j.neuroimage.2012.02.055.

- Kahn I, Davachi L, Wagner AD. Functional-neuroanatomic correlates of recollection: implications for models of recognition memory. The Journal of Neuroscience. 2004;24(17):4172–4180. http://doi.org/10.1523/JNEUROSCI.0624-04.2004.

- Kanwisher N, Yovel G. The fusiform face area: a cortical region specialized for the perception of faces. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2006;361(1476):2109–2128. http://doi.org/10.1098/rstb.2006.1934.

- Kark SM, Kensinger EA. Effect of emotional valence on retrieval-related recapitulation of encoding activity in the ventral visual stream. Neuropsychologia. 2015;78:221–230. http://doi.org/10.1016/j.neuropsychologia.2015.10.014.

- Kensinger EA. Remembering the Details: Effects of Emotion. Emotion Review. 2009;1(2):99–113. http://doi.org/10.1177/1754073908100432.

- LaBar KS, Cabeza R. Cognitive neuroscience of emotional memory. Nature Reviews Neuroscience. 2006;7(1):54–64. http://doi.org/10.1038/nrn1825.

- Lancaster JL, Rainey LH, Summerlin JL, Freitas CS, Fox PT, Evans AC, Mazziotta JC. Automated labeling of the human brain: A preliminary report on the development and evaluation of a forward-transform method. Human Brain Mapping. 1997;5(4):238–242. http://doi.org/10.1002/(SICI)1097-0193(1997)5:4<238::AID-HBM6>3.0.CO;2-4.

- Lancaster JL, Woldorff MG, Parsons LM, Liotti M, Freitas CS, Rainey L, Fox PT. Automated Talairach Atlas labels for functional brain mapping. Human Brain Mapping. 2000;10(3):120–131. http://doi.org/10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8.

- Lang PJ, Bradley MM, Cuthbert BN. International affective picture system (IAPS): Affective ratings of pictures and instruction manual. Technical Report A-8. Gainesville, FL: University of Florida; 2008.

- Lieberman MD, Cunningham WA. Type I and Type II error concerns in fMRI research: Re-balancing the scale. Social Cognitive and Affective Neuroscience. 2009;4(4):423–428. http://doi.org/10.1093/scan/nsp052.

- Maratos EJ, Allan K, Rugg MD. Recognition memory for emotionally negative and neutral words: An ERP study. Neuropsychologia. 2000;38(11):1452–1465. http://doi.org/10.1016/S0028-3932(00)00061-0.

- Markowitsch HJ, Vandekerckhove MMP, Lanfermann H, Russ MO. Engagement of lateral and medial prefrontal areas in the ecphory of sad and happy autobiographical memories. Cortex. 2003;39. doi: 10.1016/s0010-9452(08)70858-x. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/14584547.

- Mather M, Sutherland MR. Arousal-Biased Competition in Perception and Memory. Perspectives on Psychological Science. 2011;6(2):114–133. http://doi.org/10.1177/1745691611400234.

- McGaugh JL. Memory--a century of consolidation. Science. 2000;287(5451):248–251. http://doi.org/10.1126/science.287.5451.248.

- McGaugh JL. The amygdala modulates the consolidation of memories of emotionally arousing experiences. Annual Review of Neuroscience. 2004;27:1–28. http://doi.org/10.1146/annurev.neuro.27.070203.144157.

- Mickley KR, Kensinger EA. Emotional valence influences the neural correlates associated with remembering and knowing. Cognitive, Affective & Behavioral Neuroscience. 2008;8(2):143–152. http://doi.org/10.3758/CABN.8.2.143.

- Moeller S, Yacoub E, Olman CA, Auerbach E, Strupp J, Harel N, Uğurbil K. Multiband multislice GE-EPI at 7 tesla, with 16-fold acceleration using partial parallel imaging with application to high spatial and temporal whole-brain fMRI. Magnetic Resonance in Medicine. 2010;63(5):1144–1153. http://doi.org/10.1002/mrm.22361.

- Morris CD, Bransford JD, Franks JJ. Levels of processing versus transfer appropriate processing. Journal of Verbal Learning and Verbal Behavior. 1977;16(5):519–533. http://doi.org/10.1016/S0022-5371(77)80016-9.

- Moscovitch M, Rosenbaum RS, Gilboa A, Addis DR, Westmacott R, Grady C, Nadel L. Functional neuroanatomy of remote episodic, semantic and spatial memory: A unified account based on multiple trace theory. Journal of Anatomy. 2005;207(1):35–66. http://doi.org/10.1111/j.1469-7580.2005.00421.x.

- Neisser U, Harsch N. Phantom flashbulbs: False recollections of hearing the news about Challenger. In: Winograd E, Neisser U, editors. Affect and accuracy in recall. Cambridge: Cambridge University Press; 1992. pp. 9–31. http://doi.org/10.1017/CBO9780511664069.003.

- Nyberg L, Persson J, Habib R, Tulving E, McIntosh AR, Cabeza R, Houle S. Large scale neurocognitive networks underlying episodic memory. Journal of Cognitive Neuroscience. 2000;12(1):163–173. http://doi.org/10.1162/089892900561805.

- Ochsner KN. Are affective events richly recollected or simply familiar? The experience and process of recognizing feelings past. Journal of Experimental Psychology: General. 2000;129(2):242–261. http://doi.org/10.1037/0096-3445.129.2.242.