Abstract.

We present an imaging-based method for noncontact spirometry. The method tracks the subtle respiration-induced shoulder movement of a subject, builds a calibration curve, and determines the flow-volume spirometry curve and vital respiratory parameters, including forced expiratory volume in the first second, forced vital capacity, and peak expiratory flow rate. We validate the accuracy of the method by comparing the data with those simultaneously recorded with a gold standard reference method and examine the reliability of the noncontact spirometry with a pilot study including 16 subjects. This work demonstrates that the noncontact method can provide accurate and reliable spirometry tests with a webcam. Unlike traditional spirometry, the present noncontact spirometry does not require using a spirometer, breathing into a mouthpiece, or wearing a nose clip, thus making spirometry tests more accessible to the growing population of patients with asthma and chronic obstructive pulmonary disease.

Keywords: spirometry, mobile health, remote sensing, video analysis

1. Introduction

Asthma and chronic obstructive pulmonary disease (COPD) are the most prevalent obstructive airway diseases, affecting tens of millions of people in the United States alone.1 The most common way to diagnose these diseases and reassess their progression is spirometry, which measures how much a patient inhales and how fast he/she exhales. In a spirometry test, the patient is instructed to exhale rapidly and forcefully into a mouthpiece connected to a spirometer that measures breath flow rate and volume. To ensure that all the air is exhaled into the spirometer for accurate flow measurement, the patient is also instructed to wear a nose clip, which leads to complaints of discomfort. For good hygiene, a disposable mouthpiece is used for each spirometry test. The need for a spirometer, nose clip, and mouthpiece hinders widespread use of spirometry at home. To overcome this barrier, we describe here an imaging-based noncontact spirometry method using a webcam.

There are increasing efforts in developing noncontact respiratory monitoring methods. Depending on the monitoring principles, these methods can be divided into three categories: thermal, photoplethysmography (PPG), and body movement detections. The first category measures the air temperature change associated with exhaled breath near the mouth and nose regions of a subject using an infrared imaging system.2,3 The temperature change can also be detected via the pyroelectric effect.4

The second category extracts the respiratory signal embedded in the PPG signals. PPG measures the change of light absorption or reflection induced by the change of blood volume with each pulse. The movement of the thoracic cavity affects the blood flow during breathing, which leads to a modulation in the PPG signal by the respiratory activity.5 Several PPG signal processing methods, including independent component analysis,6 principal component analysis,5 digital filtering,7 and variable frequency complex demodulation8 have been proposed to remove noise in PPG and extract respiration-induced modulation in PPG.9

The third category detects the subtle chest movement induced by breathing with different technologies, such as frequency-modulated radar waves10,11 and ultrawideband impulse radio radar.12 Several optical imaging-based methods have also been introduced to monitor respiratory activities. For example, our group13 developed a differential method to track the shoulder movement associated with breathing. Reyes et al.14 measured the intensity change of the chest wall. Lin et al. applied an optical flow algorithm to detect respiratory activities.15 These methods used low-cost cameras. More sophisticated three-dimensional imaging methods with multicamera and projector-camera setups have been used to track chest surface deformation during breathing.16,17

The works summarized above demonstrated noncontact monitoring of respiratory activities, but to date noncontact spirometry has not been reported. To achieve this capability, one must be able to accurately and quickly measure respiratory cycles over an extremely wide flow rate range and validate the results with real subjects. In this paper, we describe an imaging-based method to perform spirometry, which can track the flow-volume (spirometry) curve and extract important parameters, including forced expiratory volume in the first second (FEV1), forced vital capacity (FVC), and peak expiratory flow rate (PEF). These parameters are critical for the diagnosis and management of asthma and COPD. We compare and calibrate the imaging-based noncontact spirometry with the simultaneously performed traditional spirometry and validate the results with a pilot study including 16 subjects.

2. Method

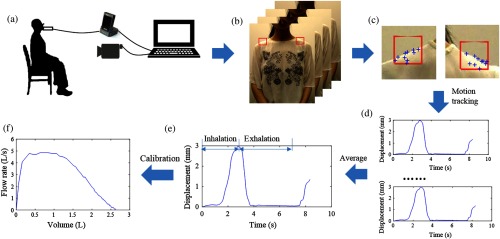

The imaging-based spirometry first captures the image of a subject’s upper body, including face and shoulders, during inhalation and exhalation with a webcam (Fig. 1). Then, it analyzes the shoulder movement and determines the spirometry exhalation rate versus exhalation volume curve, FEV1, FVC, and PEF. To validate and calibrate the method, this work includes a simultaneous spirometry test of each subject with a traditional spirometer (gold standard reference) [Fig. 1(a)]. The data from the imaging-based and traditional spirometry tests are compared and used to construct a calibration curve for the subject. From the calibration curve, the exhalation volume is determined from the shoulder movement, and the corresponding exhalation rate is obtained from the time derivative of the exhalation volume.
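As a minimal sketch of how the three parameters relate to an exhaled-volume time series, the following Python snippet extracts FEV1, FVC, and PEF from one forced exhalation. The function name `spirometry_parameters` is hypothetical, and the sketch assumes that the first sample marks the onset of exhalation and that the flow is a simple finite difference; standardized spirometry determines time zero by back extrapolation, which is not reproduced here.

```python
def spirometry_parameters(volume_l, fs_hz):
    """Return (FEV1, FVC, PEF) for one forced exhalation.

    volume_l: cumulative exhaled volume in liters, first sample = onset (assumption)
    fs_hz:    sampling rate in Hz, e.g. 30 for a 30 fps camera
    """
    if len(volume_l) < 2:
        raise ValueError("need at least two volume samples")
    dt = 1.0 / fs_hz
    v0 = volume_l[0]
    vol = [v - v0 for v in volume_l]          # volume relative to the onset

    fvc = max(vol)                            # total volume exhaled
    idx_1s = int(round(fs_hz * 1.0))          # sample index at t = 1 s
    fev1 = vol[min(idx_1s, len(vol) - 1)]     # volume exhaled in the first second

    # Flow is the time derivative of volume (forward difference).
    flow = [(vol[i + 1] - vol[i]) / dt for i in range(len(vol) - 1)]
    pef = max(flow)                           # peak expiratory flow
    return fev1, fvc, pef


# Illustrative data sampled at 4 Hz (not measured values).
example_volume = [0.0, 0.5, 1.0, 1.4, 1.7, 1.9, 2.0, 2.0]
fev1, fvc, pef = spirometry_parameters(example_volume, 4)
```

At 30 fps the finite-difference flow is sensitive to tracking noise, so some smoothing of the volume signal before differentiation would likely be needed in practice.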

Fig. 1.

Principle of noncontact spirometry. (a) Experiment setup. (b) Video recording. (c) and (d) Video analysis. (e) Signal analysis. (f) Flow-volume (spirometry) curve.

2.1. Data Acquisition

A web camera (Logitech C905) was used to capture the video of a subject’s upper body under a typical indoor ambient light condition. The subject was instructed to sit on a chair with a backrest at a distance of 90 cm from the camera and to perform a forced spirometry test using a gold standard commercial spirometer (MicroLoop, Carefusion), during which both the video and spirometry data were recorded synchronously with a laptop computer [Fig. 1(a)]. The frame rate of the camera was set at 30 frames per second (fps), and the spatial resolution of frame was . The commercial spirometer complies with ATS/ERS 2005 standards, and its sampling rate is .

Sixteen subjects were enrolled in an Institutional Review Board (IRB) study approved by Arizona State University (No. STUDY00004548). The subjects included different genders (nine males, seven females), ages ( years old, ), body mass index (, ), and heights (, ).

Following the standard forced spirometry test procedure, the subject was asked to wear a nose clip, inhale as deeply as possible, and then exhale into the mouthpiece attached to the spirometer as hard as possible and for as long as possible. In each test, the subject performed six forced breathing cycles continuously, of which three were used to build calibration curves and the other three were used for validation.

2.2. Video Processing

Two shoulder regions consisting of each were selected for detecting respiration-related movement. The regions included the middle portions of the shoulders with clear boundaries that separated the body and background. The upper body movement of the subject was tracked with the Kanade–Lucas–Tomasi (KLT) tracker in the defined region of interest (ROI)18–20 during the spirometry test. A Harris corner detector was used to detect feature points within the ROI of the shoulders.19 The detector computes the spatial variation ($E$) of image intensity in all directions, with

$$E(u,v) = \begin{bmatrix} u & v \end{bmatrix} \begin{bmatrix} \langle I_x^2 \rangle & \langle I_x I_y \rangle \\ \langle I_x I_y \rangle & \langle I_y^2 \rangle \end{bmatrix} \begin{bmatrix} u \\ v \end{bmatrix}, \tag{1}$$

where $I_x$, $I_y$ are the gradients of the image intensity of the feature point in the $x$- and $y$-directions, $u$, $v$ are the numbers of pixels shifted from each point in the image in the $x$- and $y$-directions, and the angle brackets denote averaging (over $x$, $y$). The matrix in Eq. (1) is the Harris matrix. Points for which both eigenvalues of the Harris matrix are large were defined as feature points.
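The eigenvalue criterion can be illustrated with a short, dependency-free Python sketch. It assumes central-difference gradients and a small square averaging window; the function names `harris_matrix` and `eigenvalues_2x2` and the toy images are hypothetical and are not taken from the implementation used in this work.

```python
import math


def harris_matrix(img, r0, c0, half=1):
    """Average gradient products over a (2*half+1)^2 window centered at (r0, c0).

    img is a list of rows of gray levels; returns (<Ix^2>, <Ix*Iy>, <Iy^2>).
    """
    sxx = sxy = syy = 0.0
    count = 0
    for r in range(r0 - half, r0 + half + 1):
        for c in range(c0 - half, c0 + half + 1):
            ix = (img[r][c + 1] - img[r][c - 1]) / 2.0  # gradient along x (columns)
            iy = (img[r + 1][c] - img[r - 1][c]) / 2.0  # gradient along y (rows)
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            count += 1
    return sxx / count, sxy / count, syy / count


def eigenvalues_2x2(a, b, d):
    """Eigenvalues of the symmetric matrix [[a, b], [b, d]], smallest first."""
    mean = (a + d) / 2.0
    root = math.sqrt(((a - d) / 2.0) ** 2 + b * b)
    return mean - root, mean + root


# Toy 5x5 images: a corner (bright lower-right quadrant) and a vertical edge.
corner = [[1.0 if (r >= 2 and c >= 2) else 0.0 for c in range(5)] for r in range(5)]
edge = [[1.0 if c >= 2 else 0.0 for c in range(5)] for r in range(5)]

corner_eigs = eigenvalues_2x2(*harris_matrix(corner, 2, 2))
edge_eigs = eigenvalues_2x2(*harris_matrix(edge, 2, 2))
# Both eigenvalues are clearly positive at the corner, whereas the smaller
# eigenvalue vanishes on the straight edge, so only the corner qualifies.
```

A point on a straight shoulder boundary behaves like the edge case, which is why feature points tend to sit on textured or curved parts of the ROI.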

To track the feature points frame by frame, an affine transformation was used to register the ROI between adjacent frames. In general, an affine transformation is composed of rotation, translation, scaling, and skewing. Considering two patches of an image in adjacent frames, $I$ and $J$, an affine map acting on a point $\mathbf{x}$ of the patch is represented as

$$\mathbf{x} \mapsto A\mathbf{x} + \mathbf{d}, \tag{2}$$

where $A$ is the deformation matrix and $\mathbf{d}$ is the translation vector. The transformation parameters can be determined in closed form by minimizing the dissimilarity measure $\epsilon$. An example of the dissimilarity measure is the sum of squared differences, given by

$$\epsilon = \iint_W \left[ J(A\mathbf{x} + \mathbf{d}) - I(\mathbf{x}) \right]^2 w(\mathbf{x})\, d\mathbf{x}, \tag{3}$$

where $W$ is the patch window and $w(\mathbf{x})$ is a weighting function.
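To make the dissimilarity measure concrete, the sketch below evaluates a sum of squared differences for an affine map with unit weights, and then finds the best integer translation by exhaustive search. This brute-force search is only an illustration; the KLT tracker itself solves for the parameters iteratively from image gradients. The names `sample`, `ssd`, and `best_translation`, and the symbols `A` (deformation matrix) and `d` (translation vector), are assumptions made for this example.

```python
import math


def sample(img, x, y):
    """Bilinear interpolation of img (list of rows) at real coordinates (x, y)."""
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    x1 = min(x0 + 1, len(img[0]) - 1)
    y1 = min(y0 + 1, len(img) - 1)
    top = (1 - fx) * img[y0][x0] + fx * img[y0][x1]
    bottom = (1 - fx) * img[y1][x0] + fx * img[y1][x1]
    return (1 - fy) * top + fy * bottom


def ssd(img_i, img_j, window, A, d):
    """Sum over window points x of [J(Ax + d) - I(x)]^2 with unit weights."""
    total = 0.0
    for (x, y) in window:
        xw = A[0][0] * x + A[0][1] * y + d[0]
        yw = A[1][0] * x + A[1][1] * y + d[1]
        diff = sample(img_j, xw, yw) - img_i[y][x]
        total += diff * diff
    return total


def best_translation(img_i, img_j, window, max_shift=2):
    """Integer translation (dx, dy) minimizing the SSD, with A fixed to identity."""
    identity = [[1.0, 0.0], [0.0, 1.0]]
    candidates = [(dx, dy)
                  for dy in range(-max_shift, max_shift + 1)
                  for dx in range(-max_shift, max_shift + 1)]
    return min(candidates, key=lambda d: ssd(img_i, img_j, window, identity, d))


# Synthetic frames: frame J is frame I shifted by 1 pixel in x and 2 pixels in y.
def intensity(x, y):
    return float(x * x + 3 * y + x * y)

frame_i = [[intensity(x, y) for x in range(10)] for y in range(10)]
frame_j = [[intensity(x - 1, y - 2) for x in range(10)] for y in range(10)]
patch = [(x, y) for y in range(3, 7) for x in range(3, 7)]
shift = best_translation(frame_i, frame_j, patch)
```

For respiration tracking, the vertical component of the recovered motion is the quantity of interest.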

2.3. Signal Analysis

To calibrate the shoulder movement, we counted the number of pixels of a certain feature in the image frame and related it to the actual physical length of the feature. The conversion factor is defined as

| (4) |

In the ’th frame, the vertical component of the feature points, representing the shoulder position pos, due to respiration, is given by

| (5) |

where is the vertical component of the ’th point in the ’th frame, is the total frame number of the video.
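One plausible reading of the conversion factor and of the frame-wise shoulder position is sketched below. It assumes that the conversion factor is the physical length per pixel and that the shoulder position in a frame is the mean vertical coordinate of the tracked feature points scaled by that factor; both are assumptions for illustration, as are the function names.

```python
def conversion_factor(length_cm, length_px):
    """Physical length represented by one pixel (cm per pixel), by assumption."""
    return length_cm / length_px


def shoulder_position(points_y_px, cm_per_pixel):
    """Shoulder position per frame, in cm.

    points_y_px[k][i] is the vertical pixel coordinate of feature point i
    in frame k; the position is the mean over the points of that frame.
    """
    return [cm_per_pixel * sum(frame) / len(frame) for frame in points_y_px]


def displacement(positions):
    """Change in position relative to the first frame.

    Image rows grow downward, so a rising shoulder gives a negative value.
    """
    p0 = positions[0]
    return [p - p0 for p in positions]


# Illustrative numbers only: a 10 cm feature spanning 50 pixels,
# and two tracked points observed in two frames.
alpha = conversion_factor(10.0, 50)
pos = shoulder_position([[100.0, 102.0], [95.0, 97.0]], alpha)
disp = displacement(pos)
```

Averaging over many feature points reduces the influence of a single poorly tracked point on the displacement signal.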

To correlate the shoulder displacement (change in position) with the spirometer reading, a calibration curve was built for each subject, which converted the shoulder displacement to breathing volume in forced spirometry (Fig. 2). The exhaled volume data from three randomly selected forced breathing cycles of each subject were fitted with a fifth-order polynomial. Note that the sampling rate of the spirometer () was higher than that of the web camera (30 Hz). To match the spirometer reading and camera output, we downsampled the volume data from the spirometer for direct comparison with the measurements of our optical system. The flow rate was determined from the time derivative of the breathing volume.
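The calibration step can be sketched in plain Python as a least-squares polynomial fit of volume against displacement, preceded by a simple downsampling of the spirometer signal. This is a minimal illustration under stated assumptions: the fit solves the normal equations directly, which is numerically fragile for high degrees (a library routine such as `numpy.polyfit` would be preferable in practice), the downsampling picks the nearest sample without anti-alias filtering, and the 90 Hz rate in the example is hypothetical. All function names are illustrative.

```python
def polyfit(xs, ys, degree):
    """Least-squares coefficients c[0] + c[1]*x + ... + c[degree]*x**degree."""
    n = degree + 1
    # Normal equations (V^T V) c = V^T y for the Vandermonde matrix V.
    ata = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(ata[r][col]))
        ata[col], ata[pivot] = ata[pivot], ata[col]
        aty[col], aty[pivot] = aty[pivot], aty[col]
        for r in range(col + 1, n):
            factor = ata[r][col] / ata[col][col]
            for c in range(col, n):
                ata[r][c] -= factor * ata[col][c]
            aty[r] -= factor * aty[col]
    # Back substitution.
    coeffs = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(ata[r][c] * coeffs[c] for c in range(r + 1, n))
        coeffs[r] = (aty[r] - tail) / ata[r][r]
    return coeffs


def polyval(coeffs, x):
    """Evaluate the polynomial at x using Horner's scheme."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result


def downsample(samples, fs_in, fs_out):
    """Pick the input sample nearest to each output time instant."""
    n_out = int((len(samples) - 1) * fs_out / fs_in) + 1
    last = len(samples) - 1
    return [samples[min(last, round(k * fs_in / fs_out))] for k in range(n_out)]


# Illustrative calibration: synthetic displacement (cm) versus volume (L).
true_coeffs = [0.0, 0.5, 0.3, 0.0, 0.0, -0.05]
disp_cm = [i / 20 for i in range(21)]
vol_l = [polyval(true_coeffs, d) for d in disp_cm]
calib = polyfit(disp_cm, vol_l, 5)
volume_at_half_cm = polyval(calib, 0.5)

# Hypothetical 90 Hz spirometer signal reduced to the 30 Hz camera rate.
reduced = downsample(list(range(10)), 90, 30)
```

Once the calibration coefficients are known, each video frame's displacement maps to a volume through `polyval`, and the flow follows from differencing successive volumes.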

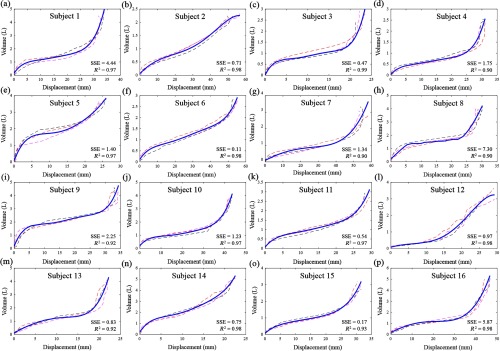

Fig. 2.

Calibration curves (a) to (p) for different subjects. The dotted lines are obtained from three forced breathing cycles, and the blue lines are fifth-order polynomial fitting curves. The sum of squares due to error (SSE) indicates the fitting quality (see text for more details).

3. Results

3.1. Calibration Curves

In each test, the subject performed six forced breathing cycles continuously. We randomly selected three forced cycles to build a calibration curve, and the other three cycles were used for validation for each subject. Figure 2 shows these calibration curves for different subjects. Despite the variability in the calibration curves for different subjects, each could be fitted with a fifth-order polynomial. The summed square of residuals (SSE) and R-squared ($R^2$) were used to evaluate the goodness of fit. SSE is defined as

$$\mathrm{SSE} = \sum_i \left( V_i - f_i \right)^2, \tag{6}$$

where $V_i$ is the exhaled volume from the images, $f_i$ is the fitting function (fifth-order polynomial), and R-squared is

$$R^2 = 1 - \frac{\mathrm{SSE}}{\mathrm{SST}}, \qquad \mathrm{SST} = \sum_i \left( V_i - \bar{V} \right)^2, \tag{7}$$

where $\bar{V}$ is the average volume and SST is the total variance of the data. SSE measures the total deviation of the data from the fitting model, and $R^2$ describes how close the data are to the fitted curve. The SSE ranges from 0.11 to 7.30, with a median value of 1.10, indicating small errors between the exhaled volume obtained with our proposed method and the fitting model in most cases. $R^2$ ranges from 0.90 to 0.99, indicating good fitting quality.
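Both goodness-of-fit measures are straightforward to compute. The sketch below uses the standard definitions of the sum of squared residuals and R-squared; the function names and the sample numbers are illustrative only.

```python
def sse(observed, fitted):
    """Sum of squared residuals between the data and the fitted values."""
    return sum((o - f) ** 2 for o, f in zip(observed, fitted))


def r_squared(observed, fitted):
    """One minus the ratio of the residual sum of squares to the total sum of squares."""
    mean = sum(observed) / len(observed)
    sst = sum((o - mean) ** 2 for o in observed)
    return 1.0 - sse(observed, fitted) / sst


# Illustrative volumes (L), not measured data.
observed_l = [1.0, 2.0, 3.0, 4.0]
fitted_l = [1.1, 1.9, 3.2, 3.8]
fit_sse = sse(observed_l, fitted_l)        # about 0.10
fit_r2 = r_squared(observed_l, fitted_l)   # about 0.98
```

Note that the sum of squared residuals grows with the number of samples in a cycle, so it is best compared across fits with similar sample counts, whereas R-squared is normalized.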

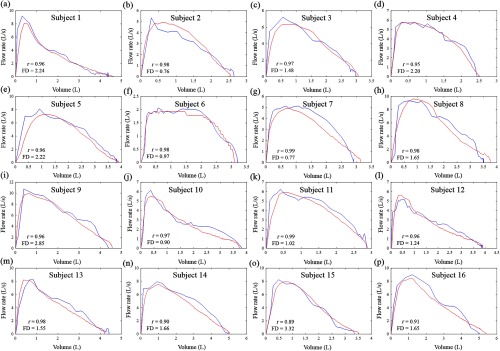

3.2. Flow-Volume Curves and Vital Respiratory Parameters

Using the calibration curves obtained above, we converted the remaining three breathing cycles into flow-volume curves for each subject (blue lines in Fig. 3). The flow-volume curve is one of the most important spirometry test results and is provided by most commercial spirometers. For comparison, the simultaneously recorded curves from the commercial spirometer are also shown in Fig. 3 (red lines). The Fréchet distance (FD) and the Pearson product-moment correlation coefficient (Pearson’s $r$) were used to measure the similarity between the imaging-based spirometry curves and the gold standard spirometry curves. Given two curves $f:[a,a'] \to \mathbb{R}^2$ and $g:[b,b'] \to \mathbb{R}^2$, FD is defined as

$$\delta_F(f,g) = \inf_{\alpha,\beta} \max_{t \in [0,1]} \left\| f(\alpha(t)) - g(\beta(t)) \right\|, \tag{8}$$

where $\alpha$ (resp. $\beta$) is an arbitrary continuous nondecreasing function from [0,1] onto $[a,a']$ (resp. $[b,b']$).
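For sampled curves, a common stand-in for the continuous definition is the discrete Fréchet distance, which only couples the sample points of the two curves and therefore approximates (and never underestimates) the continuous value. The dynamic-programming sketch below is an illustration of that discrete variant, not necessarily the computation used for the reported values; the function name is hypothetical.

```python
import math


def discrete_frechet(p, q):
    """Discrete Frechet distance between polylines p and q.

    p and q are lists of (x, y) points, e.g. (volume, flow) samples.
    """
    n, m = len(p), len(q)
    ca = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = math.dist(p[i], q[j])
            if i == 0 and j == 0:
                ca[i][j] = d
            elif i == 0:
                ca[i][j] = max(ca[0][j - 1], d)
            elif j == 0:
                ca[i][j] = max(ca[i - 1][0], d)
            else:
                # Advance along p, along q, or along both, whichever
                # keeps the largest point-to-point distance smallest.
                best_prev = min(ca[i - 1][j], ca[i - 1][j - 1], ca[i][j - 1])
                ca[i][j] = max(best_prev, d)
    return ca[n - 1][m - 1]


# Two parallel segments one unit apart give a distance of 1.
flat_a = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
flat_b = [(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
parallel_distance = discrete_frechet(flat_a, flat_b)
```

Because flow and volume have different units, the distance depends on how the two axes are scaled; the sketch treats both axes as plain numbers.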

Fig. 3.

Flow-volume curves (a) to (p) for different subjects. The red curves are from the gold standard spirometer, and the blue curves are from the imaging-based method. $r$ is the Pearson product-moment correlation coefficient, and “FD” measures the distance between the calibrated curve and the spirometer result.

Pearson’s $r$ measures the linear dependence between the imaging-based results and the gold standard spirometry results. As shown in Fig. 3, the Pearson’s $r$ values ranged from 0.89 to 0.99, and FD was found to range from 0.76 to 3.32, indicating good agreement between the imaging-based and traditional spirometer curves for most subjects.

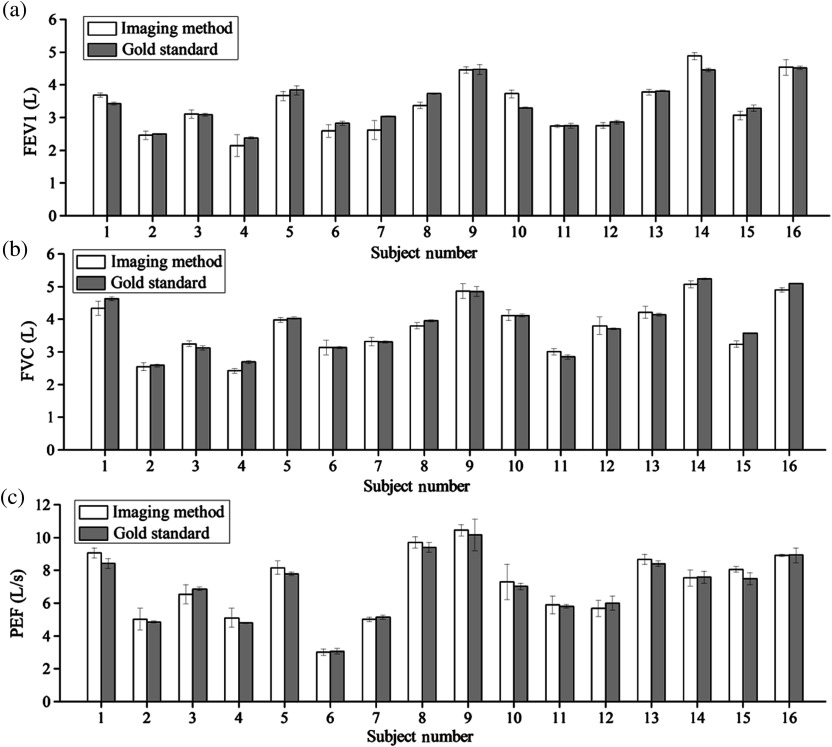

Vital parameters, FEV1, FVC, and PEF, were calculated from the imaging-based spirometry curves (see Table 1 and Fig. 4). Figure 4 shows that the results measured using the imaging-based method are in excellent agreement with those from the gold standard spirometer. To quantify the agreement, Pearson’s $r$, the root-mean-square error (RMSE), the relative error, and a paired-sample $t$-test were analyzed. The Pearson’s $r$ values for FEV1, FVC, and PEF are 0.95, 0.98, and 0.97, respectively, indicating good linear correlation between the imaging-based and traditional spirometers. The RMSE values of FEV1, FVC, and PEF are 0.27 L, 0.18 L, and 0.56 L/s, respectively, indicating small differences between the two methods. The average relative errors of FEV1, FVC, and PEF are 8.5%, 6.9%, and 7.7%, respectively. In the paired $t$-test, the null hypothesis that the pairwise difference between the imaging-based spirometry and the gold standard spirometry has a mean of zero is not rejected at the 5% significance level for FEV1 and FVC, which is consistent with the RMSE values.
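The agreement measures named above can be sketched with the standard formulas. The snippet assumes that the relative error is taken with respect to the gold standard value and returns only the paired t statistic; comparing it with a critical value (about 2.131 for 15 degrees of freedom at the two-sided 5% level, which corresponds to 16 subjects) is left to the caller. Function names and numbers are illustrative.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def rmse(estimated, reference):
    """Root-mean-square error, in the units of the measured quantity."""
    n = len(estimated)
    return math.sqrt(sum((e - r) ** 2 for e, r in zip(estimated, reference)) / n)


def mean_relative_error(estimated, reference):
    """Mean of |estimated - reference| / reference (assumed definition)."""
    n = len(estimated)
    return sum(abs(e - r) / r for e, r in zip(estimated, reference)) / n


def paired_t_statistic(estimated, reference):
    """t statistic for the hypothesis that the mean paired difference is zero."""
    diffs = [e - r for e, r in zip(estimated, reference)]
    n = len(diffs)
    mean = sum(diffs) / n
    sd = math.sqrt(sum((d - mean) ** 2 for d in diffs) / (n - 1))
    return mean / (sd / math.sqrt(n))


# Illustrative values (L), not data from this study.
estimated_l = [2.0, 3.0, 4.0, 5.0]
gold_l = [2.1, 2.9, 4.2, 4.8]
summary = {
    "r": pearson_r(estimated_l, gold_l),
    "rmse": rmse(estimated_l, gold_l),
    "relative_error": mean_relative_error(estimated_l, gold_l),
    "t": paired_t_statistic(estimated_l, gold_l),
}
```

A t statistic whose magnitude stays below the critical value means that a zero mean difference cannot be rejected, which indicates the absence of a detectable systematic bias rather than proof of equivalence.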

Table 1.

FEV1, FVC, and PEF values for three forced breathing cycles .

| Subject | FEV1 (L), estimated | FEV1 (L), gold | FVC (L), estimated | FVC (L), gold | PEF (L/s), estimated | PEF (L/s), gold |
|---|---|---|---|---|---|---|
| 1 | | | | | | |
| 2 | | | | | | |
| 3 | | | | | | |
| 4 | | | | | | |
| 5 | | | | | | |
| 6 | | | | | | |
| 7 | | | | | | |
| 8 | | | | | | |
| 9 | | | | | | |
| 10 | | | | | | |
| 11 | | | | | | |
| 12 | | | | | | |
| 13 | | | | | | |
| 14 | | | | | | |
| 15 | | | | | | |
| 16 | | | | | | |

Fig. 4.

Comparison of (a) FEV1, (b) FVC, and (c) PEF obtained with the imaging-based and gold standard spirometry for different subjects. The values are averaged over three breathing cycles, and the error bars are standard deviations of the measurements.

4. Discussion

Respiration induces craniocaudal and anteroposterior displacements and cross-sectional area changes of the rib cage and abdomen,21 which have been studied by analyzing the three-dimensional movements of the body.22 In this work, we have shown that accurate spirometry can be obtained from the shoulder displacement alone without complex three-dimensional chest movement measurement. This finding is consistent with a previous study that shows good correlation between vertical body movement measured by magnetic resonance imaging and spirometry.23 To convert the displacement into volume, if we could simply model the lung as a cube or sphere, a third-degree polynomial fitting would suffice. However, the human lung is a complicated system, in which bronchi and alveoli have different shapes. Empirically, we found that a fifth-degree polynomial could fit the breathing volume well. Although a higher degree could fit the calibration data even more closely, it raises the risk of overfitting. In a future study, we could explore a general calibration to make the system easier for home use.

In the imaging-based spirometry, accurate tracking of the shoulder displacement is critical. In this work, we used the KLT tracking algorithm, which requires good contrast of the shoulder image. This means that the subject’s clothes must have a substantially different color from the background color. We found that this requirement was not difficult to meet for all sixteen subjects, who wore different clothes with different colors and patterns (Fig. 5). In addition to KLT, other algorithms24–26 may also be used to track motions.
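The third-degree argument can be made explicit with a short worked expansion. As an idealization only, suppose the lung is a cube whose side length grows linearly with the shoulder displacement $d$, with rest length $L_0$ and a proportionality constant $k$ (both symbols are assumptions introduced for this illustration):

$$V(d) = (L_0 + k d)^3 = L_0^3 + 3 L_0^2 k\, d + 3 L_0 k^2\, d^2 + k^3 d^3,$$

$$\Delta V(d) = V(d) - V(0) = 3 L_0^2 k\, d + 3 L_0 k^2\, d^2 + k^3 d^3 .$$

Under this model the volume change is exactly a cubic polynomial in $d$ with no constant term. The same holds for a sphere whose radius varies linearly with $d$, up to the factor $4\pi/3$.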

Fig. 5.

Snapshots of the videos in the dataset. The subjects wore clothes with various colors and patterns.

Subjects with different genders, ages, body mass indices, and heights were included in this small pilot test. We found that height is moderately correlated with FVC, with a Pearson correlation of 0.69. The correlation between body mass index and FVC is weaker, at 0.50. For the two gender groups, we found a significant difference () between male (, ) and female subjects (, ) in FVC. More subjects with diverse profiles will be needed to validate these observations.

The spirometry curves and extracted respiratory parameters provide key information about asthma, COPD, and other respiratory conditions. For example, a concave upward pattern is often observed in asthma or COPD patients.27 Certain distinct patterns are linked to obstructing lesions in the upper airway and variable intrathoracic obstructions. However, all the subjects enrolled in our current study are healthy adults. In a future study, we will include subjects with respiratory diseases. Another direction for improving the current work is to use a faster camera. The current work used a camera with a frame rate of 30 fps; a faster camera will improve the temporal resolution and is expected to lead to more accurate measurement of the exhalation rate.

5. Conclusion

We have demonstrated an imaging-based spirometer for accurate forced spirometry tests with a webcam. We validated the technology with a pilot study including sixteen subjects and compared the data with simultaneously performed spirometry tests with a gold standard commercial spirometer. The spirometry curves and key respiratory parameters, including FEV1, FVC, and PEF, from the imaging-based approach are in excellent agreement with those by the gold standard technology. The imaging-based spirometer does not require acquiring a spirometer, using a mouthpiece, or wearing a nose clip, which will lower the cost and improve the user experience, thus contributing to the diagnosis and management of the large and growing asthma and COPD populations.

Acknowledgments

This research was supported by the National Institute of Biomedical Imaging and Bioengineering award (No. 1U01EB021980).

Biographies

Chenbin Liu received his BA degree in electronic information engineering from Hunan University, Changsha, and his PhD in biomedical engineering from Zhejiang University, Hangzhou. He is a postdoc researcher in Biodesign Center for Bioelectronics and Biosensors, Arizona State University. His research interests include using image processing and machine learning methods to develop mobile health and computer-aided diagnosis systems.

Yuting Yang received her BA degree in biomedical engineering from Sichuan University, Chengdu, and her PhD in biomedical engineering from Zhejiang University, Hangzhou. She is a postdoc researcher in Biodesign Center for Bioelectronics and Biosensors, Arizona State University. Her research interests include developing workout energy expenditure and heartbeat monitoring systems based on low-cost cameras.

Francis Tsow received his PhD in electrical engineering from Arizona State University, Tempe. He is an assistant research professor in the Center for Bioelectronics and Biosensors, Biodesign Institute, Arizona State University. His research interests include sensors that are related to environmental monitoring, fitness/exercise and health monitoring, diagnosis, and disease management in a low cost, efficient, and high impact manner.

Dangdang Shao received her BA degree in mechanical engineering and automation from Shanghai Jiao Tong University, her master’s degree in electrical engineering from Shanghai Jiao Tong University, Shanghai, and her PhD in electrical engineering from Arizona State University, Tempe. Her research interests include monitoring vital physiological signals using optical systems, image processing, and artificial intelligence.

Nongjian Tao received his BA degree in physics from Anhui University, Hefei, and his PhD in physics from Arizona State University, Tempe. He is the director of the Center for Bioelectronics and Biosensors at the Biodesign Institute and is a professor in the Ira A. Fulton Schools of Engineering at Arizona State University. His research interests include exploring fundamental properties of single molecules and nanostructured materials, developing new sensing and detection technologies, and building fully integrated devices for real-world applications.

Disclosures

No conflicts of interest, financial or otherwise, are declared by the authors.

References

- 1. Menezes A. M. B., et al., “Increased risk of exacerbation and hospitalization in subjects with an overlap phenotype: COPD-asthma,” Chest 145(2), 297–304 (2014). doi:10.1378/chest.13-0622

- 2. Murthy R., Pavlidis I., “Noncontact measurement of breathing function,” IEEE Eng. Med. Biol. Mag. 25(3), 57–67 (2006). doi:10.1109/MEMB.2006.1636352

- 3. Murthy J. N., et al., “Thermal infrared imaging: a novel method to monitor airflow during polysomnography,” Sleep 32(11), 1521–1527 (2009). doi:10.1093/sleep/32.11.1521

- 4. Huang Y. P., Young M.-S., Tai C. C., “Noninvasive respiratory monitoring system based on the piezoceramic transducer’s pyroelectric effect,” Rev. Sci. Instrum. 79(3), 35103 (2008). doi:10.1063/1.2889398

- 5. Madhav K. V., et al., “Robust extraction of respiratory activity from PPG signals using modified MSPCA,” IEEE Trans. Instrum. Meas. 62(5), 1094–1106 (2013). doi:10.1109/TIM.2012.2232393

- 6. Poh M.-Z., McDuff D. J., Picard R. W., “Advancements in noncontact, multiparameter physiological measurements using a webcam,” IEEE Trans. Biomed. Eng. 58(1), 7–11 (2011). doi:10.1109/TBME.2010.2086456

- 7. Verkruysse W., Svaasand L. O., Nelson J. S., “Remote plethysmographic imaging using ambient light,” Opt. Express 16(26), 21434–21445 (2008). doi:10.1364/OE.16.021434

- 8. Chon K. H., Dash S., Ju K., “Estimation of respiratory rate from photoplethysmogram data using time–frequency spectral estimation,” IEEE Trans. Biomed. Eng. 56(8), 2054–2063 (2009). doi:10.1109/TBME.2009.2019766

- 9. Wang W., Stuijk S., De Haan G., “A novel algorithm for remote photoplethysmography: spatial subspace rotation,” IEEE Trans. Biomed. Eng. 63(9), 1974–1984 (2016). doi:10.1109/TBME.2015.2508602

- 10. Droitcour A. D., Boric-Lubecke O., Kovacs G. T. A., “Signal-to-noise ratio in Doppler radar system for heart and respiratory rate measurements,” IEEE Trans. Microwave Theory Tech. 57(10), 2498–2507 (2009). doi:10.1109/TMTT.2009.2029668

- 11. Mostov K., Liptsen E., Boutchko R., “Medical applications of shortwave FM radar: remote monitoring of cardiac and respiratory motion,” Med. Phys. 37(3), 1332–1338 (2010). doi:10.1118/1.3267038

- 12. Lai J. C. Y., et al., “Wireless sensing of human respiratory parameters by low-power ultrawideband impulse radio radar,” IEEE Trans. Instrum. Meas. 60(3), 928–938 (2011). doi:10.1109/TIM.2010.2064370

- 13. Shao D., et al., “Noncontact monitoring breathing pattern, exhalation flow rate and pulse transit time,” IEEE Trans. Biomed. Eng. 61(11), 2760–2767 (2014). doi:10.1109/TBME.2014.2327024

- 14. Reyes B., et al., “Tidal volume and instantaneous respiration rate estimation using a volumetric surrogate signal acquired via a smartphone camera,” IEEE J. Biomed. Health Inf. 21(3), 764–777 (2016). doi:10.1109/JBHI.2016.2532876

- 15. Lin K.-Y., Chen D.-Y., Tsai W.-J., “Image-based motion-tolerant remote respiratory rate monitoring,” IEEE Sens. J. 16(9), 3263–3271 (2016). doi:10.1109/JSEN.2016.2526627

- 16. Shafiq G., Veluvolu K. C., “Surface chest motion decomposition for cardiovascular monitoring,” Sci. Rep. 4, 5093 (2014). doi:10.1038/srep05093

- 17. Drummond G. B., Duffy N. D., “A video-based optical system for rapid measurements of chest wall movement,” Physiol. Meas. 22(3), 489–503 (2001). doi:10.1088/0967-3334/22/3/307

- 18. Lucas B. D., Kanade T., “An iterative image registration technique with an application to stereo vision,” in Proc. of the 7th Int. Joint Conf. on Artificial Intelligence (IJCAI ’81), Vol. 81, No. 1, pp. 674–679 (1981).

- 19. Shi J., Tomasi C., “Good features to track,” in Proc. IEEE Computer Society Conf. on Computer Vision and Pattern Recognition (CVPR ’94), pp. 593–600 (1994). doi:10.1109/CVPR.1994.323794

- 20. Tomasi C., Kanade T., Detection and Tracking of Point Features, School of Computer Science, Carnegie Mellon University, Pittsburgh (1991).

- 21. Kenyon C. M., et al., “Rib cage mechanics during quiet breathing and exercise in humans,” J. Appl. Physiol. 83(4), 1242–1255 (1997).

- 22. McCool F. D., et al., “Estimates of ventilation from body surface measurements in unrestrained subjects,” J. Appl. Physiol. 61(3), 1114–1119 (1986).

- 23. Plathow C., et al., “Evaluation of chest motion and volumetry during the breathing cycle by dynamic MRI in healthy subjects: comparison with pulmonary function tests,” Invest. Radiol. 39(4), 202–209 (2004). doi:10.1097/01.rli.0000113795.93565.c3

- 24. Li Y., et al., “Tracking in low frame rate video: a cascade particle filter with discriminative observers of different life spans,” IEEE Trans. Pattern Anal. Mach. Intell. 30(10), 1728–1740 (2008). doi:10.1109/TPAMI.2008.73

- 25. Weng S. K., Kuo C. M., Tu S. K., “Video object tracking using adaptive Kalman filter,” J. Visual Commun. Image Representation 17(6), 1190–1208 (2006). doi:10.1016/j.jvcir.2006.03.004

- 26. Comaniciu D., Ramesh V., Meer P., “Real-time tracking of non-rigid objects using mean shift,” in Proc. IEEE Conf. on Computer Vision and Pattern Recognition, Vol. 2, pp. 142–149 (2000). doi:10.1109/CVPR.2000.854761

- 27. Miller M. R., et al., “Standardisation of spirometry,” Eur. Respir. J. 26(2), 319–338 (2005). doi:10.1183/09031936.05.00034805