Abstract

Changes in retinal vessel structure are known to be associated with diseases such as diabetes, hypertension, stroke, and other cardiovascular diseases in adults, as well as retinopathy of prematurity in infants. Retinal fundus images provide non-invasive visualization of the retinal vessel structure. Applying image processing techniques to digital color fundus photographs and analyzing their vasculature is a reliable approach for early diagnosis of these diseases. A reduced arteriolar–venular ratio (AVR) of the retina, which can be calculated by analyzing fundus images, is one of the primary signs of hypertension, diabetes, and cardiovascular diseases. Precise measurement of this parameter, and hence meaningful diagnostic results, requires accurate classification of arteries and veins. However, classification of vessels in fundus images faces several challenges that make it difficult. In this paper, a comprehensive study of the methods proposed for classifying arteries and veins in fundus images is presented. Because these methods are evaluated on different datasets using different evaluation criteria, a fair comparison of their performance is not possible. Therefore, we evaluate the classification methods from a modeling perspective. This analysis reveals that most of the proposed approaches rely on statistical and geometric models in the spatial domain, whereas transform-domain models have received less attention. This suggests the possibility of using transform models, especially data-adaptive ones, to model fundus images in future classification approaches.

Keywords: Arteries and veins, computer-aided diagnosis, medical image processing, retinal fundus images, retinal vessel classification

Introduction

The retina is a multi-layered tissue of light-sensitive cells lining the posterior cavity of the eye, where light rays are converted into neural signals for interpretation by the brain. One of the most important diseases affecting the retina is diabetes, which affects the body in many ways, including changes in the retinal blood vessels. Diabetic retinopathy is a common complication of diabetes that affects the retinal vasculature, and it is increasingly becoming a major cause of blindness throughout the world.[1,2,3,4] Diabetic retinopathy is broadly divided into nonproliferative and proliferative types. The earliest form is nonproliferative, in which damaged retinal blood vessels begin to leak fluid and small amounts of blood into the eye. In proliferative retinopathy, new, fragile blood vessels grow in the retina, which can result in significant visual impairment.[2,5,6]

Laser therapy in the earliest stages of diabetic retinopathy can prevent the progression of eye damage, and the risk of blindness may be greatly reduced. Successful treatment depends on early detection and on regular check-ups and follow-up by an ophthalmologist.

Various methods have been proposed for early diagnosis of this disease, and fundus imaging is known as one of the primary screening methods for retinopathy.[2] Recent advances in digital imaging and image processing have resulted in widespread use of image modeling and analysis techniques in all areas of medical science, especially ophthalmology. The retinal vessel network is the only vascular network of the body that can be visualized with a non-invasive imaging method.[7] Color fundus imaging is a common procedure for both manual and automatic evaluation of this vessel structure, and structural analysis of the retinal vessel network is used as a reliable tool for early detection of retinopathies.[8,9,10,11] Researchers began this analysis by developing vessel segmentation methods and extended it to the evaluation of morphological features of the vessel network.[12,13] Many parameters can be measured from the retinal vessel structure, such as changes in vessel thickness, the curvature of the vessels, and the arteriolar–venular ratio (AVR).

The AVR has been found useful for early diagnosis of diseases such as hypertension, diabetes, stroke, and other cardiovascular diseases in adults, and retinopathy of prematurity in infants.[14,15,16] Accurate measurement of this parameter is therefore necessary for meaningful diagnostic results. Various protocols have been defined for measuring the AVR. In Japan, it is often measured in macula-centered images using the largest adjacent pair of vessels at a certain distance from the optic disc margin, usually 0.25–1 optic disc diameter. In the U.S., by contrast, the six largest vessels within 0.5–1 optic disc diameter of the disc margin in optic disc-centered images are generally used for AVR calculation.[17]
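To make the role of classification in the AVR concrete, the sketch below summarizes individual vessel widths into one arteriolar and one venular caliber and takes their ratio. The text does not specify a summarization formula; this sketch assumes the revised Knudtson pairing rule (branch coefficients 0.88 for arterioles and 0.95 for venules), which is commonly applied to the six largest vessels. The function names are hypothetical.

```python
import math


def knudtson_summary(widths, coeff):
    """Combine vessel widths into one summary caliber (assumed revised Knudtson rule).

    Widths are sorted; the largest is paired with the smallest, the second
    largest with the second smallest, and so on, and each pair is replaced by
    coeff * sqrt(w1**2 + w2**2). With an odd count, the middle width is carried
    to the next round. Rounds repeat until a single value remains.
    """
    w = sorted(widths, reverse=True)
    if not w:
        raise ValueError("at least one width is required")
    while len(w) > 1:
        n = len(w)
        combined = [coeff * math.sqrt(w[i] ** 2 + w[n - 1 - i] ** 2) for i in range(n // 2)]
        if n % 2 == 1:
            combined.append(w[n // 2])  # unpaired middle vessel is carried over
        w = sorted(combined, reverse=True)
    return w[0]


def avr(artery_widths, vein_widths):
    """Arteriolar-venular ratio as CRAE / CRVE (coefficients are assumptions)."""
    crae = knudtson_summary(artery_widths, 0.88)  # central retinal arteriolar equivalent
    crve = knudtson_summary(vein_widths, 0.95)    # central retinal venular equivalent
    return crae / crve
```

Because every width enters only the summary of its own class, a single artery mislabeled as a vein moves its width from the numerator to the denominator, which is one way a small classification error can propagate into a noticeably different AVR.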

The AVR calculation problem comprises several smaller problems: optic disc localization, vessel segmentation, accurate vessel diameter measurement, vessel network analysis, and classification of arteries and veins. Optic disc localization is required to determine the region of interest (ROI) in which the measurements are performed according to the protocol. Vessel segmentation is necessary for finding the exact location of the vessels and for thickness calculation. Vessel network analysis is required because the locations of bifurcations and crossover points must be determined to implement the medical protocols successfully. Classification of arteries and veins is a fundamental step in measuring the AVR, and high accuracy matters because small classification errors may lead to relatively large errors in the final AVR. Therefore, an effective and efficient method for vessel classification is needed.
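Once the optic disc has been localized, the ROI of a protocol is typically an annulus around it. A minimal sketch, assuming the disc is approximated by a circle with a known center and diameter in pixels (the function name and parameters are illustrative, not taken from any cited method):

```python
import numpy as np


def roi_ring_mask(shape, disc_center, disc_diameter, inner=0.5, outer=1.0):
    """Boolean mask of pixels lying between `inner` and `outer` optic disc
    diameters (DD) from the disc *margin*, e.g. 0.5-1 DD for the U.S. protocol.

    Assumes a circular optic disc with center (row, col) and diameter in pixels.
    """
    rows, cols = np.indices(shape)
    dist_from_center = np.hypot(rows - disc_center[0], cols - disc_center[1])
    dist_from_margin = dist_from_center - disc_diameter / 2.0
    return (dist_from_margin >= inner * disc_diameter) & (
        dist_from_margin <= outer * disc_diameter
    )
```

For the Japanese protocol described above, the same function would be called with `inner=0.25` and `outer=1.0` on a macula-centered image.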

The structure of this paper is as follows: first, the retinal vessel classification problem and its challenges are described. Then, the third section provides a comprehensive review of state-of-the-art methods for artery and vein classification in fundus images, and finally, the fourth and fifth sections present the discussion and conclusions, respectively.

Problem Statement

Much research has been devoted to retinal vessel segmentation,[12] but automatic classification of the segmented vessels has received less attention. Classifying vessels in retinal fundus images faces several challenges. Two of them are the low contrast of fundus images and the inhomogeneous lighting of the background. Inhomogeneous lighting is caused by the imaging process, whereas the low-contrast problem arises because different blood vessels have different contrast with the background: thicker vessels have higher contrast than thinner ones. In addition, variation in retinal color across subjects, which stems from biological characteristics, poses another problem.

Retinal vessel classification approaches are often based on visual and geometric features that discriminate arteries from veins. Generally, arteries and veins differ in four respects: veins are thicker than arteries; veins are darker (redder); the central reflex is more recognizable in arteries; and arteries and veins usually alternate near the optic disc before branching off.

However, in many cases these differences are not sufficient to distinguish arteries from veins. For example, in low-quality images the central reflex is often lost in the outer regions. In addition, vessels in the outer regions of the image appear very dark because of the shading caused by inhomogeneous lighting. In these cases, arteries and veins look very similar, which leads to misclassification of some vessels. Furthermore, thickness is not an appropriate classification feature on its own, because it varies from its highest values near the optic disc to its smallest values in the outer parts of the image. Moreover, if a major artery or vein branches inside the optic disc, two arteries or two veins may appear side by side just outside the disc, breaking the usual alternation.[18]

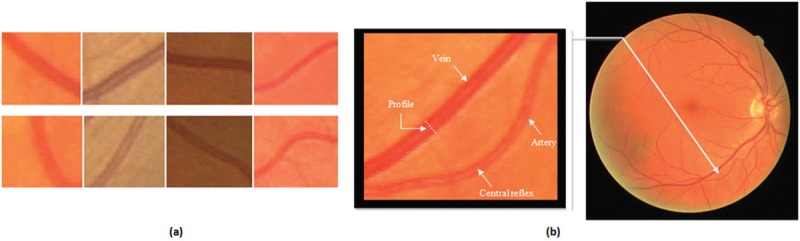

Figure 1a shows four vein pieces (first row) and four artery pieces (second row). These examples indicate that arteries and veins can be very similar in appearance; therefore, classification approaches that use only local features do not necessarily achieve good results.

Figure 1.

(a) Samples of vein (first row) and artery (second row) pieces in fundus images. (b) Specifying central reflex and profile in a piece of a fundus image

Vessel Classification Methods in Fundus Images

The common approach in most studies of vessel classification in fundus images involves five steps: (1) vessel segmentation, (2) selection of the ROI whose vessels are to be classified, (3) feature extraction from different parts of the vessel, (4) classification of the feature vectors, and (5) combination of the results to determine the final label of each vessel. Typically, three feature extraction procedures are considered: pixel-based, profile-based, and segment-based. A profile is a one-pixel-thick cross-section of the vessel, perpendicular to the vessel orientation [see Figure 1b].
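Profile-based features sample intensities along such a perpendicular line. A minimal sketch using SciPy's `map_coordinates` for bilinear interpolation, assuming the local vessel direction at each centerline pixel is already known (e.g. estimated from the skeleton); the function name is hypothetical:

```python
import numpy as np
from scipy.ndimage import map_coordinates


def vessel_profile(channel, center, direction, half_length, step=1.0):
    """Sample `channel` along a line through `center` perpendicular to the vessel.

    center: (row, col) of a centerline pixel.
    direction: (d_row, d_col) local vessel orientation; need not be unit length.
    half_length: distance in pixels to sample on each side of the centerline.
    """
    d = np.array(direction, dtype=float)  # copy so the caller's array is untouched
    d /= np.linalg.norm(d)
    normal = np.array([-d[1], d[0]])  # tangent rotated by 90 degrees
    offsets = np.arange(-half_length, half_length + step / 2.0, step)
    rows = center[0] + offsets * normal[0]
    cols = center[1] + offsets * normal[1]
    # Bilinear interpolation (order=1); samples outside the image are set to 0.
    return map_coordinates(channel.astype(float), [rows, cols], order=1,
                           mode="constant", cval=0.0)
```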

The proposed vessel classification methods fall into two broad categories: automatic and semi-automatic. In semi-automatic methods, major vessels are usually labeled as artery or vein by an expert at their initial points. These labels are then propagated over the vessel network by vessel tracking algorithms that use connectivity information and the structural characteristics of the vascular tree. In contrast, the conventional approach in automatic methods is as follows: first, the centerline pixels that make up the vessel skeleton are extracted from the segmented fundus image. Next, features are extracted for each centerline pixel (or for its profile, or along each segment). Finally, the classifier labels each pixel as artery or vein.

Semi-automatic methods

A semi-automatic method for retinal vessel analysis was proposed by Martinez-Perez et al.,[19] in which the arterial and venous trees are analyzed separately. After an expert labels a branch as artery or vein, an automatic process calculates geometrical and topological features of each segment of the branch. Rothaus et al.[20,21] proposed a method that uses a conditional optimization approach based on the anatomical properties of arteries and veins. In this method, which can be considered an extension of the work of Martinez-Perez et al., labels are propagated through the vessel graph from a few manually labeled starting segments.
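Label propagation of this kind can be illustrated with a deliberately simplified sketch: vessel segments are graph nodes, and each junction contributes a "same label" constraint (e.g. the branches of a bifurcation) or a "different label" constraint (e.g. the two vessels at a crossing). This greedy breadth-first version is our own illustration, not the conditional optimization of Rothaus et al.; it only reports violated constraints instead of resolving them, and all names are hypothetical.

```python
from collections import deque


def propagate_labels(n_segments, relations, seeds):
    """Spread artery ('A') / vein ('V') labels over a vessel-segment graph.

    relations: iterable of (i, j, same); same=True means segments i and j must
        share a label, same=False means they must differ.
    seeds: dict {segment: 'A' or 'V'} labeled manually by an expert.
    Returns (labels, conflicts), where conflicts lists segment pairs whose
    constraint is violated by the greedy assignment.
    """
    adj = {i: [] for i in range(n_segments)}
    for i, j, same in relations:
        adj[i].append((j, same))
        adj[j].append((i, same))
    flip = {"A": "V", "V": "A"}
    labels = dict(seeds)
    conflicts = set()
    queue = deque(seeds)
    while queue:  # breadth-first traversal starting from all seed segments
        i = queue.popleft()
        for j, same in adj[i]:
            expected = labels[i] if same else flip[labels[i]]
            if j not in labels:
                labels[j] = expected
                queue.append(j)
            elif labels[j] != expected:
                conflicts.add((min(i, j), max(i, j)))
    return labels, sorted(conflicts)
```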

Estrada et al.[22] developed a semi-automatic approach that combines graph-theoretic methods with domain-specific knowledge and can analyze the entire vasculature. This classification framework, which relies on estimating the vascular topology, extends a previously proposed tree topology estimation framework[23] by incorporating expert, domain-specific features into a likelihood model. This model is then maximized by iteratively exploring the space of possible solutions consistent with the projected vessels. The method was tested on four retinal datasets, namely WIDE,[23] AV-DRIVE,[24] CT-DRIVE,[24] and AV-INSPIRE,[25] and achieved classification accuracies of 91.0%, 93.5%, 91.7%, and 90.9%, respectively.

Most efforts in retinal vessel classification have focused on fully automatic methods, which are better suited to clinical use. The following section provides a comprehensive review of such classifiers.

Automatic methods

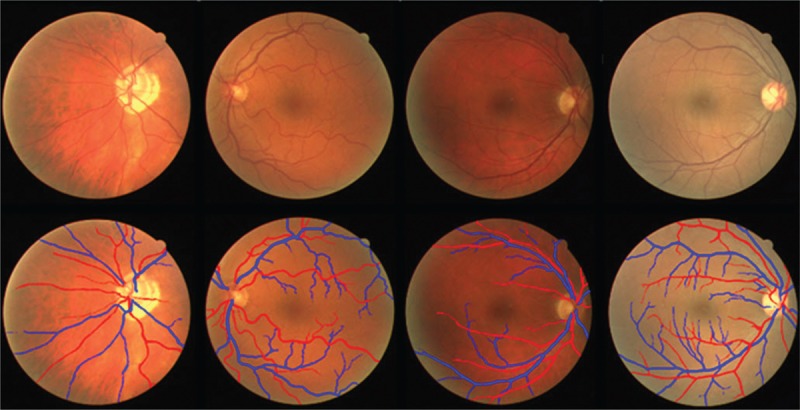

The artery/vein classification problem in retinal fundus images is complicated by the similarity between the descriptive features of the two structures and by the variability in contrast and illumination of fundus images. Retinal images suffer from inhomogeneous contrast and illumination arising from both inter-image and intra-image variation. Figure 2 shows some sample fundus images with large color and illumination variations both between and within images. To obtain meaningful color information, these variations must be removed. For this purpose, Grisan and Ruggeri[26] analyze the image background to detect changes in contrast and illumination and then correct them by statistically estimating their characteristics.

Figure 2.

Some samples of fundus images from DRIVE dataset (first row) and their ground truth (second row). The red lines correspond to arteries, and the blue lines correspond to veins
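The background-based correction of Grisan and Ruggeri can be approximated by a simplified sketch: estimate local luminosity as a sliding-window mean and local contrast as a sliding-window standard deviation, then normalize each pixel. Unlike the cited work, this version uses all pixels in the window rather than only background pixels, and the window size is an arbitrary assumption; the function name is hypothetical.

```python
import numpy as np
from scipy.ndimage import uniform_filter


def normalize_luminosity_contrast(channel, window=51, eps=1e-6):
    """Return (I - local_mean) / local_std for a single image channel.

    Local statistics are computed in a `window` x `window` sliding window
    over all pixels (a simplification of background-only estimation).
    """
    img = channel.astype(float)
    local_mean = uniform_filter(img, size=window, mode="reflect")
    local_sq_mean = uniform_filter(img ** 2, size=window, mode="reflect")
    # Clip tiny negative values caused by floating-point round-off.
    local_std = np.sqrt(np.maximum(local_sq_mean - local_mean ** 2, 0.0))
    return (img - local_mean) / (local_std + eps)
```

Because the output is invariant (up to `eps`) to an affine change `a * I + b` within each window, slowly varying illumination gain and offset are largely removed.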

One of the first automatic retinal vessel classification methods was proposed by Grisan and Ruggeri[26] in 2003. Extraction of the vessel network is a primary stage in fundus image analysis; it is often performed by a vessel tracking process that yields a set of vessel segments. Grisan and Ruggeri used a sparse tracking algorithm to extract the vessel network automatically. To take advantage of local features and the symmetry of the vessel network, the retinal image is divided into zones assumed to contain equal numbers of arteries and veins, and the two vessel types within each zone are assumed to differ considerably in their local features. In this method, an area around the optic disc (within 0.5–2 optic disc diameters from its center) is divided into four zones, each containing one of the major vascular arcades.
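The zone partition can be sketched as follows, assuming the optic disc center and diameter are known. Each point is assigned to one of four quadrants by its angle around the disc center, and points outside the 0.5–2 DD annulus are excluded. The `rotation_deg` parameter is a hypothetical addition that rotates the quadrant axes, for example to align each quadrant with one arcade; all names are illustrative.

```python
import numpy as np


def quadrant_labels(points, disc_center, disc_diameter, inner=0.5, outer=2.0,
                    rotation_deg=0.0):
    """Assign (row, col) points to quadrants 0-3 around the optic disc.

    Points closer than `inner` or farther than `outer` disc diameters from the
    disc *center* get -1 (outside the analysis zone).
    """
    pts = np.asarray(points, dtype=float)
    dy = pts[:, 0] - disc_center[0]
    dx = pts[:, 1] - disc_center[1]
    r = np.hypot(dy, dx)
    # Image rows grow downward, so -dy gives a counterclockwise angle.
    angle = (np.degrees(np.arctan2(-dy, dx)) - rotation_deg) % 360.0
    quadrant = (angle // 90.0).astype(int)
    inside = (r >= inner * disc_diameter) & (r <= outer * disc_diameter)
    return np.where(inside, quadrant, -1)
```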

Among the features considered, the variance of the red channel and the mean of the hue channel in each vessel segment were found to be the most discriminative for classification. Clinically, of two adjacent vessels, the darker (more reddish) one is considered the vein, and if their red values do not differ considerably, the vessel with the more uniform color is considered the vein.
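The two features singled out here can be computed per vessel segment as below, assuming RGB values scaled to [0, 1] and using the standard-library `colorsys` module for hue. Hue is circular (reds lie near both 0 and 1), so the plain arithmetic mean used in this sketch is a simplification; the function name is hypothetical.

```python
import colorsys

import numpy as np


def segment_color_features(rgb_pixels):
    """Return (variance of red, mean of hue) for the pixels of one vessel segment.

    rgb_pixels: (N, 3) array-like of RGB values in [0, 1].
    """
    px = np.asarray(rgb_pixels, dtype=float)
    hues = [colorsys.rgb_to_hsv(r, g, b)[0] for r, g, b in px]
    return float(px[:, 0].var()), float(np.mean(hues))
```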

After feature extraction, the vessels are classified using a fuzzy clustering algorithm, with the Euclidean distance of each pixel from the mean feature values of each class as the classification criterion. The labels of the pixels in each segment are then combined by majority voting to classify the whole segment. After the vessels in the specified zone have been classified, the labels can be propagated by vessel tracking to the area outside the zone, where texture and color provide little information for discriminating arteries from veins. Thirty-five fundus images were analyzed in this study: 11 were used to develop the algorithm and 24 for validation. The reported results on the 24 validation images show an overall error of 12.4%. Because the classification is performed around the optic disc and assumes that all four quadrants contain similar numbers of arteries and veins, the method is more suitable for optic disc-centered images.
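A minimal fuzzy C-means sketch with two clusters, followed by segment-level majority voting, is shown below in plain NumPy. The fuzzifier m = 2, the random initialization, and the function names are our assumptions, and the clustering variant used in the cited work may differ. Mapping the resulting clusters to "artery" and "vein" (e.g. the darker cluster as veins) is a separate interpretation step not shown here.

```python
from collections import Counter

import numpy as np


def fuzzy_cmeans(X, n_clusters=2, m=2.0, n_iter=200, tol=1e-8, seed=0):
    """Fuzzy C-means with Euclidean distance. Returns (centers, memberships)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    U = rng.random((len(X), n_clusters))
    U /= U.sum(axis=1, keepdims=True)  # each sample's memberships sum to 1
    for _ in range(n_iter):
        W = U ** m
        centers = (W.T @ X) / W.sum(axis=0)[:, None]
        dist = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        dist = np.fmax(dist, 1e-12)  # avoid division by zero at a center
        inv = dist ** (-2.0 / (m - 1.0))
        U_new = inv / inv.sum(axis=1, keepdims=True)
        converged = np.abs(U_new - U).max() < tol
        U = U_new
        if converged:
            break
    return centers, U


def segment_majority(pixel_labels, segment_ids):
    """Label each segment with the most frequent label among its pixels."""
    votes = {}
    for lab, seg in zip(pixel_labels, segment_ids):
        votes.setdefault(seg, []).append(lab)
    return {seg: Counter(v).most_common(1)[0][0] for seg, v in votes.items()}
```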

Ruggeri et al.[27] extended this method in 2007 to AVR assessment in the area from 0.5 to 1 disc diameter from the optic disc margin. They reported a correlation with a manual reference standard on 14 images of 0.73 to 0.83, depending on the protocol used for AVR calculation. Tramontan et al.[28] further developed the method in 2008 by improving the vessel-tracking algorithm, which increased the correlation with the reference standard to 0.88 for 20 images acquired from the DCCT study.[29] This method uses a red contrast parameter, defined as the ratio between the peak intensity on the vessel central line and the larger of the two intensity values at the vessel edges. Arteries and veins are classified based on the average red contrast along the vessel, which determines the probability of belonging to the vein class.
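Our reading of the red contrast parameter, sketched on a single red-channel profile: the peak intensity in the vessel interior divided by the larger of the two edge intensities. The edge positions are assumed to be known (e.g. from segmentation), and the function name is hypothetical.

```python
def red_contrast(red_profile, left_edge, right_edge):
    """Peak red intensity strictly between the edges divided by the larger edge intensity.

    red_profile: sequence of red-channel values sampled across the vessel.
    left_edge, right_edge: indices of the two vessel edges in the profile.
    """
    if right_edge - left_edge < 2:
        raise ValueError("the vessel interior must contain at least one sample")
    interior = red_profile[left_edge + 1:right_edge]
    edge_value = max(red_profile[left_edge], red_profile[right_edge])
    return max(interior) / edge_value
```

The per-vessel score described above would then be the mean of `red_contrast` over all profiles taken along the vessel.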

A piecewise Gaussian model was proposed by Li et al.[30] to capture the central reflex in the green channel for separating arteries from veins. A minimum Mahalanobis distance classifier is applied to identify the vessel type. Experiments on 505 vessel segments from different fundus images resulted in true positive rates of 82.46% for arteries and 89.03% for veins.
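A minimum Mahalanobis distance classifier assigns each sample to the class whose mean is nearest under that class's own covariance. A NumPy sketch follows; the feature vectors (e.g. parameters of a fitted central-reflex model) are assumed to be computed beforehand, and the class name is hypothetical.

```python
import numpy as np


class MinMahalanobisClassifier:
    """Assign each sample to the class with the smallest Mahalanobis distance."""

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.means_ = [X[y == c].mean(axis=0) for c in self.classes_]
        # Per-class covariance; the pseudo-inverse tolerates singular matrices.
        self.inv_covs_ = [
            np.linalg.pinv(np.atleast_2d(np.cov(X[y == c], rowvar=False)))
            for c in self.classes_
        ]
        return self

    def predict(self, X):
        X = np.atleast_2d(np.asarray(X, dtype=float))
        # Squared distance (x - mu)^T S^-1 (x - mu) for every sample and class.
        d2 = np.stack(
            [np.einsum("ij,jk,ik->i", X - mu, inv, X - mu)
             for mu, inv in zip(self.means_, self.inv_covs_)],
            axis=1,
        )
        return self.classes_[d2.argmin(axis=1)]
```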

Jelinek et al.[31] tested different classifiers and features for discriminating arteries from veins. They used eight features, namely the mean and standard deviation of the red, blue, green, and hue channels, with 13 classifiers available in the Weka toolbox.[32,33,34] The three best features were the mean of green and the mean and standard deviation of hue. The best classification result was obtained with Naive Bayes, which achieved a mean accuracy of 70% over eight images.

Narasimha-Iyer et al.[35] investigated four classifiers, namely nearest neighbor (NN), 5-NN, Fisher linear discriminant, and support vector machine (SVM), for retinal vessel classification; the best result was obtained with the SVM. Structural and functional features are used to separate arteries and veins: the central reflex serves as a structural indicator, and the ratio of the vessel optical densities in images acquired at oxygen-sensitive and oxygen-insensitive wavelengths serves as a functional feature. Applied to 251 vessel segments from 25 dual-wavelength images, the classifier achieved true positive rates of 97% for arteries and 90% for veins.

In 2007, Kondermann et al.[18] examined two feature extraction methods, profile-based and ROI-based, and two classification methods, based on SVMs and neural networks, for separating arteries and veins in retinal fundus images. Profile-based features are the RGB values, with their mean values subtracted, of each centerline pixel and of the pixels in its profile. ROI-based features are obtained from a square region around each centerline pixel, rotated so that its horizontal axis is aligned with the main axis of the vessel. Multiclass principal component analysis is used to reduce the size of the feature vector before it is passed to the classifier.

The methods were assessed on four 1024 × 1280 retinal images containing 10,132 centerline pixels. For SVM classification, four kernels (linear, polynomial, radial basis function (RBF), and sigmoidal) with different parameter values were examined, and the RBF kernel yielded the best result. Both classifiers performed well on manually segmented data, but their performance deteriorated by about 10% on automatically segmented images. The best reported result, obtained with ROI-based feature extraction combined with a multi-layer perceptron (MLP) classifier, was the correct classification of 95.32% of the pixels belonging to the major vessels within three optic disc diameters of the optic disc. Adding meta-knowledge (all pixels of a vessel section between two intersections must belong to the same class) increased the classification rate by 6.44%. It is worth mentioning that these experiments were conducted on high-quality images with the optic disc at the image center, which reduces the destructive effects of inhomogeneous illumination.

In the work of Muramatsu et al.,[17] two major vessel pairs emerging from the optic disc in the upper and lower temporal regions are selected manually for AVR calculation. First, retinal vessels are extracted using top-hat transformation and double-ring filter techniques. Subsequently, the position and diameter of the optic disc are obtained to determine the ROI for measuring the AVR. Once the vessels are segmented, RGB color information is extracted from the vessel segments located between a quarter and one disc diameter from the edge of the optic disc. Centerline pixels are classified using a linear discriminant analysis (LDA) classifier, and the label of each segment is determined by majority voting. The classification accuracy for centerline pixels was 88.2%, which resulted in correct classification of 30 of the 40 major artery–vein pairs in 20 test images from the DRIVE dataset.[36]

In 2011, Muramatsu et al.[37] further improved their approach and proposed a method in which vessels are classified by an LDA classifier using RGB color and contrast values as features. The contrast value is defined as the difference between the average intensity in a 5 × 5 window around the centerline pixel within the vessel and the average intensity in a 10 × 10 window around it. After feature selection, the contrast value of the blue channel was removed, and the remaining five features were used to train the classifier. Finally, each vessel segment is classified by majority voting over the labels of its centerline pixels, which led to an accuracy of 92.8%.
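The contrast value can be computed for every pixel with two box filters and then read off at the centerline pixels. A sketch with SciPy's `uniform_filter`; a 10 × 10 window has no single center pixel, so its exact alignment (SciPy's default origin here) is an assumption, as is the function name.

```python
import numpy as np
from scipy.ndimage import uniform_filter


def contrast_feature(channel, inner=5, outer=10):
    """Mean intensity in an inner x inner window minus the mean in an
    outer x outer window, computed at every pixel of one channel."""
    img = np.asarray(channel, dtype=float)
    return uniform_filter(img, size=inner) - uniform_filter(img, size=outer)
```

Applied to the R, G, and B channels, this yields three contrast features; with the blue one removed by feature selection, the classifier input consists of the R, G, and B values plus the red and green contrasts. For a dark vessel the value is negative at the centerline, since the small window contains proportionally more vessel pixels.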

In the works of Vázquez and co-authors,[38,39,40] a color-based clustering procedure is combined with a vessel tracking method based on the minimal path approach. Following AVR measurement protocols, the ROI is defined by several circumferences centered at the optic disc. Five feature vectors from the RGB, HSI, and gray-level color spaces were considered: red values, green values, red and green values, hue values, and gray-level values. Another feature vector, containing the mean of the hue and the variance of the red values in each profile, was also defined. Furthermore, to minimize the effect of outliers, the mean and the median of each profile in each color channel were also considered. Of all these features, the median of the green values was selected as the most discriminant.

After the feature vectors are defined in each circumference, the optic disc-centered retinal image is divided into four quadrants whose coordinate axes are rotated in 20° steps between 0° and 180°. k-means clustering is then applied to the feature vectors extracted in each quadrant to classify them. Next, vessel segments are classified by majority voting over the classification results of the feature vectors extracted from their profiles. The final label of each vessel segment is obtained by combining the local classification results from the quadrants in which the vessel appears. Finally, a vessel tracking strategy follows each vessel across the different circumferences to obtain its final label. The results reported by Vázquez et al.[40] show a classification rate of 87.68% on 100 retinal images from the VICAVR-2 dataset.[41] One disadvantage of k-means clustering is that it is sensitive to initialization and can get stuck in local minima.

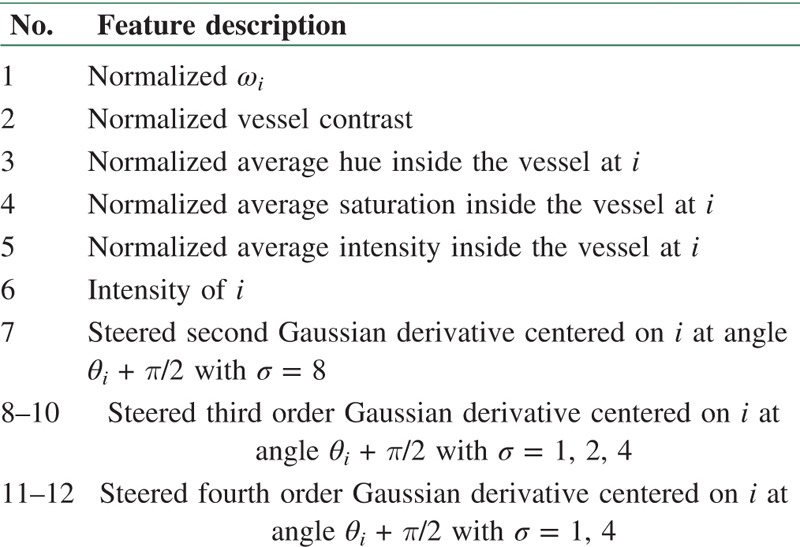

In the work of Niemeijer et al.,[42] an automatic supervised method is proposed for artery and vein classification in DRIVE dataset images. First, the centerline pixels of the vessels are extracted, and bifurcations and crossover points are removed by omitting pixels with more than two neighbors, which divides the vascular network into segments. Next, the width (ωi) and angle (θi) at each pixel are calculated. After all 20 training images were preprocessed, 24 features, including intensity values and derivative information, were extracted for each centerline pixel and along its profile. All features are normalized to zero mean and unit standard deviation. The dimension of the feature space is then reduced by sequential forward floating selection (SFFS), and the most prominent features, summarized in Table 1, were selected. SFFS starts with an empty feature set and then adds or removes features to improve classification performance.
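Splitting the skeleton at junctions and standardizing the features can be sketched as follows, assuming a binary one-pixel-wide skeleton is already available (e.g. from a thinning algorithm). The 8-neighbor count and the connected-component labeling use SciPy; the function names are hypothetical. In an 8-connected skeleton, the pixels immediately next to a junction can also have more than two neighbors, so they are removed together with it.

```python
import numpy as np
from scipy.ndimage import convolve, label


def split_skeleton(skeleton):
    """Remove skeleton pixels with more than two 8-neighbors (bifurcations and
    crossings) and label the remaining vessel segments.

    Returns (segment_label_image, number_of_segments).
    """
    sk = np.asarray(skeleton, dtype=bool)
    kernel = np.ones((3, 3), dtype=int)
    kernel[1, 1] = 0  # count neighbors only, not the pixel itself
    n_neighbors = convolve(sk.astype(int), kernel, mode="constant", cval=0)
    segments = sk & (n_neighbors <= 2)
    # 8-connectivity keeps diagonal steps inside one segment.
    return label(segments, structure=np.ones((3, 3), dtype=int))


def standardize(features):
    """Scale each feature column to zero mean and unit standard deviation."""
    F = np.asarray(features, dtype=float)
    return (F - F.mean(axis=0)) / F.std(axis=0)
```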

Table 1.

Selected features by SFFS for each centerline pixel (i)[42]

For each centerline pixel, this set of 12 features was extracted, and several classifiers, including LDA, quadratic discriminant analysis (QDA), SVM, and KNN, were examined; KNN (K = 286) yielded the best performance in terms of the area under the ROC curve (AUC). The KNN classifier assigns a soft label to each centerline pixel of the test image, and each segment is labeled by averaging, on the assumption that all connected pixels in a segment are of the same type. This approach achieved an AUC of 0.88 on 20 test images.
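The soft-labeling step can be sketched as follows: each centerline pixel's soft label is the fraction of its K nearest training samples that are veins, and a segment's label is the mean over its pixels. This brute-force NumPy illustration uses hypothetical names; encoding veins as 1 and using Euclidean distance are assumptions.

```python
import numpy as np


def knn_soft_labels(train_X, train_y, test_X, k):
    """Fraction of the k nearest training samples (Euclidean) with label 1."""
    train_X = np.asarray(train_X, dtype=float)
    test_X = np.asarray(test_X, dtype=float)
    train_y = np.asarray(train_y, dtype=float)
    dist = np.linalg.norm(test_X[:, None, :] - train_X[None, :, :], axis=2)
    nearest = np.argsort(dist, axis=1)[:, :k]
    return train_y[nearest].mean(axis=1)


def segment_average(soft_labels, segment_ids):
    """Average the soft labels of all centerline pixels in each segment."""
    soft = np.asarray(soft_labels, dtype=float)
    ids = np.asarray(segment_ids)
    return {s.item(): float(soft[ids == s].mean()) for s in np.unique(ids)}
```

Replacing the mean with `np.median` gives the median-based segment rule used in the later work of Niemeijer et al.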

Niemeijer et al.[43,44] improved their previous work and provided an automatic method to estimate the AVR. In a supervised setting, Niemeijer et al.[44] studied the performance of the four former classifiers (KNN, SVM, LDA, and QDA) on a feature vector of 27 features, and LDA showed the best result. With the width removed, the feature vector contains only color and color-variation features of the vessel, including the HSI color space components and the corresponding red and green channel values, extracted from the vessels within 1–1.5 optic disc diameters of the optic disc center. Because feature selection deteriorated the results, all features were used. The label of each segment is determined by the median of the labels of its centerline pixels. Sixty-five high-quality digital color fundus photographs of patients with primary open-angle glaucoma from the University of Iowa Hospitals and Clinics were used for training and testing. The images are 2392 × 2048 pixels and centered on the optic disc. Twenty-five images were randomly selected for training, and the other 40 test images have been made publicly available as the Iowa Normative Set for Processing Images of the REtina (INSPIRE-AVR).[25] Compared with the labeling by a human expert, vessel classification yielded an area under the ROC curve of 0.84. Compared with the results of a semi-automatic computer program called IVAN, the AVR estimates had a mean unsigned error of 0.06, with a mean AVR of 0.67.

Rothaus and Jiang[45] used a feature vector of three color features and five model features (width, contrast, noise ratio, displacement, and modeling error) to classify the major vessels near the optic disc. k-means clustering is used to distinguish arteries, veins, and undefined vessels, and an interpretation step based on anatomical descriptions assigns a meaningful label to each cluster. The undefined label is assigned to the cluster with the highest average model error, and the cluster whose vessels are darker, wider, more reddish, and show less central reflex is labeled as veins. Evaluation on the MARS dataset of 448 retinal images led to a classification error below 30% in 80% of the test images.

Zamperini et al.[46] conducted several experiments to find optimal features for retinal vessel classification. First, a set of 86 features was extracted in three categories: central colors and within-vessel variations, contrast with surrounding pixels, and position and size. Then, greedy backward feature selection yielded a reduced set of 16 features, including color features computed in the internal and external regions of the vessels around the centerline pixels and positional features (distances from the optic disc center and from the image center). The results indicate that color contrast between the vessels and the background is the most discriminant feature, although some vessels need additional features to be classified. Unlike thickness, position and color variation inside the vessel were identified as useful features. Various linear and nonlinear classifiers, such as linear and quadratic normal Bayes, Parzen classifiers, and linear and radial basis SVMs, were examined. Forty-two macula-centered images acquired at Ninewells Hospital, Dundee, were used, and experiments on a set of 656 pixels in the area around the optic disc showed a best accuracy of 93.1%, obtained with linear normal Bayes. These experiments indicate that, with sufficiently discriminative descriptors, good classification performance can be achieved without vascular connectivity information.

In 2013, Relan et al.[47] proposed a classification method based on a Gaussian mixture model (GMM) that uses four color features (mean of red, mean of green, mean of hue, and variance of red) extracted within a circular area around each centerline pixel with a diameter equal to 60% of the mean vessel diameter. The proposed unsupervised Gaussian mixture model-expectation maximization (GMM-EM) classifier classifies vessel pairs in four quadrants around the optic disc, within 0.5–1 optic disc diameter from its margin. The method achieved a classification accuracy of 92% on 406 vessels in 35 illumination-corrected images, while 13.5% of the vessels were left unlabeled. These images, with a resolution of 2048 × 3072 pixels, were randomly selected from a large dataset of non-mydriatic fundus images obtained from the Orkney Complex Disease Study (ORCADES). Repeating these experiments on 802 vessels from 70 images led to a classification accuracy of 90.45% with 13.8% unlabeled vessels.[48]
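An unsupervised two-component GMM fitted by EM can be sketched with scikit-learn's `GaussianMixture`. Two choices here are assumptions rather than details of the cited method: the component with the lower mean of the first feature (mean red) is taken as veins, following the darker-vein heuristic, and vessels whose largest posterior probability falls below a hypothetical `min_posterior` threshold are left unlabeled ("U").

```python
import numpy as np
from sklearn.mixture import GaussianMixture


def gmm_classify(features, min_posterior=0.8, seed=0):
    """Cluster per-vessel feature vectors into arteries ('A'), veins ('V'), or unlabeled ('U').

    features: (n_vessels, n_features) array; column 0 is assumed to be mean red.
    """
    X = np.asarray(features, dtype=float)
    gmm = GaussianMixture(n_components=2, covariance_type="full", random_state=seed)
    gmm.fit(X)  # parameters estimated by expectation maximization
    posterior = gmm.predict_proba(X)
    vein_component = int(np.argmin(gmm.means_[:, 0]))  # darker red -> vein (assumption)
    labels = np.where(posterior.argmax(axis=1) == vein_component, "V", "A").astype(object)
    labels[posterior.max(axis=1) < min_posterior] = "U"  # too uncertain to label
    return labels
```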

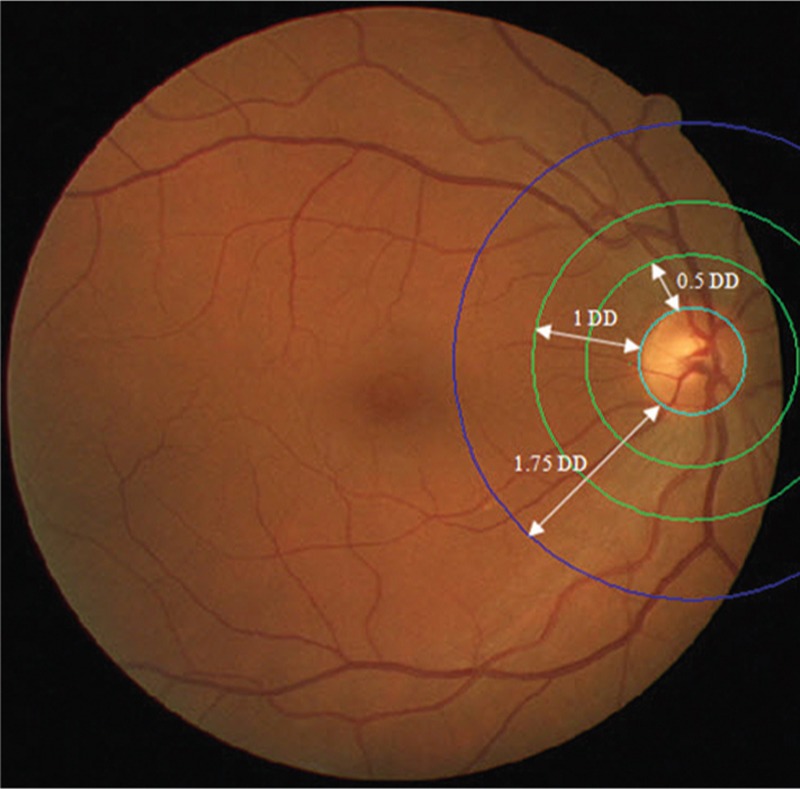

In 2014, Relan et al.[48] proposed a supervised method for classifying arteries and veins that uses the same features as their previous work. In this method, a least squares SVM (LS-SVM) classifier classifies the vessels in the same area around the optic disc as before and in an extended zone (a circular zone from the optic disc margin to 1.75 optic disc diameters). Figure 3 shows the areas used for AVR measurement. According to a study by Cheung et al.,[49] retinal vascular caliber measured in this extended zone is also indicative of blood pressure. The classification accuracy is 94.88% for 802 vessels (70 images) and 93.96% for 1207 vessels in the extended zone.[50]

Figure 3.

AVR measurement zones. DD = optic disc diameter
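Unlike a standard SVM, which solves a quadratic program, an LS-SVM classifier is trained by solving a single linear system. A NumPy sketch with an RBF kernel follows, assuming labels in {-1, +1} (e.g. -1 for arteries, +1 for veins); `gamma` (regularization) and `sigma` (kernel width) are illustrative values, not those of the cited work, and the function names are hypothetical.

```python
import numpy as np


def rbf_kernel(A, B, sigma):
    """Gaussian kernel matrix exp(-||a - b||^2 / (2 sigma^2))."""
    sq = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-sq / (2.0 * sigma ** 2))


def lssvm_fit(X, y, gamma=10.0, sigma=1.0):
    """Train an LS-SVM classifier; y must contain -1/+1 labels.

    Solves [[0, y^T], [y, Omega + I/gamma]] [b, alpha] = [0, 1],
    with Omega_kl = y_k y_l K(x_k, x_l).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = y
    A[1:, 0] = y
    A[1:, 1:] = np.outer(y, y) * rbf_kernel(X, X, sigma) + np.eye(n) / gamma
    rhs = np.concatenate(([0.0], np.ones(n)))
    solution = np.linalg.solve(A, rhs)
    return {"X": X, "y": y, "b": solution[0], "alpha": solution[1:], "sigma": sigma}


def lssvm_predict(model, X_new):
    """sign(sum_k alpha_k y_k K(x, x_k) + b) for each row of X_new."""
    K = rbf_kernel(np.asarray(X_new, dtype=float), model["X"], model["sigma"])
    return np.sign(K @ (model["alpha"] * model["y"]) + model["b"])
```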

In another approach, small vessel segments, rather than centerline pixels, are classified using features extracted from the RGB, HSI, and LAB color spaces. The mean and variance of the intensity values, and the difference between the average intensities over the centerline pixels and over the vessel walls, are computed both for centerline pixels and for the entire small segments. Using SFFS, a set of eight features, most of them related to the red and green channels, was chosen as the most discriminant. Three classification methods (SVM, LDA, and fuzzy clustering) were evaluated on the selected features; the best result was achieved by LDA and was further improved by using structural features of the vessel network in a post-processing step. Since not all images are optic disc-centered, major vessels in a circular region around the optic disc, at a certain distance from it, are considered for classification. Evaluation on the DRIVE dataset images and on 13 retinal images (2592 × 3872) from Khatam-Al-Anbia Eye Hospital of Mashhad shows classification accuracies of 90.16% and 88.18%, respectively. These results were obtained for vessels thicker than three pixels within 0.5–1 optic disc diameter of the optic disc margin.
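SFFS, which is used in this and several other works reviewed here, can be sketched in a few dozen lines. The version below is a generic illustration, not the implementation of any cited study: it takes a user-supplied `score(subset)` function (higher is better, e.g. cross-validated accuracy), and after each forward addition it keeps removing features as long as that beats the best subset previously found at the smaller size. It returns the best subset found for each size up to `k`; all names are hypothetical.

```python
def sffs(n_features, score, k):
    """Sequential forward floating selection.

    score: callable taking a sorted tuple of feature indices and returning a
        float (higher is better).
    Returns {size: best sorted feature list found for that size}, sizes 1..k.
    """
    if k > n_features:
        raise ValueError("k cannot exceed the number of features")

    def s(subset):
        return score(tuple(sorted(subset)))

    best_score, best_subset = {}, {}

    def record(subset):
        """Store subset if it beats the best known subset of the same size."""
        val = s(subset)
        if val > best_score.get(len(subset), float("-inf")):
            best_score[len(subset)] = val
            best_subset[len(subset)] = sorted(subset)
            return True
        return False

    selected = []
    while len(selected) < k:
        # Forward step: add the single feature that maximizes the score.
        candidates = [f for f in range(n_features) if f not in selected]
        selected = selected + [max(candidates, key=lambda f: s(selected + [f]))]
        record(selected)
        # Floating backward steps (conditional exclusion).
        while len(selected) > 2:
            drop = max(selected, key=lambda f: s([x for x in selected if x != f]))
            reduced = [x for x in selected if x != drop]
            if not record(reduced):
                break
            selected = reduced
    return best_subset
```

In the toy score table used to check this sketch, plain forward selection would stop at features {0, 1} for a two-feature subset, while the floating step recovers the better pair {1, 2}.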

In the method proposed by Joshi et al.,[51] the vascular graph is separated into subgraphs using Dijkstra's shortest-path algorithm. Each subgraph is then labeled as artery or vein using a fuzzy C-means clustering algorithm. A four-element feature vector, consisting of the mean and variance of the green channel and of the hue channel in a 3 × 3 neighborhood of each centerline pixel, is extracted. Applied to 50 fundus images of 50 subjects randomly selected from the EYECHECK dataset,[52] this approach correctly classified 91.44% of the vessel pixels.

Dashtbozorg et al.[53] proposed an automatic method that classifies the entire vascular tree by analyzing the graph extracted from the retinal vessel structure: it determines the type of each intersection point (graph node) and assigns a label to each vessel segment (graph link). The labeling obtained from the graph analysis is combined with intensity features to assign the final label to each segment. For this purpose, 30 color-based features are extracted from the centerline pixels, and SFFS selects the 19 most discriminative of them. Of the KNN, LDA, and QDA classifiers examined after feature selection, LDA gave the best result. Evaluated on three datasets, INSPIRE-AVR, DRIVE, and VICAVR,[54] the algorithm achieved classification accuracies of 88.3%, 87.4%, and 89.8%, respectively. Classification is not limited to a specific zone: the method can classify the entire retinal vessel tree, which is its most important advantage over the previously mentioned methods.
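The idea of combining graph analysis with intensity features can be illustrated with a simple vote. The sketch below assumes that graph analysis has already grouped centerline pixels into subgraphs whose links share one (still unknown) vessel type, and that a pixel classifier such as LDA has produced an artery probability for each pixel; averaging those probabilities per subgraph is an illustrative decision rule, not necessarily the exact rule of Dashtbozorg et al. The function name `label_subgraphs` is hypothetical.

```python
from collections import defaultdict


def label_subgraphs(subgraph_ids, artery_probs, threshold=0.5):
    """Give every subgraph one A/V label from the mean artery probability of its pixels.

    subgraph_ids[i]: subgraph (from graph analysis) that centerline pixel i belongs to.
    artery_probs[i]: pixel-level probability of 'artery' from an intensity classifier.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for sg, p in zip(subgraph_ids, artery_probs):
        sums[sg] += p
        counts[sg] += 1
    # One label per subgraph: every pixel in it inherits this label.
    return {sg: ("artery" if sums[sg] / counts[sg] >= threshold else "vein") for sg in sums}
```

For subgraph IDs `[0, 0, 0, 1, 1]` and probabilities `[0.9, 0.4, 0.8, 0.2, 0.3]`, the second pixel (0.4) is overruled: subgraph 0 averages 0.7 and is labeled artery, while subgraph 1 averages 0.25 and is labeled vein.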

Fraz et al.[7] proposed an ensemble classifier of bootstrapped decision trees for classifying vessels in fundus images into arteries and veins. Features are extracted from the RGB and HSI color spaces in three ways: pixel-based, profile-based, and segment-based. Segment-based features are computed in two ways: first, the mean and variance of the intensities are calculated over the entire segment; second, relatively long segments are divided into smaller pieces of approximately 50 pixels, and the mean and variance of the intensities are calculated for each piece. In total, 51 color features were extracted, of which 16 were selected using the out-of-bag feature importance index. The method classifies vessels across the entire image, and its evaluation on 3149 vessel segments from 40 macula-centered EPIC Norfolk fundus images[55] yielded a classification rate of 83%.
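The two segment-based feature variants can be sketched for a single color channel. In this minimal sketch, `intensities` is assumed to hold the channel values sampled along one segment’s centerline, the pieces are made as equal in length as possible around the approximate 50-pixel target, and the function name `segment_features` is hypothetical; the out-of-bag feature selection and the bagged decision trees are not shown.

```python
from statistics import fmean, pvariance


def segment_features(intensities, piece_len=50):
    """Mean/variance over a whole vessel segment and over ~piece_len-pixel pieces of it.

    intensities: one color channel sampled along the segment's centerline.
    """
    if not intensities:
        raise ValueError("segment has no pixels")
    n = len(intensities)
    n_pieces = max(1, round(n / piece_len))
    # Evenly spaced boundaries so every piece is close to piece_len pixels long.
    bounds = [round(k * n / n_pieces) for k in range(n_pieces + 1)]
    pieces = [intensities[a:b] for a, b in zip(bounds, bounds[1:])]
    return {
        "segment": (fmean(intensities), pvariance(intensities)),
        "pieces": [(fmean(p), pvariance(p)) for p in pieces],
    }
```

Repeating this per channel (R, G, B, H, S, I) gives the segment-level part of the feature vector; the piece-level values let a long vessel whose appearance drifts along its length contribute several local descriptions instead of one averaged one.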

Hatami and Goldbaum[56] used local binary patterns (LBP) for feature extraction; LBP is robust to low contrast and low image quality and provides additional information about vessel texture and shape. Both single classifiers (Bayesian network, Naive Bayes, LibSVM, MLP, CART, and random tree) and ensemble or multiple classifiers (AdaBoost, bagging, random committee, random subspace, rotation forest, and majority voting) were examined for the retinal vessel classification problem. Twenty images from the STARE dataset[57] were used for evaluation. The reported experiments, which combine nine feature extraction methods (such as original RGB, PCA, ICA, wavelet, and LBP) with 13 classification approaches, indicate that LBP features improve the performance of all classifiers. Moreover, ensemble classifiers outperform single classifiers when LBP features are used. The best result was obtained by combining the random committee classifier with multiscale rotation-invariant LBP (MS-RI LBP) features: a classification rate of 90.7% and an AUC of 0.97.
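A single-scale rotation-invariant LBP descriptor can be computed as follows. This sketch is simplified relative to the MS-RI LBP used by Hatami and Goldbaum: it uses only the eight direct neighbours at radius 1, with no circular interpolation, and the helper names are hypothetical. Each code is mapped to the smallest value among its circular bit rotations, so rotating the local pattern leaves the descriptor unchanged; a multiscale version would concatenate such histograms computed at several radii.

```python
from collections import Counter

# The 8 neighbours at radius 1, listed in circular order (needed for rotation invariance).
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


def lbp_code(img, r, c):
    """Basic 8-bit LBP: bit k is set when neighbour k is >= the centre pixel."""
    centre = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        if img[r + dr][c + dc] >= centre:
            code |= 1 << bit
    return code


def rotation_invariant(code, bits=8):
    """Map a code to the minimum over all of its circular bit rotations."""
    mask = (1 << bits) - 1
    return min(((code >> k) | (code << (bits - k))) & mask for k in range(bits))


def ri_lbp_histogram(img):
    """Histogram of rotation-invariant LBP codes over the interior pixels of a 2-D patch."""
    rows, cols = len(img), len(img[0])
    return Counter(
        rotation_invariant(lbp_code(img, r, c))
        for r in range(1, rows - 1)
        for c in range(1, cols - 1)
    )
```

The histogram of a patch around a vessel segment (rather than individual codes) is what would be fed to a classifier, which is why the descriptor captures texture and is fairly insensitive to global brightness changes.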

Discussion

The challenges of retinal vessel classification make highly accurate results difficult to achieve. Existing methods usually rely on four characteristics to distinguish arteries from veins: differences in color, differences in thickness, the central reflex, and branching information of arteries and veins around the optic disc.

Many classification approaches use features that describe color and color variations in the vessels. A major issue is that the absolute color of blood in the vessels varies between images, even for the same subject. Sources of this variability include hemoglobin oxygen saturation, aging and cataract development, differences in flash intensity and flash spectrum, nonlinear optical distortions of the camera, flash artifacts, and focus.[44] Image resolution is another important factor: noise in high-resolution images degrades color information. Because many classification approaches depend on color information, normalizing image resolution is important in studies that use fundus cameras with different resolutions. Vessel thickness is not a reliable feature for classification because it varies along the vessel and is strongly affected by vessel segmentation. Arteries, which carry oxygen-rich blood, tend to have an inner part brighter than their walls, so the central reflex is usually more evident in arteries. Some studies found this characteristic to be a key feature for discriminating between vessels, but the central reflex is recognizable only in thicker vessels.
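The central-reflex characteristic can be quantified from a cross-sectional intensity profile. The sketch below is an illustrative measure, not one taken from the reviewed papers: it assumes the profile is sampled perpendicular to the vessel with the centerline at the middle sample, and the function name `central_reflex_contrast` is hypothetical. It also shows why the reflex is usable only for thicker vessels: a thin vessel spans too few samples for a central bump to appear between the two dark flanks.

```python
def central_reflex_contrast(profile):
    """Central-reflex contrast of a cross-sectional intensity profile of one vessel.

    Assumes the profile is sampled perpendicular to the vessel with the centerline
    at the middle sample. Vessels are darker than the background, so a central
    reflex appears as a bright bump at the centre between two darker flanks.
    Returns the centre intensity minus the mean of the darkest value on each side;
    a positive value suggests a visible reflex.
    """
    if len(profile) < 3:
        raise ValueError("profile needs at least three samples")
    mid = len(profile) // 2
    left_min = min(profile[:mid])
    right_min = min(profile[mid + 1:])
    return profile[mid] - (left_min + right_min) / 2.0
```

For the profile `[100, 60, 50, 70, 50, 60, 100]` the contrast is 20 (a visible reflex), while for `[100, 70, 50, 45, 50, 70, 100]` it is -5 (no reflex).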

All these factors make automatic artery/vein classification a difficult computational task and limit the achievable accuracy. Some previously proposed approaches have used clustering instead of classification to overcome these challenges. Many other methods simplify the problem by classifying only the major vessels around the optic disc. This restricts the analysis to the major vessels and avoids the confusing information resulting from smaller arteries and veins. Moreover, classifying the major vessels is sufficient for applications such as AVR calculation.
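Once the major vessels in the measurement zone are labeled, AVR is typically summarised from their widths. The sketch below uses the revised Knudtson formulas, which are not described in this review and are stated here as an assumption: the widest (up to six) arterioles and venules are combined iteratively, pairing the largest with the smallest as W = k * sqrt(w1^2 + w2^2), with k = 0.88 for arterioles and k = 0.95 for venules, and AVR is the ratio of the arteriolar equivalent (CRAE) to the venular equivalent (CRVE). Function names are hypothetical, and vessel widths are assumed to be measured already.

```python
import math


def knudtson_equivalent(widths, k, n_largest=6):
    """Summarise vessel widths into one equivalent calibre (revised Knudtson pairing).

    k is 0.88 for arterioles (CRAE) and 0.95 for venules (CRVE).
    """
    if not widths:
        raise ValueError("need at least one vessel width")
    w = sorted(widths, reverse=True)[:n_largest]  # keep only the widest vessels
    while len(w) > 1:
        w.sort()
        combined = []
        while len(w) > 1:
            smallest, largest = w.pop(0), w.pop()
            combined.append(k * math.hypot(smallest, largest))  # k * sqrt(w1^2 + w2^2)
        combined.extend(w)  # odd count: the middle width is carried to the next round
        w = combined
    return w[0]


def avr(artery_widths, vein_widths):
    """Arteriolar-to-venular ratio AVR = CRAE / CRVE."""
    return knudtson_equivalent(artery_widths, 0.88) / knudtson_equivalent(vein_widths, 0.95)
```

This also shows why classification errors matter for AVR: a vein mislabeled as an artery enters the CRAE summary instead of CRVE and shifts the ratio directly.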

Various parameters can be considered for evaluating the different methods. In addition to AVR, which is one of the important parameters for assessment, the classification error is of great importance. A lower error rate increases the reliability of a method and can serve as a criterion for whether manual marking can feasibly be replaced by automatic methods. Because the proposed methods are evaluated on different datasets (which differ in imaging conditions, intensities, resolution, etc.) and with different performance criteria (classification accuracy, correlation coefficient, AUC, AVR, etc.), a quantitative comparison between them is not possible.
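Two of the criteria listed above, classification accuracy and AUC, can be computed as follows. This is a minimal sketch with hypothetical function names; it treats arteries as the positive class (an arbitrary choice) and computes AUC through its rank interpretation rather than by tracing the ROC curve. Even with identical formulas, values are comparable only when the unit of evaluation (pixels or segments) and the region of interest match, which is one reason the pixel-level and segment-level accuracies reported above cannot be ranked directly.

```python
def accuracy(y_true, y_pred):
    """Fraction of vessel samples whose predicted label matches the reference label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two equally long, non-empty label lists")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def auc(y_true, scores):
    """Area under the ROC curve via its rank interpretation.

    y_true: 1 for artery (positive class), 0 for vein.
    scores: classifier output, higher meaning 'more artery-like'.
    AUC = P(score of a random positive > score of a random negative), ties counting 1/2.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))
```

The pairwise loop is quadratic in the number of samples, which is fine for a sketch; a sorting-based version computes the same value in O(n log n).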

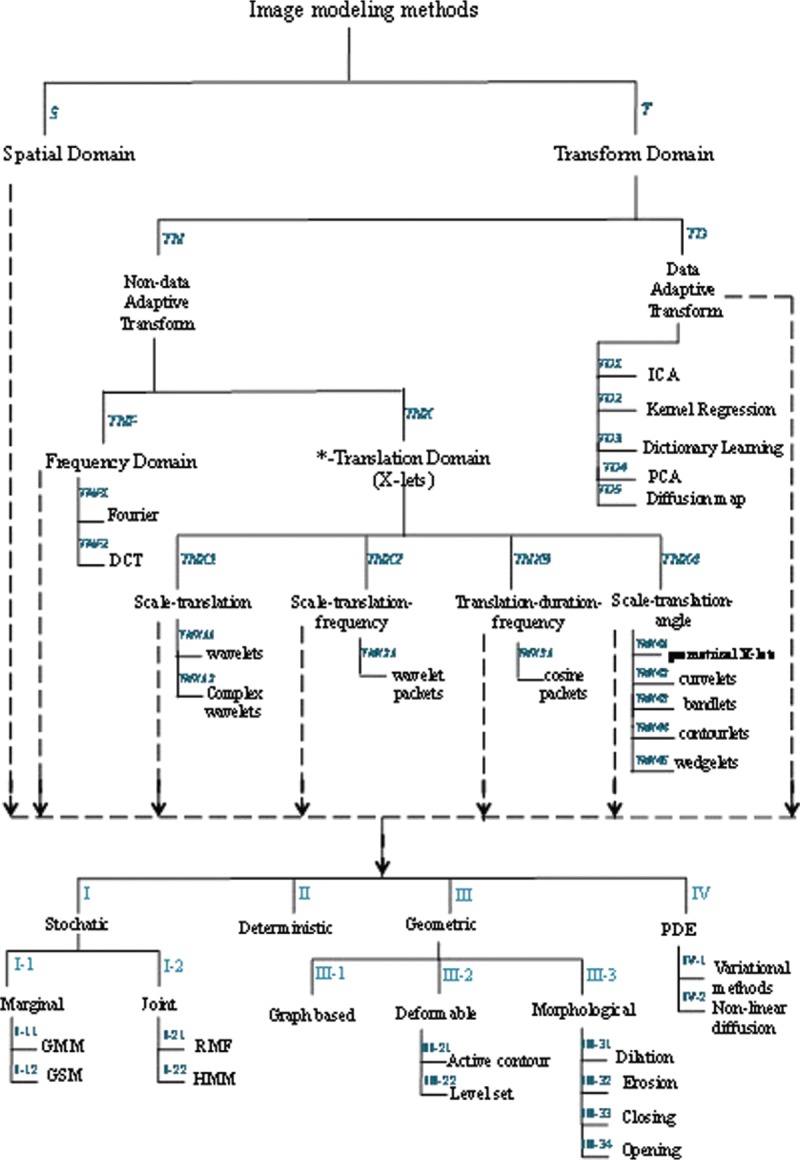

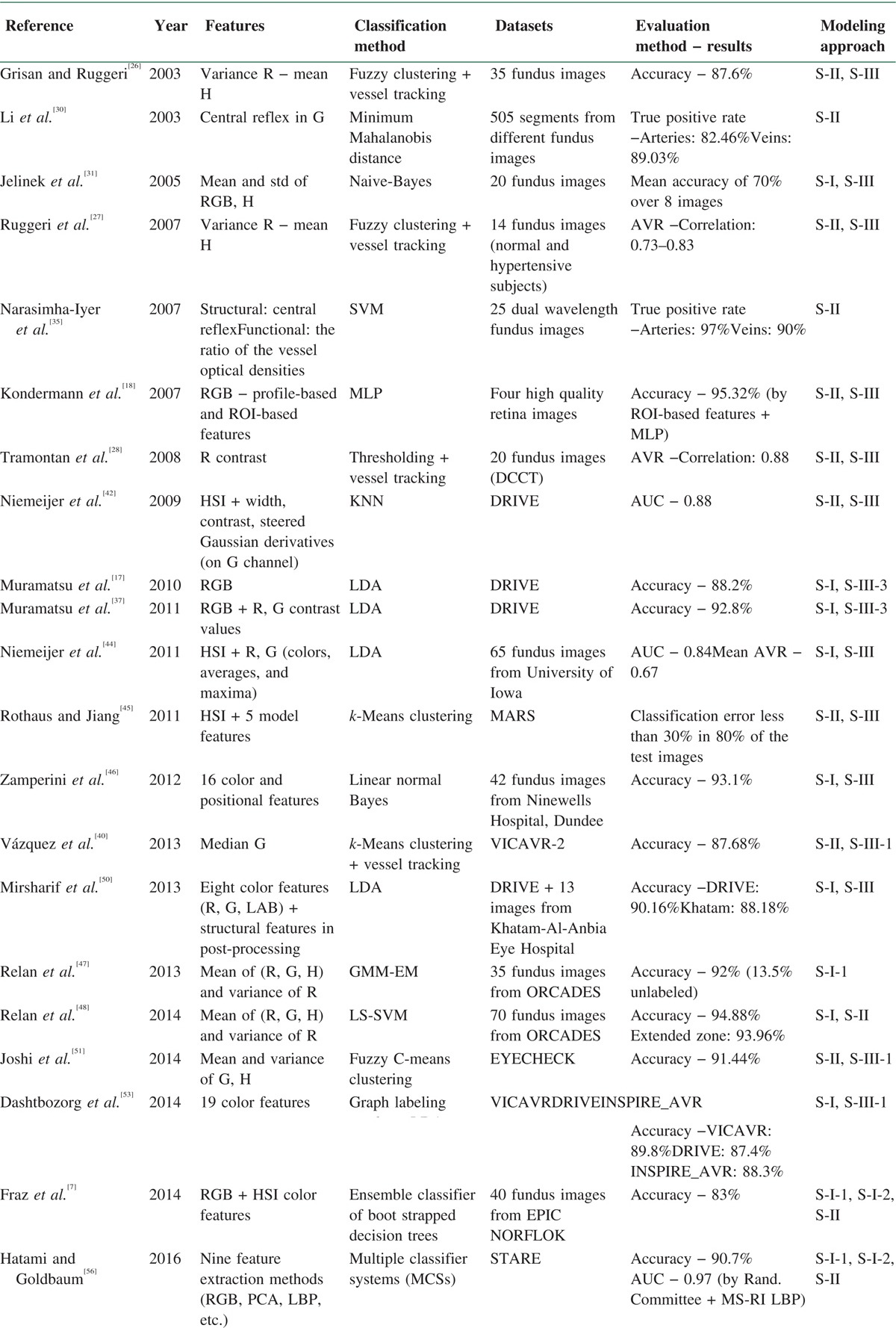

Given these drawbacks, we evaluate the proposed methods from another perspective, namely image modeling. Amini and Rabbani[58] provided a comprehensive categorization of the models used in medical image processing. As shown in Figure 4, modeling methods fall into two main categories: spatial domain and transform domain models. Transform domain models are further divided into data-adaptive and non-data-adaptive transforms. In addition, all models are grouped into deterministic, stochastic, geometric, and partial differential equation models. We assigned each automatic retinal vessel classification approach to its corresponding modeling category. Table 2 summarizes the automatic classification methods in terms of features, classifiers, datasets, evaluation methods, and results and, more importantly, their modeling approach based on Figure 4 (e.g., S-I-1 in the 16th row indicates that the method uses a GMM-EM classifier, which is a statistical model). For each approach, the best reported results are given.
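To illustrate what a statistical (stochastic) model means in this categorization, the sketch below fits a two-component Gaussian mixture with expectation-maximization (GMM-EM) to a single feature and classifies samples by posterior probability. It is a minimal one-dimensional sketch, not the implementation of any method in Table 2; the initialization (splitting the sorted samples at the median) and the function names are assumptions, and mapping the brighter component to arteries would be a further assumption.

```python
import math


def _gauss(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def gmm_em_1d(x, max_iter=200, tol=1e-9, min_var=1e-6):
    """Fit a two-component 1-D Gaussian mixture by EM; returns (weights, means, variances)."""
    n = len(x)
    if n < 2:
        raise ValueError("need at least two samples")
    xs = sorted(x)
    # Assumed initialization: means of the lower/upper halves, pooled variance.
    lo, hi = xs[: n // 2], xs[n // 2:]
    means = [sum(lo) / len(lo), sum(hi) / len(hi)]
    grand = sum(x) / n
    var0 = max(sum((v - grand) ** 2 for v in x) / n, min_var)
    variances, weights = [var0, var0], [0.5, 0.5]
    prev_ll = -math.inf
    for _ in range(max_iter):
        # E-step: responsibility of each component for each sample.
        resp, ll = [], 0.0
        for v in x:
            p = [w * _gauss(v, mu, s2) for w, mu, s2 in zip(weights, means, variances)]
            total = max(sum(p), 1e-300)  # guard against underflow
            ll += math.log(total)
            resp.append([pk / total for pk in p])
        # M-step: re-estimate weights, means and variances.
        for k in range(2):
            nk = max(sum(r[k] for r in resp), 1e-12)
            weights[k] = nk / n
            means[k] = sum(r[k] * v for r, v in zip(resp, x)) / nk
            variances[k] = max(sum(r[k] * (v - means[k]) ** 2 for r, v in zip(resp, x)) / nk, min_var)
        if ll - prev_ll < tol:  # log-likelihood has stopped improving
            break
        prev_ll = ll
    return weights, means, variances


def gmm_classify(v, weights, means, variances):
    """Index of the component with the highest posterior probability for sample v."""
    return max(range(2), key=lambda k: weights[k] * _gauss(v, means[k], variances[k]))
```

Unlike a deterministic rule, the mixture assigns each vessel sample a probability of belonging to each class, which is what places such classifiers in the stochastic group of Figure 4.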

Figure 4.

Classification of the medical image processing models[58]

Table 2.

Summary of the automatic retinal vessel classification methods (in each method the best obtained results are mentioned)

Conclusion and Future Works

In this paper, a comprehensive review of the proposed methods for artery and vein classification in fundus images is provided. Broadly, these methods are divided into semi-automatic and automatic groups. In semi-automatic methods, an expert takes part in the classification procedure by labeling arteries and veins at initial points, and these labels are then propagated automatically across the vascular network of the fundus image. Vessel tracking algorithms that exploit structural characteristics and connectivity information are used for this purpose. In contrast, automatic methods perform the entire classification procedure automatically, without any intervention by a human operator. Because automatic methods are more clinically favorable, most of the proposed methods have concentrated on them.
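The propagation step of semi-automatic methods can be sketched as a breadth-first search over a graph of vessel segments. This is a simplified illustration with hypothetical names: it assumes the vascular graph has already been split at artery/vein crossings, so that connected segments always share a type, whereas real tracking algorithms must resolve crossings and bifurcations using structural cues.

```python
from collections import deque


def propagate_labels(adjacency, seeds):
    """Spread expert seed labels (e.g. 'A'/'V') to connected vessel segments by BFS.

    adjacency: dict mapping segment id -> iterable of connected segment ids.
    seeds: dict mapping segment id -> label chosen by the expert.
    Segments not reachable from any seed stay unlabeled (absent from the result).
    """
    labels = dict(seeds)
    queue = deque(seeds)
    while queue:
        seg = queue.popleft()
        for nb in adjacency.get(seg, ()):
            if nb not in labels:
                labels[nb] = labels[seg]  # a connected segment inherits the label
                queue.append(nb)
    return labels
```

Segments that no seed can reach remain unlabeled, which mirrors why semi-automatic tools ask the expert for one initial label per vessel tree.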

Because the automatic methods for separating arteries and veins have been evaluated on various datasets with different measurement criteria, a fair and unbiased comparison of their performance is not possible. Therefore, we evaluated these methods from a modeling perspective, using the categorization provided by Amini and Rabbani.[58] As Table 2 shows, most of the methods have used geometric models in the feature extraction step and spatial domain stochastic/deterministic strategies in the classification step.

Although most methods so far have been developed in the spatial domain, the advantages of transform domain models make them an appropriate choice for separating arteries and veins. As a future research direction, data-adaptive transform models of fundus images could be explored. Because these modeling strategies incorporate data-specific information into the classification procedure, they are expected to provide more discriminative information about arteries and veins and to yield more precise vessel classification. In further research, we plan to use dictionary-learning techniques, a subclass of data-adaptive models, for classifying arteries and veins in fundus images.

With the continued development of automatic methods, it is hoped that retinal vessel classification can serve ophthalmologists as a fast and capable computer assistant, both for quick and accurate analysis of retinal images and for the quantitative measurements they require, leading to more effective treatment of retina-related diseases.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

References

- 1. Nguyen TT, Wang JJ, Wong TY. Retinal vascular changes in pre-diabetes and prehypertension: New findings and their research and clinical implications. Diabetes Care. 2007;30:2708–15. doi: 10.2337/dc07-0732.

- 2. Viswanath K, McGavin DD. Diabetic retinopathy: Clinical findings and management. Community Eye Health. 2003;16:21–4.

- 3. Moss SE, Klein R, Klein BE. The 14-year incidence of visual loss in a diabetic population. Ophthalmology. 1998;105:998–1003. doi: 10.1016/S0161-6420(98)96025-0.

- 4. Aiello LM. Perspectives on diabetic retinopathy. Am J Ophthalmol. 2003;136:122–35. doi: 10.1016/s0002-9394(03)00219-8.

- 5. Yau JW, Rogers SL, Kawasaki R, Lamoureux EL, Kowalski JW, Bek T, et al. Global prevalence and major risk factors of diabetic retinopathy. Diabetes Care. 2012;35:556–64. doi: 10.2337/dc11-1909.

- 6. Mohamed Q, Gillies MC, Wong TY. Management of diabetic retinopathy: A systematic review. JAMA. 2007;298:902–16. doi: 10.1001/jama.298.8.902.

- 7. Fraz MM, Rudnicka AR, Owen CG, Strachan DP, Barman SA. Automated arteriole and venule recognition in retinal images using ensemble classification. 2014 International Conference on Computer Vision Theory and Applications (VISAPP), vol 3, January 05, 2014. IEEE. p. 194–202.

- 8. Sinthanayothin C, Boyce JF, Williamson TH, Cook HL, Mensah E, Lal S, et al. Automated detection of diabetic retinopathy on digital fundus images. Diabet Med. 2002;19:105–12. doi: 10.1046/j.1464-5491.2002.00613.x.

- 9. Niemeijer M, van Ginneken B, Staal J, Suttorp-Schulten MS, Abràmoff MD. Automatic detection of red lesions in digital color fundus photographs. IEEE Trans Med Imaging. 2005;24:584–92. doi: 10.1109/TMI.2005.843738.

- 10. Walter T, Klein JC, Massin P, Erginay A. A contribution of image processing to the diagnosis of diabetic retinopathy − Detection of exudates in color fundus images of the human retina. IEEE Trans Med Imaging. 2002;21:1236–43. doi: 10.1109/TMI.2002.806290.

- 11. Faust O, Acharya UR, Ng EY, Ng KH, Suri JS. Algorithms for the automated detection of diabetic retinopathy using digital fundus images: A review. J Med Syst. 2012;36:145–57. doi: 10.1007/s10916-010-9454-7.

- 12. Fraz MM, Remagnino P, Hoppe A, Uyyanonvara B, Rudnicka AR, Owen CG, et al. Blood vessel segmentation methodologies in retinal images − A survey. Comput Methods Programs Biomed. 2012;108:407–33. doi: 10.1016/j.cmpb.2012.03.009.

- 13. Mendonça AM, Campilho A. Segmentation of retinal blood vessels by combining the detection of centerlines and morphological reconstruction. IEEE Trans Med Imaging. 2006;25:1200–13. doi: 10.1109/tmi.2006.879955.

- 14. Ikram MK, de Jong FJ, Vingerling JR, Witteman JC, Hofman A, Breteler MM, et al. Are retinal arteriolar or venular diameters associated with markers for cardiovascular disorders? The Rotterdam Study. Invest Ophthalmol Vis Sci. 2004;45:2129–34. doi: 10.1167/iovs.03-1390.

- 15. Sun C, Wang JJ, Mackey DA, Wong TY. Retinal vascular caliber: Systemic, environmental, and genetic associations. Surv Ophthalmol. 2009;54:74–95. doi: 10.1016/j.survophthal.2008.10.003.

- 16. Hatanaka Y, Nakagawa T, Hayashi Y, Aoyama A, Zhou X, Hara T, et al. Automated detection algorithm for arteriolar narrowing on fundus images. Proc. 27th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBS), paper, vol 291, August 2005. doi: 10.1109/IEMBS.2005.1616400.

- 17. Muramatsu C, Hatanaka Y, Iwase T, Hara T, Fujita H. Automated detection and classification of major retinal vessels for determination of diameter ratio of arteries and veins. SPIE Medical Imaging, March 4, 2010. International Society for Optics and Photonics. p. 76240J.

- 18. Kondermann C, Kondermann D, Yan M. Blood vessel classification into arteries and veins in retinal images. Medical Imaging, March 8, 2007. International Society for Optics and Photonics. p. 651247.

- 19. Martinez-Perez ME, Hughes AD, Stanton AV, Thom SA, Chapman N, Bharath AA, et al. Retinal vascular tree morphology: A semi-automatic quantification. IEEE Trans Biomed Eng. 2002;49:912–7. doi: 10.1109/TBME.2002.800789.

- 20. Rothaus K, Rhiem P, Jiang X. Separation of the retinal vascular graph in arteries and veins. International Workshop on Graph-Based Representations in Pattern Recognition, June 11, 2007. Springer Berlin Heidelberg. p. 251–62.

- 21. Rothaus K, Jiang X, Rhiem P. Separation of the retinal vascular graph in arteries and veins based upon structural knowledge. Image Vis Comput. 2009;27:864–75.

- 22. Estrada R, Allingham MJ, Mettu PS, Cousins SW, Tomasi C, Farsiu S. Retinal artery-vein classification via topology estimation. IEEE Trans Med Imaging. 2015;34:2518–34. doi: 10.1109/TMI.2015.2443117.

- 23. Estrada R, Tomasi C, Schmidler SC, Farsiu S. Tree topology estimation. IEEE Trans Pattern Anal Mach Intell. 2015;37:1688–701. doi: 10.1109/TPAMI.2014.2382116.

- 24. Qureshi TA, Habib M, Hunter A, Al-Diri B. A manually-labeled, artery/vein classified benchmark for the DRIVE dataset. Proceedings of the 26th IEEE International Symposium on Computer-Based Medical Systems, June 20, 2013. IEEE. p. 485–8.

- 25. INSPIRE-AVR: Iowa normative 2015 set for processing images of the retina-artery vein ratio. [Last accessed 2012]. Available from: http://webeye.ophth.uiowa.edu/component/k2/item/270 .

- 26. Grisan E, Ruggeri A. A divide et impera strategy for automatic classification of retinal vessels into arteries and veins. Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, vol 1, September 17, 2003. IEEE. p. 890–3.

- 27. Ruggeri A, Grisan E, De Luca M. An automatic system for the estimation of generalized arteriolar narrowing in retinal images. 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, August 22, 2007. IEEE. p. 6463–6. doi: 10.1109/IEMBS.2007.4353839.

- 28. Tramontan L, Grisan E, Ruggeri A. An improved system for the automatic estimation of the Arteriolar-to-Venular diameter Ratio (AVR) in retinal images. 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, August 20, 2008. IEEE. p. 3550–3. doi: 10.1109/IEMBS.2008.4649972.

- 29. Color photography vs fluorescein angiography in the detection of diabetic retinopathy in the diabetes control and complications trial. The Diabetes Control and Complications Trial Research Group. Arch Ophthalmol. 1987;105:1344–51. doi: 10.1001/archopht.1987.01060100046022.

- 30. Li H, Hsu W, Lee ML, Wang H. A piecewise Gaussian model for profiling and differentiating retinal vessels. Proceedings of the 2003 International Conference on Image Processing (ICIP), vol 1, September 14, 2003. IEEE. p. 1–1069.

- 31. Jelinek HF, Depardieu C, Lucas C, Cornforth DJ, Huang W, Cree MJ. Towards vessel characterization in the vicinity of the optic disc in digital retinal images. Image Vis Comput Conf, November 28. 2005:2–7.

- 32. Witten IH, Frank E. Data Mining: Practical Machine Learning Tools and Techniques. San Francisco: Morgan Kaufmann Publishers; 2005. p. 560. ISBN 0-12-088407-0.

- 33. Efron B. Estimating the error rate of a prediction rule: Improvement on cross-validation. J Am Stat Assoc. 1983;78:316–31.

- 34. Hall MA. Correlation-based feature selection for machine learning. Doctoral dissertation. The University of Waikato.

- 35. Narasimha-Iyer H, Beach JM, Khoobehi B, Roysam B. Automatic identification of retinal arteries and veins from dual-wavelength images using structural and functional features. IEEE Trans Biomed Eng. 2007;54:1427–35. doi: 10.1109/TBME.2007.900804.

- 36. Niemeijer M, Staal J, Ginneken B, Loog M, Abramoff MD. DRIVE: Digital retinal images for vessel extraction. 2017. Available from: http://www.isi.uu.nl/Research/Databases/DRIVE .

- 37. Muramatsu C, Hatanaka Y, Iwase T, Hara T, Fujita H. Automated selection of major arteries and veins for measurement of arteriolar-to-venular diameter ratio on retinal fundus images. Comput Med Imaging Graph. 2011;35:472–80. doi: 10.1016/j.compmedimag.2011.03.002.

- 38. Vázquez SG, Cancela B, Barreira N, Penedo MG, Saez M. On the automatic computation of the arterio-venous ratio in retinal images: Using minimal paths for the artery/vein classification. 2010 International Conference on Digital Image Computing: Techniques and Applications (DICTA), December 1, 2010. IEEE. p. 599–604.

- 39. Saez M, González-Vázquez S, González-Penedo M, Barceló MA, Pena-Seijo M, Coll de Tuero G, et al. Development of an automated system to classify retinal vessels into arteries and veins. Comput Methods Programs Biomed. 2012;108:367–76. doi: 10.1016/j.cmpb.2012.02.008.

- 40. Vázquez SG, Cancela B, Barreira N, Penedo MG, Rodríguez-Blanco M, Seijo MP, et al. Improving retinal artery and vein classification by means of a minimal path approach. Mach Vis Appl. 2013;24:919–30.

- 41. VICAVR-2: VARPA images for the computation of the arterio/venular ratio, database. 2011. [Last accessed 2011]. Available from: http://www.varpa.es/vicavr2.html .

- 42. Niemeijer M, van Ginneken B, Abràmoff MD. Automatic classification of retinal vessels into arteries and veins. SPIE Medical Imaging, February 26, 2009. International Society for Optics and Photonics. p. 72601F.

- 43. Niemeijer M, van Ginneken B, Abràmoff MD. Automatic determination of the artery vein ratio in retinal images. SPIE Medical Imaging, March 4, 2010. International Society for Optics and Photonics. p. 76240I.

- 44. Niemeijer M, Xu X, Dumitrescu AV, Gupta P, van Ginneken B, Folk JC, et al. Automated measurement of the arteriolar-to-venular width ratio in digital color fundus photographs. IEEE Trans Med Imaging. 2011;30:1941–50. doi: 10.1109/TMI.2011.2159619.

- 45. Rothaus K, Jiang X. Classification of arteries and veins in retinal images using vessel profile features. 2011 International Symposium on Computational Models for Life Sciences (CMLS-11), vol 1371, no 1, 2011. AIP Publishing. p. 9–18.

- 46. Zamperini A, Giachetti A, Trucco E, Chin KS. Effective features for artery-vein classification in digital fundus images. 2012 25th International Symposium on Computer-Based Medical Systems (CBMS), June 20, 2012. IEEE. p. 1–6.

- 47. Relan D, MacGillivray T, Ballerini L, Trucco E. Retinal vessel classification: Sorting arteries and veins. 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), July 3, 2013. IEEE. p. 7396–9. doi: 10.1109/EMBC.2013.6611267.

- 48. Relan D, MacGillivray T, Ballerini L, Trucco E. Automatic retinal vessel classification using a least square-support vector machine in VAMPIRE. 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, August 26, 2014. IEEE. p. 142–5. doi: 10.1109/EMBC.2014.6943549.

- 49. Cheung CY, Hsu W, Lee ML, Wang JJ, Mitchell P, Lau QP, et al. A new method to measure peripheral retinal vascular caliber over an extended area. Microcirculation. 2010;17:495–503. doi: 10.1111/j.1549-8719.2010.00048.x.

- 50. Mirsharif Q, Tajeripour F, Pourreza H. Automated characterization of blood vessels as arteries and veins in retinal images. Comput Med Imaging Graph. 2013;37:607–17. doi: 10.1016/j.compmedimag.2013.06.003.

- 51. Joshi VS, Reinhardt JM, Garvin MK, Abramoff MD. Automated method for identification and artery-venous classification of vessel trees in retinal vessel networks. PLoS One. 2014;9:e88061. doi: 10.1371/journal.pone.0088061.

- 52. Abramoff MD, Suttorp-Schulten MS. Web-based screening for diabetic retinopathy in a primary care population: The EyeCheck project. Telemed J e-Health. 2005;11:668–74. doi: 10.1089/tmj.2005.11.668.

- 53. Dashtbozorg B, Mendonça AM, Campilho A. An automatic graph-based approach for artery/vein classification in retinal images. IEEE Trans Image Process. 2014;23:1073–83. doi: 10.1109/TIP.2013.2263809.

- 54. VICAVR: VARPA images for the computation of the arterio/venular ratio, database. 2009. [Last accessed 2009]. Available from: http://www.varpa.es/vicavr.html .

- 55. EPIC-Norfolk. European Prospective Investigation of Cancer (EPIC) 2017. Available from: http://www.srl.cam.ac.uk/epic/

- 56. Hatami N, Goldbaum M. Automatic Identification of Retinal Arteries and Veins in Fundus Images Using Local Binary Patterns; 2016. arXiv preprint arXiv:1605.00763.

- 57. STARE: Structured analysis of the retina. 2017. Available from: http://cecas.clemson.edu/~ahoover/stare/

- 58. Amini Z, Rabbani H. Classification of medical image modeling methods: A review. Curr Med Imaging Rev. 2016;12:130–48.