Abstract

Objectives:

The overall aim of this study was to determine the effect of introducing a smartphone pain application (app), available for both Android and iPhone devices, that enables chronic pain patients to assess, monitor, and communicate their status to their providers.

Methods:

This study recruited 105 chronic pain patients to use a smartphone pain app; half of the patients (N=52) had 2-way messaging available through the app. All patients completed baseline measures and were asked to record their progress every day for 3 months, with the opportunity to continue for 6 months. All participants were supplied with a Fitbit to track daily activity. Summary line graphs were posted to each patient’s electronic medical record, and physicians were notified of their patients’ progress.

Results:

Ninety patients successfully downloaded the pain app. The average age of the participants was 47.1 years (range, 18 to 72), 63.8% were female, and 32.3% reported multiple pain sites. Adequate validity was found between the daily assessments and standardized questionnaires (r=0.50), and adequate reliability was found for repeated daily measures (pain, r=0.69; sleep, r=0.83). The app was easily introduced and well tolerated. Patients assigned to the 2-way messaging condition tended on average to use the app more and to submit more daily assessments (95.6 vs. 71.6 entries), but the differences between groups were not significant. Overall satisfaction ratings for the pain app were high.

Discussion:

This study highlights some of the challenges and benefits in utilizing smartphone apps to manage chronic pain patients, and provides insight into those individuals who might benefit from mHealth technology.

Key Words: chronic pain, innovative technology, mHealth, pain app, smartphone

There has been an explosion of mobile devices and smartphone applications (apps) used to track health data, and these are changing the approach to management of chronic diseases. Mobile communication technology is the fastest-growing sector of the communication industry. It has been estimated that there are over 7 billion registered users of mobile phones worldwide,1 and in the United States, over two-thirds of the population own smartphones capable of running sophisticated apps.2 It is further estimated that 80% of adults worldwide will own a smartphone by 2020.3 With increased availability of smartphones and Internet access, older adults, those with lower household incomes, and individuals in both urban and rural environments will have access to sophisticated health-related apps.4 These programs can allow information to be transferred to interested parties and can offer interventions to a greater number of patients than could be seen individually, particularly among high-cost patients who require chronic disease management.

There is strong evidence that the electronic monitoring available in many apps is superior to paper-and-pencil diaries with respect to compliance, user-friendliness, patient satisfaction, and test reliability and validity.5–7 Indeed, momentary electronic assessment methods with ratings of current symptoms are better than retrospective assessments (eg, they minimize recall bias) and are generally considered to be “state-of-the-art” measures for evaluating pain and other health-related outcomes.8,9 It is now estimated that there are over 14,000 health-related apps for iOS alone.3 These programs were designed primarily for monitoring and obtaining information, with less emphasis placed on behavioral health interventions. A recent review by the Commonwealth Fund4 evaluated mobile health apps and strategies to activate patients to change behavior. The reviewers examined over 1000 health care–related apps to determine usefulness based on engagement, relevance to the targeted patient population, consumer ratings, and reviews, and concluded that only a minority of the apps (43% iOS and 27% Android) appeared likely to be useful. They suggested that levels of engagement increase when apps offer guidance based on information entered by the user, together with communication and support from providers.

A number of smartphone apps have been developed specifically for persons with noncancer and cancer pain.10–13 In a review of commercially available pain apps,14 111 were identified across the major mobile phone platforms, with 86% reporting no health care professional involvement. The authors were able to divide the function of the pain apps into 3 major categories: (1) general information about pain, its symptoms, and treatment options; (2) diary-based tracking of symptoms, medication use, and appointment reminders; and (3) interventions for pain management, which tend to be relaxation strategies. Most (54%) of the applications were found to contain general information, while only 24% included a tracking program and only 17% included an intervention. None of the apps that were reviewed contained all 3 functions.

In a more recent review of 220 pain-related apps, Wallace and Dhingra15 found little evidence that health care professionals were involved in creating the apps. From their review they concluded that most of the apps offered self-monitoring (62.3%) or pain education (24.1%), but few (13.6%) offered both. There was also little evidence-based content on how to manage pain. In another article in which 224 pain apps were reviewed,16 there was again little evidence that health care professionals were involved in creating the apps. Most of these apps included self-management (79.5%), education (59.8%), or both (13.8%). Very few of the apps offered interactive social support (2.2%) or goal setting (1.8%). The authors concluded that none of the apps they reviewed met their 5 prime areas of functionality: self-monitoring, goal setting, skills training, social support, and education.

In a randomized controlled trial with 140 women with chronic widespread pain, participants evaluated a 4-week smartphone-based intervention consisting of 3 daily symptom surveys with immediate daily written therapist feedback that encouraged coping skills.17 The intervention group reported significantly less catastrophizing, better acceptance of pain, and overall better functioning than the control group, and this difference was maintained for 5 months after the intervention. Unfortunately, there was a 30% dropout rate in the intervention group (vs. 3% in the nonintervention group), which was correlated with older age, more pain, worse sleep, and overall worse functioning compared with compliers.

Although some encouraging preliminary work suggests that interactive programs to improve communication among providers and patients are feasible,18 very few studies have been designed to comprehensively examine the usability, acceptability, reliability, utility, and content and face validity of a smartphone pain app. To date, most apps designed for pain patients have lacked provider involvement or direct communication of daily assessments to patients’ physicians. We designed a pain app that summarized patient progress on templated line graphs, tracked behavior, and shared information with health care providers by posting the summary data on the patient’s electronic medical record. We proposed a pilot study to determine the effect of introducing to chronic pain patients a smartphone pain app that assesses, monitors, and communicates their status to their providers, and provides self-management strategies. We were interested in understanding how feasible it would be to implement the pain app, how adherent patients would be in using the app, and whether any safety issues would arise. We hypothesized that (1) patients would find the app easy to use and would be adherent in using the app for at least 1 month, (2) the daily assessment ratings from the pain app would be valid and reliable (ie, significantly correlated with standardized paper-and-pencil baseline and follow-up measures assessing similar constructs), (3) those who received supportive messaging would be more adherent in using the app, and (4) those who regularly used the app would demonstrate greater improvement in pain, mood, and activity.

METHODS

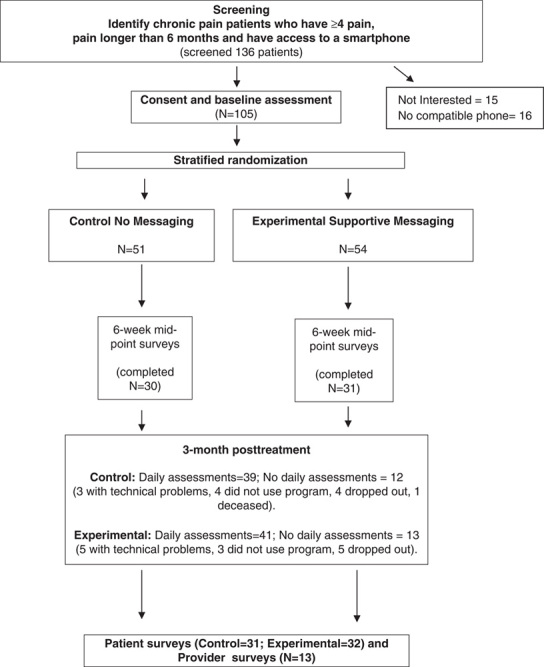

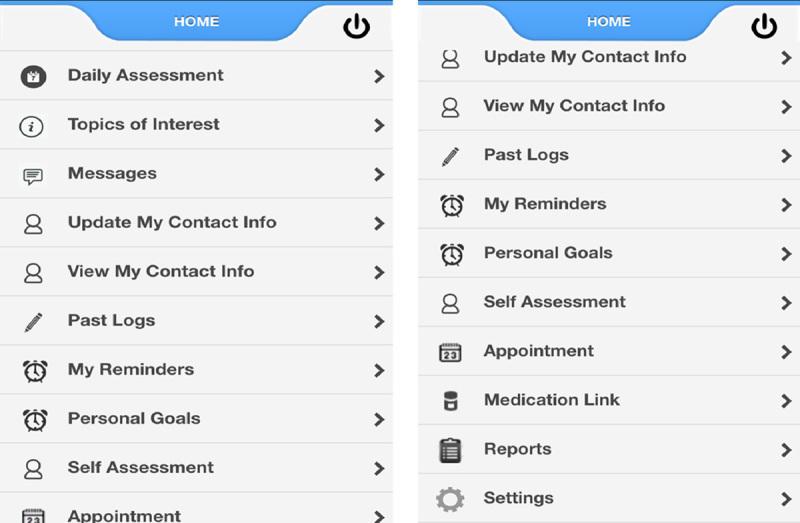

This study was approved by the Institutional Review Board of Brigham and Women’s Hospital (BWH) (study schema, Fig. 1). We developed and tested a smartphone pain app that can be used on iPhone (iOS) and Android devices. The pain app could be downloaded for free through the App Store and Google Play and used to monitor progress and provide feedback through 2-way messaging. The pain app was Veracode tested, and all data were saved on a secure, encrypted, password-protected server with access limited to study personnel through the administration portal. Components of the smartphone application included: (1) demographic and contact information, (2) comprehensive baseline chronic pain assessment with body map, (3) daily assessments with push notification reminders, (4) personalized goal setting (eg, exercise routine, weight management), (5) topics of interest with psychological and medical management strategies (eg, Gate Control Theory,19 stress and relaxation, managing sleep disturbances, weight management and nutrition, problem solving strategies), (6) self-affirming positive statements, (7) saved progress line graphs directed to the health care provider, and (8) past summary logs (Fig. 2). The users were prompted to write goals designed to improve health and coping (eg, daily aerobic and relaxation exercises, weight reduction, medication management) and submit these through the app.

FIGURE 1.

Study schema.

FIGURE 2.

Pain app home page with links when scrolled down.

The app was developed based on information from a book written by the lead author (R.N.J.) to help patients cope with pain.20 Usability testing was performed with 5 pain patients to identify any potential programmatic problems, and changes were made with the help of the app development company (Technogrounds Inc.) to improve the program and resolve any user issues. All patients completed baseline measures and were asked to record their progress by answering 5 questions at least once every day for 3 months. They had the option of receiving up to 5 reminders each day and could enter as many assessments each day as they wanted. All participants had the opportunity to continue the study and to use the app for 6 months if they wanted. All participants were supplied with a Fitbit (Fitbit Zip, San Francisco, CA) to track daily activity. The participants were asked to use the Fitbit every day during the 3-month trial. The Fitbit data were not integrated into the pain app but were available as summary data to both users and investigators on the Fitbit website (www.fitbit.com).

We recruited patients with cancer-related and noncancer-related chronic pain to participate in this pilot study.21 We conducted this pilot study to understand the practicalities of recruitment and the acceptability of, and adherence to, a smartphone pain app among persons with chronic pain. All participants needed to be 18 years or older and own a study-compatible smartphone (iPhone or Android device). Other inclusion criteria were (1) chronic pain of >6 months’ duration, (2) an average pain intensity of 4 or greater on a 0 to 10 scale, and (3) the ability to speak and understand English. Patients were excluded from the study if they had (1) any cognitive impairment that would prevent them from understanding the consent, study measures, or procedures, (2) any clinically unstable medical condition judged to interfere with study participation, (3) a pain condition requiring urgent surgery, (4) a present psychiatric condition (eg, DSM diagnosis of schizophrenia, delusional disorder, psychotic disorder, or dissociative disorder) that was judged to interfere with the study, (5) visual or motor impairment that would interfere with use of a smartphone, or (6) an active addiction disorder (eg, active cocaine or IV heroin use) that would interfere with study participation.

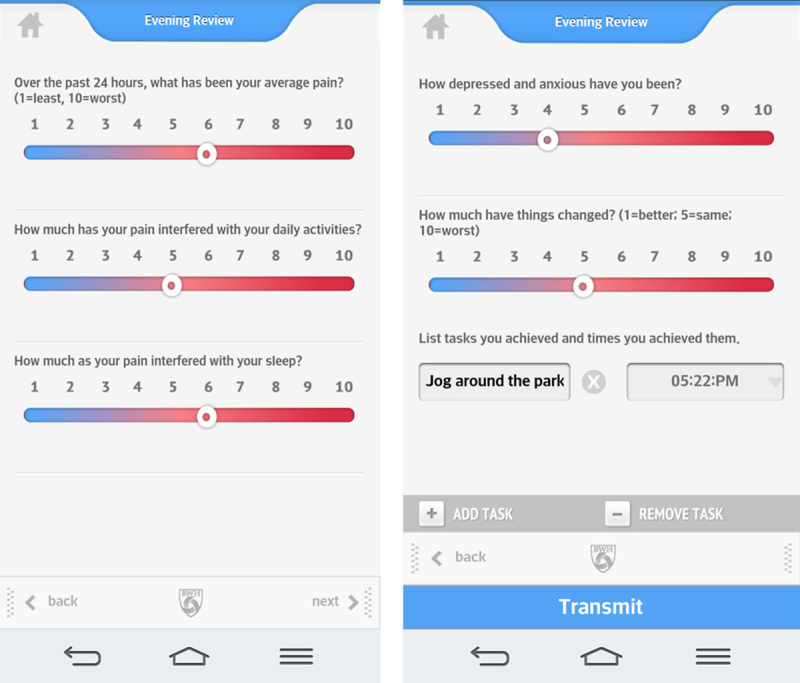

Participants were recruited by their treating physicians and were handed a flyer describing the study. After an individual was contacted and deemed eligible and willing to participate, he or she signed a consent form and completed baseline measures. Recruitment took place at BWH and Dana Farber Cancer Institute, Boston. Patients were randomized to receive either 2-way messaging or a standard message on the smartphone app using a stratified randomization table. Those in the experimental group received 2-way messaging consisting of weekly supportive text messages and feedback about their progress from the study research assistant (RA). The intent was to personalize the messages for those in the experimental group based on the information supplied through the daily assessments. The messages varied according to changes in the line graphs and daily assessment data (eg, Hello Dave! It looks like your pain, mood and activity interference this week have all improved. Way to go!). Those in the control group received a standard reply of “Thank you. Your message has been received” every time they sent a message through the app. All return messages were written by the RA. The only difference between the treatment groups was the content of the messages sent. Data from all participants were copied and saved into the patient’s electronic medical record. All patients were encouraged not to vary their treatment significantly over the 3 months of the study. Patients were assisted in downloading the pain app and had access to the RA (D.C.J.), who could answer questions or help manage technical problems. All participants were encouraged to complete brief daily assessments on the pain app consisting of 1 to 10 ratings of pain, activity interference, sleep, mood, and whether things had gotten better or worse (Fig. 3). If possible, participants were encouraged to complete the reports at the same time each day.
These reports were stored on the app server as line graphs and were copied and saved to the patient’s electronic medical record every 2 weeks by the study RA, along with a brief summary note of the patient’s progress sent through clinical messaging to each of the patient’s providers. Patients who wished to discontinue the study were allowed to do so at their request. All participants were mailed midpoint assessments approximately 6 weeks after the start of the study and postintervention assessments after 3 months of using the smartphone pain app. All participants received $25 after completing the baseline assessment, $25 for completing the 6-week assessment, and $50 after completing the 3-month posttreatment assessments.

FIGURE 3.

Pain app daily assessments.

To operationalize the concepts used in this study, we defined adherence as at least 60% compliance with daily assessments over the 3-month trial, tolerability as satisfaction ratings of at least 80%, and safety as the absence of any adverse event related to use of the pain app. For reliability, we set a test-retest correlation of r=0.8 as acceptable, and for validity, we set a correlation of r=0.7 between measures of the same construct as acceptable.
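The adherence criterion above can be made concrete with a short calculation. The sketch below is a minimal illustration only, assuming that "60% compliance" means the share of trial days with at least one submitted daily assessment and that the 3-month trial spans 90 calendar days; neither assumption is stated in the study, and the function names are hypothetical.

```python
from datetime import date, timedelta

TRIAL_DAYS = 90              # assumption: the 3-month trial is treated as 90 calendar days
ADHERENCE_THRESHOLD = 0.60   # adherence criterion: 60% compliance with daily assessments


def adherence_rate(entry_dates, start, trial_days=TRIAL_DAYS):
    """Return the fraction of trial days with at least one daily assessment.

    entry_dates: iterable of datetime.date values, one per submitted assessment.
    Participants could submit as many assessments per day as they wanted,
    so repeated entries on the same calendar day are counted once.
    """
    end = start + timedelta(days=trial_days)
    days_with_entry = {d for d in entry_dates if start <= d < end}
    return len(days_with_entry) / trial_days


def is_adherent(entry_dates, start, trial_days=TRIAL_DAYS):
    """Apply the 60% adherence criterion to one participant's entry dates."""
    return adherence_rate(entry_dates, start, trial_days) >= ADHERENCE_THRESHOLD


# Usage: a participant who reported on each of the first 54 days of the trial
start = date(2015, 1, 1)  # hypothetical enrollment date
entries = [start + timedelta(days=i) for i in range(54)]
print(adherence_rate(entries, start), is_adherent(entries, start))
```

Counting distinct days rather than raw entries keeps a participant who submits several assessments on one day from appearing more adherent than one who reports once daily.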

Measures

The study measures were completed at the time of recruitment and follow-up questionnaires were mailed to the subjects with a self-addressed stamped envelope so that they could be completed and returned. We assessed study feasibility and tolerability by examining adherence in using the pain app (ie, number and frequency of daily assessments) and attrition (ie, number who completed the study). We documented any reported safety issues and determined outcome efficacy through standardized pre-post measures. The following measures were administered to all study participants at baseline, 6-week midpoint, and 3-month follow-up time points.

The Brief Pain Inventory (BPI)22

This self-report questionnaire, formerly the Brief Pain Questionnaire,23 is a well-known measure of clinical pain and has shown sufficient reliability and validity. This questionnaire provides information about pain history, intensity, and location as well as the degree to which the pain interferes with daily activities, mood, and enjoyment of life. Scales (rated from 0 to 10) indicate the intensity of pain in general, at its worst, at its least, average pain, and pain “right now” over the past 24 hours. A figure representing the body is provided for the patient to shade the area corresponding to his or her pain. Test-retest reliability for the BPI reveals correlations of 0.93 for worst pain, 0.78 for usual pain, and 0.59 for pain now.

Pain Catastrophizing Scale (PCS)24,25

The PCS is a 13-item instrument that examines 3 components of catastrophizing: rumination, magnification, and helplessness. Each item is rated from “not at all” to “all the time” on a 0 to 4 scale. The PCS has been found to predict levels of pain and distress among clinical patients, and its scores have been related to thought intrusions. It has good psychometric properties, with adequate reliability and validity, and is associated with levels of pain, depression, and anxiety.
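As a worked illustration of the scoring implied above, the sketch below sums the 13 item ratings into a total (0 to 52) and 3 subscale scores. It assumes the commonly published item-to-subscale assignment (rumination: items 8 to 11; magnification: items 6, 7, and 13; helplessness: items 1 to 5 and 12), which should be checked against the version of the instrument actually administered; the function name is hypothetical.

```python
# Commonly published PCS item-to-subscale assignment (verify against the form used)
PCS_SUBSCALES = {
    "rumination": (8, 9, 10, 11),
    "magnification": (6, 7, 13),
    "helplessness": (1, 2, 3, 4, 5, 12),
}


def score_pcs(responses):
    """Score the 13-item PCS.

    responses: dict mapping item number (1-13) to a rating from
    0 ("not at all") to 4 ("all the time").
    Returns subscale sums plus the total score (range 0-52).
    """
    if sorted(responses) != list(range(1, 14)):
        raise ValueError("PCS requires a rating for each of items 1-13")
    if any(not 0 <= rating <= 4 for rating in responses.values()):
        raise ValueError("PCS ratings must be between 0 and 4")
    scores = {
        name: sum(responses[item] for item in items)
        for name, items in PCS_SUBSCALES.items()
    }
    scores["total"] = sum(responses.values())
    return scores


# Usage: a respondent who answered 2 on every item
print(score_pcs({item: 2 for item in range(1, 14)}))
```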

Pain Disability Index (PDI)26

The PDI is a 7-item questionnaire on which respondents rate, from 0 to 10, the level of disability in 7 areas of activity interference: family/home responsibilities, recreation, social activity, occupation, sexual behavior, self-care, and life-supporting behaviors. Each item is rated according to how much the pain prevents the respondent from doing what would normally be done. It has been shown to have excellent test-retest reliability and validity and is sensitive to high levels of disability.

Hospital Anxiety and Depression Scale (HADS)27,28

The HADS is a 14-item scale designed to assess the presence and severity of anxious and depressive symptoms over the past week. Seven items assess anxiety, and 7 items measure depression, each coded from 0 to 3 (eg, not at all; most of the time). The HADS has been used extensively in clinics and has adequate reliability (Cronbach α=0.83) and validity, with optimal balance between sensitivity and specificity.
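The HADS subscale scores described above are simple sums of 7 items each (range 0 to 21). The sketch below assumes the standard item order in which anxiety and depression items alternate, starting with an anxiety item, and that responses are already coded 0 to 3 in the scored direction. The 0 to 7 / 8 to 10 / 11 to 21 bands are the commonly cited interpretive cutoffs, not thresholds reported in this study, and the function names are hypothetical.

```python
def score_hads(responses):
    """Score the 14-item HADS.

    responses: list of 14 item scores (0-3) in questionnaire order, already
    coded so that higher values mean more symptoms.
    Assumes items alternate anxiety/depression, starting with anxiety (item 1).
    """
    if len(responses) != 14 or any(r not in (0, 1, 2, 3) for r in responses):
        raise ValueError("HADS requires 14 item scores, each 0-3")
    return {
        "anxiety": sum(responses[0::2]),     # items 1, 3, 5, ..., 13
        "depression": sum(responses[1::2]),  # items 2, 4, 6, ..., 14
    }


def hads_band(subscale_score):
    """Commonly cited interpretive bands for a HADS subscale (0-21)."""
    if subscale_score <= 7:
        return "normal"
    if subscale_score <= 10:
        return "borderline"
    return "abnormal"


# Usage with one hypothetical respondent
scores = score_hads([2, 1, 2, 1, 1, 0, 2, 1, 1, 1, 2, 0, 1, 1])
print(scores, {name: hads_band(value) for name, value in scores.items()})
```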

Coping Strategies Questionnaire (CSQ)29

A brief version of the CSQ adapted from the original30 has 14 items selected to represent 2-item scales of 7 pain coping strategies (5 adaptive strategies: diverting attention, reinterpreting pain sensations, ignoring sensations, coping self-statements, and increased behavioral activities; and 2 maladaptive strategies: catastrophizing and praying or hoping). Items are rated on a scale from 0=“never do that” to 6=“always do that,” representing how often the person uses each pain coping response when in pain. Higher scores represent better coping. The CSQ is the most widely used measure of coping with chronic pain. The subscales all show good internal consistency, and together they serve as a broad measure of both “positive” and “negative” approaches to coping with daily pain symptoms.

At posttreatment the patients completed the following measure:

End-of-study satisfaction questions: at follow-up, participants who completed the 3-month pain app trial were asked to answer a number of questions developed for this study on a 0 to 10 scale to assess the smartphone pain app on (1) how easy the program was to use, (2) how useful the reminders were, (3) how useful the daily reports were, (4) how appealing the program was, (5) how bothersome the daily prompts were, (6) how easy the program was to navigate, (7) how willing the user was to use the program every day, (8) how easy it was to send a report, (9) how responsive the providers were to the reports, and (10) how much the program helped them cope with their pain.

Attending physicians (BWH only) and pain fellows from the pain center were asked to complete an anonymous online survey of their impressions of the smartphone pain app. They rated 12 items. They rated how satisfied they were with (1) the summary graphs, (2) the way the pain app data helped them manage their patients, (3) the way the pain app helped the patients understand their pain, (4) the way the pain app was used in the clinic, and (5) the way they received pain app summary messages through the electronic medical record system. They also rated how much they believed (6) that the summary messages were helpful, (7) that the pain app positively changed their patients’ behavior, (8) that using the pain app in the clinic improved their overall practice, and (9) that the feedback from the pain app improved patient outcomes, and whether (10) the pain app was an added burden to the clinic, (11) they had time to examine individual patient pain app data during clinic hours, and (12) regular use of a smartphone pain app would reduce health care costs. All items were rated on a 1 to 5 scale (1=strongly agree, 2=agree, 3=neither agree nor disagree, 4=disagree, and 5=strongly disagree). Three of the items were reverse scored (eg, “I am dissatisfied with …”).
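Because agreement is coded from 1 (strongly agree) to 5 (strongly disagree), a reverse-worded item can be mapped back to the favorable direction with 6 minus the rating before percentages are computed. The sketch below is a hypothetical illustration, assuming that a favorable response means a rating of 1 or 2 after any reversal; the source does not specify which 3 items were reverse scored, and the ratings shown are invented for the example.

```python
def reverse_score(rating, low=1, high=5):
    """Map a reverse-worded item back onto the standard direction (1<->5, 2<->4)."""
    return low + high - rating


def percent_favorable(ratings, reverse=False):
    """Percentage of respondents rating an item 1 (strongly agree) or 2 (agree).

    For reverse-worded items (eg, "I am dissatisfied with ..."), ratings are
    reverse scored first, so the result is the share endorsing the favorable view.
    """
    scored = [reverse_score(r) if reverse else r for r in ratings]
    return 100 * sum(r <= 2 for r in scored) / len(scored)


# Usage with hypothetical ratings from 7 physicians
summary_messages = [1, 2, 2, 1, 2, 2, 4]
dissatisfied_with_graphs = [5, 4, 4, 5, 4, 3, 2]  # reverse-worded item
print(round(percent_favorable(summary_messages), 1))
print(round(percent_favorable(dissatisfied_with_graphs, reverse=True), 1))
```

With 7 respondents, each physician contributes about 14.3 percentage points, which is why the physician results are reported in steps such as 85.7%, 71.4%, and 57.1%.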

Statistical Analysis

This pilot study was designed to gather preliminary data on the feasibility, tolerability, safety, and efficacy of the smartphone pain app, as well as information to refine the pain app for future use among persons with chronic pain. Analyses followed the intent-to-treat principle. Baseline differences between groups were tested for significance, and univariate and multivariate descriptive analyses were performed on all dependent variables. χ², t tests, and logistic regression analyses were conducted as appropriate. We examined the content validity of the pain app by correlating questionnaire ratings of average pain intensity with daily pain assessments on the app. We tested the test-retest reliability of the daily pain app ratings by correlating day 7 and day 8 ratings, with 95% confidence intervals (CI). We chose days 7 and 8 because users tended to submit multiple daily entries during the first week of using the app. We also used survival statistics to compare pain app use over time between those assigned to the experimental condition and those assigned to the control condition. Although the number of participants in this trial was limited, repeated measures analysis of variance and preliminary mixed linear model procedures were also conducted as appropriate. This trial was designed to gather information about the use and utility of a smartphone pain app for persons with chronic pain.
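For correlations such as the day 7 versus day 8 test-retest ratings, one standard way to obtain a 95% CI for Pearson's r is Fisher's z transformation: z = atanh(r), with standard error 1/sqrt(n − 3), back-transformed with tanh. The sketch below shows that approach; it is not necessarily the procedure used in this study, the function names are hypothetical, and the example ratings are invented. It needs at least 4 paired observations and fails for r of exactly ±1, where the transformation is undefined.

```python
import math
from statistics import NormalDist, mean


def pearson_r(x, y):
    """Pearson correlation between paired ratings (eg, day 7 vs day 8)."""
    if len(x) != len(y) or len(x) < 4:
        raise ValueError("need at least 4 paired observations")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_ci(r, n, level=0.95):
    """Confidence interval for r via Fisher's z = atanh(r), SE = 1/sqrt(n - 3)."""
    z = math.atanh(r)                              # undefined for r = +/-1
    se = 1 / math.sqrt(n - 3)
    crit = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for a 95% CI
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


# Usage: hypothetical day-7 and day-8 pain ratings from 8 participants
day7 = [6, 5, 7, 4, 8, 6, 5, 7]
day8 = [6, 4, 7, 5, 8, 5, 5, 6]
r = pearson_r(day7, day8)
print(round(r, 2), fisher_ci(r, len(day7)))
```

Intervals from this method are asymmetric around r on the original scale, and they widen quickly as n shrinks, which matters in a pilot sample of this size.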

RESULTS

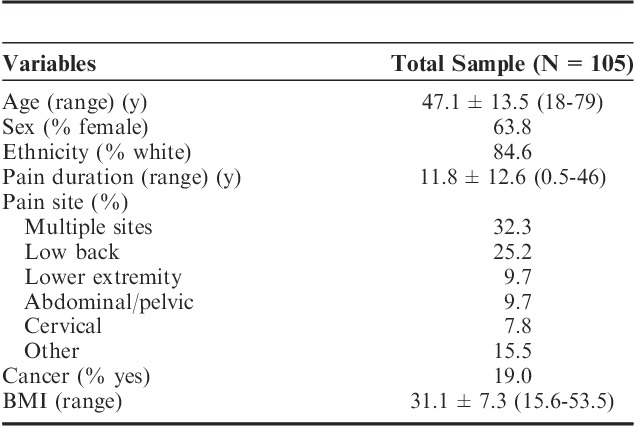

One hundred thirty-six individuals were approached about the study, and 105 chronic pain patients were successfully recruited. Of those who were approached but not consented, 15 did not want to participate after learning about the study and 16 did not have a compatible phone. Of the 105 participants who were consented, the average age was 47.1 years (SD=13.5), 63.8% were female, 84.6% were white, and 32% reported having multiple pain sites (Table 1). Pain duration averaged 11.8 years, and 20 of the patients (19.0%) had cancer-related pain. Three of the patients had a noncompatible device or no device at the time of recruitment. One of these patients stated that he was just about to purchase an iPhone, and we agreed to consent him. Two other patients had Android devices that could not download the program despite multiple attempts. In both instances the devices were older, and we concluded that the age of the device kept them from using the program.

TABLE 1.

Patient Demographic Characteristics (N=105)

Ninety (85.7%) of the 105 participants successfully downloaded the pain app program, and 82 (78.1%) of the participants submitted daily reports. All of the subjects were given a link to the pain app (App Store or Google Play) and were assisted in downloading the program with the RA present or, if time or circumstances did not allow, were instructed by the RA in downloading the program remotely. They were also encouraged to contact the RA if they encountered difficulties. If they successfully downloaded the program, their name and hospital number appeared on the Admin Portal. Fourteen percent (N=15) of the participants did not succeed in downloading the program after being consented for the study and did not request assistance. Sixty-one (67.8%) of the 90 subjects who succeeded in downloading the pain app had iPhones, and 29 (32.2%) had Android smartphones. No demographic differences were found between subjects with an iPhone and those with an Android device. Over the course of the study, 11 subjects withdrew, mostly because they reported being too busy or did not want to start again after updating their phones. Fourteen experienced technical problems with the app, 8 did not submit any daily assessments, and 1 patient died from metastatic cancer during the trial. The total number of daily assessments over 3 months averaged 35.0 (SD=39.6; range, 0 to 234). Fifty of the 90 participants who downloaded the app (55.6%) completed at least 30 daily assessments, 40 (44.4%) completed at least 60 daily assessments, and 24 (26.7%) completed at least 90 daily assessments. Thirty percent of the 90 participants (N=27) logged daily Fitbit data after 90 days, and 17.8% (N=16) continued to use the Fitbit after 180 days. No differences were found in mean activity level over time. Although the participants were able to continue to use the app after the 6-month trial, only 5 (5.6%) continued to enter daily assessments.
Content validity between BPI average pain and the daily pain assessment was adequate for self-reported pain intensity (r=0.50; 95% CI, 0.10-0.85), and the reliability of repeated daily ratings (day 7 and day 8) was high (daily pain, r=0.69; 95% CI, 0.44-0.89; daily sleep, r=0.83; 95% CI, 0.73-0.91; daily mood, r=0.83; 95% CI, 0.68-0.92; daily activity interference, r=0.84; 95% CI, 0.74-0.91).

We compared the frequency of app use over 150 days between those with 2-way messaging and those with only 1-way messaging. Patients assigned to the 2-way messaging treatment arm tended on average to use the app more and to submit more daily assessments (95.6 vs. 71.6 entries), but the differences between groups were not significant.
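The survival analysis of app use can be illustrated with a Kaplan-Meier estimator, treating the last day of app use as the event and participants still submitting assessments at day 150 as censored. This is a minimal sketch under those assumptions (the study does not state its event definition), with a hypothetical function name and invented data; comparing the 2 messaging arms would additionally require a test such as the log-rank test, which is not shown.

```python
def kaplan_meier(durations, stopped):
    """Kaplan-Meier estimate of the proportion still using the app over time.

    durations: day of last recorded app use, or end of follow-up (eg, day 150)
    stopped:   True if the participant stopped using the app (event),
               False if still active at the end of follow-up (censored)
    Returns a list of (day, estimated proportion still using the app),
    one entry per day on which at least one participant stopped.
    """
    data = sorted(zip(durations, stopped))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        day = data[i][0]
        events = removed = 0
        # Group all participants whose follow-up ends on this day
        while i < len(data) and data[i][0] == day:
            events += data[i][1]
            removed += 1
            i += 1
        if events:
            survival *= 1 - events / at_risk
            curve.append((day, survival))
        at_risk -= removed  # both events and censored cases leave the risk set
    return curve


# Usage with hypothetical data for one messaging arm
days = [10, 20, 20, 30, 150]
stopped = [True, True, False, True, False]
for day, proportion in kaplan_meier(days, stopped):
    print(day, round(proportion, 2))
```

Censored participants count as at risk on the day they are censored and then leave the risk set, which is the usual Kaplan-Meier convention for ties between events and censoring.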

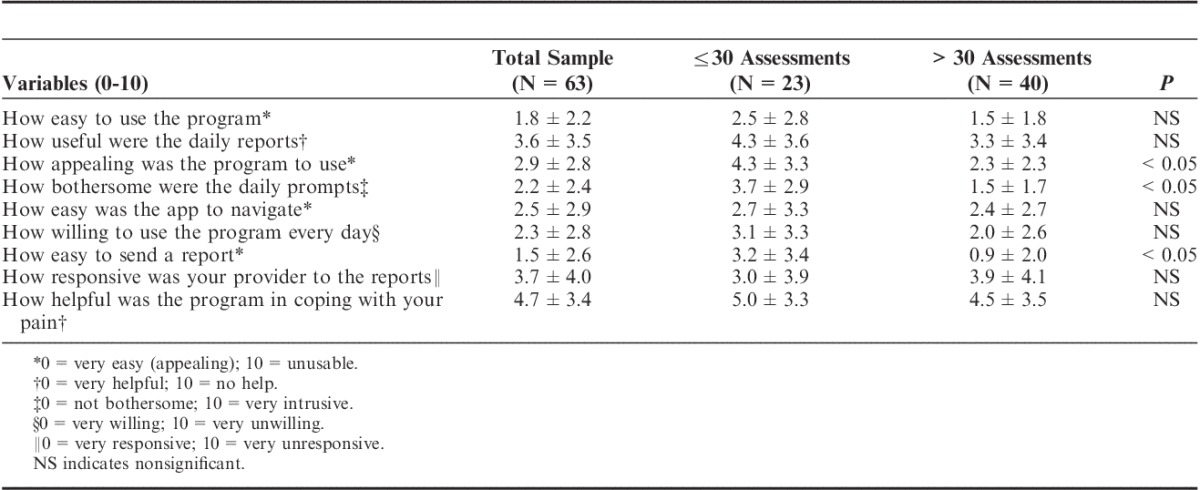

Sixty-three of the 80 participants who used the app successfully completed and mailed back satisfaction questionnaires after 3 months. Patient satisfaction survey results showed that the app was easy to use (average, 1.8/10; 0=very easy, 10=unusable) and easy to navigate (2.5/10), and most subjects reported willingness to use the program after the study was over (2.4/10; 0=very willing, 10=unwilling; Table 2). Although compliance with daily ratings tended to decrease after the first month (average number of daily assessments: first month=16.4±17.0, range 0 to 105; second month=10.3±13.2, range 0 to 74; third month=8.3±12.3, range 0 to 66), participants with more daily assessments were more satisfied with the app (found it more appealing, found it easier to send a message, and found it less bothersome; P<0.05) than those who used the app less often (Table 3). Also, those who used the app more frequently showed modestly increased levels of activity (increased steps), but, overall, frequency of use did not significantly affect pain intensity, mood, coping, or activity level.

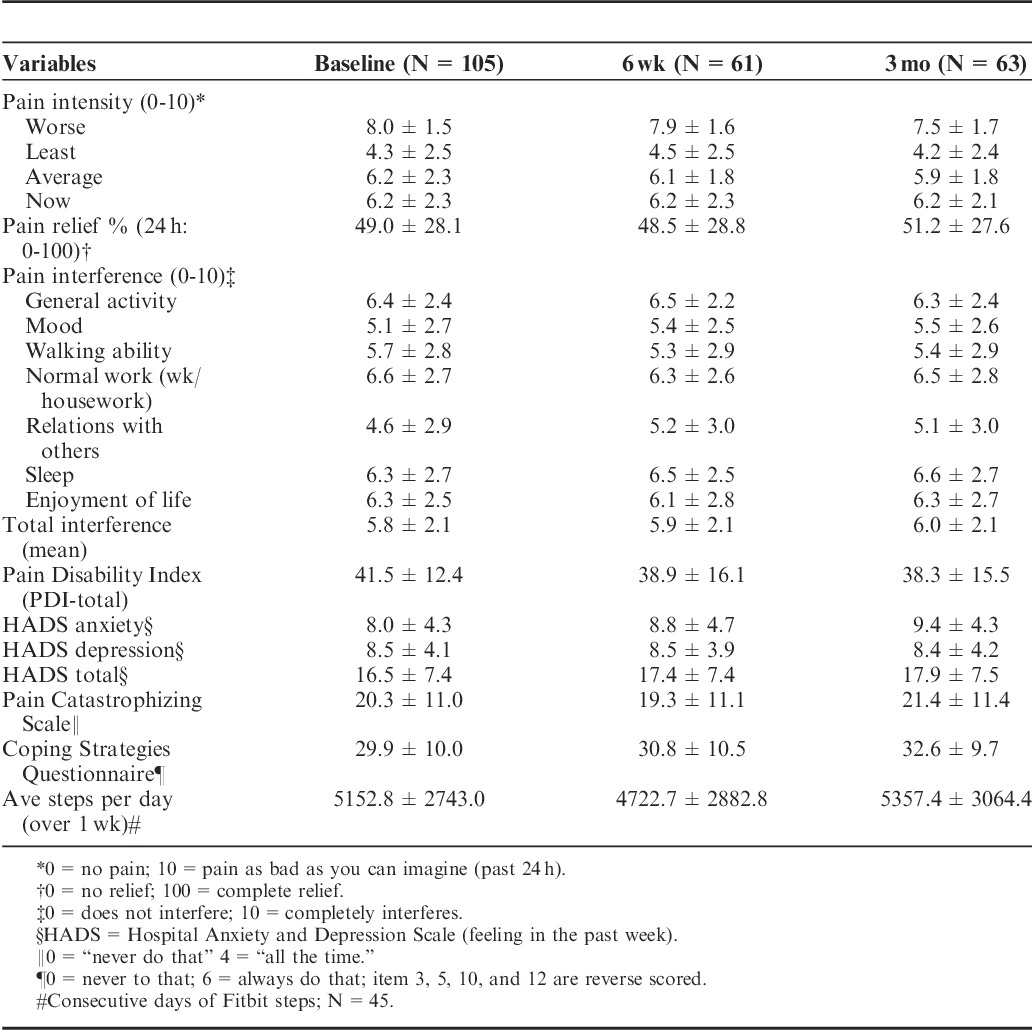

TABLE 2.

Patient Descriptive Characteristics at Baseline, 6 Weeks, and 3 Months

TABLE 3.

Patient Poststudy Satisfaction Questionnaire Responses After 3 Months for those With 30 or Less Daily Assessments and Those With >30 Daily Assessments (N=63)

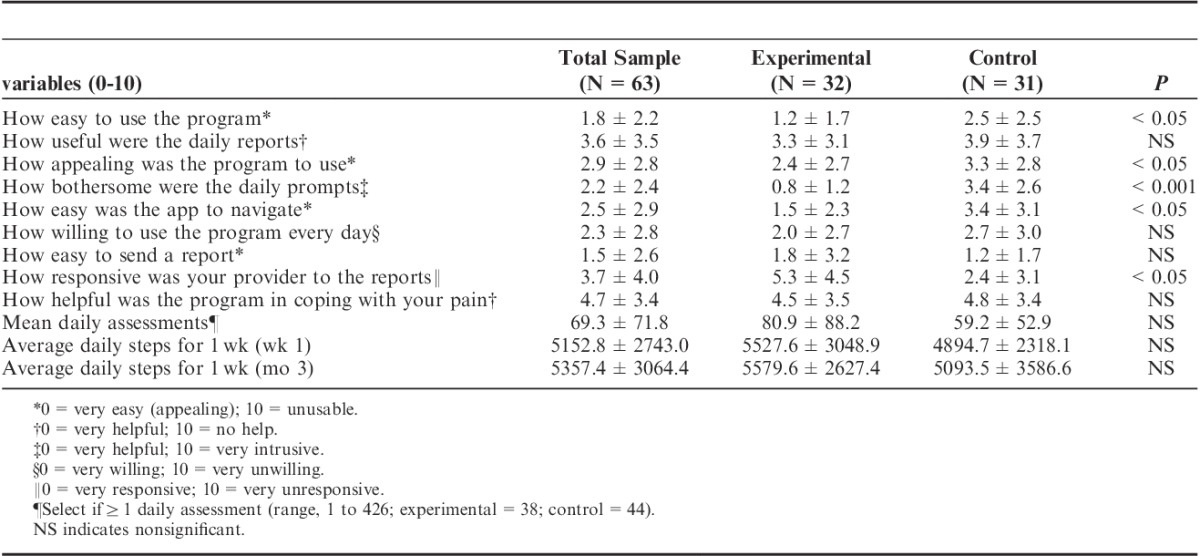

Patients assigned to the experimental condition of 2-way messaging found the app more appealing (P<0.05), easier to use and to navigate (P<0.05), and less bothersome (P<0.001) than those without the 2-way messaging. However, those assigned to the experimental condition also felt that their providers were less responsive to their reports than the subjects in the control condition (P<0.05; Table 4). Ninety-two percent of the participants who completed the poststudy questionnaires agreed to continue to be involved in the pain app study after 3 months. No significant differences were found between the experimental and control groups on outcomes of pain, activity, and mood.

TABLE 4.

Patient Poststudy Satisfaction Questionnaire Responses After 3 Months for Those With 2-Way Messaging (Experimental) and Those Without 2-Way Messaging (N=63)

Seven pain management physicians completed the anonymous satisfaction survey at the end of the trial. They averaged 52.3 years of age (range, 34 to 65 y), 85.7% were white, 85.7% were male, and they averaged 21.7 years of clinical practice. Eighty-six percent reported being satisfied with the way the app was used in the clinic, and 85.7% liked receiving the pain app summary messages. Also, 85.7% believed that using the app would improve their overall practice, while none of the physicians felt that the pain app was an added burden to the clinic. Seventy-one percent were satisfied with the way the pain app data helped them manage their patients, and 71.4% were satisfied with the pain app summary graphs and the way the pain app helped their patients understand their pain. Only 2 physicians (28.6%) believed that they would not have time to examine the pain app data during clinic hours. Just over half (57.1%) believed that use of the pain app would reduce health care costs, and, while most believed that feedback from the pain app would improve patient outcomes (71.4%), only 42.9% felt that the pain app positively changed their patients’ behavior.

Six pain fellows also completed the anonymous survey. They averaged 33.2 years of age, 50.0% were white, and 66.7% were male. Even though they were not sent clinical messages about those patients who were using the pain app and were sometimes unaware of which patients were in the study, 83.3% believed that using the pain app in the clinic would improve the overall practice and 83.3% believed that regular use of the pain app would reduce health care costs. Fifty percent were satisfied with the way the pain app helped in managing the patients and none expressed dissatisfaction with the summary graphs or believed that the pain app was an added burden.

DISCUSSION

This preliminary study highlights some of the challenges and benefits of utilizing a smartphone pain app for chronic pain patients and may provide insight into how pain apps might be most clinically useful. Overall, the smartphone pain app was found to be usable, valid, reliable, and easily accepted by patients and providers alike, although further validity and reliability testing of the items on the pain app should be considered in future investigations. The 2-way messaging feature was associated with a modest increase in compliance with daily assessments, although the differences between groups were not significant. Patient satisfaction ratings suggest that the daily assessments and the perceived connection with providers were beneficial. Continued research is needed, however, to understand how to optimize these applications for clinical use.

It should be highlighted that the participants in this study had significant pain (high pain intensity on the BPI) and disability (high PDI scores) and reported elevated levels of negative affect (high HADS, PCS, and PSQ scores) compared with other pain patient populations. The participants reported many years of chronic pain (average, 11.8 y), and many were also older, overweight, and had low levels of daily activity. It is uncertain how the results would differ if the participants were younger, had higher levels of functioning, and were less disabled by their pain. We had initially intended to recruit equal numbers of patients with cancer-related and noncancer pain, but found that some patients with advanced metastatic disease perceived the pain app to be difficult to manage and preferred not to participate. Further studies with a more diverse patient population would help to identify those individuals who might benefit the most from using a pain app. An encouraging finding, however, was that those individuals who agreed to participate were overall very satisfied with the pain app.

We encountered some technical difficulties with the app that may also have affected the outcome of this study. In particular, some participants reported not receiving push notifications or daily reminders to complete their daily assessment, which could have reduced the rate of completed daily assessments. Participants who changed or upgraded their phones had to download the program again, and some encountered difficulties at that point, which delayed their ability to enter daily assessments. Because the app ran on both Android and iOS, upgrades to the software code also caused difficulties with one platform or the other (ie, for a period of time those with an Android device could not use the program, and at other times those with an iPhone could not use the program). This is worth noting in the development of future mobile health care applications, as different platforms continue to require updates on different schedules. We also encountered difficulties with the Fitbit. Although the participants were instructed to wear the Fitbit every day, some reported that they forgot to clip it on, some admitted losing their device, and others damaged the device (eg, accidentally put it through the wash). Despite encouragement, some participants did not wear the Fitbit every day. These problems contributed to missing data by the end of the study. Adherence might have been higher if the devices had been wristbands rather than clip-on devices.

A surprising finding was that the few patients who were most active (higher number of steps per day) were least satisfied with the pain app. Possibly, patients who are very active and less disabled by their pain did not like to focus on and continually rate their pain, sleep, activity interference, or mood, compared with those who were more disabled by their pain. Thus, the pain app could have been perceived as bothersome by those who were able to remain busy, active, and working, particularly if they did not perceive pain to be a significant part of their lives. In contrast, those who could do very little during the day and showed significant daily disruption of their activity seemed to appreciate the pain app more. Possibly these individuals gained some personal benefit from relaying their information to family members and to their providers.

Another surprising finding was that those who received 2-way messaging felt that their providers were less responsive to their reports. It may be that they expected their providers to monitor their progress more actively than in the past and to intervene more deliberately in managing their pain. Thus, the 2-way messaging may have been disappointing for those who expected their treatment to be greatly improved by the app. Future studies might examine how to incentivize clinicians to incorporate pain app data more actively into their busy clinic schedules.

A key goal of this project was to increase our understanding of how information technology can be used to improve the quality of life of persons with chronic pain. Although mobile technology cannot completely replace traditional face-to-face interactions with a health care professional, this study offers support for the novel advantages of obtaining clinical information through an app. The potential for reduced health care utilization among pain patients using smartphone pain apps should be explored in future trials. Although we did not find any differences between iPhone and Android owners in terms of app usage, future studies are needed to determine whether particular software platforms or types of smartphones offer advantages. Further investigations are also needed to determine ways to improve app adherence over an extended period of time. In this study, very few individuals continued to use the app beyond 6 months, which highlights the need to explore ways to improve long-term compliance with a pain app. Future studies should test a reward system for continued app use, as well as an app that mimics a competitive game (gamification),4 with ongoing motivational challenges that give users a competitive sense of winning when using the app.

Innovative systems currently in development to help manage pain can deliver messages in real time, close to any precipitating event. These programs can begin to simulate some of the processes of interacting directly with a health care provider. There is a discrepancy, however, between the number of available apps and the number of scientific studies designed to measure their efficacy, feasibility, usability, and compliance, and more research is needed. In particular, further assessment is needed of how access to the Internet and 2-way messaging with pain clinic staff might be beneficial. Because no regulatory body currently monitors, rates, or recommends applications for chronic pain patients, rigorous interventional studies and reviews by the scientific community are needed. More attention is also needed to determine the benefits of mobile technology for clinicians in diagnosing and treating persons with chronic pain. This would include the benefits of electronic pain assessment programs, medication dosing guidelines, and communication among providers using hospital electronic medical records. Although the future of mobile technology in the management of acute and chronic pain is promising, the challenge of reducing the higher likelihood of dropout among complex pain patients with severe symptoms remains. A better understanding of the intrinsic benefits patients derive from using a smartphone pain app is also needed. Because chronic pain patients with multiple comorbidities account for the highest percentage of health care resources,31 future efforts should focus on ways to engage these challenging patients in using remote innovative technology.

There are a number of limitations of this study that need to be highlighted. First, we encountered missing data due to lack of cooperation among the participants and technical difficulties with the hardware and software. Although those who dropped out were not significantly different from those who successfully completed the 3-month study, there is a risk that selection bias influenced the satisfaction ratings. It is also possible that the features available through the pain app were not robust enough to have any effect on outcome among chronic pain patients. Many users did not receive push notifications for the daily assessments, and there were no notifications of new messages either on the phones or on the admin portal. Thus, the participants did not always know when a message had been sent, and the investigators did not always know when a message had been returned, which might have affected participant response. We also did not examine the time of day when the messages were sent or how the information was used by the physicians. Future investigations are needed on how the timing of daily assessments influences outcome and how much physicians use the pain app data. Second, we encountered difficulties with participants who changed phones and/or email addresses during the study. Some participants had difficulty downloading the program after purchasing a new smartphone, and some had multiple files within the admin portal because they changed phones or email addresses. This made it more difficult to follow the patients and to consolidate their data. Third, there was no treatment-as-usual group with which to compare participants who had access to a smartphone pain app with those who did not. Fourth, although all patients were encouraged not to significantly vary their treatment over the 3 months of the study, it is possible that some treatment changes were made without our knowledge. Finally, these results are based on a limited number of participants and a brief follow-up period. Additional studies are needed with a larger number of patients who represent different degrees of disability and who are followed for a longer period of time, to better understand which patients may benefit most from use of a smartphone pain app.

Despite these limitations, the results of this study suggest that a smartphone pain app is reliable and valid for collecting remote data, is generally accepted and tolerated by chronic pain patients, can be readily implemented in the clinic, and can be a viable platform for communicating daily assessment status to providers. Two-way communication has the potential to increase adherence to the app, although this study found no evidence that frequent use of the pain app significantly reduced pain, increased function, or improved mood. Mobile application technologies offer advantages and possibilities that did not previously exist, and future studies are needed to determine how mobile technologies might best enhance health care management.

ACKNOWLEDGMENTS

The authors appreciate the assistance of the patients and staff of the Pain Management Center, Brigham and Women’s Hospital, and the Dana-Farber Cancer Institute for their participation in this study. Special thanks are extended to Robert Gimlich for IT support and to the staff of Technogrounds Inc. for developing the smartphone pain app.

Footnotes

Supported by a pilot grant from the Mayday Fund and a government grant through the Center for Future Technologies in Cancer Care (through Boston University #015403-03). The authors declare no conflict of interest.

REFERENCES

1. Vardeh D, Edwards RR, Jamison RN, et al. There’s an app for that: mobile technology is a new advantage in managing chronic pain. Pain: Clin Updates. 2013;21:1–7.

2. Pew Research Center. Pew Research Center Internet Project Omnibus Survey. Washington, DC: Pew; 2014.

3. Blumenthal S, Somashekar G. Advancing health with information technology in the 21st century. The Huffington Post. 2015. Available at: http://huffingtonpost.com/susan-blumenthal/advancing-health-with-inf_b_7968190.html. Accessed November 21, 2016.

4. Singh K, Bates D, Drouin K, et al. Developing a Framework for Evaluating the Patient Engagement, Quality, and Safety of Mobile Health Applications. New York, NY: The Commonwealth Fund; 2016. Available at: www.commonwealthfund.org/publications/issue-briefs/2016. Accessed November 21, 2016.

5. Jamison RN, Raymond SA, Levine J, et al. Electronic diaries for monitoring chronic pain: 1-year validation study. Pain. 2001;91:277–285.

6. Jamison RN, Gracely RH, Raymond SA, et al. Comparative study of electronic vs. paper VAS ratings: a randomized, crossover trial utilizing healthy volunteers. Pain. 2002;99:341–347.

7. Palermo TM, Valenzuela D, Stork PP. A randomized trial of electronic versus paper pain diaries in children: impact on compliance, accuracy, and acceptability. Pain. 2004;107:213–219.

8. Stone AA, Broderick JE. Real-time data collection for pain: appraisal and current status. Pain Med. 2007;8:S85–S93.

9. Heron KE, Smyth JM. Ecological momentary interventions: incorporating mobile technology into psychosocial and health behaviour treatments. Br J Health Psychol. 2010;15:1–39.

10. Hundert AS, Huguet A, McGrath PJ, et al. Commercially available mobile phone headache diary apps: a systematic review. JMIR Mhealth Uhealth. 2014;2:e36, 1–13.

11. Stinson JN, Jibb LA, Nguyen C, et al. Development and testing of a multidimensional iPhone pain assessment application for adolescents with cancer. J Med Internet Res. 2013;15:e51, 1–15.

12. Reynoldson C, Stones C, Allsop M, et al. Assessing the quality and usability of smartphone apps for pain self-management. Pain Med. 2014;15:898–909.

13. Vega R, Roset R, Castarlenas E, et al. Development and testing of Painometer: a smartphone app to assess pain intensity. J Pain. 2014;15:1001–1007.

14. Rosser BA, Eccleston C. Smartphone applications for pain management. J Telemed Telecare. 2011;17:308–312.

15. Wallace LS, Dhingra LK. A systematic review of smartphone applications for chronic pain available for download in the United States. J Opioid Manag. 2014;10:63–68.

16. Lalloo C, Jibb LA, Rivera J, et al. “There’s a pain app for that”: review of patient-targeted smartphone applications for pain management. Clin J Pain. 2015;31:557–563.

17. Kristjansdottir OB, Fors EA, Eide E, et al. A smartphone-based intervention with diaries and therapist-feedback to reduce catastrophizing and increase functioning in women with chronic widespread pain: randomized controlled trial. J Med Internet Res. 2013;15:e72, 1–19.

18. Vanderboom CE, Vincent A, Luedtke CA, et al. Feasibility of interactive technology for symptom monitoring in patients with fibromyalgia. Pain Manag Nurs. 2013;15:1–8.

19. Melzack R, Wall PD. Pain mechanisms: a new theory. Science. 1965;150:971–979.

20. Jamison RN. Learning to Master Your Chronic Pain. Sarasota, FL: Professional Research Press; 1996.

21. Abbott JH. The distinction between randomized clinical trials (RCTs) and preliminary feasibility and pilot studies: what they are and are not. J Orthop Sports Phys Ther. 2014;44:555–558.

22. Cleeland CS, Ryan KM. Pain assessment: global use of the Brief Pain Inventory. Ann Acad Med Singapore. 1994;23:129–138.

23. Daut RL, Cleeland CS, Flanery RC. Development of the Wisconsin Brief Pain Questionnaire to assess pain in cancer and other diseases. Pain. 1983;17:197–210.

24. Sullivan MJ, Pivik J. The Pain Catastrophizing Scale: development and validation. Psychol Assess. 1995;7:524–532.

25. Sullivan MJ, Stanish W, Waite H, et al. Catastrophizing, pain, and disability in patients with soft-tissue injuries. Pain. 1998;77:253–260.

26. Tait RC, Pollard CA, Margolis RB, et al. The Pain Disability Index: psychometric and validity data. Arch Phys Med Rehabil. 1987;68:138–141.

27. Zigmond AS, Snaith RP. The Hospital Anxiety and Depression Scale. Acta Psychiatr Scand. 1983;67:361–370.

28. Bjelland I, Dahl AA, Haug TT, et al. The validity of the Hospital Anxiety and Depression Scale: an updated literature review. J Psychosom Res. 2002;52:69–77.

29. Jensen MP, Keefe FJ, Lefebvre JC, et al. One- and two-item measures of pain beliefs and coping strategies. Pain. 2003;104:453–469.

30. Rosenstiel AK, Keefe FJ. The use of coping strategies in chronic low back pain patients: relationship to patient characteristics and current adjustment. Pain. 1983;17:33–44.

31. Institute of Medicine. Relieving Pain in America: A Blueprint for Transforming Prevention, Care, Education, and Research. Washington, DC: The National Academies Press; 2011.