Abstract

Recently, virtual reality (VR) and augmented reality (AR) have received increasing attention, with the development of VR/AR devices such as head-mounted displays, haptic devices, and AR glasses. Medicine is considered to be one of the most effective applications of VR/AR. In this article, we describe a systematic literature review conducted to investigate the state-of-the-art VR/AR technology relevant to plastic surgery. The 35 studies that were ultimately selected were categorized into 3 representative topics: VR/AR-based preoperative planning, navigation, and training. In addition, future trends of VR/AR technology associated with plastic surgery and related fields are discussed.

Keywords: Virtual reality, Augmented reality, Plastic surgery, Virtual surgery, Virtual simulation

INTRODUCTION

The term virtual reality (VR) was coined by Jaron Lanier in 1986 to refer to the following collection of technological devices: a computer capable of interactive 3-dimensional (3D) visualization, a head-mounted display (HMD), and controllers equipped with 1 or more position trackers [1]. The first applications of VR in healthcare appeared in the early 1990s, when VR was used to visualize complex medical data during surgery and to plan surgical procedures preoperatively [2]. Today, VR is commonly defined either as a computer graphics-based simulation of the real world or as a 3D world in which communities of real people interact; create content, items, and services; and produce real economic value through e-commerce [3]. Haptic technology is sometimes added to VR to make the simulation more immersive by generating a virtual tactile sensation with force-feedback haptic devices [4]. Haptic technology recreates the sense of touch by applying forces, vibrations, or motions to the user.

In real surgery, a thorough, accurate, and detailed knowledge of the anatomy of the surgical target is essential. This is especially true in plastic surgery, where most surgical outcomes are directly reflected in the patient's external appearance. With advances in computer graphics and sensors, VR and augmented reality (AR) have opened new opportunities for developing the diagnostic and operative techniques used in reconstructive and aesthetic plastic surgery. Compared with 2-dimensional methods, 3D computer simulation enables more accurate, realistic, and intuitive diagnosis and surgical analysis. Although VR/AR technology cannot yet fully simulate all 5 human senses, many of the remaining obstacles are likely to be overcome in the near future, given the pace at which VR/AR techniques and sensors are being developed.

In this paper, we introduce state-of-the-art VR/AR technology relevant to plastic surgery by discussing recent articles and products. Because few studies and products relate specifically to plastic surgery, we also included research from other surgical specialties, such as orthopedic surgery and neurosurgery. We also discuss future trends of VR/AR technology associated with plastic surgery and related fields.

METHODS

A systematic review examining the current status of VR technology and its surgical applications was conducted using PubMed and Google Scholar, covering publications from 2005 to 2017. We used combinations of search terms including “virtual reality,” “plastic surgery,” “maxillofacial,” “surgery,” “jaw surgery,” “simulation,” “training,” “head mounted display,” and “haptic.” We focused on the use of VR devices such as HMDs, 3D eyewear, and haptic devices in maxillofacial surgery; AR-based technologies were also considered. In total, 35 publications and products, selected from 78 studies and 15 commercial systems, were included in this review, and 14 of the included publications dealt with maxillofacial surgery.

RESULTS

The 78 publications retrieved by the search were screened by title and abstract, and 45 were selected for full-text review. An additional 15 commercial systems were identified through a manual search. Finally, 31 papers and 4 products were selected for this review on the basis of how closely the surgical target was related to plastic surgery. Table 1 shows the categorized search results relating to the surgical applications of VR/AR [5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39]. Considering the main concerns and interests in VR/AR-based surgery, we classified the selected studies and products into 3 groups according to clinical application: surgical planning (patient-specific simulation), surgical navigation, and surgical training. Within each clinical application, subcategories were defined by surgical domain and device.

Table 1. Categorized search results relating to the surgical applications of VR/AR.

| Clinical application/Surgical domain | Devices |
|---|---|
| Surgical planning | |
| Orthognathic surgery | Motion tracking dental cast model (mixed reality) [5] |
| Facial contouring | Haptic device [6,7] |
| Cranio-maxillofacial reconstruction | Haptic device [8], 3D eyewear [8], immersive workbench [8] |
| Cleft lip repair | Haptic device [9] |
| Cranio-maxillofacial fracture reduction | Haptic device [10,11], immersive workbench [10], 3D eyewear [10] |
| Orthopedic fracture reduction | Haptic device [12,13,14], 3D display [12,13], 3D eyewear [13], AR device [15] |
| Orthopedic drilling | Haptic device [16] |
| Neurosurgery | Haptic device [17,18], 3D eyewear [17,18], immersive workbench [17,18] |
| Surgical navigation | |
| Orthognathic surgery | AR device [19,20,21] |
| Facial contouring | AR device [22] |
| Other maxillofacial surgery | AR device [23] |
| Bone tumor resection | AR device [24] |
| Neurosurgery | AR device [25] |
| Surgical training | |
| Orthognathic surgery | Haptic device [26,27], immersive workbench [26,27] |
| Orthopedic fracture reduction | Haptic device [28,29,30], bone model (mixed reality) [29] |
| Other orthopedic surgery | HMD [31], hand-held controller [31] |
| Orthopedic drilling, burring | Haptic device [32,33,34], 3D eyewear [33] |
| Neurosurgery | Haptic device [18,35,36,37,38,39], AR device [37], 3D eyewear [18,35,36], stereoscopic microscope view [39], immersive workbench [18,35,36,39] |

VR, virtual reality; AR, augmented reality; 3D, 3-dimensional; HMD, head-mounted display.

Surgical planning

This section covers 13 publications and 1 commercial product related to patient-specific simulation in the preoperative phase using 3D mesh models of patients. The models were reconstructed from medical image data such as computed tomography (CT) and magnetic resonance imaging (MRI) volumes. VR/AR devices were used for orthopedic operations involving bones with complex shapes, such as the cranio-maxillofacial bones and the hip bone, and VR-based surgical planning has also been investigated in neurosurgery. Most of these studies used a haptic device to translate and rotate 3D mesh models and to provide a tactile sense during simulated bone cutting, drilling, and burring.

For maxillofacial surgery, Fushima and Kobayashi [5] proposed a mixed reality-based system using a dental cast model and a 3D maxillofacial mesh model. The system synchronized the movement of the physical dental cast model with that of the 3D patient model in the virtual world, so that orthognathic planning could be performed by moving the dental cast and letting the 3D model follow its transformation.
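The synchronization described above can be sketched as a rigid-body update: if a tracker reports the dental cast's pose as a 4x4 homogeneous transform, the virtual jaw segment is moved by the same relative motion. The minimal Python sketch below assumes that the virtual segment was registered to the tracker frame when the reference pose was captured; all function names are hypothetical, and this is not the implementation of Fushima and Kobayashi [5].

```python
import math


def rotation_z(theta):
    """3x3 rotation matrix about the z axis (theta in radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def make_pose(rotation, translation):
    """Pack a 3x3 rotation and a 3-vector translation into a 4x4 transform."""
    return [list(rotation[i]) + [translation[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def mat_mul(a, b):
    """Product of two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert_pose(pose):
    """Inverse of a rigid transform: rotation R^T and translation -R^T t."""
    r_t = [[pose[j][i] for j in range(3)] for i in range(3)]
    t = [pose[i][3] for i in range(3)]
    t_inv = [-sum(r_t[i][k] * t[k] for k in range(3)) for i in range(3)]
    return make_pose(r_t, t_inv)


def apply_pose(pose, vertices):
    """Apply a 4x4 rigid transform to a list of (x, y, z) vertices."""
    return [
        tuple(pose[i][0] * x + pose[i][1] * y + pose[i][2] * z + pose[i][3] for i in range(3))
        for (x, y, z) in vertices
    ]


def sync_virtual_segment(reference_pose, current_pose, segment_vertices):
    """Move the virtual segment by the cast's motion since the reference pose.

    Both poses are expressed in the tracker frame, so the relative motion is
    current_pose * inverse(reference_pose).
    """
    motion = mat_mul(current_pose, invert_pose(reference_pose))
    return apply_pose(motion, segment_vertices)
```

For example, if the cast moved 2 mm along x since the reference pose, every vertex of the virtual segment is shifted by the same 2 mm. Expressing the update as a relative motion means the 3D model follows the cast without needing to know where the tracker's origin is.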

In facial contouring surgery, Tsai et al. [6] used a haptic device in a surgical simulation of reducing a protruding zygoma and inserting a chin implant. Mandibular angle reduction has also been simulated using a VR-based system [7]. For mandibular reconstructive surgery, Woo et al. [40] performed 3D virtual planning using computer simulation. Olsson et al. [8] targeted cranio-maxillofacial reconstructive surgery with an installation consisting of an immersive workbench and 3D eyewear. The workbench consisted of a semi-transparent mirror, a display monitor, and a haptic device to improve the surgeon's immersion during surgical planning. The system was designed to follow real surgical procedures, enabling the surgeon to simulate mandibulectomy and fibula transfer to the mandibular defect site using 3D patient mesh models. It also allowed surgeons to test candidate configurations of the vessels and skin paddle (Figs. 1, 2) [8]. Schendel et al. [9] simulated cleft lip repair for surgical planning, using a haptic device to make incisions and close the cleft in 3D patient skin models.

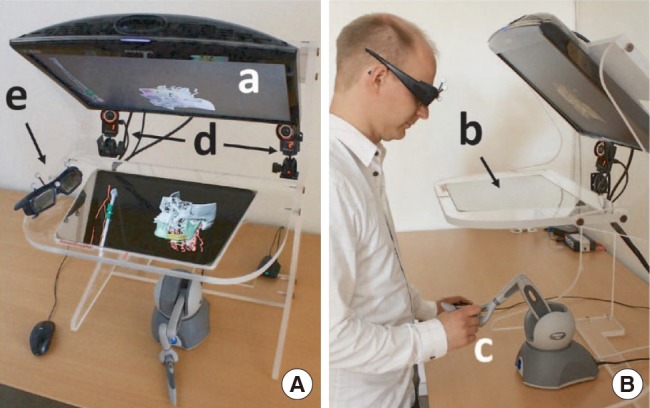

Fig. 1. VR-based workbench system for mandibular reconstruction surgery.

VR-based workbench system as seen from above (A) and from the side (B). The monitor (a) displays the anatomical 3D model, which is reflected on the half-transparent mirror (b). The surgeon manipulates the 3D model with the haptic device (c) under the mirror. The infrared cameras (d) track the markers on the stereo glasses (e) for user look-around. VR, virtual reality; 3D, 3-dimensional. Adapted from Olsson et al. Plast Reconstr Surg Glob Open 2015;3:e479 [8], with permission of Wolters Kluwer Health Inc. via Copyright Clearance Center.

Fig. 2. Reconstruction planning workflow.

(A) Load segmented bone and vessels from computed tomography angiogram data and resect bone to prepare the recipient site for reconstruction. (B) Define the positions, orientations, and angulations of fibula segments; test pedicle reach to anastomosis sites on the recipient vessels; and test possible skin paddle configurations. (C) Resulting plan. The user iterates within the Design and Test stage to find a suitable configuration for the fibula, vessels, and skin paddle. Adapted from Olsson et al. Plast Reconstr Surg Glob Open 2015;3:e479 [8], with permission of Wolters Kluwer Health Inc. via Copyright Clearance Center.

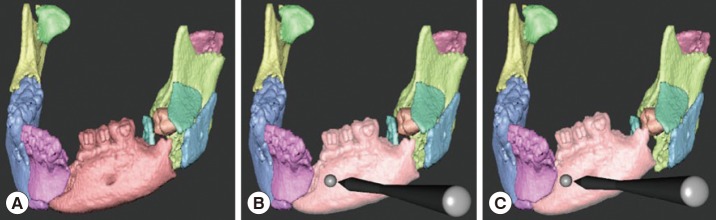

Virtual surgery for complex cranio-maxillofacial fracture reduction has also been studied using 3D patient bone mesh models (Fig. 3) [10,11]. Both studies used a haptic device to manipulate bone fragments; Olsson et al. [10] additionally used an immersive workbench and 3D eyewear.

Fig. 3. Virtual reconstruction of mandible.

Each individual bone fragment is given a unique color (A). When the haptic cursor is held close to a bone fragment, it is highlighted (B) and the user can then grasp and manipulate it with the 6-DOF haptic handle (C). Contact forces guide the user during manipulation. DOF, degree of freedom. Adapted from Olsson et al. Int J Comput Assist Radiol Surg 2013;8:887-94 [10], on the basis of Open Access.
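One common way to generate contact forces like those described in the Fig. 3 caption is penalty-based haptic rendering, in which the force grows with penetration depth like a spring. The sketch below is a simplified illustration, not the method of Olsson et al. [10]: it approximates the haptic cursor and a bone fragment as spheres, and the stiffness and force-limit values are hypothetical. In practice, such a function would be evaluated inside the haptic servo loop, which commonly runs at around 1 kHz.

```python
import math


def penalty_contact_force(cursor_center, cursor_radius, fragment_center,
                          fragment_radius, stiffness, max_force):
    """Spring-like (penalty) force pushing the haptic cursor out of a fragment.

    Both objects are approximated as spheres (an illustrative simplification).
    Positions and radii are in metres, stiffness in N/m, and max_force in N,
    so the returned 3-vector is in newtons. The force is zero when the spheres
    do not overlap.
    """
    d = [c - f for c, f in zip(cursor_center, fragment_center)]
    dist = math.sqrt(sum(x * x for x in d))
    penetration = cursor_radius + fragment_radius - dist
    # No contact, or coincident centres where the push direction is undefined.
    if penetration <= 0.0 or dist == 0.0:
        return (0.0, 0.0, 0.0)
    normal = [x / dist for x in d]                        # unit push-out direction
    magnitude = min(stiffness * penetration, max_force)   # clamp to the device limit
    return tuple(magnitude * n for n in normal)
```

Clamping the magnitude reflects the fact that a physical haptic device can only output a limited force; a stiffer spring makes contact feel harder but can destabilize the servo loop.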

Beyond cranio-maxillofacial fracture surgery, Kovler et al. [12] addressed hip fracture reduction surgery using a haptic device and 3D eyewear. Femur fracture reduction planning using a haptic device has been presented [14], and the system was further elaborated by incorporating an immersive screen and a user tracking system [13]. For orthopedic fracture reduction surgery, Shen et al. [15] proposed a surgical plate pre-bending system. Their AR system, which consisted of a camera, a marker, and a display device, allowed the surgeon to position a real implant against a designed 3D implant model presented on the display and to bend it to fit the planned shape. In a study of burring simulation with proposed applications in various orthopedic procedures, a haptic device was used for burring in 3D patient bone mesh models [16]. Luciano et al. [17] focused on spinal fixation simulation using ImmersiveTouch [18], an immersive workbench-based simulation system specialized for neurosurgery that includes a haptic device and 3D eyewear.
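Burring simulations such as [16] must remove bone wherever the virtual burr passes. For simplicity, the sketch below swaps in a voxel representation in place of the mesh models described above: at each step, occupied voxels whose centres fall inside a spherical burr are deleted. Voxel (i, j, k) is assumed to be centred at (i, j, k) × voxel_size, and the function name and parameters are hypothetical.

```python
import math


def burr_step(bone_voxels, tip, burr_radius, voxel_size):
    """Remove occupied voxels whose centres lie inside a spherical burr.

    bone_voxels: set of integer (i, j, k) indices; voxel (i, j, k) is assumed
    to be centred at (i, j, k) * voxel_size. tip and burr_radius use the same
    length unit as voxel_size (e.g. mm). The set is modified in place, and the
    number of voxels removed in this step is returned.
    """
    r = burr_radius / voxel_size                    # radius in voxel units
    ci, cj, ck = (t / voxel_size for t in tip)      # burr centre in voxel units
    base = (round(ci), round(cj), round(ck))        # nearest voxel to the tip
    reach = math.ceil(r) + 1                        # half-width of the search window
    removed = 0
    for di in range(-reach, reach + 1):
        for dj in range(-reach, reach + 1):
            for dk in range(-reach, reach + 1):
                v = (base[0] + di, base[1] + dj, base[2] + dk)
                inside = (v[0] - ci) ** 2 + (v[1] - cj) ** 2 + (v[2] - ck) ** 2 <= r * r
                if inside and v in bone_voxels:
                    bone_voxels.remove(v)
                    removed += 1
    return removed
```

Calling `burr_step` once per frame with the tracked tip position progressively carves the bone. A full simulator would also compute a resisting force from the removed material and update the rendered surface, which this sketch omits.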

Surgical navigation

This section covers 7 publications describing systems that display information such as patient anatomy, surgical plans, and the positions of surgical tools to support the surgeon. AR-based technologies have been used for orthognathic surgery, facial contouring, other maxillofacial surgery, bone tumor resection, and neurosurgery. Most publications in this section used 3D patient mesh models reconstructed from CT or MRI data, which the systems overlaid onto real-time video to provide surgeons with preoperative planning and anatomical information.
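Overlaying a 3D model on live video, as described above, amounts to projecting the model's points into the image once the model-to-camera pose is known. The minimal sketch below uses an ideal pinhole camera and ignores lens distortion. The pose (rotation, translation) and the intrinsic parameters (fx, fy, cx, cy) are assumed to come from tracking and camera calibration; they are not values from any of the cited systems.

```python
def project_points(points_model, rotation, translation, fx, fy, cx, cy):
    """Project 3D model points to pixel coordinates with a pinhole camera.

    rotation (3x3) and translation (3,) map model coordinates into the camera
    frame, whose z axis points along the viewing direction. fx, fy are focal
    lengths in pixels and (cx, cy) is the principal point. Points at or behind
    the camera plane (z <= 0) cannot be drawn and are returned as None.
    """
    pixels = []
    for p in points_model:
        # Rigid transform from model space to camera space.
        xc = [sum(rotation[i][k] * p[k] for k in range(3)) + translation[i] for i in range(3)]
        if xc[2] <= 0.0:
            pixels.append(None)
            continue
        # Perspective division followed by the intrinsic mapping.
        u = fx * xc[0] / xc[2] + cx
        v = fy * xc[1] / xc[2] + cy
        pixels.append((u, v))
    return pixels
```

In an AR display loop, the projected points (or the projected mesh triangles) are drawn over each video frame, and the pose is refreshed from the tracker so that the overlay stays aligned as the camera or patient moves.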

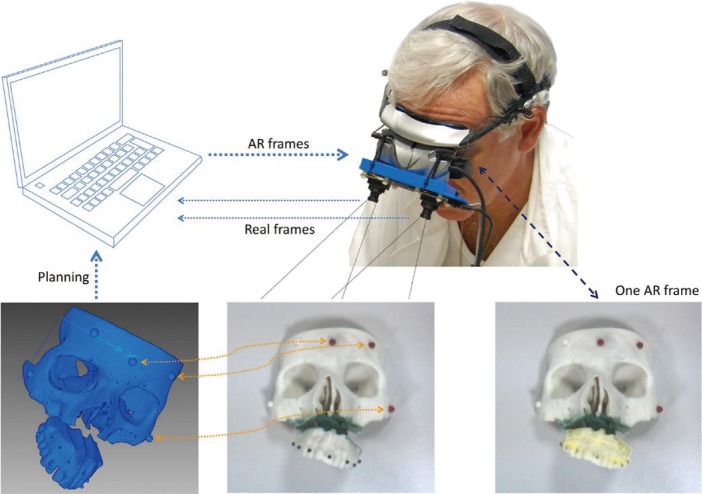

AR-based navigation systems have been introduced for orthognathic surgery, overlaying virtual surgical plans on real surgical views for guidance [19,20,21]. Badiali et al. [19] used an HMD to display the overlaid images, allowing surgeons to follow virtual surgical plans when repositioning the patient's bones after maxillofacial osteotomies (Fig. 4). Zinser et al. [20] and Mischkowski et al. [21] displayed the overlaid images on an interactive portable display with a camera, which could be handled easily during surgery (Fig. 5).

Fig. 4. Wearable AR system for maxillofacial surgery.

The two external cameras acquire real video frames. The software application merges the virtual 3D model derived during surgical planning with real data from the camera frames and sends the result to the two internal monitors. Alignment between real and virtual information is obtained by calculating the positions of colored markers relative to camera data, with respect to their known positions (recorded during planning using detailed preoperative CT images). AR, augmented reality; 3D, 3-dimensional; CT, computed tomography. Adapted from Badiali et al. J Craniomaxillofac Surg 2014;42:1970-6 [19], with permission of Elsevier via Copyright Clearance Center.

Fig. 5. Application of AR-based display in orthognathic surgery.

Surgeon's view of the segmented virtual maxilla in an AR environment. AR, augmented reality. Adapted from Mischkowski et al. J Craniomaxillofac Surg 2006;34:478-83 [21], with permission of Elsevier via Copyright Clearance Center.
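The marker-based alignment described in the Fig. 4 caption depends on matching marker positions measured during surgery to their known positions from planning. Assuming that the marker positions have already been reconstructed in 3D (for example, from the two cameras), one standard way to compute the aligning transform is least-squares rigid registration via singular value decomposition (the Kabsch method). The sketch below is illustrative and is not the algorithm used by Badiali et al. [19]. The function names are hypothetical, and the sketch also reports the fiducial registration error (the root-mean-square residual).

```python
import numpy as np


def rigid_register(model_pts, measured_pts):
    """Least-squares rigid transform (R, t) such that measured ~= R @ model + t.

    model_pts, measured_pts: (N, 3) arrays of corresponding marker positions,
    with N >= 3 points that are not collinear.
    """
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(measured_pts, dtype=float)
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p_mean).T @ (Q - q_mean)            # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))       # -1 would indicate a reflection
    D = np.diag([1.0, 1.0, d])                   # force a proper rotation
    R = Vt.T @ D @ U.T
    t = q_mean - R @ p_mean
    return R, t


def fiducial_registration_error(model_pts, measured_pts, R, t):
    """Root-mean-square distance between measured and transformed model points."""
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(measured_pts, dtype=float)
    residuals = Q - (P @ R.T + t)
    return float(np.sqrt((residuals ** 2).sum(axis=1).mean()))
```

With noise-free correspondences, the recovered transform reproduces the true one and the registration error is essentially zero. With real, noisy marker detections, the error gives a quick check on whether the overlay can be trusted.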

AR technology has also been used in facial contouring surgery. Lin et al. [22] developed an AR-based system for mandibular angle osteotomy that overlays a 3D patient mandible model and a virtually planned 3D surgical guide model on the real surgical view via an HMD. This system helped surgeons place the surgical guide at the planned position and perform the cutting accurately.

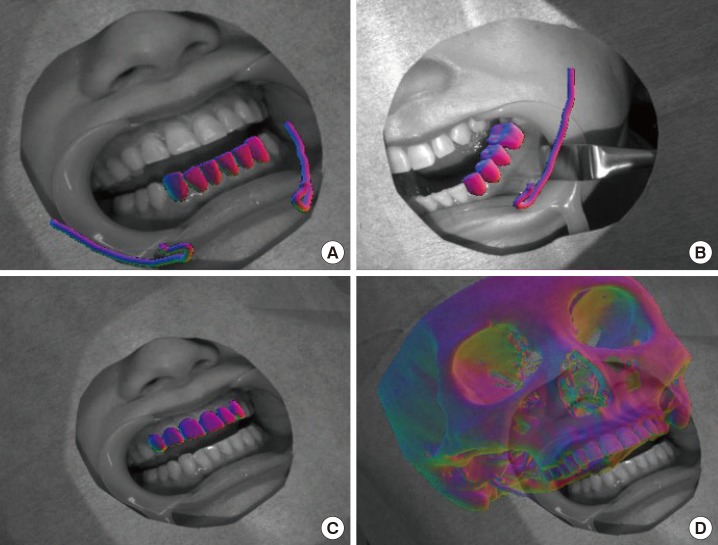

Wang et al. [23] proposed a markerless AR-based technology to support a wide range of oral and maxillofacial procedures (Figs. 6, 7). It tracked the patient's position by matching a 3D model of the patient's teeth to the real teeth in the real-time video image, and it also overlaid other 3D anatomical models such as the maxillofacial bone, nerves, and vessels.

Fig. 6. Augmented fusion of patient's models for surgical visualization.

(A) and (B) Image registration results using the lower front teeth and lower left molars models. Nerve canals are overlaid on the image for surgical visualization. (C) and (D) Image registration result using the upper front teeth model and the resulting augmented fusion of the maxillofacial model with the camera video for surgical visualization. Adapted from Wang et al. Int J Med Robot 2016;2016 Jun 9 [Epub]. http://doi.org/10.1002/rcs.1754 [23], with permission of John Wiley and Sons via Copyright Clearance Center.

Fig. 7. Markerless AR-based system for oral and maxillofacial surgery.

(A) Picture of the surgeon wearing the 4K camera. (B) Teeth tracking and (C) video see-through augmented reality validated on clinical data. The model of the carotid artery of the patient is overlaid. Adapted from Wang et al. Int J Med Robot 2016;2016 Jun 9 [Epub]. http://doi.org/10.1002/rcs.1754 [23], with permission of John Wiley and Sons via Copyright Clearance Center.

Choi et al. [24] developed an AR-based surgical navigation system for bone tumor resection in the pelvic region using a tablet PC with an embedded camera. The system overlaid a 3D model of the patient's tumor and the planned resection margin on the real surgical view. However, depth is difficult for the user to perceive in AR display images. Choi et al. [25] addressed this difficulty by switching between AR and VR display images and by displaying the closest distance between the real surgical tool and the 3D patient mesh model. They also applied this technique to spine surgery.
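The closest-distance display of Choi et al. [25] requires computing, at each frame, the distance between the tracked tool tip and the 3D patient mesh. A brute-force sketch follows. For every triangle, it finds the closest point using the Voronoi-region method described in Ericson's *Real-Time Collision Detection* and keeps the minimum. The function names are hypothetical, and a practical system would likely use a spatial acceleration structure rather than checking every triangle.

```python
import math


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _add_scaled(a, u, s):
    return (a[0] + s * u[0], a[1] + s * u[1], a[2] + s * u[2])


def closest_point_on_triangle(p, a, b, c):
    """Closest point to p on triangle abc (Voronoi-region case analysis)."""
    ab, ac, ap = _sub(b, a), _sub(c, a), _sub(p, a)
    d1, d2 = _dot(ab, ap), _dot(ac, ap)
    if d1 <= 0 and d2 <= 0:
        return a                                    # vertex region A
    bp = _sub(p, b)
    d3, d4 = _dot(ab, bp), _dot(ac, bp)
    if d3 >= 0 and d4 <= d3:
        return b                                    # vertex region B
    vc = d1 * d4 - d3 * d2
    if vc <= 0 and d1 >= 0 and d3 <= 0:
        return _add_scaled(a, ab, d1 / (d1 - d3))   # edge AB
    cp = _sub(p, c)
    d5, d6 = _dot(ab, cp), _dot(ac, cp)
    if d6 >= 0 and d5 <= d6:
        return c                                    # vertex region C
    vb = d5 * d2 - d1 * d6
    if vb <= 0 and d2 >= 0 and d6 <= 0:
        return _add_scaled(a, ac, d2 / (d2 - d6))   # edge AC
    va = d3 * d6 - d5 * d4
    if va <= 0 and (d4 - d3) >= 0 and (d5 - d6) >= 0:
        w = (d4 - d3) / ((d4 - d3) + (d5 - d6))
        return _add_scaled(b, _sub(c, b), w)        # edge BC
    denom = 1.0 / (va + vb + vc)                    # interior: barycentric weights
    v, w = vb * denom, vc * denom
    return _add_scaled(_add_scaled(a, ab, v), ac, w)


def tool_to_mesh_distance(tip, vertices, triangles):
    """Minimum distance from the tool tip to a triangle mesh, and the nearest point."""
    best, best_pt = math.inf, None
    for i, j, k in triangles:
        q = closest_point_on_triangle(tip, vertices[i], vertices[j], vertices[k])
        d = math.dist(tip, q)
        if d < best:
            best, best_pt = d, q
    return best, best_pt
```

The returned nearest point can also be drawn as a line from the tool tip to the mesh, which gives the user a depth cue that a plain AR overlay lacks.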

Surgical training

This section covers 11 publications and 3 commercial systems that target surgical skill training or use non-patient-specific data for surgical simulation. Haptic devices have mostly been used to train skills such as bone drilling, burring, and cutting. The systems discussed here cover orthognathic maxillofacial surgery, orthopedic surgery, and neurosurgery.

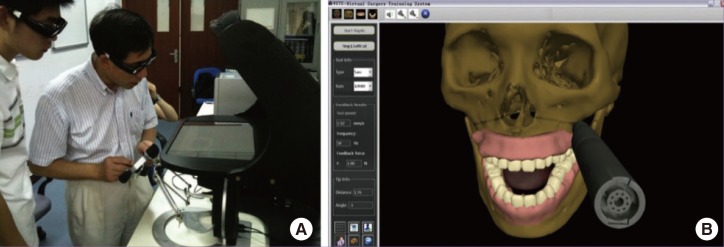

Wu et al. [26] and Lin et al. [27] developed an immersive workbench system with a haptic device for training in orthognathic maxillofacial surgery procedures (Fig. 8). Both studies focused specifically on Le Fort I procedures. To allow surgeons to train for these procedures, the system provided bone sawing, drilling, and plate fixation with haptic force feedback.

Fig. 8. Virtual training system for maxillofacial surgery.

(A) A surgeon evaluating use of the simulator, and (B) the bone sawing procedure for 6 trials. Adapted from Lin Y, et al. J Biomed Inform 2014;48:122-9 [27], with permission of Elsevier via Copyright Clearance Center.

For orthopedic fracture reduction surgery, VR-based wire navigation training simulators have been developed. Seah et al. [28] used a haptic device and a 3D bone mesh model for training in Kirschner wire placement in distal radius fracture reduction surgery. Thomas et al. [29] proposed a mixed reality-based wire navigation simulator composed of a real drill, a plastic bone model, and a 3D bone model, which may be used for intertrochanteric fractures. TraumaVision [30] is a commercial product that simulates orthopedic trauma surgery using a haptic device; it also provides virtual fluoroscopic images and a 3D mesh model to support training in fracture reduction and implant placement. OssoVR [31] is a VR-based simulation platform for immersive training in surgical procedures using an HMD and tracked hand-held controllers (Fig. 9), which lets the surgeon interact naturally with the virtual world using his or her hands. In orthopedic surgery, most VR-based training studies targeting skills such as bone drilling and burring have used a haptic device to give the surgeon a tactile experience [32,33,34]. The system developed by Wong et al. [33] additionally included 3D eyewear to enhance the user's immersion.

Fig. 9. VR-based training for orthopedic surgery.

OssoVR, virtual training simulation of orthopedic surgery using virtual reality (VR). Adapted from http://ossovr.com [31], with permission of OssoVR.
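Wire navigation simulators such as those described above need some way to score a trainee's wire placement. As an illustration only, the sketch below computes the tip error and the angular deviation between a planned and an actual wire trajectory, each defined by an entry point and a tip point. These metrics are assumptions and not those reported in [28,29,30], and the function name is hypothetical.

```python
import math


def wire_placement_error(planned_entry, planned_tip, actual_entry, actual_tip):
    """Return (tip error, angular deviation in degrees) for a placed wire.

    All points are (x, y, z) in the same length unit (e.g. mm); the tip error
    is reported in that unit. Each trajectory is the vector from its entry
    point to its tip.
    """
    tip_error = math.dist(planned_tip, actual_tip)
    u = [t - e for t, e in zip(planned_tip, planned_entry)]
    v = [t - e for t, e in zip(actual_tip, actual_entry)]
    cos_angle = sum(a * b for a, b in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))
    cos_angle = max(-1.0, min(1.0, cos_angle))   # guard acos against rounding error
    return tip_error, math.degrees(math.acos(cos_angle))
```

Reporting position and angle separately keeps the two failure modes distinct: a wire can end at the right point along a poor trajectory, or run parallel to the plan but be offset.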

In neurosurgery, Lemole et al. [35] and Alaraj et al. [36] used ImmersiveTouch [18] for neurosurgical education (Fig. 10). Sutherland et al. [37] presented an AR-based haptic training simulator for spinal needle insertion. Tsai and Hsieh [38] also developed a haptic-device-based system for spinal surgery, but their simulator was specialized for burring. NeuroVR [39] is an immersive workbench system with a haptic device. Instead of 3D eyewear, it provides a 3D view through a stereoscopic microscope viewer fixed on the workbench. The surgeon can simulate scenarios ranging from cranial opening to endoscopic brain surgery.

Fig. 10. VR-based simulation system for neurosurgery.

A general view of the immersive virtual reality (VR) environment being used by a resident. The operator is using haptic devices in both right and left hand which mimic the operating room environment. The virtual image is projected on a screen in front of the resident. Adapted from Alaraj A, et al. Neurosurgery 2015;11 Suppl 2:52-8 [36], on the basis of Open Access.

DISCUSSION

As discussed in the Introduction, VR/AR is a collection of technologies that involve a computer, software, display devices, and tracking sensors. Beyond the hardware, however, it is essential to consider how the procedural and technological knowledge involved in surgery can be expressed in terms of computer processing [41]. Current VR still lacks realism, but this is likely to improve in the near future.

Based on our literature survey, VR/AR technologies applied to plastic surgery can be categorized into 3 areas: surgical planning, navigation, and training. VR-based surgical planning uses VR technology and patient-specific models for optimal preoperative planning. VR-based surgical navigation often incorporates AR technology to guide the operation with additional information, such as the patient's anatomical features and the preoperative plan. VR-based training has also been extensively investigated, and many commercial products are already available for educational purposes in medicine. The state-of-the-art technologies in each category are summarized in the Results section. Here, we highlight the main advantages of VR/AR in plastic surgery:

- VR/AR aims to present virtual objects to the human senses in a way that closely approximates their natural counterparts.
- As a diagnostic tool, 3D reconstruction of organs from radiological data can provide a more naturalistic view of a patient's appearance and anatomy.
- Preoperative surgical planning can provide a more realistic prediction of the outcome, especially in craniofacial and aesthetic surgery.
- Computerized 3D atlases of human anatomy, physiology, and pathology can provide better learning and training systems for plastic and reconstructive surgery.
- Intraoperative navigation can reduce the risk of major complications and improve the likelihood of optimal surgical results.
- VR/AR technology can play an important role in telemedicine, from remote diagnosis to complex teleinterventions.

In the future, VR/AR will evolve toward more realistic, immersive, and interactive simulations. Further development in the 3 areas discussed above (surgical planning, navigation, and training) should enable wide use of VR/AR in clinical settings, including plastic surgery. As technology evolves, other new VR/AR-related techniques will attract interest. One example is holographic interaction: many researchers worldwide are investigating holographic displays and tactile feedback in 3D worlds [42,43]. Once the current technical problems of holographic interaction are solved, surgical applications using it may open new horizons. Another interesting topic in VR/AR-based surgery is telepresence surgery, in which a surgeon uses master and slave robots to carry out a procedure remotely with the aid of advanced VR/AR technology.

Although VR/AR technology has not yet been widely used in plastic surgery, it has great potential in surgical planning, navigation, and training. Given the speed at which VR/AR software and hardware are developing, successful applications in plastic surgery are likely to help surgeons achieve better surgical outcomes with high efficiency in the near future. To realize this potential, surgeons and engineers alike need to pursue clinical applications actively, building on the beneficial aspects of VR/AR.

Footnotes

This research was supported by the Korea Institute of Science and Technology institutional program (2E26880, 2E26276).

No potential conflict of interest relevant to this article was reported.

References

- 1. Zimmerman TG, Lanier J, Blanchard C, et al. A hand gesture interface device; Proceedings of the SIGCHI/GI Conference on Human Factors in Computing Systems and Graphics Interface; 1987 Apr 5-9; Toronto, CA. 1987. pp. 189–192.

- 2. Chinnock C. Virtual reality in surgery and medicine. Hosp Technol Ser. 1994;13:1–48.

- 3. Pensieri C, Pennacchini M. Overview: virtual reality in medicine. J Virtual Worlds Res. 2014;7:1–34.

- 4. Geomagic. Geomagic Haptic Devices [Internet]. Rock Hill, SC: Geomagic; c2016 [cited 2017 Feb 7]. Available from: http://www.geomagic.com/en/products-landing-pages/haptic.

- 5. Fushima K, Kobayashi M. Mixed-reality simulation for orthognathic surgery. Maxillofac Plast Reconstr Surg. 2016;38:13. doi: 10.1186/s40902-016-0059-z.

- 6. Tsai MD, Liu CS, Liu HY, et al. Virtual reality facial contouring surgery simulator based on CT transversal slices; Proceedings of the 5th International Conference on Bioinformatics and Biomedical Engineering; 2011 May 10-12; Wuhan, China. 2011. pp. 1–4.

- 7. Wang Q, Chen H, Wu W, et al. Real-time mandibular angle reduction surgical simulation with haptic rendering. IEEE Trans Inf Technol Biomed. 2012;16:1105–1114. doi: 10.1109/TITB.2012.2218114.

- 8. Olsson P, Nysjo F, Rodriguez-Lorenzo A, et al. Haptics-assisted virtual planning of bone, soft tissue, and vessels in fibula osteocutaneous free flaps. Plast Reconstr Surg Glob Open. 2015;3:e479. doi: 10.1097/GOX.0000000000000447.

- 9. Schendel S, Montgomery K, Sorokin A, et al. A surgical simulator for planning and performing repair of cleft lips. J Craniomaxillofac Surg. 2005;33:223–228. doi: 10.1016/j.jcms.2005.05.002.

- 10. Olsson P, Nysjo F, Hirsch JM, et al. A haptics-assisted cranio-maxillofacial surgery planning system for restoring skeletal anatomy in complex trauma cases. Int J Comput Assist Radiol Surg. 2013;8:887–894. doi: 10.1007/s11548-013-0827-5.

- 11. Zhang J, Li D, Liu Q, et al. Virtual surgical system in reduction of maxillary fracture; Proceedings of the 2015 IEEE International Conference on Digital Signal Processing (DSP); 2015 Jul 21-24; Singapore. 2015. pp. 1102–1105.

- 12. Kovler I, Joskowicz L, Weil YA, et al. Haptic computer-assisted patient-specific preoperative planning for orthopedic fractures surgery. Int J Comput Assist Radiol Surg. 2015;10:1535–1546. doi: 10.1007/s11548-015-1162-9.

- 13. Cecil J, Ramanathan P, Pirela-Cruz M, et al. A Virtual Reality Based Simulation Environment for Orthopedic Surgery. In: Meersman R, Panetto H, Mishra A, et al., editors. On the Move to Meaningful Internet Systems: OTM 2014 Workshops; Confederated International Workshops: OTM Academy, OTM Industry Case Studies Program, C&TC, EI2N, INBAST, ISDE, META4eS, MSC and OnToContent; 2014 Oct 27-31; Amantea, Italy. Berlin, Heidelberg: Springer Berlin Heidelberg; 2014. pp. 275–285.

- 14. Cecil J, Ramanathan P, Rahneshin V, et al. Collaborative virtual environments for orthopedic surgery; Proceedings of the 2013 IEEE International Conference on Automation Science and Engineering (CASE); 2013 Aug 17-20; Madison, WI. 2013. pp. 133–137.

- 15. Shen F, Chen B, Guo Q, et al. Augmented reality patient-specific reconstruction plate design for pelvic and acetabular fracture surgery. Int J Comput Assist Radiol Surg. 2013;8:169–179. doi: 10.1007/s11548-012-0775-5.

- 16. Chan S, Li P, Locketz G, et al. High-fidelity haptic and visual rendering for patient-specific simulation of temporal bone surgery. Comput Assist Surg (Abingdon). 2016;21:85–101. doi: 10.1080/24699322.2016.1189966.

- 17. Luciano CJ, Banerjee PP, Sorenson JM, et al. Percutaneous spinal fixation simulation with virtual reality and haptics. Neurosurgery. 2013;72(Suppl 1):89–96. doi: 10.1227/NEU.0b013e3182750a8d.

- 18. ImmersiveTouch Inc. ImmersiveTouch [Internet]. Chicago, IL: ImmersiveTouch Inc; 2017 [cited 2017 Jan 12]. Available from: http://www.immersivetouch.com.

- 19. Badiali G, Ferrari V, Cutolo F, et al. Augmented reality as an aid in maxillofacial surgery: validation of a wearable system allowing maxillary repositioning. J Craniomaxillofac Surg. 2014;42:1970–1976. doi: 10.1016/j.jcms.2014.09.001.

- 20. Zinser MJ, Mischkowski RA, Dreiseidler T, et al. Computer-assisted orthognathic surgery: waferless maxillary positioning, versatility, and accuracy of an image-guided visualisation display. Br J Oral Maxillofac Surg. 2013;51:827–833. doi: 10.1016/j.bjoms.2013.06.014.

- 21. Mischkowski RA, Zinser MJ, Kubler AC, et al. Application of an augmented reality tool for maxillary positioning in orthognathic surgery: a feasibility study. J Craniomaxillofac Surg. 2006;34:478–483. doi: 10.1016/j.jcms.2006.07.862.

- 22. Lin L, Shi Y, Tan A, et al. Mandibular angle split osteotomy based on a novel augmented reality navigation using specialized robot-assisted arms: a feasibility study. J Craniomaxillofac Surg. 2016;44:215–223. doi: 10.1016/j.jcms.2015.10.024.

- 23. Wang J, Suenaga H, Yang L, et al. Video see-through augmented reality for oral and maxillofacial surgery. Int J Med Robot. 2016 Jun 9 [Epub]. doi: 10.1002/rcs.1754.

- 24. Choi H, Park Y, Cho H, et al. An augmented reality based simple navigation system for pelvic tumor resection; Proceedings of the 11th Asian Conference on Computer Aided Surgery (ACCAS 2015); 2015 Jul 9-11; Singapore. 2015.

- 25. Choi H, Cho B, Masamune K, et al. An effective visualization technique for depth perception in augmented reality-based surgical navigation. Int J Med Robot. 2016;12:62–72. doi: 10.1002/rcs.1657.

- 26. Wu F, Chen X, Lin Y, et al. A virtual training system for maxillofacial surgery using advanced haptic feedback and immersive workbench. Int J Med Robot. 2014;10:78–87. doi: 10.1002/rcs.1514.

- 27. Lin Y, Wang X, Wu F, et al. Development and validation of a surgical training simulator with haptic feedback for learning bone-sawing skill. J Biomed Inform. 2014;48:122–129. doi: 10.1016/j.jbi.2013.12.010.

- 28. Seah TE, Barrow A, Baskaradas A, et al. A virtual reality system to train image guided placement of kirschner-wires for distal radius fractures. In: Bello F, Cotin S, editors. Biomedical Simulation; 6th International Symposium, ISBMS 2014; 2014 Oct 16-17; Strasbourg, FR. Cham: Springer International Publishing; 2014. pp. 20–29.

- 29. Thomas GW, Johns BD, Kho JY, et al. The validity and reliability of a hybrid reality simulator for wire navigation in orthopedic surgery. IEEE Trans Hum Mach Syst. 2015;45:119–125.

- 30. Swemac. TraumaVision-medical orthopedic simulator [Internet]. Linkoping, SE: Swemac; c2017 [cited 2017 Jan 12]. Available from: http://www.swemac.com/simulators/traumavision.

- 31. OssoVR. OssoVR [Internet]. San Francisco, CA: OssoVR [cited 2017 Feb 6]. Available from: http://ossovr.com.

- 32. Vankipuram M, Kahol K, McLaren A, et al. A virtual reality simulator for orthopedic basic skills: a design and validation study. J Biomed Inform. 2010;43:661–668. doi: 10.1016/j.jbi.2010.05.016.

- 33. Wong D, Unger B, Kraut J, et al. Comparison of cadaveric and isomorphic virtual haptic simulation in temporal bone training. J Otolaryngol Head Neck Surg. 2014;43:31. doi: 10.1186/s40463-014-0031-9.

- 34. Qiong W, Hui C, Wen W, et al. Impulse-based rendering methods for haptic simulation of bone-burring. IEEE Trans Haptics. 2012;5:344–355. doi: 10.1109/TOH.2011.69.

- 35. Lemole GM, Jr, Banerjee PP, Luciano C, et al. Virtual reality in neurosurgical education: part-task ventriculostomy simulation with dynamic visual and haptic feedback. Neurosurgery. 2007;61:142–148. doi: 10.1227/01.neu.0000279734.22931.21.

- 36. Alaraj A, Luciano CJ, Bailey DP, et al. Virtual reality cerebral aneurysm clipping simulation with real-time haptic feedback. Neurosurgery. 2015;11(Suppl 2):52–58. doi: 10.1227/NEU.0000000000000583.

- 37. Sutherland C, Hashtrudi-Zaad K, Sellens R, et al. An augmented reality haptic training simulator for spinal needle procedures. IEEE Trans Biomed Eng. 2013;60:3009–3018. doi: 10.1109/TBME.2012.2236091.

- 38. Tsai MD, Hsieh MS. Computer based system for simulating spine surgery; Proceedings of the 22nd IEEE International Symposium on Computer-Based Medical Systems; 2009 Aug 2-5; Albuquerque, NM. 2009. pp. 1–8.

- 39. CAE Healthcare Inc. NeuroVR [Internet]. Montreal, CA: CAE Healthcare; 2017 [cited 2017 Jan 12]. Available from: http://caehealthcare.com/eng/interventional-simulators/neurovr.

- 40. Woo T, Kraeima J, Kim YO, et al. Mandible reconstruction with 3D virtual planning. J Int Soc Simul Surg. 2015;2:90–93.

- 41. Burdea G, Coiffet P. Virtual reality technology. Hoboken, NJ: J. Wiley-Interscience; 2003.

- 42. Hackett M, Proctor M. Three-dimensional display technologies for anatomical education: a literature review. J Sci Educ Technol. 2016;25:641–654.

- 43. Makino Y, Furuyama Y, Inoue S, et al. HaptoClone (Haptic-Optical Clone) for mutual tele-environment by real-time 3D image transfer with midair force feedback; Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems; 2016; Santa Clara, CA. ACM; 2016. pp. 1980–1990.