Significance

People perform tasks in coordination with others in daily life, but the mechanisms are not well understood. Using Granger causality models to examine string quartet dynamics, we demonstrated that musicians assigned as leaders affect other performers more than musicians assigned as followers. These effects were present when musicians could only hear each other, but were magnified when they could also see each other, indicating that both auditory and visual cues affect nonverbal social interactions. Furthermore, the overall degree of coupling between musicians was positively correlated with ratings of performance success. Thus, we have developed a method for measuring nonverbal interaction in complex situations and have shown that interaction dynamics are affected by social relations and perceptual cues.

Keywords: leadership, joint action, music performance, body sway, Granger causality

Abstract

The cultural and technological achievements of the human species depend on complex social interactions. Nonverbal interpersonal coordination, or joint action, is a crucial element of social interaction, but the dynamics of nonverbal information flow among people are not well understood. We used joint music making in string quartets, a complex, naturalistic nonverbal behavior, as a model system. Using motion capture, we recorded body sway simultaneously in four musicians, which reflected real-time interpersonal information sharing. We used Granger causality to analyze predictive relationships among the motion time series of the players to determine the magnitude and direction of information flow among the players. We experimentally manipulated which musician was the leader (followers were not informed who was leading) and whether they could see each other, to investigate how these variables affect information flow. We found that assigned leaders exerted significantly greater influence on others and were less influenced by others compared with followers. This effect was present, whether or not they could see each other, but was enhanced with visual information, indicating that visual as well as auditory information is used in musical coordination. Importantly, performers’ ratings of the “goodness” of their performances were positively correlated with the overall degree of body sway coupling, indicating that communication through body sway reflects perceived performance success. These results confirm that information sharing in a nonverbal joint action task occurs through both auditory and visual cues and that the dynamics of information flow are affected by changing group relationships.

Coordinating actions with others in time and space—joint action—is essential for daily life. From opening a door for someone to conducting an orchestra, periods of attentional and physical synchrony are required to achieve a shared goal. Humans have been shaped by evolution to engage in a high level of social interaction, reflected in high perceptual sensitivity to communicative features in voices and faces, the ability to understand the thoughts and beliefs of others, sensitivity to joint attention, and the ability to coordinate goal-directed actions with others (1–3). The social importance of joint action is demonstrated in that simply moving in synchrony with another increases interpersonal affiliation, trust, and/or cooperative behavior in infants and adults (e.g., refs. 4–9). The temporal predictability of music provides an ideal framework for achieving such synchronous movement, and it has been hypothesized that musical behavior evolved and remains adaptive today because it promotes cooperative social interaction and joint action (10–12). Indeed music is used in important situations where the goal is for people to feel a social bond, such as at religious ceremonies, weddings, funerals, parties, sporting events, political rallies, and in the military.

In social contexts, individuals often assume leader and follower roles. For example, to jointly lift a heavy object, one person may verbally indicate the upcoming joint movements. However, many forms of joint action are coordinated nonverbally, such as dancing a tango or performing music in an ensemble. Previous studies have examined nonverbal joint action between two coactors engaged in constrained laboratory tasks in terms of how they adapt to each other (2, 13–16). However, the effect of social roles, such as leader and follower, on group coordination is not well understood, nor are the nonverbal mechanisms by which leader–follower information is communicated, particularly in ecologically realistic situations involving more than two coactors (2).

Music ensembles provide an ideal model to study leader–follower dynamics. Music exists in all human cultures as a social activity, and group coordination is a universal aspect of music (12, 17), which suggests that music and coordination functions may be linked in the human brain. Ensemble music performance shares many psychological principles with other forms of interpersonal coordination, including walking, dancing, and speaking (18). The principles of coordination in musical ensembles are thus expected to generalize to other interaction situations. Small music ensembles are well suited to studying joint action because they act as self-managed teams, with all performers contributing to the coordination of the team (19, 20).

The complex, nonverbal characteristics of ensemble playing require high sensorimotor, social, and motivational engagement to achieve shared technical and aesthetic goals without explicit verbal guidance, making it a tractable problem for initial study of joint action in ecologically realistic situations. Although musical scores provide some objective guidance for coordination, performers vary tempo, phrasing, articulation, and loudness dynamically to achieve joint musical expression (21–23). Thus, music ensembles provide an opportunity to investigate coordination and adaptation in a complex nonverbal interaction task in which agents work toward a shared goal: an aesthetic performance of music.

Previous studies on music and coordination have focused primarily on note-to-note temporal synchrony (23), including how it is modulated by the partner (24–29) and the role of perceptual information (30–33). A few studies have attempted to describe temporal synchronization among musicians using mathematical models (34–36). However, we are not aware of any existing studies that have done so using an experimental manipulation of leadership for investigating interpersonal coordination beyond the note-to-note level. Unlike most previous studies, we examined leader–follower interaction dynamics at a more global level than note-to-note timing accuracy. Specifically, we measured body sway in two string quartets. Body sway is a global measurement of an individual’s actions (14) not precisely time-locked to the musical notes or the finger and bow movements that are critical to musical sound generation. We used body sway as a reflection of interpersonal communication in achieving a joint aesthetic goal by coordinating performance aspects, such as phrasing, dynamics, timbre, and expressive timing between musicians. We used a bidirectional modeling technique—Granger causality (GC)—to study how musicians form a common musical expression. Leadership assignment was experimentally manipulated, as well as the presence of visual information and compositional style of the music performed, to study changes in information flow between musicians.

Body sway has been used to index underlying mechanisms of joint action as it becomes interpersonally coupled when individuals engage in a coordinated task (37, 38) and during conversation (14, 15). Body sway dynamics are thought to reflect real-time interpersonal information sharing (14, 16). Previous studies of music ensembles suggest that body sway helps pianists coordinate tempo fluctuations (31) and that the body sway of performers playing the leading melody tends to precede the sway of those who play the accompaniment (30, 39). However, it remains unclear whether body sway is a motor byproduct of music performance or reflects higher order aspects of joint action, such as leader–follower communication. In the present study, musicians’ body sway was recorded with motion capture while we experimentally manipulated leader–follower roles through assignment of a “secret” leader on each trial, with the other musicians not told who the leader was. We hypothesized that body sway dynamics among performers would change as different performers were assigned the leadership role.

Auditory and visual information can contribute to coordinating joint actions, particularly in the absence of verbal communication (2). Even when visual information is not directly relevant, visual cues can improve interpersonal coordination (14, 40–42). In piano duos, being able to see the other performer increases temporal synchrony (31). Here, we examined how visual information affects leader–follower dynamics. We manipulated visual information by having the musicians face each other (visual present) or face away from one another (visual absent). We hypothesized that the influence of leader–follower dynamics would be higher in the visual-present condition, indicating that visual information, in addition to auditory information, is used to facilitate coordination.

We extended previous research on interpersonal coordination by examining directional information flow among group members using Granger causality analysis. Granger causality is well-suited to the analysis of interdependent time-series data because it quantifies how well the history of one time series predicts the current status of a second time series, after taking into account how much the second time series is predicted by its own previous history, in the form of a log-likelihood ratio referred to as GC (Fig. 1B) (43, 44). The larger the value of GC, the better the prediction and the more information is flowing from one time series to another. In the context of music ensembles, Granger causality will reveal both the direction and magnitude of information flow among performers. Previous studies primarily used cross-correlation to examine the similarity between movement time series. However, this measure is not sensitive to the direction of information flow between agents and may result in type I errors if time series are autocorrelated (45). A few previous observational studies on music ensembles used Granger causality to analyze bow movements, timbre variations, and body sway of performers (39, 46, 47). However, because these studies did not manipulate leadership assignment or visual information, it remains unclear whether previous findings reflect a motor byproduct of executing music scores or are related to higher order aspects of joint action, such as leader–follower communication.
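As a rough illustration of the log-ratio idea described above, the following Python sketch estimates a conditional GC value from ordinary least-squares autoregressions. It is a minimal sketch under stated assumptions, not the implementation referenced in ref. 43: the function names (`lagged`, `granger_causality`), the model order of 2, and the simulated "leader" and "follower" series are all hypothetical and are not taken from the study.

```python
import numpy as np

def lagged(x, order):
    """Columns x[t-1], ..., x[t-order] for t = order .. len(x)-1."""
    n = len(x)
    return np.column_stack([x[order - k : n - k] for k in range(1, order + 1)])

def granger_causality(source, target, others=(), order=2):
    """GC from source to target, conditional on the series in `others`.

    Returns log(residual variance without source history /
                residual variance with source history).
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    y = target[order:]  # values to be predicted
    # Restricted model: intercept, target's own history, other performers' history.
    restricted = [np.ones((len(y), 1)), lagged(target, order)]
    restricted += [lagged(np.asarray(o, dtype=float), order) for o in others]
    # Full model: everything above plus the source's history.
    full = restricted + [lagged(source, order)]

    def residual_variance(blocks):
        X = np.hstack(blocks)
        beta, *_ = np.linalg.lstsq(X, y, rcond=None)
        return np.mean((y - X @ beta) ** 2)

    return float(np.log(residual_variance(restricted) / residual_variance(full)))

# Simulated (not recorded) data: the follower depends on the leader's past.
rng = np.random.default_rng(0)
n = 2000
leader = np.zeros(n)
follower = np.zeros(n)
for t in range(1, n):
    leader[t] = 0.8 * leader[t - 1] + rng.normal()
    follower[t] = 0.5 * follower[t - 1] + 0.6 * leader[t - 1] + rng.normal()

gc_lf = granger_causality(leader, follower)  # leader -> follower
gc_fl = granger_causality(follower, leader)  # follower -> leader
print(f"GC leader->follower: {gc_lf:.3f}, follower->leader: {gc_fl:.3f}")
```

In this simulation the leader-to-follower value should come out clearly larger than the follower-to-leader value, which is the kind of asymmetry that cross-correlation alone does not expose. For a quartet, the two remaining performers' series would be passed through `others`.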

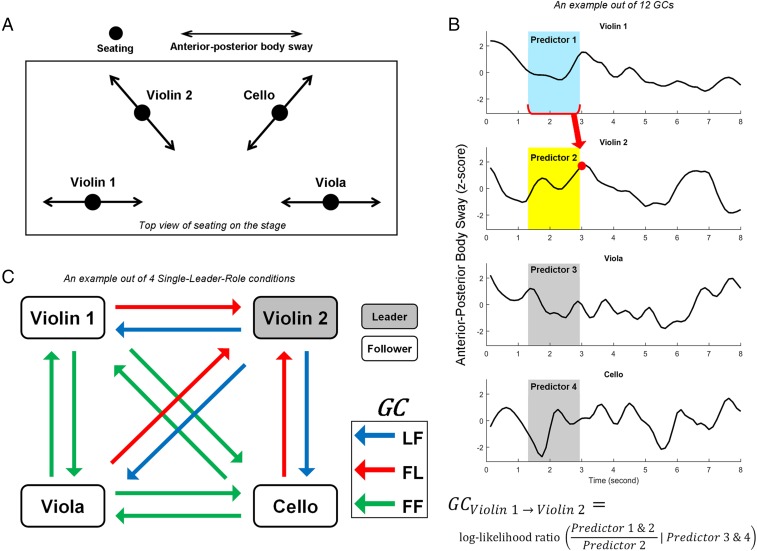

Fig. 1.

Illustrations of the experimental design and Granger causality analyses. (A) Top-down view of the locations of performers on stage. (B) Example excerpt of recorded anterior–posterior body sway motion time series in four performers from the middle of a trial. The Granger causality (GC) of the body sway of violin 1 directionally coupling to (or predicting) violin 2, for example, was calculated by taking the log-likelihood ratio of the degree to which the prior body sway time series of violin 1 (predictor 1, shaded in light blue) contributes to predicting the current status of violin 2 (red dot), over and above the degree to which it is predicted by its own prior time series (predictor 2, shaded in light yellow), while conditional on the prior time series of the other performers (predictors 3 and 4, shaded in gray). This calculation was repeated along the entire time axis to estimate GC. The length of the predictor window (shaded areas) was determined by the model order. The algorithm is conceptually expressed by the equation shown (see ref. 43 for mathematical details). (C) Categorization of the 12 directional relationship pairs between the four performers into three Paired-Roles. We calculated the GCs of directional body sway couplings (using the formula outlined in B) for all 12 directional pairs of performers (shown as arrows) in each quartet as shown on the left. For Single-Leader-Role trials, the 12 GCs were then categorized according to whether the Paired-Role was leader-to-follower (LF), follower-to-leader (FL), or follower-to-follower (FF). For example, if violin 2 was assigned as the single leader while the others were followers in a trial, the arrows (blue) coming out from violin 2 were categorized as LF, the arrows (red) pointing at violin 2 as FL, and the other arrows (green) as FF. For Ambiguous-Role trials, all 12 GCs were categorized as either all leaders (L-all) or all followers (F-all) (not shown in the figure). 
We treated the 12 unique directional pairs in a quartet on each trial as 12 unique samples for repeated-measures statistical analyses.
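The categorization of directional pairs described for panel C can be sketched in a few lines of Python. This is only an illustration: the function name `categorize_pairs` and the instrument labels are hypothetical names chosen for this example.

```python
from collections import Counter
from itertools import permutations

PERFORMERS = ("violin 1", "violin 2", "viola", "cello")

def categorize_pairs(leader, performers=PERFORMERS):
    """Label each directional (source, target) pair as LF, FL, or FF."""
    labels = {}
    for source, target in permutations(performers, 2):
        if source == leader:
            labels[(source, target)] = "LF"  # leader-to-follower
        elif target == leader:
            labels[(source, target)] = "FL"  # follower-to-leader
        else:
            labels[(source, target)] = "FF"  # follower-to-follower
    return labels

# Example from the caption: violin 2 is the single assigned leader.
labels = categorize_pairs("violin 2")
counts = Counter(labels.values())
for role in ("LF", "FL", "FF"):
    print(role, counts[role])
```

With four performers and one leader, the 12 directional pairs split into 3 LF, 3 FL, and 6 FF pairs.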

In the present study, we investigated information flow in two professional string quartets, manipulating leadership assignment and the presence of visual information. Performers’ body sways were recorded with motion capture (Fig. 1A) while they performed works that varied in compositional style. Different performers were secretly assigned leader or follower roles on different trials (Fig. 1C). On all trials, musicians were told that one performer would be assigned the role of quartet leader. On Single-Leader-Role trials, only one of the four musicians was assigned as leader and the others as followers. We hypothesized that leaders would have greater influence on the body sway of followers than vice versa or than between followers, indexed by Granger causality. On Ambiguous-Role trials, either all four performers were assigned as leaders, or all four performers were assigned as followers, contrary to the expectations of the musicians. This assignment was made to examine whether information flow (indexed by Granger causality) between musicians would increase over the performance of a musical excerpt as the musicians established a joint musical interpretation. We also examined the role of visual information by comparing Granger causality when the musicians faced each other (Seeing) or faced away (Nonseeing). We hypothesized that the influence of leader–follower dynamics would be higher in the visual present (Seeing) condition, indicating that visual information, in addition to auditory information, is used for predicting other musicians’ intentions. We replicated the effects in two separate string quartets playing two different styles of music. One quartet played musical pieces from the Baroque period (quartet 1), in which the different parts are fairly equal in importance, whereas the second quartet played music from the Classical period (quartet 2). Because music of the Classical period more strongly assigns the leader role to one player (typically the first violinist) (33, 34), replicating the effect of leadership assignment on information flow in Classical period music would provide strong support for our conclusions.

Results

Quartet 1.

In quartet 1, the string quartet played Baroque music excerpts in which all four parts—violin 1, violin 2, viola, and cello—were fairly equal in importance.

Subjective identification of the leader.

In Single-Leader-Role conditions, one member of the quartet was assigned as the leader and the rest as followers. For Single-Leader-Role trials (including both Seeing and Nonseeing conditions), on average 2.94 ± 0.25 (mean ± SD) out of the three performers who were followers on each trial correctly identified who the leader was, and a Wilcoxon signed-rank test showed that this result exceeded chance levels (one of three followers correctly identifying the leader) with P < 0.001. Thus, followers were highly accurate at identifying who the secretly assigned leader was on each trial, suggesting that experimentally manipulated leader–follower relationships were successfully built during music performances.

Analyses of body sway coupling in Single-Leader-Role conditions.

A two-way repeated-measures analysis of variance (rANOVA) was conducted on GC scores, with Paired-Role [leader-to-follower (LF), follower-to-leader (FL), and follower-to-follower (FF)] and Vision (Seeing, Nonseeing) as within-subjects factors.

The results of quartet 1 (Fig. 2A) showed a significant interaction [F(2, 22) = 14.70, P < 0.001, f² = 0.38]. The simple effect analyses further showed that Paired-Role modulated GC under both Seeing [F(2, 22) = 37.75, P < 0.001, f² = 1.02] and Nonseeing [F(2, 22) = 26.03, P < 0.001, f² = 0.70] conditions.

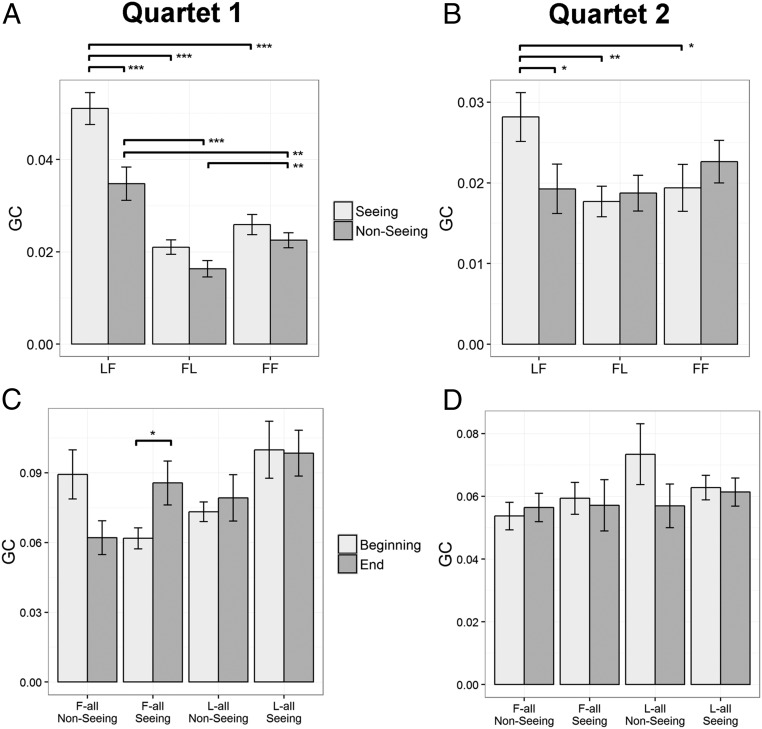

Fig. 2.

GC of interpersonal body sway couplings. Comparisons are marked as *P < 0.05, **P < 0.01, ***P < 0.001. Error bars represent SE. (A and B) GCs of Single-Leader-Role trials are presented in (A) for quartet 1 and (B) for quartet 2. Both experiments showed a significant interaction between Paired-Role and Vision. Specifically, the LF (leader-to-follower) coupling was higher than both FL (follower-to-leader) and FF (follower-to-follower) couplings when performers could see each other, but this effect was attenuated when performers could not see each other. Also, the GC of LF coupling was higher when performers could see each other than when they could not. These results show that GC reflects leader–follower relationships, and seeing or not seeing others specifically mediates the LF coupling. (C and D) GC scores at the beginnings and ends of Ambiguous-Role trials are presented in (C) for quartet 1 and (D) for quartet 2. In quartet 1 (Baroque music), there was a significant three-way interaction (Time × Role × Vision). Specifically, GC increased from the first 30 s to the last 30 s of pieces performed when all performers were assigned as followers and they could see each other. The same analyses on quartet 2 (Classical music) did not show any significant effects.

Follow-up t tests among different levels of Paired-Role showed that, in the Seeing condition, GC was higher in the LF condition than in the FF [t(11) = 6.18, P < 0.001] and FL [t(11) = 7.33, P < 0.001] conditions, but GC was not different between FF and FL conditions [t(11) = 1.73, P = 0.111]. In the Nonseeing condition, GC in the LF condition was higher than in the FF [t(11) = 3.73, P = 0.003] and FL [t(11) = 7.54, P < 0.001] conditions, and GC in the FF condition was higher than in the FL condition [t(11) = 3.29, P = 0.007].

Additionally, GC was higher in Seeing than Nonseeing under the LF condition [t(11) = 7.69, P < 0.001], but Vision did not significantly modulate GC under the FF [t(11) = 1.92, P = 0.081] or FL [t(11) = 1.80, P = 0.099] conditions.
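The follow-up comparisons above are paired t tests with 11 degrees of freedom, that is, 12 paired samples. A minimal Python sketch of that computation is given below. The function name `paired_t` is hypothetical, and the GC values are made-up numbers for illustration only, not data from the study; the P value would additionally require a t distribution, which is omitted here.

```python
import math
from statistics import mean, stdev

def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for equal-length lists."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1

# Made-up GC values for 12 paired samples (illustration only, not study data).
gc_seeing = [0.12, 0.15, 0.11, 0.14, 0.16, 0.13, 0.12, 0.17, 0.15, 0.14, 0.13, 0.16]
gc_nonseeing = [0.10, 0.12, 0.11, 0.11, 0.13, 0.12, 0.10, 0.13, 0.14, 0.12, 0.11, 0.13]

t_value, df = paired_t(gc_seeing, gc_nonseeing)
print(f"t({df}) = {t_value:.2f}")
```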

In sum, the results showed an interaction between Paired-Role and Vision on GC of body sway coupling between performers. Specifically, the body sway of the leader predicted those of the followers (LF coupling) to a greater extent than the other directional couplings, follower-to-follower and follower-to-leader (FF and FL, respectively). Being able to see the other performers specifically facilitated the LF coupling but not the other pairings.

Analyses of body sway coupling in Ambiguous-Role conditions.

On the Ambiguous-Role trials, either all performers were assigned the leader role (L-all), or all were assigned the follower role (F-all). Our main hypothesis was that performers would nonverbally build a joint coordinated pattern over the course of a musical excerpt. A three-way rANOVA was performed with Time (Beginning, End), Ambiguous-Role (L-all, F-all), and Vision (Seeing, Nonseeing) as factors.

Results of quartet 1 (Fig. 2C) showed a significant three-way interaction [F(1, 11) = 6.85, P = 0.024, f² = 0.06]. Under the L-all condition, the simple rANOVA did not show any significant effects (P values > 0.06). Under the F-all condition, there was a significant interaction between Vision and Time [F(1, 11) = 10.65, P = 0.008, f² = 0.20]. Paired t tests further showed that the GC was larger in the End than the Beginning of the trial in the Seeing condition [t(11) = 2.86, P = 0.016], but not in the Nonseeing condition [t(11) = −2.14, P = 0.055].

In sum, under Ambiguous-Role assignment, there was some evidence of learning over the course of a trial in the case where all performers were assigned follower roles, but there was no evidence of this learning when all performers were assigned leader roles. It is possible that three minutes is simply not long enough to see robust changes in coupling strength in musical performance under these conditions.

Correlations between body sway coupling and subjective ratings of performances.

We examined whether body sway coupling among quartet performers was associated with their subjective ratings of how well the quartet achieved the task goal (Fig. 3). Specifically, each performer rated three aspects of the performance after each trial: (i) the overall goodness of the ensemble performance, (ii) the temporal synchronization of the group, and (iii) the ease of coordination among performers.

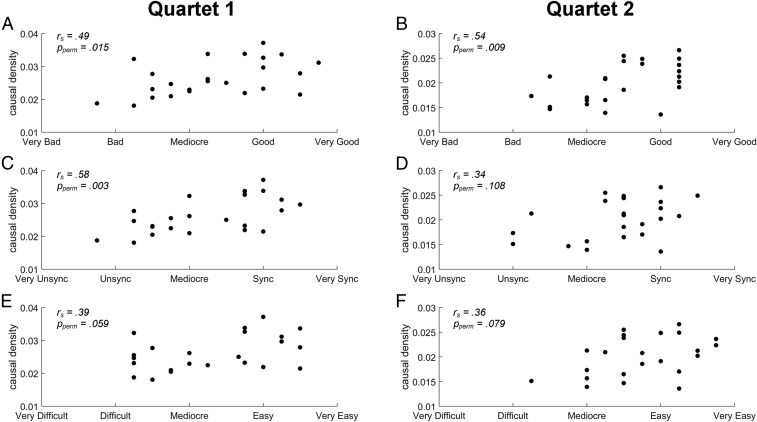

Fig. 3.

Correlations between subjective ratings and group body sway couplings. (A, C, and E) Scatter plots of the results of quartet 1. (B, D, and F) Scatter plots of the results of quartet 2. On each trial, each performer subjectively rated the levels of goodness of performance (A and B), temporal synchronization (C and D), and ease of coordination (E and F), with five-point Likert scales, and we calculated the mean rating across the performers for each of these three aspects for each trial. To represent the group causal density (44) of body sway, we calculated the mean of the 12 GCs reflecting the pairwise influences for each trial, which reflects the overall causal interactivity sustained in a quartet. The Spearman rank correlation tests on all trials (n = 24) showed that the group body sway coupling was positively correlated with goodness of performance level in both experiments and also positively correlated with temporal synchronization level in quartet 1 but not in quartet 2.

Across trials, causal density, the mean GC of overall interpersonal body sway coupling (44), was positively correlated with the performers’ subjective ratings of goodness [rs(23) = 0.49, 95% CI (0.11, 0.75), Pperm (P value of permutation test) = 0.015] and synchronization [rs(23) = 0.58, CI (0.23, 0.80), Pperm = 0.003], but it did not correlate significantly with rated ease of coordination [rs(23) = 0.39, CI (−0.02, 0.69), Pperm = 0.059].
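The analysis chain described here (mean of the 12 GCs per trial, Spearman rank correlation with the mean rating, permutation P value) can be sketched in plain Python as follows. This is an illustrative sketch under assumptions: the permutation scheme (shuffling ratings across trials, two-sided), the function names, and the simulated trial data are all hypothetical, since the text does not specify these details.

```python
import random
from statistics import mean

def causal_density(gc_values):
    """Mean of the pairwise GC values for one trial."""
    return mean(gc_values)

def ranks(values):
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return r

def pearson(x, y):
    mx, my = mean(x), mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return num / den

def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

def permutation_p(x, y, n_perm=2000, seed=0):
    """Two-sided permutation P value, shuffling y across trials (assumed scheme)."""
    rng = random.Random(seed)
    observed = abs(spearman(x, y))
    shuffled = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(spearman(x, shuffled)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)

# Simulated data for 24 trials (not study data): 12 GCs and one mean rating per trial.
rng = random.Random(1)
trial_gcs = [[rng.uniform(0.0, 0.2) for _ in range(12)] for _ in range(24)]
density = [causal_density(g) for g in trial_gcs]
ratings = [1 + 20 * d + rng.gauss(0, 0.3) for d in density]
print(f"rs = {spearman(density, ratings):.2f}, Pperm = {permutation_p(density, ratings):.3f}")
```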

Quartet 2.

The procedure with quartet 1 was repeated in a second string quartet that played Classical music excerpts in which one instrument (most often the first violin) usually has the most important melodic part; however, we instructed the musicians to attempt to perform the piece using their assigned roles. We performed the same analyses as in quartet 1, summarized here (see SI Results for detailed results).

Subjective identification of the leader.

On Single-Leader-Role trials, the leader was correctly identified on average by 2.44 ± 0.63 out of the three followers, which is higher than chance levels (P < 0.001).

Analyses of body sway coupling in Single-Leader-Role conditions.

The analyses of body sway coupling in Single-Leader-Role conditions (Fig. 2B) replicated the results of quartet 1 in that Paired-Role and Vision interactively modulated GC [F(2, 22) = 4.43, P = 0.024, f² = 0.10]. The t tests further showed that, in the Seeing condition, GC was higher in the LF than FL [t(11) = 3.18, P = 0.009] and FF [t(11) = 2.36, P = 0.038] conditions, and GC for the LF condition was higher in the Seeing than Nonseeing condition [t(11) = 2.57, P = 0.026].

Analyses of body sway coupling in Ambiguous-Role conditions.

On the Ambiguous-Role trials (Fig. 2D), the three-way rANOVA did not find any significant effect (P values > 0.128), and the t tests on changes in GC between the Beginning and the End of the performance were not significant within any Ambiguous-Role × Vision condition [unsigned t(11) < 2.14, P values > 0.056], suggesting that, with Classical music, the performers were not able to improve the information flow within 3 min when faced with an ambiguous role assignment.

Correlations between body sway coupling and subjective ratings of performances.

The Spearman correlational analyses between total body sway coupling within a quartet and subjective ratings of performances (Fig. 3) showed that the GC causal density positively correlated with the subjective rating of how good the performance was across trials [rs(23) = 0.54, CI (0.17, 0.77), Pperm = 0.009], as in quartet 1. Correlations with rated synchronization level (Pperm = 0.108) and rated ease of coordination (Pperm = 0.079) were not significant.

Differences Between Baroque and Classical Music Styles.

We hypothesized that, although the basic findings would be similar across quartets and musical styles, because Classical music (played in quartet 2) tends to have one instrument playing the melody and the others playing accompaniment, unlike the Baroque music in quartet 1, the quartets would adopt somewhat different coordinative strategies. This unbalanced default leadership hypothesis is supported in that (i) a Mann–Whitney U test showed that more followers correctly identified the assigned leader when Baroque music was played compared with Classical music (z = 2.45, P = 0.007), and (ii) the Kruskal–Wallis H tests showed that the baseline GCs (i.e., without regard to who was the assigned leader) among the performers in each quartet were more unequal when playing Classical music [χ²(3) = 18.2, P < 0.001, f² = 0.52] than Baroque music [χ²(3) = 7.98, P = 0.046, f² = 0.13]. See SI Results and Fig. S1 for detailed results.

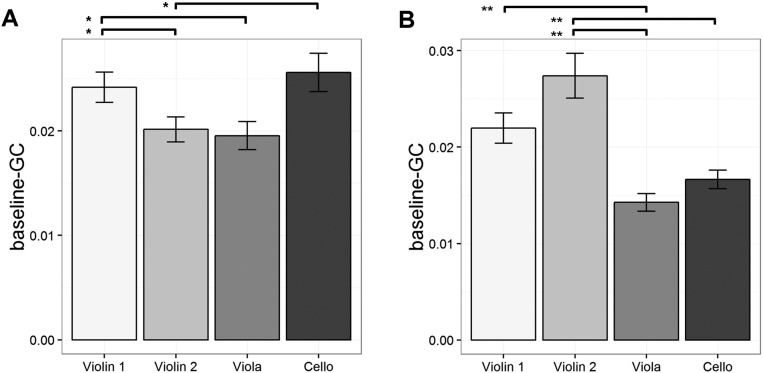

Fig. S1.

The baseline-GC of each performer without regard to leadership role is presented in (A) for playing Baroque music in quartet 1 and (B) for playing Classical music in quartet 2. The greater difference across instruments for quartet 2 compared with quartet 1 likely reflects the different musical styles of the pieces played by the two quartets, with the quartet playing Baroque music having intrinsically more equal parts across instruments compared with the quartet playing Classical music, in which one of the violins tends to have the melody at a particular point in time. Thus, this difference likely indicates that the musical structure of the pieces plays a role in social leadership interactions in addition to effects of assigned leadership role. Significant comparisons are marked as *P < 0.05, **P < 0.01. Error bars represent SE.

Discussion

The results showed that anterior–posterior body sway couplings among string quartet performers reflected nonverbal interpersonal coordination, leadership roles, and performers’ subjective evaluations of their coordinative performance. Assigned leaders influenced followers more than followers influenced leaders or than one follower influenced another, and this effect was larger when performers could see each other than when they could not. Moreover, the degree of total coupling within an ensemble was positively associated with the rated goodness of the performance, suggesting that body sway reflects the communication of information critical to performance success. We replicated these effects in two string quartets playing music of different compositional styles. The findings extend our understanding of interpersonal coordination by examining interactions between more than two people in a naturalistic complex coordinative task through experimental manipulation of leadership assignment and visual information. The use of Granger causality enabled the asymmetric coordination structure among coactors to be disentangled, revealing “who leads whom.”

Our methods enabled examination of nonverbal joint action in a naturalistic setting with four coactors. Through this approach, we were able to examine both overall coordination and the coordination structure associated with leader–follower, follower–leader, and follower–follower roles. We also showed that visual information selectively facilitates leader-to-follower coupling among four coactors in a string quartet, suggesting that visual communication is most useful for coordinating with a leader. Although previous studies examined how perceptual or motor manipulations affect precise movement synchronization between coactors at the note-to-note level (e.g., ref. 34), we used body sway to examine interaction at the more global level of creating a joint aesthetic expression, including factors such as synchronization, phrasing, and dynamics. Furthermore, although previous studies provided descriptions of leadership dynamics in music ensembles (20, 39, 46), our study experimentally manipulated leadership orthogonally to characteristics of the individual musical parts played by each instrument. This manipulation allowed us to show that information flow changes with leadership assignment and that body sway is not a motor byproduct of music performance, but reflects nonverbal communication between members of a group.

An important contribution of the present study is the use of Granger causality over more traditional cross-correlation approaches (45). The use of Granger causality analysis enabled us to examine body sway relationships after partialing out predictions within each performer. This handling of auto-correlated series is critical because within-performer movements may be similar across performers due to the use of a common musical score. Furthermore, we found that cross-correlation analyses of our data were not able to reveal leader–follower effects evident with the Granger causality approach (SI Results and Fig. S2). Importantly, the Granger causality approach enabled us to conclude that the coupling relations reflected actual directional information flow between performers.

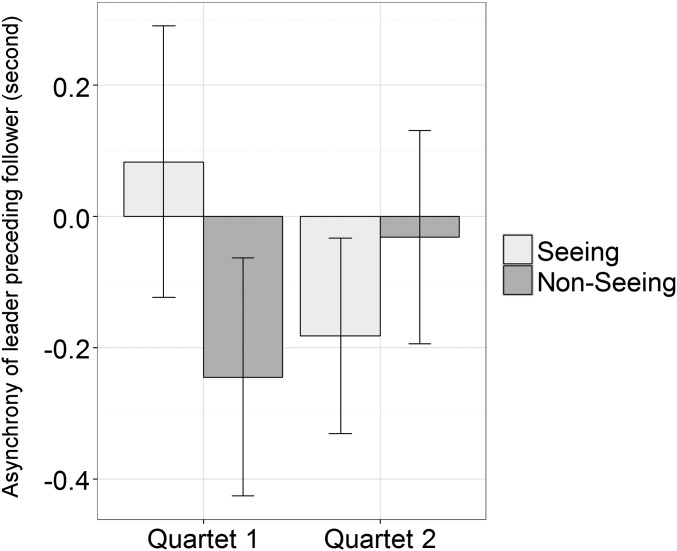

Fig. S2.

Mean body sway temporal asynchrony of leader preceding follower, as identified by the maximum unsigned cross-correlation coefficient. The y axis reflects the time lag at the maximum unsigned cross-correlation, with scores above 0 indicating that the leader preceded the follower and scores below 0 indicating that the follower preceded the leader in time. Error bars represent SE. The t tests revealed that in no condition in any quartet was the mean asynchrony significantly different from 0, nor was the asynchrony significantly different between Seeing and Nonseeing conditions in either quartet. These results suggested that the cross-correlational analysis was not powerful enough to reveal leading–following relationships, as was revealed by our Granger causality analysis.
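The asynchrony measure in this caption, the lag at which the unsigned cross-correlation peaks, can be illustrated with the short Python sketch below. It is a sketch under assumptions: the function names, the lag range, the sign convention (positive lag means the first series precedes the second), and the simulated series are hypothetical and not taken from the study.

```python
import random
from statistics import mean

def pearson(x, y):
    mx, my = mean(x), mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return num / den

def lag_of_max_xcorr(leader, follower, max_lag):
    """Return (lag, r) at the maximum unsigned cross-correlation.

    Positive lag: leader[t] best matches follower[t + lag] (leader precedes).
    """
    n = len(leader)
    best_lag, best_r = 0, 0.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = leader[: n - lag], follower[lag:]
        else:
            a, b = leader[-lag:], follower[: n + lag]
        r = pearson(a, b)
        if abs(r) > abs(best_r):
            best_lag, best_r = lag, r
    return best_lag, best_r

# Simulated series (not study data): the follower copies the leader 3 samples later.
rng = random.Random(0)
leader = [rng.gauss(0, 1) for _ in range(500)]
delay = 3
follower = [0.0] * delay + leader[:-delay]

lag, r = lag_of_max_xcorr(leader, follower, max_lag=10)
print(f"lag at maximum unsigned cross-correlation: {lag} samples")
```

Because the simulated follower is an exact delayed copy, the peak lies at the imposed delay. With real, autocorrelated sway signals the peak is typically much less distinct, which is consistent with the weak cross-correlation results reported in this caption.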

Critical to the idea that body sway coupling reflects interpersonal coordination (14), we found that total interpersonal body sway coupling in a performance correlated with the performers’ rated goodness of that ensemble performance. Previous studies showed that, when two participants converse during a coordinative task, their body sways tend to unintentionally and spontaneously couple (14, 15). The present study extends this finding to a nonverbal musical context and shows that body sway interactions are related to the rated outcome success of the joint action. Although high-level ensemble performers who have played together for many years are experienced and critical evaluators of the quality of their performances, it would be interesting to investigate relations between total interpersonal body sway coupling and objective measures of performance quality (e.g., acoustic analyses) and ratings by expert and nonexpert audience members.

Although musical performance has often been characterized as involving auditory–motor coordination, basic research on individuals has shown that visual information can help anticipation of auditory information (48, 49), which could be useful for auditory-guided motor coordination. Supporting this view, previous piano duet studies showed that anticipatory gazing at the head motion of a coactor improved accuracy of synchronization during expressive tempo variations (31, 32) and that seeing a partner facilitated temporal synchronization in a jazz ensemble (50). Our findings further show that visual information is particularly helpful for leader-to-follower coordination, suggesting that followers use visual information to anticipatively coordinate their own actions to the leader’s performance. We also found that, when no one was assigned as the leader in a musical style without strong intrinsic leader roles, body sway couplings among performers increased from the beginning to the end of each piece when they could see each other, suggesting that exchanged visual information helped build up interpersonal auditory–motor coordination in real time. Together, these findings indicate that visual information facilitates interpersonal information flow over and above auditory information as a channel for nonverbal communication and improves auditory–motor coordination among coactors.

Despite the overall similarity of the results for quartets 1 and 2, the few differences that emerged suggest that characteristics of the task—specifically, how strongly the musical score suggests a specific leader—modulate group coordination. Previous studies in nonmusical domains indicated that the nature of a coordinative task can modulate interpersonal coordinative structure (e.g., ref. 51), which is consistent with our findings that the secretly assigned leader was more accurately identified for Baroque than Classical performances. Baseline body sway couplings without regard to leadership role were more equal across musician dyads for Baroque than Classical music (SI Results and Fig. S1), suggesting that the relative importance of the four parts intrinsic to the musical score affected interpersonal coordination. Furthermore, total interpersonal body sway coordination was related only to rated temporal synchronization between performers for Baroque but not for Classical music. It is possible that different musical styles shift the coordinative emphases from timing synchronization to other common aesthetic goals, such as coordinated loudness or timbre, which were not rated explicitly in the current study. Further studies are needed to directly examine these issues.

Another question requiring study is how interpersonally exchanged information between ensemble members guides motor coordination within each performer. When listening to an auditory beat, low frequency oscillations in the EEG phase lock with the incoming beats (52). Furthermore, the power of beta band (∼20 Hz) oscillations in both auditory and motor regions fluctuates at the tempo of the auditory rhythm and reflects temporal prediction (53–55). These oscillatory activities might also play a role in the prediction of the intentions and actions of others (21, 56, 57).

Given that music is universal across human societies and is used extensively to promote nonverbal social coordination, it is important to understand how musicians achieve a joint aesthetic goal. Furthermore, the findings of the current study using a music ensemble as a model can be generalized and applied to other forms of interpersonal coordination. Evidence to date indicates that music performance shares the same principles with many other forms of interpersonal coordination (e.g., walking, dancing, and speaking): People tend to coordinate with each other’s movements in joint actions where there is a common goal, and interpersonal coordination involves perceptual, motor, and social factors (see ref. 18 for a review). Thus, understanding how leader–follower roles, sensory information, and structural elements of the situation affect interpersonal coordination in music performance is critical for understanding joint action in general. Furthermore, our method of using Granger causality to uncover bidirectional information flow under experimental manipulation in a naturalistic situation can be applied to understanding group behavior in various situations, such as the important ability to detect who does or does not belong to a particular social group (58), and determining best crowd control procedures for emergency evacuation (59), which are important topics in psychology, computer vision, and public safety.

In conclusion, the present study showed that manipulation of leadership roles and visual information interactively modulated interpersonal coordination in string quartets across styles of music played, as reflected by interpersonally coupled body sways indexed by Granger causality. The coupling degree was positively associated with the success of coordinative performance, and leader-to-follower influence was highest when performers could see each other. Importantly, these results were obtained in a complex naturalistic environment with more than two individuals, while, at the same time, exercising high experimental control of the factors of interest.

Methods

Participants.

Two internationally recognized professional music ensembles participated: the Cecilia String Quartet (four females; mean age = 30.5 y; range = 30–34), and the Afiara Quartet (three males, one female; mean age = 32 y; range = 29–33). Participants were right-handed except for the second violin of the Cecilia Quartet and the cello of the Afiara Quartet. Participants had normal hearing and were neurologically healthy by self-report. Consent was obtained from each participant, and they received reimbursement. The McMaster University Research Ethics Board approved all procedures.

Stimuli and Apparatus.

The data were collected in the McMaster Large Interactive Virtual Environment laboratory (LIVELab) (LIVELab.mcmaster.ca). Quartet 1 (Cecilia Quartet) performed 12 different chorales (Table S1) from the Baroque period (∼1600 to 1750), composed by J. S. Bach, each between 2 and 3 min long. Quartet 2 (Afiara Quartet) also performed 12 different pieces (Table S2), each between 2.5 and 5 min long, from the Classical period (∼1730 to 1820), composed by J. Haydn and W. A. Mozart. The Bach chorales consisted of four parallel melodies, which were relatively equal in importance compared with music from the Classical period, in which one instrument typically has the main melody whereas the others play a supportive accompaniment (34). The musicians did not rehearse the pieces, nor had they previously performed the pieces together. They did not verbally discuss the pieces although they had their individual parts ahead of the experiment.

Table S1.

The trial order of conditions and the Baroque music pieces played in quartet 1

| Block-trial order | Leader | Vision | Bach chorale no. |
| --- | --- | --- | --- |
| 1-1 | Violin 2 | Seeing | 9 |
| 1-2 | Cello | Seeing | 5 |
| 1-3 | Viola | Seeing | 8 |
| 1-4 | Violin 1 | Seeing | 4 |
| 1-5 | All followers | Seeing | 7 |
| 1-6 | All leaders | Seeing | 14 |
| 2-1 | Violin 2 | Nonseeing | 5 |
| 2-2 | Cello | Nonseeing | 4 |
| 2-3 | Viola | Nonseeing | 9 |
| 2-4 | Violin 1 | Nonseeing | 14 |
| 2-5 | All followers | Nonseeing | 8 |
| 2-6 | All leaders | Nonseeing | 7 |
| 3-1 | All leaders | Nonseeing | 2 |
| 3-2 | All followers | Nonseeing | 15 |
| 3-3 | Violin 1 | Nonseeing | 11 |
| 3-4 | Viola | Nonseeing | 3 |
| 3-5 | Cello | Nonseeing | 1 |
| 3-6 | Violin 2 | Nonseeing | 13 |
| 4-1 | All leaders | Seeing | 15 |
| 4-2 | All followers | Seeing | 3 |
| 4-3 | Violin 1 | Seeing | 2 |
| 4-4 | Viola | Seeing | 13 |
| 4-5 | Cello | Seeing | 11 |
| 4-6 | Violin 2 | Seeing | 1 |

Table S2.

The trial order of conditions and the Classical music pieces played in quartet 2

| Block-trial order | Leader | Vision | Music piece |
| --- | --- | --- | --- |
| 1-1 | Violin 1 | Nonseeing | Mozart: K. 158, mvt. 3 |
| 1-2 | Viola | Nonseeing | Haydn: Op. 20, No. 1, mvt. 2 |
| 1-3 | Cello | Nonseeing | Haydn: Op. 17, No. 4, mvt. 2 |
| 1-4 | Violin 2 | Nonseeing | Haydn: Op. 17, No. 2, mvt. 2 |
| 1-5 | All followers | Nonseeing | Mozart: K. 169, mvt. 3 |
| 1-6 | All leaders | Nonseeing | Haydn: Op. 17, No. 1, mvt. 2 |
| 2-1 | Violin 1 | Seeing | Haydn: Op. 20, No. 1, mvt. 2 |
| 2-2 | Viola | Seeing | Haydn: Op. 17, No. 2, mvt. 2 |
| 2-3 | Cello | Seeing | Mozart: K. 158, mvt. 3 |
| 2-4 | Violin 2 | Seeing | Haydn: Op. 17, No. 1, mvt. 2 |
| 2-5 | All followers | Seeing | Haydn: Op. 17, No. 4, mvt. 2 |
| 2-6 | All leaders | Seeing | Mozart: K. 169, mvt. 3 |
| 3-1 | All leaders | Seeing | Mozart: K. 156, mvt. 3 |
| 3-2 | All followers | Seeing | Mozart: K. 170, mvt. 2 |
| 3-3 | Violin 2 | Seeing | Haydn: Op. 20, No. 6, mvt. 3 |
| 3-4 | Cello | Seeing | Mozart: K. 171, mvt. 3 |
| 3-5 | Viola | Seeing | Mozart: K. 168, mvt. 3 |
| 3-6 | Violin 1 | Seeing | Mozart: K. 172, mvt. 3 |
| 4-1 | All leaders | Nonseeing | Mozart: K. 170, mvt. 2 |
| 4-2 | All followers | Nonseeing | Mozart: K. 171, mvt. 3 |
| 4-3 | Violin 2 | Nonseeing | Mozart: K. 156, mvt. 3 |
| 4-4 | Cello | Nonseeing | Mozart: K. 172, mvt. 3 |
| 4-5 | Viola | Nonseeing | Haydn: Op. 20, No. 6, mvt. 3 |
| 4-6 | Violin 1 | Nonseeing | Mozart: K. 168, mvt. 3 |

K., Köchel number; mvt., movement; Op., Opus.

An optical motion capture system (24 Oqus 5+ cameras and an Oqus 210c video camera; Qualisys) recorded the head movements of participants at 179 Hz. Four retroreflective markers (3 mm) were placed symmetrically on headbands. Each participant wore the headband around the forehead, located above the nose, eyes, and occipital bone. Because string quartets sit in a semicircle, we recorded the motion of the head as an index of the global upper-body sway (14, 15). Additional EEG and EKG electrodes were also placed on the chest and head of each participant (data not reported here). It was confirmed by the performers that these placements did not constrain their body movements and that they were able to perform as usual.

Design and Procedure.

A full factorial Role (violin 1 leader, violin 2 leader, viola leader, cello leader, all leader, all follower) × Vision (Seeing, Nonseeing) experimental design was used, collapsing over musical pieces. In the Seeing condition, performers faced the center of the quartet whereas, in the Nonseeing condition, each faced 180 degrees away from the center, with all participants unable to see one another (Fig. 1A). The orders of Vision and Role levels were orthogonally counterbalanced across blocks. Each of the 12 musical pieces was performed twice, once in each Vision condition, yielding 24 trials. Each piece was played in two different Role conditions so the exact same trial was never repeated (Tables S1 and S2 show the complete design).

Performers were given confidential sheets that assigned them as either leader or follower on each trial. Performers were informed that there was one leader and three followers in all trials. Four Role levels were Single-Leader-Role conditions, in that one performer was the leader and the others followers (leader was either violin 1, violin 2, viola, or cello), and two were Ambiguous-Role conditions (all four were leaders, all four were followers). Performers were instructed to perform at their best within the role assigned to them. All trials were performed on the same day for quartet 1, and the first and the second halves of the trials were performed 2 d apart for quartet 2.

To prevent the assigned leader from explicitly initiating the performance, trials began with three metronome tones (interonset interval, 875 ms for all pieces played by quartet 1; and 500 ms for pieces played by quartet 2) via speakers. Performers were instructed to follow the tempo and start on the first downbeat after the last tone. Because some pieces were shorter in duration than others, if necessary, each piece was performed repeatedly until the trial reached 2 min for quartet 1, and 2.5 min for quartet 2.

A potential concern was that experimentally manipulating leadership might distort natural body sway, but the evidence suggests that this was not the case. First, performers were not told that body sway was the variable of interest. Second, Mann–Whitney U tests showed that leader/follower role assignment did not significantly modulate the range, variation, or total moving distance of body sway within any performer of either quartet (P values > 0.379), suggesting that body sway was not unnaturally exaggerated or attenuated by experimentally manipulated leadership. Thus, manipulating leadership seems to have influenced only the relationships between players’ movements, not the movements themselves.

After each trial, each performer rated three aspects of the group’s performance using a five-point Likert scale (−2 to 2): (i) the overall goodness of the ensemble performance, (ii) the temporal synchronization (taking all levels, from note-to-note to musical phrase, into consideration) of the group, and (iii) the ease of coordination among performers. Given the high level of the musicians and the fact that each quartet had worked together for many years with regular intense rehearsal and concert schedules, we expected that they would be sensitive evaluators of these variables. Performers who were not assigned as the leader on a particular trial were also asked to identify who they thought was the assigned leader using a forced-choice categorical response measure.

Data Processing and Granger Causality.

For each trial, the recorded motion trajectories were de-noised, spatially averaged, down-sampled, z-score–normalized, and projected to the anterior–posterior body orientation (SI Methods for details) to produce four body sway time series, one for each performer (Fig. 1B).

The MATLAB Multivariate Granger Causality Toolbox (43) was used to estimate the magnitude of Granger causality (GC) between each pair of body sway time series among all four performers in each quartet (Fig. 1B). GC is a statistical estimate of how much one time series is predicted by the history of another time series, over and above how much it is predicted by its own history, expressed as a log-likelihood ratio. The larger the value of GC, the better the prediction and the more information is flowing from one time series to another. It is important to note that we estimated each GC between two time series conditional on the remaining two time series so that any potential common influence of the other variables was partialed out (43). In this way, 12 unique GCs were obtained from each trial, corresponding to the degree to which each of violin 1, violin 2, viola, and cello predicted each of the three other performers (Fig. 1C and SI Methods for details).
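
The log-ratio definition of conditional GC described above can be illustrated with a minimal Python sketch. This is not the MVGC toolbox algorithm: it assumes plain ordinary least squares regressions on lagged values, and the helper names (`solve`, `residual_variance`, `conditional_gc`) and the simulated series are hypothetical and serve only to show the idea.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def residual_variance(target, predictors, order):
    """Residual variance when target[t] is regressed (OLS) on `order` lags of each predictor."""
    rows, ys = [], []
    for t in range(order, len(target)):
        row = [1.0]  # intercept
        for series in predictors:
            row.extend(series[t - k] for k in range(1, order + 1))
        rows.append(row)
        ys.append(target[t])
    width = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(width)] for i in range(width)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(width)]
    beta = solve(xtx, xty)
    residuals = [y - sum(b * v for b, v in zip(beta, r)) for r, y in zip(rows, ys)]
    return sum(e * e for e in residuals) / len(residuals)


def conditional_gc(source, target, others, order):
    """GC from source to target, conditional on `others`: log ratio of residual variances."""
    reduced = residual_variance(target, [target] + others, order)
    full = residual_variance(target, [target, source] + others, order)
    return math.log(reduced / full)


# Simulated toy data (not from the study): x drives y at a lag of one sample; z is unrelated noise.
rng = random.Random(0)
n = 500
x, y, z = [0.0], [0.0], [rng.gauss(0, 1)]
for t in range(1, n):
    x.append(0.5 * x[t - 1] + rng.gauss(0, 1))
    y.append(0.8 * x[t - 1] + 0.2 * y[t - 1] + rng.gauss(0, 1))
    z.append(rng.gauss(0, 1))

gc_xy = conditional_gc(x, y, [z], order=2)  # x -> y, conditional on z
gc_yx = conditional_gc(y, x, [z], order=2)  # y -> x, conditional on z
```

In this toy case the x-to-y value should be clearly larger than the y-to-x value, mirroring the directional asymmetry that GC is meant to capture. For a quartet, `others` would hold the two remaining performers' series.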

Statistical Analyses.

The 12 directional couplings (n = 12) between the various pairs of performers were considered independent (43) and treated as a random factor in a within-subjects analysis (Fig. 1C). The Single-Leader-Role trials and the Ambiguous-Role trials were analyzed separately. For the Single-Leader-Role analyses, the factor Paired-Role had three levels [leader-to-follower (LF), follower-to-leader (FL), and follower-to-follower (FF)], and Vision had two levels (Seeing and Nonseeing). For each of the 12 directional couplings for each trial, we first estimated the GC, and then we took the mean GCs of the trials belonging to each Vision (Seeing, Nonseeing) × Paired-Role (LF, FL, FF) level as an estimate for each condition. For the Ambiguous-Role analyses, Paired-Role had two levels (Leader-all and Follower-all), Vision had two levels (Seeing and Nonseeing), and Time had two levels (Beginning and End). The Time level was extracted from the first and last 30-s epochs from each performance (trial). Again, we took the mean GCs of the trials belonging to each Vision × Paired-Role × Time level for each directional coupling.
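
The condition averaging described for the Single-Leader-Role analyses can be sketched as follows. The trial record layout (dictionaries with `leader`, `vision`, and `gc` keys), the function names, and the GC values are hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from itertools import permutations

PERFORMERS = ("violin1", "violin2", "viola", "cello")
PAIRS = list(permutations(PERFORMERS, 2))  # the 12 directional couplings


def paired_role(source, target, leader):
    """Label a directed coupling source -> target given the assigned leader."""
    if source == leader:
        return "LF"  # leader-to-follower
    if target == leader:
        return "FL"  # follower-to-leader
    return "FF"      # follower-to-follower


def condition_means(trials):
    """Mean GC per (coupling, Vision, Paired-Role) cell, averaged over trials."""
    cells = defaultdict(list)
    for trial in trials:
        for (source, target), value in trial["gc"].items():
            role = paired_role(source, target, trial["leader"])
            cells[(source, target), trial["vision"], role].append(value)
    return {key: sum(values) / len(values) for key, values in cells.items()}


# Hypothetical GC values: 0.2 whenever the source is the leader, 0.1 otherwise.
toy_trials = [
    {
        "leader": leader,
        "vision": "Seeing",
        "gc": {pair: (0.2 if pair[0] == leader else 0.1) for pair in PAIRS},
    }
    for leader in PERFORMERS
]
means = condition_means(toy_trials)
```

Each of the 12 couplings then contributes one value per Vision × Paired-Role cell, which is the unit treated as a random factor in the rANOVA.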

We performed repeated-measures analysis of variance (rANOVA) on GC scores for analyses of body sway coupling in Single-Leader-Role and Ambiguous-Role conditions. Follow-up post hoc analyses were performed for each significant F-test.

For analyzing correlations between body sway coupling and subjective ratings of performances across all 24 trials, we correlated the mean of the four performers’ subjective ratings (one missing ease-of-coordination rating on a trial by a performer of quartet 1 was excluded from averaging) with the group causal density (44) of body sways, calculated as the mean of the 12 GCs reflecting all pairwise influences in a quartet, for each trial. For each correlation analysis, we estimated the Spearman rank correlation coefficient (rs) on the nonnormally distributed data, using Fisher’s z transformation to estimate the 95% confidence interval (CI) of rs, and used a permutation test (5,000 times) to calculate the P value (Pperm) to adjust for the ties in the ranked data.
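
A minimal sketch of this correlation pipeline is given below, assuming average ranks for ties, a standard error of 1/√(n − 3) for the Fisher-transformed coefficient, and a two-sided permutation P value computed as the proportion of shuffles at least as extreme as the observed value. These details and all function names are assumptions, not taken from the analysis code.

```python
import math
import random


def causal_density(gc_values):
    """Group causal density: mean of the 12 pairwise GCs of one trial."""
    return sum(gc_values) / len(gc_values)


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    var_a = sum((u - ma) ** 2 for u in a)
    var_b = sum((v - mb) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def spearman(a, b):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(a), average_ranks(b))


def fisher_ci(r, n, z_crit=1.96):
    """95% CI of a correlation via Fisher's z transformation."""
    z = math.atanh(r)
    half_width = z_crit / math.sqrt(n - 3)
    return math.tanh(z - half_width), math.tanh(z + half_width)


def permutation_p(a, b, n_perm=5000, seed=0):
    """Two-sided permutation P value for the Spearman correlation."""
    rng = random.Random(seed)
    ranks_a, ranks_b = average_ranks(a), average_ranks(b)
    observed = abs(pearson(ranks_a, ranks_b))
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(ranks_b)  # break the pairing between the two variables
        if abs(pearson(ranks_a, ranks_b)) >= observed:
            hits += 1
    return hits / n_perm


# Check against a reported value: rs = 0.54 with n = 24 trials.
ci_low, ci_high = fisher_ci(0.54, 24)

# Hypothetical densities and ratings for 10 trials (illustrative numbers only).
toy_density = [0.01 * k for k in range(10)]
toy_rating = [k - 4.5 for k in range(10)]
toy_p = permutation_p(toy_density, toy_rating)
```

Under these assumptions, `fisher_ci(0.54, 24)` gives approximately (0.17, 0.77), which agrees with the interval reported in SI Results for the goodness rating of quartet 2.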

We used χ² tests to check the normality assumption for parametric tests, the Mauchly test to check the sphericity assumption, and the Brown–Forsythe test to check the homogeneity of variances assumption. When the assumption of normality was violated (P < 0.05), nonparametric test equivalents were used (specified in Results). Every statistical test was performed two-tailed, if applicable. We set α = 0.05, and a Bonferroni-adjusted α was used for each series of post hoc comparisons as a conservative control for type I error; we report the tests with Bonferroni-adjusted α < P < 0.05 as trends.
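
The reporting rule for post hoc comparisons can be written out as a short Python sketch. The function name `classify` and the treatment of P values exactly equal to a threshold are assumptions.

```python
def classify(p, n_comparisons, alpha=0.05):
    """Label a post hoc P value under a Bonferroni-adjusted threshold."""
    adjusted_alpha = alpha / n_comparisons
    if p < adjusted_alpha:
        return "significant"
    if p < alpha:
        return "trend"  # adjusted alpha <= P < 0.05
    return "n.s."


# P values reported in SI Results for a series of three comparisons (alpha = 0.05/3).
labels = [classify(p, 3) for p in (0.009, 0.038, 0.564)]
```

With three comparisons the adjusted threshold is about 0.0167, so P = 0.009 counts as significant, P = 0.038 as a trend, and P = 0.564 as not significant.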

SI Methods

Details of Data Processing and Granger Causality.

Motion capture data processing.

Motion trajectories were exported from Qualisys Track Manager for processing and analysis in MATLAB. The first 120 s of each piece were analyzed for quartet 1 (Cecilia Quartet), and the first 150 s of each piece were analyzed for quartet 2 (Afiara Quartet). Missing data due to recording noise were found in only 1 of 384 trajectories (0.06% of the epoch length) of quartet 1, and 3 of 384 trajectories (0.11 to 0.17% of the epoch length) of quartet 2, and were filled by spline interpolation. Each trajectory was down-sampled to 8 Hz by averaging the samples within each nonoverlapping 125-ms window because Granger causality analysis prefers a low model order for capturing a given physical time length of the movement trajectory (43). This rate is sufficient for capturing most of the head movements, as confirmed by visual inspection. No filtering or temporal smoothing was applied to the data because temporal convolution distorts the estimation of Granger causality (43). To estimate the anterior–posterior body sway, we spatially averaged the positions of the four motion capture markers on the head of each performer in the x–y plane (collapsing altitude) for each time frame, and the anterior–posterior orientation was referenced to the center of the half circle formed by the locations of the performers (Fig. 1A). Finally, each time series was normalized (z-score) to equalize the magnitude of the sway motion among performers. This procedure produced four normalized body sway time series, one for each performer for each trial (Fig. 1B).
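
The preprocessing steps can be sketched in Python under several assumptions that the text does not specify: samples are assigned to 125-ms windows by their time stamps (179 Hz does not divide evenly into 8 Hz), the anterior–posterior axis points from a reference position of the performer (the hypothetical `origin` argument, e.g., the mean head position) toward the center of the half circle, and the z-score uses the sample SD. All function names are hypothetical.

```python
import math
import statistics


def downsample_mean(samples, in_rate=179, out_rate=8):
    """Average samples within consecutive non-overlapping windows of 1/out_rate seconds."""
    bins = {}
    for i, value in enumerate(samples):
        # Sample i occurs at i / in_rate seconds; integer arithmetic avoids rounding error.
        bins.setdefault(i * out_rate // in_rate, []).append(value)
    return [sum(window) / len(window) for _, window in sorted(bins.items())]


def project_anterior_posterior(xy, origin, center):
    """Signed displacement of a head position along the origin-to-center axis."""
    axis_x, axis_y = center[0] - origin[0], center[1] - origin[1]
    norm = math.hypot(axis_x, axis_y)
    return ((xy[0] - origin[0]) * axis_x + (xy[1] - origin[1]) * axis_y) / norm


def zscore(series):
    """Normalize to zero mean and unit sample SD (n - 1 in the denominator)."""
    mean = statistics.mean(series)
    sd = statistics.stdev(series)
    return [(value - mean) / sd for value in series]


# Toy input: 2 s of a 0.5-Hz sinusoid sampled at 179 Hz (not real motion data).
raw = [math.sin(2 * math.pi * 0.5 * i / 179) for i in range(358)]
sway = zscore(downsample_mean(raw))
```

Two seconds of input at 179 Hz yield 16 samples at 8 Hz, each the mean of 22 or 23 raw samples.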

Granger causality.

We followed the procedure and used the algorithms of the Multivariate Granger Causality (MVGC) Toolbox (43) in MATLAB to estimate the magnitude of Granger causality (GC) between each pair of body sway time series among all four performers in each quartet. First, the MVGC toolbox confirmed that each time series satisfied the stationarity assumption for Granger causality analysis, with the spectral radius less than 1. Second, the optimal model order (the length of history included) was determined by the Akaike information criterion on each trial. The optimal model order is a balance between maximizing goodness of fit and minimizing the number of coefficients (which grows with the length of history) being estimated. The model order used was 13 (1,625 ms, 1.86 beats) for quartet 1, and 9 (1,125 ms, 2.25 beats) for quartet 2 because these were the largest optimal model orders across trials within each quartet. Model order was a fixed parameter within quartet (i.e., did not vary by trial), which prevented model order from artificially affecting GCs on different trials, and the largest model order across trials covered all optimal model orders across trials. Finally, 12 unique GCs were obtained from each trial, corresponding to the degrees to which each of violin 1, violin 2, viola, and cello predicted each of the three other performers. It is important to note that each GC estimate, representing how well the time series of one performer predicted that of another performer, was made conditional on the time series of the other two performers. This conditional estimation is optional for estimating GC, and we chose it because it partials out any potential common dependencies on other variables (43), which fit our purpose of quantifying the pairwise information flows among performers of a quartet.
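
The durations quoted for the two model orders follow from the 8-Hz sampling rate (125 ms per lag). The beat counts are reproduced here on the assumption that one beat equals the metronome interonset interval given in Design and Procedure (875 ms for quartet 1 and 500 ms for quartet 2). The function names and the list of per-trial orders are hypothetical.

```python
def order_to_ms(order, sample_rate_hz=8):
    """History length in milliseconds covered by a model order at the given sampling rate."""
    return order * 1000 / sample_rate_hz


def ms_to_beats(duration_ms, interonset_ms):
    """Express a duration in beats, taking one beat as one metronome interonset interval."""
    return duration_ms / interonset_ms


q1_ms = order_to_ms(13)              # quartet 1: 1625.0 ms
q1_beats = ms_to_beats(q1_ms, 875)   # about 1.86 beats
q2_ms = order_to_ms(9)               # quartet 2: 1125.0 ms
q2_beats = ms_to_beats(q2_ms, 500)   # 2.25 beats

# Fixing the order within a quartet: take the largest per-trial optimum.
hypothetical_trial_orders = [7, 9, 13, 11]  # illustrative values only
fixed_order = max(hypothetical_trial_orders)
```

Using the largest per-trial optimum as the fixed order guarantees that every trial's own optimal history length is covered by the common model.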

SI Results

Details of Results for Quartet 2.

Analyses of body sway coupling in Single-Leader-Role conditions.

As for quartet 1, we used an rANOVA to investigate whether Paired-Role (LF, FL, FF) and Vision (Seeing, Nonseeing) modulated the coupling strength of anterior–posterior body sway motions among performers (Fig. 1B). The results showed an interaction between Vision and Paired-Role [F(2, 22) = 4.43, P = 0.024, f² = 0.10]. Follow-up post hoc simple effect analyses showed a simple effect of Paired-Role in the Seeing condition [F(2, 22) = 5.78, P = 0.010, f² = 0.13]; specifically, post hoc paired t tests showed that GC was higher in the LF than in the FL condition [t(11) = 3.18, P = 0.009], and there was a trend for GC to be higher in the LF than in the FF condition [t(11) = 2.36, P = 0.038, Bonferroni-adjusted α = 0.05/3], but GC in the FF condition did not differ from GC in the FL condition [t(11) = 0.59, P = 0.564]. In contrast, there was no effect of Paired-Role in the Nonseeing condition [F(2, 22) = 1.18, P = 0.325]. Orthogonal post hoc paired t tests showed that the GC was not modulated by Vision for either FL [t(11) = −0.41, P = 0.687] or FF [t(11) = −1.17, P = 0.268] conditions. There was, however, a trend for GC to be higher in Seeing than Nonseeing for the LF condition [t(11) = 2.57, P = 0.026, Bonferroni-adjusted α = 0.05/3].

In sum, despite playing music differing in style, the quartets of both experiments 1 and 2 showed an interaction between Paired-Role and Vision on GC of body sway coupling between performers. Specifically, the body sway of the leader predicted those of the followers (LF coupling) to a greater extent than for other directional couplings. Also, seeing other performers specifically facilitated the LF coupling but not other pairings. However, these effects seemed to be weaker in the case of Classical music (quartet 2) than Baroque music (quartet 1), which is investigated further below.

Analyses of body sway coupling in Ambiguous-Role conditions.

As for quartet 1, we extracted the first and last 30-s epochs from each trial and compared whether the GC of body sway couplings improved from the beginning to end of each performance (Fig. 2D). A three-way rANOVA performed with Time (Beginning, End), Ambiguous-Role (L-all, F-all), and Vision (Seeing, Nonseeing) as factors revealed no significant main effects or interaction effects (P values > 0.12).

Correlations between body sway coupling and subjective ratings of performances.

As for quartet 1, we examined whether body sway coupling among quartet performers reflected their subjective ratings of how well the quartet achieved the task goals (Fig. 3). The Spearman rank correlation results showed that the causal density positively correlated with the subjective rating of how good the performance was [rs(23) = 0.54, CI (0.17, 0.77), Pperm = 0.009], but not with how synchronized [rs(23) = 0.34, CI (−0.07, 0.65), Pperm = 0.108] or how easy [rs(23) = 0.36, CI (−0.05, 0.67), Pperm = 0.079] the performance was.

Differences Between Quartets.

We hypothesized that, because Classical music tends to have one instrument playing the melody and the others accompaniment (33–35, 60, 61) whereas, in Baroque music, the parts are more equal in importance, the quartets would adopt somewhat different coordinative strategies. To estimate the extent to which each performer acted as a leader when assigned a follower role, termed Leadership Baseline, we took the mean GCs of body sway coupling of each performer in predicting each of the other three performers [e.g., $\text{baseline-GC}_{\text{Violin1}} = (\text{GC}_{\text{Violin1}\to\text{Violin2}} + \text{GC}_{\text{Violin1}\to\text{Viola}} + \text{GC}_{\text{Violin1}\to\text{Cello}})/3$], when that performer was assigned as a follower in Single-Leader-Role trials. We then compared the baseline-GC among performers in each quartet (Fig. S1).
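
The Leadership Baseline computation can be sketched as follows, using a hypothetical trial record layout (dictionaries with `leader` and `gc` keys) and illustrative GC values. One baseline value per eligible trial is returned, on the assumption that trial-level values entered the between-performer comparison.

```python
from itertools import permutations

PERFORMERS = ("violin1", "violin2", "viola", "cello")


def leadership_baseline(trials, performer):
    """Mean outgoing GC of `performer` on each trial in which they were a follower."""
    baselines = []
    for trial in trials:
        if trial["leader"] == performer:
            continue  # only trials where the performer was assigned as a follower
        outgoing = [
            value for (source, _), value in trial["gc"].items() if source == performer
        ]
        baselines.append(sum(outgoing) / len(outgoing))
    return baselines


# Hypothetical GC values: violin 1 predicts the others more strongly regardless of role.
toy_trials = [
    {
        "leader": leader,
        "gc": {
            pair: (0.3 if pair[0] == "violin1" else 0.1)
            for pair in permutations(PERFORMERS, 2)
        },
    }
    for leader in ("violin1", "violin2", "viola")
]
violin1_baseline = leadership_baseline(toy_trials, "violin1")
viola_baseline = leadership_baseline(toy_trials, "viola")
```

In this toy example violin 1 has a higher baseline than the viola even when it is not the assigned leader, which is the kind of default inequality the between-performer comparison is designed to detect.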

Kruskal–Wallis H tests (nonparametric alternative of a one-way between-subject ANOVA) showed that the performers had different baseline-GC in both quartets [quartet 1, χ²(3) = 7.98, P = 0.046, f² = 0.13; quartet 2, χ²(3) = 18.2, P < 0.001, f² = 0.52]. In the quartet playing Baroque music (quartet 1) (Fig. S1A), however, post hoc Mann–Whitney U tests (Bonferroni-adjusted α = 0.05/6) did not show any significant baseline-GC differences between players (P values ≥ 0.035), but there were trends for the baseline-GC of violin 1 to be higher than those of violin 2 (z = 2.11, P = 0.035) and the viola (z = 1.99, P = 0.046), and for the baseline-GC of the cello to be higher than that of violin 2 (z = 2.11, P = 0.035). In the quartet playing Classical music (quartet 2) (Fig. S1B), on the other hand, the post hoc U tests showed that the baseline-GC of violin 1 was higher than that of the viola (z = 2.97, P = 0.003) and that the baseline-GC of violin 2 was higher than those of the viola (z = 3.20, P = 0.001) and cello (z = 2.86, P = 0.004), although the other comparisons failed to reach threshold (P values > 0.212). These results confirmed our hypothesis that the default leadership was more unequal when playing Classical compared with Baroque music.

Analyses with Cross-Correlation.

Cross-correlation estimates the similarity between two time series as a function of a shifting time step and has been used for quantifying the preceding-lag relationship between two time series variables. To empirically compare Granger causality and cross-correlation, we performed cross-correlation analyses on the same preprocessed data to which we had applied Granger causality. If cross-correlation is able to reflect the leading–following relationship between performers in the Single-Leader-Role trials, the cross-correlation analysis on body sway would show the highest similarity in the time step where leader precedes follower.

For each trial of each quartet, the cross-correlation was estimated between each pair of the four performers’ anterior–posterior body sway time series. To match the Granger causality analyses, the cross-correlation coefficients were calculated for lags up to plus or minus the model order of each quartet. Twelve samples (the random factor) were obtained under each of the Seeing and Nonseeing conditions for each quartet, corresponding to the mean asynchrony by which each performer (violin 1, violin 2, viola, or cello) led each of the other three performers. If cross-correlation reflects leading–following relationships between performers, the maximum unsigned cross-correlation coefficient (highest similarity) would occur at the temporal asynchrony where the leader’s time series precedes the follower’s.
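
A minimal Python sketch of the lag search follows. It assumes that the coefficient at each lag is a Pearson correlation computed over the overlapping samples, which may differ from the normalization actually used. The function names and the toy series are hypothetical.

```python
import math
import random


def cross_correlation(a, b, lag):
    """Pearson correlation between a[t] and b[t + lag] over the overlapping samples."""
    if lag >= 0:
        u, v = a[:len(a) - lag], b[lag:]
    else:
        u, v = a[-lag:], b[:len(b) + lag]
    mean_u, mean_v = sum(u) / len(u), sum(v) / len(v)
    cov = sum((p - mean_u) * (q - mean_v) for p, q in zip(u, v))
    sd_u = math.sqrt(sum((p - mean_u) ** 2 for p in u))
    sd_v = math.sqrt(sum((q - mean_v) ** 2 for q in v))
    return cov / (sd_u * sd_v)


def peak_lag(a, b, max_lag):
    """Lag with the largest unsigned coefficient; a positive lag means a precedes b."""
    lags = range(-max_lag, max_lag + 1)
    return max(lags, key=lambda k: abs(cross_correlation(a, b, k)))


# Toy data (not from the study): the follower repeats the leader three samples later.
rng = random.Random(1)
leader = [rng.gauss(0, 1) for _ in range(200)]
follower = [0.0, 0.0, 0.0] + leader[:-3]

lag_samples = peak_lag(leader, follower, max_lag=13)  # search +/- model order of quartet 1
lag_ms = lag_samples * 125                            # 8-Hz sampling: 125 ms per sample
```

A positive peak lag corresponds to the case in which the leader's time series precedes the follower's, which is the pattern the analysis tests for.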

The cross-correlation results are shown in Fig. S2. One-sample t tests showed that the asynchrony between the leader’s and followers’ body sways was not significantly different from 0 in either the Seeing or the Nonseeing condition of either quartet [unsigned t(11) < 1.35, P values > 0.205]. Paired t tests further showed that the asynchrony did not differ between the Seeing and Nonseeing conditions of either quartet [unsigned t(11) < 0.93, P values > 0.375]. In sum, the cross-correlation analysis was not sensitive enough to reflect the leading–following relationship or the effect of visual information, both of which were revealed by the Granger causality analyses.

Acknowledgments

We thank Dave Thompson and Carl Karichian for technical assistance; and the staff and student members of the LIVELab for assisting with these experiments. This research was supported by grants to L.J.T. from the Social Sciences and Humanities Research Council of Canada, the Canadian Institutes of Health Research, and the Canadian Foundation for Innovation; and by a Vanier Canada Graduate Scholarship (to A.C.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1617657114/-/DCSupplemental.

References

- 1. Knoblich G, Sebanz N. The social nature of perception and action. Curr Dir Psychol Sci. 2006;15:99–104.

- 2. Sebanz N, Bekkering H, Knoblich G. Joint action: Bodies and minds moving together. Trends Cogn Sci. 2006;10:70–76. doi: 10.1016/j.tics.2005.12.009.

- 3. Tomasello M, Vaish A. Origins of human cooperation and morality. Annu Rev Psychol. 2013;64:231–255. doi: 10.1146/annurev-psych-113011-143812.

- 4. Cirelli LK, Einarson KM, Trainor LJ. Interpersonal synchrony increases prosocial behavior in infants. Dev Sci. 2014;17:1003–1011. doi: 10.1111/desc.12193.

- 5. Cirelli LK, Wan SJ, Trainor LJ. Fourteen-month-old infants use interpersonal synchrony as a cue to direct helpfulness. Philos Trans R Soc Lond B Biol Sci. 2014;369:20130400. doi: 10.1098/rstb.2013.0400.

- 6. Cirelli LK, Wan SJ, Trainor LJ. Social effects of movement synchrony: Increased infant helpfulness only transfers to affiliates of synchronously moving partners. Infancy. 2016;21:807–821.

- 7. Hove MJ, Risen JL. It’s all in the timing: Interpersonal synchrony increases affiliation. Soc Cogn. 2009;27:949–960.

- 8. Trainor LJ, Cirelli L. Rhythm and interpersonal synchrony in early social development. Ann N Y Acad Sci. 2015;1337:45–52. doi: 10.1111/nyas.12649.

- 9. Wiltermuth SS, Heath C. Synchrony and cooperation. Psychol Sci. 2009;20:1–5. doi: 10.1111/j.1467-9280.2008.02253.x.

- 10. Huron D. Is music an evolutionary adaptation? Ann N Y Acad Sci. 2001;930:43–61. doi: 10.1111/j.1749-6632.2001.tb05724.x.

- 11. Trainor LJ. The origins of music in auditory scene analysis and the roles of evolution and culture in musical creation. Philos T Roy Soc B. 2015;370:20140089. doi: 10.1098/rstb.2014.0089.

- 12. Savage PE, Brown S, Sakai E, Currie TE. Statistical universals reveal the structures and functions of human music. Proc Natl Acad Sci USA. 2015;112:8987–8992. doi: 10.1073/pnas.1414495112.

- 13. Noy L, Dekel E, Alon U. The mirror game as a paradigm for studying the dynamics of two people improvising motion together. Proc Natl Acad Sci USA. 2011;108:20947–20952. doi: 10.1073/pnas.1108155108.

- 14. Shockley K, Richardson DC, Dale R. Conversation and coordinative structures. Top Cogn Sci. 2009;1:305–319. doi: 10.1111/j.1756-8765.2009.01021.x.

- 15. Shockley K, Santana MV, Fowler CA. Mutual interpersonal postural constraints are involved in cooperative conversation. J Exp Psychol Hum Percept Perform. 2003;29:326–332. doi: 10.1037/0096-1523.29.2.326.

- 16. Fowler CA, Richardson MJ, Marsh KL, Shockley KD. Coordination: Neural, Behavioral and Social Dynamics. Springer; Heidelberg: 2008. pp. 261–279.

- 17. Roederer JG. The search for a survival value of music. Music Percept. 1984;1:350–356.

- 18. Repp BH, Su YH. Sensorimotor synchronization: A review of recent research (2006–2012). Psychon Bull Rev. 2013;20:403–452. doi: 10.3758/s13423-012-0371-2.

- 19. D’Ausilio A, Novembre G, Fadiga L, Keller PE. What can music tell us about social interaction? Trends Cogn Sci. 2015;19:111–114. doi: 10.1016/j.tics.2015.01.005.

- 20. Volpe G, D’Ausilio A, Badino L, Camurri A, Fadiga L. Measuring social interaction in music ensembles. Philos T Roy Soc B. 2016;371:20150377. doi: 10.1098/rstb.2015.0377.

- 21. Keller PE, Novembre G, Loehr J. Shared Representations: Sensorimotor Foundations of Social Life. Cambridge Univ Press; Cambridge, UK: 2016. pp. 280–310.

- 22. Palmer C. Music performance. Annu Rev Psychol. 1997;48:115–138. doi: 10.1146/annurev.psych.48.1.115.

- 23. Palmer C. The Psychology of Music. Elsevier; Amsterdam: 2013. pp. 405–422.

- 24. Keller PE, Knoblich G, Repp BH. Pianists duet better when they play with themselves: On the possible role of action simulation in synchronization. Conscious Cogn. 2007;16:102–111. doi: 10.1016/j.concog.2005.12.004.

- 25. Loehr JD, Palmer C. Temporal coordination between performing musicians. Q J Exp Psychol (Hove). 2011;64:2153–2167. doi: 10.1080/17470218.2011.603427.

- 26. Pecenka N, Keller PE. The role of temporal prediction abilities in interpersonal sensorimotor synchronization. Exp Brain Res. 2011;211:505–515. doi: 10.1007/s00221-011-2616-0.

- 27. Repp BH, Keller PE. Self versus other in piano performance: Detectability of timing perturbations depends on personal playing style. Exp Brain Res. 2010;202:101–110. doi: 10.1007/s00221-009-2115-8.

- 28. Zamm A, Pfordresher PQ, Palmer C. Temporal coordination in joint music performance: Effects of endogenous rhythms and auditory feedback. Exp Brain Res. 2015;233:607–615. doi: 10.1007/s00221-014-4140-5.

- 29. Zamm A, Wellman C, Palmer C. Endogenous rhythms influence interpersonal synchrony. J Exp Psychol Hum Percept Perform. 2016;42:611–616. doi: 10.1037/xhp0000201.

- 30. Goebl W, Palmer C. Synchronization of timing and motion among performing musicians. Music Percept. 2009;26:427–438.

- 31. Kawase S. Gazing behavior and coordination during piano duo performance. Atten Percept Psychophys. 2014;76:527–540. doi: 10.3758/s13414-013-0568-0.

- 32. Kawase S. Assignment of leadership role changes performers’ gaze during piano duo performances. Ecol Psychol. 2014;26:198–215.

- 33. Timmers R, Endo S, Bradbury A, Wing AM. Synchronization and leadership in string quartet performance: A case study of auditory and visual cues. Front Psychol. 2014;5:645. doi: 10.3389/fpsyg.2014.00645.

- 34.Elliott MT, Chua WL, Wing AM. Modelling single-person and multi-person event-based synchronisation. Curr Opin Behav Sci. 2016;8:167–174. [Google Scholar]

- 35.Wing AM, Endo S, Bradbury A, Vorberg D. Optimal feedback correction in string quartet synchronization. J R Soc Interface. 2014;11:20131125. doi: 10.1098/rsif.2013.1125. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Loehr JD, Large EW, Palmer C. Temporal coordination and adaptation to rate change in music performance. J Exp Psychol Hum Percept Perform. 2011;37:1292–1309. doi: 10.1037/a0023102. [DOI] [PubMed] [Google Scholar]

- 37.Athreya DN, Riley MA, Davis TJ. Visual influences on postural and manual interpersonal coordination during a joint precision task. Exp Brain Res. 2014;232:2741–2751. doi: 10.1007/s00221-014-3957-2. [DOI] [PubMed] [Google Scholar]

- 38.Tolston MT, Shockley K, Riley MA, Richardson MJ. Movement constraints on interpersonal coordination and communication. J Exp Psychol Hum Percept Perform. 2014;40:1891–1902. doi: 10.1037/a0037473. [DOI] [PubMed] [Google Scholar]

- 39.Badino L, D’Ausilio A, Glowinski D, Camurri A, Fadiga L. Sensorimotor communication in professional quartets. Neuropsychologia. 2014;55:98–104. doi: 10.1016/j.neuropsychologia.2013.11.012. [DOI] [PubMed] [Google Scholar]

- 40.Oullier O, de Guzman GC, Jantzen KJ, Lagarde J, Kelso JA. Social coordination dynamics: Measuring human bonding. Soc Neurosci. 2008;3:178–192. doi: 10.1080/17470910701563392. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Richardson MJ, Marsh KL, Isenhower RW, Goodman JR, Schmidt RC. Rocking together: Dynamics of intentional and unintentional interpersonal coordination. Hum Mov Sci. 2007;26:867–891. doi: 10.1016/j.humov.2007.07.002. [DOI] [PubMed] [Google Scholar]

- 42.Varlet M, Marin L, Lagarde J, Bardy BG. Social postural coordination. J Exp Psychol Hum Percept Perform. 2011;37:473–483. doi: 10.1037/a0020552. [DOI] [PubMed] [Google Scholar]

- 43.Barnett L, Seth AK. The MVGC multivariate Granger causality toolbox: A new approach to Granger-causal inference. J Neurosci Methods. 2014;223:50–68. doi: 10.1016/j.jneumeth.2013.10.018. [DOI] [PubMed] [Google Scholar]

- 44.Seth AK, Barrett AB, Barnett L. Causal density and integrated information as measures of conscious level. Philos T Roy Soc A. 2011;369:3748–3767. doi: 10.1098/rsta.2011.0079. [DOI] [PubMed] [Google Scholar]

- 45.Dean RT, Dunsmuir WT. Dangers and uses of cross-correlation in analyzing time series in perception, performance, movement, and neuroscience: The importance of constructing transfer function autoregressive models. Behav Res Methods. 2016;48:783–802. doi: 10.3758/s13428-015-0611-2. [DOI] [PubMed] [Google Scholar]

- 46.D’Ausilio A, et al. Leadership in orchestra emerges from the causal relationships of movement kinematics. PLoS One. 2012;7:e35757. doi: 10.1371/journal.pone.0035757. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Papiotis P, Marchini M, Perez-Carrillo A, Maestre E. Measuring ensemble interdependence in a string quartet through analysis of multidimensional performance data. Front Psychol. 2014;5:963. doi: 10.3389/fpsyg.2014.00963. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Hasson U, Ghazanfar AA, Galantucci B, Garrod S, Keysers C. Brain-to-brain coupling: A mechanism for creating and sharing a social world. Trends Cogn Sci. 2012;16:114–121. doi: 10.1016/j.tics.2011.12.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Schroeder CE, Lakatos P, Kajikawa Y, Partan S, Puce A. Neuronal oscillations and visual amplification of speech. Trends Cogn Sci. 2008;12:106–113. doi: 10.1016/j.tics.2008.01.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Schober MF, Levine MF. Visual and auditory cues in jazz musicians’ ensemble performance. In: Williamon A, Edwards D, Bartel L, editors. Proceedings of the International Symposium on Performance Science 2011. Association Européenne des Conservatoires; Utrecht, The Netherlands: 2011. pp. 553–554. [Google Scholar]

- 51.Ramenzoni VC, Davis TJ, Riley MA, Shockley K, Baker AA. Joint action in a cooperative precision task: Nested processes of intrapersonal and interpersonal coordination. Exp Brain Res. 2011;211:447–457. doi: 10.1007/s00221-011-2653-8. [DOI] [PubMed] [Google Scholar]

- 52.Calderone DJ, Lakatos P, Butler PD, Castellanos FX. Entrainment of neural oscillations as a modifiable substrate of attention. Trends Cogn Sci. 2014;18:300–309. doi: 10.1016/j.tics.2014.02.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Chang A, Bosnyak DJ, Trainor LJ. Unpredicted pitch modulates beta oscillatory power during rhythmic entrainment to a tone sequence. Front Psychol. 2016;7:327. doi: 10.3389/fpsyg.2016.00327. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Fujioka T, Ross B, Trainor LJ. Beta-band oscillations represent auditory beat and its metrical hierarchy in perception and imagery. J Neurosci. 2015;35:15187–15198. doi: 10.1523/JNEUROSCI.2397-15.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Fujioka T, Trainor LJ, Large EW, Ross B. Internalized timing of isochronous sounds is represented in neuromagnetic β oscillations. J Neurosci. 2012;32:1791–1802. doi: 10.1523/JNEUROSCI.4107-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Keller PE, Novembre G, Hove MJ. Rhythm in joint action: Psychological and neurophysiological mechanisms for real-time interpersonal coordination. Philos Trans R Soc Lond B Biol Sci. 2014;369:20130394. doi: 10.1098/rstb.2013.0394. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Lindenberger U, Li SC, Gruber W, Müller V. Brains swinging in concert: Cortical phase synchronization while playing guitar. BMC Neurosci. 2009;10:22. doi: 10.1186/1471-2202-10-22. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Solera F, Calderara S, Cucchiara R. Socially constrained structural learning for groups detection in crowd. IEEE Trans Pattern Anal Mach Intell. 2016;38:995–1008. doi: 10.1109/TPAMI.2015.2470658. [DOI] [PubMed] [Google Scholar]

- 59.Zhan B, Monekosso DN, Remagnino P, Velastin SA, Xu LQ. Crowd analysis: A survey. Mach Vis Appl. 2008;19:345–357. [Google Scholar]

- 60.King EC. The roles of student musicians in quartet rehearsals. Psychol Music. 2006;34:262–282. [Google Scholar]

- 61.Murnighan JK, Conlon DE. The dynamics of intense work groups: A study of British string quartets. Adm Sci Q. 1991;36:165–186. [Google Scholar]