Abstract

The evaluation of educational programs has become an expected part of medical education. At some point, all medical educators will need to critically evaluate the programs that they deliver. However, the evaluation of educational programs requires a very different skillset than teaching. In this article, we aim to identify and summarize key papers that would be helpful for faculty members interested in exploring program evaluation.

In November of 2016, the 2016-2017 Academic Life in Emergency Medicine (ALiEM) Faculty Incubator program highlighted key papers in a discussion of program evaluation. This list of papers was augmented with suggestions by guest experts and by an open call on Twitter. This resulted in a list of 30 papers on program evaluation. Our authorship group then engaged in a process akin to a Delphi study to build consensus on the most important papers about program evaluation for medical education faculty.

We present our group’s top five most highly rated papers on program evaluation. We also summarize these papers with respect to their relevance to junior medical education faculty members and faculty developers.

Program evaluation is challenging. The described papers will be informative for junior faculty members as they aim to design literature-informed evaluations for their educational programs.

Keywords: program evaluation, medical education, curated collection

Introduction and background

Medical educators spend much of their time developing and delivering educational programs. Programs can include didactic lectures, online modules, boot camps, and simulation sessions. Program evaluation is essential to determine the value of the teaching that is provided [1-2], whether or not it meets its intended objectives and how it should be improved or modified in the future [3]. However, rather than beginning at a program's conception [2], evaluation is often only considered late in the process or after the curriculum has been delivered [1].

Program evaluation can be mistaken for assessment or research, but these constructs are subtly different. Within medical education, assessment is generally understood to be the measurement of individual student performance [4]. While student success can provide some information on the effectiveness of a program, program evaluation goes further to determine whether the program worked and how it can be improved [3]. Program evaluation often overlaps and shares methods with research, but its primary goal is to improve or judge the evaluated program, rather than to create and disseminate new knowledge [4].

In 2016, the Faculty Incubator was created by the Academic Life in Emergency Medicine (ALiEM) team to create a virtual community of practice (CoP) [5-6] for early career educators. In this online forum, members of this CoP discussed and debated topics relevant to modern emergency medicine (EM) clinician educators. As part of this program, we created a one-month module focused on program evaluation.

This paper is a narrative review, which highlights the literature that was felt to be the most important for faculty developers and junior educators who wish to learn more about program evaluation.

Review

Methods

During November 1-30, 2016, the junior faculty educators and mentors of the ALiEM Faculty Incubator [7] discussed the topic of program evaluation in an online discussion forum. The Faculty Incubator involved 30 junior faculty members and 10 mentors. All junior faculty members were required to participate in the discussion, which was facilitated by the mentors; however, participation was not strictly monitored. The titles of papers that were cited, shared, and recommended were compiled into a list.

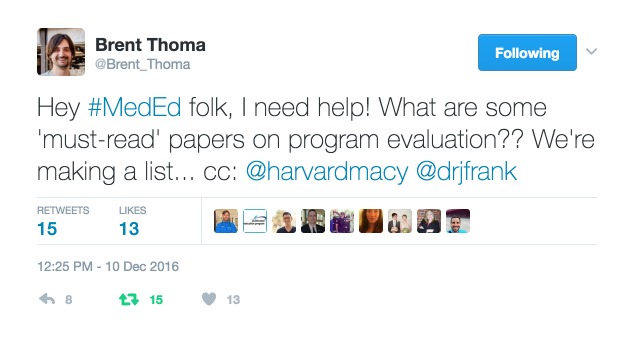

This list was expanded using two other methods: articles recommended during a YouTube Live discussion featuring mentors with significant experience in program evaluation (Drs. Lalena Yarris, George Mejicano, Chad Kessler, and Megan Boysen-Osborn) and a call for important program evaluation papers on Twitter. We ‘tweeted’ requests asking participants in the free open access meducation and medical education (#FOAMed and #MedEd) online virtual communities of practice [8] to suggest important papers on the topic of program evaluation. Figure 1 shows an example tweet. Several papers were suggested via more than one modality.

Figure 1. Tweet by Brent Thoma soliciting requests for key papers on program evaluation in medical education.

The importance of these papers for program evaluation was evaluated through a three-round voting process inspired by the Delphi methodology [9-11]. All of this manuscript's authors read the 30 articles and participated in this process. In the first round, raters were asked to indicate the importance of each article on a seven-point Likert scale, anchored at one by the statement "unimportant for junior faculty" and at seven by the statement "essential for junior faculty." In the second round, raters were provided with a frequency histogram displaying how each article had been rated in the first round. They were then asked to indicate if each article "must be included in the top papers" or "should not be included in the top papers." In the third round, raters were provided with the results of the second round as a percentage of raters who indicated that each article must be included. They were then asked to select the five papers that should be included in the article because they are the most important.
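The summary statistics used in this voting process can be illustrated with a minimal sketch. The function names, the sample data, and the choice of the sample standard deviation are assumptions made for illustration; they are not the authors' actual analysis or raw data.

```python
from statistics import mean, stdev


def summarize_round_one(ratings):
    """Return (mean, sample SD) of 1-7 Likert ratings, each rounded to one decimal.

    Assumption: the sample standard deviation is used; the source does not say which.
    """
    return round(mean(ratings), 1), round(stdev(ratings), 1)


def percent_endorsed(votes):
    """Return the percentage of raters who voted 'must be included' (True)."""
    return round(100 * sum(votes) / len(votes), 1)


def top_papers(endorsement_by_title, n=5):
    """Return the n titles with the highest endorsement; ties keep input order."""
    ranked = sorted(endorsement_by_title, key=endorsement_by_title.get, reverse=True)
    return ranked[:n]


# Hypothetical round-one ratings from nine raters for a single paper.
ratings = [7, 7, 7, 7, 7, 7, 7, 6, 6]
print(summarize_round_one(ratings))

# Hypothetical round-two votes: eight of nine raters endorse the paper.
votes = [True] * 8 + [False]
print(percent_endorsed(votes))
```

Sorting by the final-round endorsement percentage, as `top_papers` does, mirrors the rank-ordering step; how ties are broken is a design choice that this sketch resolves by keeping input order.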

Similar methods were used by the ALiEM Faculty Incubator in a previous series of papers published in the Western Journal of Emergency Medicine and Population Health [12-15]. Readers will note that this was not a traditional Delphi methodology [9] because our raters included novices (i.e. junior faculty members, participants in the Faculty Incubator) as well as experienced medical educators (i.e. clinician educators, all of whom have published more than 10 peer-reviewed publications and who serve as mentors and facilitators of the ALiEM Faculty Incubator). Rather than only including experts, we intentionally involved junior educators to ensure we selected papers that would be of use to a spectrum of educators throughout their careers.

Results

The ALiEM Faculty Incubator discussions, expert recommendations, and social media requests yielded 30 articles. The paper evaluation process resulted in a rank-order listing of these papers in order of perceived relevance as indicated by the results of round three. The top five papers are expanded upon below. The ratings of all 30 papers and their full citations are listed in Table 1.

Table 1. The complete list of program evaluation literature reviewed by the authorship team and the ratings following each round of evaluation.

| Article Title | Round 1: Mean rating (SD) | Round 2: % of raters that endorsed this paper | Round 3: % of raters that endorsed this paper | Top 5 Papers |
| --- | --- | --- | --- | --- |
| Twelve tips for evaluating educational programs [1] | 6.8 (0.4) | 100% | 100% | 1st (tie) |
| Program evaluation models and related theories: AMEE Guide No. 67 [16] | 6.7 (0.5) | 100% | 100% | 1st (tie) |
| AMEE Education Guide no. 29: evaluating educational programmes [4] | 6.2 (1.4) | 88.9% | 100% | 1st (tie) |
| Rethinking program evaluation in health professions education: beyond 'did it work'? [3] | 6.0 (1.0) | 100% | 88.9% | 4th |
| Perspective: Reconsidering the focus on "outcomes research" in medical education: a cautionary note [17] | 5.7 (1.2) | 77.8% | 77.8% | 5th |
| A conceptual model for program evaluation in graduate medical education [18] | 5.9 (1.1) | 55.6% | 0% | |
| Evaluating technology-enhanced learning: A comprehensive framework [19] | 5.8 (1.3) | 66.7% | 22.2% | |
| The structure of program evaluation: an approach for evaluating a course, clerkship, or components of a residency or fellowship training program [20] | 5.6 (0.9) | 44.4% | 0% | |
| AM last page: A snapshot of three common program evaluation approaches for medical education [21] | 5.6 (1.0) | 55.6% | 0% | |
| Using an outcomes-logic-model approach to evaluating a faculty development program for medical educators [5] | 5.0 (1.2) | 22.2% | 0% | |
| Achieving desired results and improved outcomes: integrating planning and assessment throughout learning activities [22] | 5.0 (1.7) | 44.4% | 0% | |
| Diseases of the curriculum [23] | 4.9 (1.7) | 55.6% | 11.1% | |
| Nimble approaches to curriculum evaluation in graduate medical education [24] | 4.7 (1.0) | 22.2% | 0% | |
| 12 Tips for programmatic assessment [25] | 4.7 (2.2) | 55.6% | 0% | |
| A model to begin to use clinical outcomes in medical education [26] | 4.4 (1.4) | 22.2% | 0% | |
| Meta-analysis of faculty's teaching effectiveness: Student evaluation of teaching ratings and student learning are not related [27] | 4.4 (1.9) | 0% | 0% | |
| Transforming the academic faculty perspective in graduate medical education to better align educational and clinical outcomes [28] | 4.3 (1.4) | 0% | 0% | |
| How we conduct ongoing programmatic evaluation of our medical education curriculum [29] | 4.2 (1.1) | 11.1% | 0% | |
| Using a modified nominal group technique as a curriculum evaluation tool [30] | 4.1 (1.3) | 11.1% | 0% | |
| A new framework for designing programs of assessment [31] | 4.1 (1.7) | 0% | 0% | |
| Evaluation of a collaborative program on smoking cessation: Translating outcomes framework into practice [32] | 3.9 (1.5) | 11.1% | 0% | |
| The role of theory-based outcome frameworks in program evaluation: Considering the case of contribution analysis [33] | 3.9 (1.8) | 0% | 0% | |
| Use of an institutional template for annual program evaluation and improvement: benefits for program participation and performance [34] | 3.4 (1.4) | 0% | 0% | |
| Instructional effectiveness of college teachers as judged by teachers, current and former students, colleagues, administrators, and external (neutral) observers [35] | 3.3 (1.7) | 0% | 0% | |
| Student evaluations of teaching (mostly) do not measure teaching effectiveness [36] | 3.1 (1.8) | 11.1% | 0% | |
| How we use patient encounter data for reflective learning in family medicine training [37] | 3.0 (1.0) | 0% | 0% | |
| Half a minute: Predicting teacher evaluations from thin slices of nonverbal behavior and physical attractiveness [38] | 2.9 (1.6) | 0% | 0% | |
| Experimental study design and grant writing in eight steps and 28 questions [39] | 2.6 (1.8) | 0% | 0% | |
| Early experience of a virtual journal club [40] | 2.4 (1.6) | 0% | 0% | |
| Cost: The missing outcome in simulation-based medical education research: A systematic review [41] | 2.3 (1.0) | 0% | 0% | |

Discussion

The following is the list of papers that our group has determined to be of interest and relevance to junior faculty members and faculty development officers. The accompanying commentaries are meant to explain the relevance of these papers to junior faculty members and also highlight considerations for senior faculty members when using these works for faculty development workshops or sessions.

1. The Association for Medical Education in Europe (AMEE) Education Guide no. 29: Evaluating Educational Programmes [4]: This education guide, published in Medical Teacher, begins with a brief discussion of the history of program evaluation. It goes on to recommend a framework of evaluation for educators that focuses on the methodology of evaluation, the context of evaluation practice, and the challenge of modifying existing programs with the results of the evaluation. This overview includes detailed sets of questions for evaluators to ask about programs that they review. Perhaps the most salient piece of advice from this paper is that improvement, even when modest, is valuable.

Relevance to Junior Faculty Members

This is a high-yield read for the junior faculty educator because it provides a succinct and comprehensive overview of program evaluation through the presentation of a framework, which can be adapted by junior faculty educators. Each step within the framework is accompanied by an explanation to assist the reader in understanding the components.

Considerations for Faculty Developers

Faculty developers should be expected to understand program evaluation in the context of its history. This manuscript summarizes the historical program evaluation literature from within and beyond medical education in a way that contextualizes modern controversies and informs current approaches. Faculty developers should use this manuscript to center themselves within the literature. The framework provided may also guide their approach to evaluating the programs of their more junior faculty members.

2. Program Evaluation Models and Related Theories: AMEE Guide No. 67 [16]: This guide discusses the three main education theories that underlie various evaluation models (i.e. reductionist theory, system theory, and complexity theory). It begins by describing the purpose of program evaluation, clarifying the definition of program evaluation, and explaining why we evaluate educational programs. The authors conclude that the main purpose of any educational program is change, be it intended or unintended, and define program evaluation as the “systematic collection and analysis of information related to the design, implementation, and outcomes of a program for the purpose of monitoring and improving the quality and effectiveness of the program.” The guide ends with a description of four evaluation models (i.e. experimental/quasi-experimental models, Kirkpatrick’s four-level model, logic models, and the context/input/process/product model) informed by these education theories.

Relevance to Junior Faculty Members

Change is the most important aspect of any educational program, so measuring change should be the focus of a program evaluation. It is important for junior educators to understand that evaluation should analyze both the intended and unintended change resulting from a program, rather than solely investigating the intended outcomes. By discussing several different evaluation models and their underlying educational theories, this guide will allow junior faculty educators to choose the evaluation model that is most relevant to their individual educational activity.

Considerations for Faculty Developers

This paper may enhance a faculty developer’s foundational knowledge of program evaluation by summarizing its underlying education theories and common models. It may also serve as a frequent reference for faculty developers as they select conceptual frameworks to inform the evaluation of educational programs.

3. Twelve Tips for Evaluating Educational Programs [1]: The tips provided in this article can be summarized into three primary themes. Prior to beginning the evaluation, it is important to understand the program, be realistic about what is possible, define the stakeholders, determine the intended outcomes of the program, select an evaluation paradigm, and choose a measurement modality. As evaluation design begins, assemble a group of collaborators who will help to brainstorm, guide the methods used, and assist in the piloting of the evaluation. Finally, the author recommends avoiding common pitfalls such as confusing program evaluation with learner assessment, evaluating an outcome that is not consistent with the program’s goals, using an unreliable instrument or an instrument without context-specific validity evidence, and having unrealistic expectations.

Relevance to Junior Faculty Members

Planning for program evaluation must take place as part of the program design process and not as an afterthought. The 12 tips provide salient advice and a model that is thorough, yet easily achievable for junior faculty educators. While the format of this paper presents only an overview of several complex concepts (e.g. validity evidence), the author provides references for a more in-depth review of these topics.

Considerations for Faculty Developers

Faculty developers will find this concise and clear paper helpful as both a reference for mentees and to further their own understanding of program evaluation. In addition to foundational tips, the author summarizes advanced concepts that may apply to a faculty developer’s educational practice. Rather than simply presenting a formula for program evaluation, the inclusion of the strengths and weaknesses of various paradigms allows a more nuanced understanding of the gray areas in evaluating educational programs. Referencing the complexities of validity evidence and the potential drawbacks of patient-related outcome approaches may spark dialogue in faculty development programs and collaborations. Finally, the references included are thoughtful and relevant and would be good additions to faculty developers’ personal libraries.

4. Rethinking Programme Evaluation in Health Professions Education: Beyond 'Did it Work?' [3]: This article begins with a provocative analysis of the Kirkpatrick hierarchy, establishing the multiple problems that arise when program evaluations focus solely on outcomes. Beyond the outcome ("Did it work?"), it reinforces the importance of considering the educational theory ("Why will it work?"), the process ("How did it work?"), the context ("What context is the program operating in?"), and unexpected results within the evaluation of a program. In doing so, the authors open the discussion regarding which evaluation approaches might be better suited for different educational programs. More important than finding "the perfect" evaluation model is gaining a holistic view of a program that clarifies the relationship between interventions and their outcomes.

Relevance to Junior Faculty Members

The spirit of this paper is laudable: do not aim to find a single explanation or theory, but familiarize yourself with the literature and determine the best way to evaluate a program within your own context. It will guide junior faculty in their efforts to develop new educational programs within their educational contexts, focusing not only on whether a certain program works, but on why it should work, how it worked, and what else occurred. These questions will guide implementation processes and inform future approaches.

Considerations for Faculty Developers

Providing a historical and theoretical overview of program evaluation as a discipline, this article traces the roots of program evaluation. It highlights the importance of going beyond the Kirkpatrick hierarchy to develop a greater understanding of why a program might succeed or fail. The first figure clearly outlines essential elements that explain how theory intersects with implementation and evaluation. It is a must-read for those who are teaching program evaluation to their faculty members, as it can guide them towards richer methods for describing curricula or programs in their scholarly work. Notably, this advice has been considered controversial and should be weighed carefully [42].

5. Perspective: Reconsidering the Focus on "Outcomes Research" in Medical Education: A Cautionary Note [17]: There is an increasing emphasis on higher-level outcomes (e.g. patient outcomes) in educational research, which presents challenges to researchers. After discussing the limitations of this approach, the authors offer salient advice for educational research: begin with a study question and proceed in a stepwise fashion to determine the intended outcome and measurement tool, rather than beginning with the measurement tool and working backward. They recommend beginning with Kirkpatrick level one outcomes (e.g. reaction) and sequentially progressing to higher levels (e.g. learning, behavior, and results) [43] throughout a program of research, rather than always striving to find an impact on patient-level outcomes.

Relevance to Junior Faculty Members

There are several challenges and pitfalls associated with developing medical education studies and evaluating patient-level outcomes. While patient-level outcomes will have a role as educational research continues to evolve, such studies can be difficult to conduct without large grants because multi-site involvement is required to obtain adequate power. Lower-level outcomes, such as student learning or behavior, remain important for assessments of novel interventions, as well as for isolating the most effective components of an intervention. This is important advice for junior faculty members who are already influenced by the focus on patient-level outcomes within medical research.

Considerations for Faculty Developers

Faculty developers must acknowledge the problems inherent to seeking patient-level outcomes in educational research and program evaluation. Junior faculty members may be inclined to “shoot for the moon” and seek an impact on patient outcomes before first establishing that their program is well received, leads to attitude and behavioral change, and is sustainable.

Limitations

As with our previous papers [12-15], this study was not designed to be an exhaustive systematic literature review. We attempted to triangulate our naturally emergent list with additional papers identified through expert consultation and an open social media call, which yielded some important recommendations. Considering the depth and breadth of our final list, we feel that these adjunctive methods have resulted in an important, if not comprehensive, review of the literature.

Conclusions

We present five key papers addressing the topic of program evaluation with discussions and applications for junior faculty members and those leading faculty development initiatives. These papers provide a basis from which junior faculty members can design literature-informed program evaluations for their educational projects.

Acknowledgments

The authors would like to acknowledge Dr. Michelle Lin and the 2016-17 Academic Life in Emergency Medicine (ALiEM) Faculty Incubator participants and mentors for facilitating the drafting and submission of this manuscript.

The content published in Cureus is the result of clinical experience and/or research by independent individuals or organizations. Cureus is not responsible for the scientific accuracy or reliability of data or conclusions published herein. All content published within Cureus is intended only for educational, research and reference purposes. Additionally, articles published within Cureus should not be deemed a suitable substitute for the advice of a qualified health care professional. Do not disregard or avoid professional medical advice due to content published within Cureus.

Funding Statement

Drs. Michael Gottlieb, Megan Boysen-Osborn, and Teresa M Chan report receiving teaching honoraria from Academic Life in Emergency Medicine (ALiEM) during the conduct of the study for their participation as mentors for the 2016-17 ALiEM Faculty Incubator.

Footnotes

The authors have declared that no competing interests exist.

References

- 1. Twelve tips for evaluating educational programs. Cook DA. Med Teach. 2010;32:296–301. doi: 10.3109/01421590903480121.

- 2. Program Evaluation: Alternative Approaches and Practical Guidelines. Fitzpatrick JL, Sanders JR, Worthen BR. London, England: Pearson Higher Ed; 2011.

- 3. Rethinking programme evaluation in health professions education: Beyond “did it work?”. Haji F, Morin MP, Parker K. Med Educ. 2013;47:342–351. doi: 10.1111/medu.12091.

- 4. AMEE Education Guide no. 29: Evaluating educational programmes. Goldie J. Med Teach. 2006;28:210–224. doi: 10.1080/01421590500271282.

- 5. Using an outcomes-logic-model approach to evaluate a faculty development program for medical educators. Armstrong EG, Barsion SJ. Acad Med. 2006;81:483–488. doi: 10.1097/01.ACM.0000222259.62890.71.

- 6. Communities of practice: Learning, meaning, and identity. Wenger E. Cambridge, England: Cambridge University Press; 1990.

- 7. ALiEM Faculty Incubator 2017-2018: Call for Applications. Gottlieb M, Chan TM-Y, Yarris LM. 2016. [Mar 2017]. https://www.aliem.com/faculty-incubator/

- 8. Leveraging a virtual community of practice to participate in a survey-based study: A description of the METRIQ study methodology. Thoma B, Paddock M, Purdy E, et al. Acad Emerg Med. 2017;1:110–113. doi: 10.1002/aet2.10013.

- 9. Research guidelines for the Delphi survey technique. Hasson F, Keeney S, McKenna H. J Adv Nurs. 2000;32:1008–1015.

- 10. Emergency medicine and critical care blogs and podcasts: establishing an international consensus on quality. Thoma B, Chan TM, Paterson QS, et al. Ann Emerg Med. 2015;66:396–402. doi: 10.1016/j.annemergmed.2015.03.002.

- 11. Administration and leadership competencies: establishment of a national consensus for emergency medicine. Thoma B, Poitras J, Penciner R, et al. CJEM. 2015;17:107–114. doi: 10.2310/8000.2013.131270.

- 12. Primer series: five key papers fostering educational scholarship in junior academic faculty. Chan T, Gottlieb M, Fant A, et al. West J Emerg Med. 2016;17:519–526. doi: 10.5811/westjem.2016.7.31126.

- 13. Academic primer series: Eight key papers about education theory. Gottlieb M, Boysen-Osborn M, Chan TM, et al. West J Emerg Med. 2017;18:293–302.

- 14. Primer series: five key papers about team collaboration relevant to emergency medicine. Gottlieb M, Grossman C, Rose E, et al. West J Emerg Med. 2017;18:303–310. doi: 10.5811/westjem.2016.11.31212.

- 15. Primer series: five key papers for consulting clinician educators. Chan T, Gottlieb M, Quinn A, et al. West J Emerg Med. 2017;18:311–317. doi: 10.5811/westjem.2016.11.32613.

- 16. Program evaluation models and related theories: AMEE Guide No. 67. Frye AW, Hemmer PA. Med Teach. 2012;34:288–299. doi: 10.3109/0142159X.2012.668637.

- 17. Perspective: Reconsidering the focus on “outcomes research” in medical education: a cautionary note. Cook DA, West CP. Acad Med. 2013;88:162–167. doi: 10.1097/ACM.0b013e31827c3d78.

- 18. A conceptual model for program evaluation in graduate medical education. Musick DW. Acad Med. 2006;8:1051–1056.

- 19. Evaluating technology-enhanced learning: A comprehensive framework. Cook DA, Ellaway RH. Med Teach. 2015;37:961–970. doi: 10.3109/0142159X.2015.1009024.

- 20. The structure of program evaluation: an approach for evaluating a course, clerkship, or components of a residency or fellowship training program. Durning SJ, Hemmer P, Pangaro LN. Teach Learn Med. 2007;19:308–318.

- 21. AM last page: A snapshot of three common program evaluation approaches for medical education. Blanchard RD, Torbeck L, Blondeau W. Acad Med. 2013;88:146. doi: 10.1097/ACM.0b013e3182759419.

- 22. Achieving desired results and improved outcomes: integrating planning and assessment throughout learning activities. Moore DE, Green JS, Gallis HA. J Contin Educ Health Prof. 2009;29:1–15.

- 23. Diseases of the curriculum. Abrahamson S. J Med Educ. 1978;53:951–957.

- 24. Nimble approaches to curriculum evaluation in graduate medical education. Reed DA. J Grad Med Educ. 2011;3:264–266.

- 25. Twelve tips for programmatic assessment. van der Vleuten CP, Schuwirth LW, Driessen EW, et al. Med Teach. 2015;37:641–646. doi: 10.3109/0142159X.2014.973388.

- 26. A model to begin to use clinical outcomes in medical education. Haan CK, Edwards FH, Poole B, et al. Acad Med. 2008;83:574–580.

- 27. Meta-analysis of faculty's teaching effectiveness: Student evaluation of teaching ratings and student learning are not related. Uttl B, White CA, Gonzalez DW. Stud Educ Eval. 2016.

- 28. Transforming the academic faculty perspective in graduate medical education to better align educational and clinical outcomes. Wong BM, Holmboe ES. Acad Med. 2016;91:473–479.

- 29. How we conduct ongoing programmatic evaluation of our medical education curriculum. Karpa K, Abendroth CS. Med Teach. 2012;34:783–786. doi: 10.3109/0142159X.2012.699113.

- 30. Using a modified nominal group technique as a curriculum evaluation tool. Dobbie A, Rhodes M, Tysinger JW, et al. Fam Med. 2004;36:402–406.

- 31. A new framework for designing programmes of assessment. Dijkstra J, Van der Vleuten CP, Schuwirth LW. Adv in Health Sci Educ. 2010;15:379–393.

- 32. Evaluation of a collaborative program on smoking cessation: translating outcomes framework into practice. Shershneva MB, Larrison C, Robertson S, et al. J Contin Educ Health Prof. 2011;31:0. doi: 10.1002/chp.20146.

- 33. The role of theory-based outcome frameworks in program evaluation: Considering the case of contribution analysis. Dauphinee WD. Med Teach. 2015;37:979–982. doi: 10.3109/0142159X.2015.1087484.

- 34. Use of an institutional template for annual program evaluation and improvement: benefits for program participation and performance. Andolsek KM, Nagler A, Weinerth JL. J Grad Med Educ. 2010;2:160–164.

- 35. Instructional effectiveness of college teachers as judged by teachers themselves, current and former students, colleagues, administrators, and external (neutral) observers. Feldman KA. Res High Educ. 1989;30:137–194.

- 36. Student evaluations of teaching (mostly) do not measure teaching effectiveness. Boring A, Ottoboni K, Stark PB. Scienceopen. 2016:1–11.

- 37. How we use patient encounter data for reflective learning in family medicine training. Morgan S, Henderson K, Tapley A, et al. Med Teach. 2015;37:897–900. doi: 10.3109/0142159X.2014.970626.

- 38. Half a minute: Predicting teacher evaluations from thin slices of nonverbal behavior and physical attractiveness. Ambady N, Rosenthal R. J Pers Soc Psychol. 1993;64:431–441.

- 39. Experimental study design and grant writing in eight steps and 28 questions. Bordage G, Dawson B. Med Educ. 2003;37:376–385. doi: 10.1046/j.1365-2923.2003.01468.x.

- 40. Early experience of a virtual journal club. Oliphant R, Blackhall V, Moug S, et al. Clin Teach. 2015;12:389–393.

- 41. Cost: The missing outcome in simulation-based medical education research: A systematic review. Zendejas B, Wang AT, Brydges R, et al. Surgery. 2013;153:160–176.

- 42. Why medical educators should continue to focus on clinical outcomes. Wayne DB, Barsuk JH, McGaghie WC. Acad Med. 2013;88:1403. doi: 10.1097/ACM.0b013e3182a368d5.

- 43. Evaluating Training Programs. Kirkpatrick DL. San Francisco: Berrett-Koehler Publishers, Inc; 1994.