Abstract

Background

The new sepsis definition has increased the need for frequent recalculation of the sequential organ failure assessment (SOFA) score, and the clerical burden of information retrieval makes this score ideal for automated calculation.

Objective

The aim of this study was to (1) estimate the clerical workload of manual SOFA score calculation through a time-motion analysis and (2) describe a user-centered design process for an electronic medical record (EMR)-integrated, automated SOFA score calculator, followed by a usability evaluation.

Methods

First, we performed a time-motion analysis by recording time-to-task-completion for the manual calculation of 35 baseline and 35 current SOFA scores by 14 internal medicine residents over a 2-month period. Next, we used an agile development process to create a user interface for a previously developed automated SOFA score calculator. Clinician end users then evaluated the usability of the final user interface with the Computer Systems Usability Questionnaire.

Results

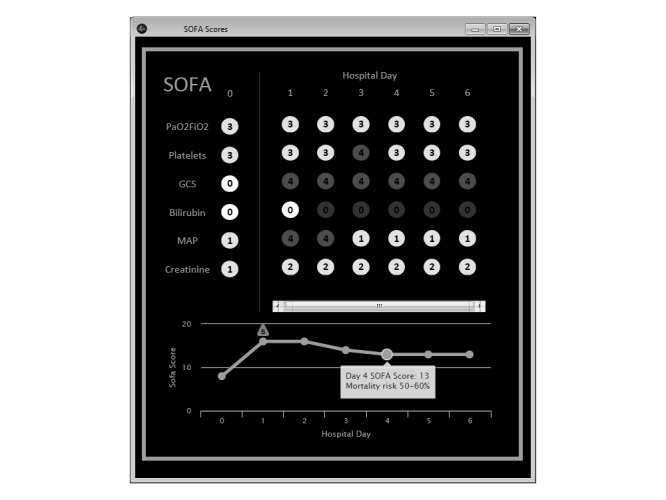

The overall mean (standard deviation, SD) time to complete a manual SOFA score calculation was 61.6 s (33 s). Among the usability survey respondents (12/50, 24% response rate), our user-centered interface design process resulted in >75% favorability of survey items in the domains of system usability, information quality, and interface quality.

Conclusions

Early stakeholder engagement in our agile design process resulted in a user interface for an automated SOFA score calculator that reduced clinician workload and met clinicians’ needs at the point of care. Emerging interoperable platforms may facilitate dissemination of similarly useful clinical score calculators and decision support algorithms as “apps.” A user-centered design process and usability evaluation should be considered during creation of these tools.

Keywords: automation, organ dysfunction scores, software design, user-computer interface

Introduction

As electronic medical records (EMRs) have spread through the US health care system, they have brought both great promise and great problems [1,2]. One recently characterized, unintended consequence of increasing EMR adoption is physician burnout associated with EMR-related clerical tasks [3]. The high clerical burden of these tasks may reflect the variable attention that vendors give to usability and user-centered design [4,5]. Health information technology interfaces that are poorly adapted to clinician workflow can both increase clerical workload and pose potential safety risks to patients [6-8]. As in other industries, medicine has sought to overcome task-related inefficiencies through automation [9].

Automation of computer interaction in clinical medicine can take many forms. Automated information retrieval is commonly used to generate shift hand-off and inpatient rounding reports, significantly reducing time spent on information retrieval tasks [10-12]. Clinical guideline implementation has also been automated through clinical decision support rules to reduce practice variability by promoting standards of care [13,14]. A recent change in the definition of sepsis has created an opportunity to build and implement clinical decision support that could reduce the clinician workload of information retrieval and processing specific to the sequential organ failure assessment (SOFA) score [15].

In March 2016, the operational definition of sepsis was updated to include a change in SOFA score of ≥2 points compared with baseline (ΔSOFA) [15]. The updated definition has been controversial [16-20]. The SOFA score, which assesses organ dysfunction in six domains, was created in 1996 to describe sepsis-related organ dysfunction [21]. Originally, the SOFA score was calculated at admission [21]. Its use has since been extended to serial recalculation using the most abnormal values during the preceding 24 h [22]. The new sepsis definition, however, implies that the SOFA score would need to be recalculated more frequently to identify sepsis in real time.
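Once the six domain subscores are known, the ΔSOFA criterion reduces to simple arithmetic. The following Python sketch is illustrative only and is not the validated algorithm used in this study [25]: the function names and data layout are hypothetical, and only the coagulation subscore is mapped from a raw value (platelet count, ×10³/µL), using the thresholds of the original SOFA table [21].

```python
from typing import Dict

# The six organ systems scored by SOFA; each subscore ranges from 0 to 4.
DOMAINS = ("respiration", "coagulation", "liver",
           "cardiovascular", "cns", "renal")


def coagulation_subscore(platelets_k_per_ul: float) -> int:
    """Map a platelet count (x10^3/uL) to the SOFA coagulation subscore."""
    # Thresholds are checked from most to least severe.
    for limit, points in ((20, 4), (50, 3), (100, 2), (150, 1)):
        if platelets_k_per_ul < limit:
            return points
    return 0


def total_sofa(subscores: Dict[str, int]) -> int:
    """Sum the six domain subscores; every domain must be present and in 0-4."""
    total = 0
    for domain in DOMAINS:
        points = subscores[domain]  # raises KeyError if a domain is absent
        if not 0 <= points <= 4:
            raise ValueError(f"{domain} subscore out of range: {points}")
        total += points
    return total


def meets_delta_sofa(baseline: Dict[str, int], current: Dict[str, int],
                     threshold: int = 2) -> bool:
    """True when the current total exceeds the baseline total by >= threshold."""
    return total_sofa(current) - total_sofa(baseline) >= threshold


if __name__ == "__main__":
    # Hypothetical patient: baseline renal dysfunction only.
    baseline = {**dict.fromkeys(DOMAINS, 0), "renal": 1}
    current = {**baseline, "coagulation": coagulation_subscore(85),
               "cardiovascular": 1}
    print(total_sofa(baseline), total_sofa(current),
          meets_delta_sofa(baseline, current))
```

With these hypothetical inputs the baseline total is 1 and the current total is 4, so the ΔSOFA threshold of 2 is met.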

The prospective time-drain imposed by the new definition may not be trivial; a previous study reported a time-cost of about 5 min per patient for information retrieval and manual score calculation [23]. Consequently, methods to include automated SOFA score calculations in daily clinical reports have been developed [24,25]. EMR interfaces have advanced since those studies, and the time-drain of manual SOFA calculation may have changed. Additionally, these previous automated SOFA score calculators were used in printed daily reports and have not been adapted to meet clinicians’ needs for real-time use at the bedside.

The goals of this study were to (1) quantify the current time-drain of manual SOFA score calculation and (2) describe the user-centered design process and usability evaluation of an EMR-integrated real-time automated SOFA score calculator interface.

Methods

Setting

This study was performed at Mayo Clinic Hospital, St Marys Campus in Rochester, Minnesota. The study protocol was reviewed and approved by the Mayo Clinic Institutional Review Board.

Time-Motion Analysis

Internal medicine residents were observed calculating baseline and current SOFA scores during their medical intensive care unit (ICU) rotation over a 2-month period. Residents used Mayo Clinic’s locally developed EMR for data retrieval. The instrument used to perform the calculation (website, mobile phone app, etc) was at the clinician’s discretion. Total calculation time and calculation instrument were captured for each observation. The total time-cost was calculated from the average task completion time, assuming one SOFA score calculation per patient day, and extrapolated to the total number of medical ICU patient days at St Marys Hospital in Rochester, Minnesota, during a 1-year period.

Interface Development and Usability Evaluation

The user interface was designed using an agile development process involving stakeholders from critical care medicine and information technology. Agile software development is a user-centered design process in which programs are built incrementally over many short development cycles. These cycles are analogous to the plan-do-study-act cycles used in clinical quality improvement. In contrast with traditional linear “waterfall” software development, end-user testing and feedback are performed during each agile development cycle rather than only in the last phase of the project. Agile development relies on close collaboration between developers and end users to guide improvements during each cycle; this allows early customization of the user interface (UX) to meet the clinician end user’s information needs. Close involvement of clinician end users throughout the development process has been shown to improve the usability and end-user utilization of the resulting product [26,27].

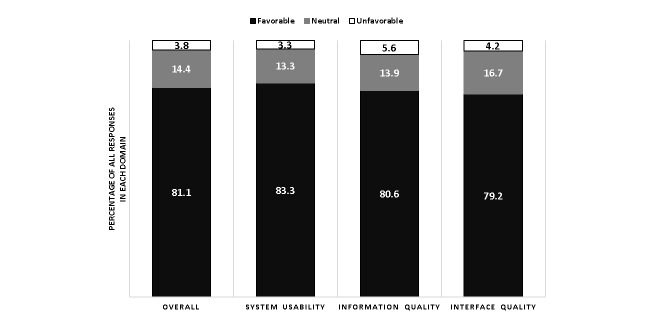

The algorithm underlying the SOFA score calculator was previously validated for daily score calculation [25] and was updated to support recalculation every 15 min. With each recalculation, the 24-h calculation frame is shifted forward by 15 min. During the initial planning phase, clinician stakeholders were interviewed to determine essential and nice-to-have user interface features and how to display information for each SOFA subcomponent. Next, a UX mockup was constructed using Pencil (Evolus, Ho Chi Minh City, Vietnam), an open-source, multiplatform graphical user interface (GUI) prototyping tool, and returned to clinician stakeholders for review and comment. To complete each cycle, developers revised the UX prototype and returned it to clinicians for review and feedback. We continued iterative UX development cycles until consensus was reached on the interface design; this took four cycles spanning 2 weeks. The final UX was integrated into clinical workflow through our institution’s ICU patient care dashboard by adding indicator icons to our unit-level multipatient viewer. The indicator icon changes when the ΔSOFA criterion has been met but does not trigger a visual alert. Clicking the indicator displays the automated SOFA score calculator interface (Figure 1).
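The shifting 24-h frame can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the production algorithm: observations for a single variable are assumed to be (timestamp, value) pairs, the frame is taken as the half-open interval (end minus 24 h, end], and “most abnormal” is supplied by the caller (the minimum for platelet counts, the maximum for bilirubin or creatinine). All names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Callable, Iterator, Optional, Sequence, Tuple

Observation = Tuple[datetime, float]  # (timestamp, value) for one variable

WINDOW = timedelta(hours=24)   # SOFA look-back frame
STEP = timedelta(minutes=15)   # recalculation interval


def worst_in_window(obs: Sequence[Observation], end: datetime,
                    worst: Callable = min) -> Optional[float]:
    """Most abnormal value in the half-open frame (end - 24 h, end], or None."""
    start = end - WINDOW
    values = [value for t, value in obs if start < t <= end]
    return worst(values) if values else None


def rolling_worst(obs: Sequence[Observation], first_end: datetime,
                  last_end: datetime, worst: Callable = min
                  ) -> Iterator[Tuple[datetime, Optional[float]]]:
    """Yield (frame end, worst value), shifting the 24-h frame by 15 min."""
    end = first_end
    while end <= last_end:
        yield end, worst_in_window(obs, end, worst)
        end += STEP


if __name__ == "__main__":
    t0 = datetime(2017, 1, 1, 8, 0)
    platelets = [(t0, 180.0), (t0 + timedelta(hours=2), 90.0),
                 (t0 + timedelta(hours=25), 120.0)]
    # min() because a low platelet count is the abnormal direction
    for end, value in rolling_worst(platelets,
                                    t0 + timedelta(hours=25, minutes=30),
                                    t0 + timedelta(hours=26, minutes=30)):
        print(end.isoformat(), value)
```

Each yielded pair corresponds to one 15-min recalculation; in this example the reported worst platelet count changes once the 90.0 value leaves the frame. A full calculator would repeat this for every SOFA input and convert the results into subscores.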

Figure 1.

Example of the automated sequential organ failure assessment (SOFA) score calculator’s final implemented user interface.

The final UX was evaluated with the Computer Systems Usability Questionnaire administered through REDCap [28,29]. The questionnaire was sent to all potential end users not involved in UX development who were scheduled to work during the 2 months after the interface had been made available for clinical use. A 5-point Likert scale was used for each item. Responses to each item were categorized as favorable (4-5), neutral (3), or unfavorable (1-2). Each question item belonged to one of three domains—system usability, information quality, and interface quality [28]. The proportion of question items categorized as favorable, neutral, and unfavorable was calculated for the overall questionnaire and within each domain.
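The categorization and proportions described above can be expressed in a few lines. The sketch below assumes each item response is recorded as a (domain, score) pair; the function names are hypothetical, and the study itself performed its analysis in R [30].

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

CATEGORIES = ("favorable", "neutral", "unfavorable")


def categorize(score: int) -> str:
    """Collapse a 5-point Likert response: 4-5 favorable, 3 neutral, 1-2 unfavorable."""
    if score not in (1, 2, 3, 4, 5):
        raise ValueError(f"not a 5-point Likert response: {score}")
    if score >= 4:
        return "favorable"
    if score == 3:
        return "neutral"
    return "unfavorable"


def proportions_by_domain(
        responses: Iterable[Tuple[str, int]]) -> Dict[str, Dict[str, float]]:
    """Proportion of item responses in each category, per domain and overall."""
    counts: Dict[str, Counter] = {}
    for domain, score in responses:
        counts.setdefault(domain, Counter())[categorize(score)] += 1
    if not counts:
        raise ValueError("no responses")
    overall: Counter = Counter()
    for domain_counts in counts.values():
        overall.update(domain_counts)
    counts["overall"] = overall
    return {
        domain: {cat: c[cat] / sum(c.values()) for cat in CATEGORIES}
        for domain, c in counts.items()
    }
```

For example, `proportions_by_domain([("system usability", 5), ("system usability", 3)])` reports half of the system usability responses as favorable and half as neutral.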

All statistical analyses were performed with R version 3.3.1 [30]. For the time-motion analysis, linear regression was used to assess the relationship between hospital day and calculation time. Descriptive statistics were used to summarize survey participants’ clinical roles and the proportion of responses within each usability domain.
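The regression of calculation time on hospital day was run in R; a typical call for such a model is `summary(lm(seconds ~ day))`. For readers working outside R, the Python sketch below computes the same ordinary least-squares point estimates and R². Computing the P value additionally requires the t distribution and is omitted. Function and variable names are hypothetical.

```python
from math import fsum
from typing import Sequence, Tuple


def simple_linear_regression(x: Sequence[float], y: Sequence[float]
                             ) -> Tuple[float, float, float]:
    """OLS fit of y = intercept + slope * x; returns (slope, intercept, R^2)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with >= 2 points")
    n = len(x)
    mean_x, mean_y = fsum(x) / n, fsum(y) / n
    sxx = fsum((xi - mean_x) ** 2 for xi in x)
    ss_tot = fsum((yi - mean_y) ** 2 for yi in y)
    if sxx == 0 or ss_tot == 0:
        raise ValueError("x and y must both vary")
    sxy = fsum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares around the fitted line
    ss_res = fsum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    return slope, intercept, 1 - ss_res / ss_tot
```

Here `x` would hold each observation's hospital day and `y` the corresponding calculation time in seconds.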

Results

Time-Motion Analysis

Fourteen internal medicine residents were observed calculating 35 baseline and 35 current SOFA scores for patients admitted to the medical ICU under their care. The overall mean (SD) calculation time was 61.6 s (33 s). Calculating the current SOFA score took significantly less time than calculating the baseline score (39.9 s [SD 8.3] vs 83.4 s [SD 36.0]; P<.001). Most participants (9/14, 64%) manually entered data points into a Web-based score calculator; the remainder used a mobile phone app. There was a significant linear association between current hospital day and time to calculate the baseline score (P<.001, R²=.54). Extrapolated to an entire year in our institution’s 24-bed medical ICU (6770 patient days), one extra manual SOFA score calculation per patient day would require a cumulative total of about 116 h (SD 64 h). This amounts to almost 5 extra hours of work per ICU bed per year, distributed among our medical intensive care clinicians.
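The extrapolation above is simple arithmetic and can be checked directly. This sketch reproduces the mean estimate from the reported 61.6 s per calculation, 6770 patient days, and 24 beds; the `calcs_per_day` parameter is a hypothetical addition showing how the burden would scale if scores were recalculated several times per day.

```python
SECONDS_PER_HOUR = 3600
MEAN_SECONDS_PER_CALC = 61.6   # reported mean manual calculation time
PATIENT_DAYS_PER_YEAR = 6770   # medical ICU patient days in 1 year
ICU_BEDS = 24


def annual_hours(seconds_per_calc: float, patient_days: int,
                 calcs_per_day: int = 1) -> float:
    """Clinician hours per year spent on manual SOFA score calculation."""
    return seconds_per_calc * patient_days * calcs_per_day / SECONDS_PER_HOUR


if __name__ == "__main__":
    hours = annual_hours(MEAN_SECONDS_PER_CALC, PATIENT_DAYS_PER_YEAR)
    print(f"{hours:.0f} h per year, {hours / ICU_BEDS:.1f} h per bed")
    # Hypothetical scenario: four recalculations per patient day
    print(f"{annual_hours(MEAN_SECONDS_PER_CALC, PATIENT_DAYS_PER_YEAR, 4):.0f} h per year")
```

The first line reproduces the roughly 116 h per year and 4.8 h per bed reported above; the cost grows linearly with the number of daily recalculations.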

Interface Design and Usability Evaluation

Clinician stakeholders identified several key features during the initial stakeholder analysis. Essential needs identified by clinicians reflected their clinical information needs: (1) ability to quickly identify when the ΔSOFA≥2 (vs baseline) threshold had been passed; (2) ability to quickly view current, baseline, and previous SOFA scores from the current hospitalization, broken down by SOFA score component; (3) ability to quickly identify when data were missing for each SOFA component and whether data were carried forward; (4) ability to quickly identify the source data used for each SOFA component calculation; and (5) high accuracy. Items 3-5 reflect the concerns several stakeholders expressed about the potential for automation bias with this tool [31]. One nonessential need was identified: displaying the prognostic mortality risk associated with each SOFA score. All identified information needs were incorporated into the initial UX mockup. Major UX changes during the development process included (1) formatting and coloring changes to highlight extreme or missing data for each SOFA component, (2) changes to the ΔSOFA threshold indicator, and (3) changes to limit the number of daily SOFA scores visible for prolonged hospitalizations.
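Stakeholder needs (3) and (4) imply that each displayed component value should carry its provenance. The Python sketch below shows one hypothetical way to represent this; the status labels and, in particular, the 72-h carry-forward limit are assumptions for illustration and are not the rules used by the study’s calculator.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import Callable, Optional, Sequence, Tuple

Observation = Tuple[datetime, float]  # (timestamp, value) for one variable


class Status(Enum):
    MEASURED = "measured"                # value found in the current 24-h frame
    CARRIED_FORWARD = "carried forward"  # older value reused, flagged in the UI
    MISSING = "missing"                  # nothing usable; shown as missing


@dataclass(frozen=True)
class ComponentValue:
    value: Optional[float]
    status: Status
    source_time: Optional[datetime]  # lets the UI point back to the source datum


def resolve_component(obs: Sequence[Observation], end: datetime,
                      worst: Callable = min,
                      window: timedelta = timedelta(hours=24),
                      max_carry: timedelta = timedelta(hours=72)  # assumed limit
                      ) -> ComponentValue:
    """Choose the value (and its provenance) displayed for one SOFA component."""
    current = [o for o in obs if end - window < o[0] <= end]
    if current:
        t, v = worst(current, key=lambda o: o[1])  # most abnormal in frame
        return ComponentValue(v, Status.MEASURED, t)
    older = [o for o in obs if end - max_carry < o[0] <= end - window]
    if older:
        t, v = max(older, key=lambda o: o[0])      # most recent older value
        return ComponentValue(v, Status.CARRIED_FORWARD, t)
    return ComponentValue(None, Status.MISSING, None)
```

Returning the source timestamp alongside the value lets an interface highlight carried-forward or missing inputs and link each subscore back to its source observation, which is the kind of verification the stakeholders asked for.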

Fifty Computer Systems Usability Questionnaires were distributed to clinicians who had the opportunity to use the tool in clinical practice during the 2-month period. The response rate was 24% (12/50): the questionnaire was completed by 11% (1/9) of residents, 17% (4/24) of fellows, and 41% (7/17) of attending physicians. A summary of user usability feedback is shown in Figure 2.

Figure 2.

Percentage of responses categorized as favorable, neutral, or unfavorable within each domain of the postimplementation Computer Systems Usability Questionnaire (respondents=12).

Discussion

Principal Findings

The first part of our study estimates the time-drain of manual SOFA score calculation with a modern EMR system, the inefficiency that our EMR-integrated automated SOFA score calculator, created through a user-centered design process, was intended to mitigate. Our time-motion analysis found that manual calculation with a modern EMR takes less time than reported in a study performed 5 years earlier [23]. However, these gains may be offset by the need for repeated calculation under the new sepsis definition. Real-world use would likely require more frequent recalculation, making automation more desirable as the cumulative time requirement grows.

The second part of our study describes the iterative, user-centered design process for an EMR-integrated automated calculator “app” for the SOFA score. Clinician stakeholders worked closely with developers throughout the rapid UX development process. The resulting interface was rated favorably by clinician end users in all three usability domains assessed (system usability, information quality, and interface quality).

Comparison With Prior Work

Several other clinical scores have been automated for clinical practice, for example APACHE II [32,33], APACHE IV [34], CHA2DS2-VASc [35], the Charlson comorbidity score [36], and early warning systems [37]. These studies focused primarily on algorithm validation rather than information delivery. Clinicians’ information delivery needs for these clinical scores depend on the clinical context; many clinical scoring systems are used at decision points in patient care and in clinical practice guidelines (CPGs). Poor clinician adherence to CPGs has been recognized for many years [38]. Consequently, user-centered design processes have been used to improve CPG adherence through clinical decision support, in applications ranging from surgical pathways to guideline implementation, with favorable results [26,39-42].

Future Directions

Future demand for SOFA score calculation in clinical practice may depend on policy from the Centers for Medicare and Medicaid Services (CMS), which still recommends the previous definition of sepsis outlined in the Severe Sepsis or Septic Shock Early Management (SEP-1) bundle because of concerns that the new definition will increase the number of missed sepsis cases [16,17,19]. CMS adoption of the new sepsis definition would likely spur a significant increase in SOFA score usage by linking the score to quality metrics and payments. Because score calculation is time-consuming in an otherwise busy clinical setting, manual SOFA score recalculation may be performed only after the clinician already suspects new-onset sepsis on the basis of physiologic changes noted at the bedside. In this situation, application of the ΔSOFA criterion (≥2 over baseline) would be confirmatory rather than predictive, counter to the Surviving Sepsis Campaign’s goal of improving early recognition of sepsis [43]. However, by automating SOFA score calculation and repeating it as new clinical information becomes available, the ΔSOFA criterion could effectively function as a “sepsis sniffer.” Further studies are needed to compare the effectiveness of the ΔSOFA criterion as a “sepsis sniffer” against other “black box” sepsis detection algorithms under development [37,44-48]. This application does show promise: a recent retrospective study demonstrated that SOFA has greater discrimination for in-hospital mortality in critically ill patients than either quick SOFA (qSOFA) or systemic inflammatory response syndrome (SIRS) criteria [49].

The pairing of the automated SOFA calculator algorithm with a user-centered UX design may hold advantages over these machine learning-based “black box” algorithms: our underlying algorithm is based on a familiar, well-validated clinical score, and the display of each SOFA component allows clinicians to “look under the hood” at the source data behind each item’s value. The ability to verify source data within the UX reflected information needs that our clinician stakeholders identified during the agile software development process. A comparable level of transparency is difficult to achieve with the “black box” algorithms of artificial neural networks and other machine learning techniques. Finally, traditional externally validated clinical scores such as SOFA may be more generalizable than machine learning algorithms [50]. The external validity of machine learning algorithms depends on the diversity of the data sources used for training and cross-validation, whereas traditional clinical scores adopted into CPGs have already been externally validated. Consequently, researchers may have an opportunity to translate traditional clinical scoring models into automated computerized algorithms and distribute them through interoperability platforms.

The emerging “substitutable medical apps, reusable technology” (SMART) on “fast healthcare interoperability resources” (FHIR) interoperable application platform is a promising avenue to bridge the gap between standalone applications and EMR integration [51]. Additionally, the platform offers a means to reduce the 17-year gap between clinical-knowledge generation and widespread usage [52]. Under this platform, interoperable applications can be developed and widely distributed like popular mobile phone apps. Calculator apps and other forms of clinical decision support are currently being “beta-tested” on this platform [53]. In the future, researchers developing clinical scores or computer-assisted decision algorithms may be encouraged to develop similar interoperable applications. In the “app” domain, whether on a mobile phone or integrated into the EMR, usability is an important feature that must be balanced with functionality to encourage widespread adoption. The agile development process described in this paper involved clinicians in the development process early and often, leading to an EMR-integrated “app” that met both clinician information and usability needs within a concise 2-week timeline.
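The calculator described here was integrated through the institution’s own ICU dashboard. Purely as an illustration of the SMART on FHIR approach, the sketch below builds the kind of FHIR search request a SOFA app might issue for its laboratory inputs. The server URL, patient identifier, and function name are hypothetical; `patient`, `code`, `date`, and `_sort` are standard FHIR search parameters for the Observation resource; the LOINC codes are common choices that should be checked against local mappings; and the SMART authorization step is omitted.

```python
from urllib.parse import urlencode

# Hypothetical FHIR server base URL; a SMART app would receive it at launch.
FHIR_BASE = "https://fhir.example.org/r4"

# Commonly used LOINC codes for three SOFA inputs (verify against local mappings).
LOINC = {
    "platelets": "777-3",         # Platelets [#/volume] in Blood by Automated count
    "bilirubin_total": "1975-2",  # Bilirubin.total [Mass/volume] in Serum or Plasma
    "creatinine": "2160-0",       # Creatinine [Mass/volume] in Serum or Plasma
}


def observation_search_url(base: str, patient_id: str, loinc_code: str,
                           since: str) -> str:
    """Build a FHIR Observation search for one lab test, newest results first."""
    params = {
        "patient": patient_id,
        "code": f"http://loinc.org|{loinc_code}",  # token search: system|code
        "date": f"ge{since}",                      # on or after `since`
        "_sort": "-date",                          # descending by date
    }
    return f"{base}/Observation?{urlencode(params)}"


if __name__ == "__main__":
    print(observation_search_url(FHIR_BASE, "example-patient",
                                 LOINC["platelets"], "2017-01-01"))
```

Because the query uses only standard resources and terminology, the same app logic could in principle run against any conforming EMR, which is the portability argument made above.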

Limitations

The primary limitation of this study is that the clinician stakeholders were from a single institution, and their needs might not match those of clinicians elsewhere. However, a similar user-centered design and evaluation process could be used at other institutions to create and customize a comparable tool. Second, the clinician survey response rate was low (24%). We aimed to include residents, fellows, and critical care attending physicians with exposure to the tool to obtain perspectives from a wide variety of clinical roles; however, 11 of the 12 survey responses were provided by critical care attending physicians and fellows. The tool appeared to meet the usability needs of these content experts.

Conclusions

The incorporation of SOFA scoring into the sepsis definition potentially adds about 1 min per patient per calculation to an intensive care clinician’s workload, an amount that is compounded when recalculation is performed multiple times daily to determine whether ΔSOFA criteria have been met. This added workload can be largely eliminated through automated information retrieval and display. To build the information display for an EMR-integrated automated SOFA score calculator, we used a user-centered agile design process that produced a user interface with >75% of usability items rated favorably across the system usability, information quality, and interface quality domains. Usability evaluations are important as clinical decision support algorithms are translated into EMR-integrated applications.

Acknowledgments

This research was made possible by CTSA Grant Number UL1 TR000135 from the National Center for Advancing Translational Sciences (NCATS), a component of the National Institutes of Health (NIH). Its contents are solely the responsibility of the authors and do not necessarily represent the official view of NIH.

Abbreviations

- CMS

Centers for Medicare and Medicaid Services

- CPG

clinical practice guideline

- EMR

electronic medical record

- FHIR

fast healthcare interoperability resources

- GUI

graphical user interface

- ICU

intensive care unit

- qSOFA

quick SOFA

- SEP-1

Severe Sepsis or Septic Shock Early Management Bundle

- SIRS

systemic inflammatory response syndrome

- SMART

substitutable medical apps, reusable technology

- SOFA

Sequential Organ Failure Assessment

- UX

user interface

Footnotes

Conflicts of Interest: One or more of the investigators associated with this project and Mayo Clinic have a financial conflict of interest in technology used in the research, and the investigators and Mayo Clinic may stand to gain financially from the successful outcome of the research.

References

- 1.Hersh W. The electronic medical record: Promises and problems. J Am Soc Inf Sci. 1995 Dec;46(10):772–6. doi: 10.1002/(SICI)1097-4571(199512)46:10<772::AID-ASI9>3.0.CO;2-0. [DOI] [Google Scholar]

- 2.Hillestad R, Bigelow J, Bower A, Girosi F, Meili R, Scoville R, Taylor R. Can electronic medical record systems transform health care? potential health benefits, savings, and costs. Health Affairs. 2005 Sep 01;24(5):1103–17. doi: 10.1377/hlthaff.24.5.1103. http://content.healthaffairs.org/cgi/pmidlookup?view=long&pmid=16162551. [DOI] [PubMed] [Google Scholar]

- 3.Shanafelt TD, Dyrbye LN, Sinsky C, Hasan O, Satele D, Sloan J, West CP. Relationship between clerical burden and characteristics of the electronic environment with physician burnout and professional satisfaction. Mayo Clin Proc. 2016 Jul;91(7):836–48. doi: 10.1016/j.mayocp.2016.05.007. [DOI] [PubMed] [Google Scholar]

- 4.Ratwani RM, Fairbanks RJ, Hettinger AZ, Benda NC. Electronic health record usability: analysis of the user-centered design processes of eleven electronic health record vendors. J Am Med Inform Assoc. 2015 Nov;22(6):1179–82. doi: 10.1093/jamia/ocv050. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Ellsworth MA, Dziadzko M, O'Horo JC, Farrell AM, Zhang J, Herasevich V. An appraisal of published usability evaluations of electronic health records via systematic review. J Am Med Inform Assoc. 2017 Jan;24(1):218–26. doi: 10.1093/jamia/ocw046. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Kellermann AL, Jones SS. What it will take to achieve the as-yet-unfulfilled promises of health information technology. Health Affairs. 2013 Jan 07;32(1):63–8. doi: 10.1377/hlthaff.2012.0693. [DOI] [PubMed] [Google Scholar]

- 7.Karsh B, Weinger MB, Abbott PA, Wears RL. Health information technology: fallacies and sober realities. J Am Med Inform Assoc. 2010 Nov 01;17(6):617–23. doi: 10.1136/jamia.2010.005637. http://jamia.oxfordjournals.org/cgi/pmidlookup?view=long&pmid=20962121. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Sittig DF, Singh H. Defining health information technology-related errors: new developments since to err is human. Arch Intern Med. 2011 Jul 25;171(14):1281–4. doi: 10.1001/archinternmed.2011.327. http://europepmc.org/abstract/MED/21788544. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Friedman BA. Informating, not automating, the medical record. J Med Syst. 1989 Aug;13(4):221–5. doi: 10.1007/BF00996645. [DOI] [PubMed] [Google Scholar]

- 10.Wohlauer MV, Rove KO, Pshak TJ, Raeburn CD, Moore EE, Chenoweth C, Srivastava A, Pell J, Meacham RB, Nehler MR. The computerized rounding report: implementation of a model system to support transitions of care. J Surg Res. 2012 Jan;172(1):11–7. doi: 10.1016/j.jss.2011.04.015. [DOI] [PubMed] [Google Scholar]

- 11.Kochendorfer KM, Morris LE, Kruse RL, Ge BG, Mehr DR. Attending and resident physician perceptions of an EMR-generated rounding report for adult inpatient services. Fam Med. 2010 May;42(5):343–9. http://www.stfm.org/fmhub/fm2010/May/Karl343.pdf. [PubMed] [Google Scholar]

- 12.Li P, Ali S, Tang C, Ghali WA, Stelfox HT. Review of computerized physician handoff tools for improving the quality of patient care. J Hosp Med. 2013 Aug;8(8):456–63. doi: 10.1002/jhm.1988. [DOI] [PubMed] [Google Scholar]

- 13.Eberhardt J, Bilchik A, Stojadinovic A. Clinical decision support systems: potential with pitfalls. J Surg Oncol. 2012 Apr 01;105(5):502–10. doi: 10.1002/jso.23053. [DOI] [PubMed] [Google Scholar]

- 14.Carman MJ, Phipps J, Raley J, Li S, Thornlow D. Use of a clinical decision support tool to improve guideline adherence for the treatment of methicillin-resistant staphylococcus aureus: skin and soft tissue infections. Adv Emerg Nurs J. 2011;33(3):252–66. doi: 10.1097/TME.0b013e31822610d1. [DOI] [PubMed] [Google Scholar]

- 15.Singer M, Deutschman CS, Seymour CW, Shankar-Hari M, Annane D, Bauer M, Bellomo R, Bernard GR, Chiche J, Coopersmith CM, Hotchkiss RS, Levy MM, Marshall JC, Martin GS, Opal SM, Rubenfeld GD, van der Poll T, Vincent J, Angus DC. The third international consensus definitions for sepsis and septic shock (sepsis-3). JAMA. 2016 Feb 23;315(8):801–10. doi: 10.1001/jama.2016.0287. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Townsend SR, Rivers E, Tefera L. Definitions for sepsis and septic shock. JAMA. 2016 Jul 26;316(4):457–8. doi: 10.1001/jama.2016.6374. [DOI] [PubMed] [Google Scholar]

- 17.Simpson SQ. New sepsis criteria: a change we should not make. Chest. 2016 May;149(5):1117–8. doi: 10.1016/j.chest.2016.02.653. [DOI] [PubMed] [Google Scholar]

- 18.Singer M. The new sepsis consensus definitions (sepsis-3): the good, the not-so-bad, and the actually-quite-pretty. Intensive Care Med. 2016 Dec;42(12):2027–9. doi: 10.1007/s00134-016-4600-4. [DOI] [PubMed] [Google Scholar]

- 19.Sprung CL, Schein RM, Balk RA. The new sepsis consensus definitions: the good, the bad and the ugly. Intensive Care Med. 2016 Dec;42(12):2024–6. doi: 10.1007/s00134-016-4604-0. [DOI] [PubMed] [Google Scholar]

- 20.Marshall JC. Sepsis-3: what is the meaning of a definition? Crit Care Med. 2016 Aug;44(8):1459–60. doi: 10.1097/CCM.0000000000001983. [DOI] [PubMed] [Google Scholar]

- 21.Vincent JL, Moreno R, Takala J, Willatts S, De Mendonça A, Bruining H, Reinhart CK, Suter PM, Thijs LG. The SOFA (Sepsis-related Organ Failure Assessment) score to describe organ dysfunction/failure. On behalf of the Working Group on Sepsis-Related Problems of the European Society of Intensive Care Medicine. Intensive Care Med. 1996 Jul;22(7):707–10. doi: 10.1007/BF01709751. [DOI] [PubMed] [Google Scholar]

- 22.Ferreira FL, Bota DP, Bross A, Mélot C, Vincent JL. Serial evaluation of the SOFA score to predict outcome in critically ill patients. JAMA. 2001 Oct 10;286(14):1754–8. doi: 10.1001/jama.286.14.1754. [DOI] [PubMed] [Google Scholar]

- 23.Thomas M, Bourdeaux C, Evans Z, Bryant D, Greenwood R, Gould T. Validation of a computerised system to calculate the sequential organ failure assessment score. Intensive Care Med. 2011 Mar;37(3):557. doi: 10.1007/s00134-010-2083-2. [DOI] [PubMed] [Google Scholar]

- 24.Bourdeaux C, Thomas M, Gould T, Evans Z, Bryant D. Validation of a computerised system to calculate the sequential organ failure assessment score. Crit Care. 2010 Dec 9;14(Suppl 1):P245. doi: 10.1186/cc8477. [DOI] [PubMed] [Google Scholar]

- 25.Harrison AM, Yadav H, Pickering BW, Cartin-Ceba R, Herasevich V. Validation of computerized automatic calculation of the sequential organ failure assessment score. Crit Care Res Pract. 2013;2013:975672. doi: 10.1155/2013/975672. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Lenz R, Blaser R, Beyer M, Heger O, Biber C, Bäumlein M. IT support for clinical pathways - lessons learned. Int J Med Inform. 2007 Dec;76(Supplement 3):S397–S402. doi: 10.1016/j.ijmedinf.2007.04.012. [DOI] [PubMed] [Google Scholar]

- 27.Horsky J, McColgan K, Pang J, Melnikas A, Linder J, Schnipper J, Middleton B. Complementary methods of system usability evaluation: surveys and observations during software design and development cycles. J Biomed Inform. 2010 Oct;43(5):782–90. doi: 10.1016/j.jbi.2010.05.010. [DOI] [PubMed] [Google Scholar]

- 28.Lewis J. IBM computer usability satisfaction questionnaires: psychometric evaluation and instructions for use. Int J Hum Comput Interact. 1995 Jan;7(1):57–78. [Google Scholar]

- 29.Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009 Apr;42(2):377–81. doi: 10.1016/j.jbi.2008.08.010. http://linkinghub.elsevier.com/retrieve/pii/S1532-0464(08)00122-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.R Core Team. R-project. Vienna, Austria: 2016. [2017-04-27]. R: A language and environment for statistical computing https://www.r-project.org/ [Google Scholar]

- 31.Lyell D, Coiera E. Automation bias and verification complexity: a systematic review. J Am Med Inform Assoc. 2017 Mar 01;24(2):423–31. doi: 10.1093/jamia/ocw105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Mitchell WA. An automated APACHE II scoring system. Intensive Care Nurs. 1987;3(1):14–8. doi: 10.1016/0266-612x(87)90005-8. [DOI] [PubMed] [Google Scholar]

- 33.Junger A, Böttger S, Engel J, Benson M, Michel A, Röhrig R, Jost A, Hempelmann G. Automatic calculation of a modified APACHE II score using a patient data management system (PDMS). Int J Med Inform. 2002 Jun;65(2):145–57. doi: 10.1016/s1386-5056(02)00014-x. [DOI] [PubMed] [Google Scholar]

- 34.Chandra S, Kashyap R, Trillo-Alvarez CA, Tsapenko M, Yilmaz M, Hanson AC, Pickering BW, Gajic O, Herasevich V. Mapping physicians' admission diagnoses to structured concepts towards fully automatic calculation of acute physiology and chronic health evaluation score. BMJ Open. 2011;1(2):e000216. doi: 10.1136/bmjopen-2011-000216. http://bmjopen.bmj.com/cgi/pmidlookup?view=long&pmid=22102639. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Navar-Boggan AM, Rymer JA, Piccini JP, Shatila W, Ring L, Stafford JA, Al-Khatib SM, Peterson ED. Accuracy and validation of an automated electronic algorithm to identify patients with atrial fibrillation at risk for stroke. Am Heart J. 2015 Jan;169(1):39–44.e2. doi: 10.1016/j.ahj.2014.09.014. [DOI] [PubMed] [Google Scholar]

- 36.Singh B, Singh A, Ahmed A, Wilson GA, Pickering BW, Herasevich V, Gajic O, Li G. Derivation and validation of automated electronic search strategies to extract Charlson comorbidities from electronic medical records. Mayo Clin Proc. 2012 Sep;87(9):817–24. doi: 10.1016/j.mayocp.2012.04.015. http://europepmc.org/abstract/MED/22958988. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Umscheid CA, Betesh J, VanZandbergen C, Hanish A, Tait G, Mikkelsen ME, French B, Fuchs BD. Development, implementation, and impact of an automated early warning and response system for sepsis. J Hosp Med. 2015 Jan;10(1):26–31. doi: 10.1002/jhm.2259. http://europepmc.org/abstract/MED/25263548. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Cabana MD, Rand CS, Powe NR, Wu AW, Wilson MH, Abboud PA, Rubin HR. Why don't physicians follow clinical practice guidelines? JAMA. 1999 Oct 20;282(15):1458–65. doi: 10.1001/jama.282.15.1458. [DOI] [PubMed] [Google Scholar]

- 39.Trafton JA, Martins SB, Michel MC, Wang D, Tu SW, Clark DJ, Elliott J, Vucic B, Balt S, Clark ME, Sintek CD, Rosenberg J, Daniels D, Goldstein MK. Designing an automated clinical decision support system to match clinical practice guidelines for opioid therapy for chronic pain. Implement Sci. 2010 Apr 12;5:26. doi: 10.1186/1748-5908-5-26. https://implementationscience.biomedcentral.com/articles/10.1186/1748-5908-5-26.

- 40.de FJ, Gokalp H, Fursse J, Sharma U, Clarke M. Designing effective visualizations of habits data to aid clinical decision making. BMC Med Inform Decis Mak. 2014 Nov 30;14:102. doi: 10.1186/s12911-014-0102-x. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-014-0102-x.

- 41.Xie M, Weinger MB, Gregg WM, Johnson KB. Presenting multiple drug alerts in an ambulatory electronic prescribing system: a usability study of novel prototypes. Appl Clin Inform. 2014;5(2):334–48. doi: 10.4338/ACI-2013-10-RA-0092. http://europepmc.org/abstract/MED/25024753.

- 42.Mishuris RG, Yoder J, Wilson D, Mann D. Integrating data from an online diabetes prevention program into an electronic health record and clinical workflow, a design phase usability study. BMC Med Inform Decis Mak. 2016 Jul 11;16:88. doi: 10.1186/s12911-016-0328-x. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-016-0328-x.

- 43.Dellinger R, Levy M, Rhodes A, Annane D, Gerlach H, Opal S, Sevransky JE, Sprung CL, Douglas IS, Jaeschke R, Osborn TM, Nunnally ME, Townsend SR, Reinhart K, Kleinpell RM, Angus DC, Deutschman CS, Machado FR, Rubenfeld GD, Webb S, Beale RJ, Vincent J-L, Moreno R, Surviving Sepsis Campaign Guidelines Committee including the Pediatric Subgroup. Surviving sepsis campaign: international guidelines for management of severe sepsis and septic shock: 2012. Crit Care Med. 2013 Feb;41(2):580–637. doi: 10.1097/CCM.0b013e31827e83af.

- 44.Herasevich V, Afessa B, Chute CG, Gajic O. Designing and testing computer based screening engine for severe sepsis/septic shock. AMIA Annu Symp Proc. 2008 Nov 06;:966.

- 45.Despins LA. Automated detection of sepsis using electronic medical record data: a systematic review. J Healthc Qual. 2016 Sep 13;:1–12. doi: 10.1097/JHQ.0000000000000066.

- 46.Harrison AM, Thongprayoon C, Kashyap R, Chute CG, Gajic O, Pickering BW, Herasevich V. Developing the surveillance algorithm for detection of failure to recognize and treat severe sepsis. Mayo Clin Proc. 2015 Feb;90(2):166–75. doi: 10.1016/j.mayocp.2014.11.014.

- 47.Calvert JS, Price DA, Chettipally UK, Barton CW, Feldman MD, Hoffman JL, Jay M, Das R. A computational approach to early sepsis detection. Comput Biol Med. 2016 Jul 1;74:69–73. doi: 10.1016/j.compbiomed.2016.05.003.

- 48.Moorman JR, Rusin CE, Lee H, Guin LE, Clark MT, Delos JB, Kattwinkel J, Lake DE. Predictive monitoring for early detection of subacute potentially catastrophic illnesses in critical care. Conf Proc IEEE Eng Med Biol Soc. 2011;2011:5515–8. doi: 10.1109/IEMBS.2011.6091407.

- 49.Raith E, Udy A, Bailey M, McGloughlin S, MacIsaac C, Bellomo R. Prognostic accuracy of the SOFA Score, SIRS criteria, and qSOFA score for in-hospital mortality among adults with suspected infection admitted to the intensive care unit. JAMA. 2017;317(3):290–300. doi: 10.1001/jama.2016.20328.

- 50.Tu JV. Advantages and disadvantages of using artificial neural networks versus logistic regression for predicting medical outcomes. J Clin Epidemiol. 1996 Nov;49(11):1225–31. doi: 10.1016/s0895-4356(96)00002-9.

- 51.Mandel JC, Kreda DA, Mandl KD, Kohane IS, Ramoni RB. SMART on FHIR: a standards-based, interoperable apps platform for electronic health records. J Am Med Inform Assoc. 2016 Feb 17;23(5):899–908. doi: 10.1093/jamia/ocv189. http://jamia.oxfordjournals.org/cgi/pmidlookup?view=long&pmid=26911829.

- 52.Balas EA, Boren SA. Managing clinical knowledge for health care improvement. Yearb Med Inform. 2000;(1):65–70.

- 53.Mandl K, Gottlieb D, Mandel J, Schwertner N, Pfiffner P, Alterovitz G. SMART app gallery. SMART Health IT Project. 2015. [accessed 2017-04-26]. https://gallery.smarthealthit.org.