Abstract

Background

Memory and attention are two cognitive domains pivotal for the performance of instrumental activities of daily living (IADLs). The assessment of these functions is still widely carried out with pencil-and-paper tests, which lack ecological validity. Evaluating cognitive functions such as memory and attention while patients perform IADLs should improve the ecological validity of the assessment process.

Objective

The objective of this study is to establish normative data from virtual reality (VR) IADLs designed to activate memory and attention functions.

Methods

A total of 243 non-clinical participants completed a paper-and-pencil Mini-Mental State Examination (MMSE) and performed 3 VR activities: an art gallery visual matching task, a supermarket shopping task, and a memory fruit matching game. The data (execution times and errors, plus money spent in the supermarket activity) were automatically recorded by the app.

Results

Outcomes were analyzed with non-parametric statistics because the distributions were not normal. Age, academic qualifications, and computer experience all had significant effects on several measures. Normative values were defined for different levels of these variables.

Conclusions

Age, academic qualifications, and computer experience should be taken into account while using our VR-based platform for cognitive assessment purposes.

Keywords: Systemic Lisbon Battery, attention, memory, cognitive assessment, virtual reality

Introduction

Attention and memory are among the cognitive functions most commonly affected by acquired brain injuries [1]. Attention refers to the process of selecting a specific stimulus from the physical environment (external stimuli) or from the body (internal stimuli) [2]. In addition to selection, this ability also depends on processes of orientation and alertness [3]. Disruption of these abilities is associated with an inability to process information automatically: tasks that are usually automatic (eg, reading) become more difficult for patients with brain injuries and require a great deal of effort and concentration [4,5]. The neural basis of attention involves several brain areas, from midbrain structures [6] to parietal regions and the anterior prefrontal cortex [7,8]. The ability to perform everyday tasks may also depend on memory functions [9], which are particularly affected by prefrontal brain lesions [10]. Memory can be defined as the ability to encode and/or recall a specific stimulus or situation. Different theoretical and clinical models conceptualize memory in terms of the type of information involved (declarative vs non-declarative) or its temporal dimension (retrospective vs prospective). One model, for example, suggests that information can be manipulated in memory before it is used for a specific purpose [11]. This ability has been termed working memory, which consists of multiple subsystems that store different kinds of sensory information for a limited time (short-term memory) and manipulate it [12]. However, the roles of attention and memory in everyday functioning go beyond these specific processes, as they are related to a wider set of cognitive functions called executive functions [13].

Preparing a meal accurately, which requires maintaining an adequate level of attention to the task, or remembering what to buy at the grocery store, are examples of attention and memory abilities applied to different domains of instrumental activities of daily living (IADLs). These abilities are commonly compromised, to different extents, by traumatic brain injury [14], stroke [15], or even alcohol abuse [16].

The assessment of attention and memory functions is traditionally made with paper-and-pencil tests. Cancellation tests for visual stimuli are usually the preferred option for assessing attention, whereas the Wechsler Memory Scale is one of the most widely used tests for memory assessment [17,18]. The Wechsler Memory Scale assesses memory functions across different domains through seven subtests: (1) spatial addition, (2) symbol span, (3) design memory, (4) general cognitive screener, (5) logical memory, (6) verbal paired associates, and (7) visual reproduction. In addition to the partial score on each subtest, a total score reflects general memory ability. One shortcoming of such tests, however, is that they do not evaluate patients while they are performing IADLs, so their ecological validity is uncertain [19-21]. The optimal way to avoid this pitfall is to evaluate cognitive performance based on IADLs. Although pervasive technologies that collect behavioral and physiological data are already available for this purpose [22], the relationship between the collected data and impairment in a specific domain, such as memory or attention, has not yet been established.

An emerging alternative to traditional tests is to design and develop virtual reality (VR) worlds that mimic real IADLs and record participants' performance while they execute specific tasks involving attention and memory functions. One such platform is the basis for the Systemic Lisbon Battery (SLB) [23]. It consists of a 3D mock-up of a small town in which participants are free to walk around and engage in several IADLs and ordinary digital games. While these activities take place, the system records several performance indicators for each task, such as errors and execution times. For the SLB to serve as an assessment tool, its activities must be shown to be valid indicators of functioning in the cognitive dimensions they were designed to assess. For the virtual kitchen, one of the SLB activities, this has already been established [24] using the Virtual Kitchen Test (VKT). The VKT was designed to evaluate frontal brain functioning and was pre-validated in a controlled study with a clinical sample of individuals with alcohol dependence syndrome and cognitive impairments. This test was developed according to the rationale of the Trail Making Test [25], a well-established test of frontal functions. The results showed that VKT scores were associated with participants' performance on traditional neuropsychological tests and discriminated between the cognitive performance of patients and controls.

Another recent study focused on defining normative data from which clinical deviations could be identified for each IADL activity and/or task in the SLB [23]. In that study, 59 healthy students performed the SLB exercises that address attention and memory functions. Its results suggested that this approach may be an alternative to traditional neuropsychological tests, but broader samples were needed to establish normative performance values with greater confidence. Here, our aim was to estimate normative scores for the SLB from a larger, non-clinical sample drawn from the general population, and to test the concurrent validity of the SLB subscales against conventional neuropsychological tests.

Methods

Participants

We used a snowball method to recruit participants. Master's students enrolled in a course on cyberpsychology were specially trained for this study and recruited family members (ie, siblings, parents, and grandparents) to participate. This ensured some demographic diversity, with roughly three different cohorts of adults of both genders. Family members were invited to take part in a study designed to evaluate attention and memory performance while executing VR-based daily life activities. Individuals were not included if they were younger than 18 years of age or had a history of psychiatric disorders, perceptual or motor disabilities, or substance abuse. In addition, participants were excluded if they did not have regular access to the World Wide Web and/or scored below the cutoff value for their level of education on the Mini-Mental State Examination (MMSE) [26], which was administered before the main tasks. In practice, all participants scored above these cutoffs.

A final sample of 243 participants with a mean age of 37 years (SD 15.87), 39.5% male (96/243), and 60.5% female (147/243), was included in the study. Of the participants, 69.5% (169/243) had previous experience in using a personal computer for gaming purposes. Formal education ranged from 9 years to post-graduate level, with completed secondary-level studies (27.2%, 66/243) and ongoing university studies (23.0%, 55/243) the most frequent responses. A characterization of the participant sample is detailed in Table 1.

Table 1.

Sample characterization (N=243).

| Characteristic | n (%) |
|---|---|
| Gender | |
| Male | 96 (39.5) |
| Female | 147 (60.5) |
| Employment situation | |
| Student | 71 (30.1) |
| Working student | 1 (0.4) |
| Worker | 144 (61.0) |
| Unemployed | 9 (3.8) |
| Retired | 11 (4.7) |
| Computer experience | |
| None | 22 (9.1) |
| Basic | 88 (36.2) |
| Intermediate | 116 (47.7) |
| Expert | 17 (7.0) |
| Video game experience | |
| Never | 107 (44.8) |
| Occasionally | 88 (36.8) |
| Frequently | 30 (12.6) |
| More than 50% of days | 9 (3.8) |
| Every day | 5 (2.1) |
| Formal education | |
| Basic studies | 43 (18.0) |
| Incomplete high school | 32 (13.4) |
| High school | 65 (27.2) |
| University studies | 55 (23.0) |
| University degree | 35 (14.6) |
| Graduate studies | 9 (3.8) |
| Age, years | |
| Mean (SD) | 36.99 (15.85) |
| Minimum | 18 |
| Maximum | 86 |
| MMSE score | |
| Mean (SD) | 28.09 (3.09) |
| Minimum | 22 |
| Maximum | 30 |

Study Procedure

Potential participants first completed a screening questionnaire. Those who did not fulfill all the inclusion criteria were thanked and did not take part in the study. Participants fulfilling the inclusion criteria were given the MMSE, but their MMSE results were analyzed only after they had completed the main tasks. Both the MMSE and the screening protocol used to assess the other criteria were administered on paper. Interviewers then ran Unity Web Player and asked participants to sign in to the platform with a pre-established code so that we could, if needed, identify kinship relations between participants. Before performing the main tasks, participants carried out a familiarization task to ensure that they had the skills needed to navigate and interact in a mediated 3D environment; this task did not include any of the tasks on which they were assessed.

The main tasks were carried out on the SLB [23], a VR platform for the assessment of cognitive impairments based on serious-games principles and developed in Unity 2.5. It consists of a small-city scenario complete with streets, buildings, and the common infrastructure (eg, shops) that people use in their daily lives. The SLB is freely available online [27]. To make the environment more immersive, the SLB scenario is populated by computer-controlled non-playable characters (NPCs) that roam the city. Besides the house, which is the spawn point (the player's starting point in the scenario) and where users can engage in most home-based daily activities (ie, personal hygiene, dressing, meals), this "city" has a supermarket, an art gallery, a pharmacy, and a casino. Assessment tasks are performed in all these settings. The SLB tasks range from memory tasks to complex procedures, and the platform is under continuous development to optimize and expand the set of tasks included.

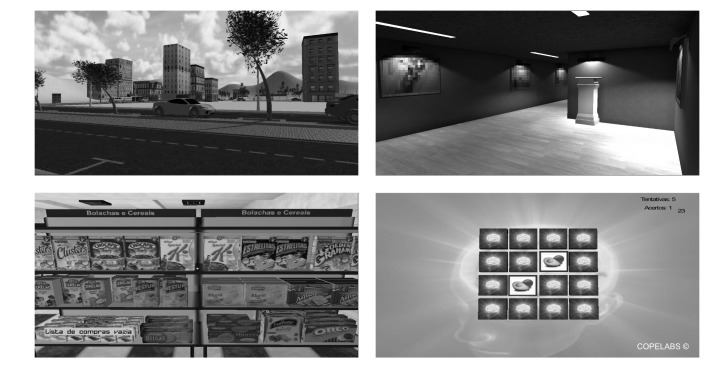

In this study, participants performed three different tasks. The first (fruit matching) is a short-term memory task: a tile-matching game in which participants had to complete 8 trials of matching pairs of fruits. The second (supermarket) is a working memory and attention task set in a supermarket, where participants were instructed to buy 7 products (a bottle of milk, a pack of sugar, a bottle of olive oil, a pack of crackers, a bottle of soda, a bottle of beer, and a can of tuna) at the lowest possible cost (€25 maximum) and in the shortest possible time. The third (art gallery) is an attention task set in an art gallery, in which participants had to place the missing pieces of three different paintings in their correct positions. These three tasks are illustrated in Figure 1.

Figure 1.

Systemic Lisbon Battery (SLB) subtests. City spawn point (top left); gallery (top right); supermarket (bottom left); and memory game (bottom right).

The avatar was spawned in the bedroom, where the participant had to complete the first task. The other tasks were performed according to a protocol displayed on screen just before sign-in, which listed all activities together with directions for moving around the virtual city. For each task, performance indicators were automatically recorded per participant code in a text file (.txt) that was later exported to Microsoft Excel.

Outcome Measures and Statistical Analysis

Basic cognitive performance was assessed with the MMSE [26] in a validated Portuguese version [28,29]. The MMSE is a brief screening test that assesses several aspects of cognitive function; higher scores indicate better cognitive functioning. We used the cutoff values for the Portuguese population established by Guerreiro and colleagues [28], according to level of education: 22 for 0-2 years of schooling, 24 for 3-6 years, and 27 for 7 or more years.
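The education-adjusted screening rule above can be written as a small lookup. This is a minimal Python sketch; the function names `mmse_cutoff` and `passes_mmse_screen` are hypothetical and not part of the SLB or any published tool, and it assumes, as the exclusion criterion implies, that a score equal to the cutoff passes.

```python
def mmse_cutoff(years_of_schooling: int) -> int:
    """Education-adjusted MMSE cutoff for the Portuguese population.

    Values as reported in the text (Guerreiro and colleagues):
    22 for 0-2 years of schooling, 24 for 3-6 years, 27 for 7 or more years.
    """
    if years_of_schooling < 0:
        raise ValueError("years_of_schooling must be non-negative")
    if years_of_schooling <= 2:
        return 22
    if years_of_schooling <= 6:
        return 24
    return 27


def passes_mmse_screen(score: int, years_of_schooling: int) -> bool:
    """Assumption: only scores strictly BELOW the cutoff lead to exclusion."""
    return score >= mmse_cutoff(years_of_schooling)


# A score of 25 passes with 5 years of schooling (cutoff 24)
# but not with 9 years (cutoff 27).
print(passes_mmse_screen(25, 5), passes_mmse_screen(25, 9))  # True False
```

Encoding the cutoffs as explicit ranges makes the boundary cases (2 vs 3 years, 6 vs 7 years) easy to audit.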

IADL-related cognitive performance measures were the execution times and numbers of errors in the three SLB tasks (fruit matching memory task, supermarket memory and attention task, and art gallery attention task). We examined the correlations among these measures to check for overlap between them. For the supermarket task, in which participants were instructed to choose the cheapest options, we also recorded the amount of money spent on the listed products. For all measures, lower scores indicate better cognitive performance.

The main goal of this study was to establish normative values for three SLB subtests. Given the known effects of demographic variables (namely, age and education) on measures of cognitive performance, it was important to identify these effects and establish separate normative values for different levels of age and education. Because the SLB is a VR application, controlling for the effects of video game and computer experience was also necessary. Finally, we were interested in the relationships among the results of the different subtests, and between the subtest results and the MMSE.
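One common way to express a new participant's score against stratified norms is a percentile rank within the matching reference group. The text does not specify how the SLB norms are to be applied, so the following Python sketch is an assumption: `percentile_rank`, `stratified_percentile`, and the toy `norms` values are hypothetical and are not data from this study. Because lower SLB scores indicate better performance, a low percentile rank here means a better-than-typical result.

```python
from bisect import bisect_left, bisect_right


def percentile_rank(score: float, reference: list[float]) -> float:
    """Mid-rank percentile of `score` in a reference sample (0-100).

    Reference values below the score count fully; values equal to it count half.
    """
    if not reference:
        raise ValueError("reference sample is empty")
    ordered = sorted(reference)
    below = bisect_left(ordered, score)
    ties = bisect_right(ordered, score) - below
    return 100.0 * (below + 0.5 * ties) / len(ordered)


def stratified_percentile(score: float, stratum: str,
                          norms: dict[str, list[float]]) -> float:
    """Rank a score only against the reference group for its stratum."""
    return percentile_rank(score, norms[stratum])


# Toy execution-time values (hypothetical, not study data) for two age strata.
norms = {"<23": [30.0, 35.0, 40.0, 45.0], ">49": [35.0, 40.0, 50.0, 60.0]}
print(stratified_percentile(40.0, "<23", norms))  # 62.5
print(stratified_percentile(40.0, ">49", norms))  # 37.5
```

The same raw value ranks as slower than typical in the younger toy stratum but faster than typical in the older one, which illustrates why norms stratified by demographic variables can change how an individual score is interpreted.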

Demographic effects on performance were tested with non-parametric statistical analyses (Mann-Whitney and Kruskal-Wallis tests for independent samples), since the distributions of the performance measures did not pass the Kolmogorov-Smirnov test for normality. For the same reason, we computed correlations using Spearman's rank order correlation (ρ).
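Spearman's ρ is the Pearson correlation computed on ranks, with tied values receiving the average of the ranks they occupy. The analyses here were run in SPSS; the following standard-library Python sketch (function names are illustrative) only shows what the statistic measures and does not compute P values.

```python
from math import sqrt


def average_ranks(values):
    """Ranks 1..n; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # i and j are 0-based positions
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples of size >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant sample has no rank correlation")
    return sxy / sqrt(sxx * syy)


print(average_ranks([10, 20, 20, 30]))  # [1.0, 2.5, 2.5, 4.0]
```

Because only ranks enter the computation, any monotone relationship (for example, execution time rising steadily with age) yields ρ=1 regardless of its shape, which is why this coefficient suits skewed measures such as execution times.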

Inferential statistics were carried out using IBM SPSS v.20 (IBM Corp, USA). For every analysis, we used a significance level of .05 (corresponding to 95% CIs), so results are reported as significant when the P value is lower than .05. Although this was not an experimental study, its main conclusions were based on inferential statistics, which required an a priori power analysis to estimate the sample size needed. This analysis was conducted in G*Power (v3.1) with Cohen's r effect size for non-parametric Spearman's r tests [30,31]. Given an expected effect size of .30 (medium), a .05 significance level (alpha) in two-tailed testing, and a power (1-beta) of .80, the required sample size for this study was 167 participants.

Results

Means (SDs) of errors and execution times for the three subtests are reported in Table 2, together with descriptive statistics for money spent in subtest 2 (supermarket) and 95% CIs for each measure. The correlations between execution times on the three tests were all positive and moderate: gallery–memory game, rₛ(128)=.371, P<.001; gallery–supermarket, rₛ(127)=.312, P=.001; memory game–supermarket, rₛ(116)=.360, P<.001. This suggests that execution times on the different SLB tasks reflect interrelated cognitive constructs. Within each subtest, the correlations between execution times and errors were also all positive and moderate, as expected: gallery, rₛ(125)=.300, P=.001; memory game, rₛ(103)=.341, P<.001; and supermarket, rₛ(129)=.510, P<.001. However, none of the correlations between errors across subtests were significant, a result that requires discussion. In addition, the expected negative correlations between task scores and MMSE scores were all either weak or non-significant.

Table 2.

Descriptive data on virtual reality-based subtests (N=243).

| Measure | Mean (SD) | 95% CI, lower bound | 95% CI, upper bound |
|---|---|---|---|
| Memory game execution time | 40.66 (8.94) | 37.29 | 40.91 |
| Memory game errorsᵃ | 7.85 (2.47) | 7.43 | 8.49 |
| Supermarket execution time | 435.98 (202.37) | 394.31 | 477.66 |
| Supermarket errors | 7.19 (9.59) | 5.22 | 9.17 |
| Supermarket money spentᵇ | 10.56 (3.88) | 9.76 | 11.36 |
| Gallery execution time | 155.55 (105.12) | 119.79 | 155.20 |
| Gallery errors | 10.64 (18.47) | 5.94 | 13.39 |

ᵃNumber of incorrect hits.

ᵇMoney spent in euros to purchase the pre-defined list of products.

To test whether socio-demographic characteristics and computer and video game experience affected performance on this set of SLB subtests, and thus whether separate normative values should be established for different levels of each variable, we carried out a series of tests. Because most outcome variables were non-normally distributed, we used the Mann-Whitney test for comparisons of two groups and the Kruskal-Wallis test for comparisons of more than two groups.
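For k groups, the Kruskal-Wallis statistic H is computed from the pooled ranks and, for reasonably large groups, compared with a χ² distribution with k-1 degrees of freedom, which is why the Kruskal-Wallis results in this section carry 3 degrees of freedom for four groups and 5 for six groups. The following Python sketch applies the usual tie correction; the function name is illustrative, and the hard-coded table holds standard upper .05 critical values of χ² rather than computing exact P values.

```python
def _average_ranks(values):
    """Ranks 1..n over the pooled sample; ties get the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Return (H, df): tie-corrected Kruskal-Wallis H and df = k - 1."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = _average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n) over runs of t ties.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all values are tied")
    return h / correction, len(groups) - 1


# Upper .05 critical values of the chi-square distribution, by df.
CHI2_CRIT_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488, 5: 11.070}

print(kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9]))  # about (7.2, 2)
```

As a consistency check against the reported statistics, χ²(3)=12.485 for memory game execution time by computer experience exceeds the df=3 critical value of 7.815, whereas χ²(3)=7.721 for memory game execution time by age cohort falls just below it, in line with the P=.052 reported for that effect.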

Computer experience (Table 3) had significant effects on execution times in both the fruit matching memory task (χ²(3)=12.485, P=.006) and the art gallery attention task (χ²(3)=9.351, P=.025). In the memory task, specialists performed significantly faster than participants with no experience (P=.008), basic experience (P=.001), and intermediate experience (P=.012). In the art gallery attention task, specialists also performed significantly faster than participants with no experience (P=.012), basic experience (P=.006), and intermediate experience (P=.036). Participants with specialist-level computer experience were typically much faster than the other participants, suggesting that computer experience should be taken into account when assessing performance based on execution times.
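The text does not state which post-hoc procedure produced the pairwise P values; pairwise Mann-Whitney tests are one common choice after a significant Kruskal-Wallis test. The following Python sketch, with illustrative function names, counts U directly from all cross-group pairs and uses a large-sample normal approximation without tie or continuity corrections, so its P values would not necessarily match SPSS output; adjustment for multiple comparisons is also left out.

```python
from math import erfc, sqrt


def mann_whitney_u(x, y):
    """Return (U_x, U_y): pairs where the x value is larger, ties counting 1/2."""
    u_x = 0.0
    for a in x:
        for b in y:
            if a > b:
                u_x += 1.0
            elif a == b:
                u_x += 0.5
    return u_x, len(x) * len(y) - u_x


def mann_whitney_p(x, y):
    """Two-sided P from the normal approximation (no tie/continuity correction)."""
    u_x, _ = mann_whitney_u(x, y)
    n1, n2 = len(x), len(y)
    mean_u = n1 * n2 / 2
    sd_u = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u_x - mean_u) / sd_u
    return erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))


# Toy execution times (hypothetical): a fast group vs a slow group.
print(mann_whitney_u([20, 22, 25], [40, 41, 45]))  # (0.0, 9.0)
```

The pair-counting form is quadratic in group size but makes the meaning of U explicit: it is the number of cross-group comparisons one group "wins".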

Table 3.

Subtests results by computer experience.

Values are mean ranks by level of computer experience.

| Measure | None | Basic | Intermediate | Specialist | χ²ᵃ |
|---|---|---|---|---|---|
| Memory game execution time | 72.68 | 74.96 | 61.98 | 24.00 | 12.485ᵇ |
| Memory game errorsᶜ | 38.00 | 52.08 | 60.78 | 45.89 | 5.581 |
| Supermarket execution time | 84.40 | 68.25 | 60.40 | 54.50 | 4.619 |
| Supermarket errors | 75.40 | 66.30 | 60.87 | 74.69 | 2.132 |
| Supermarket money spentᵈ | 81.75 | 61.91 | 63.53 | 74.75 | 3.067 |
| Gallery execution time | 75.90 | 71.31 | 60.85 | 29.86 | 9.351ᵉ |
| Gallery errors | 82.70 | 62.49 | 61.86 | 45.67 | 4.476 |

ᵃChi-square of the Kruskal-Wallis test.

ᵇP<.01.

ᶜNumber of incorrect hits.

ᵈMoney spent in euros to purchase the pre-defined list of products.

ᵉP<.05.

As for academic qualifications, the tests showed one significant effect, on the number of errors in the art gallery attention task (χ²(5)=22.024, P=.001). The significant differences were between participants with only basic studies, on the one hand, and, on the other, those who had completed high school (P<.001), had attended or were attending university (P=.013), or had university degrees (P=.006) (Table 4). This task thus seems to tap a cognitive skill acquired in high school. There were no significant effects of gender, video game experience, TV viewing hours per week, VR knowledge, 3D experience, or 3D knowledge on any of the cognitive performance indicators.

Table 4.

Subtests results by academic qualifications.

Values are mean ranks by level of academic qualification.

| Measure | Basic studies (9th grade) | Incomplete high school | High school | University attendance | University degree | Graduate studies | χ²ᵃ |
|---|---|---|---|---|---|---|---|
| Memory game execution time | 67.23 | 75.33 | 67.09 | 59.04 | 57.65 | 44.00 | 4.774 |
| Memory game errorsᵇ | 42.36 | 64.97 | 60.54 | 50.39 | 51.41 | 42.17 | 7.694 |
| Supermarket execution time | 76.54 | 55.82 | 57.88 | 55.09 | 76.90 | 50.33 | 9.731 |
| Supermarket errors | 76.48 | 56.94 | 59.97 | 57.61 | 64.62 | 69.58 | 5.130 |
| Supermarket money spentᶜ | 77.71 | 67.44 | 59.97 | 55.13 | 65.29 | 41.25 | 8.269 |
| Gallery execution time | 67.32 | 68.33 | 59.21 | 60.95 | 58.06 | 69.83 | 1.761 |
| Gallery errors | 84.00 | 73.88 | 46.35 | 54.00 | 54.53 | 52.00 | 22.024ᵈ |

ᵃChi-square of the Kruskal-Wallis test.

ᵇNumber of incorrect hits.

ᶜMoney spent in euros to purchase the pre-defined list of products.

ᵈP<.01.

Age was significantly, albeit only weakly or at best moderately, associated with reduced performance as measured by execution times on the different tasks (art gallery attention task, rₛ(127)=.312, P<.001; fruit matching memory task, rₛ(139)=.172, P=.049; supermarket memory and attention task, rₛ(127)=.184, P<.001), as well as by the MMSE (rₛ(242)=-.147, P=.022). We tested the effects of age cohort on performance in the SLB subtests (execution times and errors for the gallery and memory game tasks) by dividing the sample into four cohorts according to age quartiles (Table 5). Results indicate significant effects for both gallery execution time (χ²(3)=14.733, P=.002) and gallery errors (χ²(3)=10.400, P=.015): older participants took longer to complete the task and made more errors. Post-hoc comparisons showed significant differences in gallery execution time between the <23 years group and both the 35-48 and >49 groups. For gallery errors, the most pronounced differences were between the 23-34 and >49 groups. The age effect on memory task execution time fell just short of significance (P=.052), so post-hoc differences were not analyzed.
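Splitting a sample into four cohorts at its age quartiles can be sketched with Python's standard `statistics.quantiles`. This is an illustration only: quantile conventions differ between packages (the default here is the "exclusive" method), so cut points computed this way may not reproduce the reported <23, 23-34, 35-48, and >49 boundaries exactly, and the rule for ages that fall exactly on a cut point is an assumption. The function name and the ages below are hypothetical.

```python
from bisect import bisect_right
from statistics import quantiles


def quartile_cohorts(ages):
    """Return (cut_points, cohort_index) with cohorts 0-3 from youngest to oldest.

    Assumption: ages equal to a cut point go to the higher cohort (bisect_right).
    """
    cuts = quantiles(ages, n=4)  # three cut points: Q1, median, Q3
    return cuts, [bisect_right(cuts, age) for age in ages]


# Toy ages (hypothetical, not study data).
cuts, cohorts = quartile_cohorts([18, 21, 25, 30, 38, 44, 52, 60])
print(cuts)     # [22.0, 34.0, 50.0]
print(cohorts)  # [0, 0, 1, 1, 2, 2, 3, 3]
```

Deriving the cut points from the sample itself keeps the four cohorts roughly equal in size, which helps the group comparisons retain power in each cohort.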

Table 5.

Kruskal-Wallis non-parametric comparison of Systemic Lisbon Battery (SLB) performance by age cohorts.

Values are mean (SD) by age cohort, in years.

| Measure | <23 | 23-34 | 35-48 | >49 | χ² |
|---|---|---|---|---|---|
| Gallery execution time | 105.31 (57.36) | 135.98 (69.96) | 173.70 (130.58) | 186.08 (103.22) | 14.733ᵃ |
| Gallery errorsᵇ | 5.69 (9.20) | 3.22 (3.93) | 9.91 (16.50) | 19.46 (26.45) | 10.400ᶜ |
| Memory game execution time | 36.85 (9.19) | 43.01 (7.43) | 42.45 (8.19) | 40.58 (9.51) | 7.721 |
| Memory game errors | 8.21 (2.49) | 7.86 (2.11) | 8.06 (2.46) | 7.33 (2.62) | .544 |

ᵃP<.01.

ᵇNumber of incorrect hits.

ᶜP<.05.

Discussion

Principal Findings

Neuropsychological research has exposed the limitations of traditional paper-and-pencil tests for assessing cognitive functioning. A major criticism is that these tests do not replicate the conditions under which cognitive functions are used in activities of daily living. A more ecologically valid emerging alternative is to use VR-based applications to test executive functions and related cognitive functions such as memory and attention. One of these applications is the SLB [23], a free online application and cognitive test that provides a highly immersive and motivating first-person experience mimicking IADLs.

The main objective of this study was to identify normative values for this application to be used as a baseline in clinical studies. Our results indicate that performance on VR-based IADLs is better among participants with more computer experience (execution times) and more education (errors on the art gallery task), and worse among older participants. We therefore propose that normative values for VR-based IADLs be established separately for different levels of each of these variables. Conversely, we found no effects of gender, which is reassuring: it indicates that the SLB has no gender bias and that normative values do not need to be adjusted for gender. In addition, the moderate positive correlations between execution times suggest that the different subtests tap different but associated cognitive functions, as expected. The same pattern was not found for errors, probably because of floor effects: in a non-clinical sample, error counts are all relatively low. However, errors on each task are correlated with the respective execution times, which indicates that they are not random. Correlations between task performance and the MMSE are mostly non-significant, probably because of a ceiling effect on the MMSE itself, which is also typical of non-clinical samples.

Taking these differences into account, these results suggest that VR-based assessments of cognitive functions using tasks that reproduce activities of daily living, such as the SLB, may be useful for assessing cognitive functioning during the execution of such activities. However, a larger study comparing non-clinical with clinical samples, and evaluating the comparative performance and within-subject correlations between SLB results and traditional neuropsychological tests, is still needed. Moreover, with the advent of pervasive technology through mobile devices, these applications can be made available anytime, anywhere, and to everyone, which will make them easier to use and more accessible than current conventional treatments. It is therefore urgent to test their validity and establish normative data for varied populations.

Limitations

The data were recorded on a variety of personal computers, and several volunteers took part in data collection. Thus, homogeneity of conditions could not be guaranteed, particularly regarding the influence of screen types, interfaces (eg, mouse), and the person running the tests. The sample of participants over 65 years was too small to draw firm conclusions for that age group. In addition, we did not assess whether prior training affects performance, as all participants underwent training before the assessment. Finally, assessing a non-clinical sample probably explains the floor and ceiling effects in errors and MMSE scores, although this is an essential step in validating any assessment and/or training battery intended for both clinical and general populations.

Conclusions

The assessment of cognitive functions is traditionally made with non-ecological pencil-and-paper tests. However, interactive and immersive platforms, such as VR apps that mimic real-life activities, are increasingly available. Such options require normative data from healthy populations, which can then be used to assess cognitive problems in (potentially) clinical populations. This study addresses this need by identifying normal levels of cognitive performance in a non-clinical sample, using assessment measures based on VR versions of IADLs chosen for their demands on memory and attention. Age, level of education, and computer experience all appear to contribute to performance with this tool, which implies that normative values must be adjusted for all these variables.

Acknowledgments

The study was financed by COPELABS via the Foundation for Science and Technology – FCT of Portugal (PEst-OE/PSI/UI0700/2011). The study and the role of each author were approved by the ethics commission of COPELABS.

Abbreviations

- IADLs

instrumental activities of daily living

- MMSE

Mini-Mental State Examination

- SLB

Systemic Lisbon Battery

- VKT

Virtual Kitchen Test

- VR

virtual reality

Footnotes

Authors' Contributions: Pedro Gamito designed the study and wrote the main part of the manuscript. Diogo Morais and Sara Correia carried out statistical procedures and analyses. Jorge Oliveira wrote the literature review. Paulo Lopes prepared the evaluation protocol. Felipe Picareli and Marcelo Matias developed the 3D app. Rodrigo Brito revised the text and the presentation of results.

Conflicts of Interest: The authors own and developed the SLB app, but the app is freely available and the authors will not profit commercially from it.

References

- 1.Yasuda S, Wehman P, Targett P, Cifu D, West M. Return to work for persons with traumatic brain injury. Am J Phys Med Rehabil. 2001 Nov;80(11):852–64. doi: 10.1097/00002060-200111000-00011. [DOI] [PubMed] [Google Scholar]

- 2.Raz A, Buhle J. Typologies of attentional networks. Nat Rev Neurosci. 2006 May;7(5):367–79. doi: 10.1038/nrn1903. [DOI] [PubMed] [Google Scholar]

- 3.Johnson A, Proctor RW. Attention: Theory and Practice. Thousand Oaks, CA: Sage Publications; 2004. [Google Scholar]

- 4.Brooks N, McKinlay W, Symington C, Beattie A, Campsie L. Return to work within the first seven years of severe head injury. Brain Inj. 1987;1(1):5–19. doi: 10.3109/02699058709034439. [DOI] [PubMed] [Google Scholar]

- 5.Ownsworth TL, Mcfarland K. Memory remediation in long-term acquired brain injury: two approaches in diary training. Brain Inj. 1999 Aug;13(8):605–26. doi: 10.1080/026990599121340. [DOI] [PubMed] [Google Scholar]

- 6.Coull JT, Nobre AC, Frith CD. The noradrenergic alpha2 agonist clonidine modulates behavioural and neuroanatomical correlates of human attentional orienting and alerting. Cereb Cortex. 2001 Jan;11(1):73–84. doi: 10.1093/cercor/11.1.73. http://cercor.oxfordjournals.org/cgi/pmidlookup?view=long&pmid=11113036. [DOI] [PubMed] [Google Scholar]

- 7.Posner MI, Petersen SE. The attention system of the human brain. Annu Rev Neurosci. 1990;13:25–42. doi: 10.1146/annurev.ne.13.030190.000325. [DOI] [PubMed] [Google Scholar]

- 8.Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002 Mar;3(3):201–15. doi: 10.1038/nrn755. [DOI] [PubMed] [Google Scholar]

- 9.Bottari C, Dassa C, Rainville C, Dutil E. The criterion-related validity of the IADL Profile with measures of executive functions, indices of trauma severity and sociodemographic characteristics. Brain Inj. 2009 Apr;23(4):322–35. doi: 10.1080/02699050902788436. [DOI] [PubMed] [Google Scholar]

- 10.Cahn-Weiner DA, Farias ST, Julian L, Harvey DJ, Kramer JH, Reed BR, Mungas D, Wetzel M, Chui H. Cognitive and neuroimaging predictors of instrumental activities of daily living. J Int Neuropsychol Soc. 2007 Sep;13(5):747–57. doi: 10.1017/S1355617707070853. http://europepmc.org/abstract/MED/17521485. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Baddeley A. Working Memory, Thought, and Action. Oxford, UK: Oxford University Press; 2007. [Google Scholar]

- 12.Baddeley A. The episodic buffer: a new component of working memory? Trends Cogn Sci. 2000 Nov 1;4(11):417–423. doi: 10.1016/s1364-6613(00)01538-2. [DOI] [PubMed] [Google Scholar]

- 13.McCabe DP, Roediger HL, McDaniel MA, Balota DA, Hambrick DZ. The relationship between working memory capacity and executive functioning: evidence for a common executive attention construct. Neuropsychology. 2010 Mar;24(2):222–43. doi: 10.1037/a0017619. http://europepmc.org/abstract/MED/20230116. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Bottari C, Gosselin N, Guillemette M, Lamoureux J, Ptito A. Independence in managing one's finances after traumatic brain injury. Brain Inj. 2011;25(13-14):1306–17. doi: 10.3109/02699052.2011.624570.

- 15. Sadek JR, Stricker N, Adair JC, Haaland KY. Performance-based everyday functioning after stroke: relationship with IADL questionnaire and neurocognitive performance. J Int Neuropsychol Soc. 2011 Sep;17(5):832–40. doi: 10.1017/S1355617711000841.

- 16. Reid MC, Concato J, Towle VR, Williams CS, Tinetti ME. Alcohol use and functional disability among cognitively impaired adults. J Am Geriatr Soc. 1999 Jul;47(7):854–9. doi: 10.1111/j.1532-5415.1999.tb03844.x.

- 17. Lees-Haley PR. Forensic neuropsychological test usage: an empirical survey. Arch Clin Neuropsychol. 1996;11(1):45–51. doi: 10.1016/0887-6177(95)00011-9.

- 18. Camara WJ, Nathan JS, Puente AE. Psychological test usage: implications in professional psychology. Prof Psychol Res Pr. 2000;31(2):141–154. doi: 10.1037//0735-7028.31.2.141.

- 19. Chaytor N, Schmitter-Edgecombe M. The ecological validity of neuropsychological tests: a review of the literature on everyday cognitive skills. Neuropsychol Rev. 2003 Dec;13(4):181–97. doi: 10.1023/b:nerv.0000009483.91468.fb.

- 20. Spooner DM, Pachana NA. Ecological validity in neuropsychological assessment: a case for greater consideration in research with neurologically intact populations. Arch Clin Neuropsychol. 2006 May;21(4):327–37. doi: 10.1016/j.acn.2006.04.004. http://linkinghub.elsevier.com/retrieve/pii/S0887-6177(06)00052-7.

- 21. Parsons TD. Neuropsychological assessment using virtual environments: enhanced assessment technology for improved ecological validity. In: Brahnam S, Jain LC, editors. Advanced Computational Intelligence Paradigms in Healthcare 6: Virtual Reality in Psychotherapy, Rehabilitation, and Assessment. Berlin: Springer Berlin Heidelberg; 2011. pp. 271–289.

- 22. Dunton GF, Dzubur E, Kawabata K, Yanez B, Bo B, Intille S. Development of a smartphone application to measure physical activity using sensor-assisted self-report. Front Public Health. 2014;2:12. doi: 10.3389/fpubh.2014.00012.

- 23. Gamito P, Oliveira J, Pinto L, Rodelo L, Lopes P, Brito R, Morais D. Normative data for a cognitive VR rehab serious games-based approach. ACM Digital Library; PervasiveHealth '14. Proceedings of the 8th International Conference on Pervasive Computing Technologies for Healthcare; 20-23 May 2014; Oldenburg. 2014. pp. 443–446.

- 24. Gamito P, Oliveira J, Caires C, Morais D, Brito R, Lopes P, Saraiva T, Soares F, Sottomayor C, Barata A, Picareli F, Prates M, Santos C. Virtual Kitchen Test: assessing frontal lobe functions in patients with alcohol dependence syndrome. Methods Inf Med. 2015;54(2):122–6. doi: 10.3414/ME14-01-0003.

- 25. Reitan RM. Validity of the trail making test as an indicator of organic brain damage. Percept Mot Skills. 1958 Dec;8(3):271–276. doi: 10.2466/pms.1958.8.3.271.

- 26. Folstein MF, Folstein SE, McHugh PR. "Mini-mental state". A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975 Nov;12(3):189–98. doi: 10.1016/0022-3956(75)90026-6.

- 27. LabPsiCom. [2013-08-29]. http://labpsicom.ulusofona.pt.

- 28. Guerreiro M, Silva A, Botelho M, Leitão O, Castro-Caldas A, Garcia C. Adaptação à população Portuguesa na tradução do “Mini Mental State Examination” (MMSE). [Adaptation to the Portuguese population of the Mini-Mental State Examination] Revista Portuguesa de Neurologia. 1994;1:9–10.

- 29. Morgado J, Rocha C, Maruta C, Guerreiro M, Martins I. Novos valores normativos do Mini-Mental State Examination. [New normative data of the Mini-Mental State Examination] Sinapse. 2009;9(2):10–16.

- 30. Erdfelder E, Faul F, Buchner A. GPOWER: a general power analysis program. Behav Res Methods Instrum Comput. 1996 Mar;28(1):1–11. doi: 10.3758/BF03203630.

- 31. Cohen J. Statistical Power Analysis for the Behavioral Sciences. 2nd ed. Hillsdale, NJ: Lawrence Erlbaum Associates; 1988.