Abstract

Purpose:

The goal of this study was to evaluate the feasibility, reliability, and validity of a computer-based geriatric assessment via two methods of electronic data capture (SupportScreen and REDCap) compared with paper-and-pencil data capture among older adults with cancer.

Methods:

Eligible patients were ≥ 65 years old, had a cancer diagnosis, and were fluent in English. Patients were randomly assigned to one of four arms, in which they completed the geriatric assessment twice: (1) REDCap and paper and pencil in sessions 1 and 2; (2) REDCap in both sessions; (3) SupportScreen and paper and pencil in sessions 1 and 2; and (4) SupportScreen in both sessions. The feasibility, reliability, and validity of the computer-based geriatric assessment compared with paper and pencil were evaluated.

Results:

The median age of participants (N = 100) was 71 years (range, 65 to 91 years) and the diagnosis was solid tumor (82%) or hematologic malignancy (18%). For session 1, REDCap took significantly longer to complete than paper and pencil (median, 21 minutes [range, 11 to 44 minutes] v median, 15 minutes [range, 9 to 29 minutes], P < .01) or SupportScreen (median, 16 minutes [range, 6 to 38 minutes], P < .01). There were no significant differences in completion times between SupportScreen and paper and pencil (P = .50). The computer-based geriatric assessment was feasible. Few participants (8%) needed help with completing the geriatric assessment (REDCap, n = 7 and SupportScreen, n = 1), 89% reported that the length was “just right,” and 67% preferred the computer-based geriatric assessment to paper and pencil. Test–retest reliability was high (Spearman correlation coefficient ≥ 0.79) for all scales except for social activity. Validity among similar scales was demonstrated.

Conclusion:

Delivering a computer-based geriatric assessment is feasible, reliable, and valid. SupportScreen methodology is preferred to REDCap.

INTRODUCTION

Older adults are at increased risk for developing cancer. They are also at increased risk for experiencing treatment toxicity. A key determinant in evaluating whether an older adult can tolerate cancer treatment is understanding their functional versus chronological age, which can be captured through a geriatric assessment.1 This assessment captures information regarding an individual’s function, comorbidity, cognition, nutrition, psychological state, and social support. Studies have demonstrated the benefits of this assessment in predicting cancer treatment toxicity and survival, as well as identifying areas of vulnerability to guide interventions.2 However, the integration of a geriatric assessment into oncology clinics has been limited by perceptions of the amount of time and resources needed to complete the assessment.

To address these concerns, a brief geriatric assessment for older patients with cancer was developed by the Alliance (formerly Cancer and Leukemia Group B).3 This geriatric assessment is completed by the patient using paper and pencil. Previous studies have demonstrated that the majority of older adults with cancer receiving standard-of-care treatment4 or enrolled in a cooperative group clinical trial3 can complete the patient portion of the geriatric assessment on their own. However, the manual scoring and data entry of patient responses are time consuming and have inherent potential for errors. Because the goal of a geriatric assessment is to help health care providers improve management of older adults with cancer in real time, a more user-friendly, accurate, and efficient method of obtaining a geriatric assessment is needed.

A computer-based survey platform has the potential to resolve many of the limitations in a paper-and-pencil survey; however, it is not clear whether a computer-based geriatric assessment is feasible, or whether the results would be reliable and valid. The goal of this study was to evaluate the feasibility, reliability, and validity of delivering the computer-based geriatric assessment via two methods of electronic data capture (SupportScreen and REDCap) compared with paper-and-pencil data capture among patients with cancer who are ≥ 65 years old.

METHODS

Eligibility Criteria

Patients were recruited from the outpatient medical oncology and hematology practices at the City of Hope Comprehensive Cancer Center and were eligible to participate if they were ≥ 65 years old, had a cancer diagnosis, and were fluent in English (because many of the geriatric assessment measures are not validated in other languages). Patients of any performance status could enroll in this study; however, patients with significant visual or auditory impairments precluding the ability to read the questions or to hear the instructions were ineligible. This study was approved by the City of Hope Institutional Review Board. All patients were required to provide informed consent to participate.

Electronic Data Capture: SupportScreen and REDCap

The geriatric assessment was administered on an iPad with touchscreen technology through a Web browser, which displayed the assessment as hosted and executed on either the SupportScreen or the REDCap platform. The SupportScreen platform was developed by researchers and information technology specialists at City of Hope to identify the biopsychosocial needs of patients.5,6 SupportScreen runs on a Juniper ISG2000 firewall’s virtual Web server, which has a dedicated SQL Server 2005 database to ensure the confidentiality of all collected data. REDCap is a Web-based program developed by Vanderbilt University and is conventionally used to collect research data entered by a trained research assistant.7 Its advantage is that it provides a secure platform that can be used across multiple sites, enabling multicenter data capture.

Geriatric Assessment Tool

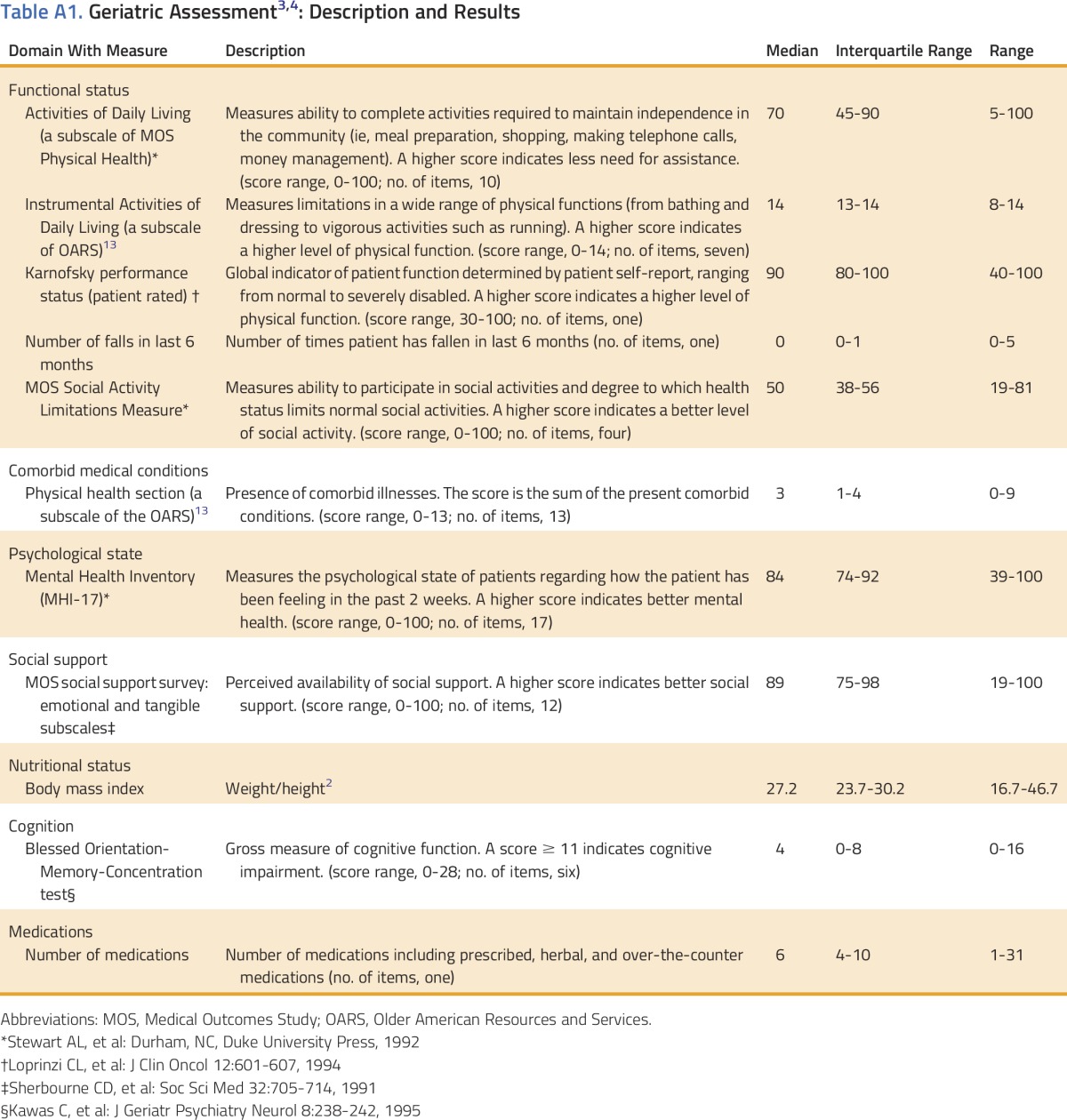

A full description of the geriatric assessment (Appendix Table A1 [online only]), as well as the reliability and validity of the tools included within the assessment, have been reported in prior publications.3,4,8

The geriatric assessment consists of a portion completed by the patient, as well as a brief portion completed by the provider. This study focused on the feasibility of using computer methodology for the patient portion of the assessment.

A Blessed Orientation-Memory-Concentration (BOMC) test (one of the measures included in the provider portion of the geriatric assessment) was performed to determine if the patient had possible cognitive impairment (score ≥ 11 on the BOMC). If the patient met the threshold for potential cognitive impairment on the basis of the BOMC, the patient’s primary oncologist was notified so that further workup and evaluation could be performed as deemed clinically necessary. The patient continued to participate in the study procedures because their treating physician deemed that they had the capacity to consent and participate.

Study Procedure

A computer-generated randomization list assigned patients to one of the four arms: (1) REDCap and paper and pencil in sessions 1 and 2 in random order; (2) REDCap in both sessions 1 and 2; (3) SupportScreen and paper and pencil in sessions 1 and 2 in random order; and (4) SupportScreen in both sessions 1 and 2.
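The paper does not describe how its computer-generated list was built. The Python sketch below shows one common way to produce such a list, assuming permuted blocks of four so that the four arms stay balanced, with the order of the two methods shuffled per patient in the mixed arms. The block size, the `ARMS` mapping, and the function name are illustrative assumptions, not the study's actual procedure.

```python
import random

# Arm number -> (method A, method B). In arms 1 and 3 the session order is
# randomized per patient; arms 2 and 4 use the same method twice.
ARMS = {
    1: ("REDCap", "paper"),
    2: ("REDCap", "REDCap"),
    3: ("SupportScreen", "paper"),
    4: ("SupportScreen", "SupportScreen"),
}


def make_randomization_list(n_patients, block_size=4, seed=None):
    """Return (arm, session1_method, session2_method) tuples.

    Hypothetical scheme: permuted blocks keep the arms balanced; the study
    itself only states that the list was computer generated.
    """
    if block_size % len(ARMS) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)  # seeded generator makes the list reproducible
    schedule = []
    while len(schedule) < n_patients:
        block = list(ARMS) * (block_size // len(ARMS))
        rng.shuffle(block)  # random arm order within each block
        for arm in block:
            methods = list(ARMS[arm])
            if methods[0] != methods[1]:
                rng.shuffle(methods)  # random session order for mixed arms
            schedule.append((arm, methods[0], methods[1]))
    return schedule[:n_patients]
```

With 100 patients and blocks of four, this scheme assigns exactly 25 patients to each arm; a fixed seed lets the list be regenerated for auditing.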

In all arms, before initiation of session 1, patients were given a brief introduction by a trained research assistant on how to use the iPad and how to answer the geriatric assessment questions. The research assistant helped patients who had technical difficulties and noted the reasons for requiring assistance. The administration of sessions 1 and 2 was separated by 30 minutes to keep the survey administration consistent.

At the end of each session, patients were asked to rate how easy it was to use the computer survey (very easy, easy, difficult, or very difficult) and to specify their preferred survey method (computer v paper), when applicable. They were also asked to provide feedback about questions that were difficult to understand or perceived to be missing from the survey; their perception of the survey length (too long, too short, or just right); and whether any of the questions were upsetting. Patients were asked about their computer skill level (none, beginner, intermediate, or advanced).

Patient sociodemographic information was captured including age, sex, race, ethnicity, marital status, education, employment status, income, preferred language, and who they lived with. A chart review was performed to capture the patient’s cancer type, stage, and prior treatment (surgery, radiation, and/or systemic therapy).

Statistical Analysis

The feasibility of the computer-based geriatric assessment via SupportScreen or REDCap was evaluated by the following factors: length of time to complete the assessment; the patient’s ability to complete the assessment without assistance; number of questions missed or selected as “preferred not to answer” or “I don’t know”; the patient’s perception of how long it took to complete the assessment; and the ease of using the computer methodology.
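A minimal sketch of how the missing-item criterion could be tallied, assuming each patient's answers are stored as a list in which an unanswered item is `None`. The response labels and function names are hypothetical; the sketch separates items left blank from explicit "Do not know" or "Do not want to answer" responses, the same distinction drawn under Missing items in the Results.

```python
from typing import Optional, Sequence

# Hypothetical labels for explicit non-responses; the instrument's exact
# wording may differ.
NON_RESPONSE_OPTIONS = {"Do not know", "Do not want to answer"}


def classify_missing(responses: Sequence[Optional[str]]) -> dict:
    """Count items left blank (None) and items answered with a non-response option."""
    skipped = sum(1 for r in responses if r is None)
    declined = sum(1 for r in responses if r in NON_RESPONSE_OPTIONS)
    return {"skipped": skipped, "declined": declined,
            "any_missing": skipped + declined > 0}


def summarize_cohort(all_responses) -> dict:
    """Number of patients with any missing item, and with any item left blank."""
    per_patient = [classify_missing(r) for r in all_responses]
    return {
        "any_missing": sum(p["any_missing"] for p in per_patient),
        "skipped_items": sum(p["skipped"] > 0 for p in per_patient),
    }
```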

Test–retest reliability was analyzed using Spearman correlation coefficients. Internal consistency of geriatric assessment measures was analyzed using Cronbach alpha coefficient. Spearman correlation coefficients among similar scales were assessed to determine scale validity.
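The two reliability statistics named above can be written out directly. The plain-Python sketch below, assuming complete data with no missing items, computes Spearman rho as the Pearson correlation of tie-averaged ranks and Cronbach alpha as k/(k − 1) × (1 − sum of item variances / variance of the total score). It illustrates the formulas only; the study's analyses were run in SAS, and the function names are illustrative.

```python
from statistics import pvariance


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman rho, e.g. session 1 vs session 2 scores for test-retest reliability."""
    return pearson(average_ranks(x), average_ranks(y))


def cronbach_alpha(items):
    """items: k lists of item scores, each with one score per respondent."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # scale total per respondent
    item_var = sum(pvariance(scores) for scores in items)
    # Population variances are used throughout; the n factors cancel in the ratio.
    return k / (k - 1) * (1 - item_var / pvariance(totals))
```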

For all analyses, summary statistics including frequencies and percentages were used for categorical data and median values with interquartile ranges were used for continuous data. Differences among randomization arms were evaluated using χ2 tests (categorical data) and Kruskal-Wallis tests (continuous data). Differences by survey methods, computer skill, and possible cognitive impairment were tested using Wilcoxon signed rank test. Changes in time to complete the first and second surveys were verified as normally distributed through Shapiro-Wilk test for normality and analyzed using paired t tests. Bonferroni adjustments for multiple comparisons were made when evaluating statistical significance of P values. Analyses were performed using SAS version 9.4.
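Two of the steps above reduce to short formulas: the Bonferroni threshold divides the nominal α by the number of comparisons, and the paired t statistic divides the mean within-patient change by its standard error. This Python sketch assumes complete paired timings; the function names are illustrative, and the paper does not state how many comparisons each Bonferroni adjustment covered.

```python
from math import sqrt
from statistics import mean, stdev


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold under a Bonferroni adjustment."""
    return alpha / n_comparisons


def paired_t_statistic(first, second):
    """Paired t for the mean change (second - first); returns (t, degrees of freedom)."""
    diffs = [b - a for a, b in zip(first, second)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)  # sample SD of the differences
    return mean(diffs) / standard_error, n - 1
```

For example, if the three methods were compared pairwise (an assumption), `bonferroni_alpha(0.05, 3)` gives a per-comparison threshold of about 0.0167.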

RESULTS

Patient Demographic Data and Geriatric Assessment Results

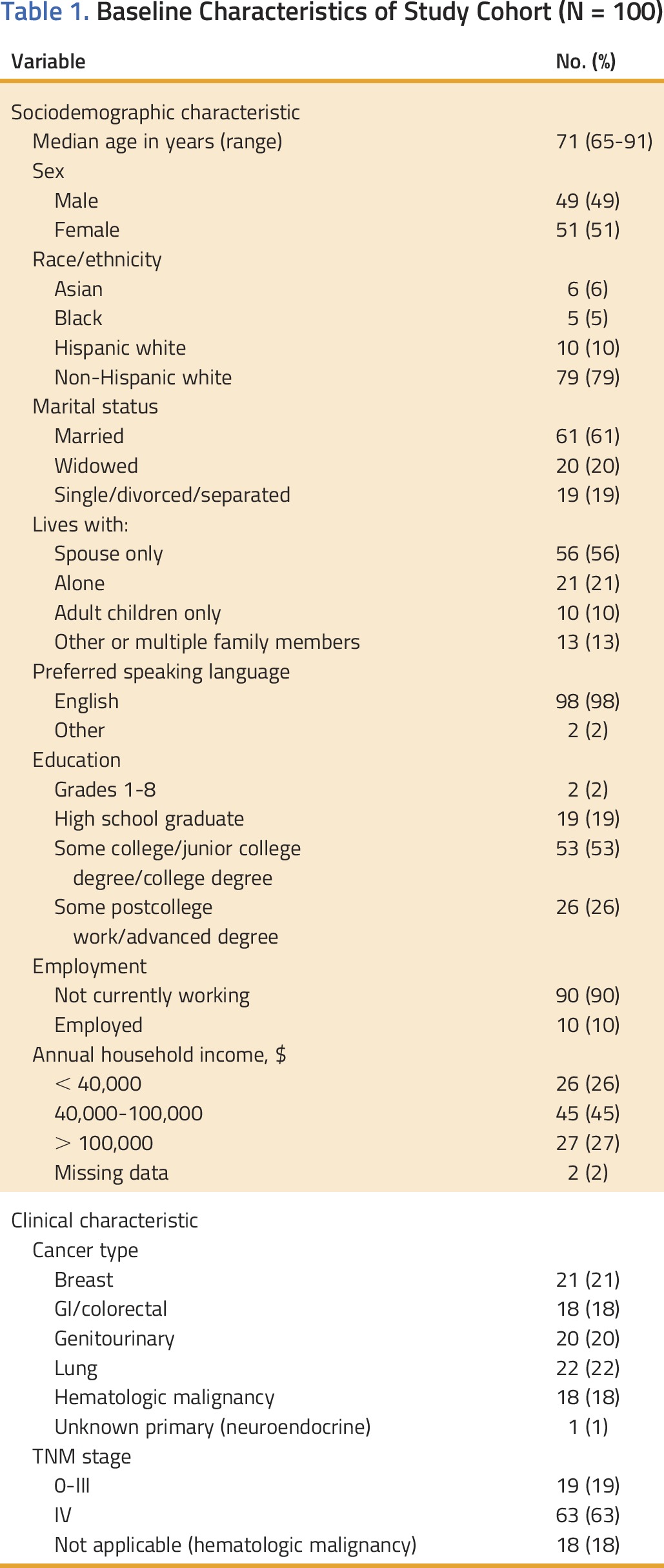

As shown in Appendix Table A1, participants had a median age of 71 years (range, 65 to 91 years) and had either a solid tumor (82%) or hematologic malignancy (18%). The majority of patients had stage IV disease (63%), were non-Hispanic white (79%), were married (61%), preferred to speak English (98%), and had at least some college education (79%). The need for assistance with Instrumental Activities of Daily Living (IADL) was reported by 44% of patients. The study population had a median of three comorbid medical conditions and took a median of six medications per day. Unintentional weight loss in the past 6 months was reported by 28% of patients and 11% had a BOMC score ≥ 11 (indicating possible cognitive impairment). There were no statistically significant differences among treatment arms for any of the baseline characteristics examined (Table 1).

Table 1.

Baseline Characteristics of Study Cohort (N = 100)

Feasibility

Completion time

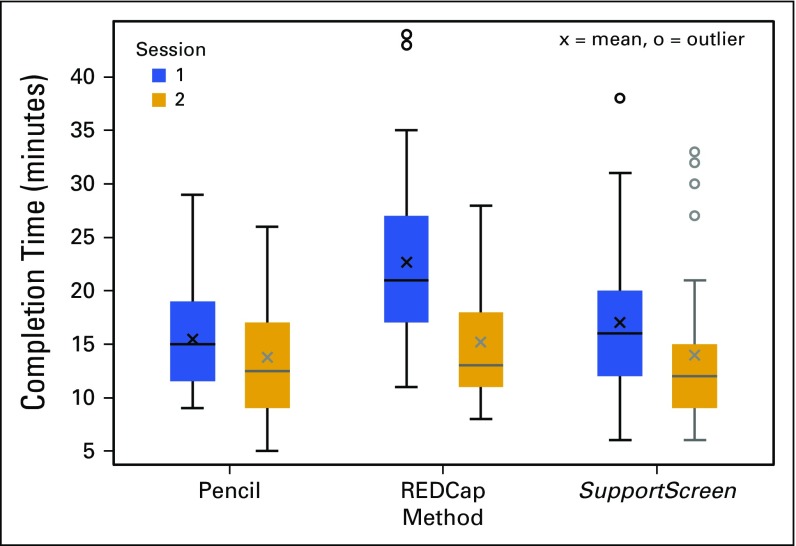

Distributions of completion time for the geriatric assessment by method and session are shown in Fig 1. For session 1, there were no significant differences in completion times between SupportScreen methodology and paper and pencil (P = .50); however, REDCap took significantly longer to complete than paper and pencil (median, 21 minutes [range, 11 to 44 minutes] v median, 15 minutes [range, 9 to 29 minutes], P < .01) or SupportScreen (median, 16 minutes [range, 6 to 38 minutes], P < .01). For session 2, there were no statistically significant differences in completion times among REDCap, SupportScreen, or paper and pencil (P = .32). Overall, the time to completion decreased from session 1 to session 2 by a mean of 4.4 minutes (standard deviation [SD], 6.8 minutes; P < .01).
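As a rough arithmetic check of the reported change in completion time, the paired t statistic described under Statistical Analysis can be recomputed from the mean change and SD. This assumes, for illustration only, that all n = 100 participants contributed timings for both sessions; the exact analyzed n is not stated here.

$$
t = \frac{\bar{d}}{s_d/\sqrt{n}} = \frac{-4.4}{6.8/\sqrt{100}} = \frac{-4.4}{0.68} \approx -6.47, \qquad df = n - 1 = 99
$$

A |t| of this size lies well beyond the two-sided critical value of about 2.63 for P = .01 at 99 degrees of freedom, consistent with the reported P < .01.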

FIG 1.

Distributions of completion time for geriatric assessments by method and session.

Patients characterized their level of computer skill. Fifty-seven percent of patients reported that they had intermediate or advanced computer skills and 43% reported that they had beginner level or no computer skills. There were differences in completion time on the basis of computer skill for REDCap, but not for SupportScreen. For REDCap, patients with no or beginner computer skills were significantly slower than those with intermediate or advanced computer skills in both the first session (median, 24 minutes [range, 11 to 44 minutes] v median, 20 minutes [range, 11 to 32 minutes], P = .05) and the second session (median, 18 minutes [range, 9 to 28 minutes] v median, 12 minutes [range, 8 to 28 minutes], P < .01). Patients with possible cognitive impairment (BOMC ≥ 11) tended to take longer to complete the first session (median, 24 minutes [range, 9 to 32 minutes]) than the remainder of the cohort (median, 17 minutes [range, 6 to 44 minutes]), although this difference was not statistically significant (P = .06); there was also no significant difference in time to complete the second session.

Need for assistance

Only eight participants (8%, REDCap [n = 7] and SupportScreen [n = 1]) needed help with completing the geriatric assessment. None of these eight patients had possible cognitive impairment on the basis of the BOMC test.

Missing items

Twelve participants (12%) left at least one item of the geriatric assessment unanswered; however, only three of these patients skipped items entirely (SupportScreen [n = 2] and REDCap [n = 1]), and the remainder responded “Do not know” or “Do not want to answer.”

Patients’ perceptions of the assessment length

Most patients (89%) believed that the length was “just right.” One patient (1%) thought that the assessment was too short. Ten patients (10%) considered that the assessment took too long.

Perception of ease

Seven patients (7%) reported that they found the assessment difficult. Among the seven patients who reported difficulty, five did so in the first session (paper and pencil [n = 2], REDCap [n = 2], and SupportScreen [n = 1]). All of these patients changed their response to “easy” or “very easy” in the second session. The majority of patients reported in both sessions that they preferred taking a computer version (66 of 98, 67%).

Reliability and Validity

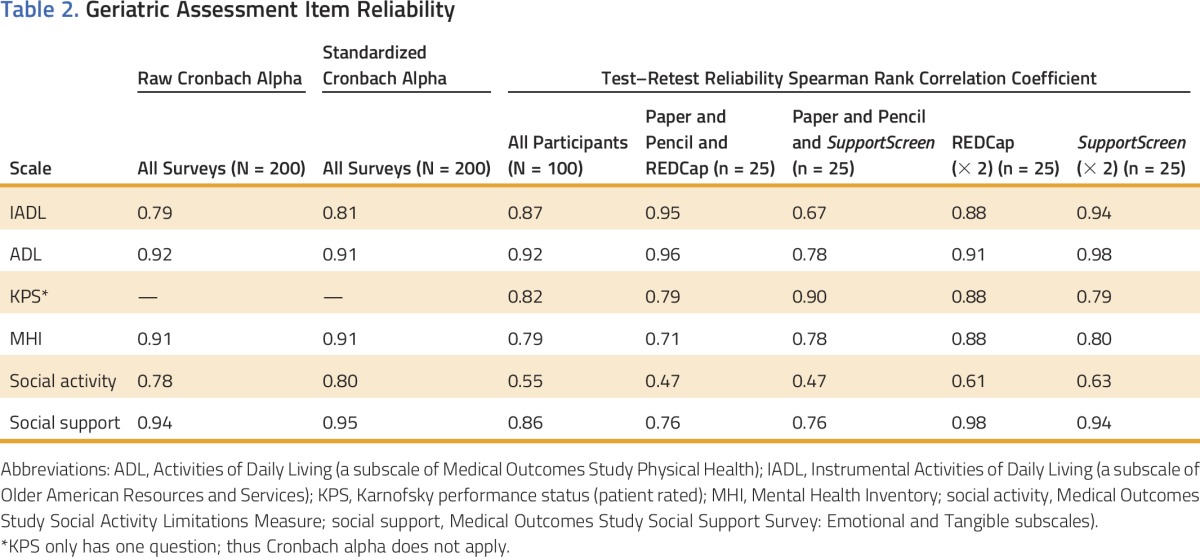

The reliability and validity of the following measures in the geriatric assessment were evaluated: Activities of Daily Living (ADL; a subscale of Medical Outcomes Survey [MOS] Physical Health); IADL; MOS Social Support: emotional and tangible subscales; MOS Social Activity Limitations Measure; and Mental Health Inventory (MHI-17). Although the intrascale reliability has been established elsewhere, we confirmed the reliability in the context of the geriatric assessment.3 Alpha coefficient values for the five scales are listed in Table 2. All reliability coefficients are > 0.7.

Table 2.

Geriatric Assessment Item Reliability

The test–retest reliability for the entire cohort and by study arm demonstrated positive Spearman correlations (≥ 0.65) for all of the scales except for the MOS Social Activity Limitations Measure (ranging from 0.47 to 0.63).

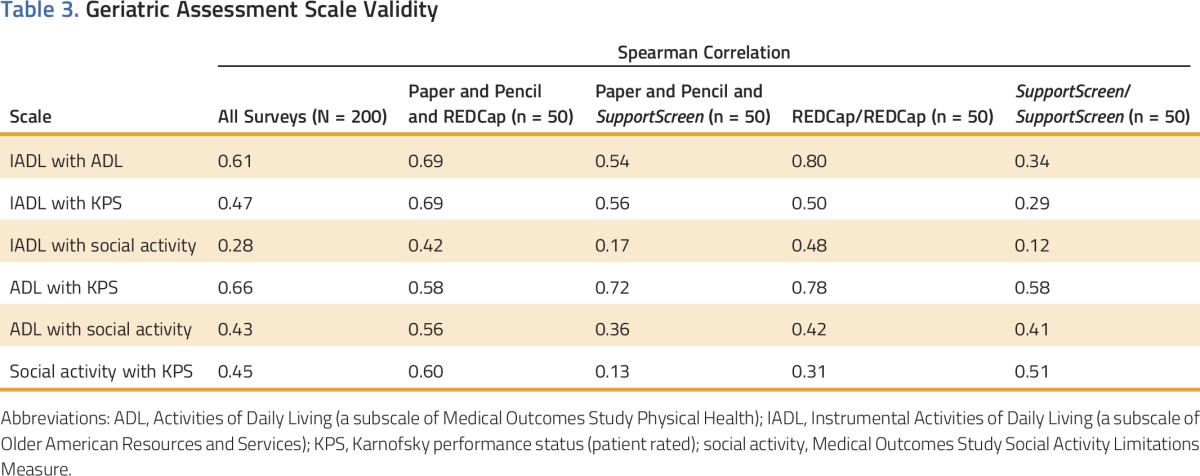

The validity of the geriatric assessment was evaluated for the overall cohort and by study arm (Table 3). The correlation between the IADL and ADL scores was strong overall (0.61) and > 0.54 in all arms except for those patients who took SupportScreen twice. The patient’s assessment of Karnofsky performance status (KPS) correlated weakly with the IADL score (0.47) and with the patient’s social activity level (0.45). The MOS Social Activity score was weakly correlated with the ADL score (0.43) and IADL score (0.28).

Table 3.

Geriatric Assessment Scale Validity

DISCUSSION

This study demonstrates that delivering a computer-based geriatric assessment is feasible, reliable, and valid. There were no significant differences in completion times between the paper-and-pencil and SupportScreen methodologies; however, REDCap took significantly longer than either of the other two methods. The time to completion decreased for session 2 in all arms, suggesting a practice effect. Feasibility was further supported by the small proportions of patients who needed help with the computer-based geriatric assessment (8%), skipped items completely (3%), or reported that they found the assessment difficult (7%). Furthermore, the majority of patients (67%) reported that they preferred the computer version to paper and pencil.

When we compared these results to prior research that evaluated the feasibility of the paper-and-pencil geriatric assessment, we found similar results for the patient time to completion. In particular, the median time to completion of the paper-and-pencil version in this study was 15 minutes (range, 9 to 29 minutes) compared with a median time of 15 minutes (range, 3 to 45 minutes) in a prior study of older adults enrolled in cooperative group trials.3 The results for SupportScreen are not significantly different (median, 16 minutes [range, 6 to 38 minutes]), although REDCap took longer. These findings are not surprising because SupportScreen was developed as an interface to be completed by patients. In contrast, REDCap is configured as a research database with more limitations in the ability to modify the layout of the questions and response buttons. The main advantage of REDCap is the secure interface across institutions, which would facilitate data entry in a multi-institutional setting.

In contrast to the findings from this study, McCleary et al9 tested a touchscreen methodology using a similar geriatric assessment (with minor modifications) and found a longer time to completion (mean, 23 minutes). Furthermore, almost half of their patients required assistance, most commonly because of a lack of computer familiarity. By comparison, only 8% of the patients enrolled in our study required assistance with completion of the geriatric assessment (beyond the brief explanation provided by the research assistant), despite 43.9% of patients reporting that they had beginner level or no computer skills. Possible explanations include variation in the patient populations, the computer platforms, or the explanations provided to patients on how to use the computer methodology. These findings highlight the need to understand the barriers to the use of computer technology among varying patient populations.

Although there are concerns that older adults may lag in the adoption of new technologies, the few studies that have tested the feasibility of computer technology among older adults demonstrate that they are capable of completing computer-based assessments. A study of 81 community-dwelling individuals ≥ 85 years old evaluated a computer-based cognitive test versus a paper-and-pencil test. The octogenarians were less likely to rate the computerized cognitive tests as difficult, stressful, or unacceptable compared with the paper-and-pencil tests.10 A study comparing community-dwelling adults 60 to 74 years old with adults 75 to 89 years old found that older adults are able to learn computer skills; however, the oldest adults needed more personalized teaching aids.11

In a study by Loscalzo et al6 of touchscreen technology to obtain patient-reported psychosocial data, only 5.8% of adults ≥ 65 years old found the touchscreen survey to be “difficult” or “very difficult.” Similarly, Newell et al12 used touchscreen technology in a cohort of patients of all ages with cancer (19% were ≥ 70 years old) to capture information on demographic characteristics, cancer descriptors, adverse effects of treatment, levels of anxiety and depression, and perceived needs. More than 90% of the 229 patients rated the touchscreen survey as easy to complete (97%), enjoyable (96%), and not stressful (93%). Of the study participants, 19% stated that they needed “a lot of help to complete the survey” and 99% stated that they usually had enough time to do the survey while waiting to see the doctor.

The reliability and validity of the measures included in the geriatric assessment have been established in other studies.3,13 Our study confirms that all scales are internally consistent and nearly all, except for the MOS Social Activity Limitations Measure, are reliable by test–retest evaluation. The reason that the MOS Social Activity Limitations Measure test–retest correlation was lower is unknown, and further analyses of this scale will need to be conducted in future studies. The IADL score correlated with the ADL score, demonstrating validity because both of these scales focus on daily function. Interestingly, KPS correlated weakly with IADL score and with social activity, suggesting that these scales are measuring different aspects of daily performance. This could help explain the results of prior research demonstrating that KPS is a poor predictor of chemotherapy toxicity, whereas IADL items are predictors of risk.8

There are limitations to this research. First, our sample consisted largely of white, non-Hispanic, college-educated patients, who may already be comfortable with computers and familiar with understanding and answering survey items. Future studies of the feasibility of the computerized assessment in underserved populations and among non-native English speakers are needed. Second, patients were given brief instructions on how to use the computer methodology, but some patients still needed assistance completing the computer-based geriatric assessment. The need for personnel to provide instruction and extra assistance must therefore be considered when planning implementation.

Despite these limitations, this research also has a number of strengths. This study demonstrates that the majority of older adults are able to complete a computer-based geriatric assessment with minimal guidance, providing an additional means of obtaining these data in daily practice. Although this study was done among older adults with cancer, the questions are not disease specific and could potentially be valuable to the assessment of older adults with other medical conditions. A computer-based geriatric assessment is available on the Cancer and Aging Research Group Web site (www.mycarg.org, the Geriatric Assessment Tools tab).

This research team is currently working on computer algorithms to summarize the information acquired in the geriatric assessment, with the objective of recommending interventions on the basis of the results. Ultimately, we hope that the geriatric assessment–driven interventions will improve the quality of care of older adults who are undergoing cancer therapy, by identifying areas of vulnerability as well as potential interventions to address them.

ACKNOWLEDGMENT

This study was supported by R21 AG041489 (principal investigator, A.H.) and the City of Hope Excellence Award (principal investigator, A.H.).

Appendix

Table A1.

AUTHOR CONTRIBUTIONS

Conception and design: Arti Hurria, Jerome Kim, Matthew Loscalzo, Harvey Cohen, Beverly Canin, Betty Ferrell

Provision of study materials or patients: Marianna Koczywas, Sumanta Pal, Vincent Chung, Stephen Forman, Nitya Nathwani, Marwan Fakih, Chatchada Karanes, Dean Lim, Leslie Popplewell

Collection and assembly of data: Arti Hurria, Chie Akiba, Jerome Kim, Dale Mitani, Vani Katheria

Data analysis and interpretation: Arti Hurria, Vani Katheria, Marianna Koczywas, Vincent Chung, Stephen Forman, Marwan Fakih, Chatchada Karanes, Harvey Cohen, David Cella, Leanne Goldstein

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS’ DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

Reliability, Validity, and Feasibility of a Computer-Based Geriatric Assessment for Older Adults With Cancer

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO’s conflict of interest policy, please refer to www.asco.org/rwc or jop.ascopubs.org/site/misc/ifc.xhtml.

Arti Hurria

Consulting or Advisory Role: GTx, Seattle Genetics, Boehringer Ingelheim, On Q Health, Sanofi

Research Funding: GlaxoSmithKline (Inst), Celgene (Inst), Novartis (Inst)

Chie Akiba

No relationship to disclose

Jerome Kim

No relationship to disclose

Dale Mitani

No relationship to disclose

Matthew Loscalzo

Honoraria: Novartis

Speakers’ Bureau: Novartis

Patents, Royalties, Other Intellectual Property: SupportScreen (royalties from employer, City of Hope)

Travel, Accommodations, Expenses: Novartis

Vani Katheria

No relationship to disclose

Marianna Koczywas

Consulting or Advisory Role: AstraZeneca, Ignyta

Speakers’ Bureau: AstraZeneca

Sumanta Pal

Honoraria: Novartis, Medivation, Astellas Pharma

Consulting or Advisory Role: Pfizer, Novartis, Aveo, Myriad Pharmaceuticals, Genentech, Exelixis, Bristol-Myers Squibb, Astellas Pharma

Research Funding: Medivation

Vincent Chung

Consulting or Advisory Role: Perthera

Speakers’ Bureau: Celgene

Research Funding: Novartis (Inst)

Stephen Forman

No relationship to disclose

Nitya Nathwani

No relationship to disclose

Marwan Fakih

Honoraria: Sirtex Medical

Consulting or Advisory Role: Amgen, Sirtex Medical

Speakers’ Bureau: Sirtex Medical

Research Funding: Novartis (Inst), Amgen (Inst)

Chatchada Karanes

Research Funding: Fate Therapeutics, Pharmacyclics, AROG Pharmaceuticals, Sanofi

Dean Lim

No relationship to disclose

Leslie Popplewell

Honoraria: Cardinal Health Inc

Harvey Cohen

No relationship to disclose

Beverly Canin

No relationship to disclose

David Cella

Leadership: FACIT.org

Honoraria: Pfizer, Novartis, Astellas, Bayer, Bristol-Myers Squibb

Consulting or Advisory Role: AbbVie, Bayer, GlaxoSmithKline, Pfizer

Research Funding: Novartis (Inst), Genentech (Inst), Ipsen (Inst), Bayer (Inst), Pfizer (Inst)

Travel, Accommodations, Expenses: GlaxoSmithKline, Bayer

Betty Ferrell

No relationship to disclose

Leanne Goldstein

Stock or Other Ownership: Infosphere Clinical Research

REFERENCES

- 1. Extermann M, Overcash J, Lyman GH, et al. Comorbidity and functional status are independent in older cancer patients. J Clin Oncol. 1998;16:1582–1587. doi: 10.1200/JCO.1998.16.4.1582.

- 2. Wildiers H, Heeren P, Puts M, et al. International Society of Geriatric Oncology consensus on geriatric assessment in older patients with cancer. J Clin Oncol. 2014;32:2595–2603. doi: 10.1200/JCO.2013.54.8347.

- 3. Hurria A, Cirrincione CT, Muss HB, et al. Implementing a geriatric assessment in cooperative group clinical cancer trials: CALGB 360401. J Clin Oncol. 2011;29:1290–1296. doi: 10.1200/JCO.2010.30.6985.

- 4. Hurria A, Gupta S, Zauderer M, et al. Developing a cancer-specific geriatric assessment: A feasibility study. Cancer. 2005;104:1998–2005. doi: 10.1002/cncr.21422.

- 5. Clark K, Bardwell WA, Arsenault T, et al. Implementing touch-screen technology to enhance recognition of distress. Psychooncology. 2009;18:822–830. doi: 10.1002/pon.1509.

- 6. Loscalzo M, Clark KL, Holland J. Successful strategies for implementing biopsychosocial screening. Psychooncology. 2011;20:455–462. doi: 10.1002/pon.1930.

- 7. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)—A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–381. doi: 10.1016/j.jbi.2008.08.010.

- 8. Hurria A, Togawa K, Mohile SG, et al. Predicting chemotherapy toxicity in older adults with cancer: A prospective multicenter study. J Clin Oncol. 2011;29:3457–3465. doi: 10.1200/JCO.2011.34.7625.

- 9. McCleary NJ, Wigler D, Berry D, et al. Feasibility of computer-based self-administered cancer-specific geriatric assessment in older patients with gastrointestinal malignancy. Oncologist. 2013;18:64–72. doi: 10.1634/theoncologist.2012-0241.

- 10. Collerton J, Collerton D, Arai Y, et al. A comparison of computerized and pencil-and-paper tasks in assessing cognitive function in community-dwelling older people in the Newcastle 85+ Pilot Study. J Am Geriatr Soc. 2007;55:1630–1635. doi: 10.1111/j.1532-5415.2007.01379.x.

- 11. Echt KV, Morrell RW, Park DC. Effects of age and training formats on basic computer skill acquisition in older adults. Educ Gerontol. 1998;24:3–25.

- 12. Newell S, Girgis A, Sanson-Fisher RW, et al. Are touchscreen computer surveys acceptable to medical oncology patients? J Psychosoc Oncol. 1997;15:37–46.

- 13. Fillenbaum GG, Smyer MA. The development, validity, and reliability of the OARS multidimensional functional assessment questionnaire. J Gerontol. 1981;36:428–434. doi: 10.1093/geronj/36.4.428.