Abstract

Plant image identification has become an interdisciplinary focus in both botanical taxonomy and computer vision. We present the first plant image dataset collected by mobile phone in natural scenes, which contains 10,000 images of 100 ornamental plant species on the Beijing Forestry University campus. A 26-layer deep learning model consisting of 8 residual building blocks is designed for large-scale plant classification in natural environments. The proposed model achieves a recognition rate of 91.78% on the BJFU100 dataset, demonstrating that deep learning is a promising technology for smart forestry.

1. Introduction

Automatic plant image identification is the most promising solution for bridging the botanical taxonomic gap and has received considerable attention in both the botany and computer science communities. As machine learning technology advances, sophisticated models have been proposed for automatic plant identification. With the popularity of smartphones and the emergence of the Pl@ntNet mobile apps [1], millions of plant photos have been acquired. Mobile-based automatic plant identification is essential to real-world social-based ecological surveillance [2], invasive exotic plant monitoring [3], ecological science popularization, and so on. Improving the performance of mobile-based plant identification models has therefore attracted increasing attention from scholars and engineers.

Many efforts have been devoted to extracting local characteristics of leaves, flowers, or fruits. Most researchers use variations in leaf characteristics as a comparative tool for studying plants, and several leaf datasets, including the Swedish leaf dataset, the Flavia dataset, and the ICL dataset, are standard benchmarks. In [4], Söderkvist extracted shape characteristics and moment features of leaves and classified 15 Swedish tree classes using a feed-forward neural network trained with back propagation. In [5], Fu et al. chose local contrast and other parameters to describe the pixels surrounding the veins, and used an artificial neural network to segment the veins from the rest of the leaf; their experiments showed that the neural network is effective at identifying vein images. Li et al. [6] proposed an efficient leaf vein extraction method combining the snakes technique with cellular neural networks, which obtained satisfactory results on leaf segmentation. He and Huang used a probabilistic neural network as a classifier to identify plant leaf images, achieving better identification accuracy than a BP neural network [7]. In 2013, Cerutti et al. addressed leaf recognition in natural images, using a contour segmentation algorithm based on a polygonal leaf model to obtain leaf contours [8]. As deep learning became a hot spot in image recognition, Liu and Kan combined texture features with shape characteristics, using a deep belief network architecture as a classifier [9]. Zhang et al. designed a deep learning system based on an eight-layer convolutional neural network to identify leaf images and achieved a higher recognition rate. Some researchers focus on flowers. Nilsback and Zisserman proposed a bag-of-visual-words method to describe color, shape, texture, and other characteristics [10]. In [11], Zhang et al. combined Haar-like features with SIFT features of flower images, encoded them with nonnegative sparse coding, and classified them with the k-nearest neighbor method. In [12], Wang et al. proposed a method for recognizing edible roses based on a BP neural network. Studies identifying plants by their fruit are relatively rare; Li et al. proposed a multifeature integration method using preference aiNet as the recognition algorithm [13]. After years of continued exploration of plant recognition technology, dedicated mobile applications such as LeafSnap [14], Pl@ntNet [1], and Microsoft Garage's Flower Recognition app [15] can now be conveniently used to identify plants.

Although research on automatic plant taxonomy has yielded fruitful results, these models are still far from meeting the requirements of a fully automated ecological surveillance scenario [3]. The aforementioned datasets lack mobile-based plant images acquired in natural scenes, which vary greatly in contributors, cameras, areas, periods of the year, individual plants, and so on. Traditional classification models rely heavily on preprocessing to eliminate complex backgrounds and enhance the desired features. Moreover, handcrafted feature engineering is ill-suited to large-scale datasets of unconstrained images.

To overcome these challenges, and inspired by the breakthroughs of deep learning in image recognition, we acquired the BJFU100 dataset by mobile phone in a natural environment. The dataset contains 10,000 images of 100 ornamental plant species on the Beijing Forestry University campus. A 26-layer deep learning model consisting of 8 residual building blocks is designed for plant identification under uncontrolled conditions. The proposed model achieves a recognition rate of 91.78% on the BJFU100 dataset.

2. Proposed BJFU100 Dataset and Deep Learning Model

Deep learning architectures are formed by multiple linear and nonlinear transformations of input data, with the goal of yielding more abstract and discriminative representations [16]. These methods have dramatically improved the state of the art in speech recognition, visual object recognition, object detection, and many other domains such as drug discovery and genomics [17]. The deep convolutional neural network proposed in [18] demonstrated outstanding performance in the large-scale image classification task of ILSVRC-2012 [19]. The model was trained on more than one million images and achieved a winning top-5 test error rate of 15.3% over 1,000 classes, almost halving the error rate of the best competing approach. This success brought about a revolution in computer vision [17]. Recent progress in the field has advanced the feasibility of applying deep learning to complex, real-world problems [20].

2.1. BJFU100 Dataset

The BJFU100 dataset was collected in natural scenes using mobile devices. It consists of 100 species of ornamental plants on the Beijing Forestry University campus. Each category contains 100 different photos acquired by smartphone in a natural environment. The smartphone is equipped with a prime lens of 28 mm equivalent focal length and an RGB sensor with a resolution of 3120 × 4208 pixels.

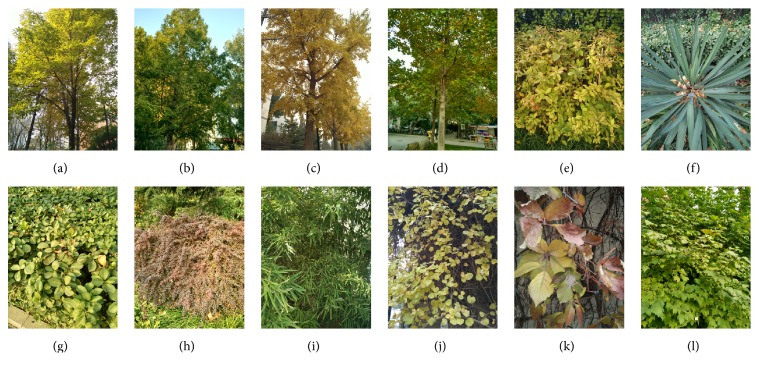

Tall arbors were photographed from a low angle at ground level, as shown in Figures 1(a)–1(d). Low shrubs were shot from a high angle, as shown in Figures 1(e)–1(h). Other ornamental plants were photographed at eye level. Subjects may vary in size by an order of magnitude (e.g., some images show only a leaf, others an entire plant from a distance), as shown in Figures 1(i)–1(l).

Figure 1.

Example images of the BJFU100 dataset. (a) Chinese buckeye, (b) metasequoia, (c) Ginkgo biloba, (d) hybrid tulip tree, (e) Weigela florida cv. red-prince, (f) Yucca gloriosa, (g) Euonymus kiautschovicus Loes, (h) Berberis thunbergii var. atropurpurea, (i) mottled bamboo, (j) Celastrus orbiculatus, (k) Parthenocissus quinquefolia, and (l) Viburnum opulus.

2.2. The Deep Residual Network

As network depth increases, plain deep networks do not improve accuracy as expected; instead, they suffer from problems such as vanishing gradients and degradation. The residual network (ResNet) introduces skip connections that allow information (from the input or from features learned in earlier layers) to flow more easily into deeper layers [23, 24]. With increasing depth, ResNets gain more parameters and thus better function approximation capability, while the skip connections help resolve the vanishing gradient and degradation problems. Deep residual networks have shown compelling accuracy and good convergence behavior on several large-scale image recognition tasks, such as the ImageNet [23] and MS COCO [25] competitions.

2.2.1. Residual Building Blocks

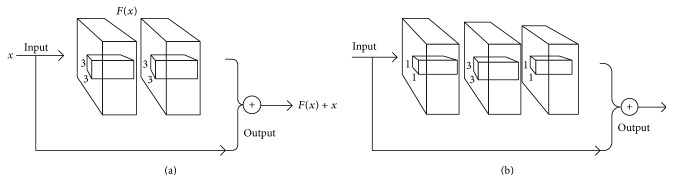

A residual unit uses shortcut connections that perform identity mapping. Shortcut connections are those that skip one or more layers. The original underlying mapping can thus be realized by a feed-forward neural network with shortcut connections. The building block illustrated in Figure 2 is defined as

$$y = F(x, \{W_i\}) + x, \qquad F = W_2\,\sigma(W_1 x), \tag{1}$$

where $x$ and $y$ are the input and output vectors of the stacked layers, respectively. The function $F(x, \{W_i\})$ represents the residual mapping to be learned. The function $\sigma(a)$ denotes the ReLU [26], and biases are omitted to simplify notation. The dimensions of $x$ and $F$ must be equal to perform the element-wise addition. If they are not, a linear projection $W_s$ is applied on the shortcut connection to match the dimensions of $x$ and $F$:

$$y = F(x, \{W_i\}) + W_s x. \tag{2}$$
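To make Eqs. (1) and (2) concrete, the following is a minimal sketch in Python (standard library only) of a residual block's forward pass on plain vectors. It is a toy illustration, not the authors' code: the dense matrices `w1`, `w2`, and `ws` stand in for the convolution layers of a real block, the helper names are hypothetical, and the second ReLU that [23] applies after the addition is omitted so that the code follows Eq. (1) as written.

```python
from typing import List, Optional

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product; each row of w produces one output."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU, the sigma in Eq. (1)."""
    return [max(0.0, a) for a in v]


def residual_block(x: Vector, w1: Matrix, w2: Matrix,
                   ws: Optional[Matrix] = None) -> Vector:
    """y = F(x, {W1, W2}) + shortcut(x), with F = W2 * relu(W1 * x).

    Biases are omitted, as in Eq. (1). If ws is given, the shortcut is the
    linear projection Ws * x of Eq. (2); otherwise it is the identity.
    """
    f = matvec(w2, relu(matvec(w1, x)))          # residual mapping F(x)
    shortcut = x if ws is None else matvec(ws, x)
    if len(f) != len(shortcut):
        raise ValueError("F(x) and the shortcut must have equal dimensions")
    return [fi + si for fi, si in zip(f, shortcut)]  # element-wise addition
```

With all weights in `w1` and `w2` set to zero, F(x) vanishes and the block reduces to the identity mapping, which is one intuition for why stacking residual blocks does not make optimization harder in the way stacking plain layers can.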

Figure 2.

(a) A basic building block. (b) A “bottleneck” building block of deep residual networks.

The baseline building block is shown in Figure 2(a): a shortcut connection is added to each pair of 3 × 3 filters. To reduce training time for deeper nets, a bottleneck building block is designed as in Figure 2(b). Its three layers are 1 × 1, 3 × 3, and 1 × 1 convolutions, where the 1 × 1 layers are responsible for reducing and then restoring dimensions, leaving the 3 × 3 layer a bottleneck with smaller input/output dimensions [23]. Bottleneck building blocks thus obtain more layers of abstraction with fewer parameters.
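The parameter saving can be checked with simple arithmetic. The Python sketch below counts convolution weights (biases, BN parameters, and shortcut projections ignored) for the comparison used in [23]: a basic block on 64 channels versus a bottleneck block on 256 channels with 64 inner channels. These channel widths are taken from [23] for illustration; they are not the widths of ResNet26, which are not stated in this text.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k convolution from c_in to c_out channels (no bias)."""
    return k * k * c_in * c_out


def basic_block_params(c: int) -> int:
    """Figure 2(a): two 3 x 3 convolutions, c -> c -> c channels."""
    return conv_params(3, c, c) + conv_params(3, c, c)


def bottleneck_block_params(c_outer: int, c_inner: int) -> int:
    """Figure 2(b): 1 x 1 reduce, 3 x 3 bottleneck, 1 x 1 restore."""
    return (conv_params(1, c_outer, c_inner)     # reduce dimensions
            + conv_params(3, c_inner, c_inner)   # 3 x 3 on the thin representation
            + conv_params(1, c_inner, c_outer))  # restore dimensions


if __name__ == "__main__":
    print(basic_block_params(64))             # 73728
    print(bottleneck_block_params(256, 64))   # 69632
    print(basic_block_params(256))            # 1179648
```

The bottleneck block operates on a four times wider (256-channel) representation with slightly fewer weights than the 64-channel basic block, whereas a basic block at 256 channels would need roughly 17 times as many.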

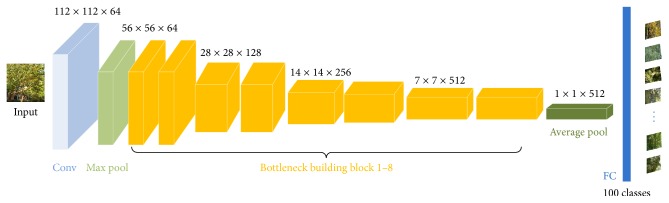

The overall architecture of our 26-layer ResNet model (ResNet26) is depicted in Figure 3. As Figure 3 shows, the model is built mainly from bottleneck building blocks. The input image is fed into a 7 × 7 convolution layer and a 3 × 3 max pooling layer, followed by 8 bottleneck building blocks. When the dimensions increase, a 1 × 1 convolution is used in the bottleneck block to match dimensions. The 1 × 1 convolution enriches the level of abstraction and reduces the time complexity. The network ends with a global average pooling layer, a fully connected layer, and a softmax layer. We adopt batch normalization (BN) [27] right after each convolution layer and before the ReLU [26] activation layer. Downsampling is performed by the first convolution layer, the max pooling layer, and the 3rd, 5th, and 7th bottleneck building blocks.
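As a sanity check on the name ResNet26, the following Python sketch counts weighted layers under the usual ResNet convention [23] (convolution and fully connected layers only; pooling, BN, ReLU, softmax, and 1 × 1 shortcut projections are not counted) and traces the feature map size for a 224 × 224 input. The stride of 2 at every downsampling step is an assumption borrowed from [23], since the strides are not given here, and the function names are hypothetical.

```python
from typing import List, Tuple


def resnet26_depth(num_blocks: int = 8, convs_per_block: int = 3) -> int:
    """Weighted layers: stem 7 x 7 conv + bottleneck convs + FC layer."""
    stem_conv = 1
    fully_connected = 1
    return stem_conv + num_blocks * convs_per_block + fully_connected


def feature_map_sizes(input_size: int = 224,
                      downsampling_blocks: Tuple[int, ...] = (3, 5, 7),
                      num_blocks: int = 8) -> List[int]:
    """Spatial size after the stem and after each block (stride 2 assumed)."""
    size = input_size // 2   # 7 x 7 convolution with stride 2 (assumed)
    size //= 2               # 3 x 3 max pooling with stride 2 (assumed)
    sizes = [size]
    for block in range(1, num_blocks + 1):
        if block in downsampling_blocks:
            size //= 2       # first conv of this bottleneck block has stride 2
        sizes.append(size)
    return sizes


if __name__ == "__main__":
    print(resnet26_depth())      # 26
    print(feature_map_sizes())   # [56, 56, 56, 28, 28, 14, 14, 7, 7]
```

Under these assumptions the final 7 × 7 feature maps are reduced by global average pooling to a single vector per image before the fully connected layer.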

Figure 3.

Architecture of 26-layer ResNet model for plant identification.

3. Experiments and Results

3.1. Implementation and Preprocessing

The model implementation is based on the open-source deep learning framework Keras [28]. All experiments were conducted on an Ubuntu 16.04 Linux server with a 3.40 GHz i7-3770 CPU (16 GB memory) and a GTX 1070 GPU (8 GB memory). The 100 samples of each class are split into 80 training samples and 20 test samples. Compared with conventional classification methods, data preprocessing for deep learning approaches is much simpler. In this paper, the inputs to the network are RGB color images. All images only need to be rescaled to 224 × 224 pixels, after which each pixel value is divided by 255.
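A minimal Python sketch of this split and scaling follows. It assumes the 80 training images of each class are drawn at random (the text does not say how they were chosen); the dictionary layout, the `species_XXX/img_YYY.jpg` file names, and the function names are hypothetical, and the resize to 224 × 224, which would need an image library, is left out.

```python
import random
from typing import Dict, List, Tuple

Sample = Tuple[str, str]  # (image path, class label)


def split_per_class(images_by_class: Dict[str, List[str]], n_train: int = 80,
                    seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Shuffle each class independently and keep n_train images for training."""
    rng = random.Random(seed)
    train: List[Sample] = []
    test: List[Sample] = []
    for label, paths in sorted(images_by_class.items()):
        shuffled = list(paths)
        rng.shuffle(shuffled)
        train += [(p, label) for p in shuffled[:n_train]]
        test += [(p, label) for p in shuffled[n_train:]]
    return train, test


def scale_pixels(pixels: List[int]) -> List[float]:
    """Map 8-bit intensities in [0, 255] to [0, 1] by dividing by 255."""
    return [p / 255.0 for p in pixels]


if __name__ == "__main__":
    # Hypothetical layout: 100 species folders with 100 images each.
    dataset = {f"species_{c:03d}": [f"species_{c:03d}/img_{i:03d}.jpg"
                                    for i in range(100)] for c in range(100)}
    train, test = split_per_class(dataset)
    print(len(train), len(test))  # 8000 2000
```

Splitting within each class keeps the test set balanced at 20 images per species, so overall test accuracy is not skewed toward any class.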

3.2. Training Algorithm

During the back propagation phase, the model parameters are trained by the stochastic gradient descent (SGD) algorithm, with the categorical cross-entropy loss function as the optimization objective. The SGD update can be expressed as follows:

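$$\delta^{x} = w^{x+1}\left(f'(u^{x}) \circ \mathrm{up}(\delta^{x+1})\right), \qquad \Delta w^{x} = -\eta \sum_{i,j}\left(\delta^{x} \circ \mathrm{down}(a^{x-1})\right)_{ij}, \tag{3}$$

where $\delta^{x}$ is the sensitivity of layer $x$, $w^{x+1}$ is the multiplicative bias, $f'(u^{x})$ is the derivative of the activation function evaluated at the pre-activation $u^{x}$ of layer $x$, $a^{x-1}$ is the output of layer $x-1$, $\circ$ denotes element-wise multiplication, $\mathrm{up}(\cdot)$ is upsampling, $\mathrm{down}(\cdot)$ is downsampling, $\Delta w^{x}$ represents the weight update of the layer, and $\eta$ is the learning rate. The cross-entropy loss function is defined to be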

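$$L_i = -\log\left(\frac{e^{f_{y_i}}}{\sum_j e^{f_j}}\right), \tag{4}$$

where $f_j$ is the $j$th element of the classification score vector $f$ and $y_i$ is the index of the true class of sample $i$.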
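For a single sample, the softmax cross-entropy loss can be computed as logsumexp(f) minus the true-class score. The Python sketch below (the function name is hypothetical) subtracts the largest score before exponentiating, a standard trick that gives the same value while preventing overflow for large scores.

```python
import math
from typing import List


def softmax_cross_entropy(scores: List[float], label: int) -> float:
    """L = -log(exp(f_y) / sum_j exp(f_j)) for one sample.

    Computed as logsumexp(f) - f_y; subtracting max(f) inside exp() keeps
    the intermediate values bounded without changing the result.
    """
    m = max(scores)
    log_sum_exp = m + math.log(sum(math.exp(f - m) for f in scores))
    return log_sum_exp - scores[label]


if __name__ == "__main__":
    print(softmax_cross_entropy([2.0, 1.0, 0.1], 0))   # about 0.417
    print(softmax_cross_entropy([0.0, 0.0, 0.0, 0.0], 2))  # log(4)
```

With uniform scores over K classes the loss equals log K, the value a network with no discriminative information would incur; during training the loss is averaged over the samples of each mini-batch.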

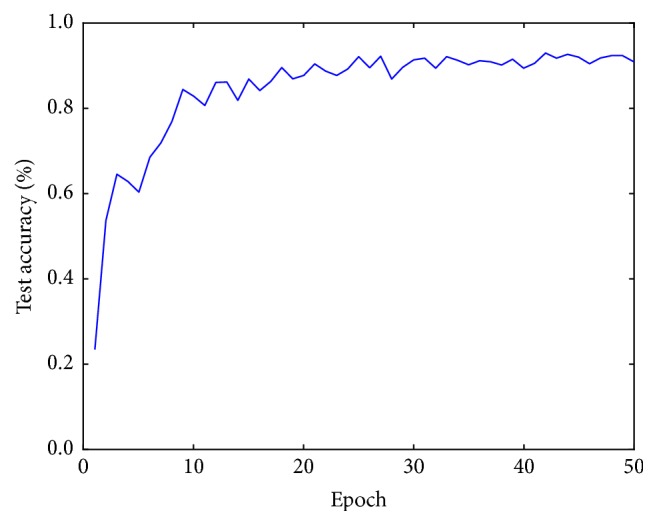

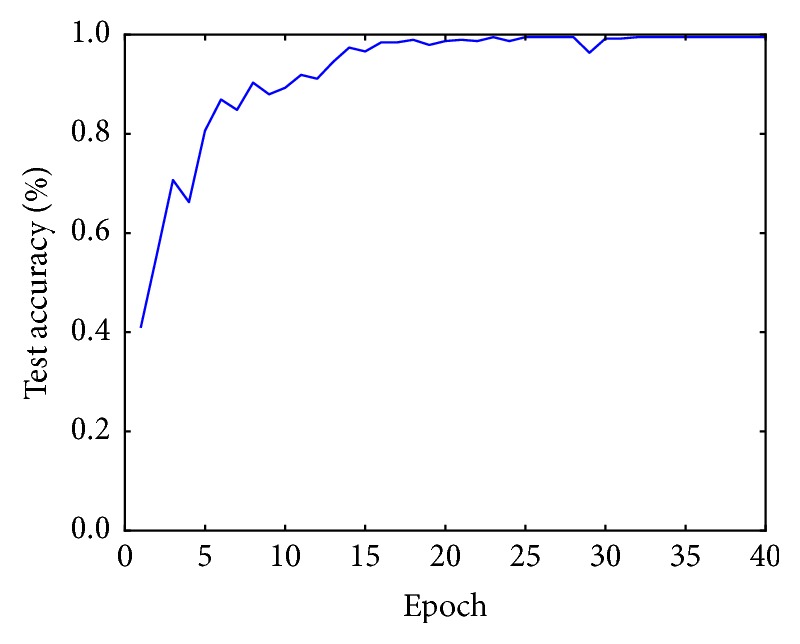

After some preliminary training experiments, the base learning rate was set to 0.001 and gradually reduced at each epoch, with a decay rate of 10⁻⁶ and a momentum of 0.9. Figure 4 shows the training process of the ResNet26 model. Test accuracy improves quickly from the first epochs and stabilizes after 40 epochs.
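These optimizer settings can be sketched in Python as follows. We assume the decay is the time-based decay built into the legacy Keras 2.x SGD optimizer, which uses lr / (1 + decay · t) with t counting parameter updates (mini-batches) rather than epochs, together with classical momentum v ← μv − lr·g, w ← w + v. The function names and the toy quadratic loss (w − 3)² are hypothetical and only show that the update converges.

```python
from typing import Callable


def keras_style_lr(base_lr: float, decay: float, iterations: int) -> float:
    """Time-based decay: lr_t = base_lr / (1 + decay * t), t = update count."""
    return base_lr / (1.0 + decay * iterations)


def sgd_momentum(grad: Callable[[float], float], w: float, steps: int,
                 base_lr: float = 0.001, decay: float = 1e-6,
                 momentum: float = 0.9) -> float:
    """Minimize a 1-D function with classical (non-Nesterov) momentum SGD."""
    velocity = 0.0
    for t in range(steps):
        lr = keras_style_lr(base_lr, decay, t)
        velocity = momentum * velocity - lr * grad(w)  # running step direction
        w = w + velocity                               # apply the update
    return w


if __name__ == "__main__":
    # Toy loss (w - 3)^2 with gradient 2 * (w - 3); the minimum is at w = 3.
    print(sgd_momentum(lambda w: 2.0 * (w - 3.0), w=0.0, steps=2000))
```

With decay = 10⁻⁶ the learning rate halves only after 10⁶ updates, so under this reading the schedule is very gentle over a few tens of epochs.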

Figure 4.

Evolution of classification accuracy in the test set.

3.3. Results Analysis

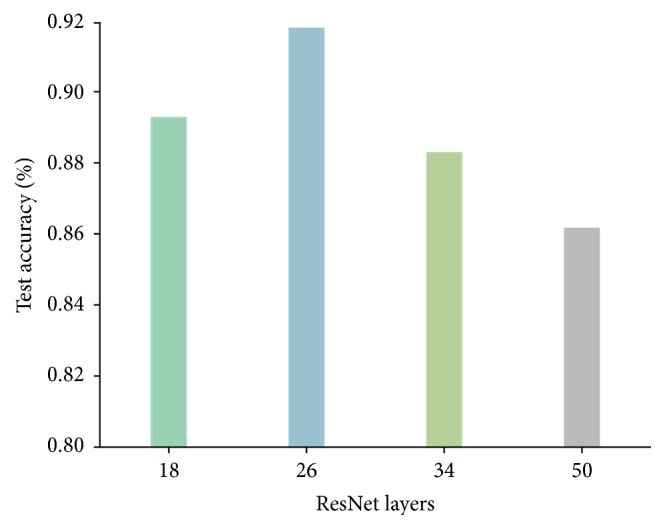

To find the best deep residual network, a series of experiments was conducted on the BJFU100 dataset. Figure 5 compares the test accuracy of the proposed ResNet26 model with that of the original 18-, 34-, and 50-layer ResNet models [23] designed for ImageNet. ResNet18, ResNet34, and ResNet50 yield test accuracies of 89.27%, 88.28%, and 86.15%, respectively. The proposed ResNet26 achieves 91.78% accuracy, 2.51 percentage points higher than the best reference model, ResNet18.

Figure 5.

Test accuracy of the ResNet18, ResNet34, ResNet50 [23], and ResNet26 models. The proposed ResNet26 outperforms the best reference ResNet by 2.51 percentage points.

ResNet26 offers the best tradeoff between model capacity and optimization difficulty among the tested models. For a dataset the size of BJFU100, ResNet26 contains enough trainable parameters to learn discriminative features, which prevents underfitting. Compared with larger models, ResNet26 converges quickly and robustly during SGD optimization, which helps it avoid overfitting and poor local optima.

4. ResNet26 on Flavia Dataset

To show the effectiveness of the proposed ResNet26 model, a series of experiments was performed on the publicly available Flavia [29] leaf dataset. It comprises 1907 images of 1600 × 1200 pixels in 32 categories. Some samples are shown in Figure 6. We randomly select 80% of the dataset for training and 20% for testing.

Figure 6.

Example images of the Flavia dataset.

All images are doubled and resized to 224 × 224 pixels. Each pixel value is divided by the maximum value, and the mean value of the data is then subtracted.
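The normalization can be sketched in Python as below, with each image flattened to a list of pixel values. The text does not say whether the maximum and mean are global or per channel; this sketch assumes a single global maximum and a single global mean over all pixels of all images, and the function name is hypothetical.

```python
from typing import List


def normalize_dataset(images: List[List[float]]) -> List[List[float]]:
    """Divide by the dataset's maximum pixel value, then subtract the mean.

    Assumes one global maximum and one global mean over all pixels of all
    images; per-channel statistics would be an equally plausible reading.
    """
    max_value = max(max(img) for img in images)
    scaled = [[p / max_value for p in img] for img in images]
    n_pixels = sum(len(img) for img in scaled)
    mean = sum(sum(img) for img in scaled) / n_pixels
    return [[p - mean for p in img] for img in scaled]


if __name__ == "__main__":
    data = [[0.0, 128.0, 255.0], [255.0, 255.0, 0.0]]
    print(normalize_dataset(data))  # values centred on zero
```

In a held-out evaluation, the maximum and mean would normally be computed on the training images only and then reused for the test images, so that no test statistics leak into preprocessing.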

The training algorithm is exactly the same as that applied to the BJFU100 dataset. Figure 7 shows the training process of the ResNet26 model. Test accuracy improves quickly from the first epochs and stabilizes after 30 epochs.

Figure 7.

Evolution of classification accuracy in the test set.

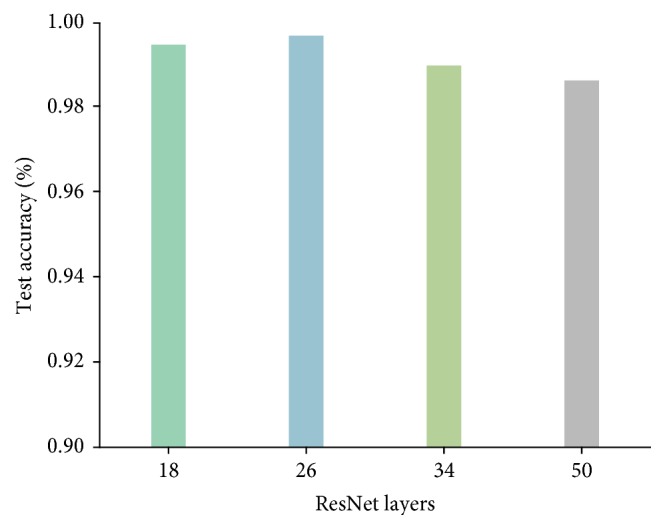

The test accuracy of each model is estimated by 10-fold cross-validation, as visualized in Figure 8. ResNet18, ResNet34, and ResNet50 achieve test accuracies of 99.44%, 98.95%, and 98.60%, respectively. The proposed ResNet26 achieves 99.65% accuracy, 0.21 percentage points higher than the best reference model, ResNet18. Table 1 compares our result with other previously published results on the Flavia [29] leaf dataset; the ResNet26 model achieves a 0.28 percentage point improvement over the best-performing method.
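A minimal Python sketch of 10-fold cross-validation index generation follows, assuming a plain (non-stratified) random partition since the text does not specify how folds were formed; the function name is hypothetical. Each model is trained on nine folds, tested on the remaining one, and the reported accuracy is the mean over the ten test folds.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(n_samples: int, k: int = 10,
                   seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, test) index lists; each sample is tested exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        start += size
        yield train, test


if __name__ == "__main__":
    for train, test in k_fold_indices(1907):  # 1907 Flavia images
        print(len(train), len(test))          # 1716 191 or 1717 190
```

For 1907 images, seven folds hold 191 test images and three hold 190, so every image is used for testing exactly once.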

Figure 8.

Test accuracy of the ResNet18, ResNet34, ResNet50 [23], and ResNet26 models on the Flavia dataset. The proposed ResNet26 outperforms the best reference ResNet by 0.21 percentage points.

Table 1.

Recognition rate comparison on Flavia dataset.

5. Conclusion

The BJFU100 dataset, the first plant dataset acquired by mobile devices in natural scenes, contains 10,000 images of 100 plant species and provides a cornerstone for further plant identification studies. We continue to expand the BJFU100 dataset with wider coverage of species and seasons. The dataset is open to the academic community and is available at http://pan.baidu.com/s/1jILsypS. This work also studied a deep learning approach that automatically discovers the representations needed for classification, allowing a unified end-to-end pipeline for recognizing plants in natural environments. The proposed ResNet26 model achieves 91.78% accuracy on the test set, demonstrating that deep learning is a promising technology for large-scale plant classification in natural environments.

In future work, the BJFU100 database will be expanded with more plant species at different phases of their life cycle and with more detailed annotations. The deep learning model will also be extended from classification to yield prediction, insect detection, disease segmentation, and other tasks.

Acknowledgments

This work was supported by the Fundamental Research Funds for the Central Universities: YX2014-17 and TD2014-01.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Authors' Contributions

Yu Sun and Yuan Liu contributed equally to this work.

References

- 1. Joly A., Goëau H., Bonnet P., et al. Interactive plant identification based on social image data. Ecological Informatics. 2014;23:22–34. doi: 10.1016/j.ecoinf.2013.07.006.

- 2. Goëau H., Bonnet P., Joly A. LifeCLEF plant identification task 2015. Proceedings of the Conference and Labs of the Evaluation Forum (CLEF '15); 2015.

- 3. Goëau H., Bonnet P., Joly A. Plant identification in an open-world (LifeCLEF 2016). Proceedings of the CLEF Working Notes; 2016.

- 4. Söderkvist O. Computer Vision Classification of Leaves from Swedish Trees. 2001.

- 5. Fu H., Chi Z., Chang J., Fu C. Extraction of leaf vein features based on artificial neural network: Studies on the living plant identification I. Chinese Bulletin of Botany. 2003;21:429–436.

- 6. Li Y., Zhu Q., Cao Y., Wang C. A leaf vein extraction method based on snakes technique. Proceedings of the International Conference on Neural Networks and Brain (ICNN&B '05); 2005; pp. 885–888.

- 7. He P., Huang L. Feature extraction and recognition of plant leaf. Journal of Agricultural Mechanization Research. 2008;6:52.

- 8. Cerutti G., Tougne L., Mille J., Vacavant A., Coquin D. Understanding leaves in natural images - a model-based approach for tree species identification. Computer Vision and Image Understanding. 2013;117(10):1482–1501. doi: 10.1016/j.cviu.2013.07.003.

- 9. Liu N., Kan J.-M. Plant leaf identification based on the multi-feature fusion and deep belief networks method. Journal of Beijing Forestry University. 2016;38(3):110–119. doi: 10.13332/j.1000-1522.20150267.

- 10. Nilsback M.-E., Zisserman A. Delving deeper into the whorl of flower segmentation. Image and Vision Computing. 2010;28(6):1049–1062. doi: 10.1016/j.imavis.2009.10.001.

- 11. Zhang C., Liu J., Liang C., Huang Q., Tian Q. Image classification using Harr-like transformation of local features with coding residuals. Signal Processing. 2013;93(8):2111–2118. doi: 10.1016/j.sigpro.2012.09.007.

- 12. Wang Y. J., Zhang Y. W., Wang D. L., Yin X., Zeng W. J. Recognition algorithm of edible rose image based on neural network. Journal of China Agricultural University. 2014;19(4):180–186.

- 13. Li X., Li L., Gao Z., Zhou J., Min S. Image recognition of camellia fruit based on preference for aiNET multi-features integration. Transactions of the Chinese Society of Agricultural Engineering. 2012;28(14):133–137.

- 14. Kumar N., Belhumeur P. N., Biswas A., et al. Leafsnap: a computer vision system for automatic plant species identification. Proceedings of Computer Vision – ECCV 2012; 2012; pp. 502–516.

- 15. https://www.microsoft.com/en-us/research/project/flowerreco/

- 16. Bengio Y., Courville A., Vincent P. Representation learning: a review and new perspectives. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2013;35(8):1798–1828. doi: 10.1109/tpami.2013.50.

- 17. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521(7553):436–444. doi: 10.1038/nature14539.

- 18. Krizhevsky A., Sutskever I., Hinton G. E. ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems; 2012; pp. 1097–1105.

- 19. http://www.image-net.org/challenges/LSVRC/2012/

- 20. Huval B., Wang T., Tandon S., et al. An empirical evaluation of deep learning on highway driving. https://arxiv.org/abs/1504.01716.

- 21. Kulkarni A., Rai H., Jahagirdar K., Upparamani P. A leaf recognition technique for plant classification using RBPNN and Zernike moments. International Journal of Advanced Research in Computer and Communication Engineering. 2013;2:984–988.

- 22. Sari C., Akgül C. B., Sankur B. Combination of gross shape features, Fourier descriptors and multiscale distance matrix for leaf recognition. Proceedings of the 55th International Symposium (ELMAR '13); September 2013; pp. 23–26.

- 23. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR '16); June 2016; Las Vegas, Nev, USA. pp. 770–778.

- 24. He K., Zhang X., Ren S., Sun J. Identity mappings in deep residual networks. Proceedings of the European Conference on Computer Vision; 2016; pp. 630–645.

- 25. Dai J., He K., Sun J. Instance-aware semantic segmentation via multi-task network cascades. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR '16); June 2016; Las Vegas, Nev, USA. pp. 3150–3158.

- 26. Nair V., Hinton G. E. Rectified linear units improve restricted Boltzmann machines. Proceedings of the 27th International Conference on Machine Learning (ICML '10); June 2010; pp. 807–814.

- 27. Ioffe S., Szegedy C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. https://arxiv.org/abs/1502.03167.

- 28. https://keras.io/

- 29. Wu S. G., Bao F. S., Xu E. Y., Wang Y.-X., Chang Y.-F., Xiang Q.-L. A leaf recognition algorithm for plant classification using probabilistic neural network. 2007 IEEE International Symposium on Signal Processing and Information Technology; December 2007; Giza, Egypt. pp. 11–16.