Abstract

There is strong scientific consensus that emphasizing print-to-sound relationships is critical when learning to read alphabetic languages. Nevertheless, reading instruction varies across English-speaking countries, from intensive phonic training to multicuing environments that teach sound- and meaning-based strategies. We sought to understand the behavioral and neural consequences of these differences in relative emphasis. We taught 24 English-speaking adults to read 2 sets of 24 novel words (e.g., /buv/, /sig/), written in 2 different unfamiliar orthographies. Following pretraining on oral vocabulary, participants learned to read the novel words over 8 days. Training in 1 language was biased toward print-to-sound mappings while training in the other language was biased toward print-to-meaning mappings. Results showed striking benefits of print–sound training on reading aloud, generalization, and comprehension of single words. Univariate analyses of fMRI data collected at the end of training showed that print–meaning relative to print–sound relative training increased neural effort in dorsal pathway regions involved in reading aloud. Conversely, activity in ventral pathway brain regions involved in reading comprehension was no different following print–meaning versus print–sound training. Multivariate analyses validated our artificial language approach, showing high similarity between the spatial distribution of fMRI activity during artificial and English word reading. Our results suggest that early literacy education should focus on the systematicities present in print-to-sound relationships in alphabetic languages, rather than teaching meaning-based strategies, in order to enhance both reading aloud and comprehension of written words.

Keywords: reading acquisition, reading comprehension, phonics, fMRI, artificial language learning

There has been persistent and intense debate concerning the manner in which children should be taught to read. There is now a strong scientific consensus regarding the critical importance of instruction that emphasizes the relationship between spelling and sound in reading acquisition (at least in alphabetic writing systems; e.g., National Reading Panel, 2000; Rayner et al., 2001; Rose, 2006). However, the extent to which this scientific consensus around phonic knowledge has permeated policy and practice in the area of reading instruction varies considerably across English-speaking countries. Specifically, while some countries mandate the use of systematic phonics in classrooms, others place greater emphasis on meaning-based knowledge, or use less-structured approaches that include some combination of both. Such differences in emphasis have consequences since, when there is limited instructional time, emphasizing one set of skills necessarily reduces emphasis on another set of skills.

In the present research, we seek to determine the behavioral and neural consequences of forms of reading instruction that emphasize the relationship between print and sound versus the relationship between print and meaning. To do so, we use a laboratory analogue of reading acquisition, in which adults are trained over an extended period on precisely defined novel materials, using precisely defined teaching and testing regimes. By combining this highly controlled method with neuroimaging, we are able to evaluate not only whether a manipulation improves reading performance behaviorally (using a range of reading aloud and comprehension tasks), but also how it improves performance, in terms of the neural systems modified by a particular experimental manipulation. In this way, our work also demonstrates how neuroscientific evidence can form part of the evidence base for developing educational methods.

Reading Instruction in Alphabetic Writing Systems

In England, the provision of systematic phonics instruction is a legal requirement in state-funded primary schools. To ensure compliance in all schools, children are required to participate in a national “phonics screen” in their second year of reading instruction (when they are five or six years-old), which measures word and nonword reading aloud. Since its implementation in 2012, the results of this assessment have shown dramatic year-on-year gains in the percentage of children reaching the expected standard—from 58% in 2012 to 81% in 2016. However, despite the apparent success of this policy, there continues to be resistance to it among teachers’ unions and others, who argue in favor of a less-prescriptive approach consisting of a variety of phonic- and meaning-related skills (Association of Teachers & Lecturers, 2016; National Union of Teachers, 2015). One frequent objection is that while phonics may assist reading aloud, it may not promote (and may even erode) reading comprehension (Davis, 2013).

The provision of systematic phonics instruction is also part of the Common Core State Standards Initiative in the U.S. (http://www.corestandards.org/). However, at present, not all U.S. states have adopted the Common Core standards. Further, unlike in England, there is no national assessment of phonic knowledge in young children that could reveal the success of the standards, or individual schools’ compliance with them. Similarly, even among states that have adopted the Common Core standards, there are reports that particular school boards promote “balanced literacy” approaches that include a variety of meaning-related as well as phonic-related skills (Hernandez, 2014; Moats, 2000).

Finally, although reading using phonic knowledge is included in the Australian curriculum, it is suggested as only one strategy, alongside a multicuing approach based on contextual, semantic, and grammatical information (Snow, 2015), including guessing the pronunciation of a word based on a picture or the word’s first letter (Neilson, 2016). The use of systematic phonics instruction is even less widespread in New Zealand classrooms, where text-based information (e.g., predictions based on pictures, preceding context, and prior knowledge) is regarded as more important than word-level phonic information for reading acquisition (Tunmer, Chapman, Greasy, Prochnow, & Arrow, 2013).

This brief review suggests that there is considerable variability in how reading is taught in English-speaking countries. Some prioritize print-to-sound knowledge, others prioritize print-to-meaning knowledge, and still others teach a variety of sound-based and meaning-based skills in the initial periods of reading instruction. Though there is strong evidence for the importance of learning to appreciate print-to-sound relationships in reading acquisition (e.g., National Reading Panel, 2000; Rayner et al., 2001; Rose, 2006), there is limited data on the behavioral and neural consequences of the relative difference in emphasis that characterizes reading instruction in the classroom. In the next section, we consider what might be the cognitive foundations of this differential emphasis.

Print-to-Sound and Print-to-Meaning Pathways for Reading

The Simple View of Reading (Gough & Tunmer, 1986) has had substantial impact on policy and practice in literacy education (e.g., Rose, 2006). The Simple View proposes that reading comprehension arises from the combination of print-to-sound decoding plus oral language skill (i.e., sound-to-meaning mappings), and hence emphasizes the importance of phonic knowledge in reading instruction. However, the Simple View is not a processing model, so is silent as to the actual mechanisms that underpin the discovery of meaning from the printed word. In order to capture these mechanisms, we must look to computational models of reading such as the Dual Route Cascaded model (DRC model, Coltheart, Rastle, Perry, Langdon, & Ziegler, 2001) and the Triangle model (Harm & Seidenberg, 2004; Plaut, McClelland, Seidenberg, & Patterson, 1996).

Like the Simple View of Reading, both the DRC and the Triangle model propose that reading comprehension can be achieved via an indirect pathway that maps from print-to-sound, and then from sound-to-meaning using preexisting oral language. The importance of the print-to-sound-to-meaning pathway is demonstrated by decades of research showing that phonological information is computed rapidly and as a matter of routine in the recognition and comprehension of printed words (Frost, 1998; Rastle & Brysbaert, 2006). In alphabetic and syllabic writing systems, print-to-sound mappings are largely systematic, allowing words to be broken down into symbols that correspond to sounds. The print-to-sound-to-meaning pathway has therefore sometimes been termed the sub-word pathway because of the componential nature of print-to-sound mappings (Wilson et al., 2009; Woollams, Lambon Ralph, Plaut, & Patterson, 2007). However, because the models vary in how they accomplish this mapping, we refer to the indirect pathway from print-to-sound-to-meaning simply as the phonologically mediated pathway.

Unlike the Simple View of Reading, both the DRC and Triangle model also propose a direct pathway from print-to-meaning. In alphabetic and syllabic writing systems, in contrast to the relationship between print and sound, the relationship between print and meaning is largely arbitrary and holistic. Similarly spelled words do not have similar meanings (at least when they are morphologically simple), and thus there is no sense in which a word can be broken down into component parts in order to access its meaning. This pathway is therefore sometimes termed the whole-word pathway (Wilson et al., 2009; Woollams et al., 2007). However, the distributed nature of these mappings means that the Triangle model can also capture subword regularities between print and meaning where these exist, for example, in polymorphemic words (Plaut & Gonnerman, 2000). Thus, throughout this article we will refer to the mapping from print-to-meaning as the direct pathway, because both models propose that written words can be comprehended without phonological mediation.

Plaut et al. (1996) argued that the “division of labor” between the phonologically mediated versus the direct pathway between print and meaning depends on the necessity of these processes for producing the appropriate response, given the task being performed and the characteristics of the orthography. This has led to suggestions that the direct pathway may only be necessary in orthographies with some degree of inconsistency between spelling and sound, because otherwise the phonologically mediated pathway can support accurate reading aloud and comprehension (Share, 2008; Ziegler & Goswami, 2005). However, this overlooks the fact that comprehension of written words should be more efficient using the direct than the phonologically mediated pathway, irrespective of spelling–sound consistency (Seidenberg, 2011). In the current research we therefore sought to determine how reading instruction that emphasizes print-to-sound versus print-to-meaning mappings impacts on the development of these pathways, and thus on reading aloud and comprehension of written words, in a regular orthography.

Neuroimaging and Neuropsychological Evidence for Dual Reading Pathways

Neuroimaging and neuropsychological evidence offer strong support for the notion of dual pathways to meaning proposed in cognitive models. This evidence has yielded a model in which phonologically mediated reading is underpinned by a dorsal pathway including left posterior occipitotemporal cortex, inferior parietal sulcus, and dorsal portions of the inferior frontal gyrus (opercularis, triangularis). Data from fMRI experiments in alphabetic languages reveal that these regions consistently show greater activation for nonwords than words (Taylor, Rastle, & Davis, 2013). Left inferior parietal cortex has also been found to be more active when reading alphabetic relative to logographic writing systems (Bolger, Perfetti, & Schneider, 2005). Furthermore, patients with damage to left posterior occipitotemporal cortex show slow and effortful reading in alphabetic scripts (Roberts et al., 2013), and those with damage to left inferior parietal cortex and dorsal inferior frontal gyrus show poor nonword relative to word reading (Rapcsak et al., 2009; Woollams & Patterson, 2012).

Conversely, neural evidence suggests that ventral pathway brain regions underpin direct print-to-meaning processes. Vinckier et al. (2007) showed that from left posterior to anterior occipitotemporal cortex there is an increasingly graded response to the word-likeness of written stimuli, with the mid-fusiform/inferior temporal gyrus responding more strongly to words and pseudowords than to stimuli containing frequent bigrams, followed by consonant strings, then false fonts. This processing hierarchy is supported by analyses of anatomical connectivity (Bouhali et al., 2014), with posterior occipitotemporal cortex connecting to speech processing regions such as left inferior frontal gyrus and posterior middle and superior temporal gyri, whereas anterior fusiform shows connectivity with more anterior temporal regions that are important for semantic processing. Supporting the idea that direct print-to-meaning processes are underpinned by anterior fusiform, meta-analytic fMRI data reveal greater activation for words than nonwords in this region, in addition to more lateral temporal lobe regions such as left middle temporal and angular gyri1 (Taylor et al., 2013). Similarly, patients with left anterior fusiform lesions display poorer performance in reading and spelling words than nonwords (Purcell, Shea, & Rapp, 2014; Tsapkini & Rapp, 2010). Further evidence for the correspondence between the direct pathway and ventral brain regions comes from semantic dementia patients, who have atrophy in anterior temporal lobes, including anterior fusiform gyrus (Mion et al., 2010), and often show particular problems reading aloud words with atypical spelling-to-sound mappings (Woollams et al., 2007). These irregular or inconsistent words depend more heavily on the direct pathway than words with typical or consistent print-to-sound relationships, which primarily rely on the phonologically mediated pathway.

The Development of Neural Reading Pathways

Like adults, children show neural activity in both dorsal and ventral pathways for a simple contrast of reading words and/or nonwords relative to rest (Martin, Schurz, Kronbichler, & Richlan, 2015). Supporting the involvement of dorsal stream regions in children’s phonologically mediated reading skills, activity in left dorsal inferior frontal gyrus and inferior parietal cortex during a rhyme judgement task was positively correlated with change in nonword reading skill, between the ages of 9 and 15 (McNorgan, Alvarez, Bhullar, Gayda, & Booth, 2011). Providing further longitudinal evidence, Preston et al. (2016) showed that in left fusiform gyrus, inferior parietal cortex, and dorsal inferior frontal gyrus, the degree of convergence between neural activity during print and speech tasks at age 8 predicted reading skill at age 10.

Computational models suggest that the involvement of the direct pathway increases with reading skill (Harm & Seidenberg, 2004; Plaut et al., 1996). In line with this, several authors have proposed that as children become better readers, reliance shifts from the dorsal to the ventral pathway (Pugh et al., 2000; Rueckl & Seidenberg, 2009; Sandak et al., 2012). This conceptualization is supported by longitudinal data showing that areas of the ventral pathway increase in sensitivity to written words between the ages of 9 and 15, and that this increasing sensitivity is associated with speeded word reading ability but not with nonword reading or phonological processing skill (Ben-Shachar, Dougherty, Deutsch, & Wandell, 2011).

Overall, although the data from children are somewhat limited, they support the proposed distinction between the phonologically mediated dorsal pathway and the direct print-to-meaning ventral pathway. However, we are unaware of any evidence linking instructional methods to changes in these neural systems.

Laboratory Approaches to Studying Language Learning

Ultimately, the questions being addressed in this manuscript need to be investigated in child populations. However, to provide an initial investigation into the impact of teaching method on neural mechanisms for reading, we used an artificial language approach with adults. There has been a surge of interest in recent years in using these approaches to model the acquisition of different types of linguistic information (Bowers, Davis, & Hanley, 2005; Clay, Bowers, Davis, & Hanley, 2007; Fitch & Friederici, 2012; Gaskell & Dumay, 2003; Hirshorn & Fiez, 2014; Tamminen, Davis, & Rastle, 2015; Taylor, Plunkett, & Nation, 2011). In contrast to studying children learning their first language (who vary in their prior experience with both spoken and written forms), artificial language approaches provide total control over participants’ prior knowledge of a new language or writing system. They also make it possible to manipulate what participants are taught and how they are taught in a way that could never be achieved in a naturalistic learning setting with children. Finally, working with adult learners permits collection of more extensive behavioral and brain imaging evidence during different stages of acquisition than would be possible with children. Artificial language learning studies consistently show that participants can learn sets of novel linguistic materials to a high degree of accuracy in a single training session, that this knowledge is sufficient to promote generalization to untrained materials (Tamminen et al., 2015; Taylor et al., 2011; Taylor, Rastle, & Davis, 2014a), and that this knowledge is long lasting (Havas, Waris, Vaquero, Rodríguez-Fornells, & Laine, 2015; Laine, Polonyi, & Abari, 2014; Merkx, Rastle, & Davis, 2011; Tamminen & Gaskell, 2008).

This body of research has yielded interesting insights into the mechanisms that underpin the learning and abstraction of different types of linguistic information. However, significant questions remain over the extent to which artificial language learning reflects natural acquisition processes. Indeed, in the related field of artificial grammar learning, there is a long history of debate over the nature of knowledge acquired (Perruchet & Pacteau, 1990; Reber, 1967; see Pothos, 2007, for a review). For example, if these paradigms reflect strategic, problem solving operations rather than the development of long-term abstract knowledge, then this would undermine their usefulness in understanding the acquisition of linguistic knowledge. Similarly, it could be that adults who have fully developed language and/or reading systems solve these laboratory learning tasks in fundamentally different ways than children acquiring these linguistic skills for the first time. One approach to countering these criticisms has been to demonstrate that similar behavioral effects emerge in artificial language learning studies as in natural languages (e.g., frequency and consistency effects on reading aloud; Taylor et al., 2011), while another has been to show that the constraints that underpin artificial language learning in adults also pertain to children (Henderson, Weighall, Brown, & Gaskell, 2013). However, more direct evidence that the processes recruited in artificial language learning paradigms overlap with those used in natural language would be desirable.

In this article we propose that neuroimaging data may provide this more direct evidence. Specifically, we will use brain responses to gain information about the mechanisms underlying different methods of literacy instruction in alphabetic writing systems. This approach is appropriate and timely because, as outlined earlier, the neural systems that underpin the phonologically mediated and direct pathways to reading in adults are well understood and appear to be similar in children (Martin et al., 2015; Taylor et al., 2013). We propose that we can capitalize on knowledge of these pathways to assess (a) whether training people to read new words printed in artificial scripts engages these neural reading pathways, and (b) whether and how different parts of the reading system are affected by different forms of training. These observations would provide direct evidence not only of the value of artificial learning paradigms for investigating reading, but also of the mechanism by which a particular training intervention operates. We believe that these inferences would be very difficult to draw from a purely behavioral outcome (e.g., accuracy or learning rate in a particular training condition), or through naturalistic study of children learning to read in their first language. This knowledge gained from our study will therefore complement that acquired from studies conducted on children, to inform the development of new interventions that target specific reading pathways.

Laboratory Approaches to Studying the Neural Basis of Reading Acquisition

In the present study we aimed to uncover the neural consequences of reading instruction that prioritizes print-to-sound versus print-to-meaning mappings. Previous training studies suggest that learning and retrieving componential print-to-sound associations for novel words (written either in familiar or artificial letters) modulates neural activity in dorsal pathway brain regions such as left inferior parietal cortex and inferior frontal gyrus (Mei et al., 2014; Quinn, Taylor, & Davis, 2016; Sandak et al., 2004; Taylor et al., 2014a). However, modulation of ventral pathway activity in artificial language learning studies has been somewhat elusive. Taylor et al. (2014a) found that learning whole object names activated the ventral pathway (left anterior fusiform gyri and ventral inferior frontal gyrus), more than learning letter-to-sound associations (see also, Quinn et al., 2016, for a similar finding when retrieving object names). However, no studies have reported ventral pathway activity for training on whole written words; instead, left angular and middle temporal gyri are more often implicated (Mei et al., 2014; Takashima et al., 2014). The failure to observe ventral pathway activity for trained words may be the result of relatively short and/or superficial training regimes, or because trained words were meaningless. In the current study, we therefore trained novel words extensively, all items had associated meanings, and we examined dorsal and ventral pathway activation during both phonological and meaning based tasks.

The Present Study

We used an artificial language paradigm underpinned by fMRI measures of brain activity to reveal the behavioral and neural consequences of an emphasis on print-to-sound versus print-to-meaning mappings as adults learned to read new alphabetic orthographies. We used a within-subject design in which 24 adults learned to read two sets of novel words (henceforth referred to as languages) written in two different sets of unfamiliar symbols (orthographies), over a 2-week training period. Figure 1 provides some examples of the stimuli, and Appendix A shows the stimuli learned by one participant. Participants were first preexposed to the sounds (phonology) and meanings (semantics) of the novel words in each language (Figure 2, row A). They then learned both orthography-to-phonology (O–P, print-to-sound) and orthography-to-semantic (O–S, print-to-meaning) mappings for each language over a 2-week training period (Figure 2, row C). Each orthography had a systematic one-to-one correspondence between print and sound, and an arbitrary whole-word correspondence between print and meaning. Our artificial languages therefore had writing systems that were similar to those of natural languages with transparent orthographies, such as Spanish or Italian. However, we manipulated the focus of learning: for one language participants received three times as much training on O–P mappings and for the other they received three times as much training on O–S mappings.

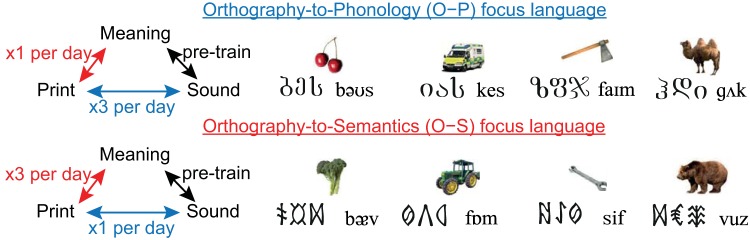

Figure 1.

Examples of stimuli used and illustration of how the learning focus manipulation was implemented. Note that this represents the experience of one participant as the assignment of orthography to spoken word set, noun set, and learning focus was counterbalanced across participants, as detailed in Appendix B. See the online article for the color version of this figure.

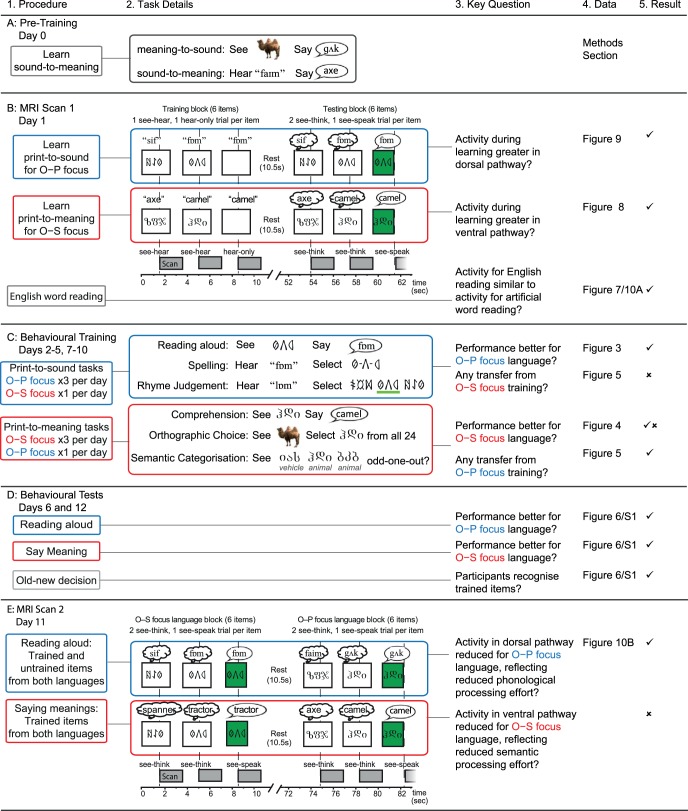

Figure 2.

Overview of procedures, task details, key questions, data sets, and results for each part of the experiment. Column 1 gives an overview of each training procedure. Column 2 gives further details of the behavioral and MRI protocols. In rows B and E, which show the trial format and timing during MRI scans, dotted lines indicate correspondence between stimulus presentation and scan onset. Black outlined boxes show what participants were viewing, and what they were hearing, thinking, or saying is shown above each box. Column 3 delineates the key questions addressed by each part of the experiment, column 4 shows where the results can be found, and in column 5 ticks and crosses indicate whether each prediction was confirmed by the data. See the online article for the color version of this figure.

All aspects of the stimulus sets were counterbalanced across subjects, including which set of meanings was associated with which set of spoken words, which set of spoken words was written in which orthography, and which training focus was associated with which orthography (full counterbalancing details provided in Appendix B). Thus, any observed influences of training focus on learning could not be attributed to any inadvertent differences between the sets of spoken words, symbol sets, or meanings.

We measured behavioral performance throughout the course of training, when the relative amount of exposure to orthography–phonology and orthography–semantic mappings varied between the two languages. Literacy acquisition in this laboratory model thereby enabled us to assess the behavioral consequences of an emphasis on print-to-sound versus print-to-meaning relationships for reading aloud and comprehension of printed words. We also used brain imaging to assess the neural impact of the different training protocols in three different ways.

-

1

The existing literature suggests that learning print-to-sound and print-to-meaning mappings should engage the dorsal and ventral pathways of the reading network respectively. To determine whether this was indeed the case, we measured neural activity while participants learned print-to-sound mappings for the O–P focus language and print-to-meaning mappings for the O–S focus language. This was participants’ first exposure to the two artificial orthographies. Figure 2, row B provides further details about MRI Scan 1, which also included an English word and pseudoword reading task.

-

2

Following 2-weeks of intensive behavioral training, participants underwent a second scanning session (MRI Scan 2), in which they generated pronunciations (reading aloud) and meanings (reading comprehension) of trained items from both languages. Details of these tasks are provided in Figure 2, row E. This enabled us to examine whether the two training regimes (O–P vs. O–S focus) differentially impacted activity in the dorsal and ventral pathways of the reading network during both reading aloud and reading comprehension. We anticipated that training focused on print-to-sound, rather than print-to-meaning, mappings should increase the efficiency of the phonologically mediated pathway. Thus, by the end of training, activity in brain regions along the dorsal pathway (e.g., inferior parietal sulcus, inferior frontal gyrus) should be reduced during reading aloud, reflecting less effortful processing for the print-to-sound than the print-to-meaning focused language. In the context of this artificial language, we can also address whether the converse is true—that is, whether training focused on print-to-meaning mappings increases the efficiency of the direct pathway. If so, then by the end of training, we would expect activity in brain regions along the ventral pathway (e.g., anterior fusiform gyrus) to be reduced during reading comprehension, indicating less effortful processing for the print-to-meaning than the print-to-sound focused language. Such an outcome could indicate that there are positive neural consequences of “balanced literacy” reading instruction programs that emphasize print-to-meaning relationships.

-

3

In MRI Scan 2, participants also read aloud untrained items from both the artificial languages. We used these data to compare the magnitude and spatial distribution of neural activity when reading aloud trained and untrained items from the artificial languages to that seen for English word and pseudoword reading (English data collected in MRI Scan1). This enabled us to determine the extent to which neural activity when reading the trained orthographies resembled reading in natural language, and thus to assess the ecological validity of using artificial orthographies to test hypotheses about literacy acquisition.

Method

Participants

Twenty-four native English speaking adults (21 females) aged 18–30 participated in this study. None of the participants had any current, or history of, learning disabilities, hearing impairments, or uncorrected vision impairments. All participants were right-handed and were students or staff at Royal Holloway, University of London, United Kingdom. Participants were paid for their participation in the study.

Stimuli

Spoken pseudoword forms

Six sets of 24 monosyllabic consonant-vowel-consonant (CVC) pseudowords were constructed from 12 consonant (/b/, /d/, /f/, /g/, /k/, /m/, /n/, /p/, /s/, /t/, /v/, /z/) and eight vowel phonemes; four vowels occurred in three sets of pseudowords (language 1: /ε/, /ʌ/, /aɪ/, /əʊ/) and four occurred in the other three sets (language 2: /æ/, /ɒ/, /i/, /u/). The two languages were constructed using different vowel phonemes to minimize their confusability, because each participant learned one set of items from Language 1, and another set of items from Language 2. Within each set of pseudowords, consonants occurred twice in onset position, and twice in coda position, whereas vowels occurred six times each. Pseudowords were recorded by a female native English speaker and digitized at a sampling rate of 44.1 kHz. For each participant, one set of pseudowords from each language constituted the trained items, one set from each language was used as untrained items to test generalization at the end of training, and one set from each language was used as untrained items for old-new decision to test whole-item recognition at the end of training. The assignment of pseudoword sets to conditions (trained, generalization, old-new decision) was counterbalanced across participants, as detailed in Appendix B.

Artificial orthographies

Two sets of 20 unfamiliar alphabetic symbols were selected from two different archaic orthographies (Hungarian Runes, Georgian Mkhedruli). Each phoneme from the two languages was associated with one symbol from each orthography, for example, the phoneme /b/ was associated with one Hungarian symbol and one Georgian symbol. Thus, there was an entirely regular, or one-to-one correspondence between symbols and sounds in each orthography. Each participant learned to read a set of trained items from one language written in Hungarian Runes and a set of trained items from the other language written in Georgian Mkhedruli. The assignment of language to orthography was counterbalanced across participants, as detailed in Appendix B. Some examples of items written in the artificial orthographies are shown in Figure 1, and the full set of items learned by one participant is shown in Appendix A.

Word meanings

Two sets of 24 familiar objects, comprising six fruits and vegetables, six vehicles, six animals, and six tools, were assigned to the two sets of trained items. Each item had an associated picture and a single word name. The majority of these items were used in experiments by Tyler et al. (2003). The two sets were matched on familiarity using the MRC Psycholinguistic database (Coltheart, 1981). A photo of each item was selected from the Hemera Photo Objects 50,000 Premium Image Collection, or where this was not possible (n = 9) from the Internet. Trained items were assigned meanings arbitrarily, such that there was no systematic relationship between the sound or spelling of each item and its meaning. The assignment of noun set to language, orthography, and training focus, was counterbalanced across participants, as detailed in Appendix B. Example stimuli are shown in Figure 1.

English words and pseudowords

At the end of the first scanning session, participants read aloud 60 regular words and 60 irregular words, half of which were high and half of which were low in imageability, and 60 pseudowords. We used these data to establish the extent to which neural activity during reading of the artificial languages was similar to that seen during English word and pseudoword reading. Regularity was defined using the grapheme-to-phoneme rules implemented in the DRC model (Coltheart et al., 2001). The four sets of words were pairwise matched on length, phonetic class of onset, log frequency from the Zipf scale (van Heuven, Mandera, Keuleers, & Brysbaert, 2014) and orthographic neighborhood size from the English Lexicon Project (Balota et al., 2007). Words and pseudowords were matched on length, phonetic class of onset, and orthographic neighborhood size (details provided in Appendix C).

Procedure

Phonology-to-semantic pretraining (see Figure 2A)

Before beginning the orthography training, participants learned the association between the spoken forms of the 24 trained pseudowords from each language and their meanings. Items from the two languages were presented in separate alternating training runs, and participants completed three runs for each language. In each run there were eight alternating blocks of training and testing. In each of the four training blocks, participants listened to six pseudowords, each presented with a picture of a familiar noun alongside its written English name. Each training block was followed by two testing blocks: one in which participants saw pictures of the six nouns they had just learned and overtly produced the associated pseudowords (semantic-to-phonology), and another in which they heard the six pseudowords they had learned and had to say the English translation (phonology-to-semantic). For trained items that contained the vowels /ε/, /ʌ/, /aɪ/, /əʊ/ (Language 1), the mean proportion of items correctly recalled by the end of the third run was .69 (within-subject standard error (SE) = .03) for semantic-to-phonology mappings, and .84 (SE = .02) for phonology-to semantic mappings. For the trained items that contained the vowels /æ/, /ɒ/, /i/, /u/ (Language 2), semantic-to-phonology mapping accuracy by Run 3 was .80 (SE = .02) and phonology-to-semantic mapping accuracy was .88 (SE = .03). Note that, due to counterbalancing procedures detailed in Appendix B, these slight differences in performance for the two languages could not have impacted on subsequent performance for O–P versus O–S focused training.

Due to adjustments made to scheduling, phonology-to-semantic pretraining took place the day before the first scan started for 6 participants, but one week before the first scan for 18 participants. These latter participants also completed an additional run of phonology-to-semantic training the day before the first scan. Performance remained stable between the third run of the initial pretraining session and this additional run.2 Overall, pretraining provided participants with relatively good knowledge of the relationship between the phonological and semantic forms of the two languages.

First MRI scanning session (Figure 2B)

Within the first scanning session, participants learned print-to-sound mappings for the O–P focus language (reading aloud) and print-to-meaning mappings for the O–S focus language (saying the meanings). The assignment of orthography to print-to-sound or print-to-meaning learning was counterbalanced across participants. In a final scanning run they then completed an English word and pseudoword reading aloud task. Further details of the functional imaging acquisition procedures are provided later in the Methods.

Print-to-sound and print-to-meaning training

Participants completed two learning runs for the O–P focus language, which involved learning print-to-sound associations, and two for the O–S focus language, which involved learning print-to-meaning associations. All 24 trained items from the language were presented in a randomized order in each run. Run types alternated and half the participants started with O–P learning and half with O–S learning.

Each run was broken down into four training blocks in which participants learned six items; each training block was followed by a test block in which participants retrieved pronunciations or meanings for the six items. Training blocks comprised 12 trials presented in a randomized order. Six had concurrent visual and spoken form presentation (see-hear) and six had isolated spoken form presentation (hear-only). Contrasts between these two trial types enabled us to examine how activity differed when a trial afforded a learning opportunity (see-hear) relative to when it did not (hear-only). For O–P learning runs, spoken forms constituted the pronunciation of the written word in the new language, whereas for O–S learning runs, spoken forms constituted the English meaning of the written word. Each training trial was 3,500 ms in duration, with visual items presented for the first 2,500 ms and spoken forms commencing at the onset of the trial. Scan volume acquisition (2,000 ms) commenced 1,500 ms after the trial onset.

In testing blocks, participants retrieved the pronunciations or meanings learned in the preceding training block. Testing blocks comprised 12 see-think trials, presented in a randomized order, in which participants were presented with an item’s visual form on a white background and covertly retrieved its pronunciation or meaning. Half of the see-think trials were immediately followed by a see-speak trial, in which the same item was presented on a green background and participants overtly articulated its pronunciation or meaning having retrieved it in the preceding see-think trial. This split trial format allowed participants enough time to both retrieve the pronunciation (see-think) and articulate it (see-speak). Each testing trial was 3,500 ms in duration, with visual forms presented at the beginning of the trial and scanning acquisition (2,000 ms) commencing after 1,500 ms. Visual forms were presented for 2,500 ms on see-think trials and 1,500 ms on see-speak trials to encourage participants to generate spoken forms before the onset of scan volume acquisition. Stimuli were presented over high quality etymotic headphones, and responses were recorded using a dual-channel MRI microphone (FOMRI II, Optoacoustics) and scored offline for accuracy. Response times (RTs) were labeled manually through inspection of the speech waveform using CheckFiles (a variant of CheckVocal, Protopapas, 2007). The same recording and scoring procedures were used for all MRI tasks.

English word and pseudoword reading

The 180 words and pseudowords were presented in a randomized order and participants were instructed to read each item aloud as quickly and accurately as possible. Items were presented in the center of a white background, in black, 32-point Arial font for 1,500 ms, and each was followed by a 2,000 ms blank screen interstimulus interval. Scan volume acquisition (2,000 ms) commenced at the onset of the blank screen. This scanning run therefore used the same timing as for see-speak trials in test blocks from the O–P and O–S learning runs. Eighteen items were presented per block and each block was followed by a 10.5-s blank screen rest period. Responses were recorded using a dual-channel MRI microphone. Contrasts between words and pseudowords were used to inform interpretations of activity during reading aloud and saying the meanings of items at the end of training.

Behavioral training (Figure 2C)

Participants learned about the two novel languages for an average of 1.5 hr per day (tasks were self-paced), for eight consecutive days, with breaks for weekends. To maximize participant engagement and minimize boredom, on each day they engaged in six different tasks for each language, three that involved mapping between orthography and phonology (O–P tasks) and three that involved mapping between orthography and semantics (O–S tasks). The nature of the tasks that involved O–P and O–S learning was matched as closely as possible. We manipulated the focus of learning, such that it prioritized orthography-to-phonology mappings for one language (the one for which they learned print-to-sound mappings in the scanner) and orthography-to-semantic mappings for the other language (the one for which they learned print-to-meaning mappings in the scanner). This manipulation was instantiated as follows: For the O–P focus language, participants completed O–P tasks three consecutive times per day and O–S tasks only once per day. Conversely, for the O–S focus language, participants completed O–S tasks three consecutive times per day and O–P tasks only once per day. Across the days, we varied the order in which the tasks were completed and whether each task was first completed for the O–P or the O–S language. For tasks requiring spoken responses, these were recorded and manually coded offline for accuracy and RT. Accuracy and RTs were recorded by E-Prime for tasks that required keyboard or mouse responses.

O–P tasks

The following tasks emphasized learning of mappings between orthography and phonology.

Reading aloud

The orthographic forms of each of the 24 trained items were presented in a randomized order. Participants read them aloud, that is, said their pronunciation in the new language, and pressed spacebar to hear the correct answer. This task therefore emphasized mapping from orthography to phonology.

Spelling

Participants heard the phonological form of a trained item, while viewing all 16 symbols from the corresponding orthography, presented in a four-by-four array. They then clicked (using the mouse) on the three symbols that spelled that item in the correct order. After each trial, feedback was given indicating which symbols were correct and which were incorrect, and the correct spelling was also displayed alongside the participant’s spelling. This task therefore emphasized mapping from phonology to orthography.

Rhyme judgment

On each trial, the orthographic forms of three trained items were presented on the left, center, and right of the screen. Participants heard a pseudoword that rhymed with one of these items and were instructed to press 1 (left), 2 (center), or 3 (right), to indicate which item it rhymed with. They then received feedback as to which was the correct answer and also heard how that item was pronounced. This task required participants to understand the mappings between orthography and phonology but was somewhat easier than the other two O–P tasks due to its forced choice nature. Each trained item was presented once as a target, and the two trained item distractors were pseudorandomly selected such that at least one shared either the final consonant or the vowel phoneme, but neither shared both the vowel and final consonant. In order to ensure that responses did not become overlearned, five rhyming pseudowords were generated for each trained item. Most were generated using Wuggy (Keuleers & Brysbaert, 2010).

O–S tasks

The following tasks emphasized learning of mappings between orthography and semantics.

Saying the meaning

The orthographic forms of each of the 24 trained items were presented in a randomized order. Participants said their English meaning aloud and then pressed spacebar to hear the correct answer. This task therefore emphasized mapping from orthography to semantics.

Orthographic search

Participants saw the orthographic forms of all 24 trained items in a 6 × 4 array and, above this, a picture of one of the trained meanings. Participants used the mouse to click on the orthographic form that matched the meaning and were then given feedback as to which item they should have selected. This task therefore emphasized mapping from semantics to orthography.

Semantic categorization

Participants saw the orthographic forms of three trained items and had to press 1 (left), 2 (center), or 3 (right), to indicate which was from a different semantic category from the other two. Participants were told that there were four categories; animals, vegetables/fruit, vehicles, and tools. Each trained item target was presented once, but the distractor category and items from that category were randomly selected on each trial. Feedback was given to indicate the correct answer, alongside a picture of the meaning of that item. This forced choice task was chosen to be of the same nature as the rhyme judgment task, but emphasized the mapping between orthography and semantics rather than orthography and phonology.

Second MRI scanning session (Figure 2E)

Neural activity was measured while participants read aloud the 24 trained and 24 untrained items from each language, and said the meanings of the 24 trained items from each language. The reading aloud task was split across two runs as it comprised twice as many items as the saying the meaning task. Half the participants first completed two reading aloud runs and then one saying the meaning run, whereas half the participants had the reverse task order. Trained and untrained items were presented in a randomized order across the two reading aloud runs, and participants were informed that they would be asked to say the pronunciations of items they had learned as well as new items written in the same symbols. Similarly, trained items were presented in a randomized order in the saying the meaning run, and participants were instructed to say the English meaning of each item.

Forty-eight items (24 from each language) were presented in each run, and there were two see-think and one see-speak trials for each item. In see-think trials, participants were presented with an item’s artificial orthographic form and covertly retrieved its pronunciation or meaning. Half the see-think trials were immediately followed by a see-speak trial, in which the same item was presented on a green background and participants overtly articulated its pronunciation or meaning, having retrieved it in the preceding see-think trial. Runs were split into blocks of 18 trials (12 see-think and six see-speak), with alternating blocks for the two different languages, to avoid undue confusion between them.

All trials were 3,500 ms in duration, with visual items presented for the first 2,500 ms followed by a blank screen on see-think trials, and for the first 1,500 ms followed by a blank screen on see-speak trials. Scan volume acquisition (2,000 ms) commenced 1,500 ms after the onset of the visual form. A 10.5-s rest period during which a blank screen was presented followed each block. Further details of the functional imaging acquisition procedures are provided later in the Method section.

Behavioral testing (Figure 2D)

After four days of training (Day 6), and following the second MRI Scan (Day 12), participants completed several tests to assess learning. These included reading aloud and saying the meanings of trained items, which took exactly the same form as during training, except that only one run of each task was completed, and no feedback was given. Participants were additionally asked to read aloud untrained items, which proceeded in the same way as reading aloud trained items, but participants were informed that the items they were going to be reading would be unfamiliar words written in the same symbols as the words they had been learning. This tested their ability to generalize their knowledge about the symbol-to-sound mappings in each language. They also completed an old-new decision task for each language in which they saw the orthographic forms of 24 trained and 24 untrained items, in a randomized order, and were asked to press Z if they had learned the item, and M if they had not learned the item. This tested whether they recognized the whole-word forms of the trained items. For all of the test tasks, the two languages were presented in separate runs.

Functional Imaging Acquisition

Functional MRI data were acquired on a 3T Siemens Trio scanner (Siemens Medical Systems, Erlangen, Germany) with a 32-channel head coil. Blood oxygenation level-dependent functional MRI images were acquired with fat saturation, 3 mm isotropic voxels and an interslice gap of .75 mm, flip angle of 78 degrees, echo time [TE] = 30 ms, and a 64 × 64 data matrix. For all tasks, in both scanning sessions, we used a sparse imaging design with a repetition time (TR) of 3,500 ms but an acquisition time (TA) of 2,000 ms, which provided a 1,500-ms period in which to present spoken words and record spoken responses in the absence of echoplanar scanner (Edmister, Talavage, Ledden, & Weisskoff, 1999). The acquisition was transverse oblique, angled to avoid the eyes and to achieve whole-brain coverage including the cerebellum. In a few cases the very top of the parietal lobe was not covered. To assist in anatomical normalization we also acquired a T1-weighted structural volume using a magnetization prepared rapid acquisition gradient echo protocol (TR = 2,250 ms, TE = 2.99 ms, flip angle = 9 degrees, 1 mm slice thickness, 256 × 240 × 192 matrix, resolution = 1 mm isotropic).

Six dummy scans were added at the start of each run to allow for T1 equilibration, these scans are excluded from statistical analyses. In the first scanning session, 144 images were acquired in each of the 8.4 min O–P and O–S training runs, and 210 images were acquired in the 12.25 min English word and pseudoword reading run. In the second scanning session, 336 images were acquired across the two 9.8 min reading aloud testing runs, and 168 images were acquired in the 9.8 min say the meaning testing run.

Image processing and statistical analyses were performed using SPM8 software (Wellcome Trust Centre for Functional Neuroimaging, London, United Kingdom). Images for each participant were realigned to the first image in the series (Friston et al., 1995) and coregistered to the structural image (Ashburner & Friston, 1997). The transformation required to bring a participant’s structural T1 image into standard Montreal Neurological Institute (MNI) space was calculated using tissue probability maps (Ashburner & Friston, 2005) and these warping parameters were then applied to all functional images for that participant. Normalized functional images were resampled to 2 mm isotropic voxels. The data were spatially smoothed with an 8mm full-width half maximum isotropic Gaussian kernel prior to model estimation.

Data from each participant were entered into general linear models for event-related analysis (Josephs & Henson, 1999). In all models, events were convolved with the SPM8 canonical hemodynamic response function (HRF). Movement parameters estimated at the realignment stage of preprocessing were added as regressors of no interest. Low frequency drifts were removed with a high-pass filter (128 s) and AR1 correction for serial autocorrelation was made. Contrasts of parameter estimates were taken forward to second level group analyses (paired-sample t tests, repeated measures analysis of variance [ANOVA]) using participants as a random effect. All comparisons were assessed using a voxelwise uncorrected threshold of p < .001. After thresholding, only activations exceeding a cluster extent family wise error (FWE) corrected threshold of p < .05, obtained using the nonstationarity toolbox in SPM8 (Hayasaka, Phan, Liberzon, Worsley, & Nichols, 2004) were further considered for interpretation. Figures show results at this cluster extent corrected threshold, displayed on a canonical brain image. Cluster coordinates are reported in the space of the MNI152 average brain template and anatomical labels were generated by MRICron (Rorden, Karnath, & Bonilha, 2007) which uses the automated anatomical labeling (AAL) template (Tzourio-Mazoyer et al., 2002). Further details about each of these univariate analyses are provided in the Results section.

In addition to analyses of the magnitude of activation in different conditions, we also analyzed the spatial distribution of activation for particular contrasts. These analyses allowed us to quantify spatial similarity between activation maps for reading artificial and English items (e.g., comparing the contrast of trained vs. untrained artificial items with English words vs. pseudowords). For these analyses, similarity was quantified using voxelwise correlation of T-statistic values for relevant contrasts in single subjects. These methods are similar to those used in Haxby et al. (2001), and used custom Matlab code that was informed by methods developed by Kriegeskorte, Mur, and Bandettini (2008) and Nili et al. (2014). Correlation coefficients in single participants were computed over voxels selected to fall within gray-matter masks defined by thresholding tissue probability maps derived from the normalization stage of preprocessing. Pearson correlation coefficients derived from statistical maps in single participants were Fisher Z-transformed to conform to normality assumptions and then entered into one-sample t tests over participants to compare observed correlations to the null hypothesis.

Results

Performance During Training

Orthography–phonology and orthography–semantic training

In order to assess the impact of the learning focus manipulation, we conducted ANOVAs comparing accuracy and RTs for the two languages in the first session of each of the 8 training days, on all six training tasks. Tasks that emphasized mappings between orthography and phonology (O–P tasks) are shown in Figure 3 and tasks that emphasized mappings between orthography and semantics (O–S tasks) are shown in Figure 4. Missing data, for example due to computer error, or if RT data were missing because a participant gave no correct responses in a particular condition, were replaced with the mean for that day and language. In these and all subsequent analyses, where Mauchley’s test indicated that the assumption of sphericity was violated, degrees of freedom were adjusted using the Greenhouse-Geisser correction.

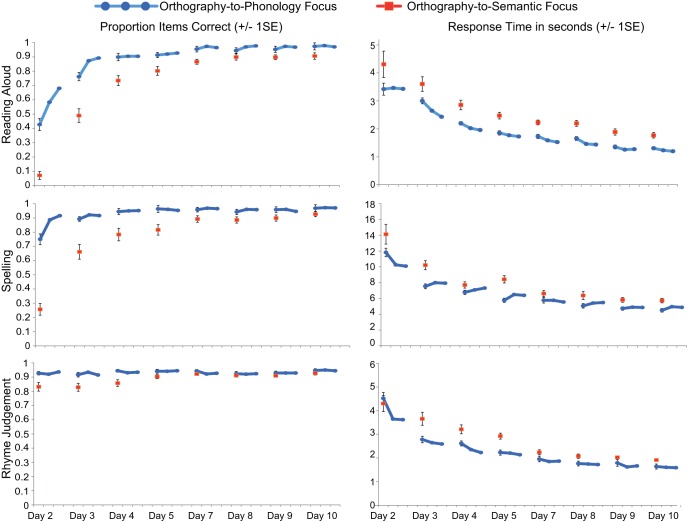

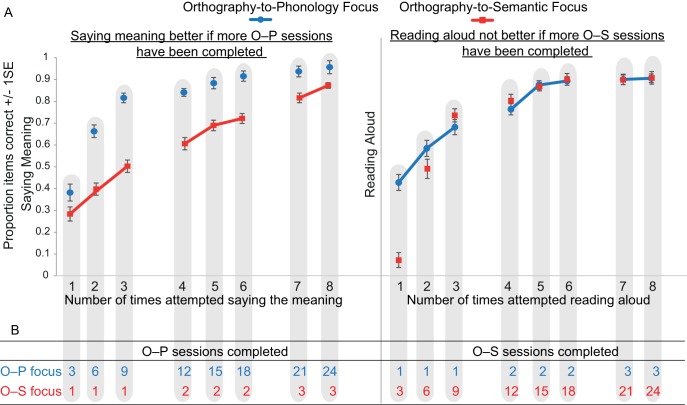

Figure 3.

Accuracy and RTs in tasks that involved mapping between orthography and phonology for the O–P and O–S focus languages on each day and each session of training. All error bars in this and subsequent figures use standard error appropriate for within-participant designs (Loftus & Masson, 1994). Error bars are shown only for the first session on each day because only these data points were statistically compared. See the online article for the color version of this figure.

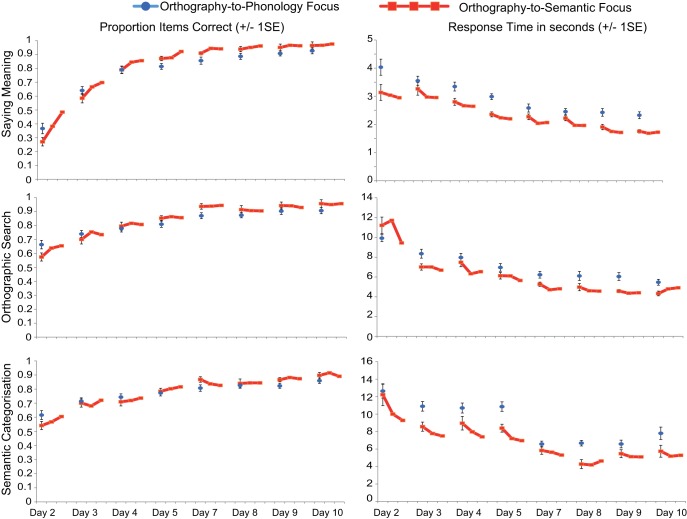

Figure 4.

Accuracy and RTs in tasks that involved mapping between orthography and semantics for the O–P and O–S focus languages on each day and each session of training. Error bars are shown only for the first session on each day because only these data points were statistically compared. See the online article for the color version of this figure.

The results of all ANOVAs are presented in Table 1. Performance (accuracy and RT) improved across the training days for all tasks. For the three O–P tasks (reading aloud, spelling, rhyme judgment), accuracy was higher and RTs were faster for the O–P than the O–S focus language, although for accuracy this effect reduced as performance reached ceiling toward the end of training. In contrast, for the three O–S tasks (saying meanings, orthographic search, semantic categorization), accuracy did not differ between the two languages, except on the first training day, when it was higher for the O–P focus language, and on the last training day, when it was higher for the O–S focus language. RTs for the O–S tasks were faster throughout training for the O–S focus language.

Table 1. Results of ANOVAs Assessing the Effect of Training Focus and Day on Accuracy and Response Times in the Six Training Tasks Across the 8 Days of Training.

| Task | Main effect focus | Main effect day | Interaction | |

|---|---|---|---|---|

| Accuracy in O–P tasks | Reading aloud | F(1, 23) = 30.81, p < .001, η2 = .57 | F(2.13, 48.96) = 145.66, p < .001, η2 = .86 | F(1.98, 45.58) = 11.07, p < .001, η2 = .33 |

| Spelling | F(1, 23) = 19.12, p < .001, η2 = .45 | F(2.83, 5.14) = 64.01, p < .001, η2 = .73 | F(2.97, 68.35) = 19.72, p < .001, η2 = .46 | |

| Rhyme judgement | F(1, 23) = 7.72, p = .01, η2 = .25 | F(1.93, 4.42) = 4.02, p < .05, η2 = .15 | F(2.79, 64.24) = 3.30, p < .05, η2 = .13 | |

| Accuracy in O–S tasks | Say meaning | F(1, 23) < 1, ns | F(2.71, 62.24) = 109.48, p < .05, η2 = .83 | F(3.27, 75.14) = 4.39, p < .01, η2 = .16 |

| Orthographic search | F(1, 23) < 1, ns | F(2.51, 57.68) = 26.08, p < .001, η2 = .53 | F(3.41, 78.41) = 3.30, p < .05, η2 = .13 | |

| Semantic categorization | F(1, 23) < 1, ns | F(3.16, 72.66) = 27.37, p < .001, η2 = .54 | F(3.91, 89.98) = 2.50, p = .05, η2 = .10 | |

| RT in O–P Tasks | Reading aloud | F(1, 23) = 32.72, p < .001, η2 = .59 | F(3.26, 75.05) = 99.51, p < .001, η2 = .81 | F(2.70, 62.08) < 1, ns |

| Spelling | F(1, 23) = 32.49, p < .001, η2 = .59 | F(2.10, 48.36) = 49.34, p < .001, η2 = .68 | F(2.77, 63.88) = 1.91, ns | |

| Rhyme judgement | F(1, 23) = 4.12, p = .05, η2 = .15 | F(2.87, 66.02) = 65.24, p < .001, η2 = .74 | F(2.23, 51.35) = 3.46, p < .05, η2 = .13 | |

| RT in O–S Tasks | Say meaning | F(1, 23) = 21.41, p < .001, η2 = .48 | F(2.64, 60.76) = 37.27, p < .001, η2 = .62 | F(3.39, 77.70) = 1.70, ns |

| Orthographic search | F(1, 23) = 12.26, p < .01, η2 = .35 | F(3.60, 82.83) = 29.44, p < .001, η2 = .56 | F(3.99, 91.86) = 2.34, p = .06 | |

| Semantic categorization | F(1, 23) = 15.61, p = .001, η2 = .40 | F(2.54, 58.46) = 20.92, p < .001, η2 = .48 | F(4.04, 92.82) = 1.72, ns |

Overall, training that focused on orthography-to-phonology mappings was more beneficial than training that focused on orthography-to-semantic mappings; accuracy was higher and responses were faster in tasks that required mapping between print and sound, and accuracy was equivalent in tasks that required mapping between print and meaning. The only benefit from training that focused on orthography-to-semantic mappings was increased speed in mapping between print and meaning.

Transfer between orthography–phonology and orthography–semantic training

The previous analyses established that tasks that required print-to-sound mapping were more accurate when training focused on O–P than O–S mappings. In contrast, accuracy was equivalent on tasks that required print-to-meaning mapping whether training primarily focused on O–P or O–S mappings. This suggests that knowledge of O–P mappings transferred and benefited access to item meanings as well as item sounds. To provide further evidence for this observation, we compared learning trajectories in saying the meanings for the two languages when the number of times participants had attempted this particular task was equated, but when the amount of orthography–phonology focused training they had completed was much greater for the O–P than the O–S focus language. We also compared learning trajectories for the two languages when the number of times participants had attempted reading aloud was equated, but when the amount of orthography–semantic focused training was much greater for the O–S than the O–P focus language. These comparisons are displayed in Figure 5.

Figure 5.

Replotting of data shown in the top left graphs of Figures 3 and 4 to illustrate how having completed relatively more O–P training sessions benefits saying the meanings (left panel), whereas having completed relatively more O–S training sessions does not benefit reading aloud (right panel). Graphs show the proportion of items correct in saying the meaning (left panel) and in reading aloud (right panel) the first eight times (after the initial scanning session) participants completed these tasks for the O–P and O–S focus languages. Joined up points indicate that sessions were completed on the same day, whereas separated points indicate that sessions were completed on different days. The information in the table that is connected to the graphs by gray lozenges shows how, when the number of times saying the meaning is equated, participants have received relatively more O–P training for the O–P than the O–S focus language (left panel). Conversely, when the number of times reading aloud is equated, participants have received relatively more O–S training for the O–S than the O–P focus language (right panel). See the online article for the color version of this figure.

In order to assess the impact of transfer quantitatively, we conducted ANOVAs on the proportion of items correct the first eight times (factor = session) participants said the meanings versus read aloud (factor = task) items from the O–P versus the O–S focus language (factor = training focus). This meant that for saying the meanings, sessions for the O–P focus language came from each of the 8 days of behavioral training, whereas sessions for the O–S focus language came from Days 2 to 4. In contrast, for reading aloud, sessions for the O–S focus language came from each of the eight days of training whereas sessions for the O–P focus language came from Days 2 to 4.3 As set out in Figure 5B, this provided us with imbalances in the type of training received, which enabled us to examine how additional O–P versus O–S focused training impacted on saying the meanings and reading aloud, respectively.

The ANOVA revealed that overall accuracy was higher for saying the meanings than reading aloud, F(1, 23) = 7.08, p = .01, η2 = .24, and for the O–P than the O–S focus language, F(1, 23) = 30.88, p < .001, η2 = .57. These effects were qualified by a significant interaction between task and training focus, F(1, 23) = 14.58, p = .001. η2 = .39. Participants were significantly more accurate at saying the meanings for the O–P focus than the O–S focus language, whereas accuracy in reading aloud was equivalent for the two languages. Planned comparisons demonstrated that the advantage of the O–P over the O–S focus language in saying the meanings was present in all sessions, but greater in magnitude in earlier sessions, and that there was no advantage for the O–S than the O–P language in reading aloud in any of the sessions. These analyses suggest that additional print-to-sound focused training boosted performance in saying the meanings, but that additional print-to-meaning focused training did not boost reading aloud.

Performance During Test Sessions

We assessed participants’ performance in a number of ways on Days 6 (middle of training) and 12 (end of training). However, because the Day 6 data represent just a snap-shot of the training data reported in Figures 3 and 4, they are provided in the supplementary materials only.

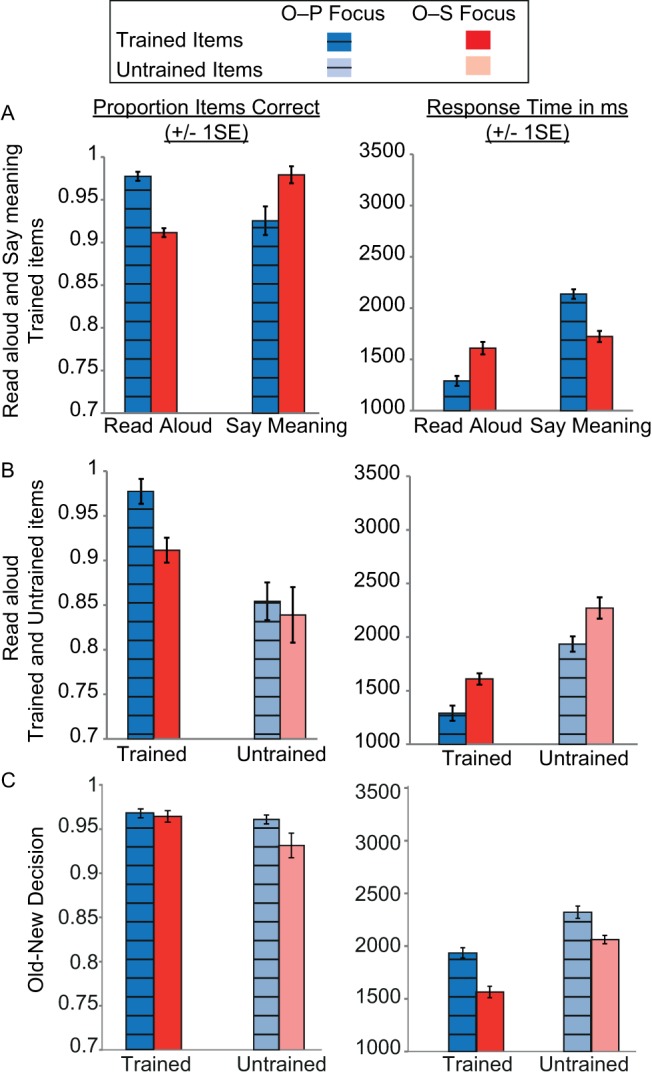

Reading aloud and saying meanings of trained items (Figure 6A, Table 2)

Figure 6.

Accuracy and RTs in test tasks conducted at the end of behavioral training. (A) reading aloud and saying the meanings of trained items, (B) reading aloud trained and untrained items, (C) old-new decision. See the online article for the color version of this figure.

Table 2. Results of Analyses Conducted at the End of Training That Assessed the Effect of Training Focus (O–P vs. O–S) on Accuracy and Response Times in Reading Aloud Versus Saying the Meanings of Trained Items, Reading Aloud Trained Versus Untrained Items, and Old-New Decisions to Trained Versus Untrained Items.

| Task | Main effect task | Main effect focus | Interaction |

|---|---|---|---|

| Reading aloud and saying the meaning of trained items | |||

| Accuracy | F(1, 23) < 1, ns | F(1, 23) < 1 ns | F(1, 23) = 7.35, p = .01, η2 = .24 |

| RT | F(1, 23) = 60.88, p < .001, η2 = .73 | F(1, 23) < 1, ns | F(1, 23) = 31.10, p < .001, η2 = .58 |

| Reading aloud trained and untrained items | |||

| Accuracy | F(1, 23) = 21.55, p < .001, η2 = .48 | F(1, 23) = 1.57, ns | F(1, 23) = 1.72, ns |

| RT | F(1, 23) = 57.31, p < .001, η2 = .71 | F(1, 23) = 9.61, p < .01, η2 = .30 | F(1, 23) < 1, ns |

| Old-new decisions to trained and untrained items | |||

| D-Prime | t(23) = 1.20, ns | ||

| RT | F(1, 23) = 66.23, p < .001, η2 = .74 | F(1, 23) = 17.30, p < .001, η2 = .43 | F(1, 23) = 1.90, ns |

By the end of training, participants were able to read aloud and say the meanings of more than 90% of the items in both languages. Response times were around 1,500 ms. ANOVAs on both accuracy and RTs with the factors task (read aloud vs. say meaning) and training focus (O–P vs. O–S focus language) obtained significant interactions between these two factors; reading aloud was faster and more accurate for the language that received O–P than O–S focused training, but saying the meaning was faster and more accurate for the language that received O–S than O–P focused training (see Table 1). Thus, by the end of training, performance for each language was best for the task that had received most training.

Generalization to untrained items (Figure 6B, Table 2)

The proportion of untrained items read aloud correctly from each language was around 0.8 with response times of 2,000 ms. An ANOVA on accuracy revealed an effect of training status (trained > untrained items), but no effect of language or interaction between training and language. For RTs, trained items were read aloud faster than untrained items and responses were faster for the O–P than the O–S language. There was no interaction between training status and language. The results confirm that untrained items were harder to read aloud than trained items. Furthermore, O–P focused training benefited reading aloud speed for both trained and untrained items relative to O–S focused training.

Old-new decision (Figure 6C, Table 2)

Discrimination between trained and untrained items was highly accurate; one-sample t tests on d-prime values indicated that performance was above chance for both the O–P focus language (d′ = 3.58) and the O–S focus language (d′ = 3.43) at the end of training. Paired t tests on d-prime values also indicated that discrimination accuracy did not differ for the two languages. An ANOVA showed that RTs were faster for trained than untrained items, and for the O–S than the O–P focus language. There was no interaction between training status and language.

Summary of test performance at the end of training

Overall, O–P focused training conferred benefits on reading aloud of trained and untrained items. In contrast, O–S focused training benefited saying item meanings, and also the speed with which participants could discriminate trained from untrained items.

Functional MRI Data Prior to Behavioral Training

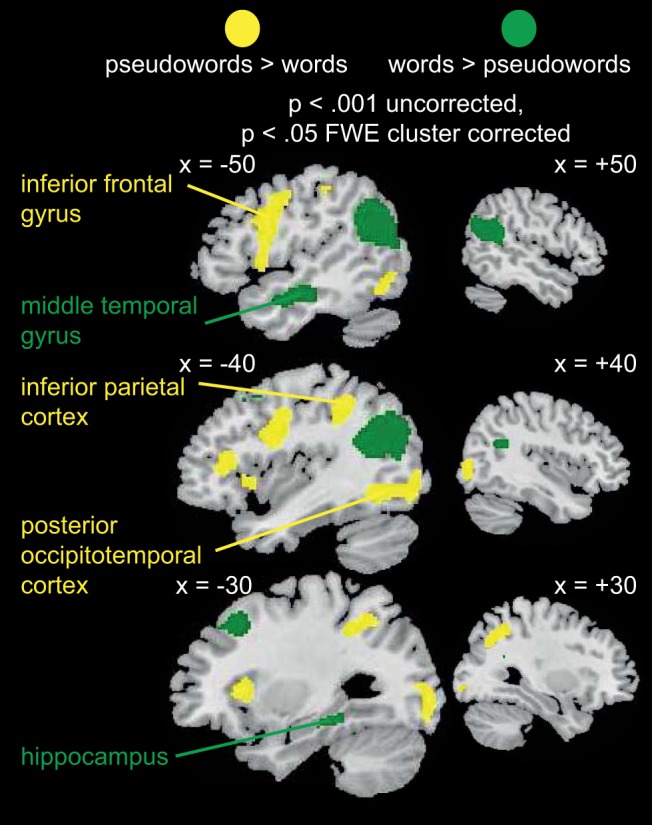

Reading aloud English words and pseudowords

Examining activity during English word and pseudoword reading allowed us to delineate the neural systems our participants used for reading aloud in their native language. In particular, we used the contrast pseudowords > words, because this should reveal dorsal stream brain regions involved in spelling-to-sound conversion and phonological output, and the contrast words > pseudowords, since this should reveal ventral stream brain regions involved in lexical and/or semantic processing (Taylor et al., 2013).

Analyses were conducted on data from 20 participants, for whom the mean proportion of pseudowords and words read correctly is reported in Appendix D. Also provided in Appendix D are details of which subjects were excluded from each of the fMRI analyses, along with exclusion criteria. Note that we later compare English word and pseudoword reading with reading aloud the artificial orthographies. Therefore, as detailed in Appendix D, three participants were excluded from the current analysis because they performed poorly or moved excessively when reading aloud artificial orthographies at the end of training. We modeled errors, correct words, and correct pseudowords, and conducted paired t tests on pseudowords > words, and words > pseudowords. As shown in Figure 7 and Appendix E, pseudowords activated left inferior frontal and precentral gyri and the insula, bilateral inferior and superior parietal cortices, bilateral occipitotemporal cortices, supplementary motor area, and the right insula more than words. In contrast, words activated bilateral angular and supramarginal gyri, left middle temporal gyrus, precuneus, left middle frontal gyrus, and left hippocampus more than pseudowords. These results are very similar to those from a recent meta-analysis of neuroimaging studies, confirming that the phonologically mediated dorsal pathway is more active for pseudoword than word reading (Taylor et al., 2013) whereas the direct ventral pathway is more active for word than pseudoword reading.

Figure 7.

Brain regions that were more active during English pseudoword than word reading (yellow), or word than pseudoword reading (green). Left and right hemisphere slices show whole-brain activations at p < .001 voxelwise uncorrected and p < .05 FWE cluster-corrected for 20 participants.

Learning the pronunciations and meanings of novel words in the artificial orthographies

Participants learned pronunciations for the 24 trained items from the O–P focus language and meanings for the 24 trained items from the O–S focus language, while neural activity was measured with fMRI. Analyses were conducted on 18 participants, for whom the proportion of items recalled correctly during scanning is reported in Appendix D.

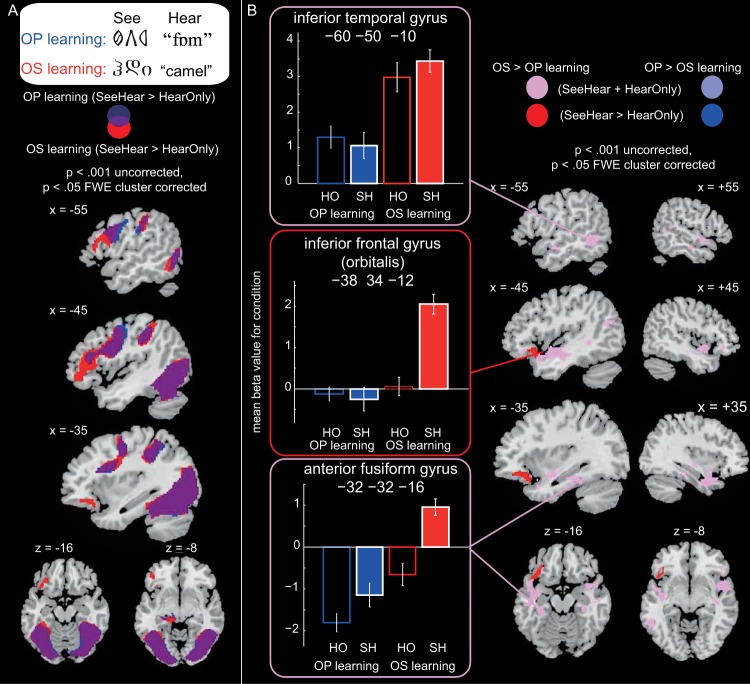

We modeled four event types in each O–P and O–S learning run: hear-only, see-hear, see-think, and see-speak. To examine the brain regions involved in learning pronunciations (O–P) and meanings (O–S) of novel words written in artificial orthographies, we conducted an ANOVA with two factors, learning type (O–P vs. O–S) and trial type (see-hear vs. hear-only), collapsed across the two runs. The same spoken forms were presented on see-hear and hear-only trials, but only see-hear trials afforded an associative learning opportunity. Therefore, the main effect of trial type, in particular the directional contrast (see-hear > hear-only), should reveal brain regions involved in learning the artificial orthographies. The main effect of learning type will reveal differences in activity between O–P and O–S learning. However, because this may have partly been driven by differences in listening to meaningful versus nonmeaningful spoken words, we then looked for an interaction between learning and trial type. In particular, for differences between O–P and O–S learning that were greater for see-hear than hear-only trials. Results are shown in Figure 8 and peak coordinates are reported in Appendix F.

Figure 8.

Brain regions active when learning pronunciations (OP learning) and meanings (OS learning) of artificial orthographies in MRI Scan 1, prior to behavioral training. Left and right hemisphere slices show whole-brain activations at p < .001 voxelwise uncorrected and p < .05 FWE cluster-corrected for 18 participants. Panel A shows the simple effect of see-hear > hear-only activity for OP (blue) and OS (red) learning, and the overlap between these (purple). Panel B shows the results of an ANOVA that included the factors learning type (OP vs. OS) and trial type (see-hear vs. hear-only). Slices show the main effect of learning type (pink: OS > OP, light blue: OP > OS) and the interaction between learning type and trial type that was driven by greater activation for see-hear relative to hear-only trials for OS than OP learning (red). Note that no brain regions showed more activation for OP than OS learning. Plots show activation for hear-only (HO) and see-hear (SH) trials for OP and OS learning at representative peak voxels that showed a main effect of learning type (pink boxes) or an interaction between learning and trial type (red box).

The main effect of trial type revealed that there was greater activation for see-hear than hear-only trials in bilateral occipitotemporal cortices, thalamus, and bilateral inferior frontal and precentral gyri. To provide additional information, rather than showing the main effect, Figure 8A shows the simple effect of see-hear > hear-only activity for O–P and O–S learning and the overlap between them. This analysis demonstrates that both dorsal and ventral processing streams of the reading network are involved in learning pronunciations and meanings of artificial orthographies. There was also a main effect of learning type (Figure 8B); activation was greater for O–S than O–P learning in left fusiform and parahippocampal gyri, left temporal pole and inferior frontal gyrus (orbitalis), right temporal pole and inferior frontal gyrus (orbitalis), bilateral superior and medial frontal gyri, left inferior and middle temporal gyri, and the cerebellum. O–P learning did not activate any brain regions more than O–S learning. Finally, an interaction between learning type and trial type was obtained in left inferior frontal gyrus (orbitalis). As can be seen in the plots in Figure 8B, this interaction was driven by greater see-hear relative to hear-only activation for O–S than O–P learning. The plots also show that, although a significant interaction was not obtained in left anterior fusiform, this region showed a very similar profile to left inferior frontal gyrus (orbitalis), with only the see-hear O–S learning trials showing positive activation relative to rest. These analyses demonstrate that learning the arbitrary (English) meanings of novel words written in an artificial orthography activates ventral stream regions of the reading network previously implicated in semantic processing (Binder, Desai, Graves, & Conant, 2009) including both left inferior frontal gyrus (orbitalis) and left anterior fusiform, more than is the case for learning the systematic pronunciations of novel words.

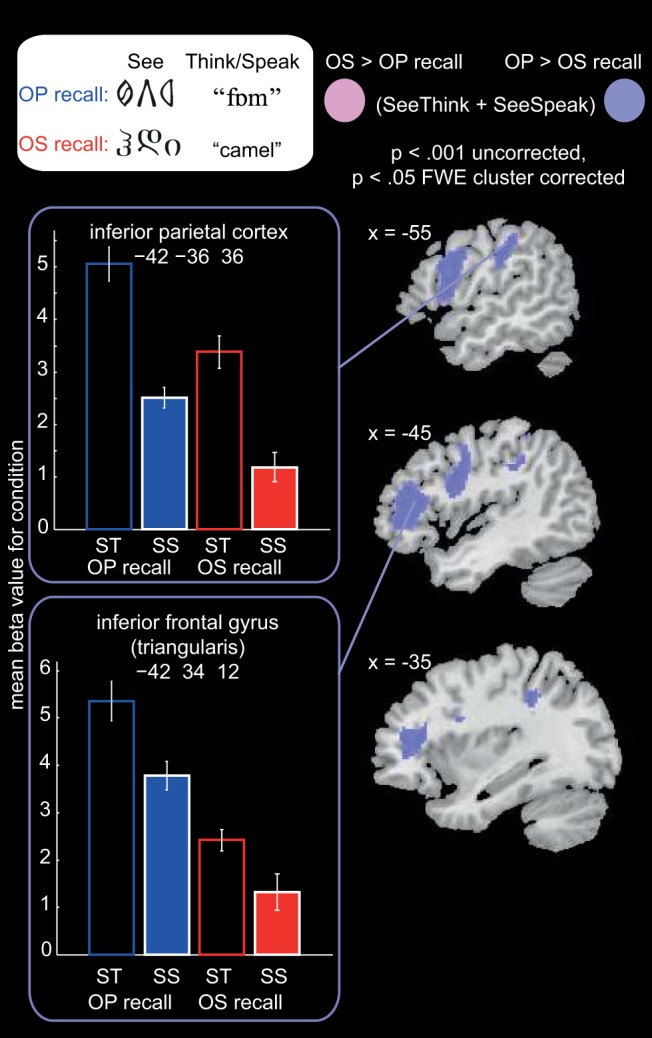

Next we examined differential activation during recall of pronunciations versus meanings. Two paired t tests, O–P > O–S, and O–S > O–P, were conducted, collapsed across the two runs, and collapsed across see-think and see-speak trials. See-speak trials always immediately succeeded see-think trials for the same item and so the demands on recalling (pronunciation or meaning) were greatest during see-think trials. However, both see-think and see-speak trials were included in this analysis because, at this early stage of learning, participants were likely to still be partially retrieving pronunciations during see-speak trials. This assertion is supported by the fact that mean response times for reading aloud and saying the meanings were between 3,000 ms and 4,500 ms on the first day of behavioral training, and the time between the onset of see-think and see-speak trials was 3500ms. Results are shown in Figure 9 and peak coordinates are reported in Appendix G. Activation was greater during pronunciation (O–P) than meaning (O–S) recall in left inferior frontal gyrus (triangularis and opercularis) and left precentral gyrus, cerebellum, and left inferior parietal cortex and postcentral gyrus. The reverse contrast, O–S > O–P recall, did not reveal any significant clusters. The plots in Figure 9 show activation when retrieving O–P versus O–S associations during both see-think and see-speak trials. These analyses suggest that recalling systematic pronunciations of novel orthographic forms activates dorsal stream regions of the reading network, including left inferior frontal, motor, and inferior parietal cortices, more than recalling arbitrary meanings of orthographic forms.

Figure 9.

Brain regions showing differential activity when recalling pronunciations (OP recall) versus meanings (OS recall) of artificial orthographies, in MRI Scan 1, prior to behavioral training. Left hemisphere slices show the results of paired t tests between these two tasks (pink: OS > OP, light blue: OP > OS), collapsed across see-think (ST) and see-speak (SS) trials. Note that no brain regions showed greater activity when recalling meanings than pronunciations. Whole-brain activations are presented at p < .001 voxelwise uncorrected and p < .05 FWE cluster-corrected for 18 participants. Plots show activation for see-think and see-speak trials for OP and OS recall at representative peak voxels that showed greater activity for recalling pronunciations (OP) than meanings (OS).

Functional MRI Data After the End of Training

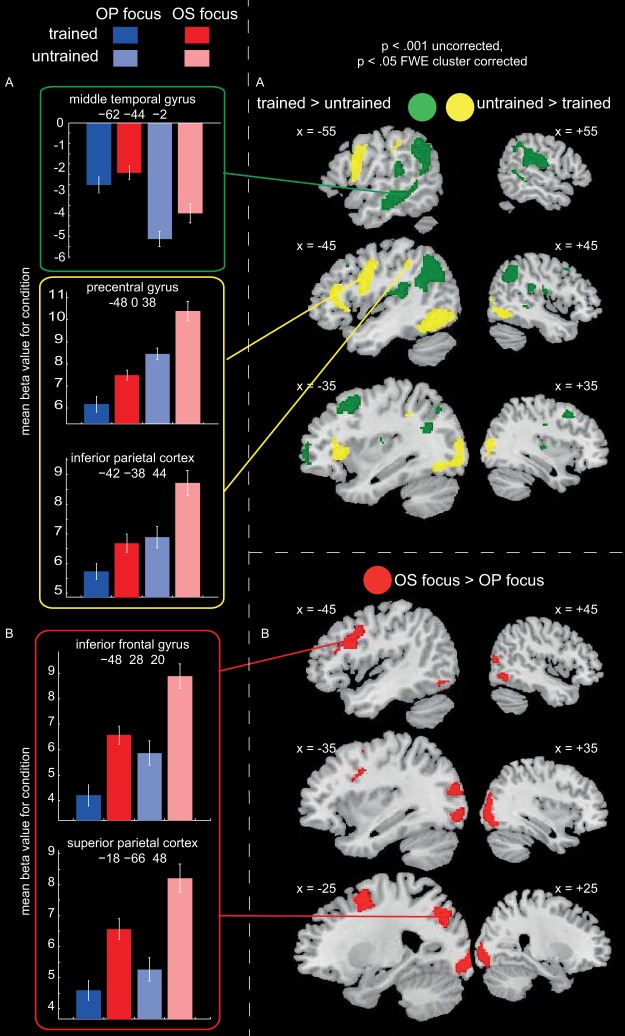

Reading aloud trained and untrained artificial orthography items

Participants read aloud the 24 trained items from the O–P and the O–S focus languages, as well as 24 untrained items from each language, while neural activity was measured with fMRI. Twenty participants were included in the analyses, for whom the proportion of trained and untrained items read aloud correctly in each language during scanning is reported in Appendix D.