Abstract

The dominant theoretical framework for decision making asserts that people make decisions by integrating noisy evidence to a threshold. It has recently been shown that in many ecologically realistic situations, decreasing the decision boundary maximizes the reward available from decisions. However, empirical support for decreasing boundaries in humans is scant. To investigate this problem, we used an ideal observer model to identify the conditions under which participants should change their decision boundaries with time to maximize reward rate. We conducted 6 expanded-judgment experiments that precisely matched the assumptions of this theoretical model. In this paradigm, participants could sample noisy, binary evidence presented sequentially. Blocks of trials were fixed in duration, and each trial was an independent reward opportunity. Participants therefore had to trade off speed (getting as many rewards as possible) against accuracy (sampling more evidence). Having access to the actual evidence samples experienced by participants enabled us to infer the slope of the decision boundary. We found that participants indeed modulated the slope of the decision boundary in the direction predicted by the ideal observer model, although we also observed systematic deviations from optimality. Participants using suboptimal boundaries do so in a robust manner, so that any error in their boundary setting is relatively inexpensive. The use of a normative model provides insight into what variable(s) human decision makers are trying to optimize. Furthermore, this normative model allowed us to choose diagnostic experiments and in doing so we present clear evidence for time-varying boundaries.

Keywords: decision making, decision threshold, decreasing bounds, optimal decisions, reward rate

In an early theory of decision making, Cartwright and Festinger (1943) modeled decision making as a struggle between fluctuating forces. At each instant, the decision maker drew a sample from the (Gaussian) distribution for each force and computed the difference between these samples. This difference was the resultant force and no decision was made while the opposing forces were balanced and the resultant force was zero. Cartwright and Festinger realized that if a decision was made as soon as there was the slightest imbalance in forces, there would be no advantage to making decisions more slowly. This was inconsistent with the observation that the speed of making decisions traded-off with their accuracy, a property of decision making that had already been recorded (Festinger, 1943; Garrett, 1922; Johnson, 1939) and has been repeatedly observed since (e.g., Bogacz, Wagenmakers, Forstmann, & Nieuwenhuis, 2010; Howell & Kreidler, 1963; Pachella, 1974; Wickelgren, 1977; Luce, 1986). Cartwright and Festinger addressed the speed–accuracy trade-off by introducing an internal restraining force—also normally distributed and in the opposite direction to the resultant force—which would prevent the decision maker from going off “half-cocked” (Cartwright & Festinger, 1943, p. 598). The decision maker drew samples from this restraining force and did not make a decision until the resultant force was larger than these samples. The restraining force was adaptable and could be adjusted on the basis of whether the decision maker wanted to emphasize speed or accuracy in the task.

In the ensuing decades, Cartwright and Festinger’s theory fell out of favor because several shortcomings (see Irwin, Smith, & Mayfield, 1956; Vickers, Nettelbeck, & Willson, 1972) and was superseded by the signal detection theory (Tanner & Swets, 1954) and sequential sampling models (LaBerge, 1962; Laming, 1968; Link & Heath, 1975; Ratcliff, 1978; Stone, 1960; Vickers, 1970). These models do not mention a restraining force explicitly, but this concept is implicit in a threshold, which must be crossed before the decision maker indicates their choice. Just as the restraining force could be adjusted based on the emphasis on speed or accuracy, these models proposed that the threshold could be lowered or raised to emphasize speed or accuracy. This adaptability of thresholds has been a key strength of these models, a feature that has been used to explain how distribution of response latencies changes when subjects are instructed to emphasize speed or accuracy in a decision (for a review, see Bogacz, Brown, Moehlis, Holmes, & Cohen, 2006; Ratcliff & Smith, 2004; Ratcliff, Smith, Brown, & McKoon, 2016).

Introducing a restraining force or a threshold to explain the speed–accuracy trade-off answers one question but raises another: How should a decision maker select the restraining force (threshold) for a decision-making problem? Should this restraining force remain constant during a decision? This problem was examined by Wald (1947) who proposed that, for an isolated decision, an optimal decision maker can distinguish between two hypotheses by choosing the desired ratio of Type I and Type II errors and then using a statistical procedure called the sequential-probability-ratio-test (SPRT). In the SPRT, the decision maker sequentially computes the ratio of the likelihoods of all observations given the hypotheses and the decision process terminates only once the ratio exceeds a threshold (corresponding to accepting the first hypothesis) or decreases below another threshold (corresponding to accepting the second hypothesis). The values of these thresholds do not change as more samples are accumulated and they determine the accuracy of decisions. Wald and Wolfowitz (1948) showed that the SPRT requires a smaller or equal number of observations, on average, than any other statistical procedure for a given accuracy of decisions.

The SPRT gives a statistically optimal procedure to set the threshold for an isolated decision. However, in many real-world decision problems—a bird foraging for food, a market trader deciding whether to keep or sell stocks, a professor going through a pile of job applications or, indeed, a psychology undergraduate doing an experiment for course credits—decisions are not made in isolation; rather, individuals have to make a sequence of decisions. How should one set the threshold in this situation? Is the optimal threshold still given by SPRT? If decision makers accrue a reward from each decision, an ecologically sensible goal for the decision maker may be to maximize the expected reward from these decisions, rather than to minimize the number of samples required to make a decision with a given accuracy (as SPRT does). And for sequences that involve a large number of decisions or sequence of decisions that do not have a clearly defined end point, it would make sense for the decision maker to maximize the reward rate (i.e., the expected amount of rewards per unit time). In fact, under certain assumptions, including the assumption that every decision in a sequence has the same difficulty, it can be shown that the two optimization criteria—SPRT and reward rate—result in the same threshold (Bogacz et al., 2006). That is, the decision maker can maximize reward rate by using the SPRT and maintaining an appropriately chosen threshold that remains constant within and across trials. Experimental data suggest that people do indeed adapt their speed and accuracy to improve their reward rate (Bogacz, Hu, Holmes, & Cohen, 2010; Simen et al., 2009). This adaptability seems to be larger for younger than older adults (Starns & Ratcliff, 2010, 2012) and seems to become stronger with practice (Balci et al., 2011) and guidance (Evans & Brown, 2016).

However, maintaining a fixed and time-invariant threshold across a sequence of trials cannot be the optimal solution in many ecologically realistic situations where the difficulty of decisions fluctuates from trial-to-trial. Consider, for example, a situation in which there is very little information or evidence in favor of the different decision alternatives. Accumulating little evidence to a fixed threshold might take a very long time. The decision maker risks being stuck in such an impoverished trial because they are unable to choose between two equally uninformative options, like Buridan’s donkey (see Lamport, 2012), who risks being starved because it is unable to choose between two equally palatable options. Cartwright and Festinger (1943) foresaw this problem and noted that “there is good reason to suppose that the longer the individual stays in the decision region, the weaker are the restraining forces against leaving it” (p. 600). So they speculated that the mean restraining force should be expressed as a decreasing function of time but they were not prepared to make specific assumptions as to the exact nature of this function.

In cases where the restraining force may change with time, the concept of a fixed threshold may be replaced by a time-dependent decision boundary between making more observations and choosing an alternative. A number of recent studies have mathematically computed the shape of decision boundaries that maximize reward rate when decisions in a sequence vary in difficulty and shown that the decision maker can maximize the reward rate by decreasing this decision boundary with time (Drugowitsch, Moreno-Bote, Churchland, Shadlen, & Pouget, 2012; Huang & Rao, 2013; Moran, 2015). It can also be shown that the shape of the boundary that maximizes reward rate depends on the mixture of decision difficulties. Indeed, on the basis of the difficulties of decisions in a sequence, optimal boundaries may decrease, remain constant or increase (Malhotra, Leslie, Ludwig, & Bogacz, 2017; also see subsequent text).1

The goal of this study was to test whether, and under what circumstances, humans vary their decision boundaries with time during a decision. More generally, we assessed the relationship between the bounds used by people and the optimal bounds—that is, the boundary that maximizes reward rate. Importantly, we adopt an experimental approach that is firmly rooted in a mathematical optimality analysis (Malhotra et al., 2017) and that allows us to infer the decision boundary relatively directly based on the sequences of evidence samples actually experienced by decision makers.

Previous evidence on whether people change decision boundaries at all during a trial, much less adapt it to be optimal, is inconclusive. Some evidence of time-dependent boundaries was found early on in studies that compared participant behavior with Wald’s optimal procedure. These studies used an expanded-judgment paradigm in which the participant makes their decision based on a sequence of discrete samples or observations presented at discrete times—for example, deciding between two deck of cards with different means based on cards sampled sequentially from the two decks (see, e.g., Becker, 1958; Busemeyer, 1985; Irwin et al., 1956; Manz, 1970; Pleskac & Busemeyer, 2010; Smith & Vickers, 1989; Vickers, 1995). The advantage of this paradigm is that the experimenter can record not only the response time and accuracy of the participant, but also the exact sequence of samples on which they base their decisions. In an expanded-judgment paradigm Pitz, Reinhold, and Geller (1969) found that participants made decisions at lower posterior odds when the number of samples increased. Similar results were reported by Sanders and Linden (1967) and Wallsten (1968). Curiously, participants seemed to be disregarding the optimal strategy in these studies, which was to keep decision boundaries constant. We discuss in the following text why this behavior may be ecologically rational when the participant has uncertainty about task parameters.

The shape of decision boundaries has been also analyzed in a number of experiments using a paradigm where the samples drawn by the participant are implicit, that is, hidden from the experimenter. In these paradigms, the data recorded is limited to the response time and accuracy, so one can distinguish between constant or variable decision boundaries only indirectly, by fitting the two models to the data and comparing them. These tasks generally involve detecting a signal in the presence of noise. Therefore, to distinguish these experiments from the expanded-judgment tasks, we call them signal detection tasks. Examples of this paradigm include lexical decisions (Wagenmakers, Ratcliff, Gomez, & McKoon, 2008), basic perceptual discrimination (e.g., brightness; Ratcliff & Rouder, 1998; Ludwig, Gilchrist, McSorley, & Baddeley, 2005), and numerosity judgments (Starns & Ratcliff, 2012). Pike (1968) analyzed data from a number of psychophysical discrimination studies and found that this data is best explained by the accumulator model (Audley & Pike, 1965) if subjects either vary decision bounds between trials or decrease bounds during a trial. Additional support for decreasing boundaries was found by Drugowitsch et al. (2012), who analyzed data collected by Palmer, Huk, and Shadlen (2005). Finally, data from nonhuman primates performing a random dot motion discrimination task (Roitman & Shadlen, 2002) were best fit by a diffusion model with decreasing boundaries (Ditterich, 2006).

In contrast to these studies that found evidence favoring decreasing boundaries, Hawkins, Forstmann, Wagenmakers, Ratcliff, and Brown (2015) analyzed data from experiments on human and nonhuman primates spanning a range of experiments using signal detection paradigms and found equivocal support for constant and decreasing boundaries. They found that overall evidence, especially in humans, favored constant boundaries and that, crucially, experimental procedures such as the extent of task practice seemed to play a role in which option was favored. Therefore, what seems to be missing is a more systematic analysis of the conditions under which people decrease the decision boundary within a trial and understanding why they would do so.

In this study, we took a different approach: Rather than inferring the decision boundaries indirectly by fitting different boundaries to explain RTs and error rates, we used the expanded-judgment paradigm, where the experimenter can observe the exact sequence of samples used by the participant and record the exact evidence and time used to make a decision. This evidence and time should lie on the boundary. This allowed us to make a more direct estimate of the decision boundary used by the participants and compare this boundary with the optimal boundary. We found that, in general, participants modulated their decision boundaries during a trial in a manner predicted by the maximization of reward rate. This effect was robust across paradigms and for decisions that play out over time scales that range from several hundreds of ms to several s. However, there were also systematic deviations from optimal behavior. Much like the expanded-judgment tasks discussed in preceding text, in a number of our experiments participants seemed to decrease their decision boundary even when it was optimal to keep them constant. We mapped these strategies on to the reward landscape, predicted by the theoretical model—that is, the variation in reward rate with different settings of the decision boundary. These analyses suggest that participants’ choice of decision boundary may be guided not only by maximizing the reward rate, but also by robustness considerations. That is, they appear to allow for some “error” in their boundary setting due to uncertainty in task parameters and deviate from optimality in a manner that reduces the impact of such error.

Although it has been argued that the results from an expanded-judgment task can be generalized to signal detection paradigms, where sampling is implicit (Edwards, 1965; Irwin & Smith, 1956; Sanders & Linden, 1967; Pleskac & Busemeyer, 2010; Vickers, Burt, Smith, & Brown, 1985), these tasks usually use a slow presentation rate and elicit longer response latencies than those expected for perceptual decisions. It is possible that attention and memory play a different role in decision making at this speed than at faster speeds at which perceptual decisions occur. To address this possibility, we adapted the expanded-judgment task to allow fast presentation rates and consequently elicit rapid decisions.

The rest of the article is split into five sections. First, we summarize the theoretical basis for the relationship between a boundary and reward rate. In the next three sections, we describe a series of expanded-judgment tasks, each of which compares the boundaries used by participants with the theoretically optimal boundaries. In the final section, we consider the implications of our findings as well as the potential mechanisms by which time-varying boundaries may be instantiated. Data from all experiments reported in this article is available online (https://osf.io/f3vhr/).

Optimal Shape of Decision Boundaries

We now outline how an expanded-judgment task can be mathematically modeled and how this model can be used to establish the relationship between the task’s parameters and decision boundaries that maximize reward rate. We summarize the key results from a theoretical study of Malhotra et al. (2017), provide intuition for them, and state predictions that we tested experimentally.

Consider an expanded-judgment task that consists of making a sequence of decisions, each of which yields a unit reward if the decision is correct. Each decision (or trial) consists of estimating the true state of the world on the basis of a sequence of noisy observations. We consider the simplest possible case in which the world can be in one of two different states, call these up or down, and each observation of the world can be one of two different outcomes. Each outcome provides a fixed amount of evidence, δX, to the decision maker about the true state of the world:

| 1 |

where u is the up-probability that governs how quickly evidence is accumulated and depends on the true state of the world. We assume throughout that u ≥ 0.5 when the true state is up and u ≤ 0.5 when the true state is down. Note that the parameter u will determine the difficulty of a decision—when u is close to 0.5 the decision will be hard while when u is close to 0 or 1 the decision will be easy.

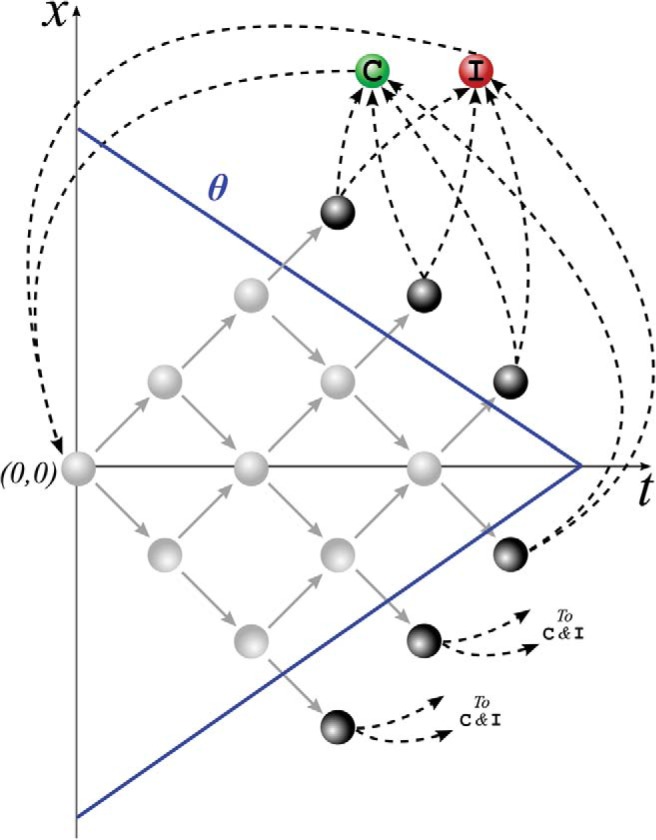

Figure 1 illustrates the process of making a decision in this expanded-judgment task. As assumed in sequential sampling models, decision making involves the accumulation of the probabilistic evidence, so let x be the cumulative evidence, that is, the sum of all δX outcomes. The accumulation continues till x crosses one of two boundaries θ corresponding to the options, so that the decision maker responds up when x > θ, and down when x < −θ. During the expanded-judgment task described above, the state of the decision maker, at any point of time, is defined by the pair (t, x), where t is the number of observations made. In any given state, the decision maker can take one of two actions: (a) make another observation—we call this action wait, or (b) signal their estimate of the true state of the world—we call this the action go. As shown in Figure 1, taking an action wait can lead to one of two transitions: the next observed outcome is +1; in this case, make a transition to state (t + 1, x + 1), or the next observed outcome is −1; in this case, make a transition to state (t + 1, x − 1). Similarly, taking the action go can also lead to one of two transitions: (a) The estimated state is the true state of the world; in this case, collect a reward and make a transition to the correct state, or (b) the estimated state is not the true state of the world; in this case, make a transition to the incorrect state. After making a transition to a correct or incorrect state, the decision maker starts a new decision, that is, returns to the state (t, x) = (0, 0) after an intertrial delay DC following a correct choice and DI following the incorrect choice.

Figure 1.

Evidence accumulation and decision making as a Markov decision process: States are shown by circles, transitions are shown by arrows, and actions are shown by color of the circles. The solid (blue) line labeled θ indicates a hypothetical decision boundary. The policy that corresponds to the boundary is indicated by the color of the states. Black circles indicate the action go, whereas gray circles indicate wait. Dashed lines with arrows indicate transitions on go, whereas solid lines with arrows indicate transitions on wait. The rewarded and unrewarded states are shown as C and I, respectively (for correct and incorrect). See the online article for the color version of this figure.

These set of state-action pairs and transitions between these states defines a Markov decision process (MDP) shown schematically in Figure 1. In this framework, any decision boundary that is a function of time, θ = f(t), can be mapped to a set of actions, such that action wait is selected for any state within the boundaries, and action go is selected for any state on or beyond the boundaries. The mapping that assigns actions to all possible states is called a policy for the MDP.

We assume that a decision maker wishes to maximize the reward rate (i.e., the expected number of rewards per unit time). The reward rate depends on the decision boundary: If the boundary is too low, the decision maker will make errors and miss possible rewards, but if it is too high, each decision will take a long period, and the number of reward per unit of time will also be low.

The policy that maximizes average reward can be obtained by using a dynamic programming procedure known as policy iteration (Howard, 1960; Puterman, 2005; Ross, 1983). Several recent studies have shown how dynamic programming can be applied to decision-making tasks to get a policy that maximizes reward rate (see, e.g., Drugowitsch et al., 2012; Huang & Rao, 2013; Malhotra et al., 2017). We now summarize how the optimal shape of decision boundary depends on task’s parameters on the basis of the analysis given in Malhotra et al. (2017). Let us first consider a class of tasks in which the difficulty of the decisions is fixed. That is, evidence can point either toward up or down, but the quality of the evidence remains fixed across decisions: , with ϵ corresponding to the drift. The drift can take values in range and it determines the difficulty of each trial with higher drift corresponding to easier trials. For single-difficulty tasks, ϵ remains fixed across trials.

In the single-difficulty tasks, reward rate can be optimized by choosing a policy such that the decision boundary remains constant during each decision. Intuitively, this is because the decision maker’s estimate of the probability that the world is in a particular state depends only on integrated evidence x, but not on time elapsed within the trial t. Therefore, the optimal action to take in each state only depends on x but not t, so go actions are only taken if x exceeds a particular value, leading to constant boundaries.

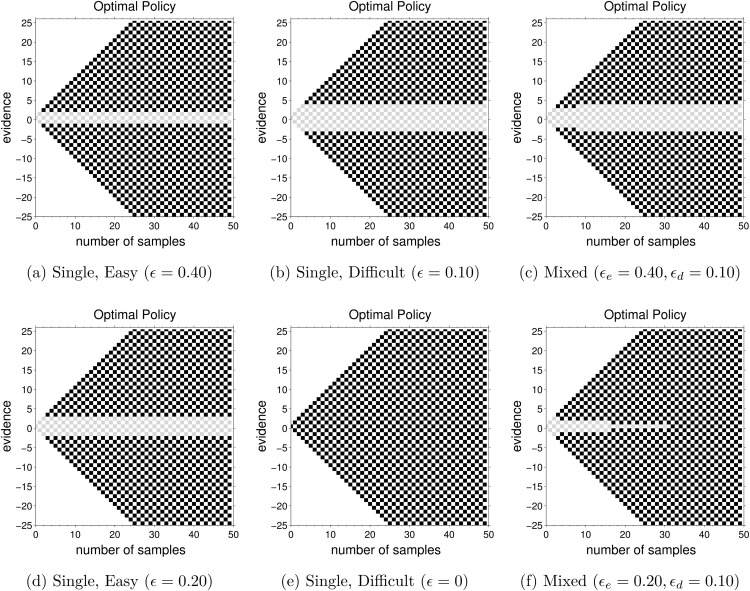

The optimal height of the decision boundary in the single-difficulty tasks depends on task difficulty in a nonmonotonic way. For very easy tasks (ϵ close to ), each outcome is a very reliable predictor of the state of the world, so very few outcomes need to be seen to obtain an accurate estimate of the state of the world (Figure 2a). As the difficulty increases, more outcomes are required, and the optimal boundary increases (compare Figures 2a and 2b). However, when the task becomes very difficult (ϵ close 0), there is little benefit in observing the stimulus at all, and for ϵ = 0 the optimal strategy is not to integrate evidence at all, but guess immediately, that is, θ = 0 (compare Figures 2d and 2e).

Figure 2.

Optimal policies for single and mixed-difficulty tasks. Black squares indicate states of the MDP where the optimal action is to go—that is, choose an alternative—whereas gray squares indicate states where the optimal action is to wait—that is, collect more evidence. In each row, the two panels on the left show optimal policies for single-difficulty tasks with two different levels of difficulty and the right-most panel shows optimal policy for mixed-difficulty task obtained by mixing the difficulties is the two left-hand panels. The intertrial intervals (ITIs) were DC = DI = 70 for the top row (Panels a through c) and DC = DI = 50 for the bottom row (Panels d through f).

Let us now consider a mixed-difficulty task, in which half of the trials are easy with drift ϵe and the other half of the trials are difficult with drift ϵd, where ϵe > ϵd. We assume that during mixed-difficulty tasks, the decision maker knows that there are two levels of difficulty (either through experience or instruction), but does not know if a particular trial is easy or difficult. Indeed, a key assumption of the underlying theory is that the difficulty level is something the decision maker has to infer during the accumulation of evidence.

In mixed-difficulty tasks, reward rate is optimized by using boundaries that may decrease, increase or remain constant based on the mixture of difficulties. Intuitively, this is because the decision maker’s estimate of the probability that the world is in a particular state, given the existing evidence, depends on their inference about the difficulty of the trial. Time becomes informative in mixed-difficulty tasks because it helps the decision maker infer whether a given trial is easy or difficult and hence the estimate of the true state of the world depends not only on the evidence, x, but also on the time, t. The optimal decision maker should begin each decision trial assuming the decision could be easy or difficult. Therefore, θ at the beginning of the trial should be in between the optimal boundaries for the two difficulties. As they make observations, they will update their estimate of the task difficulty. In particular, as time within a trial progresses, and the decision boundary has not been reached, the estimated probability of the trial being difficult increases and the decision boundary moves toward the optimal boundary for the difficult trials.

The above principle is illustrated in Figures 2c and 2f showing optimal boundaries for two sample mixed-difficulty tasks. Figure 2f shows the optimal boundary for a task in which half of the trials have moderate difficulty and half are very difficult (the optimal bounds for single-difficultly tasks with corresponding values of drift are shown in Figures 2d and 2e). As the time progresses the optimal decision maker infers that a trial is likely to be very difficult, so an optimal strategy involves moving on to the next trial (which may be easier), that is, decreasing the decision boundary with time in the trial.

In contrast, when the boundary for the difficult task is higher than the easy task (the difficult task is not extremely hard; Figures 2a and 2b), the optimal boundary in the mixed-difficulty task will again start at a value in-between the boundaries for the easy and difficult tasks and approach the boundary for the difficult task (Figure 2c). In this case, the boundary for the difficult task will be higher than the easy task meaning that the optimal boundary will increase with time.

In summary, the mathematical model makes three key predictions about the normative behavior: (a) optimal decision boundaries should stay constant if all decisions in a sequence are of the same difficulty, (b) it is optimal to decrease decision boundaries if decisions are of mixed difficulty and some decisions are extremely difficult (or impossible), and (c) it may be optimal to keep decision boundaries constant or even increase them in mixed-difficulty tasks where the difficult decision is not too difficult. In the next three sections, we compare human behavior with these normative results.

Experiment 1

To compare human behavior with the normative behavior described previously, we designed an experiment that involved an evidence-foraging game which parallels the expanded-judgment task described in the previous section. We modeled this evidence-foraging game on previous expanded-judgment tasks, such as Irwin et al. (1956) and Vickers, Burt, et al. (1985), where participants are shown a sequence of discrete observations and required to judge the distribution from which these observations were drawn. We modified these expanded-judgment paradigms so that (a) the observations could have only one of two values (i.e., drawn from the Bernoulli distribution), (b) the reward-structure of the task was based on performance, and (c) the task had an intrinsic speed–accuracy trade-off. We introduced a speed–accuracy trade-off by using a fixed-time blocks paradigm: the experiment was divided into a number of games, the total duration for each game was fixed, and participants could attempt as many decisions as they like during this period. Therefore, if a participant takes a very long time for each decision they are likely to be accurate, but will not be able to complete many decisions during a game. If a participant decides very quickly, they are likely to perform worse in terms of accuracy, but will have more reward “opportunities” during the game. The goal of the participants was to collect as much reward as possible during each game, so they need to find a balance between these two strategies.

In this expanded judgment task, we are able to record the exact sequence of stimuli presented to the participants and the position in state-space (t, x) at which participants made their decisions. Based on the location of these decisions, we inferred how the decision boundary for a participant depended on time. According to the above theory, the optimal decision boundary should be independent of time in single-difficultly tasks, but could vary with time during mixed-difficulty tasks. By comparing the inferred decision boundary with optimal boundaries in each type of task, we assessed whether participants adjusted their decision boundaries to maximize reward rate.

Method

Description of task

Twenty-four participants from the university community were asked to play a set of games on a computer. The number of participants was chosen to give a sample size that is comparable to previous human decision-making studies and kept constant during all of our experiments.2 Each game lasted a fixed duration and participants made a series of decisions during this time. Correct decisions led to a reward and participants were asked to maximize the cumulative reward. The game was programmed using Matlab® and Psychtoolbox (Brainard, 1997; Kleiner et al., 2007; Pelli, 1997) and was played using a computer keyboard. The study lasted approximately 50 min, including the instruction phase and training.

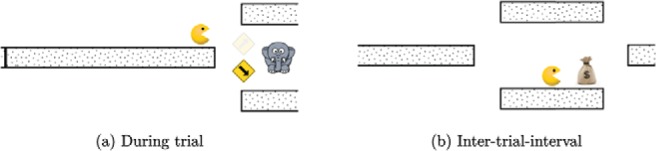

During each game, participants were shown an animated creature (Pacman) moving along a path (see Figure 3). A trial started with Pacman stationary at a fork in the path. At this point Pacman could jump either up or down and the participant made this choice using the up or down arrow keys on the keyboard. One of these paths contained a reward, but the participant could not see this before making the decision. Participants were shown a sequence of cues and they could wait and watch as many cues as they wanted before making their choice. The display also showed the total reward they accumulated in the experiment and a progress bar showing how much time was left in the current game.

Figure 3.

Two screenshots of the display during the experiment. The left panel shows the display during the evidence-accumulation phase of a trial. Participants chose whether Pacman goes up or down after seeing a sequence of cues (arrows) pointing up or down. The elephant next to the arrow indicates that this is an easy game, so the arrow points to the reward-holding path with probability 0.70. The right panel shows a screenshot during the intertrial interval (ITI). The participant has chosen the lower path and can now see that this was the correct (rewarded) decision. See the online article for the color version of this figure.

Once the participant indicated their choice, an animation showed Pacman moving along the chosen path. If this path was the rewarded one, a bag with a $ sign appeared along the path (right panel in Figure 3). When Pacman reached this bag, the reward was added to the total and Pacman navigated to the next fork and this started the next trial. If the participant chose the unrewarded path, the money bag appeared along the other path.

The intertrial interval (ITI) started as soon as the participant indicated their choice. We manipulated the ITI for correct and incorrect decisions by varying Pacman’s speed. Participants were told that Pacman received a “speed-boost” when it ate the money bag so that ITI for correct decisions was smaller than that for incorrect decisions. Values for all parameters used during the game are shown in Table 1.

Table 1. Values of Parameters Used During the Game.

| Parameter name | Value |

|---|---|

| Interstimulus interval (ISI) | 200 ms |

| Intertrial interval (ITI), correct (ISI × DC) | 3 s |

| ITI, incorrect (ISI × DI) | 10 s |

| Reward | (approx 2 cents) |

| Drift for easy condition (ϵe) | .20 |

| Drift for difficult condition (ϵd) | 0 |

| Block duration, training | 150 s |

| Block duration, testing, easy | 240 s |

| Block duration, testing, difficult | 300 s |

| Block duration, testing, mixed | 300 s |

Cue stimuli

When Pacman reached a fork, cues were displayed at a fixed rate, with a new cue every 200 ms. We call this delay the interstimulus interval (ISI). During these 200 ms, the cue was displayed for 66 ms, followed by 134 ms of no cue. Each cue was the outcome of a Bernoulli trial and consisted of either an upward or a downward pointing arrow. This arrow indicated the rewarded path with a particular probability.

Next to the cues, participants were shown a picture of either an elephant or a penguin. This animal indicated the type of game they were playing. One of the two animals provided cues with a probability 0.70 of being correct, while the other animal provided cues with a probability 0.50 of being correct. Thus, the two animals mapped to the two single-difficultly conditions—easy (with ϵ = 0.20) or difficult (with ϵ = 0)—shown in Figure 2d and 2e. The mapping between difficulties and animals was counterbalanced across participants.

We chose the values of up-probability so that the optimal decision boundaries in the mixed-difficulty case have the steepest slope, making it easier to detect if participants decrease decision boundaries. The theory in the previous section shows that decision boundaries decrease only when the difficult decisions are extremely difficult. In the experiment we set the up-probability for difficult condition to the extreme value of 0.5, that is, ϵd = 0; therefore, the cues do not give any information on the true state of the world. Using this value has two advantages: (a) it leads to optimal decision boundaries in mixed-difficulty games with the steepest decrease in slope, and (b) it makes it easier for participants to realize that the optimal boundary in the difficult condition is very low (in fact, the optimal strategy for difficult games is to guess immediately). Optimal boundaries should also decrease (although with a smaller slope) when decisions are marginally easier (e.g., ϵd = 0.03). But we found that participants frequently overweight evidence given by these low probability cues (perhaps analogous to the overweighting of small probabilities in other risky choice situations, e.g., Tversky & Kahneman, 1992; Gonzalez & Wu, 1999) and need a large amount of training to establish the optimal behavior in such extremely difficult (but not impossible) games. In contrast, when ϵd = 0, participants could learn the optimal strategy difficult games with a small amount of training.

The experiment consisted of three types of games: easy games, where only the animal giving 70% correct cues appeared at each fork; difficult games, where only the animal giving the 50% cues appeared at the fork; and mixed games, where the animal could change from one fork to the next. Participants were given these probabilities at the start of each game and also received training on each type of game (see Structure of Experiment section). Importantly, during mixed games participants were shown a picture of a wall instead of either animal and told that the animal was hidden behind this wall. That is, other than the cues themselves, they received no information indicating whether a particular trial during a mixed game was easy or difficult so that they had to infer the type of trial solely on the basis of these cues. This corresponds to the mixed-difficulty task shown in Figure 2f.

Reward structure

Participant reimbursement was broken down into three components. The first component was fixed and every participant received (approx $7.5) for taking part in the study. The second component was the money bags accumulated during the experiment. Each money bag was worth (approx 2 cents) and participants were told that they could accumulate up to (approx $6) during the experiment. The third component was a bonus prize of (approx $25) available to the participant who accumulated the highest reward during the study. Participants were not told how much other participants had won until after they took part in the study.

Structure of experiment

The experiment was divided into a training phase and a testing phase. Participants were given training on each type of game. The duration of each training game was 150 s. This phase allowed participants to familiarize themselves with the games and probability of cues as well as understand the speed–accuracy trade-off for each type of games. The reward accumulated during the training phase did not count toward their reimbursement.

The testing phase consisted of six games, two of each type. Participants were again reminded of the probabilities of cues at the start of each game. The order of these games was counterbalanced across participants so that each type of game was equally likely to occur in each position in the sequence of games. The duration of the easy games was 240 s, whereas the difficult and mixed games lasted 300 s each. The reason for different durations for different types of games was that we wanted to collect around the same amount of data for each condition. Pilot studies showed that participants generally have faster reaction time (RT) during the easy games (see following Results section). Therefore, we increased the length of the difficult and mixed blocks. By using these durations, participants made approximately 70 to 90 choices during both easy and mixed conditions. In the middle of each game, participants received a 35 s break.

Eliminating nondecision time

We preprocessed the recorded data to eliminate nondecision time—the delay between making a decision and executing a response. As a result of this nondecision time, the data contained irrelevant stimuli that were presented after the participant had made their decision. To eliminate these irrelevant stimuli, we estimated the nondecision time for each participant on the basis of their responses during the easy games. Appendix A illustrates the method in detail; the key points are summarized briefly in the following text.

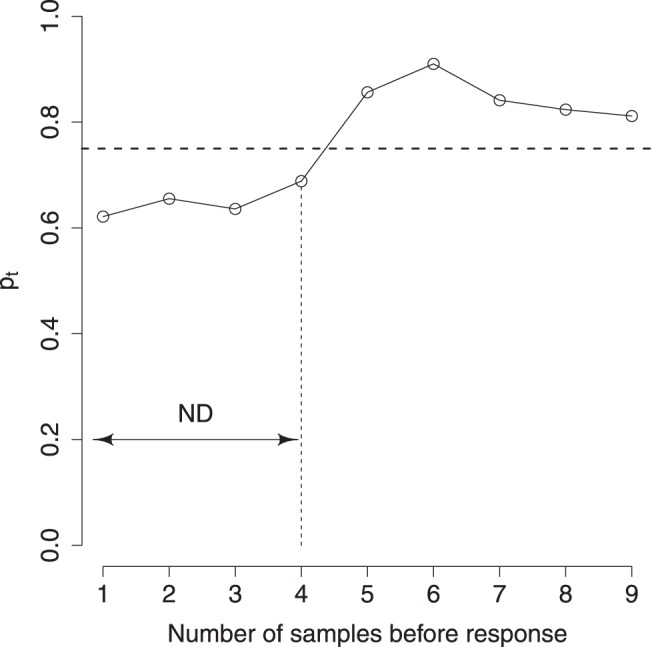

For each participant, we reversed the sequence of stimuli and aligned them on the response time. Let us call these ordered sequence (s1i, s2i, . . . , sTi), where i is the trial number and (1, 2, . . . , T) are the stimulus indices before the response. Each stimulus can be either up or down, that is, sti ∈ {up, down}. At each time step, we estimated the correlation (across trials) between the observing a stimulus in a particular direction and making a decision to go in that direction. That is, we computed pt at each stimulus index, t ∈ {1, 2, . . . , T}, as the fraction of trials where the response ri ∈ {up, down} is the same as sti. So, for each participant, the values (p1, p2, . . . , pT) serve as an estimate of the correlation between the stimulus at that index and the response.

If stimuli at a particular index, t, occurred after the decision, that is, during the nondecision time, we expected them to have a low correlation with response and consequently pt to be below the drift rate, 0.70. We determined the first index in the sequence with pt larger than 0.75; that is, the first index with more than 75% of stimuli in the same direction as the response. This gave us an estimate of the number of stimuli, ND, that fall in the nondecision period. We used this estimate to eliminate the stimuli, s1, . . . , sND from each recorded sequence for the participant. See Figure A1 in Appendix A.

Figure A1.

The plot shows the proportion of trials at each time step before the response (for a particular participant and condition) that are in the same direction as the response. The dashed horizontal line represents a threshold on this proportion used to compute the nondecision time. The dashed vertical line shows the last time-step where this proportion was below the threshold.

For 21 out of 24 participants, we estimated ND = 1, that is, a nondecision delay of approximately 200 ms. For two subjects the nondecision delay was two stimuli and for one participant no stimuli were excluded.

Exclusion of participants

To ensure that each participant understood the task, we conducted a binomial test on responses in the easy and mixed-difficulty games. This test checked whether the number of correct responses during a game were significantly different from chance. Two participants failed this test during mixed-difficulty games and were excluded from further analysis.

Analysis method

We now describe how we estimated the decision boundary underlying each participant’s decisions. In signal-detection paradigms, the experimenter cannot observe the exact sequence of samples based on which the participant made their decision. Therefore, parameters like boundary are obtained by fitting a sequential sampling or accumulator model to the RT and error distributions. In contrast, the expanded-judgment paradigm allows us to observe the entire sequence of samples used to make each decision. Therefore, our analysis method takes into account not only the evidence and time at which the decision (‘up’/‘down’) was made, but also the exact sequence of actions (wait–go) in response to the sequence of cues seen by the participant. It also takes into account the trial-to-trial variability in the behavior of participants: even when participants saw the exact same sequence of cues, they could vary their actions from one trial to next.

If a participant makes a decision as soon as evidence crosses the boundary, the value of time and evidence, (t, x) during each decision should lie along this boundary. Therefore, one way to recover this boundary is by simply fitting a curve through the values of (t, x) for all decisions in a block. However, note that participants show a trial-to-trial variability in their decision making. Sequential sampling models account for this trial-to-trial variability by assuming noisy integration of sensory signals as well as variability in either drift, starting point or in threshold (see Ratcliff, 1978; Ratcliff & Smith, 2004). We chose to model this variability by assuming there is stochasticity in each wait–go decision. That is, instead of waiting when evidence was below the boundary and going as soon as evidence crossed the boundary, we assumed that a participant’s decision depended on the outcome of a random variable, with the probability of the outcome depending on the accumulated evidence and time.

Specifically, we define two predictor variables—the evidence accumulated, X = x, and the time spent in the trial, T = t – and a binary response variable, A ∈ wait, go. The probability of an action can be related to the predictor variables using the following logistic regression model:

| 2 |

where βT and βX are the regression coefficients for time and evidence, respectively, and β0 is the intercept. Given the triplet (X, T, A) for each stimulus in each trial, we estimated for each type of game and each participant the and that maximized the likelihood of the observed triplets.

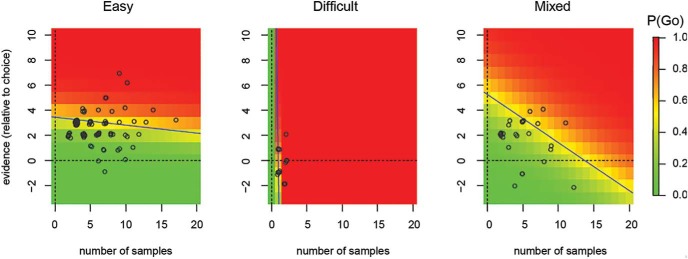

Figure 4 shows the results of applying the above analysis to one participant. The data are split according to condition – easy, difficult or mixed. Each circle shows the end of a random walk (sequence of stimuli) in the time-evidence plane. These random walks were used to determine the (maximum likelihood) regression coefficients, and , as outlined above. These estimated coefficients are then used (Equation 2) to determine the probability of going at each x and t, which is shown as the heat-map in Figure 4.

Figure 4.

The decisions made by a subject during Experiment 1 and the inferred boundaries based on these decisions. Each scatter-plot shows the values of evidence and time where the subject made decisions during a particular game (only easy trials considered during mixed game). These values have been slightly jittered for visualization. The heat-map shows the for each x and t inferred using logistic regression. The solid line shows a “line of indifference” where and serves as a proxy for the subject’s boundary (see Appendix B). See the online article for the color version of this figure.

This heat-map shows that, under the easy condition, this participant’s probability of going strongly depended on the evidence and weakly on the number of samples. In contrast, under the difficult condition, the participant’s probability of going depends almost exclusively on the number of samples—most of their decisions are made within a couple of samples and irrespective of the evidence. Under the mixed condition, the probability of going is a function of both evidence and number of samples.

Since we were interested in comparing the slopes of boundaries during easy and mixed conditions, we determined a line of indifference under each condition, where , that is, the participant was equally likely to choose actions wait and go. Substituting in Equation 2 gives the line:

| 3 |

with slope and intercept as . We used the slope of this line as an estimate for the slope of the boundary. Appendix B reports a set of simulations that tested the validity of this assumption and found that there is a systematic relationship between this inferred slope and the true slope generating decisions. Importantly, these simulations also demonstrate that even if the variability in data is due to noisy integration of sensory signals (rather than trial-to-trial variability in decision boundary), this inferential method still allows us to make valid comparisons of slopes of boundaries in easy and mixed games.

Each panel in Figure 4 also shows the line of indifference for the condition. The slope of the line of indifference is steepest under the difficult condition followed by the mixed condition and most flat for the easy condition. Note that for the mixed condition, we only considered the “easy” trials—that is, trials showing cues with correct probability = 0.70. This ensured that we made a like-for-like comparison between easy and mixed conditions.

A quantitative comparison of slopes between conditions can be made by taking the difference between slopes. However, a linear difference is inappropriate as large increasing slopes are qualitatively quite similar to large decreasing slopes—both indicate a temporal, rather than evidence-based boundary (e.g., the difficult condition in Figure 4). Therefore, we compared slopes in the mixed and easy conditions by converting these slopes from gradients to degrees and finding the circular difference between slopes:

| 4 |

where me and mm are the slopes in easy and mixed conditions, respectively; Δm is the difference in slopes and mod is the modulo operation. Equation 4 ensures that the difference between slopes is confined to the interval [−90, +90] degrees and large increasing slopes have a small difference to large decreasing slopes.

The above analysis assumes that evidence accumulated by a participant mirrors the evidence presented by the experimenter—so there is no loss of evidence during accumulation and the internal rate of evidence accumulation remains the same from one trial to next. In Appendix C we performed simulations to verify that inferences using the above analysis remain valid even when there is loss in information accumulated and when the drift rate varied from one trial to next.

Results

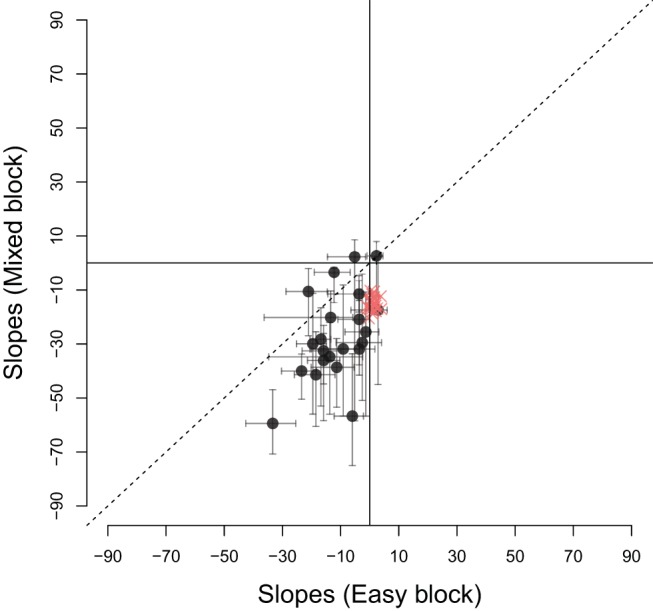

The mean RTs during easy, difficult and mixed games were 1444 ms (SEM = 23 ms), 1024 ms (SEM = 47ms) and 1412 ms (SEM = 22ms), respectively, where SEM is the within-subject standard error of the means. Note that ‘RT’ here refers to ‘decision time,’ that is, the raw response time minus the estimated nondecision time. As noted above, the nondecision time for most participants was approximately 200 ms. Figure 5 compares the slopes for the lines of indifference in the easy and mixed games (black circles). Error bars indicate the 0.95 percentile confidence interval.3 Like the participant shown in Figure 4 the estimated slope for most participants was more negative during the mixed games than during easy games, falling below the identity line. A paired t test on the difference in slopes in the two conditions (using Equation 4) confirmed that there was a significant difference in the slopes (t(21) = 5.24, p < 0.001, m = 15.94, d = 1.20), indicating that the type of game modulated how participants set their decision boundary.

Figure 5.

Each circle (black) compares the estimated slope in easy and mixed games for 1 participant. Circles below the dashed line were participants who had a larger gradient of the inferred boundary during the mixed games as compared to the easy games. Error bars indicate the 0.95 percentile bootstrapped confidence intervals for the estimated slopes. Crosses (red) show 24 simulated participants—decisions were simulated using a rise-to-threshold model with optimal boundaries shown in Figures 2d and 2f. See the online article for the color version of this figure.

Figure 5 also shows the relationship between the slopes of easy and mixed games for 24 simulated participants (red crosses) who optimize the reward rate. Each of these participants had slopes of boundary calculated using dynamic programming (Malhotra et al., 2017) and made decisions based on a noisy integration of evidence to this optimal boundary. The slopes in each condition were then inferred using the same procedure as for our real participants. These optimal participants, like the majority of participants in our study, had a larger (negative) slope in the mixed condition than the easy condition. However, in contrast to the optimal participants, the majority of participants also exhibited a negative slope during the easy games, indicating that they lowered their decision boundary with time during this condition. A t test confirmed that the slope during easy condition was less than zero (t(21) = −5.51, p < 0.001, m = −11.47). Participants also showed substantial variability in the decision boundary in the easy condition, with slopes varying between 0 and 45 degrees.

An alternative possibility is that participants change their decision boundary during the experiment, adopting a higher (but constant) boundary toward the beginning and lowering it to different (constant) boundary during the experiment. In order to check for this possibility, we split the data from each condition into two halves and checked whether the mean number of samples required to make a decision changed from the first half to second half of the experiment. During easy games, we found that participants observed 7.5 and 6.8 samples, on average, during the first and second half of the experiment, respectively. During mixed games, these mean observations changed to 6.8 and 6.2 samples, on average, during the first and second half of the experiment. A two-sided paired t test which examined whether the mean number of samples were different in the two halves of the experiment found no significant difference in either the easy games (t(21) = 1.76, p = 0.09, m = 0.73) or in the mixed games (t(21) = 1.40, p = 0.18, m = 0.64).4

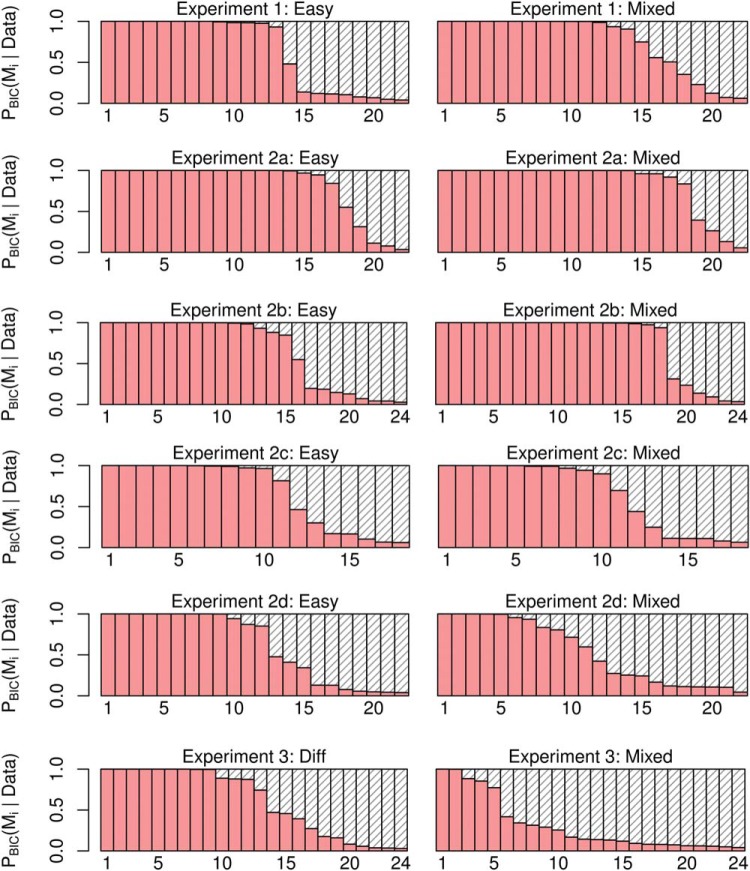

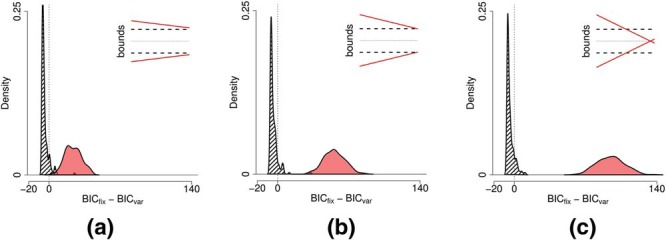

We checked the robustness of these results by performing a model comparison exercise, pitting a time-varying decision boundary against a fixed boundary model. The latter simply involves a logistic regression in which the decisions to wait or go were based on evidence only. The full details of this model comparison procedure and results are described in Appendix D. Based on a comparison of Bayesian Information Criteria (Schwarz, 1978; Wagenmakers, 2007), the time-varying model provided a better account of the behavior of 15 out of 22 participants in mixed-difficulty games. For 3 participants, the evidence was ambiguous and for the remaining 4 participants the simpler, fixed boundary model won. In easy games, the model using time as a predictor was better at accounting for data from 13 participants while the simpler model performed better to data for 8 participants.

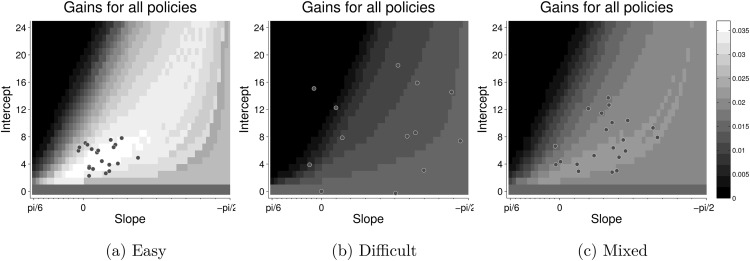

In order to understand why participants decrease the decision boundary in easy games and why different participants show a large variation in their choice of boundary, we computed the reward rate accrued by each participant’s choice of boundary and compared it to the reward rate for the optimal policy. This gave us the cost of setting any nonoptimal decision boundary. Figure 6 shows the landscape (heat-map) of the reward rate for each type of game for a host of different boundaries, defined by different combinations of intercepts and slopes. The circles indicate the intercepts and slopes of the inferred line of indifference of each participant.

Figure 6.

The reward rates for different decision boundaries. In each panel, the slope and intercept determine a linear boundary. The actions of all states below the boundary are set to wait and all states above are set to go. The heat-map in each panel shows the reward rate for each threshold. The circles show the inferred boundaries used by the participants in Experiment 1.

Notice, in particular, the landscape for the easy games. Even though the peak of this landscape lies at the policy with zero slope (flat bounds), there is a “ridge” of policies on the landscape where the reward rate is close to optimal. The policies chosen by most participants in Experiment 1 seem to lie along this ridge—even though participants do not necessarily choose the optimal policy, they seem to be choosing policies that are close to optimal. A similar pattern holds in the mixed games. In contrast, during difficult games, the average reward is low, irrespective of the policy. Correspondingly, there is a large variability in the policies chosen by participants. We examine the effect of reward landscape on the policies chosen by participants in more detail at the end of Experiment 2.

Experiment 2

Experiment 1 established that people modulate their decision boundary based on task difficulty and variations in the reward landscape. However, our experimental paradigm—effectively an expanded-judgment task—is clearly very different from the dominant, typically signal-detection paradigms used to test rise-to-threshold models and time-varying boundaries (e.g., Britten, Shadlen, Newsome, & Movshon, 1992; Palmer et al., 2005; Ludwig, 2009; Starns & Ratcliff, 2012). In our paradigm, response times in mixed games were generally between 1 and 2 s, whereas in the perceptual decision-making literature, RTs are typically between 0.5-1 s (Palmer et al., 2005). It is possible that at this speed participants do not, or cannot, modulate their decision boundaries and instead adopt suboptimal fixed thresholds.

Our aim in Experiment 2 then was to replicate and extend our findings to a more rapid task, where RTs were similar to a signal-detection paradigm. More generally, we tested the robustness and generality of the results from the expanded-judgment task of Experiment 1 by introducing different (i) stimulus materials, (ii) ISIs and (iii) ITIs. The variation in ISI was designed to induce more rapid decision making (with RTs typically < 1s). Since the optimal policies are computed on a relative time scale (based on a unit ISI), we can scale both the interstimulus and ITI without affecting the optimal policy, but reducing the RT. The variation in ITI (specifically: for correct decisions, DC) was introduced to manipulate the reward landscape, without affecting the optimal policy. Bogacz et al. (2006) have previously shown that the optimal policy is invariant to change in DC for single-difficultly games. Malhotra et al. (2017) showed that this result generalizes to the mixed-difficulty scenario: optimal policy for mixed-difficulty games depends only on the ITI for incorrect decisions, DI, but is independent of the ITI for correct decisions, DC, as long as DC < DI. If participants were optimizing the reward rate, they should not change their decision boundary with a change in DC. However, as we will see below, changing DC does affect the wider reward landscape around the optimal policy and we explored to what extent participants were sensitive to this change.

Permutations of varying these two parameters leads to four experiments, which we have labeled Experiments 2a–2d. The values of parameters for each experiment are shown in Table 2. Experiment 2a was a replication of Experiment 1 with exactly the same parameters, but using the new paradigm (described below). In Experiment 2b, we scaled the ISI and ITI to elicit rapid decisions but kept all other parameters the same as Experiment 2a. In Experiment 2c, we increased the inter-trial-interval for correct responses to match that for incorrect responses. All other parameters were kept same as Experiment 2a. Finally, in Experiment 2d, we scaled ISI and ITI to elicit rapid decisions and also matched ITIs for correct and incorrect decisions.

Table 2. Values of Parameters for Experiment 2.

| Parameter name | Value | |

|---|---|---|

| Note. The parameters that are common to all four subexperiments are listed at the top. Each of the four subexperiments has a different combination of interstimulus and intertrial intervals, the values of which are listed at the bottom. ISI = interstimulus interval. | ||

| Drift for easy condition (ϵe) | .22 | |

| Drift for difficult condition (ϵd) | 0 | |

| Reward | (approx 2 cents) | |

| DI = ISI×50 | ||

| ISI | DC = DI | |

| 200 msec | Experiment 2a | Experiment 2c |

| 50 msec | Experiment 2b | Experiment 2d |

Like Experiment 1, 24 healthy adults between the age of 18 and 35 from the university community participated in each of these experiments, with no overlapping participants between experiments.

Method

Decreasing the ISI increases two sources of noise in the experiment: (i) noise due to variation in attention to cues (i.e., there is a greater likelihood of participants “missing” samples when they are coming in faster; ii) noise due to visual interference between consecutive cues in the same location. The second source of noise is particularly challenging for our purposes. That is, the analysis presented here assumes that each evidence sample is processed independently. However, if we were to present a sequence of cues in rapid succession, it is clear that, due to the temporal response properties of the human visual system, successive cues could “blend in” with each other (Georgeson, 1987). As a result, we could not simply speed-up the presentation of the arrow cues in the Pacman task. We adapted the original task from Experiment 1 to another evidence-foraging game that retained the structure of the paradigm and that allowed for systematic variation of the various parameters of interest (i.e., interstimulus and ITIs).

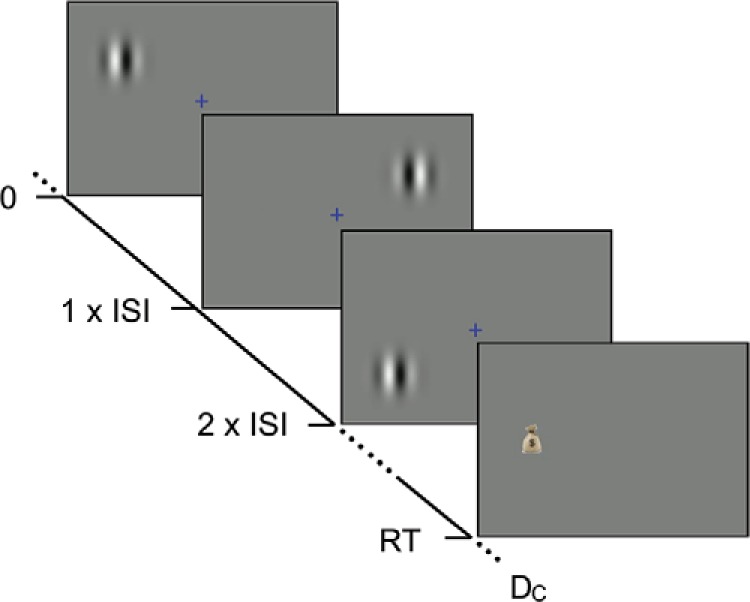

Participants were again asked to maximize their cumulative reward by making correct decisions in a game. But now, during each trial participants focused on a fixation cross in the middle of the screen with gray background5 and were told that a reward was either on the left or the right of the fixation cross. In order to make their choice, participants were shown cues that could appear either to the left or right of the fixation cross. In order to minimize interference (see below) cues could appear in two alternative locations on each side – ‘left-up’ or ‘left-down’ on the left and ‘right-up’ or ‘right-down’ on the right. A cue appeared on the same side as the reward with a given probability. Participants were given this probability at the beginning of the game. For single-difficultly games, they were told that this probability was the same for all trials within this game. For the mixed-difficulty games, they were told that a particular trial during the game could give cues with one of two different probabilities or and they were given these possible probabilities at the start of each game (i.e., block). Participants were again told that they could see as many cues as they wanted during a trial before making a decision, but the total duration of the game was fixed. Figure 7 shows an example trial in which the participant makes the decision to go left after observing a series of cues.

Figure 7.

An illustration of the paradigm for Experiments 2a through 2d. During each trial, participants chose left or right based on a sequence of cues. Each cue was a Gabor pattern displayed (for a fifth of interstimulus interval [ISI]) in one of four possible locations, equidistant from the fixation cross. If the decision was correct (as in this example), a money bag was displayed on the chosen side of the fixation cross and the participant waited for the duration DC before starting the next trial. If the decision was incorrect, no money bag was displayed and the participant waited for the duration DI before starting the next trial. RT = Reaction time. See the online article for the color version of this figure.

Each cue was a Gabor pattern (sinusoidal luminance grating modulated by a 2D Gaussian window). We designed these cue patterns to minimize interference between consecutive patterns. The integration period of early visual mechanisms depends strongly on the spatiotemporal parameters of the visual patterns. But for coarse (i.e., low spatial frequency) and transient patterns it should be less than 100 ms (Georgeson, 1987; Watson, Ahumada, & Farrell, 1986). To ensure the low spatial frequency we fixed the nominal spatial frequency of the Gabor to 0.4 cycles/deg (we did not precisely control the viewing distance, so the actual spatial frequency varied somewhat between participants) and the size of the Gaussian window to 1.2 deg (2D standard deviation). The patterns had a vertical orientation. In the “fast” experiments (Experiments 2b and 2d), each cue was displayed for 10 ms and the delay between onset of two consecutive cues (the ISI) was 50 ms. To ensure that consecutive cues were processed independently by the visual system we (i) alternated the location on one side of the screen (e.g., ‘left-up’ and ‘left-down’) so that the smallest ISI at any one retinal location was 90 ms and (ii) alternated the phase of the patterns (90° and 270°).

Participants indicated their choice by pressing the left or right arrow keys on a keyboard. When the decision was correct, a money bag appeared on the chosen side. During the ITI, an animation displayed this money bag moving toward the bottom of the screen. When the decision was incorrect, no money bag appeared. All money bags collected by the participant remained at the bottom of the screen, so participants could track the amount of reward they had gathered during the current game.

The structure of the experiment was the same as Experiment 1, with the experiment consisting of a set of games of fixed durations and given difficulties. Each game consisted of a sequence of trials where participants could win a small reward if they made the correct decision or no reward if they made an incorrect decision. Games were again of three different types: (i) Type I, corresponding to easy games from Experiment 1, (ii) Type II corresponding to difficult games and (iii) Type 3 corresponding to mixed games. The type of the game was indicated by the color of the fixation cross – Type I: Green, Type II: Red and Type 3: Blue. The order of games was counterbalanced across participants.

In all four experiments the up-probability for easy and difficult games was 0.50 ± 0.22 and 0.50 ± 0, respectively. During mixed games, easy and difficult trials were equally likely. The reward rate-optimal policy for easy games was again to maintain constant threshold (Figure 2d) while for difficult it was to guess immediately (Figure 2e). Similarly, the optimal policy for the mixed condition was to start with a high boundary (similar to the boundary at the start of easy games) and steadily decrease it, eventually making a decision at x = 0 (Figure 2f). Just like in Experiment 1, participants were given training on each type of game and the reward structure was divided into three components: (approx $8.50) for participating, (approx 2 cents) for each correct response and (approx $25) for the participant accumulating the largest number of money bags.

Results

We analyzed data using the same method as Experiment 1 after removing the nondecision time. Wait and go actions were used to determine the probability of going at all combinations of evidence and time, which were then used to determine a line of indifference, where the probability of wait matched the probability of go. We compared the slopes of this line of indifference for easy and mixed games for each of the four experiments.

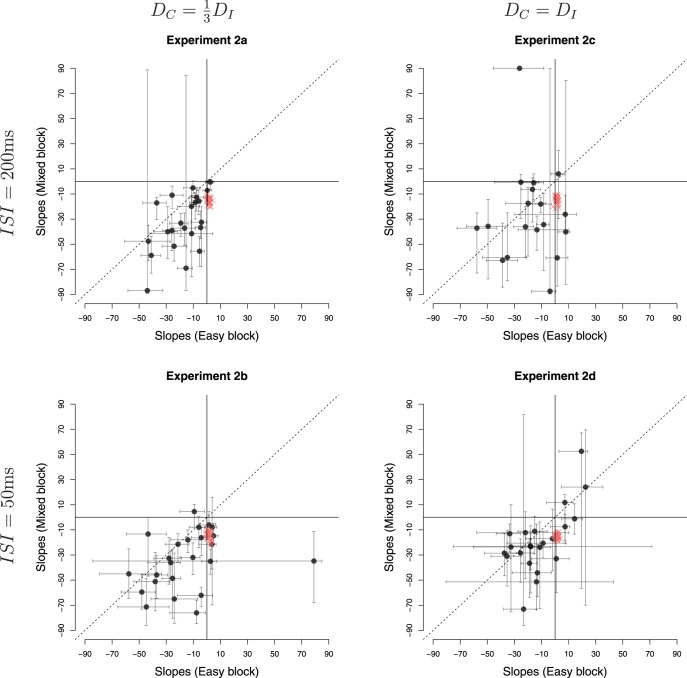

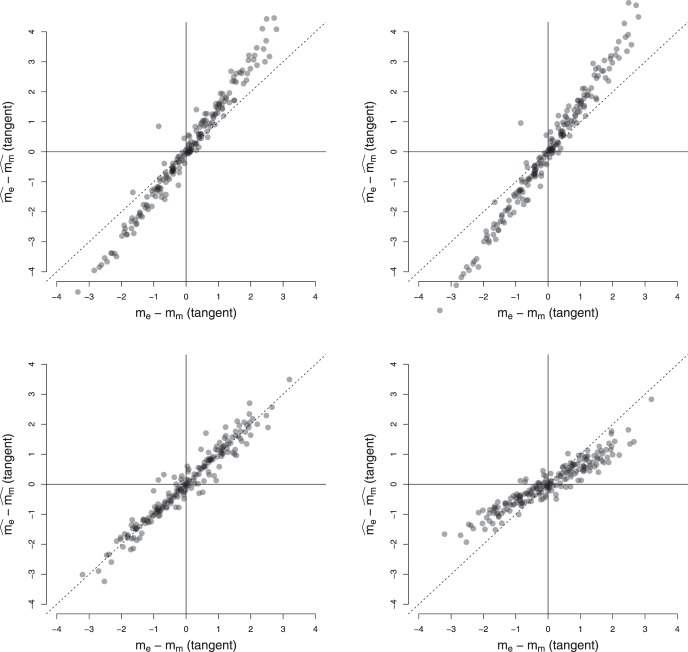

Experiment 2a

This experiment used the same parameters as Experiment 1, but replaced the Pacman game, with the evidence-foraging game described in Figure 7. Two participants failed the binomial test in the mixed games and were excluded from analysis. The mean RTs during easy, difficult and mixed games were 1155 ms (5.8 samples, SEM = 17 ms), 618 ms (3.1 samples, SEM = 27 ms) and 1142 ms (5.7 samples, SEM = 19 ms), respectively. We estimated the average number of stimuli that fell in the nondecision period (ND) to be 0.96, that is, a average nondecision delay of approximately 192 ms. For 21 out of the 22 participants, we estimated ND = 1 and for one participant no stimuli were excluded. Figure 8 (top-left panel) shows a comparison of the estimated slopes of lines of indifference in easy and mixed games. We observed that slopes were negative in both easy and mixed games for almost all participants and more negative during mixed games than easy games (t(21) = 3.92, p < 0.001, m = 15.76, d = 0.84). Again, circles show the slopes for estimated lines of indifference for each subjects. The 0.95 percentile confidence intervals on these slopes are obtained using the same bootstrap procedure described in Experiment 1.6 Note that the mean difference in slopes is virtually identical to Experiment 1, although the effect size (Cohen’s d) was larger during Experiment 1. Thus, Experiment 2a replicated the results of Experiment 1 showing that the findings were robust to different formulations of the evidence-foraging game. A model comparison exercise concurred with these results, showing that the majority of participants in mixed (N = 18) as well as easy games (N = 17) were better accounted by a logistic regression model using both evidence and time as a predictor than by a simpler model that used only evidence as the predictor (see Appendix D for details).

Figure 8.

Slopes (in degrees) of estimated lines of indifference in easy versus mixed games in four experiments. The dashed line shows the curve for equal slope in easy and mixed games. Each circle (black) shows the estimated slopes for one participant. Error bars show 0.95 percentile bootstrapped confidence intervals. Crosses (red) show the estimated slopes for 24 simulated participants—decisions were simulated using a rise-to-threshold model with boundaries given by the optimal policies computed as described by (Malhotra et al., 2017). See the online article for the color version of this figure.

Experiment 2b

In the next experiment, we decreased the ISI to 50 ms and scaled the ITIs accordingly. All other parameters were the same as Experiment 2a. All participants passed the binomial test in the easy and mixed games. The mean RT during easy, difficult and mixed games were 337 ms (6.7 samples, SEM = 4.2 ms) 418 ms (8.4 samples, SEM = 9.8 ms) and 419 ms (8.4 samples, SEM = 5.3 ms), respectively, showing that this paradigm successfully elicited subsecond RTs typically found in signal detection paradigms. We estimated the average number of stimuli that fell in the nondecision period to be 3.7, that is, an average nondecision delay of approximately 183 ms. We estimated ND = 4 for 15 participants, ND = 3 for six participants, ND = 5 for two participants and ND = 1 for one participant. The bottom-left panel of Figure 8 shows the estimated slopes in easy versus mixed games. Like Experiment 2a, the slopes were negative for most participants in both easy and mixed games. Similarly, we also observed that the slopes were more negative in the mixed games than in the easy games, although the result was a little weaker than in Experiment 2a (t(23) = 2.12, p = 0.044, m = 11.80, d = 0.47). There are three possible reasons for this weaker result. First, the distribution for difference in slopes is more diffuse due the outlier at the right of the plot. Excluding this participant gave a clearer difference in slopes (t(22) = 3.31, p = .003, m = 15.19, d = 0.72) that was numerically highly similar to the slope difference observed in Experiments 1 and 2a. Second, for reasons discussed below, our estimates of nondecision time are likely to be less accurate in the “faster” paradigm. In turn, this error introduces variability in the accuracy of the actual evidence paths on a trial-by-trial basis that were used to derive our slope estimates. Lastly, it is possible that the process decreasing the boundary needs time to estimate the drift and adjust the boundary accordingly. With shorter ISI, this process may have less time to affect the decision process before the response is made, resulting in smaller difference in slopes between conditions.

Experiment 2c

Next, we changed the ISI back to 200 ms (same as Experiment 2a) but increased DC, the ITI for correct decisions, to the same value as DI, the ITI for incorrect decisions (10s for both). Increasing the ITI decreased the reward per unit time and meant that participants had to wait longer between trials. Participants found this task difficult, we suspect because the ITI is so much longer than the typical RT. That is, participants spend most of their time waiting for a new trial, but then those trials are over rather quickly. Perhaps as a result, the games lacked in engagement and six out of 24 participants failed the binomial test in mixed games. For the 18 remaining participants, the mean RTs in easy, difficult and mixed games were 807 ms (4.0 samples, SEM = 27 ms), 858 ms (4.3 samples, SEM = 49 ms) and 954 ms (4.8 samples, SEM = 32 ms), respectively. We estimated the average number of stimuli that fell in the nondecision period to be 0.9, that is, an average nondecision delay of approximately 176 ms (ND = 2 for 21 participants and ND = 0 for the remaining three participants). The estimated slopes are shown in the top-right panel of Figure 8. We observed much greater variability in the estimated decision boundaries, though slopes were generally negative in mixed as well as easy games.7 The mean estimated slopes decreased more rapidly in mixed games as compared to easy games. However, given the large variability of responses and the number of participants that had to be excluded, this effect was comparatively weaker (t(17) = 2.41, p = .028, m = 17.97, d = 0.60). Nevertheless, the mean slope difference is very similar to that observed in all previous three experiments.

Experiment 2d

In this experiment we tested the final permutation of ISIs and ITIs—we decreased the ISI to 50 ms and matched the ITIs for correct and incorrect decisions (both 2.5s). Two participants failed the binomial test in mixed games and were excluded from further analysis. The mean RTs during easy, difficult and mixed games were 240 ms (4.8 samples, SEM = 4 ms), 308 ms (6.2 samples, SEM = 9 ms) and 294 ms (5.9 samples, SEM = 5 ms), respectively. We estimated the average number of stimuli that fell in the nondecision period to be 4.3, that is, an average nondecision delay of approximately 216 ms (ND = 4 for 18 participants, ND = 5 for five participants and ND = 7 for one participant). The bottom-right panel in Figure 8 compares the estimated slopes in easy and mixed games. The mean slope in either kind of game was negative (t(21) = −3.10, p = .005, m = −11.66 for easy games and t(21) = −3.42, p = .003, m = −18.82 for mixed games). However, in contrast to Experiment 2b, there was no significant difference in mean estimated slopes during easy and mixed games (t(21) = 1.71, p = 0.10, m = 7.15, d = 0.32).

Discussion

Experiments 2a-d revealed three key behavioral patterns: (i) participants generally decreased their decision boundaries with time, not only in the mixed games, but also in the easy games, (ii) this pattern held for the rapid task (Experiment 2b) but the variability of parameter estimates increased at faster RTs, (iii) decreasing the difference between DC and DI decreased the difference in slopes between easy and mixed games.

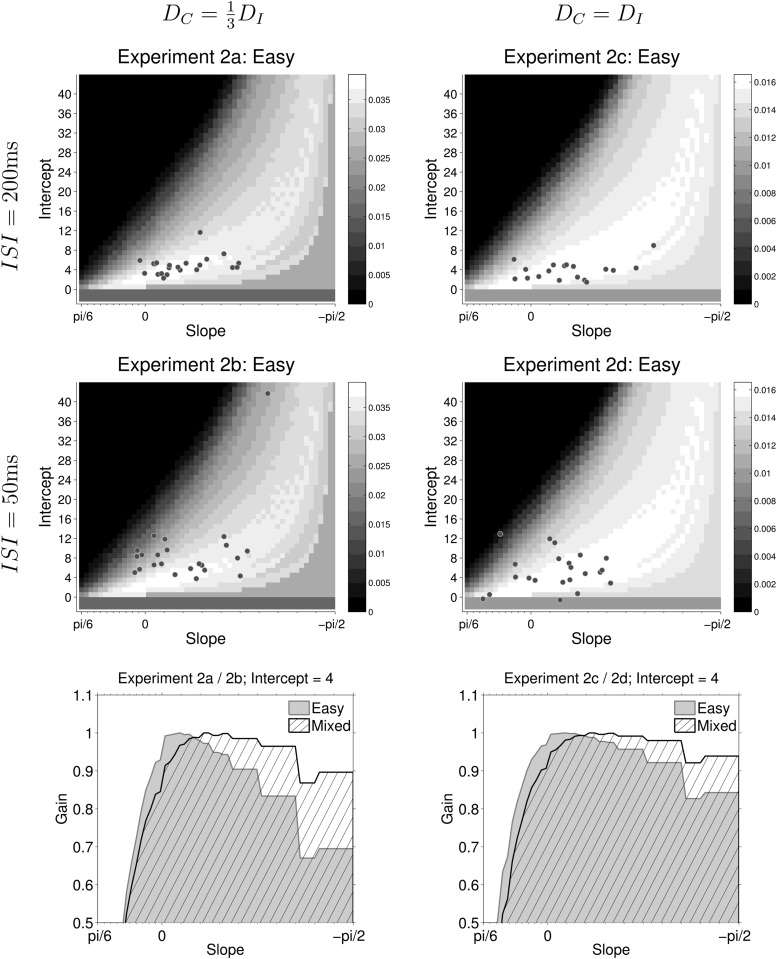

Clearly, it is not optimal to decrease the decision boundary during fixed difficulty (easy) games, but most participants seemed to do this. As noted in Experiment 1, a possible reason is that the reward rate for suboptimal policies is asymmetrical around the optimal boundary. Figure 9(a) shows the reward rate landscape for all possible decision boundaries during easy games in Experiment 2a, and maps the estimated boundaries for each participant onto this landscape.

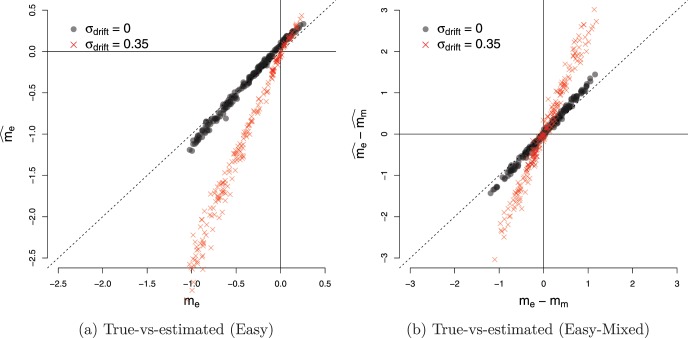

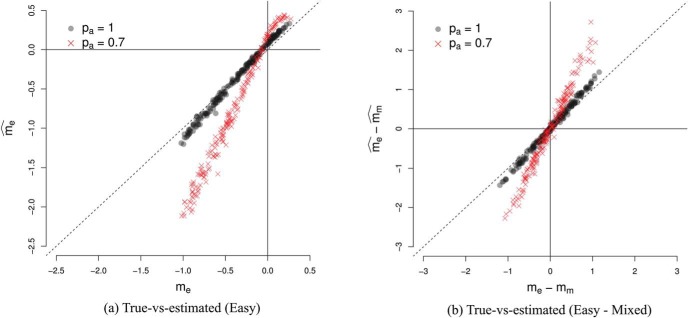

Figure 9.

Reward rate for Experiments 2a through 2d. Each heat-map in the first two rows shows the “landscape” of reward rate in policy space and dots show estimated policies adopted by participants in easy games. The bottom row show profiles sliced through the (normalized) reward-rate landscape at a particular intercept. Shaded regions show profiles for easy games, whereas hatched regions show profiles for mixed games.

Reward rate is maximum at (0, 3). When slope increases above zero the reward rate drops rapidly. In contrast, when slope decreases below zero, reward rate decreases gradually. This asymmetry means that participants pay a large penalty for a suboptimal boundary with a positive slope, but a small penalty for a suboptimal boundary with a negative slope. If participants are uncertain about the evidence gathered during a trial, or about the optimal policy, it is rational for them to decrease their decision boundary, as an error in estimation will lead to a relatively small penalty. Figure 9 suggests that most participants err on the side of caution and adopt policies with high (though not maximum) rewards and decreasing boundaries.

The shape of the reward landscape also sheds light on why participants behave differently when the ITI DC is changed, even though changing this parameter does not affect the optimal policy. The first column in Figure 9 shows the reward rate in experiments where , while the second column shows the reward rate in experiments where DC = DI. The top two rows show the reward-rate landscapes in easy games at all combinations of slopes and intercepts, while the bottom row compares the reward rate in easy and mixed games at a particular intercept of decision boundary but different values of slope (i.e., a horizontal slice through the heat-maps above). Even though the optimal policy in all four experiments is the same, there are several ways in which the reward-rate landscape in the left-hand column (Experiments 2a and 2b) differ from the landscape in the right-hand column (Experiments 2c and 2d).

First, the reward-rate landscape in easy games is more sharply peaked when (Experiments 2a and 2b). This is most clearly discernible in panels in the bottom row which shows the profile of the (normalized) reward-rate landscape at a particular intercept. If the participant adopts a boundary with large negative slope, the difference between the reward rate for such a policy and the optimal reward rate is larger when (left panel) than when DC = DI (right panel). So in Experiments 2a and 2b adopting a suboptimal policy carries a larger ‘regret’ than in Experiments 2c and 2d. This means that the reward landscape constrains the choice of boundaries more in Experiments 2a and 2b than it does in Experiments 2c and 2d, even though the optimal policy for all experiments is the same.

The panels in the bottom row also compare the reward-rate profiles during easy (shaded) and mixed (hatched) games at a particular intercept. It can be seen that for both types of experiments the normalized reward rate is larger in mixed games than easy games when slopes are more negative. Thus it is better (more rewarding) to have decreasing boundaries in mixed games than in easy games. However, the difference in easy and mixed games is larger when (left panel) than when DC = DI (right panel). Correspondingly, we found a more robust difference in slopes during Experiments 2a and 2b than we did in Experiments 2c and 2d.

The third behavioral pattern was an increase in variability of slopes when the decisions were made more rapidly. There are two possible sources of this variability: internal noise and error in estimation of the nondecision time. Recall that we excluded stimuli that arrive during the nondecision time based on a single estimate of this time for each participant. It is likely that the nondecision time varies from trial-to-trial; indeed, this is a common assumption in models of decision making (see Ratcliff & Smith, 2004). Any such variability means that on some trials we are including irrelevant samples (estimating a nondecision time too short) or excluding relevant samples (estimating a nondecision time too long). As a result, there is a discrepancy between the evidence paths that actually led to the participant’s decision and the one entered into the logistic regression model used to estimate the decision boundary. Importantly, this discrepancy will be much smaller in the experiments with a long ISI, because even an error in nondecision time of, say, 100 ms will at most introduce only one additional or excluded evidence sample. However, in the experiments with a much shorter ISIs, the same numerical error will result in several additional or missed evidence samples. Therefore, trial-to-trial variability in the nondecision times introduces more noise in the slope estimates for the faster experiments.

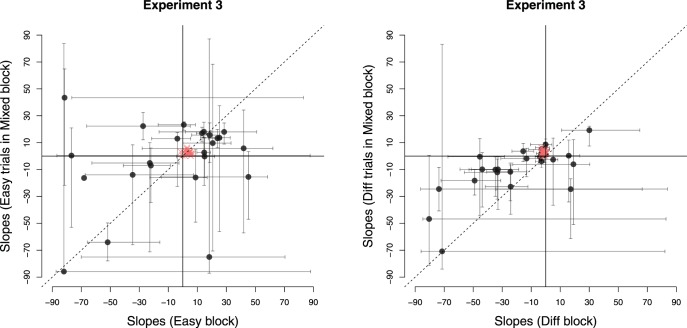

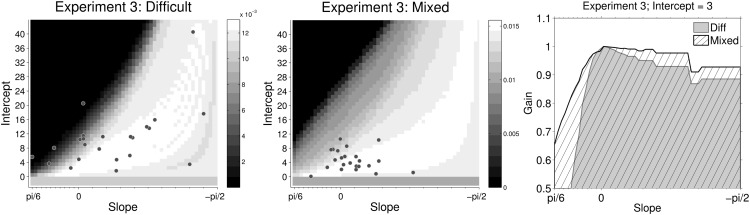

Experiment 3