Structured Abstract

Background

Risk-standardized measures of hospital outcomes reported by the Centers for Medicare and Medicaid Services (CMS) include Medicare fee-for-service (FFS) patients and exclude Medicare Advantage (MA) patients due to data availability. MA penetration varies greatly nationwide and appears to be associated with higher FFS population risk. Whether variation in MA penetration affects performance on the CMS measures is unknown.

Objective

To determine whether the MA penetration rate is associated with hospital outcome measures based on fee-for-service patients.

Research Design

In this retrospective study, 2008 MA penetration was estimated at the Hospital Referral Region (HRR) level. Risk-standardized mortality rates (RSMRs) and risk-standardized readmission rates (RSRRs) for heart failure, acute myocardial infarction, and pneumonia from 2006–2008 were estimated for HRRs, along with several markers of FFS population risk. Weighted linear regression was used to test the association between each of these variables and MA penetration among HRRs.

Results

Among 304 HRRs, MA penetration varied greatly (median: 17.0%, range: 2.1%–56.6%). Although MA penetration was significantly (p<0.05) associated with 5 of the 6 markers of FFS population risk, it was not significantly (p≥0.05) associated with 5 of the 6 hospital outcome measures.

Conclusion

RSMRs and RSRRs for heart failure, acute myocardial infarction, and pneumonia do not seem to differ systematically with MA penetration, lending support to the widespread use of these measures even in areas of high MA penetration.

Keywords: acute MI, heart failure, Medicare, mortality, outcomes assessment, pneumonia, readmissions, risk adjustment

Introduction

Hospital 30-day risk-standardized mortality rates (RSMRs) and readmission rates (RSRRs) are used to evaluate hospital quality nationwide.1,2 These measures are calculated annually from Medicare fee-for-service (FFS) administrative claims data and are publicly reported by the Centers for Medicare and Medicaid Services (CMS). Given the provisions of the Patient Protection and Affordable Care Act of 2010 for hospital quality improvement and its focus on outcomes,3 these and similar measures will continue to be central to quality assessment.

The RSMR and RSRR measures are calculated for Medicare FFS patients only. Patients enrolled in Medicare Advantage (MA), a set of managed care options offered through private insurers, are excluded due to data availability. However, MA enrollees comprise about 24% of Medicare beneficiaries nationally,4 and MA penetration (the fraction of eligible Medicare beneficiaries enrolled in MA) varies considerably nationwide, from <1% in Alaska to 41% in Oregon.4 Moreover, studies have shown that hospitalized MA populations tend to have a lower risk profile (younger, with fewer comorbid conditions) than hospitalized Medicare FFS populations.5 Future hospital outcome measures will ideally include MA patients to better characterize population risk. In the absence of these data, we expect that RSMRs and RSRRs adequately adjust for the risk profile of measured FFS patients, regardless of MA penetration. Nevertheless, to our knowledge, how MA penetration may affect regional performance on these measures has not been addressed.

One putative effect concerns the composition of the measured population. In areas of high MA penetration, omitting the generally healthier MA patients could leave an FFS population at a given hospital or region (the measured population) that is at higher risk than the measured population in a region with lower MA penetration. If the measures' risk adjustment does not fully capture this difference in risk, the resulting concentration of high-risk patients could produce worse outcomes than the models predict, and the measures could suggest poorer performance in areas of high MA penetration.

Therefore, to address whether RSMRs and RSRRs may differ systematically with MA penetration, we examined the relationships between MA penetration, FFS population risk profiles, and the RSMR and RSRR measures across the United States for heart failure, acute myocardial infarction, and pneumonia.

Methods

MA and Medicare FFS Enrollment Data

County-level MA penetration data for 2008 were calculated using the Bureau of Health Professions’ 2008 Area Resource File (ARF).6 The ARF contains the number of MA enrollees as well as the total Medicare eligibility for each county.

Outcomes Data

Hierarchical logistic regression models were used to estimate hospital-level RSMRs and RSRRs for heart failure, acute myocardial infarction, and pneumonia, as previously described.1,2,7–11 The models include age, sex, and relevant clinical covariates that represent comorbidities, and exclude clinical variables that may represent complications of care. The hierarchical models account for the nested structure of the data created by the clustering of patients within hospitals.

For each hospital, the RSMR and RSRR are calculated as the national unadjusted rate of the outcome (deaths within 30 days of admission or readmissions within 30 days of discharge) multiplied by the ratio of the predicted rate of outcome to the expected rate of outcome. The predicted rate of outcome for each hospital is the average of the predicted probabilities of outcome across all admissions in the hospital, and the expected rate of outcome is the average of the expected probabilities of outcome across all admissions in the hospital. The predicted probability of outcome for each admission at a specific hospital is calculated from the hierarchical model by applying the estimated regression coefficients to the observed clinical covariates and adding the hospital-specific intercept, which reflects the hospital's actual performance with its patients relative to hospitals with similar patients. The expected probability of outcome for each admission is calculated in the same way, except that a common intercept (the average of all hospital-specific intercepts) is added instead; this intercept reflects the performance of an average hospital with similar patients.
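The predicted-to-expected calculation can be sketched in Python as follows. This is a minimal illustration, not the CMS implementation: it assumes the hierarchical logistic model has already been fit, so the regression coefficients, the hospital-specific intercept, and the common intercept are passed in as inputs. The function name `risk_standardized_rate` and its arguments are hypothetical.

```python
import math


def inv_logit(x: float) -> float:
    """Convert a log-odds value (logistic linear predictor) into a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def risk_standardized_rate(
    covariate_rows: list[list[float]],
    coefficients: list[float],
    hospital_intercept: float,
    common_intercept: float,
    national_rate: float,
) -> float:
    """Hypothetical sketch of a hospital's risk-standardized rate.

    covariate_rows: one list of covariate values per admission at this hospital.
    coefficients: estimated regression coefficients, one per covariate.
    hospital_intercept: this hospital's estimated (hospital-specific) intercept.
    common_intercept: the average of all hospital-specific intercepts.
    national_rate: national unadjusted outcome rate (result uses the same units).
    """
    predicted, expected = [], []
    for row in covariate_rows:
        linear = sum(b * x for b, x in zip(coefficients, row))
        # Predicted probability: this hospital's own intercept.
        predicted.append(inv_logit(hospital_intercept + linear))
        # Expected probability: an "average hospital" intercept, same patient.
        expected.append(inv_logit(common_intercept + linear))
    predicted_rate = sum(predicted) / len(predicted)
    expected_rate = sum(expected) / len(expected)
    return national_rate * predicted_rate / expected_rate
```

When a hospital's intercept equals the common intercept, the ratio is 1 and the hospital's rate equals the national rate; a higher intercept pushes the rate above it. The intermediate `expected_rate` is the quantity used below as a proxy for FFS population risk.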

This approach was developed for CMS and endorsed by the National Quality Forum. These models are based on administrative data, and their results have been validated by comparison with models based on medical records.1,2,7–11 For each hospital, we calculated the RSMR and RSRR for heart failure, acute myocardial infarction, and pneumonia using claims data from January 2006 to December 2008.

FFS Population Risk Profiles

The hospital-level expected rate of death and expected rate of readmission, as described above, can serve as proxies for the level of risk in that hospital's FFS population. A hospital with high underlying FFS population risk should have a high expected rate of death and/or a high expected rate of readmission for each condition. For each hospital, we calculated the expected rate of death and the expected rate of readmission for heart failure, acute myocardial infarction, and pneumonia using claims data from January 2006 to December 2008.

Aggregating to Hospital Referral Regions (HRRs)

Expected rates of death and of readmission, RSMRs, RSRRs, and MA penetration data were aggregated to the level of hospital referral regions (HRRs) to examine the regional associations. HRRs were created based on patterns of referral for cardiologic-surgical and neurosurgical procedures12 and have been used as a proxy for hospital catchment areas.13–15

County-level MA penetration data were aggregated to the HRR-level using the geographic information software ArcGIS version 9.3.1 (ESRI, Redlands, CA), area-preserving maps of the United States by county16 and HRR,17 and SAS version 9.2 (SAS Institute Inc, Cary, North Carolina). The county map was overlaid on the HRR map, splitting each HRR into numerous non-overlapping polygons, where each polygon represents a fractional area (possibly the entire area) of a distinct county. Each polygon’s area was divided by its parent county’s area to calculate the polygon’s weight. Then, each polygon’s weight was multiplied by its parent county’s MA enrollment to determine that county’s contribution to the HRR. County contributions were summed to estimate the MA enrollment for the entire HRR. This process was repeated to estimate total Medicare eligibility for each HRR, and the ratio of these two estimates yielded MA penetration for each HRR.
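The area-weighting steps above can be sketched in Python. The sketch assumes the GIS overlay has already been performed (in the study, with ArcGIS), so each resulting polygon arrives as a hypothetical `(hrr_id, county_id, polygon_area)` tuple; the function and argument names are illustrative only.

```python
from collections import defaultdict


def hrr_ma_penetration(polygons, county_area, county_ma, county_eligible):
    """Hypothetical sketch: estimate MA penetration per HRR from county counts.

    polygons: iterable of (hrr_id, county_id, polygon_area) tuples from the
        county-by-HRR overlay (the overlay itself is not shown here).
    county_area: county_id -> total county area (same units as polygon_area).
    county_ma: county_id -> number of MA enrollees.
    county_eligible: county_id -> total Medicare eligibility.
    Returns hrr_id -> MA penetration as a fraction.
    """
    ma = defaultdict(float)
    eligible = defaultdict(float)
    for hrr, county, area in polygons:
        # Polygon weight: share of the parent county's area inside this HRR.
        weight = area / county_area[county]
        ma[hrr] += weight * county_ma[county]
        eligible[hrr] += weight * county_eligible[county]
    # Ratio of the two area-weighted totals gives HRR-level penetration.
    return {hrr: ma[hrr] / eligible[hrr] for hrr in ma}
```

For example, a county with 200 MA enrollees and 1,000 eligible beneficiaries that lies 60% inside one HRR contributes 120 enrollees and 600 eligible beneficiaries to that HRR. This is where the within-county homogeneity assumption enters: counts are split purely in proportion to area.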

To calculate HRR-level expected rates of death, expected rates of readmission, RSMRs, and RSRRs, hospital-level data were linked to the 2008 American Hospital Association Survey Data18 through the Medicare Provider Number to determine each hospital's HRR designation. Each variable was calculated at the HRR level as the volume-weighted mean of that variable among all constituent hospitals in that HRR.
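A minimal sketch of the volume-weighted mean follows. It assumes each hospital has already been assigned to an HRR and that a hospital's "volume" is its number of admissions for the condition in question; the text does not define volume further, so that choice is an assumption, and the function name is hypothetical.

```python
from collections import defaultdict


def hrr_volume_weighted_mean(hospitals):
    """Hypothetical sketch of HRR-level aggregation of a hospital measure.

    hospitals: iterable of (hrr_id, value, volume) tuples, one per hospital,
        where value is e.g. an RSMR and volume is the assumed admission count.
    Returns hrr_id -> volume-weighted mean of value among the HRR's hospitals.
    """
    weighted_sum = defaultdict(float)
    total_volume = defaultdict(float)
    for hrr, value, volume in hospitals:
        weighted_sum[hrr] += value * volume
        total_volume[hrr] += volume
    return {hrr: weighted_sum[hrr] / total_volume[hrr] for hrr in weighted_sum}
```

Weighting by volume means a large hospital moves its HRR's value more than a small one, so the HRR figure reflects the typical patient rather than the typical hospital.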

Statistical Analyses

For each dependent variable, a weighted linear regression model was used to test its relationship with MA penetration, using the number of patient discharges in the HRR for that particular condition and outcome as the weight to account for potential differences in the variance of the outcome among HRRs. All analyses were done using SAS version 9.2 and Stata version 10.1 (StataCorp, College Station, Texas).
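The weighted regression can be sketched with the standard weighted least-squares formulas. This is an illustrative stand-in for the SAS and Stata procedures actually used, written with only the Python standard library; the function name is hypothetical, and the p-value uses a large-sample normal approximation rather than the exact t distribution, so it will differ slightly from packaged output (noticeably so for small samples).

```python
import math


def weighted_linear_regression(x, y, w):
    """Weighted least-squares fit of y = a + b*x with weights w.

    Returns (slope, standard_error, p_value, r_squared). The p-value is a
    two-sided normal approximation to the t test on the slope.
    """
    n = len(x)
    sw = sum(w)
    # Weighted means of x and y.
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    syy = sum(wi * (yi - y_bar) ** 2 for wi, yi in zip(w, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Weighted residual sum of squares, with n - 2 residual degrees of freedom.
    sse = sum(wi * (yi - intercept - slope * xi) ** 2 for wi, xi, yi in zip(w, x, y))
    se = math.sqrt(sse / (n - 2) / sxx)
    z = abs(slope / se)
    p_value = math.erfc(z / math.sqrt(2))  # two-sided normal tail probability
    r_squared = 1 - sse / syy
    return slope, se, p_value, r_squared
```

Multiplying every weight by the same constant leaves the slope, standard error, and R² unchanged, so raw discharge counts can be used directly as weights without normalizing them first.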

Results

Of a possible 307 HRRs, 304 (99%) were included in this analysis. HRRs in Alaska, Hawaii, and the District of Columbia were excluded due to lack of the data needed to aggregate to HRRs.

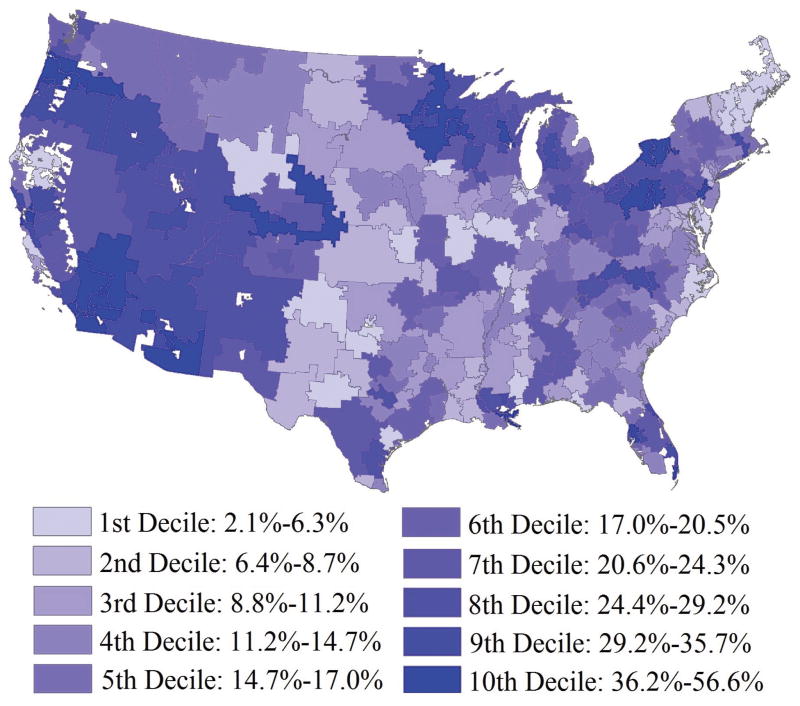

The median MA penetration was 17.0% (range, 2.1% to 56.6%), and the 5th (4.9%) and 95th (40.6%) percentiles differed by 35.7 percentage points. Figure 1 maps MA penetration by HRR across the United States. MA penetration is highest in broad areas of the West and Upper Midwest, with pockets of high MA penetration in the Eastern Great Lakes and central Florida. MA penetration is lowest in the majority of the Midwest and South, with pockets of low MA penetration on the Eastern Seaboard.

Figure 1.

Medicare Advantage Penetration by Decile in the United States among Hospital Referral Regions: 2008.

HRR-level expected rates of death, expected rates of readmission, RSMRs, and RSRRs were approximately normally distributed for each condition. Table 1 shows descriptive statistics for each FFS population risk profile and outcome measure across HRRs.

Table 1.

FFS Risk Profiles and Outcome Measures among 304 Hospital Referral Regions

| | Min. | 25th Percentile | Median | 75th Percentile | Max. |
|---|---|---|---|---|---|
| Expected Rate of Death | | | | | |
| Heart Failure | 9.2 | 10.9 | 11.4 | 11.8 | 13.1 |
| Acute Myocardial Infarction | 13.2 | 15.9 | 16.6 | 17.6 | 22.2 |
| Pneumonia | 9.4 | 10.9 | 11.4 | 11.8 | 14.3 |
| Expected Rate of Readmission | | | | | |
| Heart Failure | 21.8 | 23.6 | 24.2 | 24.8 | 27.2 |
| Acute Myocardial Infarction | 16.1 | 18.8 | 19.6 | 20.2 | 23.8 |
| Pneumonia | 15.6 | 17.2 | 17.7 | 18.3 | 21.0 |
| RSMR* | | | | | |
| Heart Failure | 7.6 | 10.5 | 11.2 | 11.9 | 14.2 |
| Acute Myocardial Infarction | 12.6 | 15.2 | 15.9 | 16.6 | 19.1 |
| Pneumonia | 8.8 | 10.8 | 11.4 | 12.1 | 16.3 |
| RSRR‡ | | | | | |
| Heart Failure | 20.1 | 23.4 | 24.2 | 25.0 | 28.9 |
| Acute Myocardial Infarction | 16.3 | 18.9 | 19.6 | 20.3 | 23.2 |
| Pneumonia | 14.6 | 17.4 | 18.0 | 18.7 | 22.5 |

\* RSMR indicates risk-standardized mortality rate, expressed as a percentage.

‡ RSRR indicates risk-standardized readmission rate, expressed as a percentage.

Table 2 shows the results of all weighted regression models. MA penetration showed a statistically significant (p<0.05) positive association with the majority (5 of 6) of profiles of FFS population risk, 3 of which were highly significant (p<0.0001). For example, in the regression with expected rates of death from heart failure, the slope of 0.0169% signifies that, for every 100,000 Medicare FFS patients with heart failure, 16.9 more deaths would be expected for each 1 percentage-point increase in regional MA penetration. Conversely, MA penetration did not show statistically significant (p≥0.05) associations with the majority (5 of 6) of hospital outcome measures.
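The heart failure example is a unit conversion. Because both variables enter the regression as percentages, the slope is measured in percentage points of expected mortality per percentage point of MA penetration, and scaling that to a population of 100,000 patients gives the stated count:

```latex
\underbrace{0.0169\%}_{\text{slope per 1 pp of MA penetration}}
\times 100{,}000 \text{ patients}
= \frac{0.0169}{100} \times 100{,}000
= 16.9 \text{ additional expected deaths}
```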

Table 2.

Results of Linear Regressions with Medicare Advantage Penetration

| | Slope, %* | Standard Error, %* | P | R² |
|---|---|---|---|---|
| Expected Rates of Death | | | | |
| Heart Failure | 0.0169 | 0.0032 | <0.0001 | 0.0856 |
| Acute Myocardial Infarction | 0.0295 | 0.0060 | <0.0001 | 0.0732 |
| Pneumonia | 0.0193 | 0.0043 | <0.0001 | 0.0629 |
| Expected Rates of Readmission | | | | |
| Heart Failure | 0.0049 | 0.0044 | 0.2659 | 0.0041 |
| Acute Myocardial Infarction | 0.0149 | 0.0060 | 0.0132 | 0.0202 |
| Pneumonia | 0.0129 | 0.0044 | 0.0040 | 0.0271 |
| RSMRs† | | | | |
| Heart Failure | −0.0108 | 0.0049 | 0.0300 | 0.0155 |
| Acute Myocardial Infarction | −0.0061 | 0.0050 | 0.2251 | 0.0049 |
| Pneumonia | −0.0078 | 0.0049 | 0.1097 | 0.0084 |
| RSRRs‡ | | | | |
| Heart Failure | 0.0019 | 0.0067 | 0.7738 | 0.0003 |
| Acute Myocardial Infarction | 0.0033 | 0.0053 | 0.5378 | 0.0013 |
| Pneumonia | −0.0016 | 0.0053 | 0.7679 | 0.0003 |

\* Slopes and standard errors are expressed as percentages because regressions were run with variables expressed as percentages.

† RSMR indicates risk-standardized mortality rate.

‡ RSRR indicates risk-standardized readmission rate.

Discussion

The RSMR and RSRR measures are risk-standardized hospital outcome measures based on Medicare FFS administrative claims data. We do not find evidence among HRRs for a linear association between MA penetration and performance on these measures. An association would have raised a concern about the adequacy of the measures’ risk-adjustment; this negative result makes it less likely that such an association exists at the hospital level.

Our results show marked national variation in MA penetration among HRRs, a smaller unit of analysis than has previously been examined; in certain cases, adjacent HRRs within the same state have markedly different rates of MA penetration. We also find that HRRs with higher MA penetration tend to have higher expected rates of death and of readmission among FFS patients, which provides further evidence that hospitalized MA patients tend to have lower rates of risk factors for these outcomes than hospitalized FFS patients nationally.

These findings are consistent with our expectation that areas with higher MA penetration have higher-risk FFS populations. They also suggest that the risk models are sensitive enough to detect these differences. The findings do not suggest that areas with high MA penetration are disadvantaged by the measures. Nonetheless, future hospital outcome measures should include MA patients to avoid this issue entirely.

One potential limitation of this study is the heterogeneity of the regression results. Although the regression models suggest that MA penetration is generally not associated with RSMRs or RSRRs, a statistically significant (p=0.03) association exists between MA penetration and the RSMR for heart failure. Thus, although MA penetration may have no systematic effect on hospital outcome measures, the full complexity of the relationship remains to be elucidated.

Additionally, our results should be interpreted in the context of some methodological limitations. First, we used MA data from a single year; further studies including multiple years may identify a dynamic relationship over time. Second, although we believe that HRRs are the appropriate level of analysis for these data, aggregating county-level data to HRRs by geographic area presumes that MA and Medicare enrollees are distributed homogeneously within counties that overlap multiple HRRs. However, the validity of this assumption cannot currently be tested, since MA penetration data are available only at the county and state levels. Finally, while the absence of linear associations suggests the absence of a meaningful relationship, nonlinear relationships may still exist.

Despite these potential limitations, we find that, although MA penetration varies considerably among HRRs and correlates with increased FFS population risk, the RSMR and RSRR measure results do not appear associated with MA penetration. We find no evidence that MA penetration influences the ability of these measures to evaluate and compare hospital quality.

References

1. Krumholz HM, Wang Y, Mattera JA, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with acute myocardial infarction. Circulation. 2006 Apr 4;113(13):1683–92. doi: 10.1161/CIRCULATIONAHA.105.611186. Epub 2006 Mar 20.

2. Keenan PS, Normand SL, Lin Z, et al. An administrative claims measure suitable for profiling hospital performance on the basis of 30-day all-cause readmission rates among patients with heart failure. Circ Cardiovasc Qual Outcomes. 2008 Sep;1(1):29–37. doi: 10.1161/CIRCOUTCOMES.108.802686.

3. United States Government Printing Office. Public Law 111-148 - Patient Protection and Affordable Care Act. United States Government Printing Office; 2010. [Accessed June 29, 2010]. Available at: http://www.gpo.gov/fdsys/pkg/PLAW-111publ148/pdf/PLAW-111publ148.pdf.

4. Gold M, Phelps D, Jacobson G, et al. Medicare Advantage 2010 Data Spotlight: Plan Enrollment Patterns and Trends. Henry J. Kaiser Family Foundation web site. 2010 Jun 22. [Accessed June 29, 2010]. Available at: http://www.kff.org/medicare/upload/8080.pdf.

5. Friedman B, Jiang HJ, Russo CA. Medicare Hospital Stays: Comparisons between the Fee-for-Service Plan and Alternative Plans, 2006. HCUP Statistical Brief #66. Rockville, MD: Agency for Healthcare Research and Quality; Jan 2009. [Accessed June 29, 2010]. Available at: http://www.hcup-us.ahrq.gov/reports/statbriefs/sb66.pdf.

6. Area Resource File (ARF). Rockville, MD: US Department of Health and Human Services, Health Resources and Services Administration, Bureau of Health Professions; 2007–2008.

7. Lindenauer PK, Bernheim SM, Grady JN, et al. The performance of US hospitals as reflected in risk-standardized 30-day mortality and readmission rates for Medicare beneficiaries with pneumonia. J Hosp Med. 2010 Jul-Aug;5(6):E12–8. doi: 10.1002/jhm.822.

8. Krumholz HM, Lin Z, Drye EE, et al. An administrative claims measure suitable for profiling hospital performance based on 30-day all-cause readmission rates among patients with acute myocardial infarction. Circ Cardiovasc Qual Outcomes. 2011 Mar 1;4(2):243–52. doi: 10.1161/CIRCOUTCOMES.110.957498.

9. Krumholz HM, Wang Y, Mattera JA, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with heart failure. Circulation. 2006 Apr 4;113(13):1693–701. doi: 10.1161/CIRCULATIONAHA.105.611194. Epub 2006 Mar 20.

10. Bratzler DW, Normand SL, Wang Y, et al. An administrative claims model for profiling hospital 30-day mortality rates for pneumonia patients. PLoS ONE. 2011 Apr 12;6(4):e17401. doi: 10.1371/journal.pone.0017401.

11. Bernheim SM, Grady JN, Lin Z, et al. National patterns of risk-standardized mortality and readmission for acute myocardial infarction and heart failure. Update on publicly reported outcomes measures based on the 2010 release. Circ Cardiovasc Qual Outcomes. 2010 Sep;3(5):459–67. doi: 10.1161/CIRCOUTCOMES.110.957613. Epub 2010 Aug 24.

12. The Dartmouth Atlas of Health Care [database online]. Lebanon, NH: The Dartmouth Institute for Health Policy and Clinical Practice; 2010.

13. Nallamothu BK, Rogers MA, Chernew ME, et al. Opening of specialty cardiac hospitals and use of coronary revascularization in Medicare beneficiaries. JAMA. 2007 Mar 7;297(9):962–8. doi: 10.1001/jama.297.9.962.

14. Fisher ES, Wennberg JE, Stukel TA, et al. Associations among hospital capacity, utilization, and mortality of US Medicare beneficiaries, controlling for sociodemographic factors. Health Serv Res. 2000 Feb;34(6):1351–62.

15. Skinner J, Weinstein J, Sporer SM, et al. Racial, ethnic, and geographic disparities in rates of knee arthroplasty among Medicare patients. N Engl J Med. 2003 Oct 2;349(14):1350–9. doi: 10.1056/NEJMsa021569.

16. Census 2000 Summary File 100-Percent Data [computer file]. Washington, DC: United States Census Bureau; 2000. [Accessed June 29, 2010]. Available at: http://factfinder.census.gov/servlet/MetadataBrowserServlet?type=dataset&id=DEC_2000_SF1_U&_lang=en.

17. HRR Geographic Boundary File [computer file]. Lebanon, NH: The Dartmouth Institute for Health Policy and Clinical Practice; 2010. [Accessed June 29, 2010]. Available at: http://www.dartmouthatlas.org/downloads/geography/hrr_bdry.zip.

18. The annual survey of hospitals database: documentation for 2008 data. Chicago: American Hospital Association; 2008.