Abstract

There is mounting evidence that constraints from action can influence the early stages of object selection, even in the absence of any explicit preparation for action. Here, we examined whether action properties of images can influence visual search, and whether such effects are modulated by hand preference. Observers searched for an oddball target among three distractors. The search arrays consisted either of images of graspable ‘handles’ (‘action-related’ stimuli), or of images that were otherwise identical to the handles but in which the semicircular fulcrum element was re-oriented so that the stimuli no longer looked like graspable objects (‘non-action-related’ stimuli). In Experiment 1, right-handed observers, who have previously been shown to prefer the right hand over the left for manual tasks, were faster to detect targets in action-related than in non-action-related arrays, and showed a response time (RT) advantage for rightward- versus leftward-oriented action-related handles. In Experiment 2, left-handed observers, who have been shown to use the left and right hands relatively equally in manual tasks, were also faster to detect targets in action-related than in non-action-related arrays, but RTs were equally fast for rightward- and leftward-oriented handle targets. Together, our results suggest that action properties in images, and constraints for action imposed by preferences for manual interaction with objects, can influence attentional selection in the context of visual search.

Keywords: action properties, attention, selection, visual search

Introduction

In everyday life, our visual environment is cluttered with different objects, some of which are relevant for grasping and interaction, and others that are not. Due to limits in processing capacity, different objects in a scene compete for limited processing resources and the control of behavior (Treisman & Gelade, 1980; Wolfe & Pashler, 1998). Although competition between objects can be biased by physical salience, when a target must be located from among a number of similar distractors, selection can also be biased by features of the target that uniquely specify its relevance for behavior (Desimone & Duncan, 1995). Interestingly, there is accumulating evidence from laboratory studies that images of objects that are strongly associated with action can modulate attention and early selection processes. For example, responses to single graspable stimuli are facilitated when the orientation of an object’s handle is spatially compatible with the responding hand of the observer (Murphy, van Velzen, & de Fockert, 2012; Tucker & Ellis, 1998). Similarly, attention is enhanced towards pairs of object images displayed in the left and right hemifields (e.g., a teapot and a cup) when the objects are positioned in such a way as to suggest that they could be used together (action-related configuration), versus when they could not easily be used together (action-unrelated configuration) (Humphreys, Riddoch, & Fortt, 2006; Riddoch, Humphreys, Edwards, Baker, & Willson, 2003; Riddoch et al., 2011; Roberts & Humphreys, 2011; Yoon, Humphreys, & Riddoch, 2010). Action-relevant cues that are conveyed by images of graspable objects have sometimes been referred to as ‘action properties’ (Humphreys, 2013), as distinct from the opportunities for genuine action that real objects present to an able-bodied observer, which are known classically as affordances (Gibson, 1979). 
Importantly, the effects of action properties on attention and neural responses can arise even when observers are not required to plan or perform an explicit action with the stimulus (Handy, Grafton, Shroff, Ketay, & Gazzaniga, 2003; Humphreys et al., 2010; Murphy et al., 2012; Pappas & Mack, 2008; Riddoch et al., 2003).

Despite a substantial literature documenting the effects of ‘affordances’, or action properties, of images on attention, surprisingly little is known about whether action-related images influence visual search in healthy adults. According to the affordance competition hypothesis (Cisek, 2007), when a number of graspable objects are presented simultaneously, multiple potential action plans are generated automatically and these plans compete for selection. In the context of visual search, when the display consists of multiple images of objects, the different stimuli presumably compete with one another for selection and manual responses. Importantly, if action properties of images can trigger the preparation of motor plans (Creem-Regehr & Lee, 2005; Grezes, Tucker, Armony, Ellis, & Passingham, 2003; Handy et al., 2003; Humphreys, 2013; Johnson-Frey, Newman-Norlund, & Grafton, 2005), and if the generation of motor plans toward one or more locations in space can modulate the selection of stimuli at those locations (Baldauf & Deubel, 2010; Schneider & Deubel, 2002), then images of objects with strong action properties should serve as stronger competitors for selection than those that convey little or no action-relevant information (Humphreys, 2013). In line with this prediction, Humphreys and Riddoch (2001) presented arrays of tools to a single patient with a lateralized disorder of attention, known as unilateral neglect, and measured his performance in a search task. The patient, who was severely impaired in his ability to find contralesional (left) targets, showed a marked improvement in detecting left-sided targets when he was asked to search for objects based on their typical action (i.e., “find the object you could drink from”), compared to when targets were specified by name (i.e., “find the cup”) or by other non-action-related visual features (e.g., “find the red object”). 
Whether or not similar effects of action properties on visual search are observed in neurologically healthy observers is currently unknown.

It could also be the case that the strength with which objects are associated with action depends on our pattern of long-term visuo-motor experience with the left versus right hand (Adam, Muskens, Hoonhorst, Pratt, & Fischer, 2010; Gonzalez, Ganel, & Goodale, 2006). For example, during manual grasping tasks, right-handers overwhelmingly choose their dominant right hand (Gonzalez & Goodale, 2009; Mamolo, Roy, Bryden, & Rohr, 2004; Stone, Bryant, & Gonzalez, 2013), whereas most left-handers are equally likely to grasp objects with either the left or right hand (Gallivan, McLean, & Culham, 2011; Gonzalez, Whitwell, Morrissey, Ganel, & Goodale, 2007; Main & Carey, 2014; Mamolo et al., 2004; Stone et al., 2013). Differences in hand dominance have also been shown to have implications for the perception of arm length (Linkenauger, Witt, Bakdash, Stefanucci, & Proffitt, 2009) and object distance (Gallivan et al., 2011; Linkenauger, Witt, Stefanucci, Bakdash, & Proffitt, 2009). For example, right-handers tend to perceive their right arm as longer than the left, whereas left-handers perceive their left and right arms as equal in length (Linkenauger, Witt, Stefanucci, et al., 2009). Moreover, neural activation in response to visual objects in superior parieto-occipital cortex (SPOC), a brain area implicated in reaching actions, also varies as a function of perceived graspability (Gallivan, Cavina-Pratesi, & Culham, 2009). In right-handers, SPOC is activated only in the left hemisphere for objects perceived to be reachable by the right hand (and not in the right hemisphere for objects that are reachable by the left hand), whereas in left-handers, SPOC responses are observed bilaterally for objects that are graspable by either the left or right hand (Gallivan et al., 2011).

Here, we examined the extent to which action properties of object images, and hand dominance, influence visual search in healthy observers. In two experiments, right-handed (Experiment 1) and left-handed (Experiment 2) observers detected a single target positioned among three distractors. The search items were grayscale images of objects whose elements were configured so that the items resembled either objects that are strongly associated with action (i.e., door handles) or non-action-related objects. On ‘target-present’ trials, the target was defined as the ‘oddball’ item in the array, whose rectangular element faced the opposite direction to that of the distractors. Because the horizontal element of the target faced either rightward or leftward, we were also able to examine how target orientation influenced detection. In a separate behavioral rating task, we measured the extent to which observers perceived the different stimuli as being functional. Importantly, unlike many other studies of the effects of action properties of images on attention, we minimized the potential influence of lower-level visual properties of our stimuli on performance (Garrido-Vasquez & Schubo, 2014) by closely matching our ‘action-related’ and ‘non-action-related’ stimuli for elongation, asymmetry, color, contrast, luminance, perspective and size.

We predicted that if action properties of images influence attention in the context of visual search, then search performance, as measured by response times (RTs) and accuracy, would be better for action-related versus non-action-related stimuli, in both right- and left-handed observers. Further, if action properties of images that imply a grasp with the preferred hand enhance early visual processing at the target’s location (Handy et al., 2003; Humphreys, 2013; Wykowska & Schubo, 2012), then detection performance in right-handers should be enhanced for action-related targets that are oriented towards the dominant right hand, whereas left-handed observers should be equally proficient for leftward- and rightward-oriented action-related targets.

Experiment 1

Method

Participants

Twenty-four right-handed healthy undergraduate college students (19 females; Age: M = 22.7, SD = 4.248) participated in the study in return for course credit. All participants had normal or corrected-to-normal vision. Informed consent was obtained prior to participation in the study and all protocols used were approved by The University of Nevada, Reno Social, Behavioral, and Educational Institutional Review Board (IRB).

Stimuli and Apparatus

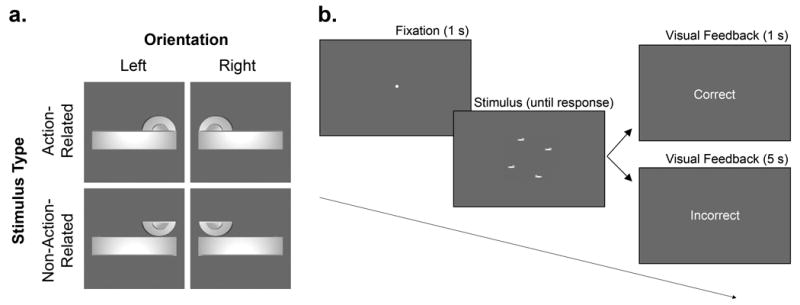

We measured observers’ response time (RT) and accuracy to detect a single ‘oddball’ target displayed among three distractors. Critically, we compared search performance for arrays composed of images of action-related versus non-action-related objects. The ‘action-related’ objects comprised two basic elements, a semicircle and a horizontal rectangle, configured so that they resembled a door handle (Figure 1a). The ‘non-action-related’ stimuli were identical in all respects to their action-related counterparts, except that the semicircular component (handle fulcrum) was rotated 180 degrees so that the stimulus no longer resembled a handle. We created two versions of each stimulus type: one with the main horizontal element (shaft) oriented to the left of the fulcrum, and the other with the shaft oriented rightwards. Crossing Stimulus Type (action-related, non-action-related) with Orientation (left, right shaft) yielded a total of four unique stimuli. The target was defined as an ‘oddball’ on the basis of the orientation of its shaft: on target-present trials the target’s shaft was oriented in the opposite direction to that of the distractors, whereas on target-absent trials the shafts of all stimuli were oriented in the same direction. The stimuli were presented on a 27” ASUS (VG278HE) LCD monitor with a display resolution of 1920 × 1080 pixels. The background color was grey (RGB: 104, 104, 104). Event timing and response collection were controlled by a PC (Intel Core i7-4770, CPU 3.40 GHz, 16 GB RAM) running Matlab (Mathworks, USA) and the Psychophysics Toolbox extensions (Brainard, 1997; Kleiner et al., 2007; Pelli, 1997). An SMI RED eye tracker (SensoMotoric Instruments, Teltow, Germany) with a 60 Hz sampling rate was used to monitor fixation prior to the onset of the search array on each trial.
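The 2 × 2 stimulus set and the oddball rule can be made concrete with a minimal Python sketch. The names (`STIMULI`, `make_array`, `target_present`) are hypothetical. They show only the logic described above and are not the authors’ Matlab/Psychophysics Toolbox code. Each array item is represented as a (Stimulus Type, shaft orientation) pair.

```python
from itertools import product

# Stimulus Type x shaft Orientation gives the four unique images.
STIMULUS_TYPES = ("action-related", "non-action-related")
ORIENTATIONS = ("left", "right")
STIMULI = list(product(STIMULUS_TYPES, ORIENTATIONS))


def make_array(stim_type, distractor_orientation, target_position=None, n_items=4):
    """Return an n-item search array as (stim_type, orientation) pairs.

    target_position=None builds a target-absent array (all shafts point the
    same way). Otherwise the item at target_position is the 'oddball' whose
    shaft points the opposite way. All items in an array share one Stimulus Type.
    """
    opposite = "right" if distractor_orientation == "left" else "left"
    items = [(stim_type, distractor_orientation)] * n_items
    if target_position is not None:
        items[target_position] = (stim_type, opposite)
    return items


def target_present(items):
    """An array contains a target iff its items do not all share one orientation."""
    return len({orientation for _, orientation in items}) > 1


# A rightward target among three leftward action-related distractors (cf. Figure 1b).
example = make_array("action-related", "left", target_position=2)
print(example, target_present(example))
```

Because Stimulus Type is held constant within an array, the only feature separating target from distractors is shaft orientation. This matches the matching logic of the design.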

Figure 1.

The stimuli and trial sequence. (a) Action-related stimuli (top row) were images of door handles oriented as if to imply a grasp by the left or right hand. Non-action-related stimuli (bottom row) comprised the same components as their action-related counterparts, but the semicircular ‘fulcrum’ was rotated 180 degrees so that the image no longer resembled a handle. The action-related and non-action-related stimuli were closely matched for global shape, elongation, and directionality. On target-present trials, one of the stimuli (the target) differed from the distractors in its left-right orientation. (b) Each trial began with one second of central fixation (monitored with an eye tracker). The 4-item search array was then displayed until response, after which feedback was provided. The participant’s task was to report whether a target was present or not by pressing a key on a keyboard with the index finger of the left or right hand (counterbalanced across observers). The stimulus array shown in (b) depicts a ‘target-present’ trial in which a rightward-oriented target is embedded among three leftward-oriented distractors.

Procedure

The experiment was conducted in a quiet testing room in which the computer monitor was the only source of illumination. Each trial began with the onset of a central fixation point. Only after gaze had been maintained at the fixation point for 1 s did the search array appear, and it remained on-screen until the participant’s response (Figure 1b). Participants were instructed to determine as quickly and accurately as possible whether one of the stimuli in the array differed from the others in its orientation (target-present trial) or whether the stimuli in the array were all oriented in the same direction (target-absent trial). To reduce the likelihood that observers could rely on spatial patterns in the arrays to perform the search task, we randomized across trials both the position of the target relative to the distractors (i.e., different target locations) and the positions of the stimuli around the central fixation point (i.e., different display configurations). We used three different display configurations in which the centroids (i.e., the vertical and horizontal midpoints) of the four search elements varied in position on an invisible circle centered on the fixation point, while each element remained at an equal distance from the fixation point. At a viewing distance of 60 cm, each stimulus subtended 5.16° × 2.04° of visual angle. The centroid of each stimulus was positioned 8.93° of visual angle from the center of the screen, and the stimuli were separated by 14.66° of visual angle from one another. Targets could appear at one of four possible positions in the array, and they appeared in each of these four positions twenty times for each level of Stimulus Type and Orientation. Participants gave target present/absent responses with a keypress, using the index finger of the left or right hand (‘A’ left hand, ‘L’ right hand). The mapping of target present/absent responses to the left and right hands was counterbalanced across participants. 
In ‘button map 1’, participants used their dominant right hand to indicate a target-present trial, whereas in ‘button map 2’ they used their left hand. Participants were encouraged to respond as quickly and accurately as possible. Correct responses were followed by on-screen text feedback (“Correct”) for 1 s before the next trial began. After incorrect responses, participants received an auditory tone and visual feedback (“Incorrect”), followed by a five-second delay before the onset of the next trial. All incorrect trials were appended to the end of the trial queue.
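The display geometry above is specified in degrees of visual angle at a 60 cm viewing distance. A short Python sketch converts between visual angle and on-screen size using the standard relation size = 2·d·tan(θ/2). The pixel conversion is an assumption. It treats the 27” monitor as a nominal 16:9 panel whose full 1920-pixel width is used. The function names are illustrative only.

```python
import math

VIEWING_DISTANCE_CM = 60.0  # viewing distance reported above


def size_from_angle(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Physical extent (cm) that subtends angle_deg, centered on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def angle_from_size(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle (deg) subtended by an extent of size_cm at distance_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def pixels_per_cm(h_res_px=1920, diagonal_in=27.0, aspect=(16, 9)):
    """Assumption: nominal 27-inch 16:9 panel, image filling the full width."""
    w, h = aspect
    width_cm = diagonal_in * 2.54 * w / math.hypot(w, h)
    return h_res_px / width_cm


# Approximate on-screen size of one search item (5.16 x 2.04 deg).
for deg in (5.16, 2.04):
    cm = size_from_angle(deg)
    print(f"{deg:.2f} deg -> {cm:.2f} cm -> {cm * pixels_per_cm():.0f} px")
```

Measured screen dimensions, rather than the nominal diagonal, would be needed for an exact pixel calibration.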

Trials were blocked by Stimulus Type (action-related, non-action-related), and block order (AB vs. BA) was counterbalanced across participants. For both action-related and non-action-related stimulus blocks, participants first completed 16 practice trials (8 target-absent, 8 target-present), followed by 160 experimental trials (80 target-absent, 80 target-present). The order of trials was randomized within each block. Participants were offered rest periods between blocks, and the entire experiment took ~30 minutes to complete, including rest breaks.
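Two procedural details combine within each block: a randomized set of 80 target-present and 80 target-absent trials, and a queue to which incorrect trials are re-appended. A minimal Python sketch shows how they interact. The split of target-present trials across the two target orientations is not fully specified here, so the even split below is a hypothetical assumption. The `respond` callback is also hypothetical. It stands in for the participant and returns True for a correct answer.

```python
import random
from collections import deque


def build_block(n_present=80, n_absent=80, n_positions=4, seed=None):
    """Build a shuffled list of trial dicts for one Stimulus Type block.

    Assumption (hypothetical): target-present trials cycle evenly through the
    n_positions target locations and the two target orientations.
    """
    rng = random.Random(seed)
    trials = [{"present": False} for _ in range(n_absent)]
    for i in range(n_present):
        trials.append({
            "present": True,
            "position": i % n_positions,
            "target_orientation": ("left", "right")[(i // n_positions) % 2],
        })
    rng.shuffle(trials)  # trial order randomized within the block
    return trials


def run_block(trials, respond):
    """Administer trials in order, re-appending each incorrect trial to the queue.

    respond(trial) -> bool returns True for a correct response. The loop ends
    only once every trial has eventually been answered correctly. Returns the
    total number of trials administered.
    """
    queue = deque(trials)
    administered = 0
    while queue:
        trial = queue.popleft()
        administered += 1
        if not respond(trial):
            queue.append(trial)  # repeat the error at the end of the block
    return administered
```

With this scheme, every participant ends each block with the full set of correctly answered trials. Error-prone participants simply run longer blocks.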

After completing the visual search task, all participants completed a ‘Functionality Rating Task’ in which they judged how functional they thought each stimulus was. ‘Functional’ was defined to participants as the extent to which the stimulus appears to have a specific function or purpose, or evokes a sense of potential for action. The stimuli in the rating task were presented one at a time, and participants rated each stimulus on a 10-point Likert scale (1 = Not at all, to 10 = Completely). The order of stimuli in the rating task was randomized across participants. Follow-up paired-samples t-tests were conducted where appropriate.

Data Analysis

For each participant, we calculated mean RTs on target-present trials in each block. Trials with RTs more than ±2 SDs from the block mean were excluded from further analysis (Experiment 1: 3%, Experiment 2% of all trials). Incorrect target-present trials were removed from the analysis of RTs. The RT and accuracy data were analyzed using a three-way mixed-model analysis of variance (ANOVA), with the within-subjects factors of Stimulus Type (action-related vs. non-action-related) and target Orientation (left, right), and the between-subjects factor of Button Mapping (map 1, map 2).
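A minimal Python sketch of the per-block RT summary described above. It rests on assumptions that are not stated in the text. The ±2 SD criterion is computed over all RTs in the block using the sample SD, and outliers are removed before incorrect trials are dropped. The function name and tuple layout are hypothetical.

```python
from statistics import mean, stdev


def trimmed_mean_rt(trials, criterion=2.0):
    """Mean RT for one participant in one block.

    trials: list of (rt_ms, correct, target_present) tuples.
    Step 1 (assumed order): drop trials whose RT lies more than `criterion`
            sample SDs from the block mean.
    Step 2: average only the remaining correct target-present trials.
    Returns (mean_rt, n_excluded_as_outliers).
    """
    rts = [rt for rt, _, _ in trials]
    m, sd = mean(rts), stdev(rts)
    kept = [t for t in trials if abs(t[0] - m) <= criterion * sd]
    n_excluded = len(trials) - len(kept)
    correct_present = [rt for rt, correct, present in kept if correct and present]
    return mean(correct_present), n_excluded


# Toy data (not from the study): one very slow trial among 19 fast ones.
toy = [(1000, True, True)] * 19 + [(5000, True, True)]
print(trimmed_mean_rt(toy))
```

Computing the criterion separately for target-present trials only would be an equally plausible reading of the text, and could exclude a somewhat different set of trials.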

Results

Response accuracy in each condition is shown in Table 1. A 3-way mixed-model ANOVA comparing accuracy in each Stimulus Type, Orientation, and Button Mapping condition showed no significant main effects or interactions (all p-values > .05).

Table 1.

Mean (SD) accuracy (proportion correct) of right-handed observers in each Stimulus Type and target Orientation condition of Experiment 1.

| Stimulus Type | Left orientation, M (SD) | Right orientation, M (SD) |
|---|---|---|
| Action-Related | .96 (.06) | .97 (.05) |
| Non-Action-Related | .96 (.05) | .98 (.04) |

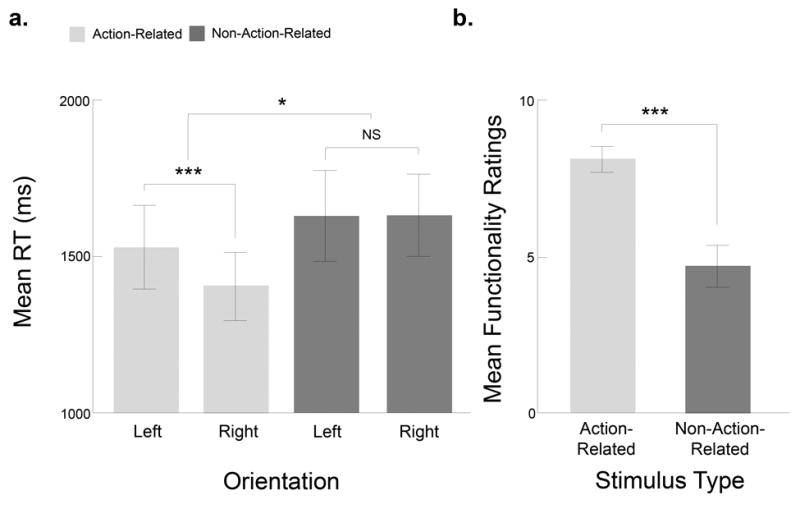

A mixed-model ANOVA comparing RTs in each Stimulus Type, Orientation, and Button Mapping condition revealed a significant main effect of Stimulus Type (F(1,22) = 6.31, p = .023, ηp² = 0.22), in which target detection was faster in the action-related arrays (M = 1426 ms) than in the non-action-related arrays (M = 1595 ms). There was also a significant main effect of Orientation (F(1,22) = 4.41, p = .047, ηp² = 0.17), in which responses were faster for rightward- (M = 1479 ms) than for leftward-oriented (M = 1542 ms) targets. Critically, however, these main effects were qualified by a significant two-way interaction between Stimulus Type and Orientation (F(1,22) = 12.54, p < .01, ηp² = 0.36). Paired-samples t-tests confirmed that for action-related stimulus arrays, detection was faster when the target handle was oriented rightwards (M = 1362 ms) than leftwards (M = 1490 ms; t(23) = 4.04, p < .001, d = .98) (Figure 2a), whereas there was no significant difference between RTs for leftward- and rightward-oriented targets in the non-action-related arrays (left: M = 1593 ms; right: M = 1597 ms; t(23) = .08, p = .944). There was no significant main effect of Button Mapping (F(1,22) = 0.08, p = .794, ηp² = 0.01), and Button Mapping did not enter into any significant interactions (Stimulus Type × Button Mapping: F(1,22) = 0.49, p = .503, ηp² = 0.02; Orientation × Button Mapping: F(1,22) = 0.25, p = .252, ηp² = 0.06; Stimulus Type × Orientation × Button Mapping: F(1,22) = 0.03, p = .870, ηp² = 0.01).
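The effect sizes reported with each F test are partial eta squared, ηp² = SS_effect / (SS_effect + SS_error). This can be rewritten in terms of the test statistic as ηp² = F·df₁ / (F·df₁ + df₂). A short Python check applies this identity to the three Experiment 1 RT effects above. The helper name is illustrative.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


# Experiment 1 RT effects: (label, F, reported eta_p^2), all with df = (1, 22).
for label, f, reported in [
    ("Stimulus Type", 6.31, 0.22),
    ("Orientation", 4.41, 0.17),
    ("Stimulus Type x Orientation", 12.54, 0.36),
]:
    print(label, round(partial_eta_squared(f, 1, 22), 2), reported)
```

For these three effects, the recomputed values agree with the reported ones to two decimal places.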

Figure 2.

Results of Experiment 1, with right-handed observers. (a) Target detection performance in right-handers was faster when the target was oriented towards the dominant (right) hand, but only for the action-related stimuli. The graph shows RTs in ms, for action-related (light gray), and non-action-related (dark gray) search arrays, in each left/right handle orientation. (b) Survey reports conducted after the main experiment confirmed that participants perceived the action-related stimuli to be more functional than the non-action-related stimuli. Here, and in all following graphs, * = p < .05, *** = p < .001, error bars = +/− 1 SEM.

Finally, we examined the right-handed sample’s ratings in the Functionality Rating Task. Consistent with the visual search data, right-handers gave numerically higher functionality ratings to rightward- than to leftward-oriented action-related stimuli (right: M = 8.3, SD = 3.4; left: M = 8.1, SD = 3.3), whereas ratings for the rightward- and leftward-oriented non-action-related stimuli were almost identical (right: M = 3.8, SD = 3.3; left: M = 3.8, SD = 3.3). A two-way repeated-measures (RM) ANOVA on the rating data revealed a main effect of Stimulus Type, in which the action-related stimuli were perceived as more functional than the non-action-related stimuli (F(1, 23) = 607.10, p < .001, ηp² = 0.96) (see Figure 2b). However, there was no significant main effect of Orientation (F(1, 23) = 0.58, p = .454, ηp² = 0.03) and no significant interaction between Stimulus Type and Orientation (F(1, 23) = 0.32, p = .583, ηp² = 0.01).

Experiment 2

In Experiment 1, we found that although right-handed observers were faster overall to search for targets within action-related than within non-action-related stimulus arrays, target detection was facilitated most for action-related ‘handles’ oriented towards the dominant right (versus non-dominant left) hand. These findings suggest that target selection in visual search is modulated by action properties within an image. In Experiment 2, we examined whether action properties and hand preferences influence visual search performance in a sample of left-handed observers. If action properties of images that imply a grasp with the preferred hand enhance early visual processing at the target’s location (Handy et al., 2003; Humphreys, 2013; Wykowska & Schubo, 2012), then, given that left-handers tend to use the left and right hands relatively equally when grasping objects, we predicted that target detection in left-handers (unlike right-handers) should be equally proficient for leftward- and rightward-oriented action-related targets.

Method

Participants

Twenty-four left-handed undergraduate college students (14 females; Age: M = 23.29, SD = 8.85) participated in the study in return for course credit. All participants had normal or corrected-to-normal vision. Informed consent was obtained prior to participation in the study and all protocols used were approved by The University of Nevada, Reno Social, Behavioral, and Educational Institutional Review Board (IRB).

Stimuli and Apparatus

The stimuli, apparatus and procedures used in Experiment 2 were identical to those of Experiment 1. In Experiment 2, however, we also measured hand preference using a modified version of the Edinburgh Handedness Inventory (Oldfield, 1971). The questionnaire asks which hand is preferred for ten different tasks (e.g., writing, lighting a match, sweeping). To complete the test, participants enter a single plus sign (‘+’) to indicate a hand preference and two plus signs (‘++’) to indicate a strong hand preference. Plus signs for each hand (left, right) are summed separately, and a handedness score is computed as (Right − Left)/(Right + Left). Handedness scores range from +1.0 (strong right-hand preference) to −1.0 (strong left-hand preference). The mean score in our sample was −0.53 (SD = .24; range = −1.0 to −0.2).
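A minimal Python sketch of the handedness score (Right − Left)/(Right + Left). The row encoding is a hypothetical stand-in for the paper questionnaire. Each task is represented as a (left_marks, right_marks) pair of plus-sign strings.

```python
def tally(rows):
    """Sum the plus signs in the left and right columns.

    rows: one (left_marks, right_marks) pair per task, e.g. ("++", "") for a
    strong left-hand preference (hypothetical encoding of the questionnaire).
    """
    left = sum(left_marks.count("+") for left_marks, _ in rows)
    right = sum(right_marks.count("+") for _, right_marks in rows)
    return left, right


def handedness_score(rows):
    """(Right - Left) / (Right + Left): +1.0 = strong right, -1.0 = strong left."""
    left, right = tally(rows)
    if left + right == 0:
        raise ValueError("no hand preferences recorded")
    return (right - left) / (right + left)


# Five strong-left tasks and five mild-right tasks: (5 - 10) / 15 = -1/3.
print(handedness_score([("++", "")] * 5 + [("", "+")] * 5))
```

The denominator normalizes by the total number of plus signs, which bounds the score between −1.0 and +1.0 regardless of how many strong preferences a respondent marks.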

Results

Response accuracy in each condition is shown in Table 2. As in Experiment 1, accuracy was high in all conditions (≥ 97% correct). A 3-way mixed-model ANOVA comparing accuracy in each Stimulus Type, Orientation, and Button Mapping condition showed no significant main effects or interactions (all p-values > .05).

Table 2.

Mean (SD) accuracy (proportion correct) of left-handed observers in each Stimulus Type and target Orientation condition of Experiment 2.

| Stimulus Type | Left orientation, M (SD) | Right orientation, M (SD) |
|---|---|---|
| Action-Related | .98 (.06) | .98 (.06) |
| Non-Action-Related | .97 (.04) | .98 (.04) |

Next, we analyzed RTs using a three-way mixed-model ANOVA, with the within-subjects factors of Stimulus Type (action-related, non-action-related) and target Orientation (left, right), and the between-subjects factor of Button Mapping (map 1, map 2). There was a significant main effect of Stimulus Type (F(1,22) = 16.11, p < .001, ηp² = 0.44), in which target detection was faster for action-related (M = 1312 ms) than for non-action-related (M = 1709 ms) arrays. There was no significant main effect of target handle Orientation (F(1,22) = .01, p = .966, ηp² = 0.00; right: M = 1511 ms; left: M = 1503 ms), and, critically, there was no interaction between Stimulus Type and Orientation (F(1,22) = 1.42, p = .245, ηp² = 0.06; Figure 3a). There was no significant main effect of Button Mapping (F(1,22) = 2.26, p = .147, ηp² = 0.09), and Button Mapping did not enter into any significant interactions (Stimulus Type × Button Mapping: F(1,22) = 1.34, p = .260, ηp² = 0.06; Orientation × Button Mapping: F(1,22) = 0.11, p = .750, ηp² = 0.01; Stimulus Type × Orientation × Button Mapping: F(1,22) = 0.44, p = .515, ηp² = 0.02).

Figure 3.

Results of Experiment 2, with left-handed observers. (a) Target detection performance in left-handers was faster overall for action-related versus non-action-related stimuli, but (unlike right-handers in Experiment 1) search was equally efficient for leftward- and rightward-oriented targets. (b) Survey reports conducted after the main experiment confirmed that participants perceived the action-related stimuli to be more functional than the non-action-related stimuli.

In the Functionality Rating Task, left-handers gave the following ratings to rightward- and leftward-oriented action-related stimuli: right (M = 7.7, SD = 3.0), left (M = 7.5, SD = 3.1). Ratings for the rightward- and leftward-oriented non-action-related stimuli were almost identical (right: M = 3.6, SD = 3.0; left: M = 3.6, SD = 2.2). A two-way RM ANOVA on the left-handers’ rating data revealed a main effect of Stimulus Type (F(1, 23) = 607.10, p < .001, ηp² = 0.97), in which the action-related stimuli were perceived as more functional than the non-action-related stimuli (see Figure 3b). There was no significant main effect of Orientation (F(1, 23) = 0.02, p = .881, ηp² = 0.01) and no significant interaction between Stimulus Type and Orientation (F(1, 23) = 0.89, p = .354, ηp² = 0.04).

Finally, because of the extensive research literature on differences between right- and left-handers in their patterns of manual interaction with, and neural responses to, graspable objects, we directly compared RTs for action-related targets between the two groups. A mixed-model ANOVA on RTs for action-related targets, with the within-subjects factor of target Orientation (left, right) and the between-subjects factor of Handedness (left, right), revealed a significant main effect of Orientation (F(1,46) = 9.14, p = .004, ηp² = 0.17), in which RTs were faster overall for rightward- (M = 1314 ms) than for leftward-oriented (M = 1432 ms) handles. There was no significant main effect of Handedness (F(1,46) = 0.34, p = .554, ηp² = 0.01). Despite the pattern of results we observed for each group when analyzed separately, the interaction between Orientation and Handedness was marginal and did not reach statistical significance (F(1,46) = 2.76, p = .104, ηp² = 0.06).

Discussion

In the present study, we examined whether visual search performance in healthy observers was modulated by action-relevant cues in images of objects. We compared observers’ RTs and accuracy to detect targets embedded in stimulus arrays composed of action-related versus non-action-related images of objects. Critically, on half of the trials, the stimuli in the search array resembled functional door handles (‘action-related’ displays). On the remaining trials, the stimuli in the array were reconfigured so that they did not resemble functional handles (‘non-action-related’ displays). Observers performed a speeded target-present/absent detection task. On target-absent trials, all items in the array were oriented in the same direction. On target-present trials, the horizontal shaft of one of the stimuli (the target) was oriented in the direction opposite to that of the distractors. Accordingly, we also examined whether search on target-present trials was modulated by the left/right orientation of the target. We were particularly interested in whether differences in detection performance for action-related stimuli would reflect previously documented differences between right- (Experiment 1) and left-handed (Experiment 2) observers in long-term visuo-motor hand use patterns and in neural responses to graspable objects. We predicted that target detection in right-handers would be enhanced for action-related targets, particularly those whose orientation was compatible with a grasp by the dominant right hand, whereas detection performance in left-handers would be enhanced equally for leftward- and rightward-oriented action-related targets.

In line with our predictions, in Experiment 1 we found that right-handers were faster overall to detect targets in action-relevant than in non-action-relevant stimulus arrays. Importantly, however, right-handers were faster to detect rightward- than leftward-oriented targets, but only when the stimuli in the array depicted action-relevant handles. In Experiment 2, we found that left-handers were also faster overall to detect targets in action-relevant than in non-action-relevant stimulus arrays. However, their target detection was equally proficient for rightward- and leftward-oriented action-relevant handles. Target orientation had no influence on RTs in the non-action-relevant object arrays for either right- or left-handed participants. Follow-up behavioral ratings in each experiment confirmed that both right- and left-handers perceived the action-related ‘handles’ as more functional than their reconfigured non-action-related counterparts (although, interestingly, neither group explicitly rated rightward-oriented handles as more or less functional than their leftward-oriented counterparts). Overall, these data support and extend previous neuropsychological evidence (Humphreys & Riddoch, 2001) by showing that visual search for a target amongst multiple distractors is modulated by action properties of images.

Our data also extend a growing body of literature documenting differences between right- and left-handed individuals in patterns of typical hand use, perception, and neural responses toward graspable objects. In particular, our data suggest that long-term, well-established differences in visuo-motor associations between objects and hand actions can bias attentional selection mechanisms in the context of visual search. Despite the pattern of results reported in Experiments 1 and 2, however, a direct comparison of RTs for action-related targets between left- and right-handed observers showed an overall facilitatory effect for rightward-oriented targets (driven by the right-handed participants), and the interaction between handedness and handle orientation did not reach significance (p = .104). It could be argued that, based on previous studies of left-handers, detection performance should be equally (rather than more) proficient for leftward- versus rightward-oriented handles. There is some debate, however, about the extent to which handedness is linked to manual grasping preferences (Stone & Gonzalez, 2015). For example, numerous studies have found that left-handers show either no hand preference, or sometimes a right-hand preference, for grasping (Gallivan et al., 2011; Gonzalez et al., 2006; Gonzalez & Goodale, 2009; Gonzalez et al., 2007; Main & Carey, 2014), although it is unclear to what extent these results reflect a dominant role of the left hemisphere in visuomotor control (Frey, Funnell, Gerry, & Gazzaniga, 2005; Goodale, 1988) versus the influence of long-term environmental pressures on left-handers. 
Moreover, although it is the case that left-handers represent a relatively small proportion of the human population –probably around 10% (Gonzalez and Goodale, 2009), some have argued that there may be a sub-group of left-handed individuals who show a strong unilateral preference in favor of the left-hand, and that this pattern is not related to measures derived from self-reported handedness questionnaires (Gonzalez & Goodale, 2009; Gonzalez et al., 2007). Although such ‘left-left-handers’ represent a tiny fraction of the overall population, it would be interesting to examine whether such individuals show a reverse pattern of stimulus orientation-related effects to those observed here with right-handed individuals.

Some have argued that spatial biases in attention for action-related stimuli reflect statistical learning of the frequency of actions and events in everyday life (Humphreys & Riddoch, 2007; Humphreys, 2013). In everyday real-world environments, handles (as opposed to door knobs) certainly vary in their left/right orientation. Although hand preferences in grasping tasks involving small objects sometimes deviate surprisingly from what would be expected based on biomechanical efficiency (Bryden & Huszczynski, 2011; Bryden & Roy, 2006; Mamolo, Roy, Rohr, & Bryden, 2006), heavy or very large objects may, depending on their orientation, enforce a grasp by either the left or the right hand. One prediction following from statistical learning accounts (Humphreys & Riddoch, 2007; Humphreys, 2013), therefore, is that action-related stimuli that more frequently demand a grasping response by the preferred hand (e.g., scissors) may amplify differences in orientation effects on search between right- and left-handed observers.

Action-related images may be stronger competitors for selection than non-action-related objects because perceived relevance for grasping enhances early perceptual processing at the target location (Handy et al., 2003; Humphreys, 2013; Wykowska & Schubo, 2012). For example, previous work has shown that visual sensitivity is increased at spatial locations occupied by graspable versus non-graspable stimuli (Garrido-Vasquez & Schubo, 2014). Similarly, using event-related potentials (ERPs), Handy et al. (2003) found evidence of early sensory gain at the spatial location of images of tools (versus non-graspable objects) when they were positioned in the lower right visual field, locations that are presumably most relevant for visually guided grasping. Although planning and preparing a manual grasping movement can prime visual perception of features relevant to the target (Bekkering & Neggers, 2002; Bub, Masson, & Lin, 2013; Wykowska, Schubo, & Hommel, 2009), other studies have shown that visuo-motor neural responses to graspable objects in ventral (Roberts & Humphreys, 2010) and dorsal-stream areas (Chao & Martin, 2000; Proverbio, Adorni, & D’Aniello, 2011) can be triggered in the absence of any motor task. In our study, therefore, faster RTs for action-related stimuli relative to their non-action-related counterparts may reflect a similar sensory gain that facilitates the detection of a stimulus at a given spatial location.

It is possible that faster search performance for functional versus non-functional targets in our task arose because the stimuli in the non-functional arrays were less familiar, or less well grouped, than those in the functional displays. Given that lower-level stimulus attributes, such as global shape and pointedness, can influence attention and eye movements (Sigurdardottir, Michalak, & Sheinberg, 2014), we designed the stimuli in our functional and non-functional conditions to be closely matched for color, shape, spatial frequency, and luminance. As a consequence of controlling for these lower-level influences, however, the stimuli in our non-functional condition may have been less familiar to observers than their action-related counterparts. Visual search has been shown to be less efficient for unfamiliar versus familiar items (Flowers & Lohr, 1985; Johnston, Hawley, & Farnham, 1993), although this is not always the case (Johnston et al., 1993; Johnston, Hawley, Plewe, Elliot, & DeWitt, 1990), and similar arguments might be raised for other studies of ‘affordance’ effects on attention (Garrido-Vasquez & Schubo, 2014). Search performance has also been found to be superior when the stimuli are more strongly grouped according to Gestalt principles (Humphreys, Quinlan, & Riddoch, 1989; Wertheimer, 1923). Although we cannot rule out a contribution of grouping to the main effect of stimulus functionality, grouping accounts cannot explain the RT differences we observed between rightward- and leftward-oriented handles in the functional arrays for right-handed participants.

There has been increasing research interest and theoretical emphasis on the idea that a central goal of perception is action, and that it is not possible to understand fully the mechanisms of perception without studying action (Cisek & Kalaska, 2010; Creem-Regehr & Kunz, 2010). We have been careful here to distinguish the opportunities for genuine action that real, tangible objects present to an able-bodied observer, which are known classically as affordances (Gibson, 1979), from the action-related cues conveyed by images of graspable objects, which, following Humphreys (2013), we have referred to as ‘action properties’. This distinction highlights two contrasting theoretical approaches to visual perception: constructivist approaches, which view perception as a cognitive process, and the ecological approach, spearheaded by the work of James Gibson (Gibson, 1979), which postulates that environmental layout and meaning are specified directly by ambient light without the need for mental representations or information processing (Heft, 1981; Norman, 2002). The distinction also bears on a long-standing debate about the extent to which ‘affordances’ (or at least, effects on behavior and neural responses related to action cues conveyed by a stimulus), versus stimulus-response compatibility (SRC) effects, can be invoked by images of objects that do not themselves afford genuine physical grasping (Proctor & Miles, 2014). Although there is a growing body of literature documenting ‘affordance effects’ on attention, perception, and cognition, and although the term ‘affordance’ has frequently been used to describe the effects of action-relevant cues on behavior and neural responses, the overwhelming majority of these studies have relied on pictures of objects rather than real-world exemplars.

Some have argued that apparent affordance effects on responses, such as those of object handle orientation, may be strongly influenced by left-right spatial asymmetries in the visual features of the stimuli, or by their position on the screen (Cho & Proctor, 2010; Lien, Jardin, & Proctor, 2013; Phillips & Ward, 2002; Song, Chen, & Proctor, 2014), and can therefore be explained by abstract spatial codes that can affect any left-right response, rather than by appeal to action or affordance accounts (Proctor & Miles, 2014). Indeed, in their careful and critical review, Proctor and Miles (2014) concluded that “…there is little evidence to justify application of the concept of affordance to laboratory studies of stimulus-response compatibility effects, either in its ecological form or when it is divorced from direct perception and instead paired with a representational/computational approach” (pp. 227–228). Yet others have used non-elongated or asymmetrical stimuli and nevertheless found effects of the action properties of images on performance (Netelenbos & Gonzalez, 2015).

From an evolutionary perspective, the human brain presumably evolved to allow us to perceive and interact with real objects and environments (Heft, 2013); as such, images may constitute an unusual and relatively impoverished class of stimuli with which to characterize the mechanisms of naturalistic vision. Although surprisingly few studies have examined directly whether images are appropriate proxies for real-world objects in psychology and neuroscience, there is mounting evidence that images may be processed and represented differently from their real-world counterparts (Snow et al., 2011). An important direction for future research in this domain, and one that we are currently pursuing in our laboratory, is to determine how and why real objects and images elicit different effects on perception and attention.

In summary, the results of the current study suggest that the action properties of object images can influence selective attention in multi-element visual arrays, in which different stimuli compete for attentional selection. Our results are compatible with recent arguments that visual sensitivity is increased at the spatial location of images of action-related objects. The current findings underscore the view that capacity limits in attention reflect underlying physical limits in our ability to act coherently upon objects in the world with a limited number of effectors (Humphreys, 2013). We have outlined a number of predictions and directions for future research involving different populations and stimulus types, and highlighted potential caveats of using images to study perception, attention, and action.

Significance Statement

Images of graspable objects have been shown to attract attention relative to non-action-related images of objects. Here we show that when healthy adult observers search for a ‘target’ object among a set of ‘distractors’ in a visual array, search performance is faster when the images depict action-related objects compared to non-action-related objects. The extent to which action-related properties of images influenced search was also modulated by the observer’s hand preference. Right-handers were faster to detect search targets when the handle of the action-related object was oriented so as to be compatible with a grasp by the dominant right hand. Conversely, in left-handers, who have been shown to use both the left and right hands equally during manual tasks, search was equally fast for both leftward- and rightward-oriented action-related targets. Together, the results demonstrate that constraints from action can facilitate search in cluttered visual scenes.

Acknowledgments

Research reported in this publication was supported by a grant to J.C. Snow from the National Eye Institute of the National Institutes of Health under Award Number R01EY026701. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The research was also supported by the National Institute of General Medical Sciences of the National Institutes of Health under grant number P20 GM103650.

References

- Adam JJ, Muskens R, Hoonhorst S, Pratt J, Fischer MH. Left hand, but not right hand, reaching is sensitive to visual context. Experimental Brain Research. 2010;203(1):227–232. doi: 10.1007/s00221-010-2214-6.

- Baldauf D, Deubel H. Attentional landscapes in reaching and grasping. Vision Research. 2010;50(11):999–1013. doi: 10.1016/j.visres.2010.02.008.

- Bekkering H, Neggers SF. Visual search is modulated by action intentions. Psychological Science. 2002;13(4):370–374. doi: 10.1111/j.0956-7976.2002.00466.x.

- Brainard DH. The Psychophysics Toolbox. Spatial Vision. 1997;10(4):433–436.

- Bryden PJ, Huszczynski J. Under what conditions will right-handers use their left hand? The effects of object orientation, object location, arm position, and task complexity in preferential reaching. Laterality: Asymmetries of Body, Brain and Cognition. 2011;16(6):722–736. doi: 10.1080/1357650x.2010.514344.

- Bryden PJ, Roy EA. Preferential reaching across regions of hemispace in adults and children. Developmental Psychobiology. 2006;48(2):121–132. doi: 10.1002/dev.20120.

- Bub DN, Masson ME, Lin T. Features of planned hand actions influence identification of graspable objects. Psychological Science. 2013;24(7):1269–1276. doi: 10.1177/0956797612472909.

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. Neuroimage. 2000;12(4):478–484. doi: 10.1006/nimg.2000.0635.

- Cho DT, Proctor RW. The object-based Simon effect: grasping affordance or relative location of the graspable part? Journal of Experimental Psychology: Human Perception and Performance. 2010;36(4):853–861. doi: 10.1037/a0019328.

- Cisek P. Cortical mechanisms of action selection: the affordance competition hypothesis. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2007;362(1485):1585–1599. doi: 10.1098/rstb.2007.2054.

- Cisek P, Kalaska JF. Neural mechanisms for interacting with a world full of action choices. Annual Review of Neuroscience. 2010;33:269–298. doi: 10.1146/annurev.neuro.051508.135409.

- Creem-Regehr SH, Kunz BR. Perception and action. Wiley Interdisciplinary Reviews: Cognitive Science. 2010;1(6):800–810. doi: 10.1002/wcs.82.

- Creem-Regehr SH, Lee JN. Neural representations of graspable objects: are tools special? Cognitive Brain Research. 2005;22(3):457–469. doi: 10.1016/j.cogbrainres.2004.10.006.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annual Review of Neuroscience. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Flowers JH, Lohr DJ. How does familiarity affect visual search for letter strings? Perception & Psychophysics. 1985;37(6):557–567. doi: 10.3758/bf03204922.

- Frey SH, Funnell MG, Gerry VE, Gazzaniga MS. A dissociation between the representation of tool-use skills and hand dominance: insights from left- and right-handed callosotomy patients. Journal of Cognitive Neuroscience. 2005;17(2):262–272. doi: 10.1162/0898929053124974.

- Gallivan JP, Cavina-Pratesi C, Culham JC. Is that within reach? fMRI reveals that the human superior parieto-occipital cortex encodes objects reachable by the hand. The Journal of Neuroscience. 2009;29(14):4381–4391. doi: 10.1523/jneurosci.0377-09.2009.

- Gallivan JP, McLean A, Culham JC. Neuroimaging reveals enhanced activation in a reach-selective brain area for objects located within participants’ typical hand workspaces. Neuropsychologia. 2011;49(13):3710–3721. doi: 10.1016/j.neuropsychologia.2011.09.027.

- Garrido-Vasquez P, Schubo A. Modulation of visual attention by object affordance. Frontiers in Psychology. 2014;5:59. doi: 10.3389/fpsyg.2014.00059.

- Gibson JJ. The Ecological Approach to Visual Perception. Boston, MA: Houghton Mifflin; 1979.

- Gonzalez CL, Ganel T, Goodale MA. Hemispheric specialization for the visual control of action is independent of handedness. Journal of Neurophysiology. 2006;95(6):3496–3501. doi: 10.1152/jn.01187.2005.

- Gonzalez CL, Goodale MA. Hand preference for precision grasping predicts language lateralization. Neuropsychologia. 2009;47(14):3182–3189. doi: 10.1016/j.neuropsychologia.2009.07.019.

- Gonzalez CL, Whitwell RL, Morrissey B, Ganel T, Goodale MA. Left handedness does not extend to visually guided precision grasping. Experimental Brain Research. 2007;182(2):275–279. doi: 10.1007/s00221-007-1090-1.

- Goodale MA. Hemispheric differences in motor control. Behavioural Brain Research. 1988;30(2):203–214. doi: 10.1016/0166-4328(88)90149-0.

- Grezes J, Tucker M, Armony J, Ellis R, Passingham RE. Objects automatically potentiate action: an fMRI study of implicit processing. The European Journal of Neuroscience. 2003;17(12):2735–2740. doi: 10.1046/j.1460-9568.2003.02695.x.

- Handy TC, Grafton ST, Shroff NM, Ketay S, Gazzaniga MS. Graspable objects grab attention when the potential for action is recognized. Nature Neuroscience. 2003;6(4):421–427. doi: 10.1038/nn1031.

- Heft H. An examination of constructivist and Gibsonian approaches to environmental psychology. Population and Environment. 1981;4(4):227–245.

- Heft H. An ecological approach to psychology. Review of General Psychology. 2013;17(2):162.

- Humphreys GW. Beyond Serial Stages for Attentional Selection: The Critical Role of Action. Action Science. 2013:229–251.

- Humphreys GW, Quinlan PT, Riddoch MJ. Grouping processes in visual search: effects with single- and combined-feature targets. Journal of Experimental Psychology: General. 1989;118(3):258–279. doi: 10.1037//0096-3445.118.3.258.

- Humphreys GW, Riddoch MJ. Knowing what you need but not what you want: affordances and action-defined templates in neglect. Behavioural Neurology. 2001;13(1–2):75–87. doi: 10.1155/2002/635685.

- Humphreys GW, Riddoch MJ, Fortt H. Action relations, semantic relations, and familiarity of spatial position in Balint’s syndrome: crossover effects on perceptual report and on localization. Cognitive, Affective, & Behavioral Neuroscience. 2006;6(3):236–245. doi: 10.3758/cabn.6.3.236.

- Humphreys GW, Yoon EY, Kumar S, Lestou V, Kitadono K, Roberts KL, Riddoch MJ. The interaction of attention and action: from seeing action to acting on perception. British Journal of Psychology. 2010;101(Pt 2):185–206. doi: 10.1348/000712609x458927.

- Johnson-Frey SH, Newman-Norlund R, Grafton ST. A distributed left hemisphere network active during planning of everyday tool use skills. Cerebral Cortex. 2005;15(6):681–695. doi: 10.1093/cercor/bhh169.

- Johnston WA, Hawley KJ, Farnham JM. Novel popout: Empirical boundaries and tentative theory. Journal of Experimental Psychology: Human Perception and Performance. 1993;19(1):140.

- Johnston WA, Hawley KJ, Plewe SH, Elliot JM, DeWitt MJ. Attention capture by novel stimuli. Journal of Experimental Psychology: General. 1990;119(4):397. doi: 10.1037//0096-3445.119.4.397.

- Kleiner M, Brainard D, Pelli D, Ingling A, Murray R, Broussard C. What’s new in Psychtoolbox-3. Perception. 2007;36(14):1.

- Lien MC, Jardin E, Proctor RW. An electrophysiological study of the object-based correspondence effect: is the effect triggered by an intended grasping action? Attention, Perception, & Psychophysics. 2013;75(8):1862–1882. doi: 10.3758/s13414-013-0523-0.

- Linkenauger SA, Witt JK, Bakdash JZ, Stefanucci JK, Proffitt DR. Asymmetrical body perception: a possible role for neural body representations. Psychological Science. 2009;20(11):1373–1380. doi: 10.1111/j.1467-9280.2009.02447.x.

- Linkenauger SA, Witt JK, Stefanucci JK, Bakdash JZ, Proffitt DR. The effects of handedness and reachability on perceived distance. Journal of Experimental Psychology: Human Perception and Performance. 2009;35(6):1649–1660. doi: 10.1037/a0016875.

- Main JC, Carey DP. One hand or the other? Effector selection biases in right and left handers. Neuropsychologia. 2014;64:300–309. doi: 10.1016/j.neuropsychologia.2014.09.035.

- Mamolo CM, Roy EA, Bryden PJ, Rohr LE. The effects of skill demands and object position on the distribution of preferred hand reaches. Brain and Cognition. 2004;55(2):349–351. doi: 10.1016/j.bandc.2004.02.041.

- Mamolo CM, Roy EA, Rohr LE, Bryden PJ. Reaching patterns across working space: the effects of handedness, task demands, and comfort levels. Laterality. 2006;11(5):465–492. doi: 10.1080/13576500600775692.

- Murphy S, van Velzen J, de Fockert JW. The role of perceptual load in action affordance by ignored objects. Psychonomic Bulletin & Review. 2012;19(6):1122–1127. doi: 10.3758/s13423-012-0299-6.

- Netelenbos N, Gonzalez CL. Is that graspable? Let your right hand be the judge. Brain and Cognition. 2015;93:18–25. doi: 10.1016/j.bandc.2014.11.003.

- Norman J. Two visual systems and two theories of perception: An attempt to reconcile the constructivist and ecological approaches. Behavioral and Brain Sciences. 2002;25(1):73–96; discussion 96–144. doi: 10.1017/s0140525x0200002x.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- Pappas Z, Mack A. Potentiation of action by undetected affordant objects. Visual Cognition. 2008;16(7):892–915.

- Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. 1997;10(4):437–442.

- Phillips JC, Ward R. SR correspondence effects of irrelevant visual affordance: Time course and specificity of response activation. Visual Cognition. 2002;9(4–5):540–558.

- Proctor RW, Miles JD. Does the concept of affordance add anything to explanations of stimulus–response compatibility effects? The Psychology of Learning and Motivation. 2014;60:227–266.

- Proverbio AM, Adorni R, D’Aniello GE. 250 ms to code for action affordance during observation of manipulable objects. Neuropsychologia. 2011;49(9):2711–2717. doi: 10.1016/j.neuropsychologia.2011.05.019.

- Riddoch MJ, Humphreys GW, Edwards S, Baker T, Willson K. Seeing the action: neuropsychological evidence for action-based effects on object selection. Nature Neuroscience. 2003;6(1):82–89. doi: 10.1038/nn984.

- Riddoch MJ, Pippard B, Booth L, Rickell J, Summers J, Brownson A, Humphreys GW. Effects of action relations on the configural coding between objects. Journal of Experimental Psychology: Human Perception and Performance. 2011;37(2):580–587. doi: 10.1037/a0020745.

- Roberts KL, Humphreys GW. Action relationships concatenate representations of separate objects in the ventral visual system. Neuroimage. 2010;52(4):1541–1548. doi: 10.1016/j.neuroimage.2010.05.044.

- Roberts KL, Humphreys GW. Action relations facilitate the identification of briefly-presented objects. Attention, Perception, & Psychophysics. 2011;73(2):597–612. doi: 10.3758/s13414-010-0043-0.

- Schneider WX, Deubel H. Selection-for-perception and selection-for-spatial-motor-action are coupled by visual attention: A review of recent findings and new evidence from stimulus-driven saccade control. Attention and performance XIX: Common mechanisms in perception and action. 2002;(19):609–627.

- Sigurdardottir HM, Michalak SM, Sheinberg DL. Shape beyond recognition: form-derived directionality and its effects on visual attention and motion perception. Journal of Experimental Psychology: General. 2014;143(1):434–454. doi: 10.1037/a0032353.

- Snow JC, Pettypiece CE, McAdam TD, McLean AD, Stroman PW, Goodale MA, Culham JC. Bringing the real world into the fMRI scanner: repetition effects for pictures versus real objects. Scientific Reports. 2011;1:130. doi: 10.1038/srep00130.

- Song X, Chen J, Proctor RW. Correspondence effects with torches: grasping affordance or visual feature asymmetry? Quarterly Journal of Experimental Psychology. 2014;67(4):665–675. doi: 10.1080/17470218.2013.824996.

- Stone KD, Bryant DC, Gonzalez CL. Hand use for grasping in a bimanual task: evidence for different roles? Experimental Brain Research. 2013;224(3):455–467. doi: 10.1007/s00221-012-3325-z.

- Stone KD, Gonzalez CL. The contributions of vision and haptics to reaching and grasping. Frontiers in Psychology. 2015;6:1403. doi: 10.3389/fpsyg.2015.01403.

- Treisman AM, Gelade G. A feature-integration theory of attention. Cognitive Psychology. 1980;12(1):97–136. doi: 10.1016/0010-0285(80)90005-5.

- Tucker M, Ellis R. On the relations between seen objects and components of potential actions. Journal of Experimental Psychology: Human Perception and Performance. 1998;24(3):830–846. doi: 10.1037//0096-1523.24.3.830.

- Wertheimer M. Laws of organization in perceptual forms. In: A source book of Gestalt Psychology. London: Routledge & Kegan Paul; 1923.

- Wolfe JM, Pashler H. Visual Search. Attention. 1998;1:13–73.

- Wykowska A, Schubo A. Action intentions modulate allocation of visual attention: electrophysiological evidence. Frontiers in Psychology. 2012;3:379. doi: 10.3389/fpsyg.2012.00379.

- Wykowska A, Schubo A, Hommel B. How you move is what you see: action planning biases selection in visual search. Journal of Experimental Psychology: Human Perception and Performance. 2009;35(6):1755–1769. doi: 10.1037/a0016798.

- Yoon EY, Humphreys GW, Riddoch MJ. The paired-object affordance effect. Journal of Experimental Psychology: Human Perception and Performance. 2010;36(4):812–824. doi: 10.1037/a0017175.