Abstract

The human face conveys emotional and social information, but it is not well understood how these two aspects influence face perception. In order to model a group situation, two faces displaying happy, neutral or angry expressions were presented. Importantly, faces were either facing the observer, or they were presented in profile view directed towards, or looking away from each other. In Experiment 1 (n = 64), face pairs were rated regarding perceived relevance, wish-to-interact, and displayed interactivity, as well as valence and arousal. All variables revealed main effects of facial expression (emotional > neutral), face orientation (facing observer > towards > away) and interactions showed that evaluation of emotional faces strongly varies with their orientation. Experiment 2 (n = 33) examined the temporal dynamics of perceptual-attentional processing of these face constellations with event-related potentials. Processing of emotional and neutral faces differed significantly in N170 amplitudes, early posterior negativity (EPN), and sustained positive potentials. Importantly, selective emotional face processing varied as a function of face orientation, indicating early emotion-specific (N170, EPN) and late threat-specific effects (LPP, sustained positivity). Taken together, perceived personal relevance to the observer—conveyed by facial expression and face direction—amplifies emotional face processing within triadic group situations.

Keywords: Emotion, Facial Expression, Face Orientation, Personal Relevance, Social Interaction, EEG / ERP

Introduction

Efficiently gauging interpersonal relations is crucial for adequate social functioning. In this regard, the human face conveys salient information about other people’s emotional state and their intention (Ekman and Friesen, 1975; Baron-Cohen, 1997; Adolphs and Spezio, 2006). In a group setting, the personal relevance of other people’s facial expressions also depends on who is the target of the displayed emotion. This information is conveyed by face or gaze orientation relative to the observer (Itier and Batty, 2009; Graham and LaBar, 2012) and relative to the other members of a group. In such a social context, the extent to which an observer attributes personal relevance to a face he/she is confronted with (see Scherer et al., 2001; Sander et al., 2003) is likely to change perceptual processes and behavioural responding to other people’s emotions. However, the vast majority of recent research modelled dyadic interactions by presenting single faces to an observer, and less is known about emotion processing when multiple faces are involved (e.g. Puce et al., 2013).

Face processing in dyadic situations

Recent research on dyadic interactions (one sender, one observer) suggests that distinct brain structures are specialized for face processing (e.g. fusiform face area, posterior superior temporal sulcus; Haxby and Gobbini, 2011). Further studies indicated neural substrates that are involved in the processing of both emotional and social information in facial and other stimulus categories (e.g. amygdala, insula and medial prefrontal cortex; Olsson and Ochsner, 2008; Schwarz et al., 2013). Event-related brain potential (ERP) studies revealed selective processing of structural and emotional facial features (Schweinberger and Burton, 2003; Schupp et al., 2006; Amodio et al., 2014). Most prominently, the N170 component has been related to structural face encoding in temporo-occipital areas (between 130 and 200 ms; Bentin et al., 1996, 2007; but see Thierry et al., 2007). For example, enhanced N170 amplitudes have been observed to inverted faces (e.g. Itier and Taylor, 2002) or when attending to faces in a spatial attention task (e.g. Holmes et al., 2003). More recently, the N170 was found to also be sensitive to social information such as own-age or own-race biases, stereotyping and social categorization (e.g. enhanced N170 to in-group faces; Amodio et al., 2014; Ofan et al., 2011; Vizioli et al., 2010; Wiese et al., 2008). This suggests that social information may already affect early stages of structural face encoding. Other ERP components have been suggested as indicators of facilitated emotion processing. Specifically, the early posterior negativity (occipito-temporal EPN, 200–300 ms) and late positive potentials (centro-parietal LPP, 300–700 ms) have been observed for angry, but also for happy faces compared with neutral facial expressions (Schupp et al., 2004; Williams et al., 2006). Furthermore, EPN and LPP vary as a function of explicitly instructed social information. For example, pronounced processing differences have been observed for emotional faces while participants anticipate giving a speech (Wieser et al., 2010), receiving evaluative feedback (van der Molen et al., 2013), and during the anticipation of a social meeting situation (Bublatzky et al., 2014).

Face orientation and personal relevance

Another line of research examined how the direction of a face or gaze (relative to the observer) can modulate the effects of emotional facial expressions (Itier and Batty, 2009; Graham and LaBar, 2012). Although gaze direction indicates the focus of attention, other people’s head and/or body orientation in a group situation provide relevant information regarding the direction of potential action, interpersonal relationship and communicative constellations. Thus, in reference to the observer (e.g. Sander et al., 2003; Northoff et al., 2006; Herbert et al., 2011), the personal relevance of an emotional facial expression may vary depending on who is the target of an emotion.

For instance, fearful expressions averted from the observer, but towards the location of threat, have been shown to elicit more negative affect in the observer than frontal fearful faces (the converse pattern was observed with angry faces; Adams and Kleck, 2003; Hess et al., 2007; Sander et al., 2007). These findings have been complemented by recent neuroimaging studies showing amygdala activation depending on facial expression and face/gaze orientation (N’Diaye et al., 2009; Sauer et al., 2014; but see Adams et al., 2003). Thus, enhanced neural activation in emotion and face-sensitive structures may provide the basis for rapid and adequate behavioural responding; however, perceptual and attentional processes in group settings are not well understood.

The basic assumption of this research was that face processing varies as a function of perceived personal relevance in a triadic group situation, jointly conveyed by facial emotion and face orientation with respect to the observer. Facial emotions were implemented by presenting two faces side by side, each displaying happy, neutral or angry facial expressions. Importantly, to vary sender-recipient constellations within this triad (i.e. two faces on the screen and the observer), faces were presented either both facing the observer (frontally directed), or in profile views directed towards, or looking away from each other. The impact of these triadic situations was examined by means of self-reported picture evaluations (Experiment 1) and ERP measures providing insights into the temporal dynamics of perceptual–attentional face processing (Experiment 2).

Hypotheses

Based on previous research, main effects of facial expression were predicted for both self-report and ERP measures. We expected to replicate previous findings regarding ratings of valence and arousal for facial expressions (e.g. Alpers et al., 2011). With regard to perceived personal relevance, emotional faces were predicted to be more relevant than neutral faces. Similarly, the motivation to join in such a situation (wish-to-interact) and ratings of displayed interactivity were assumed to be particularly pronounced for emotional compared with neutral face constellations (Experiment 1). Building upon previous ERP studies that presented single faces (Schupp et al., 2004; Hinojosa et al., 2015), larger N170, EPN and LPP components were expected for both angry and happy faces relative to neutral facial expressions (Experiment 2).

Regarding face orientation, a linear gradient was hypothesized (facing the observer > facing each other > looking away) for picture evaluation (Experiment 1) and electrocortical processing (Experiment 2). However, facial frontal and profile views vary in structural features, and such differences have been associated with modulations of the N170 amplitude (e.g. pronounced N170 to averted faces; Caharel et al., 2015). As toward- and away-oriented face pairs were physically highly similar (i.e. graphical elements were mirrored), this comparison may help to disentangle structural and emotional face processing at different processing stages (N170, EPN and LPP).

Of particular interest is the interaction between facial emotion and its direction. According to the notion of a threat-advantage in face processing (Öhman et al., 2001), being confronted with two frontally directed angry faces should be most relevant to the observer. Furthermore, observing others in a threat-related interaction (towards-directed) provides important information about social relationships in which the observer participates, and is accordingly predicted to have a stronger impact than the condition showing threat oriented away from each other and the observer. A similar gradient across orientation conditions is expected for happy faces. Given that the orientation gradient is presumed to be attenuated for neutral faces, significant interactions of facial expression and orientation were predicted for the rating measures in Experiment 1.

With regard to ERP measures in Experiment 2, EPN and LPP amplitudes for angry and/or happy faces were predicted to be most distinct from neutral faces when directed at the observer (Schupp et al., 2004, 2006; Williams et al., 2006), and we hypothesized a gradual decrease of this selective emotion effect for the other face directions (i.e. EPN and LPP effects decreasing from frontal > toward > away-oriented faces). Finally, the interaction between emotion and orientation effects may vary across time. For instance, explicit relevance instructions (i.e. ‘you are going to meet this person later on’) have been shown to specifically modulate happy face processing at later processing stages (i.e. enhanced LPP; Bublatzky et al., 2014). In contrast, for threat processing, interactions between expression and orientation may emerge earlier, given the emphasis on speed in threat processing (Öhman et al., 2001).

Experiment 1

Methods

Participants. Sixty-four healthy volunteers (17 males) between the ages of 18 and 35 (M = 23.8, s.d. = 5.2) were recruited at the University of Mannheim. Participants scored within a normal range with regard to depression, general and social anxiety (Beck Depression Inventory M = 5.3, s.d. = 5.6; STAI-State M = 36.8, s.d. = 8.0; STAI-Trait M = 38.9, s.d. = 8.8; SIAS M = 18.1, s.d. = 8.8; FNE-brief version M = 35.5, s.d. = 8.5). All participants were fully informed about the study protocol before providing informed consent according to University of Mannheim ethics guidelines. Participants received partial course credits.

Materials and presentation. Happy, neutral and angry faces were selected from the Karolinska Directed Emotional Faces (KDEF) (Lundqvist et al., 1998). Pictures of eight face actors1 were combined to 30 pairs each depicting a female and a male face displaying the same facial expression. To manipulate the perceived personal relevance to the participant, emotional and neutral faces were presented either facing the observer (frontal view), directed toward or away from each other (profile views 90°). Pictures (800 × 600 pixels) were presented randomly with regard to facial expression and face orientation. Each trial started with a fixation cross (1 s), then a picture presentation (2 s), followed by a rating screen (no time limit). Pictures were rated on the dimensions of personal ‘relevance’ (‘How relevant is this situation for you personally?’), ‘wish to interact’ (‘How much would you like to interact with the displayed people?’), and displayed ‘interactivity’ (‘How much do these people interact with each other?’) with nine-point visual analog scales ranging from ‘not at all’ to ‘very much’. Furthermore, picture ‘valence’ (‘How pleasant or unpleasant is this situation?’) and ‘arousal’ (‘How arousing is this situation?’) were rated using a computerized version of the self-assessment manikin (SAM) (Bradley and Lang, 1994). Each participant viewed a different order of pictures, which were presented on a 22-inch computer screen ∼1 m in front of the participant.

Procedure. After participants completed the questionnaires, a practice run of six picture trials familiarized them with the rating procedure. Participants were instructed to attend to each picture presented on the screen, and to rate the displayed face pairs according to all dimensions described earlier. To reduce the number of ratings per picture trial, pictures were presented three times followed by either one or two rating questions. The sequence of rating questions was balanced across participants.

Data reduction and analyses. For each rating dimension, repeated measures ANOVAs were conducted including the factors Facial Expression (happy, neutral, angry) and Orientation (frontal, toward, away).2 The Greenhouse-Geisser procedure was used to correct for violations of sphericity, and partial η2 (ηp2) is reported as a measure of effect size. To control for type I error, Bonferroni correction was applied for post hoc t-tests.
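
As a reading aid, partial η2 can be recovered from any reported F value and its degrees of freedom via ηp2 = F × df_effect / (F × df_effect + df_error). The following minimal Python sketch illustrates this identity together with a simple Bonferroni adjustment. The function names are illustrative assumptions and are not part of the original analysis pipeline.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def bonferroni(p_values: list) -> list:
    """Multiply each p value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Example: main effect of Facial Expression on relevance, F(2,126) = 42.74
print(round(partial_eta_squared(42.74, 2, 126), 2))
```

Applied to the relevance ratings, the identity reproduces the reported effect size of 0.40 for Facial Expression.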

Results

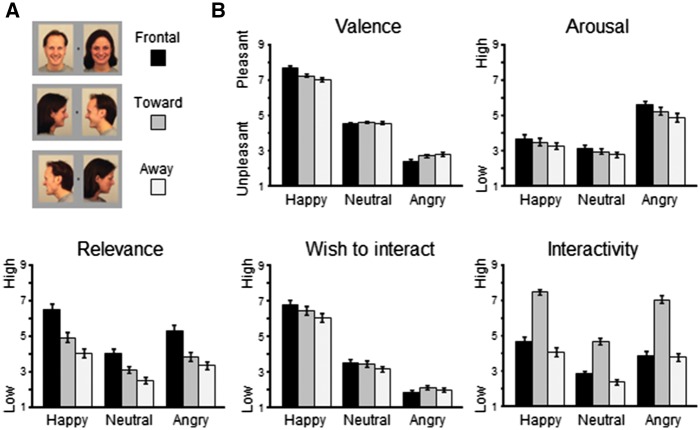

Relevance. Rated relevance differed significantly for Facial Expression, F(2,126) = 42.74, P < 0.001, ηp2 = 0.40, and Orientation, F(2,126) = 48.16, P < 0.001, ηp2 = 0.43 (Figure 1). Happy and angry faces were rated as more relevant than neutral faces, and happy as more relevant than angry facial expressions, all Ps < 0.001. Furthermore, frontally oriented faces were rated as more relevant than toward-oriented faces, and faces directed towards were more relevant than away-oriented faces, all Ps < 0.001. Of particular interest, the significant interaction Facial Expression × Orientation, F(4,252) = 13.26, P < 0.001, ηp2 = 0.17, indicated a higher relevance of emotional compared with neutral facial expressions that varied as a function of face orientation (frontal > toward > away), all Ps < 0.001. Thus, emotional facial expressions were rated as most relevant when directed frontally to the observer, followed by towards oriented faces, and least relevant when directed away from each other.

Fig. 1.

(A) Illustration of the experimental stimulus materials. (B) Mean ratings (±SEM) for happy, neutral, and angry face pairs plotted for each face orientation (frontal, toward, and away).

Wish-to-interact. The rated wish to interact with the displayed people varied for Facial Expression, F(2,126) = 298.29, P < 0.001, ηp2 = 0.83 and Orientation, F(2,126) = 22.96, P < 0.001, ηp2 = 0.27. Wish-to-interact was strongest for happy, then neutral and least for angry facial expressions, all Ps < 0.001. Regarding face orientation, the wish-to-interact was more pronounced for frontally and toward directed compared with away-oriented faces, Ps < 0.001. No difference was observed for frontal compared with toward face orientation, P = 0.99.

Furthermore, the interaction of Facial Expression × Orientation was significant, F(4,252) = 11.53, P < 0.001, ηp2 = 0.16. For happy and neutral faces, wish-to-interact was more pronounced for frontally and toward directed as compared with away-oriented faces, Ps < 0.001; no difference was observed for frontal relative to toward orientation, Ps > 0.19. For angry faces, wish-to-interact was less pronounced for frontal compared with toward face orientation, P < 0.01; no further comparison reached significance, Ps > 0.16.

Interactivity. Interactivity ratings revealed main effects of Facial Expression, F(2,126) = 91.66, P < 0.001, ηp2 = 0.59, and Orientation, F(2,126) = 98.87, P < 0.001, ηp2 = 0.61. Interactivity was rated higher for happy and angry faces compared with neutral faces, Ps < 0.001, and happy faces were rated as more interactive than angry faces, P < 0.01. Furthermore, interactivity ratings were most pronounced for toward-oriented faces compared with both frontal and away orientation, Ps < 0.001, and higher for frontal compared with away-oriented face pairs, P < 0.05.

The significant interaction Facial Expression × Orientation, F(4,252) = 22.30, P < 0.001, ηp2 = 0.26, indicated pronounced differences for happy and neutral faces as a function of orientation (toward > frontal > away), Ps < 0.01. However, for angry faces, toward orientation was rated as more interactive than both frontal- and away-oriented faces, Ps < 0.001, but no difference was found for frontal compared with away-oriented angry faces, P = 1.0.

Valence. Similar to studies using single face stimuli, valence ratings differed as a function of Facial Expression, F(2,126) = 636.67, P < 0.001, ηp2 = 0.91. Happy faces were perceived as more pleasant compared with neutral and angry faces, Ps < 0.001, and neutral faces as more pleasant than angry faces, P < 0.001. The main effect of Orientation approached significance, F(2,126) = 2.93, P = 0.08, ηp2 = 0.04.

Of particular interest, valence ratings for Facial Expression varied as a function of Orientation, F(4,252) = 43.77, P < 0.001, ηp2 = 0.41. Whereas neutral faces were rated similarly regardless of orientation, Ps > 0.75, pleasure ratings for happy faces varied as a function of orientation (frontal > toward > away), Ps < 0.001, and for angry faces in the opposite direction (frontal < toward < away), Ps < 0.05.

Arousal. Rated arousal varied for Facial Expression, F(2,126) = 97.54, P < 0.001, ηp2 = 0.61, and Orientation, F(2,126) = 17.78, P < 0.001, ηp2 = 0.22. Both happy and angry faces were perceived as more arousing compared with neutral faces, Ps < 0.01, and angry as more arousing than happy facial expressions, P < 0.001. Regarding face orientation, frontal faces were rated as more arousing than faces oriented toward and away from each other, Ps < 0.01; in turn, toward-oriented faces were more arousing than faces directed away, P < 0.01.

Furthermore, the interaction Facial Expression × Orientation was significant, F(4,252) = 3.97, P < 0.01, ηp2 = 0.06. Each facial expression was rated as more arousing when presented frontally, compared with toward, and relative to away-oriented face pairs (frontal > toward > away), Ps < 0.01; these differences were most pronounced for angry faces, P < 0.001.

Discussion

The proposed personal relevance gradient—jointly conveyed by face orientation and facial expression—was confirmed by self-report data. Ratings provided clear evidence that the impact of two emotional faces differs as a function of face orientation. Apparently, the perceived relevance was particularly pronounced for face pairs directed at the observer (frontal view), and even more relevant when displaying emotional compared with neutral facial expressions. Furthermore, facial profile views directed towards each other were rated as more relevant compared with faces looking away from each other. Thus, when two faces are seen, head direction (relative to the observer and the respective third person) indicates different group constellations changing personal relevance to the observer. Furthermore, this response gradient was present for wish-to-interact, picture valence, and arousal ratings. In contrast, serving as a question without direct (self-) reference to the observer (Herbert et al., 2011), displayed interactivity was rated highest for emotional faces directed towards each other. With regard to differences between facial emotions (happy or threat advantage; Öhman et al., 2001), happy faces were rated as more relevant than angry faces; however, the opposite pattern was observed for arousal ratings. This distinction between reported arousal and personal relevance may be particularly informative regarding behavioural and neuroimaging studies which show either arousal- or relevance-based result patterns (Schupp et al., 2004; N’Diaye et al., 2009; Bublatzky et al., 2010, 2014). To follow up on the perceptual-attentional mechanisms in such triadic group constellations, Experiment 2 measured event-related brain potentials to differently oriented, emotional and neutral facial expressions.

Experiment 2

Methods

Participants. Thirty-three healthy volunteers (16 female) who had not participated in Experiment 1 were recruited from the University of Mannheim. Participants were between 19 and 35 years of age (M = 22.6, s.d. = 3.3) and scored within the normal range on depression, trait anxiety and social anxiety (BDI-V M = 15.8, s.d. = 10.8; STAI-State M = 33.3, s.d. = 7.0; STAI-Trait M = 34.8, s.d. = 9.7; SIAS M = 13.4, s.d. = 7.2; FNE-brief M = 29.7, s.d. = 8.2).

Material. Stimulus materials were identical to Experiment 1. However, presentation features were adjusted for EEG/ERP methodology. First, to reduce interference by rapidly changing face directions, face stimuli were presented in separate blocks for frontally directed, toward- and away-oriented face pairs. Block order was balanced across participants. Second, to focus on implicit stimulus processing (Schupp et al., 2006), pictures were presented (1 s each) as a continuous picture stream (without perceivable inter-trial interval). To account for potential picture sequence effects (e.g. Flaisch et al., 2008; Schweinberger and Neumann, 2016), several constraints were implemented: Stimulus randomization was restricted to no more than three repetitions of the same facial expression, equal transition probabilities between facial expression categories, and no immediate repetition of the same face actor displaying the same emotion; each participant viewed an individual picture sequence. Third, to enhance trial number per condition, each picture was presented 10 times per block (300 trials), resulting in a total number of 900 presentations. Finally, as the location of emotionally meaningful face areas (eye, mouth) varies as a function of face orientation, picture size was reduced (1280 × 960 pixels) to approximate visual angle across conditions (frontal 3.6°, toward 2.1°, away 4.9°).
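
The sequence constraints described above can be illustrated with a short Python sketch. It assumes that each block contains 30 pictures (three expressions × 10 face pairs, a hypothetical breakdown consistent with 300 trials per block), that "no more than three repetitions" means at most three consecutive trials with the same expression, and that a picture is identified by expression and pair. The constraint of equal transition probabilities is not enforced here, and the function name and greedy restart strategy are illustrative rather than the authors' procedure.

```python
import random

EXPRESSIONS = ("happy", "neutral", "angry")


def constrained_sequence(n_pairs=10, n_reps=10, max_run=3, seed=None, max_tries=1000):
    """Draw one block of (expression, pair) trials under two sequence constraints.

    Assumed constraints: at most `max_run` consecutive trials with the same
    expression, and never the same picture twice in a row.
    """
    rng = random.Random(seed)
    pictures = [(e, p) for e in EXPRESSIONS for p in range(n_pairs)]
    n_trials = len(pictures) * n_reps
    for _ in range(max_tries):
        remaining = {pic: n_reps for pic in pictures}
        sequence = []
        run_length = 0
        while len(sequence) < n_trials:
            last = sequence[-1] if sequence else None
            candidates = [
                pic for pic, count in remaining.items()
                if count > 0
                and pic != last
                and not (last is not None and pic[0] == last[0] and run_length >= max_run)
            ]
            if not candidates:
                break  # dead end: discard this attempt and start over
            # Weight by remaining presentations so pictures are used up evenly
            weights = [remaining[pic] for pic in candidates]
            choice = rng.choices(candidates, weights=weights, k=1)[0]
            same_expression = last is not None and choice[0] == last[0]
            run_length = run_length + 1 if same_expression else 1
            remaining[choice] -= 1
            sequence.append(choice)
        else:
            return sequence  # loop finished without hitting a dead end
    raise RuntimeError("no valid sequence found; relax the constraints")


block = constrained_sequence(seed=1)
print(len(block), block[:3])
```

Calling the function with a different seed for each participant would yield individual picture sequences, in the spirit of the procedure described above.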

Procedure. After the EEG sensors were attached, participants were seated in a dimly-lit and sound-attenuated room. During a practice run (24 trials), participants were familiarized with the picture presentation procedure. Subsequently, participants were instructed to attend to each picture appearing on the screen, and the main experiment started with the three experimental blocks separated by brief breaks.

EEG recording. Electrophysiological data were recorded using a 64-channel actiCap system (BrainProducts, Germany) with Ag/AgCl active electrodes mounted into a cap according to the 10-10 system (Falk Minow Services, Germany). VisionRecorder acquisition software and BrainAmp DC amplifiers (BrainProducts) served to collect continuous EEG with a sampling rate of 500 Hz, with FCz as the recording reference, and on-line filtering from 0.1 to 100 Hz. Electrode impedances were kept below 5 kΩ. Off-line analyses were performed using VisionAnalyzer 2.0 (BrainProducts) and EMEGS (Peyk et al., 2011) including low-pass filtering at 30 Hz, artifact detection, sensor interpolation, baseline-correction (based on mean activity in the 100 ms time window preceding picture onset), and conversion to an average reference (Junghöfer et al., 2006). Ocular correction of horizontal and vertical (e.g. eye blinks) eye movements was conducted via a semi-automatic Independent Component Analysis-based procedure (Makeig et al., 1997). Stimulus-synchronized epochs were extracted lasting from 100 ms before to 800 ms after picture onset. Finally, separate average waveforms were calculated for the experimental conditions Facial Expression (happy, neutral, angry) and Orientation (frontal, toward, away), for each sensor and participant.
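
Two of the off-line steps named above, baseline correction and conversion to an average reference, can be sketched in a few lines of plain Python. This is a didactic illustration under simplifying assumptions (an epoch stored as a list of channels, each a list of samples beginning 100 ms before picture onset); it does not reproduce VisionAnalyzer or EMEGS, and it omits filtering, artifact handling and interpolation.

```python
from statistics import fmean

SAMPLING_RATE_HZ = 500  # sampling rate reported in the text
BASELINE_MS = 100       # pre-stimulus baseline reported in the text


def baseline_correct(epoch, sampling_rate_hz=SAMPLING_RATE_HZ, baseline_ms=BASELINE_MS):
    """Subtract each channel's mean pre-stimulus amplitude from that channel."""
    n_baseline = baseline_ms * sampling_rate_hz // 1000  # 50 samples at 500 Hz
    corrected = []
    for channel in epoch:
        offset = fmean(channel[:n_baseline])
        corrected.append([sample - offset for sample in channel])
    return corrected


def average_reference(epoch):
    """Subtract the across-channel mean from every channel at each time point."""
    n_samples = len(epoch[0])
    channel_mean = [fmean(channel[t] for channel in epoch) for t in range(n_samples)]
    return [[channel[t] - channel_mean[t] for t in range(n_samples)] for channel in epoch]


# Toy epoch with two channels: 50 baseline samples followed by 10 post-onset samples
toy_epoch = [[1.0] * 50 + [3.0] * 10, [2.0] * 60]
rereferenced = average_reference(baseline_correct(toy_epoch))
print(rereferenced[0][-1], rereferenced[1][-1])
```

After average referencing, the channel values sum to zero at every time point, which is the defining property of this reference scheme.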

Data reduction and analyses. To test effects of facial expression and orientation on face processing, a two-step procedure was used. Visual inspection was supported by single sensor waveform analyses to determine relevant sensor clusters and time windows. For the waveform analyses, ANOVAs containing the factors Facial Expression (happy, neutral, angry) and Orientation (frontal, toward, away) were calculated for each time point after picture onset separately for each individual sensor to highlight main effects and interactions (Bublatzky et al., 2010; Bublatzky and Schupp, 2012). Similar to previous research that used single faces (e.g. Schupp et al., 2004), processing differences for Facial Expression and Orientation were observed over occipito-temporal (N170, EPN)3 and centro-parietal sensor sites (LPP, sustained positivity).4
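
The logic of a point-wise waveform analysis can be illustrated as follows. The reported analyses used two-factor ANOVAs; for brevity, this sketch reduces the design to a single within-subject factor and omits any sphericity correction, so it is a simplified illustration rather than the procedure actually applied. The data layout `waveforms[subject][condition][time]` and the function names are assumptions.

```python
from statistics import fmean


def rm_anova_f(data):
    """One-way repeated-measures F for data[subject][condition] at one time point."""
    n, k = len(data), len(data[0])
    grand = fmean(x for row in data for x in row)
    cond_means = [fmean(row[c] for row in data) for c in range(k)]
    subj_means = [fmean(row) for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    return (ss_cond / df_cond) / (ss_error / df_error)


def pointwise_f(waveforms):
    """Compute the F value at every time point of waveforms[subject][condition][time]."""
    n_times = len(waveforms[0][0])
    return [
        rm_anova_f([[cond[t] for cond in subject] for subject in waveforms])
        for t in range(n_times)
    ]


# Toy data: 3 subjects x 3 conditions x 2 time points
toy = [
    [[1.0, 2.0], [2.0, 4.0], [4.0, 8.0]],
    [[2.0, 4.0], [3.0, 6.0], [3.0, 6.0]],
    [[3.0, 6.0], [5.0, 10.0], [6.0, 12.0]],
]
print(pointwise_f(toy))
```

Runs of adjacent time points and sensors with large F values would then guide the choice of time windows and sensor clusters for the main analyses.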

The following main analyses were based on mean amplitudes in bilateral clusters within selected time windows for the N170 (time: 150–200 ms; sensors P7, P8), EPN (time: 200–300 ms; sensors PO9, PO10), LPP (time: 310–450 ms; sensors: CP1, CP2, CP3, CP4, P3, P4) and a sustained positivity (time: 450–800 ms; sensors: P1, P2). Data were entered into repeated measures ANOVAs including the factors Facial Expression (happy, neutral, angry), Orientation (frontal, toward, away), and Laterality (left, right). Statistical correction procedures were done as described earlier.
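
The mean-amplitude scoring described above can be sketched as follows. The time windows and sensors are taken from the text; the left/right assignment follows the 10-10 convention of odd-numbered sensors over the left and even-numbered sensors over the right hemisphere. The data layout (a dictionary mapping sensor names to sample lists that start 100 ms before onset at 500 Hz), the exclusive window end and the function names are assumptions for illustration.

```python
from statistics import fmean

SAMPLING_RATE_HZ = 500
EPOCH_START_MS = -100

# component: ((window start, window end) in ms, left sensors, right sensors)
COMPONENTS = {
    "N170": ((150, 200), ("P7",), ("P8",)),
    "EPN": ((200, 300), ("PO9",), ("PO10",)),
    "LPP": ((310, 450), ("CP1", "CP3", "P3"), ("CP2", "CP4", "P4")),
    "SustainedPositivity": ((450, 800), ("P1",), ("P2",)),
}


def ms_to_sample(t_ms):
    """Convert a latency relative to picture onset into a sample index."""
    return round((t_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000)


def mean_amplitude(erp, component, hemisphere):
    """Average an ERP over a component's time window and one hemisphere's cluster."""
    (start_ms, end_ms), left, right = COMPONENTS[component]
    sensors = left if hemisphere == "left" else right
    start, stop = ms_to_sample(start_ms), ms_to_sample(end_ms)  # stop is exclusive
    return fmean(fmean(erp[s][start:stop]) for s in sensors)


# Toy ERP: every sensor carries a ramp equal to its sample index (450 samples)
sensor_names = [s for _, left, right in COMPONENTS.values() for s in left + right]
toy_erp = {name: [float(i) for i in range(450)] for name in sensor_names}
print(mean_amplitude(toy_erp, "N170", "left"))
```

One value per participant, condition and hemisphere obtained in this way would form the input to the repeated measures ANOVAs with the factors Facial Expression, Orientation and Laterality.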

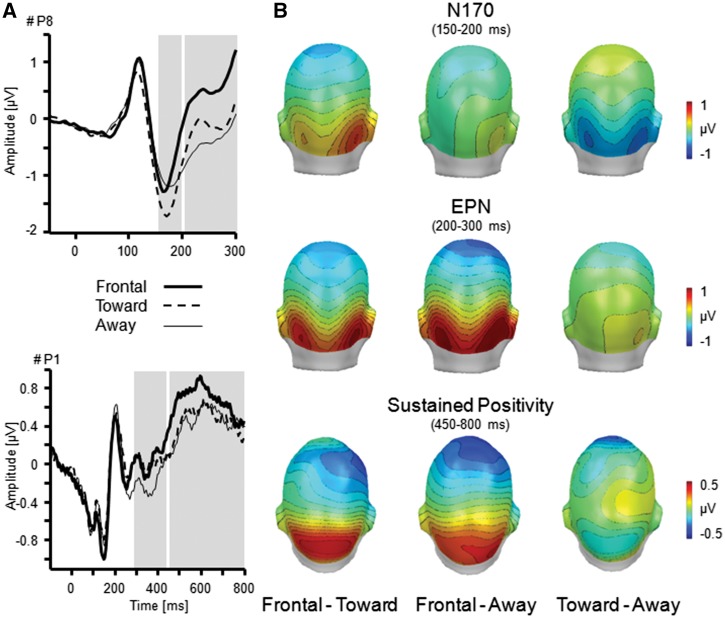

Results

N170. The N170 component was modulated by Facial Expression, F(2,64) = 3.27, P < 0.05, ηp2 = 0.09, and Orientation, F(2,64) = 4.45, P < 0.05, ηp2 = 0.12 (Figures 2 and 3). The N170 was more pronounced for happy compared with neutral and angry faces, Fs(1,32) = 5.46 and 4.26, Ps < 0.05, ηp2 > 0.12, but neutral and angry facial expression did not differ, F(1,32) = 0.29, P = 0.60, ηp2 = 0.01. Furthermore, N170 amplitudes were more pronounced for toward compared with frontal- and away-oriented face pairs, Fs(1,32) = 6.11 and 7.34, Ps < 0.05, ηp2 > 0.16. No difference was observed for frontal relative to away-oriented faces, F(1,32) = 0.31, P = 0.58, ηp2 = 0.01. The interaction Facial Expression × Orientation was not significant, F(4,128) = 0.77, P = 0.53, ηp2 = 0.02.

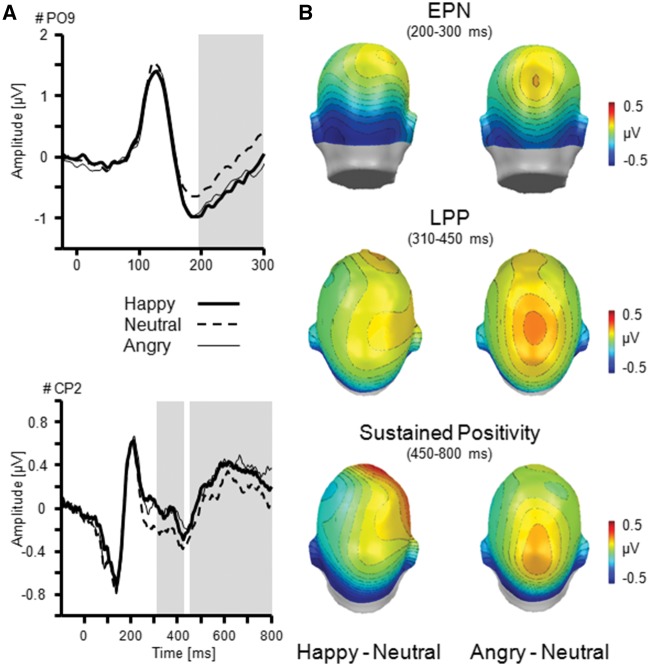

Fig. 2.

Illustration of the main effect Facial Expression as revealed by the EPN, LPP and sustained positivity. (A) ERP waveforms for an exemplary occipital (PO9) and centro-parietal sensor (CP2) for happy, neutral, and angry facial expressions. (B) Topographical difference maps (happy–neutral, angry–neutral) display the averaged time interval plotted on back (EPN: 200–300 ms) and top view (LPP: 310–450 ms; Sustained Positivity: 450–800 ms) of a model head. Analysed time windows are highlighted in grey.

Fig. 3.

Illustration of the main effect Orientation as shown by the N170, EPN and sustained positivity. (A) ERP waveforms for exemplary parietal sensors (P8 and P1) for frontal, toward, and away directed faces. (B) Topographical difference maps display the averaged time interval plotted on back (N170: 150–200 ms; EPN: 200–300 ms) and top view (Sustained Positivity: 450–800 ms) of a model head. Analysed time windows are highlighted in grey.

Similar to previous research, the N170 was more pronounced over right compared with left hemisphere, F(1,32) = 4.49, P = 0.04, ηp2 = 0.12, but no interactions including Laterality reached significance, Fs < 1.35, Ps > 0.17, ηp2 < 0.06.

Early posterior negativity. Similar to research that used single face stimuli, EPN amplitudes varied as a function of Facial Expression, F(2,64) = 8.65, P = 0.001, ηp2 = 0.21. More pronounced negativities were observed for happy and angry compared with neutral facial expressions, Fs(1,32) = 14.07 and 13.76, Ps < 0.01, ηp2 > 0.30. No difference was observed between happy and angry faces, F(1,32) = 0.02, P = 0.88, ηp2 < 0.01. Furthermore, EPN amplitudes were modulated by Orientation, F(2,64) = 19.91, P < 0.001, ηp2 = 0.38. Interestingly, a more pronounced negativity was observed for away- and toward-oriented face pairs compared with frontal orientation, Fs(1,32) = 37.69 and 17.83, Ps < 0.001, ηp2 > 0.36. Toward- and away-oriented faces did not differ, F(1,32) = 2.05, P = 0.16, ηp2 = 0.06.

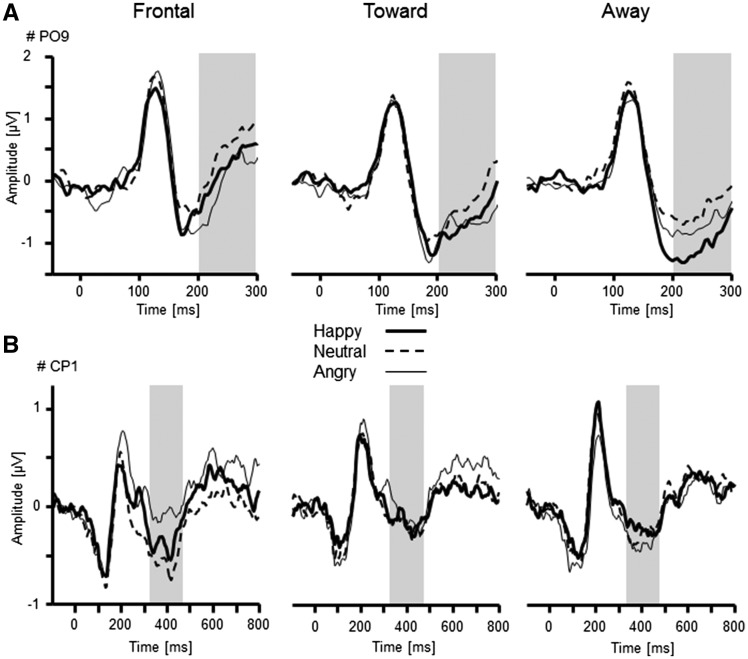

Of particular interest, EPN amplitudes varied as a function of Facial Expression × Orientation, F(4,128) = 2.91, P < 0.05, ηp2 = 0.08 (Figure 4). Follow-up analyses were calculated for each face orientation separately. Frontal faces revealed a main effect of Facial Expression, F(2,64) = 6.63, P < 0.01, ηp2 = 0.17, with more pronounced negativities for angry compared with neutral faces, P < 0.01, but not for the other comparisons, Ps > 0.24. For toward-oriented faces, the main effect Facial Expression reached significance level, F(2,64) = 3.19, P = 0.05, ηp2 = 0.09, indicating more pronounced negativity for emotional compared with neutral faces. However, none of the follow-up comparisons reached significance, Ps > 0.12. Moreover, away-oriented faces varied as a function of Facial Expression, F(2,64) = 5.18, P < 0.01, ηp2 = 0.14. Negativity was most pronounced for happy compared with neutral faces, P < 0.05, and missed significance compared with angry faces, P = 0.06. Angry and neutral faces did not differ, P = 1.0.

Fig. 4.

Illustration of the interactions Facial Expression × Orientation as revealed by the EPN (A: PO9) and LPP component (B: CP1). Separate ERP waveforms are plotted for frontal-, toward- and away-oriented faces when displaying happy, neutral, and angry facial expressions. Analysed time windows are highlighted in grey.

Exploratory analyses contrasted the three levels of Face Orientation separately for each Facial Expression. Amplitudes varied for happy, neutral and angry faces as a function of Orientation, F(2,64) = 23.7, 14.0 and 7.76, Ps < 0.01, ηp2 = 0.43, 0.30 and 0.20, each with more pronounced negativities for toward and away compared with frontal face orientation, Ps < 0.01, but no differences between toward and away-oriented faces, Ps > 0.098.

Overall, the EPN tended to be more pronounced over the left relative to the right hemisphere, F(1,32) = 4.13, P = 0.051, ηp2 = 0.11; however, no further interactions including Laterality reached significance, Fs < 2.7, Ps > 0.08, ηp2 < 0.08.

Late positive potential. Centro-parietal positive potentials did not show a main effect of Facial Expression, F(2,64) = 1.55, P = 0.22, ηp2 = 0.05, and only a marginal effect of Orientation, F(2,64) = 2.89, P = 0.07, ηp2 = 0.08, indicating enhanced positivity for frontal and toward compared with away-oriented faces. However, a significant interaction of Facial Expression × Orientation was observed, F(4,128) = 3.12, P < 0.05, ηp2 = 0.09 (Figure 4). Separate follow-up tests were conducted for each face orientation. LPP for frontal face orientation varied as a function of Facial Expression, F(2,64) = 4.77, P < 0.05, ηp2 = 0.13. Specifically, angry faces were associated with pronounced positivity compared with neutral faces, F(1,32) = 12.40, P = 0.001, ηp2 = 0.28, but not relative to happy faces, F(1,32) = 3.06, P = 0.09, ηp2 = 0.09. Happy and neutral faces did not differ, F(1,32) = 1.10, P = 0.30, ηp2 = 0.03. Neither toward- nor away-oriented faces varied as a function of Facial Expression, Fs(2,64) = 0.10 and 1.61, Ps > 0.21, ηp2 < 0.05.

Exploratory analyses revealed a significant Orientation effect specifically for angry faces, F(2,64) = 6.74, P < 0.01, ηp2 = 0.17. Whereas frontal- and toward-oriented angry faces did not differ, P = 0.64, both orientations resulted in more pronounced positivity compared with away-oriented angry faces, Ps = 0.01 and 0.06. No main effect of Orientation was observed for happy or neutral faces, Fs(2,64) = 0.19 and 2.34, Ps > 0.11, ηp2 < 0.07, nor did any pairwise comparison reach significance, all Ps > 0.13.

The LPP was more positive over the right compared with the left hemisphere, F(1,32) = 5.50, P < 0.05, ηp2 = 0.15. No further interaction including Laterality was significant, Fs < 0.78, Ps > 0.44, ηp2 < 0.02.

Sustained positivity. Sustained positive potentials differed as a function of Facial Expression, F(2,64) = 3.40, P < 0.05, ηp2 = 0.10. More pronounced positivity was observed for angry compared with neutral faces, F(1,32) = 7.10, P < 0.05, ηp2 = 0.18, but not compared with happy faces, F(1,32) = 3.15, P = 0.09, ηp2 = 0.09. Happy and neutral faces did not differ, F(1,32) = 0.65, P = 0.43, ηp2 = 0.02. Furthermore, the main effect of Orientation reached significance, F(2,64) = 3.19, P = 0.05, ηp2 = 0.09. Follow-up tests indicated more pronounced positivity for frontal compared with toward- and away-oriented faces, Fs(1,32) = 5.10 and 6.36, Ps < 0.05, ηp2 > 0.14, but no difference between toward- and away-oriented faces, F(1,32) = 0.02, P = 0.88, ηp2 < 0.01. The interaction Facial Expression × Orientation was not significant, F(4,128) = 1.57, P = 0.20, ηp2 = 0.05.

Sustained positive potentials tended to be more pronounced over the right relative to the left hemisphere, F(1,32) = 3.40, P = 0.075, ηp2 = 0.10, but no further interaction reached significance, Fs < 0.64, Ps > 0.51, ηp2 < 0.02.

Taken together, selective emotion processing was observed for the N170 component (happy vs neutral and angry), EPN (happy and angry vs neutral) and sustained positive potentials (angry vs neutral, and no difference for happy). Moreover, face orientation effects were revealed by pronounced N170 (toward vs frontal and away), EPN (toward and away vs frontal), LPP (frontal and toward vs away) and sustained positive potentials (frontal vs toward and away). Importantly, EPN and LPP components showed interaction effects, which indicate that emotion effects vary as a function of face orientation relative to the observer.
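As a plausibility check on the effect sizes reported in this section, partial eta squared can be recovered from an F value and its degrees of freedom. The following is a minimal sketch that assumes the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error); the function name is illustrative and is not part of the authors' analysis pipeline.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom.

    Assumes the standard identity
        eta_p^2 = (F * df_effect) / (F * df_effect + df_error).
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# F values and degrees of freedom copied from the LPP and sustained positivity results.
reported = {
    "LPP, frontal: angry vs neutral": (12.40, 1, 32),
    "LPP, frontal: Facial Expression": (4.77, 2, 64),
    "LPP, angry faces: Orientation": (6.74, 2, 64),
    "Sustained positivity: angry vs neutral": (7.10, 1, 32),
}

for label, (f_value, df_effect, df_error) in reported.items():
    eta = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: eta_p^2 = {eta:.2f}")
```

Rounded to two decimals, the recomputed values can be compared directly with the ηp2 values given alongside each F test.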

General discussion

The present studies document the impact of personal relevance—conveyed by facial emotions and orientation—on face processing. Participants saw face pairs (happy, neutral and angry) that were either directed at the observer, in profile views oriented towards, or looking away from each other, and were thus part of a triadic group situation. Self-report data indicated that pictures were perceived according to a postulated relevance gradient (Experiment 1). Specifically, faces directed at the observer were rated as more relevant than faces directed towards each other, which in turn were more relevant than faces looking away (frontal > toward > away). Moreover, this gradient was most pronounced for emotional facial expressions. Experiment 2 adds information on the temporal dynamics of face processing and stimulus evaluation in viewing such triadic situations. ERPs revealed the joint impact of face orientation and emotional facial expression on early and late processing stages. Selective emotion processing was observed for the N170 component, EPN and sustained parietal positivity. Face orientation effects were shown with similar timing and topography. Of particular interest, interaction effects of face orientation and displayed emotion were observed for EPN and centro-parietal LPP amplitudes. These findings support the notion that prioritization for perceptual-attentional processing depends on the flexible integration of multiple facial cues (i.e. expression and orientation) within a group situation.

Early ERP effects (N170)

Being involved in a triadic interaction becomes even more relevant when other group members express affective states. In modelling such a situation, electrocortical indicators of selective emotion processing and face orientation were observed. The first ERP component sensitive to both facial orientation and expression was the N170 component. In accordance with the notion that the N170 reflects structural encoding of facial stimuli in temporo-occipital areas (Itier and Taylor, 2002; Holmes et al., 2003; Jacques and Rossion, 2007), amplitudes distinguished frontally directed from averted face displays and spatial configuration of multiple faces may influence the N170 component (e.g. Puce et al., 2013). However, this effect was observed specifically for toward-oriented faces (relative to frontal), but not when the same faces were mirrored and directed away from each other. Accordingly, the structural difference between frontal and profile views may not entirely explain the present N170 effect for toward-oriented faces.

Similar to other authors (e.g. Amodio et al., 2014), we suggest that socio-emotional information is likely to be involved. In Experiment 1, for instance, toward-oriented face pairs were rated as most interactive, especially when displaying facial emotions. Moreover, in Experiment 2, the N170 showed differential processing specifically for happy faces. This finding is in line with previous studies on single face processing reporting emotion effects for the N170 (i.e. 32 out of 56 meta-analysed studies; Hinojosa et al., 2015); however, it contradicts others that observe either no emotional modulation at this stage, or no differences between happy and angry facial expressions (for an overview see Hinojosa et al., 2015). Thus, the present N170 main effects may reflect the concurrent analyses of multiple low-level features, such as contour and contrast in head orientation, teeth or eye whites (e.g. DaSilva et al., 2016; Whalen et al., 2004), which may transmit and/or trigger more high-level information about social-emotional group settings.

Early ERP effects (EPN)

Following the N170, the EPN was sensitive to face orientation and varied as a joint function with facial expression. Similar to studies that used single face displays (Schupp et al., 2004; Rellecke et al., 2012), the simultaneous presentation of two angry faces revealed threat-selective processing when directed at the observer, but not for toward-oriented faces. In contrast, happy faces were associated with pronounced EPN amplitudes when directed elsewhere. Thus, emotional face processing varied as a function of face direction, with indication of threat-selective (frontal view) and happy-selective processing patterns (away oriented). This ERP finding complements recent behavioural and fMRI studies which have suggested different signal value of emotional facial expression depending on who is the target of facial emotions. In this regard, the amygdala may serve as a relevance detector specialized to extract survival relevant information (Sander et al., 2003), and differentially guide attention to facial emotions as a function of their direction. For example, Sato et al. (2004) observed more amygdala activity for angry expressions directed at the observer than looking away from them. Behavioural data extend this notion to other emotional expressions. For instance, similar to frontal views of angry faces, averted fearful expressions indicate the location of threat and are rated more negatively than the converse combination (i.e. averted angry and frontal fearful faces; Adams and Kleck, 2003; Adams et al., 2006; Sander et al., 2007). In contrast, happy expressions directed away may signal that ‘all is well’ (Hess et al., 2007).

Alternatively, as two faces were used in this study, happy expressions directed away from each other, and away from the observer, may indicate social exclusion of the observer (Schmitz et al., 2012). In support of this interpretation, averted happy expressions and frontally directed angry faces were rated as most unpleasant relative to the other face directions (Experiment 1) and revealed a similar pattern of potentiated defensive reflexes in another study (Bublatzky and Alpers, in press). To follow up on these hypotheses, a gradual variation of the orientation angle (e.g. face pairs averted between 0 and 90°; Caharel et al., 2015) or dynamic face or gaze shifts (Latinus et al., 2015) could serve to manipulate the extent of inclusion–exclusion in a triadic situation. Furthermore, the use of fearful or painful facial expressions may help to delineate threat processing in such constellations (e.g. approach- vs avoidance-related emotions; Sander et al., 2007; Gerdes et al., 2012; Reicherts et al., 2012), and connect the present findings to the functional level, for instance, by testing simple (Neumann et al., 2014) or more complex behaviour (e.g. decisions to approach or avoid; Bublatzky et al., 2017; Pittig et al., 2015).

Later ERP effects

Regarding later processing stages, the notion of selective threat encoding was supported by enhanced positivities over centro-parietal regions. Such positive potentials have been suggested to reflect a distributed cortical network (including multiple dorsal and ventral visual structures; Sabatinelli et al., 2013) that is involved in a natural state of selective attention to emotionally and motivationally relevant stimuli (Bradley et al., 2001; Schupp et al., 2007, 2008). Here, facial threat was associated with an enhanced positivity for both frontal and toward face orientation at a transitory stage (LPP; 310–450 ms), whereas more sustained positive potentials were observed specifically for frontally directed angry faces (sustained parietal positivity; 450–800 ms). This finding is in line with recent ERP research that demonstrated enhanced LPP amplitudes for a variety of emotional compared with neutral stimulus materials (e.g. natural scenes or words; Kissler et al., 2007; Schacht and Sommer, 2009; Bublatzky and Schupp, 2012). Furthermore, a gradual increase of LPP amplitudes was observed as a function of reported emotional arousal (Bradley et al., 2001) and social communicative relevance (Schindler et al., 2015).

These findings are complemented by the present ERP and rating data. Specifically, angry face pairs were rated as most arousing and high in perceived relevance (compared with neutral faces) and further varied as a function of face orientation (frontal > toward > away; Experiment 1). Similarly, angry faces were associated with enhanced LPP amplitudes (frontal and toward > away) and a sustained positivity (frontal > toward and away; Experiment 2). In contrast, neutral faces were rated as non-emotional regardless of orientation and did not show differential orientation effects for LPP or sustained positivity. Thus, in maximizing the personal relevance to an observer, direct face orientation may amplify the emotional significance of angry facial expression.

Studying group situations

Examining multi-face displays appears promising for understanding person perception and its neural correlates (Feldmann-Wüstefeld et al., 2011; Wieser et al., 2012; Puce et al., 2013). Similar to attentional competition designs (e.g. Winston et al., 2003; Pourtois et al., 2004), face pairs with different emotion displays (such as an angry person looking at a fearful one) may serve to model interpersonal aggression and submissiveness (e.g. in reference to gender stereotypes; Ito and Urland, 2005; Hess et al., 2009). Furthermore, both averted face conditions (toward and away) are based on highly similar physical stimulus characteristics (faces just differed in spatial location; cf. Sato et al., 2004). This enables a comparison of communicative versus rather non-communicative situations (i.e. faces directed toward versus away from each other). Interestingly, both averted conditions were associated with increased EPN compared with frontal face views in this study. This finding may be related to enhanced attention to profile views, as these stimuli provide less directly accessible emotional information. Alternatively, averted faces may be more likely to trigger spatial attention (Nakashima and Shioiri, 2015), with conflicting directions in away-oriented faces. Overall, in comparison to previous studies that observed most pronounced effects for frontal face views, variant findings may relate to differences in design and stimulus features (e.g. event-related vs block design; single vs double face presentation; Schupp et al., 2004; Puce et al., 2013).

Opening a new route in studying triadic social situations, the present study adds to and goes beyond the bulk of research on dyadic situations in person perception. Acknowledging that structural stimulus features (frontal vs profile views) may, in part, contribute to the early ERP effects (i.e. regarding N170; Bentin et al., 2007; Thierry et al., 2007), a more detailed focus on perceptual variance in multiple face displays is pertinent. This may be done, for instance, by the gradual variation of orientation angle between two or more faces relative to the observer (Puce et al., 2013; Caharel et al., 2015); the use of differently directed body parts may further contrast orientation effects in non-facial stimuli (Bauser et al., 2012; Flaisch and Schupp, 2013). Importantly, a focus on neural activity in group dynamics with different sender–recipient constellations is versatile. For instance, triadic situations may be examined when facing real persons (Pönkänen et al., 2008, 2010), to test joint attention and/or action with others (Sebanz et al., 2006; Nummenmaa and Calder, 2009), or focusing on interpersonal disturbances in (sub-)clinical samples (e.g. individuals high in social anxiety or rejection sensitivity; Keltner and Kring, 1998; Domsalla et al., 2014). Thus, the present laboratory approach opens new ways to examine emotions as a function of group constellations (e.g. who is happy/angry with whom?), and puts facial expressions and orientation information into a social context.

Conclusions

Simultaneous presentation of two faces was used to model triadic interactions, which varied in displayed emotion (facial expression) and sender–recipient constellations (face orientation). The main findings indicate that both factors exert a joint impact on face perception. According to a proposed relevance gradient, facial displays gain more emotional qualities (frontal > toward > away head orientation; Experiment 1). Moreover, selective emotion processing varies as a function of face direction. Experiment 2 revealed selective processing of facial emotions and face orientation with similar timing and topography (N170, EPN, LPP and sustained positivity). Of particular interest, synergistic effects of facial emotion and orientation varied along the processing stream. Specifically, enhanced early visual attention was observed for direct threat (i.e. angry faces directed at the observer) and non-specific safety (i.e. averted happy faces). Regarding later evaluative processing stages, threat-selective processing was observed to vary as a function of face orientation (LPP and sustained positivity). Thus, in fostering the personal relevance to an observer, differently directed facial expressions may amplify the emotional significance of facial stimuli.

Acknowledgements

We are grateful to J. Yokeeswaran for her assistance in data collection. A. Pittig is now at the Institute of Clinical Psychology and Psychotherapy, Technische Universität Dresden, Germany.

Funding

This work was supported, in part, by the ‘Struktur- und Innovationsfonds (SI-BW)’ of the state of Baden-Wuerttemberg, Germany, and by the German Research Foundation (Deutsche Forschungsgemeinschaft; BU 3255/1-1) granted to Florian Bublatzky. The funding source had no involvement in the conduct, analysis or interpretation of data.

Conflict of interest. None declared.

Footnotes

KDEF identifier: f01, f20, f25, f26, m05, m10, m23, m34.

Accounting for potential gender effects, exploratory analyses tested participants' gender as a between-group factor. Non-significant interactions (Facial Expression by Orientation by Gender) were observed for the rated dimensions: Relevance F(16,236) = 0.89, P = 0.56, ηp2 = 0.06; Wish-to-interact F(16,236) = 0.22, P = 0.99, ηp2 = 0.02; Interactivity F(16,236) = 0.69, P = 0.76, ηp2 = 0.05; Valence F(16,236) = 0.54, P = 0.90, ηp2 = 0.04; Arousal F(16,236) = 1.08, P = 0.38, ηp2 = 0.07.

Previous studies related the P1 component to differences in stimulus physics and orientation effects (e.g. Bauser et al., 2012; Caharel et al., 2015; Flaisch and Schupp, 2013). Supplementary analyses on the P1 component (scored between 100 and 140 ms at PO3/4 and O1/2) revealed neither main effects of Facial Expression and Orientation, F(2,64) = 1.25 and 0.89, Ps = 0.29 and 0.41, ηp2 < 0.04, nor an interaction, F(4,128) = 0.51, P = 0.69, ηp2 = 0.02.

Similar to Experiment 1, participants' gender did not impact the interaction Facial Expression by Orientation by Laterality for the reported ERP components: N170 F(4,124) = 0.57, P = 0.66, ηp2 = 0.02; EPN F(4,124) = 0.91, P = 0.45, ηp2 = 0.03; LPP F(4,124) = 1.11, P = 0.35, ηp2 = 0.03; Sustained Positivity F(4,124) = 1.15, P = 0.34, ηp2 = 0.04.

References

- Adams R.B., Ambady N., Macrae C.N., Kleck R.E. (2006). Emotional expressions forecast approach-avoidance behavior. Motivation and Emotion, 30(2), 177–86.

- Adams R.B. Jr., Gordon H.L., Baird A.A., Ambady N., Kleck R.E. (2003a). Effects of gaze on amygdala sensitivity to anger and fear faces. Science, 300, 1536.

- Adams R.B., Kleck R.E. (2003b). Perceived gaze direction and the processing of facial displays of emotion. Psychological Science, 14(6), 644–7.

- Adolphs R., Spezio M. (2006). Role of the amygdala in processing visual social stimuli. Progress in Brain Research, 156, 363–78.

- Alpers G.W., Adolph D., Pauli P. (2011). Emotional scenes and facial expressions elicit different psychophysiological responses. International Journal of Psychophysiology, 80, 173–81.

- Amodio D.M., Bartholow B.D., Ito T.A. (2014). Tracking the dynamics of the social brain: ERP approaches for social cognitive and affective neuroscience. Social Cognitive and Affective Neuroscience, 9(3), 385–93.

- Baron-Cohen S. (1997). Mindblindness: An essay on autism and theory of mind. MIT Press.

- Bauser D.S., Thoma P., Suchan B. (2012). Turn to me: Electrophysiological correlates of frontal vs averted view face and body processing are associated with trait empathy. Frontiers in Integrative Neuroscience, 6, doi:10.3389/fnint.2012.00106.

- Bentin S., Allison T., Puce A., Perez E., McCarthy G. (1996). Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience, 8(6), 551–65.

- Bentin S., Taylor M.J., Rousselet G.A., et al. (2007). Controlling interstimulus perceptual variance does not abolish N170 face sensitivity. Nature Neuroscience, 10(7), 801–2.

- Bradley M.M., Codispoti M., Cuthbert B.N., Lang P.J. (2001). Emotion and motivation I: defensive and appetitive reactions in picture processing. Emotion, 1, 276–98.

- Bradley M.M., Lang P.J. (1994). Measuring emotion: the self-assessment manikin and the semantic differential. Journal of Behavior Therapy and Experimental Psychiatry, 25, 49–59.

- Bublatzky F., Alpers G.W. (in press). Facing two faces: Defense activation varies as a function of personal relevance. Biological Psychology. doi: 10.1016/j.biopsycho.2017.03.001.

- Bublatzky F., Alpers G.W., Pittig A. (2017). From avoidance to approach: The influence of threat-of-shock on reward-based decision making. Behaviour Research and Therapy, doi: 10.1016/j.brat.2017.01.003.

- Bublatzky F., Flaisch T., Stockburger J., Schmälzle R., Schupp H.T. (2010). The interaction of anticipatory anxiety and emotional picture processing: an event-related brain potential study. Psychophysiology, 47(4), 687–96.

- Bublatzky F., Gerdes A.B.M., White A.J., Riemer M., Alpers G.W. (2014). Social and emotional relevance in face processing: Happy faces of future interaction partners enhance the LPP. Frontiers in Human Neuroscience, 8, doi:10.3389/fnhum.2014.00493.

- Bublatzky F., Schupp H.T. (2012). Pictures cueing threat: Brain dynamics in viewing explicitly instructed danger cues. Social Cognitive and Affective Neuroscience, 7(6), 611–22. doi:10.1093/scan/nsr032.

- Caharel S., Collet K., Rossion B. (2015). The early visual encoding of a face (N170) is viewpoint-dependent: a parametric ERP-adaptation study. Biological Psychology, 106, 18–27.

- DaSilva E.B., Crager K., Geisler D., Newbern P., Orem B., Puce A. (2016). Something to sink your teeth into: The presence of teeth augments ERPs to mouth expressions. NeuroImage, 127, 227–41.

- Domsalla M., Koppe G., Niedtfeld I., et al. (2014). Cerebral processing of social rejection in patients with borderline personality disorder. Social Cognitive and Affective Neuroscience, 9(11), 1789–97.

- Ekman P., Friesen W.V. (1975). Unmasking the Face. Englewood Cliffs, NJ: Prentice Hall.

- Feldmann-Wüstefeld T., Schmidt-Daffy M., Schubö A. (2011). Neural evidence for the threat detection advantage: differential attention allocation to angry and happy faces. Psychophysiology, 48(5), 697–707.

- Flaisch T., Junghöfer M., Bradley M.M., Schupp H.T., Lang P.J. (2008). Rapid picture processing: affective primes and targets. Psychophysiology, 45(1), 1–10.

- Flaisch T., Schupp H.T. (2013). Tracing the time course of emotion perception: the impact of stimulus physics and semantics on gesture processing. Social Cognitive and Affective Neuroscience, 8(7), 820–7.

- Gerdes A.B.M., Wieser M.J., Alpers G.W., Strack F., Pauli P. (2012). Why do you smile at me while I'm in pain? - Pain selectively modulates voluntary facial muscle responses to happy faces. International Journal of Psychophysiology, 85, 161–7.

- Graham R., LaBar K.S. (2012). Neurocognitive mechanisms of gaze-expression interactions in face processing and social attention. Neuropsychologia, 50(5), 553–66.

- Haxby J.V., Gobbini M.I. (2011). Distributed neural systems for face perception. In: Calder A.J., Rhodes G., Johnson M.H., Haxby J.V., The Oxford Handbook of Face Perception, Oxford: Oxford University Press.

- Herbert C., Pauli P., Herbert B.M. (2011). Self-reference modulates the processing of emotional stimuli in the absence of explicit self-referential appraisal instructions. Social Cognitive and Affective Neuroscience, 6, 653–61.

- Hess U., Adams R.B. Jr., Kleck R.E. (2007). Looking at you or looking elsewhere: The influence of head orientation on the signal value of emotional facial expressions. Motivation and Emotion, 31, 137–44.

- Hess U., Adams R.B., Grammer K., Kleck R.E. (2009). Face gender and emotion expression: are angry women more like men? Journal of Vision, 9(12), 19.

- Hinojosa J.A., Mercado F., Carretié L. (2015). N170 sensitivity to facial expression: A meta-analysis. Neuroscience and Biobehavioral Reviews, doi:10.1016/j.neubiorev.2015.06.002.

- Holmes A., Vuilleumier P., Eimer M. (2003). The processing of emotional facial expression is gated by spatial attention: evidence from event-related brain potentials. Cognitive Brain Research, 16, 174–84.

- Itier R.J., Batty M. (2009). Neural bases of eye and gaze processing: the core of social cognition. Neuroscience and Biobehavioral Reviews, 33(6), 843–63.

- Itier R.J., Taylor M.J. (2002). Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: a repetition study using ERPs. NeuroImage, 15(2), 353–72.

- Ito T.A., Urland G.R. (2005). The influence of processing objectives on the perception of faces: an ERP study of race and gender perception. Cognitive, Affective, and Behavioral Neuroscience, 5(1), 21–36.

- Jacques C., Rossion B. (2007). Early electrophysiological responses to multiple face orientations correlate with individual discrimination performance in humans. NeuroImage, 36(3), 863–76.

- Junghöfer M., Peyk P., Flaisch T., Schupp H.T. (2006). Neuroimaging methods in affective neuroscience: Selected methodological issues. Progress in Brain Research, 156, 123–43.

- Keltner D., Kring A.M. (1998). Emotion, social function, and psychopathology. Review of General Psychology, 2(3), 320.

- Kissler J., Herbert C., Peyk P., Junghöfer M. (2007). Buzzwords: early cortical responses to emotional words during reading. Psychological Science, 18, 475–80.

- Latinus M., Love S.A., Rossi A., et al. (2015). Social decisions affect neural activity to perceived dynamic gaze. Social Cognitive and Affective Neuroscience, 10(11), 1557–67.

- Lundqvist D., Flykt A., Öhman A. (1998). The Karolinska Directed Emotional Faces-KDEF, CD-ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet, ISBN 91-630-7164-9.

- Makeig S., Jung T.P., Bell A.J., Ghahremani D., Sejnowski T.J. (1997). Blind separation of auditory event-related brain responses into independent components. Proceedings of the National Academy of Sciences of the United States of America, 94(20), 10979–84.

- Molen van der M.J., Poppelaars E.S., Van Hartingsveldt C.T., Harrewijn A., Moor B.G., Westenberg P.M. (2013). Fear of negative evaluation modulates electrocortical and behavioral responses when anticipating social evaluative feedback. Frontiers in Human Neuroscience, 7, doi:10.3389/fnhum.2013.00936.

- Nakashima R., Shioiri S. (2015). Facilitation of visual perception in head direction: visual attention modulation based on head direction. PLoS One, 10(4), e0124367.

- N'Diaye K.N., Sander D., Vuilleumier P. (2009). Self-relevance processing in the human amygdala: Gaze direction, facial expression, and emotion intensity. Emotion, 9(6), 798–806.

- Nummenmaa L., Calder A.J. (2009). Neural mechanisms of social attention. Trends in Cognitive Sciences, 13(3), 135–43.

- Neumann R., Schulz S.M., Lozo L., Alpers G.W. (2014). Automatic facial responses to near-threshold presented facial displays of emotion: Imitation or evaluation? Biological Psychology, 96, 144–9.

- Northoff G., Heinzel A., De Greck M., Bermpohl F., Dobrowolny H., Panksepp J. (2006). Self-referential processing in our brain – a meta-analysis of imaging studies on the self. NeuroImage, 31(1), 440–57.

- Ofan R.H., Rubin N., Amodio D.M. (2011). Seeing race: N170 responses to race and their relation to automatic racial attitudes and controlled processing. Journal of Cognitive Neuroscience, 23(10), 3153–61.

- Öhman A., Lundqvist D., Esteves F. (2001). The face in the crowd revisited: a threat advantage with schematic stimuli. Journal of Personality and Social Psychology, 80(3), 381–95.

- Olsson A., Ochsner K.N. (2008). The role of social cognition in emotion. Trends in Cognitive Sciences, 12, 65–71.

- Peyk P., Cesarei A.D., Junghöfer M. (2011). ElectroMagneto Encephalography software: Overview and integration with other EEG/MEG toolboxes. Computational Intelligence and Neuroscience, 2011, Article ID 861705.

- Pittig A., Alpers G.W., Niles A.N., Craske M.G. (2015). Avoidant decision-making in social anxiety disorder: a laboratory task linked to in vivo anxiety and treatment outcome. Behaviour Research and Therapy, 73, 96–103.

- Pönkänen L.M., Alhoniemi A., Leppänen J.M., Hietanen J.K. (2010). Does it make a difference if I have an eye contact with you or with your picture? An ERP study. Social Cognitive and Affective Neuroscience, 6(4), 486–94.

- Pönkänen L.M., Hietanen J.K., Peltola M.J., Kauppinen P.K., Haapalainen A., Leppänen J.M. (2008). Facing a real person: an event-related potential study. NeuroReport, 19(4), 497–501.

- Pourtois G., Grandjean D., Sander D., Vuilleumier P. (2004). Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cerebral Cortex, 14(6), 619–33.

- Puce A., McNeely M.E., Berrebi M.E., Thompson J.C., Hardee J., Brefczynski-Lewis J. (2013). Multiple faces elicit augmented neural activity. Frontiers in Human Neuroscience, 7, 282, doi: 10.3389/fnhum.2013.00282.

- Reicherts P., Wieser M.J., Gerdes A.B., et al. (2012). Electrocortical evidence for preferential processing of dynamic pain expressions compared to other emotional expressions. Pain, 153(9), 1959–64.

- Rellecke J., Sommer W., Schacht A. (2012). Does processing of emotional facial expressions depend on intention? Time-resolved evidence from event-related brain potentials. Biological Psychology, 90(1), 23–32.

- Sabatinelli D., Keil A., Frank D.W., Lang P.J. (2013). Emotional perception: correspondence of early and late event-related potentials with cortical and subcortical functional MRI. Biological Psychology, 92(3), 513–9.

- Sander D., Grafman J., Zalla T. (2003). The human amygdala: an evolved system for relevance detection. Reviews in the Neurosciences, 14(4), 303–16.

- Sander D., Grandjean D., Kaiser S., Wehrle T., Scherer K.R. (2007). Interaction effects of perceived gaze direction and dynamic facial expression: evidence for appraisal theories of emotion. European Journal of Cognitive Psychology, 19(3), 470–80.

- Sato W., Yoshikawa S., Kochiyama T., Matsumura M. (2004). The amygdala processes the emotional significance of facial expressions: an fMRI investigation using the interaction between expression and face direction. NeuroImage, 22, 1006–13.

- Sauer A., Mothes-Lasch M., Miltner W.H., Straube T. (2014). Effects of gaze direction, head orientation and valence of facial expression on amygdala activity. Social Cognitive and Affective Neuroscience, 9(8), 1246–52.

- Schacht A., Sommer W. (2009). Emotions in word and face processing: early and late cortical responses. Brain and Cognition, 69(3), 538–50.

- Scherer K.R., Schorr A., Johnstone T. (2001). Appraisal Processes in Emotion: Theory, Methods, Research. New York: Oxford University Press.

- Schindler S., Wegrzyn M., Steppacher I., Kissler J. (2015). Perceived communicative context and emotional content amplify visual word processing in the fusiform gyrus. The Journal of Neuroscience, 35(15), 6010–9.

- Schmitz J., Scheel C.N., Rigon A., Gross J.J., Blechert J. (2012). You don’t like me, do you? Enhanced ERP responses to averted eye gaze in social anxiety. Biological Psychology, 91(2), 263–9. [DOI] [PubMed] [Google Scholar]

- Schupp H.T., Flaisch T., Stockburger J., Junghöfer M. (2006). Emotion and attention: event-related brain potential studies. Progress in Brain Research, 156, 31–51. [DOI] [PubMed] [Google Scholar]

- Schupp H.T., Öhman A., Junghöfer M., Weike A.I., Stockburger J., Hamm A.O. (2004). The facilitated processing of threatening faces: an ERP analysis. Emotion, 4(2), 189–200.

- Schupp H.T., Stockburger J., Bublatzky F., Junghöfer M., Weike A.I., Hamm A.O. (2007). Explicit attention interferes with selective emotion processing in human extrastriate cortex. BMC Neuroscience, 8(1), 16.

- Schupp H.T., Stockburger J., Bublatzky F., Junghöfer M., Weike A.I., Hamm A.O. (2008). The selective processing of emotional visual stimuli while detecting auditory targets: an ERP analysis. Brain Research, 1230, 168–76.

- Schwarz K.A., Wieser M.J., Gerdes A.B.M., Mühlberger A., Pauli P. (2013). Why are you looking like that? How the context influences evaluation and processing of human faces. Social Cognitive and Affective Neuroscience, 8, 438–45.

- Schweinberger S.R., Burton A.M. (2003). Covert recognition and the neural system for face processing. Cortex, 39(1), 9–30.

- Schweinberger S.R., Neumann M.F. (2016). Repetition effects in human ERPs to faces. Cortex, 80, 141–53.

- Sebanz N., Bekkering H., Knoblich G. (2006). Joint action: bodies and minds moving together. Trends in Cognitive Sciences, 10(2), 70–6.

- Thierry G., Martin C.D., Downing P., Pegna A.J. (2007). Controlling for interstimulus perceptual variance abolishes N170 face selectivity. Nature Neuroscience, 10(4), 505–11.

- Vizioli L., Rousselet G.A., Caldara R. (2010). Neural repetition suppression to identity is abolished by other-race faces. Proceedings of the National Academy of Sciences of the United States of America, 107(46), 20081–6.

- Whalen P.J., Kagan J., Cook R.G., et al. (2004). Human amygdala responsivity to masked fearful eye whites. Science, 306(5704), 2061.

- Wiese H., Schweinberger S.R., Neumann M.F. (2008). Perceiving age and gender in unfamiliar faces: brain potential evidence for implicit and explicit person categorization. Psychophysiology, 45(6), 957–69.

- Wieser M.J., Pauli P., Reicherts P., Mühlberger A. (2010). Don’t look at me in anger! Enhanced processing of angry faces in anticipation of public speaking. Psychophysiology, 47, 271–80.

- Williams L.M., Palmer D., Liddell B.J., Song L., Gordon E. (2006). The ‘when’ and ‘where’ of perceiving signals of threat versus non-threat. NeuroImage, 31(1), 458–67.

- Winston J.S., O'Doherty J., Dolan R.J. (2003). Common and distinct neural responses during direct and incidental processing of multiple facial emotions. NeuroImage, 20(1), 84–97.