Abstract

Critical thinking, the capacity to be deliberate about thinking, is increasingly the focus of undergraduate medical education, but is not commonly addressed in graduate medical education. Without critical thinking, physicians, and particularly residents, are prone to cognitive errors, which can lead to diagnostic errors, especially in a high-stakes environment such as the intensive care unit. Although challenging, critical thinking skills can be taught. At this time, there is a paucity of data to support an educational gold standard for teaching critical thinking, but we believe that five strategies, rooted in cognitive theory and our personal teaching experiences, provide an effective framework to teach critical thinking in the intensive care unit. The five strategies are: make the thinking process explicit by helping learners understand that the brain uses two cognitive processes: type 1, an intuitive pattern-recognizing process, and type 2, an analytic process; discuss cognitive biases, such as premature closure, and teach residents to minimize biases by expressing uncertainty and keeping differentials broad; model and teach inductive reasoning by utilizing concept and mechanism maps and explicitly teach how this reasoning differs from the more commonly used hypothetico-deductive reasoning; use questions to stimulate critical thinking: “how” or “why” questions can be used to coach trainees and to uncover their thought processes; and assess and provide feedback on learners’ critical thinking. We believe these five strategies provide practical approaches for teaching critical thinking in the intensive care unit.

Keywords: medical education, critical thinking, critical care, cognitive errors

Critical thinking, the capacity to be deliberate about thinking and actively assess and regulate one’s cognition (1–4), is an essential skill for all physicians. Absent critical thinking, one typically relies on heuristics, which are quick methods or shortcuts for problem solving, and can fall victim to cognitive biases (5). Cognitive biases can lead to diagnostic errors, which result in increased patient morbidity and mortality (6).

Diagnostic errors are the number one cause of medical malpractice claims (7) and are thought to account for approximately 10% of in-hospital deaths (8). Many factors contribute to diagnostic errors, including cognitive problems and systems issues (9), but it has been shown that cognitive errors are an important source of diagnostic error in almost 75% of cases (10). In addition, a recent report from the Risk Management Foundation, the research arm of the malpractice insurer for the Harvard Medical School hospitals, labeled more than half of the malpractice cases they evaluated as “assessment failures,” which included “narrow diagnostic focus, failure to establish a differential diagnosis, [and] reliance on a chronic condition of previous diagnosis (11).” In light of these data and the Institute of Medicine’s 2015 recommendation to “enhance health care professional education and training in the diagnostic process (8),” we present this framework as a practical approach to teaching critical thinking skills in the intensive care unit (ICU).

The process of critical thinking can be taught (3); however, methods of instruction are challenging (12), and there is no consensus on the most effective teaching model (13, 14). Explicit teaching about reasoning, metacognition, cognitive biases, and debiasing strategies may help avoid cognitive errors (3, 15, 16) and enhance critical thinking (17), but empirical evidence to inform best educational practices is lacking. Assessment of critical thinking is also difficult (18). However, because it is of paramount importance to providing high-quality, safe, and effective patient care, we believe critical thinking should be both explicitly taught and explicitly assessed (12, 18).

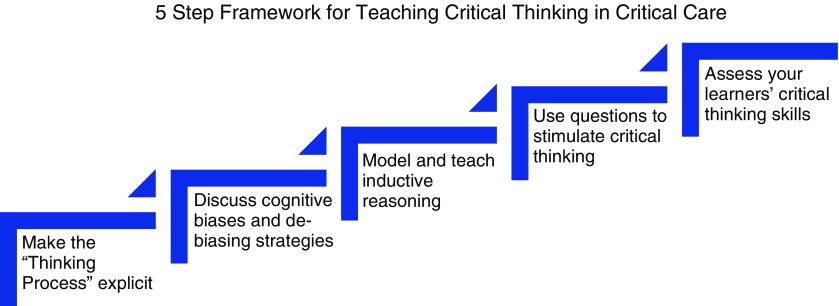

Critical thinking is particularly important in the fast-paced, high-acuity environment of the ICU, where medical errors can lead to serious harm (19). Despite the paucity of data to support an educational gold standard in this field, we propose five strategies, based on educational principles, that we have found effective in teaching critical thinking in the ICU (Figure 1). These strategies are not dependent on one another and often overlap. Using the following case scenario as an example for discussion, we provide a detailed explanation of each strategy, as well as practical tips on how to employ it.

A 45-year-old man with a history of hypertension presents to the emergency department with fatigue, sore throat, low-grade fever, and mild shortness of breath. On arrival to the emergency department, his heart rate is 110 and his blood pressure is 90/50 mm Hg. He is given 2 L fluids, but his blood pressure continues to fall, and norepinephrine is started. Physical examination is normal with the exception of dry mucous membranes. Laboratory studies performed on blood samples obtained before administration of intravenous fluid show: white blood cell count, 6.0 K/uL; hematocrit, 35%; lactate, 0.8 mmol/L; blood urea nitrogen, 40 mg/dL; and creatinine, 1.1 mg/dL. A chest radiograph shows no infiltrates. He is admitted to the medical intensive care unit.

Attending: What is your assessment of this patient?

Resident: This is a 45-year-old male with a history of hypertension who was sent to us from the emergency department with sepsis.

Attending: That is interesting. I am puzzled: What is the source of infection? And how do you account for the low hematocrit in an essentially healthy man whom you believe to be volume depleted?

Resident: Well, maybe pneumonia will appear on the X-ray in the next 24 hours. With respect to the hematocrit...I’m not really sure.

Figure 1.

Five strategies to teach critical thinking skills in a critical care environment.

Strategy 1: Make the “Thinking Process” Explicit

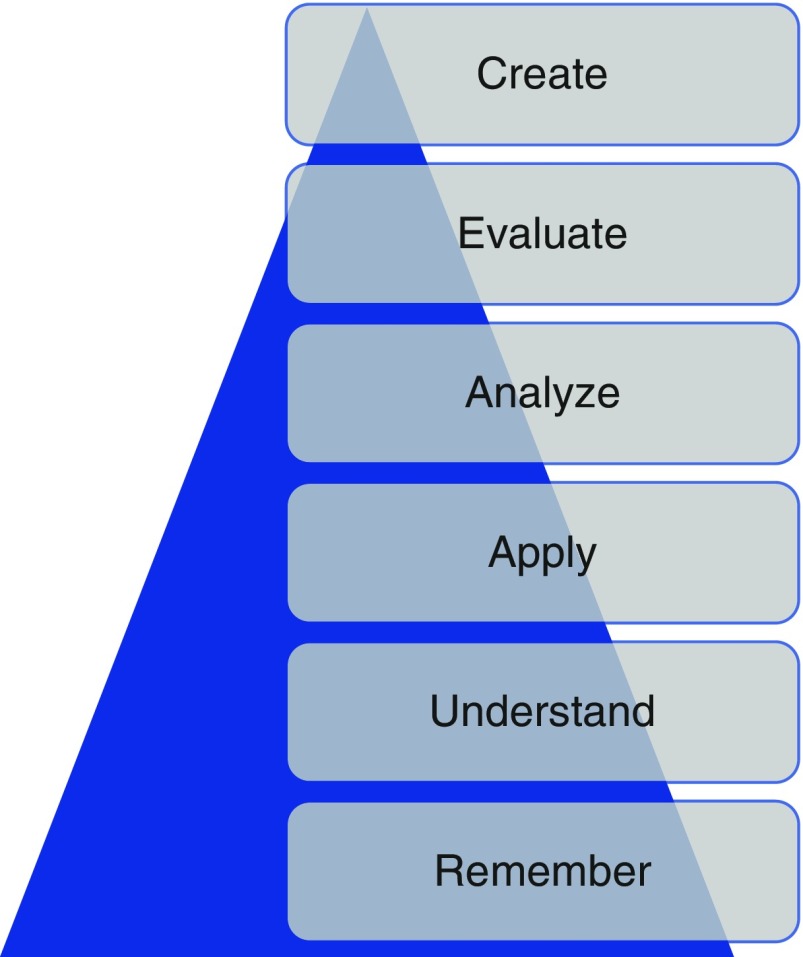

In the ICU, many attendings are satisfied with the trainee simply putting forth an assessment and plan. In the case presented here, the resident’s assessment that the patient has sepsis is likely based on the resident remembering a few facts about sepsis (e.g., hypotension that is not responsive to fluids) and recognizing a pattern (history of possible infection + fever + hypotension = sepsis). With this information, we may determine that the learner is operating at the lowest level of Bloom’s taxonomy: remembering (20) (Figure 2). In this case, she seems to be using reflexive or automatic thought. In a busy ICU, it is tempting for the attending to simply overlook the response and proceed with their own plan, but we should expect more. As indicated in the attending’s response, we should make the thinking process explicit and push the resident up Bloom’s taxonomy: to describe, explain, apply, analyze, evaluate, and ultimately create (20) (Figure 2).

Figure 2.

The revised Bloom’s taxonomy. This schematic, first created in 1956, depicts six levels of the cognitive domain. Remembering is the lowest level; creating is the highest level. Adapted from Anderson and Krathwohl (20).

Faculty members should probe the thought process used to arrive at the assessment and encourage the resident to think about her thinking; that is, to engage in the process of metacognition. We recommend doing this in real time as the trainee is presenting the case by asking “how” and “why” questions (see strategy 4).

Attending: Why do you think he has sepsis?

Resident: Well, he came in with infectious symptoms. Also, his blood pressure is quite low, and it only improved slightly with fluids in the emergency department.

Attending: Okay, but how is blood pressure generated? How could you explain hypotension using other data in the case, such as the low hematocrit?

If the trainee is encouraged to think about her thinking, she may conclude that she was trying to force a “pattern” of sepsis, perhaps because she frequently sees patients with sepsis and because the emergency department framed the case in that way. It is possible that she does not have enough experience in the ICU or specific knowledge about sepsis to accurately assess this patient; in the actual case, a third-year resident with significant ICU experience ultimately admitted to defaulting to pattern recognition.

One way to push learners up Bloom’s taxonomy is to help them understand dual-process theory: the idea that the brain uses two thinking processes, type 1 and type 2 (alternately known as system 1 and system 2). Type 1 thinking is the more intuitive process of decision making; type 2 is an analytical process (17, 21, 22). Type 1 thinking is immediate and unconscious, and the hallmark is pattern recognition; type 2 is deliberate and effortful (17).

Critical thinkers understand and recognize the dual processes (21) and the fact that type 1 thinking is common in their daily lives. Furthermore, they acknowledge that type 1 reasoning, which is often automatic and unconscious, can be prone to error. There is a paucity of data linking cognitive errors to the particular type of thinking (14), but many of these studies are plagued by the fact that they do not test the atypical pattern. As a consequence, they do not truly test the hypothesis that type 2 reasoning will reduce error in more complex cases. It has been shown that combining type 1 and type 2 thinking improves diagnostic accuracy compared with using either method alone (23). We believe that helping learners understand how their minds work will help them recognize when they may be falling into pattern recognition and when this will be problematic (e.g., when there are discordant data, or when one can quickly think of only one diagnosis). If we expect more from our learners and compel them to understand, analyze, and evaluate, we must provide constant feedback and coaching to help them develop, and we must ask the right questions (see strategy 4) to guide them.

Strategy 2: Discuss Cognitive Biases and De-Biasing Strategies

Cognitive biases are thought patterns that deviate from the typical way of decision making or judging (24). These occur commonly when we are under stress or time constrained when making decisions. At this time, there are more than 100 described cognitive biases, some of which are more common in medicine than others (25). We believe that the six outlined in Table 1 are particularly prevalent in the ICU.

Table 1.

Six cognitive biases frequently encountered in the intensive care unit

| Cognitive bias | Description |
|---|---|
| Availability bias | Judging things as more likely if they quickly and easily come to mind |
| Confirmation bias | Selectively seeking information to support rather than refute a diagnosis |
| Anchoring bias | Hooking into the salient aspects of a case early in the diagnostic work-up |
| Framing effect | Presenting a case in a specific way to influence the diagnosis |
| Diagnostic momentum | Attaching diagnostic labels to patients and not revisiting them |
| Premature closure | Finalizing a diagnosis without full confirmation |

Although there are many proponents of teaching cognitive biases (6), there are no studies showing that teaching these to trainees improves their clinical decision making (14), again recognizing that research in this area has often not focused on the scenarios in which cognitive bias is likely to lead to error. Most cognitive biases are quiescent until the right scenario presents itself (26), which makes them difficult to study in the clinical context. Imagine an overworked, tired resident in a busy ICU or one who received an incomplete sign-out or felt pressure from the system to make a quick decision to move along patient care. These scenarios occur daily in the ICU; as a consequence, we believe that teaching residents how to recognize biases and giving them strategies to debias is important.

The resident in the clinical scenario outlined here is falling prey to many biases in her assessment that the patient has sepsis. First, it is likely that on her ICU rotation she has seen many patients with sepsis, and thus sepsis is a diagnosis that is easily available to her mind (availability bias). Next, she is falling victim to confirmation bias: The presence of hypotension supports a diagnosis of sepsis and is disproportionately appreciated by the trainee compared with a white blood cell count of 6,000, which does not easily fit with the diagnosis and is ignored. Next, she anchors and prematurely closes on the diagnosis of sepsis and does not look for other possible explanations of hypotension. The resident does not realize that she is subject to these biases; explicitly discussing them will help her understand her thinking process, enable her to recognize when she may be jumping to conclusions, and help her identify when she must switch to type 2 thinking.

Attending: Why do you think he has sepsis?

Resident: Well, he came in with infectious symptoms. Also, his blood pressure is quite low, and it did not improve with fluids in the emergency department. This is similar to the other patient with sepsis.

Attending: I can see why sepsis easily comes to your mind, as we have recently admitted three other patients with sepsis. These patients had similar features to this patient, so your mind is jumping to that conclusion, but if we stop and think together about what pieces of the case don’t fit with sepsis, we may come up with a different diagnosis.

Resident: Well, the lack of leukocytosis doesn’t make sense.

Attending: Yes! I agree, that is a bit odd. Let’s broaden our differential and not anchor on sepsis. What else could this be?

Cognitive forcing strategies (16), the process of making trainees aware of their cognitive biases and then developing strategies to overcome the bias, may help this resident. Studies show that debiasing can be taught to emergency medicine trainees (27), and we believe it can also be taught to critical care trainees, who experience a similar fast-paced and high-stakes learning environment. Proposed debiasing strategies include encouraging trainees to consider alternative diagnoses (3, 6, 27, 28) and promoting broad differentials. In particular, trainees need to be able to rethink cases when confronted with information that is not consistent with the working diagnosis; for example, the absence of leukocytosis, as above. They should be allowed to communicate their level of uncertainty, and we should not think less of them if they do not have a single final answer with a targeted plan (29). When we do not discuss inconsistent information, we essentially give trainees permission to ignore it.

Attending: In addition to the white blood cell count not fitting, I’m also struggling with the hematocrit: How is it 35% in the setting of presumed decreased intravascular volume?

Resident: Hmm.... I’m actually not sure. You’re right, though, it doesn’t make sense.

Attending: I agree. Let’s pause and think about how we are thinking about this case.

To a large degree, recognition of cognitive bias requires metacognition, defined as thinking about one’s thinking (3, 16, 27). This process is optimized with a familiarity with how the mind works; that is, a basic understanding of dual-process theory and cognitive biases. In the ICU, we find it easiest to engage in a group metacognition exercise. The attending asks, “How are we thinking about this case?” This allows both the attending and the team to reflect together on how and why the diagnosis has been made. This can provide insight into the tendency to prematurely close or limit considerations, which has been shown to be the most common cause of inaccurate clinical synthesis (10).

Other debiasing strategies include accountability (6) and feedback (25, 30). Giving specific and in-the-moment feedback can help residents understand their decisions (25). It is our job as attendings to provide this feedback, and it is thought that this is one of the most effective debiasing strategies (25).

Strategy 3: Model and Teach Inductive Reasoning

In medicine, we classically teach clinical reasoning via the hypothetico-deductive strategy (31) and rarely discuss inductive reasoning. To date, there are no data proving the advantages of one strategy over another, but we believe that modeling inductive reasoning is an important part of critical thinking, especially when type 1 thinking provides limited answers. In hypothetico-deductive reasoning, physicians make a cognitive jump from a few facts to hypotheses framed as a differential diagnosis from which one then deduces characteristics that are matched to the patient (32). Because this way of thinking relies on memory and pattern recognition, we find that it is more subject to cognitive biases, including premature closure, than inductive reasoning.

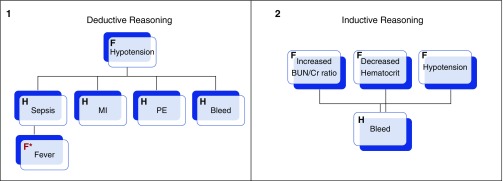

In our case, the presence of hypotension leads the trainee to come up with a differential based primarily on that single observation; the resident thinks of diagnoses such as sepsis or cardiogenic shock. Contrast this way of thinking with inductive reasoning, which proceeds in an orderly way from multiple facts to hypotheses (32). In our case, putting together the facts of hypotension, decreased hematocrit, and elevated blood urea nitrogen/creatinine would lead to a broader list of possible explanations or hypotheses that would include bleeding (see Figure 3 to compare and contrast inductive and deductive reasoning). We propose that this way of thinking is grounded more deeply in pathophysiology, and we believe it leads to broader thinking, because trainees do not have to rely on memory, pattern recognition, or heuristics; rather, they can reason their way through the problem via an understanding of basic mechanisms of health and disease.

Figure 3.

Schematic representations of deductive (1) and inductive (2) reasoning as applied to the clinical case. In deductive reasoning, one fact (F; hypotension) is used to generate multiple hypotheses (H), and then facts that pertain to each are retrofitted (red F*; fever). In inductive reasoning, facts are grouped and used to generate hypotheses. Adapted from Pottier (32).

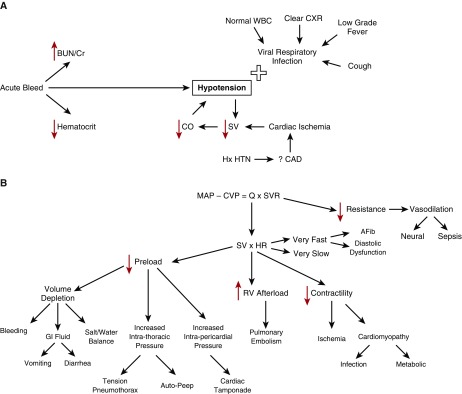

Inductive reasoning can be practiced using both mechanism and concept maps. Mechanism maps are a visual representation of how the pathophysiology of disease leads to the clinical symptoms (33), whereas concept maps graphically represent relationships between multiple concepts (33) and make links explicit. Both types reinforce mechanistic thinking and can be used as tools to avoid cognitive biases. Using our case as an example, if the resident started with the hypotension and made a mechanism (Figure 4A) or concept (Figure 4B) map, she would be less likely to anchor on the diagnosis of sepsis. This process gives trainees a strategy to broaden their differential and a way to think about the case when they do not know what is going on.

Figure 4.

(A) A mechanism map of a 45-year-old man presenting with cough and shortness of breath, found to have an increased BUN/Cr ratio, a decreased hematocrit, and a normal white blood cell count. (B) A concept map of the clinical case. AFib = atrial fibrillation; BUN/Cr = blood urea nitrogen to creatinine ratio; CAD = coronary artery disease; CO/Q = cardiac output; CVP = central venous pressure; CXR = chest X-ray; GI = gastrointestinal; HR = heart rate; Hx HTN = history of hypertension; MAP = mean arterial pressure; RV = right ventricle; SV = stroke volume; SVR = systemic vascular resistance; WBC = white blood cell.

Although critics contend that these maps take time and do not have a place in the ICU, we find that quickly sketching a mechanism map on rounds while the case is being presented only takes 1–2 minutes and is a powerful way of making your method of clinical reasoning explicit to the learner. This can also be done later as a way to review pathophysiology. We hold monthly concept mapping sessions for our students (34) to improve their clinical reasoning skills, but find that in the ICU with residents, doing this quickly in real time with a mechanism map is more effective.

Strategy 4: Use Questions to Stimulate Critical Thinking

Questions can be used to engage learners and inspire them to think critically. When questioning trainees, it is important to avoid “quiz show” questions that test only whether a trainee can recall a fact (e.g., “What is the most common cause of X?”). In our current advanced technological age, answers to this type of question reveal less about thinking abilities than about how adept one is at searching the internet. These questions do not provide insight into the trainee’s understanding but can, we fear, subtly emphasize that the practice of medicine is about memorization, rather than thinking. In addition, this type of question is often perceived by the trainee as “pimping.” This can belittle the trainee while securing the attending physician’s place of power (35) and create a hostile learning environment. Contrast such questions with the following:

Attending: Why do you think this patient is hypotensive?

Attending: How does the BUN/creatinine ratio relate to the hypotension?

Attending: How would you expect the intravascular volume depletion to affect his hematocrit?

Questions like these allow the trainee to elaborate on her knowledge, which feels much safer to the learner and provides the attending insight into her thinking.

Resident: If my theory of sepsis were correct, I would think the patient would be intravascularly dry and have a higher hematocrit. The fact that it is only 35% and that his BUN/creatinine ratio is consistent with a prerenal picture is making me worried that maybe the hypotension is not from sepsis but, rather, from bleeding. I think we need to evaluate for gastrointestinal bleeding.

When the right questions are used to coach the resident, her thought processes are uncovered and she can be guided to the correct diagnosis. Although experience and domain-specific knowledge are important, data indicate that in the majority of malpractice cases involving diagnostic error, the problem is not that the doctor did not know the diagnosis; rather, she did not think of it. Reasoning, rather than knowledge, is key to avoiding mistakes in cases with confounding data.

Strategy 5: Assess Your Learner’s Critical Thinking

It is difficult, but necessary for trainee development, to assess critical thinking (18). Milestones, ranging from challenged and unreflective thinkers to accomplished critical thinkers, have been proposed (18). This approach is helpful not only for providing feedback to trainees on their critical thinking but also to give the trainees a framework to guide reflection on how they are thinking (see Table 2 for a description of the milestones).

Table 2.

Milestones of critical thinking and the descriptions of each stage

| Critical thinking milestone | Hallmarks of each milestone |
|---|---|
| *Challenged thinker* | Environmental pressures such as time force thinkers to make decisions; premature closure is a common bias |
| Unreflective thinker | Narrow differential diagnosis; anchoring is common |
| Beginning critical thinker | Broader but still limited differential; ignores data that do not fit; availability bias is common |
| Practicing critical thinker | Broad differential with mechanistic understanding, but differential is not weighted |
| Advanced critical thinker | Broad differential, admits uncertainty, engages in metacognition and solicits feedback |

Note that “Challenged thinker” is in italics because any thinker can be challenged as a result of environmental pressures or time constraints. Adapted from Papp (18).

It is important to note that anyone, even accomplished critical thinkers, can become “challenged critical thinkers” when the environment precludes critical thinking. This is particularly relevant in critical care. In a busy ICU, one is often faced with time pressure, which contributes to premature closure. In our case presented earlier, perhaps the resident had limited time to admit this patient, and thus settled on the diagnosis of sepsis. It is our hope that teaching trainees to recognize this risk will lead to fewer cognitive biases. Imagine a different exchange between faculty and resident:

Attending: How are you doing with the new admission? How are you thinking about the case?

Resident: I’m concerned this is sepsis, but there are a few pieces that don’t fit. However, given the two other admissions and the cardiac arrest on the floor who is heading our way, I haven’t been able to give this case as much thought as I would like to.

Attending: Okay, do you want to work through the case together? Or could I help with some other tasks so you have more time to think about this?

This type of response reflects a practicing critical thinker: one who is aware of her limitations and thinking processes. This can only occur, however, if the attending creates an environment in which critical thinking is valued by making a safe space and asking the right questions.

Conclusions

The ICU is a high-acuity, fast-paced, and high-stakes environment in which critical thinking is imperative. Despite the limited empirical evidence to guide faculty on best teaching practices for enhancing reasoning skills, it is our hope that these strategies will provide practical approaches for teaching this topic in the ICU. Given how fast medical knowledge grows and how rapidly technology allows us to find factual information, it is important to teach enduring principles, such as how to think.

Our job in the ICU, where literal life-and-death decisions are made daily, is to teach trainees to focus on how we actually think about problems and to uncover cognitive biases that cause flawed thinking and may lead to diagnostic error. The focus of the preclerkship curriculum at the undergraduate level is increasingly moving away from transfer of content to application of knowledge (36). When teaching residents and fellows, faculty should also emphasize thinking skills by making the thinking process explicit, discussing cognitive biases and debiasing strategies, modeling and teaching inductive reasoning, using questions to stimulate curiosity, and assessing critical thinking skills.

As Albert Einstein said, “Education... is not the learning of facts, but the training of the mind to think...” (38).

Footnotes

Author Contributions: M.M.H. contributed to manuscript drafting, figure creation, and editing; S.C. contributed to figure creation, critical review, and editing; and R.M.S. contributed to figure creation, critical review, and editing.

Author disclosures are available with the text of this article at www.atsjournals.org.

References

1. Scriven M, Paul R. Critical thinking as defined by the national council for excellence in critical thinking. Presented at the 8th Annual International Conference on Critical Thinking and Education Reform; August 1987; Rohnert Park, California.
2. Huang GC, Newman LR, Schwartzstein RM. Critical thinking in health professions education: summary and consensus statements of the Millennium Conference 2011. Teach Learn Med. 2014;26:95–102. doi: 10.1080/10401334.2013.857335.
3. Croskerry P. From mindless to mindful practice: cognitive bias and clinical decision making. N Engl J Med. 2013;368:2445–2448. doi: 10.1056/NEJMp1303712.
4. Facione P. Critical thinking: What it is and why it counts. Millbrae, CA: The California Academic Press; 2011.
5. Tversky A, Kahneman D. Judgement under uncertainty: heuristics and biases. Science. 1974;185:1124–1131. doi: 10.1126/science.185.4157.1124.
6. Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med. 2003;78:775–780. doi: 10.1097/00001888-200308000-00003.
7. Saber Tehrani AS, Lee H, Mathews SC, Shore A, Makary MA, Pronovost PJ, Newman-Toker DE. 25-year summary of US malpractice claims for diagnostic errors 1986-2010: an analysis from the National Practitioner Data Bank. BMJ Qual Saf. 2013;22:672–680. doi: 10.1136/bmjqs-2012-001550.
8. National Academies of Sciences, Engineering, and Medicine. Improving diagnosis in health care. Washington, DC: The National Academies Press; 2015.
9. Lambe KA, O’Reilly G, Kelly BD, Curristan S. Dual-process cognitive interventions to enhance diagnostic reasoning: a systematic review. BMJ Qual Saf. 2016;25:808–820. doi: 10.1136/bmjqs-2015-004417.
10. Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165:1493–1499. doi: 10.1001/archinte.165.13.1493.
11. Hoffman J, editor. 2014 Annual benchmarking report: malpractice risks in the diagnostic process. Cambridge, MA: CRICO Strategies; 2014 [accessed 2017 Feb 22]. Available from: https://psnet.ahrq.gov/resources/resource/28612/2014-annual-benchmarking-report-malpractice-risks-in-the-diagnostic-process.
12. Willingham DT. Critical thinking, why is it so hard to teach? Am Educ. 2007 Summer:8–19.
13. Wellbery C. Flaws in clinical reasoning: a common cause of diagnostic error. Am Fam Physician. 2011;84:1042–1048.
14. Norman GR, Monteiro SD, Sherbino J, Ilgen JS, Schmidt HG, Mamede S. The causes of errors in clinical reasoning: cognitive biases, knowledge deficits, and dual process thinking. Acad Med. 2017;92:23–30. doi: 10.1097/ACM.0000000000001421.
15. Croskerry P. Diagnostic failure: a cognitive and affective approach. In: Henriksen K, Battles JB, Marks ES, Lewin DI, editors. Advances in patient safety: from research to implementation. Volume 2: concepts and methodology. Rockville, MD: Agency for Healthcare Research and Quality; 2005. pp. 241–254.
16. Croskerry P. Cognitive forcing strategies in clinical decisionmaking. Ann Emerg Med. 2003;41:110–120. doi: 10.1067/mem.2003.22.
17. Croskerry P, Petrie DA, Reilly JB, Tait G. Deciding about fast and slow decisions. Acad Med. 2014;89:197–200. doi: 10.1097/ACM.0000000000000121.
18. Papp KK, Huang GC, Lauzon Clabo LM, Delva D, Fischer M, Konopasek L, Schwartzstein RM, Gusic M. Milestones of critical thinking: a developmental model for medicine and nursing. Acad Med. 2014;89:715–720. doi: 10.1097/ACM.0000000000000220.
19. Garrouste Orgeas M, Timsit JF, Soufir L, Tafflet M, Adrie C, Philippart F, Zahar JR, Clec’h C, Goldran-Toledano D, Jamali S, et al. Outcomerea Study Group. Impact of adverse events on outcomes in intensive care unit patients. Crit Care Med. 2008;36:2041–2047. doi: 10.1097/CCM.0b013e31817b879c.
20. Anderson LW, Krathwohl DR. Taxonomy for learning, teaching and assessing: a revision of Bloom’s taxonomy of educational objectives. New York: Longman; 2001.
21. Kahneman D. Thinking, fast and slow. New York: Farrar, Straus and Giroux; 2011.
22. Evans JS, Stanovich KE. Dual-process theories of higher cognition: advancing the debate. Perspect Psychol Sci. 2013;8:223–241. doi: 10.1177/1745691612460685.
23. Ark TK, Brooks LR, Eva KW. Giving learners the best of both worlds: do clinical teachers need to guard against teaching pattern recognition to novices? Acad Med. 2006;81:405–409. doi: 10.1097/00001888-200604000-00017.
24. Haselton MG, Nettle D, Andrews PW. The evolution of cognitive bias. In: Buss DM, editor. The handbook of evolutionary psychology. Hoboken, NJ: John Wiley & Sons Inc.; 2005. pp. 724–746.
25. Elstein AS. Thinking about diagnostic thinking: a 30-year perspective. Adv Health Sci Educ Theory Pract. 2009;14:7–18. doi: 10.1007/s10459-009-9184-0.
26. Reason J. Human error. Cambridge: Cambridge University Press; 1990.
27. Croskerry P. Achieving quality in clinical decision making: cognitive strategies and detection of bias. Acad Emerg Med. 2002;9:1184–1204. doi: 10.1111/j.1553-2712.2002.tb01574.x.
28. Arkes HR. Impediments to accurate clinical judgment and possible ways to minimize their impact. In: Arkes HR, Hammond KR, editors. Judgement and decision making: an interdisciplinary reader. New York: Cambridge University Press; 1986. pp. 582–592.
29. Simpkin AL, Schwartzstein RM. Tolerating uncertainty: the next medical revolution? N Engl J Med. 2016;375:1713–1715. doi: 10.1056/NEJMp1606402.
30. Croskerry P. The feedback sanction. Acad Emerg Med. 2000;7:1232–1238. doi: 10.1111/j.1553-2712.2000.tb00468.x.
31. Bowen JL. Educational strategies to promote clinical diagnostic reasoning. N Engl J Med. 2006;355:2217–2225. doi: 10.1056/NEJMra054782.
32. Pottier P, Hardouin J-B, Hodges BD, Pistorius MA, Connault J, Durant C, Clairand R, Sebille V, Barrier JH, Planchon B. Exploring how students think: a new method combining think-aloud and concept mapping protocols. Med Educ. 2010;44:926–935. doi: 10.1111/j.1365-2923.2010.03748.x.
33. Guerrero APS. Mechanistic case diagramming: a tool for problem-based learning. Acad Med. 2001;76:385–389. doi: 10.1097/00001888-200104000-00020.
34. Richards J, Schwartzstein R, Irish J, Almeida J, Roberts D. Clinical physiology grand rounds. Clin Teach. 2013;10:88–93. doi: 10.1111/j.1743-498X.2012.00614.x.
35. Kost A, Chen FM. Socrates was not a pimp: changing the paradigm of questioning in medical education. Acad Med. 2015;90:20–24. doi: 10.1097/ACM.0000000000000446.
36. Krupat E, Richards JB, Sullivan AM, Fleenor TJ Jr, Schwartzstein RM. Assessing the effectiveness of case-based collaborative learning via randomized controlled trial. Acad Med. 2016;91:723–729. doi: 10.1097/ACM.0000000000001004.
37. Hogarth RM. Judgement and choice: the psychology of decision. Chichester: Wiley; 1980.
38. Frank P. Einstein: his life and times. New York: Da Capo Press; 2002.
