Abstract

Sparsity-based approaches have been popular in many applications in image processing and imaging. Compressed sensing exploits the sparsity of images in a transform domain or dictionary to improve image recovery from undersampled measurements. In the context of inverse problems in dynamic imaging, recent research has demonstrated the promise of sparsity and low-rank techniques. For example, the patches of the underlying data are modeled as sparse in an adaptive dictionary domain, and the resulting image and dictionary estimation from undersampled measurements is called dictionary-blind compressed sensing, or the dynamic image sequence is modeled as a sum of low-rank and sparse (in some transform domain) components (L+S model) that are estimated from limited measurements. In this work, we investigate a data-adaptive extension of the L+S model, dubbed LASSI, where the temporal image sequence is decomposed into a low-rank component and a component whose spatiotemporal (3D) patches are sparse in some adaptive dictionary domain. We investigate various formulations and efficient methods for jointly estimating the underlying dynamic signal components and the spatiotemporal dictionary from limited measurements. We also obtain efficient sparsity penalized dictionary-blind compressed sensing methods as special cases of our LASSI approaches. Our numerical experiments demonstrate the promising performance of LASSI schemes for dynamic magnetic resonance image reconstruction from limited k-t space data compared to recent methods such as k-t SLR and L+S, and compared to the proposed dictionary-blind compressed sensing method.

Index Terms: Dynamic imaging, Structured models, Sparse representations, Dictionary learning, Inverse problems, Magnetic resonance imaging, Machine learning, Nonconvex optimization

I. Introduction

Sparsity-based techniques are popular in many applications in image processing and imaging. Sparsity in either a fixed or data-adaptive dictionary or transform is fundamental to the success of popular techniques such as compressed sensing that aim to reconstruct images from limited sensor measurements. In this work, we focus on low-rank and adaptive dictionary-sparse models for dynamic imaging data and exploit such models to perform image reconstruction from limited (compressive) measurements. In the following, we briefly review compressed sensing (CS), CS-based magnetic resonance imaging (MRI), and dynamic data modeling, before outlining the contributions of this work.

A. Background

CS [1]–[4] is a popular technique that enables recovery of signals or images from far fewer measurements (or at a lower rate) than the number of unknowns or than required by Nyquist sampling conditions. CS assumes that the underlying signal is sparse in some transform domain or dictionary and that the measurement acquisition procedure is incoherent in an appropriate sense with the dictionary. CS has been shown to be very useful for MRI [5], [6]. MRI is a relatively slow modality because the data, which are samples in the Fourier space (or k-space) of the object, are acquired sequentially in time. In spite of advances in scanner hardware and pulse sequences, the rate at which MR data are acquired is limited by MR physics and physiological constraints [5].

CS has been applied to a variety of MR techniques such as static MRI [5], [7], [8], dynamic MRI (dMRI) [6], [9]–[11], parallel imaging (pMRI) [12]–[15], and perfusion imaging and diffusion tensor imaging (DTI) [16]. For static MR imaging, CS-based MRI (CSMRI) involves undersampling the k-space data (e.g., collecting fewer phase encodes) using random sampling techniques to accelerate data acquisition. However, in dynamic MRI the data are inherently undersampled because the object changes while the data are being collected, so in a sense all dynamic MRI scans (of k-t space) involve some form of CS because one must reconstruct the dynamic images from undersampled data. The traditional approach to this problem in MRI is to use “data sharing”, where data are pooled in time to make sets of k-space data (e.g., in the form of a Casorati matrix [17]) that appear to have sufficient samples, but these methods do not fully model the temporal changes in the object. CS-based dMRI can achieve improved temporal (or spatial) resolution by using more explicit signal models rather than only implicit k-space data sharing, albeit at the price of increased computation.

CSMRI reconstructions with fixed, non-adaptive signal models (e.g., wavelets or total variation sparsity) typically suffer from artifacts at high undersampling factors [18]. Thus, there has been growing interest in image reconstruction methods where the dictionary is adapted to provide highly sparse representations of data. Recent research has shown benefits for such data-driven adaptation of dictionaries [19]–[22] in many applications [18], [23]–[25]. For example, the DLMRI method [18] jointly estimates the image and a synthesis dictionary for the image patches from undersampled k-space measurements. The model there is that the unknown (vectorized) image patches can be well approximated by a sparse linear combination of the columns or atoms of a learned (a priori unknown) dictionary D. This idea of joint dictionary learning and signal reconstruction from undersampled measurements [18], known as (dictionary) blind compressed sensing (BCS) [26], has been the focus of several recent works (including for dMRI reconstruction) [18], [27]–[36]. The BCS problem is harder than conventional (non-adaptive) compressed sensing. However, the dictionaries learned in BCS typically reflect the underlying image properties better than pre-determined models, thus improving image reconstructions.

While CS methods use sparse signal models, various alternative models have been explored for dynamic data in recent years. Several works have demonstrated the efficacy of low-rank models (e.g., by constraining the Casorati data matrix to have low-rank) for dynamic MRI reconstruction [17], [37]– [39]. A recent work [40] also considered a low-rank property for local space-time image patches. For data such as videos (or collections of related images [41]), there has been growing interest in decomposing the data into the sum of a low-rank (L) and a sparse (S) component [42]–[44]. In this L+S (or equivalently Robust Principal Component Analysis (RPCA) [42]) model, the L component may capture the background of the video, while the S component captures the sparse (dynamic) foreground. The L+S model has been recently shown to be promising for CS-based dynamic MRI [45], [46]. The S component of the L+S decomposition could either be sparse by itself or sparse in some known dictionary or transform domain. Some works alternatively consider modeling the dynamic image sequence as both low-rank and sparse (L & S) [47], [48], with a recent work [49] learning dictionaries for the S part of L & S. In practice, which model provides better image reconstructions may depend on the specific properties of the underlying data.

When employing the L+S model, the CS reconstruction problem can be formulated as follows:

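$$\min_{x_L,\,x_S}\ \frac{1}{2}\left\|A(x_L+x_S)-d\right\|_2^2+\lambda_L\left\|R_1(x_L)\right\|_*+\lambda_S\left\|Tx_S\right\|_1 \tag{P0}$$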

In (P0), the underlying unknown dynamic object is x = xL + xS ∈ ℂ^{NxNyNt}, where xL and xS are vectorized versions of space-time (3D) tensors corresponding to Nt temporal frames, each an image of size Nx × Ny. The operator A is the sensing or encoding operator and d denotes the (undersampled) measurements. For parallel imaging with Nc receiver coils, applying the operator A involves frame-by-frame multiplication by coil sensitivities followed by applying an undersampled Fourier encoding (i.e., the SENSE method) [50]. The operation R1(xL) reshapes xL into an NxNy × Nt matrix, and ||·||* denotes the nuclear norm that sums the singular values of a matrix. The nuclear norm serves as a convex surrogate for matrix rank in (P0). Traditionally, the operator T in (P0) is a known sparsifying transform for xS, and λL and λS are non-negative weights.
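To make the roles of R1, the nuclear norm, and the two weights concrete, the following is a minimal NumPy sketch that evaluates an L+S cost of the kind described above. It assumes a data-fit term of the form (1/2)||A(xL + xS) − d||², row-major vectorization with time as the last axis, and operators A and T passed as plain Python callables; the function names are illustrative and not taken from the original work.

```python
import numpy as np

def casorati(x, shape):
    """R1: reshape a vectorized (Nx, Ny, Nt) sequence into an (Nx*Ny) x Nt matrix.

    Assumption: row-major vectorization with time as the last (fastest) axis.
    """
    nx, ny, nt = shape
    return np.reshape(x, (nx * ny, nt))

def nuclear_norm(mat):
    """Sum of the singular values of a matrix."""
    return np.linalg.svd(mat, compute_uv=False).sum()

def ls_objective(x_l, x_s, A, d, T, lam_l, lam_s, shape):
    """Data fit + lam_l * nuclear norm of R1(x_l) + lam_s * l1 norm of T(x_s)."""
    resid = A(x_l + x_s) - d
    data_fit = 0.5 * np.linalg.norm(resid) ** 2
    low_rank = lam_l * nuclear_norm(casorati(x_l, shape))
    sparse = lam_s * np.abs(T(x_s)).sum()
    return data_fit + low_rank + sparse
```

With identity operators and a constant xL, the Casorati matrix has rank one, so its nuclear norm equals its Frobenius norm, which gives a quick sanity check of the sketch.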

B. Contributions

This work investigates in detail the extension of the L+S model for dynamic data to a Low-rank + Adaptive Sparse SIgnal (LASSI) model. In particular, we decompose the underlying temporal image sequence into a low-rank component and a component whose overlapping spatiotemporal (3D) patches are assumed sparse in some adaptive dictionary domain. We propose a framework to jointly estimate the underlying signal components and the spatiotemporal dictionary from limited measurements. We compare using ℓ0 and ℓ1 penalties for sparsity in our formulations, and also investigate adapting structured dictionaries, where the atoms of the dictionary, after being reshaped into space-time matrices, are low-rank. The proposed iterative LASSI reconstruction algorithms involve efficient block coordinate descent-type updates of the dictionary and sparse coefficients of patches, and an efficient proximal gradient-based update of the signal components. We also obtain novel sparsity penalized dictionary-blind compressed sensing methods as special cases of our LASSI approaches.

Our experiments demonstrate the promising performance of the proposed data-driven schemes for dMRI reconstruction from limited k-t space data. In particular, we show that the LASSI methods give much improved reconstructions compared to the recent L+S method and methods involving joint L & S modeling [47]. We also show improvements with LASSI compared to the proposed spatiotemporal dictionary-BCS methods (that are special cases of LASSI). Moreover, learning structured dictionaries and using the ℓ0 sparsity “norm” in LASSI are shown to be advantageous in practice. Finally, in our experiments, we compare the use of conventional singular value thresholding (SVT) for updating the low-rank signal component in the LASSI algorithms to alternative approaches including the recent OptShrink method [52]–[54].

A short version of this work investigating a specific LASSI method appears elsewhere [55]. Unlike [55], here we study several dynamic signal models and reconstruction approaches in detail, illustrate the convergence and learning behavior of the proposed methods, and demonstrate their effectiveness for several datasets and undersampling factors.

C. Organization

The rest of this paper is organized as follows. Section II describes our models and problem formulations for dynamic image reconstruction. Section III presents efficient algorithms for the proposed problems and discusses the algorithms’ properties. Section IV presents experimental results demonstrating the convergence behavior and performance of the proposed schemes for the dynamic MRI application. Section V concludes with proposals for future work.

II. Models and Problem Formulations

A. LASSI Formulations

We model the dynamic image data as x = xL + xS, where xL is low-rank when reshaped into a (space-time) matrix, and we assume that the spatiotemporal (3D) patches in the vectorized tensor xS are sparse in some adaptive dictionary domain. We replace the regularizer ζ(xS) = ||TxS||1 (with weight λS) in (P0) by the following patch-based dictionary learning regularizer

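$$\zeta(x_S)=\min_{D,\,Z}\ \sum_{j=1}^{M}\left\|P_jx_S-Dz_j\right\|_2^2+\lambda_Z^2\left\|Z\right\|_0\quad\text{s.t.}\ \ \left\|Z\right\|_\infty\le a,\ \ \operatorname{rank}\left(R_2(d_i)\right)\le r,\ \ \left\|d_i\right\|_2=1\ \ \forall\, i \tag{1}$$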

to arrive at the following problem for joint image sequence reconstruction and dictionary estimation:

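$$\min_{D,\,Z,\,x_L,\,x_S}\ \frac{1}{2}\left\|A(x_L+x_S)-d\right\|_2^2+\lambda_L\left\|R_1(x_L)\right\|_*+\lambda_S\left\{\sum_{j=1}^{M}\left\|P_jx_S-Dz_j\right\|_2^2+\lambda_Z^2\left\|Z\right\|_0\right\}\quad\text{s.t.}\ \ \left\|Z\right\|_\infty\le a,\ \ \operatorname{rank}\left(R_2(d_i)\right)\le r,\ \ \left\|d_i\right\|_2=1\ \ \forall\, i \tag{P1}$$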

Here, Pj is a patch extraction matrix that extracts an mx × my × mt spatiotemporal patch from xS as a vector. A total of M (spatially and temporally) overlapping 3D patches are assumed. Matrix D ∈ ℂ^{m×K} with m = mxmymt is the synthesis dictionary to be learned and zj ∈ ℂ^K is the unknown sparse code for the jth patch, with PjxS ≈ Dzj.
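The action of the patch extraction matrices Pj can be sketched as follows. This is only an illustration: the helper name `extract_patches`, the row-major vectorization of each patch, and the handling of image boundaries (patches that would cross the boundary are simply skipped) are assumptions, not details given in the text.

```python
import numpy as np

def extract_patches(x, patch_shape, stride):
    """Return an m x M matrix whose j-th column is the vectorized 3D patch P_j x.

    x           : (Nx, Ny, Nt) array (space-time tensor form of x_S)
    patch_shape : (mx, my, mt)
    stride      : step between patch corners along every axis (assumed equal)
    """
    mx, my, mt = patch_shape
    nx, ny, nt = x.shape
    cols = []
    for i in range(0, nx - mx + 1, stride):
        for j in range(0, ny - my + 1, stride):
            for k in range(0, nt - mt + 1, stride):
                cols.append(x[i:i + mx, j:j + my, k:k + mt].ravel())
    return np.stack(cols, axis=1)
```

Each column has length m = mx·my·mt, so the result has the shape expected of the patch matrix that multiplies against the sparse codes in PjxS ≈ Dzj.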

We use Z ∈ ℂ^{K×M} to denote the matrix that has the sparse codes zj as its columns, ||Z||0 (based on the ℓ0 “norm”) counts the number of nonzeros in the matrix Z, and λZ ≥ 0. Problem (P1) penalizes the number of nonzeros in the (entire) coefficient matrix Z, allowing variable sparsity levels across patches. This is a general and flexible model for image patches (e.g., patches from different regions in the dynamic image sequence may contain different amounts of information and therefore all patches may not be well represented at the same sparsity) and leads to promising performance in our experiments. The constraint ||Z||∞ ≜ maxj ||zj||∞ ≤ a with a > 0 is used in (P1) because the objective (specifically the regularizer (1)) is non-coercive with respect to Z [56]. The ℓ∞ constraint prevents pathologies that could theoretically arise (e.g., unbounded algorithm iterates) due to the non-coercive objective. In practice, we set a very large, and the constraint is typically inactive.

The atoms or columns of D, denoted by di, are constrained to have unit norm in (P1) to avoid scaling ambiguity between D and Z [56], [57]. We also model the reshaped dictionary atoms R2(di) as having rank at most r > 0, where the operator R2(·) reshapes di into an mxmy × mt space-time matrix. Imposing low-rank (small r) structure on reshaped dictionary atoms is motivated by our empirical observation that the dictionaries learned on image patches (without such a constraint) tend to have reshaped atoms with only a few dominant singular values. Results included in the supplement show that dictionaries learned on dynamic image patches with low-rank atom constraints tend to represent such data as well as learned dictionaries with full-rank atoms. Importantly, such structured dictionary learning may be less prone to over-fitting in scenarios involving limited or corrupted data. We illustrate this for the dynamic MRI application in Section IV.

When zj is highly sparse (with ||zj||0 ≪ min(mt,mxmy)) and R2(di) has low rank (say rank-1), the model PjxS ≈ Dzj corresponds to approximating the space-time patch matrix as a sum of a few reshaped low-rank (rank-1) atoms. This special (extreme) case would correspond to approximating the patch itself as low-rank. However, in general the decomposition Dzj could involve numerous (> min(mt,mxmy)) active atoms, corresponding to a rich, not necessarily low-rank, patch model. Experimental results in Section IV illustrate the benefits of such rich models.

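Problem (P1) jointly learns a decomposition x = xL + xS and a dictionary D along with the sparse coefficients Z (of spatiotemporal patches) from the measurements d. Unlike (P0), the fully-adaptive Problem (P1) is nonconvex. An alternative to (P1) involves replacing the ℓ0 “norm” with the convex ℓ1 norm (with $\|Z\|_1=\sum_{j=1}^{M}\|z_j\|_1$) as follows:

$$\min_{D,\,Z,\,x_L,\,x_S}\ \frac{1}{2}\left\|A(x_L+x_S)-d\right\|_2^2+\lambda_L\left\|R_1(x_L)\right\|_*+\lambda_S\left\{\sum_{j=1}^{M}\left\|P_jx_S-Dz_j\right\|_2^2+\lambda_Z\left\|Z\right\|_1\right\}\quad\text{s.t.}\ \ \operatorname{rank}\left(R_2(d_i)\right)\le r,\ \ \left\|d_i\right\|_2=1\ \ \forall\, i \tag{P2}$$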

Problem (P2) is also nonconvex due to the product Dzj (and the nonconvex constraints), so the question of choosing (P2) or (P1) is one of image quality, not convexity.

Finally, the convex nuclear norm penalty ||R1(xL)||* in (P1) or (P2) could be alternatively replaced with a nonconvex penalty on the rank of R1(xL), or the function $\|\cdot\|_p^p$ for p < 1 (based on the Schatten p-norm) that is applied to the vector of singular values of R1(xL) [47]. While we focus mainly on the popular nuclear norm penalty in our investigations, we also briefly study some of the alternatives in Section III and Section IV-D.

B. Special Case of LASSI Formulations: Dictionary-Blind Image Reconstruction

When λL → ∞ in (P1) or (P2), the optimal low-rank component of the dynamic image sequence becomes inactive (zero). The problems then become pure spatiotemporal dictionary-blind image reconstruction problems (with xL = 0 and x = xS) involving ℓ0 or ℓ1 overall sparsity [56] penalties. For example, Problem (P1) reduces to the following form:

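$$\min_{D,\,Z,\,x}\ \frac{1}{2}\left\|Ax-d\right\|_2^2+\lambda_S\left\{\sum_{j=1}^{M}\left\|P_jx-Dz_j\right\|_2^2+\lambda_Z^2\left\|Z\right\|_0\right\}\quad\text{s.t.}\ \ \left\|Z\right\|_\infty\le a,\ \ \operatorname{rank}\left(R_2(d_i)\right)\le r,\ \ \left\|d_i\right\|_2=1\ \ \forall\, i \tag{2}$$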

We refer to formulation (2) with its low-rank atom constraints as the DINO-KAT (DIctioNary with lOw-ranK AToms) blind image reconstruction problem. A similar formulation is obtained from (P2) but with an ℓ1 penalty. These formulations differ from the ones proposed for dynamic image reconstruction in prior works such as [28], [35], [31]. In [35], dynamic image reconstruction is performed by learning a common real-valued dictionary for the spatio-temporal patches of the real and imaginary parts of the dynamic image sequence. The algorithm therein involves dictionary learning using K-SVD [21], where sparse coding is performed using the approximate and expensive orthogonal matching pursuit method [58]. In contrast, the algorithms in this work (cf. Section III) for the overall sparsity penalized DINO-KAT blind image reconstruction problems involve simple and efficient updating of the complex-valued spatio-temporal dictionary (for complex-valued 3D patches) and sparse coefficients (by simple thresholding) in the formulations. The advantages of employing sparsity penalized dictionary learning over conventional approaches like K-SVD are discussed in more detail elsewhere [56]. In [31], a spatio-temporal dictionary is learned for the complex-valued 3D patches of the dynamic image sequence (a total variation penalty is also used), but the method again involves dictionary learning using K-SVD. In the blind compressed sensing method of [28], the time-profiles of individual image pixels were modeled as sparse in a learned dictionary. The 1D voxel time-profiles are a special case of general overlapping 3D (spatio-temporal) patches. Spatio-temporal dictionaries as used here may help capture redundancies in both spatial and temporal dimensions in the data. Finally, unlike the prior works, the DINO-KAT schemes in this work involve structured dictionary learning with low-rank reshaped atoms.

III. Algorithms and Properties

A. Algorithms

We propose efficient block coordinate descent-type algorithms for (P1) and (P2), where, in one step, we update (D,Z) keeping (xL, xS) fixed (Dictionary Learning Step), and then we update (xL, xS) keeping (D,Z) fixed (Image Reconstruction Step). We repeat these alternating steps in an iterative manner. The algorithm for the DINO-KAT blind image reconstruction problem (2) (or its ℓ1 version) is similar, except that xL = 0 during the update steps. Therefore, we focus on the algorithms for (P1) and (P2) in the following.

1) Dictionary Learning Step

Here, we optimize (P1) or (P2) with respect to (D,Z). We first describe the update procedure for (P1). Denoting by P the matrix that has the patches PjxS for 1 ≤ j ≤ M as its columns, and with C ≜ Z^H, the optimization problem with respect to (D,Z) in the case of (P1) can be rewritten as follows:

$$\min_{D,\,C}\ \left\|P-\sum_{i=1}^{K}d_ic_i^H\right\|_F^2+\lambda_Z^2\sum_{i=1}^{K}\left\|c_i\right\|_0\quad\text{s.t.}\ \ \left\|c_i\right\|_\infty\le a,\ \ \operatorname{rank}\left(R_2(d_i)\right)\le r,\ \ \left\|d_i\right\|_2=1\ \ \forall\, i \tag{P3}$$

Here, we express the matrix DC^H as a Sum of OUter Products (SOUP) $\sum_{i=1}^{K}d_ic_i^H$. We then employ an iterative block coordinate descent method for (P3), where the columns ci of C and atoms di of D are updated sequentially by cycling over all i values [56]. Specifically, for each 1 ≤ i ≤ K, we solve (P3) first with respect to ci (sparse coding) and then with respect to di (dictionary atom update).

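For the minimization with respect to ci, we have the following subproblem, where $E_i\triangleq P-\sum_{k\neq i}d_kc_k^H$ is computed using the most recent estimates of the other variables:

$$\min_{c_i}\ \left\|E_i-d_ic_i^H\right\|_F^2+\lambda_Z^2\left\|c_i\right\|_0\quad\text{s.t.}\ \ \left\|c_i\right\|_\infty\le a \tag{3}$$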

The minimizer ĉi of (3) is given by [56]

$$\hat{c}_i=\min\left(\left|H_{\lambda_Z}\left(E_i^Hd_i\right)\right|,\ a\,1_M\right)\odot e^{j\angle E_i^Hd_i} \tag{4}$$

where the hard-thresholding operator H_{λZ}(·) zeros out vector entries with magnitude less than λZ and leaves the other entries (with magnitude ≥ λZ) unaffected. Here, |·| computes the magnitude of vector entries, 1M denotes a vector of ones of length M, “⊙” denotes element-wise multiplication, min(·, ·) denotes element-wise minimum, and we choose a such that a > λZ. For a vector c ∈ ℂ^M, e^{j∠c} ∈ ℂ^M is computed element-wise, with “∠” denoting the phase.
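A minimal NumPy sketch of this truncated hard-thresholding update may help. It assumes the update acts on the vector b = Ei^H di, hard-thresholds its magnitudes at λZ, clips them at a, and restores the phase of b, as the description above suggests; the function name `sparse_code_update` and its argument names are hypothetical, and Ei is formed explicitly here only for readability.

```python
import numpy as np

def sparse_code_update(E_i, d_i, lam_z, a):
    """Truncated hard-thresholding update for one column c_i of C.

    E_i   : (m, M) residual matrix (explicit here only for illustration)
    d_i   : (m,) unit-norm atom
    lam_z : hard threshold (entries with |b| < lam_z are zeroed)
    a     : magnitude cap from the l-infinity constraint, with a > lam_z
    """
    b = E_i.conj().T @ d_i                     # b = E_i^H d_i, length M
    mag = np.abs(b)
    mag = np.where(mag >= lam_z, mag, 0.0)     # hard thresholding
    mag = np.minimum(mag, a)                   # element-wise clipping at a
    return mag * np.exp(1j * np.angle(b))      # restore the phase of b
```

For example, with di equal to the first standard basis vector, b is the conjugate of the first row of Ei, so entries of small magnitude vanish, entries between λZ and a pass through unchanged, and larger entries keep their phase but have their magnitude capped at a.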

Optimizing (P3) with respect to the atom di while holding all other variables fixed yields the following subproblem:

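$$\min_{d_i}\ \left\|E_i-d_ic_i^H\right\|_F^2\quad\text{s.t.}\ \ \operatorname{rank}\left(R_2(d_i)\right)\le r,\ \ \left\|d_i\right\|_2=1 \tag{5}$$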

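Let $U_r\Sigma_rV_r^H$ denote an optimal rank-r approximation to R2(Eici) ∈ ℂ^{mxmy×mt} that is obtained using the r leading singular vectors and singular values of the full singular value decomposition (SVD) R2(Eici) ≜ UΣV^H. Then a global minimizer of (5), upon reshaping, is

$$R_2(\hat{d}_i)=\begin{cases}\dfrac{U_r\Sigma_rV_r^H}{\left\|\Sigma_r\right\|_F}, & \text{if } c_i\neq 0\\[2mm] W, & \text{if } c_i=0\end{cases} \tag{6}$$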

where W is any normalized matrix with rank at most r, of appropriate dimensions (e.g., we use the reshaped first column of the m × m identity matrix). The proof for (6) is included in the supplementary material.

If r = min(mxmy,mt), then no SVD is needed and the solution is [56]

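$$\hat{d}_i=\begin{cases}\dfrac{E_ic_i}{\left\|E_ic_i\right\|_2}, & \text{if } c_i\neq 0\\[2mm] w, & \text{if } c_i=0\end{cases} \tag{7}$$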

where w is any vector on the m-dimensional unit sphere (e.g., we use the first column of the m × m identity).
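The two atom updates can be sketched together in NumPy. This is an illustration under stated assumptions: R2 is taken to be a row-major reshape into an mxmy × mt matrix, the low-rank case normalizes the truncated SVD by the norm of its retained singular values, and the fallback atom is the first column of the identity, as in the text. The function name `atom_update` is hypothetical, and the sketch also falls back when Ei ci happens to be zero, which is a defensive choice not discussed in the text.

```python
import numpy as np

def atom_update(E_i, c_i, mx_my, mt, r):
    """Update one unit-norm atom whose reshaped form has rank at most r.

    E_i   : (m, M) residual matrix, with m = mx_my * mt
    c_i   : (M,) current coefficient vector for this atom
    mx_my : number of spatial entries per patch (mx * my)
    mt    : number of temporal entries per patch
    """
    m = mx_my * mt
    h = E_i @ c_i
    if not np.any(h):
        # Degenerate case: return the first column of the m x m identity,
        # whose reshaped form has rank one.
        d = np.zeros(m, dtype=complex)
        d[0] = 1.0
        return d
    if r >= min(mx_my, mt):
        # Full-rank atoms allowed: no SVD is needed.
        return h / np.linalg.norm(h)
    H = h.reshape(mx_my, mt)                       # assumed form of R2
    U, s, Vh = np.linalg.svd(H, full_matrices=False)
    H_r = (U[:, :r] * s[:r]) @ Vh[:r, :]           # best rank-r approximation
    return (H_r / np.linalg.norm(s[:r])).ravel()   # unit Frobenius norm
```

Dividing by the norm of the retained singular values makes the reshaped atom have unit Frobenius norm, which is the same as unit ℓ2 norm for the vectorized atom.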

In the case of (P2), when minimizing with respect to (D,Z), we again set C = Z^H, which yields an ℓ1 penalized dictionary learning problem (a simple variant of (P3)). The dictionary and sparse coefficients are then updated using a similar block coordinate descent method as for (P3). In particular, the coefficients ci are updated using soft thresholding:

$$\hat{c}_i=\max\left(\left|E_i^Hd_i\right|-\frac{\lambda_Z}{2}\,1_M,\ 0\right)\odot e^{j\angle E_i^Hd_i} \tag{8}$$
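The soft-thresholding update can be sketched in the same style. The threshold λZ/2 used below is an assumption: it is what one obtains when the per-atom cost is ||Ei − di ci^H||_F² + λZ||ci||1 with a unit-norm atom, and it should be adjusted if the penalty is scaled differently. The function name `soft_threshold_update` is hypothetical.

```python
import numpy as np

def soft_threshold_update(E_i, d_i, lam_z):
    """Soft-thresholding update for one column c_i of C (l1-penalized case).

    Assumes the cost ||E_i - d_i c_i^H||_F^2 + lam_z * ||c_i||_1 with
    ||d_i||_2 = 1, for which the magnitude threshold is lam_z / 2.
    """
    b = E_i.conj().T @ d_i                           # b = E_i^H d_i
    mag = np.maximum(np.abs(b) - lam_z / 2.0, 0.0)   # shrink magnitudes
    return mag * np.exp(1j * np.angle(b))            # keep the phase of b
```

Unlike hard thresholding, every surviving coefficient is shrunk toward zero by the same amount, which is the usual bias of ℓ1 penalties.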

2) Image Reconstruction Step

Minimizing (P1) or (P2) with respect to xL and xS yields the following subproblem:

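$$\min_{x_L,\,x_S}\ \frac{1}{2}\left\|A(x_L+x_S)-d\right\|_2^2+\lambda_L\left\|R_1(x_L)\right\|_*+\lambda_S\sum_{j=1}^{M}\left\|P_jx_S-Dz_j\right\|_2^2 \tag{P4}$$

Problem (P4) is convex but nonsmooth, and its objective has the form f(xL, xS) + g1(xL) + g2(xS), with $f(x_L,x_S)\triangleq\frac{1}{2}\|A(x_L+x_S)-d\|_2^2$, $g_1(x_L)\triangleq\lambda_L\|R_1(x_L)\|_*$, and $g_2(x_S)\triangleq\lambda_S\sum_{j=1}^{M}\|P_jx_S-Dz_j\|_2^2$. We employ the proximal gradient method [45] for (P4), whose iterates, denoted by superscript k, take the following form:

$$x_L^{k}=\operatorname{prox}_{t_kg_1}\left(x_L^{k-1}-t_k\nabla_{x_L}f\left(x_L^{k-1},x_S^{k-1}\right)\right) \tag{9}$$

$$x_S^{k}=\operatorname{prox}_{t_kg_2}\left(x_S^{k-1}-t_k\nabla_{x_S}f\left(x_L^{k-1},x_S^{k-1}\right)\right) \tag{10}$$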

where the proximity function is defined as

$$\operatorname{prox}_{t_kg}(y)\triangleq\arg\min_{z}\ \frac{1}{2t_k}\left\|y-z\right\|_2^2+g(z) \tag{11}$$

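and the gradients of f are given by

$$\nabla_{x_L}f(x_L,x_S)=\nabla_{x_S}f(x_L,x_S)=A^HA(x_L+x_S)-A^Hd.$$

The update in (9) corresponds to the singular value thresholding (SVT) operation [59]. Indeed, defining $\tilde{x}_L^{k-1}\triangleq x_L^{k-1}-t_k\nabla_{x_L}f(x_L^{k-1},x_S^{k-1})$, it follows from (9) and (11) [59] that

$$R_1\left(x_L^{k}\right)=\mathrm{SVT}_{t_k\lambda_L}\left(R_1\left(\tilde{x}_L^{k-1}\right)\right) \tag{12}$$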

Here, the SVT operator for a given threshold τ > 0 is

$$\mathrm{SVT}_\tau(Y)=\sum_{i}\left(\sigma_i-\tau\right)_+u_iv_i^H \tag{13}$$

where UΣVH is the SVD of Y with σi denoting the ith largest singular value and ui and vi denoting the ith columns of U and V, and (·)+ = max(·, 0) sets negative values to zero.
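The SVT operator is short enough to state in code. The sketch below soft-thresholds the singular values of its argument at τ and reassembles the matrix; only the function name `svt` is introduced here for illustration.

```python
import numpy as np

def svt(Y, tau):
    """Singular value thresholding: shrink each singular value of Y by tau,
    clip at zero, and rebuild the matrix from the same singular vectors."""
    U, s, Vh = np.linalg.svd(Y, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)
    return (U * s_shrunk) @ Vh      # scales column i of U by s_shrunk[i]
```

In the xL update, Y would be the Casorati-reshaped gradient step and τ the product of the step size and λL, so larger steps or larger λL remove more of the small singular values.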

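Let $\tilde{x}_S^{k-1}\triangleq x_S^{k-1}-t_k\nabla_{x_S}f(x_L^{k-1},x_S^{k-1})$. Then (10) and (11) imply that $x_S^{k}$ satisfies the following normal equation:

$$\left(I+2t_k\lambda_S\sum_{j=1}^{M}P_j^TP_j\right)x_S^{k}=\tilde{x}_S^{k-1}+2t_k\lambda_S\sum_{j=1}^{M}P_j^TDz_j \tag{14}$$

Solving (14) for $x_S^{k}$ is straightforward because the matrix pre-multiplying $x_S^{k}$ is diagonal, and thus its inverse can be computed cheaply. The term $2t_k\lambda_S\sum_{j=1}^{M}P_j^TDz_j$ in (14) can also be computed cheaply using patch-based operations.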
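The diagonal structure can be made explicit with a small NumPy sketch. It assumes that the sum of Pj^T Pj simply counts how many patches cover each voxel, that the sum of Pj^T D zj deposits each approximated patch back at its location, and that the minimizer of (1/(2t))||x̃ − x||² + λS Σ ||Pj x − D zj||² is wanted. The helper names, the row-major patch layout, and the boundary handling (no wraparound) are illustrative assumptions.

```python
import numpy as np

def patch_slices(vol_shape, patch_shape, stride):
    """Index slices of all patches that fit inside the volume (no wraparound)."""
    nx, ny, nt = vol_shape
    mx, my, mt = patch_shape
    return [(slice(i, i + mx), slice(j, j + my), slice(k, k + mt))
            for i in range(0, nx - mx + 1, stride)
            for j in range(0, ny - my + 1, stride)
            for k in range(0, nt - mt + 1, stride)]

def xs_update(x_tilde, Dz, patch_shape, stride, t, lam_s):
    """Solve the diagonal system for the sparse-component update.

    x_tilde : (Nx, Ny, Nt) gradient-step output in tensor form
    Dz      : (m, M) matrix whose j-th column is the patch approximation D z_j
    """
    agg = np.zeros(x_tilde.shape, dtype=complex)   # accumulates sum_j P_j^T D z_j
    count = np.zeros(x_tilde.shape)                # diagonal of sum_j P_j^T P_j
    for j, sl in enumerate(patch_slices(x_tilde.shape, patch_shape, stride)):
        agg[sl] += Dz[:, j].reshape(patch_shape)
        count[sl] += 1.0
    # Element-wise division replaces the matrix inverse.
    return (x_tilde + 2.0 * t * lam_s * agg) / (1.0 + 2.0 * t * lam_s * count)
```

A useful sanity check is that when every D zj equals the corresponding patch of x̃, the update returns x̃ unchanged.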

The proximal gradient method for (P4) converges [60] for a constant step-size tk = t < 2/ℓ, where ℓ is the Lipschitz constant of ∇f(xL, xS). For (P4), $\ell=2\|A\|_2^2$. In practice, ℓ can be precomputed using standard techniques such as the power iteration method. In our dMRI experiments in Section IV, we normalize the encoding operator A so that ||A||2 = 1 for fully-sampled measurements (cf. [45], [61]) to ensure that $\|A\|_2^2\le 1$ in undersampled (k-t space) scenarios.
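As an illustration of the power iteration mentioned above, the sketch below estimates the squared spectral norm of A from black-box forward and adjoint operators and converts it into a constant step size. It assumes that the Lipschitz constant of the joint gradient is twice the squared spectral norm of A (which holds for a data-fit term of the form (1/2)||A(xL + xS) − d||²); the function names, the iteration count, and the safety factor are hypothetical choices.

```python
import numpy as np

def spectral_norm_sq(A, AH, n, iters=100, seed=0):
    """Estimate ||A||_2^2 by power iteration on A^H A.

    A, AH : callables applying the operator and its adjoint
    n     : length of the input vector of A
    """
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(n) + 1j * rng.standard_normal(n)
    v /= np.linalg.norm(v)
    lam = 0.0
    for _ in range(iters):
        w = AH(A(v))
        lam = np.linalg.norm(w)      # converges to the largest eigenvalue of A^H A
        if lam == 0.0:
            break
        v = w / lam
    return float(lam)

def constant_step(A, AH, n, safety=0.99):
    """Step size strictly below 2 / ell, assuming ell = 2 * ||A||_2^2."""
    ell = 2.0 * spectral_norm_sq(A, AH, n)
    return safety * 2.0 / ell
```

The number of iterations needed depends on the gap between the two largest eigenvalues of A^H A, so a fixed count of 100 is only a placeholder.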

When the nuclear norm penalty in (P4) is replaced with a rank penalty, i.e., g1(xL) ≜ λL rank(R1(xL)), the proximity function is a modified form of the SVT operation in (12) (or (13)), where the singular values smaller than $\sqrt{2t_k\lambda_L}$ are set to zero and the other singular values are left unaffected (i.e., hard-thresholding the singular values). Alternatively, when the nuclear norm penalty is replaced with $\|\cdot\|_p^p$ (for p < 1) applied to the vector of singular values of R1(xL) [47], the proximity function can still be computed cheaply when p = 1/2 or p = 2/3, for which the soft thresholding of singular values in (13) is replaced with the solution of an appropriate polynomial equation (see [62]). For general p, the xL update could be performed using strategies such as in [47].
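The rank-penalty variant can be sketched as hard thresholding of singular values. The threshold used below, the square root of 2·t·λL, is an assumption: it is what results from minimizing (1/(2t))||Y − Z||_F² + λL·rank(Z) one singular value at a time, so it depends on that particular scaling of the proximity function. The function name `rank_prox` is hypothetical.

```python
import numpy as np

def rank_prox(Y, t, lam_l):
    """Hard-threshold the singular values of Y.

    Assumes the prox objective (1 / (2 t)) * ||Y - Z||_F^2 + lam_l * rank(Z),
    for which keeping a singular value s pays lam_l and dropping it pays
    s^2 / (2 t); values below sqrt(2 * t * lam_l) are therefore zeroed.
    """
    U, s, Vh = np.linalg.svd(Y, full_matrices=False)
    thr = np.sqrt(2.0 * t * lam_l)
    s_kept = np.where(s >= thr, s, 0.0)   # surviving values are not shrunk
    return (U * s_kept) @ Vh
```

In contrast with soft thresholding, the retained singular values are left at their original size, so the dominant components are not biased toward zero.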

The nuclear norm-based low-rank regularizer ||R1(xL)||* is popular because it is the tightest convex relaxation of the (nonconvex) matrix rank penalty. However, this does not guarantee that the nuclear norm (or its alternatives) is the optimal (in any sense) low-rank regularizer in practice. Indeed, the argument of the SVT operator in (12) can be interpreted as an estimate of the underlying (true) low-rank matrix R1(xL) plus a residual (noise) matrix. In [52], the low-rank denoising problem was studied from a random-matrix-theoretic perspective and an algorithm – OptShrink – was derived that asymptotically achieves minimum squared error among all estimators that shrink the singular values of their argument. We leverage this result for dMRI by proposing the following modification of (12):

$$R_1\left(x_L^{k}\right)=\mathrm{OptShrink}_{r_L}\left(R_1\left(\tilde{x}_L^{k-1}\right)\right) \tag{15}$$

Here, OptShrink_{rL}(·) is the data-driven OptShrink estimator from Algorithm 1 of [52] (see the supplementary material for more details and discussion of OptShrink). In this variation, the regularization parameter λL is replaced by a parameter rL ∈ ℕ that directly specifies the rank of $R_1(x_L^{k})$, and the (optimal) shrinkage for each of the leading rL singular values is implicitly estimated based on the distribution of the remaining singular values. Intuitively, we expect this variation of the aforementioned (SVT-based) proximal gradient scheme to yield better estimates of the underlying low-rank component of the reconstruction because, at each iteration k (in (9)), the OptShrink-based update (15) should produce an estimate of the underlying low-rank matrix R1(xL) with smaller squared error than the corresponding SVT-based update (12). Similar OptShrink-based schemes have shown promise in practice [53], [54]. In particular, in [53] it is shown that replacing the SVT-based low-rank updates in the algorithm [45] for (P0) with OptShrink updates can improve dMRI reconstruction quality. In practice, small rL values perform well due to the high spatio-temporal correlation of the background in dMRI.

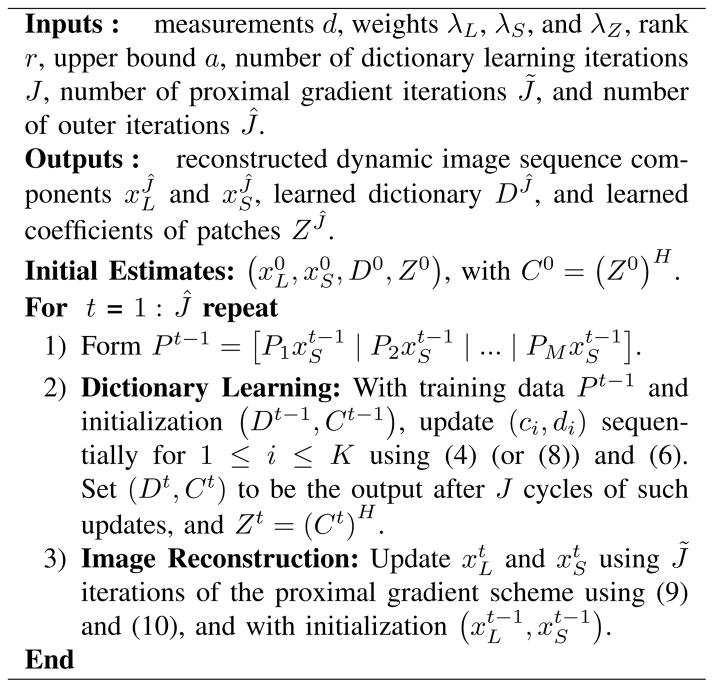

Fig. 1 shows the LASSI reconstruction algorithms for Problems (P1) and (P2), respectively. As discussed, we can obtain variants of these proposed LASSI algorithms by replacing the SVT-based xL update (12) in the image reconstruction step with an OptShrink-based update (15), or with the update arising from the rank penalty or from the Schatten p-norm (p < 1) penalty. The proposed LASSI algorithms start with an initial ($x_L^0$, $x_S^0$, D0, Z0). For example, D0 can be set to an analytical dictionary, Z0 = 0, and $x_L^0$ and $x_S^0$ could be (for example) set based on some iterations of the recent L+S method [45]. In the case of Problem (2), the proposed algorithm is an efficient SOUP-based image reconstruction algorithm. We refer to it as the DINO-KAT image reconstruction algorithm in this case.

Fig. 1.

The LASSI reconstruction algorithms for Problems (P1) and (P2), respectively. Superscript t denotes the iterates in the algorithm. We do not compute the matrices $E_i$ explicitly in the dictionary learning iterations. Rather, we efficiently compute products of $E_i$ or $E_i^H$ with vectors [56]. Parameter a is set very large in practice (e.g., a ∝ ||A†d||2).

B. Convergence and Computational Cost

The proposed LASSI algorithms for (P1) and (P2) alternate between updating (D,Z) and (xL, xS). Since we update the dictionary atoms and sparse coefficients using an exact block coordinate descent approach, the objectives in our formulations only decrease in this step. When the (xL, xS) update is performed using proximal gradients (which is guaranteed to converge to the global minimizer of (P4)), by appropriate choice of the constant-step size [63], the objective functions can be ensured to be monotone (non-increasing) in this step. Thus, the costs in our algorithms are monotone decreasing, and because they are lower-bounded (by 0), they must converge. Whether the iterates in the LASSI algorithms converge to the critical points [64] in (P1) or (P2) [56] is an interesting question that we leave for future work.

In practice, the computational cost per outer iteration of the proposed algorithms is dominated by the cost of the dictionary learning step, which scales (assuming K ∝ m and M ∝ K,m) as O(m²MJ), where J is the number of times the matrix D is updated in the dictionary learning step. The SOUP dictionary learning cost is itself dominated by various matrix-vector products, whereas the costs of the truncated hard-thresholding (4) and low-rank approximation (6) steps are negligible. On the other hand, when dictionary learning is performed using methods like K-SVD [21] (e.g., in [18], [30]), the associated cost (assuming per-patch sparsity ∝ m) may scale worse, as O(m³MJ). Section IV illustrates that our algorithms converge quickly in practice.

IV. Numerical Experiments

A. Framework

The proposed LASSI framework can be used for inverse problems involving dynamic data, such as in dMRI, interventional imaging, video processing, etc. Here, we illustrate the convergence behavior and performance of our methods for dMRI reconstruction from limited k-t space data. Section IV-B focuses on empirical convergence and learning behavior of the methods. Section IV-C compares the image reconstruction quality obtained with LASSI to that obtained with recent techniques. Section IV-D investigates and compares the various LASSI models and methods in detail. We compare using the ℓ0 “norm” (i.e., (P1)) to the ℓ1 norm (i.e., (P2)), structured (with low-rank atoms) dictionary learning to the learning of unstructured (with full-rank atoms) dictionaries, and singular value thresholding-based xL update to OptShrink-based or other alternative xL updates in LASSI. We also investigate the effects of the sparsity level (i.e., number of nonzeros) of the learned Z and the overcompleteness of D in LASSI, and demonstrate the advantages of adapting the patch-based LASSI dictionary compared to using fixed dictionary models in the LASSI algorithms. The LASSI methods are also shown to perform well for various initializations of xL and xS.

We work with several dMRI datasets from prior works [45], [47]: 1) the Cartesian cardiac perfusion data [45], [61], 2) a 2D cross section of the physiologically improved nonuniform cardiac torso (PINCAT) [65] phantom data (see [47], [66]), and 3) the in vivo myocardial perfusion MRI data in [47], [66]. The cardiac perfusion data were acquired with a modified TurboFLASH sequence on a 3T scanner using a 12-element coil array. The fully sampled data with an image matrix size of 128 × 128 (128 phase encode lines) and 40 temporal frames were acquired with FOV = 320 × 320 mm², slice thickness = 8 mm, spatial resolution = 3.2 mm², and temporal resolution of 307 ms [45]. The coil sensitivity maps are provided in [61]. The (single coil) PINCAT data (as in [66]) had an image matrix size of 128 × 128 and 50 temporal frames. The single coil in vivo myocardial perfusion data were acquired on a 3T scanner using a saturation recovery FLASH sequence with Cartesian sampling (TR/TE = 2.5/1 ms, saturation recovery time = 100 ms), and had an image matrix size of 90 × 190 (phase encodes × frequency encodes) and 70 temporal frames [47].

Fully sampled data (PINCAT and in vivo data were normalized to unit peak image intensity, and the cardiac perfusion data [45] had a peak image intensity of 1.27) were retrospectively undersampled in our experiments. We used Cartesian and pseudo-radial undersampling patterns. In the case of Cartesian sampling, we used a different variable-density random Cartesian undersampling pattern for each time frame. The pseudo-radial (sampling radially at uniformly spaced angles for each time frame and with a small random rotation of the radial lines between frames) sampling patterns were obtained by subsampling on a Cartesian grid for each time frame. We simulate several undersampling (acceleration) factors of k-t space in our experiments. We measure the quality of the dMRI reconstructions using the normalized root mean square error (NRMSE) metric defined as ||xrecon – xref||2 / ||xref||2, where xref is a reference reconstruction from fully sampled data, and xrecon is the reconstruction from undersampled data.
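The NRMSE metric defined above translates directly into NumPy. The function name `nrmse` is chosen here for illustration; both inputs may be arrays of any matching shape and are flattened before the ℓ2 norms are taken.

```python
import numpy as np

def nrmse(x_recon, x_ref):
    """Normalized root mean square error: ||x_recon - x_ref||_2 / ||x_ref||_2."""
    x_recon = np.ravel(x_recon)
    x_ref = np.ravel(x_ref)
    return np.linalg.norm(x_recon - x_ref) / np.linalg.norm(x_ref)
```

A perfect reconstruction gives 0, and an all-zero reconstruction gives exactly 1, which is a convenient reference point when comparing methods.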

We compare the quality of reconstructions obtained with the proposed LASSI methods to those obtained with the recent L+S method [45] and the k-t SLR method involving joint L & S modeling [47]. For the L+S and k-t SLR methods, we used the publicly available MATLAB implementations [61], [66]. We chose the parameters for both methods (e.g., λL and λS for L+S in (P0) or λ1, λ2, etc. for k-t SLR [47], [66]) by sweeping over a range of values and choosing the settings that achieved good NRMSE in our experiments. We optimized parameters separately for each dataset to achieve the lowest NRMSE at some intermediate undersampling factors, and observed that these settings also worked well at other undersampling factors. The L+S method was run for 250 iterations and k-t SLR was also run for sufficient iterations to ensure convergence. The operator T (in (P0)) for L+S was set to a temporal Fourier transform, and a total variation sparsifying penalty (together with a nuclear norm penalty for enforcing low-rankness) was used in k-t SLR. The dynamic image sequence in both methods was initialized with a baseline reconstruction (for the L+S method, L was initialized with this baseline and S with zero) that was obtained by first performing zeroth order interpolation at the nonsampled k-t space locations (by filling in with the nearest non-zero entry along time) and then backpropagating the filled k-t space to image space (i.e., pre-multiplying by the A^H corresponding to fully sampled data).

For the LASSI method, we extracted spatiotemporal patches of size 8 × 8 × 5 from xS in (P1) with spatial and temporal patch overlap strides of 2 pixels.⁶ The dictionary atoms were reshaped into 64 × 5 space-time matrices, and we set the rank parameter r = 1, except for the in vivo dataset [47], [66], where we set r = 5. We ran LASSI for 50 outer iterations with 1 and 5 inner iterations in the (D,Z) and (xL, xS) updates, respectively. Since Problem (P1) is nonconvex, the proposed algorithm needs to be initialized appropriately. We set the initial Z = 0, and the initial xL and xS were typically set based on the outputs of either the L+S or k-t SLR methods. When learning a square dictionary, we initialized D with a 320 × 320 DCT, and, in the overcomplete (K > m) case, we concatenated the square DCT initialization with normalized and vectorized patches that were selected from random locations of the initial reconstruction. We empirically show in Section IV-D that the proposed LASSI algorithms typically improve image reconstruction quality compared to that achieved by their initializations. We selected the weights λL, λS, and λZ for the LASSI methods separately for each dataset by sweeping over a range (3D grid) of values and picking the settings that achieved the lowest NRMSE at intermediate undersampling factors (as for L+S and k-t SLR) in our experiments. These tuned parameters also worked well at other undersampling factors (e.g., see Fig. 5(h)), and are included in the supplement for completeness.
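To make the patch geometry concrete, the short Python sketch below computes the patch dimension m = 8 · 8 · 5 = 320, the 64 × 5 shape of a reshaped atom, and the number of overlapping patches for a given sequence size. The helper names and the 128 × 128 × 40 sequence size are hypothetical, and the sketch assumes that patches do not wrap around the image boundaries, which the text does not specify.

```python
def patch_starts(length, patch, stride):
    """Start indices of patches along one axis, without wrap-around (an assumption)."""
    if patch > length:
        return []
    return list(range(0, length - patch + 1, stride))

def atom_shape(patch_xy, patch_t):
    """Shape of a reshaped atom: (pixels per spatial patch, frames per patch)."""
    return (patch_xy[0] * patch_xy[1], patch_t)

nx, ny, nt = 128, 128, 40          # hypothetical sequence size, not from the paper
px, py, pt, stride = 8, 8, 5, 2    # patch size and stride stated in the text

m = px * py * pt                   # length of a vectorized patch
num_patches = (len(patch_starts(nx, px, stride))
               * len(patch_starts(ny, py, stride))
               * len(patch_starts(nt, pt, stride)))

print(m, atom_shape((px, py), pt), num_patches)
```

With a square dictionary, the number of atoms K equals m, which matches the 320 × 320 DCT initialization described above.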

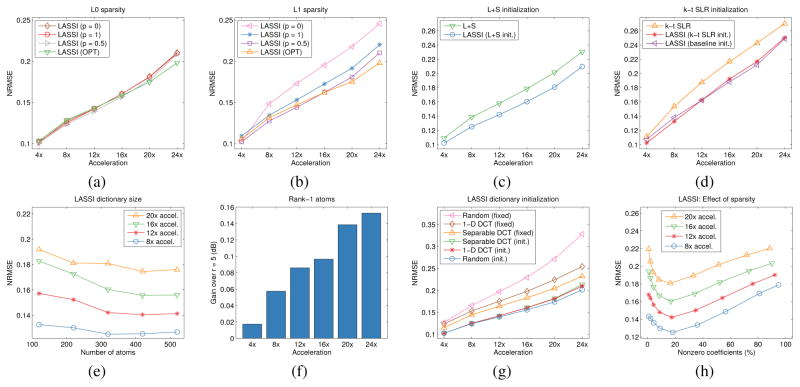

Fig. 5.

Study of LASSI models, methods, and initializations at various undersampling (acceleration) factors for the cardiac perfusion data in [45], [61] with Cartesian sampling: (a) NRMSE for LASSI with ℓ0 “norm” for sparsity and with xL updates based on SVT (p = 1), OptShrink (OPT), or based on the Schatten p-norm (p = 0.5) or rank penalty (p = 0); (b) NRMSE for LASSI with ℓ1 sparsity and with xL updates based on SVT (p = 1), OptShrink (OPT), or based on the Schatten p-norm (p = 0.5) or rank penalty (p = 0); (c) NRMSE for LASSI when initialized with the output of the L+S method [45] (used to initialize xS, with xL initialized to zero) together with the NRMSE for the L+S method; (d) NRMSE for LASSI when initialized with the output of the k-t SLR method [47] or with the baseline reconstruction (performing zeroth-order interpolation at the nonsampled k-t space locations and then backpropagating to image space) mentioned in Section IV-A (these are used to initialize xS, with xL initialized to zero), together with the NRMSE values for k-t SLR; (e) NRMSE versus dictionary size at different acceleration factors; (f) NRMSE improvement (in dB) achieved with r = 1 compared to the r = 5 case in LASSI; (g) NRMSE for LASSI with different dictionary initializations (a random dictionary, a 320 × 320 1D DCT, and a separable 3D DCT of the same size) together with the NRMSEs achieved in LASSI when the dictionary is fixed to its initial value; and (h) NRMSE versus the fraction of nonzero coefficients (expressed as a percentage) in the learned Z at different acceleration factors.

We also evaluate the proposed variant of LASSI involving only spatiotemporal dictionary learning (i.e., dictionary-blind compressed sensing). We refer to this method as DINO-KAT dMRI, with r = 1. We use an ℓ0 sparsity penalty for DINO-KAT dMRI (i.e., we solve Problem (2)) in our experiments, and the other parameters are set or optimized (cf. the supplement) in the same way as described above for LASSI.

The LASSI and DINO-KAT dMRI implementations were coded in MATLAB R2016a. Our current MATLAB implementations are not optimized for efficiency. Hence, we compare against recent methods based on reconstruction quality (NRMSE) rather than runtimes, since the latter are highly implementation dependent. A link to software to reproduce our results will be provided at http://web.eecs.umich.edu/~fessler/.

B. LASSI Convergence and Learning Behavior

Here, we consider the fully sampled cardiac perfusion data in [45], [61] and perform eightfold Cartesian undersampling of k-t space. We study the behavior of the proposed LASSI algorithms for reconstructing the dMRI data from (multicoil) undersampled measurements. We consider four different LASSI algorithms in our study here: the algorithms for (P1) (with ℓ0 “norm”) and (P2) (with ℓ1 norm) with SVT-based xL update; and the variants of these two algorithms where the SVT update step is replaced with an OptShrink (OPT)-type update. The other variants of the SVT update, including hard thresholding of singular values and updating based on the Schatten p-norm, are studied later in Section IV-D. We learned 320 × 320 dictionaries (with atoms reshaped by the operator R2(·) into 64 × 5 space-time matrices) for the patches of xS with r = 1, and xL and xS were initialized using the corresponding components of the L+S method with λL = 1.2 and λS = 0.01 in (P0) [45]. Here, we jointly tuned λL, λS, and λZ for each LASSI variation to achieve the best NRMSE.

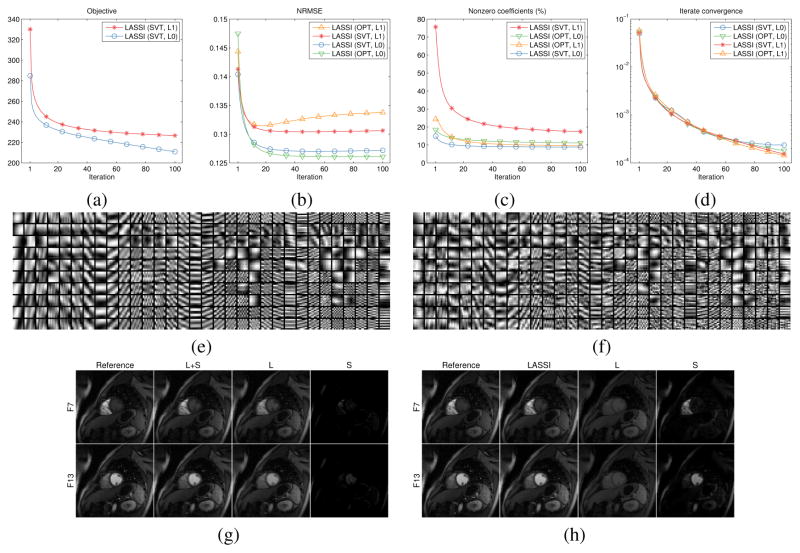

Fig. 2 shows the behavior of the proposed LASSI reconstruction methods. The objective function values (Fig. 2(a)) in (P1) and (P2) decreased monotonically and quickly for the algorithms with SVT-based xL update. The OptShrink-based xL update does not correspond to minimizing a formal cost function, so the OPT-based algorithms are omitted in Fig. 2(a). All four LASSI methods improved the NRMSE over iterations compared to the initialization. The NRMSE converged (Fig. 2(b)) in all four cases, with the ℓ0 “norm”-based methods outperforming the ℓ1 penalty methods. Moreover, when employing the ℓ0 sparsity penalty, the OPT-based method (rL = 1) outperformed the SVT-based one for this dataset. The sparsity fraction (||Z||0 /mM) for the learned coefficient matrix (Fig. 2(c)) converged to small values (about 10–20%) in all cases, indicating that highly sparse representations are obtained in the LASSI models. Lastly, the difference between successive dMRI reconstructions (Fig. 2(d)) quickly decreased to small values, suggesting iterate convergence.

Fig. 2.

Behavior of the LASSI algorithms with Cartesian sampling and 8x undersampling. The algorithms are labeled according to the method used for xL update, i.e., SVT or OptShrink (OPT), and according to the type of sparsity penalty employed for the patch coefficients (ℓ0 or ℓ1 corresponding to (P1) or (P2)). (a) Objectives (shown only for the algorithms for (P1) and (P2) with SVT-based updates, since OPT-based updates do not correspond to minimizing a formal cost function); (b) NRMSE; (c) sparsity fraction of Z (i.e., ||Z||0 /mM) expressed as a percentage; (d) normalized changes between successive dMRI reconstructions; (e) real and (f) imaginary parts of the atoms of the learned dictionaries in LASSI (using ℓ0 sparsity penalty and OptShrink-based xL update) shown as patches – only the 8 × 8 patches corresponding to the first time-point (column) of the rank-1 reshaped (64 × 5) atoms are shown; and frames 7 and 13 of the (g) conventional L+S reconstruction [45] and (h) the proposed LASSI (with ℓ0 penalty and OptShrink-based xL update) reconstruction shown along with the corresponding reference frames. The low-rank (L) and (transform or dictionary) sparse (S) components of each reconstructed frame are also individually shown. Only image magnitudes are displayed in (g) and (h).

Figs. 2(g) and (h) show the reconstructions⁷ and the xL and xS components of two representative frames produced by the L+S [45] (with parameters optimized to achieve best NRMSE) and LASSI (OPT update and ℓ0 sparsity) methods, respectively. The LASSI reconstructions are sharper and a better approximation of the reference frames (fully sampled reconstructions) shown. In particular, the xL component of the LASSI reconstruction is clearly low-rank, and the xS component captures the changes in contrast and other dynamic features in the data. On the other hand, the xL component of the conventional L+S reconstruction varies more over time (i.e., it has higher rank), and the xS component contains relatively little information. The richer (xL, xS) decomposition produced by LASSI suggests that both the low-rank and adaptive dictionary-sparse components of the model are well-suited for dMRI.

Figs. 2(e) and (f) show the real and imaginary parts of the atoms of the learned D in LASSI with OptShrink-based xL updating and ℓ0 sparsity. Only the first column (time point) of each (rank-1) reshaped 64 × 5 atom is shown, as an 8 × 8 patch. The learned atoms contain rich geometric and frequency-like structures that were jointly learned with the dynamic signal components from limited k-t space measurements.

C. Dynamic MRI Results and Comparisons

Here, we consider the fully sampled cardiac perfusion data [45], [61], PINCAT data [47], [66], and in vivo myocardial perfusion data [47], [66], and simulate k-t space undersampling at various acceleration factors. Cartesian sampling was used for the first dataset, and pseudo-radial sampling was employed for the other two. The performance of LASSI and DINO-KAT dMRI is compared to that of L+S [45] and k-t SLR [47]. The LASSI and DINO-KAT dMRI algorithms were simulated with an ℓ0 sparsity penalty and a 320×320 dictionary. OptShrink-based xL updates were employed in LASSI for the cardiac perfusion data, and SVT-based updates were used in the other cases. For the cardiac perfusion data, the initial xL and xS in LASSI were from the L+S framework [45] (and the initial x in DINO-KAT dMRI was an L+S dMRI reconstruction). For the PINCAT and in vivo myocardial perfusion data, the initial xS in LASSI (or x in DINO-KAT dMRI) was the (better) k-t SLR reconstruction and the initial xL was zero. All other settings are as discussed in Section IV-A.

Tables I, II and III list the reconstruction NRMSE values for LASSI, DINO-KAT dMRI, L+S [45] and k-t SLR [47] for the cardiac perfusion, PINCAT, and in vivo datasets, respectively. The LASSI method provides the best NRMSE values, and the proposed DINO-KAT dMRI method also outperforms the prior L+S and k-t SLR methods. The NRMSE gains achieved by LASSI over the other methods are indicated in the tables for each dataset and undersampling factor. The LASSI framework provides an average improvement of 1.9 dB, 1.5 dB, and 0.5 dB respectively, over the L+S, k-t SLR, and (proposed) DINO-KAT dMRI methods. This suggests the suitability of the richer LASSI model for dynamic image sequences compared to the jointly low-rank and sparse (k-t SLR), low-rank plus nonadaptive sparse (L+S), and purely adaptive dictionary-sparse (DINO-KAT dMRI) signal models.
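The gain rows in the tables are consistent with the usual amplitude-ratio definition, i.e., 20 log10 of the ratio of the competing method's NRMSE to the LASSI NRMSE. The text does not state this formula explicitly, so the sketch below should be read as an assumption that happens to reproduce the tabulated values; the function name `nrmse_gain_db` is hypothetical.

```python
import math

def nrmse_gain_db(nrmse_other, nrmse_lassi):
    """NRMSE gain of LASSI over another method in dB (assumed 20*log10 ratio)."""
    return 20.0 * math.log10(nrmse_other / nrmse_lassi)

# Cardiac perfusion data, k-t SLR versus LASSI, using the rounded table entries.
print(round(nrmse_gain_db(11.1, 10.0), 1))  # 4x undersampling
print(round(nrmse_gain_db(15.4, 12.6), 1))  # 8x undersampling
```

Because the tabulated NRMSE values are themselves rounded to one decimal place, gains recomputed from them can differ from the tabulated gains by about 0.1 dB.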

TABLE I.

NRMSE values expressed as percentages for the L+S [45], k-t SLR [47], and the proposed DINO-KAT dMRI and LASSI methods at several undersampling (acceleration) factors for the cardiac perfusion data [45], [61] with Cartesian sampling. The NRMSE gain (in decibels (dB)) achieved by LASSI over the other methods is also shown. The best NRMSE for each undersampling factor is in bold.

| Undersampling | 4x | 8x | 12x | 16x | 20x | 24x |
|---|---|---|---|---|---|---|
| NRMSE (k-t SLR) % | 11.1 | 15.4 | 18.8 | 21.7 | 24.3 | 27.0 |
| NRMSE (L+S) % | 10.9 | 13.9 | 15.8 | 17.8 | 20.1 | 23.0 |
| NRMSE (DINO-KAT) % | 10.4 | 12.6 | 14.5 | 16.7 | 18.8 | 22.1 |
| NRMSE (LASSI) % | 10.0 | 12.6 | 14.3 | 16.1 | 17.6 | 20.2 |
| Gain over k-t SLR (dB) | 0.9 | 1.7 | 2.4 | 2.6 | 2.8 | 2.5 |
| Gain over L+S (dB) | 0.7 | 0.8 | 0.9 | 0.9 | 1.2 | 1.2 |
| Gain over DINO-KAT (dB) | 0.3 | 0.0 | 0.1 | 0.3 | 0.6 | 0.8 |

TABLE II.

NRMSE values expressed as percentages for the L+S [45], k-t SLR [47], and the proposed DINO-KAT dMRI and LASSI methods at several undersampling (acceleration) factors for the PINCAT data [47], [66] with pseudo-radial sampling. The best NRMSE values for each undersampling factor are marked in bold.

| Undersampling | 5x | 6x | 7x | 9x | 14x | 27x |
|---|---|---|---|---|---|---|
| NRMSE (k-t SLR) % | 9.7 | 10.7 | 12.2 | 14.5 | 18.0 | 23.7 |
| NRMSE (L+S) % | 11.7 | 12.8 | 14.2 | 16.3 | 19.6 | 25.4 |
| NRMSE (DINO-KAT) % | 8.6 | 9.5 | 10.7 | 12.6 | 15.9 | 21.8 |
| NRMSE (LASSI) % | 8.4 | 9.1 | 10.1 | 11.4 | 13.6 | 18.3 |
| Gain over k-t SLR (dB) | 1.2 | 1.4 | 1.7 | 2.1 | 2.4 | 2.2 |
| Gain over L+S (dB) | 2.8 | 2.9 | 3.0 | 3.1 | 3.2 | 2.8 |
| Gain over DINO-KAT (dB) | 0.2 | 0.3 | 0.6 | 0.9 | 1.4 | 1.5 |

TABLE III.

NRMSE values expressed as percentages for the L+S [45], k-t SLR [47], and the proposed DINO-KAT dMRI and LASSI methods at several undersampling (acceleration) factors for the myocardial perfusion MRI data in [47], [66], using pseudo-radial sampling. The best NRMSE values for each undersampling factor are marked in bold.

| Undersampling | 4x | 5x | 6x | 8x | 12x | 23x |
|---|---|---|---|---|---|---|
| NRMSE (k-t SLR) % | 10.7 | 11.6 | 12.7 | 14.0 | 16.7 | 22.1 |
| NRMSE (L+S) % | 12.5 | 13.4 | 14.6 | 16.1 | 18.8 | 24.2 |
| NRMSE (DINO-KAT) % | 10.2 | 11.0 | 12.1 | 13.5 | 16.4 | 21.9 |
| NRMSE (LASSI) % | 9.9 | 10.7 | 11.8 | 13.2 | 16.2 | 21.9 |
| Gain over k-t SLR (dB) | 0.7 | 0.7 | 0.6 | 0.5 | 0.3 | 0.1 |
| Gain over L+S (dB) | 2.1 | 2.0 | 1.8 | 1.7 | 1.3 | 0.9 |
| Gain over DINO-KAT (dB) | 0.3 | 0.3 | 0.2 | 0.2 | 0.1 | 0.0 |

It is often of interest to compute the reconstruction NRMSE over a region of interest (ROI) containing the heart. Additional tables included in the supplement show the reconstruction NRMSE values computed over such ROIs for LASSI, DINO-KAT dMRI, L+S, and k-t SLR for the cardiac perfusion, PINCAT, and in vivo datasets. The proposed LASSI and DINO-KAT dMRI methods provide much lower NRMSE in the heart ROIs compared to the other methods.

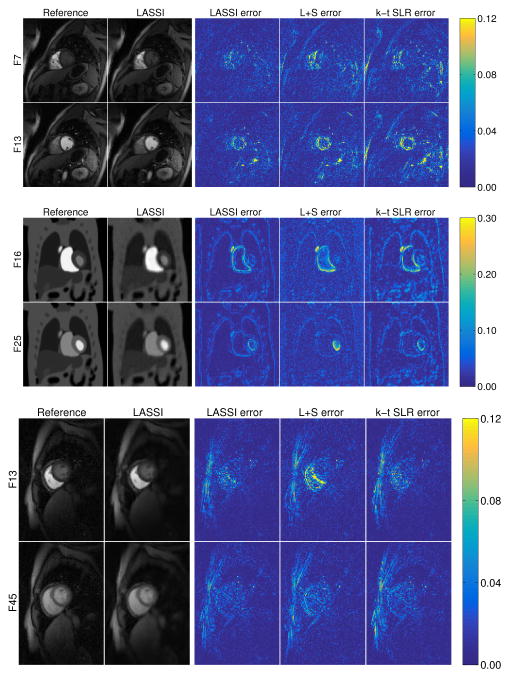

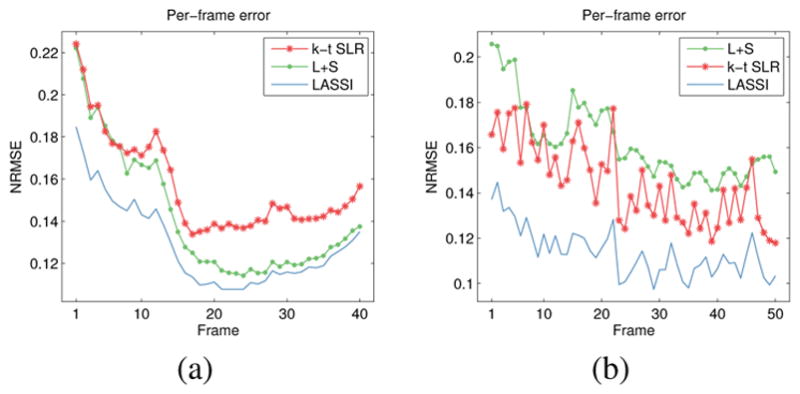

Fig. 3 shows the NRMSE values computed between each reconstructed and reference frame for the LASSI, L+S, and k-t SLR outputs for two datasets. The proposed LASSI scheme clearly outperforms the previous L+S and k-t SLR methods across frames (time). Fig. 4 shows the LASSI reconstructions of some representative frames (the supplement shows more such reconstructions) for each dataset in Tables I-III. The reconstructed frames are visually similar to the reference frames (fully sampled reconstructions) shown. Fig. 4 also shows the reconstruction error maps (i.e., the magnitude of the difference between the magnitudes of the reconstructed and reference frames) for LASSI, L+S, and k-t SLR for the representative frames of each dataset. The error maps for LASSI show fewer artifacts and smaller distortions than the other methods. Results included in the supplement show that LASSI recovers temporal (x–t) profiles in the dynamic data with greater fidelity than other methods.

Fig. 3.

NRMSE values computed between each reconstructed and reference frame for LASSI, L+S, and k-t SLR for (a) the cardiac perfusion data [45], [61] at 8x undersampling, and (b) the PINCAT data at 9x undersampling.

Fig. 4.

LASSI reconstructions and the error maps (clipped for viewing) for LASSI, L+S, and k-t SLR for frames of the cardiac perfusion data [45], [61] (first row), PINCAT data [47], [66] (second row), and in vivo myocardial perfusion data [47], [66] (third row), shown along with the reference reconstruction frames. Undersampling factors (top to bottom): 8x, 9x, and 8x. The frame numbers and method names are indicated on the images.

D. A Study of Various LASSI Models and Methods

Here, we investigate the various LASSI models and methods in detail. We work with the cardiac perfusion data [45] and simulate the reconstruction performance of LASSI for Cartesian sampling at various undersampling factors. Unless otherwise stated, we simulate LASSI here with the ℓ0 sparsity penalty, the SVT-based xL update, r = 1, an initial 320×320 (1D) DCT dictionary, and xS initialized with the dMRI reconstruction from the L+S method [45] and xL initialized to zero. In the following, we first compare SVT-based updating of xL to alternatives in the algorithms and the use of ℓ0 versus ℓ1 sparsity penalties. The weights λL, λS, and λZ were tuned for each LASSI variation. Second, we study the behavior of LASSI for different initializations of the underlying signal components or dictionary. Third, we study the effect of the number of atoms of D on LASSI performance. Fourth, we study the effect of the sparsity level of the learned Z on the reconstruction quality in LASSI. Lastly, we study the effect of the atom rank parameter r in LASSI.

1) SVT vs. Alternatives and ℓ0 vs. ℓ1 patch sparsity

Figs. 5(a) and (b) show the behavior of the LASSI algorithms using ℓ0 and ℓ1 sparsity penalties, respectively. In each case, the results obtained with xL updates based on SVT, OptShrink (OPT), the Schatten p-norm (p = 0.5), and the rank penalty are shown. The OptShrink-based singular value shrinkage (with rL = 1) and Schatten p-norm-based shrinkage typically outperform the conventional SVT (based on the nuclear norm penalty) as well as the hard thresholding of singular values (for the rank penalty) for the cardiac perfusion data. The OptShrink and Schatten p-norm-based xL updates also perform quite similarly at lower undersampling factors, but OptShrink outperforms the latter approach at higher undersampling factors. Moreover, the ℓ0 “norm”-based methods outperformed the corresponding ℓ1 norm methods in many cases (with SVT or alternative approaches). These results demonstrate the benefits of appropriate nonconvex regularizers in practice.
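The difference between the SVT update and the rank-penalty update lies in how the singular values of the matrix being updated are shrunk before the matrix is reassembled from its singular vectors. The following Python sketch shows only these two scalar shrinkage rules applied to a list of singular values. The function names and the threshold `tau` are illustrative assumptions: the exact relation between the threshold and λL depends on the problem scaling and is not reproduced here, and the SVD itself, the Schatten p-norm shrinkage, and OptShrink are omitted.

```python
def soft_threshold(singular_values, tau):
    """SVT rule (nuclear norm penalty): shrink every singular value toward zero by tau."""
    return [max(s - tau, 0.0) for s in singular_values]

def hard_threshold(singular_values, tau):
    """Rank-penalty rule: keep singular values above tau unchanged and zero the rest."""
    return [s if s > tau else 0.0 for s in singular_values]

# Hypothetical singular values of a matrix being updated.
sigma = [10.0, 3.0, 0.5]
print(soft_threshold(sigma, 1.0))
print(hard_threshold(sigma, 1.0))
```

Soft thresholding biases the retained singular values downward, whereas hard thresholding leaves them untouched, which is one reason the nonconvex alternatives can behave differently from SVT.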

2) Effect of Initializations

Here, we explore the behavior of LASSI for different initializations of the dictionary and the dynamic signal components. First, we consider the LASSI algorithm initialized by the L+S and k-t SLR methods as well as with the baseline reconstruction (obtained by performing zeroth-order interpolation at the nonsampled k-t space locations and then backpropagating to image space) mentioned in Section IV-A (all other parameters fixed). The reconstructions from the prior methods are used to initialize xS in LASSI, with xL initialized to zero.⁸ Figs. 5(c) and (d) show that LASSI significantly improves the dMRI reconstruction quality compared to the initializations at all undersampling factors tested. The baseline reconstructions had high NRMSE values (not shown in Fig. 5) of about 0.5. Importantly, the reconstruction NRMSE for LASSI with the simple baseline initialization (Fig. 5(d)) is comparable to the NRMSE obtained with the more sophisticated k-t SLR initialization. In general, better initializations (for xL, xS) in LASSI may lead to a better final NRMSE in practice.

Next, we consider initializing the LASSI method with the following types of dictionaries (all other parameters fixed): a random i.i.d. Gaussian matrix with normalized columns, the 320 × 320 1D DCT, and the separable 3D DCT of size 320 × 320. Fig. 5(g) shows that LASSI performs well for each choice of initialization. We also simulated the LASSI algorithm by keeping the dictionary D fixed (but still updating Z) to each of the aforementioned initializations. Importantly, the NRMSE values achieved by the adaptive-dictionary LASSI variations are substantially better than the values achieved by the fixed-dictionary schemes.

3) Effect of Overcompleteness of D

Fig. 5(e) shows the performance (NRMSE) of LASSI for various choices of the number of atoms (K) in D at several acceleration factors. The weights in (P1) were tuned for each K. As K is increased, the NRMSE initially shows significant improvements (decrease) of more than 1 dB. This is because LASSI learns richer models that provide sparser representations of patches and, hence, better reconstructions. However, for very large K values, the NRMSE saturates or begins to degrade, since it is harder to learn very rich models using limited imaging measurements (without overfitting artifacts).

4) Effect of the Sparsity Level in LASSI

While Section IV-D1 compared the various ways of updating the low-rank signal component in LASSI, here we study the effect of the sparsity level of the learned Z on LASSI performance. In particular, we simulate LASSI at various values of the parameter λZ that controls sparsity (all other parameters fixed). Fig. 5(h) shows the NRMSE of LASSI at various sparsity levels of the learned Z and at several acceleration factors. The weight λZ decreases from left to right in the plot, and the same set of λZ values was selected (for the simulation) at the various acceleration factors. Clearly, the best NRMSE values occur around 10–20% sparsity (when 32–64 dictionary atoms are used on average to represent the reshaped 64 × 5 space-time patches of xS), and the NRMSE degrades when the number of nonzeros in Z is either too high (non-sparse) or too low (when the dictionary model reduces to a low-rank approximation of space-time patches in xS). This illustrates the effectiveness of the rich sparsity-driven modeling in LASSI.⁹
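The correspondence between the 10–20% sparsity range and the 32–64 atoms per patch follows from multiplying the sparsity fraction by the number of atoms (320 here). The minimal Python sketch below spells out this arithmetic and also computes the sparsity fraction of a small coefficient matrix. The function names and the list-of-columns layout for Z are illustrative assumptions.

```python
def sparsity_fraction(Z):
    """Fraction of nonzero entries in a coefficient matrix given as a list of columns."""
    total = sum(len(col) for col in Z)
    nonzeros = sum(1 for col in Z for z in col if z != 0)
    return nonzeros / total

def avg_atoms_per_patch(fraction, num_atoms):
    """Average number of atoms used per patch for a given sparsity fraction."""
    return fraction * num_atoms

# 10% and 20% sparsity with the 320-atom dictionary used in the experiments.
print(avg_atoms_per_patch(0.10, 320), avg_atoms_per_patch(0.20, 320))

# Toy coefficient matrix with two columns (hypothetical values).
Z_toy = [[1, 0, 0, 0], [0, 0, 2j, 0]]
print(sparsity_fraction(Z_toy))
```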

5) Effect of Rank of Reshaped Atoms

Here, we simulate LASSI with (reshaped) atom ranks r = 1 (low-rank) and r = 5 (full-rank). Fig. 5(f) shows that LASSI with r = 1 provides somewhat improved NRMSE values over the r = 5 case at several undersampling factors, with larger improvements at higher accelerations. This result suggests that structured (fewer degrees of freedom) dictionary adaptation may be useful in scenarios involving very limited measurements. In practice, the effectiveness of the low-rank model for reshaped dictionary atoms also depends on the properties of the underlying data.
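The phrase "fewer degrees of freedom" can be quantified with the standard parameter count for a rank-r matrix of size a × b, namely r(a + b − r). The sketch below applies this count to the 64 × 5 reshaped atoms. It is an illustration only: the function name is hypothetical, the count ignores the unit-norm constraint on atoms, and for complex-valued atoms the same numbers count complex rather than real parameters.

```python
def low_rank_dof(rows, cols, rank):
    """Number of free parameters in a rows x cols matrix of the given rank: r(a + b - r)."""
    if not 0 <= rank <= min(rows, cols):
        raise ValueError("rank must lie between 0 and min(rows, cols)")
    return rank * (rows + cols - rank)

# Reshaped 64 x 5 atoms: rank-1 versus full-rank (r = 5).
print(low_rank_dof(64, 5, 1), low_rank_dof(64, 5, 5))
```

Under this count, a rank-1 atom has 68 free parameters compared to 320 for a full-rank atom, which is consistent with the observation that the r = 1 constraint helps most at high accelerations, where fewer measurements are available.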

V. Conclusions

In this work, we investigated a novel framework for reconstructing spatiotemporal data from limited measurements. The proposed LASSI framework jointly learns a low-rank and dictionary-sparse decomposition of the underlying dynamic image sequence together with a spatiotemporal dictionary. The proposed algorithms involve simple updates. Our experimental results showed the superior performance of LASSI methods for dynamic MR image reconstruction from limited k-t space data compared to recent works such as L+S and k-t SLR. The LASSI framework also outperformed the proposed efficient dictionary-blind compressed sensing framework (a special case of LASSI) called DINO-KAT dMRI. We also studied and compared various LASSI methods and formulations such as with ℓ0 or ℓ1 sparsity penalties, or with low-rank or full-rank reshaped dictionary atoms, or involving singular value thresholding-based optimization versus some alternatives including OptShrink-based optimization. The usefulness of LASSI-based schemes in other inverse problems and image processing applications merits further study. The LASSI schemes involve parameters (like in most regularization-based methods) that need to be set (or tuned) in practice. We leave the study of automating the parameter selection process to future work. The investigation of dynamic image priors that naturally lead to OptShrink-type low-rank updates in the LASSI algorithms is also of interest, but is beyond the scope of this work, and will be presented elsewhere.

Supplementary Material

Acknowledgments

This work was supported in part by the following grants: ONR grant N00014-15-1-2141, DARPA Young Faculty Award D14AP00086, ARO MURI grants W911NF-11-1-0391 and 2015-05174-05, NIH grants R01 EB023618 and P01 CA 059827, and a UM-SJTU seed grant.

Footnotes

1. We focus on 2D + time for simplicity but the concepts generalize readily to 3D + time.

2. The LASSI method differs from the scheme in [51] that is not (overlapping) patch-based and involves only a 2D (spatial) dictionary. The model in [51] is that R1(xS) = DZ with sparse Z and the atoms of D have size NxNy (typically very large). Since often Nt < NxNy, one can easily construct trivial (degenerate) sparsifying dictionaries (e.g., D = R1(xS)) in this case. On the other hand, in our framework, the dictionaries are for small spatiotemporal patches, and there are many such overlapping patches for a dynamic image sequence to enable the learning of rich models that capture local spatiotemporal properties.

3. Such a non-coercive function remains finite even in cases when ||Z|| → ∞. For example, consider a dictionary D that has a column di that repeats. Then, in this case, the patch coefficient vector zj in (P1) could have entries α and −α respectively, corresponding to the two repeated atoms in D, and the objective would be invariant to arbitrarily large scaling of |α| (i.e., non-coercive).

4. Supplementary material is available in the supplementary files/multimedia tab.

5. In [56], we have shown that efficient SOUP learning-based image reconstruction methods outperform methods based on K-SVD in practice.

6. While we used a stride of 2 pixels, a spatial and temporal patch overlap stride of 1 pixel would further enhance the reconstruction performance of LASSI in our experiments, but at the cost of substantially more computation.

7. Gamma correction was used to better display the images in this work.

8. We have also observed that LASSI improves the reconstruction quality over other alternative initializations such as initializing xL and xS using corresponding outputs of the L+S framework.

9. Fig. 5(h) shows that the same λZ value is optimal at various accelerations. An intuitive explanation for this is that as the undersampling factor increases, the weighting of the (first) data-fidelity term in (P1) or (P2) decreases (fewer k-t space samples, or rows of the sensing matrix, are selected). Thus, even with fixed λZ, the relative weighting of the sparsity penalty would increase, creating a stronger sparsity regularization at higher undersampling factors.

References

- 1.Donoho D. Compressed sensing. IEEE Trans Information Theory. 2006;52(4):1289–1306. [Google Scholar]

- 2.Candès E, Romberg J, Tao T. Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans Information Theory. 2006;52(2):489–509. [Google Scholar]

- 3.Feng P, Bresler Y. Spectrum-blind minimum-rate sampling and reconstruction of multiband signals. ICASSP. 1996;3:1689– 1692. [Google Scholar]

- 4.Bresler Y, Feng P. Spectrum-blind minimum-rate sampling and reconstruction of 2-D multiband signals. Proc 3rd IEEE Int Conf on Image Processing, ICIP’96. 1996:701–704. [Google Scholar]

- 5.Lustig M, Donoho D, Pauly J. Sparse MRI: The application of compressed sensing for rapid MR imaging. Magnetic Resonance in Medicine. 2007;58(6):1182–1195. doi: 10.1002/mrm.21391. [DOI] [PubMed] [Google Scholar]

- 6.Lustig M, Santos JM, Donoho DL, Pauly JM. k-t SPARSE: High frame rate dynamic MRI exploiting spatio-temporal sparsity. Proc ISMRM. 2006:2420. [Google Scholar]

- 7.Trzasko J, Manduca A. Highly undersampled magnetic resonance image reconstruction via homotopic l0-minimization. IEEE Trans Med Imaging. 2009;28(1):106–121. doi: 10.1109/TMI.2008.927346. [DOI] [PubMed] [Google Scholar]

- 8.Kim Y, Nadar MS, Bilgin A. Wavelet-based compressed sensing using gaussian scale mixtures. Proc ISMRM. 2010:4856. doi: 10.1109/TIP.2012.2188807. [DOI] [PubMed] [Google Scholar]

- 9.Qiu C, Lu W, Vaswani N. Real-time dynamic MR image reconstruction using kalman filtered compressed sensing. Proc IEEE International Conference on Acoustics, Speech and Signal Processing. 2009:393–396. [Google Scholar]

- 10.Gamper U, Boesiger P, Kozerke S. Compressed sensing in dynamic MRI. Magnetic Resonance in Medicine. 2008;59(2):365–373. doi: 10.1002/mrm.21477. [DOI] [PubMed] [Google Scholar]

- 11.Jung H, Sung K, Nayak KS, Kim EY, Ye JC. k-t FOCUSS: A general compressed sensing framework for high resolution dynamic MRI. Magnetic Resonance in Medicine. 2009;61(1):103–116. doi: 10.1002/mrm.21757. [DOI] [PubMed] [Google Scholar]

- 12.Liang D, Liu B, Wang J, Ying L. Accelerating SENSE using compressed sensing. Magnetic Resonance in Medicine. 2009;62(6):1574–1584. doi: 10.1002/mrm.22161. [DOI] [PubMed] [Google Scholar]

- 13.Otazo R, Kim D, Axel L, Sodickson DK. Combination of compressed sensing and parallel imaging for highly accelerated first-pass cardiac perfusion MRI. Magnetic Resonance in Medicine. 2010;64(3):767–776. doi: 10.1002/mrm.22463. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Wu B, Watts R, Millane R, Bones P. An improved approach in applying compressed sensing in parallel MR imaging. Proc ISMRM. 2009:4595. [Google Scholar]

- 15.Liu B, Sebert FM, Zou Y, Ying L. SparseSENSE: Randomly-sampled parallel imaging using compressed sensing. Proc ISMRM. 2008:3154. [Google Scholar]

- 16.Adluru G, DiBella EVR. Reordering for improved constrained reconstruction from undersampled k-space data. Journal of Biomedical Imaging. 2008;2008:1–12. doi: 10.1155/2008/341684. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Liang ZP. Spatiotemporal imaging with partially separable functions. IEEE International Symposium on Biomedical Imaging: From Nano to Macro. 2007:988–991. [Google Scholar]

- 18.Ravishankar S, Bresler Y. MR image reconstruction from highly undersampled k-space data by dictionary learning. IEEE Trans Med Imag. 2011;30(5):1028–1041. doi: 10.1109/TMI.2010.2090538. [DOI] [PubMed] [Google Scholar]

- 19.Olshausen BA, Field DJ. Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature. 1996;381(6583):607–609. doi: 10.1038/381607a0. [DOI] [PubMed] [Google Scholar]

- 20.Engan K, Aase S, Hakon-Husoy J. Method of optimal directions for frame design. Proc IEEE International Conference on Acoustics, Speech, and Signal Processing. 1999:2443–2446. [Google Scholar]

- 21.Aharon M, Elad M, Bruckstein A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Transactions on signal processing. 2006;54(11):4311–4322. [Google Scholar]

- 22.Mairal J, Bach F, Ponce J, Sapiro G. Online learning for matrix factorization and sparse coding. J Mach Learn Res. 2010;11:19– 60. [Google Scholar]

- 23.Elad M, Aharon M. Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans Image Process. 2006;15(12):3736–3745. doi: 10.1109/tip.2006.881969. [DOI] [PubMed] [Google Scholar]

- 24.Mairal J, Elad M, Sapiro G. Sparse representation for color image restoration. IEEE Trans on Image Processing. 2008;17(1):53–69. doi: 10.1109/tip.2007.911828. [DOI] [PubMed] [Google Scholar]

- 25.Lu X, Yuan Y, Yan P. Alternatively constrained dictionary learning for image superresolution. IEEE Transactions on Cybernetics. 2014;44(3):366–377. doi: 10.1109/TCYB.2013.2256347. [DOI] [PubMed] [Google Scholar]

- 26.Gleichman S, Eldar YC. Blind compressed sensing. IEEE Transactions on Information Theory. 2011;57(10):6958–6975. [Google Scholar]

- 27.Ravishankar S, Bresler Y. Multiscale dictionary learning for MRI. Proc ISMRM. 2011:2830. [Google Scholar]

- 28.Lingala SG, Jacob M. Blind compressive sensing dynamic MRI. IEEE Transactions on Medical Imaging. 2013;32(6):1132–1145. doi: 10.1109/TMI.2013.2255133. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Lingala SG, Jacob M. Blind compressed sensing with sparse dictionaries for accelerated dynamic MRI. 2013 IEEE 10th International Symposium on Biomedical Imaging. 2013:5–8. doi: 10.1109/ISBI.2013.6556398. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Wang Y, Zhou Y, Ying L. Undersampled dynamic magnetic resonance imaging using patch-based spatiotemporal dictionaries. 2013 IEEE 10th International Symposium on Biomedical Imaging (ISBI); April 2013; pp. 294–297. [Google Scholar]

- 31.Wang Y, Ying L. Compressed sensing dynamic cardiac cine mri using learned spatiotemporal dictionary. IEEE Transactions on Biomedical Engineering. 2014;61(4):1109–1120. doi: 10.1109/TBME.2013.2294939. [DOI] [PubMed] [Google Scholar]

- 32.Caballero J, Rueckert D, Hajnal JV. Medical Image Computing and Computer- Assisted Intervention MICCAI 2012. Vol. 7510. Springer; Berlin Heidelberg: 2012. Dictionary learning and time sparsity in dynamic MRI; pp. 256–263. ser. Lecture Notes in Computer Science. [DOI] [PubMed] [Google Scholar]

- 33.Huang Y, Paisley J, Lin Q, Ding X, Fu X, Zhang XP. Bayesian nonparametric dictionary learning for compressed sensing MRI. IEEE Trans Image Process. 2014;23(12):5007–5019. doi: 10.1109/TIP.2014.2360122. [DOI] [PubMed] [Google Scholar]

- 34. Awate SP, DiBella EVR. Spatiotemporal dictionary learning for undersampled dynamic MRI reconstruction via joint frame-based and dictionary-based sparsity. 2012 9th IEEE International Symposium on Biomedical Imaging (ISBI) 2012:318–321.

- 35. Caballero J, Price AN, Rueckert D, Hajnal JV. Dictionary learning and time sparsity for dynamic MR data reconstruction. IEEE Transactions on Medical Imaging. 2014;33(4):979–994. doi: 10.1109/TMI.2014.2301271.

- 36. Wang S, Peng X, Dong P, Ying L, Feng DD, Liang D. Parallel imaging via sparse representation over a learned dictionary. 2015 IEEE 12th International Symposium on Biomedical Imaging (ISBI) 2015:687–690.

- 37. Haldar JP, Liang ZP. Spatiotemporal imaging with partially separable functions: A matrix recovery approach. IEEE International Symposium on Biomedical Imaging: From Nano to Macro. 2010:716–719.

- 38. Zhao B, Haldar JP, Brinegar C, Liang ZP. Low rank matrix recovery for real-time cardiac MRI. IEEE International Symposium on Biomedical Imaging: From Nano to Macro. 2010:996–999.

- 39. Pedersen H, Kozerke S, Ringgaard S, Nehrke K, Kim WY. k-t PCA: Temporally constrained k-t BLAST reconstruction using principal component analysis. Magnetic Resonance in Medicine. 2009;62(3):706–716. doi: 10.1002/mrm.22052.

- 40. Trzasko J, Manduca A. Local versus global low-rank promotion in dynamic MRI series reconstruction. Proc ISMRM. 2011:4371.

- 41. Peng Y, Ganesh A, Wright J, Xu W, Ma Y. RASL: Robust alignment by sparse and low-rank decomposition for linearly correlated images. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2010:763–770. doi: 10.1109/TPAMI.2011.282.

- 42. Candès EJ, Li X, Ma Y, Wright J. Robust principal component analysis? J ACM. 2011;58(3):11:1–11:37.

- 43. Chandrasekaran V, Sanghavi S, Parrilo PA, Willsky AS. Rank-sparsity incoherence for matrix decomposition. SIAM Journal on Optimization. 2011;21(2):572–596.

- 44. Guo H, Qiu C, Vaswani N. An online algorithm for separating sparse and low-dimensional signal sequences from their sum. IEEE Transactions on Signal Processing. 2014;62(16):4284–4297.

- 45. Otazo R, Candès E, Sodickson DK. Low-rank plus sparse matrix decomposition for accelerated dynamic MRI with separation of background and dynamic components. Magnetic Resonance in Medicine. 2015;73(3):1125–1136. doi: 10.1002/mrm.25240.

- 46. Trémoulhéac B, Dikaios N, Atkinson D, Arridge SR. Dynamic MR image reconstruction–separation from undersampled (k,t)-space via low-rank plus sparse prior. IEEE Transactions on Medical Imaging. 2014;33(8):1689–1701. doi: 10.1109/TMI.2014.2321190.

- 47. Lingala SG, Hu Y, DiBella E, Jacob M. Accelerated dynamic MRI exploiting sparsity and low-rank structure: k-t SLR. IEEE Transactions on Medical Imaging. 2011;30(5):1042–1054. doi: 10.1109/TMI.2010.2100850.

- 48. Zhao B, Haldar JP, Christodoulou AG, Liang ZP. Image reconstruction from highly undersampled (k, t)-space data with joint partial separability and sparsity constraints. IEEE Transactions on Medical Imaging. 2012;31(9):1809–1820. doi: 10.1109/TMI.2012.2203921.

- 49. Majumdar A, Ward R. Learning space-time dictionaries for blind compressed sensing dynamic MRI reconstruction. 2015 IEEE International Conference on Image Processing (ICIP) 2015:4550–4554.

- 50. Pruessmann KP, Weiger M, Börnert P, Boesiger P. Advances in sensitivity encoding with arbitrary k-space trajectories. Magnetic Resonance in Medicine. 2001;46(4):638–651. doi: 10.1002/mrm.1241.

- 51. Majumdar A, Ward RK. Learning the sparsity basis in low-rank plus sparse model for dynamic MRI reconstruction. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2015:778–782.

- 52. Nadakuditi RR. OptShrink: An algorithm for improved low-rank signal matrix denoising by optimal, data-driven singular value shrinkage. IEEE Transactions on Information Theory. 2013;60(5):3002–3018.

- 53. Moore BE, Nadakuditi RR, Fessler JA. Dynamic MRI reconstruction using low-rank plus sparse model with optimal rank regularized eigen-shrinkage. Proc ISMRM. 2014 May:740.

- 54. Moore BE, Nadakuditi RR, Fessler JA. Improved robust PCA using low-rank denoising with optimal singular value shrinkage. 2014 IEEE Workshop on Statistical Signal Processing (SSP); June 2014; pp. 13–16.

- 55. Ravishankar S, Moore BE, Nadakuditi RR, Fessler JA. LASSI: A low-rank and adaptive sparse signal model for highly accelerated dynamic imaging. IEEE Image Video and Multidimensional Signal Processing (IVMSP) workshop. 2016. doi: 10.1109/TMI.2017.2650960.

- 56. Ravishankar S, Nadakuditi RR, Fessler JA. Efficient sum of outer products dictionary learning (SOUP-DIL) and its application to inverse problems. 2016. doi: 10.1109/TCI.2017.2697206. Preprint: https://www.dropbox.com/s/cog6y48fangkg07/SOUPDIL.pdf?dl=0

- 57. Gribonval R, Schnass K. Dictionary identification–sparse matrix-factorization via ℓ1-minimization. IEEE Trans Inform Theory. 2010;56(7):3523–3539.

- 58. Pati Y, Rezaiifar R, Krishnaprasad P. Orthogonal matching pursuit: recursive function approximation with applications to wavelet decomposition. Asilomar Conf on Signals, Systems and Comput. 1993;1:40–44.

- 59. Cai JF, Candès EJ, Shen Z. A singular value thresholding algorithm for matrix completion. SIAM Journal on Optimization. 2010;20(4):1956–1982.

- 60. Combettes PL, Wajs VR. Signal recovery by proximal forward-backward splitting. Multiscale Modeling & Simulation. 2005;4(4):1168–1200.

- 61. Otazo R. L+S reconstruction Matlab code. 2014. [Online; accessed Mar. 2016]. http://cai2r.net/resources/software/ls-reconstruction-matlab-code

- 62. Woodworth J, Chartrand R. Compressed sensing recovery via nonconvex shrinkage penalties. Inverse Problems. 2016;32(7):75004–75028.

- 63. Parikh N, Boyd S. Proximal algorithms. Found Trends Optim. 2014 Jan;1(3):127–239.

- 64. Rockafellar RT, Wets RJ-B. Variational Analysis. Heidelberg, Germany: Springer-Verlag; 1998.

- 65. Sharif B, Bresler Y. Adaptive real-time cardiac MRI using PARADISE: Validation by the physiologically improved NCAT phantom. IEEE International Symposium on Biomedical Imaging: From Nano to Macro. 2007:1020–1023. doi: 10.1109/ISBI.2007.357028.

- 66. Lingala SG, Hu Y, DiBella E, Jacob M. k-t SLR Matlab package. 2014. [Online; accessed 2016]. http://user.engineering.uiowa.edu/~jcb/software/ktslr_matlab/Software.html
