Abstract

A major shortcoming of current models of ideological prejudice is that although they can anticipate the direction of the association between participants’ ideology and their prejudice against a range of target groups, they cannot predict the size of this association. I developed and tested models that can make specific size predictions for this association. A quantitative model that used the perceived ideology of the target group as the primary predictor of the ideology-prejudice relationship was developed with a representative sample of Americans (N = 4,940) and tested against models using the perceived status of and choice to belong to the target group as predictors. In four studies (total N = 2,093), ideology-prejudice associations were estimated, and these observed estimates were compared with the models’ predictions. The model that was based only on perceived ideology was the most parsimonious with the smallest errors.

Keywords: prejudice, intergroup dynamics, ideology, stereotyped attitudes, open data, open materials, preregistered

Former President Clinton (2014) argued, “We only have one remaining bigotry. We don’t want to be around anybody who disagrees with us.” Putting aside whether prejudice based on dissimilar political attitudes is the only remaining bigotry (it certainly is not), it is clear that negative affect (i.e., prejudice) toward political out-groups has deleterious effects on how people treat others who have different attitudes (Brandt, Reyna, Chambers, Crawford, & Wetherell, 2014; Chambers, Schlenker, & Collisson, 2013; Gift & Gift, 2015; Iyengar & Westwood, 2015) and on the ability to reason accurately about political issues (Crawford, Kay, & Duke, 2015; Kahan, 2013). Such negative affect may even contribute to geographic sorting into politically homogeneous neighborhoods (Motyl, Iyer, Oishi, Trawalter, & Nosek, 2014). All of these findings are based on directional predictions, for example, that conservatives will do more of X than liberals in Y condition and that liberals will do more of X than conservatives in Z condition. Directional predictions are one step in the development of theories and are often the stopping point in psychology (Meehl, 1978, 1997). This article pushes research on ideology and prejudice to the next step by reporting the development and testing of models that used the perceived characteristics of target groups to precisely predict the size of the association between participants’ political ideology and their prejudice against those groups.

In this research, I considered three perceived characteristics of target groups that are likely to be relevant to predicting both the size and the direction of participants’ ideology-prejudice association. The first was the perceived political ideology of the target group. People spontaneously stereotype groups on the basis of the groups’ political ideologies (Koch, Imhoff, Dotsch, Unkelbach, & Alves, 2016). Prior research has found that people are prejudiced toward groups they see as having political values and identities dissimilar to their own (e.g., Byrne, 1969; Chambers et al., 2013; Wynn, 2016); the greater the dissimilarity, the greater the prejudice. Conservatives tend to express prejudice toward groups perceived as liberal, and liberals tend to express prejudice toward groups perceived as conservative. This is because these groups hold values that are in opposition (Crawford, Brandt, Inbar, Chambers, & Motyl, 2017; Wetherell, Brandt, & Reyna, 2013). I also included a measure of perceived conventionalism as an alternative, less direct measure of value dissimilarity on the political dimension.

People also spontaneously stereotype groups on the basis of the groups’ social status (Koch et al., 2016), so the second group characteristic I considered was social status. Conservatives tend to support values and policies that maintain inequality more than liberals do (Graham, Haidt, & Nosek, 2009; Sidanius & Pratto, 2001), and so, compared with liberals, conservatives should express greater tolerance and admiration of high-status, privileged groups and more prejudice toward low-status, disadvantaged groups (Duckitt, 2006).

Finally, the third group characteristic I considered was the degree to which membership in the group is perceived to be a choice. Low levels of perceived choice may be related to more expressed prejudice by conservatives compared with liberals because prejudice in such situations helps reinforce and maintain clear group boundaries (Haslam, Bastian, Bain, & Kashima, 2006), something that psychological models of political ideology suggest that conservatives value (Hodson & Dhont, 2015). This prediction is also consistent with recent findings showing that lower levels of cognitive ability (something that is also associated with conservative attitudes) predict prejudice toward groups perceived to have low levels of choice regarding membership (Brandt & Crawford, 2016).

The idea that these three perceived group characteristics might predict the direction of the association between participants’ ideology and their prejudice against target groups is not unique. The novelty of the research presented here is the idea that these group characteristics can be used also to predict the size of this association. In an early and incomplete test of this idea, perceived ideology of a target group was strongly correlated (r = −.95) with the size and direction of the association between participants’ ideology and prejudice against the group (Brandt et al., 2014; the data for this study came from Chambers et al., 2013). Liberals expressed more prejudice than conservatives toward groups perceived as strongly conservative, but this difference tapered off as the groups were seen as less clearly conservative and then flipped directions and strengthened as the groups were seen as increasingly liberal. This initial test was incomplete because it did not consider rival explanations, such as social status and perceived membership choice, and it did not test whether the model could accurately anticipate the size and direction of the ideology-prejudice association for specific target groups in new samples.

Although most psychologists do not focus their theoretical and empirical energy on predicting effect sizes, models that make size predictions have substantial practical and theoretical value (Meehl, 1978, 1997). On the practical side,

Such models can predict, for example, whether the association between participants’ ideology and their prejudice against target groups will be stronger when the targets are Black or atheist, or whether this association will be stronger when the targets are Christian fundamentalists or rich people. Directional hypotheses do not make such distinctions.

An accurate model that makes size predictions can be extended to new observations, beyond the original sample. Social groups are not static entities; some groups rise to prominence, whereas others fade away, depending on the cultural zeitgeist or the issues at stake. Researchers using a model that makes accurate size predictions can anticipate ideological prejudice against new groups that were not used to develop the model.

Size predictions are useful for planning and evaluating studies (e.g., conducting power analyses and calculating Bayesian priors). For example, is a manipulation that reduces the association between participants’ ideology and their prejudice against Blacks by .10 a big manipulation? Such questions cannot be answered without a precise idea of what to expect.

On the theoretical side,

Models that make size predictions facilitate falsifying predictions and learning from the data. This is because size predictions are risky predictions. They highlight when a hypothesis has failed to live up to expectations.

Comparing rival models is easier when they make size predictions. Multiple models might make similar directional predictions—as do models that use target groups’ perceived ideology, status, and choice in membership to predict the association between participants’ ideology and prejudice against those groups. Similar predictions prevent rival models from “losing” the theoretical competition, but if rival models make size predictions, the model that consistently makes more accurate size predictions can be considered a better tool.

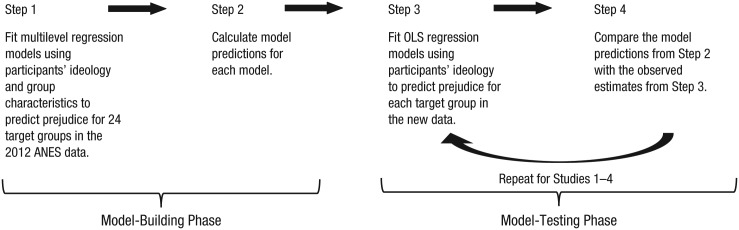

I aimed to test the ability of target groups’ perceived ideology, status, and choice in membership to predict the size and direction of the association (in new samples) between participants’ ideology and their prejudice against those target groups. This exposed the hypotheses about the role of perceived ideology, status, and choice discussed earlier to clear disconfirmation. I developed multiple models that used perceptions of group ideology, status, and choice to predict the size and direction of the ideology-prejudice association for a diverse array of target groups. The predictions were tested in a series of studies, including three preregistered studies that compared the models’ predictions with the observed outcomes. Although many research programs stop after demonstrating that an effect exists (Meehl, 1978, 1997), the purpose of these studies was to test if I could use these group characteristics to make precise predictions. Figure 1 summarizes the model-building and -testing phases for this research.

Fig. 1.

Summary of the procedure. In the model-building phase, multilevel regression models were used to estimate the association between participants’ ideology and their prejudice against each of 24 target groups. The data for this phase came from the 2012 Time Series Study of the American National Election Studies (ANES). In the model-testing phase, ordinary least squares (OLS) regression was used to model the association between participants’ ideology and their prejudice against various target groups. The observed estimates obtained from these models in four studies were compared with the predictions from the original model.

Model Building

In the model-building phase, I used data from the 2012 Time Series Study of the American National Election Studies (ANES, 2015) to estimate the association between participants’ political ideology and their prejudice toward 24 different target groups (N = 4,940; mean age = 50.2 years, SD = 16.6; 2,464 men, 2,476 women). Participants’ ideology was measured on a scale from 1 (extremely liberal) to 7 (extremely conservative). Expressed prejudice was measured with feeling thermometers that ranged from 0 (cold/unfavorable) to 100 (warm/favorable); ratings were reverse-scored so that higher scores indicated more prejudice. Feeling thermometers are among the most widely used measures of prejudice in the psychology literature (Correll, Judd, Park, & Wittenbrink, 2010). They were particularly useful for my purposes because they (a) measure a definitional feature of prejudice (i.e., negative affect toward groups; Crandall, Eshleman, & O’Brien, 2002), (b) are applicable to a wide range of social targets, and (c) are widely used in multiple disciplines.

For a separate study (Brandt & Crawford, 2016), a different sample of American adults rated each target group on ideology, conventionalism, status, and choice in membership. Conventionalism was included as a less direct indicator of ideology. Each characteristic was measured on a scale from 0 to 100; for the current study, ratings for each characteristic were rescaled to range from 0 to 1 and centered on the scale’s midpoint. This eased the interpretation of the final models that were used to make predictions. Higher scores indicated that the target group was perceived as more conservative, more conventional, of higher status, and characterized by greater choice in membership (see Table S1 in the Supplemental Material available online for the means for each group).
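The rescaling described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a raw rating on the 0 to 100 scale: dividing by 100 maps it onto 0 to 1, and subtracting 0.5 centers it on the scale midpoint, so rescaled scores run from −0.5 to 0.5. The function name is hypothetical and does not come from the original materials.

```python
def rescale_rating(raw: float) -> float:
    """Map a 0-100 rating onto -0.5..0.5 (0-1 range, centered on the midpoint)."""
    if not 0 <= raw <= 100:
        raise ValueError("rating must lie between 0 and 100")
    return raw / 100 - 0.5


# Hypothetical ratings: the scale midpoint and a group seen as fairly conservative.
for raw in (50, 80):
    print(raw, round(rescale_rating(raw), 2))
```

With this coding, a value of 0 marks a group rated exactly at the midpoint of the scale, which is what makes the intercepts of the later prediction equations directly interpretable.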

I used these data to create multilevel models in which the target groups’ characteristics (perceived ideology, conventionalism, status, or choice in membership) were moderators of the relation between participants’ ideology and their prejudice toward the target groups in the 2012 ANES. The models adjusted for the following demographic covariates: age, gender (−0.5 = female, 0.5 = male), race-ethnicity (Contrast 1: −0.75 = White, 0.25 = Black, Hispanic, and other; Contrast 2: 0 = White, 0.33 = Black and Hispanic, −0.66 = other; Contrast 3: 0 = White and other, 0.5 = Black, −0.5 = Hispanic), education (1 = less than high school; 5 = graduate degree), and income (1 = under $5,000, 28 = $250,000 or more).1 All variables were recoded to range from 0 to 1, so that the coefficients represent the percentage change in prejudice as one goes from the lowest value to the highest value on the predictor variable. Age, education, and income were mean-centered. Gender and race-ethnicity contrast codes were also mean-centered to weight these contrasts at their average (Hayes, 2013). Scatterplots with linear and loess estimates of the relationship between ideology and prejudice for each target group are presented in Figure S1 in the Supplemental Material available online.

I built the predictive models using random-intercept, random-slope multilevel models estimated using the lme4 package in R (Bates, Maechler, Bolker, & Walker, 2015). Target groups were nested within participants. Prejudice was regressed on participants’ ideology, all covariates, and the three group characteristics. The group characteristics were included as random slopes. Target group was also modeled as a random variable (Judd, Westfall, & Kenny, 2012). The specific combination of group characteristics that I included depended on the model. Perceived ideology and conventionalism were not included in the same models because they were highly correlated, r(22) = .85. The models were designed to test the independent and additive contributions of the three group characteristics in explaining the size and direction of the ideology-prejudice association. For example, the first model regressed prejudice on participant’s ideology, perceived group ideology, the interaction of these two predictors, and the covariates. In addition, I created a null model that predicted no ideology-prejudice association for each target group. The results of the full models are presented in Table S2 in the Supplemental Material, but only the estimates of the participant’s ideology slope and of the interactions (see Table 1) are necessary for predicting the size and direction of the ideology-prejudice association.
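Why only the ideology slope and the interaction are needed can be seen by writing out the fixed part of the first model. The following is a simplified sketch: the symbols are illustrative labels rather than notation from the original analysis, $G_j$ stands for the perceived ideology of target group $j$, and the covariates and random effects are not spelled out.

$$
\text{prejudice}_{ij} = b_0 + b_1\,\text{ideology}_i + b_2\,G_j + b_3\,(\text{ideology}_i \times G_j) + \text{covariates} + \text{random effects}
$$

Differentiating with respect to participants' ideology gives the ideology-prejudice association for group $j$:

$$
\frac{\partial\,\text{prejudice}_{ij}}{\partial\,\text{ideology}_i} = b_1 + b_3\,G_j
$$

Because $G_j$ is centered on the scale midpoint, $b_1$ is the predicted association for a group rated at the midpoint, and $b_3$ describes how that association changes as the group is perceived as more conservative. The prediction equations in Table 1 appear to take this form, with the first number playing the role of $b_1$ and the second the role of $b_3$.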

Table 1.

Prediction Errors and Comparisons of the Seven Models in Studies 1 Through 4

| Model | Mean square error: Study 1 | Mean square error: Study 2 | Mean square error: Study 3 | Mean square error: Study 4 | Model comparison from the meta-analysis |
|---|---|---|---|---|---|
| 1. Ideology only: ŷ = 0.022 − 1.420(ideology) | 0.015 (0.019) | 0.021 (0.030) | 0.035 (0.086) | 0.022 (0.035) | More accurate than Models 3, 4, 5, 6, and 7 |
| 2. Ideology, status, and choice: ŷ = 0.016 − 1.505(ideology) + 0.128(status) + 0.072(choice) | 0.013 (0.020) | 0.021 (0.032) | 0.037 (0.096) | 0.023 (0.038) | More accurate than Models 3, 4, 5, 6, and 7 |
| 3. Conventionalism only: ŷ = 0.157 − 1.947(conventionalism) | 0.040 (0.076) | 0.054 (0.083) | 0.039 (0.047) | 0.055 (0.065) | More accurate than Models 5, 6, and 7 |
| 4. Conventionalism, status, and choice: ŷ = 0.166 − 1.827(conventionalism) − 0.076(status) − 0.185(choice) | 0.041 (0.078) | 0.047 (0.079) | 0.033 (0.045) | 0.049 (0.070) | More accurate than Models 5, 6, and 7 |
| 5. Status only: ŷ = 0.001 − 0.846(status) | 0.090 (0.153) | 0.081 (0.138) | 0.095 (0.091) | 0.072 (0.106) | Not more accurate than any models |
| 6. Choice only: ŷ = 0.041 − 0.398(choice) | 0.108 (0.166) | 0.093 (0.122) | 0.111 (0.114) | 0.074 (0.117) | Not more accurate than any models |
| 7. Null: ŷ = 0 | 0.111 (0.155) | 0.095 (0.135) | 0.113 (0.101) | 0.076 (0.117) | Not more accurate than any models |

Note: For each model, the table presents mean square errors in the four studies, with standard deviations in parentheses.
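To make the prediction equations in Table 1 concrete, the sketch below evaluates Models 1 and 2 in Python. The coefficients are copied from the table; the function names and the example ratings are hypothetical, and the ratings are assumed to be already rescaled to run from −0.5 (most liberal, lowest status, least choice) to 0.5. A positive predicted value means that more conservative participants are expected to express more prejudice toward the group, and a negative value means the reverse.

```python
def predict_ideology_only(ideology: float) -> float:
    """Model 1 from Table 1: predicted ideology-prejudice association."""
    return 0.022 - 1.420 * ideology


def predict_ideology_status_choice(ideology: float, status: float, choice: float) -> float:
    """Model 2 from Table 1: adds perceived status and choice in membership."""
    return 0.016 - 1.505 * ideology + 0.128 * status + 0.072 * choice


# Hypothetical perceived-ideology ratings (not taken from the supplemental tables).
examples = [
    ("group rated clearly liberal", -0.4),
    ("group rated at the midpoint", 0.0),
    ("group rated clearly conservative", 0.4),
]
for label, rating in examples:
    print(f"{label}: {predict_ideology_only(rating):.3f}")
```

Under these assumptions, the predicted association changes sign as the group moves from the liberal to the conservative side of the scale, and it is close to zero for a group rated at the midpoint.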

Table S3 in the Supplemental Material lists indicators of model fit (Akaike’s information criterion and Bayesian information criterion) for the seven models. These indicators all pointed to models including perceived ideology or conventionalism as the best and most parsimonious models. In the model-testing phase, I determined if that was the case.

Model Testing

In four studies, I tested the seven models’ predictive accuracy by comparing their predictions. The target groups were the original target groups (Study 1) or a mix of the original and new target groups (Studies 2–4). Separate samples rated the target groups on their perceived ideology, conventionalism, status, or choice. Studies 2 through 4 were preregistered. (Differences between the preregistration plans and the final methods are reported in the Supplemental Material.) In each study, the association between participants’ ideology and prejudice was estimated, and these observed values were compared with the predicted values from the models. The model with the lowest mean square error was considered the most accurate model. Thus, these studies went beyond looking to see if perceived ideology or status was associated with the size and the direction of the ideology-prejudice association in a given sample (something prior research has done): They tested whether it is possible to accurately anticipate both the size and the direction of this association in new samples.

Method

Study 1 used previously published data from the Mechanical Turk sample of U.S. adults in Brandt and Van Tongeren’s (2017) Study 1 (N = 253; mean age = 31.4 years, SD = 10.8; 163 men, 88 women, 2 participants with unreported gender). This study was focused on the association between religious fundamentalism and prejudice, but included a measure of political ideology. This item and the measures of prejudice toward 23 groups (all of the groups from the model-building phase with the exception of Christian fundamentalists) were used for the current study. Note that Mechanical Turk samples are not representative of the U.S. population and tend to skew toward political liberalism (Berinsky, Huber, & Lenz, 2012). However, studies using these samples often replicate findings from more nationally representative surveys, including surveys on politics (Clifford, Jewell, & Waggoner, 2015) and in the domain of belief systems and prejudice (Brandt & Van Tongeren, 2017).

For Study 2, I collected new data from a Mechanical Turk sample of U.S. adults (N = 319; mean age = 38.7 years, SD = 11.8; 180 men, 139 women). Study 3 used data from the 2016 ANES Pilot Study (ANES, 2016; N = 1,195; mean age = 48.1 years, SD = 17.0; 570 men, 625 women), which surveyed a representative sample of U.S. adults. In Study 4, I collected new data from a Mechanical Turk sample of U.S. adults (N = 348; mean age = 35.4 years, SD = 10.4; 193 men, 135 women). It was important that the estimates of the ideology-prejudice association in these studies were relatively stable, so that the models’ predictions would be compared with stable observed values. (This goal is related to, but different from, achieving sufficient statistical power.) All the studies included more than 250 participants, a number considered sufficient for estimating reliable correlations in typical psychological scenarios (see the simulations in Schönbrodt & Perugini, 2013). Power ranged from 35% to 93% to detect a small effect (r = .10) and from 99.9% to more than 99.99% to detect a medium effect (r = .30); for all the samples, power to detect a large effect (r = .50) was greater than 99.99%.
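The power figures for a small effect can be approximated with the Fisher z transformation. The sketch below is one standard approximation for a two-tailed test at α = .05 and is not necessarily the procedure used for the values reported above; the function name is hypothetical. It relies only on Python's standard library (`statistics.NormalDist`, available from Python 3.8).

```python
from math import atanh, sqrt
from statistics import NormalDist


def power_correlation(r: float, n: int, alpha: float = 0.05) -> float:
    """Approximate two-tailed power to detect a correlation r with n participants."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Fisher z of r, scaled by its approximate standard error 1 / sqrt(n - 3).
    shift = atanh(r) * sqrt(n - 3)
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


# Sample sizes of Studies 1, 2, 4, and 3, in ascending order.
for n in (253, 319, 348, 1195):
    print(n, round(power_correlation(0.10, n), 2))
```

For r = .10, this approximation gives roughly 35% for the smallest sample and 93% for the largest, in line with the range reported above. For larger effects the approximation can differ slightly from exact methods in the last decimal places.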

In Studies 1, 2, and 4, participants’ ideology was measured on a scale from 1 (extremely conservative) to 7 (extremely liberal), and responses were reverse-coded. In Study 3, participants’ ideology was measured on a scale from 1 (strongly conservative) to 7 (strongly liberal). Prejudice was measured with feeling thermometers that asked participants to rate each group on a scale that ranged from 0 (cold/unfavorable) to 100 (warm/favorable); these ratings were reverse-scored so that higher scores indicated more prejudice. Studies 1 and 3 included all of the available target groups in these existing data sets. For Study 3, this meant there were 9 target groups, 3 of which were new. Study 2 included all the target groups of Study 1 plus additional groups that I felt were relevant and missing from the list, for a total of 35 target groups. Study 4 included the same 42 target groups that Koch et al. (2016, Study 5a) generated with a bottom-up approach. Including all these groups helped ensure that the results were not unintentionally biased by the particular target groups chosen in Studies 1 through 3.

The estimates of the association between ideology and prejudice were adjusted for the following demographic covariates: age, gender (−0.5 = female, 0.5 = male), race-ethnicity (Contrast 1: −0.75 = White, 0.25 = Black, Hispanic, and other; Contrast 2: 0 = White, 0.33 = Black and Hispanic, −0.66 = other; Contrast 3: 0 = White and other, 0.5 = Black, −0.5 = Hispanic), education (Studies 1, 2, and 4: 1 = some high school, no diploma, 8 = doctoral degree; Study 3: 1 = no high school, 6 = postgraduate studies), and income (Studies 1, 2, and 4: 1 = under $25,000, 5 = more than $250,000; Study 3: 1 = less than $10,000, 31 = $150,000). Recoding and mean centering were conducted as in the model-building phase.

Because Studies 2 and 3 included new target groups that were not included in the model-building phase or in Study 1, predictions in these studies were made using data on perceived group characteristics collected from an additional, separate Mechanical Turk sample (N = 432; mean age = 38.4 years, SD = 11.5; 214 men, 217 women, 1 participant with unreported gender). Participants were randomly assigned to complete one of the group-characteristic measures. The items were identical to those used by Brandt and Crawford (2016; see Table S4 in the Supplemental Material for the mean ratings).

Similarly, because Study 4 included new target groups that were not included in the model-building phase or Studies 1 through 3, predictions in this study were made using data on perceived group characteristics collected from an additional, separate Mechanical Turk sample (N = 422; mean age = 36.8 years, SD = 10.8; 235 men, 187 women). Participants were randomly assigned to complete one of the group-characteristic measures (see Table S5 in the Supplemental Material for the mean ratings).

Results

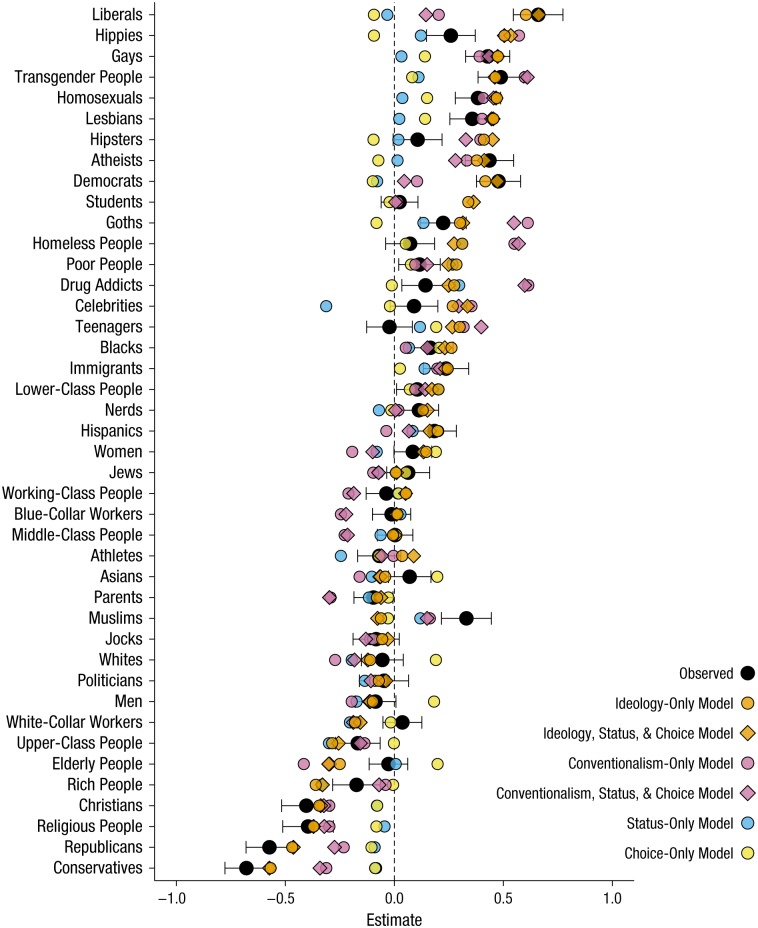

The ideology-prejudice association, adjusting for covariates, was estimated for each target group in each study and compared with the predicted estimates derived from the seven models in Table 1. Figure 2 shows how the observed estimates compared with the predicted estimates from the models in Study 4 (see Figs. S2–S4 in the Supplemental Material for results from Studies 1 through 3). The figure shows that there was significant heterogeneity in the association between ideology and prejudice from one target group to another. In general, it appears that the predictions of the ideology-only model were closest to the observations.

Fig. 2.

Comparison of the predicted and observed estimates for the association between ideology and prejudice toward the target groups in Study 4. The observed estimates were obtained using ordinary least squares regression, adjusting for age, gender, education, income, and race-ethnicity. The predicted estimates were obtained from the models described in Table 1. The dashed vertical line represents the predictions of the null model. All variables were coded to have a range of 1. Error bars represent 95% confidence intervals. The target groups are ordered from the group perceived as most liberal (top) to the group perceived as most conservative (bottom).

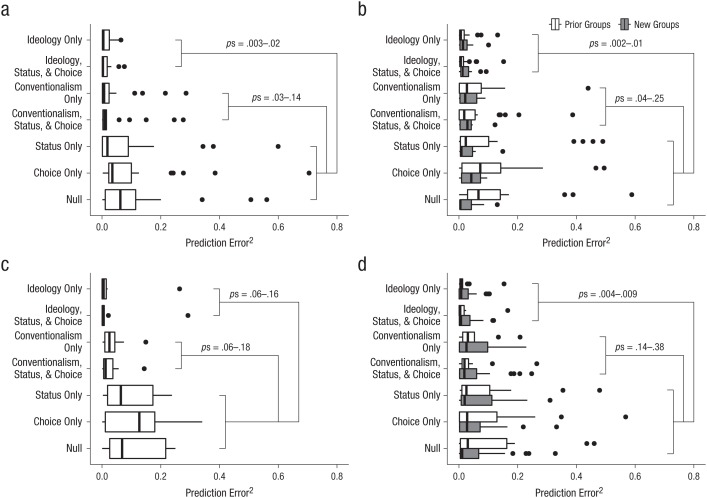

To test which model made fewer errors, I calculated the square error for each target group for each model. Table 1 shows the mean square error for each model for each study, and Figure 3 presents box plots for the square errors from all four studies.

Fig. 3.

Box plots of the seven models’ squared prediction error in (a) Study 1 (23 target groups), (b) Study 2 (35 target groups), (c) Study 3 (9 target groups), and (d) Study 4 (42 target groups). For Studies 1, 2, and 4, results are shown separately for new and original target groups, but because of the small number of target groups in Study 3, results for new and original target groups are combined. In each plot, the right and left edges of the box indicate the 75th and 25th percentiles, respectively, and the black line near the middle of the box is the 50th percentile. The whiskers represent the lowest and highest data points within 1.5 times the interquartile range of the lowest quartile and the highest quartile, respectively. The circles represent outliers. The ranges of p values indicate the values obtained when the two ideology models and the two conventionalism models were compared individually with the status-only, choice-only, and null models.
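The error measure summarized in Table 1 and Figure 3 can be sketched as follows: for each target group, the predicted association is subtracted from the observed association and the difference is squared, and these squared errors are then averaged within a model. The numbers below are hypothetical and serve only to illustrate the calculation; the function names are not from the original analysis scripts.

```python
from statistics import mean


def squared_errors(observed, predicted):
    """Squared prediction error for each target group."""
    if len(observed) != len(predicted):
        raise ValueError("observed and predicted must have the same length")
    return [(o - p) ** 2 for o, p in zip(observed, predicted)]


def mean_square_error(observed, predicted):
    """Average squared prediction error across target groups."""
    return mean(squared_errors(observed, predicted))


# Hypothetical observed and predicted associations for three target groups.
observed = [0.50, -0.10, -0.40]
model_predictions = [0.40, 0.00, -0.50]
null_predictions = [0.0, 0.0, 0.0]  # the null model always predicts no association

print(round(mean_square_error(observed, model_predictions), 3))
print(round(mean_square_error(observed, null_predictions), 3))
```

Because the null model always predicts zero, its mean square error equals the average squared observed association, which makes it a natural baseline for the other models.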

The models including ideology or conventionalism had lower mean square errors than the status-only, choice-only, and null models in three of the four studies—Study 1: F(6, 154) = 3.33, p = .004; Study 2: F(6, 238) = 3.65, p = .002;2 Study 4: F(6, 287) = 3.11, p = .006. The mean square errors did not differ significantly across the models in Study 3, the study with only nine target groups, F(6, 56) = 1.74, p = .13.
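The degrees of freedom reported above are what a one-way comparison of squared errors across the seven models would produce: with 7 models and 23 target groups in Study 1 there are 161 squared errors, giving 7 − 1 = 6 and 161 − 7 = 154 degrees of freedom. The sketch below computes a one-way F statistic under that assumption. It illustrates the arithmetic only; the function name is hypothetical, and the original analysis may have been specified differently.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of samples, one per model."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean([x for g in groups for x in g])
    # Variation of the model means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual squared errors around their own model's mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical squared errors for three models, three target groups each.
f_stat, df_b, df_w = one_way_anova([[1, 2, 3], [2, 3, 4], [3, 4, 5]])
print(round(f_stat, 2), df_b, df_w)
```

The same function applied to seven lists of 23 squared errors returns degrees of freedom of 6 and 154, matching the layout of the Study 1 test.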

Simple comparisons showed that in Studies 1, 2, and 4, the two ideology-based models made more accurate predictions than the status-only, choice-only, and null models, ps = .002–.02. The conventionalism models were not reliably different from the ideology models (ps = .07–.43) and also did not always reliably differ from the status-only (ps = .14–.38), choice-only (ps = .04–.32), and null (ps = .03–.26) models. The status-only and choice-only models did not differ from the null model (ps = .53–.94). The comparisons in Study 3, which had only nine target groups, were similarly patterned (Table 1), but the differences were never reliably significant (Fig. 3).3 (See Table S6 in the Supplemental Material for additional measures of fit in the four studies.)

To compare the models overall, I conducted a meta-analysis of their mean square errors across the four studies, using the metafor package in R (Viechtbauer, 2010; see Table 1, Fig. S5 in the Supplemental Material).4 Because mean square error is a meaningful metric, the meta-analysis used these unstandardized values instead of standardized effect sizes (e.g., Cohen’s d). The two ideology models made significantly more accurate predictions than every other model. Because the ideology-only model was the most parsimonious model with the smallest errors, this model appears to have the best fit.

Discussion

I tested whether I could use perceived group characteristics to predict the size and direction of the association between participants’ ideology and their prejudice against a variety of target groups. Results showed that the models using perceived ideology (and, to a lesser degree, conventionalism) can be used to make precise predictions about when people will exhibit prejudice. The predictive success of perceived ideology outstripped that of both perceived status and perceived choice in membership. These latter dimensions of group perception are often tied to expressions of prejudice (Fiske, Cuddy, & Glick, 2007; Haslam et al., 2006; Sidanius & Pratto, 2001), especially ideologically based prejudice (Duckitt, 2006; Suhay & Jayaratne, 2013). However, status and choice may make only a small contribution to prejudice when in direct competition with perceived political ideology, as they were in the current studies.

There are limitations to the approach taken in this research. First, the predictive models were developed using one measure of explicit prejudice. Although this measure is a common one (Correll et al., 2010), it is not known if the precise model predictions would extend to other measures and, especially, if they would extend to measures of implicit prejudice. For example, it might be the case that status is more important for predicting the association between ideology and implicit prejudice. Answers to these questions will help further refine the models. Also, although the models were predictive in the sense that they accurately predicted the observations in future studies, the ideology-prejudice association is cross-sectional. The purpose of this study was not to pin down a causal connection, but rather to maximize prediction (Yarkoni & Westfall, in press). Finally, this study focused on ideology, and the results suggest that dissimilarity along the ideological dimension is important for understanding ideological prejudice, but that does not mean that dissimilarity is limited to politics. The effect of dissimilarity on prejudice is pervasive (Byrne, 1969; Wynn, 2016).

One might point to the unrepresentative Mechanical Turk samples to discount the findings. Although these samples have shortcomings, the findings obtained with them closely adhered to the findings obtained using the 2016 ANES Pilot Study and to the predictions of the models developed from the 2012 ANES survey, both of which are based on nationally representative samples. Another possible critique is that if status and choice in membership did not have reliable effects in the model-building phase, it would not be possible for status-only and choice-only models to demonstrate predictive accuracy greater than that of the null model. However, the results of exploratory analyses using group characteristics to predict the ideology-prejudice association in Studies 1 through 4 were similar to the results in the model-building phase (see Tables S8–S11 in the Supplemental Material). That is, status and choice remained unreliable predictors.

This study of the ideology-prejudice association makes several contributions (see also the introduction). I highlight three here: First, consistent deviations from the model’s predictions can highlight cases that warrant more in-depth investigations and suggest target groups for which perceived ideology is not enough to make accurate predictions. In the current set of target groups, prejudice toward Muslims was consistently inaccurately predicted by all seven models. Thus, for this group, it appears that accurate predictions require going beyond value dissimilarity to include additional mechanisms, such as ideological differences in associating Muslims with terrorism threats (Sides & Gross, 2013). Second, the results of this study demonstrate that researchers and practitioners can make reasonable predictions about the size and direction of the ideology-prejudice association using only a simple linear model and a group’s perceived ideology. In contrast, much of the work in social psychology makes only directional predictions—and even so the results can be replicated less often than one might expect (Open Science Collaboration, 2015; see Gilbert, King, Pettigrew, & Wilson, 2016, for a countervailing view). Third, scholars who disagree with such a simple model or believe that other theoretical processes are at play have a clear benchmark to test their own models against. My cards are on the table. Clear predictions that can be falsified will help move research forward and will lead to increasingly refined models of ideologically based prejudice.


Acknowledgments

Anthony Evans and Aislinn Callahan Brandt provided helpful comments on a prior draft of this manuscript.

1. The models with covariates were the primary models; however, a reviewer suggested that using covariates unfairly handicaps social status because they remove its effect. To give social status a fair shake, I report the analyses without covariates in the Supplemental Material (see Table S7 and Fig. S6). All conclusions from this exploratory analysis without covariates were identical to those reported here.

2. The null model was added to Study 2 after the study plan was preregistered. Results were the same when it was not included, F(5, 204) = 3.83, p = .002.

3. The preregistered hypothesis for Study 2 was that the ideology-only and conventionalism-only models would perform better than the status-only and choice-only models for new target groups. Unexpectedly, all of the models performed equally well for the new target groups (with the null model included: F(6, 70) = 0.32, p = .92; see the results for the new targets in Fig. 3b; without the null model included: F(5, 60) = 0.41, p = .84). Study 4 tested this hypothesis with an exploratory analysis and found similar results, F(6, 196) = 1.72, p = .12. Simple comparisons showed that the ideology models were significantly better than the null model and the status model (ps = .04–.05) for new target groups, and the ideology-only model was better than the conventionalism-only model (p = .05) for new target groups. All other simple comparisons were nonsignificant, ps > .05. Another Study 2 hypothesis predicted that estimating the ideology-prejudice association at the mean level of political interest in the 2012 ANES would improve predictions. This adjustment did not help the ideology-only model and made only a small improvement for the conventionalism-only model (see Confirmatory and Exploratory Analyses in the Supplemental Material).

4. The meta-analysis was not preregistered.

Footnotes

Action Editor: Brent W. Roberts served as action editor for this article.

Declaration of Conflicting Interests: The author declared that he had no conflicts of interest with respect to his authorship or the publication of this article.

Supplemental Material: Additional supporting information can be found at http://journals.sagepub.com/doi/suppl/10.1177/0956797617693004

Open Practices:

All data and materials have been made publicly available via the Open Science Framework and can be accessed at https://osf.io/3xgtk/. The design and analysis plans for Studies 2, 3, and 4 were preregistered at the Open Science Framework and can be accessed at https://osf.io/f9dh7/, https://osf.io/tcb3a/, and https://osf.io/nfj5k/, respectively. The complete Open Practices Disclosure for this article can be found at http://journals.sagepub.com/doi/suppl/10.1177/0956797617693004. This article has received badges for Open Data, Open Materials, and Preregistration. More information about the Open Practices badges can be found at http://www.psychologicalscience.org/publications/badges.

References

- American National Election Studies. (2015). The ANES 2012 Time Series Study [Data file]. Stanford University and the University of Michigan (Producers). Retrieved from http://www.electionstudies.org/studypages/anes_timeseries_2012/anes_timeseries_2012.htm

- American National Election Studies. (2016). The ANES 2016 Pilot Study [Data file]. Stanford University and the University of Michigan (Producers). Retrieved from http://www.electionstudies.org/studypages/anes_pilot_2016/anes_pilot_2016.htm

- Bates D., Maechler M., Bolker B., Walker S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67, 1–48.

- Berinsky A. J., Huber G. A., Lenz G. S. (2012). Evaluating online labor markets for experimental research: Amazon.com’s Mechanical Turk. Political Analysis, 20, 351–368.

- Brandt M. J., Crawford J. T. (2016). Answering unresolved questions about the relationship between cognitive ability and prejudice. Social Psychological and Personality Science, 7, 884–892.

- Brandt M. J., Reyna C., Chambers J. R., Crawford J. T., Wetherell G. (2014). The ideological-conflict hypothesis: Intolerance among both liberals and conservatives. Current Directions in Psychological Science, 23, 27–34.

- Brandt M. J., Van Tongeren D. R. (2017). People both high and low on religious fundamentalism are prejudiced toward dissimilar groups. Journal of Personality and Social Psychology, 112, 76–97.

- Byrne D. (1969). Attitudes and attraction. In Berkowitz L. (Ed.), Advances in experimental social psychology (Vol. 4, pp. 35–89). New York, NY: Academic Press.

- Chambers J. R., Schlenker B. R., Collisson B. (2013). Ideology and prejudice: The role of value conflicts. Psychological Science, 24, 140–149.

- Clifford S., Jewell R. M., Waggoner P. D. (2015). Are samples drawn from Mechanical Turk valid for research on political ideology? Research & Politics, 2. doi: 10.1177/2053168015622072

- Clinton B. (2014, November 20). We only have one remaining bigotry: We don’t want to be around anybody who disagrees with us. New Republic. Retrieved from https://web.archive.org/web/20150419150524/http://www.newrepublic.com/article/120330/bill-clinton-speech-new-republics-100-year-anniversary-gala

- Correll J., Judd C. M., Park B., Wittenbrink B. (2010). Measuring prejudice, stereotypes and discrimination. In Dovidio J. F., Hewstone M., Glick P., Esses V. M. (Eds.), The SAGE handbook of prejudice, stereotyping and discrimination (pp. 45–62). Thousand Oaks, CA: Sage.

- Crandall C. S., Eshleman A., O’Brien L. (2002). Social norms and the expression and suppression of prejudice: The struggle for internalization. Journal of Personality and Social Psychology, 82, 359–378.

- Crawford J. T., Brandt M. J., Inbar Y., Chambers J. R., Motyl M. (2017). Social and economic ideologies differentially predict prejudice across the political spectrum, but social issues are most divisive. Journal of Personality and Social Psychology, 112, 383–412.

- Crawford J. T., Kay S. A., Duke K. E. (2015). Speaking out of both sides of their mouths: Biased political judgments within (and between) individuals. Social Psychological and Personality Science, 6, 422–430.

- Duckitt J. (2006). Differential effects of right wing authoritarianism and social dominance orientation on outgroup attitudes and their mediation by threat from and competitiveness to outgroups. Personality and Social Psychology Bulletin, 32, 684–696.

- Fiske S. T., Cuddy A. J., Glick P. (2007). Universal dimensions of social cognition: Warmth and competence. Trends in Cognitive Sciences, 11, 77–83.

- Gift K., Gift T. (2015). Does politics influence hiring? Evidence from a randomized experiment. Political Behavior, 37, 653–675.

- Gilbert D. T., King G., Pettigrew S., Wilson T. D. (2016). Comment on “Estimating the reproducibility of psychological science.” Science, 351, 1037-a.

- Graham J., Haidt J., Nosek B. A. (2009). Liberals and conservatives rely on different sets of moral foundations. Journal of Personality and Social Psychology, 96, 1029–1046.

- Haslam N., Bastian B., Bain P., Kashima Y. (2006). Psychological essentialism, implicit theories, and intergroup relations. Group Processes & Intergroup Relations, 9, 63–76.

- Hayes A. F. (2013). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach. New York, NY: Guilford Press.

- Hodson G., Dhont K. (2015). The person-based nature of prejudice: Individual difference predictors of intergroup negativity. European Review of Social Psychology, 26, 1–42.

- Iyengar S., Westwood S. J. (2015). Fear and loathing across party lines: New evidence on group polarization. American Journal of Political Science, 59, 690–707.

- Judd C. M., Westfall J., Kenny D. A. (2012). Treating stimuli as a random factor in social psychology: A new and comprehensive solution to a pervasive but largely ignored problem. Journal of Personality and Social Psychology, 103, 54–69.

- Kahan D. M. (2013). Ideology, motivated reasoning, and cognitive reflection. Judgment and Decision Making, 8, 407–424.

- Koch A., Imhoff R., Dotsch R., Unkelbach C., Alves H. (2016). The ABC of stereotypes about groups: Agency/socioeconomic success, conservative-progressive beliefs, and communion. Journal of Personality and Social Psychology, 110, 675–709.

- Meehl P. E. (1978). Theoretical risks and tabular asterisks: Sir Karl, Sir Ronald, and the slow progress of soft psychology. Journal of Consulting and Clinical Psychology, 46, 806–834.

- Meehl P. E. (1997). The problem is epistemology, not statistics: Replace significance tests by confidence intervals and quantify accuracy of risky numerical predictions. In Harlow L. L., Mulaik S. A., Steiger J. H. (Eds.), What if there were no significance tests? (pp. 391–423). Hillsdale, NJ: Erlbaum.

- Motyl M., Iyer R., Oishi S., Trawalter S., Nosek B. A. (2014). How ideological migration geographically segregates groups. Journal of Experimental Social Psychology, 51, 1–14.

- Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349, aac4716.

- Schönbrodt F. D., Perugini M. (2013). At what sample size do correlations stabilize? Journal of Research in Personality, 47, 609–612.

- Sidanius J., Pratto F. (2001). Social dominance: An intergroup theory of social hierarchy and oppression. Cambridge, England: Cambridge University Press.

- Sides J., Gross K. (2013). Stereotypes of Muslims and support for the war on terror. The Journal of Politics, 75, 583–598.

- Suhay E., Jayaratne T. E. (2013). Does biology justify ideology? The politics of genetic attribution. Public Opinion Quarterly, 77, 497–521.

- Viechtbauer W. (2010). Conducting meta-analyses in R with the metafor package. Journal of Statistical Software, 36, 1–48.

- Wetherell G. A., Brandt M. J., Reyna C. (2013). Discrimination across the ideological divide: The role of value violations and abstract values in discrimination by liberals and conservatives. Social Psychological and Personality Science, 4, 658–667.

- Wynn K. (2016). Origins of value conflict: Babies do not agree to disagree. Trends in Cognitive Sciences, 20, 3–5.

- Yarkoni T., Westfall J. (in press). Choosing prediction over explanation in psychology: Lessons from machine learning. Perspectives on Psychological Science.
