Abstract

Purpose:

Treatment summaries prepared as part of survivorship care planning should correctly and thoroughly report diagnosis and treatment information.

Methods:

As part of a clinical trial, summaries were prepared for patients with stage 0 to III breast cancer at two cancer centers. Summaries were prepared per the standard of care at each center via two methods: using the electronic health record (EHR) to create and facilitate autopopulation of content or using manual data entry into an external software program to create the summary. Each participant's clinical data were abstracted and cross-checked against each summary. Errors were defined as inaccurate information, and omissions were defined as missing information on the basis of the Institute of Medicine recommended elements.

Results:

One hundred twenty-one summaries were reviewed: 80 EHR based versus 41 software based. Twenty-four EHR-based summaries (30%) versus six software-based summaries (15%) contained one or more omissions. Omissions included failure to provide dates and specify all axillary surgeries for EHR-based summaries and failure to specify receptors for software-based summaries. Eight EHR-based summaries (10%) versus 19 software-based summaries (46%) contained one or more errors. Errors in EHR-based summaries were mostly discrepancies in dates, and errors in software-based summaries included incorrect stage, surgeries, chemotherapy, and receptors.

Conclusion:

A significant proportion of summaries contained at least one error or omission; some were potentially clinically significant. Mismatches between the clinical scenario and templates contributed to many of the errors and omissions. In an era of required care plan provision, quality measures should be considered and tracked to reduce error and omission rates, decrease inadvertent contributions from templates, and support audited data use.

INTRODUCTION

The number of cancer survivors is increasing rapidly because of improvements in detection and therapy, with nearly 18 million cancer survivors predicted by 2026.1 The majority of these survivors will live ≥ 5 years after cancer diagnosis.1 Inadequate coordination after the completion of active cancer treatment has been shown to result in failures to provide necessary surveillance as well as increased costs due to duplicative care.2-4 The Institute of Medicine (IOM) recommends that a personalized survivorship care plan summarizing diagnosis, treatment (also known as treatment summary), and recommendations for expected follow-up be prepared at the end of cancer treatment for every survivor and his or her primary care provider. Care plans may increase care coordination, with the end result of improving survivor health outcomes.5 Improvements in communication and care coordination may be especially critical, given the forecasted shortage of oncologists6 and anticipated increase in survivors transitioning to shared or primary care–directed follow-up.7 However, determining the value and impact of survivorship care plans has proven challenging, as have adoption and implementation. Despite these challenges, accreditation guidelines have been issued by the Commission on Cancer (CoC), mandating 75% or more of survivors receive a survivorship care plan by 2019.8

Care plans are intended to serve as tools facilitating communication between the oncology team and primary care, primary care and survivors, and the oncology team and survivors. To serve as an effective communication tool, the diagnosis and treatment summary information must be both accurate and complete. For example, if a survivor received anthracycline-based chemotherapy for Hodgkin lymphoma, then this chemotherapy needs to be correctly listed so that the survivor and primary care team (ie, the end users) are aware of the possible future sequelae (increased risk of congestive heart failure and myelodysplastic syndrome). Efforts have been made to quantify the thoroughness of care plan templates.9 A 2012 study of the National Cancer Institute–designated comprehensive cancer centers noted that none delivered a plan containing all the elements recommended by the IOM.10 However, few published data exist with respect to the accuracy and thoroughness of the treatment summary portion of care plans delivered for real-world use. External accrediting bodies such as the CoC have created an impetus for plan creation and provision. This has led to a rapid increase in the number of treatment summaries being prepared, with potential consequences for quality. Declining accuracy and thoroughness could diminish the impact of care plans.

As part of a larger randomized trial assessing the impact of care plans on survivor knowledge,11 we conducted a prospective exploratory analysis of the accuracy and thoroughness of treatment summaries prepared at two cancer centers. We present these results here.

METHODS

The two Midwestern cancer centers conducted a clinical trial to determine whether care plans improved breast cancer survivors’ knowledge of cancer diagnosis, treatment, and recommended follow-up.11 Patients with stage 0 to III breast cancer within 2 years of completing active primary treatment and who did not already have a care plan were eligible and were randomly assigned to immediate versus delayed receipt of a care plan. Active treatment was defined as curative-intent surgery, chemotherapy, and/or radiation. Active treatment did not include human epidermal growth factor receptor 2 (HER2) –targeted therapies for HER2-positive cancer or endocrine therapies for estrogen receptor (ER) –positive or progesterone receptor (PR) –positive cancers.

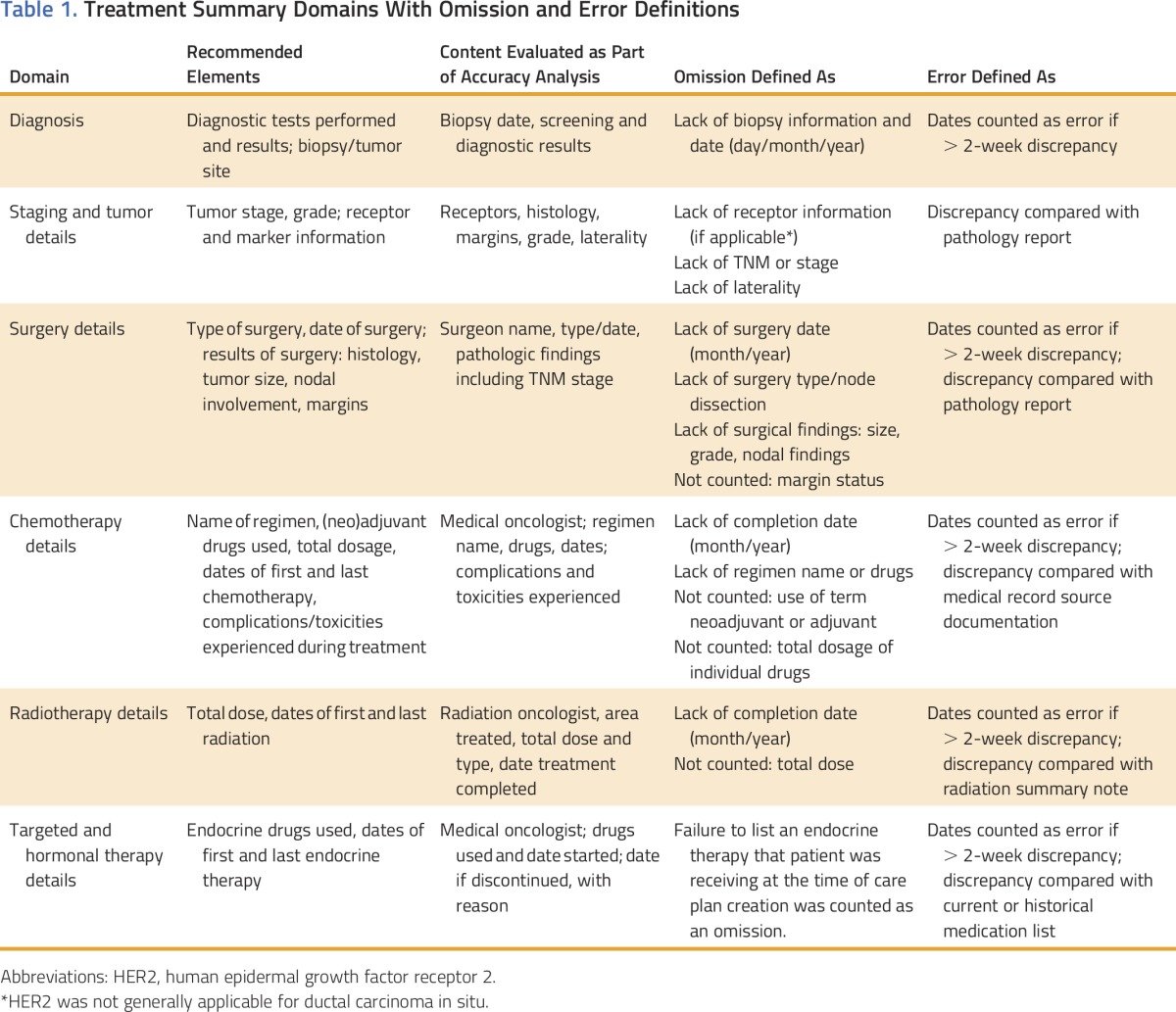

Individual treatment summaries were prepared per the standard of care at each cancer center (the method used to create a summary was not the basis of randomization). Receipt of a care-planning visit was not required by the trial; at the time, no guidelines required a visit (the CoC has since issued guidelines mandating a care-planning visit accompany the care plan document12). In creating the treatment summaries for inclusion in the care plan document, the two centers relied on different methods13,14 to provide a document containing IOM-recommended and/or CoC-required content (Table 1).15 The two methods are as described below but are largely distinguished by whether an external software program not integrated with the EHR was used in the creation of the treatment summaries. We focused our analysis on the process used rather than on the institution, as such processes could be adapted or changed by other institutions.

Table 1.

Treatment Summary Domains With Omission and Error Definitions

EHR-Based Method

Cancer Center 1 used an EHR-based method, where each care plan was autopopulated using diagnosis and treatment data that had previously been entered into the EHR for clinical use. Diagnosis and treatment data were entered by the treating physicians throughout the course of diagnosis and treatment.13 Each document was then created using a template pulling in diagnosis and treatment data along with prepopulated text about follow-up recommendations, future and chronic side effects, and additional resources. The prepopulated text could be further individualized. A document for each survivor was created within the EHR by either a treating oncologist or advanced practice provider (APP). Care summaries created by an APP were reviewed and approved by the treating physician before being provided. The document was provided to the enrolled survivor either electronically or as a paper copy and maintained within the EHR such that it could be adjusted and regenerated rapidly. When provided by the APP, the care plan document was always provided as part of a separate care-planning visit (either in person or telephone based).

External Software-Based Method

Cancer Center 2 used a software program external to their EHR (ie, no integration or communication between the software and the EHR) developed to support survivorship care planning. An oncology registered nurse reviewed each survivor’s medical records to abstract the necessary diagnosis and treatment data. These data were then manually entered into the software program to create a care plan document. The registered nurse then provided the document, typically as part of a survivorship care–planning visit (either in person or telephone based). The document was provided to the enrolled survivor either electronically or as a paper copy and then uploaded into the EHR of Cancer Center 2. Any changes to the document required generation of a new plan via the external software and then repeated upload into the EHR. If the clinical data were not stored by the software (eg, if a different computer was used), they would also need to be re-entered.

Data Abstraction and Verification

The randomized parent trial’s primary end point was change in a survivor’s knowledge of diagnosis and treatment after care plan receipt when compared with baseline. Thus, each participant’s clinical data were abstracted at enrollment and served as the basis for the planned exploratory accuracy analysis. Data were abstracted from source documentation (eg, receptor status had to be determined based on the original pathology report and could not be drawn solely from a clinic note). A second audit was performed for every chart at the end of the study.

Establishing Accuracy and Completeness

To establish accuracy and thoroughness (Table 1), medical records data were cross-checked against available individualized summaries prepared as part of this study. In the case of discrepancies between the medical record and care plan, clinicians at each location (A.J.T. or M.G./W.G.H.) reviewed the chart. The correct information was then adjudicated among at least two medical oncologists (A.J.T., W.G.H., K.B.W., M.E.B.). The decision to label each discrepancy as an omission or an error was made by two medical oncologists (A.J.T., W.G.H.). These oncologists (A.J.T., W.G.H.) also attempted to determine the reason (if possible within the limits of retrospectively identifying an issue) for the omission or error by review of the medical record and discussion with the entering or preparing providers. The errors and omissions were communicated to treating physicians as identified so that corrected treatment summaries could be issued if deemed appropriate.

Errors were defined as data reported that did not match data validated from source documents (eg, right breast cancer reported when imaging and pathology reports stated left). Omissions were defined as IOM-recommended elements missing from the care plan. The thoroughness assessment conducted for this trial focused on diagnosis and treatment details (Table 1). Vast potential differences in the amount of data reported were possible on the basis of the underlying complexity of cancer care. For example, a patient with stage III disease treated with surgery, chemotherapy, radiation, and endocrine therapy would be expected to have far more data for entry into a care plan than a patient with stage 0 disease treated with surgery alone. For this reason, analysis focused on a simple proportion of plans having at least one error and/or omission, rather than calculating an error or omission count or rate per plan.
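The definitions above can be illustrated with a minimal Python sketch. It assumes that each treatment summary and each set of validated source data is a simple mapping from element name to value. The element names, the `classify_summary` and `proportion_with_any` helpers, and the sample values are hypothetical and do not represent the audit tooling used in this study.

```python
from typing import Dict, List, Optional

# Hypothetical subset of required elements; the study used the domains in Table 1.
REQUIRED_ELEMENTS = ["laterality", "stage", "er_status", "her2_status", "chemotherapy"]


def classify_summary(
    source: Dict[str, str], summary: Dict[str, Optional[str]]
) -> Dict[str, List[str]]:
    """Compare one treatment summary against validated source data.

    A required element that is missing or blank is an omission; an element
    that is present but does not match the source is an error.
    """
    omissions: List[str] = []
    errors: List[str] = []
    for element in REQUIRED_ELEMENTS:
        reported = summary.get(element)
        if reported is None or reported.strip() == "":
            omissions.append(element)
        elif reported.strip().lower() != source[element].strip().lower():
            errors.append(element)
    return {"omissions": omissions, "errors": errors}


def proportion_with_any(results: List[Dict[str, List[str]]], kind: str) -> float:
    """Proportion of summaries with at least one finding of the given kind."""
    flagged = sum(1 for r in results if r[kind])
    return flagged / len(results)


# Illustrative (made-up) record: laterality is wrong, HER2 is not reported.
source = {
    "laterality": "left",
    "stage": "I",
    "er_status": "positive",
    "her2_status": "negative",
    "chemotherapy": "doxorubicin-cyclophosphamide",
}
summary = {
    "laterality": "right",
    "stage": "I",
    "er_status": "positive",
    "her2_status": None,
    "chemotherapy": "doxorubicin-cyclophosphamide",
}
result = classify_summary(source, summary)
print(result)
```

Because `proportion_with_any` counts a summary once regardless of how many findings it contains, the measure stays comparable between a data-rich stage III summary and a sparse stage 0 summary.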

RESULTS

Participant and Diagnosis/Treatment Characteristics

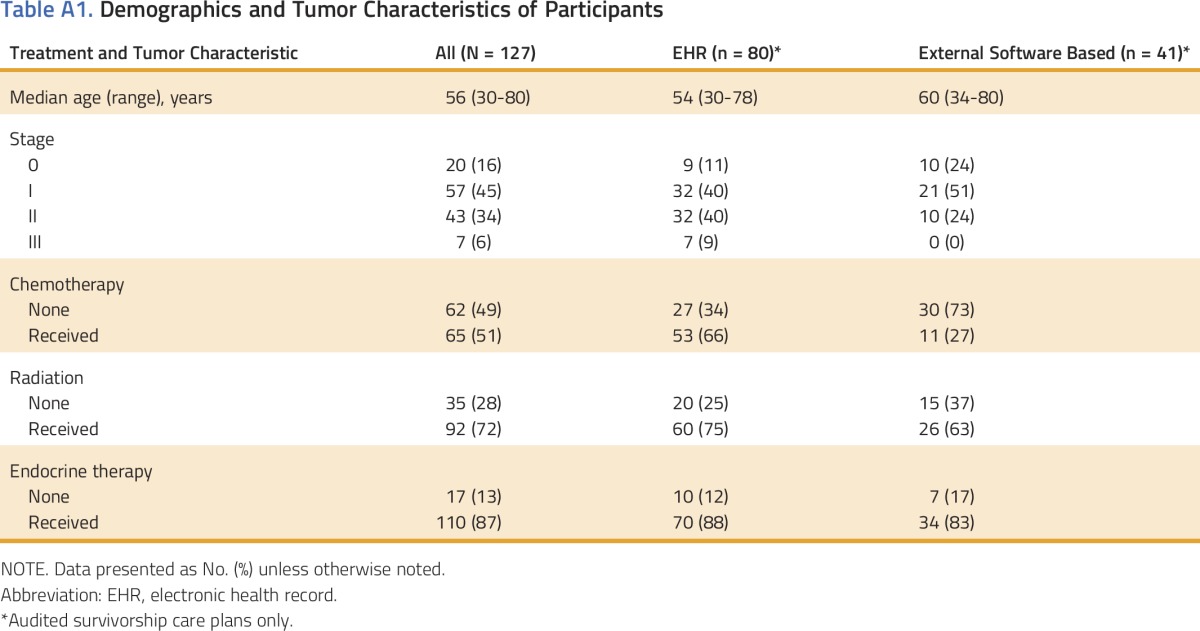

The two cancer centers enrolled 127 eligible women with breast cancer between November 2013 and December 2014 (85 at Cancer Center 1 and 42 at Cancer Center 2). Median age was 56 years (range, 30 to 80 years); 20 had stage 0 (16%), 57 had stage I (45%), 43 had stage II (34%), and 7 had stage III (6%) breast cancer. In general, patients had received several treatment modalities (51% received chemotherapy, 72% received radiation, and 87% were recommended endocrine therapy). Appendix Table A1 (online only) shows the breakdown for the whole population as well as by care plan method.

Care Plan and Visit Characteristics

Of the 127 participants, six came off study before completing a follow-up knowledge survey, and treatment summaries were either not prepared or not available for audit. Thus, 80 EHR-based and 41 external software–based treatment summaries (n = 121) were audited for this study.

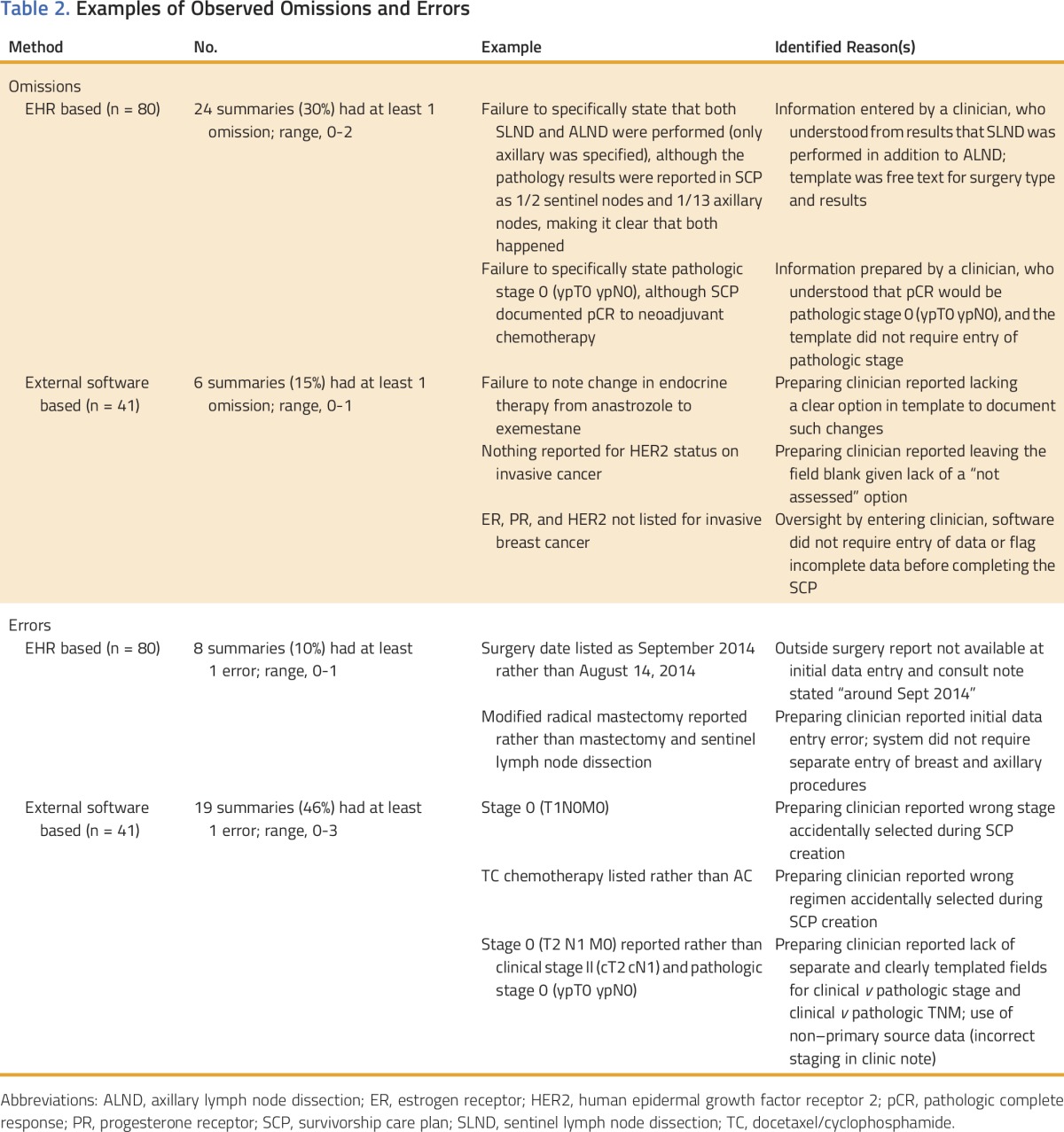

Omissions

Overall, 30 treatment summaries (25%) contained at least one omission. Table 2 lists rates and descriptions of observed omissions. The majority of omissions in EHR-based plans were failures to specifically state information. For example, not specifically reporting “pathologic stage 0” after neoadjuvant chemotherapy was counted as an omission, even though a pathologic complete response at surgery was noted in the treatment summary. Omissions in treatment summaries prepared via external software also occurred because of failure to specifically state information. However, apparent omissions in treatment summaries prepared via external software also resulted from poor match of software options with the clinical scenario. For instance, the software did not have an option to indicate that HER2 status was not assessed (clarifying text was sometimes present in the comments section).

Table 2.

Examples of Observed Omissions and Errors

Errors

Overall, 27 treatment summaries (22%) contained at least one error. Table 2 lists rates and descriptions of observed errors. The majority of errors in EHR-based treatment summaries were minor discrepancies in dates. Errors in external software–based plans included chemotherapy regimen, receptors, stage, and types of axillary surgery. External software–based plans were affected by poor fit of the templated software fields. For example, the individual preparing plans at Cancer Center 2 reported a lack of clearly templated fields for reporting clinical and pathologic stage and TNM separately. This resulted in confusing situations, where TNM and stage seemed discordant (eg, stage I and T2N0, because the c designation for clinical and the yp designation for postneoadjuvant were missing). Greater clarity would have been achieved if the software had allowed stage and TNM to be categorized as clinical versus pathologic (eg, clinical stage II [cT2 cN1] and pathologic stage I [ypT1c ypN0]). Given the lack of accompanying and clarifying c and yp designations, discordant situations were counted as errors (for instance, reporting stage I combined with reporting T2N0).
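As an arithmetic check, the proportions of summaries with at least one omission or error can be recomputed from the per-method counts given in the Abstract. The sketch below is illustrative only: the variable names and the `percent` helper are assumptions, and no data beyond the reported counts are used.

```python
# Counts as reported in the Abstract; nothing here is new data.
audited = {"EHR": 80, "software": 41}
with_omission = {"EHR": 24, "software": 6}
with_error = {"EHR": 8, "software": 19}


def percent(numerator: int, denominator: int) -> int:
    """Whole-number percentage, rounded to the nearest integer."""
    return round(100 * numerator / denominator)


for method, n in audited.items():
    print(
        f"{method}: omissions {percent(with_omission[method], n)}%, "
        f"errors {percent(with_error[method], n)}%"
    )

total = sum(audited.values())                                     # 121 summaries
overall_omission = percent(sum(with_omission.values()), total)    # 30 of 121
overall_error = percent(sum(with_error.values()), total)          # 27 of 121
print(f"overall: omissions {overall_omission}%, errors {overall_error}%")
```

Each summary is counted at most once per category, so these are proportions of summaries with at least one finding rather than per-element error rates.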

DISCUSSION

The accuracy and thoroughness of the diagnosis and treatment information (also known as the treatment summaries) being delivered with survivorship care plans are poorly understood. To the best of our knowledge, there is no standard auditing required of care plan documents before cancer survivor receipt. Therefore, as part of a randomized trial assessing care plan impact on survivor knowledge, we conducted a planned exploratory analysis of treatment summary errors and omissions. This analysis demonstrated that nearly one fourth of summaries contained at least one omission and one fifth contained at least one error. Most omissions seemed unlikely to be clinically meaningful; however, some were concerning. Failing to provide any receptors (ER, PR, or HER2) for an invasive breast cancer limits usefulness. Reporting docetaxel-cyclophosphamide rather than doxorubicin-cyclophosphamide as the chemotherapy regimen is also concerning because of the future risks associated with the anthracycline doxorubicin.

Nearly half of plans prepared using external software contained an error. This error rate should not be surprising: manual abstraction of data and re-entry are error prone. Cancer registries relying on manual data collection combined with rigorous audits still have error and incompleteness rates of 4.8% and 3.3%, respectively.16 Plans prepared by the external software-based method relied on data manually abstracted from the medical record and then manually transferred into an external software program. These data were only used for care plan creation; no systematic audit or quality checks were used. Some errors stemmed from manual abstraction from clinical notes rather than primary source documents (eg, pathology or operative reports). For example, dictated clinic notes were found to contain a staging error that was propagated into a care plan (eg, a 2.0-cm breast cancer was staged as pT2 rather than pT1c, leading to incorrectly reporting stage II rather than stage I). Other errors occurred because the software program’s predesignated options did not match clinical situations and did not readily allow free text. This forced the preparer to choose the least incorrect option or omit data. For instance, the software template did not allow selection of unknown for HER2 status, instead forcing a choice between positive, negative, or entering nothing. The proposed importation of cancer registry data into external software programs17 may decrease error rates due to unaudited manual data transfer (note, no registry data were used in the preparation of either center’s summaries). Use of cancer registry data does not solve issues related to inappropriate templating options and raises issues regarding use of registry data clinically, with attendant implications for cancer registry data accuracy. Cancer registry data may also not be available until many months after completing treatment, whereas CoC standards require provision within 6 months of completing treatment. Further work is likely needed to ensure that external software templates support information that preparers need to enter and end users require clinically.
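One way to picture a template field that fits the HER2 scenario described above is sketched below in Python. This is a hypothetical design, not a description of the software used at Cancer Center 2: the `ReceptorStatus` options, the `render_her2` helper, and the output wording are all assumptions made for illustration.

```python
from enum import Enum
from typing import Optional


class ReceptorStatus(Enum):
    """Hypothetical option list that includes explicit non-result values."""

    POSITIVE = "positive"
    NEGATIVE = "negative"
    UNKNOWN = "unknown"
    NOT_ASSESSED = "not assessed"


def render_her2(status: Optional[ReceptorStatus], comment: str = "") -> str:
    """Render the HER2 line of a treatment summary.

    None means the preparer never filled in the field; it is reported
    explicitly so that a blank is not mistaken for a result.
    """
    if status is None:
        return "HER2: NOT ENTERED (requires review)"
    line = f"HER2: {status.value}"
    if comment:
        # Free text stays available for scenarios the options do not cover.
        line += f" ({comment})"
    return line


print(render_her2(ReceptorStatus.NOT_ASSESSED, "per pathology report"))
print(render_her2(None))
```

Keeping "not assessed" and "unknown" distinct from an empty field lets an audit separate a true omission from a deliberate entry, and the optional comment preserves the free-text route.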

For EHR-based treatment summaries, the diagnosis and treatment data were used routinely in clinical notes before being used to autopopulate treatment summaries. It may be that such use results in an ongoing audit and correction of errors, but we currently have no means to assess this. Omissions were more common with EHR-based plans than plans prepared via external software: data for EHR-based plans were largely entered as free text rather than into templated fields, as the treating oncologist deemed clinically relevant. Adapting EHR data entry to include standardized templates or other prompts for required elements will likely reduce omissions (such templating is being implemented). However, we also noted in the external software–based plans that difficulties arose when the options could not adequately address unusual clinical scenarios. This suggests a free-text option needs to remain. For many apparent omissions, the information was present in the results or comments section of the care plan, although it was not always readily apparent or accessible to nononcologists. For instance, a pathologic complete response to neoadjuvant chemotherapy might not be understood to correspond to pathologic stage 0 (ypT0 ypN0). Additional work to automatically reformat information as appropriate for the intended end user (clinician v survivor) might also serve to reduce apparent omissions. Both methods (EHR, external software) would benefit from prompting on entry of discrepant data (eg, entry of stage I and T2N0) or emphasizing review of critical data.
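The suggested prompt on entry of discrepant data can be sketched as a simple consistency check between the reported stage and TNM. The Python example below is a sketch under stated assumptions: the stage grouping table is a deliberately simplified, M0-only approximation of anatomic staging (it ignores N1mi and stage subgroups, and treats T0 N0 as stage 0 as in the pathologic complete response example above), and the function names are hypothetical. A real implementation would need the full rules of the staging edition in use.

```python
import re
from typing import Optional, Tuple

# Accepts forms such as "T2N0", "cT2 cN1", or "ypT1c ypN0".
TNM_PATTERN = re.compile(
    r"(?:yp|c|p)?T(is|[0-4])[a-d]?\s*(?:yp|c|p)?N([0-3])", re.IGNORECASE
)

# Simplified, illustrative stage groups for M0 disease (assumption, see lead-in).
STAGE_GROUP = {
    ("is", 0): "0", ("0", 0): "0",
    ("0", 1): "II", ("1", 0): "I", ("1", 1): "II",
    ("2", 0): "II", ("2", 1): "II", ("3", 0): "II",
    ("3", 1): "III",
}


def parse_tnm(text: str) -> Optional[Tuple[str, int]]:
    """Extract the main T and N categories, ignoring c/p/yp prefixes."""
    match = TNM_PATTERN.search(text)
    if match is None:
        return None
    return match.group(1).lower(), int(match.group(2))


def expected_stage_group(t: str, n: int) -> Optional[str]:
    """Broad stage group implied by T and N under the simplified table."""
    if t == "is" and n > 0:
        return None  # combination not covered by the simplified table
    if t == "4" or n >= 2:
        return "III"
    return STAGE_GROUP.get((t, n))


def is_discordant(stage: str, tnm: str) -> bool:
    """True when the reported stage and TNM imply different stage groups."""
    parsed = parse_tnm(tnm)
    if parsed is None:
        return False  # nothing to compare against
    expected = expected_stage_group(*parsed)
    reported = stage.strip().upper().rstrip("ABC")  # "IIA" -> "II"
    return expected is not None and expected != reported


print(is_discordant("I", "T2N0"))         # the discordant example from the text
print(is_discordant("I", "ypT1c ypN0"))
print(is_discordant("II", "cT2 cN1"))
```

A check of this kind would only prompt the preparer to review the entry; as the stage I and T2N0 example shows, an apparent discordance may simply reflect missing c and yp designations rather than wrong data.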

Care-planning visits were not required for delivery of the care plan document. Visits may facilitate review for omissions and errors by patients. Our staff do anecdotally report that visits result in the identification of some errors and/or omissions within treatment summaries. The degree to which this occurred was not captured for this analysis. Furthermore, correcting errors in real time may be cumbersome and time consuming. We note that EHR-based summaries delivered as part of a survivorship care plan at a planning visit had no errors and only one omission (out of 21 summaries). However, these summaries underwent extensive review: treating physicians entered the data, and summaries were prepared and reviewed by the APP and treating physicians before delivery as well as being reviewed with a patient. Patients often cannot correctly identify chemotherapy drugs received, stage, or receptor status11,18 and may not be well-positioned to serve in this capacity. We would hesitate to rely on review by patients at care-planning visits as the sole means of correcting errors or omissions.

This analysis did not examine the impact of identified errors and omissions. Some omissions are misleading; for instance, failing to enter anything under chemotherapy could lead to the belief that chemotherapy was not administered, rather than a failure to enter data. This informed the rationale behind the 2014 ASCO guidelines16 stating that surgery, chemotherapy, and radiation should be explicitly reported as done or not done. Some plans may contain disclaimers about relying on the information provided. However, it seems unrealistic to expect nononcology users to independently verify data, especially when source documents may be difficult to find (eg, treatment occurred multiple years prior) or require specialized knowledge to interpret. Such disclaimers also raise the question: if we cannot rely on the data, what is the value of providing them?

The health care system struggles with error on a daily basis, and it should not be surprising that treatment summaries are also affected. In an era of required care plan provision, some thought should be given to maximizing quality. Defining the truly critical elements of treatment summaries,16 combined with ongoing efforts to refine creation and preparation to minimize the sources of errors and omissions, is likely to be helpful. Whether EHR based or external software program based, templates could flag critical elements as requiring double-checks. Care plan autopopulation with real-time data abstracted from high-quality cancer registries would likely address some of the concerns raised by our analysis. We believe that continued efforts to increase the autopopulation of treatment summary fields are warranted. Finally, individuals preparing plans should be trained to interpret and extract the data. Accuracy and thoroughness are necessary if survivorship care plans are to fulfill their intended role as tools facilitating communication and coordination.

ACKNOWLEDGMENT

Supported by the National Cancer Institute Cancer Center Support Grant No. P30 CA014520, the National Center for Advancing Translational Sciences Institutional Clinical and Translational Science Award No. UL1TR000427 (University of Wisconsin-Madison), and the Clinical and Translational Science Award program, through the National Center for Advancing Translational Sciences Grants No. UL1TR000427 and KL2TR000428 (A.J.T.). We thank the participating providers, members of the University of Wisconsin Breast Cancer Disease Oriented Team, and the Wisconsin Survivorship Research Program (WiSP).

Appendix

Table A1.

Demographics and Tumor Characteristics of Participants

AUTHOR CONTRIBUTIONS

Conception and design: Amye J. Tevaarwerk, William G. Hocking, Douglas A. Wiegmann, Mary E. Sesto

Administrative support: Jamie L. Zeal

Provision of study materials or patients: Amye J. Tevaarwerk, William G. Hocking, Kari B. Wisinski, Mark E. Burkard

Collection and assembly of data: Amye J. Tevaarwerk, Jamie L. Zeal, Mindy Gribble, Lori Seaborne, Kari B. Wisinski, Mark E. Burkard

Data analysis and interpretation: Amye J. Tevaarwerk, William G. Hocking, Kevin A. Buhr, Mary E. Sesto

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

Accuracy and Thoroughness of Treatment Summaries Provided as Part of Survivorship Care Plans Prepared by Two Cancer Centers

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/journal/jop/site/misc/ifc.xhtml.

Amye J. Tevaarwerk

Research Funding: Takeda Pharmaceuticals (Inst)

Other Relationship: Epic Systems (I)

William G. Hocking

No relationship to disclose

Jamie L. Zeal

No relationship to disclose

Mindy Gribble

No relationship to disclose

Lori Seaborne

No relationship to disclose

Kevin A. Buhr

No relationship to disclose

Kari B. Wisinski

Research Funding: Novartis (Inst), Daiichi Sankyo (Inst), Genentech (Inst), AstraZeneca (Inst), Eli Lilly (Inst)

Mark E. Burkard

Consulting or Advisory Role: Pointcare Genomics

Research Funding: Genentech, AbbVie, Novartis, Pfizer

Douglas A. Wiegmann

No relationship to disclose

Mary E. Sesto

No relationship to disclose

REFERENCES

- 1. Miller KD, Siegel RL, Lin CC, et al. Cancer treatment and survivorship statistics, 2016. CA Cancer J Clin. 2016;66:271–289. doi: 10.3322/caac.21349.

- 2. Mandelblatt JS, Lawrence WF, Cullen J, et al. Patterns of care in early-stage breast cancer survivors in the first year after cessation of active treatment. J Clin Oncol. 2006;24:77–84. doi: 10.1200/JCO.2005.02.2681.

- 3. Earle CC, Emanuel EJ. Patterns of care studies: Creating “an environment of watchful concern”. J Clin Oncol. 2003;21:4479–4480. doi: 10.1200/JCO.2003.09.979.

- 4. Institute of Medicine. Assessing and Improving Value in Cancer Care: Workshop Summary. Washington, DC: National Academies Press; 2009.

- 5. Hewitt ME, Greenfield S, Stovall E. From Cancer Patient to Cancer Survivor: Lost in Transition. Washington, DC: National Academy Press; 2006.

- 6. Yang W, Williams JH, Hogan PF, et al. Projected supply of and demand for oncologists and radiation oncologists through 2025: An aging, better-insured population will result in shortage. J Oncol Pract. 2014;10:39–45. doi: 10.1200/JOP.2013.001319.

- 7. Halpern MT, Viswanathan M, Evans TS, et al. Models of cancer survivorship care: Overview and summary of current evidence. J Oncol Pract. 2015;11:e19–e27. doi: 10.1200/JOP.2014.001403.

- 8. American College of Surgeons. Cancer Program Standards: Ensuring Patient-Centered Care. www.facs.org/~/media/files/quality%20programs/cancer/coc/2016%20coc%20standards%20manual_interactive%20pdf.ashx.

- 9. Palmer SC, Jacobs LA, DeMichele A, et al. Metrics to evaluate treatment summaries and survivorship care plans: A scorecard. Support Care Cancer. 2014;22:1475–1483. doi: 10.1007/s00520-013-2107-x.

- 10. Salz T, Oeffinger KC, McCabe MS, et al. Survivorship care plans in research and practice. CA Cancer J Clin. 2012;62:101–117. doi: 10.3322/caac.20142.

- 11. Tevaarwerk A, Buhr K, Wisinski K, et al. Randomized clinical trial assessing the impact of survivorship care plans on survivor knowledge. Presented at the San Antonio Breast Cancer Symposium, San Antonio, TX, December 9-13, 2014.

- 12. American College of Surgeons Commission on Cancer. Cancer Program Standards: Ensuring Patient-Centered Care. 2016.

- 13. Tevaarwerk AJ, Wisinski KB, Buhr KA, et al. Leveraging electronic health record systems to create and provide electronic cancer survivorship care plans: A pilot study. J Oncol Pract. 2014;10:e150–e159. doi: 10.1200/JOP.2013.001115.

- 14. Hausman J, Ganz PA, Sellers TP, et al. Journey forward: The new face of cancer survivorship care. J Oncol Pract. 2011;7(suppl 3):e50s–e56s. doi: 10.1200/JOP.2011.000306.

- 15. Hewitt M, Ganz P. Implementing Cancer Survivorship Care Planning: Workshop Summary. Washington, DC: National Academies Press; 2007.

- 16. Arts DG, De Keizer NF, Scheffer GJ. Defining and improving data quality in medical registries: A literature review, case study, and generic framework. J Am Med Inform Assoc. 2002;9:600–611. doi: 10.1197/jamia.M1087.

- 17. Journey Forward. Registry & EHR integration. www.journeyforward.org/care-plan-builder/exporting-registry-data.

- 18. Nissen MJ, Tsai ML, Blaes AH, et al. Breast and colorectal cancer survivors' knowledge about their diagnosis and treatment. J Cancer Surviv. 2012;6:20–32.