Abstract

Inappropriate training assessment might have high social costs and economic impacts, especially in high-risk categories such as pilots, air traffic controllers, and surgeons. One of the current limitations of standard training assessment procedures is the lack of information about the amount of cognitive resources required by the user for the correct execution of the proposed task. In fact, even if the task is accomplished with maximum performance, standard training assessment methods cannot gather and evaluate information about the cognitive resources still available for dealing with unexpected events or emergency conditions. Therefore, a metric based on brain activity (a neurometric) able to provide the Instructor with this kind of information would be very valuable. As a first step in this direction, the electroencephalogram (EEG) and the performance of 10 participants were collected over a training period of 3 weeks while they learned to execute a new task. Specific indexes were estimated from the behavioral and EEG signals to objectively assess the users' training progress. Furthermore, we proposed a neurometric based on a machine learning algorithm to quantify the user's training level within each session by considering the level of task execution and both the behavioral and cognitive stability between consecutive sessions. The results demonstrated that the proposed methodology and neurometric could quantify and track the users' progress, and provide the Instructor with information for a more objective evaluation and better tailoring of training programs.

Keywords: training assessment, human factor, brain activity, EEG, machine learning, human machine interaction

Introduction

More than 80 years ago, Spearman (1928) stated that psychological writings were “crammed” with allusions to human error in an “incidental manner,” but “they hardly arrived at considering such concept systematically and profoundly.” The past three decades have seen increasing interest in studying the Human Factor (HF) in working environments, especially its causes and possible prevention approaches. Until the 1990s, a major objective of the scientific community was to limit the human contribution to the conspicuously catastrophic breakdowns of high-hazard enterprises such as air, sea, and road transport, nuclear power generation, and chemical process plants. Accidents in these operative environments might cost many lives, create widespread environmental damage, and generate public and political concern. In this regard, the aviation domain, with its high safety standards, was of great interest. Aircraft accident investigations had revealed that 80% of accidents were attributable to human error, and further investigation indicated that a significant portion of human error was attributable to HF failures, primarily associated with inadequate communication and coordination within the crew (Taggart, 1994). Beyond technological and equipment progress, specific HF training methods (e.g., Crew Resource Management, CRM) have led to reductions in aviation accidents. In fact, since the end of the 1990s, CRM training has been required for all military and commercial US aviation crews and air couriers (Helmreich, 1997). Some aviation HF programs have been adapted to the healthcare field with the aim of improving teamwork and reducing the probability of errors.
Numerous reports, scientific meetings, and publications have continued to seek solutions to improve safety, and many of them have identified personnel training as a significant strategy for achieving this goal (Barach and Small, 2000; Leonard and Tarrant, 2001; Barach and Weingart, 2004; Hamman, 2004; Leonard et al., 2004). Training refers to a systematic approach to learning and development aimed at improving individual, team, and organizational effectiveness (Goldstein and Ford, 2002). The importance of, and interest in, training in operative environments (e.g., aviation, hospitals, public transport) is reflected by the regular publication of scientific reviews in the Annual Review of Psychology since 1971 (Campbell, 1971; Goldstein, 1980; Wexley, 1984; Tannenbaum and Yukl, 1992; Salas et al., 2001; Aguinis and Kraiger, 2009). Training can result not only in the acquisition of new skills (Hill and Lent, 2006; Satterfield and Hughes, 2007) but also in improved declarative knowledge and enhanced strategic knowledge, defined as knowing when to apply a specific knowledge or skill, in particular during unexpected events (Kozlowski et al., 2001; Borghini et al., 2015). Furthermore, regardless of the time elapsed since the last training session, there is also a need to assess whether the operator is still able to work at a high performance level and, hence, with a proper level of safety. For this reason, another issue is the need to objectively monitor and assess operators' performance (Leape and Fromson, 2006), especially in terms of cognitive resources and brain activations (Di Flumeri et al., 2015). For example, during training courses, a series of measures of the operator's performance could be obtained by using simulators (Cronin et al., 2006).
These measures could be used as part of an ongoing certification process to ensure that operators maintain their knowledge and skills, to identify areas of weakness, and to react promptly in order to avoid possible risks (Astolfi et al., 2012; Broach, 2013). Nevertheless, although the performance results may be the same, the cognitive demand for the same operator might not be. In other words, after a certain time the operator might still be able to execute the same task at the same performance level, but with a different amount of cognitive resources. Likewise, different operators could achieve the same results while involving different amounts of cognitive resources.

One of the current limitations of standard training assessment procedures is indeed the lack of objective information about the amount of cognitive resources required by trainees during the operative activity. The difference between the available cognitive resources and those involved in the task execution is called Cognitive Spare Capacity (Borghini et al., 2014, 2015; Vecchiato et al., 2016). The higher the cognitive spare capacity during normal working activity (i.e., the operator is involving a low amount of cognitive resources), the greater the operator's ability to perform secondary tasks or to react to unexpected or emergency events.

Therefore, the proposed work aimed to use neurophysiological signals (i.e., EEG) to provide additional objective information about a trainee's progress throughout the training program, on the basis of the current brain activations with respect to previous training sessions. This approach rests on the experimental hypothesis that, during a training period, the execution of the task becomes more automatic and requires fewer cognitive resources, so that a larger amount of cognitive resources becomes available. In other words, when users are not trained, their pattern of brain activations should change every time they execute the task. On the contrary, once users are well trained, task performance reaches a plateau, and the brain activation patterns become stable as well. Therefore, the idea presented in this work was to use a machine learning approach to track changes in the user's brain features over 3 weeks of training. The expected result was a plateau in classifier performance once the user's brain patterns become stable across consecutive training sessions, that is, when the user could be defined as “cognitively” trained.

In the recognition of users' mental states, machine learning techniques are able to extract, from large amounts of neurophysiological data, the most significant characteristics (brain features) closely related to the examined mental state. A diverse array of machine learning algorithms has been developed to cover the wide variety of applications and issues exhibited across different machine learning problems (Murphy et al., 2012). For example, machine learning algorithms have been used in Brain Computer Interface (BCI) studies (Parra et al., 2003; Aricò et al., 2014; Schettini et al., 2014; Wu et al., 2014; Marathe et al., 2016), for the evaluation of mental states such as vigilance (Shi and Lu, 2013), arousal (Wu et al., 2014), alertness (Lin et al., 2006), and drowsiness (Lin et al., 2005), for the evaluation of EEG temporal features such as the error-related negativity (ERN; Parra et al., 2003), for emotions (Li and Lu, 2009; Lin et al., 2010; Wang et al., 2014), and for cognitive control behavior assessment (Borghini et al., 2017). Machine learning algorithms vary greatly. Attempts to characterize them have led to blends of statistical and computational theories in which the goal is to characterize simultaneously the sample complexity (how much data are required to calibrate the algorithm accurately) and the computational complexity (how much computational effort is required; see Decatur et al., 2000; Chandrasekaran and Jordan, 2013; Shalev-Shwartz and Zhang, 2013). In this regard, we used the automatic stop stepwise linear discriminant analysis (asSWLDA, Aricò et al., 2016a) algorithm, since it addresses important issues in the application of machine learning algorithms across different days (Aricò et al., 2016b).
In particular, the asSWLDA avoids both under- and over-fitting in the feature selection phase, and it does not require recalibration for up to a month, since it has shown high performance stability and reliability over time for mental workload evaluation.

Therefore, the objectives of our study were (i) to investigate the advantages of neurophysiological measures as support for an objective training assessment with respect to the standard performance evaluation, and (ii) to provide a possible neurometric for tracking and quantifying the user's training level within each session and across sessions by using a machine learning approach.

Materials and methods

Characterization of learning processes

Experimental evidence suggests that motor memory formation occurs in two subsequent phases (Karni et al., 1994; Armitage, 1995; Dudai, 2004; Luft and Buitrago, 2005). The first is the initial encoding of the experience during training, which occurs within the first minutes to hours after training and is characterized by rapid improvement in performance. The second phase is memory consolidation, which involves a series of systematic changes at the molecular level that occur after training and require a longer time. The literature dealing with the effect of practice on the functional anatomy of task performance is extensive and complex, comprising a wide range of papers from disparate research perspectives (Chein and Schneider, 2005; Doyon and Benali, 2005; Parsons et al., 2005; Erickson et al., 2007; Dux et al., 2009; Wiestler and Diedrichsen, 2013; Parasuraman and McKinley, 2014; Sampaio-Baptista et al., 2014; Borghini et al., 2016). Across these studies, three main patterns of practice-related activation change can be distinguished. Practice may result in an increase or a decrease in activation in the brain areas involved in task performance, or it may produce a functional reorganization of brain activity, which is a combined pattern of activation increases and decreases across a number of brain areas (Kelly and Garavan, 2005). Activations seen earlier in practice involve generic attentional and control areas, especially the Prefrontal Cortex (PFC), the Anterior Cingulate Cortex (ACC), and the Posterior Parietal Cortex (PPC). It has been observed that a change in the location of activations is associated with a shift in the cognitive processes underlying task performance (Poldrack, 2000; Glabus et al., 2003). In other words, with practice, task-related processes fall away and there is a shift from controlled processing (mainly prefrontal and frontal brain areas) to automatic processing (mainly parietal brain areas).

It has been demonstrated that the most important cognitive processes involved in learning are working memory, attention, procedural memory, information processing, adaptive control, and long-term memory access (Shiffrin and Schneider, 1977; Logan, 1988; Shadmehr and Holcomb, 1997; Petersen et al., 1998; Bernstein et al., 2002; Ridderinkhof et al., 2004; Kelly and Garavan, 2005; Gluck and Pew, 2006; Estes, 2014). As noted previously, the frontal and parietal brain regions appear to form a robust network and to be the most cooperative regions during learning. In fact, frontal regions are essential for organizing online corrections in response to unexpected events, and they become activated in novel situations (Mutha et al., 2011). Parietal regions, instead, may support the learning and/or storage of new visuo-motor associations, leading to error reduction through adaptation (Diedrichsen et al., 2005). Moreover, once learning has occurred, parietal regions may also store the knowledge required to overcome an eventual mismatch with respect to the plan (the so-called “target jump”; Desmurget et al., 1999; Pisella et al., 2000; Gréa et al., 2002). For these reasons, the brain features selected to define a neurophysiological metric able to provide information from a cognitive point of view during a training program were the frontal and parietal theta, and the frontal and parietal alpha EEG rhythms.

In fact, one of the most prominent neurophysiological events linked to increased information processing, working memory, decision making, and sustained attention (Botvinick et al., 2004; Mitchell et al., 2008) is the increase (i.e., synchronization) of theta activity over the prefrontal and frontal brain areas (Berka et al., 2007; Berka and Johnson, 2011; Galán and Beal, 2012; Jaušovec and Jaušovec, 2012; Borghini et al., 2013, 2016; Mackie et al., 2013; Cartocci et al., 2015). Additionally, frontal theta synchronization has been shown to correlate with memory load (Jensen and Tesche, 2002), task difficulty (Gevins et al., 1997; Aricò et al., 2015), error processing (Luu et al., 2004), and recognition of previously viewed stimuli (Arrighi et al., 2016).

Similarly, studies of spatial memory have reported increased parietal theta activity during learning (Kahana et al., 1999; Caplan et al., 2001, 2003; Sauseng et al., 2005; Jacobs et al., 2006; Gruzelier, 2009). In fact, several lines of evidence suggest that parietal theta oscillations play an important role in memory formation, and are thought to play a critical role in the induction of long-term plasticity, associated with memory consolidation (Caplan et al., 2003; Buzsáki, 2005; Anderson et al., 2010; Benchenane et al., 2010; Kropotov, 2010; Nieuwenhuis and Takashima, 2011; Chauvette, 2013).

Concerning the alpha EEG band, numerous studies have suggested that alpha is associated with the cognitive functions of attention (Klimesch, 2012), perception (Di Flumeri et al., 2016), long-term memory (LTM; Jensen et al., 2002; Klimesch, 2012; Toppi et al., 2014), and working memory (WM; Garavan et al., 2000; Jensen et al., 2002; Sauseng et al., 2005; Gruzelier, 2009; Borghini et al., 2016). Fairclough et al. (2005) found that the sustained response to a demanding task produced alpha suppression, and more recently Jaušovec and Jaušovec (2012) investigated the influence of WM training on intelligence and brain activity: they found that the influence of WM training on patterns of neuroelectric brain activity was most pronounced in the theta and alpha bands (theta band synchronization was accompanied by alpha desynchronization), and concluded that WM training increased individuals' performance on tests of intelligence. Furthermore, Klimesch (2012) proposed that alpha activity plays a dual role: inhibition of task-irrelevant networks and timing within task-relevant networks. Alpha activity thus plays an important role in attention, by supporting processes within the attentional focus and blocking processes outside it. The two fundamental functions of attention as a filter (suppression and selection) enable selective access to the Knowledge System (KS) and operate according to the proposed inhibition-timing function of alpha-band activity (Klimesch et al., 2011). Thus, periods of prolonged access should be associated with event-related synchronization (ERS), reflecting increased alpha-band activity (Benedek et al., 2011; Zanto et al., 2011; Jauk et al., 2012).

Experimental group

The experiment was conducted following the principles outlined in the Declaration of Helsinki of 1975, as revised in 2000. It received a favorable opinion from the Ethical Committee of the National University of Singapore (NUS), Centre for Life Sciences (NUS-IRB Ref. No: 13-132, NUS-IRB Approval No: NUS 1864). The study involved only healthy subjects, recruited on a voluntary basis. Informed consent was obtained in writing from each subject after the study had been explained. Participants were carefully selected in order to ensure the homogeneity of the experimental sample. Ten healthy volunteers (students of the National University of Singapore, NUS) gave their informed consent to take part in the experiment, and each of them was paid SG$200 for attending the whole experiment.

NASA Multi Attribute Task Battery (MATB)

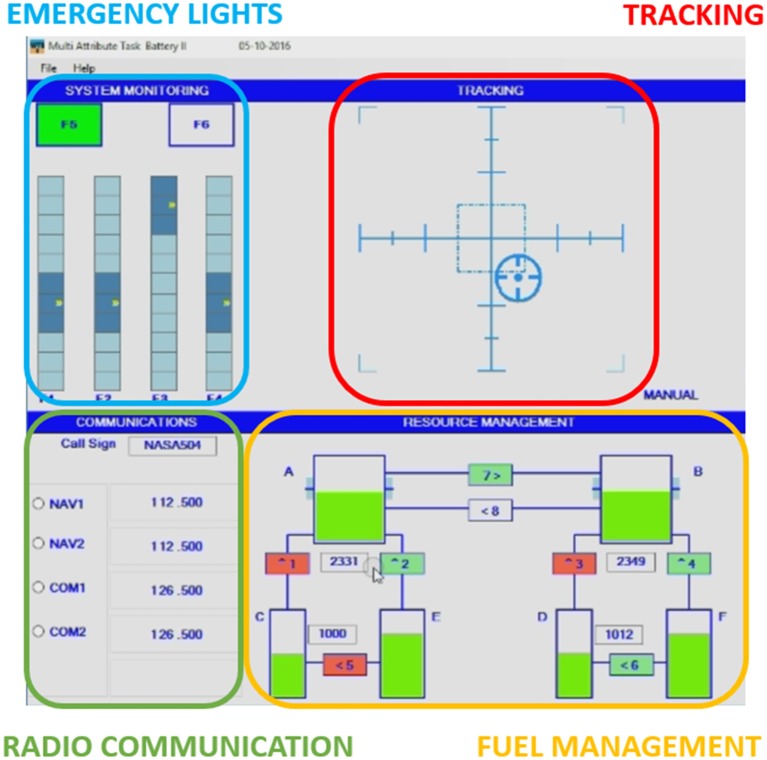

The NASA Multi Attribute Task Battery (MATB, Comstock and Arnegard, 1992) is a computer-based task designed by NASA to evaluate operator performance and workload during the execution of multiple tasks (Figure 1). It can be freely downloaded from the NASA website at the following address: http://matb.larc.nasa.gov/. The MATB is a platform for the evaluation of cognitive operational capability, since it provides different tasks that have to be attended by the subject in parallel, and the difficulty of each task can be modulated. With these capabilities, it is possible to simulate many modern operational activities (e.g., piloting an airplane, medical surgery) and therefore to investigate different cognitive phenomena in operative environments that require the simultaneous execution of actions. The MATB consists of four subtasks: tracking (TRCK), auditory monitoring (COMM), resource management (RMAN), and response to event onsets (SYSM).

Figure 1.

NASA Multi Attribute Task Battery (MATB) interface. Emergency lights task (SYSM) in the blue rectangle; Tracking task (TRCK) in the red rectangle; Radio Communication (COMM) in the green rectangle, and Fuel managing task (RMAN) in the orange rectangle (A–F are the fuel tanks).

The system monitoring task (SYSM) requires monitoring the gauges and warning lights: the subject has to respond to the absence of the green light and to the presence of the red light, and to monitor the four moving pointer dials for deviations from their midpoint. Manual control is simulated by the tracking task (TRCK): the subject has to keep the cursor inside a square target by moving the joystick. This task can be automated to simulate reduced manual demands; for example, if the subject became overloaded, the system would take control. Subjects are also required to respond to a communication task (COMM), which presents pre-recorded auditory messages at specific time intervals during the simulation. Not all of the messages are relevant to the operator: the goal of the COMM task is to determine which messages are relevant and to respond by selecting the appropriate radio and frequency in the communications task window. Fuel management is simulated by the resource management task (RMAN). The goal is to maintain the fuel level of the main tanks at 2,500 lbs by turning any of the eight pumps on or off; pump failures are indicated by the pump turning red. Four performance indexes have been defined for the MATB, one for each sub-task. In particular, the index for the TRCK task has been defined as the complement of the ratio between the cursor's distance from the center of the screen and the (fixed) maximum value of this distance. The indexes of the COMM and SYSM tasks have been defined as a linear combination of the accuracy, in terms of correct answers (e.g., correct radio or frequency selected), and the complement of the ratio between the subject's reaction time and the maximum time allowed for answering; the results have then been multiplied by 100 to obtain a percentage.
Finally, the index for the RMAN task has been defined as the mean value of the fuel levels in the main tanks, multiplied by 100. The global Performance Index has been calculated as the average of the four previous indexes. Additionally, two more indexes have been used in the analysis: the Mean Performance (i.e., the mean performance value between pairs of consecutive sessions) and the Performance Stability (i.e., the performance difference between pairs of consecutive sessions).
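The index definitions above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the equal 0.5/0.5 weighting of accuracy and speed, averaging over events or samples, expressing main-tank fuel levels as fractions in [0, 1], scaling the TRCK index to a percentage, and taking Performance Stability as an absolute difference are all assumptions, and the function names are hypothetical.

```python
import numpy as np

def trck_index(distances, d_max):
    """TRCK: complement of (cursor distance / fixed maximum distance), averaged, in percent."""
    return 100.0 * (1.0 - float(np.mean(np.asarray(distances, float) / d_max)))

def response_index(correct, rts, rt_max, w_acc=0.5):
    """COMM/SYSM: linear combination of accuracy and speed (1 - RT/RT_max), in percent.
    The 0.5/0.5 weighting is an assumption, not stated in the text."""
    accuracy = float(np.mean(correct))
    speed = float(np.mean(1.0 - np.asarray(rts, float) / rt_max))
    return 100.0 * (w_acc * accuracy + (1.0 - w_acc) * speed)

def rman_index(fuel_fractions):
    """RMAN: mean main-tank fuel level, assumed already expressed as a fraction in [0, 1]."""
    return 100.0 * float(np.mean(fuel_fractions))

def performance_index(trck, comm, sysm, rman):
    """Global Performance Index: plain average of the four sub-task indexes."""
    return (trck + comm + sysm + rman) / 4.0

def consecutive_session_metrics(p_prev, p_next):
    """Mean Performance and Performance Stability for a pair of consecutive sessions."""
    mean_performance = (p_prev + p_next) / 2.0
    performance_stability = abs(p_next - p_prev)  # absolute value is an assumption
    return mean_performance, performance_stability
```

For example, `consecutive_session_metrics(70.0, 80.0)` returns `(75.0, 10.0)`; a smaller second value means the performance changed less between the two sessions.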

Experimental protocol

The subjects were asked to practice and learn to execute the MATB correctly over three consecutive weeks (WEEK_1, WEEK_2, and WEEK_3). In total, they took part in 11 training sessions, each lasting 30 min. Each training session consisted of executing the MATB under two difficulty levels (EASY and HARD), which differed in the number and rate of events and in the time allowed to react to them. In the EASY condition, the subject had to attend only the tracking task, while in the HARD condition all the sub-tasks were running with different timing and numbers of events. For example, the EASY condition time-outs were 30 s for the radio communications, 10 s for the light scales, and 15 s for the emergency lights, with pump rates of 1,000 lbs/min and 500 lbs/min for the auxiliary and main tanks, respectively, and a total of 3 radio calls. The HARD condition, instead, was characterized by time-outs of 20 s for the COMM task and 5 s for the SYSM task, pump rates of 800 lbs/min and 600 lbs/min for the auxiliary and main tanks, respectively, and a total of 7 radio calls. The subjects had to execute each condition twice during each training session. The two repetitions of the same difficulty level differed only in the order of events, since the types and numbers of events were kept the same. For example, if the first event of the hard1 condition was an emergency light, the first event of the hard2 condition was a pump failure. Also, the temporal order of the conditions was randomized to avoid expectation and habituation effects.

Signals recording and processing

The EEG was recorded with a digital monitoring system (ANT Waveguard system) at a sampling frequency of 256 Hz. All 64 EEG electrodes were referenced to both earlobes and grounded to the AFz channel, and their impedances were kept below 10 kΩ. The EEG signal was first band-pass filtered with a fifth-order Butterworth filter (high-pass cut-off frequency: 1 Hz; low-pass cut-off frequency: 30 Hz), and then segmented into epochs of 2 s (epoch length), shifted by 0.125 s (shift). Independent Component Analysis (ICA; Touretzky et al., 1996; Lee et al., 1999) was performed to remove eye blink and eye saccade artifacts, whilst specific procedures of the EEGLAB toolbox (Delorme and Makeig, 2004) were used for other sources of artifacts. In particular, three criteria were applied to recognize artifacts. Threshold criterion: if the EEG signal amplitude exceeded ±100 μV, the corresponding epoch was marked as an artifact. Trend criterion: each EEG epoch was fitted with a linear trend, and if the slope of this trend was higher than 3 μV/s, the epoch was marked as an artifact. Sample-to-sample difference criterion: if the amplitude difference between consecutive EEG samples was higher than 25 μV, an abrupt (non-physiological) variation had occurred and the epoch was marked as an artifact. Finally, all EEG epochs marked as artifacts were rejected from the EEG dataset, in order to obtain an artifact-free EEG signal from which to estimate brain variations over the training period. All the above thresholds were chosen following the guidelines reported by Delorme and Makeig (2004).
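The filtering, epoching, and three rejection criteria described above can be sketched with NumPy and SciPy. This is a minimal sketch, not the EEGLAB procedures actually used: zero-phase filtering with `sosfiltfilt`, a least-squares linear fit for the trend criterion, and flagging an epoch when any channel violates a criterion are assumptions, and the ICA step is omitted. Note that SciPy's `butter` with `order=5` and `btype="bandpass"` produces a 10th-order band-pass filter, so "fifth-order" may refer to either convention.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 256  # sampling frequency (Hz), as reported in the text

def bandpass(eeg, fs=FS, low=1.0, high=30.0, order=5):
    """Butterworth band-pass along the last axis (zero-phase filtering is an assumption)."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)

def make_epochs(eeg, fs=FS, length_s=2.0, shift_s=0.125):
    """Slice (channels, samples) into overlapping epochs: (n_epochs, channels, samples)."""
    win, step = int(length_s * fs), int(shift_s * fs)
    starts = range(0, eeg.shape[-1] - win + 1, step)
    return np.stack([eeg[:, s:s + win] for s in starts])

def artifact_flags(epochs, fs=FS, amp_uv=100.0, slope_uv_s=3.0, jump_uv=25.0):
    """True for every epoch that violates at least one of the three criteria."""
    # Threshold criterion: any sample beyond +/- amp_uv on any channel
    too_large = np.any(np.abs(epochs) > amp_uv, axis=(1, 2))
    # Trend criterion: least-squares slope (uV/s) of each channel within the epoch
    t = np.arange(epochs.shape[-1]) / fs
    tc = t - t.mean()
    slopes = (epochs * tc).sum(axis=-1) / (tc ** 2).sum()
    too_steep = np.any(np.abs(slopes) > slope_uv_s, axis=1)
    # Sample-to-sample criterion: abrupt, non-physiological jumps
    jumps = np.any(np.abs(np.diff(epochs, axis=-1)) > jump_uv, axis=(1, 2))
    return too_large | too_steep | jumps
```

With 2 s epochs shifted by 0.125 s, a 10 s recording yields (2560 - 512) / 32 + 1 = 65 epochs, and a single spike falls into 16 overlapping epochs, all of which would be rejected; this overlap is worth keeping in mind when interpreting rejection rates.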
From the artifact-free EEG dataset, the Power Spectral Density (PSD) was calculated for each EEG epoch using a Hanning window of the same length as the epoch (2 s, i.e., a frequency resolution of 0.5 Hz). The Hanning window smooths the contribution of the signal close to the extremities of the segment (epoch), improving the accuracy of the PSD estimation (Harris, 1978). Then, the EEG frequency bands were defined according to the Individual Alpha Frequency (IAF) estimated for each subject (Klimesch, 1999). Since the alpha peak is most prominent during rest, the subjects were asked to keep their eyes closed for one minute before starting the experiment, and this condition was used to estimate each subject's IAF.
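A minimal sketch of the Hanning-windowed PSD and of the IAF-anchored band limits is given below. The one-sided periodogram scaling, the 7-13 Hz search range for the alpha peak, and averaging over channels before picking the peak are assumptions not stated in the text, and the function names are hypothetical.

```python
import numpy as np

FS = 256  # sampling frequency (Hz)

def epoch_psd(epoch, fs=FS):
    """One-sided PSD of a (channels, samples) epoch using a Hanning window."""
    n = epoch.shape[-1]
    w = np.hanning(n)
    spec = np.fft.rfft(epoch * w, axis=-1)
    psd = np.abs(spec) ** 2 / (fs * np.sum(w ** 2))
    psd[..., 1:-1] *= 2.0  # fold negative frequencies (n even: last bin is Nyquist)
    return np.fft.rfftfreq(n, d=1.0 / fs), psd

def individual_alpha_frequency(freqs, psd, lo=7.0, hi=13.0):
    """IAF: frequency of the maximum of the channel-averaged eyes-closed PSD in [lo, hi] Hz."""
    mean_psd = psd.mean(axis=0) if psd.ndim > 1 else psd
    in_band = (freqs >= lo) & (freqs <= hi)
    return float(freqs[in_band][np.argmax(mean_psd[in_band])])

def band_limits(iaf):
    """Theta and alpha bands anchored to the IAF, as defined in the text."""
    return {"theta": (iaf - 6.0, iaf - 2.0), "alpha": (iaf - 2.0, iaf + 2.0)}
```

In practice, the PSDs of all eyes-closed epochs would be averaged before calling `individual_alpha_frequency`; with 2 s epochs at 256 Hz, `epoch_psd` returns 257 bins spaced 0.5 Hz apart.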

Finally, a spectral feature matrix (EEG channels × frequency bins) was obtained for the frequency bands and EEG channels most correlated with learning processes and not affected by band transition effects (Klimesch, 1999). In particular, the theta (IAF-6 to IAF-2 Hz) and alpha (IAF-2 to IAF+2 Hz) bands were considered over the frontal (AF7, AF3, AF8, AF4, F7, F5, F3, F1, Fz, F2, F4, F6, and F8) and parietal (P1, P3, P5, P7, Pz, P2, P4, P6, and P8) EEG channels. In the proposed study, the spectral feature domain within which the asSWLDA had to select the most significant characteristics related to learning processes was:

| (1) |
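The construction of this channel-by-bin feature matrix can be sketched as follows, using the channel lists given above. Treating the band edges as inclusive and indexing channels by name are assumptions, and `spectral_features` is a hypothetical helper. Under these assumptions, with 0.5 Hz resolution and an IAF that falls exactly on a bin, the domain has 22 channels × 17 bins (IAF-6 to IAF+2 Hz); this count is only an illustration of the sketch, not a restatement of the equation above.

```python
import numpy as np

# Channel lists as given in the text
FRONTAL = ["AF7", "AF3", "AF8", "AF4", "F7", "F5", "F3", "F1", "Fz",
           "F2", "F4", "F6", "F8"]
PARIETAL = ["P1", "P3", "P5", "P7", "Pz", "P2", "P4", "P6", "P8"]

def spectral_features(psd, freqs, ch_names, iaf):
    """Return the (channels x frequency bins) matrix for the frontal/parietal channels
    and the bins between IAF-6 and IAF+2 Hz (inclusive edges are an assumption)."""
    rows = [ch_names.index(ch) for ch in FRONTAL + PARIETAL]
    cols = np.flatnonzero((freqs >= iaf - 6.0) & (freqs <= iaf + 2.0))
    return psd[np.ix_(rows, cols)]
```

Each epoch's matrix would then be flattened (e.g., with `.ravel()`) into one feature vector, which is the form a stepwise selection algorithm would consume.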

Machine learning analysis

As stated previously, the idea presented in this work was to use a machine learning approach to track changes in the user's brain features along the training program. The expected result was a plateau of the classifier performance once the user's brain features (e.g., frontal and parietal theta and alpha EEG activations) became stable across consecutive training sessions, that is, when the user might be considered “cognitively” trained. To this end, the machine learning model should not suffer from performance degradation over time; in other words, it should be generic enough to follow the evolution of brain processes across a period of motor-cognitive training. For this reason, we employed the automatic-stop StepWise Linear Discriminant Analysis (asSWLDA, Aricò et al., 2016a,b), a modified version of the SWLDA algorithm. With respect to the classical implementation of the SWLDA, the asSWLDA automatically selects the right number of features to include in the classification model while mitigating both under- and over-fitting. In particular, the asSWLDA starts by creating an initial model of the discriminant function, to which the most statistically significant feature (within the spectral domain described previously) is added for predicting the target labels (pval_ij < α_ENTER), where pval_ij is the p-value of the i-th feature at the j-th iteration (here, the first iteration). Then, at every new iteration, a new term is added to the model if pval_ij < α_ENTER. If no more features satisfy this condition, a backward elimination step is performed to remove the least statistically significant feature (if pval_ij > α_REMOVE). The standard implementation of the SWLDA algorithm uses α_ENTER = 0.05 and α_REMOVE = 0.1, and imposes no constraint on the Iteration_MAX parameter (a predefined maximum number of iterations).
In other words, the feature selection continues until no more features satisfy the entry (α_ENTER) and removal (α_REMOVE) conditions (Draper, 1998). However, the value of the Iteration_MAX parameter can affect the performance of the classifier (underfitting or overfitting), and this could be an important issue for the application of machine learning algorithms across different days (i.e., different training sessions). The optimal solution to these problems would be a criterion able to automatically stop the algorithm when the best number of features, #Features_OPTIMUM, has been added to the model, such that #Features_UNDERFITTING < #Features_OPTIMUM < #Features_OVERFITTING. The more features are added to the model (i.e., as the number of iterations increases), the more the p-value of the model (pModel) decreases, tending to zero with a decreasing exponential shape (convergence of the model). Therefore, the asSWLDA defines the model by finding the best trade-off between the number of features and the convergence of the model, that is, it automatically stops the algorithm at the iteration corresponding to the minimum distance of the Conv(#iter) function from the origin (the [0,0] point):

| (2) |

In other words, we sought the iteration at which the following condition was satisfied (see Aricò et al., 2016a for further details):

| (3) |
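The selection and auto-stop logic described above can be illustrated with the following sketch. It is not the reference asSWLDA implementation of Aricò et al. (2016a): here the discriminant is a least-squares regression on 0/1 labels (as in classical SWLDA), p-values come from partial F-tests, a backward step is tried only when no feature can enter (as described in the text), and both the iteration index and pModel are normalized by their maxima before computing the distance from the origin, which is our assumption about how Conv(#iter) is scaled. All function names are hypothetical.

```python
import numpy as np
from scipy import stats

def _fit(X, y):
    """Ordinary least squares with intercept; returns (coefficients, residual SS)."""
    A = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    return beta, float(np.sum((y - A @ beta) ** 2))

def _partial_p(X, y, base, j):
    """p-value of feature j given the features in `base` (partial F-test)."""
    _, rss0 = _fit(X[:, base], y)
    _, rss1 = _fit(X[:, base + [j]], y)
    dof = len(y) - len(base) - 2  # intercept + base features + feature j
    return stats.f.sf((rss0 - rss1) / (rss1 / dof), 1, dof)

def _model_p(X, y, cols):
    """p-value of the overall F-test of the current model (pModel)."""
    _, rss = _fit(X[:, cols], y)
    tss = float(np.sum((y - y.mean()) ** 2))
    k, dof = len(cols), len(y) - len(cols) - 1
    return stats.f.sf(((tss - rss) / k) / (rss / dof), k, dof)

def as_swlda(X, y, a_enter=0.05, a_remove=0.1, max_iter=100):
    """Stepwise selection, then auto-stop at the iteration closest to the origin."""
    inc, history = [], []
    for _ in range(max_iter):
        cand = [j for j in range(X.shape[1]) if j not in inc]
        p_in = [_partial_p(X, y, inc, j) for j in cand]
        if p_in and min(p_in) < a_enter:
            inc.append(cand[int(np.argmin(p_in))])          # forward step
        elif inc:
            p_out = [_partial_p(X, y, [c for c in inc if c != j], j) for j in inc]
            if max(p_out) <= a_remove:
                break                                        # nothing enters or leaves
            inc.pop(int(np.argmax(p_out)))                   # backward step
        else:
            break
        if inc:
            history.append((list(inc), _model_p(X, y, inc)))
    if not history:
        return [], None
    p_model = np.array([p for _, p in history])
    it_norm = np.arange(1, len(history) + 1) / len(history)
    p_norm = p_model / max(p_model.max(), np.finfo(float).tiny)
    stop = int(np.argmin(np.hypot(it_norm, p_norm)))        # minimum distance from [0, 0]
    selected = history[stop][0]
    beta, _ = _fit(X[:, selected], y)                        # discriminant weights
    return selected, beta
```

On synthetic data with two informative features among twenty, pModel drops by many orders of magnitude after the second informative feature enters, so the normalized curve reaches its point nearest the origin there, and later noise features that pass α_ENTER are discarded by the auto-stop.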

A two-class asSWLDA model was used to select, within the calibration EEG dataset, the most relevant EEG spectral features for discriminating the task conditions (i.e., Easy vs. Hard). A 10-fold cross-validation was performed by splitting the entire dataset (i.e., Easy 1 merged with Easy 2, and Hard 1 merged with Hard 2) into 10 parts. These parts were shuffled, and then 9 parts were used to calibrate the asSWLDA and the remaining part to test it, exploring all possible combinations (10 in total). For each testing dataset, the Linear Discriminant Function was calculated, and the Area Under the Curve (AUC) of the Receiver Operating Characteristic (ROC, Bamber, 1975) was estimated to evaluate the performance of the classifier. We chose this indicator because of its statistical property: the AUC of a classifier is equivalent to the probability that the classifier will rank a randomly chosen positive instance higher than a randomly chosen negative instance, which is equivalent to the Wilcoxon rank test (Fawcett, 2006). Two kinds of analyses were performed for each subject: Intra and Inter. In the Intra analysis, the calibration and testing datasets were taken within the same session (e.g., the first 90% of the Easy and Hard data of Session X to calibrate the classifier, and the last 10% of the Easy and Hard data of Session X to test it). For the Inter analysis, on the contrary, the calibration and testing datasets were taken from consecutive sessions (e.g., the first 90% of the Easy and Hard data of Session X to calibrate the classifier and the last 10% of the Easy and Hard data of Session X+1 to test it; then the first 90% of the Easy and Hard data of Session X+1 to calibrate the classifier and the last 10% of the Easy and Hard data of Session X to test it), and the two resulting AUC-values were finally averaged.
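The evaluation scheme described above can be sketched as follows. To keep the example self-contained, a plain least-squares linear discriminant stands in for the asSWLDA; the per-condition 90/10 split assumes that samples of each condition are stored in chronological order, and all function names are hypothetical. The AUC is computed through the rank-sum identity mentioned in the text.

```python
import numpy as np
from scipy.stats import rankdata

def auc_rank(scores, labels):
    """ROC AUC via the Wilcoxon/Mann-Whitney rank-sum identity (ties get average ranks)."""
    scores = np.asarray(scores, float)
    labels = np.asarray(labels).astype(bool)
    ranks = rankdata(scores)
    n_pos, n_neg = labels.sum(), (~labels).sum()
    return float((ranks[labels].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg))

def lsq_discriminant(X, y):
    """Stand-in linear discriminant (least-squares fit of 0/1 labels), NOT the asSWLDA."""
    A = np.column_stack([np.ones(len(y)), X])
    w, *_ = np.linalg.lstsq(A, y, rcond=None)
    return lambda Z: np.column_stack([np.ones(len(Z)), Z]) @ w

def kfold_auc(X, y, k=10, seed=0):
    """Shuffled k-fold CV: calibrate on k-1 parts, test on the held-out part."""
    folds = np.array_split(np.random.default_rng(seed).permutation(len(y)), k)
    aucs = []
    for i, test in enumerate(folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        score = lsq_discriminant(X[train], y[train])
        aucs.append(auc_rank(score(X[test]), y[test]))
    return np.array(aucs)

def split_first90_last10(y):
    """Per condition: first 90% of samples to calibrate, last 10% to test."""
    train, test = [], []
    for c in np.unique(y):
        idx = np.flatnonzero(y == c)
        cut = len(idx) * 9 // 10
        train.append(idx[:cut])
        test.append(idx[cut:])
    return np.concatenate(train), np.concatenate(test)

def inter_auc(sess_a, sess_b):
    """Calibrate on one session, test on the other, in both directions, then average."""
    aucs = []
    for (Xc, yc), (Xt, yt) in ((sess_a, sess_b), (sess_b, sess_a)):
        tr, _ = split_first90_last10(yc)
        _, te = split_first90_last10(yt)
        aucs.append(auc_rank(lsq_discriminant(Xc[tr], yc[tr])(Xt[te]), yt[te]))
    return float(np.mean(aucs))
```

For instance, `auc_rank([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])` gives 0.75: three of the four positive/negative pairs are ranked correctly.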
To test our experimental hypothesis, we compared the AUC-values of the Intra analysis with those of the Inter analysis. As stated previously, we expected that, if the subjects' brain features were changing over time, the Inter AUCs would be significantly lower than the Intra AUCs (i.e., the classifier should work best within the same session). On the contrary, if the Inter AUC-values did not differ significantly from the Intra AUC-values, the features selected by the classifier would be effective in both consecutive sessions; in other words, we could assume that the selected brain features remained stable across sessions. On this basis, we defined an index to quantify the subject's progress from a cognitive point of view (Cognitive Stability Index). In particular, two paired two-tailed t-tests (α = 0.05) were performed, each between the cross-validation Intra AUC-values of one of two consecutive sessions and the related Inter AUC-values, in order to quantify statistically any difference between Intra and Inter AUC-values. The Cognitive Stability Index (Equation 4) was defined as the average of these two t-values. We expected a decreasing trend of this index across sessions: at the beginning of the training period the subject should not be able to accomplish the proposed task correctly and automatically (i.e., not trained), so the Intra and Inter AUC-values should be significantly different (high t-values). Once the subjects became more confident with the task (i.e., trained), the difference between the Intra and Inter values of consecutive sessions should tend to zero (i.e., non-significant differences), which might represent stability of brain activation patterns across training sessions.

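$$\text{Cognitive Stability Index}_{n} = \frac{t_{n,1} + t_{n,2}}{2} \tag{4}$$

Where,

$$t_{n,1} = t\left(\mathrm{AUC}^{\mathrm{Intra}}_{n},\ \mathrm{AUC}^{\mathrm{Inter}}_{n,n+1}\right) \tag{5}$$

$$t_{n,2} = t\left(\mathrm{AUC}^{\mathrm{Intra}}_{n+1},\ \mathrm{AUC}^{\mathrm{Inter}}_{n,n+1}\right) \tag{6}$$

$t(\cdot,\cdot)$ denotes the paired two-tailed t-test between the cross-validation AUC-values of its two arguments, n ∈ {T1, T2, …, T(X−1)}, and X = 12 sessions.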
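The definition of the Cognitive Stability Index given above can be sketched in Python as follows. This is an illustrative reconstruction, not the authors' code: it assumes that the Intra and Inter analyses each yield one AUC-value per cross-validation fold and that folds are paired by index, and it keeps the sign of the t-values because the text does not say whether absolute values were used. Function names and AUC values are hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired t statistic for matched samples x and y (df = len(x) - 1).

    Raises ZeroDivisionError if all paired differences are identical.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two matched samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


def cognitive_stability_index(intra_n, intra_next, inter):
    """Average of the two t-values: Intra(n) vs Inter and Intra(n+1) vs Inter.

    Each argument holds one AUC-value per cross-validation fold (assumed to be
    paired by fold index); values near zero suggest stable brain features.
    """
    t_first = paired_t(intra_n, inter)
    t_second = paired_t(intra_next, inter)
    return (t_first + t_second) / 2


# Hypothetical fold-wise AUC-values for two consecutive sessions.
intra_t1 = [0.92, 0.88, 0.95, 0.90, 0.91]
intra_t2 = [0.89, 0.90, 0.93, 0.87, 0.92]
inter_t1_t2 = [0.71, 0.75, 0.70, 0.78, 0.74]
print(round(cognitive_stability_index(intra_t1, intra_t2, inter_t1_t2), 2))
```

In practice the t-value and its p-value would more likely come from a statistics package; the hand-written version only makes explicit which samples are paired with which.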

To investigate the trends and changes of the EEG signal throughout the training period (3 weeks) as signs of learning progress, and to obtain robust statistics, the behavioral and physiological data have been analyzed in 6 representative training sessions (Kelly and Garavan, 2005). In particular, the 3 consecutive recording sessions of WEEK_1 (T1, T3, and T5; in T2 and T4 only behavioral (i.e., task performance) and neurophysiological (i.e., AUC-values) data have been recorded), the session of WEEK_2 (T7), session T9, and the last session of WEEK_3 (T12) have been considered. Since the subjects had never performed the MATB before, instructions on how to execute the task have been provided on the first day of training (T1), before the experiment started. To be efficient, the instructional design was tailored to each subject's aptitude, so that the effectiveness of the training would not be left to chance (Kalyuga et al., 2003).

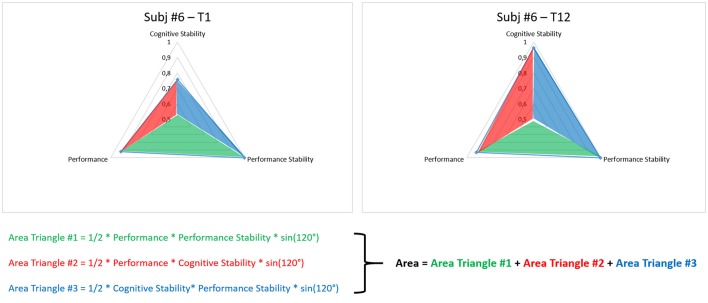

Metric proposal for training level assessment

In order to quantify the user's training level, a metric has been proposed and defined as a combination of behavioral and neurophysiological data. In particular, three measures have been integrated to simultaneously take into account the level of task execution and both the performance and cognitive stabilities over consecutive sessions. The three measures have been normalized with respect to their maximum values so that each varies between 0 and 1. Then, they have been used to define a scalene triangle whose centroid corresponds to the origin (Figure 2). In particular, the vertices of the triangle (A, B, and C) have been defined by the values of the behavioral (Mean Performance, Performance Stability) and neurophysiological (Cognitive Stability) measures, taken as distances from the origin (centroid).

Figure 2.

The image reports an example of the triangle used to quantify the user's training level. In particular, the area of the triangle, defined by taking the Mean Performance, Performance Stability, and Cognitive Stability as vertices, has been calculated as the sum of the areas of three sub-triangles (Area Triangle #1, Area Triangle #2, and Area Triangle #3). Each sub-triangle is identified by two of the three indexes and the origin of the coordinate system.

Together with the origin of the coordinate system, these indexes therefore identify three sub-triangles, and the user's training level has been quantified by calculating the Area of the triangle as the sum of the areas of the sub-triangles:

$$\mathrm{Area} = \mathrm{Area}_{\#1} + \mathrm{Area}_{\#2} + \mathrm{Area}_{\#3} \tag{7}$$

This Area has then been normalized with respect to its maximum value (Area_MAX), obtained when all three measures are equal to 1, as follows:

$$\mathrm{AREA} = \frac{\mathrm{Area}}{\mathrm{Area}_{\mathrm{MAX}}} \tag{8}$$

The closer AREA is to 1, the closer the user's training level is to its maximum, that is, the closer the three measures are to their maximum values (i.e., 1).
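The area computation can be sketched as below. The text does not give the angles between the three axes, so this Python sketch assumes, as in a three-axis radar chart, that Mean Performance, Performance Stability, and Cognitive Stability lie on rays 120° apart; each sub-triangle then has area ½·a·b·sin 120°, and the normalized AREA reduces to (ab + bc + ca)/3. It also assumes that all three inputs are already normalized so that 1 means "best". Since the Cognitive Stability Index itself decreases toward zero with training, some inversion (e.g., 1 minus its normalized value) is presumably applied before it enters the triangle, but that mapping is our assumption, not stated in the source. Function names are hypothetical.

```python
import math

# Assumption (not stated in the source): the three axes are 120 degrees apart.
AXIS_ANGLE = 2 * math.pi / 3


def sub_triangle_area(r1, r2, angle=AXIS_ANGLE):
    """Area of the triangle spanned by the origin and two vertices at distances r1, r2."""
    return 0.5 * r1 * r2 * math.sin(angle)


def normalized_training_area(mean_perf, perf_stability, cog_stability):
    """Normalized training metric AREA in [0, 1].

    All inputs are assumed normalized to [0, 1], with 1 meaning "best";
    cog_stability is assumed to be already inverted so that it grows with training.
    """
    measures = (mean_perf, perf_stability, cog_stability)
    if any(not 0.0 <= m <= 1.0 for m in measures):
        raise ValueError("each measure must lie in [0, 1]")
    area = (sub_triangle_area(mean_perf, perf_stability)
            + sub_triangle_area(perf_stability, cog_stability)
            + sub_triangle_area(cog_stability, mean_perf))
    area_max = 3 * sub_triangle_area(1.0, 1.0)  # all three measures equal to 1
    return area / area_max


print(round(normalized_training_area(1.0, 1.0, 1.0), 3))  # 1.0
print(round(normalized_training_area(0.9, 0.8, 0.6), 3))  # 0.58
```

Under this assumption, a trainee with one measure at zero can reach at most 1/3 even if the other two are perfect, so the metric rewards balanced progress on all three measures rather than excellence on a single one.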

Statistical analyses

Behavioral performance

A repeated-measures ANOVA has been performed on the performance index, with SESSION as within-subject factor (6 levels: T1, T3, T5, T7, T9, and T12), to assess whether significant improvements in task execution occurred along the training period.
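For readers who want to check the reported F statistics, a one-way repeated-measures ANOVA can be sketched in pure Python as below. This is a textbook formulation, not necessarily the software or settings used by the authors: it assumes sphericity (no Greenhouse–Geisser correction) and omits the post-hoc tests. With 10 subjects and 6 sessions it yields (6 − 1) = 5 and (6 − 1)(10 − 1) = 45 degrees of freedom, matching the F(5, 45) values reported in the Results.

```python
from statistics import mean


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA (sphericity assumed, no correction).

    data[s][k] is the score of subject s at level k of the within factor
    (e.g. sessions T1, T3, T5, T7, T9, T12). Returns (F, df_effect, df_error).
    """
    n = len(data)     # number of subjects
    k = len(data[0])  # number of levels of the within-subject factor
    if n < 2 or k < 2 or any(len(row) != k for row in data):
        raise ValueError("need a complete subjects x levels table, at least 2 x 2")
    grand = mean(x for row in data for x in row)
    level_means = [mean(row[j] for row in data) for j in range(k)]
    subject_means = [mean(row) for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_effect = n * sum((m - grand) ** 2 for m in level_means)
    ss_subjects = k * sum((m - grand) ** 2 for m in subject_means)
    ss_error = ss_total - ss_effect - ss_subjects  # subject x level residual
    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    f_value = (ss_effect / df_effect) / (ss_error / df_error)
    return f_value, df_effect, df_error


# Toy example: 3 subjects x 2 sessions.
print(rm_anova_oneway([[1, 3], [2, 5], [3, 4]]))  # (12.0, 1, 2)
```

For two levels the F value equals the square of the paired t statistic (here the differences 2, 3, 1 give t = 2√3, so t² = 12), which is a convenient sanity check. Removing the between-subject sum of squares from the error term is what distinguishes this design from an ordinary one-way ANOVA.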

Cognitive stability index

A repeated-measures ANOVA has been performed on the Cognitive Stability Index, with CROSS-VALIDATION as within-subject factor (6 levels: T1′, T3′, T5′, T7′, T9′, and T12′), to assess whether the subjects became trained, in terms of cognitive activations, along the training period.

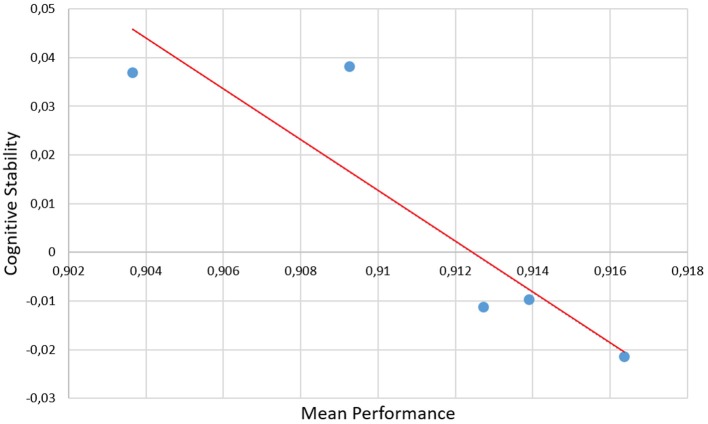

Correlation between behavioral and neurophysiological measures

Pearson's correlation has been computed between the Mean Performance and the Cognitive Stability Index to assess whether the two measures were coherent and could provide information related to the training.
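The correlation step can be sketched as follows: Pearson's r between two equal-length series, and the t statistic with n − 2 degrees of freedom commonly used to test whether r differs from zero. The text does not state which data points entered the correlation (e.g., subject-level or session-averaged values), so the inputs below are hypothetical. Because performance is expected to rise while the Cognitive Stability Index falls, a negative r is expected, which is presumably why the magnitude |R| is reported.

```python
import math


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two samples of equal length, at least 3 points")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic with n - 2 degrees of freedom for H0: rho = 0 (requires |r| < 1)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


# Hypothetical values: performance rises while the stability index falls.
mean_performance = [0.62, 0.80, 0.90, 0.91, 0.92]
stability_index = [4.1, 2.5, 1.2, 0.3, 0.2]
r = pearson_r(mean_performance, stability_index)
print(round(r, 2), round(r_to_t(r, len(mean_performance)), 2))
```

With only a handful of points, even a large |r| yields a modest t, so the p-value depends strongly on how many observations enter the correlation.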

Objective training assessment

A repeated-measures ANOVA has been performed on the Area data, with CROSS-VALIDATION as within-subject factor (6 levels: T1′, T3′, T5′, T7′, T9′, and T12′), to assess whether the proposed metric could track and provide objective information about training-level progress along the different sessions.

Results

Behavioral performance

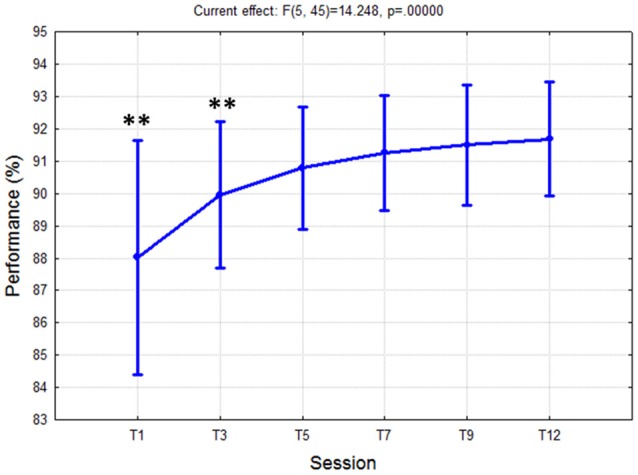

The ANOVA highlighted significant differences in task performance across the training sessions [F(5, 45) = 14.25; p < 10⁻⁵] (Figure 3). In particular, the post-hoc test showed that from T5 onward the subjects significantly improved their task performance (p < 10⁻⁴) with respect to T1 (first session), and then kept such a high level of performance (about 91%) stable (i.e., no significant differences among consecutive sessions) until the end of the training period (T5÷T12). Therefore, in terms of task execution, the subjects could be considered trained from session T5 onward.

Figure 3.

Task performance values over 3 weeks of training. The ANOVA showed a significant (p < 10⁻⁵) improvement of performance from T1 to T5, and no differences were found among the remaining sessions. These results indicated that the subjects reached task saturation in session T5. “**” means that the statistical significance level (p) is lower than 0.01. Vertical bars denote 0.95 confidence intervals (CI).

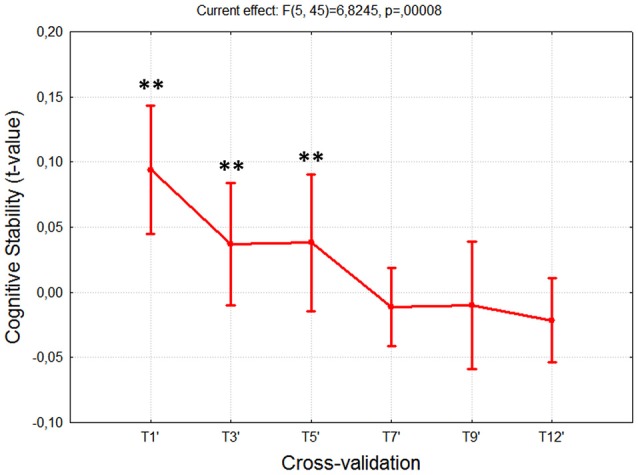

Cognitive stability

The ANOVA on the Cognitive Stability Index reported significant differences along the training sessions [F(5, 45) = 6.65; p = 0.0001]. As expected, the post-hoc test showed significant reductions, tending to zero, among sessions T1÷T7, whereas no differences were found (all p > 0.57) from session T7 to the last one (T12), since the differences in terms of cognitive stability were almost equal to zero (Figure 4).

Figure 4.

Cognitive Stability Index over 3 weeks of training. The ANOVA showed significant differences (p < 10⁻⁴) among the first sessions (T1÷T7), while no differences were found among the last ones (T7÷T12). These results indicated that the subjects reached cognitive stability in session T7. “**” means that the statistical significance level (p) is lower than 0.01. Vertical bars denote 0.95 confidence intervals (CI).

Furthermore, the correlation between the behavioral and neurophysiological measures has been investigated. In particular, Figure 5 shows the scatter-plot of the correlation between the Mean Performance and the Cognitive Stability Index. Pearson's analysis reported a significant (p = 0.039) and high correlation (|R| > 0.89) between the measures. In fact, the more stable the task performance became, the closer the Cognitive Stability Index got to zero, which could mean no significant differences in brain activation patterns once the participants became trained.

Figure 5.

Scatter-plot of the correlation between the Mean Performance and the Cognitive Stability. Pearson's analysis reported a significant (p = 0.039) and high correlation (|R| > 0.89) between the measures, suggesting that their integration could provide the Instructor with objective data for training assessment.

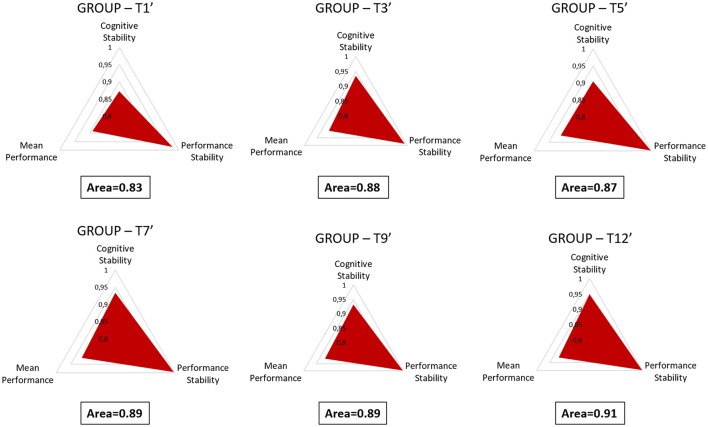

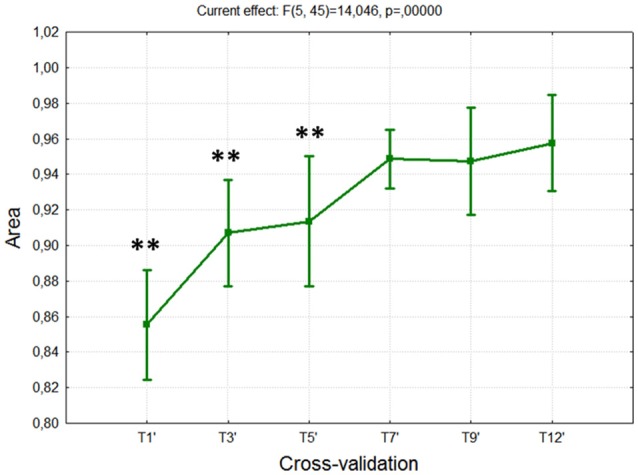

Metric for training level assessment

The ANOVA on the averaged Area reported a significant increase across the training sessions [F(5, 45) = 14.05; p < 10⁻⁵] (Figure 6). The post-hoc test showed that, when both the behavioral and neurophysiological measures were considered, the subjects exhibited significant differences (all p < 0.01) in the first part of the training period (T1÷T7), and no further differences (all p > 0.51) over the last sessions (T7÷T12). In fact, across the training sessions the subjects improved their task execution (performance level), their capability of retaining such a high level of performance (i.e., performance stability), and their cognitive activations (i.e., cognitive stability).

Figure 6.

Training metric over 3 weeks of training. The proposed metric takes into account the level of task execution (performance) and both the behavioral and cognitive stabilities. The ANOVA showed significant differences (p < 10⁻⁵) among the first sessions (T1÷T7), while no differences were found among the last ones (T7÷T12). These results indicated that the subjects reached training stability in session T7. “**” means that the statistical significance level (p) is lower than 0.01. Vertical bars denote 0.95 confidence intervals (CI).

Figure 7 reports the Area values averaged across subjects for the considered training sessions, showing how the experimental group kept improving their task and cognitive performance along the training period.

Figure 7.

In order to quantify the users' training level along the training sessions, the behavioral and neurophysiological measures were integrated. These measures were used to define the vertices of a scalene triangle, and the Area of this triangle was then calculated in each training session as a measure of the training level. The Area was normalized with respect to its maximum, so that the value “1” corresponds to the maximum training level.

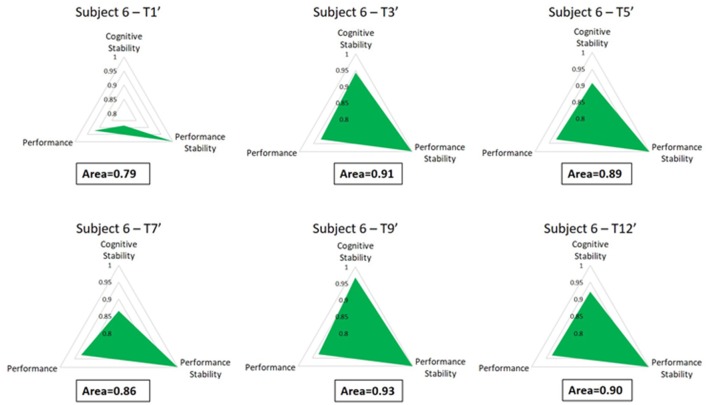

Figure 8 shows the progression of the training metric (Area) for a representative subject (Subject 6). The gradual growth of the colored triangle reflects the training improvement along the considered sessions, suggesting that the proposed metric could be used to assess the subject's training level in each session, with “1” as the maximum level.

Figure 8.

The figure reports the Areas of a representative subject along the considered training sessions. It shows how Subject 6 improved their behavioral and cognitive skills from the beginning (T1) to the end of the training period (T12).

Discussion

The proposed work investigated the possibility of using a machine learning-based neurometric to provide additional and objective information on a trainee's progress throughout the training program, based on the current brain activations compared with those of previous sessions. In fact, current machine learning methodologies generally aim to improve the selection of spectral and temporal features, to reduce the computational and time demands of algorithm calibration (e.g., single-trial calibration), or to evaluate the user's mental states and emotions. Furthermore, studies on learning evaluation usually highlighted the most evident changes in the considered neurophysiological parameters (e.g., event-related potentials, heart rate, specific EEG spectral power densities) between the first and the last training session, without providing any objective measure to track learning progress across the different training sessions and to quantify the user's training level in each session against a maximum value.

Thus, the idea of our study was to use a machine learning algorithm in a different way, one that can be applied over time, that is, to compare different training sessions. In this regard, we selected the asSWLDA algorithm since it has been shown (i) to be stable over time (no recalibration needed for up to a month), and (ii) to be robust to under- and overfitting, and thus able to select the features mainly linked to the considered cognitive phenomenon (Aricò et al., 2016a). The asSWLDA has been used to highlight changes in the brain features most closely linked to learning-related cognitive processes, and its performance has then been combined with the user's behavioral data to define a neurometric for objective training assessment. In particular, the proposed neurometric takes into account the mean performance level achieved by the user (capability of executing the task correctly), the stability of the performance across different sessions (capability of maintaining high performance over time), and the stability of the brain activations across consecutive training sessions (capability of dealing with the task with the same amount of cognitive resources once it has become automatic). By considering these aspects, we were able to provide a measure of the training level and to assess whether each user could be considered “trained” or not.

The analysis of task performance (Figure 3) highlighted a learning effect. From session T5 onward, the subjects achieved a significantly higher (p < 0.001) level of performance than in T1, and then kept it stable throughout the remaining sessions; in other words, no differences were found among the remaining training sessions (T5÷T12). Therefore, in terms of task execution, the subjects seemed trained from session T5. Through the analysis of the EEG signal, the machine learning model was calibrated by selecting brain features within specific domains. In particular, the considered brain features were those mainly linked to the amount of information processing, decision making, and task difficulty (frontal theta EEG band), to memory consolidation (parietal theta EEG band), to an improved access to the Knowledge System (KS) and more automatic actions (frontal alpha EEG band), and to working memory load (parietal alpha EEG band). The analysis of the Cognitive Stability Index (neurophysiological metric) showed (Figure 4) that from session T7 the brain activation patterns became stable until the end of the training: significant differences were found among the first sessions (T1÷T7), while no differences were found among the last sessions (T7÷T12). We investigated the correlation between these measures to assess whether they could provide coherent information about the users' training progress. The results (Figure 5) showed a significant (p = 0.039) and high correlation (|R| > 0.89) between the Cognitive Stability Index and the Mean Performance. Therefore, they could be integrated to define a metric for an objective training assessment. Accordingly, we proposed a metric based on performance stability, cognitive stability, and the level of task execution (Mean Performance).
With these parameters, we could take into account the performance level across consecutive sessions (correct execution of the proposed task) and the behavioral and cognitive stability when dealing with it. The results showed that the proposed metric (the triangle's Area) was able to quantify the users' training level across the different sessions (Figure 6), with “1” as the maximum level of the metric, and that the subjects achieved training stability from session T7 onward (Figures 7, 8), since no statistical differences were found among the last sessions (T7÷T12).

At the moment, the study presents some limitations. The first limitation is the size of the experimental group. This number was sufficient to highlight some statistically significant effects, but it needs to be enlarged to demonstrate the effectiveness of the proposed method. A second limitation is the proposed task. While the MATB is well suited to analyzing brain responses while handling multiple tasks, it is reasonable to expect that the brain responses elicited during training programs in realistic environments (e.g., for pilots, controllers, or surgeons) could differ from those elicited by a laboratory task. Therefore, the results presented in this work have to be considered a promising step toward further and more ecological studies.

Conclusions

In this paper, we proposed a methodology able to provide quantitative information about training progress along the training sessions. In particular, over a period of 3 weeks we considered specific EEG rhythms selected on the basis of a thorough literature review. We used these brain features to calibrate a machine learning algorithm (i.e., asSWLDA) and to assess when the subjects reached stability in terms of task execution (task performance) and brain activation patterns across the different sessions. The hypothesis was that, when the subject is untrained, the brain activations should differ each time he/she performs the proposed task; conversely, once the subject is trained, the pattern of brain activations should be almost stable across consecutive sessions. We highlighted such effects by means of a machine learning approach. In particular, we defined a neurophysiological parameter (Cognitive Stability Index) by integrating the performance of the classifier within the same session (Intra analysis) and between sessions (Inter analysis). This information has been combined with the behavioral data to define a neurometric with which to track and quantify the training level of each participant along the different sessions. The results highlighted the added value of the proposed neurometric as complementary information to the standard performance evaluation, and stressed the importance of multi-modal training assessment for a more accurate training evaluation. In fact, different subjects could achieve the same task performance level while requiring different amounts of brain resources and showing different expertise (Borghini et al., 2017). Therefore, task performance alone would not provide information about cognitive activations, nor reveal possible differences between subjects.
Furthermore, an objective training assessment could provide useful data for the selection of personnel or teams in both standard and extreme working contexts (Astolfi et al., 2012; Toppi et al., 2016).

Author contributions

GB, PA, GD, and NS: EEG recordings, training neurophysiological characterization, data analysis, and paper writing. GB, PA, GD, AC, NT, and FB: experimental protocol design, methodology development. GB, PA, GD, AB, and NT: validation of the experimental protocol, recruitment of volunteers, providing experimental facilities. AC, MH, AB, NT, and FB: methodology, results and paper checking.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work is co-financed by the European Commission by Horizon2020 projects Sesar-01-2015 Automation in ATM, “STRESS,” GA n. 699381 and Sesar-06-2015 High Performing Airport Operations, “MOTO,” GA n. 699379. The grant provided by the Italian Minister of University and Education under the PRIN 2012, GA n. 2012WAANZJ scheme to FB and the grant provided by the Ministry of Education of Singapore (MOE2014-T2-1-115) to the Singapore Institute for Neurotechnology (GA n. R-719-000-004-112) are also gratefully acknowledged.

References

- Aguinis H., Kraiger K. (2009). Benefits of training and development for individuals and teams, organizations, and society. Annu. Rev. Psychol. 60, 451–474. 10.1146/annurev.psych.60.110707.163505

- Anderson K. L., Rajagovindan R., Ghacibeh G. A., Meador K. J., Ding M. (2010). Theta oscillations mediate interaction between prefrontal cortex and medial temporal lobe in human memory. Cereb. Cortex 20, 1604–1612. 10.1093/cercor/bhp223

- Aricò P., Aloise F., Schettini F., Salinari S., Mattia D., Cincotti F. (2014). Influence of P300 latency jitter on event related potential-based brain-computer interface performance. J. Neural Eng. 11:035008. 10.1088/1741-2560/11/3/035008

- Aricò P., Borghini G., Di Flumeri G., Colosimo A., Bonelli S., Golfetti A., et al. (2016a). Adaptive automation triggered by EEG-based mental workload index: a passive brain-computer interface application in realistic air traffic control environment. Front. Hum. Neurosci. 10:539. 10.3389/fnhum.2016.00539

- Aricò P., Borghini G., Di Flumeri G., Colosimo A., Pozzi S., Babiloni F. (2016b). A passive Brain-Computer Interface (p-BCI) application for the mental workload assessment on professional Air Traffic Controllers (ATCOs) during realistic ATC tasks. Prog. Brain Res. Press. 228, 295–328. 10.1016/bs.pbr.2016.04.021

- Aricò P., Borghini G., Graziani I., Imbert J.-P., Granger G., Benhacene R., et al. (2015). ATCO: neurophysiological analysis of the training and of the workload. Ital. J. Aerosp. Med. 12, 18–35.

- Armitage R. (1995). The distribution of EEG frequencies in REM and NREM sleep stages in healthy young adults. Sleep 18, 334–341. 10.1093/sleep/18.5.334

- Arrighi P., Bonfiglio L., Minichilli F., Cantore N., Carboncini M. C., Piccotti E., et al. (2016). EEG theta dynamics within frontal and parietal cortices for error processing during reaching movements in a prism adaptation study altering visuo-motor predictive planning. PLoS ONE 11:e0150265. 10.1371/journal.pone.0150265

- Astolfi L., Toppi J., Borghini G., Vecchiato G., He E. J., Roy A., et al. (2012). Cortical activity and functional hyperconnectivity by simultaneous EEG recordings from interacting couples of professional pilots. Conf. Proc. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2012, 4752–4755. 10.1109/EMBC.2012.6347029

- Bamber D. (1975). The area above the ordinal dominance graph and the area below the receiver operating characteristic graph. J. Math. Psychol. 12, 387–415. 10.1016/0022-2496(75)90001-2

- Barach P., Small S. D. (2000). Reporting and preventing medical mishaps: lessons from non-medical near miss reporting systems. BMJ 320, 759–763. 10.1136/bmj.320.7237.759

- Barach P., Weingart M. (2004). Trauma Team Performance in: Trauma: Resuscitation, Anesthesia, Surgery, and Critical Care. New York, NY: Dekker Inc.

- Benchenane K., Peyrache A., Khamassi M., Tierney P. L., Gioanni Y., Battaglia F. P., et al. (2010). Coherent theta oscillations and reorganization of spike timing in the hippocampal-prefrontal network upon learning. Neuron 66, 921–936. 10.1016/j.neuron.2010.05.013

- Benedek M., Bergner S., Könen T., Fink A., Neubauer A. C. (2011). EEG alpha synchronization is related to top-down processing in convergent and divergent thinking. Neuropsychologia 49, 3505–3511. 10.1016/j.neuropsychologia.2011.09.004

- Berka C., Johnson R. (2011). Exploring subjective experience during simulated reality training with psychophysiological metrics, in Marine Corp Warfighting Laboratory Workshop. Presented at the Marine Corp Warfighting Laboratory Workshop (University of Alabama iSim Laboratory).

- Berka C., Levendowski D. J., Lumicao M. N., Yau A., Davis G., Zivkovic V. T., et al. (2007). EEG correlates of task engagement and mental workload in vigilance, learning, and memory tasks. Aviat. Space Environ. Med. 78, B231–B244.

- Bernstein L. J., Beig S., Siegenthaler A. L., Grady C. L. (2002). The effect of encoding strategy on the neural correlates of memory for faces. Neuropsychologia 40, 86–98. 10.1016/S0028-3932(01)00070-7

- Borghini G., Arico P., Astolfi L., Toppi J., Cincotti F., Mattia D., et al. (2013). Frontal EEG theta changes assess the training improvements of novices in flight simulation tasks. Conf. Proc. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2013, 6619–6622. 10.1109/EMBC.2013.6611073

- Borghini G., Aricò P., Di Flumeri G., Graziani I., Colosimo A., Salinari S., et al. (2015). Skill, rule and knowledge-based behaviour detection by means of ATCOs' brain activity, in 5th SESAR Innovation Days (Delft).

- Borghini G., Aricò P., Flumeri G. D., Cartocci G., Colosimo A., Bonelli S., et al. (2017). EEG-based cognitive control behaviour assessment: an ecological study with professional air traffic controllers. Sci. Rep. 7, 547. 10.1038/s41598-017-00633-7

- Borghini G., Aricò P., Graziani I., Salinari S., Sun Y., Taya F., et al. (2016). Quantitative assessment of the training improvement in a motor-cognitive task by using EEG, ECG and EOG Signals. Brain Topogr. 29, 149–161. 10.1007/s10548-015-0425-7

- Borghini G., Astolfi L., Vecchiato G., Mattia D., Babiloni F. (2014). Measuring neurophysiological signals in aircraft pilots and car drivers for the assessment of mental workload, fatigue and drowsiness. Neurosci. Biobehav. Rev. 44, 58–75. 10.1016/j.neubiorev.2012.10.003

- Botvinick M. M., Cohen J. D., Carter C. S. (2004). Conflict monitoring and anterior cingulate cortex: an update. Trends Cogn. Sci. 8, 539–546. 10.1016/j.tics.2004.10.003

- Broach D. (2013). Selection of the Next Generation of Air Traffic Control Specialists: Aptitude Requirements for the Air Traffic Control Tower Cab in 2018 (Aerospace Medicine. Technical Reports No. DOT/FAA/AM-13/5), FAA Office of Aerospace Medicine Civil Aerospace Medical Institute.

- Buzsáki G. (2005). Theta rhythm of navigation: link between path integration and landmark navigation, episodic and semantic memory. Hippocampus 15, 827–840. 10.1002/hipo.20113

- Campbell J. P. (1971). Personnel training and development. Annu. Rev. Psychol. 22, 565–602. 10.1146/annurev.ps.22.020171.003025

- Caplan J. B., Madsen J. R., Raghavachari S., Kahana M. J. (2001). Distinct patterns of brain oscillations underlie two basic parameters of human maze learning. J. Neurophysiol. 86, 368–380.

- Caplan J. B., Madsen J. R., Schulze-Bonhage A., Aschenbrenner-Scheibe R., Newman E. L., Kahana M. J. (2003). Human theta oscillations related to sensorimotor integration and spatial learning. J. Neurosci. 23, 4726–4736.

- Cartocci G., Maglione A. G., Vecchiato G., Di Flumeri G., Colosimo A., Scorpecci A., et al. (2015). Mental workload estimations in unilateral deafened children. Conf. Proc. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2015, 1654–1657. 10.1109/embc.2015.7318693

- Chandrasekaran V., Jordan M. I. (2013). Computational and statistical tradeoffs via convex relaxation. Proc. Natl. Acad. Sci. U.S.A. 110, E1181–E1190. 10.1073/pnas.1302293110

- Chauvette S. (2013). Slow-Wave Sleep: Generation and Propagation of Slow Waves, Role in Long-Term Plasticity and Gating. Université Laval.

- Chein J. M., Schneider W. (2005). Neuroimaging studies of practice-related change: fMRI and meta-analytic evidence of a domain-general control network for learning. Brain Res. Cogn. Brain Res. 25, 607–623. 10.1016/j.cogbrainres.2005.08.013

- Comstock J. R., Arnegard R. J. (1992). The Multi-Attribute Task Battery for Human Operator Workload and Strategic Behavior Research (No. 104174). NASA Technical Memorandum.

- Cronin B., Morath R., Curtin P., Heil M. (2006). Public sector use of technology in managing human resources. Hum. Resour. Manag. Rev. 16, 416–430. 10.1016/j.hrmr.2006.05.008

- Decatur S., Goldreich O., Ron D. (2000). Computational Sample Complexity. SIAM J. Comput. 29, 854–879. 10.1137/S0097539797325648

- Delorme A., Makeig S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. 10.1016/j.jneumeth.2003.10.009

- Desmurget M., Epstein C. M., Turner R. S., Prablanc C., Alexander G. E., Grafton S. T. (1999). Role of the posterior parietal cortex in updating reaching movements to a visual target. Nat. Neurosci. 2, 563–567. 10.1038/9219

- Diedrichsen J., Hashambhoy Y., Rane T., Shadmehr R. (2005). Neural correlates of reach errors. J. Neurosci. 25, 9919–9931. 10.1523/JNEUROSCI.1874-05.2005

- Di Flumeri G., Borghini G., Aricò P., Colosimo A., Pozzi S., Bonelli S., et al. (2015). On the use of cognitive neurometric indexes in aeronautic and air traffic management environments, in Symbiotic Interaction, eds Blankertz B., Jacucci G., Gamberini L., Spagnolli A., Freeman J. (Cham: Springer International Publishing), 45–56.

- Di Flumeri G., Herrero M. T., Trettel A., Cherubino P., Maglione A. G., Colosimo A., et al. (2016). EEG frontal asymmetry related to pleasantness of olfactory stimuli in young subjects, in Selected Issues in Experimental Economics, eds Nermend K., Łatuszyńska M. (Cham: Springer International Publishing), 373–381.

- Doyon J., Benali H. (2005). Reorganization and plasticity in the adult brain during learning of motor skills. Curr. Opin. Neurobiol. 15, 161–167. 10.1016/j.conb.2005.03.004 [DOI] [PubMed] [Google Scholar]

- Draper N. R. (1998). Applied regression analysis. Commun. Stat. 27, 2581–2623. 10.1080/03610929808832244 [DOI] [Google Scholar]

- Dudai Y. (2004). The neurobiology of consolidations, or, how stable is the engram? Annu. Rev. Psychol. 55, 51–86. 10.1146/annurev.psych.55.090902.142050 [DOI] [PubMed] [Google Scholar]

- Dux P. E., Tombu M. N., Harrison S., Rogers B. P., Tong F., Marois R. (2009). Training improves multitasking performance by increasing the speed of information processing in human prefrontal cortex. Neuron 63, 127–138. 10.1016/j.neuron.2009.06.005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Erickson K. I., Colcombe S. J., Wadhwa R., Bherer L., Peterson M. S., Scalf P. E., et al. (2007). Training-induced functional activation changes in dual-task processing: an FMRI study. Cereb. Cortex 17, 192–204. 10.1093/cercor/bhj137 [DOI] [PubMed] [Google Scholar]

- Estes W. K. (2014). Handbook of Learning and Cognitive Processes (Volume 4): Attention and Memory. Abingdon; Oxford, UK: Psychology Press. [Google Scholar]

- Fairclough S. H., Venables L., Tattersall A. (2005). The influence of task demand and learning on the psychophysiological response. Int. J. Psychophysiol. 56, 171–184. 10.1016/j.ijpsycho.2004.11.003 [DOI] [PubMed] [Google Scholar]

- Fawcett T. (2006). An Introduction to ROC Analysis. Pattern Recogn. Lett. 27, 861–874. 10.1016/j.patrec.2005.10.010 [DOI] [Google Scholar]

- Galán F. C., Beal C. R. (2012). EEG estimates of engagement and cognitive workload predict math problem solving outcomes, in User Modeling, Adaptation, and Personalization, Lecture Notes in Computer Science, eds Masthoff J., Mobasher B., Desmarais M. C., Nkambou R. (Berlin; Heidelberg: Springer; ), 51–62. [Google Scholar]

- Garavan H., Kelley D., Rosen A., Rao S. M., Stein E. A. (2000). Practice-related functional activation changes in a working memory task. Microsc. Res. Tech. 51, 54–63. [DOI] [PubMed] [Google Scholar]

- Gevins A., Smith M. E., McEvoy L., Yu D. (1997). High-resolution EEG mapping of cortical activation related to working memory: effects of task difficulty, type of processing, and practice. Cereb. Cortex 7, 374–385. 10.1093/cercor/7.4.374 [DOI] [PubMed] [Google Scholar]

- Glabus M. F., Horwitz B., Holt J. L., Kohn P. D., Gerton B. K., Callicott J. H., et al. (2003). Interindividual differences in functional interactions among prefrontal, parietal and parahippocampal regions during working memory. Cereb. Cortex 13, 1352–1361. 10.1093/cercor/bhg082 [DOI] [PubMed] [Google Scholar]

- Gluck K. A., Pew R. W. (2006). Modeling Human Behavior with Integrated Cognitive Architectures: Comparison, Evaluation, and Validation. Abingdon; Oxford, UK: Psychology Press. [Google Scholar]

- Goldstein I. L. (1980). Training in work Organizations. Annu. Rev. Psychol. 31, 229–272. 10.1146/annurev.ps.31.020180.001305 [DOI] [Google Scholar]

- Goldstein I. L., Ford J. K. (2002). Training in Organizations: Needs Assessment, Development, and Evaluation. 4th Edn. Wadsworth, OH: Cengage Learning.

- Gréa H., Pisella L., Rossetti Y., Desmurget M., Tilikete C., Grafton S., et al. (2002). A lesion of the posterior parietal cortex disrupts on-line adjustments during aiming movements. Neuropsychologia 40, 2471–2480. 10.1016/S0028-3932(02)00009-X

- Gruzelier J. (2009). A theory of alpha/theta neurofeedback, creative performance enhancement, long distance functional connectivity and psychological integration. Cogn. Process. 10(Suppl. 1), S101–S109. 10.1007/s10339-008-0248-5

- Hamman W. (2004). The complexity of team training: what we have learned from aviation and its applications to medicine. Qual. Saf. Health Care 13, i72–i79. 10.1136/qshc.2004.009910

- Harris F. J. (1978). On the use of windows for harmonic analysis with the discrete Fourier transform. Proc. IEEE 66, 51–83. 10.1109/PROC.1978.10837

- Helmreich R. L. (1997). Managing human error in aviation. Sci. Am. 276, 62–67. 10.1038/scientificamerican0597-62

- Hill C., Lent R. (2006). A narrative and meta-analytic review of helping skills training: time to revive a dormant area of inquiry. Psychotherapy 43, 154–172. 10.1037/0033-3204.43.2.154

- Jacobs J., Hwang G., Curran T., Kahana M. J. (2006). EEG oscillations and recognition memory: theta correlates of memory retrieval and decision making. NeuroImage 32, 978–987. 10.1016/j.neuroimage.2006.02.018

- Jauk E., Benedek M., Neubauer A. C. (2012). Tackling creativity at its roots: evidence for different patterns of EEG alpha activity related to convergent and divergent modes of task processing. Int. J. Psychophysiol. 84, 219–225. 10.1016/j.ijpsycho.2012.02.012

- Jaušovec N., Jaušovec K. (2012). Working memory training: improving intelligence – changing brain activity. Brain Cogn. 79, 96–106. 10.1016/j.bandc.2012.02.007

- Jensen O., Gelfand J., Kounios J., Lisman J. E. (2002). Oscillations in the alpha band (9-12 Hz) increase with memory load during retention in a short-term memory task. Cereb. Cortex 12, 877–882. 10.1093/cercor/12.8.877

- Jensen O., Tesche C. D. (2002). Frontal theta activity in humans increases with memory load in a working memory task. Eur. J. Neurosci. 15, 1395–1399. 10.1046/j.1460-9568.2002.01975.x

- Kahana M. J., Sekuler R., Caplan J. B., Kirschen M., Madsen J. R. (1999). Human theta oscillations exhibit task dependence during virtual maze navigation. Nature 399, 781–784. 10.1038/21645

- Kalyuga S., Ayres P., Chandler P., Sweller J. (2003). The expertise reversal effect. Educ. Psychol. 38, 23–31. 10.1207/S15326985EP3801_4

- Karni A., Tanne D., Rubenstein B. S., Askenasy J. J., Sagi D. (1994). Dependence on REM sleep of overnight improvement of a perceptual skill. Science 265, 679–682. 10.1126/science.8036518

- Kelly A. M. C., Garavan H. (2005). Human functional neuroimaging of brain changes associated with practice. Cereb. Cortex 15, 1089–1102. 10.1093/cercor/bhi005

- Klimesch W. (1999). EEG alpha and theta oscillations reflect cognitive and memory performance: a review and analysis. Brain Res. Brain Res. Rev. 29, 169–195. 10.1016/S0165-0173(98)00056-3

- Klimesch W. (2012). α-band oscillations, attention, and controlled access to stored information. Trends Cogn. Sci. 16, 606–617. 10.1016/j.tics.2012.10.007

- Klimesch W., Fellinger R., Freunberger R. (2011). Alpha oscillations and early stages of visual encoding. Front. Psychol. 2:118. 10.3389/fpsyg.2011.00118

- Kozlowski S. W. J., Gully S. M., Brown K. G., Salas E., Smith E. M., Nason E. R. (2001). Effects of training goals and goal orientation traits on multidimensional training outcomes and performance adaptability. Organ. Behav. Hum. Decis. Process. 85, 1–31. 10.1006/obhd.2000.2930

- Kropotov J. D. (2010). Quantitative EEG, Event-Related Potentials and Neurotherapy. Amsterdam: Academic Press.

- Leape L. L., Fromson J. A. (2006). Problem doctors: is there a system-level solution? Ann. Intern. Med. 144, 107–115. 10.7326/0003-4819-144-2-200601170-00008

- Lee T.-W., Girolami M., Sejnowski T. J. (1999). Independent component analysis using an extended infomax algorithm for mixed subgaussian and supergaussian sources. Neural Comput. 11, 417–441. 10.1162/089976699300016719

- Leonard M., Graham S., Bonacum D. (2004). The human factor: the critical importance of effective teamwork and communication in providing safe care. Qual. Saf. Health Care 13, i85–i90. 10.1136/qshc.2004.010033

- Leonard M., Tarrant C. (2001). Culture, systems, and human factors – two tales of patient safety: the KP Colorado region's experience. Perm. J. 5, 46–49.

- Li M., Lu B. L. (2009). Emotion classification based on gamma-band EEG, presented at the 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Minneapolis, MN), 1223–1226. 10.1109/IEMBS.2009.5334139

- Lin C. T., Ko L. W., Chung I. F., Huang T. Y., Chen Y. C., Jung T. P., et al. (2006). Adaptive EEG-based alertness estimation system by using ICA-based fuzzy neural networks. IEEE Trans. Circuits Syst. Regul. Pap. 53, 2469–2476. 10.1109/TCSI.2006.884408

- Lin C.-T., Wu R.-C., Liang S.-F., Chao W.-H., Chen Y.-J., Jung T.-P. (2005). EEG-based drowsiness estimation for safety driving using independent component analysis. IEEE Trans. Circuits Syst. Regul. Pap. 52, 2726–2738. 10.1109/TCSI.2005.857555

- Lin Y. P., Wang C. H., Jung T. P., Wu T. L., Jeng S. K., Duann J. R., et al. (2010). EEG-based emotion recognition in music listening. IEEE Trans. Biomed. Eng. 57, 1798–1806. 10.1109/TBME.2010.2048568

- Logan G. D. (1988). Toward an instance theory of automatization. Psychol. Rev. 95, 492–527. 10.1037/0033-295X.95.4.492

- Luft A. R., Buitrago M. M. (2005). Stages of motor skill learning. Mol. Neurobiol. 32, 205–216. 10.1385/MN:32:3:205

- Luu P., Tucker D. M., Makeig S. (2004). Frontal midline theta and the error-related negativity: neurophysiological mechanisms of action regulation. Clin. Neurophysiol. 115, 1821–1835. 10.1016/j.clinph.2004.03.031

- Mackie M.-A., Van Dam N. T., Fan J. (2013). Cognitive control and attentional functions. Brain Cogn. 82, 301–312. 10.1016/j.bandc.2013.05.004

- Marathe A. R., Lawhern V. J., Wu D., Slayback D., Lance B. J. (2016). Improved neural signal classification in a rapid serial visual presentation task using active learning. IEEE Trans. Neural Syst. Rehabil. Eng. 24, 333–343. 10.1109/TNSRE.2015.2502323

- Mitchell D. J., McNaughton N., Flanagan D., Kirk I. J. (2008). Frontal-midline theta from the perspective of hippocampal “theta.” Prog. Neurobiol. 86, 156–185. 10.1016/j.pneurobio.2008.09.005

- Murphy B., Talukdar P. P., Mitchell T. (2012). Learning Effective and Interpretable Semantic Models using Non-Negative Sparse Embedding. Pittsburgh, PA: MyScienceWork.

- Mutha P. K., Sainburg R. L., Haaland K. Y. (2011). Critical neural substrates for correcting unexpected trajectory errors and learning from them. Brain 134, 3647–3661. 10.1093/brain/awr275

- Nieuwenhuis I. L. C., Takashima A. (2011). The role of the ventromedial prefrontal cortex in memory consolidation. Behav. Brain Res. 218, 325–334. 10.1016/j.bbr.2010.12.009

- Parasuraman R., McKinley R. A. (2014). Using noninvasive brain stimulation to accelerate learning and enhance human performance. Hum. Factors 56, 816–824. 10.1177/0018720814538815

- Parra L. C., Spence C. D., Gerson A. D., Sajda P. (2003). Response error correction – a demonstration of improved human-machine performance using real-time EEG monitoring. IEEE Trans. Neural Syst. Rehabil. Eng. 11, 173–177. 10.1109/TNSRE.2003.814446

- Parsons M. W., Harrington D. L., Rao S. M. (2005). Distinct neural systems underlie learning visuomotor and spatial representations of motor skills. Hum. Brain Mapp. 24, 229–247. 10.1002/hbm.20084

- Petersen S. E., van Mier H., Fiez J. A., Raichle M. E. (1998). The effects of practice on the functional anatomy of task performance. Proc. Natl. Acad. Sci. U.S.A. 95, 853–860. 10.1073/pnas.95.3.853

- Pisella L., Gréa H., Tilikete C., Vighetto A., Desmurget M., Rode G., et al. (2000). An “automatic pilot” for the hand in human posterior parietal cortex: toward reinterpreting optic ataxia. Nat. Neurosci. 3, 729–736. 10.1038/76694

- Poldrack R. A. (2000). Imaging brain plasticity: conceptual and methodological issues – a theoretical review. NeuroImage 12, 1–13. 10.1006/nimg.2000.0596

- Ridderinkhof K. R., van den Wildenberg W. P. M., Segalowitz S. J., Carter C. S. (2004). Neurocognitive mechanisms of cognitive control: the role of prefrontal cortex in action selection, response inhibition, performance monitoring, and reward-based learning. Brain Cogn. 56, 129–140. 10.1016/j.bandc.2004.09.016

- Salas E., Burke C. S., Bowers C. A., Wilson K. A. (2001). Team training in the skies: does crew resource management (CRM) training work? Hum. Factors 43, 641–674. 10.1518/001872001775870386

- Sampaio-Baptista C., Scholz J., Jenkinson M., Thomas A. G., Filippini N., Smit G., et al. (2014). Gray matter volume is associated with rate of subsequent skill learning after a long term training intervention. NeuroImage 96, 158–166. 10.1016/j.neuroimage.2014.03.056

- Satterfield J. M., Hughes E. (2007). Emotion skills training for medical students: a systematic review. Med. Educ. 41, 935–941. 10.1111/j.1365-2923.2007.02835.x

- Sauseng P., Klimesch W., Schabus M., Doppelmayr M. (2005). Fronto-parietal EEG coherence in theta and upper alpha reflect central executive functions of working memory. Int. J. Psychophysiol. 57, 97–103. 10.1016/j.ijpsycho.2005.03.018

- Schettini F., Aloise F., Aricò P., Salinari S., Mattia D., Cincotti F. (2014). Self-calibration algorithm in an asynchronous P300-based brain-computer interface. J. Neural Eng. 11:035004. 10.1088/1741-2560/11/3/035004

- Shadmehr R., Holcomb H. H. (1997). Neural correlates of motor memory consolidation. Science 277, 821–825. 10.1126/science.277.5327.821

- Shalev-Shwartz S., Zhang T. (2013). Stochastic dual coordinate ascent methods for regularized loss minimization. J. Mach. Learn. Res. 14, 567–599.

- Shi L.-C., Lu B.-L. (2013). EEG-based vigilance estimation using extreme learning machines. Neurocomputing 102, 135–143. 10.1016/j.neucom.2012.02.041

- Shiffrin R. M., Schneider W. (1977). Controlled and automatic human information processing: II. Perceptual learning, automatic attending and a general theory. Psychol. Rev. 84, 127–190. 10.1037/0033-295X.84.2.127

- Spearman C. (1928). The origin of error. J. Gen. Psychol. 1, 29–53. 10.1080/00221309.1928.9923410

- Taggart W. R. (1994). Crew resource management: achieving enhanced flight operations, in Aviation Psychology in Practice, eds Johnston N., McDonald N., Fuller R. (Aldershot: Ashgate Publishing Limited), 309–339.

- Tannenbaum S., Yukl G. (1992). Training and development in work organizations. Annu. Rev. Psychol. 43, 399–441. 10.1146/annurev.ps.43.020192.002151

- Toppi J., Borghini G., Petti M., He E. J., Giusti V. D., He B., et al. (2016). Investigating cooperative behavior in ecological settings: an EEG hyperscanning study. PLOS ONE 11:e0154236. 10.1371/journal.pone.0154236

- Toppi J., Mattia D., Anzolin A., Risetti M., Petti M., Cincotti F., et al. (2014). Time varying effective connectivity for describing brain network changes induced by a memory rehabilitation treatment, presented at the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Chicago, IL), 6786–6789. 10.1109/EMBC.2014.6945186

- Touretzky D. S., Mozer M. C., Hasselmo M. E. (1996). Advances in Neural Information Processing Systems 8: Proceedings of the 1995 Conference. Denver, CO: MIT Press.