Significance

People naturally understand that emotions predict actions: angry people aggress, tired people rest, and so forth. Emotions also predict future emotions: for example, tired people become frustrated and guilty people become ashamed. Here we examined whether people understand these regularities in emotion transitions. Comparing participants’ ratings of transition likelihood to others’ experienced transitions, we found that raters have accurate mental models of emotion transitions. These models could allow perceivers to predict others’ emotions up to two transitions into the future with above-chance accuracy. We also identified factors that inform, but do not fully determine, these mental models: egocentric bias; the conceptual properties of valence, social impact, and rationality; and the similarity and co-occurrence between different emotions.

Keywords: emotion, experience-sampling, social cognition, theory of mind

Abstract

Successful social interactions depend on people’s ability to predict others’ future actions and emotions. People possess many mechanisms for perceiving others’ current emotional states, but how might they use this information to predict others’ future states? We hypothesized that people might capitalize on an overlooked aspect of affective experience: current emotions predict future emotions. By attending to regularities in emotion transitions, perceivers might develop accurate mental models of others’ emotional dynamics. People could then use these mental models of emotion transitions to predict others’ future emotions from currently observable emotions. To test this hypothesis, studies 1–3 used data from three extant experience-sampling datasets to establish the actual rates of emotional transitions. We then collected three parallel datasets in which participants rated the transition likelihoods between the same set of emotions. Participants’ ratings of emotion transitions predicted others’ experienced transitional likelihoods with high accuracy. Study 4 demonstrated that four conceptual dimensions of mental state representation—valence, social impact, rationality, and human mind—inform participants’ mental models. Study 5 used 2 million emotion reports on the Experience Project to replicate both of these findings: again people reported accurate models of emotion transitions, and these models were informed by the same four conceptual dimensions. Importantly, neither these conceptual dimensions nor holistic similarity could fully explain participants’ accuracy, suggesting that their mental models contain accurate information about emotion dynamics above and beyond what might be predicted by static emotion knowledge alone.

Humans must navigate a wide variety of stimuli in everyday life, ranging from apples and oranges to automobiles and computer operating systems. However, other humans are perhaps the most consequential stimuli of all, potentially driving the very evolution of the human brain (1). Despite the dazzling array of actions and internal states of which humans are capable, people are remarkably good at understanding each other (2–4). Indeed, the social mind appears particularly attuned to the problem of predicting other people (5). Perceivers make use of a wide variety of perceptible cues (including social context, facial expression, and tone of voice) to infer what emotions others are feeling (6–8), likely because emotions predict behavior (9, 10). However, these perceptual mechanisms only get us so far: we cannot see what expression our friend will wear next week, nor hear tomorrow’s tone of voice. How might we make social predictions beyond the immediate future? Such foresight could convey significant strategic advantages: in the social domain, as in the game of chess (11), success may depend on the depth and breadth of a player’s search through others’ possible future moves. Here we propose that people use a powerful mechanism for gaining insight into others’ future moves, one that capitalizes on an aspect of human affect often overlooked in the scientific literature: emotions predict emotions.

Although the study of state transitions has proven highly successful in animals (12, 13), little work to date has studied how human emotional states transition from one to the next (cf. ref. 14). Nonetheless, research has demonstrated that individual emotions ebb and flow over time with some regularity (15, 16), and that temporal information facilitates social functioning (17). These findings hint that certain emotions may flow into others with some regularity. For example, a person experiencing a positive emotion like awe may be more likely to next experience another positive emotion, such as gratitude, than a very different emotion, like disgust. If people experience regularities in emotion transitions, then others may be able to detect these tendencies. A person who learned these regularities could construct a mental model—a representation of how emotions tend to transition from one to the next—that accurately predicts others’ future emotions.

Here we tested whether people have accurate mental models of others’ emotion transitions. Studies 1–3 measured the actual rates of transitions between emotions using existing experience-sampling datasets (18, 19). These data served as the “ground truth” against which we could test the accuracy of people’s mental models. We collected new data paralleling these ground-truth estimates. In these studies, participants rated the likelihood that each emotion might transition into each other emotion. For example, a participant might be told that another person is currently anxious and then rate the likelihood that the person would next experience a state of calm. We compared participants’ mental models from the rating studies to the experience-sampled transitional probabilities. By applying the same analyses across multiple datasets, we aimed to provide a robust, generalizable assessment of the accuracy of participants’ mental models. Study 4 provided a convergent test of accuracy using Markov modeling over a rich sampling of 60 states.

Study 4 also investigated the conceptual building blocks of participants’ mental models, testing the extent to which transitional probability ratings were informed by four conceptual dimensions from previous research (20): valence (positive vs. negative), social impact (high arousal, social vs. low arousal, asocial), rationality (cognition vs. affect), and human mind (purely mental and human specific vs. bodily and shared with animals).

Finally, study 5 again assessed both the accuracy and the conceptual building blocks of people’s transition models using 2 million mood reports from the Experience Project (21). In addition to replicating studies 1–4, these data allowed us to examine whether participants’ transition models contained information specific to emotional dynamics, above and beyond that which might be predicted by static conceptual knowledge alone.

Results

Measuring Emotion Transitions.

We used three experience-sampling studies to estimate the actual rates of transitions between three sets of emotions. In two studies, participants were prompted via text message to report their mental state once every 3 h during the day for 2 wk; participants in the third study were prompted via a phone app at random times throughout the day for up to 1 y. At each time point, participants indicated which emotions—of a set of 25, 22, or 18 states, respectively (Fig. 1)—they were currently experiencing. Taken together, these data provided 70,642 reports by 10,803 participants. By comparing each emotion report to the next, we determined which emotion transitions they had experienced. The results were log odds that reflected which transitions were more or less likely to occur than expected by chance (Fig. 1 A–C).
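The counting step described above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the studies: it assumes a single emotion per report (participants could in fact endorse several), and the function name `count_transitions` and the toy data are hypothetical.

```python
from collections import Counter


def count_transitions(sequences):
    """Count consecutive (from, to) emotion pairs within each participant.

    Assumption: each participant contributes a time-ordered list with one
    emotion label per report.
    """
    counts = Counter()
    for reports in sequences:
        # Pair every report with the report that immediately follows it.
        for current, following in zip(reports, reports[1:]):
            counts[(current, following)] += 1
    return counts


# Hypothetical toy data: one list of reports per participant.
sequences = [
    ["tired", "frustrated", "calm"],
    ["tired", "frustrated", "frustrated"],
]
counts = count_transitions(sequences)
```

Transitions are counted only within a participant, so the last report of one person is never paired with the first report of the next.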

Fig. 1.

Probability matrices of experience-sampled and rated (mental model) emotion transitions. (A–C) The likelihood of actual transitions between emotions, as measured in three experience-sampling datasets (18, 19). Each cell in the matrix represents the log odds of a particular transition, calculated by counting the number of such transitions and normalizing based on overall emotion frequencies. (D–F) Corresponding mental models of emotion transitions in studies 1–3. Each cell reflects the group-average rating of the likelihood of the corresponding transition from 0 to 100%. Warm colors indicate more likely transitions; cool colors indicate less likely transitions.

We then collected three parallel datasets to measure people’s mental models of emotion transitions. Participants rated the likelihood of transitions between every pair of emotions from the corresponding experience-sampling study (Fig. 1 D–F). Participants were able to reliably report their mental models of emotion transitions, as evidenced by a high degree of consistency across participants (mean interrater rs = 0.47, 0.34, 0.48, all Ps < 0.0001; Cronbach αs = 0.99, 0.98, 0.98).

The Accuracy of Mental Models.

We assessed the accuracy of people’s mental models in four ways. First, we correlated the transition odds from the experience-sampling studies with the mental model of the transition likelihoods, both averaged across all participant ratings, as well as for each individual’s ratings. Each observation in these analyses corresponded to a transition between a particular pair of emotions (Fig. S1 A–C). Second, we measured cross-validated model accuracy as normalized root mean square error (NRMSE) in simple regressions with ratings on the 100-point scale as the dependent variable for cross-study consistency (SI Text). NRMSE values represent the average error of the model, as a proportion of the range of the outcome measure. Baseline NRMSE values from randomized versions of the same models are provided for comparison.
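For concreteness, NRMSE as defined above (average model error as a proportion of the range of the outcome) can be sketched as follows. Whether the range is the 100-point scale or the observed spread of the ratings is an assumption here, so the hypothetical `value_range` parameter makes the choice explicit.

```python
import math


def nrmse(observed, predicted, value_range=100.0):
    """Root mean square error divided by the range of the outcome measure.

    value_range defaults to the width of a 0-100 rating scale (an assumption);
    pass max(observed) - min(observed) to normalize by the observed range.
    """
    squared_errors = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    rmse = math.sqrt(sum(squared_errors) / len(squared_errors))
    return rmse / value_range


# Hypothetical ratings and model predictions on the 100-point scale.
error = nrmse([0.0, 50.0, 100.0], [10.0, 50.0, 90.0])
```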

Fig. S1.

Mental models accurately predict actual emotion transitions in studies 1–3. (A–C) The relationship between the log odds of transitions from three experience-sampling datasets and corresponding mental models of emotion transitions, averaged across participants. Each point corresponds to the transition likelihood between a pair of emotions; dashed lines indicate linear best fit. (D–F) The distributions of accuracy of individual participants’ models of emotion transitions from three datasets. Solid vertical lines indicate the mean correlation coefficient, and dashed lines indicate 95% CI calculated via percentile bootstrapping. (G–I) The accuracy of individual participants’ mental models at each step in a random walk through experience-sampled emotion transition matrices. Horizontal dashed lines indicate accuracy expected from random guessing. Error bars indicate 95% CIs calculated via percentile bootstrapping.

These analyses revealed strong associations between the average mental models and experience-sampling data in all three datasets (Spearman’s ρs = 0.77, 0.68, and 0.79; all Ps < 0.0001, NRMSE = 0.23, 0.17, and 0.25, with NRMSEbaseline = 0.32, 0.24, and 0.30), suggesting that people’s models were highly accurate in aggregate. Individuals’ models were also highly accurate, consistently correlating with the experience-sampling data (Fig. S1 D–F): mean Spearman’s ρs = 0.53, 0.40, and 0.55 [Ps < 0.0001; percentile bootstrap 95% CIs = (0.48, 0.58), (0.35, 0.44), and (0.51, 0.59); Cohen’s ds = 2.41, 1.92, and 2.58, NRMSE = 0.18, 0.12, and 0.18, with NRMSEbaseline = 0.25, 0.17, and 0.22]. An independent set of data from 2 million reports on the Experience Project (21) replicated both findings: mental models were accurate both in aggregate (ρ = 0.32, P < 0.0001) and in individuals [mean ρ = 0.21, P < 0.0001, percentile bootstrap 95% CI = (0.20, 0.22), d = 2.25, NRMSE = 0.29, with NRMSEbaseline = 0.30].
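The percentile bootstrap intervals reported for the mean individual-level correlation can be illustrated with a short sketch. The resampling unit (participants), the number of resamples, and the example correlations are all assumptions made for illustration; they are not taken from the studies.

```python
import numpy as np


def percentile_bootstrap_ci(values, n_boot=5000, level=0.95, seed=0):
    """Percentile bootstrap CI for the mean of per-participant statistics."""
    rng = np.random.default_rng(seed)
    values = np.asarray(values, dtype=float)
    # Resample participants with replacement, one row per bootstrap sample.
    idx = rng.integers(0, len(values), size=(n_boot, len(values)))
    boot_means = values[idx].mean(axis=1)
    tail = (1.0 - level) / 2.0
    lower, upper = np.quantile(boot_means, [tail, 1.0 - tail])
    return float(lower), float(upper)


# Hypothetical per-participant Spearman correlations with ground truth.
rhos = np.array([0.41, 0.55, 0.62, 0.48, 0.37, 0.59, 0.50, 0.66])
lower, upper = percentile_bootstrap_ci(rhos)
```

An interval that excludes zero corresponds to the significance criterion used for the individual-level accuracy estimates.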

In a third analysis of accuracy, we used Markov chain modeling to estimate how many “steps” into the future the participants’ models could accurately predict. This analysis simultaneously initiated random walks at the same emotion state in both the experienced and mental model transitional probability matrices. The walk then continued for four steps through each matrix. We measured whether these walks went to the same emotions at each step. Results indicated that participants’ mental models could significantly predict others’ actual emotions up to two steps into the future in the first and third datasets, and one step forward in the second dataset (Fig. S1 H and I).
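One plausible reading of this random-walk comparison can be sketched as follows. Rather than simulating walks, the sketch computes the expected probability that two independent walks, one through each matrix, occupy the same state at each step; this is an assumption about the procedure, and the row normalization, function names, and toy matrices are hypothetical.

```python
import numpy as np


def row_normalize(matrix):
    """Convert nonnegative scores into row-stochastic transition probabilities."""
    matrix = np.asarray(matrix, dtype=float)
    return matrix / matrix.sum(axis=1, keepdims=True)


def expected_agreement(experienced, rated, start, n_steps=4):
    """Probability that walks through both chains coincide at each step."""
    p = row_normalize(experienced)
    q = row_normalize(rated)
    p_k = np.eye(len(p))
    q_k = np.eye(len(q))
    agreement = []
    for _ in range(n_steps):
        # Advance both chains one step: row `start` holds the state
        # distribution after k steps from the shared starting emotion.
        p_k = p_k @ p
        q_k = q_k @ q
        agreement.append(float(p_k[start] @ q_k[start]))
    return agreement


# Hypothetical three-emotion example.
experienced = [[8, 1, 1], [1, 8, 1], [1, 1, 8]]
rated = [[70, 20, 10], [20, 70, 10], [10, 20, 70]]
agreement = expected_agreement(experienced, rated, start=0)
chance = 1.0 / 3.0  # agreement expected from uniform random guessing
```

Agreement decays toward the overlap of the two stationary distributions as the walks lengthen, which is why predictive accuracy is informative only for the first few steps.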

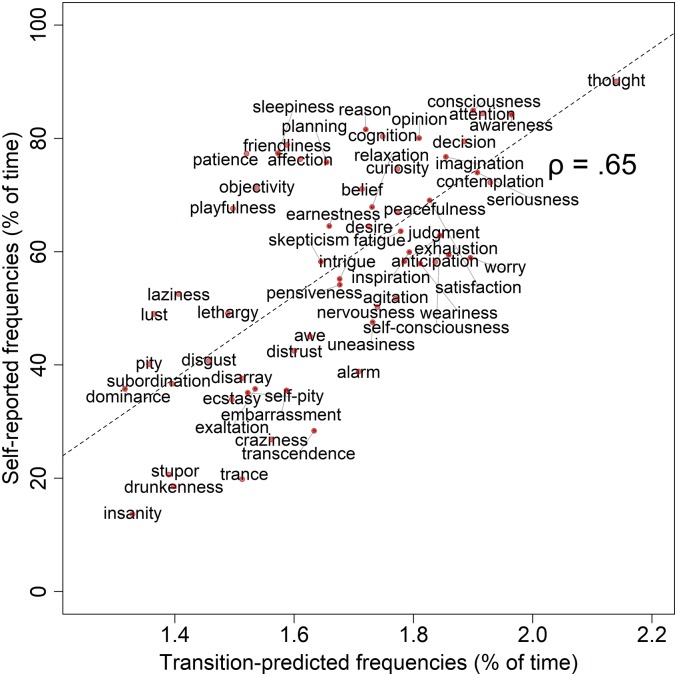

Participants in study 4 provided emotion transition ratings for a new set of 60 mental states. These states were selected from previous work to representatively sample the conceptual space of states, both emotional and cognitive, that people regularly experience (20), thus affording us a fourth approach for testing the accuracy of participants’ mental models. Using Markov modeling, we translated transition ratings into a prediction about the frequency with which people experience each of the 60 states. We observed a substantial correlation (ρ = 0.65, P < 0.0001, NRMSE = 0.19, with NRMSEbaseline = 0.28) between Markov-predicted and self-reported frequencies (Fig. S2), providing convergent evidence for the accuracy of participants’ models of mental state transitions.
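The translation from transition ratings to predicted state frequencies can be sketched as the stationary distribution of a Markov chain. The sketch below assumes that ratings are row-normalized into probabilities and uses simple power iteration; the function name and the two-state example are hypothetical.

```python
import numpy as np


def stationary_distribution(ratings, n_iter=1000):
    """Long-run state frequencies implied by a matrix of transition ratings."""
    p = np.asarray(ratings, dtype=float)
    p = p / p.sum(axis=1, keepdims=True)  # assumption: rows become probabilities
    dist = np.full(len(p), 1.0 / len(p))  # start from a uniform distribution
    for _ in range(n_iter):
        dist = dist @ p  # propagate the distribution one step forward
    return dist


# Hypothetical two-state example (rows: current state, columns: next state).
ratings = [[90, 10], [50, 50]]
frequencies = stationary_distribution(ratings)
```

These predicted frequencies are the quantity that would then be rank-correlated with self-reported frequencies.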

Fig. S2.

Accurate frequency predictions from mental models in study 4. The high correlation between rated mental-state frequencies and the stationary distribution of the mental model Markov chain provides convergent evidence for the accuracy of people’s mental models.

The Conceptual Building Blocks of Mental Models.

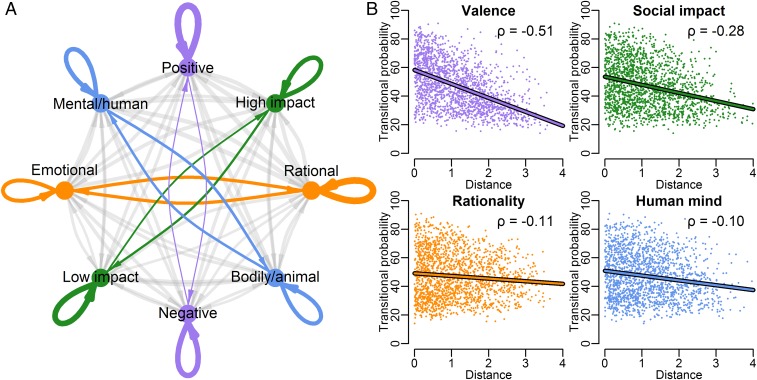

We next tested the extent to which a conceptual understanding of static emotion informs people’s models of emotion transitions. For example, people intuitively understand that some states are positively valenced and other states are negatively valenced (22). If people use this knowledge to make predictions, they might predict that another person will be more likely to transition from a positive state to another positive state than to a negative state (e.g., Fig. 1). In study 4, we tested the extent to which valence informs people’s mental models of emotion transition using the transition ratings between 60 mental states described above. Each state’s valence had been assessed in earlier research (20). If valence informs participants’ transition models, then a pair of states that are highly similar on that dimension (e.g., two neutral states or two negative states) should be highly likely to transition from one to the other. That is, conceptual similarity should correlate with transitional probability ratings. As expected, results revealed that valence strongly informed participants’ transitional probability ratings (partial ρ = 0.51, P < 0.001) (Fig. 2).
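A Spearman partial correlation of this kind can be sketched by rank-transforming every variable, regressing the covariate ranks out of both variables of interest, and correlating the residuals. This is a standard construction offered as an illustration, not necessarily the exact routine used; the simulated similarity scores and ratings are hypothetical.

```python
import numpy as np


def average_ranks(x):
    """Ranks starting at 1, with tied values sharing their average rank."""
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    ends = np.cumsum(counts)       # last ordinal rank within each tie group
    starts = ends - counts + 1     # first ordinal rank within each tie group
    return ((starts + ends) / 2.0)[inverse]


def partial_spearman(x, y, covariates):
    """Spearman correlation of x and y after removing covariate ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    design = np.column_stack(
        [np.ones(len(rx))] + [average_ranks(c) for c in covariates]
    )
    res_x = rx - design @ np.linalg.lstsq(design, rx, rcond=None)[0]
    res_y = ry - design @ np.linalg.lstsq(design, ry, rcond=None)[0]
    return float(np.corrcoef(res_x, res_y)[0, 1])


# Hypothetical data: one row per pair of states.
rng = np.random.default_rng(0)
valence_similarity = rng.random(200)
other_similarity = rng.random(200)
rating = (20 + 60 * valence_similarity + 20 * other_similarity
          + rng.normal(0, 5, 200))
rho = partial_spearman(valence_similarity, rating, [other_similarity])
```

With an empty covariate list the function reduces to an ordinary Spearman correlation.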

Fig. 2.

Mental models of transitions between 60 mental states demonstrate multidimensionality. (A) Nodes in the network graph represent the poles of four psychological dimensions (i.e., states in the upper or lower quartile of each dimension). Transitions between poles are represented by arrows, with thickness proportional to average transitional probability rating. Transitions are more likely within poles (e.g., positive to positive) than between opposite poles (e.g., positive to negative). (B) Scatter plots depict decreasing transition likelihoods as states get further apart on each of the four dimensions, linear best-fit lines, and coefficients from Spearman rank partial correlations, accounting for the influence of the other three dimensions.

In addition to valence, previous research has demonstrated that people are attuned to at least three other conceptual dimensions (Fig. S3) when thinking about others’ static mental experiences (20): “social impact” or whether a state is highly socially relevant or irrelevant (e.g., envy vs. sleepiness); “human mind,” reflecting whether only humans can experience a state or whether other animals can experience it as well, particularly because it is more bodily in nature (e.g., self-consciousness vs. hunger); and “rationality,” indicating whether a state is considered emotional or cognitive (e.g., worry vs. thought). Study 4 tested whether these dimensions likewise inform people’s mental models of emotion transitions. As predicted, each dimension uniquely correlated with participants’ transitional probability ratings (Fig. 2): social impact (partial ρ = 0.28, P < 0.001), human mind (partial ρ = 0.10, P = 0.002), and rationality (partial ρ = 0.11, P = 0.005).

Fig. S3.

Emotion transition networks in a 4D representational space. The network graphs represent likely transitions (>75%) between mental states in study 4. Node colors indicate optimal (modularity maximizing) clusters of states that transition to each other. Descriptive labels for multinode clusters are provided for convenience. Node size indicates how frequently participants experienced the state. The positions of the states reflect where they fall on the psychological dimensions of valence and social impact (A) or rationality and human mind (B). The effects of the dimensions on rated transitions can be observed in the relative sparsity of long-distance links in comparison with short-range links. These effects are also reflected in the spatial clustering of nodes of the same color cluster in the 4D representational space.

Results from study 5 replicated these findings: similarity of valence, social impact, rationality, and human mind all uniquely correlated with rated transitions [mean partial ρs = 0.60, 0.24, 0.09, 0.03; 95% percentile bootstrap CIs = (0.55, 0.64), (0.19, 0.29), (0.06, 0.11), (0.007, 0.05)]. Together, these findings demonstrate that people’s models of emotion transitions are informed by at least four conceptual building blocks. Moreover, three of these dimensions (valence, social impact, and rationality) likewise predicted ground-truth transitional probabilities [mean partial ρs = 0.13, 0.04, 0.06; 95% percentile bootstrap CIs = (0.11, 0.15), (0.02, 0.07), (0.03, 0.09)]. This result suggests that these dimensions may inform mental models precisely because they are derived from observation of actual emotion transitions. Indeed, each of these three dimensions uniquely mediates the relationship between transition ratings and ground truth (SI Text).

Independent Predictive Validity of Transition Models.

The preceding results raise the possibility that people lack unique insight into emotion transitions, and instead rely on their conceptual knowledge of static emotions when rating transitional probabilities. To address this issue, in study 5 we tested the extent to which the accuracy of people’s transition models could be explained by conceptual knowledge of static emotions. To do so, we recalculated the correlation between participants’ ratings and ground truth, controlling for the four conceptual dimensions described above. Results demonstrated that participants have unique knowledge about emotional dynamics: residual accuracy (mean partial ρ = 0.10) remained statistically significant [95% percentile bootstrap CI = (0.09, 0.11)], with a large standardized effect size (d = 1.51).

Next, we examined whether models of emotion transitions could be explained by autocorrelation in emotion reports, by measuring the extent to which transitional probabilities reflect holistic similarity between states. A separate group of participants rated the holistic pairwise similarity between the 57 emotions in study 5. Transition ratings were indeed highly correlated with similarity ratings (ρ = 0.97), suggesting that similarity informs emotion transitions, or vice versa. However, the shared variance (93%) could not fully account for the true variance (reliability) of the transition ratings (α = 0.99). Moreover, the transition ratings retained significant predictive validity with respect to the ground truth, both in aggregate (partial ρ = 0.14, P = 0.003) and in individuals [mean partial ρ = 0.04, P < 0.0001, percentile bootstrap 95% CI = (0.03, 0.05), d = 0.57], when controlling for aggregate similarity ratings. These results provide a broad test of the unique predictive validity of transition models, and together indicate that mental models of emotion transitions cannot be reduced to static emotion concepts or holistic similarity.

SI Text

Power Analysis.

A Monte Carlo simulation power analysis was used to determine appropriate participant numbers for rating data across studies 1–3. This power analysis targeted the most central planned hypothesis test: whether the average correlation between each participant’s transition ratings and the group-level experienced transitions was greater than 0. On each iteration of the simulation, bivariate normal N(0, 1) data were generated based on a given population-level effect size (rs = 0.1, 0.3), corresponding to small and medium correlations between rated and experienced transitional probabilities. To be maximally conservative, data were simulated with respect to the study with the smallest number of states (18, in study 3) yielding 324 observations of each variable. Simulated data for individual participants were generated by adding additional random normal noise (independent and identically distributed) to one of these vectors for Ns ranging from 20 to 100 in increments of 5. The SD of the added noise was set to produce mean interrater reliabilities of 0.1 and 0.3. Each simulated participant’s data were converted to a discrete uniform distribution U(0, 100) to approximate actual ratings, and then Spearman-correlated with the simulated transition odds from the earlier bivariate normal distribution. Resulting correlation coefficients were linearized using Fisher’s r-to-z transformation and entered into a one-sample t test to determine whether they were significantly greater than 0 at the α = 0.05 level. We used t tests in the power analysis, rather than the bootstrapping adopted with the actual data, because of the computational efficiency of the former relative to the latter. This process was repeated for 5,000 iterations at each combination of effect size, sample size, and interrater reliability.
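The simulation described above can be sketched compactly. Several details are assumptions rather than the authors' implementation: the noise SD is derived from the target interrater correlation as sqrt(1/r − 1), the conversion to U(0, 100) is done by rescaling ranks, and the one-sided test uses a normal critical value as an approximation to the t test. The iteration count is reduced so the example runs quickly.

```python
import numpy as np
from statistics import NormalDist


def average_ranks(x):
    """Ranks starting at 1, with tied values sharing their average rank."""
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    ends = np.cumsum(counts)
    return ((2 * ends - counts + 1) / 2.0)[inverse]


def spearman(x, y):
    return np.corrcoef(average_ranks(x), average_ranks(y))[0, 1]


def simulate_power(effect_r, interrater_r, n_participants,
                   n_obs=324, n_iter=200, alpha=0.05, seed=0):
    """Proportion of simulated studies whose mean accuracy exceeds zero."""
    rng = np.random.default_rng(seed)
    # Assumption: raters = shared signal (variance 1) + iid noise, so the
    # mean interrater correlation is 1 / (1 + noise_sd ** 2).
    noise_sd = np.sqrt(1.0 / interrater_r - 1.0)
    # Assumption: normal approximation to the one-sided t critical value.
    critical = NormalDist().inv_cdf(1.0 - alpha)
    cov = [[1.0, effect_r], [effect_r, 1.0]]
    hits = 0
    for _ in range(n_iter):
        truth, signal = rng.multivariate_normal([0.0, 0.0], cov, size=n_obs).T
        z_values = []
        for _ in range(n_participants):
            raw = signal + rng.normal(0.0, noise_sd, n_obs)
            # Rescale ranks onto the integers 0..100 to mimic rating data.
            rating = np.floor((average_ranks(raw) - 1) / n_obs * 101)
            z_values.append(np.arctanh(spearman(rating, truth)))  # Fisher z
        z_values = np.array(z_values)
        t_stat = z_values.mean() / (z_values.std(ddof=1) / np.sqrt(n_participants))
        hits += int(t_stat > critical)
    return hits / n_iter


# Moderate effect size and moderate interrater correlation, 20 raters.
power = simulate_power(0.3, 0.3, n_participants=20, n_iter=50)
```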

The pooled results indicated that the design in question was intrinsically quite well-powered, because of the number of ratings each participant provided. With as few as 20 participants (the smallest sample considered), a combination of moderate effect size (0.3) and moderate interrater correlation (0.3) guaranteed ∼100% power. As expected, decreasing either the effect size or the interrater reliability diminished power, with a superadditive effect of decreasing both simultaneously. The simulation indicated that with an effect size of r = 0.1 and a mean interrater reliability of r = 0.3, 30 participants would provide 80% power. An effect size of r = 0.3 with a mean interrater reliability of r = 0.1 would require 35 participants to provide 80% power. If both the effect size and reliability were small (0.1), then increasing power would be highly inefficient: 35 participants would provide 32% power, but even 100 participants would only yield 47% power. These results suggested that a sample size as low as n = 35 would provide acceptable power, but to improve our estimates of effect sizes we targeted a sample twofold larger: n = 70, after exclusions. This sample size was estimated to provide nearly 100% power if both the interrater correlation and the accuracy effect size were moderate (0.3), 98% power if the interrater correlation was small and the effect size was moderate, 88% power if the interrater correlation was moderate and the effect size was small, and 40% power if both were small. Moreover, these estimates were based on testing only 18 states, consistent with study 3 but conservative for studies 1 and 2.

An additional simulation-based power analysis was conducted for study 5. This power analysis was targeted at one of the key goals of this study: determining whether transitional probability ratings had incremental validity in predicting ground-truth transitions over and above similarity ratings. The simulation paralleled the actual analysis, although simplifying measures were taken for computational tractability: t tests rather than bootstrapping were used to assess statistical significance, simulated responses were drawn from a normal distribution, and Pearson rather than Spearman correlations were used. We fixed the correlation between (group-averaged) simulated transition ratings and simulated ground-truth transitional probabilities to r = 0.7, based on the results of studies 1–3. To be highly conservative with respect to the incremental value of transition ratings, we fixed the correlation between similarity ratings and ground-truth transitional probabilities to r = 0.69, and the correlation between similarity ratings and transition ratings to 0.99. The reliabilities from studies 1–3 were used to determine the signal-to-noise ratio for adding random variance to simulated individual participants. On each iteration of the simulation, we calculated whether the average partial correlation between individual participant transition ratings and ground truth (accounting for group-averaged similarity ratings) was greater than 0. We found that a sample size of 150 would be sufficient to provide 97% power at α = 0.05, even under these conservative assumptions.

Manipulation Check.

A covert attention check was included in the rating paradigm for studies 1–4: a radio button item in the middle of the demographic posttest, which appeared to ask about United States nationality, but actually instructed participants not to respond. Although it was initially our intention to use this as an exclusion criterion, this check proved more difficult for participants than we anticipated, eliciting very high failure rates (42%, 32%, and 49% of otherwise included participants in studies 1–3). Furthermore, we found that the average interrater reliabilities were nearly numerically identical regardless of whether participants who failed this attention check were excluded, suggesting that this check was not related to data quality. Note that this reliability check is not directly related to our hypothesis test, and thus does not bias results. As a result of these indications of the dubious validity of the manipulation check, we retained all participants in the analyzed samples. We subsequently dropped this check in study 5.

Frequency-Normalizing Experienced Transition Matrices.

Raw transitional probability matrices, derived from the experience-sampling data in studies 1–3, were normalized by frequency expectations. Frequency-based expectations for each cell in the transition matrix were calculated by summing the occurrences of each emotion and then multiplying the resulting vector by its transpose. The resulting matrix was divided by its sum and then divided into the transitional probability matrix (also sum-normalized) elementwise.
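The normalization steps above can be sketched as follows. The final log transform is an assumption standing in for the log odds reported in the main text, and the function name and toy counts are hypothetical.

```python
import numpy as np


def normalize_by_frequency(transition_counts, emotion_counts):
    """Ratio of observed transition rates to frequency-based expectations."""
    transition_counts = np.asarray(transition_counts, dtype=float)
    emotion_counts = np.asarray(emotion_counts, dtype=float)
    # Sum-normalize the observed transition matrix.
    observed = transition_counts / transition_counts.sum()
    # Expected rates: frequency vector multiplied by its transpose,
    # then divided by the sum of the resulting matrix.
    expected = np.outer(emotion_counts, emotion_counts)
    expected = expected / expected.sum()
    # Elementwise division of observed by expected.
    return observed / expected


# Hypothetical two-emotion example (rows: from, columns: to).
counts = [[30, 10], [10, 50]]
frequencies = [40, 60]
ratio = normalize_by_frequency(counts, frequencies)
log_ratio = np.log(ratio)  # assumption: log scale; zero counts would give -inf
```

Values above 1 (positive on the log scale) mark transitions that occur more often than emotion frequencies alone would predict.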

Frequency normalization of the experienced transitions was undertaken for two reasons. First, without such normalization, the frequencies would likely dominate other sources of variance in the experienced transition odds matrix. This would have made it difficult to determine whether any observed accuracy resulted from meaningful mental models of emotional transitions per se, or just knowledge of the frequencies. Second, the common tendency toward base-rate neglect led us to anticipate that participants would make relative judgments of transition likelihoods that ignored the global frequencies of each emotion. Failing to normalize for frequencies would thus have unnecessarily contaminated accuracy estimates with a known source of cognitive bias.

Experience Project Transitional Probabilities.

The transitional probabilities that were provided to us were calculated in earlier work (21) by applying an exponential decay model to 2 million mood reports on the website. Thus, all states subsequent to a given emotion were considered, but down-weighted exponentially based on their temporal distance to the emotion report in question. The coefficient of this exponential model was set such that reports “t” days after the initial report would matter half as much as reports “t − 1” days after that report. Thus, the transitional probabilities in study 5 incorporate the time intervals between reports in a relatively naturalistic manner.
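The halving rule described above implies a weight of 0.5 raised to the number of days separating two reports. A minimal sketch of such a weighting follows; it is an illustration of the stated rule, not the model from the earlier work, and the function name, the data layout, and the choice of a weight of 1 at zero days are assumptions.

```python
from collections import defaultdict


def decay_weighted_transitions(reports):
    """Accumulate exponentially down-weighted transitions for one user.

    reports: list of (day, emotion) tuples sorted by day (assumed layout).
    """
    weights = defaultdict(float)
    for i, (day_i, emotion_i) in enumerate(reports):
        # Every later report counts, but each extra day halves its weight.
        for day_j, emotion_j in reports[i + 1:]:
            weights[(emotion_i, emotion_j)] += 0.5 ** (day_j - day_i)
    return dict(weights)


# Hypothetical user with three reports on consecutive days.
weights = decay_weighted_transitions([(0, "sad"), (1, "calm"), (2, "happy")])
```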

The transitional probabilities we were provided were normalized with respect to the state first experienced, but not with respect to the endpoint of the transition. Given that the transitional probabilities were approximately distributed according to a power law (and thus scale-free), we subtracted 1% from these values, took the mean of the values between 0 and 1%, and imputed this mean to undefined cells of the transition matrix. We then normalized with respect to the transition sums of the endpoint emotions. We included only pairs of emotions with bidirectional transitions available, leaving a final set of 456 ground-truth transitions over 57 states.

Frequency Analyses.

Participants’ ratings of their own emotional frequencies in studies 1–3 were subjected to analogous consensus and accuracy analyses as those conducted with the transitional probability ratings. The average correlations among participants for the frequency ratings were rs = 0.19, 0.25, and 0.38, respectively. In each case, the frequency consensus was less than the transitional probability consensus, suggesting that participants had more homogeneous intuitive models than actual emotional experiences. Item analysis of the intuition and experience sampling emotional frequencies yielded correlations of ρs = 0.68, 0.70, and 0.75. Individual participant ratings correlated with the group-level experience ρs = 0.31, 0.38, and 0.48, all of which were statistically significant at P < 0.05 as assessed by percentile bootstrapping.

Out-of-Sample Prediction.

To complement the primary inferential statistical approach in the paper—which relied on nonparametric null-hypothesis significance testing—we also conducted cross-validated prediction with respect to the primary accuracy relations in each study. Fivefold cross-validation was used in each case. In studies 1–3, we cross-validated with respect to both participants and emotions. In the former case, we calculated separate simple regressions predicting each participant in the training set’s responses with transition log odds from the corresponding experience sampling study. Using the average regression parameters (slope and intercept) from these regressions, we then predicted the transition ratings of participants in the left-out test set. This process was repeated iteratively leaving out each of five randomly divided “folds” in the sample. In the latter case (i.e., cross-validating with respect to emotions), on each fold we fit a simple regression predicting group-average transition ratings from experienced transition log odds for the emotions in the training set. We then predicted group-average transition ratings for the left-out emotions using the model fit to the training set. Prediction error for both the participant and emotion cross-validation was calculated using the formula for RMSE. Also, in both cases we computed RMSE for a test set that had been randomly permuted with respect to emotion pairs.
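The cross-validation logic can be sketched as follows. For brevity the sketch folds over transition pairs rather than over emotions or participants, which is a simplification of the procedure described above; the function name and simulated data are hypothetical.

```python
import numpy as np


def cross_validated_rmse(log_odds, ratings, n_folds=5, seed=0):
    """Out-of-sample RMSE and a permuted-test-set baseline."""
    rng = np.random.default_rng(seed)
    log_odds = np.asarray(log_odds, dtype=float)
    ratings = np.asarray(ratings, dtype=float)
    folds = np.array_split(rng.permutation(len(ratings)), n_folds)
    errors, permuted_errors = [], []
    for test_idx in folds:
        train = np.ones(len(ratings), dtype=bool)
        train[test_idx] = False
        # Simple regression fit on the training folds only.
        slope, intercept = np.polyfit(log_odds[train], ratings[train], 1)
        predicted = slope * log_odds[test_idx] + intercept
        errors.append((ratings[test_idx] - predicted) ** 2)
        # Baseline: shuffle the held-out ratings relative to their pairs.
        shuffled = rng.permutation(ratings[test_idx])
        permuted_errors.append((shuffled - predicted) ** 2)
    rmse = float(np.sqrt(np.concatenate(errors).mean()))
    rmse_permuted = float(np.sqrt(np.concatenate(permuted_errors).mean()))
    return rmse, rmse_permuted


# Hypothetical data: ratings that track experienced log odds with noise.
rng = np.random.default_rng(1)
log_odds = rng.normal(0.0, 1.0, 300)
ratings = 50 + 15 * log_odds + rng.normal(0.0, 10.0, 300)
rmse, rmse_permuted = cross_validated_rmse(log_odds, ratings)
```

A lower RMSE for the properly ordered test set than for the permuted one indicates above-chance prediction.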

In study 4, we performed a simpler cross-validation of the correlation between self-reported frequencies and rated transition matrix stationary distribution. In each case, we fit a linear regression to this relationship using four-fifths of the 60 states, and then predicted the self-reported frequencies of the left-out set using the corresponding stationary distribution values and the pretrained model. In study 5, we performed cross-validation with respect to participant in the manner described for studies 1–3, but did not also perform emotion cross-validation because of the sparse nature of the ground-truth transitional probability matrix in this dataset.

Results across all five studies supported the conclusion that participants’ mental models of emotion transitions were indeed accurate. In studies 1–3, the RMSEs in the participant-wise cross-validation were 28.49, 27.36, and 27.58. The corresponding RMSE values with randomly permuted test sets were 34.02, 30.62, and 34.51. RMSEs in the emotion-wise cross-validation were 18.13, 12.06, and 17.90, with RMSEs in the randomized equivalents of 24.83, 16.62, and 21.93. The RMSE of the correlation in study 4 was 14.17, with a randomized baseline of 21.41. The participant-wise RMSE in study 5 was 28.92, and the corresponding randomized RMSE was 29.98. Range-normalized versions of these values are reported in the results section. Note that in every case RMSE was higher for the randomly permuted test sets than for the properly ordered sets in the same analysis, indicating the above-chance performance of the participants’ predictions.

Dimensional Mediation Analysis.

In study 5, we tested whether the four conceptual dimensions examined in study 4 mediated the relationship between transition ratings and ground truth. Two of the three legs of this analysis were completed as described in the main text: correlating dimension ratings with transitional probability ratings, and correlating dimension ratings with ground-truth transitions. The third leg of the mediation examined whether the accuracy relationship between transition ratings and ground truth changed as a function of controlling for the dimensions. In this analysis, we calculated the partial correlation between individual participants’ transition ratings and ground-truth transitional probabilities, controlling for aggregate dimension ratings. We then recalculated this partial correlation leaving out each dimension in turn and assessed the change in each case. Large increases in the accuracy partial correlation as a function of leaving out a dimension (in conjunction with that dimension correlating with both ratings and ground truth) were taken as indicative of statistical mediation of the accuracy. Statistical significance in all portions of the mediation analysis was calculated via bootstrapping. We observed significant increases in the residual accuracy relationship when removing valence, social impact, and rationality from the model, but not human mind [mean change in partial ρs = 0.05, 0.03, 0.01, −0.0008, 95% percentile bootstrap CIs = (0.049, 0.056), (0.028, 0.033), (0.010, 0.013), (−0.001, −0.0005)]. Together with the fact that these dimensions are associated with both transition ratings and ground truth, these results provide evidence that valence, social impact, and rationality each uniquely mediate part of the accuracy of people’s mental models of emotion transitions.

The Egocentricity of Mental Models.

One possible source of inaccuracy is egocentric bias: participants’ own idiosyncratic emotional experiences may influence their intuition about others’. We took a discriminative approach to assessing the effect of egocentrism on participants’ ratings of transitional probabilities. We correlated participants’ frequency ratings with each other, and did the same with participants’ transitional probability ratings. We linearized both correlation matrices using Fisher’s r-to-z transformation, and then correlated the lower triangular portion of these two correlation matrices. The statistical significance of this relationship was assessed by permutation testing. In this case, the rows and columns of the two correlation matrices were permuted, thus treating the participant as the level of independent observation. If participants’ idiosyncratic experiences egocentrically biased their mental models, then participants with similar emotional experiences, as assessed by their frequency reports, should also have similar models. We observed a small but reliable impact of idiosyncratic emotion experiences on mental models, with significant correlations between frequency- and transition-similarity matrices (rs = 0.14, 0.17, 0.18; Ps = 0.0495, 0.016, 0.018) in studies 1–3. This relationship suggests that participants may partially base their models of others’ emotion transitions on their own emotion transition experiences.
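The matrix-comparison logic of this analysis can be illustrated with a short Python sketch. It is not the analysis code: the function names are hypothetical, the test is written as one-sided, and relabeling participants in one matrix is used as the permutation scheme, all of which are assumptions of the sketch.

```python
import math
import random


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def similarity_matrix(profiles):
    """Fisher z-transformed correlations between every pair of participants."""
    n = len(profiles)
    z = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i):
            # atanh is Fisher's r-to-z; it is undefined when |r| = 1.
            z[i][j] = z[j][i] = math.atanh(pearson(profiles[i], profiles[j]))
    return z


def lower_triangle(matrix, order=None):
    """Vectorize the lower triangle, optionally after relabeling participants."""
    n = len(matrix)
    order = list(range(n)) if order is None else order
    return [matrix[order[i]][order[j]] for i in range(n) for j in range(i)]


def egocentricity_test(frequency_profiles, transition_profiles, n_perm=1000, seed=0):
    """Correlate the two participant-similarity matrices; permutation P value."""
    rng = random.Random(seed)
    freq_sim = similarity_matrix(frequency_profiles)
    trans_vec = lower_triangle(similarity_matrix(transition_profiles))
    observed = pearson(lower_triangle(freq_sim), trans_vec)
    order = list(range(len(frequency_profiles)))
    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(order)  # rows and columns move together
        if pearson(lower_triangle(freq_sim, order), trans_vec) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)
```

Permuting participant labels, rather than individual matrix cells, keeps the participant as the unit of independent observation.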

Co-Occurrence Analysis.

For studies 1–3 we calculated emotion co-occurrence matrices using the same method used to calculate transitional probability log odds. In each case, we observed large correlations between these co-occurrence matrices and the corresponding transitional probability matrices (ρs = 0.97, 0.90, 0.99). We also observed high correlations between the co-occurrence matrices and group-average transition ratings (ρs = 0.82, 0.72, 0.82). The association between transition ratings and ground truth appeared to be fully mediated by the variance these variables shared with the co-occurrence matrices, as the average partial correlations were not significantly greater than 0 when controlling for co-occurrence odds. These results suggest that people may take advantage of the very high ecological correlation between co-occurrences and transitional probabilities by using their knowledge of the former (which is easier to acquire, requiring only a single observation) to inform their judgments of the latter. However, it should be noted that there were considerable gaps between the proportions of true variance in the group-average mental models (i.e., their reliabilities) and the proportions of variance explained in these models by the co-occurrences. Thus, the transition models are not themselves completely explained by co-occurrence. Indeed, it is mathematically impossible for the co-occurrences to explain certain features of the mental models, such as the robust asymmetries in transitional probabilities for particular pairs of states or the variability along the diagonal of the rated transitional probability matrix.

Analysis of Residuals in the Frequency–Stationary Distribution Relationship.

We observed a substantial correlation between the emotion frequencies participants self-reported in study 4 and the stationary distribution calculated from their transition ratings, but it is worth considering whether any identifiable factors account for the errors in this model. To this end, we recalculated the correlation as a simple regression with self-reported frequencies as the dependent variable and extracted the residuals. We then calculated the correlations between these residuals and the four dimensions of mental state representation we consider in studies 4 and 5. We found that rationality (r = 0.36) and valence (r = 0.47) were positively correlated with these residuals, suggesting that the stationary distribution overpredicted the self-reported frequencies of affective (vs. cognitive) states and negative states. The dimension of human mind was negatively correlated with residuals (r = −0.26), suggesting that uniquely human mental states were also overpredicted. Social impact expressed a very small correlation (r = 0.03) with residuals, indicating that this dimension was not related to the accuracy of the stationary distribution.

Exponential Decay Modeling.

Following ref. 21, we fit exponential decay models to the experience-sampling data in studies 1–2 to explore the characteristic time-scale of the emotions under investigation. These data were particularly well suited to this analysis, as the experience-sampling was relatively frequent (every 3 h), and there were large numbers of within-participant reports (>70), in comparison with the experience-sampling data in study 3. Within participant, and for each state in each study, we calculated the time between an emotion being reported and all subsequent experience-samples, while also calculating whether the emotion was present at the time of those subsequent reports. We then fit an exponential decay model consisting of a binary logistic regression predicting whether the emotion was present or absent at the subsequent timepoint. The single predictor in this model was the natural logarithm of the time difference between pairs of reports.

All but one emotion was best fit by a negative coefficient, indicative of decay in the probability of recurrence of an emotion over time (Fig. S4A). The single exception to this rule was the state of “calm” in study 1. This exception might occur because participants viewed calm as a neutral baseline state to which they would return by default, thus leading to an increasing probability of recurrence over time. The otherwise universal decay of states suggests that the experiences under study cannot be reduced to trait or dispositional tendencies to report certain states.

Fig. S4.

Exponential decay and half-lives of emotions. The figures represent statistics of the exponential decay of emotions in the experience-sampling data from studies 1 (red) and 2 (blue). In A, the characteristic decay curves from binary logistic regressions are plotted for each of the emotions in these two studies. Overall frequencies were subtracted from the curves to adjust for the different base rates of each emotion. For all but one emotion (calm) the fitted models indicate decay: that is, decreasing probability of recurrence with time. In B, the boxplots illustrate the distribution of emotional “half-lives” derived from these exponential decay models. The half-life is the time it takes for the probability of recurrence to drop below 50%, again correcting for base-rates by subtraction. Outlier emotions with long half-lives are individually labeled.

Using the logistic regression fits, we were able to calculate the emotional “half-life” of each state. This value corresponded to the time it took for the emotion to decay 50% of the time on average, correcting for its baseline frequency. A one-dimensional optimization procedure probed the fitted models to find the point that yielded the appropriate recurrence rates. The resulting half-lives were almost all less than 1 h, with most in the range of 5 min or less (Fig. S4B). Given that the experience-sampling rate was only once every 3 h, these estimates are naturally extrapolations from the observed data. However, these extrapolations rely only on the assumption that emotions undergo exponential decay, which is minimal, plausible, and has precedent in the literature (21). The short characteristic time-scales of these states suggest that they are indeed emotions in the typical sense, rather than more temporally extended moods. This result also suggests that very high density or continuous experience-sampling would likely yield much higher signal-to-noise ratios in studying emotion transitions. The results observed in the present investigation rely solely on the long tails of the exponential decay distributions for observable signal, whereas much of the meaningful variance occurs on a shorter time-scale.
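The half-life search can be illustrated with a small Python sketch. It assumes a fitted model of the form P(recurrence) = logistic(intercept + slope × ln t) and defines the half-life as the time at which the recurrence probability, minus the base rate, equals 0.5. That reading of the baseline correction, the bisection search, and all names and coefficient values are assumptions of the sketch rather than details given in the text.

```python
import math


def recurrence_probability(t_hours, intercept, slope):
    """Logistic model with ln(time) as the single predictor."""
    z = intercept + slope * math.log(t_hours)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # numerically stable branch for negative z
    return ez / (1.0 + ez)


def half_life(intercept, slope, base_rate, target=0.5, lo=1e-6, hi=1e4, tol=1e-10):
    """Time (h) at which base-rate-corrected recurrence falls to `target`.

    Uses bisection on ln(time). Returns None when the model shows no decay
    or when the crossing lies outside the search interval [lo, hi].
    """
    def excess(t):
        return recurrence_probability(t, intercept, slope) - base_rate - target

    if slope >= 0 or excess(lo) < 0 or excess(hi) > 0:
        return None
    log_lo, log_hi = math.log(lo), math.log(hi)
    while log_hi - log_lo > tol:
        mid = (log_lo + log_hi) / 2
        if excess(math.exp(mid)) > 0:
            log_lo = mid  # still above target: half-life is later
        else:
            log_hi = mid
    return math.exp((log_lo + log_hi) / 2)


# Hypothetical coefficients, for illustration only.
example = half_life(intercept=-1.0, slope=-0.5, base_rate=0.1)
```

Because the fitted curve is monotone when the slope is negative, any one-dimensional root finder would serve equally well here.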

Full Transition Rating Task Instructions.

Below we reproduce the full instructions presented to each participant at the beginning of the transition rating task. In this case the instructions were taken from study 5, but very similar instructions were used for each experiment.

People can experience many different emotions. These emotional states are not static. Instead, they change gradually over time. We are interested in your thoughts on how one emotion may lead to another. So for example, if a person feels tired one moment, what are the chances that they will next feel excited? Or what are the chances that they will next feel sleepy instead?

In this study you will be presented with pairs of emotions. The first emotion denotes a person’s current state; the second emotion denotes an emotional state that person could potentially feel next. Your task is to estimate the likelihood of a person currently feeling the first emotion subsequently feeling the second emotion. For this example, what is the chance of a person currently feeling tired next feeling excited?

For example, this transition will be presented as:

Tired → Excited

You will make your rating on a scale from 0 to 100%, where 0% means that there is zero chance that a person feeling tired will feel excited next, and where 100% means that a person feeling tired now will definitely feel excited next.

Discussion

Despite the importance of knowing others’ thoughts, feelings, and behaviors, psychologists know very little about how people predict others’ mental states. The current research investigated one strategy for predicting how others might feel in the future: mentally modeling their emotion transitions. Across five studies, we observed consistent evidence that people have highly accurate mental models of others’ emotion transitions. These models could allow people to predict others’ emotions better than chance up to two transitions into the future. Indeed, almost all participants reported models that were positively correlated with experienced emotion transitions, suggesting that typical adults almost universally have an accurate mental model of others’ emotion transitions. Together, these results suggest that people have considerable insight into how emotions change from one to another over time.

People’s models of emotion transitions were shaped by four conceptual dimensions—valence, social impact, rationality, and human mind—as well as holistic similarity more broadly. Thus, people believe that transitions are more likely between conceptually similar, rather than dissimilar, states. Importantly, three dimensions—rationality, social impact, and valence—shape not only transition likelihood ratings; they also reflect actual emotion transitions. These dimensions each uniquely mediate the accuracy of participants’ mental models. We previously established that the brain is particularly attuned to these same three dimensions when thinking about others’ mental states (20). The fact that the dimensional space that the brain uses to encode emotions also facilitates prediction supports a predictive coding account of mental state representation (5), and the foundational role of these particular dimensions to that end.

That said, conceptual similarity could not fully explain mental model accuracy. People have insight into emotional dynamics beyond their understanding of static affect. People’s models remained accurate when accounting for all four conceptual dimensions or when accounting for holistic similarity judgments. Indeed, any account of emotion transitions based on similarity is likely to be incomplete, because similarity would predict symmetrical relationships but emotion transitions can be asymmetric (e.g., people expect the transition from drunkenness to sleepiness more than the reverse). The correlation between transition and similarity ratings could suggest that similarity informs the transition models. However, the converse may also be true: experience with emotional dynamics could shape perceptions of emotional similarity.

Although people’s mental models were highly accurate, they were also somewhat egocentric (SI Text). People often draw on their privileged access to their own mental states when making inferences about others (23–25). This strategy should work particularly well when a person’s emotion transitions mirror those found in the population. However, when a person experiences atypical emotion transitions, drawing on egocentric knowledge may serve instead as a source of bias and error. We also find that people’s judgments of transition likelihoods are correlated with co-occurrence rates (SI Text). Thus, observing these co-occurrences may be another mechanism for acquiring accurate, although egocentric, models of emotion transitions.

People’s insights into others’ emotion transitions may translate into real-world social success. By predicting emotional states, a perceiver has leverage for predicting future behavior, a major advantage in navigating the social world. Studying social ability often poses a challenge because social accuracy is difficult to objectively assess. Emotion transitions offer observable data against which to ground perceivers’ judgments. The accuracy of people’s models of emotion transitions might thus provide a useful assay of real-world social ability, stratifying typically developing adults, quantifying social deficits in clinical populations (26), or tracking social abilities across development. However, it is worth noting that participants in the current study did not make predictions in a naturalistic context. Although it is impressive that participants were able to make accurate transition predictions even in the absence of knowledge about the person and situation, future research should consider such factors to understand how emotion transition models contribute to real-world social functioning.

The structure of people’s experienced state transitions was consistent across long time scales, suggesting that people may be able to use similar mental models for states, moods, or even traits. That said, analysis of the experience-sampling data (SI Text) suggests that the constructs we investigate here are states rather than traits. Exponential decay models (21) demonstrate that the emotions indeed become less and less likely to recur over increasing time intervals; in fact, most “emotional half-lives” are less than an hour (Fig. S4). However, as with radioactivity, some emotional experiences last for many half-lives. The long tails of the exponential distributions provide reliable signal in the present studies, despite limited temporal resolution.

Emotions are dynamic by nature. They fluctuate over time and transition from one to the next. Fully capturing these emotion dynamics demands a dynamic framework. To meet this demand, we introduced the concept of Markov chains to the study of emotion transitions. Markov chains provide an apt formal characterization of how emotions change over time. Our work provides two initial demonstrations of their utility: estimating the real-world foresight mental models might confer (studies 1–3), and testing the accuracy of these models through frequency predictions (study 4). We believe this mathematical framework may facilitate further insights into how people predict each other and affective experience more broadly.

People must anticipate the thoughts, feelings, and actions of other people to function in society. Such predictions help us to navigate commonplace social problems, such as reputation-management, or finding common ground for communication. Much previous work has investigated the perception of social information; here we begin to investigate how people use this information for social prediction. We show that people have accurate—although slightly egocentric—multidimensional mental models of how other people’s emotions flow from one to another. This ability to see into others’ affective future may be one way by which humans achieve their impressive social abilities. Whether attempting to comfort a loved one, outmaneuver a rival, sell a product, or pursue a scientific collaboration, the foresight granted by accurately predicting others’ emotions may prove essential for success.

Materials and Methods

Participants.

Data from three previously published emotion experience-sampling studies (studies 1 and 2 from ref. 19, study 3 from ref. 18) were obtained via correspondence with the authors. The first two datasets each comprised 40 participants, all recruited in the United States and performing the study in English. The third dataset comprised 12,211 participants, of which we excluded 103 for incomplete ratings and 1,385 for providing only a single set of ratings (because at least two were necessary to calculate any transitions), leaving a final n = 10,723. Participants lived in France and Belgium, and completed the study in French. These datasets provided ground truth for how individuals transition among sets of 25, 22, and 18 emotions, respectively. A fifth dataset provided estimates of ground-truth transitional probabilities based on 2 million emotion reports from the Experience Project (21) (www.experienceproject.com).

A power analysis was conducted via Monte Carlo simulation to determine appropriate sample sizes for the matched mental model ratings in studies 1–3; study 5 used a power analysis specifically targeting incremental validity (SI Text). Participants in the rating studies were recruited via Amazon Mechanical Turk, with availability restricted to those in the United States and with 95% or greater approval rates. We excluded data from participants who indicated that English was not their native language, had an imperfect grasp of English (self-reporting less than 7 on a seven-point scale), or did not comply with the task (i.e., providing 10 or fewer “unique” responses on the continuous response scale). These exclusions yielded final sample sizes of 74, 76, 102, 302, and 151 for the transitional probability ratings tasks in studies 1–5, 149 for the similarity judgment task in study 5, and 172 for the dimension judgments in study 5 (see Table S1 for exclusion and demographic breakdowns). Participants in all of the online ratings studies provided informed consent in a manner approved by the Institutional Review Board at Princeton University.

Table S1.

Demographic breakdown and exclusions for samples

| Study | Rating | Total sample size (n) | Language exclusions (n) | Unique response exclusions (n) | Final sample size (n) | Female (n) | Male (n) | Mean age (y) | Minimum age (y) | Maximum age (y) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Transitions | 80 | 6 | 0 | 74 | 38 | 36 | 35.4 | 20 | 66 |
| 2 | Transitions | 82 | 6 | 0 | 76 | 51 | 25 | 32.1 | 19 | 59 |
| 3 | Transitions | 109 | 6 | 1 | 102 | 53 | 49 | 34.5 | 19 | 69 |
| 4 | Transitions | 337 | 32 | 3 | 302 | 183 | 119 | 38.0 | 19 | 73 |
| 5 | Transitions | 152 | 1 | 0 | 151 | 69 | 81 | 36.6 | 20 | 70 |
| 5 | Similarity | 154 | 1 | 4 | 149 | 70 | 79 | 36.4 | 19 | 70 |
| 5 | Rationality | 44 | 1 | 1 | 42 | 21 | 21 | 38.4 | 22 | 66 |
| 5 | Social impact | 46 | 1 | 3 | 42 | 24 | 18 | 38.7 | 21 | 67 |
| 5 | Valence | 49 | 2 | 2 | 45 | 19 | 26 | 37.0 | 21 | 60 |
| 5 | Human mind | 47 | 0 | 4 | 43 | 23 | 20 | 37.4 | 22 | 67 |

Procedure.

Participants in studies 1–2 were prompted via text message to report their mental state every 3 h during the day for 2 wk. Each time, participants reported the degree to which they were experiencing each emotion using six-point Likert scales. Participants reported an emotion in 99.9% of samples. The 25 emotions in study 1 were gloomy, sad, grouchy, failure, irritable, head-full, tense, emotional, full-thought, withdrawn, anxious, sluggish, unrestrained, assertive, energetic, cheerful, pleased, steady, relaxed, uncluttered, alert, happy, satisfied, confident, and calm. The 22 emotions in study 2 were temperamental, jittery, anxious, insecure, upset, touchy, irritable, bold, intense, nervous, full-of-pep, distressed, vigorous, excited, strong, talkative, stirred-up, lively, attentive, alert, quiet, and happy.

Participants in the third experience-sampling dataset were probed via a phone app at random times throughout the day. Participants selected the hours within which they wished to be contacted, with a default setting of 7 d per week from 9:00 AM to 10:00 PM, as well as the number of questionnaires they received per day, with a default of 4, minimum of 1, and maximum of 12. Unlike studies 1–2, in study 3 there was considerable heterogeneity in sampling frequency. The impact of the time interval on transitions was minimal: the ground-truth transition odds with <1-d intervals and <2-d intervals were correlated at ρ = 0.996. The emotion survey was embedded within a larger menu of surveys, and asked participants which of 18 emotions (9 positive and 9 negative) they were currently experiencing: pride, love, hope, gratitude, joy, satisfaction, awe, amusement, alertness, anxiety, contempt, offense, guilt, disgust, fear, embarrassment, sadness, and anger. This list was based on the modified differential emotion scale and its French translation (27, 28). The median participant completed four emotion reports (range: 2, 257), with a median separation of 56.8 h (range = 29 s to 432 d). A total of 65,629 ratings were provided. Participants could report multiple emotions per survey (median = 2). Emotion reports were binary choices.

The Experience Project is a website devoted to sharing personal stories about life experiences. Users share experiences at will, including mood updates in the form of affect labels from a large menu of states. In previous research (21), these mood updates were entered into a computational model to calculate transitional probabilities between states. The owners of these data provided us with these transitional probabilities.

Participants in all rating tasks were recruited on Amazon Mechanical Turk. Participants rated the likelihood of transitions between pairs of states (SI Text for full instructions). In each trial, participants saw the names of two states connected by an arrow: for example, “anxious → calm.” They were told that the state on the left of the arrow was a person’s current state and the state on the right was a state the person might experience next. They rated the likelihood of the transition from the first state to the next on a continuous scale from 0 to 100%. Instructions did not include reference to any specific time interval. In studies 1–3, each participant rated all possible transitions between the sets of 25, 22, or 18 states, for a total of 625, 484, or 324 ratings, presented in random order. In study 4, participants responded to only a subset of 325 transitions. In study 5, participants rated the likelihood of the 456 transitions for which we had bidirectional ground-truth estimates. In studies 1–4 participants also rated how frequently they personally experienced all of the states in question. Participants also reported gender, age, native language, and English fluency.

Statistical Analysis.

All data were analyzed using R (29), with data and code available on the Open Science Framework (https://osf.io/zrdpa/) with the exception of the Experience Project data, which are privately owned. We first converted ratings from the first two experience-sampling datasets into categorical outcomes (i.e., emotion present or not) by binarizing responses at the scale midpoint. We tabulated transitions in studies 1–3 by examining the states reported at consecutive time points. For example, if a participant reported feeling grouchy at time t, and sluggish at time t + 1, we would increment the grouchy-to-sluggish transition count by 1. The transition count matrix was then normalized by frequency-based expectations (SI Text), producing a matrix that indicated the odds of each transition relative to chance. Raw odds values were log-transformed. Data from the Experience Project were provided to us in the form of a sparse transitional probability matrix, thresholded at transitions of 1% likelihood or more. These transitional probabilities were calculated via a computational model which took time-delay into account via exponential decay (SI Text).
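The tabulation step can be sketched in Python as follows. The counting rule follows the grouchy-to-sluggish example above; the normalization is described only in the SI Text, so the version here (observed count divided by the count expected if origin and destination states were independent, with a small pseudocount to avoid taking the log of zero) is an assumption, as are the function and parameter names.

```python
import math
from collections import Counter


def transition_log_odds(sequences, states, pseudocount=0.5):
    """Tabulate transitions between consecutive reports and compare to chance.

    sequences: one list per participant; each element is the set of states
               present at that time point (after binarization).
    Returns (counts, log_odds), both keyed by (from_state, to_state).
    The chance model and the pseudocount are assumptions of this sketch.
    """
    counts = Counter()
    from_totals = Counter()
    to_totals = Counter()
    total = 0
    for reports in sequences:
        for current, following in zip(reports, reports[1:]):
            for a in current:
                for b in following:
                    counts[(a, b)] += 1
                    from_totals[a] += 1
                    to_totals[b] += 1
                    total += 1
    log_odds = {}
    for a in states:
        for b in states:
            expected = from_totals[a] * to_totals[b] / total if total else 0.0
            log_odds[(a, b)] = math.log(
                (counts[(a, b)] + pseudocount) / (expected + pseudocount)
            )
    return counts, log_odds
```

Positive values mark transitions observed more often than the chance model expects, and negative values mark transitions observed less often.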

We calculated the participants’ consensus (interrater r) in each rating dataset, with P values calculated for studies 1–3 via permutation testing. We also measured the reliability of group-averaged transitional probability ratings via interparticipant standardized Cronbach’s α. To assess the accuracy of participants’ mental models in studies 1–3 and 5, we Spearman-correlated the mental model transition ratings with experience-sampling transitional log odds. This analysis was performed both on the average transition ratings across participants, and individually on data from each participant. We assessed the statistical significance of the mean correlation via bootstrapping and permutation testing.
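Two of the quantities named above have compact standard forms, sketched here in Python. Standardized Cronbach's α depends only on the number of raters and their mean intercorrelation, and a percentile bootstrap resamples participants with replacement. The function names, the number of resamples, and the 95% level are illustrative assumptions.

```python
import random


def standardized_alpha(n_raters, mean_interrater_r):
    """Standardized Cronbach's alpha: k * r / (1 + (k - 1) * r)."""
    k, r = n_raters, mean_interrater_r
    return k * r / (1 + (k - 1) * r)


def bootstrap_mean_ci(values, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the mean of `values`
    (e.g., one accuracy correlation per participant)."""
    rng = random.Random(seed)
    n = len(values)
    means = sorted(sum(rng.choices(values, k=n)) / n for _ in range(n_boot))
    tail = (1 - level) / 2
    lower = means[int(tail * n_boot)]
    upper = means[int((1 - tail) * n_boot) - 1]
    return lower, upper
```

A mean accuracy correlation would be judged reliably above zero under this scheme when the lower bound of the interval exceeds zero.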

In studies 1–3, we used Markov chain modeling to measure the affective foresight that participants gained by using their mental models of emotion transitions. To do so, we simultaneously initiated random walks at the same state in experienced and mental model transitional probability matrices. For each of 10,000 random walks, starting at a randomly chosen state, a sequence of transitions was simulated by walking through the experienced transition matrix. We simulated random walks starting at the same state for each rating task participant, using their transitional probability matrix. At each of four steps in the walk, we calculated whether a participant’s mental model yielded a correct prediction regarding the simulated experiencer’s emotional state. For each participant, we calculated the proportion of accurate predictions at each step across all 10,000 walks. We then bootstrapped these proportions across participants to determine whether the average accuracy at each step was above chance (1/N emotions). Accuracy at each step was not dependent on the path; thus, a participant’s model could have erred at the first step but still have been correct at the second.
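The paired random walks can be illustrated with a short Python simulation. This is a sketch under stated assumptions, not the analysis code: the function name is hypothetical, and the two walks are advanced independently from a shared starting state, which is one reading of the procedure described above.

```python
import random


def foresight_accuracy(experienced, mental_model, n_walks=10000, n_steps=4, seed=0):
    """Proportion of walks on which the mental-model walk matches the
    experienced walk at each step.

    Both arguments are square matrices of nonnegative transition weights.
    Rows need not sum to 1 because random.choices rescales weights.
    """
    rng = random.Random(seed)
    n = len(experienced)
    states = range(n)
    hits = [0] * n_steps
    for _ in range(n_walks):
        actual = predicted = rng.randrange(n)  # shared random starting state
        for k in range(n_steps):
            actual = rng.choices(states, weights=experienced[actual])[0]
            predicted = rng.choices(states, weights=mental_model[predicted])[0]
            # Scored step by step, so an early miss does not preclude a later hit.
            hits[k] += actual == predicted
    return [h / n_walks for h in hits]
```

A mental model with uniform rows should score near 1/N at every step, which gives the chance level against which each participant's accuracy is compared.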

We also used Markov chain modeling to assess accuracy in study 4. This dataset was not paired with an extant experience-sampling dataset, so a direct test of accuracy was impossible. However, we tested accuracy indirectly, translating the average transitional probability matrix into predictions about emotional frequencies. This process is known as calculating the stationary distribution of the Markov chain. The stationary distribution reflects the proportion of time that the agent spends in each state over an indefinite random walk. This random walk can be simulated by multiplying the (row-sum–normalized) transitional probability matrix by itself until the marginal values converge. We approximated this by raising the matrix to a high exponential power (i.e., ref. 10). We then Spearman-correlated the stationary distribution with the average rated frequencies of each of the 60 states in the dataset to complete this test of the accuracy of participants’ mental models. This approach was not possible with studies 1–3 because calculating a Markov chain’s stationary distribution can be biased if the set of observed states does not represent the true underlying Markov space. The incomplete transitions available in study 5 also precluded this technique.
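A minimal Python sketch of the stationary-distribution calculation is given below. It row-normalizes the rating matrix and approximates a high matrix power by repeated squaring. The number of squarings and the function names are assumptions, and the approach presumes a chain for which the rows of the matrix power converge.

```python
def normalize_rows(matrix):
    """Scale each row to sum to 1 so that it is a probability distribution."""
    return [[value / sum(row) for value in row] for row in matrix]


def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def stationary_distribution(matrix, n_squarings=10):
    """Approximate the stationary distribution by computing P ** (2 ** n_squarings).

    For a chain that converges, every row of the resulting matrix is
    (approximately) the stationary distribution, so the first row is returned.
    """
    p = normalize_rows(matrix)
    for _ in range(n_squarings):
        p = matmul(p, p)
    return p[0]
```

For a two-state chain with rated likelihoods [[90, 10], [50, 50]], the balance condition 0.1 × π₁ = 0.5 × π₂ implies a stationary distribution of (5/6, 1/6), which this routine recovers.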

In study 4, we tested whether each of four conceptual dimensions shaped participants’ mental models of emotion transitions. These dimensions—rationality, social impact, valence, and human mind—were principal components derived from ratings of the dimensions of extant theories of mental state representation in earlier research (20). To assess their influence, we first converted each of the four dimensions into distance predictions by taking the absolute differences of each pair of mental states on each dimension. We then calculated the average transitional probability matrix across all participants in the fourth rating study. For this purpose, the transitional probability matrix was made symmetric by averaging it with its transpose. The lower triangular component of the transition matrix was vectorized and Spearman-correlated with the distance predictors generated from each of the four dimensions. Partial correlations were calculated for each dimension, controlling for the influence of the other three. Statistical significance was assessed by permuting the rows and columns of the matrices.
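The core of this analysis (distance predictions, symmetrization, lower-triangle vectorization, and Spearman correlation) can be sketched in Python for a single dimension. Partial correlations and the permutation test are omitted for brevity, and all names are hypothetical.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    return pearson(average_ranks(x), average_ranks(y))


def transition_vs_dimension(transitions, scores):
    """Spearman correlation between symmetrized transition ratings and the
    absolute difference between states on one conceptual dimension."""
    n = len(scores)
    # Average the matrix with its transpose to make it symmetric.
    sym = [[(transitions[i][j] + transitions[j][i]) / 2 for j in range(n)]
           for i in range(n)]
    pairs = [(i, j) for i in range(n) for j in range(i)]  # lower triangle
    rated = [sym[i][j] for i, j in pairs]
    distance = [abs(scores[i] - scores[j]) for i, j in pairs]
    return spearman(rated, distance)
```

A negative correlation indicates that transitions are rated as more likely between states that lie close together on the dimension.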

In study 5, we conducted two analyses to determine whether participants’ transition ratings reflected specific insight into emotion dynamics, or whether they depended entirely on knowledge about static emotion. First, we examined the residual accuracy relationship controlling for the four conceptual dimensions described above by bootstrapping the average partial correlation between individual participant ratings and experienced transitions, controlling for all four dimensions. Second, we assessed the incremental validity of transition ratings in predicting ground truth after controlling for similarity. To do so, we aggregated similarity ratings across participants, and calculated the partial correlation between transition ratings (individual and aggregated) and ground-truth transitional probabilities, accounting for aggregate similarity.
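The incremental-validity logic can be illustrated with a first-order partial Spearman correlation, sketched below in Python for a single control variable (e.g., aggregate similarity). Controlling for all four dimensions at once would require a multiple-covariate extension not shown here. The function names are hypothetical, and the closed-form partial correlation formula is a standard identity rather than a detail taken from the text.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    return pearson(average_ranks(x), average_ranks(y))


def partial_spearman(ratings, ground_truth, control):
    """Spearman correlation of ratings with ground truth, partialling out one
    control variable. Undefined if the control is perfectly rank-correlated
    with either of the other two variables."""
    r_xy = spearman(ratings, ground_truth)
    r_xz = spearman(ratings, control)
    r_yz = spearman(ground_truth, control)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))
```

If transition ratings carried no information beyond the control variable, this partial correlation would be expected to fall to approximately zero; a reliably positive value indicates incremental validity.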

Acknowledgments

We thank Ceylan Ozdem and Miriam Weaverdyck for their assistance; Joshua Wilt, Jordi Quoidbach, Moritz Sudhof, and Andrés Emilsson for sharing their datasets; and Mina Cikara, Meghan Meyer, and Betsy Levy-Paluck for comments on earlier versions of this manuscript. M.A.T. was supported by The Sackler Scholar Programme in Psychobiology.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission. A.K.A. is a guest editor invited by the Editorial Board.

Data deposition: The data and code reported in this paper are available on the Open Science Framework repository, https://osf.io/zrdpa/.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1616056114/-/DCSupplemental.

References

- 1. Dunbar RI. The social brain hypothesis. Brain. 1998;9:178–190.

- 2. Ickes WJ, editor. Empathic Accuracy. Guilford Press; New York: 1997.

- 3. Zaki J, Bolger N, Ochsner K. It takes two: The interpersonal nature of empathic accuracy. Psychol Sci. 2008;19:399–404. doi: 10.1111/j.1467-9280.2008.02099.x.

- 4. Herrmann E, Call J, Hernàndez-Lloreda MV, Hare B, Tomasello M. Humans have evolved specialized skills of social cognition: The cultural intelligence hypothesis. Science. 2007;317:1360–1366. doi: 10.1126/science.1146282.

- 5. Koster-Hale J, Saxe R. Theory of mind: A neural prediction problem. Neuron. 2013;79:836–848. doi: 10.1016/j.neuron.2013.08.020.

- 6. Atkinson AP, Dittrich WH, Gemmell AJ, Young AW. Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception. 2004;33:717–746. doi: 10.1068/p5096.

- 7. Barrett LF, Mesquita B, Gendron M. Context in emotion perception. Curr Dir Psychol Sci. 2011;20:286–290.

- 8. Zaki J, Bolger N, Ochsner K. Unpacking the informational bases of empathic accuracy. Emotion. 2009;9:478–487. doi: 10.1037/a0016551.

- 9. Frijda NH. Emotions and action. In: Manstead ASR, Frijda NH, Fisher AH, editors. Feelings and Emotions: The Amsterdam Symposium. Cambridge Univ Press; Cambridge, UK: 2004. pp. 158–173.

- 10. Tomkins SS. Affect Imagery Consciousness: The Positive Affects. Vol 1. Springer; New York: 1962.

- 11. Holding DH. Theories of chess skill. Psychol Res. 1992;54:10–16.

- 12. Macdonald IL, Raubenheimer D. Hidden Markov models and animal behaviour. Biom J. 1995;37:701–712.

- 13. Patterson TA, Thomas L, Wilcox C, Ovaskainen O, Matthiopoulos J. State-space models of individual animal movement. Trends Ecol Evol. 2008;23:87–94. doi: 10.1016/j.tree.2007.10.009.

- 14. Oravecz Z, Tuerlinckx F, Vandekerckhove J. A hierarchical latent stochastic differential equation model for affective dynamics. Psychol Methods. 2011;16:468–490. doi: 10.1037/a0024375.

- 15. Heller AS, Casey BJ. The neurodynamics of emotion: Delineating typical and atypical emotional processes during adolescence. Dev Sci. 2016;19:3–18. doi: 10.1111/desc.12373.

- 16. Cunningham WA, Dunfield KA, Stillman PE. Emotional states from affective dynamics. Emot Rev. 2013;5:344–355.

- 17. Schirmer A, Meck WH, Penney TB. The socio-temporal brain: Connecting people in time. Trends Cogn Sci. 2016;20:760–772. doi: 10.1016/j.tics.2016.08.002.

- 18. Trampe D, Quoidbach J, Taquet M. Emotions in everyday life. PLoS One. 2015;10:e0145450. doi: 10.1371/journal.pone.0145450.

- 19. Wilt J, Funkhouser K, Revelle W. The dynamic relationships of affective synchrony to perceptions of situations. J Res Pers. 2011;45:309–321.

- 20. Tamir DI, Thornton MA, Contreras JM, Mitchell JP. Neural evidence that three dimensions organize mental state representation: Rationality, social impact, and valence. Proc Natl Acad Sci USA. 2016;113:194–199. doi: 10.1073/pnas.1511905112.

- 21. Sudhof M, Goméz Emilsson A, Maas AL, Potts C. Sentiment expression conditioned by affective transitions and social forces. In: Macskassy SA, Perlich C, editors. Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM; New York: 2014. pp. 1136–1145.

- 22. Russell JA. A circumplex model of affect. J Pers Soc Psychol. 1980;39:1161–1178.

- 23. Epley N, Keysar B, Van Boven L, Gilovich T. Perspective taking as egocentric anchoring and adjustment. J Pers Soc Psychol. 2004;87:327–339. doi: 10.1037/0022-3514.87.3.327.

- 24. Tamir DI, Mitchell JP. Anchoring and adjustment during social inferences. J Exp Psychol Gen. 2013;142:151–162. doi: 10.1037/a0028232.

- 25. Ames DR. Strategies for social inference: A similarity contingency model of projection and stereotyping in attribute prevalence estimates. J Pers Soc Psychol. 2004;87:573–585. doi: 10.1037/0022-3514.87.5.573.

- 26. Burgess AF, Gutstein SE. Quality of life for people with autism: Raising the standard for evaluating successful outcomes. Child Adolesc Ment Health. 2007;12:80–86. doi: 10.1111/j.1475-3588.2006.00432.x.

- 27. Izard C. Human Emotions. Plenum Press; New York: 1977.

- 28. Philippot P, Schaefer A, Herbette G. Consequences of specific processing of emotional information: Impact of general versus specific autobiographical memory priming on emotion elicitation. Emotion. 2003;3:270–283. doi: 10.1037/1528-3542.3.3.270.

- 29. R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; Vienna, Austria: 2015.