Abstract

Objective. To compare learning outcomes achieved from a pharmaceutical calculations course taught in a traditional lecture (lecture model) and a flipped classroom (flipped model).

Methods. Students were randomly assigned to the lecture model and the flipped model. Course instructors, content, assessments, and instructional time for both models were equivalent. Overall group performance and pass rates on a standardized assessment (Pcalc OSCE) were compared at six weeks and at six months post-course completion.

Results. Student mean exam scores in the flipped model were higher than those in the lecture model at six weeks and remained numerically higher at six months. Significantly more students in the flipped model passed the OSCE on the first attempt at six weeks; however, this effect was not maintained at six months.

Conclusion. Six weeks after completion of a five-week course, use of a flipped classroom improved student pharmacy calculation skill achievement relative to a traditional lecture andragogy. Further study is needed to determine whether the effect is maintained over time.

Keywords: flipped classroom, traditional lecture, randomized controlled study, pharmacy calculations, objective structured clinical exam

INTRODUCTION

For more than 20 years, higher education reformists have advocated for significant changes in the way students are educated.1,2 Gaps between learning goals and student achievement are often noted and attributed to deficiencies within the traditional, lecture-based model of instruction.3,4 This misalignment of educational goals and outcomes has resulted in a movement to clarify faculty expectations, implement systematic assessment, improve curricular integration, and increase the use of classroom active learning.5

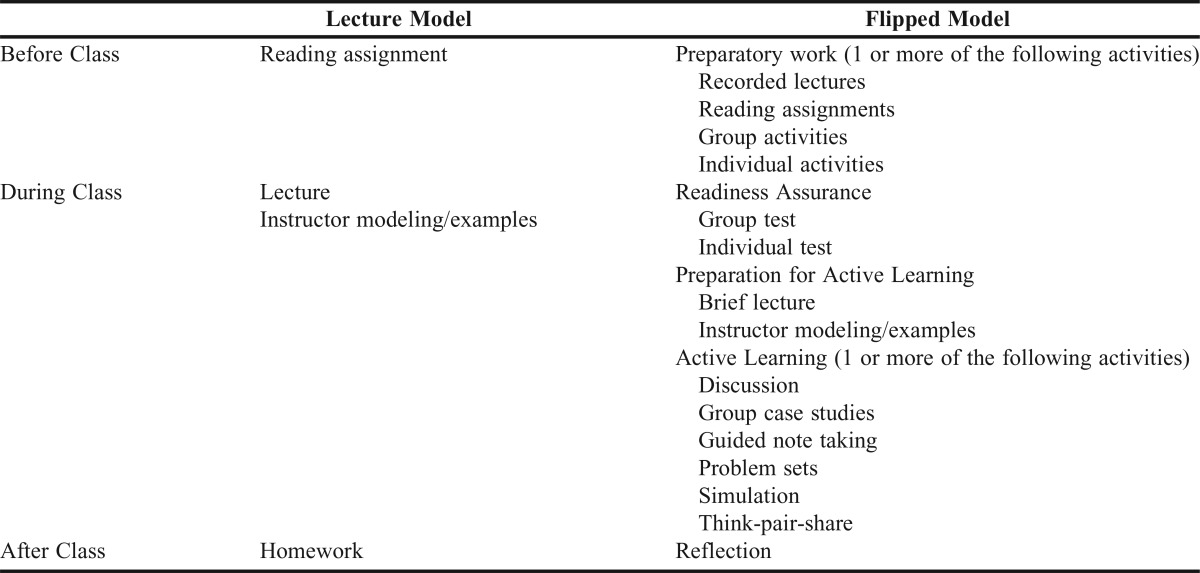

New teaching models are sought in an effort to overcome the deficiencies inherent to the lecture andragogy. One such model is the flipped classroom, which “flips” the traditional lecture model (Table 1) so that instructional time is allocated to understanding and mastering concepts rather than to disseminating facts.

Table 1.

Comparison of the Educational Structure for the Lecture Model and Flipped Model

The flipped classroom model leverages the collaborative learning process. Classroom time provides students opportunities for concept application through active processes. Students complete problem sets, solve cases, participate in debates, or engage in a variety of other active learning methods during course meetings. These active processes provide students opportunities to leverage the benefits of group learning by discussing problems, comparing opinions, identifying outcomes, and critiquing thought processes.6

Such collaborative learning processes benefit overall student learning by providing opportunities for the student to develop a conceptual framework (individual understanding) and engage in social discourse (group understanding). The power of this learning process resides in the ability of peers to convey the meaning of difficult concepts to their fellow students more effectively than the instructor can.6

Tapping the power of peer-to-peer learning requires class time to be allocated to it. Shifting course content delivery (knowledge dissemination) outside the course meeting is therefore a requisite component of collaborative learning models: reading assignments, recorded lectures, problem sets, and other content are assigned and completed before students attend course meetings.

The flipped classroom andragogy is a recent target for research in medical and pharmacy education. The evidence currently available suggests that student perceptions are favorable toward the flipped classroom, as students have responded positively to questions regarding both preference for and learning within the andragogy.7-9 Improved student attendance and course outcomes have also been reported.8,10-12

Currently lacking is evidence derived from prospective, randomized, and concurrent studies comparing the short- and long-term learning outcomes of students engaged in traditional lecture and flipped classroom andragogies. In this study, student outcomes from two sections of a pharmaceutical calculations course are compared between one section delivered as a traditional lecture andragogy and the second as a flipped classroom. The sections were delivered concurrently by the same instructors at the Marshall University School of Pharmacy.

METHODS

The Marshall University School of Pharmacy curriculum is designed to allow early integration of didactic and experiential learning. The school’s first-year (P1) students begin Introductory Pharmacy Practice Experiences (IPPEs) as soon as the sixth week of the curriculum. The school has aligned the didactic and experiential curricula to allow P1 students to actively engage in early IPPEs while reinforcing core skills developed within the didactic curriculum.

The school’s faculty has identified several core skills or behaviors that are reinforced in the P1 IPPEs. These core skills and behaviors include basic communication, teamwork, professionalism (dress, communications, and networking), pharmacy calculations, and clinical immunizations. Matriculating students are expected to master these skills during the first five weeks of the first-year curriculum while enrolled in the PHAR 511 Clinical Immunizations and PHAR 541 Pharmacy Practice I courses.

A randomized, two-group parallel study design was selected for this investigation. All students enrolled within the PHAR 541 course (n=78) were eligible for study inclusion. Seventy of the 78 enrolled students consented to participation.

Students were randomly assigned to one of two educational conditions through use of a stratified, randomized block method. Strata for the randomization process were determined by quartile of student performance on the Pharmacy College Admission Test (PCAT) Quantitative domain.
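The stratified, randomized block allocation can be illustrated with a short Python sketch. It is not the authors' procedure: it assumes that quartiles were formed by ranking PCAT Quantitative scores within the consenting cohort and that permuted blocks of four were used within each quartile (the study reports neither the block size nor the software), and all function names and scores are hypothetical.

```python
import random


def quartile_strata(scores):
    """Map each student ID to a PCAT Quantitative quartile (1 = lowest) by rank within the cohort."""
    ranked = sorted(scores, key=scores.get)
    n = len(ranked)
    return {sid: 1 + (4 * i) // n for i, sid in enumerate(ranked)}


def stratified_block_randomize(scores, block_size=4, seed=None):
    """Permuted-block randomization to 'flipped' or 'lecture', run separately within each quartile."""
    if block_size % 2:
        raise ValueError("block_size must be even so each full block is balanced")
    rng = random.Random(seed)
    strata = quartile_strata(scores)
    assignment = {}
    for quartile in range(1, 5):
        members = [sid for sid, q in strata.items() if q == quartile]
        rng.shuffle(members)  # random enrolment order within the stratum
        for start in range(0, len(members), block_size):
            block = members[start:start + block_size]
            labels = ["flipped", "lecture"] * (block_size // 2)  # balanced block
            rng.shuffle(labels)
            for sid, label in zip(block, labels):  # a short final block uses the first labels
                assignment[sid] = label
    return assignment


# Hypothetical cohort of 70 consenting students with invented PCAT Quantitative scores.
cohort = {f"student{i:02d}": (i * 37) % 100 for i in range(70)}
groups = stratified_block_randomize(cohort, seed=2013)
print(sum(g == "flipped" for g in groups.values()), "flipped;",
      sum(g == "lecture" for g in groups.values()), "lecture")
```

Blocking within strata keeps each quartile close to balanced between the two models; only an incomplete final block can leave a quartile unbalanced.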

The first educational condition was a traditional lecture model (lecture model) and the second was a flipped classroom model (flipped model). The structures of the two educational conditions are summarized in Table 1. The conditions differed fundamentally in how time before, during, and after class was structured. Key components of the flipped model were pre-work assigned for each class meeting, readiness assurance, and use of classroom time primarily for active learning. Pre-work consisted of readings, recorded lectures, performance of guided tasks, or other activities developed by the instructor. The purpose of these activities was to “front load” course content so that course time could be used for applying concepts and providing feedback. Pre-work materials were not available to students assigned to the lecture model.

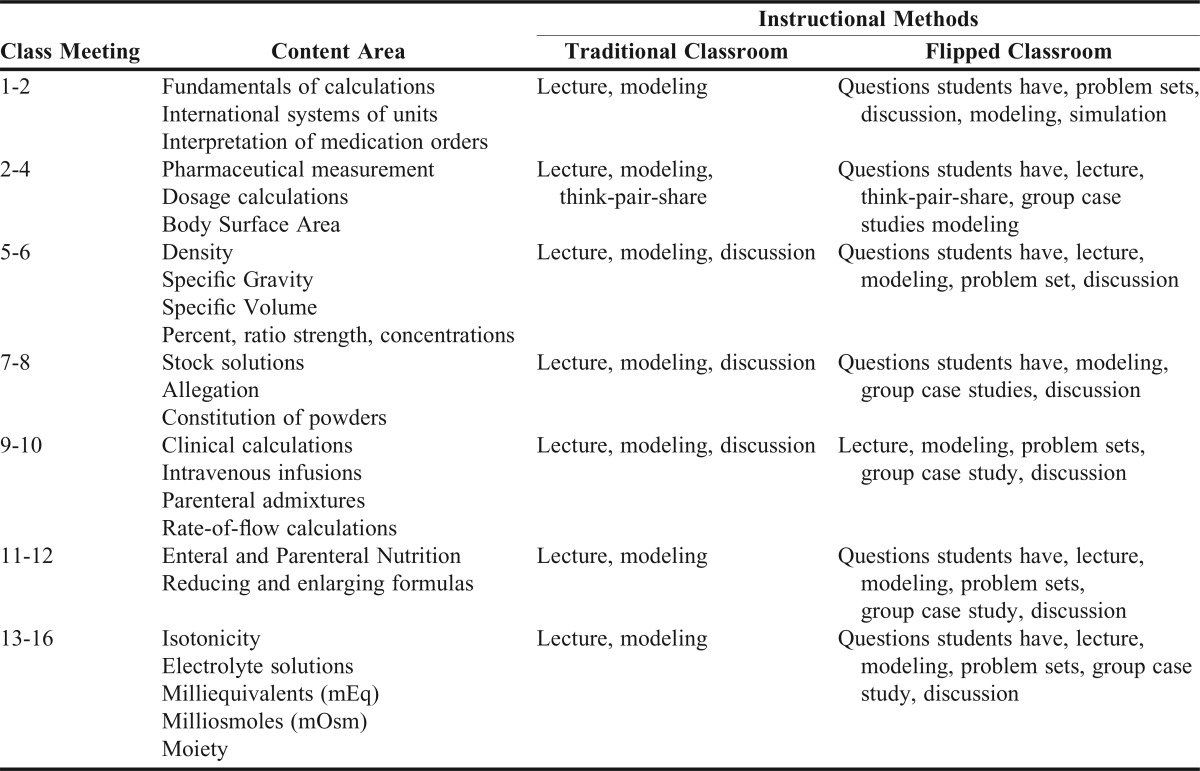

Readiness assurance refers to quizzes or other activities designed to assess student understanding of the assigned pre-work; it is a mechanism for delegating responsibility for course engagement to the student. Active learning activities in the classroom included, but were not limited to, discussions, think-pair-share exercises, collaborative case studies, guided note taking, and problem sets (Table 2).

Table 2.

Comparison of Content Sequencing and Instructional Methods used in the Traditional Lecture and Flipped Classroom Models

The flipped model is the standard classroom environment within the Marshall University School of Pharmacy. All students not enrolled in the study were assigned to the flipped model. All students, both study participants and nonparticipants, were instructed at the beginning of the course and several times during the semester to refrain from sharing course materials with students in the learning model to which they were not assigned. This study was approved by the Marshall University Institutional Review Board.

The school’s curriculum committee approved the PHAR 541 course syllabus (including objectives, schedule, and assessment plan). This syllabus was used in both course sections.

The two educational models were taught at separate times, with the flipped model’s course meetings held in a 90-seat studio classroom and the lecture model’s held in a 35-seat stadium classroom. To limit the variance in student outcomes attributable to instructor-specific biases and differences in course material, the same instructors (study investigators) were assigned to teach both course sections; both course sections met on the same day of each week; and the same posted course materials (ie, notes), in-class examples, and problem sets were used in both course meetings. Lecture model problem sets assigned as homework were equivalent in number and difficulty to the pre-work problem sets assigned to the flipped model, so that differences in the amount of skill practice would not confound the effect of the learning model.

Students in each educational model received 16 hours of pharmacy calculations instruction. The two models did, however, differ in class meeting length. The Marshall University standard course meeting time of 50 minutes was used for the lecture model. Because the flipped model relied heavily on active learning, the course instructors identified the 50-minute standard meeting time as a barrier to successful implementation of the andragogy. To overcome this perceived barrier, flipped model class meetings were extended to 100 minutes per session.

The primary outcomes of interest for this study were the students’ short- and long-term ability to perform basic pharmaceutical calculations after completion of a five-week course of instruction. Student performance on the pharmaceutical calculations objective structured clinical exam (Pcalc OSCE) was used as a surrogate marker for student skill mastery. Study investigators with expertise in the subject matter designed the Pcalc OSCE. After development, the Pcalc OSCE was assessed for content validity during the criterion determination.

The Pcalc OSCE was a high-stakes assessment: students were expected to demonstrate content mastery (competency achievement) before enrolling in the P2 year. The Pcalc OSCE was administered at six weeks and at six months after students completed the course in their P1 year. The assessment was a two-hour timed exam consisting of 12 standard cases, each containing multiple fill-in-the-blank items. The assessment was administered through the Blackboard Learn (Bb, Washington, DC) course management system.13 Correct answers for all assessment items were determined by the lead instructor and adjudicated by the study investigators before the assessment was administered. Student competency achievement was determined by comparing student Pcalc OSCE scores with an absolute criterion established using a modified Angoff methodology.14-16 Students whose scores were greater than or equal to the criterion were identified as demonstrating skill competency.

During criterion establishment, the item judges were school faculty members familiar with the calculations required to complete the Pcalc OSCE. Judges were provided documents containing all items to be judged, the correct answers, the calculations for the correct answers, and blanks for recording item judgments. Judges were not provided historical item difficulties and were instructed not to apply a correction for guessing when rating items. Individual item probability estimates were identified through a three-step process: first, judges were asked to imagine a group of 100 borderline P1 students; second, for each item, judges estimated how many of these borderline students would answer correctly; third, the judges’ estimates were averaged. The resulting values represented the probability, ranging from 0 to 1, that a borderline student would answer each individual item correctly. The final criterion was 52% for the 12-case, 31-item Pcalc OSCE.
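The arithmetic of the modified Angoff procedure described above can be sketched in Python. The sketch assumes that every item carries equal weight, so the cut score is the mean item probability expressed as a percentage (equivalently, a borderline student’s expected raw score divided by the number of items); the judge ratings below are invented for illustration and are not the study’s ratings.

```python
from statistics import mean


def item_probabilities(estimates):
    """estimates[j][i]: judge j's count (out of 100 imagined borderline students)
    expected to answer item i correctly. Returns the judge-averaged probability per item."""
    n_items = len(estimates[0])
    return [mean(judge[i] for judge in estimates) / 100 for i in range(n_items)]


def angoff_cut_score(estimates):
    """Cut score as a percentage: mean of the item probabilities times 100 (equal item weights assumed)."""
    probs = item_probabilities(estimates)
    return 100 * sum(probs) / len(probs)


def is_competent(score_pct, cut_pct):
    """Scores greater than or equal to the criterion demonstrate competency."""
    return score_pct >= cut_pct


# Hypothetical ratings from three judges on a four-item exam.
ratings = [
    [60, 40, 50, 70],
    [50, 50, 40, 60],
    [55, 45, 45, 65],
]
cut = angoff_cut_score(ratings)  # item means 55, 45, 45, 65
print(f"cut score = {cut:.1f}%")
print(is_competent(60.0, cut), is_competent(50.0, cut))
```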

A single senior investigator acquired all demographic data for matriculating students from the PharmCAS database, University Graduate Admissions data, and paper applications (transcripts, letters of reference, application form, and supplemental application form). Each student’s overall GPA and prerequisite GPA were calculated using all applicable courses the student completed, including duplicated (repeated) coursework.
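Counting duplicated coursework means that every attempt at a course contributes its credit hours and grade points, rather than a repeated course replacing the earlier grade. A minimal Python sketch, assuming a conventional 4-point scale with credit-hour weighting (the study does not state its exact formula); the course record is hypothetical.

```python
def gpa_all_attempts(attempts):
    """Credit-hour-weighted GPA over every attempt, repeats included.

    attempts: list of (credit_hours, grade_points) tuples, e.g. (4, 3.0) for a
    4-credit course earning a B on a 4-point scale.
    """
    total_credits = sum(credits for credits, _ in attempts)
    quality_points = sum(credits * points for credits, points in attempts)
    return quality_points / total_credits


# Hypothetical record: a 4-credit chemistry course taken twice (D, then B)
# and a 3-credit biology course (A).
record = [(4, 1.0), (4, 3.0), (3, 4.0)]
print(round(gpa_all_attempts(record), 2))
```

If the repeated attempt instead replaced the first, the same record would give 24/7 (about 3.43), so the counting rule can shift a student’s GPA noticeably.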

The school uses a holistic admissions process that includes assessments of critical thinking, written communication, and teamwork, and a standardized behavioral interview. Preadmission interview indices collected also include PCAT composite scores, reference letters scored using a standard rubric, and prerequisite GPA. Outcomes for all seven assessments were tabulated, converted to a 100-point scale, and archived by the school’s Academic Affairs Department. Each student’s Holistic Interview Score was calculated as the sum of the seven scaled scores: the four holistic admissions process assessments, prerequisite GPA, PCAT composite, and reference letter score.
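The Holistic Interview Score is a simple sum of seven 100-point scaled scores. The Python sketch below assumes a linear rescaling (raw score divided by the instrument’s maximum, times 100); the source does not describe how each assessment was converted, and the instrument keys, maxima, and applicant scores are hypothetical.

```python
def scale_to_100(raw, max_possible):
    """Linearly rescale a raw score onto a 0-100 scale (assumed conversion)."""
    return 100 * raw / max_possible


def holistic_interview_score(raw_scores, maxima):
    """Sum of the seven scaled admissions indices."""
    if raw_scores.keys() != maxima.keys() or len(raw_scores) != 7:
        raise ValueError("expected the same seven assessments in both mappings")
    return sum(scale_to_100(raw_scores[name], maxima[name]) for name in raw_scores)


# Hypothetical instrument maxima and one applicant's raw scores.
maxima = {
    "critical_thinking": 10, "written_communication": 20, "teamwork": 10,
    "behavioral_interview": 50, "prerequisite_gpa": 4.0,
    "pcat_composite_percentile": 100, "reference_letters": 20,
}
applicant = {
    "critical_thinking": 8, "written_communication": 15, "teamwork": 9,
    "behavioral_interview": 40, "prerequisite_gpa": 3.4,
    "pcat_composite_percentile": 70, "reference_letters": 18,
}
print(round(holistic_interview_score(applicant, maxima), 1))
```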

Both Pcalc OSCE assessments were administered and scored in Bb.13 Student outcomes were imported into and analyzed with SPSS version 21.0.0 (Armonk, NY).17

Descriptive statistics appropriate to each variable’s scale of measurement were computed for all data. Intergroup analyses used chi-square or Fisher’s exact tests for nominal outcomes and Student’s t tests for variables measured on continuous scales. Intragroup changes in the dependent variable (Pcalc OSCE score at six weeks and six months) were assessed using paired t tests. Effect sizes were estimated using Cohen’s d.18-20
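The two-group and paired formulas can be checked against the summary statistics reported in the Results. The Python sketch below computes Student’s t and Cohen’s d from means, SDs, and group sizes using the pooled SD, and the paired effect size d_z (mean difference divided by the SD of the differences). It assumes that all 38 flipped-model and 32 lecture-model participants contributed scores; p-values need a t-distribution CDF (for example `scipy.stats.t.sf`), which is omitted to keep the sketch dependency-free.

```python
from math import sqrt


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation for two independent groups."""
    return sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def independent_t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Student's (equal-variance) t statistic, Cohen's d, and degrees of freedom."""
    sp = pooled_sd(sd1, n1, sd2, n2)
    t = (m1 - m2) / (sp * sqrt(1 / n1 + 1 / n2))
    d = (m1 - m2) / sp
    return t, d, n1 + n2 - 2


def paired_t_and_dz(mean_diff, sd_diff, n):
    """Paired t statistic, d_z = mean difference / SD of differences, and degrees of freedom."""
    t = mean_diff / (sd_diff / sqrt(n))
    return t, mean_diff / sd_diff, n - 1


# Six-week scores (flipped vs lecture) and the six-week-to-six-month change, as reported.
t6, d6, df6 = independent_t_and_d(71.3, 14.7, 38, 61.8, 17.7, 32)
tp, dz, dfp = paired_t_and_dz(5.1, 10.6, 70)
print(f"six weeks: t({df6}) = {t6:.2f}, d = {d6:.2f}")
print(f"change:    t({dfp}) = {tp:.2f}, d_z = {dz:.2f}")
```

From the rounded summary values this gives d of about 0.59 at six weeks (reported 0.60) and d_z of about 0.48 for the overall change, which coincides with the reported value; small discrepancies are expected because the inputs are rounded.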

Linear regression analyses were performed to identify the “true” effect of educational condition while controlling for potential confounding from group demographic differences. For all regression analyses, categorical variables were coded as dummy variables.21-23 Pcalc OSCE scores (dependent variable) were regressed on the main effects of educational condition (primary independent variable) and on the demographic variables age, gender, ethnicity, certified technician training, completion of a post-secondary course of study, overall GPA, and PCAT composite percentile.24,25 Models were analyzed for both sets of Pcalc OSCE scores (six weeks and six months). In each case, the initial model was reduced to a more parsimonious model through a backward stepwise procedure that removed variables with p>.10.24-26 Statistical significance for all analyses was set a priori at p≤.05.
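A dependency-free Python sketch of dummy coding, ordinary least squares, and backward elimination with a p>.10 removal rule is shown below. It is an illustration, not the SPSS procedure the authors used: standard errors come from the usual OLS formula, two-sided p-values come from numerically integrating Student’s t density, and the `keep` argument, which forces the intercept and the educational-condition dummy (here called `flipped`) to remain in the model, is an assumption because the source does not say whether condition was forced in. All function and column names are hypothetical.

```python
import math


def dummy_code(values, reference):
    """One 0/1 column per non-reference level; the reference level is coded all zeros."""
    levels = sorted(set(values) - {reference})
    return {str(level): [1.0 if v == level else 0.0 for v in values] for level in levels}


def solve(a, b):
    """Solve the square linear system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def t_two_sided_p(t, df, steps=2000):
    """Two-sided p-value for Student's t via Simpson's rule on the t density (steps must be even)."""
    x = abs(t)
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)

    def pdf(u):
        return math.exp(log_c) * (1 + u * u / df) ** (-(df + 1) / 2)

    h = x / steps
    total = pdf(0.0) + pdf(x) + sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, steps))
    return max(0.0, 1.0 - 2.0 * total * h / 3.0)


def ols(columns, y):
    """Ordinary least squares; returns (coefficients, two-sided p-values) keyed by column name."""
    names = list(columns)
    rows = [[columns[c][i] for c in names] for i in range(len(y))]
    k, n = len(names), len(y)
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    beta = solve(xtx, xty)
    sse = sum((yi - sum(b * xi for b, xi in zip(beta, r))) ** 2 for r, yi in zip(rows, y))
    sigma2 = sse / (n - k)
    pvals = {}
    for j, name in enumerate(names):
        inv_jj = solve(xtx, [1.0 if i == j else 0.0 for i in range(k)])[j]  # [(X'X)^-1]_jj
        se = math.sqrt(sigma2 * inv_jj)
        pvals[name] = 0.0 if se == 0 else t_two_sided_p(beta[j] / se, n - k)
    return dict(zip(names, beta)), pvals


def backward_stepwise(columns, y, keep=("intercept", "flipped"), p_remove=0.10):
    """Repeatedly drop the removable predictor with the largest p-value while that p exceeds p_remove."""
    current = dict(columns)
    while True:
        beta, pvals = ols(current, y)
        removable = [(p, name) for name, p in pvals.items() if name not in keep]
        if not removable or max(removable)[0] <= p_remove:
            return beta, pvals
        del current[max(removable)[1]]
```

To use it, pass a dict of equally long numeric columns (including an all-ones `intercept` column, the 0/1 `flipped` dummy, and any columns from `dummy_code`) together with the list of OSCE scores; the function returns the coefficients and p-values of the retained model.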

RESULTS

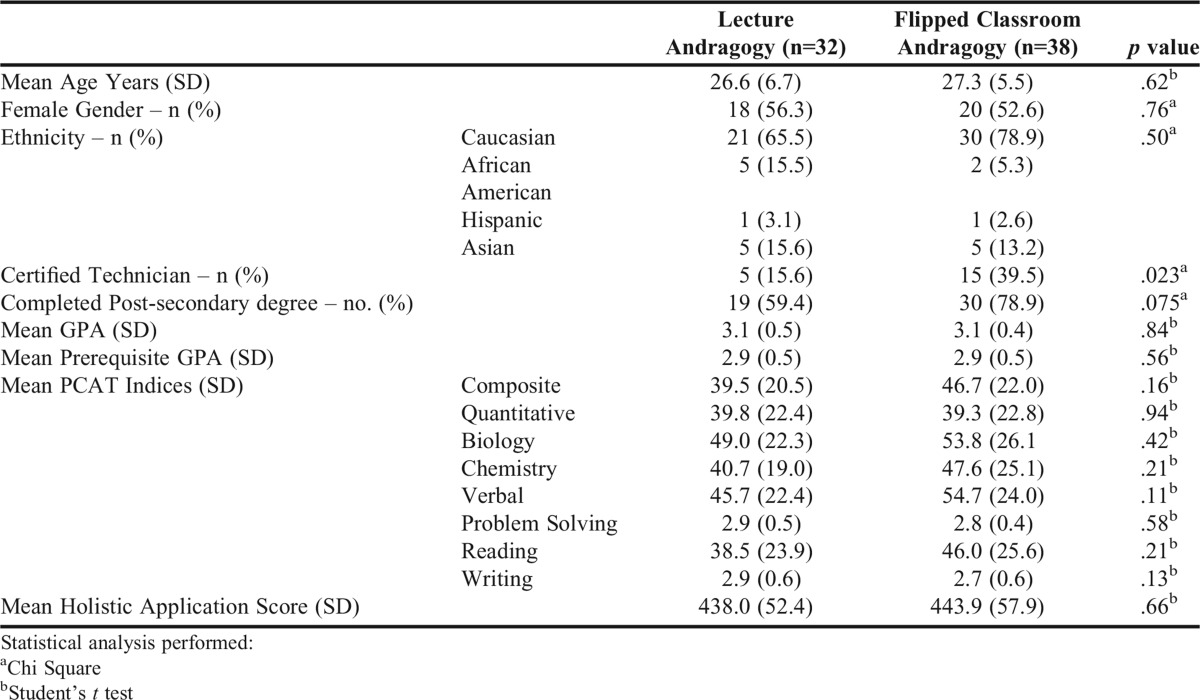

Seventy of 78 (89.7%) matriculating students provided informed consent and participated in the study. Thirty-eight participants were randomly assigned to the flipped model with the remaining 32 assigned to the lecture model. Demographic characteristics for the two study groups are summarized in Table 3. Student demographics were comparable between the two groups with the exception of a greater percentage of students in the flipped model having a pharmacy technician certification (39.5% vs 15.6%, p=.04).
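For small 2×2 comparisons such as the technician-certification difference, Fisher’s exact test sums hypergeometric probabilities over all tables with the observed margins. A minimal Python sketch follows; the cell counts (15 of 38 flipped-model and 5 of 32 lecture-model students certified) are back-calculated from the reported percentages and are an assumption, and the function name is hypothetical. Applied to those counts it gives a two-sided p of about .035, which is consistent with the reported p=.04 after rounding.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are groups and columns are outcome present/absent. The p-value is the
    probability, with all margins fixed, of every table no more likely than the
    observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d

    def weight(k):
        # Unnormalised hypergeometric probability of a table whose top-left cell is k.
        return comb(col1, k) * comb(n - col1, row1 - k)

    observed = weight(a)
    k_values = range(max(0, row1 + col1 - n), min(row1, col1) + 1)
    # Exact integer comparison avoids floating-point ties between equally likely tables.
    extreme = sum(weight(k) for k in k_values if weight(k) <= observed)
    return extreme / comb(n, row1)


# Certified technicians: flipped 15 of 38, lecture 5 of 32 (back-calculated from 39.5% and 15.6%).
p = fisher_exact_two_sided(15, 23, 5, 27)
print(f"two-sided p = {p:.3f}")
```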

Table 3.

Student Demographics

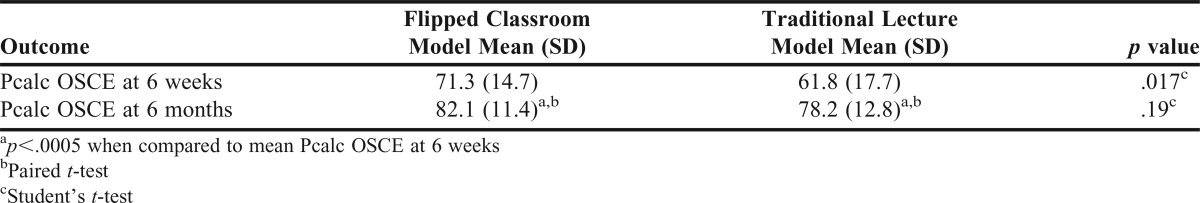

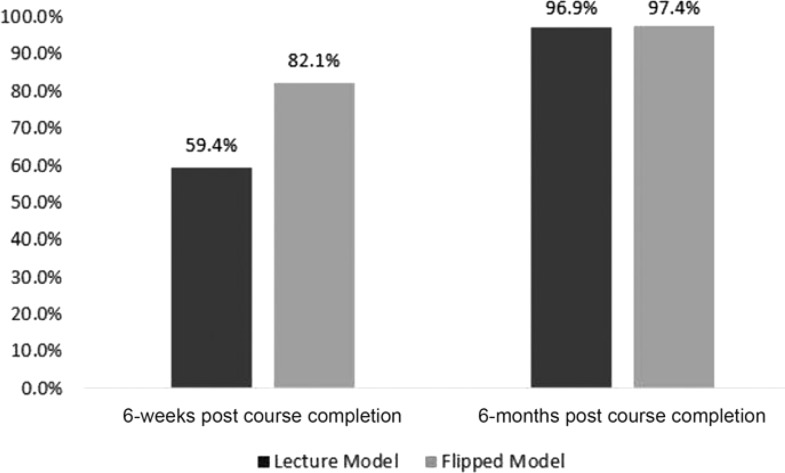

At six weeks, mean Pcalc OSCE performance was higher in the flipped model than in the lecture model (Table 4; mean (SD), 71.3 (14.7)% vs 61.8 (17.7)%; p=.017; Cohen’s d=0.60). Skill competency achievement followed a similar pattern: at the first assessment, pharmacy calculations competency was achieved by 82.1% of students in the flipped model but only 59.4% of students in the lecture model (Figure 1, p=.035).

Table 4.

Comparison of Pharmacy Calculations (Pcalc) OSCE Outcomes Arising From the Flipped Classroom and Traditional Lecture Models

Figure 1.

Comparison of Pharmacy Calculations Competency.

Pcalc OSCE performance was also assessed at six months. Between the two assessments, the mean Pcalc OSCE score of all study participants improved by 5.1 (10.6)% (p<.0005, Cohen’s d=0.48). Mean Pcalc OSCE scores at six months were 82.0 (11.4)% in the flipped model vs 78.2 (12.8)% in the lecture model (Table 4, p=.19, Cohen’s d=0.33). Competency achievement showed a similar pattern: at six months, 97.4% of students in the flipped model and 96.9% of students in the lecture model had achieved pharmacy calculations competency (p=.902).

The possibility that group differences in performance may have been confounded by the greater prevalence of technician certification in the flipped model was assessed. Overall, certified technicians’ average Pcalc OSCE performance was not superior to that of their peers at six weeks (68.1 (15.6)% vs 66.5 (17.2)%, p=.73) or at six months (80.5 (11.9)% vs 80.3 (12.4)%, p=.95). These findings were reinforced by the outcomes of regression analyses.

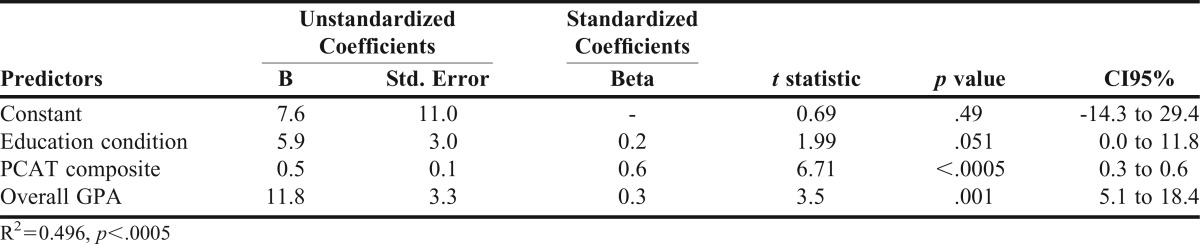

The results for the multiple linear regression analyses estimating the relationship between student Pcalc OSCE performance at six weeks and the flipped model are summarized in Table 5. A regression model was also estimated using student OSCE performance at six months; however, the flipped model was not a significant predictor at a level of p≤.05.

Table 5.

Multiple Linear Regression to Predict Pharmacy Calculations OSCE Outcomes Immediately Post Course Completion

The following analysis therefore focuses on Pcalc OSCE outcomes at six weeks. After controlling for student PCAT composite scores and overall GPAs, students in the flipped model achieved Pcalc OSCE scores an average of 5.8% higher (95% CI, 0.0% to 11.8%; p=.051) than students in the lecture model.

DISCUSSION

This study was designed to evaluate the effectiveness of a flipped classroom model versus a traditional lecture model in promoting student development of skills in pharmacy calculations. Results indicate that, in the short term, the flipped classroom model produced significant, meaningful gains in both overall pharmacy calculation ability and competency development.

Our study’s results expand upon those published previously. Prior research has shown students to have positive perceptions of, and preference for, the flipped model.9,12 Student preference for the flipped model increased almost three-fold after completion of a course using it.9 Improvement in student exam performance has been another observed benefit of the flipped model, with gains in exam score ranging from 0% to 12%.9,12,27-30 These benefits have resulted in ongoing interest in the flipped classroom andragogy.12,28-30

To date, the majority of studies evaluating student performance within the flipped classroom have used quasi-experimental research designs.9,12,28-30 Such studies are known to be potentially limited by both confounding factors and threats to internal validity.31,32 This study was based on an experimental design, with random allocation to intervention. Such designs reduce internal validity threats and design-related confounding.

Our finding of an approximately 6% increase in student performance, a moderate effect size (Cohen’s d=0.48), supports the results of prior published work.9,12,27-30 This study expands the field by investigating the durability of learning. The flipped model’s beneficial effect on student performance appeared to persist at six months, although the difference was not statistically significant: students in the flipped model scored approximately 4% higher overall than students in the lecture model. The failure of this difference to reach statistical significance may be explained by forgetting or by a disproportionate effect of additional learning that occurred after completion of the calculations course but before the six-month assessment.

Knowledge loss over time, or forgetting, is one possible explanation for the flipped model’s failure to maintain its performance advantage over the study period. We argue that forgetting is an unlikely explanation in this investigation because students in both learning models showed strong, statistically significant within-group (intragroup) performance gains between the two assessments. These performance gains are indicative of learning, not forgetting.

Educational opportunities occurring between the two assessment points are another possible explanation for the smaller effect of learning model on student skill performance at six months. Such opportunities would be a concern if they were disproportionately available to students in one of the two learning models.

The lockstep, cohort nature of the school’s curriculum argues against disproportionate educational exposure as an explanation, because all study participants were enrolled in the same coursework throughout the study period.31,32 The exception is experiential learning. The school’s curriculum requires students to complete IPPEs in community and institutional practice during the P1 year. The IPPEs are scheduled as five-week block rotations, with each student completing one P1 IPPE in each of two of the five possible IPPE blocks. Because these rotations provide opportunities for skill reinforcement, practice, and mastery, students who had completed both, one, or none of their IPPE rotations before the six-month Pcalc OSCE could have experienced disproportionate learning. To control for this potential confounder, the six-month Pcalc OSCE was scheduled and administered late in the spring 2013 semester.

Additionally, remedial learning activities were available to all students. These activities were required for students who had not demonstrated pharmacy calculations competency but were voluntary for all other students. Unfortunately, student participation, although it appeared high at each remedial session, was not tracked and cannot be assessed directly as a confounder of the study’s results. It is likely, however, that these interventions confounded the study’s six-month outcomes and may have masked a stronger relationship between the flipped model and long-term skill retention. Regardless of its effect on the study, provision of remedial training was likely appropriate for the situation and the students: it supported the school’s goal of ensuring that all students demonstrated competency in pharmacy calculations before entering the P2 year and the associated P2 IPPEs (Figure 1).

Concurrent delivery of the two learning models in the same semester is one potential limitation of this study, because the possibility that students in the two models shared materials cannot be discounted. The study investigators attempted to limit confounding due to sharing by restricting access to course materials through separate Bb courses for the two learning models and by routinely reminding students throughout the semester not to share course materials. The investigators also provided limited materials in the course. The flipped model’s pre-work and the lecture model’s lecture material were of similar content and depth, and the flipped model’s in-class problem sets were similar or identical to the homework assigned to the lecture model. Students in both models were provided instructor-produced solutions to the problem sets. Together, these actions were intended to limit both the opportunity for and the benefit of sharing model-specific course materials.

A second potential limitation is the nature and size of the sample. The study used a convenience sample drawn from a single institution’s student body. Although such a sample does not directly affect internal validity, it may limit the study’s external validity. External validity concerns are generally addressed by confirming that the study sample is representative of the target population.31 In this study, these concerns are lessened because the sample is a subset of the larger population of pharmacy students and is demographically similar to that population (2012 national means: PCAT composite=47; GPA=3.25).33

Small sample size can result in an increased probability of a type II error occurring.34,35 This situation could have occurred within the study when analyses were performed at six months; however, we contend that such an occurrence is unlikely. Comparing outcomes acquired at six weeks with those at six months shows that the variability of observations has decreased approximately 20%. Generally, such observations would be associated with comparable increases in power.34,35 Combined with the potential existence of confounding that was described previously, type II error being the cause of the observed six-month outcomes appears unlikely.
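The relationship between effect size, group size, and power can be explored with the usual normal approximation for a two-sided, two-sample comparison, in which power is approximately Φ(δ - z) + Φ(-δ - z), with z the critical value for α and δ = d·√(n1·n2/(n1 + n2)). The Python sketch below uses only the standard library’s `statistics.NormalDist`; it slightly overstates exact t-test power in small samples, and the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(d, n1, n2, alpha=0.05):
    """Approximate power of a two-sided, two-sample comparison for standardized effect size d.

    Uses the normal approximation; exact t-test power is slightly lower for small samples.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    delta = abs(d) * sqrt(n1 * n2 / (n1 + n2))  # expected z statistic under the alternative
    return std_normal.cdf(delta - z_crit) + std_normal.cdf(-delta - z_crit)


# Benchmark: d = 0.5 with 64 per group is the textbook ~80% power design.
print(round(approx_power(0.5, 64, 64), 2))
```

Because the function takes the standardized effect d, a change in variability affects power through d, together with any change in the raw difference between groups.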

This study does not provide insight into the performance improvement or skill development that occurred while students were enrolled within the calculations course. The investigators’ choice to exclude student assignment scores as study outcomes is another possible study limitation. The decision was based upon the information attainable from the assignments. The intent of the study was to compare individual student performance and acquisition of pharmacy calculations skill competency. Course assignments, problem sets, and cases within the flipped model were completed during course meetings in collaborative groups. As a result, grades arising from assignments in the flipped model would be representative of the student group’s collective understanding or skill and not individual skill. Thus, we chose to exclude assignment scores as study outcomes.

This study adds to the growing support for the flipped classroom model. Research to determine the optimal use of classroom time and to improve the efficiency of pre-work appears warranted and could expand the benefits to student learning obtained through the flipped model.

A second target for future research is long-term retention of learning. The short-term learning gains associated with the flipped model have been well established, but such gains are of little utility if they are not maintained. This study’s finding of a small to moderate effect (Cohen’s d=0.33) on performance at six months lends credence to the flipped model’s potential to facilitate learning retention. Although long-term gains did not reach statistical significance in this study, their exploration remains a worthwhile target for future research.

CONCLUSION

Use of the flipped classroom model improves student pharmacy calculation skill performance and acquisition of skill competency relative to the traditional classroom model in the short-term. Additional study to determine if such performance gains may be of long-term duration should be pursued.

ACKNOWLEDGMENTS

The authors acknowledge and thank Dr. Janet Wolcott who co-instructed and coordinated the PHAR 541 course; Dr. Nicole Winston who provided remedial pharmacy calculations instruction to students after the PHAR 541 course concluded; and Michael Rudolph for reviewing and providing significant input in the final version of this manuscript.

REFERENCES

- 1. Bonwell CC, Eison JA. Active Learning: Creating Excitement in the Classroom, ASHE-ERIC Higher Education Report No. 1. Washington, DC: The George Washington University; 1991.

- 2. Chickering AW, Gamson ZF. Development and adaptations of the seven principles for good practice in undergraduate education. New Dir Teach Learn. 1999;80(Winter):75–81.

- 3. Allen D. Recent research in science teaching and learning. CBE Life Sci Educ. 2014;13(Winter):584–586. doi: 10.1187/cbe.14-09-0147.

- 4. Freeman S, Eddy SL, McDonough M, et al. Active learning increases student performance in science, engineering, and mathematics. Proc Natl Acad Sci. 2014;111(23):8410–8415. doi: 10.1073/pnas.1319030111.

- 5. Gardiner LF. Redesigning Higher Education: Producing Dramatic Gains in Student Learning. Washington, DC: ERIC Clearinghouse on Higher Education; 1994.

- 6. Lambert C. Twilight of the lecture. Harvard Magazine: Harvard Magazine, Inc. 2012:23–7.

- 7. Lage MJ, Platt GJ, Treglia M. Inverting the classroom: a gateway to creating an inclusive learning environment. J Econ Educ. 2000;31(1):30–43.

- 8. McLaughlin JE, Roth MT, Glatt DM, et al. The flipped classroom: a course redesign to foster learning and engagement in a health professions school. Acad Med. 2014;89(2):236–243. doi: 10.1097/ACM.0000000000000086.

- 9. McLaughlin JE, Griffin LM, Esserman DA, et al. Pharmacy student engagement, performance, and perception in a flipped satellite classroom. Am J Pharm Educ. 2013;77(9):Article 196. doi: 10.5688/ajpe779196.

- 10. Prober CG, Heath C. Lecture halls without lectures – a proposal for medical education. N Engl J Med. 2012;366(18):1657–1659. doi: 10.1056/NEJMp1202451.

- 11. Straumsheim C. Stanford University and Khan Academy use flipped classroom for medical education. Inside Higher Educ. 2013 http://www.insidehighered.com/news/2013/09/09/stanford-university-and-khan-academy-use-flipped-classroom-medical-education

- 12. Pierce R, Fox J. Vodcasts and active-learning exercises in a “flipped classroom” model of a renal pharmacotherapy module. Am J Pharm Educ. 2012;76(10):Article 196. doi: 10.5688/ajpe7610196.

- 13. Blackboard Learn [computer program]. Blackboard, Inc.; 2015.

- 14. Anderson HG, Nelson AA. Reliability and credibility of progress test criteria developed by alumni, faculty, and mixed alumni-faculty judge panels. Am J Pharm Educ. 2011;75(10):Article 200. doi: 10.5688/ajpe7510200.

- 15. Supernaw RB, Mehvar R. Methodology for the assessment of competence and definition of deficiencies of students in all levels of the curriculum. Am J Pharm Educ. 2002;66(1):1–4.

- 16. Downing SM, Tekian A, Yudkowsky R. Procedures for establishing defensible absolute passing scores on performance examinations in health professions education. Teach Learn Med. 2006;18(1):50–57. doi: 10.1207/s15328015tlm1801_11.

- 17. IBM SPSS Statistics for Macintosh [computer program]. Version 21.0.0.0. Armonk, NY: SPSS, Inc; 2013.

- 18. Thalheimer W, Cook S. How to calculate effect sizes from published research articles: a simplified methodology. http://www.bwgriffin.com/gsu/courses/edur9131/content/Effect_Sizes_pdf5.pdf. Accessed March 8, 2016.

- 19. Lakens D. Calculating and reporting effect sizes to facilitate cumulative science: a practical primer for t-tests and ANOVAs. Front Psychol. 2013;4:863. doi: 10.3389/fpsyg.2013.00863.

- 20. Sullivan GM, Feinn R. Using effect size – or why the p value is not enough. J Grad Med Educ. 2012;4(3):279–282. doi: 10.4300/JGME-D-12-00156.1.

- 21. Crown WH. There’s a reason they call them dummy variables: a note on the use of structural equation techniques in comparative effectiveness research. Pharmacoeconomics. 2010;28(10):947–955. doi: 10.2165/11537750-000000000-00000.

- 22. Marill KA. Advanced statistics: linear regression, part I: simple linear regression. Acad Emerg Med. 2004;11(1):87–93.

- 23. Eisenhauer JG. How a dummy replaces a student’s test and gets an F (or, how regression substitutes for t tests and ANOVA). Teach Stat. 2006;28(3):78–80.

- 24. Kleinbaum DG, Kupper LL, Muller KE, Nizam A. Applied Regression Analysis and Other Multivariable Methods. 3rd ed. Cincinnati, OH: Duxbury Press; 1998.

- 25. Kleinbaum DG, Kupper LL, Morgenstern H. Epidemiologic Research. New York, NY: John Wiley & Sons, Inc.; 1982.

- 26. Dawson B, Trapp RG. Biostatistics & Clinical Biostatistics. 4th ed. Chicago, IL: Lange Medical Books/McGraw-Hill; 2004.

- 27. Harrington SA, Bosch MV, Schoofs N, Beel-Bates C, Anderson K. Quantitative outcomes for nursing students in a flipped classroom [Research Briefs]. Nursing Educ Perspect. 2015;36(3):179–181.

- 28. Munson A, Pierce R. Flipping content to improve student examination performance in a pharmacogenomic course. Am J Pharm Educ. 2015;79(7):Article 103. doi: 10.5688/ajpe797103.

- 29. Tune JD, Sturek M, Basile DP. Flipped classroom model improves graduate student performance in cardiovascular, respiratory, and renal physiology. Adv Physiol Educ. 2013;37(4):316–320. doi: 10.1152/advan.00091.2013.

- 30. Geist MJ, Larimore D, Rawiszer H, Sager AW. Flipped versus traditional instruction and achievement in a baccalaureate nursing pharmacology course [Research Brief]. Nursing Educ Perspect. 2015;36(2):114–115. doi: 10.5480/13-1292.

- 31. Campbell DT, Stanley JC. Experimental and Quasi-Experimental Designs for Research. Boston, MA: Houghton Mifflin Co.; 1963.

- 32. Clancy MJ. Overview of research designs. Emerg Med J. 2002;19:546–549. doi: 10.1136/emj.19.6.546.

- 33. Lightfoot S. PharmCAS update. In: Attendees of the 2013 American Association of Colleges of Pharmacy Annual Meeting AP, ed. American Association of Colleges of Pharmacy Annual Meeting. Chicago, IL; 2013.

- 34. Young MJ, Bresnitz EA, Strom BL. Sample size nomograms for interpreting negative clinical studies. Ann Intern Med. 1983;99(2):248–251. doi: 10.7326/0003-4819-99-2-248.

- 35. Grunkemeier GL, Jin R. Power and sample size: how many patients do I need? Ann Thorac Surg. 2007;83(6):1934–1939. doi: 10.1016/j.athoracsur.2007.01.020.