Abstract

Objectives. To identify peer reviewer and peer review characteristics that enhance manuscript quality and editorial decisions, and to identify valuable elements of peer reviewer training programs.

Methods. A three-school, 15-year review of pharmacy practice and pharmacy administration faculty’s publications was conducted to identify high-publication volume journals for inclusion. Editors-in-chief identified all editors managing manuscripts for participation. A three-round modified Delphi process was used. Rounds advanced from open-ended questions regarding actions and attributes of good reviewers to consensus-seeking and clarifying questions related to quality, importance, value, and priority.

Results. Nineteen editors representing eight pharmacy journals participated. Three characteristics of reviews were rated required or helpful in enhancing manuscript quality by all respondents: includes a critical analysis of the manuscript (88% required, 12% helpful), includes feedback that contains both strengths and areas of improvement (53% required, 47% helpful), and speaks to the manuscript’s utility in the literature (41% required, 59% helpful). Hands-on experience with review activities (88%) and exposure to good and bad reviews (88%) were identified as very valuable to peer reviewer development.

Conclusion. Reviewers, individuals involved in faculty development, and journals should work to assist new reviewers in defining focused areas of expertise, building knowledge in these areas, and developing critical analysis skills.

Keywords: peer review, manuscript, quality, faculty development, training programs

INTRODUCTION

Over the past several decades, the profession of pharmacy has experienced a period of tremendous growth and evolution. There have been increases in: enrollments in schools and colleges of pharmacy,1 interest in pharmacy residencies and other postgraduate opportunities, the number of residency programs and sites available, and also the number of people entering into academic careers. In 2007, there were 1487 PGY1 positions2 with that number doubling by 2015, with 3081 positions at 1229 programs.3 Similarly, in 2009, there were 5900 full- and part-time faculty members employed by US schools and colleges of pharmacy,4 expanding to 6626 in 2014.5 In these roles, publication is often encouraged or required, thereby increasing the interest and desire to publish. Similar to the pharmacy profession, the peer-reviewed literature has also experienced a growth boom. With the advent of online publication and increased interest in open-access publishing, more journals are available, and journals may have fewer restrictions on number of articles per volume. Rennie and colleagues have summarized the findings from the seven meetings of the International Congress on Peer Review held since 1989.6 Much of the conversation in their report describes the concerns over the quality of studies, reproducibility of results, and publication bias. Guidelines for peer reviewers specific to the moral and ethical obligations associated with peer review and publication in general are also available.7 In both of these resources, very little is discussed about the overall quality of the peer review itself.

In a description of the origins of peer review, Kronick observes that despite the much larger number of scientists and scholars today, a larger percentage of scientists participated as peer reviewers in the past compared to the present.8 This sentiment would likely be echoed by today’s journal editors, who, anecdotally, experience difficulty identifying peer reviewers, even though most in the Academy would likely agree that peer review is a professional responsibility.

Consequently, there has been research into the motivation to review,9 the time commitment involved,9,10 and the reasons that reviewers accept and decline reviews.11 After a survey of 143 medical education reviewers, Snell and Spencer stated that “the process might be easier if the reviewer were able to…receive feedback on the quality of his or her own review, and have the opportunity to receive training.”10

To assist first-time reviewers, numerous articles have been published to demystify the peer review process and provide a series of descriptive steps on the process of conducting a peer review.12–27 Many of these articles focus on the specific peer review requirements for the journal in which the article is published (eg, deadlines to follow, explanation of how to complete journal-specific form/questionnaire). Others have described general characteristics and attributes of an ideal peer reviewer (eg, timeliness, objectivity, knowledge).12,17,23 In addition, Keenum and Shubrook described the specifics of what to examine during the review (ie, originality, structure, language, ethical issues).22 The Committee on Publication Ethics also has produced Ethical Guidelines for Peer Reviewers, including expectations during the review, when preparing the report to the authors, and for post-review conduct.7 Although these types of articles are helpful in describing the content, structure, and expectations of the review, further discussion of quality in peer review is needed.

Several investigations have examined “accuracy” or reliability of reviews. Navalta and Lyons compared manuscript decisions of faculty reviewers to those of graduate students,28 and Callaham and colleagues measured congruence between reviewer recommendation and the editor’s final decision.29 In addition, in Baxt and colleagues’ research, a false manuscript, with strategically placed errors, was sent out to the reviewer pool to assess how many errors would be identified by the reviewers.30 In Baxt and Callaham’s investigations, the authors identified that these potential measures of “accuracy” all poorly correlated with quality of the actual reviews.29,30

While some have examined reviewer accuracy, others have investigated the use of rubrics and survey instruments to evaluate peer reviews. Feurer and colleagues developed a grading instrument with a weighted scoring system strictly focused on objective attributes of the review, such as timeliness, etiquette, and the presence or absence of specific comments (eg, summary, section-by-section review).31 Van Rooyen and colleagues developed a similar instrument for assessing the review,32 which reportedly has good test-retest and inter-rater reliability, but focused solely on whether the reviewer addressed specific elements of the paper (eg, originality, methods, author’s interpretation of results). Their instrument went a step further than Feurer’s by adding a domain assessing “overall quality” of the review, but the instrument does not describe the scoring parameters for this element.

Review quality has also been assessed within a number of studies designed to investigate influences on the review process. For instance, two studies have tried to link reviews deemed to be “high quality” by editors to measurable attributes of the specific reviewer (eg, age, geographic region, training in statistics, academic rank).33,34 Callaham and Tercier state that “six components of a quality review are formally defined and editors are asked to combine assessments of all of them into their single global quality score,” but do not describe the six components.33 Black and colleagues introduce seven aspects of review quality (eg, extent to which the reviewer addressed the importance of the research question) that are rated on a 5-point Likert scale (1=poor, 5=excellent) and averaged for a total score.34 The instrument was used by both authors and editors to generate quality data, which was examined relative to reviewer characteristics (eg, publication experience). Although internal consistency and interrater reliability for their use of the tool were given, details of the instrument’s development are not reported.34 Furthermore, review quality has been assessed as one variable in studies of blinding,35,36 unmasking35 (ie, revealing a reviewer to a co-reviewer), signing reviews36 and elements of open review,37 along with other variables, such as the feasibility of the technique and the publication recommendation. McNutt and colleagues rated peer reviews using four variables of quality from the editor’s perspective (eg, identifying strengths and weaknesses of the study’s methods) and five variables from the author’s perspective (eg, thoroughness, courteousness, fairness, constructiveness and knowledge), stating that these were derived from review of the literature and interviewing other editors.36 These mechanisms for scoring the quality of the written reviews might be helpful in designing programs to build skills of new reviewers. 
However, more dialogue is needed on the editor’s perspective of the characteristics that strengthen the review process. What are the characteristics of peer reviews and peer reviewers that editors seek, in order to aid in enhancing manuscript quality and making editorial decisions? The editor’s perspective is important in that it influences who is invited to review, whether those individuals are invited to review again in the future, and the outcomes of the peer review process. With this information, peer reviewer development could be further tailored.

To add to the literature on review contents and structure, review accuracy, and strategies that influence the review process, the aims of this research were to identify the attributes of a quality peer review and peer reviewer from the editors’ perspective, and to identify valuable elements of training programs to be designed for pharmacy practice residents, postgraduate students, and new faculty, in order to aid peer review quality.

METHODS

This study utilized a three-round modified Delphi method. The Delphi method has been used to aid in defining quality. A 2011 systematic review identified 80 studies that had used the Delphi method to select quality indicators in health care.38 In addition, the Delphi method has been used to develop: a set of quality criteria for patient decision support technologies,39 quality indicators for general practice management,40 and a list of criteria important in assessing the quality of a randomized controlled trial.41 During a multi-round process of gathering and feeding back opinions, the Delphi draws together the collective wisdom of the panelist-experts,42 with later rounds allowing consensus ratings by the group.43,44 During this anonymous, iterative process, each panelist has an equal opportunity to present ideas,45 and opinions can be retracted, altered or added based on consideration of others’ responses.46,47 The Delphi also benefits from the ability to obtain opinion without bringing the experts physically together.42

The selection of participants began with the determination of journals to include. In order to identify the journals where faculty members most frequently publish their work, the publications of pharmacy practice and pharmacy administration faculty (ie, social pharmacy, health-systems, management, public health) at the authors’ home institutions were reviewed for the past 15 years, resulting in 544 publications from 70 faculty members in pharmacy practice and pharmacy administration. Recognizing the abundance of niche and specialty journals, only journals in which at least ten of these publications had appeared, authored by faculty from at least two different institutions, were selected.
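The selection rule can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' actual procedure: the function name `select_journals`, the record layout, and the sample data are hypothetical, and the rule is read as "at least ten publications in total, contributed by at least two institutions."

```python
from collections import defaultdict


def select_journals(publications, min_pubs=10, min_institutions=2):
    """Return journals that meet both inclusion thresholds.

    publications: iterable of (journal, institution) pairs, one per publication.
    The thresholds default to the values stated in the text.
    """
    pub_counts = defaultdict(int)
    institutions = defaultdict(set)
    for journal, institution in publications:
        pub_counts[journal] += 1
        institutions[journal].add(institution)
    return sorted(
        journal
        for journal in pub_counts
        if pub_counts[journal] >= min_pubs
        and len(institutions[journal]) >= min_institutions
    )


# Hypothetical records, invented purely for illustration.
records = (
    [("Journal A", "School 1")] * 7 + [("Journal A", "School 2")] * 5
    + [("Journal B", "School 1")] * 12
    + [("Journal C", "School 2")] * 3 + [("Journal C", "School 3")] * 2
)

selected = select_journals(records)
print(selected)  # ['Journal A']
```

In this made-up data set, Journal B is excluded because all of its publications come from a single institution, and Journal C is excluded because it has fewer than ten publications.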

The success of a Delphi study rests on the expertise of the panel selected as participants.44 Panelists should be credible44 and highly trained and competent within the area being investigated.43 To this end, investigators contacted the identified journals to determine those within their editorial staff that should be included in the invitation (eg, deputy editor, assistant editor), requesting the names and contact information for all editors that were actively managing manuscripts. Manuscript management was defined as identifying reviewers, examining peer reviews once received and deciding whether to continue inviting particular reviewers. Therefore, for the purposes of this article, the terms “editors,” “respondents,” and “participants” can include editors-in-chief, as well as other editors (eg, assistant, associate, senior, managing).

The optimal number of experts needed for a Delphi panel is not agreed upon.43,46,48 While some suggest that 10 to 15 subjects are sufficient when the subjects are homogeneous,49 others indicate that the rule of thumb is 15-30.47 Several authors caution investigators to use a minimally sufficient number,43,49 with larger numbers resulting in greater generation of data and the potential for analysis difficulties.42 Considering this guidance and the available expertise, a panel size of 10-20 was determined prospectively to be appropriate for this research. No prospective plans were made to stop enrollment should enrollees exceed this range.

This study collected participant responses via the web-based survey program Qualtrics (Qualtrics Labs Inc., Provo, UT). Round 1 asked four open-ended questions regarding actions of good reviewers, indicators of quality, training that would prepare new reviewers and strategies/development opportunities for reviewers to pursue on their own. The authors reviewed these data for themes, and summary statements were drafted. The statements were categorized into four areas: the reviewer, the review, a training program, and reviewer self-development.

In Round 2, a report was returned to participants containing draft summary statements (eg, “The reviewer is timely in their actions related to the review”) and quotes from the participants that were used to create the summary statements (eg, “responding to the request to review in a timely manner”). Panelists were asked to rate reviewer and review statements for importance in manuscript quality and editorial decisions. Panelists were asked to rate elements of a training program for value and priority for inclusion. Panelists also were asked to rate the anticipated return on investment of self-development options. Open-ended comments were accepted after each of the four sections.

A consensus level was defined prospectively. While there are no universally agreed-upon guidelines for setting the desired level of consensus in a Delphi method,42,44,48 review of the literature has yielded agreement levels as low as 55% and as high as 100%.44 Keeney and colleagues suggest that the importance of the topic can guide consensus level, explaining that 100% consensus may be desirable for life or death issues, while 51% may be appropriate for preferences.48 For this study, consensus was defined as a minimum of 75% of panelists rating a specific element as required/helpful for manuscript quality or editorial decision, very valuable/somewhat valuable for training, high/medium priority for inclusion in training and high/medium return on investment for self-development efforts. After Round 2, statements not reaching 75% were refined based on the comments received and returned for further rating and commenting in Round 3.
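As a minimal sketch of how the 75% rule could be applied to one statement, the Python below counts the share of panelists choosing either of the two positive rating levels. The function name, the "not needed" label, and the rating counts are assumptions made for illustration; the source does not list the remaining response options or the raw counts.

```python
from collections import Counter

CONSENSUS_THRESHOLD = 0.75  # minimum share of panelists, as defined in the text


def reaches_consensus(ratings, positive_levels, threshold=CONSENSUS_THRESHOLD):
    """Return True when the share of positive ratings meets the threshold.

    ratings: list of rating labels, one per panelist.
    positive_levels: labels counted toward consensus (eg, required/helpful).
    """
    if not ratings:
        raise ValueError("at least one rating is needed")
    counts = Counter(ratings)
    positive = sum(counts[level] for level in positive_levels)
    return positive / len(ratings) >= threshold


# Hypothetical Round 2 ratings from 17 panelists ("not needed" is an assumed label).
statement_a = ["required"] * 9 + ["helpful"] * 8
statement_b = ["required"] * 6 + ["helpful"] * 6 + ["not needed"] * 5

positive = ("required", "helpful")
print(reaches_consensus(statement_a, positive))  # True  (17 of 17)
print(reaches_consensus(statement_b, positive))  # False (12 of 17, about 71%)
```

Under this reading, a statement such as the hypothetical `statement_b` would be refined and returned to panelists in Round 3.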

Round 3 asked several clarifying questions, including the importance of various indicators of knowledge in a peer reviewer, the degree to which certain reviewer activities were helpful in managing a journal, and the importance of various elements of a review. Clarification, rather than achieving consensus, was the goal of these questions. Round 3 also probed the value and feasibility of options for providing feedback on peer reviews, using the 75% consensus level. This study was approved by the University of Minnesota Institutional Review Board.

RESULTS

Nine journals representing practice, administration and education in pharmacy qualified for inclusion in the study. These journals included: American Journal of Health-System Pharmacy, American Journal of Pharmaceutical Education, Annals of Pharmacotherapy, Currents in Pharmacy Teaching and Learning, Innovations in Pharmacy, Journal of the American Pharmacists Association, Journal of Managed Care Pharmacy, Pharmacotherapy: The Journal of Human Pharmacology and Drug Therapy, and Research in Social and Administrative Pharmacy. One journal elected not to participate. Across the eight participating journals, 32 editors were identified by the editors-in-chief as actively managing manuscripts and were invited to participate.

Nineteen editors (59%) participated in Round 1. The investigators received a communication indicating that one journal had internal discussions and had a representative participate on behalf of their journal. This representative was counted as one participant for each of the three rounds. Because of an e-mail delivery problem, 18 editors were invited to Round 2, and 17 participated (94%). In Round 3, the same 18 editors were invited, and 16 participated (89%). All eight journals had at least one representative in each round.
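The participation percentages reported above follow directly from the invited and participating counts. A short Python check, using only the figures given in the text (the variable and function names are illustrative):

```python
# (participated, invited) per round, taken from the counts reported in the text.
rounds = {
    "Round 1": (19, 32),
    "Round 2": (17, 18),
    "Round 3": (16, 18),
}


def response_rate(participated, invited):
    """Participation as a whole-number percentage of those invited."""
    return round(100 * participated / invited)


rates = {name: response_rate(*counts) for name, counts in rounds.items()}
for name, rate in rates.items():
    participated, invited = rounds[name]
    print(f"{name}: {participated}/{invited} = {rate}%")
# Round 1: 19/32 = 59%
# Round 2: 17/18 = 94%
# Round 3: 16/18 = 89%
```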

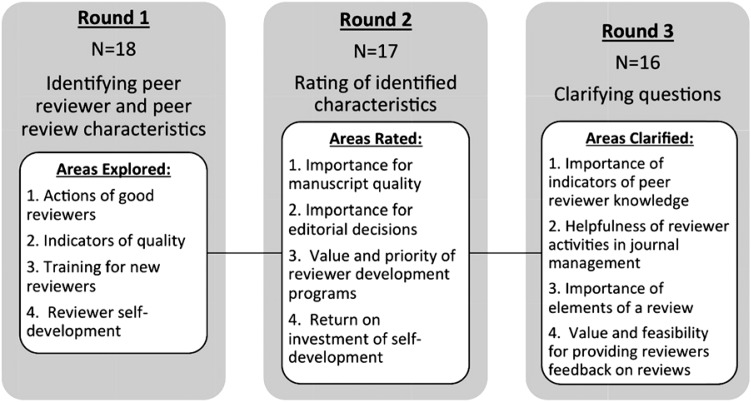

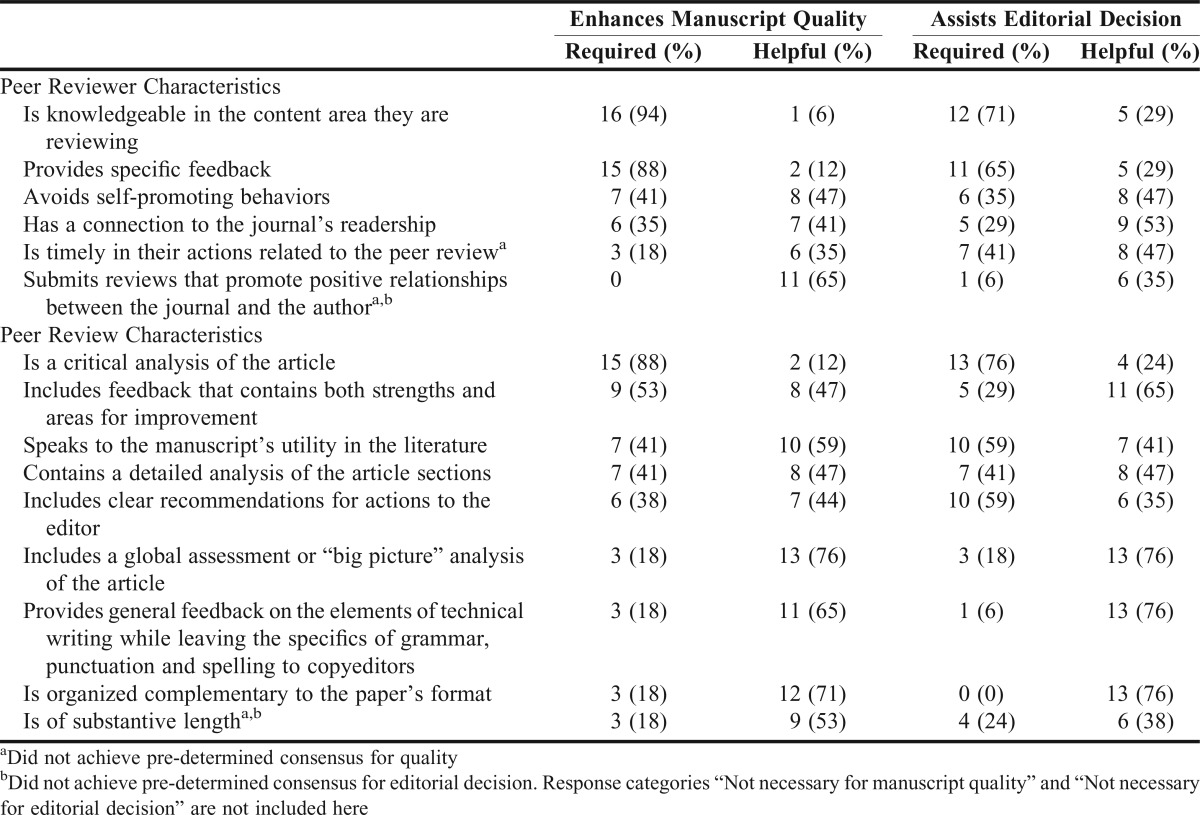

Figure 1 provides an overview of the Delphi method, which proceeded from exploration in Round 1, to ratings in Round 2, to clarifications in Round 3. In Round 1, participants were asked, “What is it that good reviewers do that provides you with a quality peer review?” Responses were grouped into six characteristics of peer reviewers. In Round 2, participants were asked to rate the importance of the characteristics for enhancing manuscript quality and making a decision on the manuscript (Table 1). In Round 3, clarifying questions, based on comments from Round 2, were asked. Low positive responses for timeliness and promoting positive relationships related to enhancing quality and making a decision on a manuscript were further explored by asking the degree to which those characteristics were important to managing a journal. When asked about importance to the editor for journal management, timeliness was rated as required (100%) and promotion of positive relationships was rated as required (31%) or helpful (63%).

Figure 1. Delphi Process Description.

Table 1. Peer Reviewer and Peer Review Characteristics Enhancing Manuscript Quality and Assisting Editorial Decisions (Rounds 1 and 2). N=17

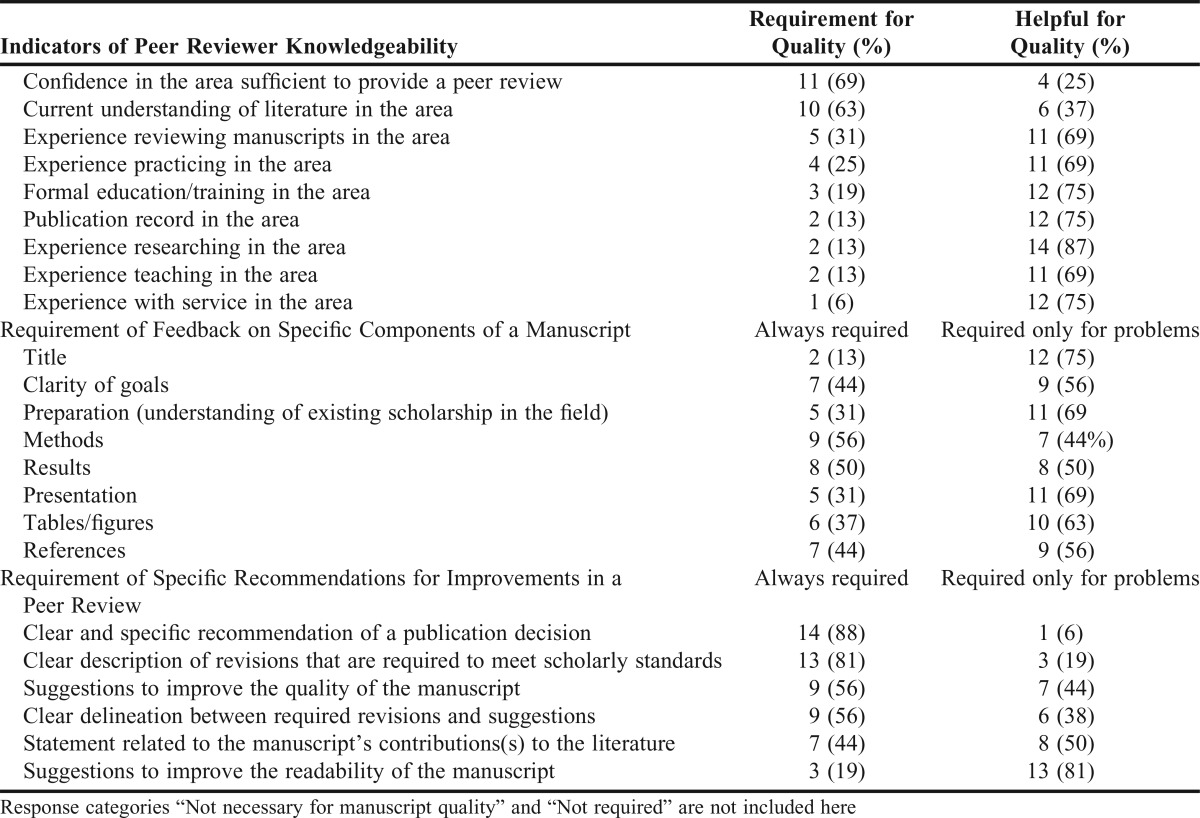

In addition, indicators of knowledge were further explored in Round 3 with the goal of seeking clarification rather than consensus. Editors were asked to rate the importance of a list of potential indicators of knowledgeability in providing a quality review (Table 2).

Table 2. Further Exploration and Clarification of Participant Comments (Round 3). N=16

In Round 1, participants also were asked, “When reading a peer review, what are the indicators of quality that you watch for?” Responses were grouped into nine characteristics (or indicators) of quality in a peer review. In Round 2, participants were asked to rate the importance of the indicators for enhancing manuscript quality and making a decision on the manuscript (Table 1).

In Round 2, there had been conflicting views on the importance of comments on grammar, punctuation and mechanics. Some responses stated that peer reviewers should not provide comments on the “technical” aspects of writing. Other responses stated that these comments were helpful and necessary. In Round 3, clarifying questions were asked. Respondents reported that feedback on the writing in a manuscript (eg, grammar and spelling) was always required (19%) or only required when problems exist (50%). Similarly, when the conflicting views among the group were described, and respondents were asked whether “peer reviewers should provide comments on grammar, punctuation, and mechanics,” 19% identified it as a requirement and 56% identified it as helpful, which was similar to the findings from the original question in Round 2.

In addition, in Round 2 there also appeared to be conflicting views on the contents of a review for manuscripts where the recommendation was to reject, with some comments indicating that a full review wasn’t needed, and others indicating that, to help authors develop, a full review was still required. In Round 3, clarifying questions were asked. When asked, “When the recommendation is to reject a paper, is a full review needed?” the majority of respondents (69%) responded that full reviews were still needed.

Comments in Round 2 indicated frustration with reviews that were missing either comments to the editor or comments to the authors. This issue was queried in Round 3, and respondents indicated that comments for both authors and editors were required (31%) or helpful (50%) for making manuscript decisions. In Round 3, for further clarification of comments from previous rounds, the importance of feedback on various sections of the article was explored, as well as specific types of recommendations and suggestions (Table 2).

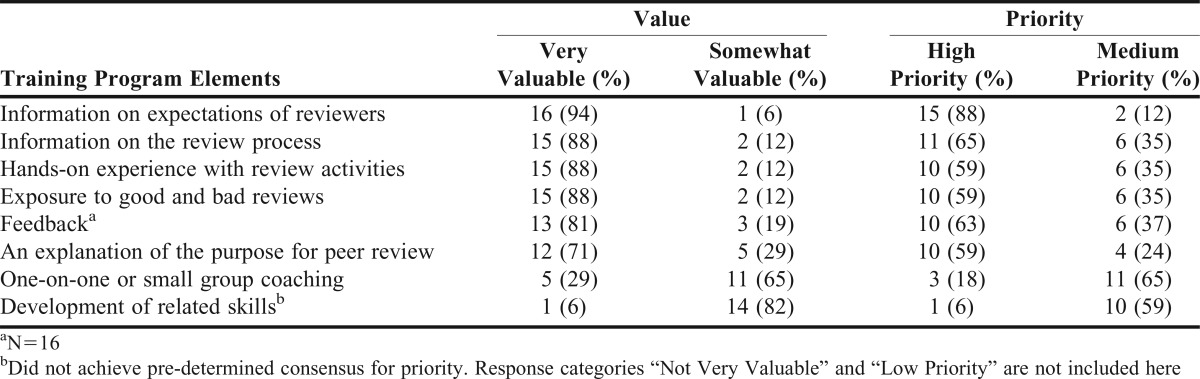

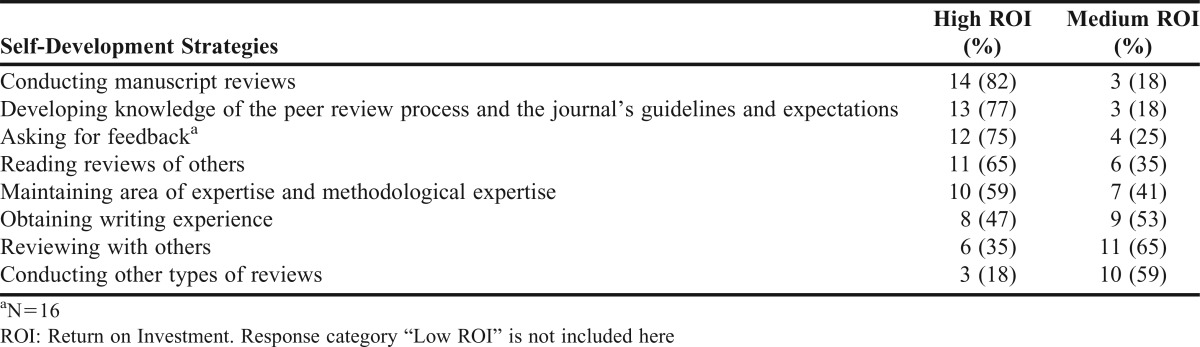

In Round 1, participants were asked to describe the training programs that would be most effective in preparing reviewers and the most successful strategies and/or development opportunities reviewers could self-direct to improve skills. Responses were grouped into eight elements for peer review training programs and eight strategies for self-development for peer reviewers. In Round 2, participants were asked to rate the value and priority of the elements for training programs (Table 3) and the perceived return on investment of self-development strategies (Table 4).

Table 3. Value and Priority for Elements of Peer Reviewer Training Programs (Rounds 1 and 2). N=17

Table 4. Perceived Return on Investment of Self-Development Efforts for Peer Reviewers (Rounds 1 and 2). N=17

In Round 2, options for feedback provided by journals to peer reviewers were examined. However, in Round 3, with two specific modalities receiving lower value and priority ratings (ie, one-on-one and small group coaching), respondents were asked about perceptions of value and feasibility for providing other modes of feedback. Individualized, written feedback from the journal, one-to-one verbal feedback from the journal, one-to-one verbal feedback from an assigned, experienced reviewer and one-to-one verbal feedback from a self-selected, experienced reviewer all met the 75% consensus level as very or somewhat valuable. However, none of these modes of feedback reached consensus for being highly or moderately feasible. Small group coaching (eg, one or more experienced reviewers working with multiple trainees) met consensus for value (100%) and feasibility (75%). Self-assessed practice review(s) (ie, self-evaluation compared to a high quality, sample peer review) also met consensus for value (94%) and feasibility (94%). Lastly, self-evaluation of a trainee’s review against other reviewers’ submissions (eg, accessed via a manuscript management system) was perceived as valuable (100%) and feasible (88%).

DISCUSSION

The goals of this study were to identify peer reviewer and peer review characteristics that enhance manuscript quality and editorial decisions, and identify valuable elements of training programs to be designed for pharmacy practice residents, postgraduate students, and new faculty in order to aid peer review quality. Current literature regarding the peer review process includes: journal-specific requirements for the contents of a review, elements to examine when considering the merits of a manuscript, measurements of a review’s accuracy, and general rubrics for scoring quality. This study pursued a different route, by querying editors about specific elements of a review, in addition to characteristics of a reviewer, deemed important for enhancing manuscript quality and assisting editorial decisions. Several characteristics identified by the editor panelists are consistent with those identified in the existing literature (eg, timely in their actions, detailed analysis of the article), in addition to some newly identified characteristics (eg, having a connection to the journal’s readership). Of the many identified characteristics, a small number were rated by the panelists as required. However, many were rated as helpful.

When writing reviews, conducting and communicating a critical analysis (88% required for manuscript quality) is an important focus (Table 1). In addition, in a study of reviewer comments on medical education manuscripts, about 40% of the reviewers recommended rejection but provided no unsatisfactory ratings.14 Panelists here recommended both a clear and specific recommendation of a publication decision (88% always required) and a clear description of revisions that are required to meet scholarly standards (81% always required) (Table 2). Attention also should be paid to a review that speaks to the manuscript’s utility in the literature (100% required or helpful in manuscript quality) and providing feedback that contains both strengths and areas for improvement (100% required or helpful in manuscript quality).

As development opportunities are considered, it may seem reasonable to focus on the required elements, particularly those required for editorial decision-making. However, peer review is an essential element in promoting the quality and excellence of papers;26 it goes beyond providing guidance on a publication decision. Therefore, reviewers should be familiar with elements that are considered “helpful” and develop skills in these areas, as well. Peer reviewer development is a responsibility shared by reviewers (self-development), those directly supporting individual reviewers (eg, mentors, department heads), and those supporting the peer review process at a systems-level (eg, journals). The discussion will address each of these audiences.

Trainees and new faculty should not consider themselves ineligible to review. The editors in this study agreed that reviewers should be knowledgeable in the content area they are reviewing (94% rated required). However, a publication record in the area (13% required) and experience reviewing manuscripts in the area (31% rated required) were not rated as highly as current understanding of literature in the area (63% rated required) and confidence in the area sufficient to provide a peer review (69% rated required). Building confidence and understanding of the literature may mean focusing areas of expertise and creating a habit of reading within particular areas of interest. It has been suggested that new reviewers initially select a maximum of two to three areas of expertise, when adding themselves to a journal’s reviewer database, in order to focus efforts on topics with which they are most familiar.21 To aid in staying abreast of the literature, consideration should be given to setting up automated monthly searches of selected keywords in Web of Science and other databases, enlisting a university librarian to help in establishing these automated searches, if needed.

This study also investigated the return on investment (ROI) of various self-development methods (Table 4), such as conducting manuscript reviews (82% rated high ROI). Repetition is a consideration in building manuscript peer review ability, with one study of nursing journal reviewers indicating the need to complete one to five reviews to feel comfortable.50 Other self-development activities include asking for feedback (75% rated high ROI) and reading peer reviews completed by colleagues (65% rated high ROI). In addition to asking colleagues to share a completed review (with appropriate permissions, as needed), reviewers can often see comments from others on manuscript re-review or via manuscript management systems. Although this would be a lengthy process, “self-teaching” by emulating styles of reviews that authors have personally received on their own submitted work has also been described.21 New reviewers are encouraged to consider the value of each of these strategies.

Although self-development initiatives can have an important impact, support from department chairs, graduate program directors, residency directors, faculty development offices and administrators is needed to optimize the development of the manuscript peer review abilities of faculty, residents and graduate students. Development can move beyond understanding the process. For example, hands-on experience with review activities (100% very or somewhat valuable) and exposure to good and bad reviews (100% very or somewhat valuable) could be facilitated through local efforts within departments and schools. These efforts might include incorporating manuscript peer review into an existing seminar series, mentoring program, or continuing professional development process. In particular, the panelists indicated that one-on-one or small group coaching was desirable (94% very or somewhat valuable). Berquist developed a pilot mentorship program pairing six experienced reviewers with one to two inexperienced reviewers each to conduct a minimum of four reviews within a one-year period. He noted the inexperienced reviewers had a better understanding of the structure and significance of excellent reviews after completion of the program.51

Administrators and department chairs also can assist by encouraging and supporting related activities, such as journal clubs, or groups to facilitate focused expertise development, such as communities of practice52 and faculty learning communities.53,54 With peer review being a recognizable service to the academy, intentional development efforts are important to ensure a valuable service is contributed. In addition, a solid foundation in understanding peer review criteria may contribute positively to navigating authorship prior to article submission and after receipt of editorial decisions. Because of the universal faculty expectation for participating in peer review and/or scholarship efforts, graduate, fellowship and residency programs (especially those offering faculty training options) should address peer reviewer development.

Three characteristics of reviews were rated required or helpful in enhancing manuscript quality by all respondents: including a critical analysis of the manuscript (88% required, 12% helpful), including feedback that contains both strengths and areas of improvement (53% required, 47% helpful), and speaking to the manuscript’s utility in the literature (41% required, 59% helpful) (Table 1). If reviewers are working within focused areas of expertise, an appraisal of the manuscript’s utility to the literature and feedback on strengths and weaknesses can likely be accomplished by attention to this task. Journals may need to more explicitly request these elements via directions, review forms and training. However, effective critical analysis is more complex. Health professional educators are working to incorporate evidence-based practice skills (including critical appraisal) more strongly in curricula.55–57 In addition, some efforts have been made to develop these skills in practitioners, with at least one study questioning the value of “one-off” interventions, such as workshops.58 Journals may need to consider partnering together to develop innovative approaches to building this skill, as well as partnering with educators.

Journals can assist in addressing some of the elements of training identified as valuable/somewhat valuable by this study (Table 3). Basic training for reviewers likely already includes information on expectations of reviewers (100% very or somewhat valuable) and information on the review process (100% very or somewhat valuable). While hands-on experience with review activities (100% very or somewhat valuable) and exposure to good and bad reviews (100% very or somewhat valuable) might be provided by a postgraduate training program or an employer, they may also be addressed by journals. Options may include an online program containing a practice review and/or samples of stronger and weaker reviews. In particular, the opportunity for comparison is important. Freda and colleagues reported that 80% of reviewers would like to see the reviews of others to gauge the quality of their own reviews.50 Journals also could consider an optional program pairing seasoned reviewers (eg, editorial board members) with newer reviewers. One proposed mechanism is to consider a reviewer “in training” for their first three reviews, pairing them with someone that agrees to read their reviews.50 Other editors have paired new reviewers with senior reviewers in a semi-structured mentor/mentee relationship with one reporting “significant improvement in reviews,”59 and a more controlled study not demonstrating improvement.60 In designing mentoring programs, many variables might affect outcomes. As journals consider this type of program, consultation of the existing literature is recommended and scholarship in this area is encouraged.

Several limitations exist in the present study. Although all journals are international to some degree, our sample is largely reflective of US-based perspectives. Although the Delphi technique is an accepted method for examining quality, it is always possible that additional panelists would yield additional perspectives meriting further discussion. Inclusion was limited to journals frequently used by pharmacy practice and pharmacy administration faculty for publication. Additional perspectives could be gained from editors representing other disciplines, such as medicinal chemistry and pharmaceutics. While the editor’s perspective is relevant, the perspectives of new reviewers, seasoned reviewers, and/or mentors may also add to the conversation on quality.

In short, additional perspectives represent an opportunity for future research. Future research could also explore whether the article type (eg, original research, instructional design and assessment) requires different attributes from the reviewer or unique training, and could examine the connection between improvements in the quality of peer review and improvements in the quality of scholarship. More broadly, research on the personal benefits of manuscript peer review may aid editors and those supporting peer reviewer training in better explaining the value of this service.

CONCLUSION

Across the scientific and health disciplines, maintaining high ethical standards and quality within the peer review process continues to be a critical issue. This study identified peer reviewer and peer review characteristics that enhance manuscript quality and aid editorial decisions, as well as valuable elements of training programs to aid in promoting quality. Nineteen editors from eight journals publishing a high volume of pharmacy practice and pharmacy administration faculty manuscripts provided input. To increase the overall quality of reviews, individual reviewers must seek to define focused areas of expertise, build knowledge in those areas, and gain experience through both repetition and feedback. Reviewers also should offer a clear and specific recommendation of the revisions required to meet scholarly standards, along with a publication recommendation. To support peer reviewer development through mentorship and local efforts, department chairs, graduate program directors, residency directors, faculty development offices, and administrators must integrate peer reviewer development into existing seminar series, mentorship programming, and continuing professional development processes. In particular, hands-on experience with reviews and exposure to good and bad reviews are needed through small group and one-on-one activities. Pharmacy journals should provide explicit requests for an appraisal of the manuscript’s utility to the literature and for feedback on strengths and weaknesses. The skills needed for “a critical analysis of the manuscript” are complex and will require innovation and collaborative efforts between journals and with educators. As development programs are designed, consultation of the existing literature is recommended and additional scholarship focused on promoting quality in peer review of manuscripts is encouraged.

REFERENCES

- 1. American Association of Colleges of Pharmacy. 2013-14 Profile of pharmacy students. http://www.aacp.org/resources/research/institutionalresearch/Documents/2014_PPS_Degrees%20Conferred%20Tables.pdf. Accessed September 23, 2015.

- 2. Knapp KK, Shah BM, Kim HB, Tran H. Visions for required postgraduate year 1 residency training by 2020: a comparison of actual versus projected expansion. Pharmacotherapy. 2009;29(9):1030–1038. doi: 10.1592/phco.29.9.1030.

- 3. Summary results of the match for positions beginning in 2015. National Matching Services, Inc. https://www.natmatch.com/ashprmp/stats/2015progstats.html. Accessed September 23, 2015.

- 4. American Association of Colleges of Pharmacy. 2009-10 profile of pharmacy faculty. http://www.aacp.org/resources/research/institutionalresearch/Documents/200910_PPF_tables.pdf. Accessed September 23, 2015.

- 5. American Association of Colleges of Pharmacy. 2014-15 Profile of pharmacy faculty. http://www.aacp.org/resources/research/institutionalresearch/Documents/PPF1415-final.pdf. Accessed September 23, 2015.

- 6. Rennie D, Flanagin A, Godlee F, Bloom T. The eighth international congress on peer review and biomedical publication: a call for research. JAMA. 2015;313(20):2031–2032. doi: 10.1001/jama.2015.4665.

- 7. COPE ethical guidelines for peer reviewers. Committee on Publication Ethics. http://publicationethics.org/files/u7140/Peer review guidelines.pdf. Accessed September 23, 2015.

- 8. Kronick DA. Peer review in 18th-century scientific journalism. JAMA. 1990;263(10):1321–1322.

- 9. Kearney MH, Baggs JG, Broome ME, Dougherty MC, Freda MC. Experience, time investment, and motivators of nursing journal peer reviewers. J Nurs Scholarsh. 2008;40(4):395–400. doi: 10.1111/j.1547-5069.2008.00255.x.

- 10. Snell L, Spencer J. Reviewers’ perceptions of the peer review process for a medical education journal. Med Educ. 2005;39(1):90–97. doi: 10.1111/j.1365-2929.2004.02026.x.

- 11. Tite L, Schroter S. Why do peer reviewers decline to review? A survey. J Epidemiol Community Health. 2007;61(1):9–12. doi: 10.1136/jech.2006.049817.

- 12. Zellmer WA. What editors expect of reviewers. Am J Heal Pharm. 1977;34:819.

- 13. Squires BP. Biomedical manuscripts: what editors want from authors and peer reviewers. CMAJ. 1989;141(1):17–19.

- 14. Bordage G. Reasons reviewers reject and accept manuscripts: the strengths and weaknesses in medical education reports. Acad Med. 2001;76(9):889–896. doi: 10.1097/00001888-200109000-00010.

- 15. Sylvia LM, Herbel JL. Manuscript peer review – a guide for health care professionals. Pharmacotherapy. 2001;21(4):395–404. doi: 10.1592/phco.21.5.395.34493.

- 16. Hoppin FG Jr. How I review an original scientific article. Am J Respir Crit Care Med. 2002;166(8):1019–1023. doi: 10.1164/rccm.200204-324OE.

- 17. Benos DJ, Kirk KL, Hall JE. How to review a paper. Adv Physiol Educ. 2003;27(2):47–52. doi: 10.1152/advan.00057.2002.

- 18. Fisher RS, Powers LE. Peer-reviewed publication: a view from inside. Epilepsia. 2004;45(8):889–894. doi: 10.1111/j.0013-9580.2004.14204.x.

- 19. Provenzale JM, Stanley RJ. A systematic guide to reviewing a manuscript. J Nucl Med Technol. 2006;34(2):92–99.

- 20. Neill US. How to write an effective referee report. J Clin Invest. 2009;119(5):1058–1060. doi: 10.1172/JCI39424.

- 21. Lovejoy TI, Revenson TA, France CR. Reviewing manuscripts for peer-review journals: a primer for novice and seasoned reviewers. Ann Behav Med. 2011;42(1):1–13. doi: 10.1007/s12160-011-9269-x.

- 22. Keenum A, Shubrook J. How to peer review a scientific or scholarly article. Osteopath Fam Physician. 2012;4(6):176–179.

- 23. Einarson TR, Koren G. To accept or reject? A guide to peer reviewing of medical journal papers. J Popul Ther Clin Pharmacol. 2012;19(2):e328–e333.

- 24. Allen TW. Peer review guidance: how do you write a good review? J Am Osteopath Assoc. 2013;113(12):916–920. doi: 10.7556/jaoa.2013.070.

- 25. Robbins SP, Fogel SJ, Busch-Armendariz N, Wachter K, McLaughlin H, Pomeroy EC. From the editor – writing a good peer review to improve scholarship: what editors value and authors find helpful. J Soc Work Educ. 2015;51(2):199–206.

- 26. Brazeau GA, DiPiro JT, Fincham JE, Boucher BA, Tracy TS. Your role and responsibilities in the manuscript peer review process. Am J Pharm Educ. 2008;72(3):Article 69. doi: 10.5688/aj720369.

- 27. Caelleigh AS, Shea JA, Penn G. Selection and qualities of reviewers. Acad Med. 2001;76(9):914–916.

- 28. Navalta JW, Lyons TS. Student peer review decisions on submitted manuscripts are as stringent as faculty peer reviewers. Adv Physiol Educ. 2010;34:170–173. doi: 10.1152/advan.00046.2010.

- 29. Callaham ML, Baxt WG, Waeckerle JF, Wears RL. Reliability of editors’ subjective quality ratings of peer reviews of manuscripts. JAMA. 1998;280(3):229–231. doi: 10.1001/jama.280.3.229.

- 30. Baxt WG, Waeckerle JF, Berlin JA, Callaham ML. Who reviews the reviewers? Feasibility of using a fictitious manuscript to evaluate peer reviewer performance. Ann Emerg Med. 1998;32(3):310–317. doi: 10.1016/s0196-0644(98)70006-x.

- 31. Feurer ID, Becker GJ, Picus D, Ramirez E, Darcy M, Hicks E. Evaluating peer reviews: pilot testing of a grading instrument. JAMA. 1994;272(2):98–100. doi: 10.1001/jama.272.2.98.

- 32. Van Rooyen S, Black N, Godlee F. Development of the Review Quality Instrument (RQI) for assessing peer reviews of manuscripts. J Clin Epidemiol. 1999;52(7):625–629. doi: 10.1016/s0895-4356(99)00047-5.

- 33. Callaham ML, Tercier J. The relationship of previous training and experience of journal peer reviewers to subsequent review quality. PLoS Med. 2007;4(1):e40. doi: 10.1371/journal.pmed.0040040.

- 34. Black N, Van Rooyen S, Godlee F, Smith R, Evans S. What makes a good reviewer and a good review for a general medical journal? JAMA. 1998;280(3):231–233. doi: 10.1001/jama.280.3.231.

- 35. Van Rooyen S, Godlee F, Evans S, Smith R, Black N. Effect of blinding and unmasking on the quality of peer review. J Gen Intern Med. 1999;14(10):622–624. doi: 10.1046/j.1525-1497.1999.09058.x.

- 36. McNutt RA, Evans AT, Fletcher RH, Fletcher SW. The effects of blinding on the quality of peer review. JAMA. 1990;263(10):1371–1376.

- 37. Van Rooyen S, Delamothe T, Evans SJW. Effect on peer review of telling reviewers that their signed reviews might be posted on the web: randomised controlled trial. BMJ. 2010;341:c5729. doi: 10.1136/bmj.c5729.

- 38. Boulkedid R, Abdoul H, Loustau M, Sibony O, Alberti C. Using and reporting the Delphi method for selecting healthcare quality indicators: a systematic review. PLoS One. 2011;6(6):e20476. doi: 10.1371/journal.pone.0020476.

- 39. Elwyn G, O’Connor A, Stacey D, et al. Developing a quality criteria framework for patient decision aids: online international Delphi consensus process. BMJ. 2006;333(7565):417. doi: 10.1136/bmj.38926.629329.AE.

- 40. Engels Y, Campbell S, Dautzenberg M, et al. Developing a framework of, and quality indicators for, general practice management in Europe. Fam Pract. 2005;22(2):215–22. doi: 10.1093/fampra/cmi002.

- 41. Sindhu F, Carpenter L, Seers K. Development of a tool to rate the quality assessment of randomized controlled trials using a Delphi technique. J Adv Nurs. 1997;25(6):1262–1268. doi: 10.1046/j.1365-2648.1997.19970251262.x.

- 42. Hasson F, Keeney S, McKenna H. Research guidelines for the Delphi survey technique. J Adv Nurs. 2000;32(4):1008–15.

- 43. Hsu CC, Sandford BA. The Delphi technique: making sense of consensus. Pract Assess, Res Eval (online) 2007;12(10):1–8.

- 44. Powell C. The Delphi technique: myths and realities. J Adv Nurs. 2003;41(4):376–382. doi: 10.1046/j.1365-2648.2003.02537.x.

- 45. Keeney S, Hasson F, McKenna HP. A critical review of the Delphi technique as a research methodology for nursing. Int J Nurs Stud. 2001;38(2):195–200. doi: 10.1016/s0020-7489(00)00044-4.

- 46. Williams PL, Webb C. The Delphi technique: a methodological discussion. J Adv Nurs. 1994;19(1):180–186. doi: 10.1111/j.1365-2648.1994.tb01066.x.

- 47. Clayton MJ. Delphi: a technique to harness expert opinion for critical decision-making tasks in education. Educ Psychol. 1997;17(4):373–386.

- 48. Keeney S, Hasson F, McKenna H. Consulting the oracle: ten lessons from using the Delphi technique in nursing research. J Adv Nurs. 2006;53(2):205–212. doi: 10.1111/j.1365-2648.2006.03716.x.

- 49. Delbecq A, Van de Ven A, Gustafson D. Group Techniques for Program Planning: A Guide to Nominal Group and Delphi Processes. Glenview, IL: Scott, Foresman and Co.; 1975. The Delphi technique; pp. 83–107.

- 50. Freda MC, Kearney MH, Baggs JG, Broome ME, Dougherty M. Peer reviewer training and editor support: results from an international survey of nursing peer reviewers. J Prof Nurs. 2009;25(2):101–108. doi: 10.1016/j.profnurs.2008.08.007.

- 51. Berquist TH. AJR reviewers: we need to continue to improve our education and monitoring methods! Am J Roentgenol. 2013;200(6):1179–1180. doi: 10.2214/AJR.13.10792.

- 52. Wenger E, McDermott R, Snyder WM. Cultivating Communities of Practice. Boston, MA: Harvard Business School Publishing; 2002.

- 53. Cox MD. Introduction to faculty learning communities. New Dir Teach Learn. 2004;Spring(97):5–23.

- 54. Richlin L, Essington A. Faculty learning communities for preparing future faculty. New Dir Teach Learn. 2004;Spring(97):149–157.

- 55. Khan KS, Coomarasamy A. A hierarchy of effective teaching and learning to acquire competence in evidenced-based medicine. BMC Med Educ. 2006;6:59. doi: 10.1186/1472-6920-6-59.

- 56. Maggio LA, Cate OT, Irby DM, O’Brien BC. Designing evidence-based medicine training to optimize the transfer of skills from the classroom to clinical practice. Acad Med. 2015;90(11):1457–1461. doi: 10.1097/ACM.0000000000000769.

- 57. Maggio LA, Tannery NH, Chen HC, Cate OT, O’Brien B. Evidence-based medicine training in undergraduate medical education: a review and critique of the literature published 2006-2011. Acad Med. 2013;88(7):1022–1028. doi: 10.1097/ACM.0b013e3182951959.

- 58. Taylor RS, Reeves BC, Ewings PE, Taylor RJ. Critical appraisal skills training for health care professionals: a randomized controlled trial. BMC Med Educ. 2004;4(1):30. doi: 10.1186/1472-6920-4-30.

- 59. Berquist TH. The new reviewer assistance program: a multipronged approach! Am J Roentgenol. 2013;201(1):1. doi: 10.2214/AJR.13.10940.

- 60. Houry D, Green S, Callaham M. Does mentoring new peer reviewers improve review quality? A randomized trial. BMC Med Educ. 2012;12(1):83. doi: 10.1186/1472-6920-12-83.