Abstract

Single-molecule super-resolution fluorescence microscopy and single-particle tracking are two imaging modalities that illuminate the properties of cells and materials, providing structural detail on spatial scales down to tens of nanometers or dynamical information about nanoscale particle motion on millisecond time scales, respectively. These methods generally use wide-field microscopes and two-dimensional camera detectors to localize molecules to much higher precision than the diffraction limit. Given the limited total photons available from each single-molecule label, both modalities require careful mathematical analysis and image processing. Much more information can be obtained about the system under study by extending to three-dimensional (3D) single-molecule localization: without this capability, structures or motions extending in the axial direction can easily be missed or misinterpreted, compromising scientific understanding. A variety of methods for obtaining both 3D super-resolution images and 3D tracking information have been devised, each with its own strengths and weaknesses. These include imaging of multiple focal planes, point-spread-function engineering, and interferometric detection. These methods may be compared based on their ability to provide accurate and precise position information for single-molecule emitters with limited photons. To successfully apply and further develop these methods, it is essential to consider many practical concerns, including the effects of optical aberrations, field dependence in the imaging system, fluorophore labeling density, and registration between different color channels. Selected examples of 3D super-resolution imaging and tracking, drawn from a variety of biological contexts and obtained with a variety of methods, are described for illustration, demonstrating the power of 3D localization for understanding complex systems.

1. Introduction

Fluorescence microscopy has been a mainstay of biological and biomedical laboratories since its inception.(1) Labeling specific proteins or oligonucleotides with a small fluorophore (2) or an autofluorescent protein (3, 4) lights up those molecules of interest against a dark background in a relatively non-invasive way, painting a picture of subcellular structures and intermolecular interactions. Imaging of fluorescent labels has also been applied to the study of non-biological materials such as polymers and glasses,(5) mesoporous materials,(6) and directly to natively-fluorescent antenna complexes.(7) The resulting fluorescence images have provided useful information about structures, dynamics, and interactions for decades, first with wide-field epifluorescence microscopes.

A major advance occurred more than 27 years ago: the ability to optically detect and spectrally characterize single molecules in a condensed phase by direct detection of the absorption,(8) followed a year later by detection of single-molecule absorption by sensing emitted fluorescence in solids (9) and in solution.(10) Single-molecule spectroscopy (SMS) and imaging allow exactly one molecule hidden deep within a crystal, polymer, or cell to be observed via optical excitation of the molecule of interest. This represents the ultimate sensitivity level of ~1.66 × 10⁻²⁴ moles of the molecule of interest (1.66 yoctomole), but the detection must be achieved in the presence of billions to trillions of solvent or host molecules. Successful experiments must meet the requirements of (a) guaranteeing that only one molecule is in resonance in the volume probed by the laser, and (b) providing a signal-to-noise ratio (SNR) for the single-molecule signal that is greater than unity for a reasonable averaging time. The first of these two requirements means that at room temperature, the single molecules need to be farther apart than about 500 nm so that the diffraction-limited spots from each do not overlap, and this is generally achieved by dilution. The power of the method rests primarily upon the removal of ensemble averaging: in contrast to traditional spectroscopy, it is no longer necessary to average over billions to trillions of molecules to measure optical quantities such as brightness, lifetime, emission spectrum, polarization, and so on. Therefore, it becomes possible to directly measure distributions of behavior to explore hidden heterogeneity, a property that would be expected in complex environments such as in cells, polymers, or other materials. In the time domain, the ability to optically sense internal states of one molecule and the transitions among them allows measurement of hidden kinetic pathways and the detection of rare intermediates.
Because typical single-molecule labels behave like tiny light sources roughly 1–3 nm in size and can report on their immediate local environment, single-molecule studies provide a new window into the nanoscale with intrinsic access to time-dependent changes. The basic principles of single-molecule optical spectroscopy and imaging have been the subject of many reviews (11–21) and books (22–25).

This article considers two applications of single-molecule spectroscopy as extended to three spatial dimensions: “single-particle tracking” (SPT) of individual molecules in motion, and “super-resolution” (SR) microscopy of extended structures to provide details of the shape of an object on a very fine scale. Both of these methods rest on imaging of single molecules, where the x–y position is extracted from the image to localize the molecule. (For concision, we will generally refer to point emitters as single fluorescent molecules, since quantum dot nanocrystals, metallic nanoparticles, etc. can be localized similarly.) Many types of microscopes can be used to measure the positions of isolated single molecules,(18) including wide-field, confocal, total-internal-reflection, two-photon, etc., but a key question is: How precisely can the position of a single molecule be determined? Unfortunately, fundamental diffraction effects (26) blur the molecule’s detected emission profile, limiting its smallest width on the camera in sample units to roughly the optical wavelength λ divided by two times the numerical aperture (NA) of the imaging system (Figure 1A). Since the largest values of NA for state-of-the-art, highly corrected microscope objectives are in the range of about 1.3–1.5, the minimum spatial size of single-molecule spots is limited to about 200 nm for visible light of 500 nm wavelength. Nevertheless, the shape of the spot carries useful information, in that a model function can be fit to the measured pixelated image to obtain the molecular position. The precision of this localization can be far smaller than the diffraction-limited width of the spot, and is limited primarily by the number of photons detected from the molecule. Both the precision and accuracy of this localization process are described in detail in Sections 2 and 3 of this paper, not only for 2D, but also for 3D determinations of the molecular position.

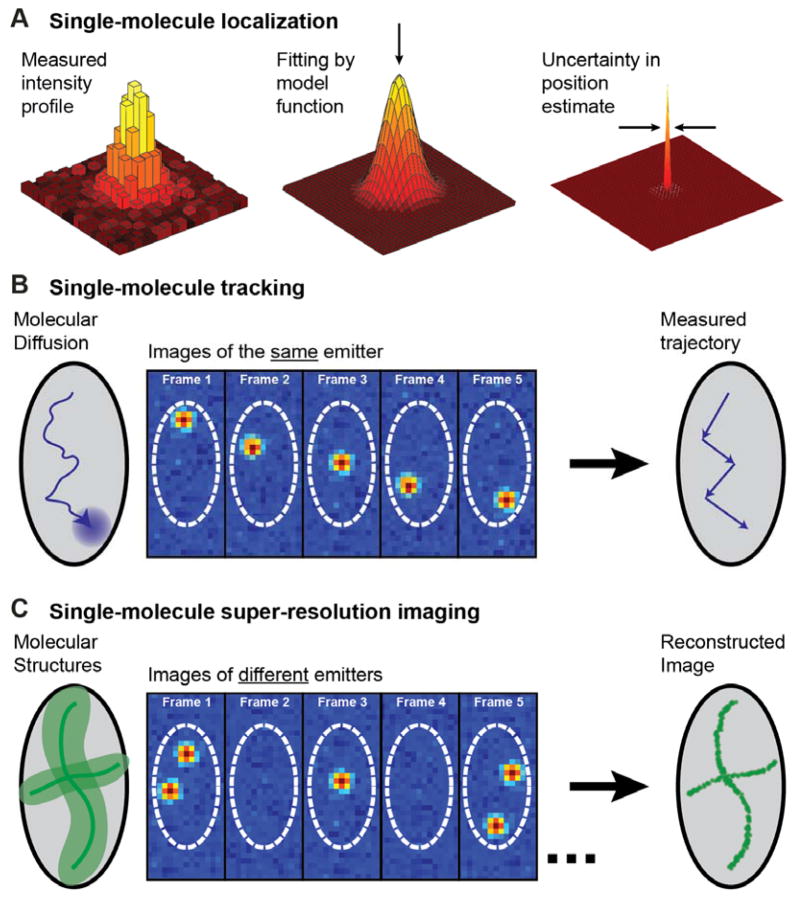

Figure 1.

The principles of single-molecule localization and its applications to particle tracking and super-resolution. (A) The pattern of light formed on a camera by a single fluorescent molecule is blurred by diffraction, but can be fit by a model function to yield a localization with uncertainty at least an order of magnitude lower than the diffraction-limited resolution, depending upon the photons detected. (B) If only one fluorescing molecule is present in a diffraction-limited volume, its motion can be localized in successive frames to build up a track of its motion, as illustrated for an abstraction of a cell. (C) Alternatively, single-molecule localization can surpass diffraction-limited resolution (left, light green area) when imaging extended structures (dark green lines). By (photo)chemically or photophysically limiting the number of simultaneously active immobile emitters, different labels are turned on, localized, and turned off in many successive frames, building up a super-resolution reconstruction of the underlying structures. Inspired by ref. (27).

Single-particle tracking (SPT) is one method that applies single-emitter localization to answer key questions in both biology and materials science. In an SPT experiment, the spatial trajectory of a given molecule is determined by repeatedly detecting and localizing it at many sequential time points (Figure 1B).(28) Analysis of the resulting single-molecule tracks provides information on the mode of motion of the set of labeled molecules, which may be diffusive, motor-directed, confined, or a mixture of these modes.(29–34) For materials science applications and in vitro reconstitutions of biological systems, dilution of the fluorescent probe is typically sufficient to achieve single-molecule concentrations. For fluorescently tagged biomolecules in living cells, it is sometimes necessary to use other means to lower the emitter concentration. These include lowering the expression levels of genetically encoded labels,(35) quenching or photobleaching an initially large number of emitters, chemical generation of emitters, or sparse activation of only a few photoactivatable molecules at a time.(36, 37) While in vitro measurements are simpler, intracellular crowding and binding interactions with cellular structures influence measured trajectories, so it is more representative to observe the motion of the molecule of interest as it performs its function in its native biological environment, either in the cytoplasm or in the membrane.(38)
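As a rough illustration of how track analysis distinguishes modes of motion, the Python (NumPy) sketch below simulates a 2D Brownian trajectory and estimates the diffusion coefficient from the mean squared displacement (MSD), which for free 2D diffusion grows as MSD(t) = 4Dt. The diffusion coefficient, frame time, and track length are hypothetical values chosen for illustration, and localization error is ignored.

```python
import numpy as np

def simulate_brownian_track(n_steps, D, dt, rng):
    """Free 2D diffusion: each coordinate step is Normal(0, sqrt(2*D*dt))."""
    steps = rng.normal(0.0, np.sqrt(2.0 * D * dt), size=(n_steps, 2))
    return np.vstack([np.zeros((1, 2)), np.cumsum(steps, axis=0)])

def mean_squared_displacement(track, max_lag):
    """Time-averaged MSD for lags of 1..max_lag frames."""
    lags = np.arange(1, max_lag + 1)
    msd = np.array([np.mean(np.sum((track[lag:] - track[:-lag]) ** 2, axis=1))
                    for lag in lags])
    return lags, msd

rng = np.random.default_rng(0)
D_true = 0.5   # um^2/s (hypothetical)
dt = 0.01      # s per frame (hypothetical 10 ms exposure)
track = simulate_brownian_track(5000, D_true, dt, rng)
lags, msd = mean_squared_displacement(track, max_lag=10)

# Free 2D diffusion gives MSD(t) = 4 D t; least-squares line through the origin.
t = lags * dt
D_est = np.sum(msd * t) / (4.0 * np.sum(t * t))
print(f"true D = {D_true}, estimated D = {D_est:.3f} um^2/s")
```

Confined motion would instead make the MSD curve plateau, and directed motion makes it grow faster than linearly (as t² for constant velocity); in real data, static localization error also adds a positive offset to the MSD.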

Turning now to the second method, let us pose a problem: how can one extract images of structures if the power of optical microscopy to resolve two closely spaced emitters is limited by the fundamental effects of diffraction? This problem has been overcome by the invention of “super-resolution” optical microscopy, recognized by the 2014 Nobel Prize in Chemistry awarded to one of us (W.E.M.),(39) Eric Betzig,(40) and Stefan Hell,(41) an achievement which continues to stimulate a revolution in biological and materials microscopy. Hell pursued Stimulated Emission Depletion (STED) microscopy and its variants, while the focus of this paper is on approaches to overcome the diffraction limit using single molecules, which rely on a clever modification of standard wide-field single-molecule fluorescence microscopy,(42–44) described in more detail in other papers in this special issue.

These single-molecule super-resolution (SR) imaging approaches are summarized in Figure 1C. As opposed to tracking of the same molecule, SR determines the positions of different molecular labels at different times to resolve a static structure. The essential requirements for this process are (a) sufficient sensitivity to enable imaging of single-molecule labels, (b) determination of the position of a single molecule with a precision better than the diffraction limit, and (c) the addition of some form of on/off control of the molecular emission to keep the concentration of emitting molecules very low in each imaging frame. This last point is crucial: the experimenter must actively pick some photophysical, photochemical, or other mechanism which forces most of the emitters to be off while only a very small, non-overlapping subset is on. If these labeled copies are incorporated into a larger structure, such as a polymeric protein filament, then their positions recorded from different imaging frames randomly sample this structure. A point-by-point reconstruction can then be assembled by combining the localized positions of all detected molecules in a computational post-processing step. Importantly, because all molecules are localized with a precision of tens of nanometers, this approach circumvents the diffraction limit that otherwise restricts image resolution to 200–300 nm in conventional fluorescence microscopy.
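The logic of on/off control followed by computational reconstruction can be sketched in a few lines of Python (NumPy). In this toy model, which is an assumption rather than a model of any specific photophysics, labels on two hypothetical filaments 50 nm apart are switched on at random with a small per-frame probability, each active label is "localized" by adding 10 nm Gaussian error in place of actual image fitting, and all localizations are pooled.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical structure: two parallel filaments at x = -25 nm and x = +25 nm,
# i.e. 50 nm apart, far below the ~250 nm diffraction limit.
n_labels = 400
label_x = np.where(rng.random(n_labels) < 0.5, -25.0, 25.0)
label_y = rng.uniform(-500.0, 500.0, n_labels)

p_on = 0.005        # per-frame probability that a label is emitting (keeps frames sparse)
sigma_loc = 10.0    # assumed localization precision (nm)
n_frames = 2000

localizations = []
for _ in range(n_frames):
    active = rng.random(n_labels) < p_on           # sparse random subset is "on"
    n_active = int(active.sum())
    # Stand-in for fitting: true position plus Gaussian localization error.
    xs = label_x[active] + rng.normal(0.0, sigma_loc, n_active)
    ys = label_y[active] + rng.normal(0.0, sigma_loc, n_active)
    localizations.append(np.column_stack([xs, ys]))

points = np.vstack(localizations)
print(len(points), "localizations; mean active emitters per frame:", n_labels * p_on)

# Reconstruction: a histogram of x positions (10 nm bins) separates the two filaments.
counts, edges = np.histogram(points[:, 0], bins=np.arange(-60, 61, 10))
print(counts)
```

Because each frame contains only about two active emitters, their images would rarely overlap, yet the pooled histogram separates two structures that a diffraction-limited image would blur together. Real fluorophores bleach or switch with specific kinetics, which this sketch ignores.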

The required sparsity in the concentrations of emitting molecules can be achieved by a variety of methods. The PAINT method(45) (Points Accumulation for Imaging in Nanoscale Topography) relies upon the photophysical behavior of certain molecules that light up when bound or constrained, and this idea was initially demonstrated with the twisted intramolecular charge transfer turn-on behavior of Nile Red.(46) PAINT has the advantages that the object to be imaged need not be labeled and that many individual fluorophores are used for the imaging, thus relaxing the requirement on the total number of photons detected from each single molecule. In the STORM approach (44) (Stochastic Optical Reconstruction Microscopy), cyanine dye molecules (e.g. Cy5) are forced into a dark state by photoinduced reaction with nearby thiols. A small subset of the emitters return from the dark form either thermally or by pumping of a nearby auxiliary molecule like Cy3. In the (f)PALM approach (42, 43) (fluorescence PhotoActivated Localization Microscopy), a photoactivatable fluorescent protein label is used, and a weak activation beam creates a small concentration of emitters, which are localized until they are bleached; the process is repeated many times.

Other active control mechanisms have been demonstrated giving rise to a menagerie of acronyms and names, including dSTORM (direct STORM) (47), GSDIM (Ground-State Depletion with Individual Molecule return)(48), “Blink Microscopy” (49), SPDM (Spectral Precision Determination Microscopy) (50), and many others. Photoactivation methods have been extended to organic dyes,(51, 52) photorecovery and/or photoinduced blinking can be used for SR with fluorescent proteins such as eYFP,(53, 54) and even enzymatic methods produce turn-on which may be controlled by the concentration of substrate and the enzymatic rate.(55)

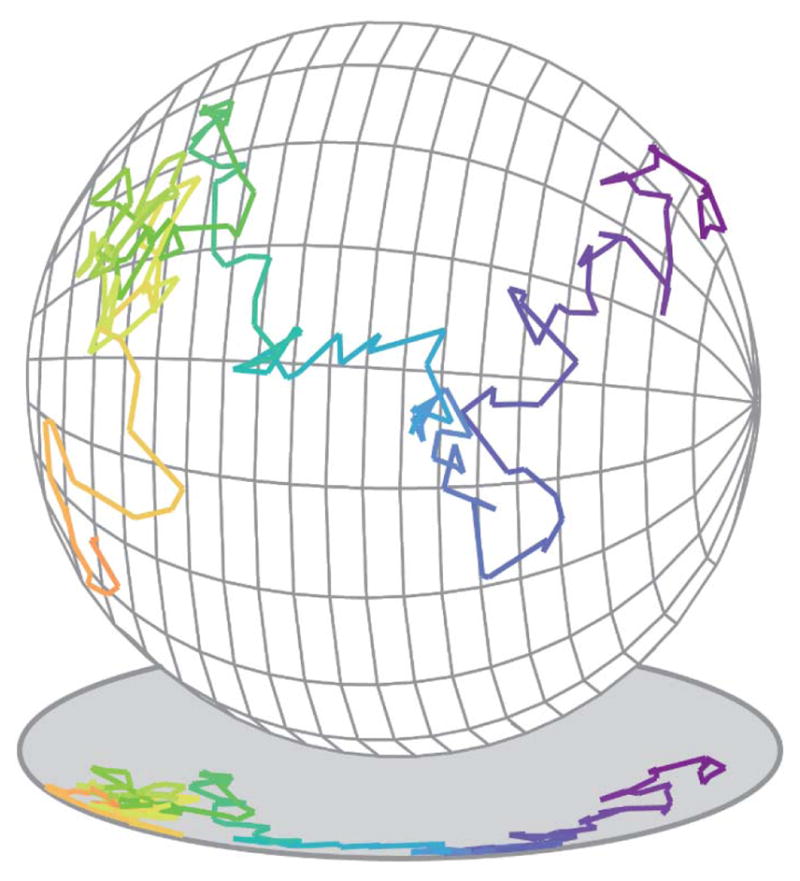

Between the large improvement in resolution (a factor of 5 or more, typically down to the 20–40 nm range) of SR microscopy, and the dynamical information and understanding of heterogeneity available from SPT, single-molecule localization has revealed structures and processes that were previously unresolvable. As treated in previous reviews,(56–65) and discussed throughout this special issue, these improvements have allowed scientists to address many long-standing questions spanning the fields of biology and materials science. The methodological challenge that we focus on in this review, of how to best obtain 3D information in SPT and SR microscopy, touches all of these subject areas. The reason 3D information is needed is simple: the world is three-dimensional, so if we can only make 2D measurements, we will likely miss critical information. For example, as shown in Figure 2, motion on a 3D object such as the surface of a spherical cell appears contracted and warped when only 2D information is available. In the 3D trajectory, it is easy to see that the molecule diffuses freely over the surface, while in the 2D projection, it is not even clear whether the molecule is surface-associated.

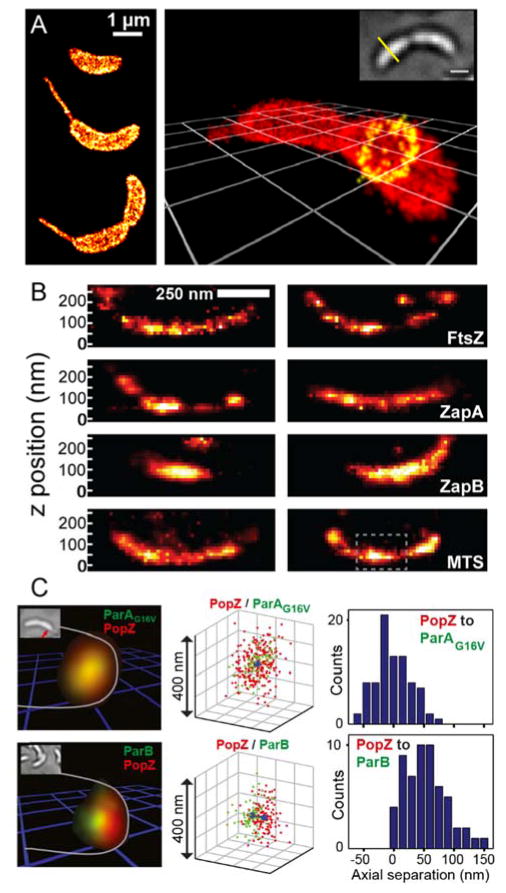

Figure 2.

Illustration of the 3D motion of a single molecule moving on the surface of a spherical cell, and its projection into 2D. The 3D trajectory shows that the molecule diffuses freely over the surface of the sphere, while the 2D projection is ambiguous.
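The contraction seen in the projection can be checked numerically. The following Python (NumPy) sketch, with hypothetical values for the sphere radius and diffusion coefficient, simulates a random walk constrained to a sphere (each 3D Gaussian step is projected onto the local tangent plane and the position is renormalized to the surface) and compares the mean squared step seen in 3D with the one seen in its x–y projection.

```python
import numpy as np

rng = np.random.default_rng(2)
R = 1.0        # sphere radius in um (hypothetical cell)
D = 0.2        # surface diffusion coefficient in um^2/s (hypothetical)
dt = 0.01      # s per frame
n_steps = 20000

pos = np.array([0.0, 0.0, R])
track = [pos]
for _ in range(n_steps):
    step = rng.normal(0.0, np.sqrt(2.0 * D * dt), 3)
    normal = pos / R
    step -= np.dot(step, normal) * normal    # keep only the component tangent to the surface
    pos = pos + step
    pos = R * pos / np.linalg.norm(pos)      # put the molecule back on the sphere
    track.append(pos)
track = np.array(track)

steps = np.diff(track, axis=0)
msd_3d = np.mean(np.sum(steps ** 2, axis=1))         # true squared step on the surface
msd_2d = np.mean(np.sum(steps[:, :2] ** 2, axis=1))  # what an x-y projection records
ratio = msd_2d / msd_3d
print(f"projected / true mean squared step: {ratio:.2f}")
```

For a molecule that samples the whole surface, the projected squared step is on average about two-thirds of the true one, so a purely 2D analysis would underestimate the surface diffusion coefficient; the loss is largest near the sphere's apparent edge, where much of the motion is along z.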

Precise and accurate single-molecule localization is the foundation for all quantitative SR and SPT studies, and we begin in Section 2 by introducing the key principles of single-molecule detection and position estimation for the general case of 2D localization. In Section 3, we catalog and compare many of the creative and elegant means by which these measurements have been extended into the third dimension. In Section 4, we treat the physical and theoretical limits of these techniques: the possible resolution that can be attained, the corrupting influence of aberrations and other systematic errors, and how these limits have been pushed. Finally, in Section 5, we show the power of 3D localization microscopy by examining several case studies where 3D SR and SPT have offered new insights.

2. Basic Principles of Single-Molecule Localization

Many of the experimental and analytical considerations in 3D single-molecule microscopy can be seen as an extension of those in “traditional” 2D single-molecule localization experiments. To introduce vocabulary and context that is shared between many 3D localization modalities, we first give a brief overview of instrumentation and statistical theory used to localize single molecules in two dimensions. We encourage readers new to localization microscopy to peruse reviews that specifically treat these topics in detail for further background (18, 66, 67).

2.1 Detecting Single Molecules

The most common imaging geometry used in 2D single-molecule wide-field imaging experiments is sketched in Figure 3A, and provides the basis for 3D single-molecule microscopes. In most details, this is equivalent to the epifluorescence microscopes used for diffraction-limited microscopy. Here, we briefly review the foundational instrumental principles true of both 2D and 3D before discussing the microscope modifications allowing 3D localization in later sections.

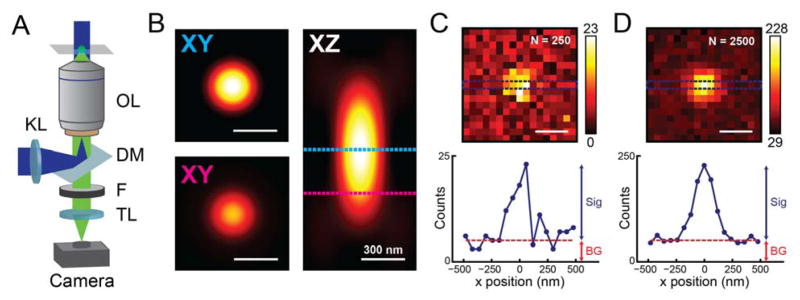

Figure 3.

Instrumentation and detection in a 2D single-molecule microscope. (A) The imaging geometry used in epifluorescence (inverted microscope shown). KL: Köhler lens; OL: objective lens; DM: dichroic mirror; F: filter(s); TL: tube lens; sample plane shown at the top. (B) Simulated x–y and x–z slices of the microscope PSF (λem = 600 nm, NA = 1.4) for scalar diffraction of an emitter near the coverslip(68) using the PSF Generator ImageJ plugin(69). Top x–y slice, in-focus PSF; bottom x–y slice, PSF at 300 nm defocus, same color scale. (C), (D) Simulated images of an in-focus single molecule formed on the camera under the same conditions, showing the effect of Poisson noise given 250 (2500) total signal photons collected and 5 (50) background photons per 60 nm (in sample plane) pixel. Counts in units of detected photons. The corresponding line profiles through the centers of the single-molecule images show the contributions to the image from signal and background. All scalebars are 300 nm.

In contrast to confocal microscopes, which scan a diffraction-limited focused laser spot across the sample, epifluorescence microscopes often produce a larger Gaussian illumination spot ranging from 1 to 100 μm in diameter in the object plane. In this way, all actively emitting fluorescent molecules in the sample can be detected simultaneously without scanning. To produce a broad illumination profile, the light source (typically a laser beam) is focused at the back focal plane of the microscope objective using a Köhler lens positioned externally to the inverted microscope body. The fluorescence from excited molecules is then collected back through the objective and imaged onto an array detector (i.e. a camera) with a separate tube lens. The objective lens is the most important detection optic in the system, and its most important parameter is numerical aperture, NA = n × sin(θ) with n the refractive index of the medium between the sample and objective and θ the objective’s maximum collection half-angle. The highest θ available from conventional objective lenses is roughly 72°, and by using high-index media such as immersion oil (n = 1.51), typical objectives used for single-molecule imaging achieve NA = 1.3–1.5.
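As a quick arithmetic check of the numbers quoted above, this Python snippet evaluates NA = n sin(θ) for the ~72° collection half-angle with oil immersion and, for comparison, in air.

```python
import math

def numerical_aperture(n, half_angle_deg):
    """NA = n * sin(theta), with theta the maximum collection half-angle."""
    return n * math.sin(math.radians(half_angle_deg))

na_oil = numerical_aperture(1.51, 72.0)   # immersion oil at the ~72 degree limit
na_air = numerical_aperture(1.00, 72.0)   # same half-angle, dry objective
print(f"oil: NA = {na_oil:.2f}; air: NA = {na_air:.2f}")
```

This prints NA = 1.44 for oil and 0.95 for air; since a dry objective can never exceed NA = 1, high-index immersion media are required to reach NA = 1.3–1.5.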

The objective NA is a major determinant of image quality. As discussed in the introduction, optical resolution is limited by blurring of image features finer than the diffraction limit. The lateral resolution of wide-field fluorescence microscopy is thus limited to 0.61 λ_em/NA, or at best ~200–250 nm for emission wavelengths λ_em in the visible range. Axial resolution depends even more strongly on NA, and is at best roughly equal to 2λ_em/NA² (~550–700 nm). Much of the behavior of the microscope is defined by the “point spread function” (PSF), which describes how light collected from a point emitter is transformed into an image. Mathematically, diffraction blur is equivalent to a convolution of the true labeled structure with the microscope’s three-dimensional PSF, shown in Figure 3B for an NA 1.4 objective and an emitter near the coverslip. This blur is directly responsible for the inability of conventional diffraction-limited microscopy to record fine details. The image formed on the camera by an ideal point emitter is a cross-section of the 3D PSF, dependent on the position of the emitter relative to the focal plane. The ~250 nm transverse width of the in-focus PSF is clearly apparent (Figure 3B, top x–y slice). By contrast, the PSF at 300 nm defocus is more diffuse and appears dimmer (bottom x–y slice). As can be seen in the x–z profile, within the scalar approximation, defocused emission profiles from above and below the focal plane are indistinguishable. As suggested by the resolution criteria above, higher-NA objectives provide more compact PSFs; they also improve total signal, as light collection efficiency scales with the square of the NA.
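The two resolution criteria above can be evaluated directly. This small Python sketch uses the 0.61 λ_em/NA lateral (Rayleigh) criterion and the approximate 2λ_em/NA² axial expression; the wavelengths and NA are chosen for illustration.

```python
def lateral_resolution_nm(wavelength_nm, na):
    """Rayleigh-type lateral resolution, 0.61 * lambda / NA."""
    return 0.61 * wavelength_nm / na

def axial_resolution_nm(wavelength_nm, na):
    """Approximate best-case axial resolution, 2 * lambda / NA**2."""
    return 2.0 * wavelength_nm / na ** 2

for wavelength in (500.0, 600.0, 700.0):   # illustrative visible emission wavelengths (nm)
    lat = lateral_resolution_nm(wavelength, 1.4)
    ax = axial_resolution_nm(wavelength, 1.4)
    print(f"{wavelength:.0f} nm: lateral {lat:.0f} nm, axial {ax:.0f} nm, ratio {ax / lat:.1f}")
```

The axial-to-lateral ratio is 2/(0.61 NA), about 2.3 for NA = 1.4 regardless of wavelength, which is one reason axial information is the hardest to recover.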

However, even the best collection optics and brightest fluorophores available will not be enough if the signal from single molecules is drowned out by background light, and filtering this background while keeping as many signal photons as possible is a well-known requirement for every single-molecule experiment (18, 70). Carefully selected spectral filters are absolutely essential to reduce background from autofluorescence and scattering, and it is common to image at relatively red wavelengths (500–700 nm) to avoid spectral regions of particularly high cellular autofluorescence. Yet when imaging samples such as thick cells, even optimal spectral filtering may not be sufficient, as autofluorescence, scattering, and fluorescence from out-of-focus fluorophores all increase with the illuminated sample volume. In these cases, it is necessary to consider alternatives to epi-illumination. One way to reduce background is excitation by total internal reflection (thus TIR fluorescence or TIRF), which makes use of the 100–200 nm thick evanescent field generated from the excitation beam at incidence angles greater than the critical angle of the interface between the coverslip and the sample.(71–73) However, while TIRF greatly reduces out-of-focus background fluorescence above the 100–200 nm illumination region, yielding excellent results for thin imaging volumes,(74–77) its limited axial reach away from the coverslip makes TIRF less useful for 3D imaging inside cells.
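For TIRF, the thickness of the evanescent excitation layer follows from standard optics: total internal reflection requires an incidence angle above the critical angle θ_c = arcsin(n2/n1), and the intensity then decays as exp(−z/d) with d = λ/(4π·sqrt(n1² sin²θ − n2²)). The Python sketch below evaluates both, assuming a glass coverslip (n1 = 1.518), an aqueous sample (n2 = 1.33), and 561 nm excitation; these values are illustrative assumptions.

```python
import math

def critical_angle_deg(n1, n2):
    """Critical angle for total internal reflection going from index n1 into n2 < n1."""
    return math.degrees(math.asin(n2 / n1))

def penetration_depth_nm(wavelength_nm, n1, n2, incidence_deg):
    """1/e decay depth of the evanescent intensity, lambda / (4 pi sqrt(n1^2 sin^2 theta - n2^2))."""
    s = n1 * math.sin(math.radians(incidence_deg))
    if s <= n2:
        raise ValueError("incidence angle is at or below the critical angle: no TIR")
    return wavelength_nm / (4.0 * math.pi * math.sqrt(s ** 2 - n2 ** 2))

n_glass, n_water, wavelength = 1.518, 1.33, 561.0   # illustrative assumptions
print(f"critical angle: {critical_angle_deg(n_glass, n_water):.1f} deg")
for angle in (63.0, 65.0, 70.0):
    print(f"{angle:.0f} deg: d = {penetration_depth_nm(wavelength, n_glass, n_water, angle):.0f} nm")
```

Angles a few degrees above the critical angle (about 61° here) give depths in the 100–200 nm range, and steeper angles give thinner layers.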

Detection is the last part of the imaging process, and the development of new, more sensitive cameras has been crucial to the progress of single-molecule microscopy. To maximize collected signal from the molecule, a single-molecule detector must have high quantum efficiency in the conversion of photons to photoelectrons. Further, all detectors introduce noise due to thermally generated current (“dark noise,” dependent on temperature and integration time) and noise from the electronics that convert the photocurrent of each pixel to a signal voltage (“read noise,” roughly constant for a given detector). For conventional detectors used in diffraction-limited fluorescence microscopy, such as Charge-Coupled Device (CCD) cameras, this added noise can overwhelm the small signal from single molecules and must be reduced. State-of-the-art cameras that address these needs include Electron-Multiplying CCD (EMCCD) and scientific Complementary Metal-Oxide-Semiconductor (sCMOS) cameras. EMCCDs have long achieved quantum efficiencies on the order of 95%, making them a common choice for single-molecule imaging, while sCMOS cameras have become increasingly popular in recent years thanks to rapid development in detector technology, and have recently reached quantum efficiencies of 95% as well (Photometrics Prime 95B; Tucson, AZ, USA). These cameras each overcome read noise in their own way, either by charge multiplication before readout (EMCCD) or by engineering extremely low read noise in the first place (sCMOS).

The contributions to the image formed on the camera from single-molecule fluorescence and background are summarized in Figure 3C,D. To design effective single-molecule experiments, whether in 2D or 3D, it is important to consider what determines the quality of such an image. Signal-to-background ratio (SBR), or contrast, is a common metric in many imaging modalities, but is less informative in single-molecule imaging. As the difference between Figure 3C and Figure 3D shows, even when the SBR is held constant, increasing the total detected photons (by increasing the integration time or illumination intensity) improves the signal-to-noise ratio given shot-noise statistics. That is, background is fundamentally a problem because it adds noise, not because it decreases contrast.
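The point that background matters through noise rather than contrast can be made with the shot-noise-limited SNR, S/√(S + B), for S signal photons and B background photons collected in the same region. In this Python sketch the photon counts are hypothetical and detector noise is ignored; both cases have the same SBR, yet scaling all counts by 10 raises the SNR by √10.

```python
import math

def shot_noise_snr(signal, background):
    """Poisson-limited SNR: mean signal divided by the std of (signal + background) counts."""
    return signal / math.sqrt(signal + background)

cases = [(250, 125), (2500, 1250)]   # hypothetical (S, B) photon counts, SBR = 2 in both
snrs = [shot_noise_snr(s, b) for s, b in cases]
for (s, b), snr in zip(cases, snrs):
    print(f"S = {s}, B = {b}: SBR = {s / b:.1f}, SNR = {snr:.1f}")
```

Detector read noise would add a further term inside the square root, which is why low-noise cameras matter most when the signal per pixel is small.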

While increasing the pumping irradiance can greatly improve the signal-to-noise ratio (SNR), there is an upper limit to the signal available from single molecules. On a fundamental photophysical level, the emission rate from the molecule might saturate while that from the background might not. In most cases, a more serious limit is photochemical stability, i.e. the maximum possible number of photons emitted by a single-molecule fluorophore before photobleaching. This quantity is often more important than brightness per se. For modern detectors and under most imaging conditions, these photon statistics are overwhelmingly the largest noise source, though the detector noise floor (i.e. read noise, dark noise, and other factors such as clock-induced charge in EMCCDs) becomes highly significant under certain conditions with low signal in each pixel.(78) With such noise statistics in mind, we now turn to the question of how exactly we can infer accurate and precise molecular positions from the images collected by our microscope.

2.2 Estimating Single-Molecule Position

After acquiring single-molecule data, the task remains to implement algorithms that faithfully extract molecule positions from noisy, complicated images. While this task poses challenges, the reward – nanoscale position estimates of potentially millions of individually resolved molecules within a sample – is a worthy one. As with instrumentation, many of the principles of image analysis in 2D and 3D single-molecule localization are analogous. Here, we describe how molecular positions are extracted from image data in 2D, and introduce a general framework for evaluating and comparing localization methods in 3D.

Most fitting algorithms used for SR and SPT assume spatiotemporal sparsity, such that regions of interest (ROIs) can be defined in each image frame that each contain the intensity pattern of only one single-molecule emitter. As discussed in Section 2.1, under ideal conditions, the average intensity produced by a point emitter is proportional to a cross-section of the microscope’s 3D PSF, scaled by the total signal photons N_sig, plus a constant background per unit area N_bg, giving us the imaging model

$$I(u,v;\theta) = H(\theta) = N_{\mathrm{sig}}\,\mathrm{PSF}(u,v;x,y,z) + N_{\mathrm{bg}} \tag{1}$$

defined for position (u, v) in the image plane when the emitter is at position (x, y, z) in the sample space, where θ represents these position and photon parameters, plus any other parameterizations of the PSF. The actual image from the detector is subject to noise and binned into pixels, producing a signal n_k at each of the pixels w_k within the ROI. Phrased this way, single-molecule localization is revealed to be an inverse problem: given the pixelated and noisy image vector n and our knowledge of H, how can we accurately and precisely estimate the molecule’s position x = (x, y, z)?
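The forward model of eq. (1) and the resulting noisy, pixelated image n_k can be sketched in Python (NumPy) for the in-focus 2D case. This is a minimal sketch under stated assumptions: the PSF is approximated by a symmetric Gaussian with σ_PSF = 90 nm (roughly 0.21 λ_em/NA for λ_em = 600 nm and NA = 1.4), it is integrated exactly over each 60 nm pixel with the error function, the background is a constant number of photons per pixel, and only Poisson (shot) noise is included. The helper name `expected_counts` is hypothetical.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)   # elementwise error function using only the standard library

def expected_counts(theta, n_pix=11, a=60.0, sigma_psf=90.0):
    """Expected photons mu_k per pixel for an in-focus emitter (hypothetical helper).

    theta = (x, y, n_sig, n_bg): emitter position in nm relative to the ROI center,
    total signal photons, and background photons per pixel. The PSF is a 2D
    Gaussian integrated exactly over each square pixel of side a (nm).
    """
    x, y, n_sig, n_bg = theta
    edges = (np.arange(n_pix + 1) - n_pix / 2) * a       # pixel boundaries in nm

    def pixel_fractions(center):
        cdf = 0.5 * (1.0 + _erf((edges - center) / (math.sqrt(2.0) * sigma_psf)))
        return np.diff(cdf)                              # PSF fraction landing in each pixel

    # Rows index v (y), columns index u (x); the 2D Gaussian separates into an outer product.
    return n_sig * np.outer(pixel_fractions(y), pixel_fractions(x)) + n_bg

rng = np.random.default_rng(3)
mu = expected_counts((27.0, 0.0, 250.0, 5.0))   # parameters of the Figure 4 example
image = rng.poisson(mu)                          # one noisy camera frame, n_k
print("expected total:", round(float(mu.sum()), 1), "detected:", int(image.sum()))
```

Each call to `rng.poisson(mu)` yields a new noisy frame from the same expected image, which is the situation any localization estimator has to cope with.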

Accuracy means that our chosen position estimator must not be systematically biased away from the true value, while precision means that the estimator makes effective use of all the available data to minimize random error. Estimators can be very simple, but not necessarily very accurate or precise. For example, since the 2D microscope PSF is brightest close to the molecule’s position, we could take the coordinate w_k of the brightest pixel (the pixel k with the largest count n_k) as a position estimate x̂, a “max-value” estimator. Or, applying the same logic slightly differently, we could use a weighted average of all the N pixels in the ROI,

$$\hat{\mathbf{x}} = \frac{\sum_{k=1}^{N} n_k\,\mathbf{w}_k}{\sum_{k=1}^{N} n_k} \tag{2}$$

i.e. a centroid estimator. Such estimators are computationally convenient, but limited: the max-value estimate is sensitive to noise and limited by pixel size, and so has low precision, while the centroid estimate is biased towards the center of the ROI by the background in outlying pixels, making it inaccurate (Figure 4).

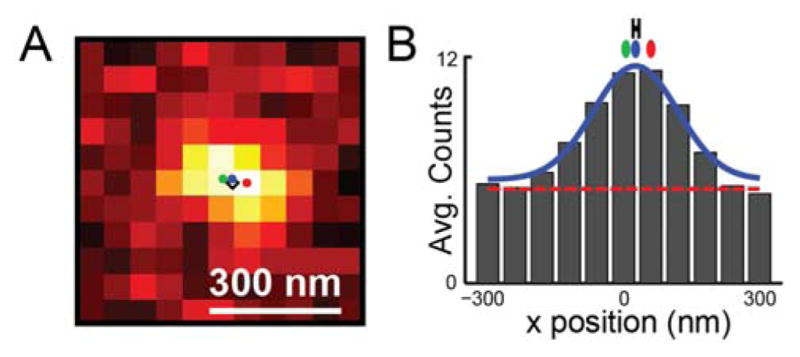

Figure 4.

Accurate localization requires unbiased estimators: an illustrative example. (A) One simulated image of a single molecule at position (x0 = 27 nm, y0 = 0 nm) with 250 signal photons and 5 background photons per pixel. Black diamond: true emitter position. Red, green, and blue dots: estimated position from max-value, centroid, and Gaussian fit estimators, respectively. (B) Image counts of (A) averaged along the x axis, with background level marked by the red dotted line, the Gaussian fit drawn in blue, and position estimates marked as in (A). Estimator accuracy and precision were tested for 10,000 simulated frames with the same parameters: Gaussian fitting accurately estimates the true molecular position (mean value biased by < 0.1 nm) with a localization precision of 10.0 nm, marked by the black error bar. For the same data, the max-value estimator has a precision of 34 nm and a bias of −0.9 nm, while the centroid estimator has a precision of 5.4 nm and a bias of −20 nm.
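The two simple estimators can be compared on simulated frames resembling those of Figure 4. The Python (NumPy) sketch below assumes a pixel-integrated Gaussian PSF (σ = 90 nm, 60 nm pixels), an 11 × 11 ROI, and Poisson noise only, with hypothetical helper names; it generates 2,000 frames of an emitter at x0 = 27 nm with 250 signal photons and 5 background photons per pixel and reports the bias and spread of each estimator along x. The exact numbers depend on the ROI size and random seed, so they need not match the figure.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)
A, N_PIX, SIGMA = 60.0, 11, 90.0                          # pixel size (nm), ROI width (pixels), PSF sigma (nm)
CENTERS = (np.arange(N_PIX) - (N_PIX - 1) / 2) * A        # pixel-center coordinates w_k (nm)
EDGES = np.append(CENTERS - A / 2, CENTERS[-1] + A / 2)   # pixel boundaries (nm)

def expected_counts(x, y, n_sig, n_bg):
    """Pixel-integrated Gaussian PSF plus flat background (rows: y, columns: x)."""
    def frac(center):
        return np.diff(0.5 * (1.0 + _erf((EDGES - center) / (math.sqrt(2.0) * SIGMA))))
    return n_sig * np.outer(frac(y), frac(x)) + n_bg

def max_value_x(img):
    """x coordinate of the brightest pixel (ties go to the first one found)."""
    _, col = np.unravel_index(np.argmax(img), img.shape)
    return CENTERS[col]

def centroid_x(img):
    """Intensity-weighted mean x over all pixels of the ROI, as in eq. (2)."""
    return np.sum(img * CENTERS[np.newaxis, :]) / np.sum(img)

rng = np.random.default_rng(4)
x0 = 27.0
mu = expected_counts(x0, 0.0, 250.0, 5.0)
frames = rng.poisson(mu, size=(2000,) + mu.shape)

est_max = np.array([max_value_x(f) for f in frames])
est_cen = np.array([centroid_x(f) for f in frames])
for name, est in (("max-value", est_max), ("centroid", est_cen)):
    print(f"{name:9s}: bias = {est.mean() - x0:+6.1f} nm, precision = {est.std():5.1f} nm")
```

The centroid lands well short of 27 nm because the uniform background in the outer pixels pulls it toward the ROI center, while the max-value estimate can only take pixel-center values and jumps between neighboring pixels as the noise changes.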

How can one do better? While it is possible to create effective, unbiased estimators that use only basic assumptions about the PSF, such as radial symmetry,(79) the most powerful estimators implement a more detailed PSF model to solve the inverse problem of eq. (1). Least-squares (LS) fitting and maximum likelihood estimation (MLE) are two such methods that are adaptable to many models.

LS and MLE fit the image data to a model function. As implied by eq. (1), the imaging model H(θ) produces an expected value of the image, I(u,v; θ), and the best guess of θ is found by optimizing θ so that I matches the observed PSF. While tracking of the positions of large biological objects has a long history,(29, 30) deep considerations of the fitting procedure are relatively recent. LS was one of the first relatively unbiased localization algorithms used,(80, 81) and is familiar from its ubiquitous application to scientific regression problems and curve fitting. The scoring method of LS is to minimize the squared error between the PSF model μ(θ) and the observed data n,

$$\hat{\theta} = \underset{\theta}{\arg\min} \sum_{k} \left[ n_k - \mu_k(\theta) \right]^2 \tag{3}$$

where x̂ is included in the optimized parameter estimate θ̂. This optimization strategy follows from the statistical assumption that each pixel value n_k is an observation with normally, independently and identically distributed noise, which is generally only approximately true.(82, 83)
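A minimal LS localization in the sense of eq. (3) can be written with SciPy's `scipy.optimize.least_squares`. As assumptions for this sketch, the model μ_k(θ) is a symmetric Gaussian PSF integrated over 60 nm pixels plus a flat background per pixel (the common approximation discussed below), θ = (x, y, N_sig, N_bg), and the data are simulated with Poisson noise using the parameters of Figure 4; the function names are hypothetical.

```python
import numpy as np
from scipy.optimize import least_squares
from scipy.special import erf

A, N_PIX, SIGMA = 60.0, 11, 90.0                    # pixel size (nm), ROI width (pixels), PSF sigma (nm)
EDGES = (np.arange(N_PIX + 1) - N_PIX / 2) * A      # pixel boundaries (nm), ROI centered on 0

def model(theta):
    """mu_k(theta): pixel-integrated Gaussian PSF plus flat background per pixel."""
    x, y, n_sig, n_bg = theta
    def frac(center):
        return np.diff(0.5 * (1.0 + erf((EDGES - center) / (np.sqrt(2.0) * SIGMA))))
    return n_sig * np.outer(frac(y), frac(x)) + n_bg

def ls_fit(img):
    """Minimize sum_k (n_k - mu_k(theta))^2, as in eq. (3), from a crude starting guess."""
    border = np.concatenate([img[0], img[-1], img[1:-1, 0], img[1:-1, -1]])
    bg0 = float(border.mean())                                # background guess from ROI edges
    theta0 = [0.0, 0.0, max(float(img.sum()) - bg0 * img.size, 1.0), bg0]
    result = least_squares(lambda th: (model(th) - img).ravel(), theta0)
    return result.x

rng = np.random.default_rng(5)
truth = (27.0, 0.0, 250.0, 5.0)                             # (x, y, N_sig, N_bg) as in Figure 4
mu = model(truth)
fits = np.array([ls_fit(rng.poisson(mu)) for _ in range(200)])
x_hat = fits[:, 0]
print(f"bias = {x_hat.mean() - truth[0]:+.1f} nm, precision = {x_hat.std():.1f} nm")
```

Repeating the fit over many simulated frames gives an empirical localization precision; for these parameters it should come out on the order of 10 nm with negligible bias, in contrast to the simple estimators.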

We must still define the imaging model H that produces the parametrized image I(u, v; θ). After more than a century of study, there is a sophisticated understanding of the standard microscope PSF, and this quantitatively describes the way light from a molecule is collected by the microscope.(26, 68, 84–86) When molecules emit light isotropically, without net polarization, the scalar approximation allows for a comprehensive treatment of the imaging system.(68) As shown in Figure 3, the image of an ideal, in-focus emitter at the coverslip is an Airy disk,

$$I(\rho) = C \left[ \frac{2 J_1(k\,\mathrm{NA}\,\rho)}{k\,\mathrm{NA}\,\rho} \right]^2 \tag{4}$$

with J_1 the first-order Bessel function of the first kind, k the wavenumber 2π/λ, ρ the distance from the point source in the image plane, i.e. ρ = √((u − x)² + (v − y)²), and C a constant for a given number of total detected photons. While the Airy disk has several relatively dim rings, most of the PSF’s intensity is concentrated in the center. For this reason, a Gaussian function is a tractable and reasonably accurate approximation, and it is extremely common to fit single-molecule data with a symmetric Gaussian plus a constant background, with the width σ set by the diffraction-limited spot size(82, 87):

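$$I(u,v;\theta) = A\exp\left[-\frac{(u-x)^2 + (v-y)^2}{2\sigma^2}\right] + B \qquad (5)$$

with amplitude A and constant background B.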
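To get a feel for how closely the Gaussian of eq. (5) tracks the Airy disk of eq. (4), the Python sketch below evaluates both radial profiles with their peaks normalized to 1 (C = 1 in eq. (4)). It assumes the common rule of thumb σ ≈ 0.21λ/NA for the Gaussian width and evaluates J1 from its power series rather than a library routine; the wavelength and NA are hypothetical example values.

```python
import math


def bessel_j1(x, terms=40):
    """First-order Bessel function of the first kind, summed from its power series."""
    total = 0.0
    for m in range(terms):
        total += (-1) ** m * (x / 2) ** (2 * m + 1) / (math.factorial(m) * math.factorial(m + 1))
    return total


def airy(rho, wavelength, na):
    """Eq. (4) with C = 1: normalized Airy profile at radial distance rho (same units as wavelength)."""
    arg = 2 * math.pi / wavelength * na * rho
    if arg == 0:
        return 1.0
    return (2 * bessel_j1(arg) / arg) ** 2


def gaussian_approx(rho, wavelength, na):
    """Peak-matched Gaussian, assuming the rule-of-thumb width sigma = 0.21 * wavelength / na."""
    sigma = 0.21 * wavelength / na
    return math.exp(-rho ** 2 / (2 * sigma ** 2))


if __name__ == "__main__":
    lam, na = 600.0, 1.4  # nm and numerical aperture (example values)
    for rho in (0, 50, 100, 150, 200, 300):
        print(f"rho = {rho:3d} nm   Airy = {airy(rho, lam, na):.3f}   Gaussian = {gaussian_approx(rho, lam, na):.3f}")
```

Near the center the two profiles agree to within a few percent of the peak; beyond the first Airy zero (k·NA·ρ ≈ 3.83) the Gaussian cannot reproduce the rings.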

As shown in Figure 4 for a simulation with Nsig = 250 photons and Nbg = 5 photons/pixel, performing nonlinear LS fitting with a Gaussian model retrieves a position estimate (x̂, ŷ) close to the true position, and avoids the bias of the simpler estimators. We must next ask how precise an individual localization is. To empirically test the random error in our measurement, we can either simulate or measure the spread of position estimates obtained from the same molecule emitting over many frames. As shown in Figure 4B, repeated least-squares localizations on simulated data give a range of estimates (x̂, ŷ) normally distributed around the true values, with standard deviation, or “localization precision,” σ ≈ 10 nm. The localization precision is dependent on the estimator: for example, max-value estimation is much less precise, resulting in a localization precision of 34 nm for the exact same data. A well-known analytical expression was developed for the localization precision of LS Gaussian fitting by Thompson et al., with later corrections by Mortensen et al.:(85, 88)

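$$\sigma_x^2 = \frac{\sigma_a^2}{N_{\mathrm{sig}}}\left(\frac{16}{9} + \frac{8\pi\sigma_a^2 N_{\mathrm{bg}}}{N_{\mathrm{sig}}\,a^2}\right), \qquad \sigma_a^2 = \sigma_{\mathrm{PSF}}^2 + \frac{a^2}{12} \qquad (6)$$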

This equation assumes the PSF is a Gaussian with standard deviation σPSF detected with square pixels of side length a, and gives the correct result within a few percent even if the true PSF is an Airy disk (such as simulated in Figure 4). For low background and ignoring pixelation, eq. (6) reduces to the approximate relationship

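$$\sigma_x \approx \frac{4}{3}\,\frac{\sigma_{\mathrm{PSF}}}{\sqrt{N_{\mathrm{sig}}}} \propto \frac{1}{\sqrt{N_{\mathrm{sig}}}} \qquad (7)$$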

highlighting the importance of high photon counts. Eq. (6) has been widely applied to Gaussian LS estimation, but it only holds for the special case of aberration-free, in-focus, 2D PSFs, and does not reveal whether it might be possible to achieve a better precision with a different estimator. Maximum-likelihood-estimation methods address the question of how to define, and achieve, the best possible precision for an arbitrary 3D PSF. MLE is rooted in a statistical framework that attempts to estimate a set of underlying parameters yielding a given measurement, accounting for the signal generating model (image formation) and noise statistics. Given image formation and noise models, one attempts to find the set of underlying parameters θ that maximizes the likelihood function, or, equivalently, the log-likelihood.(89, 90) Assuming shot (Poisson) noise dominates, the log-likelihood function is(82, 91)

$$\ln L(\theta) = \sum_{k} \left[n_k \ln \mu_k(\theta) - \mu_k(\theta)\right] \qquad (8)$$

where we drop terms not dependent on θ for clarity. Equivalently, MLE means seeking the set of parameters for which the measurement is most probable.
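The repeated-localization experiment behind Figure 4B can be mimicked in a few dozen lines of Python. The sketch below is a simplified stand-in, not the simulation used for the figure: the spot follows eq. (5) sampled at pixel centers (unit pixels, σ = 1 pixel, amplitude and background assumed known), Poisson noise is drawn with Knuth's method, and only (x, y) are fitted. With `weighted=False` the Gauss-Newton step minimizes the LS cost of eq. (3); with `weighted=True` each pixel is weighted by 1/μ_k, which turns the same update into Fisher scoring for the Poisson log-likelihood of eq. (8), i.e. an MLE. All function names and parameter values are illustrative.

```python
import math
import random
import statistics


def poisson(lam, rng):
    """Poisson draw by Knuth's multiplication method (adequate for small means)."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def spot(x, y, size, sigma, n_sig, n_bg):
    """Expected counts: eq. (5) sampled at unit-pixel centres, amplitude n_sig / (2 pi sigma^2)."""
    amp = n_sig / (2 * math.pi * sigma ** 2)
    return [[amp * math.exp(-((u - x) ** 2 + (v - y) ** 2) / (2 * sigma ** 2)) + n_bg
             for u in range(size)] for v in range(size)]


def fit_xy(img, sigma, n_sig, n_bg, weighted=False, iters=30):
    """Fit (x, y) with amplitude and background fixed.

    weighted=False: Gauss-Newton on the LS cost of eq. (3).
    weighted=True:  Fisher scoring (weights 1/mu_k), which maximizes eq. (8).
    """
    size = len(img)
    amp = n_sig / (2 * math.pi * sigma ** 2)
    w = [[max(img[v][u] - n_bg, 0.0) for u in range(size)] for v in range(size)]
    tot = sum(map(sum, w)) or 1.0
    x = sum(u * w[v][u] for v in range(size) for u in range(size)) / tot  # centroid start
    y = sum(v * w[v][u] for v in range(size) for u in range(size)) / tot
    for _ in range(iters):
        a = b = d = gx = gy = 0.0
        for v in range(size):
            for u in range(size):
                g = amp * math.exp(-((u - x) ** 2 + (v - y) ** 2) / (2 * sigma ** 2))
                r = img[v][u] - (g + n_bg)                 # residual n_k - mu_k
                wt = 1.0 / (g + n_bg) if weighted else 1.0
                jx, jy = g * (u - x) / sigma ** 2, g * (v - y) / sigma ** 2
                a += wt * jx * jx
                b += wt * jx * jy
                d += wt * jy * jy
                gx += wt * jx * r
                gy += wt * jy * r
        det = a * d - b * b
        dx, dy = (d * gx - b * gy) / det, (a * gy - b * gx) / det
        x += max(-1.0, min(1.0, dx))                        # clamp steps for robustness
        y += max(-1.0, min(1.0, dy))
        if abs(dx) < 1e-6 and abs(dy) < 1e-6:
            break
    return x, y


def mortensen_sigma(sigma_psf, a, n_sig, n_bg):
    """Eq. (6): predicted LS precision for a Gaussian PSF and pixel size a."""
    sa2 = sigma_psf ** 2 + a ** 2 / 12
    return math.sqrt(sa2 / n_sig * (16 / 9 + 8 * math.pi * sa2 * n_bg / (n_sig * a ** 2)))


def repeated_localizations(trials=300, size=11, sigma=1.0, n_sig=250, n_bg=5, seed=1):
    """Localize many noisy copies of one spot; return {method: (mean x, spread of x)}."""
    rng = random.Random(seed)
    expected = spot(5.3, 4.8, size, sigma, n_sig, n_bg)
    xs = {"LS": [], "MLE": []}
    for _ in range(trials):
        img = [[poisson(m, rng) for m in row] for row in expected]
        xs["LS"].append(fit_xy(img, sigma, n_sig, n_bg)[0])
        xs["MLE"].append(fit_xy(img, sigma, n_sig, n_bg, weighted=True)[0])
    return {k: (statistics.mean(v), statistics.stdev(v)) for k, v in xs.items()}


if __name__ == "__main__":
    for method, (mean_x, spread) in repeated_localizations().items():
        print(f"{method}: mean x = {mean_x:.3f} px (true 5.3), spread = {spread:.3f} px")
    print(f"eq. (6) prediction: {mortensen_sigma(1.0, 1.0, 250, 5):.3f} px")
```

Both spreads should come out on the order of 0.1 pixel, comparable to the eq. (6) value; because this toy holds amplitude and background fixed, it is not expected to reproduce eq. (6) exactly.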

The preceding has discussed several estimators for determining molecule position. This leaves the question of how to define the best possible precision one can achieve. This is of interest both for practical reasons, such as estimating the resolution of an image or designing a better imaging system, and for fundamental theoretical reasons. A useful and elegant framework for the quantification of localization precision stems from recognizing that emitter localization is a parameter estimation problem. As such, tools from estimation theory can be used to analyze it. Specifically, Fisher information is a mathematical measure of the sensitivity of an observable quantity (image) to changes in its underlying parameters (e.g. emitter position).(91–93) Intuitively, an image that is more sensitive to the emitter’s position contains more information about the position. Mathematically, Fisher information is given by:

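$$\mathcal{I}(\theta) = E\left[\left(\frac{\partial}{\partial\theta}\ln f(s;\theta)\right)\left(\frac{\partial}{\partial\theta}\ln f(s;\theta)\right)^{T}\right] \qquad (9)$$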

where f(s; θ) is the probability density, i.e. the probability of measuring a signal s given the underlying parameter vector θ, T denotes the transpose operation, and E denotes expectation over all possible values of s. In the special case where Poisson noise is dominant and there is spatially uniform background, eq. (9) becomes:

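$$\mathcal{I}_{ij}(\theta) = \sum_{k} \frac{1}{\mu(k) + \beta}\,\frac{\partial \mu(k)}{\partial \theta_i}\,\frac{\partial \mu(k)}{\partial \theta_j} \qquad (10)$$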

where μ(k) is the model of the PSF in pixel k and β is the number of background photons per pixel. More sophisticated models can be used that account for the noise characteristics of EMCCD(94) and sCMOS detectors,(95) and calibration of the pixel-to-pixel variation of sCMOS detectors is necessary for accurate localization.

The use of Fisher information in localization analysis is motivated by its relation to precision. The inverse of the Fisher information is the Cramér-Rao lower bound (CRLB), which is the lower bound on the variance with which the set of parameters θ can be estimated using any unbiased estimator.(96) For the position coordinates (x,y,z), this translates to the optimal precision with which the source can be localized for a given imaging modality, signal, background, and noise model. The CRLB is not a quantity of merely theoretical importance: it has been shown that the CRLB can be approached in practice.(82, 85, 97) As long as the imaging model matches the experiment, MLE is generally more precise than LS methods and approaches the CRLB. However, LS estimators also have merits: they do not require a comprehensive noise model, are simpler to implement, and can achieve precisions close to the CRLB at high background levels, where noise statistics are similar between pixels.(82, 83) As we discuss further in Section 3, CRLB comparisons allow us to systematically compare 3D localization methods, and are important in the design of new PSFs for 3D imaging.
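Eq. (10) is easy to evaluate numerically for the symmetric Gaussian model of eq. (5). The Python sketch below (hypothetical helpers, assuming unit pixels sampled at their centers and an emitter centered on a pixel) builds the 2×2 Fisher matrix for (x, y), inverts it to obtain the CRLB on x, and compares that bound with the LS variance predicted by eq. (6).

```python
import math


def fisher_xy(sigma, n_sig, beta, size=21):
    """Eq. (10) for theta = (x, y) of the Gaussian of eq. (5), emitter at the grid centre."""
    c = (size - 1) / 2
    amp = n_sig / (2 * math.pi * sigma ** 2)
    fxx = fxy = fyy = 0.0
    for v in range(size):
        for u in range(size):
            mu = amp * math.exp(-((u - c) ** 2 + (v - c) ** 2) / (2 * sigma ** 2))
            dmx, dmy = mu * (u - c) / sigma ** 2, mu * (v - c) / sigma ** 2
            fxx += dmx * dmx / (mu + beta)
            fxy += dmx * dmy / (mu + beta)
            fyy += dmy * dmy / (mu + beta)
    return fxx, fxy, fyy


def crlb_x(sigma, n_sig, beta):
    """Variance bound on x: the (x, x) element of the inverse 2x2 Fisher matrix."""
    fxx, fxy, fyy = fisher_xy(sigma, n_sig, beta)
    return fyy / (fxx * fyy - fxy ** 2)


def ls_variance_eq6(sigma, n_sig, beta, a=1.0):
    """Eq. (6) (as a variance), with beta background photons per pixel of size a."""
    sa2 = sigma ** 2 + a ** 2 / 12
    return sa2 / n_sig * (16 / 9 + 8 * math.pi * sa2 * beta / (n_sig * a ** 2))


if __name__ == "__main__":
    for beta in (0.01, 1.0, 10.0, 100.0, 1e4):
        ratio = ls_variance_eq6(1.5, 1000, beta) / crlb_x(1.5, 1000, beta)
        print(f"background {beta:>8} photons/px: LS variance / CRLB = {ratio:.2f}")
```

In this toy setting the LS-to-CRLB variance ratio is roughly 1.8 when background is negligible and drops toward 1 when background dominates, consistent with LS approaching the CRLB at high background.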

3. Methods for 3D Localization

The 3D PSF shape of a standard fluorescence microscope changes relatively slowly with changing axial position relative to the focal plane (Figure 3). This corresponds to low Fisher information, or poor precision for determining z (see Figures 5 and 8 for CRLB comparisons of several PSFs including the standard PSF). Further, in the absence of aberrations, the PSF is symmetric above and below the focal plane, making it difficult to assign a unique z position to the emitter. While some tracking experiments have achieved nanometer axial precision with the standard PSF, these required extremely bright nanoparticle emitters and working away from focus to remove ambiguity.(98, 99) In most cases, the low photon budget of probes used in single-molecule microscopy severely limits axial localization precision with a conventional fluorescence microscope, especially over extended axial ranges.
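The lack of axial information near focus can be made concrete with a deliberately simple model. The Python sketch below assumes, hypothetically, that the standard PSF is a Gaussian whose width grows as σ(z) = σ0√(1 + (z/d)²) away from focus, which is symmetric in z, and evaluates eq. (10) for the single parameter z. Because dσ/dz vanishes at z = 0, the Fisher information is exactly zero there and the CRLB diverges; σ0, d, photon counts, and background are illustrative values, not those of any figure.

```python
import math


def width(z, sigma0=1.0, depth=400.0):
    """Toy defocus model (hypothetical): Gaussian width in pixels vs. defocus z in nm."""
    return sigma0 * math.sqrt(1 + (z / depth) ** 2)


def fisher_z(z, n_sig=2000.0, beta=10.0, size=25, sigma0=1.0, depth=400.0):
    """Eq. (10) restricted to the single parameter z, for an emitter centred on the grid."""
    s = width(z, sigma0, depth)
    ds_dz = sigma0 ** 2 * z / (depth ** 2 * s)          # exactly zero at focus
    c = (size - 1) / 2
    info = 0.0
    for v in range(size):
        for u in range(size):
            r2 = (u - c) ** 2 + (v - c) ** 2
            g = n_sig / (2 * math.pi * s ** 2) * math.exp(-r2 / (2 * s ** 2))
            dmu_dz = g * (r2 / s ** 3 - 2 / s) * ds_dz   # chain rule through the width
            info += dmu_dz ** 2 / (g + beta)
    return info


if __name__ == "__main__":
    for z in (0.0, 50.0, 150.0, 300.0, 600.0):
        info = fisher_z(z)
        bound = math.sqrt(1 / info) if info > 0 else math.inf
        print(f"z = {z:5.0f} nm   sqrt(CRLB_z) = {bound:.1f} nm")
```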

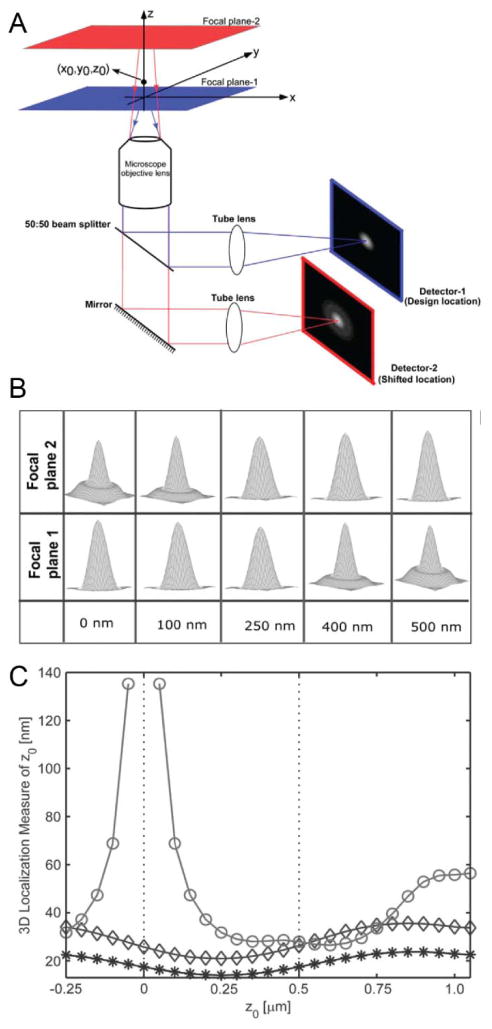

Figure 5.

Multifocal microscopy with a biplane setup. (A) The fluorescence from a point emitter at position (x0,y0,z0) is collected by the objective and directed to two different detectors using a 50:50 beamsplitter. The focal plane detected by the second detector is shifted by changing the separation between the detector and the tube lens. (B) Simulated PSFs of each channel as a function of emitter axial position relative to focal plane 1. The PSF changes relatively slowly within ~250 nm of focus. (C) The CRLB for axial localization for a conventional microscope (○) and biplane microscope with 500 nm plane separation (◇) with 2000 total detected photons and 160 background photons per ~160 nm pixel (80 photons per pixel for biplane detection). NA 1.45, λem = 655 nm, 6 e−/pixel read noise. Doubling the acquisition time (*) improves the axial CRLB for biplane localization by a factor of approximately √2. Reprinted from ref. (103), Copyright 2008, with permission from the Biophysical Society.

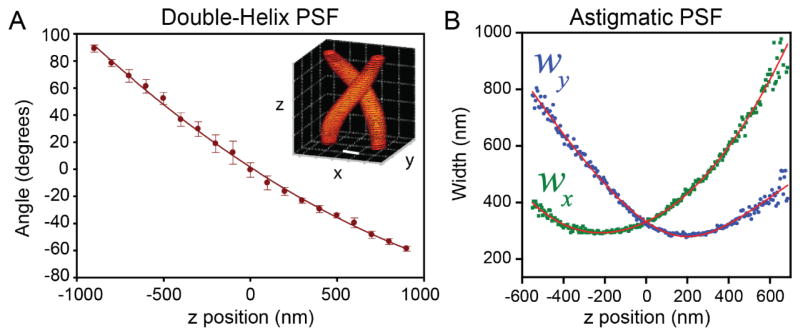

Figure 7.

Calibration curves associated with the double-helix PSF and the astigmatic PSF. (A) The angle of the line between two revolving DH-PSF lobes changes monotonically as a function of z position. Inset: intensity profile of the DH-PSF. Scale bar and grid spacing: 400 nm. Adapted from ref. (126). Copyright 2009 the authors. (B) The x and y widths wx, wy, of asymmetric Gaussian fits to the astigmatic PSF change with z position in a well-defined manner. Adapted from ref. (123). Reprinted with permission from AAAS.

Several microscope modifications have emerged that obtain high Fisher information for both axial and transverse localization, allowing precise 3D imaging for a wide range of conditions. These modifications can be classified based on the optical principle they employ: these include multifocus methods, where multiple focal planes are imaged simultaneously; PSF engineering methods, where the PSF of the microscope is altered to encode axial position in its shape; and interference-, interface-, or intensity-sensing methods, which measure the position of the molecule relative to features in the imaging geometry instead of fitting the shape of the PSF. All of these methods are compatible with a wide range of emitting labels, and are sometimes creatively combined to take advantage of the strengths of multiple approaches.

3.1 Multifocus Methods to Extend the Standard PSF

As summarized above, the standard PSF has clear limitations for 3D imaging. These limitations follow from the need to estimate molecule position from a single 2D slice of the PSF; if we were to measure the entire 3D PSF, it would be possible to fit the axial position just as we fit the lateral position. Such volumetric imaging is common in confocal microscopy, where scanning the focal spot to acquire a stack of z-slices gives axial sectioning at diffraction-limited resolution, even though the larger extent of the PSF in the axial direction reduces attainable precision. Since single-molecule imaging is inherently dynamic due to e.g. the movement of molecules in a tracking experiment or the blinking process in a super-resolution experiment, scanning through a focal stack is an unattractive approach.

Widefield multifocal imaging employs a microscope modification that avoids scanning and makes multiple simultaneous measurements of the 3D PSF at different focal planes. The simplest implementation of multifocal imaging, also known as biplane or bifocal imaging, splits fluorescence equally into two channels that are imaged either onto two cameras(100) or onto different regions of the same camera,(99, 101) where shifting the camera placement (or adding another lens) in one channel causes different focal planes to be detected in each channel (Figure 5A). The PSFs with focal planes at 0 nm (channel 1) and 500 nm (channel 2) are shown in Figure 5B. Near focus, each PSF is bright and changes slowly as a function of z position (good lateral precision, poor axial precision), and away from focus, each PSF is spread out but changes shape more quickly (poor lateral precision, better axial precision). When imaged together, the two channels complement each other, allowing good lateral and axial localization precision over a large axial range, as well as removing ambiguity about which side of the focal plane the emitter is on. This is described quantitatively by CRLB analysis: biplane imaging gives significantly more precise axial localization than single-plane imaging, and has an axial range of ~1.5 μm (Figure 5C). (The best choice of spacing distance between focal planes for a given application has been investigated using CRLB analysis.(102)) Biplane data are usually fit following the approach of eq. (1), where a model of how the PSF of each channel changes as a function of the 3D emitter position (x0, y0, z0) is used to simultaneously fit both channels. The fitting task can be defined by LS or MLE with either theoretically derived or empirically measured 3D PSFs.(103, 104)
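Extending a simple toy model to two channels illustrates why biplane detection helps. The Python sketch below assumes, hypothetically, that each channel's PSF is a Gaussian whose width grows as σ0√(1 + (Δz/d)²) with defocus Δz from that channel's focal plane; signal and background are split 50:50, the second focal plane is offset by 500 nm, and because the channels are independent their Fisher information (eq. (10)) simply adds. At z = 0, where the single-plane information vanishes, the defocused second channel still contributes, so the axial CRLB stays finite. Function names and parameters are illustrative.

```python
import math


def channel_fisher_z(z, focus, n_sig, beta, sigma0=1.0, depth=400.0, size=25):
    """Eq. (10) for z in one channel focused at `focus` (nm); toy Gaussian-defocus PSF."""
    dz = z - focus
    s = sigma0 * math.sqrt(1 + (dz / depth) ** 2)       # width in pixels
    ds_dz = sigma0 ** 2 * dz / (depth ** 2 * s)
    c = (size - 1) / 2
    info = 0.0
    for v in range(size):
        for u in range(size):
            r2 = (u - c) ** 2 + (v - c) ** 2
            g = n_sig / (2 * math.pi * s ** 2) * math.exp(-r2 / (2 * s ** 2))
            info += (g * (r2 / s ** 3 - 2 / s) * ds_dz) ** 2 / (g + beta)
    return info


def crlb_z(info):
    """Square root of the CRLB; infinite when the image carries no z information."""
    return math.sqrt(1 / info) if info > 0 else math.inf


def single_plane(z, n_sig=2000.0, beta=20.0):
    return crlb_z(channel_fisher_z(z, 0.0, n_sig, beta))


def biplane(z, n_sig=2000.0, beta=20.0, separation=500.0):
    """50:50 split of signal and background; independent channels, so information adds."""
    return crlb_z(channel_fisher_z(z, 0.0, n_sig / 2, beta / 2)
                  + channel_fisher_z(z, separation, n_sig / 2, beta / 2))


if __name__ == "__main__":
    for z in (-250.0, 0.0, 250.0, 500.0, 750.0):
        print(f"z = {z:6.0f} nm   single plane: {single_plane(z):7.1f} nm   biplane: {biplane(z):7.1f} nm")
```

In this model, Fisher information scales linearly with photon and background counts, so at the midpoint between the two planes the biplane value equals the single-plane value; the benefit lies in covering axial positions where a single plane is nearly blind.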

Multifocal/biplane imaging has been applied to several systems, including single-particle tracking using quantum dots(103, 105) and super-resolution imaging with synthetic dyes and fluorescent proteins(101, 106, 107) in cells. By adding additional planes,(103, 105, 108) it is possible to capture a larger depth of field at the cost of either additional cameras or lateral field of view on a single camera. For example, multifocal imaging with four planes has yielded 3D trajectories of quantum dots in cells with an axial range up to 10 μm.(105)

For high-NA optics, refocusing by displacing the camera is not exactly equivalent to displacing the emitter position within the sample, and can introduce spherical aberration for larger displacements.(109) Aberration-free refocusing can be realized in multifocal microscopy by the application of diffractive optics analogous to those used in PSF engineering (Section 3.2).(108, 110–112) By placing a custom-fabricated optical element into the detection path, the image is diffracted into many orders, each of which has a different phase term that closely mimics the effect of axial displacement within the sample, avoiding aberrations. Such an optical element effectively replaces the functions of the beamsplitter and camera displacement shown in Figure 5. While diffractive optics may incur some loss of photons, this approach has achieved up to ~84% diffraction efficiency for the case of nine planes ranging over 4 μm (113) and is compatible with single-molecule tracking(111) and super-resolution imaging with small molecules.(112) A drawback of multifocal methods that span a large axial range is inefficient use of signal, especially at the extremes of the focal stack, where much of the signal is split into planes that are too out of focus to provide useful information. To improve the 3D precision attainable from each plane, multifocal imaging has been combined with astigmatism (see section 3.2),(114, 115) and we anticipate the possibility of further interesting modifications to multifocus techniques.

Another set of microscope designs related to multifocal imaging achieve high axial resolution by viewing the emitter from multiple angles. One such approach, termed “Parallax”,(116) splits the angular distribution of detected light into two channels by placing a mirror into a plane conjugate to the back focal plane. The axial position is measured from the relative displacement between the PSF in each channel, while the lateral position is read out from the average position. This method, and an earlier version using a prism to split the light, have been applied to the 3D single-molecule tracking of motor proteins.(117, 118) Alternatively, a virtual side view of the sample can be created by mounting the sample onto a fabricated micromirror sitting at a roughly 45° angle to the optical axis. The PSFs in this virtual image can be fit to directly extract axial position. While it complicates sample preparation, this approach has been successfully demonstrated for particle tracking and for super-resolution imaging.(119, 120)

3.2 Accessing the Third Dimension via PSF Engineering

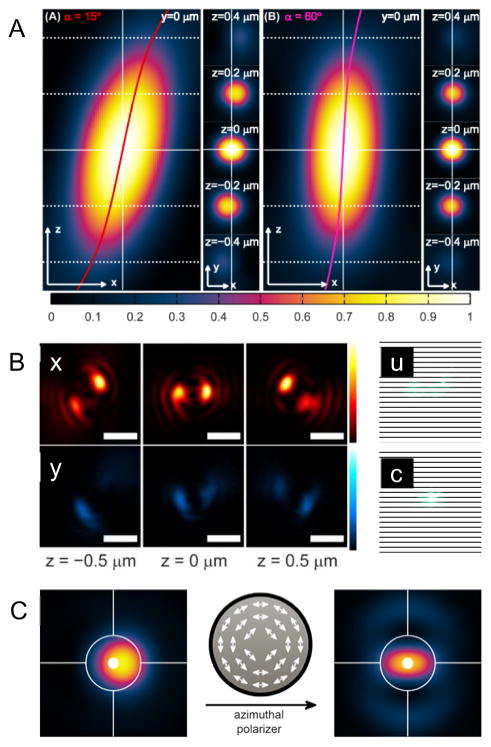

The limitations of the standard PSF make it difficult to extract emitter z position from a single image, but this need not be true for all microscope PSFs. An alternate set of methods attain precise axial localization of a point source by encoding the emitter’s depth in the shape of the PSF. This can be achieved with simple phase aberrations, such as by inserting a weak cylindrical lens before the tube lens to induce astigmatism, where the eccentricity of the PSF changes as a function of axial position (Figure 6A).(121–123) A more general-purpose way to induce phase aberrations is to place a programmable phase-modulator in a plane conjugate to the objective back focal plane, i.e. the Fourier plane of the microscope (Figure 6). (While one could modulate amplitude in addition to phase using absorptive optics, this would cause the loss of precious photons.) Performing phase modulation in this specific geometry provides a useful mathematical framework for synthesizing new PSFs, and is necessary in order to impart the same modulation throughout the field of view.(124) The relation between the electromagnetic fields in the back focal plane and the image plane is given by

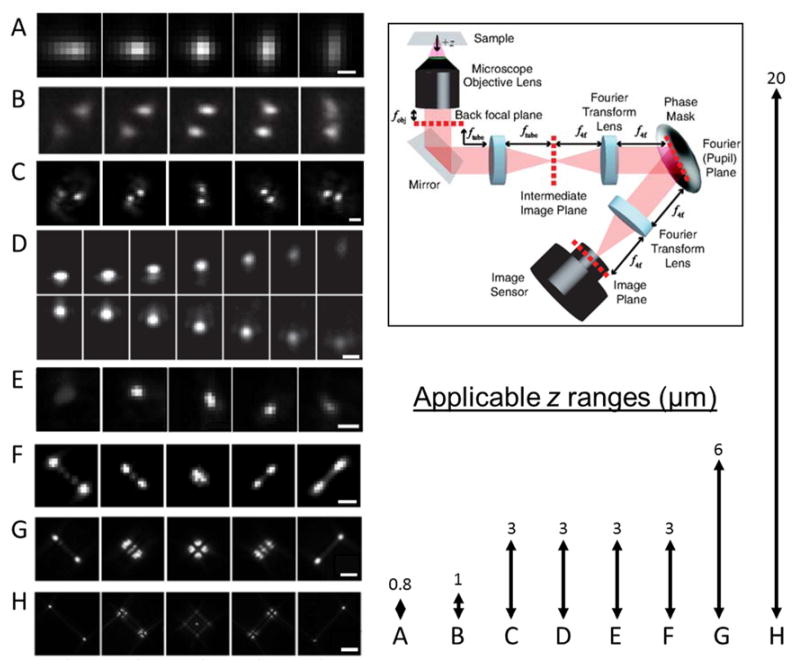

Figure 6.

Various experimentally measured PSFs for 3D localization microscopy shown as a function of axial position of the emitter (ranges shown in lower right). Adapted from ref. (134). (A) Astigmatism. Scale bar ≈ 0.5 μm. From ref. (123). Reprinted with permission from AAAS. (B) Phase-ramp. Ref. (128) Copyright Tsinghua University Press and Springer-Verlag Berlin Heidelberg 2011, with permission of Springer. (C) Double-Helix. Scale bar = 2 μm. Adapted from ref. (126). Copyright 2009 the authors. (D) Accelerating beam (split onto two camera regions). Scale bar = 1 μm. Reprinted with permission from Macmillan Publishers Ltd: Nature Photonics ref. (129), copyright 2014. (E) Corkscrew. Scale bar = 1 μm. Adapted from ref. (127). Copyright 2011 the authors. (F) A 3 μm range Tetrapod (also known as the saddle-point PSF). Scale bar = 1 μm. Reprinted figure with permission from ref. (130). Copyright 2014 by the American Physical Society. (G), (H) 6 and 20 μm range Tetrapods. Scale bars = 2 μm and 5 μm, respectively. Reprinted with permission from ref. (131). Copyright 2015 American Chemical Society. The arrows (right) represent the z-ranges over which the PSFs on the left were imaged, which correspond to their applicable depth ranges. Top right: experimental setup for PSF engineering based on back focal plane modulation. Reprinted figure with permission from ref. (130). Copyright 2014 by the American Physical Society.

$$I(u,v) = \left|\mathcal{F}\left\{E(x',y')\,P(x',y')\right\}\right|^2 \qquad (11)$$

where E(x′,y′) is the electric field in the back focal plane caused by a point source at (x,y,z), the 3D position relative to the focal plane and the optical axis. The function P(x′,y′) is a phase pattern imposed by optics in the Fourier plane. We denote by ℱ the 2D Fourier transform. To broadly summarize the implications of eq. (11) for PSF engineering, an axial (z) displacement of the emitter from the focal plane is equivalent to curvature in the phase of E(x′,y′), and the phase pattern P(x′,y′) changes how this manifests in the image I(u,v).
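Eq. (11) can be demonstrated with a small discrete Fourier transform. The Python sketch below uses a naive separable DFT on a 32 × 32 grid (rather than a library FFT), a uniform circular pupil standing in for E(x′,y′), and two example phase patterns P: a constant, which gives a centered spot, and a linear phase ramp across the pupil, which translates the spot in the image plane. The grid size, pupil radius, and helper names are assumptions for illustration; indices are not shifted, so the image origin sits at (0, 0).

```python
import cmath
import math


def dft2(field):
    """Naive separable 2D DFT (along x within each row, then along y); fine for small grids."""
    n = len(field)
    w = [[cmath.exp(-2j * math.pi * p * q / n) for q in range(n)] for p in range(n)]
    rows = [[sum(w[p][q] * row[q] for q in range(n)) for p in range(n)] for row in field]
    return [[sum(w[p][q] * rows[q][c] for q in range(n)) for c in range(n)] for p in range(n)]


def image(phase, n=32, radius=8):
    """Eq. (11): |F{E * P}|^2 for a uniform circular pupil E and P = exp(i * phase(x', y'))."""
    c = n / 2
    field = [[cmath.exp(1j * phase(xp, yp)) if (xp - c) ** 2 + (yp - c) ** 2 <= radius ** 2 else 0j
              for xp in range(n)] for yp in range(n)]
    return [[abs(val) ** 2 for val in row] for row in dft2(field)]


def peak(img):
    """(row, column) of the brightest pixel; index (0, 0) is the unshifted image origin."""
    n = len(img)
    return max(((i, j) for i in range(n) for j in range(n)), key=lambda ij: img[ij[0]][ij[1]])


if __name__ == "__main__":
    flat = image(lambda xp, yp: 0.0)                            # P constant: in-focus spot
    tilted = image(lambda xp, yp: 2 * math.pi * 3 * xp / 32)    # linear phase ramp along x'
    print("constant P ->", peak(flat), "  ramped P ->", peak(tilted))
```

A ramp of three cycles across the 32-sample grid moves the intensity peak by three image pixels along x, the Fourier shift theorem at work.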

The z-dependent curvature term of the electromagnetic field at the back focal plane can be approximated by

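$$E(\rho') \propto \exp\left[ik\left(n_2 z_1\sqrt{1-\left(\frac{\mathrm{NA}\,\rho'}{n_2}\right)^2} - n_1 z_2\sqrt{1-\left(\frac{\mathrm{NA}\,\rho'}{n_1}\right)^2}\right)\right] \qquad (12)$$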

derived from the scalar approximation in the Gibson-Lanni model.(68) This model assumes a two-layer experimental system consisting of a sample of refractive index n2 (e.g. water), and glass/immersion oil which have matched refractive index of n1. The distance between the emitter and the interface separating layer 1 and layer 2 (namely, the distance from the surface of the glass coverslip) is given by z1, and the distance between the microscope focal plane and the interface is denoted by z2, with k the wavenumber 2π/λ, and (ρ′, ϕ′) denoting normalized polar pupil-plane coordinates (the curvature term depends only on the radial coordinate ρ′). Phase curvature is added to the phase pattern P(x′,y′), and the measured PSF I(u,v) reflects both P(x′,y′) and z.(86) In the absence of a phase mask (i.e., if P(x′,y′) is a constant), z displacements appear as defocus blur. The goal of PSF engineering is to choose a pattern P(x′,y′) that makes the amount of phase curvature, and thus z position, easier to detect from the image.
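The defocus factor can be evaluated directly. The Python sketch below assumes the phase ψ = k[n2 z1√(1 − (NA·ρ′/n2)²) − n1 z2√(1 − (NA·ρ′/n1)²)], a sign convention in which the phase vanishes for an emitter in the focal plane of an index-matched sample (conventions differ between references), and uses illustrative values for wavelength, NA, and refractive indices. Complex square roots keep the supercritical region of the pupil (NA·ρ′ > n2), where the factor decays with emitter depth instead of oscillating.

```python
import cmath
import math


def defocus_factor(rho, z1, z2, wavelength=600.0, na=1.4, n1=1.518, n2=1.33):
    """Pupil factor exp(i*psi) at normalized pupil radius rho (0..1); lengths in nm.

    psi = k * (n2*z1*sqrt(1 - (na*rho/n2)**2) - n1*z2*sqrt(1 - (na*rho/n1)**2)).
    Where na*rho > n2 the first root is imaginary, so the factor decays with the emitter
    depth z1 instead of oscillating (the supercritical part of the pupil).
    """
    k = 2 * math.pi / wavelength
    psi = k * (n2 * z1 * cmath.sqrt(1 - (na * rho / n2) ** 2)
               - n1 * z2 * cmath.sqrt(1 - (na * rho / n1) ** 2))
    return cmath.exp(1j * psi)


if __name__ == "__main__":
    for rho in (0.0, 0.5, 0.9, 0.97, 1.0):
        f = defocus_factor(rho, z1=500.0, z2=300.0)
        print(f"rho' = {rho:.2f}   |factor| = {abs(f):.3f}   phase = {cmath.phase(f):+.2f} rad")
```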

There are multiple ways in which a phase mask can encode the axial position of an emitter in the shape of the PSF, and various PSF designs have been used in recent years. These include an elliptical (astigmatic) PSF,(121–123) the rotating double-helix PSF (DH-PSF),(125, 126) the corkscrew PSF,(127) the phase ramp PSF,(128) the self-bending PSF(129) and the saddle-point/Tetrapods(130, 131) (Figure 6). These engineered PSFs span different axial ranges and exhibit a wide variety of shapes, but all have distinct features that change rapidly as a function of z position, such as changing eccentricity (Figure 6A), relative motion of two lobes (Figure 6B–D), lateral translation (Figure 6E), or relative motion, splitting, and recombining of multiple lobes (Figure 6F–H).

As mentioned above, these PSFs can be generated using several approaches. Simpler phase patterns can be induced using optics such as a cylindrical lens(123) or a glass wedge,(128) while other patterns require control over the phase pattern, as can be attained with a deformable mirror (DM),(132) a lithographically etched dielectric (e.g. quartz),(62) or a liquid crystal spatial light modulator (SLM).(126, 129–131) SLMs and DMs can be flexibly programmed to generate different phase patterns, and each has advantages and disadvantages. SLMs have many pixels, but are designed to work for a specific linear polarization of light, halving the photons collected. This limitation can be avoided if necessary by using a “pyramid geometry” that collects both polarizations.(133) DMs have negligible loss, but generally have a limited number of actuators and cannot generate patterns with phase singularities or other fine features. Glass or quartz optics can generate features limited in fineness only by the fabrication method and have minimal loss, but are fabricated into a set pattern and cannot be changed.

There are multiple ways to determine z positions from image features in engineered PSFs. An intuitive and widely-applied approach is to choose an easily identifiable parameter in the image and generate a calibration curve relating that parameter and z. Such calibration curves can be derived from a theoretical model of the PSF or from empirical measurement of fluorescent beads or other bright fiducials, where the focal plane is translated by changing the distance between the objective and sample. For the case of the DH-PSF (Figure 6C), the angle of the line connecting the two revolving PSF lobes is such a calibration parameter (Figure 7A). For the astigmatic PSF (Figure 6A), the relevant parameters are the PSF widths along the x and y axes (Figure 7B), such that z is estimated from the value that best matches the observed pair (wx,wy). These parameters are measured by fitting image data to an approximation of the PSF, such as a pair of 2D Gaussian functions fit to the DH-PSF lobes, or an asymmetric 2D Gaussian fit to the astigmatic PSF.
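A calibration-curve lookup for the astigmatic PSF can be sketched in a few lines of Python. The width model below, wx(z) = w0√(1 + ((z − c)/d)²) and wy(z) = w0√(1 + ((z + c)/d)²), places the x and y foci at ±c and is a common idealization assumed here; w0, c, d, and the 1 nm table spacing are hypothetical. The estimate is the table entry whose (wx, wy) pair lies closest in Euclidean distance to the measured pair; published implementations use more elaborate width models and distance metrics.

```python
import math


def widths(z, w0=1.3, c=200.0, d=400.0):
    """Hypothetical astigmatic calibration: x and y foci offset by +/- c (nm); widths in pixels."""
    wx = w0 * math.sqrt(1 + ((z - c) / d) ** 2)
    wy = w0 * math.sqrt(1 + ((z + c) / d) ** 2)
    return wx, wy


def build_table(z_min=-600.0, z_max=600.0, step=1.0):
    """Calibration table of (z, wx, wy) rows, as one might record from a bead z-scan."""
    n = int(round((z_max - z_min) / step)) + 1
    return [(z_min + i * step, *widths(z_min + i * step)) for i in range(n)]


def z_from_widths(wx, wy, table):
    """Return the calibration z whose (wx, wy) pair is closest to the measured pair."""
    return min(table, key=lambda row: (row[1] - wx) ** 2 + (row[2] - wy) ** 2)[0]


if __name__ == "__main__":
    table = build_table()
    print("z estimate for widths (1.6, 1.4):", z_from_widths(1.6, 1.4, table), "nm")
```

Because wx² − wy² changes monotonically with z in this model, each width pair maps to a single z, which is what makes the lookup unambiguous.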

While intuitive, such parametrizations do not use all the data in the image. Most 3D PSFs have features that are not accurately fit by a Gaussian function; for example, it is difficult to imagine a simple parameter that uses all the information present in complicated-looking PSFs such as the Tetrapod family (Figure 6F–H). To make use of this information, one can instead fit the observed PSF to a 3D model function of the PSF. Such a model function can be numerically generated or interpolated from an experimental calibration. In this way, fine features of the PSF contribute to the estimate, improving the precision. (MLE, rather than LS fitting, is particularly desirable when fitting a complete PSF model, as MLE uses information from dim pixels more correctly.) Because the PSF need not have a single, easily-interpretable feature, this broadens the range of possible 3D PSFs.
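Fitting the whole image to a model, as described above, can be illustrated with the Poisson log-likelihood of eq. (8). The Python sketch below assumes a hypothetical astigmatic model, an elliptical Gaussian whose widths follow wx,y(z) = w0√(1 + ((z ∓ c)/d)²) with illustrative parameters and lateral position fixed at the grid center, and scans z on a grid, returning the value that maximizes eq. (8). Real data would use Poisson counts, fit x, y, signal, and background jointly, and refine the grid estimate with a local optimizer.

```python
import math


def astig_model(z, size=15, n_sig=1500.0, beta=5.0, w0=1.3, c=200.0, d=400.0):
    """Expected photons per pixel for a hypothetical astigmatic PSF centred on the grid."""
    wx = w0 * math.sqrt(1 + ((z - c) / d) ** 2)
    wy = w0 * math.sqrt(1 + ((z + c) / d) ** 2)
    x0 = y0 = (size - 1) / 2
    amp = n_sig / (2 * math.pi * wx * wy)
    return [[amp * math.exp(-(u - x0) ** 2 / (2 * wx ** 2) - (v - y0) ** 2 / (2 * wy ** 2)) + beta
             for u in range(size)] for v in range(size)]


def log_likelihood(counts, model):
    """Eq. (8): sum over pixels of n_k ln(mu_k) - mu_k, dropping theta-independent terms."""
    return sum(n * math.log(mu) - mu
               for n_row, mu_row in zip(counts, model)
               for n, mu in zip(n_row, mu_row))


def mle_z(counts, z_grid):
    """Grid-search MLE of z (lateral position, signal, and background held fixed)."""
    return max(z_grid, key=lambda z: log_likelihood(counts, astig_model(z)))


if __name__ == "__main__":
    noise_free = astig_model(130.0)
    print("MLE z on noise-free data:", mle_z(noise_free, range(-500, 501, 10)), "nm")
```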

Engineered PSFs exhibit a wide range of behavior in terms of size, applicable z-range, and attainable precision. For example, the astigmatic PSF has a small footprint on the detector, allowing for potentially more emitters per unit volume to emit simultaneously while avoiding PSF overlap, but a relatively small z-range, close to that of the standard PSF. On the other hand, the DH-PSF has a larger z-range, over which it exhibits better and more uniform precision (smaller CRLB) than astigmatism,(135) and the saddle-point/Tetrapod PSFs(130, 131) have an even larger applicable z-range at the cost of potentially larger footprint. Figure 6 shows various different PSFs along with their applicable z-ranges, and Figure 8A–C shows the phase patterns for the astigmatic, DH-PSF, and Tetrapod PSFs.

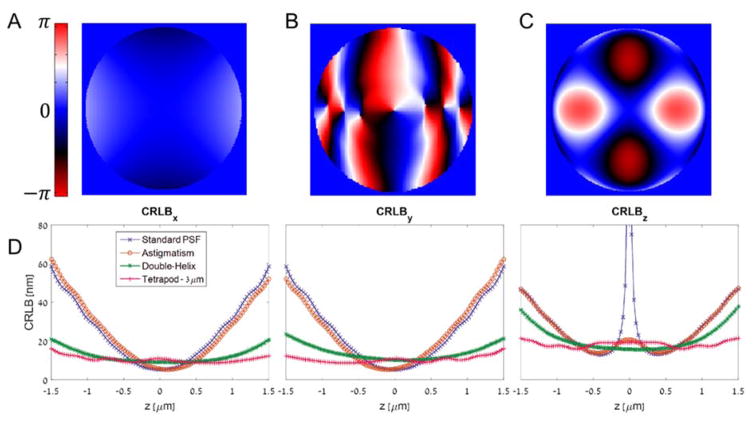

Figure 8.

Selected PSF phase patterns and Cramér-Rao Lower Bound calculations. (A) The astigmatic PSF. (B) The DH-PSF. (C) The Tetrapod PSF for 3 μm range. (D) The CRLB for estimating x, y, and z is plotted for the standard PSF and the three 3D PSFs above. Low CRLB means a good potential precision. The calculation assumes 1000 detected signal photons, a background of 2 photons per 160 nm pixel, using a 100X oil immersion objective with an NA of 1.4.

As mentioned earlier, Fisher information is a useful measure to assess the attainable precision of an imaging system, and can be used to compare different PSF designs.(135) Such a comparison is shown in Figure 8D, displaying the calculated CRLB of four PSFs: the standard PSF, astigmatism, Double-Helix, and a 3 μm range Tetrapod. The CRLB bounds the attainable variance, and hence a lower CRLB indicates a better (smaller) attainable localization precision. Key observations from Figure 8D include: 1) The standard PSF contains very little information about the axial (z) position of an emitter near the focal plane (z = 0), and only maintains good lateral (x,y) localization performance over a < 1 μm z range. Clearly, a standard PSF is a poor choice for 3D localization. 2) For all PSFs, axial precision is worse than lateral precision. 3) The Tetrapod PSF exhibits the best mean CRLB in 3 dimensions over the 3 μm range. This is because it was algorithmically designed to maximize Fisher information, and therefore to be optimally precise under the conditions simulated.(130) It is worth noting that the design algorithm assumed uniform Poisson background; in certain situations (such as in cells) this may be hard to achieve.

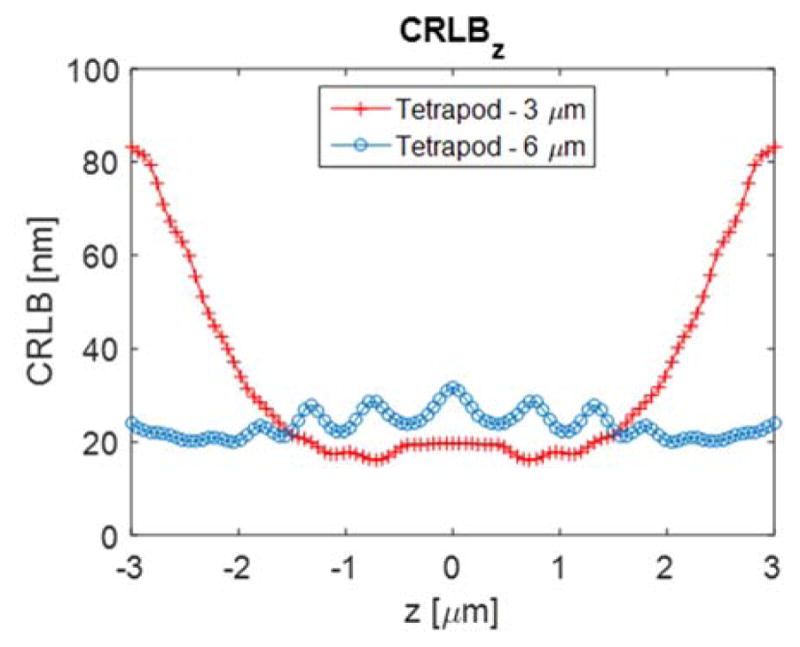

When selecting a PSF for 3D localization, a major consideration is its applicable z-range, which is the axial region where the CRLB is low. Figure 9 shows a comparison between a Tetrapod PSF designed for a 3 μm z-range and a Tetrapod PSF designed for a 6 μm z-range.(131) It is evident that the 6 μm Tetrapod exhibits a larger applicable z-range, albeit at the cost of poorer precision in the shorter (3 μm) z-range. This trade-off in performance means that the experimenter should pick a PSF with a range appropriate to the sample under consideration. If imaging time and drifts are not issues, the focal plane of a short-range PSF can be stepped successively in z to build up an image.(101)

Figure 9.

Cramér-Rao Lower Bound calculation comparing the 3 μm z-range Tetrapod PSF with the 6 μm z-range Tetrapod, in terms of precision bound on axial position estimation. The trade-off between precision and z-range is evident in this comparison. Simulation parameters are the same as in Figure 8.

While the focus of this review is on 3D localization, the type of information that one can encode in a PSF is not limited to depth. PSF engineering has been used to encode the 3D orientation of an emitter(136, 137) and even its emission wavelength,(138, 139) adding new ways to extract information about a molecule’s identity and local environment while simultaneously obtaining a nanoscale position estimate.

3.3 Intensity, Interfaces, and Interference

The methods described so far all extract axial position by measuring the shape of the PSF in the far field. However, this is not the only information available from an emitter. Several methods have emerged that perform axial localization using properties such as the intensity gradient of the excitation field, the near-field coupling of the emission to the coverslip, or the phase information of the emitted light. While these are not directly related, the three examples we discuss below highlight the power of carefully considering the physical process in which light couples to and from the emitter, without relying on information from the shape of the standard PSF.

The first class of methods uses the profile of excitation light. If the fluorescence quantum yield and absorption cross-section of an emitter are constant, the fluorescence intensity from a single molecule will depend only on the excitation irradiance. Assuming this, relative axial position can be inferred from fluorescence intensity in the presence of a well-defined axial intensity gradient. One early example made use of the exponentially-decaying evanescent wave produced at the coverslip/sample interface by TIRF microscopy.(74, 140) The lateral position of fluorophores in a polyacrylamide gel was measured as in a standard 2D microscope, while changes in detected fluorescence intensity specified relative axial position. Given the decrease in intensity and thus localization precision away from the coverslip, 3D measurement using TIRF is limited by a short effective detection range. This has been employed indirectly to allow axial “sectioning” of 2D SR data using sequential imaging at different penetration depths.(141) The steep intensity gradient of a confocal illumination volume can be employed in an analogous fashion to that of the TIR illumination profile. While not a wide-field detection method, this has been used to track the motion of single nanoparticles, one at a time, by using an xyz translation stage in closed-loop operation, permitting the observation of complex cellular transport processes with a large range and high resolution both spatially and temporally.(142–145)
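For TIRF-based axial estimation, the evanescent intensity decays as I(z) = I0 exp(−z/d), with the standard penetration depth d = λ/(4π) · (n1² sin²θ − n2²)^(−1/2) for an incidence angle θ above the critical angle. The Python sketch below computes d, inverts a measured intensity to a relative z under the constant-brightness assumption stated above, and propagates a relative intensity error into an axial error, δz = d·δI/I; the refractive indices, wavelength, and angle are example values and the function names are hypothetical.

```python
import math


def penetration_depth(wavelength, theta_deg, n1=1.518, n2=1.33):
    """1/e intensity depth of the evanescent wave, d = lambda / (4 pi sqrt(n1^2 sin^2(theta) - n2^2))."""
    s = (n1 * math.sin(math.radians(theta_deg))) ** 2 - n2 ** 2
    if s <= 0:
        raise ValueError("incidence angle must exceed the critical angle")
    return wavelength / (4 * math.pi * math.sqrt(s))


def z_from_intensity(intensity, surface_intensity, depth):
    """Invert I(z) = I0 exp(-z / d), assuming constant quantum yield and cross-section."""
    return depth * math.log(surface_intensity / intensity)


def z_error(intensity, intensity_error, depth):
    """First-order propagation of an intensity error: dz = d * dI / I."""
    return depth * intensity_error / intensity


if __name__ == "__main__":
    d = penetration_depth(640.0, 70.0)
    n_photons = 1000.0
    print(f"d = {d:.1f} nm; shot-noise-limited dz at {n_photons:.0f} photons = "
          f"{z_error(n_photons, math.sqrt(n_photons), d):.1f} nm")
```

Ignoring molecule-to-molecule brightness variation, shot noise gives δI/I ≈ 1/√N, so the axial error grows as a molecule moves away from the coverslip and contributes fewer photons.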

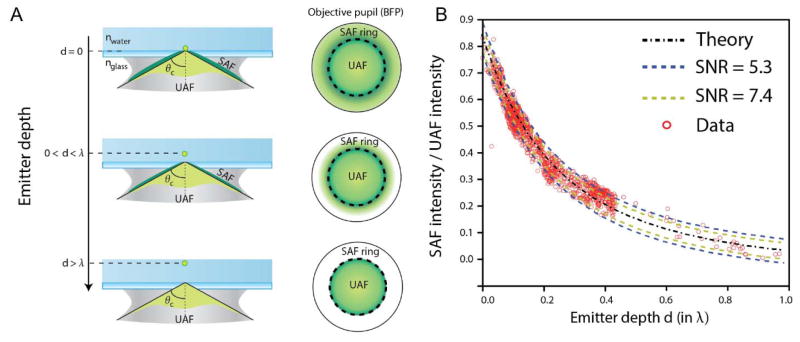

A second related set of methods uses the effects of the sample-coverslip interface on emission, rather than excitation, to extract relative axial position. This can be done with the refractive index mismatch between sample and coverslip, measuring the coupling of emitted light into the coverslip rather than the evanescent excitation profile of TIR. For emitters more than ~λ distant from the coverslip, any light emitted at more than the critical angle (θc~61° relative to vertical for a water/glass interface) will be totally internally reflected back into the sample. However, as the emitter gets closer to the surface, “supercritical” light above this angle will be coupled into the coverslip and can be collected with a high-NA objective; the axial dependence of the coupling efficiency is comparable to that of TIR excitation with highly inclined illumination (Figure 10A).(146) (This supercritical, or “forbidden,” light was used to sense the position of a near-field pumping source near a single molecule in the early days of single-molecule spectroscopy.(147)) The supercritical and “undercritical” components of the fluorescence can be discriminated by placing an annular filter in the back focal plane, and comparison of the total fluorescence relative to the undercritical-only fluorescence allows determination of axial position without a large loss in signal as in the TIRF case (Figure 10B). This method has been demonstrated for in vitro experiments with 3D origami nanostructures and for single-molecule imaging very near the interface in cells.(76, 77)
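The geometry of supercritical-angle detection follows from two numbers. The critical angle is θc = arcsin(n_sample/n_glass), and because radial position in the back focal plane scales with n_glass·sinθ, supercritical light lands outside a normalized back-focal-plane radius of n_sample/NA. The Python sketch below evaluates both for water on glass; the indices, the NA, and the helper names are illustrative assumptions.

```python
import math


def critical_angle_deg(n_sample=1.33, n_glass=1.518):
    """Angle from the optical axis (in the glass) beyond which emission is supercritical."""
    return math.degrees(math.asin(n_sample / n_glass))


def saf_annulus(na=1.49, n_sample=1.33):
    """Normalized back-focal-plane radii (inner, outer) containing supercritical light."""
    if na <= n_sample:
        raise ValueError("objective NA must exceed the sample index to collect SAF")
    return n_sample / na, 1.0


if __name__ == "__main__":
    inner, outer = saf_annulus()
    print(f"critical angle = {critical_angle_deg():.1f} deg; SAF annulus spans rho = {inner:.3f} to {outer:.1f}")
```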

Figure 10.

(A) Coupling of emitters close to the coverslip allows the collection of supercritical angle fluorescence (SAF) in addition to “undercritical” fluorescence (UAF), as can be detected in the back focal plane (BFP). (B) The ratio of supercritical to undercritical light sharply decays away from the coverslip, allowing axial position estimation. Results shown for the ideal case, for simulations with varying signal to noise ratios, and for experimental data with 20 nm beads embedded in 3% agarose gel (n = 1.33 ≈ nwater), with depths confirmed using astigmatism. Adapted by permission from Macmillan Publishers Ltd: Nature Photonics, ref. (77), copyright 2015.

A distinct interface-based axial localization method has been demonstrated that uses a coverslip coated in a thin gold film.(148) Fluorophores close to the film (< 100 nm separation) exhibit lowered fluorescence lifetimes due to coupling to surface plasmons, and the lifetime changes steeply and monotonically with distance from the film.(149) While the quenching lowers the total number of photons detected before photobleaching, lowering lateral precision, it is possible to achieve axial precisions of a few nanometers with standard fluorescent dyes by measuring single-molecule fluorescence lifetimes using confocal detection and a single-photon-counting detector.(148)

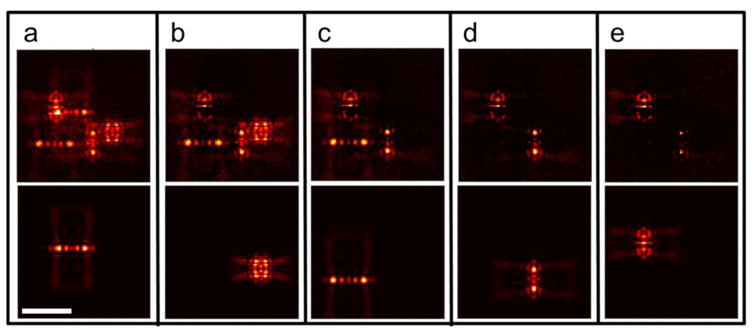

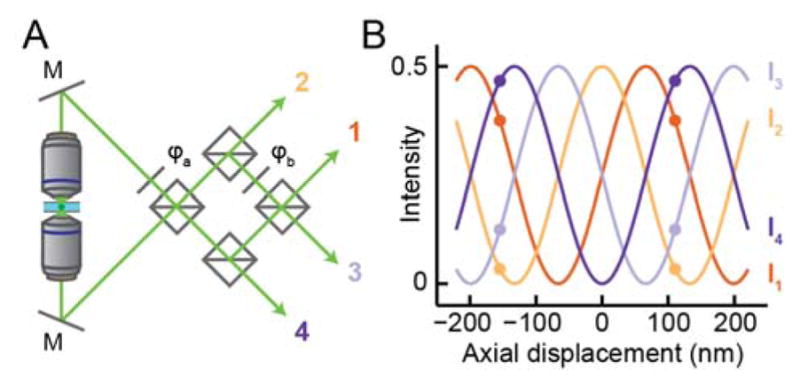

A third approach uses the phase of the single-molecule fluorescence, rather than intensity, to interferometrically estimate axial position. While this class of methods can be more experimentally demanding than others discussed in this review, it has high potential precision. Since the light emitted from a single molecule is self-coherent, it can be interfered with itself to sense the relative phase delay between different imaging paths. For a microscope, this can be accomplished using opposed parfocal objectives to collect light from above and below the sample (Figure 11A). A displacement of the emitter δ along the optical axis in a sample of refractive index n will advance the phase of light in one path and delay the other by approximately 2πnδ/λ = kδ (here k = 2πn/λ denotes the wavenumber in the sample), causing a total phase shift of 2kδ. Interfering the two paths adds axial interference fringes to the PSF: while a given x–y slice of the interfered PSF has roughly the same shape as it would in a conventional microscope (Figure 3), the total intensities of the PSF slices vary sinusoidally with z position, with period ~2π/2k = λ/2n. Before being applied to single-molecule studies, this effect was used in bulk fluorescence microscopies using confocal scanning (4Pi)(150) and wide-field (I5M/I5S)(151, 152) illumination. By axially scanning the sample and deconvolving the interferometric PSF, an image is produced with ≥5x improved axial resolution relative to standard confocal or wide-field microscopy.(153)
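The phase readout can be sketched numerically using the low-NA fringe model that the text formalizes below as eq. (13), I_i ∝ 1 + cos(2kz + φ_i) with k = 2πn/λ. Assuming four channels with delays 0, π/2, π, and 3π/2, as in Figure 11, the differences I4 − I2 and I1 − I3 are proportional to sin(2kz) and cos(2kz), so a two-argument arctangent recovers z, but only modulo λ/2n. Wavelength, refractive index, and function names are illustrative.

```python
import math


def channel_intensities(z, wavelength=600.0, n=1.33,
                        phases=(0.0, 0.5 * math.pi, math.pi, 1.5 * math.pi)):
    """Low-NA fringe model: I_i proportional to 1 + cos(2 k z + phi_i), with k = 2 pi n / lambda."""
    k = 2 * math.pi * n / wavelength
    return [1 + math.cos(2 * k * z + phi) for phi in phases]


def z_from_four_channels(intensities, wavelength=600.0, n=1.33):
    """Recover z modulo lambda / (2 n) from channels with delays 0, pi/2, pi, 3pi/2."""
    i1, i2, i3, i4 = intensities
    k = 2 * math.pi * n / wavelength
    psi = math.atan2(i4 - i2, i1 - i3) % (2 * math.pi)   # psi = 2 k z (mod 2 pi)
    return psi / (2 * k)


if __name__ == "__main__":
    period = 600.0 / (2 * 1.33)
    for z in (40.0, 150.0, 40.0 + period):
        print(f"true z = {z:6.1f} nm -> recovered {z_from_four_channels(channel_intensities(z)):6.1f} nm")
```

High NA lowers the effective value of k, as noted below, so a real instrument would calibrate the fringe period rather than rely on 2πn/λ.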

Figure 11.

Interference microscopy captures high-resolution axial information with dual-objective detection. (A) Schematic of a four-channel interference microscope, based on the example of (156). M, mirror; φa,b, phase delay elements; squares, 50:50 beamsplitters. The first beamsplitter combines the light from the two objectives, producing two beams with relative phase delay π. These are recombined to produce two more channels with phase delays π/2 and 3π/2. Not shown: each interfered detection path is focused and imaged separately, either on different parts of a camera or on separate cameras. The phase delays increase by π/2 between channels, owing to the choice of φb = −π/2 and the π/2 delay of reflected vs. transmitted beams. (B) The fraction of total signal intensity in each detection path of (A) as a function of the emitter’s axial displacement from the focal plane. The delay model is as described in (75, 156), assuming an aqueous environment, NA 1.4, and fluorescence at 600 nm. As shown by the colored dots, axial position can be read out precisely from a point measurement of the detected intensity in each channel, but is not uniquely determined beyond a period of ~λ/2n.

As discussed in Section 3.1, scanning is not generally feasible for dynamic single-molecule measurements, and, as with multifocal microscopy, it is preferable to sample simultaneously several channels that mimic axial displacement of the sample. In interference-based microscopy, this amounts to imposing a different relative phase between the light collected by the top and bottom objectives in each channel. Rather than scanning the sample to create an interferogram, different effective displacements are sampled in each channel i by introducing a relative phase delay φi between the light from the two objectives. In the limiting case of low-NA collection, the total intensities Ii detected from the molecule in each channel obey

$$I_i \propto 1 + \cos\left(2kz + \varphi_i\right) \tag{13}$$

where z is the axial position relative to focus. (High NA lowers the effective value of k.(75, 154, 155)) A two-channel interferometric setup, i ∈ {1, 2}, with relative phase delays φ = (0, π) can be generated using a single 50:50 beamsplitter to recombine the signal from both objectives, because the π/2 phase retardation upon reflection makes the relative phases at the two output ports differ by π (Figure 11). From eq. (13),

$$z = \frac{1}{2k}\cos^{-1}\left(\frac{I_1 - I_2}{I_1 + I_2}\right) \tag{14}$$

which lets us extract the molecule’s position z from its measured intensities in the two channels, I1 and I2. Because the range of cos⁻¹ is [0, π], eq. (14) shows that phase aliasing limits the z values that can be detected in a two-channel interferometric microscope to a range of π/2k = λ/4n ≈ 120 nm. The Fisher information vanishes when the two beams interfere perfectly constructively or destructively (at z = 0 and z = π/2k, where the single-path phase advance kz equals 0 and π/2), as the cosine response of eq. (13) reaches a point of zero derivative simultaneously for both channels, further limiting the practical range of such a microscope.
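The two-channel readout can be sketched numerically under the low-NA model of eq. (13). The example below assumes 600 nm emission in water, equal channel gains, and a hypothetical constant background of 10 photons per channel; `two_channel_intensities`, `z_from_two_channels`, and `fisher_information` are illustrative names, not functions from any published package. It shows that an emitter placed 40 nm beyond the unambiguous range λ/4n is reported 40 nm inside it, and that, with the assumed background included, the Poisson Fisher information for z drops to zero where both channels sit at a cosine extremum.

```python
import numpy as np

WAVELENGTH_NM = 600.0   # assumed emission wavelength
N_MEDIUM = 1.33         # assumed aqueous sample
K = 2 * np.pi * N_MEDIUM / WAVELENGTH_NM  # low-NA wavenumber (high NA lowers it)

def two_channel_intensities(z_nm, total_photons=1000.0):
    """Expected intensities for relative phase delays (0, pi) under eq. (13)."""
    i1 = 0.5 * total_photons * (1 + np.cos(2 * K * z_nm))
    i2 = 0.5 * total_photons * (1 + np.cos(2 * K * z_nm + np.pi))
    return i1, i2

def z_from_two_channels(i1, i2):
    """Invert eq. (14); the result always lies in [0, pi/(2k)] = [0, lambda/4n]."""
    ratio = np.clip((i1 - i2) / (i1 + i2), -1.0, 1.0)
    return np.arccos(ratio) / (2 * K)

def fisher_information(z_nm, total_photons=1000.0, background=10.0):
    """Poisson Fisher information for z, summed over both channels.

    'background' is an assumed constant number of background photons per channel.
    """
    info = 0.0
    for phi in (0.0, np.pi):
        mu = 0.5 * total_photons * (1 + np.cos(2 * K * z_nm + phi)) + background
        dmu = -0.5 * total_photons * 2 * K * np.sin(2 * K * z_nm + phi)
        info += dmu**2 / mu
    return info

z_max = np.pi / (2 * K)  # unambiguous range, lambda/4n
for z in (40.0, z_max + 40.0):  # the second emitter lies beyond the range
    z_est = z_from_two_channels(*two_channel_intensities(z))
    print(f"true z = {z:6.1f} nm -> estimated z = {z_est:6.1f} nm")
```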

Sampling more than two phase delays resolves this ambiguity, achieving an axial range of λ/2n and providing more uniformly high Fisher information. Multiple names, including “iPALM”(75) and “4Pi-SMS”(156), have been used for setups with three or four detection paths whose relative phase delays are multiples of 2π/3 or π/2, respectively. These paths are created using a specially designed beamsplitter or a combination of beamsplitters and phase-shifting elements, respectively; an approach generating four channels is sketched in Figure 11A. The intensities in each detection path are analyzed following eq. (13) to extract z information: as shown in Figure 11B, the four detected intensities change in a well-defined fashion with z, repeating with period ~λ/2n. It was shown early on, both by theoretical studies of the CRLB(156) and by experimental measurement,(75) that such approaches provide high lateral precision (due to the increased number of photons collected by two objectives) and even better axial precision, on the order of σxy < 10 nm and σz < 5 nm for photon counts typical of fluorescent proteins imaged using TIRF.
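For three or more evenly spaced phase delays φi = 2πi/N, the wrapped phase 2kz can be recovered in closed form, because the weighted sums ΣIi cos φi and ΣIi sin φi are proportional to cos 2kz and −sin 2kz. The sketch below assumes the low-NA model of eq. (13) with equal channel gains and no background; practical analyses instead use calibrated, NA-dependent channel responses, so this is only an idealized illustration. The phase-ordering convention and the helper names are assumptions and need not match Figure 11A.

```python
import numpy as np

def z_from_n_channels(intensities, wavelength_nm=600.0, n_medium=1.33):
    """Recover z modulo lambda/2n from N >= 3 channels with delays phi_i = 2*pi*i/N.

    Assumes I_i proportional to 1 + cos(2kz + phi_i) (eq. 13, low-NA limit),
    equal channel gains, and no background. Result lies in [0, lambda/2n).
    """
    intensities = np.asarray(intensities, dtype=float)
    n_ch = intensities.size
    phi = 2 * np.pi * np.arange(n_ch) / n_ch
    k = 2 * np.pi * n_medium / wavelength_nm
    # For evenly spaced phases: sum I cos(phi) ~ cos(2kz), sum I sin(phi) ~ -sin(2kz).
    theta = np.arctan2(-np.sum(intensities * np.sin(phi)),
                       np.sum(intensities * np.cos(phi)))
    return np.mod(theta, 2 * np.pi) / (2 * k)

def simulate_channels(z_nm, n_ch, wavelength_nm=600.0, n_medium=1.33):
    """Noise-free channel intensities (arbitrary units) under the same model."""
    k = 2 * np.pi * n_medium / wavelength_nm
    phi = 2 * np.pi * np.arange(n_ch) / n_ch
    return 1 + np.cos(2 * k * z_nm + phi)

for n_ch in (3, 4):  # iPALM-like (2*pi/3 steps) and 4Pi-SMS-like (pi/2 steps)
    print(n_ch, "channels:", z_from_n_channels(simulate_channels(150.0, n_ch)))
```

Both the three- and four-channel cases return 150 nm for an emitter at 150 nm; an emitter at 300 nm would instead be reported at 300 nm − λ/2n ≈ 74 nm, reflecting the phase wrapping that remains beyond one fringe period.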

While effective for 3D SR imaging near the cell membrane, the first studies using single-molecule interference microscopy could not go beyond the λ/2n limit imposed by phase wrapping. Later implementations used additional features of the image to break this symmetry. For example, for high-NA optics and sufficiently high SNR, the phase oscillation is measurably different at the edges of the PSF, allowing fringes to be disambiguated by spatial filtering.(155) Alternatively, a feature such as astigmatism can be added to the PSF to coarsely localize the molecule to one of the fringes before extracting the precise “intra-fringe” position.(157) By avoiding phase aliasing, these methods achieve an axial range of interference localization microscopy limited primarily by the focal range of the PSF, ~700–1000 nm for the standard or astigmatic PSFs.
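The coarse-then-fine strategy can be summarized in a few lines: given a precise but wrapped intra-fringe position and an independent coarse estimate (for example, from an astigmatic PSF fit), choose the integer fringe order that brings the two into closest agreement. This is a generic sketch, not the specific algorithm of (157); it assumes 600 nm emission in water and hypothetical coarse errors, and it succeeds only while the coarse error stays below half the fringe period (~113 nm for λ/2n ≈ 226 nm).

```python
import numpy as np

def unwrap_with_coarse(z_wrapped_nm, z_coarse_nm, period_nm):
    """Combine a wrapped fine estimate with a coarse absolute estimate.

    z_wrapped_nm: precise intra-fringe position, known only modulo period_nm.
    z_coarse_nm: coarse absolute position (assumed, e.g. from an astigmatic fit).
    The fringe order m is chosen so that z_wrapped + m * period is closest to z_coarse.
    """
    z_wrapped = np.asarray(z_wrapped_nm, dtype=float)
    z_coarse = np.asarray(z_coarse_nm, dtype=float)
    m = np.round((z_coarse - z_wrapped) / period_nm)
    return z_wrapped + m * period_nm

PERIOD = 600.0 / (2 * 1.33)  # lambda/2n for assumed 600 nm emission in water
true_z = np.array([-150.0, 40.0, 500.0, 820.0])
wrapped = np.mod(true_z, PERIOD)  # what the interferometric channels report
coarse = true_z + np.array([35.0, -60.0, 30.0, -80.0])  # hypothetical coarse errors
print(unwrap_with_coarse(wrapped, coarse, PERIOD))
```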

The high precision afforded by interference methods comes with practical drawbacks. One is the complexity of alignment and maintenance. Since any difference in path length between the two imaging arms is directly reflected in the measurement, the arms must be stabilized to nanoscale tolerances over path lengths on the order of a meter. Such stabilization can be performed with additional interferometric feedback systems.(151, 158) Alternatively, by placing a mirror directly above the sample to collect both paths through the same objective and recombining them using an SLM, it is possible to reduce the amount of stabilization needed, at the cost of somewhat poorer localization precision.(159) A separate difficulty arises when imaging thick samples, owing to the phase aberrations produced by a heterogeneous refractive index. In a comprehensive study, it was recently demonstrated that adaptive optics can compensate for both the aberrations of thick cells and the impact of sample drift, enabling accurate imaging at multiple positions within thick cells.(158) While this did not extend the range of the PSF beyond its focal range, the stability of the setup allowed whole-cell interferometric imaging over a total range of up to 10 μm by combining multiple sequentially acquired axial sections, with aberrations within each section corrected separately.
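As a rough estimate of this tolerance, assuming the low-NA model of eq. (13), an uncompensated optical path difference ΔL added to one arm shifts the relative phase by 2πΔL/λ (λ the vacuum wavelength), which the analysis cannot distinguish from an axial displacement δz of the emitter:

$$2k\,\delta z = \frac{2\pi\,\Delta L}{\lambda}, \qquad k = \frac{2\pi n}{\lambda} \quad\Longrightarrow\quad \delta z = \frac{\Delta L}{2n}.$$

For an aqueous sample (n = 1.33), each nanometer of path-length drift therefore appears as roughly 0.4 nm of apparent axial motion.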

Taken together, the multiple new methods for 3D localization described in this section give the experimenter many choices. Since the goal in all such studies is optimal utilization of the available emitted photons from single molecules, it continues to be essential to balance complexity and performance as needed for the application.

4. Challenges and New Developments in 3D Localization

As we have seen, there exist a wide variety of optical enhancements of the standard wide-field fluorescence microscope that significantly improve the precision of 3D localization, enabling otherwise impossible measurements. Along with new possibilities, this advance brings with it new challenges to overcome. Systematic errors can be introduced in 3D imaging by optical aberrations and by dipole emission effects. Similarly, the need to accurately preserve 3D information between imaging channels (such as in multicolor imaging or polarization imaging) requires a careful approach to registration. And in general, adding a new dimension can affect the resolution, sampling rate, and field of view of localization microscopy.

In this section, we highlight some technical implications of moving to 3D imaging and the approaches that have been developed to improve its accuracy and spatiotemporal resolution. This description of possible errors is not exhaustive, and other errors such as those induced by sample drift over the course of acquisition(106, 160–162) are also necessary to correct in quantitative single-molecule microscopy. (In the case of drift, correction can be achieved using fiducial markers, interferometry, or cross-correlation with post-processing or active feedback.) We close by offering a general prospectus of methodological improvements that have either just begun to be made, or that would be a desirable future addition to the field.
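As one concrete example of post-processing drift correction, the lateral shift between two rendered localization images (for instance, histograms of localizations from an early and a later block of frames) can be estimated from the peak of their cross-correlation. The sketch below computes the correlation with FFTs and returns an integer-pixel shift; practical implementations typically add sub-pixel peak fitting, and 3D drift can be treated analogously with 3D histograms. The function name and inputs are illustrative assumptions.

```python
import numpy as np

def estimate_shift(reference, moved):
    """Integer-pixel shift of `moved` relative to `reference` via FFT cross-correlation.

    Both inputs are 2D arrays of equal shape. Returns (dy, dx) such that
    moved is approximately np.roll(reference, (dy, dx), axis=(0, 1)).
    """
    f_ref = np.fft.fft2(reference - reference.mean())
    f_mov = np.fft.fft2(moved - moved.mean())
    # Circular cross-correlation: xcorr[s] = sum_x ref(x) * moved(x + s).
    xcorr = np.fft.ifft2(np.conj(f_ref) * f_mov).real
    peak = np.array(np.unravel_index(np.argmax(xcorr), xcorr.shape))
    shape = np.array(xcorr.shape)
    # Indices past the midpoint correspond to negative (wrapped) shifts.
    wrapped = peak > shape // 2
    peak[wrapped] -= shape[wrapped]
    return tuple(int(s) for s in peak)

rng = np.random.default_rng(1)
image = rng.random((64, 64))  # stand-in for a rendered localization histogram
drifted = np.roll(image, (3, -5), axis=(0, 1))
print(estimate_shift(image, drifted))
```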

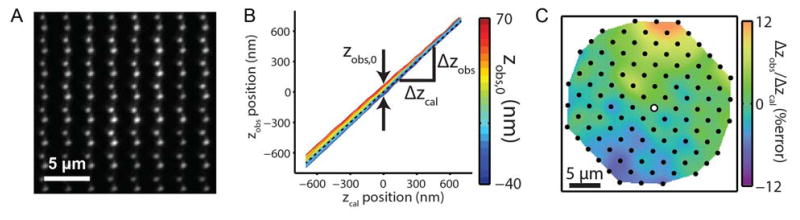

4.1 Spatially Dependent Optical Aberrations and Multichannel Registration

Nonidealities of the imaging system that would be minor for a diffraction-limited microscope can cause errors that overwhelm the much finer resolution of a single-molecule microscope if not corrected. Such aberrations can be considered as an additional undesirable phase pattern added to the system, i.e. to the function P(x′,y′) of eq. (11). Since this changes the 3D PSF and alters the expected phase of the emitter, these potential errors are relevant for most 3D microscopy methods. Careful optical alignment is the first step in minimizing aberrations, but even a perfectly aligned setup with index-matched media will have imperfect optics and deviate from the paraxial ideal.(163, 164) As long as the microscope PSF is spatially invariant across the transverse field of the image and at any depth within the sample, minor aberrations can be accounted for with a simple calibration, such as an axial scan of a fluorescent bead adhered to the coverslip. Such calibrations are used in all categories of 3D localization methods,(75, 101, 123, 126) and can be used to extract the exact wavefront aberration using phase retrieval.(165) However, several classes of aberrations undermine the assumption that there is a single PSF over the entire 3D volume, and require more comprehensive consideration and correction. These can be categorized as either depth-dependent or field-dependent aberrations.
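The effect of an aberration on the PSF can be illustrated with a minimal scalar Fourier-optics model, in which the in-focus intensity PSF is the squared magnitude of the Fourier transform of a pupil function multiplied by exp(iΨ), with Ψ the aberration phase. This is a generic sketch (uniform circular pupil, no vectorial or high-NA effects, arbitrary grid sampling) rather than a reimplementation of eq. (11), and the astigmatism amplitude is an assumed example. Because a pure phase aberration only redistributes light, the total energy is unchanged while the peak intensity (the Strehl ratio) drops.

```python
import numpy as np

def psf_from_pupil(aberration_rad, grid=128, pupil_radius=32):
    """In-focus intensity PSF |FFT(P * exp(i*Psi))|^2 for a circular pupil P.

    aberration_rad(rho, theta) returns the added pupil phase Psi in radians,
    with rho normalized to the pupil edge. Scalar, low-NA sketch only.
    """
    y, x = np.mgrid[-grid // 2:grid // 2, -grid // 2:grid // 2]
    rho = np.hypot(x, y) / pupil_radius
    theta = np.arctan2(y, x)
    aperture = (rho <= 1.0).astype(float)
    field = aperture * np.exp(1j * aberration_rad(rho, theta))
    psf = np.abs(np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(field)))) ** 2
    return psf / aperture.sum() ** 2  # normalized so the unaberrated peak is 1

ideal = psf_from_pupil(lambda rho, theta: np.zeros_like(rho))
# Assumed example: 0.5 rad of (unnormalized) astigmatism, rho^2 cos(2 theta).
astig = psf_from_pupil(lambda rho, theta: 0.5 * rho**2 * np.cos(2 * theta))
print(f"Strehl ratio ~ {astig.max() / ideal.max():.3f}")
```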

Depth-dependent aberrations are due to a refractive index mismatch between the sample and immersion medium, as is common for biological samples (with index close to water, n = 1.33) imaged with high-NA oil immersion (n = 1.51) objectives. In addition to axial distortions of the PSF due to spherical aberration, index mismatch induces a well-known rescaling of the effective focal plane depth (~30% for an oil-water mismatch).(68, 166, 167) Index mismatch can be greatly reduced by switching to water immersion objectives (and carefully aligning the sample and correction collar),(168) but because 3D single-molecule microscopy requires high NA, the reduced NA available with low-index media such as water may be undesirable. Two alternative solutions are calibration and correction via adaptive optics.

Calibrations of depth-dependent errors require a different approach than a simple axial translation of the sample, as this does not actually change the depth of the fiducial (which is typically adhered to the coverslip and does not suffer significant index mismatch effects). One powerful approach, demonstrated for the astigmatic PSF, is to move fiducials through a range of well-defined axial positions within the sample using an optical trap while keeping the focal plane fixed.(169) While it does not require any specific PSF, this approach has not been applied more generally, perhaps owing to its relative complexity. An alternative method computationally synthesizes the 3D PSF at different depths by adding appropriate amounts of aberration to the empirical phase retrieved from a standard calibration. This has been shown to be effective for multifocal(170) and astigmatic(171) localization microscopy.

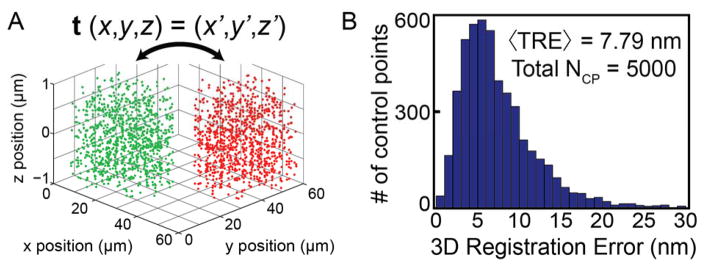

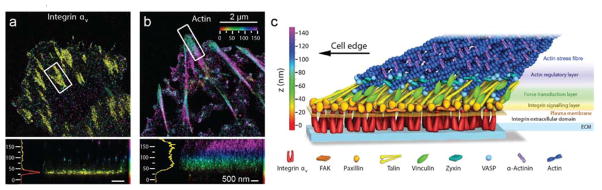

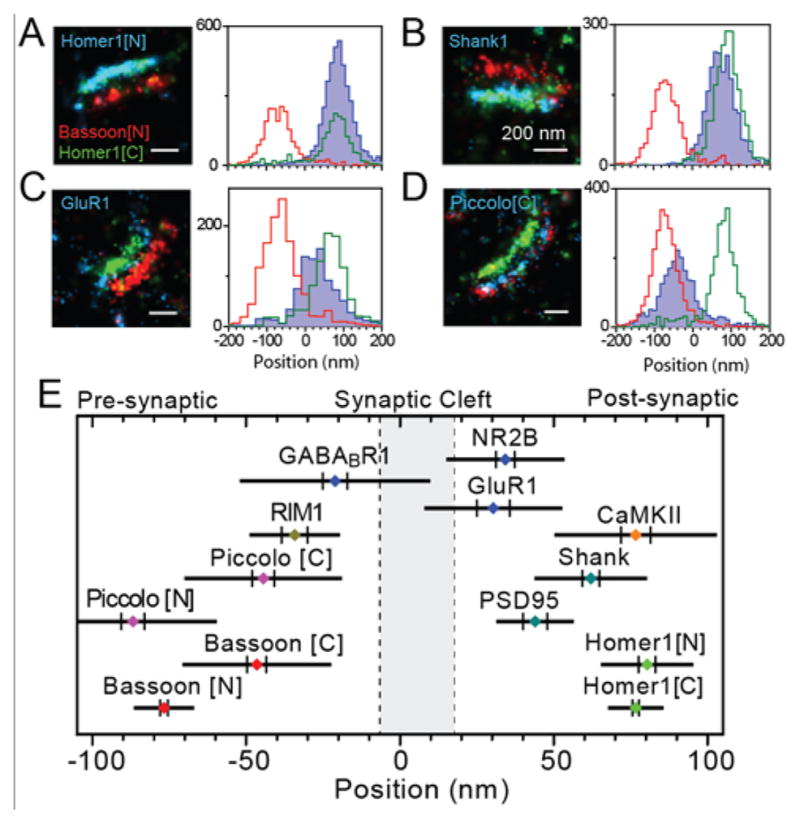

Adaptive optics (AO) is a powerful means of correcting aberrations; it works by measuring or otherwise inferring the aberration phase, then using a programmable modulator to cancel it out.(172, 173) The aberration phase is typically defined and corrected in a plane conjugate to the back focal plane (also referred to as the pupil plane in AO applications), and is modulated by a programmable SLM or DM in this conjugate plane. This is accomplished with a relay similar to the 4f system described in Section 3.2, and most AO modulation schemes are straightforward to combine with other PSF engineering methods. This geometry is chosen so that the same correction is applied to all points within the field of view. Multiple approaches have been reported to determine the appropriate correction phase for a given focal plane depth within the sample. Applications of AO to confocal and two-photon microscopy often use a bright fluorescent point source or “guide star” to measure the 3D PSF in situ. The aberration phase can be detected explicitly, for example with a Shack-Hartmann wavefront sensor conjugate to the back focal plane,(174) or inferred indirectly (as is useful for scattering samples and/or thick tissue) from image-based readouts such as pupil segmentation.(175) In the context of single-molecule imaging, aberration correction has been informed by direct wavefront measurement as well as by iterative image optimization with guide stars.(132) Another approach iteratively optimizes the PSFs detected from single molecules observed in the first frames of the SR acquisition.(176) These methods result in improved 3D SR reconstructions in cells, and have permitted 2D SR imaging in tissue at depths of 50 μm.(177) AO was also recently introduced for super-resolution interference microscopy by using one DM in each imaging arm, where system aberrations were corrected by iteratively optimizing the PSF for a guide star at the coverslip.(158)
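Image-based (sensorless) correction can be illustrated with a common modal scheme: for each correction mode, an image-quality metric is measured at three bias values, and a parabola fitted to the logarithm of the metric locates the optimum for that mode. The sketch below is a toy model, not the procedure of (132), (158), or (176). It stands in for a measured metric (such as guide-star or single-molecule PSF sharpness) with the Maréchal approximation exp(−σ²) for orthonormal modes, under which the parabolic fit is exact and one pass recovers the correction; real metrics are only approximately quadratic and modes can interact, so more passes may be needed. All names and coefficient values are hypothetical.

```python
import numpy as np

def strehl_metric(applied, true_aberration):
    """Toy metric: Marechal approximation exp(-sigma^2) of the residual wavefront.

    Coefficients are RMS radians of orthonormal modes (e.g. Noll-normalized
    Zernikes), so the residual variance is the sum of squared coefficients.
    """
    residual = np.asarray(true_aberration) + np.asarray(applied)
    return float(np.exp(-np.sum(residual**2)))

def modal_sensorless_correction(metric, n_modes, bias=0.5, n_rounds=1):
    """Estimate a correction one mode at a time from 3 metric evaluations per mode."""
    correction = np.zeros(n_modes)
    for _ in range(n_rounds):
        for j in range(n_modes):
            e = np.zeros(n_modes)
            e[j] = bias
            m_minus = np.log(metric(correction - e))
            m_zero = np.log(metric(correction))
            m_plus = np.log(metric(correction + e))
            curvature = m_plus - 2 * m_zero + m_minus
            if curvature >= 0:  # no maximum along this mode; leave it unchanged
                continue
            # Vertex of the parabola through (-bias, m_minus), (0, m_zero), (bias, m_plus).
            correction[j] += -bias * (m_plus - m_minus) / (2 * curvature)
    return correction

true_aberr = np.array([0.3, -0.2, 0.1, 0.25])  # hypothetical aberration (rad RMS)
corr = modal_sensorless_correction(lambda c: strehl_metric(c, true_aberr), n_modes=4)
print(np.round(corr, 3), strehl_metric(corr, true_aberr))
```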