Abstract

Background

Online health information is increasingly used by the general population. However, this information is typically written at a reading level that only a small percentage of readers can comprehend, which can result in suboptimal medical outcomes for patients.

Objective

The readability of online patient education materials on congestive heart failure was assessed using five readability assessment tools.

Methods

The search phrase “congestive heart failure” was entered into the Google search engine. Of the first 100 websites, 70 met the inclusion and exclusion criteria. These were then assessed using five readability assessment tools.

Results

Only 5 of the 70 websites were within the recommended sixth-grade readability level on at least one assessment tool. The mean readability scores were as follows: Flesch-Kincaid Grade Level (9.79), Gunning-Fog Score (11.95), Coleman-Liau Index (15.17), Simple Measure of Gobbledygook (SMOG) index (11.39), and Flesch Reading Ease (48.87).

Conclusion

Most of the analyzed websites were found to be above the sixth-grade readability level recommendations. Efforts need to be made to better tailor online patient education materials to the general population.

1. Introduction

The readily accessible Internet has become the most popular educational resource for the general patient population [1–3]. Patients increasingly consult the Google search engine about the diagnosis and treatment of their own health conditions before consulting their primary care physician [4]. While a nearly unlimited knowledge base can be empowering, the unfiltered nature of the Internet can leave patients misinformed and anxious when patient education materials are dense with medical jargon and difficult to read. Guidelines set forth by the American Medical Association (AMA) and the US Department of Health and Human Services (USDHHS) state that patient reading material should be written at no higher than a fifth- or sixth-grade reading level in order to be accessible and comprehensible to the general public [5].

Readability is quantified by various formulas that combine sentence length, word familiarity, syllable counts, and other factors into a score indicating the grade level needed to comprehend the presented information. Many recently published articles show that medical websites are not pitched at the appropriate communication level for the general public [6–10]. In this cross-sectional study, the authors assessed online patient education materials on congestive heart failure (CHF). An estimated 5.7 million Americans have CHF, and about half of those affected will die within five years of diagnosis [11]. Early intervention and treatment can improve quality and length of life for most patients. Thus, it is crucial that online information about CHF be accessible to the general public so that patients can understand, manage, and track their condition appropriately. This study focused on assessing the readability levels and reading ease of online CHF articles available to the general public via Google.

2. Methods

2.1. Search Engine

The Google search engine was used because the majority of patients who use the Internet for health-related information report using Google [12, 13]. The search term “congestive heart failure” was entered into a Google Chrome web browser. The search was performed on November 29, 2016.

2.2. Inclusion and Exclusion Criteria

The first 100 search results were analyzed to determine if they would be eligible for inclusion. Websites were eligible for inclusion if they (1) were in English, (2) were free to access, and (3) provided information on CHF. Websites were excluded if they were advertisements for medical products or news articles or pertained only to animal-based diseases. This caused 30 results to be excluded, leaving 70 websites to be analyzed (see Table 1 for the list of websites included).

Table 1.

List of congestive heart failure websites with their Google ranking and website type.

2.3. Readability Assessment

The readability of each website was assessed using five readability formulas (Table 2).

Table 2.

Readability test formulas used to analyze patient education websites.

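| Readability test | Formula |
|---|---|
| Flesch-Kincaid Grade Level | FKGL = 0.39 × (words/sentences) + 11.8 × (syllables/words) − 15.59 |
| Flesch Reading Ease | FRE = 206.835 − 1.015 × (words/sentences) − 84.6 × (syllables/words) |
| Gunning-Fog Score | GFS = 0.4 × [(words/sentences) + 100 × (complex words/words)] |
| Coleman-Liau Index | CLI = 0.0588L − 0.296S − 15.8 |
| SMOG Index | SMOG = 1.0430 × √(polysyllables × 30/sentences) + 3.1291 |

Variables. Total number of words (words), sentences (sentences), and syllables (syllables); number of words with three or more syllables (complex words, polysyllables); average number of letters per 100 words (L); average number of sentences per 100 words (S).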

FKGL, Flesch-Kincaid Grade Level; FRE, Flesch Reading Ease; GFS, Gunning-Fog Score; CLI, Coleman-Liau Index; SMOG, Simple Measure of Gobbledygook.

The Flesch-Kincaid Grade Level (FKGL) and the Flesch Reading Ease (FRE) are both calculated using the average sentence length (i.e., the number of words divided by the number of sentences) and the average syllables per word (i.e., the number of syllables divided by the number of words) using different formulas [14, 15].
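As a sketch of how the two Flesch formulas combine these two averages, the functions below use the standard published coefficients [14, 15]. The word, sentence, and syllable counts are assumed to come from a separate counting step, and the function names are illustrative; they are not part of the online calculator used in this study.

```python
def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level: estimated US school grade needed to read the text."""
    avg_sentence_length = words / sentences      # words per sentence
    avg_syllables_per_word = syllables / words   # syllables per word
    return 0.39 * avg_sentence_length + 11.8 * avg_syllables_per_word - 15.59


def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease: roughly 0-100, higher means easier to read."""
    avg_sentence_length = words / sentences
    avg_syllables_per_word = syllables / words
    return 206.835 - 1.015 * avg_sentence_length - 84.6 * avg_syllables_per_word


# Hypothetical passage: 100 words, 5 sentences, 150 syllables.
print(round(flesch_kincaid_grade(100, 5, 150), 1))  # 9.9
print(round(flesch_reading_ease(100, 5, 150), 1))   # 59.6
```

Both scores move in opposite directions as sentences and words get longer: longer sentences and more syllables per word raise the grade level and lower the reading ease.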

The Gunning-Fog Score (GFS) is calculated using the average sentence length and the number of polysyllabic words (i.e., those with three or more syllables) [16]. The counted polysyllabic words do not include (i) proper nouns, (ii) combinations of hyphenated words, or (iii) two-syllable verbs made into three with -es and -ed endings.
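The counting rules above can be sketched as a small predicate plus the standard Gunning-Fog formula [16]. This is an approximation under stated assumptions: the syllable count and the proper-noun flag are hypothetical inputs supplied by the caller, and rule (iii) is approximated by excluding any three-syllable word ending in -es or -ed, which will also wrongly exclude some nouns (for example, "diseases").

```python
def is_complex_word(word: str, syllables: int, is_proper_noun: bool = False) -> bool:
    """Crude check for a Gunning-Fog 'complex' (polysyllabic) word."""
    if syllables < 3:
        return False
    if is_proper_noun:                      # (i) proper nouns are not counted
        return False
    if "-" in word:                         # (ii) hyphenated combinations are not counted
        return False
    if syllables == 3 and word.lower().endswith(("es", "ed")):
        return False                        # (iii) approximates -es/-ed inflections of two-syllable verbs
    return True


def gunning_fog(words: int, sentences: int, complex_words: int) -> float:
    """Gunning-Fog Score from word, sentence, and complex-word counts."""
    return 0.4 * (words / sentences + 100.0 * complex_words / words)


print(is_complex_word("cardiology", 5))   # True
print(is_complex_word("created", 3))      # False (treated as an -ed inflection)
print(round(gunning_fog(100, 5, 12), 1))  # 12.8
```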

The Coleman-Liau Index (CLI) is calculated using the average number of letters per 100 words and the average number of sentences per 100 words [17]. Unlike the other four readability tests, the CLI counts letters rather than syllables.
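Because the CLI works from per-100-word rates rather than raw counts, a minimal sketch first normalizes the letter and sentence counts and then applies the published coefficients (0.0588 on L, 0.296 on S) [17]. The function name and the example counts are hypothetical.

```python
def coleman_liau(letters: int, words: int, sentences: int) -> float:
    """Coleman-Liau Index from raw letter, word, and sentence counts."""
    L = letters / words * 100.0    # average letters per 100 words
    S = sentences / words * 100.0  # average sentences per 100 words
    return 0.0588 * L - 0.296 * S - 15.8


# Hypothetical passage: 100 words, 470 letters (4.7 letters per word), 5 sentences.
print(round(coleman_liau(470, 100, 5), 2))  # 10.36
```

Since only letters are counted, the CLI avoids syllable-counting errors, but it penalizes long words even when they are familiar.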

The Simple Measure of Gobbledygook (SMOG) index is calculated from the number of polysyllabic words in three ten-sentence samples taken near the beginning, middle, and end of a text [18]. If a text has fewer than 30 sentences, the formula corrects for this by scaling the polysyllable count by 30 divided by the number of sentences.
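The sampling correction can be seen directly in a sketch of the commonly used SMOG regression formula [18]. It assumes the caller has already counted polysyllabic words in the sampled sentences; the function name is illustrative.

```python
import math


def smog_index(polysyllables: int, sentences: int) -> float:
    """SMOG grade from the polysyllable count in a sample of `sentences` sentences.

    The 30 / sentences factor rescales samples with fewer (or more) than
    the 30 sentences the formula was calibrated on.
    """
    return 1.0430 * math.sqrt(polysyllables * (30.0 / sentences)) + 3.1291


# Hypothetical sample: 30 sentences containing 30 polysyllabic words.
print(round(smog_index(30, 30), 2))  # 8.84
```

A 15-sentence sample with 15 polysyllables yields the same score, because the factor scales it up to the 30-sentence baseline.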

The five readability tests chosen have been widely used in previous studies. Each test assesses readability according to word difficulty and sentence length but weights these factors differently, so using all five allows the readability of each website to be compared across different criteria. The FRE is a 100-point scale, with higher scores indicating more easily understood text (Table 3). The remaining four measures (FKGL, GFS, CLI, and SMOG) indicate the US academic grade level (number of years of education) necessary to comprehend the written material. For example, a score of 13.5 would indicate a grade level appropriate for a first-year undergraduate student, while a score of 6 would indicate that the health information can be comprehended by an individual who is in or has completed the sixth grade. To prevent calculation errors and for ease of use, a single online readability calculator recommended by the National Institutes of Health (NIH) was used for all five readability tests [19, 20].
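The study relied on an online calculator for all counting [19, 20]. For readers who want to approximate the inputs to these formulas offline, a rough sketch of the counting step follows. It is an assumption-laden approximation, not the calculator's method: the regular expressions and the vowel-group syllable rule are illustrative heuristics and will miscount some words (for example, "serious" is counted as two syllables).

```python
import re


def count_syllables(word: str) -> int:
    """Approximate syllables as groups of consecutive vowels (a crude heuristic)."""
    w = word.lower()
    count = len(re.findall(r"[aeiouy]+", w))
    # Treat a final silent 'e' as non-syllabic, except in endings such as "-le".
    if w.endswith("e") and not w.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def text_counts(text: str) -> dict:
    """Return the raw counts used by the readability formulas."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text)
    syllable_counts = [count_syllables(w) for w in words]
    return {
        "sentences": len(sentences),
        "words": len(words),
        "syllables": sum(syllable_counts),
        "letters": sum(ch.isalpha() for w in words for ch in w),
        "polysyllables": sum(1 for n in syllable_counts if n >= 3),
    }


print(text_counts("Heart failure is serious. Take your medicine every day."))
# {'sentences': 2, 'words': 9, 'syllables': 15, 'letters': 45, 'polysyllables': 2}
```

Differences in tokenization and syllable counting are one reason different calculators can report slightly different scores for the same text, which supports the study's choice of a single calculator.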

Table 3.

Flesch reading ease scores with equivalent US education level and USDHHS readability rating.

| FRE score | Equivalent education level | USDHHS readability |
|---|---|---|
| 0–29 | College graduate | Difficult |
| 30–49 | College | Difficult |
| 50–59 | 10th–12th | Difficult |
| 60–69 | 8th–9th | Average |
| 70–79 | 7th | Average |
| 80–89 | 6th | Easy |
| 90–100 | 5th | Easy |

FRE, Flesch Reading Ease; USDHHS, US Department of Health and Human Services.
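Table 3 can be expressed as a simple lookup. This sketch assumes the bands are half-open intervals (so a score of 59.5 falls in the 50–59 band) and that the USDHHS ratings span Easy for 80–100, Average for 60–79, and Difficult for 0–59, as in Table 6. Scores above 100 are mapped to the 5th-grade band and negative scores to the college-graduate band; these edge cases are assumptions.

```python
# (lower bound of FRE band, equivalent education level, USDHHS rating),
# transcribed from Table 3; bands are checked from the highest down.
FRE_BANDS = [
    (90.0, "5th", "Easy"),
    (80.0, "6th", "Easy"),
    (70.0, "7th", "Average"),
    (60.0, "8th-9th", "Average"),
    (50.0, "10th-12th", "Difficult"),
    (30.0, "College", "Difficult"),
    (float("-inf"), "College graduate", "Difficult"),
]


def interpret_fre(score: float) -> tuple[str, str]:
    """Map a Flesch Reading Ease score to (education level, USDHHS rating)."""
    for lower_bound, level, rating in FRE_BANDS:
        if score >= lower_bound:
            return level, rating
    raise ValueError("unreachable: the last band has no lower bound")


print(interpret_fre(48.87))  # ('College', 'Difficult'); 48.87 is the mean FRE in Table 4
```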

Prior to analyzing the data, the “ideal” readability criteria for the online resources were established. The USDHHS recommends that health materials be written at the 5th- or 6th-grade level to ensure wide understanding. Thus, acceptable readability was defined as a score greater than or equal to 80.0 for the FRE and less than or equal to 6.9 for the FKGL, GFS, CLI, and SMOG.
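These a priori cut-offs can be applied per website as in the sketch below, which reports a pass/fail for each test so that both "within the recommended level on at least one tool" and "on all five tools" can be read off. The function name and the example scores are hypothetical and do not correspond to any of the 70 analyzed websites.

```python
GRADE_LEVEL_TESTS = ("FKGL", "GFS", "CLI", "SMOG")


def meets_criteria(scores: dict[str, float]) -> dict[str, bool]:
    """Apply the study's cut-offs (grade level <= 6.9, FRE >= 80.0) to one website."""
    passed = {name: scores[name] <= 6.9 for name in GRADE_LEVEL_TESTS}
    passed["FRE"] = scores["FRE"] >= 80.0
    return passed


# Hypothetical website scores:
site = {"FKGL": 6.5, "GFS": 8.1, "CLI": 11.0, "SMOG": 8.9, "FRE": 72.0}
result = meets_criteria(site)
print(any(result.values()), all(result.values()))  # True False
```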

2.4. Statistical Analysis

Data entry and analysis were performed in a Microsoft Excel spreadsheet. Independent upper-tailed hypothesis tests were conducted for each readability index, and results were considered statistically significant at p ≤ 0.05.

3. Results

Of the 100 websites identified, only 70 met the study inclusion criteria and were analyzed for readability. Thirty websites were excluded because they did not describe CHF (14), pertained to animal-based diseases (7), were advertisements (4), required payment (3), or were news articles (2).

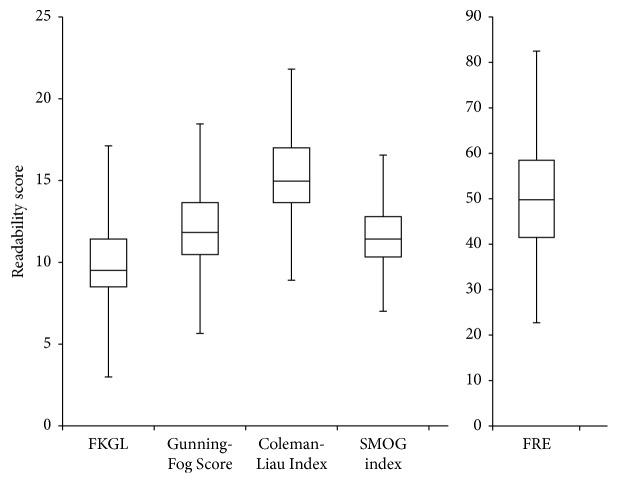

For all five assessment tools, the results were statistically significant (p < 0.05). The distribution of readability scores across all evaluated websites is summarized in Table 4. Mean readability scores for general medical websites and specialty-specific websites are compared in Table 5. Of the 70 websites, only 5 (7.1%) were within the recommended sixth-grade reading level on at least one assessment tool (Table 6), and no website was at or below the sixth-grade level on all five assessment tools. Figure 1 shows the median scores of the health information websites as box-and-whisker plots.

Table 4.

Mean readability scores of health information websites.

| Readability test | Mean score | Standard deviation | p value |
|---|---|---|---|
| FKGL | 9.79 | 2.28 | 0.0483 |
| GFS | 11.95 | 2.84 | 0.018 |
| CLI | 15.17 | 2.67 | 0.0003 |
| SMOG | 11.39 | 2.1 | 0.005 |
| FRE | 48.87 | 13.29 | 0.0096 |

Table 5.

Mean readability scores of general medical websites as compared with specialty websites.

| Readability test | General | Specialty | p value |
|---|---|---|---|
| FKGL | 9.93 | 9.08 | 0.339 |
| GFS | 12.03 | 11.61 | 0.4329 |
| CLI | 15.62 | 13.16 | 0.1893 |
| SMOG | 11.41 | 11.23 | 0.4609 |
| FRE | 47.12 | 56.77 | 0.2647 |

Table 6.

Category breakdown of readability scores of health information websites.

| Readability scores | Number of websites (n = 70) |
|---|---|
| FRE | |
| Easy (80–100) | 1 |
| Average (60–79) | 13 |
| Difficult (0–59) | 56 |
| FKGL | |
| Up to grade 6 | 3 |
| Grades 6–10 | 48 |
| Beyond grade 10 | 19 |
| GFS | |
| Up to grade 6 | 1 |
| Grades 6–10 | 20 |
| Beyond grade 10 | 49 |
| CLI | |
| Up to grade 6 | 0 |
| Grades 6–10 | 4 |
| Beyond grade 10 | 66 |
| SMOG | |
| Up to grade 6 | 0 |
| Grades 6–10 | 13 |
| Beyond grade 10 | 57 |
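The counts in Table 6 can be checked arithmetically: each test's categories should account for all 70 websites, and the percentages reported in the text follow directly from the counts. The sketch below only transcribes the table and the Results; it adds no new data.

```python
# Counts transcribed from Table 6 (n = 70 websites).
TABLE_6 = {
    "FRE": {"Easy (80-100)": 1, "Average (60-79)": 13, "Difficult (0-59)": 56},
    "FKGL": {"Up to grade 6": 3, "Grades 6-10": 48, "Beyond grade 10": 19},
    "GFS": {"Up to grade 6": 1, "Grades 6-10": 20, "Beyond grade 10": 49},
    "CLI": {"Up to grade 6": 0, "Grades 6-10": 4, "Beyond grade 10": 66},
    "SMOG": {"Up to grade 6": 0, "Grades 6-10": 13, "Beyond grade 10": 57},
}
N_WEBSITES = 70

for test, categories in TABLE_6.items():
    # Every website falls in exactly one category per test.
    assert sum(categories.values()) == N_WEBSITES, test
    shares = {cat: f"{100 * n / N_WEBSITES:.1f}%" for cat, n in categories.items()}
    print(test, shares)

# Websites within the sixth-grade level on at least one tool (5 of 70) vs. the rest.
print(f"{100 * 5 / N_WEBSITES:.1f}% within, {100 * 65 / N_WEBSITES:.1f}% above")  # 7.1% within, 92.9% above
```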

Figure 1.

Box-and-whisker plots showing median readability scores of health information websites (n = 70; p < 0.05).

4. Discussion

Internet access has opened up a plethora of resources for patient education, but the writing style and jargon of most medically relevant articles make them accessible to only a small percentage of the general public. To prevent confusion, undue stress, and misinformation, patients need adequate and appropriate medical information in all healthcare settings. However, online material cannot be used effectively if it is presented in a style beyond the reach of the general population. One study showed that about 1 in 5 patients had used the Internet to obtain medical information; however, the majority of them had difficulty comprehending the information available to them [21]. The guidelines set forth by the AMA and USDHHS state that such information should be written at or below a sixth-grade reading level to be accessible to the public. The aim of this study was to evaluate the readability of available online health-related information against these standard guidelines, focusing specifically on congestive heart failure.

4.1. Online Health Information Readability

Google was used to identify websites relevant to congestive heart failure. Of the first 100 search results, 70 met the inclusion criteria of this study and were analyzed using five readability assessment tools. As the results indicate, 92.9% of the CHF websites assessed were above the recommended levels on all five tools. As Table 5 indicates, there was no significant difference in readability scores between general medical websites and specialty-specific websites. Only five websites fell within the recommended levels on at least one tool, and none of them passed all five assessment tools, further indicating that medical articles found online are not written at an appropriate level for the average US citizen. This was seen across both general medical websites and diagnosis-specific websites.

In the case of congestive heart failure, it is crucial for patients to understand, manage, and track their health condition to improve their quality and length of life. To our knowledge, this is one of the few studies to use these five readability assessment tools to assess online patient education information relating specifically to congestive heart failure. The readability of web-based literature has been assessed in many healthcare areas, such as colorectal surgery, ophthalmology, dermatology, nephrology, orthopedics, psychiatry, and endocrinology [5, 22–27].

Hutchinson et al. applied four of the five readability indices used in our study to websites that were also included in our analysis [28]. In that study, the average readability of Wikipedia.org, MayoClinic.org, WebMD.com, Medicine.net, and NIH.gov on congestive heart failure was above the recommended sixth-grade reading level. This is consistent with our findings when the scores from all five readability assessment tools are averaged. In both studies, the NIH website had the lowest average reading grade level of the five websites. Tulbert et al. also analyzed Wikipedia.org and WebMD.com using the FKGL and FRE readability tests and likewise found that both websites were above the sixth-grade reading level [23].

Another study analyzed a broad spectrum of websites relating to 16 medical specialties using ten readability indices, including the five used in this study [10]. According to that study, the American Academy of Family Physicians (AAFP) website was written above the recommended sixth-grade readability level on the FKGL, FRE, GFS, CLI, and SMOG. Our study also included the AAFP website for the more specific topic of congestive heart failure and found that it was written above the recommended reading level on all five tests.

4.2. Health Literacy

Patient education is an integral part of the physician-patient relationship. However, a majority of US citizens are known to have limited health literacy [29, 30]. Several efforts to profile the health literacy skills of specific patient populations have found striking evidence of inadequate literacy skills, including in medical care settings [31].

Low health literacy has been found to negatively impact health and well-being. It has been associated with higher mortality among elderly patients and with a higher risk of living with chronic illness [29]. On an individual level, this results in preventable recurrent hospitalizations or clinic visits. On the national level, inadequate health literacy has been estimated to cost the US economy between $106 billion and $236 billion annually. Health literacy has long been recognized as one of the central challenges in American healthcare [32]. Improving the readability of online health information is one concrete step toward mitigating this issue.

4.3. Recommendations

It is important to adhere to the AMA and USDHHS guidelines by keeping patient education websites at or below a sixth-grade readability level in order to broaden the patient base that the information can reach. Doing so would help more patients find appropriate information on websites, understand what they find, and act appropriately on that understanding [33].

According to the NIH, readability is particularly important in the first few lines of text, because a reader who encounters difficulty at the beginning of a passage may stop reading altogether [19]. The Institute for Healthcare Improvement recommends using simpler words and shorter sentences and avoiding medical jargon, all of which will also improve website readability scores [34]. Websites whose scores do not meet the AMA and USDHHS guidelines should consider rewriting their materials to aim for a grade level less than or equal to 6.9 and an FRE score of 80 or higher. Such revisions are a simple, quick, and cost-effective way to improve the readability of online health-related information for the general public.

4.4. Limitations

This study has a few important limitations. Only the first 100 results were reviewed in this study and only with one search phrase. This is only a portion of all the available websites on our topic of interest, although we found that using related search terms resulted in very similar results. In this study, we used only one search engine for retrieving information; however, the search engine we used is the most widely used engine globally for obtaining health-related information [7, 35].

The scope of this study is focused on US English-speaking patient populations. Non-English websites were excluded, so the results may not apply to non-English-speaking patient populations. The results may also be location-specific because search results vary with the server used, which makes it difficult to draw general conclusions about the global patient population. Additionally, the readability indices used were originally designed to gauge the readability of English texts in terms of US grade levels. Nevertheless, the authors believe that the results may be extrapolated to English-speaking patient populations outside the US, where the readability grade level can be interpreted as the number of years of formal education conducted in English.

As this is a cross-sectional study, our search results reflect a snapshot in time from a single location and do not represent or account for every patient's search experience. The resources available on the Internet are constantly growing and changing, so searches performed at other times may return different results. The results of our study are meant to prompt reflection, to encourage website authors to improve readability, and to serve as a point of comparison for future reassessments.

5. Conclusion

This study showed that the current readability of websites pertaining to congestive heart failure is poor. The Internet has become a powerful, accessible resource that many patients use to understand and manage their own medical conditions. However, many patient education websites present material at a reading level that is not suitable for the average adult, which hinders comprehension. Poor health literacy has been found to negatively impact health and even inflate healthcare costs in the United States. It is therefore imperative to scrutinize the Internet resources available to the general public in order to prevent mismanagement and subpar healthcare outcomes. We have highlighted easy, cost-effective methods, such as using shorter sentences and limiting medical jargon, to help materials reach the recommended readability level. We recommend that patient education websites be reevaluated for adherence to the readability guidelines set forth by the NIH and USDHHS to ensure that these resources are accessible to a wider audience. Through this study, we hope to make website creators aware of the utility of readability indices in achieving this goal.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- 1. Hesse B. W., Nelson D. E., Kreps G. L., et al. Trust and sources of health information: the impact of the internet and its implications for health care providers: findings from the first health information national trends survey. Archives of Internal Medicine. 2005;165(22):2618–2624. doi: 10.1001/archinte.165.22.2618.

- 2. Fox S., Rainie L. The online health care revolution: how the Web helps Americans take better care of themselves. Pew Internet & American Life Project. 2000:1–23.

- 3. Baker J. F., Devitt B. M., Kiely P. D., et al. Prevalence of Internet use amongst an elective spinal surgery outpatient population. European Spine Journal. 2010;19(10):1776–1779. doi: 10.1007/s00586-010-1377-y.

- 4. Wald H. S., Dube C. E., Anthony D. C. Untangling the web-the impact of internet use on health care and the physician-patient relationship. Patient Education and Counseling. 2007;68(3):218–224. doi: 10.1016/j.pec.2007.05.016.

- 5. Edmunds M. R., Barry R. J., Denniston A. K. Readability assessment of online ophthalmic patient information. JAMA Ophthalmology. 2013;131(12):1610–1616. doi: 10.1001/jamaophthalmol.2013.5521.

- 6. Raj S., Sharma V. L., Singh A. J., Goel S. Evaluation of quality and readability of health information websites identified through India’s major search engines. Advances in Preventive Medicine. 2016;2016:4815285. doi: 10.1155/2016/4815285.

- 7. Memon M., Ginsberg L., Simunovic N., Ristevski B., Bhandari M., Kleinlugtenbelt Y. V. Quality of web-based information for the 10 most common fractures. Interactive Journal of Medical Research. 2016;5(2):e19. doi: 10.2196/ijmr.5767.

- 8. Grewal P., Alagaratnam S. The quality and readability of colorectal cancer information on the internet. International Journal of Surgery. 2013;11(5):410–413. doi: 10.1016/j.ijsu.2013.03.006.

- 9. Keogh C. J., McHugh S. M., Clarke Moloney M., et al. Assessing the quality of online information for patients with carotid disease. International Journal of Surgery. 2014;12(3):205–208. doi: 10.1016/j.ijsu.2013.12.011.

- 10. Agarwal N., Hansberry D. R., Sabourin V., Tomei K. L., Prestigiacomo C. J. A comparative analysis of the quality of patient education materials from medical specialties. JAMA Internal Medicine. 2013;173(13):1257–1259. doi: 10.1001/jamainternmed.2013.6060.

- 11. Mozaffarian D., Benjamin E. J., Go A. S., et al. Heart disease and stroke statistics—2016 update. Circulation. 2016;133(4):e338–e360. doi: 10.1161/CIR.0000000000000350.

- 12. Purcell K., Brenner J., Rainie L. Search Engine Use. Pew Research Center; 2012.

- 13. Aitken M., Altmann T., Rosen D. Engaging Patients through Social Media.

- 14. Flesch R. A new readability yardstick. Journal of Applied Psychology. 1948;32(3):221–233. doi: 10.1037/h0057532.

- 15. Kincaid J. P., Fishburne R. P., Jr., Rogers R. L., Chissom B. S. Research Branch Report. 56. Institute for Simulation and Training; 1975. Derivation of new readability formulas (automated readability index, fog count and flesch reading ease formula) for navy enlisted personnel. http://stars.library.ucf.edu/istlibrary/56.

- 16. Gunning R. The Technique of Clear Writing. 1952:36–37.

- 17. Coleman M., Liau T. L. A computer readability formula designed for machine scoring. Journal of Applied Psychology. 1975;60(2):283–284. doi: 10.1037/h0076540.

- 18. McLaughlin G. H. SMOG grading: a new readability formula. Journal of Reading. 1969;12(8):639–646.

- 19. National Institutes of Health. How to Write Easy-to-Read Health Materials [Internet]. Available from: https://medlineplus.gov/etr.html.

- 20. Readability-Score.com [Internet]. Available from: https://readability-score.com/.

- 21. Murero M., D'Ancona G., Karamanoukian H. Use of the Internet by patients before and after cardiac surgery: telephone survey. Journal of Medical Internet Research. 2001;3(3, article E27). doi: 10.2196/jmir.3.4.e28.

- 22. Jayaweera J. M. U., De Zoysa M. I. M. Quality of information available over internet on laparoscopic cholecystectomy. Journal of Minimal Access Surgery. 2016;12(4):321–324. doi: 10.4103/0972-9941.186691.

- 23. Tulbert B. H., Snyder C. W., Brodell R. T. Readability of patient-oriented online dermatology resources. Journal of Clinical and Aesthetic Dermatology. 2011;4(3).

- 24. Moody E. M., Clemens K. K., Storsley L., Waterman A., Parikh C. R., Garg A. X. Improving on-line information for potential living kidney donors. Kidney International. 2007;71(10):1062–1070. doi: 10.1038/sj.ki.5002168.

- 25. Küçükdurmaz F., Gomez M. M., Secrist E., Parvizi J. Reliability, readability and quality of online information about femoracetabular impingement. Archives of Bone and Joint Surgery. 2015;3(3):163–168.

- 26. Klil H., Chatton A., Zermatten A., Khan R., Preisig M., Khazaal Y. Quality of web-based information on obsessive compulsive disorder. Neuropsychiatric Disease and Treatment. 2013;9:1717–1723. doi: 10.2147/NDT.S49645.

- 27. Edmunds M. R., Denniston A. K., Boelaert K., Franklyn J. A., Durrani O. M. Patient information in Graves’ disease and thyroid-associated ophthalmopathy: readability assessment of online resources. Thyroid. 2014;24(1):67–72. doi: 10.1089/thy.2013.0252.

- 28. Hutchinson N., Baird G. L., Garg M. Examining the reading level of internet medical information for common internal medicine diagnoses. American Journal of Medicine. 2016;129(6):637–639. doi: 10.1016/j.amjmed.2016.01.008.

- 29. Berkman N. D., Dewalt D. A., Pignone M. P., et al. Literacy and health outcomes. Evidence Report/Technology Assessment. 2004;87:1–8.

- 30. Kutner M., Greenberg E., Jin Y., Paulsen C. The Health Literacy of America’s Adults: Results from the 2003 National Assessment of Adult Literacy. 2003.

- 31. Rudd R. E., Moeykens B. A., Colton T. C. Health and literacy: a review of medical and public health literature.

- 32. Carmona R. H. Health literacy: a national priority. Journal of General Internal Medicine. 2006;21(8):803. doi: 10.1111/j.1525-1497.2006.00569.x.

- 33. World Health Organization. Health Literacy and Health Behaviour. WHO; 2010.

- 34. Seubert D. Health Communications Toolkits: Improving Readability of Patient Education Materials [Internet]. Available from: www.ihi.org/resources/Pages/Tools/HealthCommunicationsToolkitsImprovingReadabilityPtEdMaterials.aspx.

- 35. Dalton D. M., Kelly E. G., Molony D. C. Availability of accessible and high-quality information on the Internet for patients regarding the diagnosis and management of rotator cuff tears. Journal of Shoulder and Elbow Surgery. 2015;24(5):e135–e140. doi: 10.1016/j.jse.2014.09.036.