Abstract

In dialogue, language processing is adapted to the conversational partner. We hypothesize that the brain facilitates partner-adapted language processing through preparatory neural configurations (task sets) that are tailored to the conversational partner. In this experiment, we measured neural activity with functional magnetic resonance imaging (fMRI) while healthy participants in the scanner (a) engaged in a verbal communication task with a conversational partner outside of the scanner, or (b) spoke outside of a conversational context (to test the microphone). Using multivariate searchlight analysis, we identify cortical regions that represent information on whether speakers plan to speak to a conversational partner or without having a partner. Most notably a region that has been associated with processing social-affective information and perspective taking, the ventromedial prefrontal cortex, as well as regions that have been associated with prospective task representation, the bilateral ventral prefrontal cortex, are involved in encoding the speaking condition. Our results suggest that speakers prepare, in advance of speaking, for the social context in which they will speak.

Keywords: mentalizing, multivariate decoding, neuroimaging, conversational interaction, task set

Introduction

When people speak, they generally have a conversational partner they speak to. Utterances are adapted to and shaped by the conversational partner (Clark and Murphy 1982; Clark and Carlson 1982; Bell 1984). Partner-adapted language processing has been associated with the skill of taking another person’s perspective (e.g. Clark, 1996; Krauss, 1987) or mentalizing (Frith and Frith 2006). Behavioral studies show that language users take into account generic information about their partner’s identity, for example, whether the partner is an adult or a child (Newman-Norlund et al. 2009), or a human or a computer (Brennan 1991). In addition, language users also adjust to specific, situational information about their conversational partner, for example, whether the partner is familiar with the topic (Galati and Brennan 2010), can see the object under discussion (Nadig and Sedivy 2002; Lockridge and Brennan 2002), or has a different spatial perspective (Schober 1993; Duran et al. 2011). Drawing upon these types of information, speakers appear to adapt utterances to the pragmatic needs of particular conversational partners (Lockridge and Brennan 2002; Hwang et al. 2015).

How is partner-adapted language processing achieved in the brain? One challenge for partner-adapted language processing is the need to respond rapidly and flexibly to the conversational partner (Tanenhaus and Brown-Schmidt 2008; Brennan and Hanna 2009; Pickering and Garrod 2013). Recent studies in cognitive neuroscience investigating how the brain performs visuo-motor or arithmetic tasks have shown that the brain supports rapid adaptation to the environmental context by pre-activating cortical structures that will be used in the upcoming task (Brass and von Cramon 2002; Forstmann et al. 2005; Sakai and Passingham 2006; Dosenbach et al. 2006; Haynes et al. 2007).

We propose that similar mechanisms are in place when conversing with a conversational partner. Specifically, the brain, being fundamentally proactive (Bar 2009; Van Berkum 2010; A. Clark 2013), may facilitate dialogue by anticipating and adapting in advance to a conversational partner through specialized task sets.

Task sets are preparatory neurocognitive states that represent the intention to perform an upcoming task (Bunge and Wallis 2007). While often tasks are executed immediately upon forming an intention, neuroimaging studies can detect task-specific configuration of neural activity while subjects maintain the intention to perform a particular action over a delay of up to 12 s (Haynes et al. 2007; Momennejad and Haynes 2012). This pre-task activity is assumed to reflect preparation for task performance (Sakai and Passingham 2003). Task sets are characterized by neural activity specific to the upcoming task, as well as by task-independent activity (Sakai 2008). Most notably the lateral prefrontal cortex is involved in preparing an upcoming task, namely the anterior prefrontal cortex (Sakai and Passingham 2003; Haynes et al. 2007), the left inferior frontal junction (Brass and von Cramon 2004), the right inferior frontal gyrus (ibid.), and the right intraparietal sulcus (ibid.).

Task set activations have also been observed in people forming the intention to speak: In anticipation of linguistic material for articulation, subjects activate the entire speech production network 2–4 s prior to speaking, including the frontopolar (BA 10) and anterior cingulate cortices, the supplementary motor areas (SMAs), the caudate nuclei, and the perisylvian regions along with Broca’s Area (Kell et al. 2011; Gehrig et al. 2012). However, these studies investigated speech production in settings that were isolated from conversational context (e.g. comparing trials in which text was read aloud vs read silently), so these results are not informative about language processing during communication.

Speaking in a realistic communicative context, and in particular, addressing a conversational partner, is likely to require a different kind of neuro-cognitive preparation than speaking without having a conversational partner. Indeed, behavioral and neuroscientific studies suggest profound differences in cognitive and neural processing in response to the social context (e.g. Lockridge and Brennan 2002; Pickering and Garrod 2004; Brown-Schmidt 2009; Kourtis et al. 2010; Kuhlen and Brennan 2013; Schilbach et al. 2013). For example, people’s eye gaze to a listener’s face differs when they believe the listener can hear vs can’t hear offensive comments (Crosby et al. 2008). And neurophysiological data suggest that when people engage in joint action (vs individual action), they represent in advance their partner’s actions in order to facilitate coordination (Kourtis et al. 2013).

Recent neuroimaging studies support the idea that brain mechanisms underlying linguistic aspects of speech production are distinct from those underlying communicative aspects (Sassa et al. 2007; Willems et al. 2010). These studies suggest that brain areas associated with the so-called ‘mentalizing network’ (Van Overwalle and Baetens 2009), most notably the medial prefrontal cortex, are implicated when speech is produced for the purpose of communicating (Sassa et al. 2007; Willems et al. 2010). While these studies have investigated language processing in a communicative setting, they have not disentangled the process of generating an intention to communicate from the process of generating the linguistic message itself. Experimental protocols developed for investigating task sets are well suited for addressing the question of how the brain prepares to communicate with a particular conversational partner (who), independent of preparing a particular linguistic content (what).

In sum, there is a need for neuroscientific studies on how language is processed in dialogue settings. With the present project, we investigate how advance information about the conversational partner may enable humans to flexibly adapt language processing to the conversational context. Specifically, as a first step towards understanding the neural basis of partner-adapted language processing, we investigate how the neural preparation associated with the task set of speaking to a conversational partner differs from the task set of speaking without having a conversational partner. Thus, by instructing participants to speak under one of two conditions we manipulated the conversational context: Participants were asked to use an ongoing live audiovisual stream to either (a) tell their partner outside of the scanner which action to execute in a spatial navigation task, or (b) to run ‘test trials,’ in which they spoke for the purpose of ‘calibrating’ the MRI microphone. The structure of the trials and the access to the live video stream, as well as the utterances participants were asked to produce, were virtually identical in both conditions; what varied was their expectation of interacting with a conversational partner. Our fMRI analysis focused on the phase in which participants form and maintain the intention to speak, whether to a particular partner or not (who), prior to their articulation of the utterance or monitoring of the partner's response. This allowed us to isolate neural responses associated with the social intention to communicate with a particular conversational partner from neural responses associated with planning an utterance (what). 
Data were analyzed using univariate analyses contrasting trials with respect to the activated task set (speaking to partner vs no partner) as well as multivariate pattern searchlight decoding across the whole brain, which has been shown to be more sensitive for decoding regional activation patterns associated with a particular task set (Haynes and Rees 2006; Haynes et al. 2007; Bode and Haynes 2009).

We expect that task sets associated with preparing to speak to a conversational partner will involve neural activity specific to and independent of the task domain (see e.g. Sakai and Passingham 2003; Sakai 2008). Task-independent activity is likely to involve neural structures commonly associated with task preparation and encoding future intentions, most notably the lateral prefrontal cortex (Sakai and Passingham 2003; Brass and von Cramon 2004; Haynes et al. 2007). Specific to the experimental task, we furthermore expect that speakers will consider the mental states of their conversational partner (mentalize) when intending to speak in a conversational setting (Brennan et al. 2010). Accordingly, we predict task-specific activity (i.e. in form of information about the conversational partner) to be encoded in areas associated with the ‘mentalizing network,’ in particular the medial prefrontal cortex (Sassa et al. 2007; Willems et al. 2010). Our study extends previous studies that associate the mentalizing network with communication (Sassa et al. 2007; Noordzij et al. 2009; Willems et al. 2010) and aims to identify brain areas that encode information about whether the speaker will address a conversational partner. Moreover, this study will shed light on how the brain adapts to the conversational context already in preparation for speaking. Evidence for such an early adaptation would be relevant to the question of when in the course of speech production information about the conversational partner is taken into account (see e.g. Barr and Keysar 2006; Brennan et al. 2010).

Materials and methods

Participants

Seventeen right-handed participants between the ages of 21 and 35 (7 males; mean age 27.18) were included in the analysis (one additional participant had to be excluded due to microphone failure). All participants were native speakers of German, had normal or corrected-to-normal vision, and had no reported history of neurological or psychiatric disorder. Participants gave informed consent and were compensated with €10 per hour. The study was approved by the local ethics committee of the Psychology Department of the Humboldt University of Berlin.

Design

There were two speaking conditions (partner vs calibration), the main manipulation of the experiment. To ensure that the classifier did not merely reflect neural activity coding low-level information about the visual cues, each speaking condition was associated with two visual cues, leading to a total of four cue conditions for each participant (cf. Reverberi et al. 2012; Wisniewski et al. 2016).

The fMRI experiment consisted of six runs of 30 trials each. Within each run, participants formed, maintained and executed the intention to speak for the purpose of either communicating to their conversational partner (12 trials) or calibrating the microphone (12 trials). Three additional trials in each condition (six trials in total) served as catch trials with shorter delays (see below) and were not analyzed. The order of conditions was randomized within a run, with the restriction that no more than three trials of one condition appeared in a row.
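The constrained randomization described above can be illustrated with a minimal sketch. It assumes that catch trials count toward their condition (15 trials per condition per run) and that the restriction was enforced by simple reshuffling; neither detail is stated in the text, and the function names `randomize_run` and `longest_streak` are hypothetical.

```python
import random

def longest_streak(seq):
    """Length of the longest run of identical neighbouring labels."""
    longest = current = 1
    for prev, cur in zip(seq, seq[1:]):
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest

def randomize_run(n_per_condition=15, max_streak=3, seed=None):
    """Reshuffle the trial labels until no condition repeats more than
    max_streak times in a row (rejection sampling, an assumed strategy)."""
    rng = random.Random(seed)
    trials = ["partner"] * n_per_condition + ["calibration"] * n_per_condition
    while True:
        rng.shuffle(trials)
        if longest_streak(trials) <= max_streak:
            return list(trials)

order = randomize_run(seed=0)
print(order[:6], "longest streak:", longest_streak(order))
```

Rejection sampling keeps every admissible order equally likely, which a greedy repair of an invalid sequence would not guarantee.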

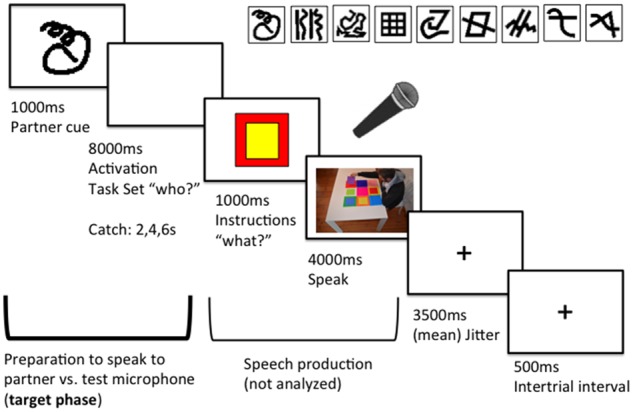

Procedure

In each trial, participants were presented with (1) an abstract cue for 1 s, informing them of the context they would be speaking in, followed by (2) a blank screen of 8 s during which they formed and maintained their intention to speak in the cued context (the who), (3) information about which action to mention (the what) for 1 s, (4) the onset of the live video stream, which connected the participant inside the scanner with their partner outside the scanner, stayed active for 4 s, and served as a prompt to speak and (5) a jitter with a mean duration of 3.5 s (range from 1.5 to 7.5 s, following roughly an exponential distribution) followed by a fixation cross as inter-trial interval of 0.5 s (see Figure 1). Each trial lasted on average 18 s. The total duration of one run was 516 s.
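As a rough check of the trial timing, the sketch below sums the fixed phase durations and draws jitter values from a shifted, truncated exponential distribution. The phase durations and the jitter range come from the description above; the sampling scheme and the `scale` value are assumptions chosen only so that the mean lands near 3.5 s.

```python
import random

# Fixed phase durations in seconds, taken from the trial description.
PHASES = {"cue": 1.0, "preparation": 8.0, "instruction": 1.0,
          "video": 4.0, "fixation": 0.5}
JITTER_MIN, JITTER_MAX, JITTER_MEAN = 1.5, 7.5, 3.5

def sample_jitter(rng, scale=2.8):
    """Draw one jitter value from an exponential shifted to JITTER_MIN,
    redrawing values above JITTER_MAX. `scale` is a hypothetical parameter."""
    while True:
        value = JITTER_MIN + rng.expovariate(1.0 / scale)
        if value <= JITTER_MAX:
            return value

# Nominal trial duration: fixed phases plus the mean jitter.
nominal_duration = sum(PHASES.values()) + JITTER_MEAN

rng = random.Random(1)
jitters = [sample_jitter(rng) for _ in range(1000)]
print("nominal trial duration (s):", nominal_duration)
print("sampled mean jitter (s):", sum(jitters) / len(jitters))
```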

Fig. 1.

A trial of the experiment. After the presentation of the cue (1 s), participants had 8 s to form and maintain the intention to speak in a particular context (preparation phase). Instructions on what to say were presented (1 s), followed by the onset of the live video stream (speech production phase, 4 s). A variable delay with a mean duration of 3.5 s and an inter-trial interval of 0.5 s ended the trial. Each trial lasted on average 18 s. The top right shows the set of nine possible cues, from which two were randomly chosen and associated with a given condition at the beginning of each experimental session.

In each run, six catch trials, with a shorter interval between the presentation of the cue and the instruction of the action (2 s or 4 s), made the onset of the video stream unpredictable and thus required participants to represent the cued partner immediately after cue presentation and maintain a state of readiness even across longer intervals (Sakai and Passingham 2003; Haynes et al. 2007).

Participants inside the scanner were instructed to ‘ready themselves to speak’ upon receiving the cue about the condition under which they would speak (who), until they received the information on which instructions to give (what). During communicative trials participants then spoke to their conversational partner (the experimenter, A.K., who was located outside of the scanner) via a real-time audiovisual interface, which transmitted one-way visual (partner to participant) and auditory (participant to partner) information. The participants’ task was to instruct their partner where to position small colored squares on a game board of large colored squares. Participants could speak to their conversational partner during scanning with the help of a noise-canceling MRI compatible microphone (FOMRI-II, Optoacoustics LTD), which reduces the noise during EPI acquisition. Through a live audiovisual stream participants were able to observe their partner acting upon their instructions in real time. The partner responded genuinely to participants’ instructions, occasionally responding incorrectly (e.g. if an instruction was misheard; see Kuhlen and Brennan 2013 on practices for using confederates as conversational partners). Participants were not able to correct these trials, but they commonly remarked upon them after the experiment.

For the non-communicative trials, participants were led to believe that the microphone needed periodic calibration to optimize its performance. Participants were instructed that in these ‘calibration’ trials the procedure would be identical with the only difference that their utterances would not be transmitted to their partner. In order to keep the visual input comparable between the two experimental conditions, the video stream was also active during non-communicative trials. However, the conversational partner did not react to (i.e. execute) participants’ instructions.

Participants were prompted to spontaneously use the same wording and syntactic forms (e.g. purple on red) in both conditions (for comparable procedures see e.g. Hanna and Brennan 2007; Kraljic and Brennan 2005). This allowed maximal experimental control, enabling a comparison of the effects of different social conditions on the neuro-cognitive processing of semantically and syntactically identical utterances.

Note that this procedure held visual input and spoken output during the speech production phase maximally comparable across experimental conditions; this was precautionary in case such information would influence participants’ preparation to speak in a given context. To further account for this possibility, speakers’ utterances were recorded to a sound file. After scanning, a student assistant, blind to the experimental condition, identified speech onset and duration using the computer program Praat (Boersma 2001), and tried to guess the experimental condition under which speakers had been speaking based on the recordings. These measures were used to examine whether utterances were produced differently in the two conditions (they were not, see ‘Results’). Note, however, that our main analyses aimed at the time period prior to speech production.

Before the experiment, participants were trained to associate the two experimental conditions with designated cues. Two abstract visual cues, randomly chosen for each participant from a set of nine possible cues, were associated with each condition. During training participants were presented with one of the four cues and had to select the speaking context (partner trials or calibration trials) associated with that cue. Training continued until the participant identified the context associated with a cue correctly at least eleven times in a row. Halfway through scanning, in between run 3 and run 4, cue training was repeated with the same completion criterion.

fMRI acquisition

Gradient-echo EPI functional MRI volumes were acquired with a Siemens TRIO 3 T scanner with standard head coil (33 slices, TR = 2000 ms, echo time TE = 30 ms, resolution 3 × 3 × 3.75 mm³ with 0.75 mm gap, FoV 192 × 192 mm). In each run, 258 images were acquired for each participant. The first three images were discarded to allow for magnetic saturation effects. For each participant, six runs of functional MRI were acquired. In addition, we acquired structural MRI data (T1-weighted MPRAGE: 192 sagittal slices, TR = 1900 ms, TE = 2.52 ms, flip angle = 9°, FoV = 256 mm).

fMRI preprocessing and analysis

Data were preprocessed using SPM8 (http://www.fil.ion.ucl.ac.uk/spm/). The functional images were slice-time corrected with reference to the first recorded slice and motion corrected. For the univariate analysis, the data were spatially smoothed with a Gaussian kernel of 6 mm FWHM. A general linear model (GLM) with eight HRF-convolved regressors was estimated separately for each voxel. Data were high-pass filtered with a cut-off period of 128 s. The first four regressors of the GLM estimated the response to the presentation of the four different cues. Two regressors estimated the response during preparation for the two speaking conditions. The last two regressors estimated the response during execution of the two speaking conditions; here, the trial-specific onset and duration of participants’ utterances were used in the model. The resultant contrast maps were normalized to a standard stereotaxic space (Montreal Neurological Institute EPI template) and re-sampled to an isotropic spatial resolution of 3 × 3 × 3 mm³. Finally, random effects general linear models were estimated across subjects.
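To make the notion of an HRF-convolved regressor concrete, the following sketch builds a boxcar for the 8 s preparation phase and convolves it with a double-gamma response function, sampled once per TR. It is an illustration only: the double-gamma shape is a common approximation and not necessarily identical to the SPM8 implementation, and the onsets and the function names `hrf` and `regressor` are hypothetical.

```python
import math

TR = 2.0  # seconds, from the acquisition parameters

def hrf(t):
    """Double-gamma response (assumed shape: peak near 5 s, late undershoot)."""
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0

def regressor(onsets, duration, n_scans, dt=0.1):
    """Convolve a boxcar (onsets and duration in s) with the HRF on a fine
    time grid and sample the result at the start of each scan."""
    n_fine = int(round(n_scans * TR / dt))
    boxcar = [0.0] * n_fine
    for onset in onsets:
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + duration) / dt)))
        for i in range(start, stop):
            boxcar[i] = 1.0
    kernel = [hrf(k * dt) * dt for k in range(int(32 / dt))]
    sampled = []
    for scan in range(n_scans):
        i = int(round(scan * TR / dt))
        n_terms = min(len(kernel), i + 1)
        sampled.append(sum(kernel[k] * boxcar[i - k] for k in range(n_terms)))
    return sampled

# Two hypothetical preparation phases starting 1 s after cue onset.
prep = regressor(onsets=[1.0, 19.0], duration=8.0, n_scans=20)
print([round(v, 3) for v in prep])
```

In a full design matrix, one such column would be built per regressor (cues, preparation, execution), with the execution regressors using each utterance's measured onset and duration.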

Next, a multivariate pattern analysis was performed to search for regions where the activity in distributed local voxel ensembles encoded whether participants prepared, or executed, the intention to speak to a conversational partner or for non-communicative purposes. For this, a GLM with eight regressors (see above) was calculated. This GLM was based on unsmoothed data to maximize the sensitivity for information encoded in fine-grained spatial voxel patterns (Kamitani and Tong 2005; Haynes and Rees 2005; Kriegeskorte et al. 2006; Haynes and Rees 2006; for a discussion see Swisher et al. 2010; Kamitani and Sawahata 2010; Op de Beeck 2010; Haynes 2015). In order to estimate the information encoded in spatially distributed response patterns at each brain location, we employed a ‘searchlight’ approach (Kriegeskorte et al. 2006; Haynes et al. 2007; Soon et al. 2008; Bode and Haynes 2009) that allowed the unbiased search for informative voxels across the whole brain. A spherical cluster of N surrounding voxels (c1…N) within a radius of four voxels was created around a voxel vi. The GLM parameter estimates for the two speaking conditions for these voxels were extracted and transformed into vectors for each condition for each run of each subject. These vectors represented the patterns of spatial response to the given condition from the chosen cluster of voxels. In the next step, multivariate pattern classification was used to assess whether information about the two conditions was encoded in the spatial response patterns. For this purpose, the pattern vectors from five of the six runs were assigned to a ‘training data set’ that was used to train a support vector pattern classifier (Muller et al. 2001) with a fixed regularization parameter C = 1. First, the support vector classifier was trained on these data to identify patterns corresponding to each of the two conditions (LIBSVM implementation, http://www.csie.ntu.edu.tw/∼cjlin/libsvm). 
Then it predicted independent data from the remaining run (test data set). Cross-validation (6-fold) was achieved by repeating this procedure independently, with each run acting as the test data set once, while the other runs were used as training data sets. This procedure prevented overfitting and ‘double dipping’ (Kriegeskorte et al. 2009). The classification accuracy, that is, the proportion of correctly predicted speaking conditions, was averaged across all six iterations and assigned to the central voxel vi of the cluster. It therefore reflected the fit of the prediction based on the given spatial activation patterns of this local cluster. Accuracy significantly above chance implied that the local cluster of voxels spatially encoded information about the two speaking conditions, whereas an accuracy at chance implied no information. The same analysis was then repeated with the next spherical cluster, created around the next spatial position at voxel vj. Again, an average accuracy for this cluster was extracted and assigned to the central voxel vj. By repeating this procedure for every voxel in the brain, a 3-dimensional map of accuracy values for each position was created for each subject. The resultant subject-wise accuracy maps were normalized to a standard stereotaxic space (Montreal Neurological Institute EPI template), re-sampled to an isotropic spatial resolution of 3 × 3 × 3 mm³ and smoothed with a Gaussian kernel of 6 mm FWHM using SPM8. Finally, a random effects analysis was conducted, computed on a voxel-by-voxel basis, to statistically test against chance (0.5) the accuracy for each position in the brain across all subjects (Haynes et al. 2007).
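The logic of one searchlight step with leave-one-run-out cross-validation can be sketched as follows. This is not the analysis code of the study: a nearest-centroid classifier stands in for the linear support vector machine (LIBSVM, C = 1) to keep the example dependency-free, the data are synthetic, and all function names are hypothetical.

```python
import random

def sphere_offsets(radius):
    """Integer voxel offsets (dx, dy, dz) within `radius` voxels of the center."""
    r = int(radius)
    return [(dx, dy, dz)
            for dx in range(-r, r + 1)
            for dy in range(-r, r + 1)
            for dz in range(-r, r + 1)
            if dx * dx + dy * dy + dz * dz <= radius * radius]

def predict_nearest_centroid(train, test_vector):
    """Stand-in classifier (NOT the study's SVM): return the label whose
    mean training pattern is closest to the test pattern."""
    centroids = {}
    for label in sorted({lab for _, lab in train}):
        vectors = [vec for vec, lab in train if lab == label]
        centroids[label] = [sum(col) / len(vectors) for col in zip(*vectors)]

    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda lab: sq_dist(centroids[lab], test_vector))

def leave_one_run_out_accuracy(patterns):
    """patterns[run][condition] holds the parameter estimates of one sphere.
    Each run serves as test set once; the other runs form the training set."""
    correct = total = 0
    for test_run in range(len(patterns)):
        train = [(vec, cond)
                 for run, by_cond in enumerate(patterns) if run != test_run
                 for cond, vec in by_cond.items()]
        for cond, vec in patterns[test_run].items():
            correct += predict_nearest_centroid(train, vec) == cond
            total += 1
    return correct / total

# Synthetic data only: six runs, one pattern per condition and run.
rng = random.Random(0)
n_voxels = len(sphere_offsets(4))

def fake_run(signal):
    return {"partner": [signal + rng.gauss(0, 0.5) for _ in range(n_voxels)],
            "calibration": [-signal + rng.gauss(0, 0.5) for _ in range(n_voxels)]}

informative = [fake_run(1.0) for _ in range(6)]
accuracy = leave_one_run_out_accuracy(informative)
print(n_voxels, "voxels per sphere; cross-validated accuracy:", accuracy)
```

In a whole-brain searchlight, the accuracy returned for each sphere would be written to the position of its central voxel, yielding one accuracy map per subject.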

Artifacts associated with speaking during fMRI measurement were isolated from neural responses associated with utterance planning by focusing the analysis on the time bins before participants spoke (i.e. when they were cued to form and maintain the intention to address the partner). Furthermore, our analysis approach modeled speech-related variance by adding regressors time-locked to participants’ speech production. Possible movement of participants between trials was minimized by instructing participants to speak with minimal head movements, and was otherwise addressed by preprocessing.

Results

Behavioral results: speech production onset and duration

From the 3060 utterances that entered our analyses, 46 utterances (i.e. <2% of all trials) deviated from the standard form (incomplete utterances, speech disfluencies, the use of fillers, or restarts). Participants’ utterances did not differ systematically between the two speaking conditions in speech onset (Mpartner = 1062.79, SDpartner = 177.49; Mmicrophone = 1071.04; SDmicrophone = 168.65; t(16)=-0.69; P = 0.5), or utterance duration (Mpartner = 906.7, SDpartner = 151.51; Mmicrophone = 876.73; SDmicrophone = 148.52; t(16)=1.06; P = 0.31). Furthermore, our rater was not able to guess the condition under which speakers had spoken beyond chance level (mean accuracy = 0.50; SD = 0.07; t(16)=0.17; P = 0.87; t-test was calculated across the 17 subjects included in the study).
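The t statistics reported here, with 16 degrees of freedom for 17 participants, follow the standard one-sample and paired formulas. The sketch below shows the computation on made-up numbers; the values are hypothetical and are not data from the study, and P values are omitted because they require the t distribution, which the Python standard library does not provide.

```python
import math
import statistics

def one_sample_t(values, popmean=0.0):
    """Return (t, df) for a one-sample t-test of `values` against `popmean`."""
    n = len(values)
    standard_error = statistics.stdev(values) / math.sqrt(n)
    return (statistics.mean(values) - popmean) / standard_error, n - 1

def paired_t(a, b):
    """Return (t, df) for a paired t-test, i.e. a one-sample test on differences."""
    return one_sample_t([x - y for x, y in zip(a, b)])

# Hypothetical per-participant values for illustration only.
onset_partner = [1050.0, 1100.0, 980.0, 1200.0, 1010.0]
onset_calibration = [1049.0, 1098.0, 977.0, 1196.0, 1005.0]
t_paired, df_paired = paired_t(onset_partner, onset_calibration)

# Rater accuracy tested against the chance level of 0.5.
rater_accuracy = [0.45, 0.55, 0.50, 0.40, 0.60]
t_chance, df_chance = one_sample_t(rater_accuracy, popmean=0.5)

print("paired:", round(t_paired, 3), "df =", df_paired)
print("vs chance:", round(t_chance, 3), "df =", df_chance)
```

The same one-sample test against 0.5, applied voxel by voxel to the subject-wise accuracy maps, corresponds to the group-level test described in the methods.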

Neuroimaging results: univariate analyses

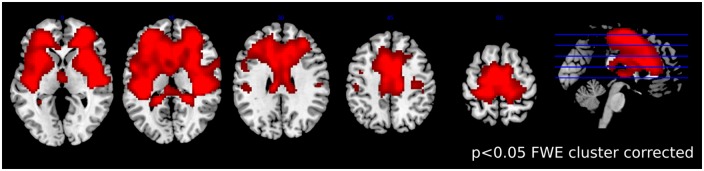

Taking the two speaking conditions together, the univariate analysis revealed a large network including the SMA (BA 6), the medial and bilateral frontopolar cortex (BA 10), and Broca’s Area (BA 45) (P < 0.001, FWE cluster corrected at P < 0.05; see Figure 2). However, the univariate analysis revealed no significant differences in the preparation phase between the two speaking conditions, even at an uncorrected threshold of P < 0.001.

Fig. 2.

Results of the univariate fMRI analyses comparing both experimental conditions together against the implicit baseline. Increased activity was found in a large network including the SMA (BA 6), the medial and bilateral frontopolar cortex (BA 10), and Broca’s region (BA 45). Displayed results are statistically significant, P < 0.001, FWE cluster corrected at P < 0.05.

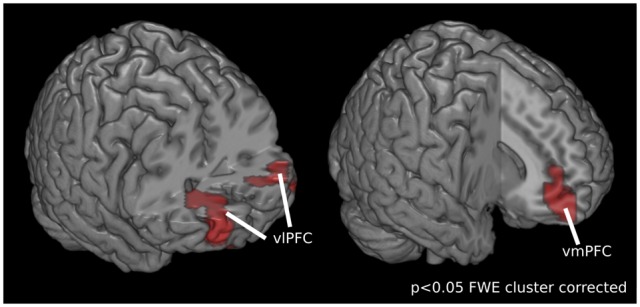

Neuroimaging results: multivariate decoding

Multivariate searchlight analysis of the preparatory phase showed that the ventral medial prefrontal cortex (vmPFC; BA 11, extending into BA 10 and BA 32) and the ventral bilateral prefrontal cortex (right vlPFC: BA 11, 47; left vlPFC: BA 46, extending into BA 10, BA 45 and BA 47) distinguished the two speaking conditions during the time period when participants formed and maintained the intention to speak in a particular context (P < 0.001, FWE cluster corrected at P < 0.05; see Figure 3 and Table 1).

Fig. 3.

Results of the multivariate searchlight analysis for the time period during which participants form and maintain the intention to speak in a particular context. The ventral medial prefrontal cortex (vmPFC; BA 11, extending into BA 10 and BA 32) and the ventral bilateral prefrontal cortex (right vlPFC: BA 11, 47; left vlPFC: BA 46, extending into BA 10, BA 45 and BA 47) encode information about the two speaking conditions. Displayed results are statistically significant, P < 0.001, FWE cluster corrected at P < 0.05.

Table 1.

Results of whole-brain multivariate searchlight analysis decoding preparation for speaking in a particular context

| Region | Brodmann Area | Cluster size | x | y | z | T score | Z score |
|---|---|---|---|---|---|---|---|
| Right vlPFC | 11 | 803 | 24 | 59 | −5 | 6.75 | 4.58 |
| | 47 | | 24 | 38 | −2 | 6.05 | 4.30 |
| vmPFC | 11 | | 15 | 53 | −11 | 5.68 | 4.14 |
| Left vlPFC | 46 | 379 | −48 | 50 | −5 | 5.50 | 4.06 |
| | | | −54 | 47 | −11 | 5.19 | 3.92 |
| | | | −57 | 41 | −20 | 4.44 | 3.53 |

Note: Coordinates are in Montreal Neurological Institute space. The t value listed for each area is the value for the maximally activated voxel in that area. Listed are statistically significant results, P < 0.05, FWE cluster corrected, P < 0.001 uncorrected. vlPFC = ventrolateral prefrontal cortex, vmPFC = ventromedial prefrontal cortex.

Multivariate searchlight analysis of the execution phase identified a large network of brain areas, covering most of the brain, that encode information about the speaking condition (see Figure 4). The wide spread of information is not surprising given that neural activity observed during the execution phase is likely to be contaminated by artifacts, such as physical motion and differences in visual feedback. Notably, however, the same areas that encoded information about the speaking condition during preparation also encoded information during speech execution (see areas highlighted in green in Figure 4).

Fig. 4.

Results of the multivariate searchlight analysis for the time period during which participants execute the intention to speak in a particular context. Note that during the speech production phase, results are likely to be affected by artifacts, such as physical motion and differences in visual feedback. Highlighted in green are those areas that encode information about the speaking condition during preparation as well as during speech execution. Displayed results are statistically significant, P < 0.001, FWE cluster corrected at P < 0.05.

Discussion

In this study, we identified multivariate differences in preparatory neural states associated with the intention to speak to a conversational partner and those associated with the intention to speak without having a conversational partner. Multivariate pattern analyses uncovered regions in the brain that encode information on the activated task set, most notably the ventromedial prefrontal and the ventral bilateral prefrontal cortex. These areas are likely to serve task-dependent as well as task-independent functions, as discussed presently. Our findings are in line with previous work suggesting that social context can have a significant influence on basic cognitive and neural processes (Lockridge and Brennan 2002; Pickering and Garrod 2004; Brown-Schmidt 2009; Kourtis et al. 2010; Kuhlen and Brennan 2013; Schilbach et al. 2013), including language-related processes (Nieuwland and Van Berkum 2006; Berkum 2008; Willems et al. 2010). Moreover, our study provides evidence that such an influence begins before speaking.

Numerous studies have associated the medial prefrontal cortex with perspective taking and the ability to take account of another person’s mental state (Amodio and Frith 2006; Frith and Frith 2007). Moreover, this area is said to play a central role in communication (Sassa et al. 2007; Willems et al. 2010). According to our data, the ventral part of the medial prefrontal cortex encodes information on whether or not the participant will speak to a conversational partner in the upcoming trial. This area corresponds most closely to the anatomical subdivision of the human ventral frontal cortex that has been labeled medial frontal pole (Neubert et al. 2014), or the anterior part of area 14 m (Mackey and Petrides 2014). Lesions to the ventromedial part of the mPFC are said to impair a person’s social interaction skills (Bechara et al. 2000; Moll et al. 2002). More specifically, a recent study on patients with damage to the vmPFC reports an inability of these patients to tailor communicative messages to generic characteristics of their conversational partner (being a child or an adult; Stolk et al. 2015). In particular, the latter study points towards a central role of this brain region in partner-adapted communication.

Although the brain area identified in our study is located in the ventral part of the medial prefrontal cortex, previous studies comparing communicative action to non-communicative action have reported areas located more dorsally (Sassa et al. 2007; Willems et al. 2010). A recent meta-analysis aimed at distinguishing the respective contributions of the dorsomedial and ventromedial prefrontal cortex to social cognition (Bzdok et al. 2013). According to this study, both regions are consistently associated with social, emotional and facial processing. But the study’s functional connectivity analyses linked the dorsal part of mPFC to more abstract or hypothetical perspective taking. In contrast, the vmPFC connected to areas associated with reward processing and a motivational assessment of social cues. For example, increased activity in the vmPFC observed when participants attended jointly with a partner to a visual stimulus has been associated with processing social meaning and its relevance to oneself (Schilbach et al. 2006). Along similar lines, the dmPFC has been associated with cognitive perspective taking, while the vmPFC has been associated with affective perspective taking (Hynes et al. 2006). Based on these proposals, the vmPFC may encode the affective or motivational value associated with interacting with a partner. Accordingly, our finding may reflect an affective evaluation of the participant’s intention to engage in social interaction. Such an evaluation need not be specific to communication and may generalize to other types of social interaction.

A somewhat different perspective was recently put forward by Welborn and Lieberman (2015). These researchers argue that activation of the dmPFC is elicited primarily by experimental tasks that require a ‘generic’ theory of mind (e.g. taking the perspective of imaginary or stereotypic characters). In contrast, in their fMRI study activation of the vmPFC was observed in tasks requiring person-specific mentalizing, which involved judging the idiosyncratic traits of a particular individual. Following this proposal, the ventrally located engagement of the mPFC observed in our study may have been triggered by having represented specific characteristics of the expected conversational partner. In our study, the conversational partner was very tangible due to the ongoing live video stream and could have therefore elicited a detailed partner representation (Sassa et al. 2007; Kuhlen and Brennan 2013). Taken together, the literature reviewed above suggests that the vmPFC processes social-affective information and could be involved in encoding social properties associated with speaking to a conversational partner, be it motivational aspects, or specific characteristics or needs of the partner.

Our results are relevant to an ongoing debate in the psycholinguistic literature on the time course in which a conversational partner is represented during language processing (Barr and Keysar 2006; Brennan et al. 2010). Some have argued that language processing routinely takes the conversational partner into account at an early point during language processing (Nadig and Sedivy 2002; Hanna et al. 2003; Metzing and Brennan 2003; Hanna and Tanenhaus 2004; Brennan and Hanna 2009). In contrast, others have argued that the conversational partner is not immediately taken into consideration and instead is considered only in a secondary process triggered by special cases of misunderstanding (Horton and Keysar 1996; Keysar et al. 1998; Ferreira and Dell 2000; Pickering and Garrod 2004; Barr and Keysar 2005; Kronmüller and Barr 2007). In support of the latter theory, a recent MEG study reports that, in listeners, brain areas associated with perspective taking (including the vmPFC) were engaged only after a speaker’s utterance had been completed, but not at a time point when listeners were anticipating their partner’s utterance (Bögels et al. 2015a). In contrast, our data suggest that, in speakers, information about the conversational partner (in this case, whether there is a conversational partner or not) is represented early in speech planning, that is, already prior to speaking. This pattern of results is in agreement with those theories assigning a prominent role to mentalizing in early stages of processing language in dialogue settings (H. Clark 1996; Nadig and Sedivy 2002; Hanna and Tanenhaus 2004; Frith and Frith 2006; Sassa et al. 2007; Brennan and Hanna 2009; Willems et al. 2010).

Our study does not determine precisely what was encoded about the conversational partner, since we manipulated only whether utterances were addressed to a conversational partner or to a microphone. Our experimental conditions may have differed in terms of the affective or motivational value associated with the particular speaking condition (as discussed earlier). Further studies investigating neural representations associated with multiple conversational partners with different characteristics, perspectives, or momentary informational states or needs would be required to provide insights into the exact nature of the partner representation.

Aside from the ventromedial prefrontal cortex, the ventral bilateral prefrontal cortex encoded information about the activated task set. The prefrontal cortex, and in particular the lateral prefrontal cortex, is said to play a central role in task preparation and the control of complex behavior (e.g. Sakai and Passingham 2003; Badre 2008) such as the retrieval and initiation of action sequences (Crone et al. 2006; Badre and D’Esposito 2007) and task hierarchies (Koechlin et al. 2003; Badre 2008). The areas identified in our study most closely correspond to a subdivision that has been labeled the ventrolateral frontal poles (Neubert et al. 2014). These areas overlap with those previously associated with encoding the outcome of future actions (e.g. Boorman et al. 2011), task set preparation (e.g. Sakai and Passingham 2003), encoding delayed intentions (e.g. Momennejad and Haynes 2012) and complex rules (e.g. Reverberi et al. 2012). While more commonly right-lateralized (ibid.), future intentions to perform a particular task have also been decoded from the left lateral frontopolar cortex (Haynes et al. 2007).

Alternatively, linguistic features of the upcoming task may have contributed to the left-lateralized cluster (which touches on BA 45 and BA 47, and could involve Broca’s area), and also to the right-lateralized cluster (compare Kell et al. 2011 for bilateral frontal activation during speech preparation). This would suggest that these areas assist in preparing in advance different linguistic adaptations in response to speaking to a partner. However, an involvement of these areas was observed at a time point at which speakers did not know the exact content of the utterances they would be producing. Furthermore, our behavioral analyses suggest that the surface structure of the produced utterances is quite comparable between the two conditions. The closer overlap with neural structures previously reported when preparing to execute a diverse range of tasks (e.g. memory tasks; color, numerical or linguistic judgments; arithmetic operations) suggests that these brain areas rather support a task-independent function.

Taken together, the involvement of the ventral bilateral frontal cortex supports the growing literature pointing to the functional role of this area (sometimes also labeled orbitofrontal cortex) in goal-directed behavior and in mapping representations of a given task state (Wilson et al. 2014; Stalnaker et al. 2015). In the context of our study, these areas may represent task-independent activity associated with maintaining the intention to perform an upcoming task.

Notably, brain regions that encoded information about the task set (i.e. whether speakers would be addressing a partner or not) during the preparation phase were also instrumental during the execution phase. Our findings are in line with the common understanding of task sets (e.g. Sakai 2008) as facilitating task performance by representing the operations that are necessary for generating the final response. In the context of our task, task-specific activity, such as mentalizing, is instrumental not only when planning to address a conversational partner but also in the process of speaking to a conversational partner.

A multivariate searchlight analysis approach to analyzing fMRI data yielded significant findings between our two speaking conditions, even though a more traditional univariate approach did not. In contrast to univariate analysis, which considers each voxel separately and is limited to revealing differences in average activation, multivariate analysis enables insight into information that can be detected only when taking several voxels into account (Haynes and Rees 2006; Norman et al. 2006). Previous studies have successfully employed multivariate analyses to decode the content of specific mental states and have pointed towards an increased sensitivity of this approach (Kamitani and Tong 2005; Haynes and Rees 2006; Haynes et al. 2007; Bode and Haynes 2009; Gilbert 2011).
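The difference between the two approaches can be illustrated with a toy simulation. The following Python sketch is not the analysis pipeline used in this study; it assumes a hypothetical region of 20 voxels in which the two speaking conditions evoke opposite-signed patterns of equal average amplitude, and it substitutes a simple nearest-centroid classifier for whatever classifier a real searchlight analysis would use. All names and parameter values (`run_demo`, `effect`, `noise_sd`) are illustrative assumptions.

```python
import random


def mean(values):
    """Arithmetic mean of a non-empty sequence of numbers."""
    values = list(values)
    return sum(values) / len(values)


def simulate_trials(pattern, n_trials, noise_sd, rng):
    """Return n_trials noisy copies of a voxel pattern (one list per trial)."""
    return [[v + rng.gauss(0.0, noise_sd) for v in pattern] for _ in range(n_trials)]


def centroid(trials):
    """Mean pattern across trials, one value per voxel."""
    return [mean(column) for column in zip(*trials)]


def squared_distance(a, b):
    """Squared Euclidean distance between two voxel patterns."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def run_demo(seed=0, n_voxels=20, n_trials=40, effect=0.3, noise_sd=1.0):
    """Compare a region-average summary with pattern-based decoding."""
    rng = random.Random(seed)

    # Hypothetical patterns: the same voxels respond with opposite signs in
    # the two conditions, so the average over voxels is identical (zero).
    partner = [effect if i % 2 == 0 else -effect for i in range(n_voxels)]
    no_partner = [-v for v in partner]

    # Independent training and test trials for each condition.
    train_a = simulate_trials(partner, n_trials, noise_sd, rng)
    train_b = simulate_trials(no_partner, n_trials, noise_sd, rng)
    test_a = simulate_trials(partner, n_trials, noise_sd, rng)
    test_b = simulate_trials(no_partner, n_trials, noise_sd, rng)

    # Average-activation summary: mean over all voxels and trials.
    mean_a = mean(v for trial in train_a + test_a for v in trial)
    mean_b = mean(v for trial in train_b + test_b for v in trial)
    mean_diff = mean_a - mean_b

    # Pattern-based decoding: assign each test trial to the nearer
    # training centroid and count correct assignments.
    centroid_a, centroid_b = centroid(train_a), centroid(train_b)
    correct = sum(
        squared_distance(t, centroid_a) < squared_distance(t, centroid_b)
        for t in test_a
    )
    correct += sum(
        squared_distance(t, centroid_b) < squared_distance(t, centroid_a)
        for t in test_b
    )
    accuracy = correct / (len(test_a) + len(test_b))
    return mean_diff, accuracy


if __name__ == "__main__":
    diff, acc = run_demo()
    print(f"difference in average activation: {diff:.3f}")
    print(f"pattern decoding accuracy: {acc:.2f}")
```

Under these assumptions, the average activation of the region is expected to differ little between conditions, whereas decoding accuracy on held-out trials should lie well above the 50% chance level. In a searchlight analysis, a comparable cross-validated classification would be repeated for a small sphere of voxels centered on each voxel in turn.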

Nevertheless, the univariate analysis did reveal a large network of brain areas active during task preparation when comparing both speaking conditions together against baseline. The activation pattern we find in this analysis overlaps in large parts with those reported in studies on neural preparation for speech production (Kell et al. 2011; Gehrig et al. 2012). This suggests that both speaking conditions recruited the general network associated with anticipating linguistic material for articulation. Remarkably, our multivariate analysis provided the additional insight that distinct brain areas, most notably the vmPFC, encode information about whether speakers expect to speak to a conversational partner.

With the current study, we make an advance towards bringing spontaneous spoken dialogue into an fMRI setting. Our results suggest profound processing differences when speakers address a conversational partner who has genuine informational needs and responds online to the speakers’ utterances, compared to when speakers speak for a non-communicative purpose such as testing a microphone. Yet our experimental setting falls short of interactive dialogue ‘in the wild’ in important ways. Most notably, communicative exchanges did not offer the opportunity to interactively establish shared understanding since speakers were not allowed to repair utterances in response to the addressee’s misunderstanding. Also, the conversational roles of being a speaker vs being the addressee, as well as the linguistic output itself, were constrained by the setting (but this is not so different from routine types of dialogic exchanges, e.g. question-answer scenarios such as during an interview or quiz, see e.g. Bögels et al. 2015b; Bašnáková et al. 2015). Despite these limitations, we believe our study takes a first step towards understanding how the brain may facilitate partner-adapted language processing through specific neural configurations, in advance of speaking.

Funding

This work was supported by the German Federal Ministry of Education and Research (Bernstein Center for Computational Neuroscience, 01GQ1001C), the Deutsche Forschungsgemeinschaft (SFB 940/1/2, EXC 257, KFO 247, DIP JA 945/3-1), and the European Regional Development Funds (10153458 and 10153460). This material is based on work done while A.K. was funded by the Berlin School of Mind and Brain, Humboldt-Universität zu Berlin (German Research Foundation, Excellence Initiative GSC 86/3); and while S.B. was serving at the National Science Foundation. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Conflict of interest. None declared.

References

- Amodio D.M., Frith C.D. (2006). Meeting of minds: the medial frontal cortex and social cognition. Nature Reviews Neuroscience, 7, 268–77.

- Badre D. (2008). Cognitive control, hierarchy, and the rostro-caudal organization of the frontal lobes. Trends in Cognitive Sciences, 12, 193–200.

- Badre D., D’Esposito M. (2007). Functional magnetic resonance imaging evidence for a hierarchical organization of the prefrontal cortex. Journal of Cognitive Neuroscience, 19, 2082–99.

- Bar M. (2009). The proactive brain: memory for predictions. Philosophical Transactions of the Royal Society B: Biological Sciences, 364, 1235–43.

- Barr D.J., Keysar B. (2005). Mindreading in an exotic case: the normal adult human. In: Malle B.F., Hodges S.D., editors. Other Minds: How Humans Bridge the Divide between Self and Others. New York: Guilford Press, p. 271–83. Available: http://eprints.gla.ac.uk/45611/ [September 21, 2015].

- Barr D.J., Keysar B. (2006). Perspective taking and the coordination of meaning in language use. In: Traxler M.J., Gernsbacher M.A., editors. Handbook of Psycholinguistics, 2nd edn. Amsterdam, Netherlands: Elsevier, p. 901–38. Available: http://eprints.gla.ac.uk/45610/ [April 21, 2016].

- Bašnáková J., van Berkum J., Weber K., Hagoort P. (2015). A job interview in the MRI scanner: how does indirectness affect addressees and overhearers? Neuropsychologia, 76, 79–91.

- Bechara A., Tranel D., Damasio H. (2000). Characterization of the decision-making deficit of patients with ventromedial prefrontal cortex lesions. Brain, 123 (Pt 11), 2189–202.

- Bell A. (1984). Language style as audience design. Language in Society, 13, 145–204.

- Van Berkum J.J.A. (2008). Understanding sentences in context: what brain waves can tell us. Current Directions in Psychological Science, 17, 376–80.

- Bode S., Haynes J.-D. (2009). Decoding sequential stages of task preparation in the human brain. NeuroImage, 45, 606–13.

- Boersma P. (2001). Praat, a system for doing phonetics by computer. Glot International, 5, 341–5.

- Bögels S., Barr D.J., Garrod S., Kessler K. (2015a). Conversational interaction in the scanner: mentalizing during language processing as revealed by MEG. Cerebral Cortex, 25, 3219–34.

- Bögels S., Magyari L., Levinson S.C. (2015b). Neural signatures of response planning occur midway through an incoming question in conversation. Scientific Reports, 5, 12881.

- Boorman E.D., Behrens T.E., Rushworth M.F. (2011). Counterfactual choice and learning in a neural network centered on human lateral frontopolar cortex. PLOS Biology, 9, e1001093.

- Brass M., von Cramon D.Y. (2002). The role of the frontal cortex in task preparation. Cerebral Cortex, 12, 908–14.

- Brass M., von Cramon D.Y. (2004). Decomposing components of task preparation with functional magnetic resonance imaging. Journal of Cognitive Neuroscience, 16, 609–20.

- Brennan S.E. (1991). Conversation with and through computers. User Modeling and User-Adapted Interaction, 1, 67–86.

- Brennan S.E., Galati A., Kuhlen A.K. (2010). Two minds, one dialogue: coordinating speaking and understanding. In: Psychology of Learning and Motivation, Vol. 53. New York, NY: Elsevier, p. 301–44. Available: http://linkinghub.elsevier.com/retrieve/pii/S0079742110530081 [September 21, 2015].

- Brennan S.E., Hanna J.E. (2009). Partner-specific adaptation in dialog. Topics in Cognitive Science, 1, 274–91.

- Brown-Schmidt S. (2009). Partner-specific interpretation of maintained referential precedents during interactive dialog. Journal of Memory and Language, 61, 171–90.

- Bunge S.A., Wallis J.D., editors (2007). Neuroscience of Rule-Guided Behavior. New York: Oxford University Press. Available: http://www.oxfordscholarship.com/view/10.1093/acprof:oso/9780195314274.001.0001/acprof-9780195314274 [September 21, 2015].

- Bzdok D., Langner R., Schilbach L., et al. (2013). Segregation of the human medial prefrontal cortex in social cognition. Frontiers in Human Neuroscience, 7. Available: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3665907/ [September 21, 2015].

- Clark A. (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behavioral and Brain Sciences, 36, 181–204.

- Clark H.H. (1996). Using Language. Cambridge: Cambridge University Press.

- Clark H.H., Carlson T.B. (1982). Context for comprehension. In: Long J., Baddeley A., editors. Categorization and Cognition. Hillsdale, NJ: Erlbaum, p. 313–30.

- Clark H.H., Murphy G.L. (1982). Audience design in meaning and reference. In: Le Ny J.-F., Kintsch W., editors. Advances in Psychology, Vol. 9. New York: North-Holland, p. 287–99. Available: http://www.sciencedirect.com/science/article/pii/S0166411509600595 [September 21, 2015].

- Crone E.A., Wendelken C., Donohue S.E., Bunge S.A. (2006). Neural evidence for dissociable components of task-switching. Cerebral Cortex, 16, 475–86.

- Crosby J.R., Monin B., Richardson D. (2008). Where do we look during potentially offensive behavior? Psychological Science, 19, 226–8.

- Dosenbach N.U.F., Visscher K.M., Palmer E.D., et al. (2006). A core system for the implementation of task sets. Neuron, 50, 799–812.

- Duran N.D., Dale R., Kreuz R.J. (2011). Listeners invest in an assumed other’s perspective despite cognitive cost. Cognition, 121, 22–40.

- Ferreira V.S., Dell G.S. (2000). Effect of ambiguity and lexical availability on syntactic and lexical production. Cognitive Psychology, 40, 296–340.

- Forstmann B.U., Brass M., Koch I., von Cramon D.Y. (2005). Internally generated and directly cued task sets: an investigation with fMRI. Neuropsychologia, 43, 943–52.

- Frith C.D., Frith U. (2006). The neural basis of mentalizing. Neuron, 50, 531–4.

- Frith C.D., Frith U. (2007). Social cognition in humans. Current Biology, 17, R724–32.

- Galati A., Brennan S.E. (2010). Attenuating information in spoken communication: for the speaker, or for the addressee? Journal of Memory and Language, 62, 35–51.

- Gehrig J., Wibral M., Arnold C., Kell C.A. (2012). Setting up the speech production network: how oscillations contribute to lateralized information routing. Frontiers in Psychology, 3. Available: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3368321/ [September 21, 2015].

- Gilbert S.J. (2011). Decoding the content of delayed intentions. Journal of Neuroscience, 31, 2888–94.

- Hanna J.E., Brennan S.E. (2007). Speakers’ eye gaze disambiguates referring expressions early during face-to-face conversation. Journal of Memory and Language, 57, 596–615.

- Hanna J.E., Tanenhaus M.K. (2004). Pragmatic effects on reference resolution in a collaborative task: evidence from eye movements. Cognitive Science, 28, 105–15.

- Hanna J., Tanenhaus M., Trueswell J. (2003). The effects of common ground and perspective on domains of referential interpretation. Journal of Memory and Language, 49, 43–61.

- Haynes J.-D. (2015). A primer on pattern-based approaches to fMRI: principles, pitfalls, and perspectives. Neuron, 87, 257–70.

- Haynes J.-D., Rees G. (2005). Predicting the stream of consciousness from activity in human visual cortex. Current Biology, 15, 1301–7.

- Haynes J.-D., Rees G. (2006). Decoding mental states from brain activity in humans. Nature Reviews Neuroscience, 7, 523–34.

- Haynes J.-D., Sakai K., Rees G., Gilbert S., Frith C., Passingham R.E. (2007). Reading hidden intentions in the human brain. Current Biology, 17, 323–8.

- Horton W.S., Keysar B. (1996). When do speakers take into account common ground? Cognition, 59, 91–117.

- Hwang J., Brennan S.E., Huffman M.K. (2015). Phonetic adaptation in non-native spoken dialogue: effects of priming and audience design. Journal of Memory and Language, 81, 72–90.

- Hynes C.A., Baird A.A., Grafton S.T. (2006). Differential role of the orbital frontal lobe in emotional versus cognitive perspective-taking. Neuropsychologia, 44, 374–83.

- Kamitani Y., Sawahata Y. (2010). Spatial smoothing hurts localization but not information: pitfalls for brain mappers. NeuroImage, 49, 1949–52.

- Kamitani Y., Tong F. (2005). Decoding the visual and subjective contents of the human brain. Nature Neuroscience, 8, 679–85.

- Kell C.A., Morillon B., Kouneiher F., Giraud A.-L. (2011). Lateralization of speech production starts in sensory cortices: a possible sensory origin of cerebral left dominance for speech. Cerebral Cortex, 21, 932–7.

- Keysar B., Barr D.J., Horton W.S. (1998). The egocentric basis of language use: insights from a processing approach. Current Directions in Psychological Science, 7, 46–9.

- Koechlin E., Ody C., Kouneiher F. (2003). The architecture of cognitive control in the human prefrontal cortex. Science, 302, 1181–5.

- Kourtis D., Sebanz N., Knoblich G. (2010). Favouritism in the motor system: social interaction modulates action simulation. Biology Letters, 6, 758–61.

- Kourtis D., Sebanz N., Knoblich G. (2013). Predictive representation of other people’s actions in joint action planning: an EEG study. Social Neuroscience, 8, 31–42.

- Kraljic T., Brennan S.E. (2005). Prosodic disambiguation of syntactic structure: for the speaker or for the addressee? Cognitive Psychology, 50, 194–231.

- Krauss R.M. (1987). The role of the listener: addressee influences on message formulation. Journal of Language and Social Psychology, 6, 81–98.

- Kriegeskorte N., Goebel R., Bandettini P. (2006). Information-based functional brain mapping. Proceedings of the National Academy of Sciences USA, 103, 3863–8.

- Kriegeskorte N., Simmons W.K., Bellgowan P.S.F., Baker C.I. (2009). Circular analysis in systems neuroscience: the dangers of double dipping. Nature Neuroscience, 12, 535–40.

- Kronmüller E., Barr D.J. (2007). Perspective-free pragmatics: broken precedents and the recovery-from-preemption hypothesis. Journal of Memory and Language, 56, 436–55.

- Kuhlen A.K., Brennan S.E. (2013). Language in dialogue: when confederates might be hazardous to your data. Psychonomic Bulletin & Review, 20, 54–72.

- Lockridge C.B., Brennan S.E. (2002). Addressees’ needs influence speakers’ early syntactic choices. Psychonomic Bulletin & Review, 9, 550–7.

- Mackey S., Petrides M. (2014). Architecture and morphology of the human ventromedial prefrontal cortex. European Journal of Neuroscience, 40, 2777–96.

- Metzing C., Brennan S. (2003). When conceptual pacts are broken: partner-specific effects on the comprehension of referring expressions. Journal of Memory and Language, 49, 201–13.

- Moll J., de Oliveira-Souza R., Eslinger P.J., et al. (2002). The neural correlates of moral sensitivity: a functional magnetic resonance imaging investigation of basic and moral emotions. Journal of Neuroscience, 22, 2730–6.

- Momennejad I., Haynes J.-D. (2012). Human anterior prefrontal cortex encodes the “what” and “when” of future intentions. NeuroImage, 61, 139–48.

- Muller K., Mika S., Ratsch G., Tsuda K., Scholkopf B. (2001). An introduction to kernel-based learning algorithms. IEEE Transactions on Neural Networks, 12, 181–201.

- Nadig A.S., Sedivy J.C. (2002). Evidence of perspective-taking constraints in children’s on-line reference resolution. Psychological Science, 13, 329–36.

- Neubert F.-X., Mars R.B., Thomas A.G., Sallet J., Rushworth M.F.S. (2014). Comparison of human ventral frontal cortex areas for cognitive control and language with areas in monkey frontal cortex. Neuron, 81, 700–13.

- Newman-Norlund S.E., Noordzij M.L., Newman-Norlund R.D., et al. (2009). Recipient design in tacit communication. Cognition, 111, 46–54.

- Nieuwland M.S., Van Berkum J.J.A. (2006). Individual differences and contextual bias in pronoun resolution: evidence from ERPs. Brain Research, 1118, 155–67.

- Noordzij M.L., Newman-Norlund S.E., de Ruiter J.P., Hagoort P., Levinson S.C., Toni I. (2009). Brain mechanisms underlying human communication. Frontiers in Human Neuroscience, 3, 14.

- Norman K.A., Polyn S.M., Detre G.J., Haxby J.V. (2006). Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends in Cognitive Sciences, 10, 424–30.

- Op de Beeck H.P. (2010). Against hyperacuity in brain reading: spatial smoothing does not hurt multivariate fMRI analyses? NeuroImage, 49, 1943–8.

- Pickering M.J., Garrod S. (2004). Toward a mechanistic psychology of dialogue. Behavioral and Brain Sciences, 27, 169–90.

- Pickering M.J., Garrod S. (2013). An integrated theory of language production and comprehension. Behavioral and Brain Sciences, 36, 329–47.

- Reverberi C., Görgen K., Haynes J.-D. (2012). Compositionality of rule representations in human prefrontal cortex. Cerebral Cortex, 22, 1237–46.

- Sakai K. (2008). Task set and prefrontal cortex. Annual Review of Neuroscience, 31, 219–45.

- Sakai K., Passingham R.E. (2003). Prefrontal interactions reflect future task operations. Nature Neuroscience, 6, 75–81.

- Sakai K., Passingham R.E. (2006). Prefrontal set activity predicts rule-specific neural processing during subsequent cognitive performance. Journal of Neuroscience, 26, 1211–8.

- Sassa Y., Sugiura M., Jeong H., Horie K., Sato S., Kawashima R. (2007). Cortical mechanism of communicative speech production. NeuroImage, 37, 985–92.

- Schilbach L., Timmermans B., Reddy V., et al. (2013). Toward a second-person neuroscience. Behavioral and Brain Sciences, 36, 393–414.

- Schilbach L., Wohlschlaeger A.M., Kraemer N.C., et al. (2006). Being with virtual others: neural correlates of social interaction. Neuropsychologia, 44, 718–30.

- Schober M.F. (1993). Spatial perspective-taking in conversation. Cognition, 47, 1–24.

- Soon C.S., Brass M., Heinze H.-J., Haynes J.-D. (2008). Unconscious determinants of free decisions in the human brain. Nature Neuroscience, 11, 543–5.

- Stalnaker T.A., Cooch N.K., Schoenbaum G. (2015). What the orbitofrontal cortex does not do. Nature Neuroscience, 18, 620–7.

- Stolk A., D’Imperio D., di Pellegrino G., Toni I. (2015). Altered communicative decisions following ventromedial prefrontal lesions. Current Biology, 25, 1469–74.

- Swisher J.D., Gatenby J.C., Gore J.C., et al. (2010). Multiscale pattern analysis of orientation-selective activity in the primary visual cortex. Journal of Neuroscience, 30, 325–30.

- Tanenhaus M.K., Brown-Schmidt S. (2008). Language processing in the natural world. Philosophical Transactions of the Royal Society B: Biological Sciences, 363, 1105–22.

- Van Berkum J.J.A. (2010). The brain is a prediction machine that cares about good and bad: any implications for neuropragmatics? Italian Journal of Linguistics, 22, 181–208.

- Van Overwalle F., Baetens K. (2009). Understanding others’ actions and goals by mirror and mentalizing systems: a meta-analysis. NeuroImage, 48, 564–84.

- Welborn B.L., Lieberman M.D. (2015). Person-specific theory of mind in medial pFC. Journal of Cognitive Neuroscience, 27, 1–12.

- Willems R.M., de Boer M., de Ruiter J.P., Noordzij M.L., Hagoort P., Toni I. (2010). A dissociation between linguistic and communicative abilities in the human brain. Psychological Science, 21, 8–14.

- Wilson R.C., Takahashi Y.K., Schoenbaum G., Niv Y. (2014). Orbitofrontal cortex as a cognitive map of task space. Neuron, 81, 267–79.

- Wisniewski D., Goschke T., Haynes J.-D. (2016). Similar coding of freely chosen and externally cued intentions in a fronto-parietal network. NeuroImage, 134, 450–8.