Abstract

Humans may cooperate strategically, cooperating at higher levels than expected from their short-term interests, to try to stimulate others to cooperate. To test this hypothesis, we experimentally manipulated the extent to which an individual's behaviour is known to others, and hence whether or not strategic cooperation is possible. In contrast with many previous studies, we avoided confounding factors by preventing individuals from learning during the game about either pay-offs or how other individuals behave. We found clear evidence for strategic cooperators: just telling some individuals that their groupmates would be informed about their behaviour led to them tripling their initial level of cooperation, from 17 to 50%. We also found that many individuals play as if they do not understand the game, and their presence obscures the detection of strategic cooperation. Identifying such players allowed us to detect and study strategic motives for cooperation in novel, more powerful ways.

Keywords: altruism, confusion, signalling, rationality, reputation

1. Introduction

Experiments using economic games have shown that humans routinely cooperate, sacrificing personal earnings in ways that benefit others [1,2]. A key question is the extent to which human cooperation is driven by a concern for the welfare of others, versus a strategic concern to increase personal success. For example, individuals may be motivated by a concern for fairness and make decisions that attempt to achieve more equitable outcomes [3–5]. Alternatively, individuals may play strategically, and initially invest in helping others if they think this will lead to greater help in return [6]. For example, if some people increase their level of cooperation in response to the cooperation of others, then individuals can be favoured to strategically cooperate, to induce this response from partners [6–23]. While many experiments suggest fairness is important ([1–5], cf. [24]), tests for the importance of strategic cooperation have produced mixed results [23,25–34].

The lack of clear experimental support for strategic cooperation may reflect how it has been tested for, rather than whether it occurs. One issue is that some studies have focused on levels of cooperation over time, which can confound strategic cooperation with learning about pay-offs and/or the behaviour of others [25,26,30]. One solution is to deprive individuals of information during a repeated version of the game. Another method is to focus upon initial levels of cooperation, before individuals can be influenced by the behaviour of others. Here, we use both of these methods.

Another potential problem is that variation between individuals might obscure strategic cooperation. For example, if a fraction of individuals are confused about the game's pay-offs, then their motivations will be hard to interpret [24]. The presence of such ‘irrational’ players will obscure strategic cooperation at the aggregate level. One way to ameliorate this problem is to focus upon individual decisions to identify and control for such ‘irrational’ players [23,35] (individuals may also play irrationally for a number of other reasons, including boredom, inattention and a desire to please or out-smart the experimenter).

Our experiment allowed us to first identify whether individuals were playing irrationally, and then test whether they cooperated strategically [6]. We measured cooperation as the value of voluntary contribution towards a public good that was personally costly but beneficial for the group [36,37]. In this game, the strategy that would give the greatest pay-off in a single round is to contribute nothing. We tested for irrationality by first making all individuals play one round of this public goods game with computerized groupmates. Individuals should have no concern for the welfare of the computer, and so, if they understand the game, rational players will contribute nothing to the public good. By contrast, individuals that cooperate with the computer are behaving irrationally within the context of the game. We then examined how players cooperated with humans depending on whether they played irrationally and whether their behaviour was visible to their groupmates. If individuals cooperate strategically, then we expect them to cooperate more when told that their behaviour will be visible, and thus will be able to influence their groupmates' decisions.
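The pay-off logic described above can be illustrated with a minimal sketch of a linear public goods game. The endowment of 20 MU and the group size of four come from the text; the multiplier `MULTIPLIER` and the function names are assumptions introduced purely for illustration and are not taken from this section. As long as each MU contributed returns less than 1 MU to the contributor, contributing nothing maximizes the single-round pay-off, even though the group as a whole earns most when everyone contributes fully.

```python
# Sketch of a linear public goods game pay-off (illustrative parameters).
ENDOWMENT_MU = 20   # from the text: contributions range from 0 to 20 MU
GROUP_SIZE = 4      # from the text: groups of four
MULTIPLIER = 1.6    # ASSUMPTION: hypothetical value, not given in this section


def payoff(own, others):
    """Single-round pay-off (MU) for a player contributing `own`."""
    if len(others) != GROUP_SIZE - 1:
        raise ValueError("expected contributions from three groupmates")
    if not 0 <= own <= ENDOWMENT_MU:
        raise ValueError("contribution must lie between 0 and 20 MU")
    pot = own + sum(others)
    # Keep what was not contributed, plus an equal share of the multiplied pot.
    return ENDOWMENT_MU - own + MULTIPLIER * pot / GROUP_SIZE


def best_single_round_contribution(others):
    """Contribution (0-20 MU) that maximizes the player's own pay-off."""
    return max(range(ENDOWMENT_MU + 1), key=lambda c: payoff(c, others))
```

Under these assumed parameters, `best_single_round_contribution` returns 0 whatever the groupmates contribute, which is the sense in which any positive contribution is costly in a single round.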

2. Material and methods

(a). Participants

The data presented here were collected from 288 participants across 12 sessions at the Centre for Experimental Social Science (CESS) Oxford as part of a longer experiment [24,38]. For full methods, see the electronic supplementary material [5,39–43].

(b). Game parameters

Our general set-up was to make groups of four anonymous individuals play a public goods game and to vary whether their behaviour was visible or not to their groupmates. Our experiment was conducted in three stages, each of which involved playing the same public goods game. First, everyone played one round with computerized groupmates to test their ‘rationality’. Second, everyone played with humans in a repeated game that had no information between rounds. In truth, all players were invisible here, but only some of them knew this for certain, whereas the rest faced ambiguous invisibility. Third, everyone played again with humans, but some were placed into ‘visible’ treatments whereby they knew their groupmates would learn about their behaviour in some way, thus enabling strategic cooperation. The rest were again placed into an invisible treatment, where they knew for certain that their groupmates could not observe their behaviour. Group composition was constant in each stage and all players were told this (table 1; figure 1; electronic supplementary material, figure S1).

Table 1.

A summary of the mean initial cooperation of rational (did not cooperate with computers) and irrational (cooperated with computers) players for the different treatments.

| stage and treatment | told groupmates will see: their own pay-offs | told groupmates will see: your behaviour | told groupmates will see: behaviour of other groups | mean initial cooperation (0–20 MU): rational players (N = 103) | mean initial cooperation (0–20 MU): irrational players (N = 185) |
|---|---|---|---|---|---|
| computer play<sup>a</sup> (stage 1) | n.a. | n.a. | n.a. | 0 by definition | 11.1 ± 0.44 |
| certain invisibility<sup>b</sup> (stage 2) | no | no | no | 2.8 ± 1.27, N = 21 | 10.5 ± 0.80, N = 51 |
| ambiguous visibility<sup>c</sup> | ambiguous | ambiguous | ambiguous | 6.3 ± 0.88, N = 82 | 9.8 ± 0.53, N = 134 |
| certain invisibility<sup>d</sup> (stage 3) | no | no | yes | 3.3 ± 0.79, N = 60 | 9.3 ± 0.58, N = 132 |
| partly visible<sup>e</sup> | yes | no | no | 9.2 ± 1.89, N = 23 | 9.2 ± 1.47, N = 25 |
| fully visible<sup>f</sup> | yes | yes | no | 10.8 ± 1.96, N = 20 | 11.1 ± 1.03, N = 28 |

<sup>a</sup>One round of play. Players told they were playing computerized groupmates.

<sup>b</sup>Six rounds. Players received no information and were told no one else would either.

<sup>c</sup>Six rounds. Players received no information but were not told that no one else would either.

<sup>d</sup>Six rounds. Players told groupmates could only observe other groups.

<sup>e</sup>Six rounds. Players told that everyone would learn their personal pay-offs each round.

<sup>f</sup>Six rounds. Players told that everyone would learn pay-offs and everyone's decisions. Partly and fully visible treatments were combined for analyses in main text.

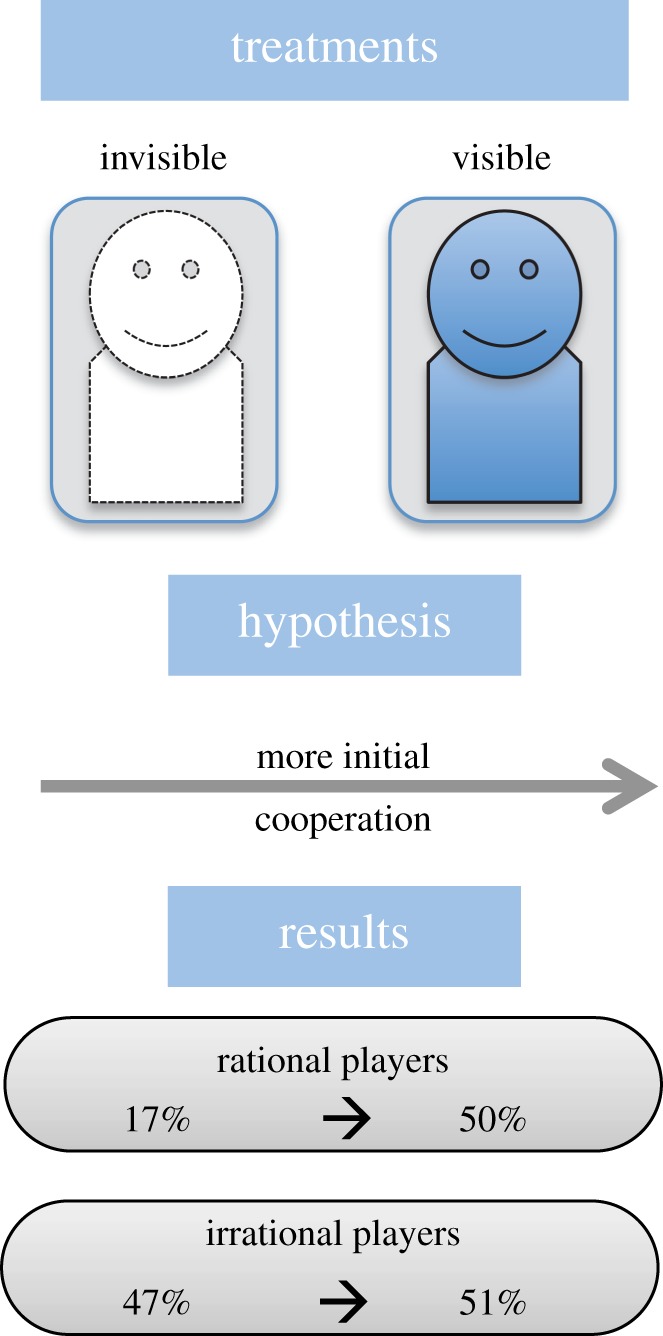

Figure 1.

Our experimental design, hypothesis and general result. In our invisible treatment, players were told that their groupmates would not be able to observe their behaviour nor their own earnings (which can be used to calculate the average cooperation of groupmates). In our visible treatments, players were told that their groupmates would see their own earnings, and half the players were also told that their groupmates would see their individual decisions. Rational players were those that did not cooperate with computers in a preceding version of the same public goods game. Irrational players did cooperate with the computer.

(c). Stage 1: testing for confused/irrational players

Experiments on cooperation using economic games often assume that the costs a player incurs are incurred ‘rationally’, in order to satisfy his/her preferences for the welfare of others [3]. However, not all players may play rationally. For example, if a self-interested player is uncertain or confused about how to maximize their earnings, then they may also cooperate at some level, even though this may be inconsistent with their preferences, and hence irrational by definition [24]. One way to test between these competing possibilities is to make individuals play the same game with computerized groupmates in an ‘asocial control’ that eliminates any concerns for other players [24,44–47]. If players cooperate both when playing with computers and when playing with humans, then one cannot interpret their behaviour solely on the basis of which players benefit.

We, therefore, tested for confused/irrational players by first making all individuals play a single round of the same game with computerized groupmates (with no feedback to prevent any learning). This was after receiving standard instructions and control questions that first made no mention of playing with computers [5,41]. The CESS laboratory forbids deception, and participants are reminded of this at the start of experiments. They were told many times that they were playing computerized groupmates and that only they would be affected by their decision (‘You are the only real person in the group, and only you will receive any money’). For convenience, we hereafter refer to individuals that did not cooperate (contributed 0 MU) with computers as ‘rational players’, and individuals that cooperated (contributed more than 0 MU) with computers as ‘irrational players’. Our use of ‘rational’ here is merely a catch-all term for players that are not irrational within the context of the game. An alternative nomenclature arguably could be comprehenders and non-comprehenders. However, note that if a player does not cooperate with the computer, they do not necessarily understand the game and vice versa. We use the term ‘players’ because we are not making any claims about the general rationality of these ‘individuals’ outside of the context of the laboratory game, and although interesting, the question of why some players cooperate with computers is not the focus of this study [24,44–46,48]. Instead, we show that controlling for such players facilitates the detection of strategic cooperation.
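The classification rule described above is simple enough to state as a short sketch. The function name `classify_player` and the example contributions are hypothetical and introduced only for illustration; the rule itself (0 MU to computers is ‘rational’, more than 0 MU is ‘irrational’) is the one given in the text.

```python
from collections import Counter

MAX_MU = 20  # from the text: contributions range from 0 to 20 MU


def classify_player(contribution_to_computers_mu):
    """Label a player from their stage 1 contribution to computerized groupmates."""
    if not 0 <= contribution_to_computers_mu <= MAX_MU:
        raise ValueError("contribution must lie between 0 and 20 MU")
    # Contributing nothing to computers is 'rational' within the game;
    # any positive contribution is 'irrational'.
    return "rational" if contribution_to_computers_mu == 0 else "irrational"


# Made-up contributions for illustration only; these are NOT the study's data.
example_contributions = [0, 10, 0, 5, 20, 0, 12]
counts = Counter(classify_player(c) for c in example_contributions)
```

Note that the label describes behaviour within the game only; as stated above, contributing 0 MU does not by itself show that a player understood the game.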

(d). Stage 2: testing cooperation over time

We then had all our participants play the same public goods game in constant groups in a ‘No information’ environment for six rounds. Here, all players were invisible, but only some knew this for certain.

Specifically, we told all the players ‘You WILL NOT receive any information about the decisions of the other players, nor about your earnings in these rounds’. In addition, players in three of our 12 sessions (72 individuals) were told explicitly that their groupmates would also not receive any information, ‘nor will anyone else at any time except for the experimenter after the experiment’. Therefore, their behaviour was ‘certainly invisible’, insofar as they trusted the experimenter's instructions. By contrast, for the other 216 individuals in the other nine sessions, the invisibility of their behaviour was ambiguous because they were not told what information other players received. Comparing behaviour between these treatments enables us to test if ambiguity about one's visibility to others is enough to trigger strategic cooperation. The lack of information between rounds means we can also compare how potential strategic cooperation changes over time while controlling for learning or interactions between players.

(e). Stage 3: testing for strategic cooperation

In the next stage, we directly tested if providing information that makes behaviour visible induces strategic cooperation. After stage 2, we restarted the game with new randomly formed groups and manipulated the extent to which strategic cooperation was possible across three conditions that varied in how visible they made behaviour to groupmates. We had two visible treatments, one where behaviour was ‘fully’ visible, and one where it was only ‘partly’ visible, and we had a new invisible treatment, where players could only observe the behaviour of outside groups (not their own). Contrary to stage 2, in these three treatments, all players received information during the game that could affect their behaviour. Therefore, we only compared their levels of initial cooperation, which occurred after our manipulation (telling players what information their groupmates will receive) but before any learning or in-game dynamics could occur.

Specifically, to make behaviour fully visible, we told players that they and their groupmates would be told after each round the individual contributions of each player. To make behaviour partly visible, we told players that they and their groupmates would be told their personal earnings from each round. This made an individual's behaviour ‘partly visible’, because the act of cooperation always informs groupmates that there is at least one cooperator in the group, and information on earnings reliably informs individuals of the average level of cooperation in their group. We told players in both visible treatments: ‘You and everybody else in your group will receive the SAME INFORMATION’. Players in the fully visible treatment were then told: ‘The information each person will receive is what the decisions were of each player in the group and what their own earnings are from each round’ and players in the partly visible treatment were told: ‘The information each person will receive is what their own earnings are from each round’. We randomly assigned 48 players, regardless of whether they were irrational or not, to each of these two visible treatments. However, because rational players were so rare (see Results), our analyses mostly combined the fully and partly visible treatments into one ‘visible’ treatment (N = 96).
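To see why earnings alone make behaviour ‘partly visible’, the sketch below inverts an assumed linear public goods pay-off to recover the mean contribution of a player's groupmates from that player's own earnings. The endowment (20 MU) and group size (four) come from the text; the multiplier `MULTIPLIER` and the function names are assumptions made for illustration, so this is one possible reading of the mechanism rather than the experiment's actual parameters.

```python
# Sketch: recovering groupmates' average contribution from one's own earnings.
ENDOWMENT_MU = 20   # from the text: contributions range from 0 to 20 MU
GROUP_SIZE = 4      # from the text: groups of four
MULTIPLIER = 1.6    # ASSUMPTION: hypothetical value, not given in this section


def earnings(own, others):
    """Single-round earnings (MU) under the assumed linear pay-off."""
    pot = own + sum(others)
    return ENDOWMENT_MU - own + MULTIPLIER * pot / GROUP_SIZE


def mean_groupmate_contribution(own, own_earnings):
    """Invert the pay-off: what did the other three contribute on average?"""
    # Earnings above the kept endowment are the player's share of the pot.
    pot = (own_earnings - (ENDOWMENT_MU - own)) * GROUP_SIZE / MULTIPLIER
    return (pot - own) / (GROUP_SIZE - 1)
```

For example, a player who contributes 8 MU alongside groupmates contributing 5, 10 and 15 MU can recover their groupmates' mean of 10 MU from earnings alone, but not who contributed what; identifying individuals requires the fully visible treatment.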

To make behaviour invisible within groups, and thus to prevent strategic cooperation from working, we again did not provide any information on either personal earnings or the behaviour of the same-group members. However, in contrast with stage 2, individuals knew they and their groupmates would receive information, but that it would only be about the behaviour of other groups that they had no connection to (for the purposes of another study, we provided these individuals with one of four types of information about other group(s) [38] (electronic supplementary material, Methods and Results)). Crucially, the flow of information between groups was strictly unidirectional, thus an individual's behaviour was neither visible to his/her groupmates nor to the groups he/she was observing. This meant an individual's cooperation could still be motivated by a concern for the welfare of others, but not sensibly by strategic concerns. Here, we are interested in how individuals initially cooperated when knowing that their behaviour will be visible or not to their groupmates. Specifically, we told all players in our invisible treatment the following:

You and everybody else in your group will receive the SAME INFORMATION.

The information you and the others in your group will receive WILL NOT COME FROM YOUR GROUP, but instead will come from (an)other group(s).

Your decisions will only affect the earnings of people in your group. [original emphases shown]

In all our treatments, all members of the same group were assigned to the same treatment, and the treatment design was common knowledge to all members of the group. Our focus here is on how individuals played in the opening round of these treatments, before they have had a chance to learn about the game or other players, depending on whether their groupmates could potentially learn something about how they had behaved. We focus on the first round because, at this stage, the only difference between individuals is the information that they have been told they and their groupmates will receive in the future. At this point, individuals cannot have been influenced by the way in which other individuals play. This allows us to test for strategic motives while controlling for learning about the game's pay-offs and the nature of other players.

3. Results

(a). Irrational players

We first tested if players cooperated with computerized groupmates (stage 1). We found that 185 (64%) of our players cooperated with the computer and thus were ‘irrational’ in the context of our experiment. This meant that, at most, only 103 (36%) of our players, who did not cooperate with the computer, could be classified as rational. Overall, the average cooperation towards computers was 36% (7.1 MU ± 0.42 s.e.m., median = 5 MU, mode = 0 MU, table 1; electronic supplementary material, figure S2). The rarity of rational players made it difficult to compare their behaviour across the partly and fully visible treatments (N = 23 and 20), and so we combined these data into one ‘visible’ treatment for all analyses in the main text (electronic supplementary material, Results and figure S3).
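As a quick arithmetic check, the overall figures reported above follow from the group sizes and the stage 1 means in table 1. The sketch below uses only numbers stated in the text and table 1; the variable names are illustrative.

```python
# Consistency check of the stage 1 summary figures (values from text and table 1).
MAX_MU = 20
N_RATIONAL = 103             # contributed 0 MU to computers
N_IRRATIONAL = 185           # contributed more than 0 MU to computers
MEAN_RATIONAL_MU = 0.0       # zero by definition
MEAN_IRRATIONAL_MU = 11.1    # table 1, computer play

n_total = N_RATIONAL + N_IRRATIONAL
share_irrational = N_IRRATIONAL / n_total
share_rational = N_RATIONAL / n_total

# Overall mean is the size-weighted mean of the two groups.
overall_mean_mu = (
    N_RATIONAL * MEAN_RATIONAL_MU + N_IRRATIONAL * MEAN_IRRATIONAL_MU
) / n_total
overall_percent = 100 * overall_mean_mu / MAX_MU
```

Rounded, these reproduce the reported 64% and 36% shares, the overall mean of 7.1 MU and the overall cooperation level of 36%.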

(b). Strategic cooperation

Our main test is how levels of cooperation (number of MU contributed) varied, in the opening round of the repeated game with information (stage 3), depending on if this information made an individual's behaviour visible or not to their groupmates, and if we had classified them as rational or not. Overall, we found that ‘rational’ and ‘irrational’ players responded differently to whether their behaviour was visible or not, with only rational players cooperating significantly more when visible (generalized linear model with a binary-logistic link set to 20 trials, hereafter referred to as GzLM: level of cooperation∼rationality×visibility: F1,284 = 12.4, p < 0.001, figure 2a,b).

Figure 2.

(a,b) Rational players are strategic cooperators. The figures show the distribution of the level of contribution in a public goods game (cooperation), distinguishing between individuals who (a) had not cooperated with computers (rational players) or (b) had previously cooperated with computers (irrational players). The light grey bars are for individuals whose behaviour would not be revealed to their groupmates (invisible), and the dark grey bars are for individuals whose behaviour would be revealed to their groupmates (visible). (a) Rational players cooperate at higher levels when their behaviour is visible.

Specifically, the rational players cooperated three times as much in the treatments where behaviour was visible. Their mean level of cooperation was 17% (3.3 ± 0.79 MU, N = 60) in the invisible treatment, and 50% (9.9 ± 1.35 MU, N = 43) in the visible treatments, a significant difference (GzLM: F1,101 = 17.9, p < 0.001, β = 1.6 ± 0.39). This difference is even more striking if we examine the initial modal cooperation, which was 0% (0 MU) when invisible and 100% (20 MU) when visible (figure 2a). The general timeline of cooperation for rational players from stage 2 to stage 3 can be seen in figure 3.
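The reported coefficient can be related to the reported means with a short back-transformation. This is a sketch under two assumptions that the text does not spell out: that β is the effect of visibility on the log-odds scale of the binary-logistic model, and that the invisible treatment is the reference level. The function names are illustrative.

```python
import math

MAX_MU = 20  # each decision is modelled as a proportion of 20 'trials'


def logit(p):
    """Log-odds of a proportion."""
    return math.log(p / (1 - p))


def inv_logit(x):
    """Proportion corresponding to a log-odds value."""
    return 1 / (1 + math.exp(-x))


def predicted_mean_mu(baseline_mean_mu, beta):
    """Shift the baseline mean by beta on the log-odds scale, return MU."""
    baseline_proportion = baseline_mean_mu / MAX_MU
    return MAX_MU * inv_logit(logit(baseline_proportion) + beta)


# Rational players: invisible mean 3.3 MU, reported beta = 1.6.
predicted_visible_mu = predicted_mean_mu(3.3, 1.6)
odds_ratio = math.exp(1.6)
```

Under these assumptions, the predicted visible mean is close to the reported 9.9 MU, and the coefficient corresponds to roughly a fivefold increase in the odds of contributing each MU.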

Figure 3.

The timeline of strategic cooperation. This figure examines the behaviour of the rational players in two repeated public goods games. For simplicity, only the rational players that experienced the ambiguously visible treatment in the no-information game are shown (N = 82). All players first played six rounds of the game with no information, not knowing if their behaviour was visible or not, before being randomly assigned to play another six rounds, in new groups, where their behaviour would either be observable in some form (visible, dark grey diamonds) or not (invisible, light grey circles) to their groupmates. Dashed lines show 95% CIs.

By contrast, the irrational players did not significantly vary their behaviour depending upon whether they were visible or not. Their mean level of cooperation when invisible was 47% (9.3 ± 0.58 MU, N = 132), and when visible was 51% (10.2 ± 0.88 MU, N = 53), a non-significant difference (GzLM: F1,183 = 0.6, p = 0.444, β = 0.2 ± 0.21). Their modal cooperation was 50% (10 MU) in both cases (figure 2b). These results were robust to various forms of analysis (electronic supplementary material, Results and figure S4).
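The combined ‘visible’ means reported for rational and irrational players can be recovered from the partly and fully visible rows of table 1 as size-weighted means. The sketch below uses only values from table 1; the helper name `pooled_mean` is illustrative.

```python
def pooled_mean(groups):
    """Size-weighted mean of (mean, n) pairs; returns (pooled mean, total n)."""
    total_n = sum(n for _, n in groups)
    weighted_sum = sum(mean * n for mean, n in groups)
    return weighted_sum / total_n, total_n


# (mean initial cooperation in MU, N) for partly and fully visible, from table 1.
rational_visible = pooled_mean([(9.2, 23), (10.8, 20)])
irrational_visible = pooled_mean([(9.2, 25), (11.1, 28)])
```

Rounded to one decimal place, this gives 9.9 MU for the 43 rational players and 10.2 MU for the 53 irrational players, matching the combined values in the text.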

Although the average behaviour of rational and irrational players was the same in the visible treatment, cooperating at 50% and 51%, respectively, the variances of their decisions were significantly different (respective variances = 78.3 and 41.0, Levene's test of homogeneity of variances = 14.4, d.f. = 1,94, p < 0.001). The contributions of rational players exhibited a bimodal distribution of 0 and 100% (0 and 20 MU), whereas the irrational players tended to contribute 50% (10 MU) (figure 2a,b), suggesting that the behaviour and cognition among rational and irrational players differed in the visible treatment, despite similar levels of average cooperation.
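For readers unfamiliar with Levene's test, the sketch below shows how the statistic compares the spread of two groups that share a similar mean. It implements the mean-centred variant, which is an assumption: the text does not say which variant was used. The example contributions are made up to mimic a bimodal versus a centred distribution and are not the study's data.

```python
from statistics import mean


def levene_w(*groups):
    """Mean-centred Levene statistic W and its degrees of freedom (df1, df2)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Absolute deviation of each observation from its own group mean.
    z = [[abs(x - mean(g)) for x in g] for g in groups]
    z_group_means = [mean(zi) for zi in z]
    z_grand_mean = sum(sum(zi) for zi in z) / n_total
    between = sum(
        len(zi) * (zm - z_grand_mean) ** 2 for zi, zm in zip(z, z_group_means)
    )
    within = sum((v - zm) ** 2 for zi, zm in zip(z, z_group_means) for v in zi)
    df1, df2 = k - 1, n_total - k
    return (between / df1) / (within / df2), (df1, df2)


# Made-up contributions (MU) for illustration only; NOT the study's data.
bimodal_example = [0, 0, 0, 2, 18, 20, 20, 20]
centred_example = [8, 9, 10, 10, 10, 10, 11, 12]
w_example, df_example = levene_w(bimodal_example, centred_example)
```

With two groups of 43 and 53 players, the degrees of freedom are 1 and 94, as reported above. A large W indicates that the groups differ in spread even when their means are similar.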

(c). Strategic cooperation under ambiguity

We found further support for strategic cooperation in the prior no-information game (stage 2). Again we found a significant interaction between how rational and irrational players cooperated depending on how visible their behaviour (potentially) was (GzLM, initial cooperation in the first round of the no-information game ∼ rationality×visibility: F1,284 = 5.7, p = 0.017, table 1; electronic supplementary material, figure S5).

Merely being potentially visible was enough to make rational players, but not irrational players, initially cooperate more. When rational players could be certain their behaviour was invisible, they initially cooperated at only 14% (2.8 ± 1.27 MU), but when their visibility was ambiguous, they initially cooperated at 32% (6.3 ± 0.88 MU) (GzLM: F1,101 = 3.9, p = 0.052, β = 1.0 ± 0.57; electronic supplementary material, figure S5a). By contrast, the initial cooperation of irrational players did not significantly vary depending on visibility, cooperating at 53% (10.5 ± 0.80 MU) when their invisibility was certain, and 49% (9.8 ± 0.53 MU) when their visibility was ambiguous (GzLM: F1,183 = 0.5, p = 0.468, β = 0.1 ± 0.20; electronic supplementary material, figure S5b). For an overview of levels of initial cooperation across all treatments, see table 1.

(d). Strategic decline

If individuals are cooperating in order to stimulate others to cooperate in the future, then they might cooperate less as the final round approaches. In support of this idea, we found that rational players faced with ambiguous visibility in stage 2 significantly decreased their cooperation over the six rounds, from an initial 32% (6.3 ± 0.88 MU) to 13% (2.6 ± 0.66 MU) (GzLMM: F1,412 = 91.4, p < 0.001, β = −0.48 ± 0.050, figure 3). By contrast, if they had certain invisibility, they cooperated at a low, constant level of around 13% (2.6 ± 0.49 MU mean of six rounds) (GzLMM: F1,105 = 1.2, p = 0.276, β = −0.09 ± 0.084). This led to a significant interaction for rational players between the degree of invisibility (ambiguous versus certain) and cooperation over time (GzLMM: F1,511 = 13.9, p < 0.001; electronic supplementary material, figure S6). By contrast, this same interaction was not significant for irrational players, as their cooperation over time did not depend on whether their invisibility was certain or ambiguous (GzLMM: F1,885 = 1.0, p = 0.322; electronic supplementary material, figure S6). Therefore, many rational players facing ambiguous visibility initially cooperated at a higher level, before generally decreasing their cooperation as the end of the game approached (electronic supplementary material, table S1). As no information was available between rounds, this decrease could not possibly have been due to individuals learning or responding to the behaviour of others.

4. Discussion

We found experimental support for strategic cooperation in humans. We categorized players depending upon whether they cooperated (irrational) or not (rational) with a computer in a one-shot public goods game. Telling rational players, who did not cooperate with a computer, that their groupmates could potentially learn about their behaviour, led to them tripling their level of initial cooperation, from 17 to 50% (figure 2a). This result is even more striking when examining the modal level of cooperation, which increased from 0 to 100%! By contrast, irrational players, who cooperated with the computer, did not vary their level of initial cooperation depending upon whether others could potentially learn about their contributions (figure 2b).

Our results provide a potential explanation for why previous studies often failed to find conclusive support for strategic cooperation ([25,30], although see [33,34]). We found clear evidence for strategic cooperation, but only in ‘rational’ players. Overall, we found that at most 36% of individuals played rationally, whereas the other 64% of individuals played irrationally, or just did not fully understand how to best play the game. Consequently, the irrational players, who do not appear to cooperate strategically, were relatively common (64%), although this number presumably could be reduced depending on what instructions are used [46,48]. This variation across individuals produces ‘noise’ that, unless controlled for, will reduce the chances of detecting strategic cooperation and potentially other interesting behaviours.

Are strategic cooperators self-interested individuals that take time to calculate what is best for them, or do they just intuitively cooperate in situations that potentially favour cooperation? Our study cannot differentiate between these possibilities. There is growing evidence that many humans show intuitive cooperation, cooperating without considering the costs and benefits of the situation [49–51]. Strategic cooperation could also be intuitive if natural selection has favoured heuristics that activate cooperation accordingly, such as when behaviour is visible and reputational mechanisms are likely [52–58]. However, such heuristics or priming effects would be expected to also apply to irrational players, and we found they did not, although this could perhaps be because they were generally less attentive to the instructions and less focused on the game and their environment.

A number of competing explanations for our data can be rejected. One potential hypothesis is that our test for rationality merely separates cooperators from non-cooperators. However, this is clearly not the case, because when we told rational players that their behaviour would be visible to their groupmates, they generally adjusted their behaviour from non-cooperative to cooperative. By contrast, irrational players did not behave differently when their behaviour was visible or not. Another potential hypothesis therefore is that irrational players cooperate for purely pro-social reasons. However, the fact that they also cooperated with computers, and thus paid costs when no other players would benefit, means that their motivation to pay costs cannot be interpreted purely on the basis of who benefits. Instead, the social benefits of their behaviour in this experiment appear to be by-products of their irrationality, which may be driven by confusion surrounding the game or their intuition [24,50]. Consistent with our interpretation, the modal behaviour of irrational players across all treatments was 50%, suggesting the (potentially rational) use of a bet-hedging strategy by players who were uncertain how best to play the game.

Finally, because our no-information version of the game always preceded our games with information, another potential hypothesis is that the contrast in games communicated to players an experimenter demand to respond differently [59]. We do not reject the idea of experimenter demand, but point out that, again, it would have to not apply to irrational players, whose behaviour was unchanged. Furthermore, the overall level of cooperation in the visible treatments is consistent with published behaviour in public-goods games. Instead, the novel result that illuminates strategic cooperation comes from the lack of cooperation by rational players if placed into an invisible treatment (17%), suggesting that their cooperation in visible treatments (50%) is largely strategic.

5. Conclusion

Overall, the high number of irrational players along with the low level of cooperation by rational players when invisible suggests that measures of altruistic motives may have been biased in many previous studies. Aside from this, our results have two potentially important real-world behavioural implications. First, when cooperative relationships fail, e.g. among housemates, colleagues, teams or nations, this may be because individuals perceive a reduction in future opportunities for cooperation. Attempts to maintain cooperation may, therefore, have more success by emphasizing the future benefits of mutually beneficial relationships. Second, perceived ambiguity and comprehension significantly affected how individuals behaved in response to opportunities to influence social behaviour. Understanding this will be essential if one wants to influence behaviour successfully, while avoiding harmful unintended consequences. This is becoming increasingly important as public policymakers seek to apply behavioural insights that attempt to influence or ‘nudge’ people's behaviour in socially useful ways [60].

Acknowledgements

We thank CESS for running the experiments, and Miguel dos Santos, Daniel Friedman and Larry Samuelson for discussion.

Data accessibility

Data are available from the Dryad Digital Repository: http://dx.doi.org/10.5061/dryad.s612m [61].

Authors' contributions

M.N.B.-C. conceived, designed, programmed, conducted, analysed and wrote the study; C.E.M. conceived, designed, conducted, analysed and wrote the study; S.A.W. conceived, designed, analysed and wrote the study.

Competing interests

We declare we have no competing interests.

Funding

M.N.B.-C. and C.E.M. are funded by John Fell Fund Oxford; M.N.B.-C. and S.A.W. are funded by Calleva Research Centre for Evolution and Human Sciences, Magdalen College.

References

- 1.Fehr E, Fischbacher U. 2003. The nature of human altruism. Nature 425, 785–791. ( 10.1038/nature02043) [DOI] [PubMed] [Google Scholar]

- 2.Henrich J. et al 2005. ‘Economic man’ in cross-cultural perspective: behavioral experiments in 15 small-scale societies. Behav. Brain Sci. 28, 795–815; discussion 815–855. [DOI] [PubMed] [Google Scholar]

- 3.Fehr E, Schmidt KM. 1999. A theory of fairness, competition, and cooperation. Q. J. Econ. 114, 817–868. ( 10.1162/003355399556151) [DOI] [Google Scholar]

- 4.Fischbacher U, Gachter S, Fehr E. 2001. Are people conditionally cooperative? Evidence from a public goods experiment. Econ Lett. 71, 397–404. ( 10.1016/S0165-1765(01)00394-9) [DOI] [Google Scholar]

- 5.Fischbacher U, Gachter S. 2010. Social preferences, beliefs, and the dynamics of free riding in public goods experiments. Am. Econ. Rev. 100, 541–556. ( 10.1257/aer.100.1.541) [DOI] [Google Scholar]

- 6.Kreps DM, Milgrom P, Roberts J, Wilson R. 1982. Rational cooperation in the finitely repeated prisoners' dilemma. J. Econ. Theory. 27, 245–252. ( 10.1016/0022-0531(82)90029-1) [DOI] [Google Scholar]

- 7.Trivers RL. 1971. Evolution of reciprocal altruism. Q. Rev. Biol. 46, 35 ( 10.1086/406755) [DOI] [Google Scholar]

- 8.Alexander RD. 1987. The biology of moral systems. New York, NY: Aldine de Gruyter. [Google Scholar]

- 9.Roberts G. 1998. Competitive altruism: from reciprocity to the handicap principal. Proc. R. Soc. Lond. B 265, 427–431. ( 10.1098/rspb.1998.0312) [DOI] [Google Scholar]

- 10. Roberts G, Sherratt TN. 1998. Development of cooperative relationships through increasing investment. Nature 394, 175–179. (doi:10.1038/28160)

- 11. Sylwester K, Roberts G. 2010. Cooperators benefit through reputation-based partner choice in economic games. Biol. Lett. 6, 659–662. (doi:10.1098/rsbl.2010.0209)

- 12. Nowak MA, Sigmund K. 1998. Evolution of indirect reciprocity by image scoring. Nature 393, 573–577. (doi:10.1038/31225)

- 13. Milinski M, Semmann D, Bakker TCM, Krambeck HJ. 2001. Cooperation through indirect reciprocity: image scoring or standing strategy? Proc. R. Soc. Lond. B 268, 2495–2501. (doi:10.1098/rspb.2001.1809)

- 14. Milinski M, Semmann D, Krambeck HJ. 2002. Donors to charity gain in both indirect reciprocity and political reputation. Proc. R. Soc. Lond. B 269, 881–883. (doi:10.1098/rspb.2002.1964)

- 15. Milinski M, Semmann D, Krambeck HJ. 2002. Reputation helps solve the ‘tragedy of the commons’. Nature 415, 424–426. (doi:10.1038/415424a)

- 16. Yoeli E, Hoffman M, Rand DG, Nowak MA. 2013. Powering up with indirect reciprocity in a large-scale field experiment. Proc. Natl Acad. Sci. USA 110, 10 424–10 429. (doi:10.1073/pnas.1301210110)

- 17. Leimar O, Hammerstein P. 2001. Evolution of cooperation through indirect reciprocity. Proc. R. Soc. Lond. B 268, 745–753. (doi:10.1098/rspb.2000.1573)

- 18. Barclay P. 2004. Trustworthiness and competitive altruism can also solve the ‘tragedy of the commons’. Evol. Hum. Behav. 25, 209–220. (doi:10.1016/j.evolhumbehav.2004.04.002)

- 19. Barclay P, Willer R. 2007. Partner choice creates competitive altruism in humans. Proc. R. Soc. B 274, 749–753. (doi:10.1098/rspb.2006.0209)

- 20. Barclay P. 2016. Biological markets and the effects of partner choice on cooperation and friendship. Curr. Opin. Psychol. 7, 33–38. (doi:10.1016/j.copsyc.2015.07.012)

- 21. McCullough ME, Pedersen EJ. 2013. The evolution of generosity: how natural selection builds devices for benefit delivery. Soc. Res. 80, 387–410.

- 22. Wubs M, Bshary R, Lehmann L. 2016. Coevolution between positive reciprocity, punishment, and partner switching in repeated interactions. Proc. R. Soc. B 283, 20160488. (doi:10.1098/rspb.2016.0488)

- 23. Cox CA, Jones MT, Pflum KE, Healy PJ. 2015. Revealed reputations in the finitely repeated prisoners' dilemma. Econ. Theor. 58, 441–484. (doi:10.1007/s00199-015-0863-1)

- 24. Burton-Chellew MN, El Mouden C, West SA. 2016. Conditional cooperation and confusion in public-goods experiments. Proc. Natl Acad. Sci. USA 113, 1291–1296. (doi:10.1073/pnas.1509740113)

- 25. Andreoni J. 1988. Why free ride?: Strategies and learning in public-goods experiments. J. Public Econ. 37, 291–304. (doi:10.1016/0047-2727(88)90043-6)

- 26. Croson RTA. 1996. Partners and strangers revisited. Econ. Lett. 53, 25–32. (doi:10.1016/S0165-1765(97)82136-2)

- 27. Engle-Warnick J, Slonim RL. 2004. The evolution of strategies in a repeated trust game. J. Econ. Behav. Organ. 55, 553–573. (doi:10.1016/j.jebo.2003.11.008)

- 28. Dal Bo P. 2005. Cooperation under the shadow of the future: experimental evidence from infinitely repeated games. Am. Econ. Rev. 95, 1591–1604. (doi:10.1257/000282805775014434)

- 29. Muller L, Sefton M, Steinberg R, Vesterlund L. 2008. Strategic behavior and learning in repeated voluntary contribution experiments. J. Econ. Behav. Organ. 67, 782–793. (doi:10.1016/j.jebo.2007.09.001)

- 30. Andreoni J, Croson R. 2008. Partners versus strangers: random rematching in public goods experiments. In Handbook of experimental economics results, vol. 1 (eds Plott CR, Smith VL), pp. 776–783. Amsterdam, The Netherlands: North Holland.

- 31. Engelmann D, Fischbacher U. 2009. Indirect reciprocity and strategic reputation building in an experimental helping game. Games Econ. Behav. 67, 399–407. (doi:10.1016/j.geb.2008.12.006)

- 32. Ambrus A, Pathak PA. 2011. Cooperation over finite horizons: a theory and experiments. J. Public Econ. 95, 500–512. (doi:10.1016/j.jpubeco.2010.11.016)

- 33. Reuben E, Suetens S. 2012. Revisiting strategic versus non-strategic cooperation. Exp. Econ. 15, 24–43. (doi:10.1007/s10683-011-9286-4)

- 34. Dreber A, Fudenberg D, Rand DG. 2014. Who cooperates in repeated games: the role of altruism, inequity aversion, and demographics. J. Econ. Behav. Organ. 98, 41–55. (doi:10.1016/j.jebo.2013.12.007)

- 35. Cooper R, DeJong DV, Forsythe R, Ross TW. 1996. Cooperation without reputation: experimental evidence from prisoner's dilemma games. Games Econ. Behav. 12, 187–218. (doi:10.1006/game.1996.0013)

- 36. Ledyard J. 1995. Public goods: a survey of experimental research. In Handbook of experimental economics (eds Kagel JH, Roth AE), pp. 253–279. Princeton, NJ: Princeton University Press.

- 37. Chaudhuri A. 2011. Sustaining cooperation in laboratory public goods experiments: a selective survey of the literature. Exp. Econ. 14, 47–83. (doi:10.1007/s10683-010-9257-1)

- 38. Burton-Chellew MN, El Mouden C, West SA. 2017. Social learning and the demise of costly cooperation in humans. Proc. R. Soc. B 284, 20170067. (doi:10.1098/rspb.2017.0067)

- 39. Greiner B. 2015. Subject pool recruitment procedures: organizing experiments with ORSEE. J. Econ. Sci. Assoc. 1, 114–125. (doi:10.1007/s40881-015-0004-4)

- 40. Fischbacher U. 2007. z-Tree: Zurich toolbox for ready-made economic experiments. Exp. Econ. 10, 171–178. (doi:10.1007/s10683-006-9159-4)

- 41. Fehr E, Gachter S. 2002. Altruistic punishment in humans. Nature 415, 137–140. (doi:10.1038/415137a)

- 42. Gosling SD, Rentfrow PJ, Swann WB. 2003. A very brief measure of the Big-Five personality domains. J. Res. Pers. 37, 504–528. (doi:10.1016/S0092-6566(03)00046-1)

- 43. Simmons JP, Nelson LD, Simonsohn U. 2011. False-positive psychology: undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychol. Sci. 22, 1359–1366. (doi:10.1177/0956797611417632)

- 44. Houser D, Kurzban R. 2002. Revisiting kindness and confusion in public goods experiments. Am. Econ. Rev. 92, 1062–1069. (doi:10.1257/00028280260344605)

- 45. Shapiro DA. 2009. The role of utility interdependence in public good experiments. Int. J. Game Theory 38, 81–106. (doi:10.1007/s00182-008-0141-6)

- 46. Ferraro PJ, Vossler CA. 2010. The source and significance of confusion in public goods experiments. B E J. Econ. Anal. Policy 10, 53.

- 47. Burton-Chellew MN, West SA. 2013. Prosocial preferences do not explain human cooperation in public-goods games. Proc. Natl Acad. Sci. USA 110, 216–221. (doi:10.1073/pnas.1210960110)

- 48. Bigoni M, Dragone D. 2012. Effective and efficient experimental instructions. Econ. Lett. 117, 460–463. (doi:10.1016/j.econlet.2012.06.049)

- 49. Rand DG, Greene JD, Nowak MA. 2012. Spontaneous giving and calculated greed. Nature 489, 427–430. (doi:10.1038/nature11467)

- 50. Rand DG, Peysakhovich A, Kraft-Todd GT, Newman GE, Wurzbacher O, Nowak MA, Greene JD. 2014. Social heuristics shape intuitive cooperation. Nat. Commun. 5, 3677. (doi:10.1038/ncomms4677)

- 51. Rand DG, Kraft-Todd GT. 2014. Reflection does not undermine self-interested prosociality. Front. Behav. Neurosci. 8, 300.

- 52. Gigerenzer G. 2008. Rationality for mortals: how people cope with uncertainty. New York, NY: Oxford University Press.

- 53. Burnham TC, Hare B. 2007. Engineering human cooperation: does involuntary neural activation increase public goods contributions? Hum. Nat. 18, 88–108. (doi:10.1007/s12110-007-9012-2)

- 54. Bateson M, Nettle D, Roberts G. 2006. Cues of being watched enhance cooperation in a real-world setting. Biol. Lett. 2, 412–414. (doi:10.1098/rsbl.2006.0509)

- 55. Sparks A, Barclay P. 2013. Eye images increase generosity, but not for long: the limited effect of a false cue. Evol. Hum. Behav. 34, 317–322. (doi:10.1016/j.evolhumbehav.2013.05.001)

- 56. Nettle D, Harper Z, Kidson A, Stone R, Penton-Voak IS, Bateson M. 2013. The watching eyes effect in the Dictator Game: it's not how much you give, it's being seen to give something. Evol. Hum. Behav. 34, 35–40. (doi:10.1016/j.evolhumbehav.2012.08.004)

- 57. Delton AW, Krasnow MM, Cosmides L, Tooby J. 2011. Evolution of direct reciprocity under uncertainty can explain human generosity in one-shot encounters. Proc. Natl Acad. Sci. USA 108, 13 335–13 340. (doi:10.1073/pnas.1102131108)

- 58. Krasnow MM, Delton AW, Tooby J, Cosmides L. 2013. Meeting now suggests we will meet again: implications for debates on the evolution of cooperation. Sci. Rep. UK 3, 01747.

- 59. Zizzo DJ. 2010. Experimenter demand effects in economic experiments. Exp. Econ. 13, 75–98. (doi:10.1007/s10683-009-9230-z)

- 60. Thaler RH. 2016. Behavioral economics: past, present, and future. Am. Econ. Rev. 106, 1577–1600. (doi:10.1257/aer.106.7.1577)

- 61. Burton-Chellew MN, El Mouden C, West SA. 2017. Data from: Evidence for strategic cooperation in humans. Dryad Digital Repository. (doi:10.5061/dryad.s612m)

Data Availability Statement

Data are available from the Dryad Digital Repository: http://dx.doi.org/10.5061/dryad.s612m [61].