Abstract

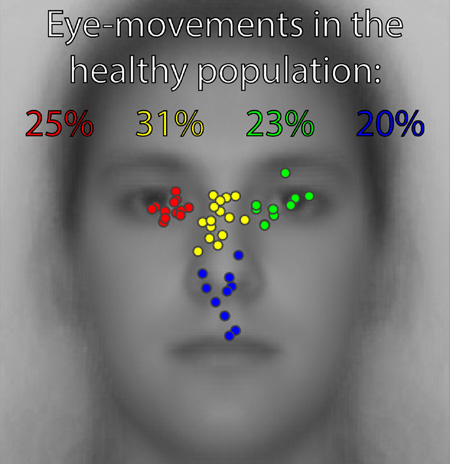

The spatial pattern of eye-movements to faces considered typical for neurologically healthy individuals is a roughly T-shaped distribution over the internal facial features with peak fixation density tending toward the left eye (observer’s perspective). However, recent studies indicate that striking deviations from this classic pattern are common within the population and are highly stable over time. The classic pattern actually reflects the average of these various idiosyncratic eye-movement patterns across individuals. The natural categories and respective frequencies of different types of idiosyncratic eye-movement patterns have not been specifically investigated before, so here we analyzed the spatial patterns of eye-movements for 48 participants to estimate the frequency of different kinds of individual eye-movement patterns to faces in the normal healthy population. Four natural clusters were discovered such that approximately 25% of our participants’ fixation density peaks clustered over the left eye region (observer’s perspective), 23% over the right eye region, 31% over the nasion/bridge region of the nose, and 20% over the region spanning the nose, philtrum, and upper lip. We did not find any relationship between particular idiosyncratic eye-movement patterns and recognition performance. Individuals’ eye-movement patterns early in a trial were more stereotyped than later ones and idiosyncratic fixation patterns evolved with time into a trial. Finally, while face inversion strongly modulated eye-movement patterns, individual patterns did not become less distinct for inverted compared to upright faces. Group-averaged fixation patterns do not represent individual patterns well, so exploration of such individual patterns is of value for future studies of visual cognition.

Keywords: Individual Differences, Eye-Movements, Face Recognition, Face Perception, Pattern Similarity Measure, Idiosyncratic

Graphical abstract

1. - Introduction

The classic and ubiquitously reported pattern of fixations during face perception is a T-shaped distribution with peak density over the eyes, especially the left eye (from the observer’s perspective), and less fixation density over the nose and mouth (e.g., Althoff & Cohen, 1999; Barton, Radcliffe, Cherkasova, Edelman, & Intriligator, 2006; Heisz & Shore, 2008; Janik, Wellens, Goldberg, & Dell’Osso, 1978; Malcolm, Lanyon, Fugard, & Barton, 2008; Yarbus, 1965). Deviations from characteristic spatial or temporal eye-movement patterns to faces have been shown to reflect disorders including autism spectrum disorders (Kliemann, Dziobek, Hatri, Steimke, & Heekeren, 2010; Klin, Jones, Schultz, Volkmar, & Cohen, 2002; Morris, Pelphrey, & McCarthy, 2007; Pelphrey et al., 2002; Pelphrey, Morris, & McCarthy, 2005; Snow et al., 2011), schizophrenia (Green, Williams, & Davidson, 2003a, 2003b; Manor et al., 1999; M L Phillips & David, 1997; Mary L. Phillips & David, 1997, 1998; Streit, Wölwer, & Gaebel, 1997; Williams, Loughland, Gordon, & Davidson, 1999), bipolar disorder (Bestelmeyer et al., 2006; E. Kim et al., 2009; P. Kim et al., 2013; Loughland, Williams, & Gordon, 2002; Streit et al., 1997), and prosopagnosia (Schwarzer et al., 2007; Stephan & Caine, 2009; Van Belle et al., 2011), among others (Horley, Williams, Gonsalvez, & Gordon, 2003, 2004; Loughland et al., 2002; Marsh & Williams, 2006), and are thought to relate to the social and perceptual deficits associated with such disorders (e.g., see the correlation of eye-region fixations to emotion recognition performance for children with bipolar disorder, but not for healthy control children, reported in P. Kim et al., 2013). However, recent studies have uncovered striking deviations from the classic pattern of fixations even within the healthy population. 
Further, it appears that the classic pattern in fact holds largely only when averaging across individual participants’ eye-movement patterns (Gurler, Doyle, Walker, Magnotti, & Beauchamp, 2015; Kanan, Bseiso, Ray, Hsiao, & Cottrell, 2015; Mehoudar, Arizpe, Baker, & Yovel, 2014; Peterson & Eckstein, 2013; Peterson, Lin, Zaun, & Kanwisher, 2016). Such idiosyncratic eye-movement patterns have been shown to be highly stable even over the course of at least 18 months (Mehoudar et al., 2014), and thus variation in eye-movement patterns among individuals must be regarded as a largely stable dynamic rather than as variance from other sources. Patterns of individual differences in the laboratory have been reported to have a strong correlation with those in real-world settings (Peterson et al., 2016). Deviation from the classic spatial pattern in the healthy population was not reflected in reduced recognition performance for faces in our prior study (Mehoudar et al., 2014), which is consistent with a prior report showing no difference in the distribution of fixations between high and low face memory groups (Sekiguchi, 2011). Rather, forcing individuals to deviate from their own idiosyncratic fixation patterns has been reported to reduce performance for judgments on faces (Peterson & Eckstein, 2013). Even so, there is also evidence of an association between perception of the McGurk Effect and the degree of an individual’s tendency to fixate the mouth of McGurk stimuli (Gurler et al., 2015). Idiosyncratic scanpaths have further been shown to vary across different tasks involving judgment of faces, but to be stable within a given task (Kanan et al., 2015). 
In addition to these recent findings of idiosyncratic eye-movement spatial patterns to faces, other studies involving temporal measures or other visual perceptual domains have additionally reported individual differences in eye-movements (Andrews & Coppola, 1999; Boot, Becic, & Kramer, 2009; Castelhano & Henderson, 2008; Poynter, Barber, Inman, & Wiggins, 2013; Rayner, Li, Williams, Cave, & Well, 2007). These surprising findings shed light on an intriguing phenomenon of individual differences in eye-movements and raise questions of how these individual differences relate to perceptual mechanisms and performance.

The aim of the current study was to establish natural categories of individual eye-movement patterns to faces and to estimate the frequencies of such categories within the normal healthy population. As in prior studies, we additionally probed how individual eye-movement patterns might relate to recognition performance. Finally, we investigated how time into a trial and face inversion each modulated individual spatial patterns of eye-movements to faces in terms of both relative distinctiveness and consistency. We found a strikingly variable distribution of individual differences in the spatial pattern of eye-movements in our participants, which reflected a rather continuous distribution. Nevertheless, four natural clusters were discovered in the spatial distribution of the peaks in the spatial density of eye-movements across participants. Approximately 25% of our healthy participants’ peaks clustered over the left eye region (observer’s perspective), 23% over the right eye region, 31% over the nasion/bridge region of the nose, and 20% over the region spanning the nose, philtrum, and upper lip. As in prior studies, we could not find evidence that individuals’ eye-movement patterns related to recognition performance, suggesting that idiosyncratic eye-movements that preferentially deviate from the “classic” T-shaped pattern do not result in reduced facial recognition. We also found evidence that idiosyncratic eye-movement patterns early into a trial were more stereotyped than those later into a trial, that such patterns evolved with time into a trial, and that while face inversion modulated individuals’ eye-movement patterns, inversion did not modulate the distinctiveness of those eye-movement patterns among participants.

2. - Materials and Methods

2.1 - Ethics Statement

All participants gave written informed consent and were compensated for their participation. The study was carried out in accordance with the Code of Ethics of the World Medical Association (Declaration of Helsinki) and was approved (protocol # 93-M-0170, NCT00001360) by the Institutional Review Board of the National Institutes of Health, Bethesda, Maryland, USA.

2.2 - Sources of Data

The eye-movement data for the current study were obtained from two prior published eye-tracking studies that were equivalent or highly comparable across many aspects of the stimuli and design. In the first study (J. Arizpe, Kravitz, Yovel, & Baker, 2012), Face Orientation and Start Position were manipulated. In the second study (J. Arizpe, Kravitz, Walsh, Yovel, & Baker, 2016), Race of Face and pre-stimulus Start Position were manipulated. Though all details of these studies are contained in the respective papers, for completeness a detailed re-description of the stimuli, design, and procedure for these studies is included in the Supplementary Materials.

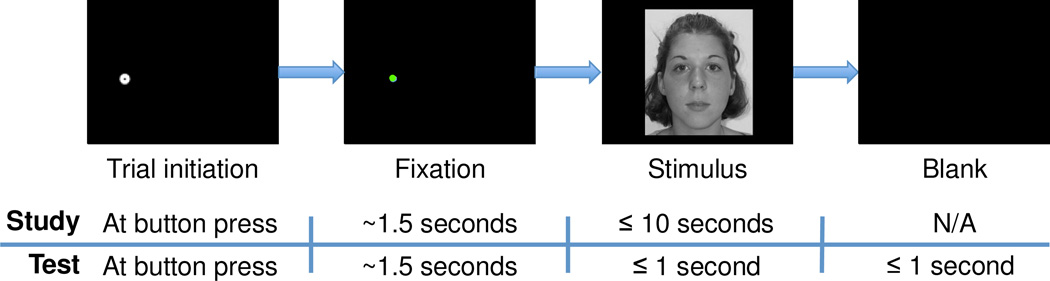

Concisely, both studies involved a study phase in which participants studied a unique face in each trial and a test phase in which participants viewed a face on each trial and responded as to whether the face was recognized as one observed during the study phase (old/new task; Figure 1). Participants were allowed to advance study phase trials in a self-paced manner (up to 10 seconds per trial, self-terminating trials with a button press). The test phase began immediately after the study phase. In each trial of the test phase, participants viewed a face for a limited duration (one second only) and were instructed to respond within two seconds following stimulus onset, as soon as they thought they knew the answer. Each stimulus was a grayscale frontal view of a young adult’s face scaled to have a forehead width subtending 10° visual angle. At the start of each trial, participants were required to maintain brief fixation on a pre-stimulus fixation location (“start position”) that was either to the right, to the left, above, or below the upcoming centrally-presented face stimulus. An additional central start position condition existed for the first (i.e., Face Orientation) study.

Figure 1.

Schematic of trial sequences. A face was only presented if the participant successfully maintained fixation for a total of 1.5 seconds. After face onset in the study phase, participants were free to study the face for up to 10 seconds and pressed a button to begin the next trial. In the test phase, faces were presented for one second only and participants responded with button presses to indicate whether the face was ‘old’ or ‘new’.

2.3 - Participants

Fifty individuals residing in the greater Washington, D.C. area participated. Of those, 30 (11 male) participated in the experiment in which Race of Face and Start Position were manipulated. From that group, one participant’s data were excluded from analysis due to partial data corruption. The remaining 20 individuals (12 male) participated in the experiment in which Face Orientation and Start Position were manipulated. From that group, one participant’s data were excluded from analyses requiring test phase eye-movement data or recognition performance data because that participant did not complete the test phase. All participants were Western Caucasians because eye-movement differences have been reported among different races/cultures of observers (e.g., Blais, Jack, Scheepers, Fiset, & Caldara, 2008, though see Goldinger, He, & Papesh, 2009) and we were interested in individual difference measures that could not be explained by this effect.

2.4 - Analyses

2.4.1 - Software

We used EyeLink Data Viewer software by SR Research to obtain the fixation and AOI data. With custom Matlab (The MathWorks, Inc., Natick, MA, USA) code, we performed subsequent analyses on these data and on the behavioral data from the test phase. ANOVAs were conducted with SPSS statistical software (IBM, Somers, NY).

2.4.2 - Behavior

For the purposes of investigating the potential relationship of eye-movement patterns with facial recognition performance, we analyzed participants’ discrimination performance on the old/new recognition task. For each participant, d’ [z(hit rate) - z(false alarm rate)] was computed for discrimination performance for Caucasian faces in the other-race experiment and for upright faces in the face orientation experiment. Because only the left and right start position conditions were included in the spatial density analyses, likewise only the left and right start position condition trials were included in the d’ calculations. Additionally, to avoid infinite/undefined d’ values, we corrected hit and false alarm rates if they were at ceiling or floor values. Specifically, a hit or false alarm rate value of zero was adjusted to 1/(2*(possible responses)) and a value of one was adjusted to (2 * (possible responses) - 1)/(2 * (possible responses)).
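The d’ computation with the ceiling/floor correction can be illustrated with a minimal Python sketch. This is not the authors’ Matlab code. The function names are hypothetical, and the sketch assumes that “possible responses” means the number of trials on which the given response type could occur (old trials for hits, new trials for false alarms).

```python
from statistics import NormalDist


def corrected_rate(count, n_possible):
    """Response rate with the floor/ceiling correction described in the text.

    Assumption: n_possible is the number of trials on which this response
    type could have occurred ("possible responses").
    """
    if count == 0:
        return 1.0 / (2 * n_possible)                      # floor: 0 -> 1/(2N)
    if count == n_possible:
        return (2 * n_possible - 1) / (2 * n_possible)     # ceiling: 1 -> (2N-1)/(2N)
    return count / n_possible


def d_prime(hits, n_old, false_alarms, n_new):
    """d' = z(hit rate) - z(false alarm rate), using corrected rates."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(corrected_rate(hits, n_old)) - z(corrected_rate(false_alarms, n_new))
```

With this correction, a participant at ceiling on hits and at floor on false alarms receives a large but finite d’ rather than an undefined value.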

2.4.3 - Spatial Density Analyses

To measure the individual differences in eye-movement patterns, we first mapped the spatial density of fixations for each participant under various experimental conditions (i.e., Race of Face or Face Orientation, Start Position, Study/Test Phase, Time Window). When comparing individual eye-movement patterns to behavioral performance or when attempting to discover clustering among individual eye-movement patterns, the spatial density maps utilized were those only of Caucasian/upright faces, but with left and right Start Position pooled, and study and test Phase pooled. Except for Time Window analyses, all spatial density maps were produced from all of the valid eye position samples recorded within the first second of the relevant trials. This time-restricted analysis was done so that the amount of data would be comparable across subjects for each analysis. In addition, the first second of each trial corresponds principally to those eye-movements putatively most functionally necessary and sufficient for face perception, given that optimal face recognition occurs within two fixations (Hsiao & Cottrell, 2008) and that an individual’s idiosyncratic preferred location of initial fixation has been shown to be functionally relevant to face recognition (Peterson & Eckstein, 2013). Invalid samples included samples during blinks or after button presses which signaled the end of the trial. For Time Window analyses, spatial density maps were produced from all valid samples within one-second time windows from the first to the fifth second within study phase trials of the other-race experiment. Due to computational constraints, data from the other-race experiment were downsampled to a sampling frequency of 250 Hz.

We ensured that summation of fixation maps across different face trials would produce spatially meaningful density maps by first aligning the fixation maps for individual faces to a common reference frame using only simple spatial translations. The internal facial features defined this reference frame. Specifically, the sum of the squared differences between the center of the AOIs for each face and the average centers of the AOIs across all faces was minimized in the alignment. Then each gaze sample was plotted in this common reference frame as a Gaussian density with a mean of 0 and a standard deviation of 0.3° of visual angle in both the x and y dimensions. We then summed these density plots across trials of the relevant experimental condition. When plotting the resulting maps, we used a color scale from zero to the maximum observed density value, with zero values represented in deep blue and the maximum density as red.
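The alignment and density-mapping steps can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the authors’ implementation: the function names and grid layout are hypothetical, coordinates are assumed to be in degrees of visual angle, and the alignment uses the closed-form least-squares solution for a pure translation (the mean difference between corresponding AOI centers).

```python
import numpy as np


def alignment_offset(face_aoi_centers, mean_aoi_centers):
    """Translation that minimizes the summed squared distance between one
    face's AOI centers and the across-face average AOI centers.

    Both inputs are (n_aois, 2) arrays. For a pure translation the
    least-squares solution is the mean of the pairwise differences.
    """
    face_aoi_centers = np.asarray(face_aoi_centers, dtype=float)
    mean_aoi_centers = np.asarray(mean_aoi_centers, dtype=float)
    return (mean_aoi_centers - face_aoi_centers).mean(axis=0)


def density_map(samples_deg, x_grid, y_grid, sd_deg=0.3):
    """Sum an isotropic 2-D Gaussian (SD = sd_deg in x and y) centered on
    each aligned gaze sample, evaluated on a regular grid in degrees."""
    xx, yy = np.meshgrid(x_grid, y_grid)
    dmap = np.zeros_like(xx, dtype=float)
    norm = 1.0 / (2.0 * np.pi * sd_deg ** 2)
    for sx, sy in samples_deg:
        dmap += norm * np.exp(-((xx - sx) ** 2 + (yy - sy) ** 2) / (2.0 * sd_deg ** 2))
    return dmap
```

Maps computed per trial in this way can be summed across the trials of a condition because every sample has already been shifted into the common reference frame.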

2.4.4 - Similarity Matrix Analyses

We computed similarity matrices from the spatial density data to quantify the similarity between fixation patterns among participants or across different experimental conditions. Each cell in a similarity matrix corresponds to a comparison between two conditions (or in the present study, between two participants), and contains the value of the given similarity measure (e.g., correlation value, Euclidean distance, etc.) for that comparison. This similarity matrix methodology, along with the discrimination analyses that complement it (see Discrimination Analyses subsection below), has become mainstream in the fMRI literature (see Haxby et al., 2001; Kriegeskorte et al., 2008). Further, several prior eye-tracking studies have also made use of it (Benson et al., 2012; Borji & Itti, 2014; Greene, Liu, & Wolfe, 2012; Tseng et al., 2013), including two investigating face perception (Kanan et al., 2015; Mehoudar et al., 2014).

To produce similarity matrices, we conducted “split-half” analyses. We first split the eye-movement data into two halves, namely, the trials from the first and last half of the given phase (i.e., study or test), since each of these halves had equal numbers of trials of all possible condition combinations (race of face or face orientation, start position, gender). When including test phase in analyses, we included only those trials in which the observed faces were novel and, thus, not present in the study phase. This was done so that the face stimuli included from the study and test phases were equally unfamiliar to a given participant, thus removing face familiarity as a confound for any modulation we might observe in the similarity measures.

Spearman’s correlations between corresponding pixels’ density values were calculated between participants across the split halves of the data. When correlating within given conditions (e.g., upright faces in the study phase) both halves of the data were of the same conditions, but when correlating between given conditions (e.g., study versus test phase) one half of the data was of one condition and the second half of the other condition. Importantly, when correlating between upright and inverted orientation conditions, the spatial density map for the inverted condition was first “un-inverted” so that it would be in the same face-centric reference frame as the upright condition map.
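A minimal Python sketch of the split-half similarity matrix is given below for illustration. It assumes each participant’s density map has been flattened to one row of pixel values, and it computes Spearman’s correlation as the Pearson correlation of (tie-averaged) ranks. The function name is hypothetical and this is not the authors’ Matlab code.

```python
import numpy as np
from scipy.stats import rankdata


def split_half_similarity(half1, half2):
    """Spearman correlations between participants across split halves.

    half1, half2: (n_participants, n_pixels) arrays of flattened density
    maps from the first and second halves of the data. Returns S, where
    S[i, j] is the correlation between participant i's first-half map and
    participant j's second-half map. Rows must not be constant.
    """
    r1 = rankdata(half1, axis=1)  # rank pixels within each participant
    r2 = rankdata(half2, axis=1)
    r1 = r1 - r1.mean(axis=1, keepdims=True)
    r2 = r2 - r2.mean(axis=1, keepdims=True)
    r1 = r1 / np.linalg.norm(r1, axis=1, keepdims=True)
    r2 = r2 / np.linalg.norm(r2, axis=1, keepdims=True)
    return r1 @ r2.T
```

In this layout the diagonal holds each participant’s within-participant split-half correlation and the off-diagonal cells hold between-participant correlations. For upright versus inverted comparisons, the inverted map would first be flipped back into the upright, face-centric frame before flattening.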

2.4.5 - Dissimilarity Matrix Analyses

For the purposes of visualizing potential groupings among various idiosyncratic eye-movement patterns, we produced dissimilarity matrices, which contain a measure of difference or “distance” between all of the various spatial density patterns across our participants. The distances were calculated as the correlation values of the similarity matrix subtracted from one.

2.4.6 - Discrimination Analyses

Discriminability index

Using the correlation values from the similarity matrix analyses, we conducted several discrimination analyses. These discrimination analyses quantified and tested the statistical significance of the average distinctiveness (“discriminability”) of the eye-movement patterns of given participants compared to those of the others. We focused particularly on the discriminability among participants, given certain experimental conditions (i.e., Race of Face or Face Orientation, Start Position, Study/Test Phase, Time Window) or across given conditions (e.g., discrimination of subjects for left start position condition using right start position data). The diagonals of the similarity matrices corresponded to the correlation between the two halves of the data from the same participant, while the cells off the diagonal corresponded to those split-half correlations between non-identical participants. Therefore, the discriminability value of each participant was calculated as the mean difference between the diagonal cell and the off-diagonal cells in the given participant’s corresponding row of the given similarity matrix, where one given row corresponds to one given participant’s first half of the data, and, likewise, each column to each participant’s second half of the data. Thus, a discriminability value existed for each participant. Larger positive values for a participant indicate greater relative discriminability. When discrimination was conducted across different conditions (e.g., discrimination of subjects for left start position condition using right start position data), only the eye-movement data of the first half of the first condition and the second half of the second condition were utilized, so that the resulting discrimination measures would be conceptually and statistically comparable to those calculated within given conditions.
On the distribution of discriminability indices across participants, we conducted a one-sample, one-tailed (greater than zero) t-test to determine the statistical significance of average discriminability among participants. We chose a one-tailed test since, in this context, negative discrimination values are not interpretable.
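The discriminability index and its significance test can be illustrated with the following Python sketch. It assumes a square similarity matrix whose rows index first-half data and whose columns index second-half data. The function names are hypothetical, and the one-tailed p-value is derived from SciPy’s two-sided one-sample t-test.

```python
import numpy as np
from scipy import stats


def discriminability(sim):
    """Per-participant discriminability: diagonal (same-participant)
    correlation minus the mean of the off-diagonal correlations in the
    same row of the similarity matrix."""
    sim = np.asarray(sim, dtype=float)
    n = sim.shape[0]
    diag = np.diag(sim)
    off_diag_mean = (sim.sum(axis=1) - diag) / (n - 1)
    return diag - off_diag_mean


def one_tailed_ttest_greater(values):
    """One-sample t-test of mean(values) > 0, derived from the two-sided test."""
    t_stat, p_two_sided = stats.ttest_1samp(values, 0.0)
    p_one_sided = p_two_sided / 2.0 if t_stat > 0 else 1.0 - p_two_sided / 2.0
    return float(t_stat), float(p_one_sided)
```

For example, a row with a diagonal value of 0.9 and off-diagonal values of 0.1 and 0.2 gives a discriminability of 0.9 - 0.15 = 0.75.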

Identification accuracy

We also calculated a more stringent index of discrimination that we call identification accuracy, which was the accuracy at which the second halves of participant data could be uniquely identified using the first halves. To compute this index, we again utilized the correlation values from the relevant similarity matrix. Whenever the diagonal cell of the similarity matrix (data half 1 correlated with data half 2 for the same participant) contained the highest correlation value in its row (data half 1 of a given participant correlated with data half 2 of every participant), data half 2 of that participant was considered to be correctly identified from data half 1. The identification accuracy index is the percentage of such correct identifications over all rows (participants). Thus each similarity matrix had a single identification accuracy index associated with it. Given random data, the probability that any given participant could be correctly identified is 1/n, where n is the number of subjects (columns) in the matrix. Thus the probability (p-value) that a given identification accuracy index was at chance was also calculated using the binomial test.
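A minimal Python sketch of the identification accuracy index follows. It is illustrative only: the function name is hypothetical, ties are resolved in favor of the first maximal column, and the binomial test is assumed to be one-sided (the probability of observing at least the obtained number of correct identifications when each has chance probability 1/n).

```python
import numpy as np
from scipy.stats import binom


def identification_accuracy(sim):
    """Proportion of rows whose diagonal cell is the row maximum, with a
    one-sided binomial p-value against chance (1/n per participant)."""
    sim = np.asarray(sim, dtype=float)
    n = sim.shape[0]
    n_correct = int(np.sum(np.argmax(sim, axis=1) == np.arange(n)))
    # P(X >= n_correct) for X ~ Binomial(n, 1/n)
    p_value = float(binom.sf(n_correct - 1, n, 1.0 / n))
    return n_correct / n, p_value
```

Multiplying the returned proportion by 100 gives the percentage reported as the identification accuracy index.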

2.4.7 - Eye-movement Pattern Clustering and Cluster Evaluation

In order to discover any natural clusters of idiosyncratic eye-movement patterns across participants, we applied UPGMA hierarchical agglomerative clustering (Sokal, 1958) to the eye-movement data and evaluated the relative strengths of the potential cluster solutions for different numbers of clusters using average silhouette values (Rousseeuw, 1987), which are values derived from a comparison of the tightness and separation of each cluster. Data from all the possible 48 participants from both studies were included in these analyses. Only the data from upright/Caucasian face trials but with pooled left and right Start Position and pooled study and test Phase conditions were utilized so that data between the two experiments could be combined. Two separate clustering analyses were performed: one using the participants’ spatial densities and the other using the coordinates of the peak in the spatial densities across participants. The number of clusters with the peak average silhouette value among the cluster numbers tested was used to determine the natural number of clusters, unless the average silhouette values were low (<0.5), according to standard criteria.

UPGMA rationale

Because the criteria chosen for optimization in a given clustering algorithm determine the nature (e.g., shape, density, etc.) of the cluster solutions that tend to be produced, it was important to apply the criteria that are most suitable to the purpose at hand. One aim (and expectation) in our study was to discover natural clusters of peak spatial densities that correspond spatially to fairly focal regions on the face (e.g., left eye vs. right eye), so we chose the UPGMA clustering algorithm because it is well suited for data containing globular clusters. UPGMA was also well suited to our (overall) spatial density data, where distances among participants’ patterns were defined as correlation distances in a non-Euclidean space. UPGMA begins by treating each data point as a separate cluster and then proceeds in steps. At each step, the two most proximal clusters are combined, where distance between clusters is defined as the average distance of all pairs of points between given clusters.

Average silhouette value rationale

A silhouette value for a given data point is the result of a normalized contrast between (a) the average distance from all other points within the given cluster and (b) the average distance from all points in the nearest neighboring cluster. A silhouette value at or near zero thus indicates that the point lies at or near the “boundary” of the two clusters under consideration. A value closer to +1 indicates that the point is better matched to the assigned cluster than to the nearest neighbor cluster, while a value closer to −1 indicates the converse. When cluster assignments are artificial or inappropriate, relatively lower silhouette values will be more common. Therefore, an average silhouette value (i.e., the silhouette values averaged across all data points across all clusters) quantifies how natural/appropriate the assigned clusters under consideration are. The closer an average silhouette value is to +1, the tighter the points are within the clusters to which they have been assigned, notwithstanding that a few individual points may not “fit in” as strongly with the other points of their respective assigned clusters. A rule of thumb for evaluating the strength of clustering with average silhouette values is the following: < 0.25 => no clustering, 0.25–0.50 => artificial/weak clustering, 0.50–0.70 => reasonable clustering, 0.70–1.0 => strong (Kaufman & Rousseeuw, 1990). Note that even for a reasonable or strong clustering solution, there may be cluster structure within the designated clusters such that treating those “sub-clusters” as separate then results in an even stronger solution. So, in order to find the most natural number of clusters, one determines the number of clusters that results in the maximum average silhouette value.
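The silhouette contrast described above can be written out directly. The following Python sketch is illustrative, with a hypothetical function name. It assumes a symmetric distance matrix with zeros on the diagonal and at least two clusters, and it assigns a silhouette of zero to points in singleton clusters, following Rousseeuw’s convention.

```python
import numpy as np


def silhouette_values(dist, labels):
    """Silhouette value s = (b - a) / max(a, b) for each point, where a is
    the mean distance to the other points in the point's own cluster and b
    is the mean distance to the points of the nearest other cluster."""
    dist = np.asarray(dist, dtype=float)
    labels = np.asarray(labels)
    clusters = np.unique(labels)
    sil = np.zeros(len(labels))
    for i in range(len(labels)):
        same = labels == labels[i]
        n_same = int(same.sum())
        if n_same == 1:
            continue  # singleton cluster: silhouette left at 0
        # dist[i, i] is 0, so dividing by (n_same - 1) excludes the point itself
        a = dist[i, same].sum() / (n_same - 1)
        b = min(dist[i, labels == c].mean() for c in clusters if c != labels[i])
        sil[i] = (b - a) / max(a, b)
    return sil
```

Averaging the returned values gives the average silhouette value for one candidate cluster solution; repeating this for each candidate number of clusters and taking the maximum identifies the most natural number.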

Implementation

The hierarchical clustering was performed with the Matlab function ‘linkage’ with the distance computation method set to ‘average’ and the distance metric set to ‘spearman’ for the spatial density-based analysis and set to ‘euclidean’ for the peak-based analysis. Average silhouette value evaluations of the cluster solutions were performed with the Matlab function ‘evalclusters’ with the clustering algorithm set to ‘linkage’, the evaluation criterion set to ‘silhouette’, the range of cluster numbers to evaluate set from 2 to 15 clusters, and the distance metric set to the upper triangle vector representation of the spearman dissimilarity matrix for the spatial density-based analysis and set to squared Euclidean distance for the peak-based analysis. Cophenetic correlation coefficients for the hierarchical cluster trees were computed using the Matlab function ‘cophenet’. Cophenetic correlation is an index of how closely the cluster tree represents the actual dissimilarities among observations. Specifically, it is calculated as the linear correlation between the distances within the cluster tree and the original dissimilarities used to construct the tree. Thus, a Cophenetic correlation value close to one indicates a close correspondence between the cluster tree and the original data.
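For readers working outside Matlab, a rough SciPy analogue of the peak-based part of this pipeline is sketched below. It is not the authors’ code and covers only tree construction, the cophenetic correlation, and cutting the tree into a chosen number of clusters. The peak coordinates are synthetic, generated solely for illustration, and the variable names are hypothetical. Each candidate cut would then be scored with average silhouette values to choose the number of clusters.

```python
import numpy as np
from scipy.cluster.hierarchy import cophenet, fcluster, linkage
from scipy.spatial.distance import pdist

# Synthetic (x, y) density peaks around four made-up locations; these are
# NOT the study's data, only placeholders to make the example runnable.
rng = np.random.default_rng(0)
centers = np.array([[-2.0, 2.0], [2.0, 2.0], [0.0, 2.5], [0.0, -1.0]])
peaks = np.vstack([c + 0.2 * rng.standard_normal((12, 2)) for c in centers])

# Condensed vector of pairwise Euclidean distances between peaks
distances = pdist(peaks, metric="euclidean")

# UPGMA corresponds to average linkage
tree = linkage(distances, method="average")

# Cophenetic correlation: agreement between tree distances and the
# original pairwise distances
coph_corr, _ = cophenet(tree, distances)

# Cut the tree into (at most) four clusters
labels = fcluster(tree, t=4, criterion="maxclust")
```

For the density-based analysis, the same calls could be given a condensed vector of correlation distances (one minus the Spearman correlation) in place of the Euclidean distances.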

3. - Results

For clarity, results are reported in order of importance. This differs from the order of analyses as described in the Materials and Methods section, where analyses are organized according to the sequence by which the analyses were derived.

3.1 - Clustering of eye-movement density patterns among participants

We attempted to uncover any natural clusters in the eye-movement spatial density patterns across participants (see Methods). Average silhouette values (Supplementary Figure 1) for numbers of clusters from two to 15 on the hierarchical clustering solutions were quite low (<0.35) suggesting that none of these numbers of clusters correspond to natural groupings in the spatial density patterns across participants; therefore, we failed to find clusters of idiosyncratic patterns using the full maps of spatial densities. The Cophenetic correlation coefficient for the hierarchical cluster tree is C = 0.77.

3.2 - Clustering of peak eye-movement density among participants

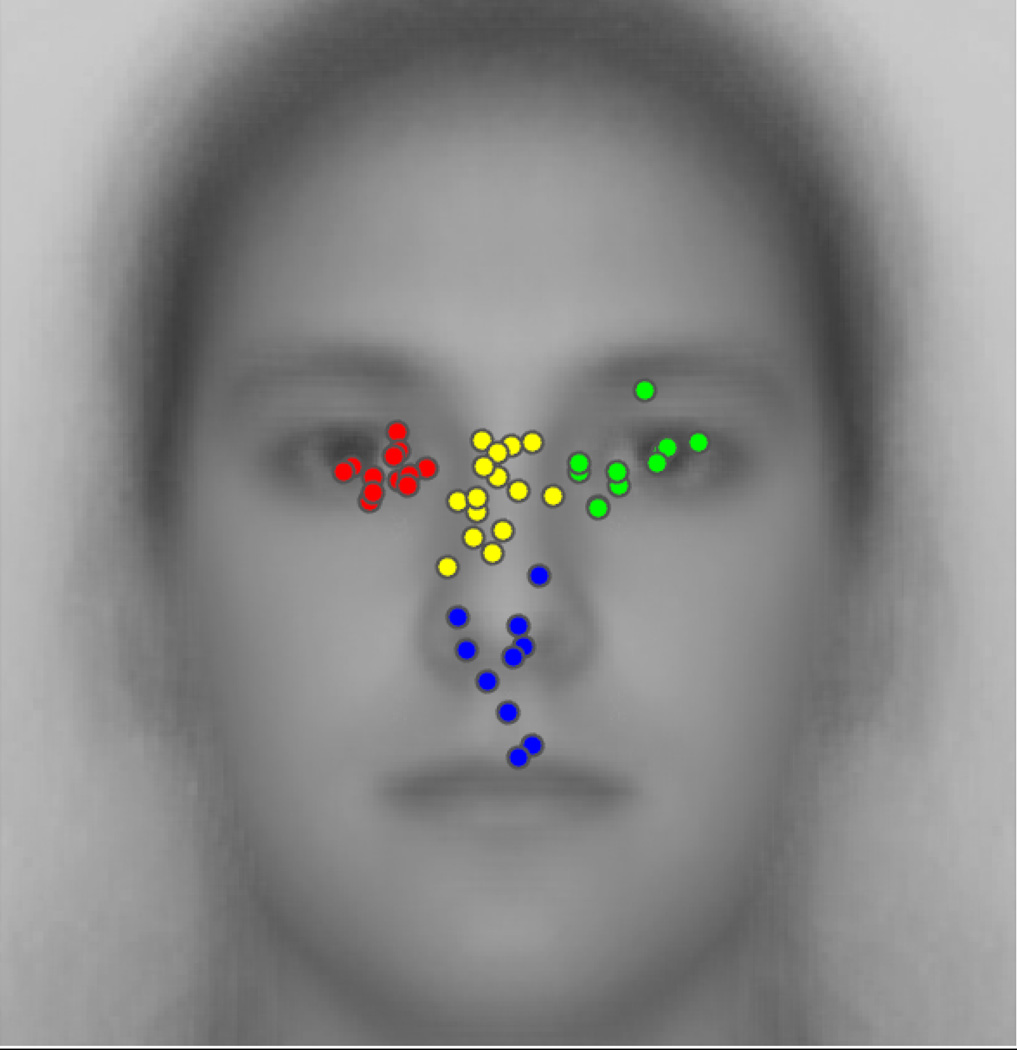

We plotted the peak spatial density of eye-movements across the 48 participants included in our analysis (Figure 2). Qualitative observation suggests a fairly continuous variability in individual differences in eye-movement density peaks. Further, this distribution of peaks across participants resembles the classic T-shaped pattern frequently reported at the group level in previous studies, while also indicating the great diversity in individual patterns.

Figure 2.

Distribution of peak eye-movement density among all participants. The four natural clusters are indicated in different dot colors. The underlain face image is the average of all the relevant faces presented during the experiments.

However, we also uncovered four moderately strong natural clusters among these peaks. Average silhouette plots (Supplementary Figure 2) for numbers of clusters from two to 15 on the hierarchical clustering solutions revealed that the solution for four clusters yielded the highest average silhouette value, namely 0.7087. Because the solution for three clusters (where left eye and nasion/bridge clusters formed a single cluster) yielded a value (0.7074) nearly as high as that for four, we conducted an additional gap statistic evaluation on the data (Supplementary Figure 3), which confirmed that four is the optimal number of clusters. The Cophenetic correlation coefficient for the hierarchical cluster tree is C = 0.76.

These four natural clusters correspond to one cluster over the left eye region (observer’s perspective), one over the right eye region, one over the nasion/bridge of the nose, and a final cluster spanning the nose, philtrum, and upper lip. The prevalences for peaks in these four clusters are, respectively, approximately 25%, 23%, 31%, and 20%.

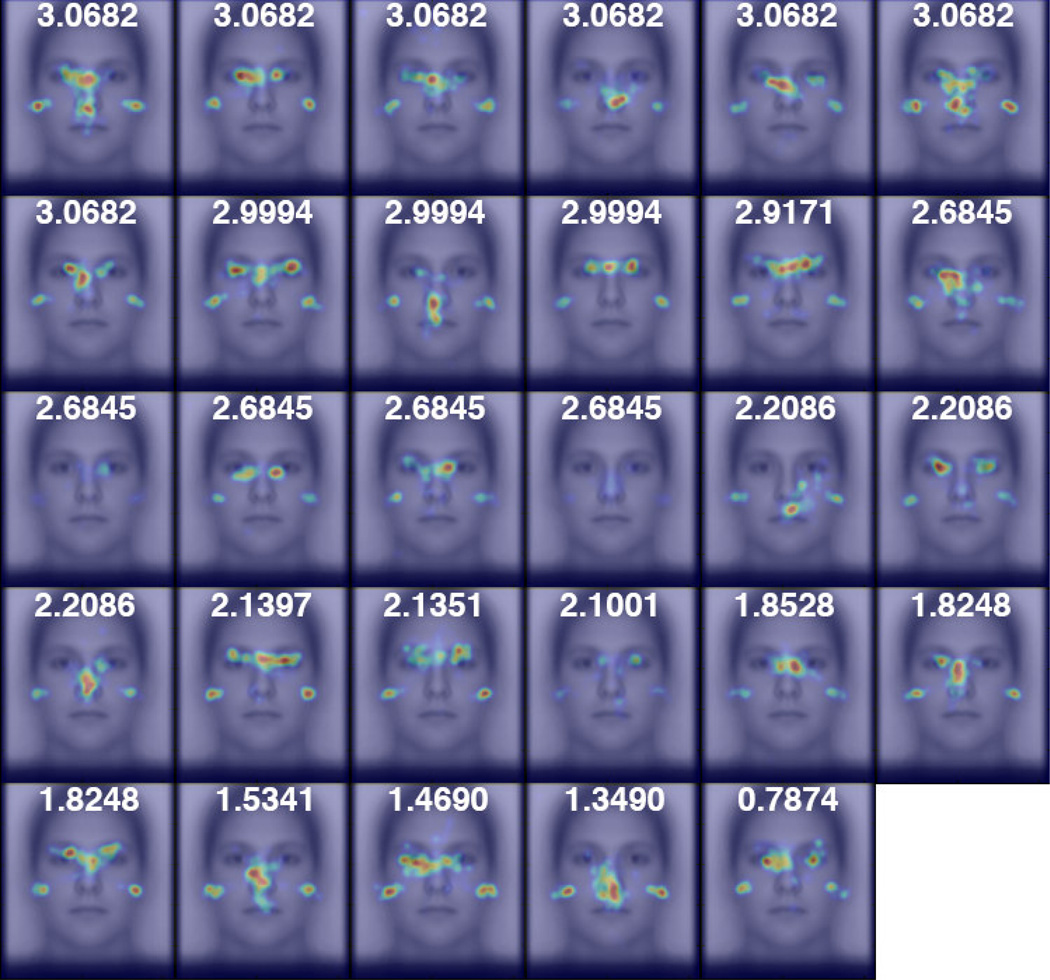

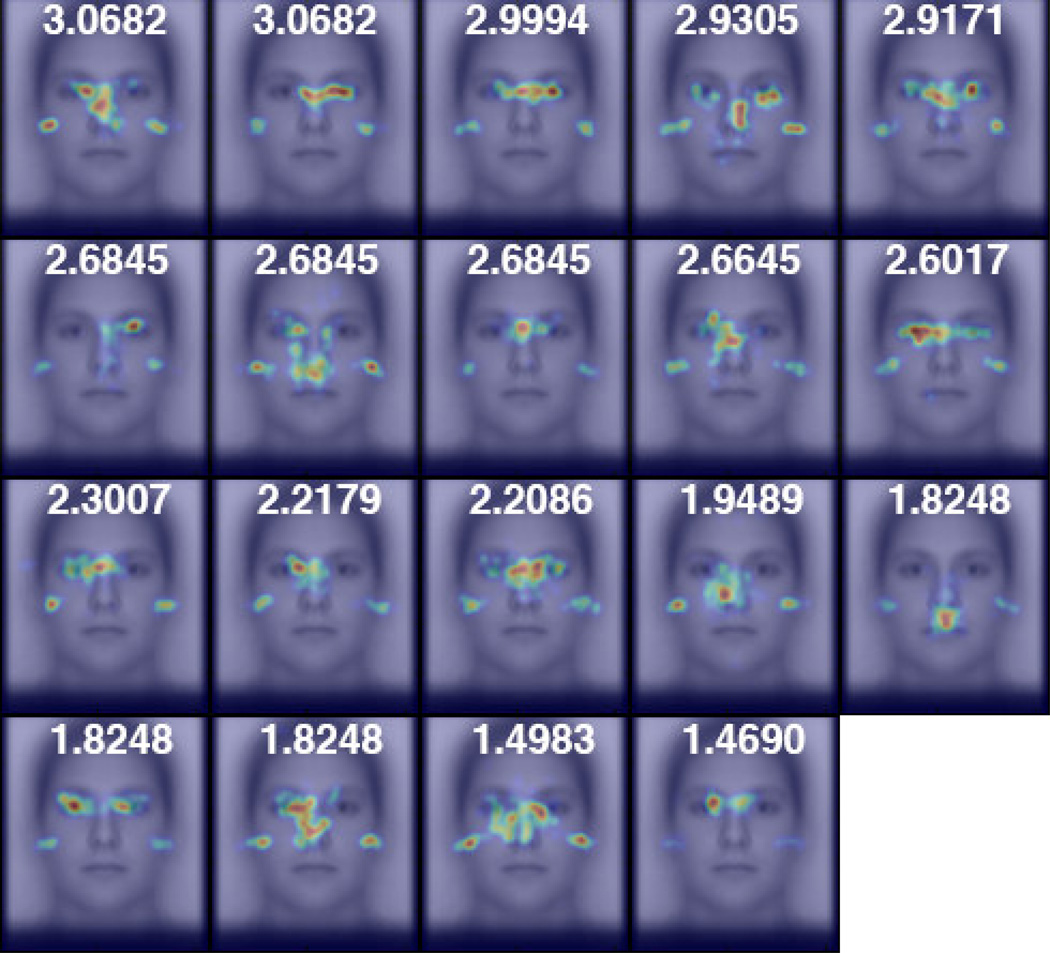

3.3 - Recognition performance versus eye-movement patterns

Given that deviation from the “classic” T-shaped eye-movement pattern to faces has been related to facial processing impairment in clinical populations, but that prior studies have failed to find a similar relationship with respect to idiosyncratic eye-movement patterns in the healthy population (see Introduction), we also investigated whether idiosyncratic eye-movement patterns are related to facial recognition performance. For each experiment, we sorted the individual spatial density maps of our participants according to the participants’ facial discrimination performance (Figures 3 and 4). From this, no clear qualitative relationship between eye-movements and recognition performance could be observed. We also plotted both the x- and y-coordinates of the peak fixation density on the face against d-prime performance in each experiment (Supplementary Figure 4). We failed to find evidence of any correlation (Spearman’s correlation) in the x- (r < 0.38, p > 0.12, both experiments) or the y-coordinates (|r| < 0.094, p > 0.70, both experiments) to recognition performance.

Figure 3.

Participants’ spatial density maps for Caucasian faces from the Other-Race experiment ordered by facial recognition performance, as measured by d’. The focal densities on the left and right edges of the face reflect participants’ gaze at left and right pre-stimulus start positions before their first saccades.

Figure 4.

Participants’ spatial density maps for upright faces from the Face Orientation experiment ordered by facial recognition performance, as measured by d’.

3.4 - What factors modulate individual differences in eye-movements?

We focused on how Time Window (1st – 5th seconds) and Face Orientation (upright, inverted) each influenced the relative distinctiveness and consistency of individual observers’ eye-movement patterns in our participant sample. In supplementary analyses (see Supplementary Material), we also investigated the same for Race of Face (Caucasian, African, Chinese), pre-stimulus Start Position (left, right of upcoming face), and Phase (study, test). In particular, for each of these factors we investigated three aspects of individual differences in eye-movement patterns: i) Discriminability at each level of the given factor (i.e., For each level, are participants’ patterns distinct relative to one another?), ii) Relative Discriminability between levels of the given factor (e.g., Are participants’ patterns more distinct relative to one another for one level than another?), and iii) Individual Consistency Across Levels of the given factor (i.e., Are individual patterns consistent between levels?). We quantified these aspects using discrimination index and identification accuracy (see Methods).

To investigate effects of Race of Face, Start Position, Phase and Time Window we used the Other-Race Experiment data, rather than the Face Orientation Experiment data, because this maximized the amount of data per condition. Orientation was not manipulated in the Other-Race Experiment, so we used the Orientation Experiment data to analyze effects of orientation.

3.4.1 - Summary for Race of Face, Pre-stimulus Start Position, and Phase

The full discrimination results for the Race of Face, Pre-stimulus Start Position, and Phase factors are reported in Supplementary Results; however, we present a brief summary of the key findings for these factors here because they motivate analysis decisions implemented for the Time Window and Face Orientation factors.

Race of Face (Supplementary Figure 5) did not significantly modulate the distinctiveness of individual eye-movement patterns, and did not strongly modulate individual eye-movement patterns. Therefore, for all remaining discrimination analyses involving data from the other-race experiment (which includes the analysis of Time Window) we pooled eye-movement patterns across Race of Face.

Pre-stimulus Start Position (Supplementary Figure 6) may have modulated the distinctiveness of individual eye-movement patterns (see Start Position - Relative Discriminability in Supplementary Results for details). Further, the distinguishing information in individual eye-movement patterns differed across pre-stimulus Start Position conditions, as would be expected from prior research revealing that Start Position induces an overall fixation bias to the contralateral side of the face (J. Arizpe et al., 2012; J. M. Arizpe, Walsh, & Baker, 2015). For these reasons, for all other discrimination analyses, we averaged the correlation matrices from both start positions before calculating discriminability indices and identification accuracies.

Phase (Supplementary Figure 7) marginally significantly modulated the distinctiveness of individual eye-movement patterns, and significantly modulated individual eye-movement patterns. Given this evidence that our participants’ idiosyncratic eye-movement patterns were modulated across study and test phases, and because we cannot presently rule out that this may have been because of the artificial time restriction to make eye-movements during test phase, we focused only on data from the study phase (which was always self-paced) in all the other discrimination analyses.

3.4.2 - Time Window

Summary

Time Window modulated the distinctiveness of individual eye-movement patterns such that discriminability decreased with later time windows. In addition, eye-movement patterns differed significantly between time windows (Figure 5).

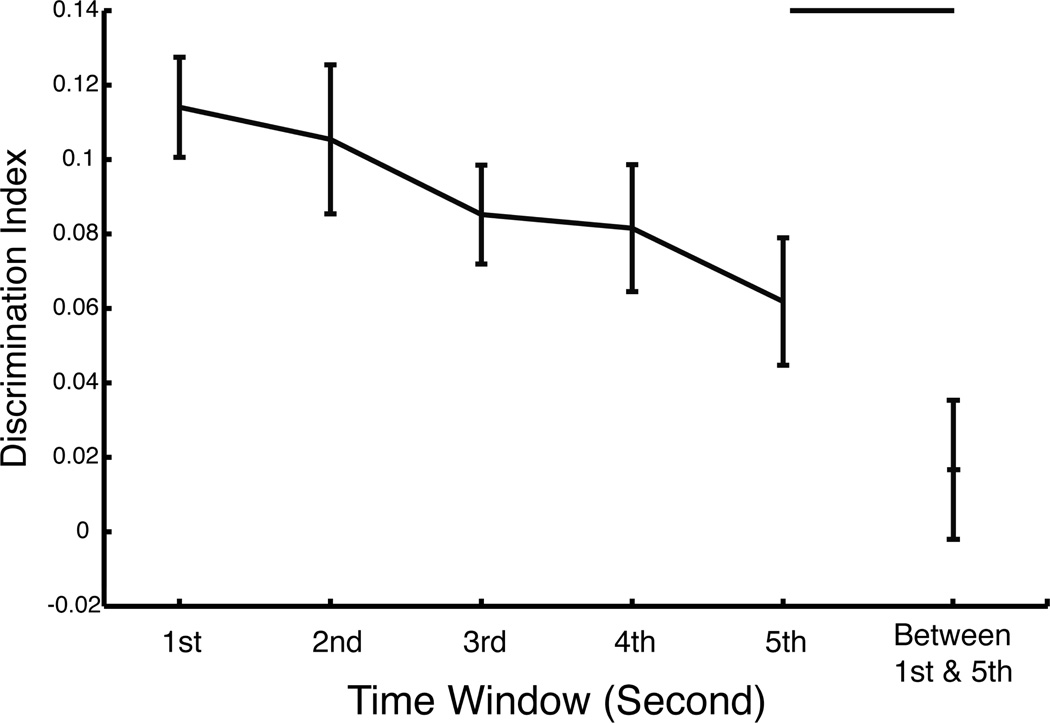

Figure 5.

Discrimination indices within- and between- Time Window (1st – 5th second) for the Other-Race experiment (all Race of Face conditions pooled and Start Position conditions averaged). Discrimination indices within each time window significantly decreased with time. Further, the between- 1st and 5th second discrimination index was not significantly greater than zero and was significantly lower than that for within the 5th second. Error bars represent ± 1 standard error.

Discriminability

Discriminability indices were significantly greater than zero for each one-second time-window (1st through 5th second, all: t(28) > 3.54, p < 0.0015, one-tailed) in the other-race experiment, thus indicating significant discriminating information in individual eye-movement patterns in each time-window. Identification accuracy was significantly greater than chance (all: p < 0.017), for each time window, except for the 3rd second (p > 0.076).

Relative Discriminability

Discriminability indices, however, decreased with time. The mean slope of the within-subject regression lines of discriminability index versus time (ordinal second) across participants was negative (m = −0.0128) and was significantly less than zero (t(28) < −2.75, p < 0.0052, one-tailed). This indicates that our participants’ idiosyncratic eye-movement patterns became less distinct with time.
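The slope analysis described above can be sketched as follows: fit a least-squares line to each participant's discrimination indices across the five seconds, then test the resulting slopes against zero with a one-sample t statistic. This is a minimal sketch under stated assumptions, not the authors' code; the function names and the small table of index values are hypothetical, and the p-value (which requires the t distribution with n − 1 degrees of freedom) is left out.

```python
from math import sqrt
from statistics import mean, stdev


def ols_slope(x, y):
    """Least-squares slope of y regressed on x."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum(
        (a - mx) ** 2 for a in x
    )


def one_sample_t(values, popmean=0.0):
    """Return (t statistic, degrees of freedom) for a one-sample t-test."""
    n = len(values)
    t = (mean(values) - popmean) / (stdev(values) / sqrt(n))
    return t, n - 1


seconds = [1, 2, 3, 4, 5]  # ordinal time window

# Hypothetical discrimination indices: one row per participant, one column per second.
indices_by_participant = [
    [0.30, 0.27, 0.26, 0.24, 0.22],
    [0.18, 0.19, 0.15, 0.16, 0.13],
    [0.25, 0.21, 0.22, 0.18, 0.19],
    [0.12, 0.13, 0.10, 0.11, 0.09],
]

slopes = [ols_slope(seconds, row) for row in indices_by_participant]
t_stat, df = one_sample_t(slopes)
print(f"mean slope = {mean(slopes):.4f}, t({df}) = {t_stat:.2f}")
```

A negative mean slope with a t statistic well below zero would correspond to the one-tailed result reported above, namely that discriminability decreases with time.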

Consistency Across Levels

When individual eye-movement patterns in the first Time Window were used to discriminate individuals in the fifth Time Window, the discriminability index was not significantly greater than zero (paired t(28) < 0.88, p > 0.38, two-tailed) and identification accuracy (0%) was not significantly greater than chance (p = 1). Also, interestingly, the between-time-window discrimination index was significantly lower than the within-time-window discrimination index for the fifth second (paired t(28) > 2.67, p < 0.0063, one-tailed). This suggests that our participants’ idiosyncratic eye-movement patterns varied across Time Window.

3.4.3 - Face Orientation

Summary

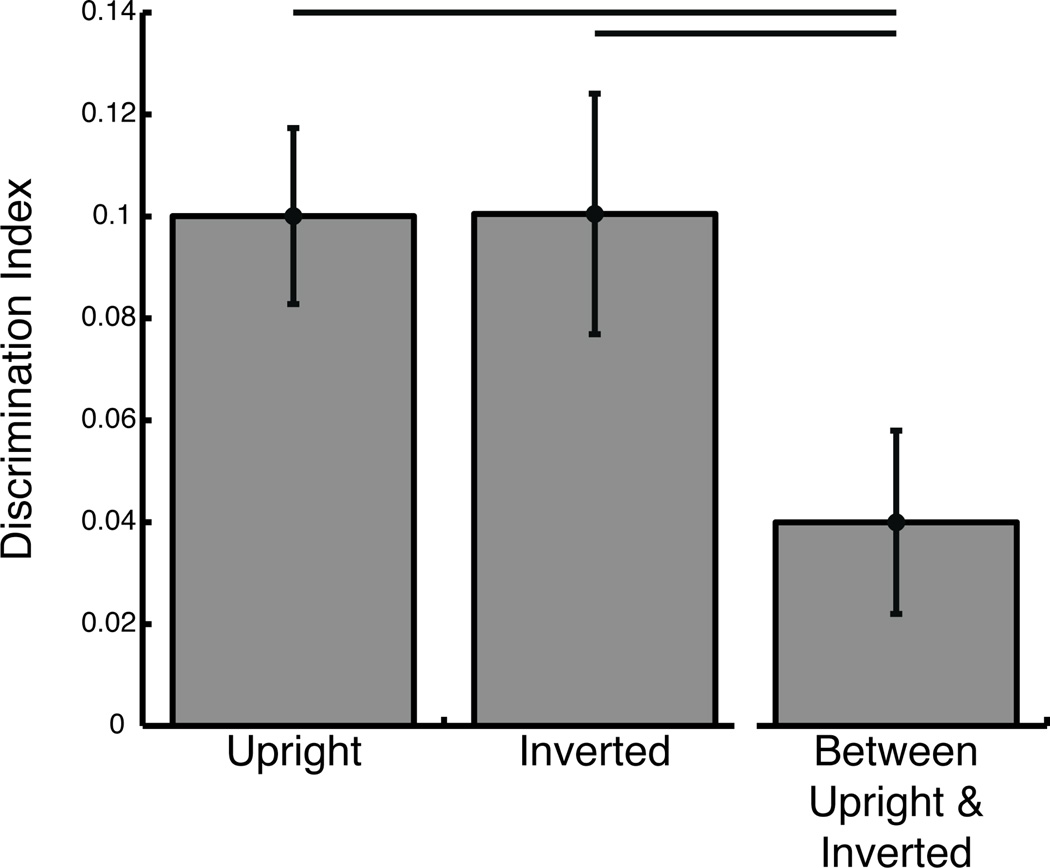

While Face Orientation modulated individual eye-movement patterns, it did not modulate the distinctiveness of those individual eye-movement patterns (Figure 6).

Figure 6.

Discrimination indices within- and between- Face Orientation (upright, inverted) conditions of the Face Orientation experiment (Start Position conditions averaged). The between-orientation discrimination index was significantly lower than either within-orientation discrimination index. Error bars represent ± 1 standard error.

Discriminability

Discriminability indices were significantly greater than zero for both upright and inverted faces (both t(19) > 4.15, p < 0.00055, one-tailed) in the face orientation experiment, and thus indicate significant discriminating information in individual eye-movement patterns in each face orientation. Identification accuracy was 25% both for upright and for inverted faces, and thus significantly greater than chance (p < 0.0027), for each face orientation.

Relative Discriminability

Discriminability indices did not differ between upright and inverted face conditions (paired t(19) < 0.016, p > 0.50, one-tailed), which suggests that participants were equally discriminable in both the upright and inverted face conditions.

Consistency Across Levels

When individual eye-movement patterns in the upright face condition were used to discriminate individuals in the inverted face condition, the discriminability index was significantly greater than zero (paired t(19) > 2.16, p < 0.044, one-tailed) though identification accuracy (10%) was not significantly greater than chance (p > 0.26). Also, interestingly, the between-orientation discrimination index was significantly lower than both of the within-orientation discrimination indices (both: paired t(19) > 2.64, p < 0.0080, one-tailed). This suggests that our participants’ idiosyncratic eye-movement patterns were different across upright and inverted face orientations, though the discriminability did not differ between face orientation conditions. Despite the quantitative differences in gaze pattern between face orientations, side-by-side upright and inverted face spatial density maps for each individual participant (Supplementary Figure 8) reveal some striking qualitative similarities that are only partially captured in the between orientation discriminability index.

4. - Discussion

4.1 - Categories and Frequencies of Idiosyncratic Eye-movement Patterns

The principal aims of our study were to estimate the diversity and frequencies of different natural categories of idiosyncratic eye-movement patterns to faces within the healthy population. Our findings indicate that while there may be a fairly continuous distribution of different patterns among the healthy population, distinct categories of eye-movement patterns could be discovered within the distribution. Specifically, four moderately strong natural clusters were discovered within the spatial distribution of peaks in the spatial density of eye-movements across participants. Approximately 25% of participants’ peaks clustered over the left eye region (observer’s perspective), 23% over the right eye-region, 31% over the nasion/bridge region of the nose, and 20% over the region spanning the nose, philtrum, and upper lips. As our participant population was screened for neurological and psychiatric disorders before participation, we estimate that these proportions approximate those found in the eye-movements across the normal healthy population. Given that our participants were all Western Caucasian individuals, and given the prior reports of differences in eye-movement patterns between different cultures/races of observers (e.g., Blais, Jack, Scheepers, Fiset, & Caldara, 2008, though see Goldinger, He, & Papesh, 2009), our findings may not generalize beyond the Western Caucasian population.

4.2 - Theoretical Considerations

Unlike studies of clinical populations, we found no evidence that deviations from the classic spatial eye-movement pattern in our healthy participant sample related to facial recognition performance. We cannot be certain that no aspects of our analytic or experimental design (e.g., using the same images for “old” test phase faces as study phase faces, or the self-paced nature of the paradigm) obscured a relationship between preferred fixation location and performance, so, as usual, caution is required in interpreting such a null result as definitive in isolation. Nonetheless, this null result is consistent with other research (P. Kim et al., 2013; Mehoudar et al., 2014), including a study that indicated that an individual fixating at his or her own idiosyncratic fixation location to a face leads to optimal facial recognition for them (Peterson & Eckstein, 2013). Such a notion of an idiosyncratic optimal fixation location for each individual, though, appears inconsistent with other research, which has reported that increased facial recognition performance was associated with increased fixation to the eyes of faces (Sekiguchi, 2011), and with the evidence in favor of the importance of the visual information in the eyes for accurate and rapid facial recognition (Caldara et al., 2005; Davies, Ellis, & Shepherd, 1977; Fraser, Craig, & Parker, 1990; Gosselin & Schyns, 2001; McKelvie, 1976; Schyns, Bonnar, & Gosselin, 2002; Sekuler, Gaspar, Gold, & Bennett, 2004; Vinette, Gosselin, & Schyns, 2004). These apparently inconsistent results are however not necessarily incompatible. While the distribution of specific spatial eye-movement patterns to faces may be rather continuous across individuals, there is still a strong bias in the population distribution overall to fixate at or near the eyes, as is apparent in the classic eye-movement pattern commonly observed when participant data is averaged. 
Thus associations between fixation to the eyes and information use at the group level of an experiment should indeed reflect this bias at the population level to fixate the eyes for optimal performance, even though many individuals do not directly fixate the eyes very much.

Given that fixation location does not necessarily correspond to what or how visual information is processed (Caldara, Zhou, & Miellet, 2010), it remains unclear if the same facial information is used or if the same neural processing is employed during face recognition, regardless of whether an individual’s idiosyncratic eye-movement patterns are eye-focused or are focused elsewhere on the face. If we consider racial/cultural differences in eye-movement patterns to faces as a special case of individual differences in eye-movements, then one prior study (Caldara et al., 2010) provides evidence that there is consistency in the facial feature information principally utilized during face identification between groups of participants whose preferred eye-movement patterns greatly differed. More specifically, while the Eastern Asian participants tended to fixate the center of the face more than the Western Caucasian participants, both the Eastern Asian and Western Caucasian participants utilized the same eye facial feature information to identify faces, suggesting that the Eastern Asian participants preferred to use parafoveal vision to extract that same eye feature information. Future studies of individual differences in eye-movements are needed to test the generality of such a consistency in the diagnosticity of specific facial information across individuals with various idiosyncratic eye-movement patterns. How such diversity in idiosyncratic eye-movement patterns may relate to acquired or inherited differences in ocular or cortical visual processing (e.g., parafoveal acuity or cortical receptive field properties) also warrants investigation in future studies.

Along similar lines, future investigation into whether there are differences among our clusters in terms of any eye-movement or behavioral measures that are distinct from gaze location (Supplementary Figure 9 and Supplementary Figure 10) could be useful in understanding the basis of these preferred gaze location differences. If such differences in orthogonal measures were to be discovered, it would be important to determine whether the differences are intrinsically tied to gaze location or, rather, remain in effect even when participants are required to deviate from their preferred gaze locations. If the former, it would suggest a similarity in how healthy individuals process faces, in spite of the fact that some individuals deviate from a typical or optimal information sampling strategy. If the latter, it could reveal relevant mechanistic differences in how individuals process faces and, perhaps, visual stimuli more generally. We hypothesize that such mechanistic differences exist among individuals and provide a basis for the clusters we discovered.

Though the differences in idiosyncratic patterns of eye-movements in the healthy population do not seem to be associated with recognition performance (Blais et al., 2008; Peterson & Eckstein, 2013; Sekiguchi, 2011) as has been often reported in clinical populations (see Introduction), it is still possible that some of the mechanisms driving the development of the atypical eye-movement patterns in the clinical population may be at play in driving the diversity in eye-movements in the healthy population, at least for some individuals. At least two studies provide evidence for this possibility. One study (Dalton, Nacewicz, Alexander, & Davidson, 2007) reports that though the unaffected siblings of individuals with autism did not exhibit the reduced facial recognition performance of their autistic siblings, they nonetheless exhibited reduced fixation duration over the eyes relative to a control group, just as their autistic siblings had. Further, brain imaging analyses revealed that the unaffected siblings exhibited reduced BOLD signal change in the right posterior fusiform gyrus in response to viewing faces as well as reduced amygdala volume relative to the control group, just as their autistic siblings had. A sizable portion of the variance in BOLD signal change in regions of the fusiform gyrus could be accounted for by the variability in looking at the eyes for all groups though, suggesting that the individuals’ preferred eye-movement patterns could have influenced the BOLD signal changes. A second study (Adolphs, Spezio, Parlier, & Piven, 2008) reports that unaffected parents of individuals with autism, whether they exhibited aloof personality traits or not, exhibited an increased use of mouth facial information relative to controls during facial emotion judgment, in much the same way individuals with autism do.

Equally unclear and interesting is whether these individual differences in eye-movements emerge early in development, how heritable they are, and if they are associated with personality, cognitive traits, or developmental abnormalities. One study (Beevers et al., 2011) reports differences in eye-movements to emotional faces between groups of individuals with different serotonin transporter promoter region polymorphisms, indicating a link between particular alleles and particular preferred eye-movement patterns.

Our results principally reflect the spatial patterns of gaze across individuals. However, saccade characteristics and the temporal/ordinal dynamics of gaze likely also vary across individuals, possibly in ways that functionally relate to face perception. Some degree of visual perception, albeit depressed, is possible during saccades (Volkmann, 1962) and just prior to saccade onset, the location and shape of the receptive fields of some visually responsive neurons have been observed to shift with reference to the target of the saccade (Deubel & Schneider, 1996; Duhamel, Colby, & Goldberg, 1992; Hoffman & Subramaniam, 1995; Nakamura & Colby, 2002; Sommer & Wurtz, 2006; Tolias et al., 2001; Walker, Fitzgibbon, & Goldberg, 1995). Further, it has been reported that saccades and fixational eye-movements yield temporal transients of different spatial frequencies on the retina such that saccades affect contrast sensitivity at low spatial frequencies, possibly biasing stimulation to the magnocellular/dorsal visual pathway, and fixations affect sensitivity at high spatial frequencies, possibly biasing stimulation to the parvocellular/ventral visual pathway (Rucci, Poletti, Victor, & Boi, 2015). For facial recognition, human observers preferentially use a band of spatial frequency approximately 8–16 cycles per face (Costen, Parker, & Craw, 1996; Näsänen, 1999), though some evidence suggests that the role of spatial frequency differs depending on what information is used to perform the recognition (Cheung, Richler, Palmeri, & Gauthier, 2008; Goffaux, Hault, Michel, Vuong, & Rossion, 2005; Goffaux & Rossion, 2006). Further, lower spatial frequencies and distinct subcortical pathways are implicated in fear expression perception compared to facial recognition (Vuilleumier, Armony, Driver, & Dolan, 2003). Thus, the significance of any individual differences in saccade characteristics or temporal dynamics in gaze for face perception warrants future investigation.

4.3 - Time Window and Face Orientation Influences on Individual Differences

Time Window significantly modulated the distinctiveness of individual eye-movement patterns such that individual pattern discriminability decreased with later time windows (slope was significantly negative, p < 0.0052, one-tailed), suggesting that for each participant, eye-movement patterns early into a trial were more stereotyped than later ones. Additionally, discriminability was further weakened when measured between time windows (1st versus 5th second), compared to within time window (5th second), suggesting that the probability distribution of fixations employed over the various facial features was not constant across time for each participant, but rather evolved with time. This does not preclude the possibility that, over long time windows, the spatial pattern of eye-movements could be much more similar across participants, such that the differences among participants are rather more largely reflected in the ordinal sequence of eye-movements. Nonetheless, the idiosyncratic eye-movements most functionally relevant for face recognition occur within an early and short time window, given that optimal face recognition occurs within two fixations (Hsiao & Cottrell, 2008) and that an individual’s idiosyncratic preferred location of initial fixation has been shown to be functionally relevant to face recognition (Peterson & Eckstein, 2013).

While individual eye-movement patterns were not consistent quantitatively between upright and inverted faces (though see Supplementary Figure 8 for some notable qualitative similarities), individual patterns were nonetheless equally discriminable for each face orientation. Between-orientation discrimination was significantly weaker than within-orientation discrimination (p < 0.0080, one-tailed), indicating that Face Orientation strongly modulated eye-movement patterns within individuals. This evidence for modulation of eye-movement patterns is fully expected given prior research revealing inverted faces attracted relatively fewer fixations on the eye region and relatively more on the lower part of the face compared to upright faces (Barton et al., 2006), and especially given that such patterns were previously reported in the study from which this portion of our data was derived (J. Arizpe et al., 2012). Surprisingly, discrimination indices nonetheless did not differ between upright and inverted face orientations (p > 0.50, one-tailed), indicating that individual fixation patterns for inverted faces remained as distinct as those for upright faces. This finding seems inconsistent with a prior study (Barton et al., 2006) that reported individual eye-movement sequences were more random (less stereotyped) for inverted, compared to upright faces; however, the current study includes only the first second of eye-movements in the analysis, whereas the prior study utilized longer samples of eye-movement data. Given that earlier eye-movements appear more stereotyped than later ones, the difference in analyzed amount of eye-movement data between the current study and that prior study may factor into the discrepancy in results. Further, unlike that prior study, the current study does not take into account the order of individual fixations.
Our findings for Face Orientation highlight both that equal pattern discriminability between conditions does not necessarily imply highly similar patterns in the underlying data between conditions, and that differences in patterns between conditions do not necessarily imply condition differences in pattern discriminability.

4.4 - Novel measures of eye-movement patterns

To conduct our investigation into how these experimental factors modulated the relative distinctiveness among and consistency within individual spatial patterns of eye-movements we employed discrimination index and identification accuracy measures (see Methods) adapted for our eye-movement data. These measures have become highly utilized in the functional neuroimaging field for investigating the relative distinctiveness of neural or hemodynamic activation patterns under various conditions (Haxby et al., 2001; Kriegeskorte et al., 2008); however, despite the amenability of eye-tracking data (both spatial and temporal) to be submitted to these kinds of analyses as well as the versatility and utility of these measures in eye-tracking studies, only in recent years have these measures just begun to be utilized in eye-tracking research (Benson et al., 2012; Borji & Itti, 2014; Greene et al., 2012; Kanan et al., 2015; Mehoudar et al., 2014; Tseng et al., 2013). Among other advantages, such measures can be an effective means of detecting differences in eye-movement patterns, summarizing them within a low-dimensional space, or in conducting data-driven analyses. As is also true in the case of neuroimaging though, these measures also have their limitations and have particularities in how they may be validly interpreted. Specifically, the first measure, discrimination index, allows for quantifying the relative distinctiveness in data patterns among conditions overall (or among individual participants overall in the case of the present study). This measure is a global one, dependent on the patterns of other conditions, and so does not necessarily imply that a given condition is uniquely distinguishable from others. Rather it can be interpreted as a measure that quantifies the degree to which at least some of the other conditions can be differentiated based on data patterns from the given condition. 
The second measure, identification accuracy, as we have employed it in the present study, does however quantify the degree to which a given condition can be uniquely distinguished from other conditions based on data patterns. The advantage of this measure is that it is a more intuitive measure and potentially a more meaningful measure, depending on the context in which it is employed. When applying identification accuracy measures in the context of investigations of differences across experimental conditions (rather than in the context of participant individual differences, as in the present study) a distribution of identification accuracy values can be produced on which standard means hypothesis testing can be conducted. However, its disadvantages are that it is a highly conservative measure, and thus can lack sensitivity. The relationship between discrimination index and identification accuracy is also not, in all cases, necessarily straightforward as it is possible for data to yield a high discriminability index with low identification accuracy, or vice versa, under certain circumstances. Further, some gaze pattern differences, for example simple translation of one pattern compared to another, may reflect strongly in these quantitative indices when using correlation dissimilarities as the distance measure, notwithstanding that the shape, distribution, and scale between two patterns may be highly similar. Such differences likely partially explain why our between-orientation discrimination index was relatively low and identification accuracy was not above chance in spite of the qualitative individual pattern similarities between upright and inverted faces (Supplementary Figure 8).
For our current application of detecting any modulation of eye-movements between face orientations, sensitivity to such pattern differences is an advantage; however, it is possible that for other applications, it could be regarded as a nuisance, or could at least obscure other aspects of similarity between patterns that may be of interest. Therefore, consideration of what distance metric is most appropriate and interpretable for a particular application is important. Given the advantages and suitability of such discriminability measures to eye-tracking studies, more widespread use of them is strongly advised, along with the due prudence in how they are employed and interpreted.
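The point about translation can be illustrated with a small Python sketch. It builds a one-dimensional Gaussian "density profile", a copy shifted to a new location, and a copy rescaled in amplitude, then compares correlation dissimilarities (1 − r). The profile shape, sizes, and function names are hypothetical choices made only for illustration; real density maps are two-dimensional, but the same logic applies.

```python
from math import exp
from statistics import mean


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def gaussian_profile(center, width, n=41):
    """Unnormalized Gaussian bump sampled at integer positions 0..n-1."""
    return [exp(-0.5 * ((i - center) / width) ** 2) for i in range(n)]


base = gaussian_profile(center=15, width=2.0)
shifted = gaussian_profile(center=25, width=2.0)  # same shape, translated
scaled = [3.0 * v for v in base]                  # same location, larger amplitude

d_shift = 1.0 - pearson(base, shifted)
d_scale = 1.0 - pearson(base, scaled)
print(f"translated copy: {d_shift:.3f}, rescaled copy: {d_scale:.3f}")
```

In this toy case the translated copy is highly dissimilar under the correlation measure even though its shape is identical, whereas the rescaled copy is essentially indistinguishable from the original. A different distance metric would be needed if translation should be discounted.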

4.5 - Practical Considerations

Are there any practical implications for the potential to associate individuals to their eye-movement patterns? This potential could have useful applications within technological or security domains as individuals’ idiosyncratic eye-movement dynamics could serve as biometric signatures (Holland & Komogortsev, 2011; Kasprowski & Ober, 2004). While our findings suggest that the spatial patterns of eye-movements may not alone uniquely identify individuals in the majority of instances, even within just our limited participant sample, incorporating temporal and oculomotor dynamic information into the individual’s eye-movement biometric may enable greater discriminability among individuals. Given the currently expanding prevalence of eye-tracking technology, even within mobile phones, this potential could be exploited in future practical applications.

5. - Conclusions

We found a strikingly variable and rather continuous distribution of individual differences among our participants in the spatial pattern of eye-movements to faces. Importantly, four natural clusters were discovered in the spatial distribution of the peaks in the spatial density of eye-movements across participants. Specifically, approximately 25% of our healthy participants’ peaks clustered over the left eye region (observer’s perspective), 23% over the right eye-region, 31% over the nasion/bridge region of the nose, and 20% over the region spanning the nose, philtrum, and upper lips. We therefore estimate that these categories and percentages approximate those found in the normal healthy population. No relationship was evident between idiosyncratic eye-movement patterns and recognition performance. Finally, we found evidence that eye-movement patterns early into a trial were more stereotyped than those later into a trial, that idiosyncratic fixation patterns evolved with time into a trial, and that individual patterns to inverted faces did not become less distinct than those to upright faces, despite the strong modulation of eye-movement patterns due to inversion.

Supplementary Material

Highlights.

For the healthy population, there are idiosyncratic eye-movement patterns to faces

4 natural categories of idiosyncratic patterns among the peaks in density

25% left eye, 23% right eye, 31% nasion/bridge, and 20% nose/philtrum/lip

No relation between idiosyncratic eye-movement patterns and recognition performance

Time into trial changes pattern and distinctiveness, face inversion changes pattern

Acknowledgments

The Intramural Program of the National Institute of Mental Health (ZIAMH002909) and The United States-Israel Binational Science Foundation (Application # 2007034) provided funding for this study.

References

- Adolphs R, Spezio ML, Parlier M, Piven J. Distinct face-processing strategies in parents of autistic children. Current Biology: CB. 2008;18(14):1090–1093. doi: 10.1016/j.cub.2008.06.073. http://doi.org/10.1016/j.cub.2008.06.073. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Althoff RR, Cohen NJ. Eye-movement-based memory effect: A reprocessing effect in face perception. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1999;25(4):997–1010. doi: 10.1037//0278-7393.25.4.997. http://doi.org/10.1037/0278-7393.25.4.997. [DOI] [PubMed] [Google Scholar]

- Andrews TJ, Coppola DM. Idiosyncratic characteristics of saccadic eye movements when viewing different visual environments. Vision Research. 1999;39(17):2947–2953. doi: 10.1016/s0042-6989(99)00019-x. http://doi.org/10.1016/S0042-6989(99)00019-X. [DOI] [PubMed] [Google Scholar]

- Arizpe J, Kravitz DJ, Walsh V, Yovel G, Baker CI. Differences in Looking at Own- and Other- Race Faces Are Subtle and Analysis- Dependent: An Account of Discrepant Reports. PLoS ONE. 2016;11(2):e0148253. doi: 10.1371/journal.pone.0148253. http://doi.org/10.1371/journal.pone.0148253. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arizpe J, Kravitz DJ, Yovel G, Baker CI. Start position strongly influences fixation patterns during face processing: difficulties with eye movements as a measure of information use. PloS One. 2012;7(2):e31106. doi: 10.1371/journal.pone.0031106. http://doi.org/10.1371/journal.pone.0031106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arizpe JM, Walsh V, Baker CI. Characteristic visuomotor influences on eye-movement patterns to faces and other high level stimuli. Frontiers in Psychology. 2015;6:1027. doi: 10.3389/fpsyg.2015.01027. http://doi.org/10.3389/fpsyg.2015.01027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barton JJS, Radcliffe N, Cherkasova MV, Edelman J, Intriligator JM. Information processing during face recognition: the effects of familiarity, inversion, and morphing on scanning fixations. Perception. 2006;35:1089–1105. doi: 10.1068/p5547. http://doi.org/10.1068/p5547. [DOI] [PubMed] [Google Scholar]

- Beevers CG, Marti CN, Lee H-J, Stote DL, Ferrell RE, Hariri AR, Telch MJ. Associations between serotonin transporter gene promoter region (5-HTTLPR) polymorphism and gaze bias for emotional information. Journal of Abnormal Psychology. 2011;120(1):187. doi: 10.1037/a0022125. [DOI] [PubMed] [Google Scholar]

- Benson PJ, Beedie SA, Shephard E, Giegling I, Rujescu D, St. Clair D, St Clair D. Simple viewing tests can detect eye movement abnormalities that distinguish schizophrenia cases from controls with exceptional accuracy. Biological Psychiatry. 2012;72(9):716–724. doi: 10.1016/j.biopsych.2012.04.019. http://doi.org/10.1016/j.biopsych.2012.04.019. [DOI] [PubMed] [Google Scholar]

- Bestelmeyer PEG, Tatler BW, Phillips LH, Fraser G, Benson PJ, St. Clair D. Global visual scanning abnormalities in schizophrenia and bipolar disorder. Schizophrenia Research. 2006;87(1):212–222. doi: 10.1016/j.schres.2006.06.015. http://doi.org/10.1016/j.schres.2006.06.015.

- Blais C, Jack RE, Scheepers C, Fiset D, Caldara R. Culture shapes how we look at faces. PLoS ONE. 2008;3(8):e3022. doi: 10.1371/journal.pone.0003022. http://doi.org/10.1371/journal.pone.0003022.

- Boot WR, Becic E, Kramer AF. Stable individual differences in search strategy? The effect of task demands and motivational factors on scanning strategy in visual search. Journal of Vision. 2009;9(3):7.1–16. doi: 10.1167/9.3.7. http://doi.org/10.1167/9.3.7.

- Borji A, Itti L. Defending Yarbus: Eye movements reveal observers’ task. Journal of Vision. 2014;14(3):29. doi: 10.1167/14.3.29. http://doi.org/10.1167/14.3.29.

- Caldara R, Schyns P, Mayer E, Smith ML, Gosselin F, Rossion B. Does prosopagnosia take the eyes out of face representations? Evidence for a defect in representing diagnostic facial information following brain damage. Journal of Cognitive Neuroscience. 2005;17(10):1652–1666. doi: 10.1162/089892905774597254. http://doi.org/10.1162/089892905774597254.

- Caldara R, Zhou X, Miellet S. Putting culture under the “Spotlight” reveals universal information use for face recognition. PLoS ONE. 2010;5(3):e9708. doi: 10.1371/journal.pone.0009708. http://doi.org/10.1371/journal.pone.0009708.

- Castelhano MS, Henderson JM. Stable individual differences across images in human saccadic eye movements. Canadian Journal of Experimental Psychology = Revue Canadienne de Psychologie Expérimentale. 2008;62(1):1–14. doi: 10.1037/1196-1961.62.1.1. http://doi.org/10.1037/1196-1961.62.1.1.

- Cheung OS, Richler JJ, Palmeri TJ, Gauthier I. Revisiting the role of spatial frequencies in the holistic processing of faces. Journal of Experimental Psychology: Human Perception and Performance. 2008;34(6):1327–1336. doi: 10.1037/a0011752. http://doi.org/10.1037/a0011752.

- Costen NP, Parker DM, Craw I. Effects of high-pass and low-pass spatial filtering on face identification. Perception & Psychophysics. 1996;58(4):602–612. doi: 10.3758/bf03213093. http://doi.org/10.3758/BF03213093.

- Dalton KM, Nacewicz BM, Alexander AL, Davidson RJ. Gaze-fixation, brain activation, and amygdala volume in unaffected siblings of individuals with autism. Biological Psychiatry. 2007;61(4):512–520. doi: 10.1016/j.biopsych.2006.05.019. http://doi.org/10.1016/j.biopsych.2006.05.019.

- Davies G, Ellis H, Shepherd J. Cue saliency in faces as assessed by the “Photofit” technique. Perception. 1977;6(3):263–269. doi: 10.1068/p060263. http://doi.org/10.1068/p060263.

- Deubel H, Schneider WX. Saccade target selection and object recognition: Evidence for a common attentional mechanism. Vision Research. 1996;36(12):1827–1837. doi: 10.1016/0042-6989(95)00294-4. http://doi.org/10.1016/0042-6989(95)00294-4.

- Duhamel JR, Colby CL, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye movements. Science. 1992;255(5040):90–92. doi: 10.1126/science.1553535. http://doi.org/10.1126/science.1553535.

- Fraser IH, Craig GL, Parker DM. Reaction time measures of feature saliency in schematic faces. Perception. 1990;19(5):661–673. doi: 10.1068/p190661. http://doi.org/10.1068/p190661.

- Goffaux V, Hault B, Michel C, Vuong QC, Rossion B. The respective role of low and high spatial frequencies in supporting configural and featural processing of faces. Perception. 2005;34(1):77–86. doi: 10.1068/p5370. http://doi.org/10.1068/p5370.

- Goffaux V, Rossion B. Faces are "spatial"--holistic face perception is supported by low spatial frequencies. Journal of Experimental Psychology: Human Perception and Performance. 2006;32(4):1023–1039. doi: 10.1037/0096-1523.32.4.1023. http://doi.org/10.1037/0096-1523.32.4.1023.

- Goldinger SD, He Y, Papesh MH. Deficits in cross-race face learning: insights from eye movements and pupillometry. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2009;35(5):1105–1122. doi: 10.1037/a0016548. http://doi.org/10.1037/a0016548.

- Gosselin F, Schyns PG. Bubbles: A technique to reveal the use of information in recognition tasks. Vision Research. 2001;41(17):2261–2271. doi: 10.1016/s0042-6989(01)00097-9. http://doi.org/10.1016/S0042-6989(01)00097-9.

- Green MJ, Williams LM, Davidson D. Visual scanpaths and facial affect recognition in delusion-prone individuals: Increased sensitivity to threat? Cognitive Neuropsychiatry. 2003a;8(1):19–41. doi: 10.1080/713752236. http://doi.org/10.1080/713752236.

- Green MJ, Williams LM, Davidson D. Visual scanpaths to threat-related faces in deluded schizophrenia. Psychiatry Research. 2003b;119(3):271–285. doi: 10.1016/s0165-1781(03)00129-x. http://doi.org/10.1016/S0165-1781(03)00129-X.

- Greene MR, Liu T, Wolfe JM. Reconsidering Yarbus: a failure to predict observers’ task from eye movement patterns. Vision Research. 2012;62:1–8. doi: 10.1016/j.visres.2012.03.019. http://doi.org/10.1016/j.visres.2012.03.019.

- Gurler D, Doyle N, Walker E, Magnotti J, Beauchamp M. A link between individual differences in multisensory speech perception and eye movements. Attention, Perception & Psychophysics. 2015. doi: 10.3758/s13414-014-0821-1. http://doi.org/10.3758/s13414-014-0821-1.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293(5539):2425–2430. doi: 10.1126/science.1063736. http://doi.org/10.1126/science.1063736.

- Heisz JJ, Shore DI. More efficient scanning for familiar faces. Journal of Vision. 2008;8:9.1–10. doi: 10.1167/8.1.9. http://doi.org/10.1167/8.1.9.

- Hoffman JE, Subramaniam B. The role of visual attention in saccadic eye movements. Perception & Psychophysics. 1995;57:787–795. doi: 10.3758/bf03206794. http://doi.org/10.3758/BF03206794.

- Holland C, Komogortsev OV. Biometric identification via eye movement scanpaths in reading. 2011 International Joint Conference on Biometrics (IJCB) 2011:1–8. IEEE. http://doi.org/10.1109/IJCB.2011.6117536.

- Horley K, Williams LM, Gonsalvez C, Gordon E. Social phobics do not see eye to eye: A visual scanpath study of emotional expression processing. Journal of Anxiety Disorders. 2003;17(1):33–44. doi: 10.1016/s0887-6185(02)00180-9. http://doi.org/10.1016/S0887-6185(02)00180-9.

- Horley K, Williams LM, Gonsalvez C, Gordon E. Face to face: Visual scanpath evidence for abnormal processing of facial expressions in social phobia. Psychiatry Research. 2004;127(1):43–53. doi: 10.1016/j.psychres.2004.02.016. http://doi.org/10.1016/j.psychres.2004.02.016.

- Hsiao JHW, Cottrell G. Two fixations suffice in face recognition. Psychological Science. 2008;19:998–1006. doi: 10.1111/j.1467-9280.2008.02191.x. http://doi.org/10.1111/j.1467-9280.2008.02191.x.

- Janik SW, Wellens AR, Goldberg ML, Dell’Osso LF. Eyes as the center of focus in the visual examination of human faces. Perceptual and Motor Skills. 1978;47(3 Pt 1):857–858. doi: 10.2466/pms.1978.47.3.857. http://doi.org/10.2466/pms.1978.47.3.857.

- Kanan C, Bseiso DNF, Ray NA, Hsiao JH, Cottrell GW. Humans have idiosyncratic and task-specific scanpaths for judging faces. Vision Research. 2015;108:67–76. doi: 10.1016/j.visres.2015.01.013. http://doi.org/10.1016/j.visres.2015.01.013.

- Kasprowski P, Ober J. Proceedings of Biometric Authentication Workshop, European Conference on Computer Vision in Prague. Berlin: Springer-Verlag; 2004. Eye Movement in Biometrics. LNCS 3087.

- Kaufman L, Rousseeuw PJ. Wiley Series in Probability and Mathematical Statistics. Applied Probability and Statistics. New York: Wiley; 1990. Finding groups in data: An introduction to cluster analysis.

- Kim E, Ku J, Kim J-J, Lee H, Han K, Kim SI, Cho H-S. Nonverbal social behaviors of patients with bipolar mania during interactions with virtual humans. The Journal of Nervous and Mental Disease. 2009;197(6):412–418. doi: 10.1097/NMD.0b013e3181a61c3d. http://doi.org/10.1097/NMD.0b013e3181a61c3d.

- Kim P, Arizpe J, Rosen BH, Razdan V, Haring CT, Jenkins SE, … Leibenluft E. Impaired fixation to eyes during facial emotion labelling in children with bipolar disorder or severe mood dysregulation. Journal of Psychiatry and Neuroscience. 2013;38(6):407–416. doi: 10.1503/jpn.120232. http://doi.org/10.1503/jpn.120232.

- Kliemann D, Dziobek I, Hatri A, Steimke R, Heekeren HR. Atypical reflexive gaze patterns on emotional faces in autism spectrum disorders. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 2010;30:12281–12287. doi: 10.1523/JNEUROSCI.0688-10.2010. http://doi.org/10.1523/JNEUROSCI.0688-10.2010.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59(9):809–816. doi: 10.1001/archpsyc.59.9.809. http://doi.org/10.1001/archpsyc.59.9.809.

- Kriegeskorte N, Mur M, Ruff DA, Kiani R, Bodurka J, Esteky H, … Bandettini PA. Matching Categorical Object Representations in Inferior Temporal Cortex of Man and Monkey. Neuron. 2008;60(6):1126–1141. doi: 10.1016/j.neuron.2008.10.043. http://doi.org/10.1016/j.neuron.2008.10.043.

- Loughland CM, Williams LM, Gordon E. Schizophrenia and affective disorder show different visual scanning behavior for faces: A trait versus state-based distinction? Biological Psychiatry. 2002;52(4):338–348. doi: 10.1016/s0006-3223(02)01356-2. http://doi.org/10.1016/S0006-3223(02)01356-2.

- Malcolm GL, Lanyon LJ, Fugard AJB, Barton JJS. Scan patterns during the processing of facial expression versus identity: an exploration of task-driven and stimulus-driven effects. Journal of Vision. 2008;8(8):2.1–9. doi: 10.1167/8.8.2. http://doi.org/10.1167/8.8.2.

- Manor BR, Gordon E, Williams LM, Rennie CJ, Bahramali H, Latimer CR, … Meares RA. Eye movements reflect impaired face processing in patients with schizophrenia. Biological Psychiatry. 1999;46(7):963–969. doi: 10.1016/s0006-3223(99)00038-4. http://doi.org/10.1016/S0006-3223(99)00038-4.

- Marsh PJ, Williams LM. ADHD and schizophrenia phenomenology: Visual scanpaths to emotional faces as a potential psychophysiological marker? Neuroscience and Biobehavioral Reviews. 2006;30(5):651–665. doi: 10.1016/j.neubiorev.2005.11.004. http://doi.org/10.1016/j.neubiorev.2005.11.004.

- McKelvie SJ. The Role of Eyes and Mouth in the Memory of a Face. The American Journal of Psychology. 1976;89(2):311–323. http://doi.org/10.2307/1421414.

- Mehoudar E, Arizpe J, Baker CI, Yovel G. Faces in the eye of the beholder: Unique and stable eye scanning patterns of individual observers. Journal of Vision. 2014;14(7):6, 1–11. doi: 10.1167/14.7.6. http://doi.org/10.1167/14.7.6.

- Morris JP, Pelphrey KA, McCarthy G. Controlled scanpath variation alters fusiform face activation. Social Cognitive and Affective Neuroscience. 2007;2(1):31–38. doi: 10.1093/scan/nsl023. http://doi.org/10.1093/scan/nsl023.

- Nakamura K, Colby CL. Updating of the visual representation in monkey striate and extrastriate cortex during saccades. Proceedings of the National Academy of Sciences of the United States of America. 2002;99:4026–4031. doi: 10.1073/pnas.052379899. http://doi.org/10.1073/pnas.052379899.

- Näsänen R. Spatial frequency bandwidth used in the recognition of facial images. Vision Research. 1999;39(23):3824–3833. doi: 10.1016/s0042-6989(99)00096-6. http://doi.org/10.1016/S0042-6989(99)00096-6.

- Pelphrey KA, Morris JP, McCarthy G. Neural basis of eye gaze processing deficits in autism. Brain. 2005;128(5):1038–1048. doi: 10.1093/brain/awh404. http://doi.org/10.1093/brain/awh404.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual Scanning of Faces in Autism. Journal of Autism and Developmental Disorders. 2002;32(4):249–261. doi: 10.1023/a:1016374617369. http://doi.org/10.1023/A:1016374617369.

- Peterson MF, Eckstein MP. Individual differences in eye movements during face identification reflect observer-specific optimal points of fixation. Psychological Science. 2013;24(7):1216–1225. doi: 10.1177/0956797612471684. http://doi.org/10.1177/0956797612471684.